Abstract

Artificial intelligence (AI) is being increasingly applied in healthcare to improve patient care and clinical outcomes. We previously developed an AI model using ICD-10 (International Classification of Diseases, Tenth Revision) codes with other clinical variables to predict in-hospital mortality among trauma patients from a nationwide database. This study aimed to externally validate the performance of the AI model. Validation was conducted using a multicenter retrospective cohort study design, analyzing patient data from January 2020 to December 2021. The study included trauma patients based on specific ICD-10 codes, with other clinical variables. The performance of the AI model was evaluated against conventional metrics, including the ISS, and the ICISS (ICD-based ISS), using sensitivity, specificity, accuracy, balanced accuracy, precision, F1-score, and area under the receiver operating characteristic curve (AUROC) analyses. Data from 4,439 patients were analyzed. The AI model demonstrated high overall performance, achieving an AUROC of 0.9448 and a balanced accuracy of 85.08%, thereby outperforming traditional scoring systems such as ISS, or ICISS. Furthermore, the model accurately predicted mortality across datasets from each hospital (AUROCs of 0.9234 and 0.9653, respectively) despite significant differences in hospital characteristics. In the subset of patients with ISS < 9, the model showed a robust AUROC of 0.9043, indicating its effectiveness in predicting mortality, even in cases with lower-severity injuries. For patients with ISSs ≥ 9, the model maintained high sensitivity (93.60%) and balanced accuracy (77.08%), proving its reliability in more severe injury cases. External validation demonstrated the AI model’s high predictive accuracy and reliability in assessing in-hospital mortality risk among trauma patients across different injury severities and heterogeneous cohorts. These findings support the model’s potential integration into emergency departments and offer a significant tool for enhancing patient triage and treatment protocols.

Similar content being viewed by others

Introduction

Trauma is one of the leading causes of in-hospital deaths and has been a burden on patients and healthcare providers1. Estimating severity and predicting prognosis is a prerequisite for reducing this burden, as it enables clinicians to allocate resources more effectively and prioritize interventions such as angioembolization or surgery2,3. Despite advances in trauma care, the ability to accurately predict outcomes in trauma patients remains a challenge. To date, several scoring systems have been used to estimate trauma mortality and severity, such as the Injury Severity Score (ISS), International Classification of Diseases, Tenth Revision–based Severity Score (ICISS), or Trauma and Injury Severity Score (TRISS)4,5,6. However, the performance and availability of the conventional metrics remain limited7,8,9.

Recent AI models designed to predict in-hospital mortality have demonstrated potential to aid critical decision-making processes, although their integration into routine clinical practice remains limited10,11. We previously developed and trained an AI model that leveraged the International Classification of Diseases, Tenth Revision (ICD-10) coding system to predict in-hospital mortality in trauma patients, with promising results11. This model has demonstrated its efficacy within a comprehensive national database, exhibiting substantial accuracy, sensitivity, and specificity against established trauma-scoring systems11. This model was initially trained and tested on a comprehensive National Emergency Department Information System (NEDIS) dataset encompassing over 778,111 patients from more than 400 hospitals across South Korea. With an impressive area under the receiver operating characteristic curve (AUROC) of 0.9507, the model achieved not only high sensitivity and specificity but also demonstrated significantly balanced accuracy when compared to traditional trauma scoring systems.

However, the generalizability of any AI model must be tested through rigorous external validation before it can be used reliably in clinical practice. This study aimed to externally validate an ICD-10-based AI model for predicting in-hospital mortality in trauma patients using a multicenter retrospective cohort study. By leveraging datasets from heterogeneous centers, this study sought to assess the generalizability and accuracy of the AI model across different settings, patient populations, and practices. Trauma prediction models have clinical applicability across various points in the trauma care system, such as for triage in the emergency department and prognosis during hospitalization. The focus of our study is to predict in-hospital mortality, providing a critical prognostic tool at the point of admission or during early care stages, which can inform resource allocation and treatment prioritization.

Materials and methods

Validation of AI model for predicting in-hospital mortality in diverse hospital settings

In this study, we validated our previously trained AI model for predicting in-hospital mortality11. In the previous study11, we constructed a 9-layer deep neural network model (DNN) and incorporated a comprehensive set of variables, including age, gender, intentionality of injury, mechanism of injury, presence of emergent symptoms, and the Alert/Verbal/Painful/Unresponsive (AVPU) scale12, in addition to the initial and altered Korean Triage and Acuity Scale (KTAS)13, specific ICD-10 codes (international version), and categorized procedure codes for surgical or interventional radiology procedures. The DNN comprised an input layer, followed by 7 fully connected (FC) hidden layers with 512, 256, 128, 64, 32, 16, and 8 nodes, respectively, and an output layer. Dropout with a rate of 0.3 and L2 regularization were applied to the FC hidden layers to prevent overfitting. Based on the one-hot encoding process for all variables, we integrated 866 ICD-10 codes (beginning with letters S or T) to derive 914 distinct input features for the AI model, as summarized in Supplementary Table 1. The KTAS serves as a standardized severity triage tool in emergency department (ED) to minimize complexity and uncertainty, categorizing patients into five levels: level 1, resuscitation; level 2, emergent; level 3, urgent; level 4, less urgent; and level 5, non-urgent13. According to the NEDIS policy, KTAS assessments are performed by certified personnel within two minutes of ED admission, with alterations made as necessary based on the patient’s condition prior to transition to the operating room, intensive care unit (ICU), or general ward. For ICD-10 codes, we used 866 codes starting with S or T. Procedure codes required for billing through the National Health Insurance Review & Assessment Service, covering surgical and angioembolization interventions, are more specifically categorized as follows and summarized in Supplementary Table 2: head procedures, vascular torso procedure, abdominal torso procedures, chest torso procedures, heart torso procedures, and extracorporeal membrane oxygenation.

Our AI model was constructed with seven fully connected layers serving as hidden layers, and it features an output layer that provides the probability of patient mortality. In a previous study, we trained the model using 778,111 patients from the NEDIS dataset, which was collected from 2016 to 2019 from 400 + hospitals in South Korea. The model provided an AUROC of 0.9507, with 87.68% sensitivity, 86.25% specificity, and 86.97% balanced accuracy. In this study, we validated the model using 4,439 patients from two regional trauma centers in South Korea. The training set for the development of the AI model comprised all types of EDs in South Korea, while the external validation set comprised two regional trauma centers.

Study design and dataset for external validation of AI model

This study was conducted at two regional trauma centers in South Korea, corresponding to level 1 trauma centers in the US. This study was approved by the relevant institutional review board approval was obtained, and the requirement for informed consent was waived because of the observational nature of this study. All patient data were coded anonymously to ensure privacy and confidentiality. Institutional review board (IRB) approval was obtained from Cheju Halla General Hospital and Chonnam National University Hospital (IRB numbers: CHH-2023-L16-01 and CNUH-2022-L02-01, respectively).

This multicenter retrospective cohort study was conducted in accordance with the TRIPOD (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis) statement14and STROCSS (Strengthening the Reporting of Cohort, Cross-sectional, and Case–Control Studies in Surgery) criteria15. The dataset was collected from the Korean Trauma Data Bank (KTDB) data of two regional trauma centers in South Korea from January 2020 to December 2021. This study aimed to externally validate the AI model for predicting in-hospital mortality in trauma patients. The primary outcome of our study was the in-hospital mortality rate.

The inclusion criteria for the study were as follows: (1) trauma patients identified by a diagnostic code starting with S or T according to the Korean version of the ICD-10; (2) patients who were admitted to the ICU or a general ward directly from the ED; and (3) patients who were admitted to the ICU or a general ward following surgery or a procedure initiated in the ED. According to the KTDB policy, patients were excluded from the KTDB for the following reasons: (1) absence of a diagnostic code starting with S or T; (2) presence of diagnostic codes related to frostbite (T33-T35.6), intoxication (T36-T65), or unspecified injuries or complications (T66-T78, T80-T88). To focus the analysis on in-hospital mortality, we excluded patients who (1) died in the ED or were transferred to another facility before admission (n = 686) and (2) were transferred to another facility or left the hospital against medical advice after admission (n = 2,061). Consequently, the data of 4,439 patients were used for the external validation of the model. The selection process is illustrated in Fig. 1.

In South Korea, regional trauma centers are the highest-level trauma centers, corresponding to Level-1 trauma centers. CNUH, as the highest-level tertiary university hospital in the region, experienced a high patient transfer rate. However, transfers to other tertiary hospitals were rare (3%, 52/1,726), with approximately 97% of transferred patients sent to lower-level hospitals, likely for conservative treatment rather than critical care.

Statistical analysis

All statistical analyses were performed using R version 4.1.2 (R Foundation for Statistical Computing, Vienna, Austria). Proportions were compared using the chi-squared test or Fisher’s exact test, as appropriate. Statistical significance was set at p< 0.05. AI models were implemented using Python (version 3.7.13) with TensorFlow (version 2.8.0), Keras (version 2.8.0), NumPy (version 1.21.6), Pandas (version 1.3.5), Matplotlib (version 3.5.1), and Scikit-learn (version 1.0.2). The performance of the prediction model was evaluated using seven metrics: sensitivity, specificity, accuracy, balanced accuracy, precision, F1-score, and AUROC. Sensitivity is a crucial measure of the ability to correctly identify positive cases, which is particularly important in serious conditions (e.g., death) in trauma, to avoid missing positive cases. Balanced accuracy accounts for both sensitivity and specificity, making it useful when dealing with imbalanced datasets, whereas AUROC provides a summary of model performance across all thresholds, which is helpful for comparing models in a general sense. In the evaluation of our AI model, calibration was assessed on the dataset using the Brier score16, calibration slope, and calibration intercept. To further ascertain the clinical utility of the model, we performed decision curve analysis17.

Conventional metrics for comparison

For comparison with traditional metrics, we employed ISS4, and ICISS5. ]. ICISS calculates the probability of survival using survival risk ratios (SRRs)5. In our previous study, we determined the SRR for each diagnostic code and applied these SRRs in the current study. We performed a correlation analysis to evaluate variability and complementarity between models. Models with low correlations are more likely to capture different patterns in the data, making them useful for improving predictive accuracy across diverse data points18,19,20,21.

Results

Patient characteristics

Notably, patients excluded due to death in the ED, being transferred, or leaving against medical advice were predominantly found at Chonnam National University Hospital (CNUH) compared to Cheju Halla General Hospital (CHH) (61.4% (2204/3585) at CNUH vs. 15.1% (543/3058) at CHH, p < 0.001). Other patient characteristics, according to hospital and mortality rates, are summarized in Table 1. The in-hospital mortality was significantly higher at CNUH (8.8% vs. 3.2%, p < 0.001). Significant differences were observed in terms of vascular procedures (3.5% at CHH vs. 5.3% at CNUH, p = 0.008), abdominal procedures (2.2% at CHH vs. 5.6% at CNUH, p < 0.001), head procedures (0% at CHH, 0.4% at CNUH, p = 0.004). Initial KTAS levels showed significant differences across levels 1 (0.8% at CHH vs. 3.2% at CNUH), 3 (59.6% at CHH, 37.1% at CNUH, p < 0.001), 4 (20.6% at CHH vs. 37.9% at CNUH, p < 0.001), and 5 (0.0% at CHH, 2.8% at CNUH, p < 0.001). Further analysis showed the difference in intentionality and mechanisms of injury. The AVPU scale responses, alert (91.7% at CHH vs. 88.9% at CNUH, p = 0.004), semi-coma (3.1% at CHH vs. 4.4% at CNUH, p = 0.036), and coma (1.0% at CHH vs. 2.7% at CNUH, p < 0.001), as well as sex distribution (62.3% male at CHH vs. 73.6% at CNUH, p < 0.001) demonstrated significant differences. For all cases, including both hospitals, the comparison of patient characteristics between surviving and deceased patients is summarized in Supplementary Table 3. The comparison of patient characteristics between patients with ISS < 9 and ISS ≥ 9 is summarized in Supplementary Table 4. The distribution of ICD-10 codes between the two hospitals is summarized in Supplementary Table 5. A comparison of ICD-10 codes between deceased and surviving patients is summarized in Supplementary Table 6. The comparison of ICD-10 codes between patients with ISS < 9 and ISS ≥ 9 is summarized in Supplementary Table 7.

Validation results: AI model performance

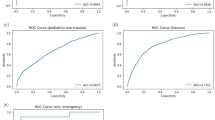

Our AI model for predicting in-hospital mortality demonstrated high accuracy across the entire dataset (Table 2). The TRISS could not be calculated for 156 patients due to factors such as intubation and injuries to the extremities. Therefore, we did not use TRISS to evaluate model performance. The model’s performance is illustrated in Fig. 2(A), showing an AUROC of 0.9448, outperforming traditional scoring systems such as ISS (AUROC of 0.8807) and ICISS (AUROC of 0.7978). The model achieved a sensitivity of 89.95%, surpassing ISS-16 (78.08%), ICISS (76.71%), and ISS-25 (53.88%). The AI model’s specificity was 80.21%, balancing sensitivity and specificity better than traditional models. The balanced accuracy of our model was the highest at 85.08%. Additionally, our model’s precision was 95.39%, indicating exceptional reliability and precision in predictions. Overall, the AI model outperformed ISS and ICISS across various datasets, including hospital and injury severity variations. (Fig. 2. A-I) Supplementary Table 8 provides a comparative analysis with other machine learning models.

Comparative ROC curves of our AI model and traditional models (ISS and ICISS) for predicting in-hospital mortality (A) from the entire dataset, (B) among patients with ISS < 9 from the entire dataset, (C) among patients with ISS ≥ 9 from the entire dataset, (D) from the CHH dataset, (E) among patients with ISS < 9 from the CHH dataset, (F) among patients with ISS ≥ 9 from the CHH dataset, (G) from the CNUH dataset, (H) among patients with ISS < 9 from the CNUH dataset, and (I) among patients with ISS ≥ 9 from the CNUH dataset.

AI model performance in patients with ISS < 9

For patients with lower-severity injuries (ISS < 9), the AI model continued to demonstrate commendable accuracy (Fig. 2B,E,H). Figure 2B shows an AUROC of 0.8979, significantly better than ISS (AUROC = 0.5455) and ICISS (AUROC = 0.5). As summarized in Table 2, our model balanced sensitivity and specificity better, with a sensitivity of 40.0% compared to zero sensitivity for ISS and ICISS. The model’s balanced accuracy was 68.74%, indicating precise risk stratification for less severe injuries. The model also showed exceptional precision (99.01%), minimizing false positives and optimizing resource allocation and patient management in emergency care. Characteristics and predictive probabilities for deceased patients with ISS < 9 are summarized in Table 3.

AI model performance in patients with ISS ≥ 9

For patients with more severe injuries (ISS ≥ 9), the AI model’s capability was highlighted again (Fig. 2C,F,I). Figure 2C shows an AUROC of 0.9143, superior to ISS (AUROC = 0.8171) and ICISS (AUROC = 0.7164). Table 2 shows a high sensitivity of 93.63%, indicating strong performance in identifying in-hospital mortality. Although specificity was 60.56%, lower than traditional metrics, the balanced accuracy of 77.09% suggests a favorable equilibrium between sensitivity and specificity. This balance is crucial for high-risk patients, where accurate risk identification is essential. The lower specificity compared to ISS-16, ISS-25, and ICISS is offset by the higher sensitivity, preventing potentially fatal oversights in patient care.

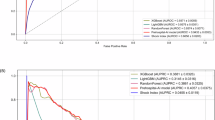

Correlation of ISS and ICISS vs. our AI model

Figure 3 shows the correlation between the AI model’s predictive probabilities and traditional scoring systems: ISS and ICISS. The regression for ISS versus the AI model yielded a slope of 15.62, an intercept of 4.97, and a moderate R-squared value of 0.34 (Fig. 3A). For ICISS versus the AI model, the regression showed a negative correlation with a slope of −0.37, an intercept of 0.96, and an R-squared value of 0.25 (Fig. 3B).

Calibration of AI model and decision curve analysis

Figure 4A shows the calibration of the AI model, with a Brier score of 0.10, indicating good accuracy. The calibration slope of 0.89 and intercept of −3.10 reflect the model’s calibration accuracy. The R-squared value of 0.52 indicates the model explains a substantial portion of the variance in observed outcomes.

Figure 4B presents the decision curve analysis for the AI model, ISS and ICISS. The AI model shows the highest net benefit around threshold probabilities of 8.8% (mortality at CNUH) and 3.2% (mortality at CHH), indicating significant clinical advantages in decision-making processes, particularly at low threshold probabilities for intervention. Traditional scoring systems show lower net benefits across the same threshold range.

Discussion

In this study, we leveraged a large, diverse cohort to externally validate an ICD-10-based AI model pre-trained on a comprehensive national dataset of over 778,111 patients11. Despite notable heterogeneity between the two hospitals, our AI model exhibited outstanding performance. This study highlights several key points in evaluating medical AI applications. Firstly, the two hospitals had distinct characteristics. One hospital, situated on an island with few nearby alternatives, had a high transfer rate (48.2%), while the other, inland and surrounded by many hospitals, had a low transfer rate (9.2%). It underscores the importance of considering the generalizability of AI models across various data distributions. We anticipate that our model will be applicable in diverse clinical settings. Secondly, we validated the AI model for patients with low ISS (< 9). Elderly patients and those with multiple comorbidities are at increased risk of mortality even from minor injuries, which traditional ISS cannot estimate. Our AI model demonstrated potential as an alternative to conventional metrics such as ISS and ICISS in predicting mortality. Despite its higher predictive performance for mortality, ISS is based on various measures, including organ damage and potential for recovery. The complementary nature of ISS, ICISS, and our AI model is highlighted by their wide distribution and variance. Thirdly, our AI model can predict mortality risk as soon as a diagnosis is made, even before surgery, making it useful at the admission stage in emergency rooms. The model can be used in various hospital stages and is especially beneficial in non-trauma centers, given its nationwide applicability. Nonetheless, prospective validation in real-world emergency department settings is necessary, as assigning ICD codes may be limited during the initial stages of care, and some injuries are only diagnosed intraoperatively. Additionally, further validation in non-trauma centers is required to assess the model’s generalizability and feasibility in broader clinical environments.

Several systematic reviews have reported AI models for predicting in-hospital mortalities. Zhang reported that only 12% of studies performed external validation22, which is crucial for model robustness, identifying overfitting, unveiling hidden biases, and assessing real-world applicability. The appropriate methodology for external validation remains unclear. For example, Gorczyca et al. used the Nationwide Readmission Database23, while Kwon et al. validated an AI model using a single hospital dataset24. Our study used two diverse cohorts, potentially increasing the AI model’s robustness. A reporting guideline for AI is under development25, indicating the need for further discussion on external validation methodologies.

The primary outcome was in-hospital mortality, focusing on patients with low ISS (< 9). Deceased patients were predominantly older with high comorbidities. Our AI model accurately predicted deaths that traditional metrics missed, although it also had false negatives. The vulnerability of older patients with severe comorbidities to trauma needs further investigation. Recent studies have also used in-hospital mortality as a primary outcome, regardless of hospital stay duration26,27,28,29,30. In the real world, distinguishing trauma-related mortality is challenging. Our model discriminated mortality well, even in patients with low ISS, where age and comorbidities contribute to specific patient vulnerabilities.

Our AI model focused on in-hospital mortality. However, injury severity also includes tissue damage extent, hospitalization need, intensive care, treatment cost, treatment complexity, treatment length, temporary or permanent disability, and quality of life31. The comparison with ISS may not be entirely fair because the ISS does not include a physiological component, which limits its ability to comprehensively predict mortality. However, the ISS remains the most widely used conventional tool for evaluating injury severity and is the standard assessment tool in Korean regional trauma centers. Our study aimed to overcome the limitations of conventional tools, such as the ISS, by leveraging AI technology to provide a more robust and accurate prediction of in-hospital mortality. Our model addresses one aspect of injury severity—mortality—and aims to complement rather than replace conventional prediction models such as ISS. Notably, TRISS showed poor performance in previous studies in the US8and South Korea9. TRISS was not calculated for 156 patients who transferred from other hospitals and intubated without checked GCS, limiting its reliability. Vital signs at admission, used in TRISS, may not reflect the initial patient condition accurately. Our model development excluded vital signs due to their insignificance in predicting outcomes, highlighting TRISS’s limitations in different clinical settings. Given that there were patients with uncheckable TRISS, an alternative AI model seems to be more useful. Notably, in our study, deceased patients with ISS < 9 showed a high TRISS (over 97%).

In our previous study, we did not consider dataset diversity during model development, leading to several critical issues. AI models trained on biased data may produce outcomes that favor certain groups while disadvantaging or discriminating against others. The NEDIS dataset encompasses various types of hospitals, including trauma centers, non-trauma centers, and small hospitals. This is because the AI model was derived from a nationwide dataset. Consequently, the models may exhibit poor performance in specific situations or with certain inputs, highlighting the need for evaluation in real-world scenarios. Our AI model, derived from a comprehensive dataset, exhibited excellent performance in the context of trauma centers due to the data diversity in NEDIS. Christie et al. demonstrated that machine learning provided excellent discrimination of trauma mortality in diverse settings, including hospitals in the US and South Africa, with a high AUROC exceeding 0.9 in both cohorts30. The validation results of the diverse cohorts in our study were excellent as well.

In this study, we used a cutoff value of 9 on the ISS for minor trauma and analyzed the validation performances. Specifically, we intended to investigate the AI model’s discriminative power in patients with minor injuries, focusing on those with ‘very minor’ injuries, and tested our AI model accordingly. However, we also recognize the importance of evaluating the model with a more widely accepted definition of major trauma using a cutoff value of 16. Using the cutoff value of 16, the results show that our AI model consistently demonstrates high sensitivity, specificity, and balanced accuracy across all datasets, outperforming traditional models. This consistent performance highlights the AI model’s potential as a reliable tool for improving patient triage and treatment protocols.

Our study had several limitations to be addressed in future work. First, our AI model did not incorporate significant confounders such as the precise extent of injuries, initial treatment, fluid resuscitation, infection control, or insurance. These factors can substantially influence the mortality outcomes of trauma patients. The exclusion of these confounders is a limitation of the NEDIS dataset used in this study. Future studies should aim to include these confounders to provide a more comprehensive analysis. Bias and risk of bias are important considerations when developing and validating AI models, particularly when applying them across diverse patient populations. Factors such as sex, age, or socioeconomic status can influence injury outcomes and model performance. Although we did not stratify results by sex in this study, we recognize this as a potential limitation. Future work should include subgroup analyses to evaluate performance across demographic groups, ensuring fairness and generalizability of the model. Addressing these biases is essential when transferring AI models to different clinical settings to avoid inequities in care. Using more appropriate datasets that capture these variables will be crucial for improving the accuracy and generalizability of the AI model. Second, the high rate of exclusion of patients may have caused selection bias. Nonetheless, the performance of the AI model was excellent even in hospitals where the number of excluded patients was 61.4%. Due to the excellent health insurance system in South Korea and the low medical costs for trauma patients, hospital stays can be long. Therefore, some trauma centers transfer patients to lower-level hospitals after acute care and stabilization. Although CNUH exhibited a high transfer rate, most transferred patients were sent to lower-level hospitals for conservative treatment, minimizing the possibility that the most severe trauma patients were transferred out and excluded from the study. However, CHH is located in an island area with only four other hospitals, which contributes to its lower transfer rate. Further prospective studies are required to mitigate this bias. Third, the ICD-10 and procedure codes in each hospital were used for billing and not for evaluating accurate diagnoses. Nonetheless, the ICD-10–based model can complement the weaknesses of ISS. AI can detect consistent patterns of human behavior. Fourth, we included children because our previous AI model incorporated children’s data. Future tailored models for pediatric trauma patients are needed to improve accuracy and performance in this subgroup. Fifth, we did not consider serious injuries to the extremities, such as vascular injuries or extensive soft tissue damage. Future studies are needed to evaluate the impact of extremity injuries on mortality. Sixth, the calibration slope of 0.89, although close to 1.0, indicates slight miscalibration. This finding suggests that the model tends to slightly underestimate or overestimate probabilities at certain ranges. Additionally, the intercept of −3.10 and the R-squared value of 0.52 highlight areas for further improvement in calibration and overall accuracy. Despite achieving a moderate Brier score of 0.10, future efforts should focus on refining calibration to enhance the model’s generalizability and robustness in real-world clinical settings. Finally, beyond validation research, a prospective study on the clinical decision support system is required for the practical use of AI. Future research should focus on prospective studies to evaluate how the AI model can be integrated into clinical workflows. Additionally, further investigations are needed to explore the model’s performance across different healthcare settings and populations. These steps will help in refining the AI model and ensuring its robustness and generalizability in various clinical environments. Furthermore, determining the appropriate application of the model at various time points in the chain of care is crucial for identifying feasible predictors that are available at those specific stages. This will help align the model with real-world clinical workflows and ensure its utility in supporting relevant decisions, rather than relying solely on extensive data to drive performance improvements. Future work should focus on identifying practical predictors for early-stage decision-making in emergency departments while maintaining model accuracy and clinical relevance.

Conclusion

The external validation of the ICD-10–based AI model exhibited excellent performance. The AI model derived from a large nationwide dataset outperformed performance compared to conventional prediction models, despite the significant diversity of each cohort. It appears to serve as both a complement and alternative to the traditional model. Leveraging pre-existing big data is useful for development, validation, and implementation.

Data availability

Data availability statement: The dataset used in this study contains potentially sensitive patient information and, therefore, will be made available upon reasonable request to ensure compliance with ethical guidelines and institutional privacy policies.

Code availability

The code and input matrix files for the relevant analysis can be accessed at the following link: https://github.com/LeeSS96/NEDIS-Mortarlity.

Abbreviations

- AI:

-

Artificial intelligence

- ISS:

-

injury severity score

- ICISS:

-

International Classification of Diseases, Tenth Revision–based Severity Score

- TRISS:

-

Trauma and Injury Severity Score

- ICD-10:

-

International Classification of Diseases, Tenth Revision

- NEDIS:

-

National Emergency Department Information System

- AUROC:

-

area under the ROC curve

- AVPU:

-

Alert/Verbal/Painful/Unresponsive

- KTAS:

-

Korean Triage and Acuity Scale

- ED:

-

emergency department

- ICU:

-

intensive care unit

- KTDB:

-

Korean Trauma Data Bank

- TRIPOD:

-

Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis

- STROCSS:

-

Strengthening the Reporting of Cohort, Cross-sectional, and Case–Control Studies in Surgery

- SRRs:

-

survival using survival risk ratios

- Ps:

-

probability of survival

- RTS:

-

Revised Trauma Score

- CNUH:

-

Chonnam National University Hospital

- CHH:

-

Cheju Halla General Hospital

References

Park, Y. et al. Major causes of preventable death in Trauma patients. J. Trauma. Inj 34, 225–232 (2021).

Jung, P. Y. et al. Clinical practice guideline for the treatment of traumatic shock patients from the Korean Society of Traumatology. J. Trauma. Inj. 33, 1–12 (2020).

Kim, O. H. et al. Part 2. Clinical Practice Guideline for Trauma Team Composition and Trauma Cardiopulmonary Resuscitation from the Korean Society of Traumatology. J. Trauma. Inj. 33, 63–73 (2020).

Baker, S. P., O’Neill, B., Haddon, W. & Long, W. B. The injury severity score: A method for describing patients with multiple injuries and evaluating emergency care. J. Trauma 14, 187–196 (1974).

Bergeron, E. et al. Canadian benchmarks in trauma. J. Trauma 62, 491–497 (2007).

Jeong, T. S., Choi, D. H., Kim, W. K., Korea Neuro-Trauma Data Bank (KNTDB) Investigators2. The relationship between trauma scoring systems and outcomes in patients with severe traumatic brain injury. Korean J. Neurotrauma 18, 169–177 (2022).

Gagné, M., Moore, L., Beaudoin, C., Kuimi, B., Sirois, M. J. & B. L. & Performance of international classification of diseases-based injury severity measures used to predict in-hospital mortality: A systematic review and meta-analysis. J. Trauma. Acute Care Surg. 80, 419–426 (2016).

Demetriades, D. et al. TRISS methodology: An inappropriate tool for comparing outcomes between trauma centers. J. Am. Coll. Surg. 193, 250–254 (2001).

Ha, M., Yu, S., Lee, J. H., Kim, B. C. & Choi, H. J. Does the probability of survival calculated by the trauma and injury severity score method accurately reflect the severity of neurotrauma patients admitted to regional trauma centers in Korea? J. Korean Med. Sci. 38, e265 (2023).

Kang, W. S. et al. Artificial intelligence to predict in-hospital mortality using novel anatomical injury score. Sci. Rep. 11, 23534 (2021).

Lee, S. et al. Model for predicting in-hospital mortality of physical trauma patients using artificial intelligence techniques: Nationwide population-based study in Korea. J. Med. Internet Res. 24, e43757 (2022).

McNarry, A. F. & Goldhill, D. R. Simple bedside assessment of level of consciousness: Comparison of two simple assessment scales with the Glasgow Coma scale. Anaesthesia 59, 34–37 (2004).

Ryu, J. H. et al. Changes in relative importance of the 5-level triage system, Korean triage and acuity scale, for the disposition of emergency patients induced by forced reduction in its level number: A multi-center registry-based retrospective cohort study. J. Korean Med. Sci. 34, e114 (2019).

Moons, K. G. M. et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): Explanation and elaboration. Ann. Intern. Med. 162, W1–73 (2015).

Agha, R. et al. STROCSS 2019 Guideline: Strengthening the reporting of cohort studies in surgery. Int. J. Surg. 72, 156–165 (2019).

Rufibach, K. Use of Brier score to assess binary predictions. J. Clin. Epidemiol. 63, 938–939 (2010).

Vickers, A. J., van Calster, B. & Steyerberg, E. W. A simple, step-by-step guide to interpreting decision curve analysis. Diagn. Progn Res. 3, 18 (2019).

Wood, D. et al. A unified theory of diversity in ensemble learning. J. Mach. Learn. Res. 24, 1–49 (2023).

Brown, G., Wyatt, J., Harris, R. & Yao, X. Diversity creation methods: A survey and categorisation. Inf. Fusion 6, 5–20 (2005).

Zhou, Z. H. Ensemble Methods: Foundations and Algorithms (CRC Press, 2012).

Kuncheva, L. I. & Whitaker, C. J. Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 51, 181–207 (2003).

Zhang, T., Nikouline, A., Lightfoot, D. & Nolan, B. Machine learning in the prediction of Trauma outcomes: A systematic review. Ann. Emerg. Med. 80, 440–455 (2022).

Gorczyca, M. T., Toscano, N. C. & Cheng, J. D. The trauma severity model: An ensemble machine learning approach to risk prediction. Comput. Biol. Med. 108, 9–19 (2019).

Kwon, J. M. et al. Validation of deep-learning-based triage and acuity score using a large national dataset. PloS ONE 13, e0205836 (2018).

Collins, G. S. et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open 11, e048008 (2021).

Abujaber, A. et al. Prediction of in-hospital mortality in patients with post traumatic brain injury using National Trauma Registry and machine learning approach. Scand. J. Trauma. Resusc. Emerg. Med. 28, 44 (2020).

Matsuo, K. et al. Machine learning to predict in-hospital morbidity and mortality after traumatic brain Injury. J. Neurotrauma 37, 202–210 (2020).

Rau, C. S. et al. Mortality prediction in patients with isolated moderate and severe traumatic brain injury using machine learning models. PLoS ONE 13, e0207192 (2018).

Ahmed, F. S. et al. A statistically rigorous deep neural network approach to predict mortality in trauma patients admitted to the intensive care unit. J. Trauma Acute Care Surg. 89, 736–742 (2020).

Christie, S. A. et al. Machine learning without borders? An adaptable tool to optimize mortality prediction in diverse clinical settings. J. Trauma. Acute Care Surg. 85, 921–927 (2018).

Loftis, K. L., Price, J. & Gillich, P. J. Evolution of the abbreviated Injury Scale: 1990–2015. Traffic Inj Prev. 19, S109–S113 (2018).

Acknowledgements

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2024-RS-2024-00438239), the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (No. RS-2024-00509257, Global AI Frontier Lab), and Chonnam National University Hospital Biomedical Research Institute (BCRI24068).

Author information

Authors and Affiliations

Contributions

All the authors wrote the manuscript and created the figure. Concept and design: SSL, DWK, WSK, and JL. Statistical Analysis: SSL, NO, HL, SP, DKY, WSK, and JL. Interpretation of data: SSL, DWK, NO, HL, SP, DKY, WSK, and JL. All authors critically reviewed and agreed to the submission of the final manuscript. SSL and DWK contributed equally to this work and should be considered co-first authors. WSK and JL contributed equally to this work and should be considered corresponding authors.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Institutional Review Board Statement

Institutional review board (IRB) approval was obtained from Cheju Halla General Hospital and Chonnam National University Hospital (IRB numbers: CHH-2023-L16-01 and CNUH-2022-L02-01, respectively).

Human ethics and consent to participate declarations

Not applicable. Informed consent was waived due to the study’s observational nature and the de-identification of each patient.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lee, S., Kim, D.W., Oh, Ne. et al. External validation of an artificial intelligence model using clinical variables, including ICD-10 codes, for predicting in-hospital mortality among trauma patients: a multicenter retrospective cohort study. Sci Rep 15, 1100 (2025). https://doi.org/10.1038/s41598-025-85420-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-85420-5

Keywords

This article is cited by

-

Feasibility of AI-simulated patients for tailored selection of diabetes remission prediction models in metabolic bariatric surgery: a proof-of-concept study

Diabetology & Metabolic Syndrome (2025)

-

Artificial intelligence for surgical scene understanding: a systematic review and reporting quality meta-analysis

npj Digital Medicine (2025)

-

Epidemiological characteristics of pediatric trauma and the role of the Injury Severity Score in predicting mortality risk: a multicenter study

European Journal of Pediatrics (2025)