Abstract

Lung cancer remains a major global health challenge, and accurate pathological examination is crucial for early detection. This study aims to enhance hyperspectral pathological image analysis by refining annotations at the cell level and creating a high-quality hyperspectral dataset of lung tumors. We address the challenge of coarse manual annotations in hyperspectral lung cancer datasets, which limit the effectiveness of deep learning models requiring precise labels for training. We propose a semi-automated annotation refinement method that leverages hyperspectral data to enhance pathological diagnosis. Specifically, we employ K-means unsupervised clustering combined with human-guided selection to refine coarse annotations into cell-level masks based on spectral features. Our method is validated using a hyperspectral lung squamous cell carcinoma dataset containing 65 image samples. Experimental results demonstrate that our approach improves pixel-level segmentation accuracy from 77.33% to 92.52% with a lower level of prediction noise. The time required to accurately label each pathological slide is significantly reduced. While pixel-level labeling methods for an entire slide can take over 30 mins, our semi-automated method requires only about 5 mins. To enhance visualization for pathologists, we apply a conservative post-processing strategy for instance segmentation. These results highlight the effectiveness of our method in addressing annotation challenges and improving the accuracy of hyperspectral pathological analysis.

Introduction

Lung cancer remains a leading global health challenge, with rising incidence and mortality rates. According to the International Agency for Research on Cancer (IARC), GLOBOCAN estimated 2.5 million new lung cancer cases (12.4% of all cancers) and 1.8 million deaths (18.7% of total cancer deaths) in 20221,2. As a result, lung cancer diagnosis and treatment have become critical public health priorities worldwide3. Currently, histopathology is the gold standard for diagnosing lung cancer4. Pathologists rely on morphological features observed through microscopes to classify cancer cells. However, this process is labor-intensive, time-consuming, costly, and prone to subjectivity, increasing the risk of misdiagnosis or missed diagnoses, especially when using conventional RGB imaging5. While whole slide images (WSI) have been widely used in tumor analysis, they are inherently limited by their reliance on spatial features such as cell size, texture, and color, which lack sufficient spectral information for capturing subtle pathological changes6.

Hyperspectral Microscopic Imaging (HMI) presents a promising alternative by combining hyperspectral imaging (HSI) with microscopy7. Unlike traditional RGB imaging, which captures only three spectral bands visible to the human eye, HMI acquires spectral data across numerous narrow bands, providing richer biochemical and pathological information8,9. HMI can capture subtle spectral variations by analyzing the intensity at different spatial positions and wavelengths, providing significant advantages in identifying molecular and pathological changes in tissues10. This capability has enabled diverse medical applications9, including melanoma classification11, cervical cancer detection12, and diabetic complications research13. Machine learning (ML) techniques have been widely applied across various domains, including environmental studies, geosciences, and transportation analysis, demonstrating their effectiveness in predictive modeling14,15,16. For example, models have been developed to predict soil properties17 and analyze environmental changes18. Deep learning methods have achieved considerable success in lung tumor segmentation on RGB images, with Dice coefficients ranging from 0.73 to 0.83 using coarse annotations6. In hyperspectral imaging, spectral-based models such as support vector machine (SVM) and convolutional neural network (CNN) have been applied for head and neck squamous cell carcinoma19 and melanoma segmentation20, achieving accuracies of 0.89 and 0.92, respectively. These advancements highlight the versatility and power of ML, providing a strong foundation for its application in hyperspectral pathological image analysis.

Despite its potential, the application of HMI in lung cancer diagnosis remains limited, primarily due to data scarcity and annotation challenges21,22. Strict ethical regulations and patient privacy concerns restrict access to human lung tissue specimens, while generating high-quality hyperspectral datasets is both time-consuming and costly23,24. Additionally, tumor boundaries are often vague and non-rigid, making precise manual labeling difficult, even for experienced pathologists25. Coarse annotations can significantly degrade the performance of ML models, particularly in hyperspectral imaging, where incorrect labels misguide model training26.

To address annotation noise and data scarcity, researchers have explored techniques such as data augmentation, semi-supervised learning, and loss function modifications27. For instance, Zhang et al.28 proposed a 3D-PulCNN for lung tumor classification, achieving 0.96 overall accuracy with patch-level annotations. Zhang et al.29 introduced a two-stage segmentation-classification network trained on 100 image scenes with cell-level annotations generated by a Generative Adversarial Network (GAN), achieving a Dice coefficient of 0.76. However, these studies either deviate from traditional segmentation tasks or still rely heavily on precise, labor-intensive annotations.

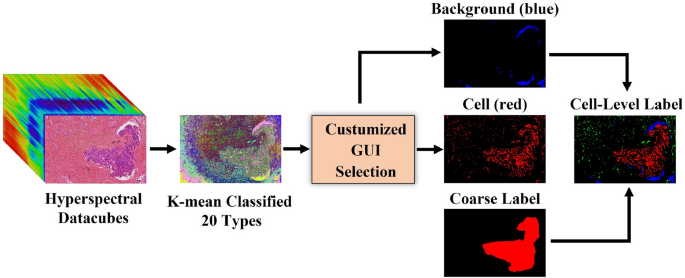

This study aims to improve the accuracy and efficiency of hyperspectral pathological image diagnosis for lung tumors. A semi-automatic method is proposed to refine coarse manual annotations into cell-level labels, reducing dependence on pathologist-drawn annotations while maintaining segmentation performance with minimal time cost and acceptable precision. We apply the K-means unsupervised clustering algorithm to divide hyperspectral data into 20 types based on spectral similarity and develop an interactive user interface to allow users to manually choose cell and background regions. The selected cell regions are superimposed on annotations provided by pathologists to create cell-level annotations used in model training. This transformation of the original pathology identification process allows for the classification of cancerous and non-cancerous cells. From the perspective of model performance, such label refinement can reduce annotation noise from uncertain elements within cancerous tissues and improve the prediction quality. From the perspectives of motivation and interpretability, emphasizing local spectral differences at the cellular level aligns with the focus of pathological analysis on cellular structures and the inherent strength of hyperspectral imaging in capturing spectral features.

The contributions of this study are summarized as follows:

- Designed and built an HMI system to acquire high-quality hyperspectral images of pathological slides, with an actual resolution range of 1.025–1.830 \(\upmu\)m, a magnification of 10\(\times\), and an adjustable spectral range, suitable for acquiring hyperspectral images of most slides;

- Proposed a streamlined semi-automatic cell-level annotation refinement procedure and a corresponding user interface that quickly refine coarse manual annotations from pathologists into cell-level labels used in model training for hyperspectral pathological data;

- Introduced and publicly released a microscopic pathological hyperspectral dataset for lung squamous cell carcinoma (LUSC) segmentation, with an image size of 3088\(\times\)2064, a spectral range of 420–750 nm, and a spectral resolution of 5 nm, providing a valuable resource for future related research;

- Implemented a HybridSN-Att model with an attention module and conducted comprehensive experiments on our dataset with ML models before and after annotation refinement, showing that label refinement can greatly improve local feature extraction and segmentation accuracy.

The rest of this paper is organized as follows. The “Materials and methods” section describes the materials, the proposed HMI system and dataset, and the experimental methods. The “Experimental results and analysis” section presents the experimental results of the proposed methods and discusses the effect of label refinement and the post-processing strategy. The “Discussion” section discusses the results, limitations, and future directions of this study. Finally, the paper is summarized in the “Conclusion” section.

Materials and methods

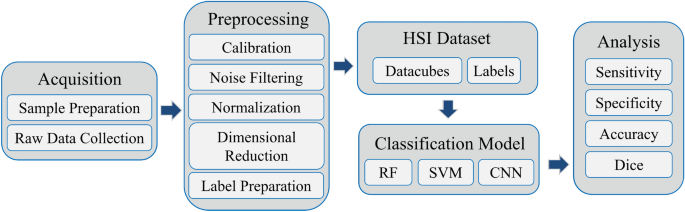

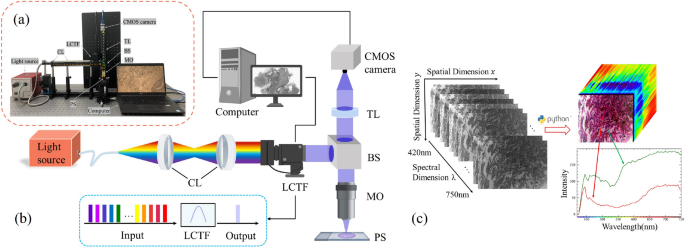

The framework of this study is illustrated in Fig. 1. Initially, we developed a custom hyperspectral microscopy system to acquire raw hyperspectral data from H&E-stained pathological tissue samples. Next, we performed a series of preprocessing steps and label preparation on the hyperspectral data to establish a high-quality hyperspectral dataset. Subsequently, we employed random forest (RF), SVM, 2D CNN, 3D CNN, and an improved hybrid CNN model to identify tumor tissues. Specifically, our improved model, named HybridSN-Att, draws inspiration from the recent work in remote sensing of Roy et al.30, which combines the 2D CNN and 3D CNN to enhance performance.

Biological samples

In this study, ten pathological slides of lung tissue were used, as shown in Fig. 2a. These slides were provided by the State Key Laboratory of Respiratory Disease at the First Affiliated Hospital of Guangzhou Medical University (GMU). We confirm that all methods were performed in accordance with the relevant guidelines and regulations, and that the study protocol and procedures were approved by the ethics committee of GMU. The ethics review approval number is 2022No.163.

All tissue samples underwent a series of standard preparation procedures carried out by professional physicians. The tissues were then stained with H&E. Finally, the entire tissue section was scanned using a digital slide scanner (NanoZoomer S360, Hamamatsu Photonics, Japan) at a magnification of 40\(\times\), achieving a pixel-level resolution of 0.23 \(\upmu\)m\(\times\)0.23 \(\upmu\)m. Several experienced pathologists labeled the digital histological images, as shown in Fig. 2b, primarily outlining the cancer margins of the tissue samples, which included the edges between cancerous and normal tissues.

Hyperspectral microscopic collection system setup and characterization

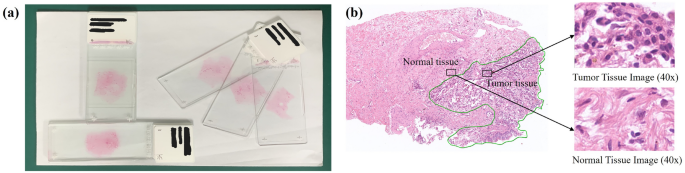

To obtain hyperspectral images of lung histopathology sections, we designed an HMI collection system. A photograph of the HMI system used in our experiments is shown in Fig. 3a. The main instruments include a halogen light source (OSL2, 150 W, 400–1600 nm, Thorlabs Inc., New Jersey, USA), a complementary metal oxide semiconductor (CMOS) camera (acA3088-57um, Basler Inc., Ahrensburg, Germany), a collimating lens set (AC254-030-AB/AC254-050-AB, Thorlabs Inc., New Jersey, USA), an objective lens (10\(\times\)/0.25, Olympus, Tokyo, Japan), and a liquid crystal tunable filter (LCTF-V10, Wayho Technology Co.). The HMI system has a wavelength range of 420–750 nm, with a total of 67 bands and a spectral resolution of 5 nm. The system can acquire a set of hyperspectral data in less than 20 seconds. It has a magnification of 10\(\times\), a field of view of 741 \(\upmu\)m \(\times\) 495 \(\upmu\)m, and a practical resolution in the range of 1.025–1.830 \(\upmu\)m depending on the light wavelength. Compared with current acquisition devices (see Table 1), the device used in this work offers a larger resolution and a faster scanning speed. Figure 3b shows the optical collection process of the hyperspectral microscopy data. The light from the source is transmitted through the supporting fiber optic bundle to the collimating lens group, where it is shaped into parallel light. By continuously adjusting the voltage, the LCTF splits the broad-spectrum light into a series of narrow spectral bands. This light is then reflected by the beam-splitting prism into the objective lens and focused on the lung histopathology section sample. The reflected light, which carries information about the tissue, passes through the beam-splitting prism again and reaches the target surface of the CMOS camera after being focused by the tube lens. In this way, we obtain spectral images stored in spectral band order. To prevent interference from external stray light, all experiments in this study were performed in a dedicated optical darkroom.

Experimental apparatus photograph (a) and experimental setup schematic diagram (b) of the HMI collection system. Collimating lens - CL; Liquid crystal tunable filter - LCTF; Beam splitter - BS; Microscopic objective - MO; Pathological slides - PS; Tube lens - TL; Complementary metal oxide semiconductor - CMOS. Schematic diagram of the hyperspectral data cube (c). Each data cube consists of 67 spectral channels and 3088\(\times\)2064 pixels, with each pixel containing information from different spectral bands, with the red and green curves corresponding to the cell nucleus and cytoplasm.

Microscopic hyperspectral dataset for pathological sections of lung tissue

In this experiment, within the pathologist-annotated areas of each slide, representative areas that were labeled with relatively high confidence were selected as regions of interest (ROI). These ROIs were then scanned with the HMI system described above. A total of 65 microscopic hyperspectral images were obtained from the pathological slides of lung tissue, converted into HMI datacubes (the procedure is detailed in the “Data preprocessing” section), and assembled into the hyperspectral dataset. As illustrated in Fig. 3c, each data cube consists of 67 spectral channels and 3088\(\times\)2064 pixels, with each pixel containing information from all spectral bands. Then, under the guidance of experienced pathologists, these images were annotated, primarily marking the boundaries between tumor and normal tissues and specifying the locations of some cancer cells. The annotated tumor images were later used for label refinement as described in the “Label preparation” section.

Data preprocessing

Hyperspectral data have high dimensionality and are often subject to noise interference from the environment, instruments, and the samples themselves. This complexity makes data processing challenging and time-consuming37. Some studies have shown that data processing difficulties and noise interference can be relieved by applying appropriate preprocessing methods38,39,40. The data preprocessing pipeline proposed in this research is based on four steps:

Generation of the hyperspectral data cube

First, the consecutive single-band images collected from the CMOS detector are stored, without any processing, as multi-band images in Band Sequential (BSQ) format. Subsequently, as depicted in Fig. 3c, we use the Python programming language along with its GDAL library41 to add the corresponding parameter information to each band of the stacked multi-band images and transform them into a hyperspectral datacube. Finally, the datacube is stored in RAW file format.

Image calibration

The second step involves the calibration of hyperspectral images. Initially, an area without any tissue on the glass slide is selected as a blank region, and images from this region are captured to serve as the white reference image (\(I_{white}\)). Subsequently, images of the dark noise (\(I_{dark}\)) of the CMOS detector are obtained under no illumination by turning off the light source and covering the objective lens. Calibration aims to eliminate the effects arising from spectral nonuniformity and detector dark current, making it an essential step in the preprocessing of hyperspectral images42, as indicated by Equ. (1):
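A common form of this white/dark reference calibration, consistent with the two reference images defined above, is sketched below. The symbols \(I_{raw}\) (the uncalibrated image) and \(I_{cal}\) (the calibrated image) are assumed names introduced here for illustration; the operation is applied per pixel and per band:

```latex
\begin{equation}
I_{cal} = \frac{I_{raw} - I_{dark}}{I_{white} - I_{dark}} \tag{1}
\end{equation}
```

Under this form, a tissue-free region maps to a value close to 1 and a fully absorbing region maps to a value close to 0, independent of the lamp spectrum and of the detector dark current.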

Noise filtering and normalization

The third step involves smoothing all hyperspectral data using the Savitzky-Golay (S-G) algorithm43 (window width is 5 and polynomial order is 2). This process aims to reduce interference from external noise and instrument noise on the HMI system. After smoothing, the hyperspectral data are standardized by the Standard Normal Variate (SNV)44, which reduces variability caused by different illumination conditions or sensor settings and allows comparison between different bands. Furthermore, SNV enhances the differences in spectral features between tumor and normal cells, making them more distinguishable from noise and background variation.
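The smoothing and standardization step can be sketched as follows. This is a minimal NumPy illustration rather than the authors' code: the function names, the edge handling (repeating the first and last band), and the small `eps` guard are assumptions, while the window width of 5 and polynomial order of 2 follow the text.

```python
import numpy as np


def savgol_kernel(window: int = 5, polyorder: int = 2) -> np.ndarray:
    """Least-squares Savitzky-Golay smoothing weights for the window centre."""
    half = window // 2
    offsets = np.arange(-half, half + 1)
    # Columns are offsets**0, offsets**1, ..., offsets**polyorder.
    design = np.vander(offsets, polyorder + 1, increasing=True)
    # Row 0 of the pseudo-inverse maps the window samples to the fitted value at offset 0.
    return np.linalg.pinv(design)[0]


def smooth_spectra(cube: np.ndarray, window: int = 5, polyorder: int = 2) -> np.ndarray:
    """Smooth along the last (spectral) axis of an array shaped (..., bands)."""
    kernel = savgol_kernel(window, polyorder)
    half = window // 2
    # Assumption: edges are handled by repeating the first and last band.
    pad = [(0, 0)] * (cube.ndim - 1) + [(half, half)]
    padded = np.pad(cube, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, window, axis=-1)
    return windows @ kernel


def snv(cube: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Standard Normal Variate: centre and scale each pixel spectrum individually."""
    mean = cube.mean(axis=-1, keepdims=True)
    std = cube.std(axis=-1, keepdims=True)
    return (cube - mean) / (std + eps)
```

For interior bands, `scipy.signal.savgol_filter(cube, 5, 2, axis=-1)` applies the same filter; only the treatment of the first and last two bands differs, because SciPy's default edge mode fits a polynomial there instead of repeating values.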

Dimensional reduction

The hyperspectral data in this study contain high-dimensional information, which not only increases computational and storage costs but also complicates data analysis and modeling. Dimensionality reduction has proven to be essential for analyzing high-dimensional data45, as it removes redundant information and accelerates algorithm execution, thereby enhancing data processing efficiency and model performance. In this work, the principal component analysis (PCA)46 method is employed to reduce the dimensionality of the hyperspectral dataset, aiming to improve model accuracy and algorithm speed.
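As an illustration of this step, PCA on a datacube can be sketched by treating every pixel spectrum as one sample. The function name and the choice of `n_components` are assumptions, since the number of retained components is not stated here. The sketch uses the band covariance matrix, which stays small (bands \(\times\) bands) even for large images.

```python
import numpy as np


def pca_reduce(cube: np.ndarray, n_components: int):
    """Project an (H, W, bands) cube onto its leading principal components.

    Returns the reduced cube (H, W, n_components) and the explained-variance
    ratio of each retained component.
    """
    h, w, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)
    centered = pixels - pixels.mean(axis=0)
    # Band-by-band covariance matrix of the pixel spectra.
    cov = centered.T @ centered / (len(pixels) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]
    scores = centered @ components
    ratio = eigvals[order] / eigvals.sum()
    return scores.reshape(h, w, n_components), ratio
```

Inspecting the returned variance ratios is one simple way to decide how many components to keep for a given dataset.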

Label preparation

Accurate labeling is crucial in hyperspectral imaging data. Physicians diagnose tumors primarily using cytomorphology3, which provides clear insights into cell structures and tissue. However, the annotation process is often tedious and labor-intensive, leading to coarse labels that can degrade data quality and negatively impact model training. To address this challenge, some studies have manually annotated approximately 10%–20% of the datasets and used these annotations to train network models, or experimented with ML methods by randomly selecting a small subset of feature points for testing47,48. Additionally, some researchers have collaborated with pathologists to comprehensively annotate the entire dataset28,49,50. Although these methods have shown certain levels of effectiveness, they also come with limitations, such as the risk of overfitting or the significant human effort and time required, often exceeding 30 minutes per slide. To overcome these issues, we implement a semi-automatic label refinement procedure to reduce data contamination and improve annotation precision, as illustrated in Fig. 4. This procedure requires only about 5 minutes to process a single slide. We use the K-means unsupervised clustering algorithm to classify hyperspectral data pixels into 20 types based on their spectral vectors. A custom interface is then used to manually select cell and background regions from these 20 types. Subsequently, the cell regions, background regions, and coarse labels are combined to generate a cell-level annotation with four types: non-cell (black), non-tumor cell (green), tumor cell (red), and background (blue). The distinction between non-tumor and tumor cells is guided by the tumor annotations provided by pathologists. The resulting classification map is overlaid on the original pathology image for pathologists to verify and adjust. This process improves the accuracy and consistency of the labels, thereby facilitating subsequent analysis and model training.
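The refinement procedure above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the integer class codes, and the plain Lloyd-style K-means are assumptions, and the interactive selection is represented simply by two sets of cluster indices chosen by the user.

```python
import numpy as np

# Hypothetical integer codes for the four annotation types.
NON_CELL, NON_TUMOR_CELL, TUMOR_CELL, BACKGROUND = 0, 1, 2, 3


def kmeans(pixels: np.ndarray, k: int = 20, n_iter: int = 50, seed: int = 0) -> np.ndarray:
    """Cluster (n_pixels, n_features) spectra into k groups; returns cluster indices."""
    rng = np.random.default_rng(seed)
    centers = pixels[rng.choice(len(pixels), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Squared Euclidean distances via ||x||^2 - 2 x.c + ||c||^2, shape (n, k).
        d2 = (
            (pixels**2).sum(axis=1)[:, None]
            - 2.0 * pixels @ centers.T
            + (centers**2).sum(axis=1)[None, :]
        )
        labels = d2.argmin(axis=1)
        new_centers = centers.copy()
        for j in range(k):
            members = pixels[labels == j]
            if len(members):  # keep the old centre if a cluster is empty
                new_centers[j] = members.mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


def refine_labels(cluster_map, cell_clusters, background_clusters, coarse_tumor_mask):
    """Combine user-selected clusters with the coarse tumor mask into four classes."""
    is_cell = np.isin(cluster_map, list(cell_clusters))
    is_background = np.isin(cluster_map, list(background_clusters))
    tumor = np.asarray(coarse_tumor_mask, dtype=bool)
    refined = np.full(cluster_map.shape, NON_CELL, dtype=np.uint8)
    refined[is_background] = BACKGROUND
    refined[is_cell & ~tumor] = NON_TUMOR_CELL
    refined[is_cell & tumor] = TUMOR_CELL
    return refined
```

In this sketch, a cell pixel inherits its tumor or non-tumor status from the pathologist's coarse region, while clusters selected as neither cell nor background fall into the non-cell type.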

Supervised classification

The supervised classification algorithms used in this work include RF, SVM, and CNN, which have been widely used for the classification of hyperspectral images51. RF is an ensemble classifier that aggregates the predictions of multiple decision trees to classify new data. It leverages the randomness introduced during tree construction to enhance the robustness and generalization of the model36. SVM is a kernel-based supervised classifier. It excels in analyzing high-dimensional feature spaces due to its strong generalization ability and robustness52. CNN is a deep learning model widely used in image processing and computer vision. In recent years, researchers have applied CNNs to hyperspectral data processing and achieved remarkable results53. Compared with traditional ML algorithms, CNNs can effectively use both spatial and spectral information in hyperspectral data to extract multiscale and multilevel features.

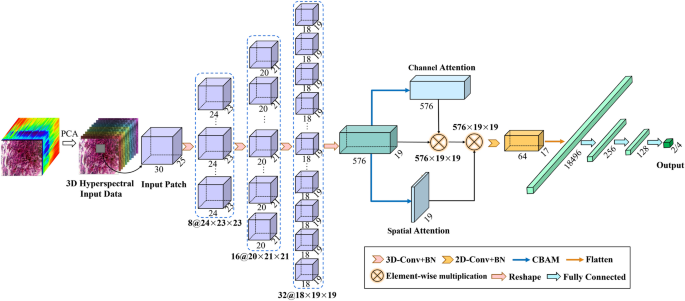

This study proposes a CNN model called HybridSN-Att, inspired by the work of Roy et al.30, who proposed a CNN model for remote sensing image classification. As shown in Fig. 5, the model consists mainly of a 3D-CNN and a 2D-CNN: the 3D-CNN helps to acquire joint spatial and spectral features from a set of specific bands, and the 2D-CNN effectively extracts the spatial features of hyperspectral images. In addition, we add batch normalization and convolutional block attention modules, comprising spectral channel attention and spatial attention, to the network structure to improve the classification efficiency and generalization ability of the model.

Experimental results and analysis

This study aims to evaluate the feasibility of generating cost-effective, high-quality lung tumor pathological labels in hyperspectral data through cell annotation refinement via K-means clustering and interactive selection. To assess the difference in annotation quality, several ML algorithms that focus on local features with small receptive fields are trained and tested on the dataset before and after annotation refinement. The prediction performance of all models under the two label sets is compared qualitatively and quantitatively to infer the effect and the limitations of annotation refinement. The results indicate that accurate labels reduce generalization noise, enhance prediction performance, and enable models to focus on cell-specific spectral features. Combined with our HybridSN-Att network, this approach delivers more reliable and informative tumor segmentation results, outperforming other models and better supporting pathologists in diagnostic tasks.

Experimental settings and metrics

We use our custom HSI dataset with 65 datacubes and remove eight spectral bands due to noise contamination, leaving 59 of the 67 bands. Labels are categorized into four types: non-cell ingredients (black), non-tumor cell (green), tumor cell (red), and background (blue). The ratio of training images to test images is 8:1. From each training image, 1000 pixels are randomly selected as patch centers, with a ratio of 4:1:3:2 across the label types to mitigate class imbalance. This ratio was determined experimentally and can be further optimized. Around each center pixel, we generate a 25\(\times\)25 patch, with zero padding at the image edges, and assign the label of the center pixel to the entire patch.
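The patch sampling described above can be sketched as follows. The function names and integer class codes are hypothetical, and the mapping of the 4:1:3:2 ratio onto the class order (non-cell, non-tumor cell, tumor cell, background) is an assumption based on the order in which the label types are listed.

```python
import numpy as np


def sample_centers(label_map, n_total=1000, ratio=(4, 1, 3, 2), seed=0):
    """Draw patch centres per class so that class counts follow `ratio`.

    Assumption: class codes 0..3 follow the order in which the ratio is given.
    """
    rng = np.random.default_rng(seed)
    weights = np.asarray(ratio, dtype=float) / sum(ratio)
    centers = []
    for cls, weight in enumerate(weights):
        rows, cols = np.nonzero(label_map == cls)
        if len(rows) == 0:
            continue  # class absent from this image
        n = int(round(n_total * weight))
        # Sample with replacement only when the class has too few pixels.
        idx = rng.choice(len(rows), size=n, replace=len(rows) < n)
        centers.extend(zip(rows[idx].tolist(), cols[idx].tolist()))
    return centers


def extract_patches(cube, label_map, centers, size=25):
    """Cut size x size patches around each centre from a zero-padded cube."""
    half = size // 2
    padded = np.pad(cube, ((half, half), (half, half), (0, 0)), mode="constant")
    # After padding, original pixel (r, c) sits at (r + half, c + half).
    patches = np.stack([padded[r:r + size, c:c + size] for r, c in centers])
    labels = np.array([label_map[r, c] for r, c in centers])
    return patches, labels
```

Padding the cube once, rather than per patch, keeps the centre pixel at index `(half, half)` of every patch, including patches drawn at the image border.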

All model code is executed on a Supermicro 7049GP-TRT workstation running a Linux operating system. This workstation is equipped with two Intel Xeon Gold 5118 CPUs and four NVIDIA GeForce RTX 2080 Ti GPUs. We use HybridSN-Att, 2D CNN, and 3D CNN as our network models for comparison purposes. All network models are trained for 50 epochs with the Adam optimizer and an initial learning rate of \(5\times 10^{-5}\). The batch size is set to 256. The SVM uses the radial basis function (RBF) kernel with default parameters. The RF model uses 50 estimators and a maximum depth of 10. To evaluate the supervised classification results from multiple perspectives, sensitivity (see Equ. (2)), specificity (see Equ. (3)), accuracy (see Equ. (4)), and Dice coefficient (see Equ. (5)) were used in this study.

Among them, the true positive (TP) indicates that the predicted result is positive and the actual value of the classification is positive. The false positive (FP) indicates that the predicted result is positive and the actual value of classification is negative. True negative (TN) indicates that the predicted result is negative and the actual classification value is negative. The false negative (FN) indicates that the predicted result is negative and the actual value of the classification is positive.
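In terms of these four counts, the metrics can be computed as in the sketch below. It uses the standard definitions of sensitivity, specificity, accuracy, and Dice coefficient, which are assumed to match Equs. (2)–(5); the function name and the choice to return NaN for an empty denominator are illustrative.

```python
import numpy as np


def _ratio(num, den):
    """Return num / den, or NaN when the denominator is zero."""
    return float(num) / float(den) if den else float("nan")


def binary_metrics(pred, truth):
    """Pixel-wise metrics for one class treated as positive (e.g. tumor cell)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    tn = int(np.sum(~pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return {
        "sensitivity": _ratio(tp, tp + fn),           # TP / (TP + FN)
        "specificity": _ratio(tn, tn + fp),           # TN / (TN + FP)
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),     # 2TP / (2TP + FP + FN)
    }
```

Because the Dice coefficient ignores true negatives, it is less inflated than accuracy when the positive class covers only a small fraction of the image.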

Evaluation of misguidance from coarse labels

We first investigated the data distribution differences between the two annotation methods, evaluated the resulting changes in model performance, and analyzed the underlying reasons for the experimental outcomes. The spectral differences among tissues, background, and cells are substantial, and significant class imbalance exists in pathological slides. Figure 6a visually compares cell-level and coarse annotations for a sample image (LUSC-3-8), while Figure 6b presents the percentages of tumor and non-tumor cells. Notably, non-cell components and background regions occupy a large portion of the image. Based on coarse annotations, tumor and non-tumor cells account for 2.5% and 2.7% of the total pixel occurrences, respectively. However, when considering the overall pixel distribution, tumor and non-tumor regions show a substantial imbalance, comprising 12.8% and 87.2% of the total pixels, respectively. This highlights the significant disparity in pixel occurrences between the two categories despite the similar proportions of tumor and non-tumor cells. This class imbalance suggests that training models with coarse annotations likely emphasizes the differences between tumor and non-tumor tissues rather than the chemical characteristics distinguishing tumor and non-tumor cells. Such a focus undermines the potential of HMI, which is designed to differentiate tumor and non-tumor cells based on spectral signatures. Addressing this issue may require incorporating loss functions or mechanisms that emphasize the spatial characteristics of cells during model training to better exploit cell-specific information.

This hypothesis is supported by the experimental results shown in Fig. 7, which contains HybridSN-Att predictions for four training images and two test images, based on coarse and precise labels separately. Although the coarse predictions closely match the coarse labels on the training images, the generalization ability of the model is very low, as significant noise can be observed in the test images in the last two rows. In contrast, although the precise predictions classify fewer tumor cells than the precise labels contain, they achieve a conservative and stable classification of tumor cells, with few non-tumor regions classified as tumor cells. As Table 2 shows, the use of coarse labels reduces accuracy significantly from 92.52% to 77.33% and specificity from 97.26% to 84.68%, and yields a higher standard error, indicating greater uncertainty. These quantitative metrics indicate that coarse labels lead to more aggressive tumor identification at the cost of more noise, uncertain boundaries around suspected tumor areas, and unclear guidance for pathologists. Although coarse labels raise sensitivity from 54.34% to 66.09% and the Dice coefficient from 55.28% to 60.6%, the improvement is minor and may be an artifact of the class imbalance or of the difference in the number of tumor pixels. It is possible that the HybridSN-Att model trained on coarse labels fails to distinguish the tiny spectral differences between tumor and non-tumor areas and concentrates more on the color of the tissue texture and the presence of background.

To this point, we have demonstrated that incorporating precise labels, which account for cells and background separately rather than relying on coarse annotations, significantly reduces generalization noise and enhances prediction performance. Coarse labels misguide the model by emphasizing global tissue differences rather than the distinct spectral characteristics of individual cells. This simplification leads the model to prioritize irrelevant or overly broad features, which dilutes its ability to accurately distinguish tumor cells from non-tumor cells based on their unique spectral signatures. Consequently, transitioning to cell-level annotations not only aligns the training process with the strengths of hyperspectral imaging but also provides a more robust foundation for cell-specific diagnostic tasks, ultimately improving the reliability and precision of pathological analysis.

Post-processing analysis and model comparison

To assist pathologists in finding tumor cells, instance segmentation is needed so that each cell is classified as either tumor or non-tumor without confusion or noise. In this study, post-processing techniques are used to reduce classification noise and create better visual segmentation presentations for pathologists. In this subsection, we first explain the mechanism and effect of our post-processing strategy. We then compare the prediction results of all models after post-processing and analyze the strengths and limitations of the models revealed by the visual presentation. For simplicity, we introduce the term “direct predictions” to describe the model classifications with the highest probability. This term is used in the absence of a standardized equivalent in the existing literature. In our post-processing strategy, shown in Fig. 8, pixels are initially assigned a type based on the highest probability, as shown in Fig. 8a. To ensure robustness, the assignment is refined by retaining only pixels that have a probability higher than 0.5 and form a connected area of at least 50 pixels of a given type. Pixels that fail to meet these criteria are considered undefined and displayed as white, resulting in intermediate predictions, as shown in Fig. 8b. In the next step, undefined pixels are reassigned to adjacent types based on a predefined priority: non-tumor cell (green), tumor cell (red), non-cell ingredient (black), and background (blue), as shown in Fig. 8c. When adjacent tumor and non-tumor cells are present, the connected area is assigned a single type by majority voting. This refinement process enhances the accuracy and consistency of LUSC predictions by resolving ambiguous pixel classifications.
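The first stage of this strategy, which turns direct predictions into intermediate predictions, can be sketched as follows. The function names, the `UNDEFINED` code, and the use of 4-connectivity are assumptions; the later reassignment and majority-voting steps are not covered by this sketch.

```python
from collections import deque

import numpy as np

UNDEFINED = -1  # hypothetical code for pixels shown as white


def connected_components(mask):
    """Yield each 4-connected region of True pixels as a list of (row, col)."""
    h, w = mask.shape
    seen = np.zeros(mask.shape, dtype=bool)
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, region = deque([(int(r0), int(c0))]), []
        while queue:  # breadth-first flood fill
            r, c = queue.popleft()
            region.append((r, c))
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w and mask[rr, cc] and not seen[rr, cc]:
                    seen[rr, cc] = True
                    queue.append((rr, cc))
        yield region


def intermediate_prediction(probs, min_prob=0.5, min_area=50):
    """Keep confident pixels that form large enough regions; mark the rest undefined.

    probs: array of shape (H, W, n_classes) holding class probabilities.
    """
    direct = probs.argmax(axis=-1)              # direct predictions
    confident = probs.max(axis=-1) > min_prob
    result = np.full(direct.shape, UNDEFINED, dtype=int)
    for cls in range(probs.shape[-1]):
        mask = (direct == cls) & confident
        for region in connected_components(mask):
            if len(region) >= min_area:
                rows, cols = zip(*region)
                result[list(rows), list(cols)] = cls
    return result
```

The pure-Python flood fill keeps the sketch dependency-free; for full 3088\(\times\)2064 images, a library routine for connected-component labeling would be considerably faster.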

Although this post-processing strategy is simple and effective, it predicts tumor cells conservatively and introduces additional bias. We calculated the evaluation metrics (see Eqs. (2–5) in the “Experimental settings and metrics” section) separately for each slide. The average value and standard deviation of each evaluation metric for tumor cells are presented in Table 3 for direct predictions and in Table 4 for post-processing predictions. Comparing the two tables shows that the conservative tumor detection post-processing strategy does not significantly reduce accuracy. However, because fewer tumor cells are predicted, average sensitivity decreases to varying degrees across all models. The smallest change occurs in HybridSN-Att, with sensitivity decreasing from 54.34% to 51.95%, while the largest change is observed in 2D CNN, dropping from 34.4% to 18.5%. For the Dice coefficient, HybridSN-Att also shows the smallest decrease, from 55.28% to 52.19%, while RF and SVM drop almost to zero. These quantitative results indicate that HybridSN-Att produces the most stable prediction probabilities, as it is least affected by the post-processing strategy. Specifically, it yields fewer undefined pixels and fewer connected areas of adjacent tumor and non-tumor regions.

Next, we evaluate the quality of the post-processed predictions, which are what pathologists see. From a qualitative perspective, several observations can be drawn from Fig. 9. All models predict cells relatively well, although considerably more cells are predicted than the labels contain, indicating that tissue near cells is also predicted as cells. In rows two and three, tissue and background are confused, which frequently occurs in large areas of tissue without cells or in the corners of the images. We contend that incorporating long-range dependencies or background boundary detection could mitigate this issue. Model limitations can also increase the confusion between tissue and background: the unoptimized SVM and RF severely misclassify the two types in rows one and five. RF and SVM also fail to distinguish tumor cells from non-tumor cells, resulting in predictions where most cells are classified as non-tumor cells. 3D CNN and 2D CNN predict tumor cells differently in all images, indicating that the two models focus on different spatial or spectral features, as expected. HybridSN-Att gives the predictions most similar to the ground truth labels, with minor discrepancies probably due to both errors in the ground truth labels and model limitations. From a quantitative perspective, all models achieve similar accuracy, from 90.51% to 92.52%, with HybridSN-Att performing best according to Table 3. However, since tumor cells occupy a small proportion of each image, usually less than 20%, accuracy alone cannot fairly reflect the ability to recognize tumor cells. Sensitivity is therefore another important metric that pathologists care about in real medical applications, because the higher the sensitivity, the lower the probability of a missed diagnosis. The unoptimized RF and SVM perform poorly, with sensitivity below 5%; they are almost unable to detect tumor cells, as Fig. 9 shows. The regular 2D and 3D CNNs achieve similar sensitivities of 34.49% and 31.01%, respectively. HybridSN-Att achieves the best sensitivity, 54.34%, which means that more than half of tumor-labeled cells are predicted as tumor; this performance is already useful for pathologists who, in real applications, do not require clear boundaries or detection of all tumor areas. This comparison suggests that a neural network model, specifically HybridSN-Att, which captures both spatial and spectral information, can better distinguish small differences between tumor and non-tumor cells in a 25\(\times\)25 local patch comparison. In contrast, the traditional RF and SVM need better parameter tuning to distinguish these small differences. For large differences, such as those between cell and non-cell components, RF and SVM achieve performance similar to that of the other models, with sensitivity between 80% and 87% and Dice scores from 66% to 73%, as Table 5 shows; SVM is an exception, with reduced performance due to its smaller 5\(\times\)5 patch size instead of 25\(\times\)25.
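To make the class-imbalance argument concrete, consider a hypothetical patch of 100 pixels in which 20 are tumor, and a degenerate classifier that labels every pixel as non-tumor, so that \(TP = 0\), \(FP = 0\), \(FN = 20\), and \(TN = 80\). Assuming the standard confusion-matrix definitions of the two metrics, this gives

\[
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} = \frac{0 + 80}{100} = 80\%, \qquad
\text{Sensitivity} = \frac{TP}{TP + FN} = \frac{0}{0 + 20} = 0\%.
\]

A model can therefore reach high accuracy without detecting a single tumor pixel, which is why accuracy is read together with sensitivity here.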

We have demonstrated that our post-processing strategy, leveraging the HybridSN-Att network focused solely on local features, can provide valuable tumor segmentation insights to assist pathologists. In contrast, simpler models such as unoptimized 2D CNNs and Random Forest (RF) yield less informative results, highlighting their limitations in capturing complex patterns. Nonetheless, all models, including the simpler ones, are capable of performing basic cell segmentation when precise annotations are available, underscoring the critical role of accurate labeling in enhancing segmentation performance.

Discussion

Our study presents a method for efficiently refining coarse annotations into cell-level labels, significantly improving segmentation performance and assisting pathologists. However, certain limitations require further investigation to improve the scalability and enhance the clinical applicability of the proposed framework. First, the method was evaluated exclusively on hyperspectral lung tumor pathology slides. This scope may limit its applicability to other organs or clinical scenarios. Expanding the evaluation to a broader range of pathology datasets, including diverse tumor types and imaging conditions, is essential to validate its generalizability. Second, the dataset used in this study is relatively small, which may affect the model’s robustness and ability to generalize. Future efforts should focus on expanding the dataset to include a larger number of samples and more heterogeneous tumor types, which would further improve model performance and reliability. Third, we employed conventional CNN-based models to validate the proposed approach. While effective, CNNs may struggle to fully capture the long-range spatial dependencies inherent in hyperspectral data. Incorporating more advanced architectures, such as U-Net, Vision Transformers (ViT), or hybrid models, holds promise for achieving state-of-the-art segmentation accuracy and further enhancing the performance of hyperspectral imaging analysis.

Additionally, the label refinement method used in this study generates cell-level annotations efficiently, completing the process within approximately 5 minutes per slide. While this demonstrates clear improvements over manual annotation methods, future work could further explore semi-supervised learning techniques. Iterative training strategies may further enhance cell annotations by improving the model’s ability to differentiate spectral characteristics between tumor and non-tumor cells.

Finally, the post-processing strategy applied in this work provides a solid foundation for refining segmentation results but leaves room for further advancements. Future developments could focus on intelligent post-processing techniques to resolve ambiguities, such as differentiating between tumor and non-tumor labels within the same cell. Additionally, integrating interactive segmentation tools would allow pathologists to manually adjust and refine results, improving both precision and reliability. Further efforts could also address the challenge of classifying cells with similar spectral curves through advanced clustering or classification methods, enhancing the overall accuracy of the framework. By addressing these limitations, future studies can further optimize the framework, enhancing its scalability, generalizability, and clinical applicability. This will pave the way for a more robust and adaptive tool, capable of advancing hyperspectral imaging analysis and supporting pathologists in accurate and efficient tumor diagnosis.

Conclusion

The accurate identification of tumor cells is crucial for reducing the time and effort required by pathologists to analyze pathological images. However, the performance of existing algorithms remains limited, primarily due to the lack of high-quality hyperspectral datasets. To address this challenge, this study proposes a novel method to mitigate the impact of noisy annotations on deep learning models for lung tumor diagnosis. Our approach refines coarse annotations into cell-level labels by combining K-means unsupervised learning with manual selection, effectively delineating cell and background regions based on spectral information.
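The refinement idea summarized above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the released implementation: it uses a plain Lloyd's K-means with deterministic initialization, two clusters, and toy three-band spectra, and it replaces the pathologist's manual cluster selection with a hypothetical stand-in rule (pick the cluster with the lowest mean intensity). All function names and values are illustrative.

```python
def kmeans(points, k, iters=20):
    """Plain Lloyd's algorithm; centroids start at evenly spaced points (deterministic)."""
    step = max(1, len(points) // k)
    centroids = [list(points[i * step]) for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def refine_mask(coarse_mask, labels, selected_clusters):
    """Keep a coarse tumor pixel only if its spectral cluster was selected as 'cell'."""
    return [int(m == 1 and lab in selected_clusters) for m, lab in zip(coarse_mask, labels)]


# Toy per-pixel spectra (3 bands): bright pixels mimic background, dark pixels mimic cells.
spectra = [
    (0.90, 0.90, 0.80), (0.20, 0.30, 0.20), (0.25, 0.30, 0.20),
    (0.20, 0.25, 0.20), (0.85, 0.90, 0.85), (0.90, 0.85, 0.80),
]
coarse_mask = [1, 1, 1, 0, 0, 1]  # coarse annotation: 1 = inside the hand-drawn tumor region

labels, centroids = kmeans(spectra, k=2)
# Hypothetical stand-in for the human-guided selection step: choose the darkest cluster.
selected = {min(range(2), key=lambda c: sum(centroids[c]))}
refined = refine_mask(coarse_mask, labels, selected)
print(labels, refined)
```

In this toy case the coarse region contains two background pixels; after intersecting it with the selected spectral cluster, only the cell pixels inside the region keep the tumor label.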

The method was validated using a hyperspectral LUSC dataset of 4355 images acquired with a custom-built HMI system. Both deep learning and traditional ML models were trained and evaluated, demonstrating a substantial improvement in pixel-level segmentation accuracy from 77.33% to 92.52% for the optimal model when incorporating spectral-based cell masks. These results highlight the potential of our approach to improve tumor diagnosis and pave the way for advanced techniques, such as semi-supervised learning and two-stage classification, to further enhance hyperspectral imaging applications.

The proposed method presents practical implications by streamlining the pathology examination process. Instead of manually annotating entire slides, pathologists can focus on confirming classification results, leading to substantial time savings and supporting the development of automated diagnostic tools. Additionally, the release of our hyperspectral dataset offers a valuable resource for future research, promoting innovation in hyperspectral imaging for medical diagnostics.

Data availability

The datasets generated during and/or analyzed during the current study are available in the HMILungDataset repository, https://github.com/Intelligent-Imaging-Center/HMILungDataset, or by contacting the corresponding author via email.

Code availability

The code for training and evaluating the HybridSN-Att model, together with the model weights that produced the best results presented in this paper, is available on GitHub at https://github.com/Intelligent-Imaging-Center/HMILungTestCode.

References

Ferlay, J. et al. Cancer statistics for the year 2020: An overview. Int. J. Cancer 149, 778 (2021).

Bray, F. et al. Global cancer statistics 2022: Globocan estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 74, 1 (2024).

Leiter, A., Veluswamy, R. R. & Wisnivesky, J. P. The global burden of lung cancer: Current status and future trends. Nat. Rev. Clin. Oncol. 20, 1–16 (2023).

Vo-Dinh, T. et al. A hyperspectral imaging system for in vivo optical diagnostics. IEEE Eng. Med. Biol. Mag. 23, 40–49 (2004).

National Lung Screening Trial Research Team. Reduced lung-cancer mortality with low-dose computed tomographic screening. N. Engl. J. Med. 365, 395–409 (2011).

Li, Z. et al. Deep learning methods for lung cancer segmentation in whole-slide histopathology images-the ACDC@lunghp challenge 2019. IEEE J. Biomed. Health Inform. 25, 429–440 (2021).

Jian-Sheng, W. et al. Identification and measurement of cutaneous melanoma superficial spreading depth using microscopic hyperspectral imaging technology. J. Infrared Millim. Waves 39, 749 (2020).

Schultz, R. A. et al. Hyperspectral imaging: A novel approach for microscopic analysis. Cytometry 43, 239–247 (2001).

Lu, G. & Fei, B. Medical hyperspectral imaging: A review. J. Biomed. Opt. 19, 010901 (2014).

Jansen-Winkeln, B. et al. Feedforward artificial neural network-based colorectal cancer detection using hyperspectral imaging: A step towards automatic optical biopsy. Cancers 13, 967 (2021).

Dicker, D. T. & Lerner, J. Differentiation of normal skin and melanoma using high resolution hyperspectral imaging. Cancer Biol. Therapy 5, 1033–1038 (2006).

Siddiqi, A. M. et al. Use of hyperspectral imaging to distinguish normal, precancerous, and cancerous cells. Cancer 114, 13–21 (2008).

Dremin, V. et al. Skin complications of diabetes mellitus revealed by polarized hyperspectral imaging and machine learning. IEEE Trans. Med. Imaging 40, 1207–1216 (2021).

Abdulla, A. M., Ullah, I., Alshboul, O., Bandara, N. & Shehadeh, A. Vehicle collisions analysis on highways based on multi-user driving simulator and multinomial logistic regression model on US highways in Michigan. Int. J. Crashworthiness 28, 770–785 (2022).

Shehadeh, A., Alshboul, O., Al-Shboul, K. F. & Tatari, O. An expert system for highway construction: Multi-objective optimization using enhanced particle swarm for optimal equipment management. Expert Syst. Appl. 249, 123621 (2024).

Alshboul, O., Mamlook, A., Shehadeh, A. & Munir, T. Empirical exploration of predictive maintenance in concrete manufacturing: Harnessing machine learning for enhanced equipment reliability in construction project management. Comput. Ind. Eng. 190, 110046 (2024).

Al-Shboul, K. F., Almasabha, G., Shehadeh, A. & Alshboul, O. Exploring the efficacy of machine learning models for predicting soil radon exhalation rates. Stoch. Env. Res. Risk Assess. 37, 4307–4321 (2023).

Shehadeh, A., Alshboul, O. & Tamimi, M. Integrating climate change predictions into infrastructure degradation modelling using advanced Markovian frameworks to enhanced resilience. J. Environ. Manag. 368, 122234 (2024).

Ma, L. et al. Automatic detection of head and neck squamous cell carcinoma on histologic slides using hyperspectral microscopic imaging. J. Biomed. Opt. 27, 046501 (2022).

Wang, Q. et al. Identification of melanoma from hyperspectral pathology image using 3d convolutional networks. IEEE Trans. Med. Imaging 40, 218–227 (2021).

Iglesias, J. E. & Sabuncu, M. R. Multi-atlas segmentation of biomedical images: A survey. Med. Image Anal. 24, 205–219 (2015).

Karimi, D., Dou, H., Warfield, S. K. & Gholipour, A. Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis. Med. Image Anal. 65, 101759 (2020).

LeBlanc, A. K. & Mazcko, C. N. Improving human cancer therapy through the evaluation of pet dogs. Nat. Rev. Cancer 20, 727–742 (2020).

Oh, J. H. & Cho, J. Y. Comparative oncology: Overcoming human cancer through companion animal studies. Exp. Mol. Med. 55, 725–734 (2023).

Dorent, R. et al. Scribble-based domain adaptation via co-segmentation. in Medical Image Computing and Computer Assisted Intervention–MICCAI 2020, 479–489 https://doi.org/10.1007/978-3-030-59710-8-47 (2020).

Akram, M. U. & Usman, A. Computer aided system for brain tumor detection and segmentation. IEEE Xplore01 (2022).

Tajbakhsh, N. et al. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med. Image Anal. 63, 101693 (2020).

Zhang, Q. et al. 3d-PulCNN: Pulmonary cancer classification from hyperspectral images using convolution combination unit based CNN. J. Biophotonics 14, e202100142 (2021).

Zhang, Q. et al. Taj-Net: A two-stage clustered cell segmentation network with adaptive joint learning of spatial and spectral information. Biomed. Opt. Express 15, 4584–4604 (2024).

Roy, S. K., Krishna, G., Dubey, S. R. & Chaudhuri, B. B. Hybridsn: Exploring 3-d-2-d CNN feature hierarchy for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 17, 277–281 (2020).

Huebschman, M. L., Schultz, R. A. & Garner, H. R. Characteristics and capabilities of the hyperspectral imaging microscope. IEEE Eng. Med. Biol. Mag. 21, 104–117 (2002).

Boucheron, L. E., Bi, Z., Harvey, N. R., Manjunath, B. & Rimm, D. L. Utility of multispectral imaging for nuclear classification of routine clinical histopathology imagery. BMC Cell Biol. 8, 1 (2007).

Akbari, H. et al. Hyperspectral imaging and quantitative analysis for prostate cancer detection. J. Biomed. Opt. 17, 0760051 (2012).

Zhu, S. et al. Identification of cancerous gastric cells based on common features extracted from hyperspectral microscopic images. Biomed. Opt. Express 6, 1135 (2015).

Hu, B., Du, J., Zhang, Z. & Wang, Q. Tumor tissue classification based on micro-hyperspectral technology and deep learning. Biomed. Opt. Express 10, 6370 (2019).

Liu, L. et al. Staging of skin cancer based on hyperspectral microscopic imaging and machine learning. Biosensors 12, 790 (2022).

Bioucas-Dias, J. M. et al. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 1, 6–36 (2013).

Yoon, J. Hyperspectral imaging for clinical applications. BioChip J. 16, 1–12 (2022).

Jo, J. A., Callico, G. M., Camacho, R., Plaza, L. & Sarmiento, R. Detecting brain tumor in pathological slides using hyperspectral imaging. Biomed. Opt. Express 9, 818 (2018).

Liu, N., Guo, Y., Jiang, H. & Yi, W. Gastric cancer diagnosis using hyperspectral imaging with principal component analysis and spectral angle mapper. J. Biomed. Opt. 25, 1 (2020).

GDAL/OGR Contributors. GDAL/OGR Geospatial Data Abstraction software Library. Open Source Geospatial Foundation, https://doi.org/10.5281/zenodo.5884351 (2024).

Lu, G. et al. Detection of head and neck cancer in surgical specimens using quantitative hyperspectral imaging. Clin. Cancer Res. 23, 5426–5436 (2017).

Amigo, J. M. Practical issues of hyperspectral imaging analysis of solid dosage forms. Anal. Bioanal. Chem. 398, 93–109 (2010).

Bi, Y. et al. A local pre-processing method for near-infrared spectra, combined with spectral segmentation and standard normal variate transformation. Anal. Chim. Acta 909, 30–40 (2016).

Ray, P., Reddy, S. S. & Banerjee, T. Various dimension reduction techniques for high dimensional data analysis: A review. Artif. Intell. Rev. 54, 3473–3515 (2021).

Alhayani, B. & Ilhan, H. Hyperspectral image classification using dimensionality reduction techniques. Int. J. Innov. Re. Electr. Electron. Instrum. Control Eng. 5, 71–74 (2017).

Zhang, X. et al. Fd-net: Feature distillation network for oral squamous cell carcinoma lymph node segmentation in hyperspectral imagery. IEEE J. Biomed. Health Inform. 28, 1552–1563 (2024).

Chen, Y. et al. Classification of hyperspectral images for detection of hepatic carcinoma cells based on spectral-spatial features of nucleus. J. Innov. Opt. Health Sci. 13, 2050002 (2019).

Ortega, S. et al. Hyperspectral database of pathological in-vitro human brain samples to detect carcinogenic tissues. https://doi.org/10.1109/isbi.2016.7493285 (2016).

Zhang, X., Li, W., Gao, C., Yang, Y. & Chang, K. Hyperspectral pathology image classification using dimension-driven multi-path attention residual network. Expert Syst. Appl. 230, 120615 (2023).

Ghamisi, P., Plaza, J., Chen, Y., Li, J. & Plaza, A. J. Advanced spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 5, 8–32 (2017).

Fang, L., Li, S., Duan, W., Ren, J. & Benediktsson, J. A. Classification of hyperspectral images by exploiting spectral-spatial information of superpixel via multiple kernels. IEEE Trans. Geosci. Remote Sens. 53, 6663–6674 (2015).

Halicek, M. et al. Head and neck cancer detection in digitized whole-slide histology using convolutional neural networks. Sci. Rep. 9, 14043 (2019).

Acknowledgements

This work was supported in part by the Equipment Research Program of the Chinese Academy of Sciences under Grant YJKYYQ20180039, in part by the Informatization Plan of the Chinese Academy of Sciences under Grant CASWX2021-PY-0110, in part by the Beijing Municipal Natural Science Foundation under Grant JQ22029, and in part by the Major Project of Guangzhou National Laboratory under Grant GZNL2023A03009. Yunfeng Nie acknowledges the Flemish Fonds Wetenschappelijk Onderzoek for supporting her research (1252722N, G0A3O24N, VS03924N).

Author information

Contributions

Z.Y.: Study design, Experimental system prototyping, Image acquisition, Data curation, Experiment result analysis, Writing - Original Draft; H.H.: Programming, Experimentation, Statistical analysis, Writing - Original Draft; R.G.: Medical sample acquisition, Data analysis, Validation; Y.C.: Medical sample acquisition, Data analysis, Project administration, Writing - Review & Editing; J.Z.: Funding acquisition, Methodology, Project administration, Supervision, Writing - Review & Editing; Y.N.: Conceptualization, Methodology, Experimental system prototyping, Experimentation, Supervision, Funding acquisition, Writing - Review & Editing. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yan, Z., Huang, H., Geng, R. et al. Improving lung cancer pathological hyperspectral diagnosis through cell-level annotation refinement. Sci Rep 15, 8086 (2025). https://doi.org/10.1038/s41598-025-85678-9
