Abstract

To address the challenges posed by varying heat generation modules in Ground Control Stations (GCS) during various work modes, a cooling system has been developed. This research introduces an Adaptive Variable Channel Control (AVCC) cooling approach using the Deep Reinforcement Learning Soft Actor-Critic (SAC) algorithm. The primary contributions of this paper include: (1) the design of a distributed cooling module featuring multiple cooling fans, which enables a variable channel cooling structure; (2) the development of a multi-module temperature control platform that simulates the heat generation conditions of each module under six work modes, providing a training environment for the cooling control algorithm; (3) the formulation of a model-free control method based on the SAC algorithm, AVCC, to optimize the cooling efficiency and endurance of the GCS. Finally, the effectiveness of the AVCC method was evaluated under maximum load conditions, contrasting it with a rule-based policy. The experimental results indicate that the AVCC method is able to cool the modules B (voltage stabilizer), Q (battery), E (charger), G (data transmission), D (voltage stabilizer), C (picture transmission), H (computer), and F (voltage stabilizer) from 78.9 °C, 58.5 °C, 88.3 °C, 88.3 °C, 79.2 °C, 88.9 °C, 77.5 °C, and 78.8 °C, respectively, to 50 °C in 280 s, improving the cooling efficiency by 40.4% and decreasing the energy consumption by 42.2% compared to the rule-based approach. The proposed distributed cooling module and AVCC method improve cooling efficiency and reduce energy consumption in the GCS. This study provides a valuable reference for the control and design of cooling systems in electronic equipment cabinets, especially those with similar shapes, sizes, cooling methods, number of heat sources and environmental conditions.

Similar content being viewed by others

Introduction

The development of ground control stations (GCS) for power line inspection robots (PLIRs) has progressed alongside technological advancements. The GCS, serving as the command center for the inspection robots, is equipped with a variety of electronic modules1. As PLIR technology evolves, the modules integrated into the GCS are becoming increasingly varied. These electronic modules inevitably generate heat during operation, leading to substantial heat buildup in the confined space over time2. Poor heat dissipation, leading to localized hot spots or excessive temperatures, is a major reason why electronic components may underperform or even fail3. Addressing these cooling challenges requires comprehensive research into the module distribution, cooling structures, and control algorithms within the electronic equipment.

The thermal characteristics of a GCS with a rectangular box structure have been extensively studied in previous research, primarily focusing on the internal structures containing multiple heat sources. These studies examine the layout, number, and density of heat sources to optimize module distribution and cooling channel design. One study explored the conjugate heat transfer in electronic modules within a rectangular box equipped with two axial fans for forced convection cooling, showing better results compared to natural convection4; Similarly, Venkatachalapathy conducted both experimental and numerical studies on heat transfer from protruding heat sources in a 5 × 4 array, discovering higher cooling efficiency in the first row of the array nearest to the inlet under various outlet conditions5. Ajmera et al. explored convective heat transfer in a multi-ventilated enclosed box with three flush-mounted heat sources at the bottom, discovering that the heat source closest to the inlet had the lowest surface temperature at all Reynolds numbers6. Ahamad studied laminar conjugate mixed convection and surface radiation in a vertical channel containing protruding heat sources, developing a data assimilation CFD model with reduced computational complexity7.

For small electronic devices, selecting appropriate cooling equipment is crucial to enhance cooling efficiency. Mongia et al. developed a compact refrigeration system for laptops, incorporating a micro compressor, microchannel condenser, microchannel evaporator, and a capillary tube as the throttling device, achieving a performance coefficient of about 3.7, comparable to that of modern household refrigerators8. Kong et al. proposed an innovative structural design of a two-stage channel hybrid PCM-air heat sink (HPHS). They evaluated the thermal performance of three design schemes: an air-cooled heat sink (AHS), a single-channel HPHS, and a two-stage channel HPHS. The results demonstrated that incorporating PCM significantly lowered the maximum temperature of the HPHS and enhanced the temperature uniformity of the heating surface compared to the AHS9. Qu et al. proposed a fin heat sink for thermal management in an electrical cabinet. The cooling system featured four identical heat sinks, each equipped with eight water-filled coreless L-shaped heat pipes at the base. The condensation section of the heat pipe employs hollow fins to improve heat transfer, significantly enhancing the temperature uniformity of the heat source10.

Jackson et al. used a two-phase cooling cycle with micro-evaporation technology for chip cooling experiments and evaluation. Through steady and transient condition tests, they maintained the average chip temperature below 85 °C using a simple single-input, single-output (SISO) strategy11. Wang et al. proposed a supervisory control method combining deep reinforcement learning (DRL) and proportional-integral-derivative (PID) control, deriving a set of the most inconvenient variables to measure as proxy observations to effectively describe the heat transfer process, thereby improving control efficiency under large disturbances. Additionally, the local heat transfer process was used as the training environment, which significantly reduced the training costs12. Typical SISO control strategies include PID control, model predictive control, adaptive control, etc. Considering the corresponding behaviors of each component under different operating states, finding the global optimal tuning of the system is not easy, and it may even be impossible to directly derive control laws13.

Model-based methods, such as model predictive control, use the system model to predict future states and minimize a cost function over the prediction period. This real-time rolling optimization process is highly dependent on the model’s accuracy14. In contrast, data-driven methods do not require physical information about the object, relying instead on statistical evaluation and artificial intelligence to assess and estimate operating conditions15.

Deep reinforcement learning (DRL) is a model-free method that combines deep neural networks (Q-networks) and reinforcement learning (RL). DRL has been applied to the HVAC industry16. Unlike model-based approaches, model-free methods such as reinforcement learning develop and refine their control strategies through trial-and-error interactions between the agent and the environment. This technique does not require prior knowledge of the device or environment to create a model.

With the development of PLIR technology, the proliferation of electronic modules in GCS has escalated, resulting in increased heat generation during their operation. While various cooling strategies have been investigated, comprehensive studies integrating module distribution, cooling structures and control strategies remain limited. This research identifies three main gaps: the need for dynamic adaptability and control strategies in GCS cooling systems; the lack of integrated optimization studies involving module distribution, cooling structures and control strategies; and the challenges associated with applying DRL to GCS cooling control. Therefore, this paper aims to analyze the heat dissipation schemes for electronic modules in GCS, explore and evaluate the application of DRL-based control methods in GCS cooling systems, and propose an efficient and robust cooling control system.

In this paper, a cooling control system for a GCS is designed based on the SAC algorithm of DRL. The primary contributions and technical advancements presented in this paper are as follows:

-

(1)

A distributed fan cooling module is engineered to tailor the flow channels according to the cooling demands of the GCS across different work conditions and modes. The performance of this module is analyzed, resulting in the development of its air volume control curve.

-

(2)

A cooling control strategy, termed AVCC, based on the SAC algorithm, is proposed, and the cooling control problem of the GCS is transformed into a suitable task for solving the AVCC algorithm by using the finite discrete Markov decision process (MDP). A heat source simulation test platform, HAS (Heat Approximation System), is developed to simulate the heat generation of different modules of the GCS under various work modes. This platform is compatible with algorithm training integration.

-

(3)

A comparative analysis between the AVCC method and traditional SISO methods verifies the efficacy and advantage of the AVCC cooling control strategy.

The rest of the paper is organized as follows. The second part introduces the basic structure and work modes of the GCS, as well as the heat generation state corresponding to its various work modes. The third part defines the cooling problem as an MDP and details the cooling control scheme based on the SAC. The fourth section presents the results of the experimental research. Finally, the fifth section discusses the conclusions and future work through comparison with the simulation results.

Problem description

Ground control station

In Fig. 1, the GCS serves as an integrated device for multiple electronic modules, generating substantial heat during operation, thus consuming the performance of the modules and increasing energy consumption. Therefore, it is necessary to design the cooling performance of the GCS to improve the efficiency of the modules while extending the work time. The modules required to work under different work conditions of the GCS are not the same, which changes the heat source. For example, when the robot is autonomously inspecting, the GCS only needs to work with the image transmission radio and the display; in addition, the ground control station needs to add or reduce some modules to extend its functions. The position of the added or reduced modules is different, the shape is different, and the heat generation is different, which further changes the heat source and the flow channel. Therefore, altering the fan speed for a single flow channel may not be the most effective approach for enhancing cooling efficiency.

Heat source distribution

Figure 2 displays the module distribution of the GCS under study. Heat flow arises from temperature differences, allowing temperature to be likened to potential difference, and thermal resistance can be derived from Ohm’s law. When assessing the radiator’s heat dissipation capacity, the total thermal resistance of the radiator is commonly employed as an evaluation metric. In actual engineering, the radiator’s temperature field is three-dimensional and non-uniformly distributed, requiring consideration of various factors.

Radiation heat transfer is primarily influenced by location and surface materials; hence, when input power and ambient temperature remain constant, radiation heat transfer resistance can be regarded as a constant value. Thus, the impact of radiative thermal resistance can be disregarded in the thermal resistance network model. Additionally, employing the thermal superposition algorithm4, the thermal resistance network model of ground control station modules can be analyzed as shown in Fig. 3.

By employing the thermal resistance network model, the heat source is represented as a resistance. Through the analysis of the box’s distribution, the fundamental principle of the series and parallel thermal resistance network model is illustrated in Fig. 3, where \({T}_{in}\) represents the external environment temperature, \({T}_{out}\) is the temperature released by the box to the outside world, \({\text{T}}^{\text{min}}\) and \({\text{T}}^{\text{max}}\) represent the temperature change before and after the fluid flows through the heat source, respectively, the letters B, D, F represent the voltage module, C for pictorial radio station, E for 6S charger, G for digital radio station, Q for battery, H for industrial control machine. Incorporating the ventilation design of the GCS, the left side of the box serves as the air inlet, while the right side as the air outlet.

According to the above thermal resistance network model, for the current module layout of GCS, the main thermal resistance area is distributed on both sides of the box, which provides a reference for the installation position of the radiator.

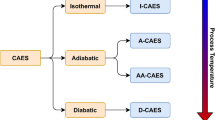

In addition, the following six main heating states are obtained by analyzing the GCS operating modes in Fig. 4: (a) the modules Q and E work simultaneously in charging mode; (b) the Q, C, G, B, and D modules work simultaneously in the remote control mode; (c) the modules B, Q, C, D, H, G, and F belong to the manual control mode; (d) the modules B, C, D, and Q work simultaneously in image transmission mode; (e) the modules B, Q, H and F work simultaneously in data processing mode; (f) the modules B, C, D, Q, H, E, G and F all work in maximum load mode.

The GCS remotely controls the PLIR to transmit, store and process images at room temperature of 25 °C. The maximum operating temperature of each module is determined by measuring the temperature reached after 30 min of stable operation. An NTC temperature sensor with a resistance of 10 K, ± 1% accuracy, and a detection range of − 25 °C to 125 °C was employed. The measurement results are shown in Table 1.

Design and method

Distributed heat dissipation module design

To improve heat dissipation and considering the existing module layout of the ground station, the selection of the heat dissipation method is initially analyzed. Compared to natural convection, forced air cooling relies on a fan for power, significantly increasing air movement speed and enhancing heat dissipation. The heat flow density of forced air cooling is approximately 5 to 10 times higher than that of natural convection17. Designing a forced air-cooling system involves optimizing heat dissipation parameters, selecting appropriate cooling fans, and designing fluid air ducts. These aspects must balance the heat dissipation area, airflow, and air pressure drop to maximize cooling efficiency. While forced air cooling is more effective than natural convection and not as effective as forced liquid cooling, it offers advantages in terms of complexity, size, weight, and maintenance. Therefore, the GCS uses forced air cooling for heat dissipation.

Structural design

Based on the above analysis, the heating conditions of the module vary during different operating modes and a single heat dissipation channel is insufficient to manage all heat generation. To accommodate the varying thermal requirements of the GCS work module and to minimize power consumption, two sets of heat dissipation fans are installed on either side of the enclosure, as shown in Fig. 5. Each set consists of an intake fan and an exhaust fan.

The configuration of the modules and the placement of the fan determines the creation of distinct flow channels, allowing precise control of the position of each module within the enclosure. Detailed parameters of a single fan are given in Table 2.

Calibrating radiator parameters is crucial for electronic equipment, as the radiator is responsible for efficiently dissipating the generated heat to ensure stable operation. Accurate calibration optimizes heat dissipation, enhancing the radiator’s performance and extending the equipment’s lifespan. The reverse series structure of the two fans in each module group alters their original characteristics, necessitating testing and analysis of the fan module airflow parameters. The tests use a lithium battery power supply to stabilize the output voltage, an STM32 to generate PWM waves with varying duty cycles to control fan speed, and an airflow meter to measure airflow changes. To mitigate the effects of external airflow, sleeves are fitted to both sides of the fan set. Polynomial fitting curves establish the relationship between PWM and airflow in CFM.

where \({a}_{i}\) is the coefficient of the polynomial,\(x\) is the PWM value.

Flow channel design

According to the structural design, varying flow channels can be generated by controlling different fan modules. Different number of modules and the spiral controllable area of each module; however, the arrangement of the modules can impact the channels and potentially lead to channel blockages; Fig. 6 shows the position layout of the modules in the box. L and W are the distance between the module and the box, the dimensions of the box and the fan are such that the fan occupies 53% of the side area of the box. These fans are evenly positioned on both sides of the box.

To optimize the positioning of the internal module for adjusting airflow, ensuring it reaches the surface of the primary heat source and enhances cooling efficiency, we rely on fluid flow principles governed by equations of mass, momentum, and energy conservation. In this context, we focus on the distribution of the flow field and the air outlet without considering heat transfer. Thus, the fluid control equations in the cooling air channel encompass only the conservation equations for mass and momentum.

The mass conservation equation18:

where \(\rho\) represents the gas density; \(t\) stands for time; \(\overrightarrow{v}\) is the velocity vector.

The momentum conservation equation is expressed19:

where \(\rho\) represents the gas density; \(\overrightarrow{v}\) is the velocity vector; \(t\) stands for time; \(\overrightarrow{F}\) is the force of the fluid in all directions; \(p\) is the static pressure; and \(\mu\) is the dynamic viscosity.

As the airflow drive is a basic forced air-cooling setup, the chosen turbulence model is the RNG \(k\)-\(\varepsilon\) model, and the model entails two equations governing the turbulent kinetic energy \(k\) and the airflow dissipation rate \(\varepsilon\), which are expressed as follows20:

\(k\) equation:

\(\varepsilon\) equation:

For the turbulent viscosity \({\mu }_{t}\), the expression is:

the expression of \({C}_{1}^{*}\) is:

where \({C}_{1}=1.42,{\eta }_{0}=4.377,\beta =0.012,\eta ={\left(2{E}_{ij} \cdot {E}_{ij}\right)}^{1/2}\frac{k}{\varepsilon }{,E}_{ij}=\frac{1}{2}\left(\frac{\partial {u}_{i}}{\partial {u}_{j}}+\frac{\partial {u}_{j}}{\partial {u}_{i}}\right)\) and further the values of the remaining constant terms in the model are \({C}_{2}=1.68,{\sigma }_{k}=1.0,{\sigma }_{\varepsilon }=1.3,{C}_{\mu }=0.0845\).

Based on the above analysis, COMSOL simulation analysis can be used to minimize calculations. The design of the distributed cooling module, with its high air volume, eliminates the need for special design within the ground control station box module. Achieving effective heat dissipation from the enclosure involves maintaining a specific distance between each module and avoiding particularly module layouts.

Heat dissipation control method

Method outline

To improve the heat dissipation efficiency of the GCS, we first design the structure of the heat dissipation module. We then analyze the internal flow field of the GCS to identify suitable heat dissipation flow channels. We then introduce two control algorithms for the heat dissipation module: SISO and AVCC. During testing, we evaluate the key parameters of these algorithms, including wind speed, equivalent heat sources and the heat transfer principles of the HAS. The wind speed test has a significant impact on the evaluation of the algorithms, as both algorithms regulate the fan speed by adjusting the PWM value of the heat dissipation module. Without measuring the output voltage and airflow corresponding to each PWM value, the control accuracy of the algorithms would be compromised, affecting the evaluation results. In testing, an equivalent heat source replaces the actual heat source, necessitating an analysis of its alignment with the real heat source. The degree of alignment directly affects the accuracy of the test data. Finally, to create an environment consistent with the operation of the AVCC algorithm, we analyze the HAS control signals and the rules for reading data logs.

SISO algorithm

After the design of the distributed heat dissipation module is completed, an appropriate algorithm is selected to control the heat dissipation module. Conventional electronic cabinets use constant airflow to dissipate heat, and some use a hierarchical approach to adjust the heat dissipation at a certain temperature. This increases the degree of freedom compared to constant airflow. These two methods are referred to as single-input and single-output control mode (Single In Single Out—SISO). For comparison, the hierarchical SISO control mode of the ground control station is shown in Fig. 7.

AVCC algorithm

However, compared to SISO control, GCS thermal control needs to identify the heating states of different modules and construct the optimal air-cooling flow channels in real time by controlling the distributed heat dissipation modules. Reinforcement learning is well suited to solve this type of problem, which not only requires strong perception, adaptation to the dynamic environment and improved decision quality, but also needs to solve the continuous multi-stage decision problem under uncertainty. The essence of the reinforcement learning problem lies in defining it as a Markov decision process (MDP), depicting the interplay between the agent and the environment. This MDP framework comprises four fundamental components: state (\(S\)), action (\(A\)), state transition probability (\(P\)), and reward (\(R\))16. The state space, action space, and rewards are deliberately predefined, and we’ll delve into their specifics later. Unlike the MPC, the state transition probability matrix is unknown and requires the agent to update it iteratively by trial and error21. DRL algorithms can be divided into two families: value-based method and policy-based method. In contrast to the policy-based approach, the value-based method is limited to discrete actions but offers lower computational costs for training22. This is because the policy-based methods need to maintain the action network and the critical networks separately, while the value-based method is only based on the Bellman equation and only needs to calculate the Q-network in the iterative process23. In addition, deep RL algorithms can be divided into two types: on-policy and off-policy; on-policy algorithms use the current strategy, while off-policy algorithms can be trained with the data collected by other strategies24. Common on-policy algorithms are Reinforce, common off-policy algorithms are DQN, DDPG, PPO, etc.25. SAC algorithm is an off-policy algorithm developed for maximum entropy reinforcement learning (Maximum Entropy Reinforcement learning)26. Compared with DDPG, Soft Actor-Critic uses a random strategy stochastic policy, which has certain advantages over the deterministic strategy27.

We introduce the concept of the MDP and apply it to the thermal management system under consideration. In this context, the state is solely determined by the action taken at time step t + 1 and the state information available at time step t, completely independent of any prior states at t-1, t-2, and so forth. This relationship is formally described by the following equation:

This study employs deep reinforcement learning, specifically leveraging the maximum entropy principle alongside the SAC algorithm, to tackle the optimal resolution of the heat dissipation problem.

To formulate the heat dissipation regulation task of the GCS (Distributed Heat Dissipation Module Control) into a solvable form by RL, we integrate the fundamental RL framework consisting of an agent and the thermal environment of the GCS. By incorporating the MDP modeling approach, the heat dissipation procedure of the GCS is investigated as follows:

State \(S\): The current state \({s}_{t}\) predominantly comprises the actual temperature \({\text{T}}_{m}\) of each module, the ideal work temperature \({\text{T}}_{e}\) of the module, and the work state \({\text{F}}_{m}\) of the fan group.

Action \(A\): The action signal \({a}_{t}\) is transmitted to the environment by the agent, and the system’s controller analyzes the data to regulate fan output and control the temperature of the heat source. Fan input is determined based on the policy \(\uppi\), which continuously explores directions of higher reward value through the algorithm.

Rewards \(R\): The instantaneous reward \({r}_{t}\) indicates the reward received by the cooling module in a given time \(t\), which is obtained when the agent performs different cooling actions \({a}_{t}\) based on the current temperature state \({s}_{t}\) of each module.

Transfer probability \(P\): After determining the current temperature value, the transition probability \({P}_{{s}_{t+1}|{s}_{t},{a}_{t}}\) to the next state \({s}_{t+1}\) is calculated based on the current state \({s}_{t}\) and the heat dissipation behavior \({a}_{t}\).

The deep reinforcement learning framework is shown in Fig. 8.

Given the state information \({s}_{t}\in S\) and the policy \(\uppi\) provided by the environment, the agent interacts with the environment. At each time step, the agent receives rewards for its actions. Through exploration of the action space \(A\), the Agent continuously learns optimal policies \({\pi }^{*}\) to maximize rewards.

If the simulation starts at the time \(t\) of a session, the cumulative reward is:

where the discount factor \(\upgamma \in \left[\text{0,1}\right]\) determines the balance between present and future rewards. Furthermore, as the agent iteratively refines its policy \({\pi }^{*}\), the value function of the state in the period is expressed as follows:

Therefore, the most effective policy \({\pi }^{*}\) for the Agent is to maximize the \({V}^{\pi }\left({s}_{0},0\right)\). Generally, the above reward function is regarded as a sensible approach to reward. Besides considering the objective function, it is also important to take into account the system constraints. Thus, they need to be included in the reward scheme for optimization. Consequently, several penalty functions are introduced to tackle these constraints. The penalty function for the number of fans FN constraint at time step t is defined as follows:

where \({\varphi }_{1}\) and \({\varphi }_{2}\) are penalty coefficients, generally larger and associated with the model.

In addition, considering the constraint of the fan running time FT and the heat source temperature change HT, the penalty function is as follows:

where \(\Delta t\) represents the ratio of the actual operating time of the fan to the total duration of the cycle,

where \({\varphi }_{3},{\varphi }_{4}\) and \({\varphi }_{5}\) are penalty coefficients respectively.

Subsequently, the overall penalty function of the HAS system over the specified duration can be expressed as follows:

According to the construction of the above problem and the definition of the penalty function, the immediate reward in the period t can be given by:

Hence, the state value function \({V}^{\pi }\) is formulated as follows:

After tuning the coefficients \(\left\{{\varphi }_{1},{\varphi }_{2},{\varphi }_{3},{\varphi }_{4},{\varphi }_{5}\right\}\), the optimal policy \({\pi }^{*}\) that maximizes \({V}^{\pi }\left({s}_{0},0\right)\) can be determined by carrying out the optimal action \({a}^{*}\).

Therefore, a finite MDP \(\left(S,A,P,r,\gamma \right)\) is constructed to represent the optimization challenge of discrete HAS systems, and the RL approach is applied to solve this task.

According to the SAC algorithm and the network structure, the conventional model-free DRL algorithm faces two significant challenges: high sampling complexity and poor convergence, which mainly depends on parameter adjustment. Particularly, the proximal policy optimization and A3C, as commonly used policy gradient algorithms, will face the conflict of exploration and exploitation, while the greedy mechanism neutralizes these two situations. To enhance sampling efficiency, non-policy algorithms like deep deterministic policy gradient are introduced, but these algorithms perform poorly due to high parameters. Therefore, the maximum entropy-based SAC algorithm, a non-policy DRL approach, is utilized to enhance learning robustness and sampling efficiency. The SAC algorithm uses three key techniques: an actor-critic architecture with policy and value DNNs (Separate actor and critic), reuse of the experience replay buffer for non-policy formulation, and maximum entropy to balance exploration and learning stability.

Soft value function: The primary goal of the RL task is to follow the policy \(\uppi \left(a|s\right)\) that maximizes the expected future return. Unlike traditional RL algorithms, this study incorporates a maximum entropy term \(\text{H}\left(\uppi \left(a|s\right)\right)=-\text{log } \uppi\left(a|s\right)\) into the reward function to promote exploration. The redefined reward formula can be constructed as follows:

where \(\lambda\) modulates the level of randomness in the strategy, termed as the temperature parameter. For a given current state \({s}_{t}\) and action \({a}_{t}\), transform the entropy term into a Q-value function:

State values \({V}^{\pi }\left(s\right)\) derived from the Q-value function can be represented as follows:

Then the optimal solution to the policy equation is:

Since the LogSumExp operation is referred to as Softmax, the value function is termed the soft-value function, while the Q-value function is designated as the soft Q-value function. Furthermore, the optimal policy \({\pi }^{*}\left( \cdot |s\right)\) is no longer in the form of a Gaussian distribution. Instead, it is an entropy-based policy (EBP) model linked to the value function, offering enhanced generalization capability.

Critical section: Within the actor-critic network framework, the critic and actor play two key roles, handling tasks known as policy evaluation and policy improvement. By iteratively applying these two processes, the learning algorithm progresses towards achieving maximum long-term rewards. This section introduces the strategy evaluation method and uses the good convergence and stability of the deep neural network (DNN) to perform the value function approximation task.

The soft-Q values were estimated using the output values \({Q}^{\theta }\left(s,a\right)\) of the parameterized DNN by \(\theta\), i.e. \(Q\left(s,a\right)\approx {Q}^{\theta }\left(s,a\right)\). To break the correlation between input data required by the DNN, this research uses the empirical memory replay mechanism to store the historical experience of each time step interaction with the environment in a replay buffer, i.e., \(M\leftarrow \left(s,a,r,{s}^{\prime}\right)\cup M\). The parameter \(\theta\) of the DNN is updated based on the mini-batch tuple information \(\left(s,a,r,{s}^{\prime}\right)\) randomly sampled in batches in the replay buffer.

The performance evaluation of the DNN, parameterized by \(\theta\), depends on the mean square error (MSE) between the target Q-value \(\widehat{Q}\left(s,a\right)\) and the estimated Q-value \({Q}_{\theta }\left(s,a\right)\), akin to the deep Q network (DQN) algorithm.

where \({\widehat{V}}_{\widehat{\vartheta }}\left({s}_{i}^{{{\prime}}}\right)\), representing the target state values. To ensure the accuracy of \(Q\left(s,a\right)\) and minimize the loss function L, a commonly used method is gradient descent to update the parameter \(\theta\):

where \({\alpha }_{c}>0\) representing the learning rate of the evaluation network, typically set to a small value, and \({\nabla }_{\theta }\text{L}\left(\theta \right)\) signifies the gradient of \(\text{L}\left(\theta \right)\), computed as:

To improve the stability of the training, another DNN is introduced via parameterization \(\vartheta\) as a state value estimation function, i.e., \(V\left(s\right)\approx {V}_{\vartheta }\). Similarly, the mean square error (MSE) is employed to assess the performance of the DNN:

Correspondingly, the updating procedure for the DNN for the parameter \(\vartheta\) is calculated as follows:

Furthermore, to stabilize the updated comments, the \(\widehat{\vartheta }\) parameterized DNN is used to estimate the target state value function \({\widehat{V}}_{\widehat{\vartheta }}\left(s\right)\). The parameter \(\widehat{\vartheta }\) is obtained by the smoothly updating the weights of the value network based on the soft state.

where \(\kappa \in \left(\text{0,1}\right)\) is the soft factor.

Action section: Once the estimate is obtained, the primary objective is to determine the direction of policy improvement. It’s crucial to note that the state space is continuous, necessitating the use of approximate functions to formulate strategies for each state. Another parameterized DNN by \(\varphi\) is used to represent the policy \({\pi }_{\varphi }\left( \cdot |s\right)\), which is trained by the Eq. (23). Directly adopting the EPB model or the general normal distribution can be challenging. Hence, instead of the EBP, a random network of state conditions is utilized to account for sampling effects. Subsequently, the KL divergence is employed to quantify the disparity between \({\pi }^{*}\left( \cdot |s\right)\) and \({\pi }_{\varphi }\left( \cdot |s\right)\).

This section also introduces a reparameterization technique \({f}_{\varphi }\left({\tau }_{t};{s}_{t}\right)\), where \(\tau\) is sampled from a standard normal distribution for action noise. Specifically, the policy \({\pi }_{\varphi }\left( \cdot |s\right)\) produces a mean distribution \({\pi }_{\varphi }^{\mu }\left({s}_{t}\right)\) and a standard distribution \({\pi }_{\varphi }^{\sigma }\left({s}_{t}\right)\), constructing a normal distribution. This resultant normal distribution is then utilized for the action decision process.

Based on Eqs. (27) and (28), Eq. (32) can be reformulated as follows:

The gradient equation \(L\left(\varphi \right)\) for the parameter \(\varphi\) is as follows:

Actions under the parameter \(\varphi\) is updated to:

where \({\alpha }_{a}\) is the learning rate of the action, for a smaller positive value.

Thus, the overall system structure of the AVCC algorithm is illustrated in Fig. 9. It comprises three components: the actor network, the critic network, and the experience replay buffer. The actor network maps states to actions, the critic network estimates the values of states and state-action pairs, and the replay buffer stores experiences. The action is generated by a parameterized DNN, which includes a dense layer and a ReLU activation function. The critic network comprises a soft-Q network, a state value network, and a target state value network. These networks are adjusted using time-domain difference, error correction, and stochastic gradient descent methods.

Prior to using the policy approximation policy \({\pi }_{\varphi }\left( \cdot |s\right)\), a re-parameterisation method with standard normal distributed sampling noise \(\text{N}\sim (\text{0,1})\) is used to address the sampling problem of the EBP model. In the presence of the current environmental state \({s}_{t}\), the actor determines the action \({a}_{t+1}\) according to the policy \({\pi }_{\varphi }\left( \cdot |s\right)\). Subsequently, after receiving the reward \({r}_{t}\) and the next state \({s}_{t+1}\), the tuple \(\left({s}_{t},{a}_{t},{r}_{t},{s}_{t+1}\right)\) is stored in the empirical replay buffer M. To mitigate the correlation of the data inputs, the critic obtains random samples from M, using the state values \({V}_{\vartheta }\left(s\right)\) and the Q-value networks \({Q}_{\theta }\left(s,a\right)\) for training purposes. During training, the temporal difference (T.D.) error is computed along with the associated loss function for backpropagation, which updates the parameters ϑ and θ of the corresponding deep neural networks (DNNs), refining the parameters \({Q}_{\theta }\left(s,a\right)\) and \({V}_{\vartheta }\left(s\right)\). After multiple iterations, the target state value network’s parameters are updated using a smoothing update approach. The learning process is sustained through iterative interactions with the environment, accumulation of experiences, and adjustment of DNN parameters based on batch sampling from the replay buffer. This cycle continues until termination or the achievement of the final state.

Key parameter analysis

Wind speed test

The ability of the algorithm to control the distributed heat dissipation module can be optimized is largely dependent on the ability to accurately control the fan module. Therefore, we determined the parameters of the fan module through testing, as is shown in Fig. 10. The wind speed test platform is controlled by an STM32 single chip machine, which uses a 6S power supply to provide power to the test platform. However, the 12 V voltage regulates the speed of the fan module via the PWM driver, which outputs a PWM waveform. The wind speed sensor then measures the airflow of the fan module. To reduce the interference from the external environment, we installed sleeves on both sides of the fan module, installed a wind speed sensor in one side of the sleeve, and used a multimeter to detect the output voltage of the PWM driver.

Equivalent heat source

To evaluate the performance of the algorithms and ensure the reliability of the test data, a dedicated test platform must be established. Due to the high cost of the GCS, this study replaces the internal electronic module of the GCS with a custom sized silicone heater. It operates at a standard heating power of 0.5 W/cm2, with a control voltage of 12 V, and can generate temperatures up to 150 °C. Each side of the heater is equipped with a temperature sensor to accurately monitor the module’s actual temperature. The arrangement of all the heaters matches the dimensions of the original module to maintain consistency.

At low speeds, gas flow is stable, incompressible, and three-dimensional. In considering heat source conduction and conjugate heat transfer, we assume uniform heat conduction within the electronic module, and the bottom of no heat conduction, gas property is constant, can use the natural convection assumption Boussinesq to prove the silicone heaters and electronic modules have similarity. Then the heat transfer equation for silicone heaters in Cartesian coordinate system is proved by the following equation.

As shown in Fig. 11 the three-dimensional size of a single module is \({L}_{x}\times {L}_{y}\times {L}_{z}\). Therefore, the three-dimensional steady-state thermal conductivity equation \(T\left(x,y,z\right)\) of the module is expressed as follows28:

Set the boundary condition as follows:

-

1.

The side surface of the module is surface average convection with heat transfer coefficients \({h}_{x}\) and \({h}_{y}\);

-

2.

The top surface of the module is surface average convection with a heat transfer coefficient \({h}_{z}\) of convective heat exchange;

-

3.

The bottom surface of the module is a uniform boundary heat source Q.

Then the boundary condition description formula is:

where \(k\) is the thermal conductivity of the module and the \({T}_{f}\) is temperature of air flow. The three-dimensional steady state thermal conductivity equation is solved using a bivariate Fourier expansion, which can be varied as:

where \({\lambda }_{xm},{\lambda }_{yn}\) and \({\beta }_{mn}\) are all feature values that meet:

Utilizing the upper surface boundary conditions of the module, we can deduce that \({A}_{mn}\) and \({B}_{mn}\) satisfy the following equation:

among:

Therefore, the relationship \({A}_{mn}\) and \({B}_{mn}\) can be obtained, and the two-parameter Fourier expansion of the boundary conditions on the bottom surface of the module (heat source surface) can be derived as follows:

Integrating the above formula:

Thus, the 3D steady-state thermal conductivity equation \(T\left(x,y,z\right)\) of the box can be approximated.

Heat transfer principle of the HAS

To realize the control capability of the AVCC algorithm, the control method of the HAS needs to be constructed. The HAS needs to control the operation of the heat dissipation module, and read the temperature of each heat source at the same time, so the controller is designed as follows29. The HAS body consists of two STM32s, temperature sensor, PWM driver, voltage regulator module, etc. One of the STM32s is tasked with receiving the control signal from the upper computer to regulate the PWM driver, while the other is responsible for acquiring temperature sensor data and transmitting it to the host computer. The 8 drivers control the distributed heat dissipation module, and the other 8 drivers control the heat status of the heat source. The host computer, a PC, executes the AVCC algorithm to either receive or transmit control signals via the serial port.

The control signal rules of the hardware controller are as follows. The temperature sensor reads the data \(S\_{TEM}_{i}\) of all the modules every 3 s and uploads it to the PC via the STM32:

In addition, there are two separate temperature data \({T}_{avg},{T}_{env}\), where \(\left[\begin{array}{ccc}{M}_{1}& {M}_{2}& \begin{array}{cc}\cdots & {M}_{8}\end{array}\end{array}\right]\) is the module number, the temperature value sampled for five points of each module, \(\left[\begin{array}{ccc}{T}_{0}& {T}_{1}& \begin{array}{cc}\cdots & {T}_{4}\end{array}\end{array}\right]\) is the temperature sampled at five points of each module, \({T}_{avg}\) is the temperature inside the box, and \({T}_{env}\) is the ambient temperature.

After the upper computer PC receives the state data, the required speed and running time of each fan \({{\text{A}}_{\text{FAN}}}_{j}\) are obtained through the algorithm analysis and sent to STM32 through the serial port.

where \(\left[\begin{array}{cccc}FA& FB& FC& FD\end{array} \right]\) is the distributed fan module label, each group contains two fans, 1 represents forward rotation and 2 represents reverse rotation; \(\left[\begin{array}{cccc}PA& PB& PC& PD\end{array} \right]\) is the relative speed value of each module fan; \(\left[\begin{array}{cccc}TA& TB& TC& TD\end{array} \right]\) represents the running time. For two Fans of each module, the relative speed P and running time T must be determined (take fan module A as an example):

-

Case 1: If, then \({PA}_{1}>0{{TA}_{1}\ne 0,PA}_{2}=0,{TA}_{2}=0;\)

-

Case 2: or;\({PA}_{1}=0{{TA}_{1}=0,PA}_{2}\ne 0,{TA}_{2}\ne 0\);

-

Case 3: or, then.\({PA}_{1}=0{{TA}_{1}=0,PA}_{2}=0,{TA}_{2}=0\).

Considering the single-threaded nature of STM32, a multi-task algorithm founded on time series principles has been devised to guarantee the execution time and priority of each task, which is essential for verifying program correctness and ensuring stability:

First, the time series \({T}_{0}\) in the extraction \({{\text{A}}_{\text{FAN}}}_{j}\),

Set the minimum cycle delay time \(min(t)\), this value is 1 s, and find the time series maximum \(\text{max}({T}_{0})\), and define the maximum as the total number of cycles; then do the following operations on the time series value,

where \({T}_{1}\) is the new time series. Extract the action sequence \({P}_{0}\), and do the following operations to get a new action sequence \({P}_{1}\).

Map the calculated time series \({T}_{1}\) and action sequence \({P}_{1}\) to the action state value, get the update \({{\text{A}}_{\text{FAN}}}_{j}\), and return to the loop.

Additionally, the STM32 requires time to execute each instruction, a duration influenced by the clock frequency. Typically, a higher clock frequency results in shorter execution times for statements, with each instruction executing within dozens of clock cycles. The clock frequency of the STM32 used in this paper is 168 MHz. This allows millions of seconds of less than one second. For the algorithm, the cycle time is 300 s, i.e., the longest execution time of the action sequence is 300 s, so the error of this parallel algorithm can be considered negligible. However, in the actual test, the Pt100 temperature sensor has a certain hysteresis, which introduces a certain error. The principle of the hardware controller is shown in Fig. 12.

Simulation and experiment

Flow channel simulation

To analyze the channel under different combinations of cooling modules, the cooling channel was simulated using COMSOL software. In this simulation, the two fan modules on one side serve as the air inlets, defined as velocity inlets, with an air volume of 8.78 dm/s. The two fan modules on the opposite side function as the air outlets, defined as pressure outlets, with the surface pressure set to zero by default. The walls are designated as no-slip boundaries, and the standard wall function is applied for the boundary layer. The six module combinations and their corresponding results are presented in Table 3.

Combination 1 is the two fan modules on the left and the two fan modules on the right providing outward air; Combination 2 is the two fan modules on the left providing inward air and Fan 3 on the right is the two fan modules on the left providing inward air and two fans on the right as an air outlet; Combination 4 is the Fan 2 providing inward air and Fan 3 providing outward air; Combination 5 is the Fan 1 providing inward air and Fan 2 providing outward air; Combination 6 is the Fan 3. These six combinations are used as the basic movements of the training model.

From the simulation results, Combination 1 is the most uniform streamline; basically covers the whole box body inside, and the flow rate is higher; In Combination 2, due to the inward air supply of Fan 4, the convective flow experienced significant weakening, causing the internal flow channel to shift to the right side of the dense streamline, where a higher flow rate occurs; The streamline of Combination 3 is dense on the left but relatively sparse on the right, the high flow rate appears on the left side; The streamline of the combination 4 is composed of Fan 2 and Fan 4, mainly distributed in the lower part of the box; The streamlines of combination 5 are mainly distributed on the left, the right side is relatively sparse, and the high flow rate appears on the left side; Combination 6 is a single Fan 4, although the streamline is more dense, but the flow rate is low, the high flow rate area is concentrated near the fan. Therefore, it can be demonstrated that the distributed heat dissipation module can control the formation of the flow channel within the enclosure by adjusting the inward air supply and outward exhaust of the fan module, as well as different operational combinations.

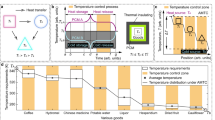

Algorithm training test platform

According to the above analysis, the test platform is shown in Fig. 13. The AVCC algorithm runs on the PC terminal, and the hardware controller is connected by two data lines. One line sends action instructions to the hardware controller, while the other transmits the temperature data readout to the PC terminal. The hardware controller is powered by a 12 V DC power supply. During training, we set the interval for each action to 10 s and the stopping condition to a maximum temperature of 50 °C. After each cycle, the HAS resets to its initial state and awaits the next set of control instructions.

Result and discussion

Distributed heat dissipation module

Equivalent heat source performance test results

We also measured the heating effect of the equivalent heat source, i.e. the passive cooling effect. As shown in Fig. 14, B, D, F, and G use heaters of the same specification, and C and E use heaters of the same specification. Each heater heats up to our desired temperatures in a relatively short time, with more uniform heating and cooling, and the simulated heat source performs as required for the test.

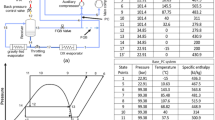

Fan parameter calibration results

In the experiment, we controlled the output range of PWM to [0,600] with a load value of 600 and a frequency of 16,800 Hz. When the PWM output value is 600, the PWM will output the voltage value of 11.98 V, which can control the fan speed, and then realize the measurement of different air volume. Set the PWM output step to 30, and determine the maximum value within 10 s after each change as the determination value. According to the results, the maximum airflow of a single fan module is 8.78 dm3/s, which represents a 31.6% c decrease compared to the original airflow. This reduction achieves the parameter correction of the designed fan module. By analyzing the test data, the air volume of the fan module varies greatly in the first five tests, so the output value step of PWM needs to be further shortened. As shown in Table 4, the PWM output step size was set to 10, and the maximum value was determined within 10 s after each adjustment. As the determined value, the PWM value output range was [0,150]. Since the starting voltage of the fan is 5 V, the adjustment range of the fan module is \(\text{5-12V}\), and the lowest air volume during operation is 2.78 dm3/s.

According to Table 4, the following curve can be fitted: As shown in Fig. 15, when the PWM value approaches 100, the rate of change in air volume begins to decrease as the PWM value increases. This indicates reduced control performance and the need for a broader range of PWM values. The formula obtained by fitting a 5th-degree polynomial is as follows:

where CFM is the air volume, \(x\) is the PWM value, and \({\text{R}}^{2}=0.964\) is the degree of fit.

Four sets of eight small cooling fans are positioned on each side of the enclosure. Testing of the distributed cooling module reveals that the maximum airflow of a single fan module is 8.78 dm3/s, representing a 31.6% reduction compared to the airflow of a single fan. By testing within the PWM output range of [0, 600], we obtained the air volume PWM fitting, achieving precise control of the cooling module. Additionally, simulation experiments demonstrated that the distributed heat dissipation module can control the airflow channels by operating different fans.

In six groups of simulations, we used the maximum air volume as the initial value for the fan. The airflow distribution from one side of the enclosure to the exhaust channel exhibited the most uniformity and achieved the highest outlet flow rate. Each operational mode presented its own set of advantages and disadvantages. The fourth group proved optimal for heat dissipation at the bottom of the cabinet, the fifth group for the left side, and the sixth group for the top right corner.

Different flow channels can be employed based on the areas covered and the heating conditions, achieving more accurate heat dissipation and energy saving. The air volume-PWM fitting formula allows reasonable transmission of air volume by detecting the heating situation of the heat source in the area, further saving energy in the heat dissipation system. However, our algorithm slightly limits the high degree of freedom inherent in the distributed cooling module. This limitation confines the simulation to six modes. To save training time, we set the PWM range to [− 600, 600] with a step size of 100. A negative PWM value represents the fan exhausting outward, a positive value represents air intake, and a PWM of 0 indicates that the fan module is stopped and only acts as a vent.

SISO to control the effect of heat dissipation

In this hierarchical control mode, the test uses fan modules 1 and 2 for inward air intake and modules 3 and 4 for outward air exhaust, resulting in a forward superposition configuration. Based on the simulation results, this arrangement of channels achieves the optimum cooling effect. The algorithm is relatively simple and can be implemented by writing it to an STM32 microcontroller. To monitor the data, the system connects to a PC via serial communication, using a serial communication assistant to obtain relevant data. Under the control of the SISO algorithm, the temperature sensor constantly reads the temperature value of each module. Once the temperature of a module exceeds 50 °C, the heat dissipation module is activated. When the maximum temperature of a module is within the range of 50 °C to 60 °C, all PWM values are set to 200 for heat dissipation, resulting in an airflow of 7.3 dm3/s per fan. When the temperature is in the range of 60 °C to 70 °C, all PWM values are set to 400, with an air volume of 8.1 dm3/s per fan. If the maximum temperature exceeds 70 °C, all fans operate at their maximum air volume.

The temperature of the GCS in the maximum load work mode and the changes in the SISO graded control mode are shown in Fig. 16. The heating test platform is adjusted upwards according to the temperature value of the module under actual work conditions. The initial temperatures of modules C, G, and E are set to 90 °C, the temperatures of modules B, D, H, and F are set to 80 °C, and the temperature of module Q is set to 60 °C. Module Q reaches the target temperature the fastest. By observing the change in curve for module G, there are two inflection points. The temperatures at these inflection points are 70 °C and 60 °C, respectively, proving that SISO graded control switches between different air volumes as the temperature changes. All modules experienced a decrease in temperature from their initial values and reached the target temperature at approximately 350 s.

AVCC training results

The algorithm was trained for 300 episodes, and the results are shown in the Fig. 17. The training process begins to converge around the 40th episode. This early convergence suggests a rapid learning rate, which is advantageous for the efficiency of the algorithm. Moreover, after reaching convergence, the stability of the curve fluctuations indicates that the algorithm is resilient to variations in hyperparameters. This robustness is a crucial characteristic, as it implies that the algorithm can maintain its performance despite potential changes in the training environment or parameter settings. Therefore, the algorithm has good performance in dealing with the heat dissipation problem of GCS.

AVCC to control the effect of heat dissipation

To verify the effectiveness of the algorithm, experiments were performed with all simulated heat sources operating at their maximum temperatures. Figure 18 illustrates the heat dissipation performance regulated by the AVCC algorithm under full load conditions. The heating test platform was configured based on the actual temperature values of each simulated heat source during operation. However, due to real-time response delays, the initial temperature of each simulated heat source may deviate from the preset temperature.

As illustrated in Fig. 18, among all the modules, C and E exhibit the highest initial temperatures, both at 90.7 °C. Conversely, module Q has the lowest starting temperature, at 60.5 °C. The curves for modules C and E indicate that their heat dissipation efficiencies are nearly identical during the initial 80 s of cooling. However, after 90 s, module C demonstrates a higher heat dissipation efficiency compared to module E. The thermal efficiency of module G closely resembles that of modules C and E, yet module C remains the fastest in dissipating heat. Notably, module Q reaches 50 °C the quickest, owing to its lower initial temperature. Nevertheless, module Q is also the first among the eight modules to experience temperature fluctuations, which are attributed to the heater console’s continuous heating of the module.

Additionally, the initial temperatures of modules B, D, H, and F are 81.3 °C, 82.2 °C, 80.3 °C, and 80.8 °C, respectively. The module B exhibits the highest thermal efficiency, followed by the modules D and F, while the module H has the lowest efficiency. This discrepancy in thermal efficiency for the module H is attributed to its placement and volume.

Fan action control results under AVCC

Figure 19 shows the actions taken by the AVCC algorithm during the heat dissipation process, reflecting the regulation of the airflow channels. In this context, a positive action value indicates that the fan is supplying air into the box, while a negative value indicates that the fan is exhausting air outwards.

At the beginning of the process, Fans 1 and 2 are exhausting air outwards, while Fans 3 and 4 are supplying air inwards. After the second time step, and during the third time step, the rotation of the fans changes and remains in this state for approximately 90 s. This adjustment reflects the initial high temperatures concentrated around Fans 3 and 4. As the algorithm adjusts the fan rotations, higher reward values are obtained, leading to maintained rotations with only minor adjustments to the airflow of each fan.

At 110 s, the rotation of each fan changes again, and the airflow from the four fans gradually decreases. By this time, the temperature of module Q has dropped to the target temperature (50 °C), and the maximum temperature of all modules is below 75 °C. Continuous high-speed fan operation did not achieve the highest reward value, prompting further adjustments.

Fan 1 stops after 180 s, followed by Fan 2. At this point, modules B, C, D, and F have reached the target temperature, leaving only Fans 3 and 4 running. Due to the higher temperatures of modules G and H, Fans 3 and 4 are most effective in cooling these modules. As these modules approach the target temperature, the airflow is minimized, and the process continues for 240 s, considering the effect of running time on the reward value.

It is noteworthy that when the PWM output action value is set to 0, the HAS halts the operation of the fan and the heat source in accordance with the action command. In this case, a PWM-0 value is assigned to 30, causing the fan to run at its minimum air volume. This accounts for the fluctuations in the temperature of each module after reaching the target temperature.

Comparison the heat dissipation effects under AVCC and SISO

Figure 20 illustrates the comparative cooling performance of the AVCC and SISO control algorithms. Under the AVCC control algorithm, when the heat dissipation time reaches 80 s, the temperatures of the modules H and B are 68.5 °C and 65.4 °C, respectively. In contrast, under the SISO control algorithm, the temperatures of the modules H and B at the same time are 68.6 °C and 65.5 °C, respectively. At 90 s, the temperature of the G module under the AVCC algorithm is 75.1 °C, compared to 75.5 °C under the SISO algorithm. When the heat dissipation time reaches 120 s, the temperatures of the modules D, C, F, and Q are 58 °C, 64.5 °C, 61.3 °C, and 49.5 °C, respectively, under the AVCC algorithm. In comparison, under the SISO algorithm, these temperatures are 58.5 °C, 65.3 °C, 61.8 °C, and 50.5 °C, respectively. The temperature change curves in Fig. 20 indicate that the modules H and B, the module G, and the modules D, C, F, and Q under the AVCC algorithm exhibit faster heat dissipation rates starting from the 80 s, 90 s, and 120 s time nodes, respectively, compared to their counterparts under the SISO algorithm. This demonstrates the superior heat dissipation control of the AVCC algorithm over the SISO algorithm, particularly in the middle and late stages of heat dissipation. Additionally, the AVCC algorithm reduces energy consumption by 42.2% compared to the SISO algorithm under high load conditions.

Comparison of energy consumption and heat dissipation efficiency under AVCC and SISO

As shown in the Fig. 21, the energy consumption of the AVCC algorithm is mapped as the area enclosed by the red graph and the axes, and the energy consumption of the SISO algorithm is mapped as the area enclosed by the blue graph and the axes, and the area of the AVCC and the SISO area (the blue area in the Fig. 21) is the energy consumption saved by the AVCC over the SISO, which is 42.2%.

The change of PWM value characterizes the rotational speed change of the heat dissipation module during operation, and the power consumption of the heat dissipation module is also related to the air volume and air pressure. Since the rotational speed of the heat dissipation module is constant within the sampling point (10 s per unit of runtime), the product of rotational speed (i.e., the PWM value of the heat dissipation module) and the runtime can be used to map the change of its energy consumption during the whole cycle, and the relevant derivation is shown in the following equation:

Total energy consumption is expressed as:

where \(P\) is the total energy consumption , \(E\) is energy, and \(t\) is time.

Fan energy consumption:

where \(\rho\) is the air density, \(Q\) is the air volume, \(H\) is the air pressure, and \(\eta\) is the fan efficiency.

The equation of rotational speed versus air volume and air pressure:

where \({k}_{1}\) , \({k}_{2}\) are proportionality constants.

Bringing the equation for speed versus airflow and air pressure into the equation for fan power consumption:

Since power is the rate of change of energy, combining the above equation gives:

Then the change in energy \(\Delta E\) over the time period \(t\) can be expressed as:

Since the rotational speed \(N\) is a function \(N(t)\) of time \(t\) during the control process, it follows that:

From the control strategy, it can be seen that the rotational speed of the fan module is constant within a control step of 10 s, from which the above equation can be simplified as:

At this time, the energy consumption is proportional to the time, but in order to simplify the calculation and more intuitive embodiment of the energy consumption of the cooling module changes, the present use of the product of the PWM value and time for mapping, as shown in Fig. 21.

In the maximum load condition, Fig. 22 shows the time taken for each module to cool down from the maximum temperature to 50 °C under the control of the AVCC and SISO algorithms. The fan does not stop working until all modules have been reduced to 50 °C. The total time spent by the AVCC algorithm and the SISO algorithm for each module to reduce to 50 °C is 810 s and 1360 s, respectively, and there is a difference of 550 s between them, and the ratio of the difference of 550 s to 1360 s can be known to show that the AVCC algorithm improves the overall thermal efficiency of the AVCC algorithm relative to that of the SISO algorithm by 40.4%. Among them, under the control of AVCC and SISO algorithm, the G module is the slowest to drop to 50 °C, respectively, spend 230 s and 340 s, the difference between them is 110 s, through the ratio of 110 s and 340 s can be concluded that the AVCC algorithm cooling efficiency is higher than the SISO algorithm by 31.4%. The module Q in the control of the two algorithms spends the same amount of time for 90 s, and the cooling efficiency is the same, the module B in the control of AVCC algorithm spends the same amount of time for 90 s, and its thermal efficiency is the same. The module B spends 160 s to drop to 50 °C under AVCC algorithm control and 180 s under SISO algorithm control. For the module B alone, the cooling efficiency under AVCC algorithm is only 11.1% higher than that of the SISO algorithm. It is the module with the smallest difference in thermal efficiency among all the modules, and the modules Q and B have lower starting temperatures among all the modules with less significant effect on thermal efficiency compared to other modules with higher starting temperatures. This shows that the higher starting temperature, which has a more significant effect on the thermal efficiency. It highlights the effectiveness of AVCC algorithm in high temperature modules.

Limitations

This study focuses exclusively on the cooling performance of the cooling system for a heat source at room temperature. However, environmental factors have a significant impact on the performance of the cooling system and the inlet air temperature may vary with changes in ambient temperature. Future research could investigate the effect of varying ambient temperatures on the performance of the cooling system.

The tests conducted in this paper use simulated heat sources due to the higher cost of testing under actual electronic module conditions. Although the simulated heat source differs from a physical module in both material and shape, this may introduce some inaccuracies in the analysis. Future work could consider testing directly under real heat source conditions.

In this paper, a simulated heat source is used as a training environment for the algorithm, which is time consuming. In real-time applications, DRL can face limitations such as high computational cost, demanding hardware requirements, and delayed responses. These aspects could be optimized and improved in future research.

Conclusion

In this research, we designed an in-box distributed heat dissipation module and built a physical heat source simulation platform. The AVCC method is used to improve the heat dissipation capacity of GCS. The conclusions can be summarized as follows:

-

(1)

By analyzing six different operating modes of the GCS at a room temperature of 25 °C and combining them with its heat dissipation requirements, a distributed heat dissipation structure capable of regulating the flow channel variations was designed.

-

(2)

The HAS was developed using the STM32 platform. A pseudo-parallel control algorithm based on time series analysis was designed and implemented, providing a robust hardware framework for algorithm training. A test platform for algorithm training was built, allowing the HAS to simulate the heating conditions of different heat sources and collect training data. The heat source within the HAS is simulated and the training data obtained may differ from the real conditions. Subsequent training will be carried out under actual heat source conditions where possible.

-

(3)

A model-free heat dissipation control algorithm, AVCC, using DRL was proposed to effectively manage the heat dissipation under different work modes. The optimization problem was formulated as a decision problem using a finite discrete MDP, facilitating algorithmic implementation. Comparative analysis showed that the AVCC algorithm, based on the SAC algorithm, exhibited a 40.4% improvement in heat dissipation efficiency over the SISO-based control method, accompanied by a 42.2% reduction in energy loss.

Data availability

The datasets used and/or analyzed during the current study are available from the second author (Jiali Tao) on reasonable request.

References

Alhassan, A. B. et al. Power transmission line inspection robots: A review, trends and challenges for future research. Int. J. Electr. Power Energy Syst. 118, 105862. https://doi.org/10.1016/j.ijepes.2020.105862 (2020).

Rehman, T. et al. Experimental investigation on the performance of RT-44HC-nickel foam-based heat sinks for thermal management of electronic gadgets. Int. J. Heat Mass Transf. 188, 122591. https://doi.org/10.1016/j.ijheatmasstransfer.2022.122591 (2022).

Yuan, C. et al. Locally reinforced polymer-based composites for efficient heat dissipation of local heat source. Int. J. Therm. Sci. 102, 202–209. https://doi.org/10.1016/j.ijthermalsci.2015.11.015 (2016).

Thepsut, W. et al. Numerical and experimental investigation on heat transfer of multi-heat sources mounted on an array of printed circuit boards in a rectangular case. Appl. Therm. Eng. 156, 156–167. https://doi.org/10.1016/j.applthermaleng.2019.04.032 (2019).

Venkatachalapathy, S. et al. Experimental and numerical investigation of mixed-convection heat transfer from protruding heat sources in an enclosure. Exp. Heat Transfer 25, 92–110. https://doi.org/10.1080/08916152.2011.582566 (2012).

Ajmera, S. K. et al. Experimental investigation of mixed convection in multiple ventilated enclosure with discrete heat sources. Exp. Therm. Fluid Sci. 68, 402–411. https://doi.org/10.1016/j.expthermflusci.2015.05.012 (2015).

Ahamad, S. I. et al. A simple thermal model for mixed convection from protruding heat sources. Heat Transfer Eng. 36, 396–407. https://doi.org/10.1080/01457632.2014.923984 (2015).

Mongia, R. et al. Small Scale Refrigeration System for Electronics Cooling Within a Notebook Computer 751–758 (IEEE, 2006).

Kong, D. et al. Design of additively manufactured hybrid PCM-air heat sink with a two-stage channel for enhancing thermal performance. Appl. Therm. Eng. 218, 119325. https://doi.org/10.1016/j.applthermaleng.2022.119325 (2023).

Qu, P. et al. Numerical and experimental investigation on heat transfer of multi-heat sources mounted on a fined radiator within embedded heat pipes in an electronic cabinet. Int. J. Therm. Sci. 183, 107833. https://doi.org/10.1016/j.ijthermalsci.2022.107833 (2023).

Marcinichen, J. B. et al. A review of on-chip micro-evaporation: Experimental evaluation of liquid pumping and vapor compression driven cooling systems and control. Appl. Energy 92, 147–161. https://doi.org/10.1016/j.apenergy.2011.10.030 (2012).

Wang, X. et al. Deep reinforcement learning-PID based supervisor control method for indirect-contact heat transfer processes in energy systems. Eng. Appl. Artif. Intell. 117, 105551. https://doi.org/10.1016/j.engappai.2022.105551 (2023).

Marcano, M. et al. Low speed longitudinal control algorithms for automated vehicles in simulation and real platforms. Complexity (New York, N.Y.) 2018, 1–12. https://doi.org/10.1155/2018/7615123 (2018).

Dušek, F. et al. Desired terminal state concept in model predictive control: a case study. J. Control Sci. Eng. 2019, 1–7. https://doi.org/10.1155/2019/5208612 (2019).

Zhang, Z. et al. Bioinformatics identified 17 immune genes as prognostic biomarkers for breast cancer: application study based on artificial intelligence algorithms. Front. Oncol. 10, 330–330. https://doi.org/10.3389/fonc.2020.00330 (2020).

Qin, H. et al. Energy-efficient heating control for nearly zero energy residential buildings with deep reinforcement learning. Energy (Oxford) 264, 126209. https://doi.org/10.1016/j.energy.2022.126209 (2023).

Liu, W. et al. Numerical investigation on forced, natural, and mixed convective heat transfer of n-decane in laminar flow at supercritical pressures. Int. J. Heat Mass Transf. 209, 7–9. https://doi.org/10.1016/j.ijheatmasstransfer.2023.124129 (2023).

Hu, X. et al. Design and optimization of air-cooled heat dissipation structure of an on-board supercapacitor box. Appl. Therm. Eng. 249, 3–5. https://doi.org/10.1016/j.applthermaleng.2024.123458 (2024).

Yi, F. et al. Effects analysis on heat dissipation characteristics of lithium-ion battery thermal management system under the synergism of phase change material and liquid cooling method. Renew. Energy 181, 475–478. https://doi.org/10.1016/j.renene.2021.09.073 (2022).

Ghoudi, Z. et al. Towards the modeling of the effect of turbulent water batches on the flow of slurries in horizontal pipes using CFD. Eur. J. Mech. B Fluids 208, 209–211. https://doi.org/10.1016/j.euromechflu.2023.04.003 (2023).

Chen, J. et al. Multiobjective optimization of air-cooled battery thermal management system based on heat dissipation model. Ionics 27, 1307–1322. https://doi.org/10.1007/s11581-020-03853-6 (2021).

Cai, M. et al. Safe reinforcement learning under temporal logic with reward design and quantum action selection. Sci. Rep. 13, 1925. https://doi.org/10.1038/s41598-023-28582-4 (2023).

Afram, A. et al. Artificial neural network (ANN) based model predictive control (MPC) and optimization of HVAC systems: A state of the art review and case study of a residential HVAC system. Energy Build. 141, 96–113. https://doi.org/10.1016/j.enbuild.2017.02.012 (2017).

Zhang, B. et al. Deep reinforcement learning–based approach for optimizing energy conversion in integrated electrical and heating system with renewable energy. Energy Convers. Manag. 202, 112199. https://doi.org/10.1016/j.enconman.2019.112199 (2019).

Xiong, Y. et al. Intelligent thermal control algorithm based on deep deterministic policy gradient for spacecraft. J. Thermophys. Heat Transfer 34, 683–695. https://doi.org/10.2514/1.T5951 (2020).

Tuomas, H. et al. Soft actor-critic: off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the 35th International Conference on Machine Learning (2018).

Zhang, B. et al. Soft actor-critic –based multi-objective optimized energy conversion and management strategy for integrated energy systems with renewable energy. Energy Convers. Manag. 243, 114381. https://doi.org/10.1016/j.enconman.2021.114381 (2021).

Behtarinik, J. et al. Numerical simulation of fluid flow and heat transfer in steady state laminar natural convection in horizontal circular cylindrical enclosures. Int. J. Heat Fluid Flow 105, 2–5. https://doi.org/10.1016/j.ijheatfluidflow.2023.109261 (2024).

Zheng, H. et al. Research on time series prediction of multi-process based on deep learning. Sci. Rep. 14, 3739. https://doi.org/10.1038/s41598-024-53762-1 (2024).

Funding

This study was supported by the Financial Science and Technology Program of the XPCC (grant nos. 2022CB002-07 and 2022CB011) and the National Natural Science Foundation of China (grant nos. 62063030 and 62163032).

Author information

Authors and Affiliations

Contributions

Conceptualization, D.W., and J.L.; methodology, D.W., X.Q., and J.L.; software, D.W.; validation, D.W., J.T., Y.W., J.S., and T.F.; formal analysis, D.W. and J.L.; investigation, D.W., J.T., Y.W., J.S., and Y.Z.; resources, Y.W., J.S., and Y.Z.; data curation, D.W., J.T., and Y.W.; writing—original draft preparation, D.W., and J.L.; writing—review and editing, D.W. and J.L. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, D., Tao, J., Lei, J. et al. Adaptive variable channel heat dissipation control of ground control station under various work modes. Sci Rep 15, 5668 (2025). https://doi.org/10.1038/s41598-025-85689-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-85689-6