Abstract

Text data analysis for mental health is a crucial instrument for assessing emotional well-being, yet understanding the nuanced emotions and points of view expressed in user-generated content remains challenging. Most current models favor single-task learning and neglect the significance of integrating viewpoints in comprehending mental health. To offer a more thorough understanding of mental health, in this study we present an Opinion-Enhanced Hybrid BERT Model (Opinion-BERT), built to handle multi-task learning for simultaneous sentiment and status classification. With the help of TextBlob and spaCy, we extracted opinions and dynamically constructed new opinion embeddings to complement the pre-trained BERT model. Using a hybrid architecture, these embeddings are integrated with the contextual embeddings of BERT, and CNN and BiGRU layers capture local and sequential characteristics. This combination helps our model identify and categorize user status and sentiment from the text more accurately, which leads to more accurate mental health assessments. When we compared the performance of Opinion-BERT with baseline models, including BERT, RoBERTa, and DistilBERT, we found that it outperformed them. Opinion-enhanced embeddings are crucial for improving performance, as demonstrated by our multi-task learning framework’s 96.77% sentiment classification accuracy and 94.22% status classification accuracy. This work provides a more nuanced understanding of emotions and psychological states by demonstrating the potential of combining opinion and sentiment data for mental health analysis in a multi-task learning environment.

Introduction

Mental health problems are becoming increasingly prevalent globally, with millions of people suffering from a range of psychiatric disorders1. Effective treatment of mental health concerns requires early recognition and ongoing monitoring. Massive volumes of text data are produced daily due to the growing popularity of social media and digital platforms2, and these data frequently reflect users’ thoughts, feelings, and emotional states. By analyzing this data, it is possible to assess mental health issues and identify early indicators of emotional distress. However, accurately discerning the subtleties of people’s feelings and thoughts from text remains a significant challenge.

Previous methods for analyzing mental health from textual data have predominantly relied on single-task learning models that perform either status detection or sentiment classification independently3,4. These models, while helpful, often fail to capture the nuanced human emotions and subjective viewpoints coexisting in real-world contexts5. For instance, sentiment analysis alone may overlook critical psychological cues embedded in opinions, which are subjective interpretations and viewpoints6. This limitation reduces the effectiveness of traditional models in understanding the intricate emotional context required for mental health analysis.

Sentiment analysis provides valuable information, but it typically treats emotions as general categorizations, lacking the granularity required to extract nuanced emotional states or subjective interpretations of mental health-related text6. Existing models also fail to integrate external opinion-based insights that could augment the contextual embeddings provided by transformer-based models like BERT7,8. Thus, there is a critical need for a framework that can incorporate both factual and subjective dimensions of textual data to provide a comprehensive understanding of mental health indicators.

To address these gaps, we present the Opinion-Enhanced Hybrid BERT Model (Opinion-BERT), a novel multi-task learning framework specifically designed for mental health assessment. The key differentiator of our study is the introduction of opinion embeddings, which are dynamically constructed from external sentiment annotations and pre-trained opinion extraction models. Unlike previous studies, our approach explicitly captures subjective viewpoints, enabling the model to analyze opinions alongside contextual information from BERT embeddings. This dual representation allows us to discern subtle emotional patterns and psychological states more effectively than sentiment analysis alone.

Furthermore, we utilize a hybrid architecture combining Convolutional Neural Networks (CNNs) and Bidirectional Gated Recurrent Units (BiGRUs), tailored to capture both local patterns (e.g., emotionally charged phrases) and sequential dependencies (e.g., progression of thoughts in text). By employing multi-task learning, our model simultaneously performs sentiment and status classification, leveraging shared representations to improve performance on both tasks.

Our extensive evaluations demonstrate that Opinion-BERT achieves 96.77% accuracy in sentiment classification and 94.22% accuracy in status classification, outperforming state-of-the-art baseline models like BERT9, RoBERTa10, and DistilBERT11. These results underscore the critical role of opinion embeddings in improving model performance and achieving a more comprehensive understanding of mental health indicators.

The key contributions of this work are as follows:

- We propose a novel Opinion-Enhanced Hybrid BERT model that integrates opinion embeddings into a BERT-based architecture, enabling the model to capture and utilize subjective opinions for enhanced mental health predictions.

- We introduce a hybrid architecture combining BERT with CNN and BiGRU layers, allowing the model to effectively extract both local features through convolutional layers and sequential dependencies via recurrent layers.

- Our approach leverages multi-task learning to simultaneously perform sentiment and status classification, improving overall performance by sharing information between the two tasks.

- We explicitly address the limitations of previous works by demonstrating the superiority of opinion-augmented embeddings in achieving nuanced understanding and accurate predictions.

These contributions highlight the novelty and effectiveness of our approach in advancing textual data analysis for mental health assessment. By bridging the gap between sentiment and opinion-based insights, Opinion-BERT offers a robust framework for early identification and intervention in mental health challenges.

The remainder of this paper is organized as follows. A thorough assessment of the literature is provided in Section “Literature review”. Section “Data description” presents the dataset and data pre-processing. The proposed methodology is outlined in Section “Problem statement”, followed by an experimental setup in Section “Experimental setup” and an analysis of the results in Section “Results analysis”. Section “Discussion” presents a discussion, Section “Limitation and future work” outlines the limitations and future work, and Section “Conclusion” concludes the paper.

Literature review

The increased availability of textual data from social media, online forums, and patient records has drawn considerable interest in the use of natural language processing (NLP) in mental health studies in recent years. Traditional machine learning techniques were the mainstay of early approaches, but the development of deep learning models such as BERT, RoBERTa, and GPT has brought substantial progress to the field. However, these models frequently fail to consider the complex role that feelings and opinions play in texts on mental health. This overview of the literature looks at multi-task learning models, sentiment analysis, and mental health detection, emphasizing the significant developments and the gaps that our Opinion-Enhanced Hybrid BERT model seeks to fill.

Machine learning and NLP techniques are increasingly being applied to early mental health diagnosis through text analysis on various platforms. Singh et al.12 explored SMS-based mental health assessments using classifiers such as decision trees, random forest, and logistic regression, with logistic regression achieving 93% accuracy. Inamdar et al.13 focused on detecting mental stress in Reddit posts, using ELMo and BERT embeddings, achieving an 0.76 F1 score with Logistic Regression. Alanazi et al.14 investigated the influence of financial news on public mental health and found that SLCNN was the most effective with 93.9% accuracy in sentiment classification. A systematic review conducted by Abd Rahman et al.15 highlighted SVM as a commonly used model for mental health detection in Online Social Networks, emphasizing challenges such as data quality and ethical concerns. Abdulsalam et al.16 introduced an Arabic suicidality detection dataset with 5719 tweets, demonstrating that the AraBert model outperforms traditional models such as SVM and Random Forest, achieving 91% accuracy and an F1 score of 88%. Almeqren et al.17 applied Arabic Sentiment Analysis (ASA) to predict anxiety levels during the COVID-19 pandemic in Saudi Arabia, using a Bi-GRU model and a custom Arabic Psychological Lexicon (AraPh), reaching 88% accuracy in classifying anxiety from tweets. Ameer et al.18 focused on detecting depression and anxiety from 16,930 Reddit posts, with the RoBERTa model achieving the highest accuracy of 83%, highlighting the role of automation in supporting mental health providers. Su et al.19 conducted a scoping review on deep learning applications in mental health, categorizing studies across clinical, genetic, vocal, and social media data, underscoring DL’s potential for early detection, while noting challenges in model interpretability and data diversity. 
Together, these studies demonstrate the transformative potential of AI in enhancing early mental health diagnosis across various platforms and languages.

Several studies have highlighted the effectiveness of multi-task learning (MTL) in detecting mental health conditions and improving sentiment analysis across various domains. Liu and Su20 explored a BERT-based MTL framework for mental health detection on social media, achieving superior performance on the Reddit SuicideWatch and Psychiatric-disorder Symptoms datasets compared to single-task models and general-purpose Large Language Models (LLMs). Buddhitha and Inkpen21 focused on suicide ideation detection using CNN-based MTL models applied to Reddit and Twitter, outperforming baselines and emphasizing the role of comorbid conditions such as PTSD in improving prediction accuracy. Sarkar et al.22 introduced AMMNet, an MTL model that integrates word embeddings and topic modeling to predict depression and anxiety in Reddit posts, demonstrating strong performance through active learning despite data limitations. Li et al.23 applied MTL to detect depression in dialogues, combining tasks such as emotion, dialogue act, and topic classification, and achieved a 70.6% F1 score on dialogue datasets. Similarly, Plaza-Del-Arco et al.24 proposed an MTL model for hate speech detection in Spanish tweets, combining sentiment analysis and emotion classification, where their MTLsent+emo configuration outperformed the single-task learning models. Finally, Jin et al.25 developed a sentiment classification MTL model (MTL-MSCNN-LSTM), that integrates multi-scale CNN and LSTM to capture global and local text features in commodity reviews, achieving higher accuracy and F1 scores across six datasets. These studies collectively demonstrate the potential of MTL in improving the prediction accuracy, handling multiple tasks, and addressing challenges such as data sparsity and model efficiency across different application areas.

Multiple studies have focused on accelerating opinion-embedding approaches, primarily using attention mechanisms and state-of-the-art architectures in sentiment analysis. Malik et al.26 introduced a hybrid model combining multi-head attention with Bi-LSTM and Bi-GRU classifiers, achieving a remarkable 95% accuracy, 97% recall, and 96% F1 score on student feedback sentiment analysis using advanced embeddings such as FastText and RoBERTa. In a similar manner, Chen et al.27 proposed a BERT-based dual-channel hybrid neural network that integrates a CNN and BiLSTM with attention mechanisms, which significantly improves sentiment analysis on hotel review datasets, achieving 92.35% accuracy. On the other hand, Dimple Tiwari and Nagpal28 introduced KEAHT, a knowledge-enriched hybrid transformer for analyzing sentiment related to COVID-19 and farmer protests, incorporating LDA topic modeling and BERT, demonstrating its ability to handle complex social media sentiment analysis tasks. The AEC-LSTM model introduced by Huang et al.29 uses a novel approach that integrates emotional intelligence and attention mechanisms, resulting in superior performance on real-world datasets for sentiment classification. In contrast, Han and Kando30 focused on improving opinion expression detection using a BiLSTM-CRF model with deep contextualized embeddings such as BERT, showing superior results for detecting subjective expressions. Jebbara and Cimiano31 explored character-level embeddings to improve opinion target expression (OTE) extraction, addressing issues such as misspellings and domain-specific terminology, whereas Liu et al.32 highlight the effectiveness of RNNs, particularly LSTMs, over traditional CRF models in fine-grained opinion mining, achieving enhanced performance by fine-tuning word embeddings. 
These studies highlight the evolution of sentiment analysis models, emphasizing the need for advanced embeddings, attention mechanisms, and hybrid architectures to improve sentiment classification and opinion mining across diverse applications.

In conclusion, the progress made in sentiment analysis, especially concerning mental health, highlights the transformative potential of machine learning and deep learning methods. Sophisticated models that use RNNs, CNNs, and transformer architectures such as BERT have replaced traditional lexicon-based methods, improving the capacity to extract subtle emotions from textual input. In recent studies conducted on a variety of platforms, multi-task learning (MTL) and attention mechanisms have helped handle complex tasks and improve prediction accuracy. Sentiment analysis must still address the issues of data variety and the requirement for interpretability in AI models. As it continues to be incorporated into mental health research, it presents potential paths for early mental health diagnosis and intervention.

Data description

The dataset employed in this study is an extensive, carefully assembled set of statements from several platforms, annotated with mental health statuses. It combines several Kaggle datasets related to mental health and anxiety prediction: 3k Conversations Dataset for Chatbot, Depression Reddit Cleaned, Human Stress Prediction, Bipolar Mental Health Dataset, Reddit Mental Health Data, Students Anxiety and Depression Dataset, Suicidal Mental Health Dataset, and Suicidal Tweet Detection Dataset. Each statement in this dataset is associated with one of the following seven mental health statuses: Normal, Depression, Suicidal, Anxiety, Stress, Bipolar, or Personality Disorder. The entries were gathered from a variety of sites, such as Reddit and other social media. The dataset, obtained from Kaggle, is already labeled and does not include any user-level information, such as the number of posts per user.

The dataset is organized with the variables unique_id, statement, and mental health status. It is a valuable resource for researching mental health trends, building mental health chatbots, and conducting in-depth sentiment analyses.

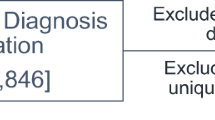

Important insights into the sentiments and mental health conditions in the dataset are shown in Fig. 1. The distribution of mental health statuses is shown in Fig. 1a, where “Normal” accounts for the largest percentage (31%), followed by “Depression” (20.2%) and “Suicidal” (20.2%). The remaining categories account for smaller percentages: “Anxiety” (7.3%), “Bipolar” (5.3%), “Stress” (4.9%), and “Personality Disorder” (2.0%). This distribution demonstrates the frequency of conditions such as depression and suicidal thoughts in the data.

The sentiment distribution is shown in Fig. 1b, where “Positive” sentiment is most prevalent at 40.3%, followed by “Negative” sentiment at 37.6% and “Neutral” sentiment at 22.1%. This sentiment breakdown provides crucial context for sentiment analysis in the study of mental health trends by reflecting the emotional tones connected to mental health statuses.

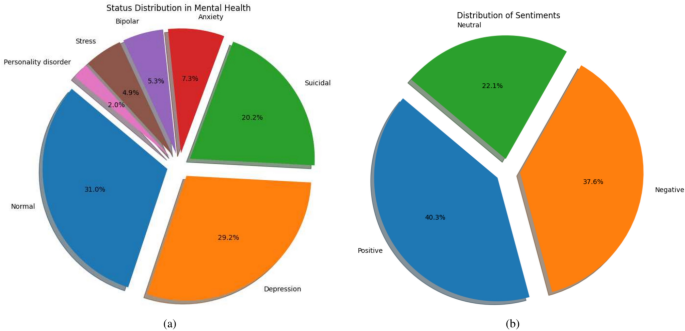

Figure 2 shows that the majority of posts in the dataset are relatively short, with most containing fewer than 500 words and a significant concentration below 100 words. The sharp decline in the number of posts as the word count increases highlights the dominance of shorter posts in user-generated content. This pattern suggests that users tend to communicate their thoughts in brief formats, which is typical for social media or digital platforms where brevity is often encouraged. Posts exceeding 1,000 words are rare, further reinforcing the preference for concise communication. Understanding this distribution is critical for natural language processing tasks, as it helps us set appropriate tokenization limits, ensuring that most posts are fully captured while minimizing the need for truncation or excessive padding. Additionally, this insight allows us to design models optimized for the typical length of user content, improving efficiency and accuracy in downstream tasks like classification, sentiment analysis, or topic modeling.

Data pre-processing

Data cleaning

Data cleaning requires several important procedures to ensure the quality and integrity of the data33. Duplicate entries were identified and eliminated to avoid redundancy. Special characters, URLs, and other non-alphanumeric components were removed to streamline the text data. Furthermore, the text was converted to lowercase to preserve uniformity and eliminate problems due to case sensitivity. Missing values were handled by imputing suitable values depending on the context or by eliminating the affected rows. Finally, to focus the analysis on the main ideas, stop words (common words that have minimal bearing on the meaning of a statement) were eliminated. Together, these procedures provide a clean and trustworthy dataset for further processing and examination.
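The cleaning steps described above can be sketched as follows. This is a minimal illustration and not the exact pipeline used in the study: the stop-word list is a tiny hypothetical placeholder, rows with missing statements are simply dropped (imputation is not shown), and duplicates are detected after cleaning, which is an assumption about the ordering.

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOPWORDS = {"a", "an", "the", "is", "am", "are", "to", "and", "of", "i"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
NON_ALNUM_RE = re.compile(r"[^a-z0-9\s]")


def clean_text(text):
    """Lowercase, strip URLs and special characters, and drop stop words."""
    text = text.lower()
    text = URL_RE.sub(" ", text)
    text = NON_ALNUM_RE.sub(" ", text)
    tokens = [tok for tok in text.split() if tok not in STOPWORDS]
    return " ".join(tokens)


def clean_corpus(rows):
    """Clean (statement, status) rows, skipping missing text and duplicates."""
    seen = set()
    cleaned = []
    for statement, status in rows:
        if statement is None or not statement.strip():
            continue  # drop rows with a missing statement
        text = clean_text(statement)
        if not text or (text, status) in seen:
            continue  # drop empty results and duplicate entries
        seen.add((text, status))
        cleaned.append((text, status))
    return cleaned
```

Calling `clean_corpus` on a list of `(statement, status)` pairs returns the de-duplicated, normalized pairs in their original order.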

Lemmatization

Lemmatization, which reduces words to their base or root forms, is an essential stage in text preparation. In contrast to stemming, which frequently truncates words, lemmatization seeks to yield valid words by considering meaning and context. First, to maintain uniformity, all punctuation was removed and the text was converted to lowercase. After the text was tokenized into individual words, frequent stop words were eliminated. Next, each remaining word was lemmatized with the WordNetLemmatizer from NLTK34,35, which ensures that terms like “running” and “runs” are reduced to their root, “run.” This method aids text normalization, which increases the accuracy of the subsequent analytical tasks. The lemmatized tokens were then combined into a processed statement, ready for the subsequent stages of feature extraction and modeling.

Data augmentation with synonym replacement

As part of our data augmentation process, we used a synonym replacement technique to improve the variety and robustness of the dataset36,37. This method finds words and replaces them with synonyms by using the WordNet lexical database38. The procedure begins by extracting synonyms for every word in a given text from WordNet synsets. A synonym is then randomly selected from the list of candidates for each word to be replaced. Replacement is applied up to a predetermined number of times (n = 3 in this study), which varies the wording while preserving the meaning of the text. Several augmented copies of each original text were created with the synonym_replacement function and appended to the dataset, increasing both its size and its variety. This added diversity can help machine learning models trained on the dataset perform better and generalize more broadly. For consistency in further analysis and training, the original labels were retained in the augmented dataset, which contains both the original and the newly generated texts.
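A minimal sketch of this augmentation step is given below. It is illustrative only: a small hand-written dictionary (`TOY_SYNONYMS`) stands in for the WordNet synsets, and the `augment` helper, the `copies` parameter, and the seed are hypothetical names and values that do not come from the study. Only the function name synonym_replacement and the limit n = 3 are taken from the text.

```python
import random

# Hypothetical stand-in for WordNet synsets; a real run would query WordNet.
TOY_SYNONYMS = {
    "sad": ["unhappy", "sorrowful"],
    "tired": ["exhausted", "weary"],
    "help": ["assist", "aid"],
    "afraid": ["scared", "fearful"],
}


def synonym_replacement(text, n=3, synonyms=TOY_SYNONYMS, rng=None):
    """Replace up to n words that have synonyms with a randomly chosen one."""
    if rng is None:
        rng = random.Random()
    words = text.split()
    # Positions of words for which at least one synonym is known.
    candidates = [i for i, w in enumerate(words) if w in synonyms]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(synonyms[words[i]])
    return " ".join(words)


def augment(rows, copies=2, n=3, seed=0):
    """Return the original rows plus `copies` variants of each, labels kept."""
    rng = random.Random(seed)
    out = list(rows)
    for text, label in rows:
        for _ in range(copies):
            out.append((synonym_replacement(text, n=n, rng=rng), label))
    return out
```

Because every augmented text inherits the label of the text it was derived from, the label distribution of the dataset is unchanged by this step.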

Sentiment analysis with TextBlob

During the sentiment analysis stage, TextBlob is used to assess each statement in the augmented dataset to determine its sentiment polarity39. Based on the polarity score, \(p\), where \(p \in [-1, 1]\), each statement is assigned to one of three sentiment categories: “Positive,” “Neutral,” or “Negative.” The categorization rule is as follows:

- Positive sentiment: \(p > 0\)

- Neutral sentiment: \(p = 0\)

- Negative sentiment: \(p < 0\)

The function \(\text {analysis}\_\text {sentiment}\_\text {by}\_\text {status}\) was used to perform this categorization. It processes each statement \(s\) together with its accompanying status, evaluates the sentiment, and yields a tuple \((s, \text {status}, \text {sentiment})\). The resulting tuples are collected in a DataFrame called \(\text {sentiment}\_\text {df}\), from which the number of statements of each sentiment type is counted for each status. The counts are stored in \(\text {sentiment}\_\text {counts}\), where \(\text {sentiment}\_\text {counts}_{ij}\) is the number of statements with status \(i\) and sentiment \(j\). This distribution shows how sentiments are spread across the various mental health states, which is needed to analyze the emotional tone of statements in connection with the reported mental health issues.
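The thresholding rule and the per-status counts can be illustrated with a short sketch. The polarity values are assumed to have been computed beforehand by a scorer such as TextBlob. The function names `label_from_polarity` and `count_sentiments` are hypothetical, and a plain `Counter` keyed by (status, sentiment) pairs stands in for the DataFrame used in the study.

```python
from collections import Counter


def label_from_polarity(p):
    """Map a polarity score p in [-1, 1] to a sentiment label."""
    if p > 0:
        return "Positive"
    if p < 0:
        return "Negative"
    return "Neutral"


def count_sentiments(rows):
    """Count statements per (status, sentiment) pair.

    `rows` is an iterable of (statement, status, polarity) tuples.
    """
    counts = Counter()
    for _statement, status, polarity in rows:
        counts[(status, label_from_polarity(polarity))] += 1
    return counts
```

Each key `(i, j)` of the returned counter plays the role of the entry \(\text {sentiment}\_\text {counts}_{ij}\) described above.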

To ensure the robustness of our findings, we also experimented with other sentiment analysis tools, including VADER and Afinn. These tools offer varying approaches to sentiment scoring and were used to reassess the dataset’s sentiment labels. The comparative analysis revealed that while TextBlob provided a balanced distribution of sentiment categories, VADER detected more nuanced polarity shifts, especially in “Neutral” and “Negative” sentiments. A summary of these results and their impact on the final model is presented in the Results section.

Opinions

We used spaCy’s English NLP model to extract and sanitize subjective expressions from the textual input40. Each text was run through the model, and the extraction was restricted to adjectives, adverbs, and verbs, which are likely to communicate subjective feelings, while auxiliary verbs were removed. For convenience, the detected opinions were combined into a single string. Finally, a cleaning function was applied after extraction. This function eliminated non-alphabetic characters and phrases that were too repetitive or insignificant, leaving only the significant and original expressions. The end product is a dataset enriched with pertinent, clean opinion phrases that offers a strong basis for additional research or model training.
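The filtering logic can be sketched without loading a language model by assuming that each text has already been converted to (lemma, part-of-speech) pairs by a tagger such as spaCy. The coarse tags ADJ, ADV, VERB, and AUX follow the Universal POS scheme. The function name `extract_opinions`, the minimum word length, and the treatment of "too repetitive" as exact repeats are illustrative assumptions, not details from the study.

```python
import re

# Auxiliary verbs carry the separate tag "AUX" and are therefore excluded.
OPINION_POS = {"ADJ", "ADV", "VERB"}


def extract_opinions(tagged_tokens, min_len=2):
    """Keep adjectives, adverbs, and verbs; drop noise and repeats.

    `tagged_tokens` is a list of (lemma, pos) pairs from a POS tagger.
    """
    seen = set()
    opinions = []
    for lemma, pos in tagged_tokens:
        if pos not in OPINION_POS:
            continue
        word = re.sub(r"[^a-z]", "", lemma.lower())  # alphabetic characters only
        if len(word) < min_len or word in seen:
            continue  # skip very short fragments and repeated words
        seen.add(word)
        opinions.append(word)
    return " ".join(opinions)
```

The returned string corresponds to the combined opinion string described above, with one entry per distinct opinion word in order of first appearance.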

The average number of opinions for each sentiment type is presented in Table 1. With 36.31 opinions on average, the “Positive” category has the most opinions, closely followed by the “Negative” category with 33.80 opinions. The “Neutral” category, on the other hand, has a far lower average of just 3.64 opinions. This shows that users record fewer neutral comments and more often express stronger sentiments, whether positive or negative. The small number of neutral opinions suggests a general trend toward polarized sentiment in the dataset.

The opinions most frequently used in the dataset are listed in Table 2. With 81,114 occurrences, the verb “feel” is the most common, suggesting that emotional expressiveness is important in the dataset. The verbs “know” and “want,” which appear 52,940 and 50,827 times, respectively, follow, indicating that knowledge and desire are strongly associated with user attitudes. Additional frequently used words such as “get,” “even,” and “really” typically express emphasis or intensity. These frequent opinions draw attention to the words that users commonly rely on to communicate their feelings, ideas, and behaviors, thereby providing insight into the recurrent themes of the dataset.

Data tokenization and label encoding

We used a two-step procedure of tokenization and label encoding to prepare the textual input for model training. First, the raw text data were transformed into token IDs and attention masks appropriate for BERT-based models using BertTokenizer9. The tokenize_data function processes the statement column of the DataFrame and generates input_ids and attention_masks, padding and truncating each sequence to a maximum length of 100 tokens. This tokenization ensures that the text data are structured correctly for the model input.

We set the input sequence length to 100 tokens after conducting an exploratory analysis of our dataset. Initially, we examined the distribution of tokenized sentences and observed that the majority of instances contained fewer than 100 tokens. Specifically, our preliminary data analysis showed that approximately 90% of sentences in the dataset had a token count below this threshold, minimizing the amount of unnecessary padding for most samples. Selecting a slightly longer length would have increased computational overhead, while a shorter length risked truncating relevant context. Thus, a padding length of 100 tokens represented a balanced compromise between computational efficiency and preserving important textual information.
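The reasoning behind the 100-token limit can be illustrated with two small helpers. This is a sketch under stated assumptions and not the tokenizer itself: `coverage` and `pad_or_truncate` are hypothetical names, the padding ID of 0 is assumed, and the truncation shown here simply cuts the sequence, whereas a BERT tokenizer would also keep the closing special token.

```python
def coverage(token_counts, max_len):
    """Fraction of sequences that fit within max_len without truncation."""
    return sum(1 for n in token_counts if n <= max_len) / len(token_counts)


def pad_or_truncate(input_ids, max_len=100, pad_id=0):
    """Return (ids, attention_mask), both exactly max_len long."""
    ids = list(input_ids[:max_len])        # truncate long sequences
    mask = [1] * len(ids)                  # 1 marks a real token
    padding = max_len - len(ids)
    return ids + [pad_id] * padding, mask + [0] * padding  # 0 marks padding
```

Evaluating `coverage` over the token counts of the whole dataset for several candidate lengths shows how much text each choice would truncate, which is the trade-off described above.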

The next step involved label encoding, which converts category labels into numerical values required for model training41. For the status, emotion, and opinions_str columns, we initialized label encoders. These encoders enable the model to comprehend and handle categorical data efficiently by mapping distinct class labels to integers. The opinions labels were kept as integer encodings, whereas the status and emotion labels were transformed into one-hot encoded vectors by using the to_categorical function. Thorough preparation guarantees that both categorical and textual data are correctly prepared and incorporated into the training pipeline. These encoded labels are now included in the new DataFrame, which makes managing the data and training the models easier.
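The two encodings mentioned above, integer labels and one-hot vectors, can be sketched in plain Python. The helper names are hypothetical, and assigning integers in sorted label order is an assumption made here for reproducibility. The study itself uses label encoders and the to_categorical function.

```python
def fit_label_encoder(labels):
    """Map each distinct label to an integer, in sorted label order."""
    return {label: idx for idx, label in enumerate(sorted(set(labels)))}


def one_hot(index, num_classes):
    """Return a one-hot list with 1.0 at position `index`."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


statuses = ["Normal", "Depression", "Anxiety", "Normal"]
encoder = fit_label_encoder(statuses)
encoded = [encoder[s] for s in statuses]                   # integer encoding
one_hot_labels = [one_hot(i, len(encoder)) for i in encoded]
```

Integer encodings are sufficient for inputs that feed an embedding lookup, while one-hot targets match a softmax output layer trained with categorical cross-entropy.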

Data splitting

The dataset was systematically divided into training, validation, and test sets to support the training and evaluation of the model. For compatibility with scikit-learn’s train_test_split function, the input tensors (input_ids and attention_masks) and encoded labels (status_labels, sentiment_labels, and opinions_labels) were first converted from TensorFlow tensors to NumPy arrays.

Initially, the dataset was split into training and temporary sets, with 70% going toward training and 30% set aside for validation and testing. The temporary set was then divided evenly into validation and test sets, each comprising 15% of the initial data. Holding out separate validation and test sets allows hyperparameters to be tuned and the final model to be assessed on data not seen during training. The resulting dataset sizes were 110,365 samples for training, 23,650 for validation, and 23,650 for testing.
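The two-stage split can be reproduced on sample indices with a short sketch. The total of 157,665 samples is inferred here as the sum of the three reported set sizes and is not stated in the text. The function name, the seed, and the choice to round the held-out portion up are assumptions made for illustration.

```python
import math
import random


def split_indices(n, holdout_frac=0.30, seed=42):
    """Shuffle range(n) and split it into train/validation/test index lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_holdout = math.ceil(holdout_frac * n)  # 30% held out, rounded up
    n_train = n - n_holdout
    n_test = math.ceil(0.5 * n_holdout)      # hold-out set divided evenly
    n_val = n_holdout - n_test
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


train_idx, val_idx, test_idx = split_indices(157_665)
```

Under these assumptions the three index lists have the same sizes as the sets reported above.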

Problem statement

Mental health analysis through text requires a deep understanding of both the sentiments and the subjective opinions expressed in user-generated content. This challenge can be effectively addressed using multi-task learning, in which a model is trained to handle multiple related tasks simultaneously. In this study, we aim to classify both sentiment and status using a BERT-based hybrid model that integrates external opinion embeddings to enhance the model’s interpretative capabilities.

Input: Let \(X_i\) represent the input sequence for the \(i\)-th instance, which is a sequence of \(m\) tokens. In addition, let \(O_i\) denote the external opinion information associated with the input sequence, represented as an embedding.

- Text input: \(X_i \in \mathbb {R}^{m \times d}\) (BERT embeddings of the input text sequence)

- Opinion input: \(O_i \in \mathbb {R}^{d}\) (dense opinion embedding)

Output: The model is designed for multi-task learning, providing predictions for both sentiment and status classification:

- Sentiment output: \(Y_i^{\text {sentiment}} \in \mathbb {R}^C\), where \(C\) is the number of sentiment classes.

- Status output: \(Y_i^{\text {status}} \in \mathbb {R}^S\), where \(S\) is the number of status classes.

- Final output: The model predicts:

$$\begin{aligned} Y_i = (Y_i^{\text {sentiment}}, Y_i^{\text {status}}) \end{aligned}$$

where \(Y_i\) is a pair consisting of the predicted sentiment and status labels for input sequence \(X_i\).

This formulation encapsulates the multi-task nature of the problem, aiming to accurately classify both sentiment and status from text data enriched with opinion-based insights.

Proposed methodology

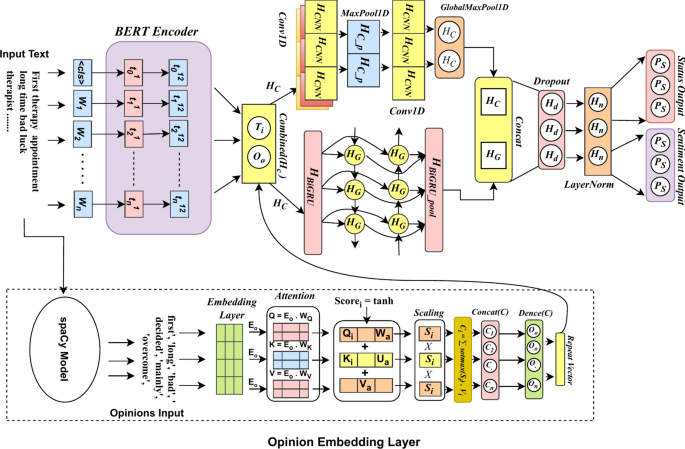

Figure 3 illustrates the architecture of our Opinion-Enhanced Hybrid BERT Model, which is designed to handle two tasks simultaneously: sentiment classification and status classification in mental health-related text data. Our model enhances standard BERT embeddings with custom opinion embeddings, capturing subjective opinions expressed in the text. These opinions are critical for understanding mental health conditions. By combining contextual information from BERT with subjective insights through opinion embeddings, we provide a deeper understanding of emotional states.

Our proposed architecture combines dynamically produced opinion embeddings with BERT embeddings. The opinion embeddings, which are obtained via sentiment annotations, are integrated with BERT’s contextual representations. Our design uses a hybrid feature extraction mechanism, consisting of CNN and BiGRU layers, to capture sequential relationships as well as local patterns. We apply concatenation, dropout, and normalization layers to the final representations, yielding outputs for status prediction and sentiment categorization.

We employed BERT as the foundation of our model to generate contextual embeddings. We extended this using a custom OpinionsEmbedding Layer that integrates opinion-based information. A hybrid feature extraction mechanism, utilizing both CNN and BiGRU layers, captures the local patterns and long-range dependencies in the text. Our model is structured within a multi-task learning framework, enabling us to perform sentiment and status classification in parallel, learning shared representations that improve performance on both tasks.

1. BERT encoder: Our model is based on BERT and produces embeddings represented by \(H_{\text {BERT}}\). These BERT embeddings capture syntactic and semantic relationships between words, offering a comprehensive, context-aware representation of the input text. By leveraging BERT, we ensure the model identifies subtle nuances in meaning, which is essential for correctly understanding information linked to mental health. Each word is represented in relation to its surrounding context, which is particularly helpful when handling complex language patterns often found in mental health-related text, such as metaphorical language and emotive expressions.

To enhance sentiment and status classification accuracy, we complement the contextual understanding provided by \(H_{\text {BERT}}\) with additional components, such as CNN, BiGRU, and attention-based opinion embeddings. This layered approach enables the model to capture nuanced changes in tone, inferred emotions, and subtle viewpoints.

2. Opinion embeddings: We incorporate a specific layer called the OpinionsEmbedding Layer, which extracts subjective information like opinions and emotions. This layer complements the contextual information captured by BERT embeddings. To extract sentiment-related subtleties, we employ an attention mechanism that highlights the most relevant textual elements. Specifically, we calculate queries \(Q_i\), keys \(K_i\), and values \(V_i\) for each word in the input sequence:

$$\begin{aligned} Q_i = E_o \cdot W_{Q_i}, \quad K_i = E_o \cdot W_{K_i}, \quad V_i = E_o \cdot W_{V_i} \end{aligned}$$

Here, \(E_o\) is the base opinion embedding derived from external sentiment annotations, while \(W_{Q_i}\), \(W_{K_i}\), and \(W_{V_i}\) are learned weight matrices that transform \(E_o\) into queries, keys, and values.

Next, we compute the attention score \(S_i\), which determines the significance of each word in expressing opinions or emotions:

$$\begin{aligned} S_i = \tanh (Q_i \cdot W_a + K_i \cdot U_a + V_a) \end{aligned}$$

This score leverages learned weight matrices \(W_a\), \(U_a\), and a vector \(V_a\) to highlight opinion-relevant words. Applying these scores to the values generates the final opinion embeddings \(O_{\text {opinions}}\), which encapsulate the subjective dimensions of the text.

We then concatenate these opinion embeddings with the contextual embeddings from BERT to create a unified representation:

$$\begin{aligned} H_{\text {combined}} = \text {Concat}(H_{\text {BERT}}, O_{\text {opinions}}) \end{aligned}$$

This combined embedding \(H_{\text {combined}}\) enriches the model’s understanding by integrating both factual and emotional insights, which is crucial for mental health studies.

3. Hybrid architecture (CNN and BiGRU): We process the combined embeddings \(H_{\text {combined}}\) using a hybrid architecture that includes convolutional neural networks (CNNs) and bidirectional GRUs (BiGRUs). The CNN branch captures local patterns, such as emotionally charged phrases, using 1D convolution:

$$\begin{aligned} H_{\text {CNN}} = \text {Conv1D}(H_{\text {combined}}, k) \end{aligned}$$

A max-pooling operation reduces the dimensionality while retaining significant features:

$$\begin{aligned} H_{\text {CNN}} = \text {MaxPool1D}(H_{\text {CNN}}) \end{aligned}$$

Simultaneously, the BiGRU captures long-range dependencies and sequential relationships in the text. We generate the BiGRU output \(H_{\text {BiGRU}}\) as:

$$\begin{aligned} H_{\text {BiGRU}} = \text {BiGRU}(H_{\text {combined}}) \end{aligned}$$

A global max-pooling operation further condenses the BiGRU output:

$$\begin{aligned} H_{\text {BiGRU}} = \text {GlobalMaxPooling1D}(H_{\text {BiGRU}}) \end{aligned}$$

By combining the CNN and BiGRU outputs, our architecture captures both short-term and long-term dependencies, making it well-suited for analyzing nuanced emotional expressions.

4. Multi-task learning: We adopt a multi-task learning framework to perform sentiment classification and status classification simultaneously. This approach enables the model to generalize better by leveraging shared patterns between the two tasks. We apply dropout and layer normalization to the concatenated outputs from the CNN and BiGRU layers:

$$\begin{aligned} H_{\text {final}} = \text {LayerNormalization} (\text {Dropout} (\text {Concat} (H_{\text {CNN}}, H_{\text {BiGRU}}))) \end{aligned}$$

Finally, two separate softmax layers generate predictions for sentiment and status classifications:

$$\begin{aligned} \hat{y}_{\text {sentiment}}&= \text {softmax}(W_{\text {sentiment}} H_{\text {final}})\\ \hat{y}_{\text {status}}&= \text {softmax}(W_{\text {status}} H_{\text {final}}) \end{aligned}$$

This framework effectively integrates data from both tasks, improving overall accuracy and efficiency.

Our CNN-BiGRU hybrid architecture, combined with opinion embeddings and BERT, captures both subjective viewpoints and factual information in mental health-related texts. Consequently, we achieve a comprehensive understanding of emotional tone (sentiment) and broader emotional contexts (status). This approach makes our model a robust tool for analyzing text in the domain of mental health.
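The pooling, concatenation, normalization, and two-head output steps described above can be traced on toy numbers. The following is a minimal pure-Python sketch, not the authors' TensorFlow implementation. It assumes that the CNN branch is also reduced to a vector by global max pooling before concatenation (the text only specifies MaxPool1D), that dropout is the identity at inference time, and that layer normalization uses unit scale and zero shift. All feature values, weight matrices, and the choice of four status classes are hypothetical.

```python
import math

def global_max_pool(sequence):
    """Max over the time axis: a (T, D) list of rows -> a length-D vector."""
    return [max(column) for column in zip(*sequence)]

def layer_norm(vector, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(vector) / len(vector)
    var = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(var + eps) for x in vector]

def linear(weights, vector):
    """Matrix-vector product W h, with W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical branch outputs: 3 time steps, 2 features each.
h_cnn_seq = [[0.2, 0.9], [0.5, 0.1], [0.4, 0.3]]
h_bigru_seq = [[-0.1, 0.6], [0.7, 0.2], [0.3, 0.4]]

h_cnn = global_max_pool(h_cnn_seq)      # assumption: CNN branch pooled to a vector
h_bigru = global_max_pool(h_bigru_seq)  # GlobalMaxPooling1D on the BiGRU output

# Concat -> (Dropout is the identity at inference) -> LayerNormalization
h_final = layer_norm(h_cnn + h_bigru)

# Hypothetical head weights: 3 sentiment classes, 4 status classes.
w_sentiment = [[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1], [0.1, 0.1, -0.5, 0.2]]
w_status = [[0.3, 0.2, -0.1, 0.4], [-0.2, 0.5, 0.3, 0.0],
            [0.1, -0.3, 0.6, 0.2], [0.4, 0.1, 0.0, -0.5]]

y_sentiment = softmax(linear(w_sentiment, h_final))
y_status = softmax(linear(w_status, h_final))
print(y_sentiment, y_status)
```

Both heads read the same \(H_{\text {final}}\), which is what allows the two tasks to share the representation learned by the layers below.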

Loss functions

For both sentiment and status classification, the model uses categorical cross-entropy losses applied to the respective outputs. Each task has its own output layer; the two losses are computed separately and then combined to give the overall loss.

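\(\mathcal {L}_{\text {status}}\) denotes the categorical cross-entropy loss for the status classification task, and \(\mathcal {L}_{\text {sentiment}}\) denotes the loss for the sentiment classification task. The overall loss \(\mathcal {L}\) is the weighted sum of these individual losses:

$$\begin{aligned} \mathcal {L} = \alpha \, \mathcal {L}_{\text {status}} + \beta \, \mathcal {L}_{\text {sentiment}} \end{aligned}$$

where:

$$\begin{aligned} \mathcal {L}_{\text {status}}&= -\sum _{i=1}^{C_{\text {status}}} y_{\text {status},i} \log (p_{\text {status},i})\\ \mathcal {L}_{\text {sentiment}}&= -\sum _{j=1}^{C_{\text {sentiment}}} y_{\text {sentiment},j} \log (p_{\text {sentiment},j}) \end{aligned}$$

Here: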

- \(y_{\text {status},i}\) is the true label for the status classification, where \(C_{\text {status}}\) is the number of status classes.

- \(p_{\text {status},i}\) is the predicted probability for the status classification.

- \(y_{\text {sentiment},j}\) is the true label for sentiment classification, where \(C_{\text {sentiment}}\) denotes the number of sentiment classes.

- \(p_{\text {sentiment},j}\) is the predicted probability for the sentiment classification.

Weights \(\alpha\) and \(\beta\) balance the contributions of each loss term to the overall loss function, allowing for tailored optimization based on the importance of each task. These weights can be adjusted to consider task-specific needs or performance. For equal weighting, these weights were typically set to one.

The model minimizes the combined loss \(\mathcal {L}\), which guides the optimization process by balancing errors across both classification tasks to guarantee that it learns to perform well in both status and sentiment predictions.
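As a worked illustration of the combined objective, the sketch below computes the two categorical cross-entropy terms for a single example and sums them with weights \(\alpha\) and \(\beta\). It is a minimal pure-Python sketch rather than the training code. Pairing \(\alpha\) with the status loss and \(\beta\) with the sentiment loss is an assumption, and the class counts and probabilities are hypothetical.

```python
import math

def categorical_cross_entropy(y_true, p_pred, eps=1e-12):
    """Cross-entropy between a one-hot label vector and a predicted distribution."""
    return -sum(y * math.log(max(p, eps)) for y, p in zip(y_true, p_pred))

def combined_loss(y_status, p_status, y_sentiment, p_sentiment, alpha=1.0, beta=1.0):
    """Weighted sum of the two task losses (assumed: alpha -> status, beta -> sentiment)."""
    loss_status = categorical_cross_entropy(y_status, p_status)
    loss_sentiment = categorical_cross_entropy(y_sentiment, p_sentiment)
    return alpha * loss_status + beta * loss_sentiment

# Hypothetical single example: 4 status classes, 3 sentiment classes.
y_status = [0, 1, 0, 0]
p_status = [0.1, 0.7, 0.1, 0.1]
y_sentiment = [1, 0, 0]
p_sentiment = [0.8, 0.1, 0.1]

total = combined_loss(y_status, p_status, y_sentiment, p_sentiment)
print(round(total, 4))
```

With one-hot labels, each term reduces to the negative log-probability of the true class, so with both weights set to one the total here is \(-\log 0.7 - \log 0.8\).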

Experimental setup

The trials in this study were conducted using a machine with 16GB of RAM and an NVIDIA GeForce RTX 2060 GPU, which provided sufficient processing capability for deep learning model training. The implementation was completed using TensorFlow 2.10.1, a popular deep learning framework that facilitates GPU acceleration for effective training and inference. Python 3.9.19 was used to construct the codebase because it provides a stable environment for integrating different libraries and performing machine learning operations. Fast training iterations and efficient use of computing resources were made possible by this configuration, which guaranteed a stable and consistent platform for testing the proposed Opinion-Enhanced Hybrid BERT Model.

Baseline models

The baseline models used in this study were BERT9, RoBERTa10, and DistilBERT11, all of which are effective at language understanding. BERT serves as the primary baseline. RoBERTa improves on BERT by training for longer and on more data. DistilBERT is a scaled-down version of BERT that runs faster without sacrificing much accuracy.

- BERT-base-uncased: A transformer-based model that uses a masked language-modeling objective for pre-training, making it effective for various NLP tasks.

- RoBERTa: An optimized version of BERT that modifies key hyperparameters, removes the next sentence prediction objective, and trains on larger mini-batches.

- DistilBERT: A smaller, faster, and lighter version of BERT trained using knowledge distillation, providing competitive performance with reduced resource consumption.

Because these models have demonstrated efficacy in natural language processing tasks and consistently deliver high performance across a range of benchmarks, we chose them as baselines. We can evaluate efficiency and accuracy by comparing their different sizes and training approaches, which provide information about the trade-offs associated with model selection.

Ablation study

The goal of this ablation study was to evaluate the contribution of each component of the proposed model. We did this by testing different configurations and comparing them with the full BERT-CNN-BiGRU model with attention-based opinion embeddings. In the first configuration, sentiment and status predictions were made using only the BERT encoder, without opinion embeddings. We then evaluated BERT with opinion embeddings but without the hybrid CNN-BiGRU architecture, to see how opinion information contributed. We also experimented with the simpler hybrid models, BERT-BiGRU and BERT-CNN, to isolate the effect of each component. Each configuration was examined to determine how adding or removing the CNN, BiGRU, and opinion embeddings affected overall performance. According to the results, the full BERT-CNN-BiGRU model with attention-based opinion embeddings consistently outperformed all other configurations, indicating that attention-enhanced opinion integration and local and sequential feature extraction are essential for precise and nuanced mental health analysis.

Hyperparameter settings

The proposed Opinion-BERT model was fine-tuned with the following hyperparameters. The Adam optimizer was used with a learning rate of 2e−5 and a batch size of 32. The input sequence length was restricted to 100 tokens, with an embedding dimension of 768. The CNN component used 64 filters with a kernel size of 3, followed by MaxPooling1D. The attention mechanism has four heads. The BiGRU layer used 64 units to capture sequential information. The model was trained for 15 epochs with categorical cross-entropy as the loss function, and a dropout rate of 0.3 was used to reduce overfitting. Early stopping with a patience of four epochs was applied, and a learning rate scheduler adjusted the learning rate when the validation loss plateaued. This setup allowed the model to accurately capture complex sentiments and status patterns in mental health texts.
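The reported settings can be collected into a single configuration object, and the early-stopping rule can be made concrete with a small helper. This is a minimal sketch under stated assumptions: the class name `OpinionBertConfig`, its field names, and the function `stopping_epoch` are hypothetical and are not taken from the authors' code. The stopping rule assumes that any decrease in validation loss counts as an improvement, and the validation-loss curve is invented for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class OpinionBertConfig:
    """Hyperparameter values as reported in the text; field names are hypothetical."""
    learning_rate: float = 2e-5
    batch_size: int = 32
    max_seq_len: int = 100
    embedding_dim: int = 768
    cnn_filters: int = 64
    cnn_kernel_size: int = 3
    attention_heads: int = 4
    bigru_units: int = 64
    dropout_rate: float = 0.3
    max_epochs: int = 15
    early_stopping_patience: int = 4

def stopping_epoch(val_losses, patience):
    """Return the 1-based epoch at which training stops, or None if it never stops early.

    Training stops once the validation loss has failed to improve on its best
    value for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None

config = OpinionBertConfig()

# Invented validation-loss curve: the best value occurs at epoch 4.
val_losses = [0.62, 0.48, 0.41, 0.39, 0.40, 0.41, 0.40, 0.42, 0.43]
stop = stopping_epoch(val_losses, config.early_stopping_patience)
print(stop)
```

With a patience of four, a run whose best validation loss occurs at epoch 4 is halted after epoch 8, well before the 15-epoch budget.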

Evaluation metrics

Several assessment measures were used to evaluate how well the suggested Opinion-BERT model classified sentiment and status:

- Accuracy: This metric measures the proportion of correct predictions to the total predictions. It is a straightforward indicator of how well the model performs in both sentiment and status classification tasks.

$$\begin{aligned} \text {Accuracy} = \frac{\text {Number of Correct Predictions}}{\text {Total Number of Predictions}} \end{aligned}$$

- Precision: Precision evaluates the accuracy of positive predictions. This is the ratio of true positive predictions to the total predicted positives, providing insight into the ability of the model to avoid false positives.

$$\begin{aligned} \text {Precision} = \frac{\text {True Positives}}{\text {True Positives} + \text {False Positives}} \end{aligned}$$

- Recall (sensitivity): Recall assesses the model’s capability to correctly identify positive instances. This is the ratio of true positive predictions to the total actual positives, indicating the effectiveness of the model in detecting relevant cases.

$$\begin{aligned} \text {Recall} = \frac{\text {True Positives}}{\text {True Positives} + \text {False Negatives}} \end{aligned}$$

- F1-score: The F1-Score is the harmonic mean of the Precision and Recall, providing a balanced measure that accounts for both false positives and false negatives. This is particularly useful when dealing with unbalanced datasets.

$$\begin{aligned} \text {F1-Score} = 2 \times \frac{\text {Precision} \times \text {Recall}}{\text {Precision} + \text {Recall}} \end{aligned}$$

- AUC-ROC (area under the receiver operating characteristic curve): This metric evaluates the model’s ability to distinguish between classes. A higher AUC indicates better performance, reflecting the trade-off between the true positive rate (recall) and the false positive rate.

Together, these metrics provide a thorough assessment of the model’s efficacy in sentiment and status classification and of its reliability when processing complex mental health text.
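Because both tasks are multi-class, precision, recall, and F1 are computed per class and then averaged. The sketch below shows one common way to obtain the macro-averaged values in pure Python. It assumes that macro F1 is the unweighted mean of the per-class F1 scores and that a class with no predictions or no true instances contributes zero; the labels and predictions are invented for illustration.

```python
def per_class_counts(y_true, y_pred, label):
    """True positives, false positives, and false negatives for one class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    return tp, fp, fn

def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp, fp, fn = per_class_counts(y_true, y_pred, label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Invented sentiment labels and predictions for six texts.
y_true = ["negative", "negative", "neutral", "neutral", "positive", "positive"]
y_pred = ["negative", "neutral", "neutral", "neutral", "positive", "negative"]

metrics = macro_metrics(y_true, y_pred)
print(metrics)
```

Macro averaging gives every class equal weight regardless of its frequency, which is why it is informative on unbalanced data.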

Results analysis

Baseline comparison

Table 3 compares the proposed Opinion-BERT model with the baseline models (BERT, RoBERTa, and DistilBERT) on the status and sentiment classification tasks. The proposed model achieved the highest accuracy, macro precision, recall, and F1-score, consistently outperforming the baselines in both tasks. For status classification, Opinion-BERT reached an accuracy of 93.74%, compared with 90.98% for BERT, the next-best model. For sentiment classification, Opinion-BERT also outperformed BERT, with 96.25% accuracy. We attribute the model’s higher performance to the incorporation of attention-based opinion embeddings, which improve its comprehension of complex contextual information. Furthermore, the higher F1-scores in both tasks, together with more balanced precision and recall values, indicate that Opinion-BERT identifies both positive and negative examples reliably. This demonstrates the effect of combining the BERT-CNN-BiGRU architecture with opinion-aware embeddings.

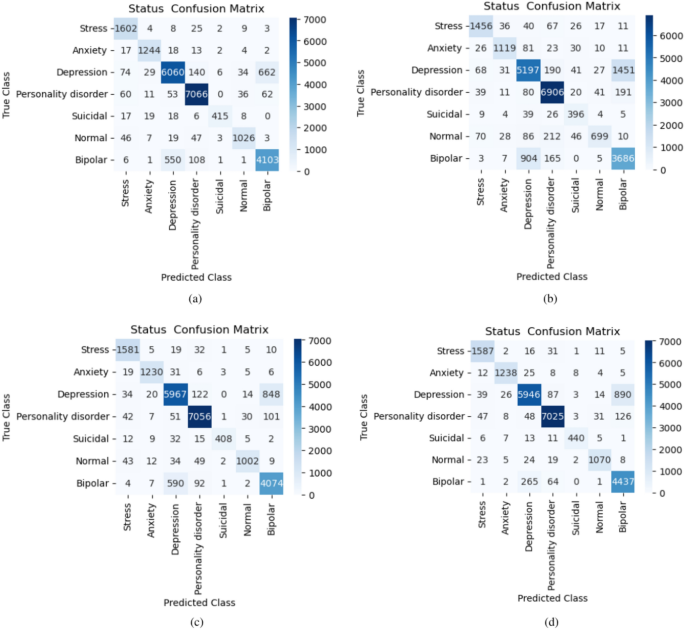

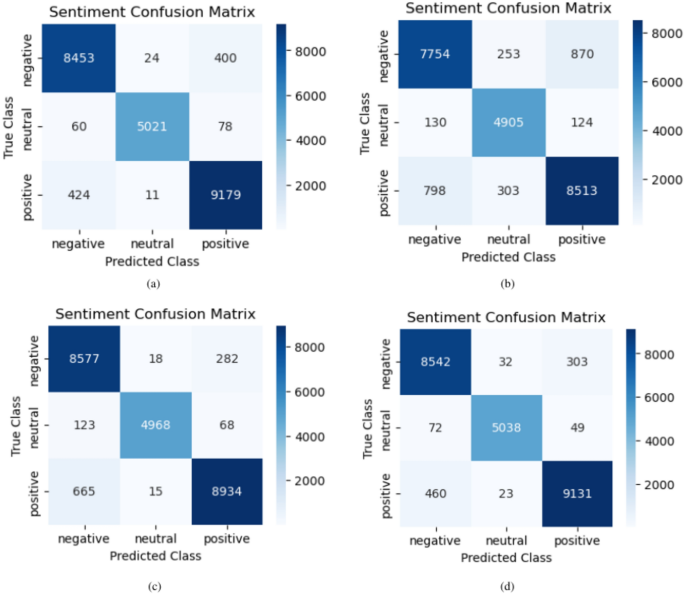

Figs. 4 and 5 show the confusion matrices for the status and sentiment classification tasks, respectively, across the different models. Fig. 4 contrasts the effectiveness of BERT, RoBERTa, DistilBERT, and the proposed model in categorizing mental health status into classes including stress, anxiety, depression, and personality disorder. The proposed model’s lower misclassification rates, particularly in more difficult categories such as bipolar and suicidal, demonstrate improved prediction accuracy across several categories. For instance, it identifies bipolar instances more accurately, with a higher count in the diagonal element for the true class.

The confusion matrices in Fig. 5 show how well the models distinguish between positive, neutral, and negative sentiment. Compared with the BERT and RoBERTa models, the proposed model predicts the Positive and Neutral classes more accurately and misclassifies fewer cases. It makes fewer mistakes in differentiating between Positive and Neutral sentiments, which results in improved overall performance metrics; this is especially evident in the accurate classification of positive sentiment. These outcomes demonstrate the effectiveness of the proposed design, which combines CNN, BiGRU, and attention mechanisms to capture subtle linguistic signals essential for sentiment and status predictions.

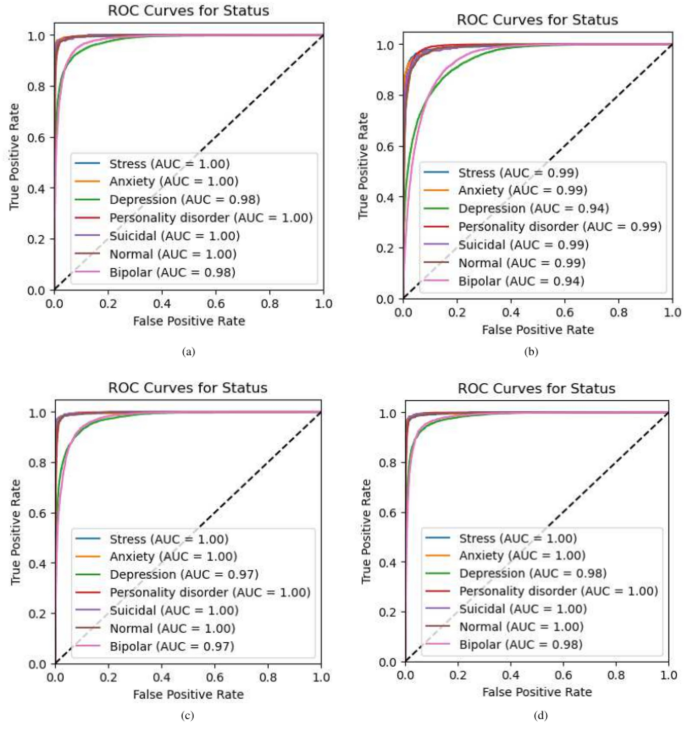

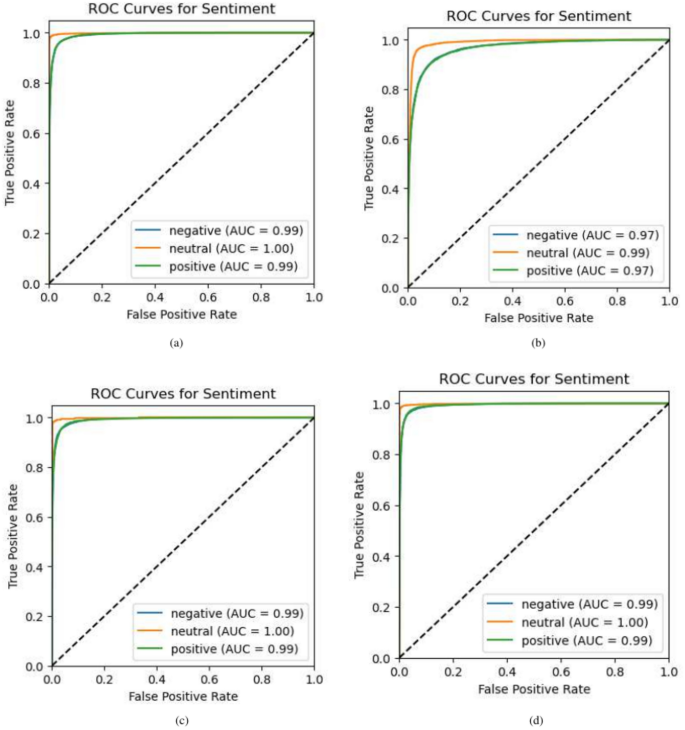

Figs. 6 and 7 show the ROC curves of the models for the status and sentiment classification tasks, respectively. These curves plot the true positive rate (sensitivity) against the false positive rate at different threshold values and show how well each model discriminates between the classes.

As shown in Fig. 6, the proposed model’s high AUC values across all mental health status categories indicate strong discriminative capacity. The model attained perfect or near-perfect AUC values (1.00) for categories such as stress, anxiety, and suicidal thoughts. Notably, the proposed model achieves AUC values of 0.98 in more difficult categories such as depression and bipolar disorder, exceeding RoBERTa and DistilBERT; these categories can be hard to separate because of overlapping symptoms. This demonstrates how well the proposed approach can identify subtle patterns connected to various mental health issues.

Fig. 7 likewise shows strong performance in the sentiment classification task. The proposed model consistently outperformed the BERT and RoBERTa models, producing AUC values of 0.99 or higher across the Positive, Neutral, and Negative classes. This suggests that the architecture combining CNN and BiGRU layers with attention mechanisms captures the subtleties of sentiment well, which reduces misclassifications and improves overall precision, particularly when separating positive and neutral sentiments. These ROC curves highlight the accuracy and dependability of the proposed model in sentiment and status classification tasks.
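Per-class AUC values of the kind reported in these figures are typically obtained in a one-vs-rest fashion: for each class, its predicted probability is treated as a score, and the AUC equals the probability that a randomly chosen example of that class is scored higher than a randomly chosen example of any other class. The sketch below computes this pairwise form directly in pure Python. The one-vs-rest setup is an assumption about how the curves were produced, and the probabilities and labels are invented.

```python
def roc_auc(scores, is_positive):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(prob_rows, y_true, class_index):
    """AUC for one class against all others, using that class's predicted probability."""
    scores = [row[class_index] for row in prob_rows]
    is_positive = [y == class_index for y in y_true]
    return roc_auc(scores, is_positive)

# Invented softmax outputs for five texts over three classes (indices 0, 1, 2).
prob_rows = [
    [0.70, 0.20, 0.10],
    [0.60, 0.30, 0.10],
    [0.20, 0.50, 0.30],
    [0.30, 0.30, 0.40],
    [0.65, 0.25, 0.10],
]
y_true = [0, 0, 1, 2, 1]

auc_per_class = [one_vs_rest_auc(prob_rows, y_true, c) for c in range(3)]
print(auc_per_class)
```

The pairwise loop is quadratic in the number of examples, which is fine for illustration; sorting-based implementations are preferable for full test sets.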

Comparison of sentiment lexicons

Table 4 highlights the performance comparison of sentiment lexicons (Afinn, VADER, and TextBlob) across status and sentiment classification tasks. TextBlob consistently outperforms the others, achieving the highest test accuracy of 93.74% for status classification and 96.25% for sentiment classification. It also demonstrates superior macro precision, recall, and F1-scores, reaching 94.17%, 94.48%, and 94.32% for status classification, and 96.50%, 96.68%, and 96.58% for sentiment classification. VADER shows strong performance, particularly in sentiment classification, with a test accuracy of 94.12% and an F1-score of 93.68%. Afinn performs reliably, achieving a sentiment classification F1-score of 91.97%. As shown in Table 4, TextBlob proves to be the most effective lexicon for sentiment analysis, with VADER and Afinn providing solid alternatives for specific use cases.

Ablation study

Table 5 presents the results of the ablation study, which assessed how different model variants performed on the sentiment and status classification tasks. The variants evaluated were BERT with opinion embedding, BERT-CNN-BiGRU with attention-based opinion embedding, BERT-BiGRU with opinion embedding, BERT-CNN with opinion embedding, BERT-CNN-BiGRU without opinion embedding, and the proposed Opinion-BERT with attention-based opinion embedding. For both classification tasks, each model’s test accuracy, macro precision, recall, and F1-score are reported.

Notably, the proposed Opinion-BERT achieved the highest test accuracy, 93.74% for status classification and 96.25% for sentiment classification, demonstrating the efficacy of attention-based opinion embedding in capturing subtle semantic links. By concentrating on the important elements of the input text, this embedding technique improves the model’s comprehension and contextualization of the attitudes conveyed. The attention mechanism lets the model weigh words or phrases according to their relevance to the attitude under study, which produces more accurate predictions.

Additionally, BERT-CNN-BiGRU with conventional opinion embedding performed competitively, particularly in status categorization, where it achieved 91.94% accuracy. The CNN and BiGRU layers work together to effectively extract features and comprehend the context, both of which are essential for precise categorization.

Furthermore, the findings show that opinion embedding is important for enhancing performance. Illustrating the significance of integrating domain-specific information, BERT-BiGRU with opinion embedding fared better than versions utilizing conventional embeddings alone, although it had lower accuracy than the proposed model. The BERT-CNN-BiGRU model without opinion embedding, on the other hand, performed the worst, highlighting the need for opinion embedding to increase model efficacy.

Overall, the findings demonstrate how model architecture and embedding strategy affect performance, highlighting the significance of customized methods in opinion analysis, especially the inclusion of attention mechanisms in the proposed Opinion-BERT.

Prediction analysis

Table 6 compares the predicted and actual sentiment and mental health status for several models, including BERT, RoBERTa, DistilBERT, and the proposed Opinion-BERT. In the first example, where the actual status was “Depression,” the baseline models predicted the status as “Suicidal,” while the predicted sentiment remained “Positive.” This shows that some models tend to detect sentiment correctly but misclassify mental health status. In the same example, however, Opinion-BERT predicted the actual status correctly, demonstrating an improved capacity to capture subtle manifestations of mental health.

Opinion-BERT performed consistently well across the other entries. For the second entry, it correctly classified the status and sentiment as “Bipolar” and “Positive,” respectively, while the other models diverged, particularly in their sentiment predictions. Opinion-BERT further demonstrated its robustness by maintaining accurate predictions for both neutral and negative examples. These findings suggest that, in comparison to its competitors, Opinion-BERT’s attention-based opinion embedding may provide a deeper understanding of mental health manifestations and improve classification performance. This table demonstrates how well the proposed model interprets complicated sentiments and mental health states in a variety of textual inputs.

Comparison with existing work

A comparison of several methods for sentiment analysis and mental health identification across datasets is presented in Table 7. Kokane et al.42 applied NLP transformers such as DistilBERT to social media data and obtained accuracies of 84% on Reddit and 91% on Twitter. Chen et al.43 achieved a 92.35% accuracy rate for hotel reviews by combining BERT with CNN, BiLSTM, and attention mechanisms to overcome the conventional drawbacks of CNN and RNN. Using a Bi-LSTM architecture on social media data from sites such as Facebook, Instagram, and Twitter, Selva Mary et al.44 were able to detect depression with a 98.5% accuracy rate. Sowbarnigaa et al.45 classified depression with a 93% accuracy rate using a CNN-LSTM combination. Using the EmoMent corpus and RoBERTa, Atapattu et al.46 concentrated on South Asian settings and obtained F1 values of 0.76 and 0.77 for post-categorization and mental health prediction, respectively. The intricacy of the MAMS dataset was addressed by the work of Wu et al.47 on multi-aspect sentiment analysis using a RoBERTa-TMM ensemble, which demonstrated good F1 scores in both ATSA (85.24%) and ACSA (79.41%). In contrast, our study uses a multi-input neural network called Opinion-BERT, which integrates CNN, BiGRU, Transformer blocks, token embeddings, and attention mechanisms. It achieved an accuracy of 93.74% for classifying mental health status and 96.25% for sentiment analysis. This demonstrates how well our method handles the complex and multidimensional characteristics of mental health text.

Discussion

The proposed Opinion-BERT model has shown great promise for improving mental health analysis through multi-task learning by integrating attention-based opinion embeddings. Understanding both explicit and subtle material in texts pertaining to mental health has been demonstrated to be greatly aided by the combination of opinion-specific data with contextual embeddings of BERT. This integrated method reflects the intricacies of human language, which frequently consists of a combination of ideas, feelings, and factual information that is difficult for standard models to comprehend.

The effect of opinion embeddings on overall model performance is one noteworthy finding. The ablation study makes it evident that adding opinion embeddings improves both the sentiment and status classification tasks, so incorporating subjective signals is crucial. These results suggest that opinions are important in mental health analysis because they frequently give context to emotional states and behavioral inclinations. The attention mechanism of the OpinionsEmbedding Layer finds the most pertinent parts of the text from which to extract sentiment-related information, which enhances the accuracy and dependability of the model.

Additionally, the hybrid design that included CNN and BiGRU layers worked well. Local patterns and relationships, including phrases and word n-grams, are well captured by the CNN component, but the BiGRU component represents the long-term context and sequential dependencies. In texts on mental health, which may contain subtle tone changes, sarcasm, or complicated emotional states that call for an awareness of both local and global text elements, this combination is particularly helpful.

Furthermore, by utilizing shared information between the tasks, the multi-task learning architecture enabled the model to predict sentiment and status at the same time. This shared learning was beneficial because it improved generalization and decreased the possibility of overfitting to a single task. The findings support the idea that multi-task learning works well in complicated fields like mental health, where overlapping variables affect different emotional states.

All things considered, the Opinion-BERT model shows promise for enhancing mental health analysis through the use of cutting-edge NLP approaches. A step toward developing more precise and thorough language models for this delicate and important topic has been made with the effective integration of context and subjective perspectives.

Limitations and future work

Despite the encouraging outcomes of the Opinion-BERT model, it is important to recognize several limitations. First, both the quality and representativeness of the training data are critical to the success of the model. Real-world applications may perform less well if the dataset is undiversified or skewed toward particular emotions or mental health issues. While the model’s dependence on attention-based embeddings is advantageous for capturing context, it can also present problems such as overfitting, especially in situations where there is a lack of data. Moreover, there is still uncertainty over the interpretability of the model’s choices. Although the attention mechanism emphasizes significant terms, it might be challenging to comprehend the underlying logic of certain forecasts.

To strengthen the generalizability of the Opinion-BERT model across a range of demographics and circumstances, future research should concentrate on expanding and diversifying its datasets. Further improvements in classification accuracy and robustness may come from investigating different embedding structures and methodologies, such as adding knowledge graphs or strengthening multi-task learning techniques. Future research could also focus on including explainability tools to provide more precise information on how the model makes decisions. Finally, applying the Opinion-BERT framework to other fields, including social media and healthcare, may expand its scope and foster a more thorough comprehension of mental health sentiment across various settings.

Conclusion

We introduced the Opinion-BERT model in this study, which effectively integrates attention-based opinion embeddings to classify emotional states and mental health conditions. Through extensive experimentation, including an ablation study, we demonstrated that incorporating opinion-related features significantly enhances the model’s performance. Our results show that Opinion-BERT outperforms baseline models across key evaluation metrics, such as accuracy, precision, recall, and F1-score.

Our findings emphasize the critical role of both emotional and contextual cues in improving the accuracy of machine learning models for mental health analysis. By combining sentiment-specific embeddings with advanced contextual representations from BERT, we lay a strong foundation for future advancements in sentiment analysis and multi-task learning frameworks.

This research opens new avenues for more effective and nuanced mental health evaluations, offering the potential for better understanding and intervention in this important field. Integrating sentiment analysis with mental health assessments not only improves classification accuracy but also contributes to more insightful and adaptable mental health monitoring tools.

In conclusion, the Opinion-BERT model takes a significant step forward in leveraging machine learning for mental health applications, offering a robust framework for future research and practical implementation in the field.

Data availability

Dataset available at https://shorturl.at/LzeMH.

References

Vigo, D., Thornicroft, G. & Atun, R. Estimating the true global burden of mental illness. Lancet Psychiatr. 3, 171–178 (2016).

Ghani, N. A., Hamid, S., Hashem, I. A. T. & Ahmed, E. Social media big data analytics: A survey. Comput. Hum. Behav. 101, 417–428 (2019).

Kusal, S. et al. A systematic review of applications of natural language processing and future challenges with special emphasis in text-based emotion detection. Artif. Intell. Rev. 56, 15129–15215 (2023).

Fudholi, D. H. Mental health prediction model on social media data using CNN-BILSTM. In Kinetik: Game technology, information system, computer network, computing, electronics, and control 29–44 (2024).

Gratch, J. & Marsella, S. A domain-independent framework for modeling emotion. Cogn. Syst. Res. 5, 269–306 (2004).

Suleiman, S. R. & Crosman, I. (eds.) The reader in the text: Essays on audience and interpretation, Vol. 617 (Princeton University Press, 2014).

Dogra, V. et al. A complete process of text classification system using state-of-the-art NLP models. Comput. Intell. Neurosci. 2022, 1883698 (2022).

Acheampong, F. A., Nunoo-Mensah, H. & Chen, W. Transformer models for text-based emotion detection: A review of BERT-based approaches. Artif. Intell. Rev. 54, 5789–5829 (2021).

Devlin, J., Chang, M., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. CoRR abs/1810.04805 (2018). arXiv:1810.04805.

Liu, Y. et al. Roberta: A robustly optimized BERT pretraining approach. CoRR abs/1907.11692 (2019). arXiv:1907.11692.

Sanh, V., Debut, L., Chaumond, J. & Wolf, T. Distilbert, a distilled version of BERT: Smaller, faster, cheaper and lighter (2020). arXiv:1910.01108.

Singh, A. The early detection and diagnosis of mental health status employing NLP-based methods with ml classifiers. J. Mental Health Technol. 12, 45–60 (2024).

Inamdar, S., Chapekar, R., Gite, S. & Pradhan, B. Machine learning driven mental stress detection on reddit posts using natural language processing. Hum.-Centric Intell. Syst. 3, 80–91 (2023).

Alanazi, S. A. et al. Public’s mental health monitoring via sentimental analysis of financial text using machine learning techniques. Int. J. Environ. Res. Public Health 19, 9695 (2022).

Abd Rahman, R., Omar, K., Noah, S. A. M., Danuri, M. S. N. M. & Al-Garadi, M. A. Application of machine learning methods in mental health detection: A systematic review. IEEE Access 8, 183952–183964 (2020).

Abdulsalam, A., Alhothali, A. & Al-Ghamdi, S. Detecting suicidality in Arabic tweets using machine learning and deep learning techniques. Arabian J. Sci. Eng. 1–14 (2024).

Almeqren, M. A., Almuqren, L., Alhayan, F., Cristea, A. I. & Pennington, D. Using deep learning to analyze the psychological effects of COVID-19. Front. Psychol. 14, 962854 (2023).

Ameer, I., Arif, M., Sidorov, G., Gómez-Adorno, H. & Gelbukh, A. Mental illness classification on social media texts using deep learning and transfer learning. arXiv preprint arXiv:2207.01012 (2022).

Su, C., Xu, Z., Pathak, J. & Wang, F. Deep learning in mental health outcome research: A scoping review. Transl. Psychiatry 10, 116 (2020).

Liu, J. & Su, M. Enhancing mental health condition detection on social media through multi-task learning. medRxiv 2024–02 (2024).

Buddhitha, P. & Inkpen, D. Multi-task learning to detect suicide ideation and mental disorders among social media users. Front. Res. Metrics Anal. 8, 1152535 (2023).

Sarkar, S., Alhamadani, A., Alkulaib, L. & Lu, C. T. Predicting depression and anxiety on Reddit: a multi-task learning approach. In 2022 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), 427–435 (IEEE, 2022).

Li, C., Braud, C. & Amblard, M. Multi-task learning for depression detection in dialogs. arXiv preprint arXiv:2208.10250 (2022).

Plaza-Del-Arco, F. M., Molina-González, M. D., Ureña-López, L. A. & Martín-Valdivia, M. T. A multi-task learning approach to hate speech detection leveraging sentiment analysis. IEEE Access 9, 112478–112489 (2021).

Jin, N., Wu, J., Ma, X., Yan, K. & Mo, Y. Multi-task learning model based on multi-scale CNN and LSTM for sentiment classification. IEEE Access 8, 77060–77072 (2020).

Malik, S. et al. Attention-aware with stacked embedding for sentiment analysis of student feedback through deep learning techniques. PeerJ Comput. Sci. 10, e2283 (2024).

Chen, N., Sun, Y. & Yan, Y. Sentiment analysis and research based on two-channel parallel hybrid neural network model with attention mechanism. IET Control Theory Appl. 17, 2259–2267 (2023).

Tiwari, D. & Nagpal, B. KEAHT: A knowledge-enriched attention-based hybrid transformer model for social sentiment analysis. N. Gener. Comput. 40, 1165–1202 (2022).

Huang, F. et al. Attention-emotion-enhanced convolutional LSTM for sentiment analysis. IEEE Trans. Neural Netw. Learn. Syst. 33, 4332–4345 (2021).

Han, W. & Kando, N. Opinion mining with deep contextualized embeddings. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Student Research Workshop, 35–42 (2019).

Jebbara, S. & Cimiano, P. Improving opinion-target extraction with character-level word embeddings. arXiv preprint arXiv:1709.06317 (2017).

Liu, P., Joty, S. & Meng, H. Fine-grained opinion mining with recurrent neural networks and word embeddings. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, 1433–1443 (2015).

Gudivada, V., Apon, A. & Ding, J. Data quality considerations for big data and machine learning: Going beyond data cleaning and transformations. Int. J. Adv. Softw. 10, 1–20 (2017).

Vatri, A. & McGillivray, B. Lemmatization for Ancient Greek: An experimental assessment of the state of the art. J. Greek Linguistics 20, 179–196 (2020).

Bondugula, R., Udgata, S., Rahman, N. & Sivangi, K. Intelligent analysis of multimedia healthcare data using natural language processing and deep-learning techniques. In Edge-of-things in personalized healthcare support systems, 335–358 (Academic Press, 2022).

Xiang, L., Li, Y., Hao, W., Yang, P. & Shen, X. Reversible natural language watermarking using synonym substitution and arithmetic coding. Comput. Mater. Continua 55, 541–559. https://doi.org/10.3970/cmc.2018.03510 (2018).

Michalopoulos, G., McKillop, I., Wong, A. & Chen, H. LexSubCon: Integrating knowledge from lexical resources into contextual embeddings for lexical substitution. arXiv preprint arXiv:2107.05132 (2021).

Huang, K., Geller, J., Halper, M., Perl, Y. & Xu, J. Using WordNet synonym substitution to enhance UMLS source integration. Artif. Intell. Med. 46, 97–109 (2009).

Hermansyah, R. & Sarno, R. Sentiment analysis about product and service evaluation of PT Telekomunikasi Indonesia Tbk from tweets using TextBlob, naive Bayes & k-NN method. In 2020 International Seminar on Application for Technology of Information and Communication (iSemantic), 511–516 (IEEE, 2020).

Hilal, A. & Chachoo, M. A. Aspect based opinion mining of online reviews. Gedrag Organisatie Rev. 33, 1185–1199 (2020).

Meng, Y. et al. Text classification using label names only: A language model self-training approach. arXiv preprint arXiv:2010.07245 (2020).

Kokane, V., Abhyankar, A., Shrirao, N. & Khadkikar, P. Predicting mental illness (depression) with the help of NLP transformers. In 2024 Second International Conference on Data Science and Information System (ICDSIS), 1–5 (IEEE, 2024).

Chen, N., Sun, Y. & Yan, Y. Sentiment analysis and research based on two-channel parallel hybrid neural network model with attention mechanism. IET Control Theory Appl. 17, 2259–2267 (2023).

Selva Mary, G. et al. Enhancing conversational sentimental analysis for psychological depression prediction with BI-LSTM. J. Autonomous Intell. 7 (2023).

Sowbarnigaa, K. S. et al. Leveraging multi-class sentiment analysis on social media text for detecting signs of depression. Appl. Comput. Eng. 2, 1020–1029. https://doi.org/10.54254/2755-2721/2/20220660 (2023).

Atapattu, T. et al. EmoMent: An emotion annotated mental health corpus from two South Asian countries. arXiv preprint arXiv:2208.08486 (2022).

Wu, Z., Ying, C., Dai, X., Huang, S. & Chen, J. Transformer-based multi-aspect modeling for multi-aspect multi-sentiment analysis. In Natural Language Processing and Chinese Computing: 9th CCF International Conference, NLPCC 2020, Zhengzhou, China, October 14–18, 2020, Proceedings, Part II, 546–557 (Springer International Publishing, 2020).

Acknowledgements

The authors extend their appreciation to the Research Chair of Online Dialogue and Cultural Communication, King Saud University, Riyadh, Saudi Arabia for funding this study.

Funding

This research is funded by the Research Chair of Online Dialogue and Cultural Communication, King Saud University, Riyadh, Saudi Arabia.

Author information

Contributions

M.M.H.: conceptualization, development of the proposed model, writing of the original draft. M.S.H.: coding implementation, writing of the original draft. M.F.M.: supervision, manuscript review. M.S.: supervision, validation, manuscript editing. S.A.: supervision, validation, manuscript editing.

Ethics declarations

Competing interests

The authors assert that there are no conflicts of interest to disclose.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hossain, M.M., Hossain, M.S., Mridha, M.F. et al. Multi task opinion enhanced hybrid BERT model for mental health analysis. Sci Rep 15, 3332 (2025). https://doi.org/10.1038/s41598-025-86124-6

DOI: https://doi.org/10.1038/s41598-025-86124-6