Abstract

Colorectal cancer (CRC) is a prevalent malignant tumor that presents significant challenges to both public health and healthcare systems. The aim of this study was to develop a machine learning model based on five years of clinical follow-up data from CRC patients to accurately identify individuals at risk of poor prognosis. This study included 411 CRC patients who underwent surgery at Yixing Hospital and completed the follow-up process. A modeling dataset containing 73 characteristic variables was established by collecting demographic information, clinical blood test indicators, histopathological results, and additional treatment-related information. Decision tree, random forest, support vector machine, and extreme gradient boosting (XGBoost) models were built on the features identified through recursive feature elimination (RFE). The Cox proportional hazards model was used as the baseline for model comparison. During model training, hyperparameters were optimized using a grid search. Model performance was comprehensively assessed using multiple metrics, including accuracy, F1 score, Brier score, sensitivity, specificity, positive predictive value, negative predictive value, the receiver operating characteristic curve, the calibration curve, and decision curve analysis. For the selected optimal model, the decision-making process was interpreted using the SHapley Additive exPlanations (SHAP) method. The results showed that the optimal RFE-XGBoost model achieved an accuracy of 0.83 (95% CI 0.76–0.90), an F1 score of 0.81 (95% CI 0.72–0.88), and an area under the receiver operating characteristic curve of 0.89 (95% CI 0.82–0.94). Furthermore, the model exhibited superior calibration and clinical utility. SHAP analysis revealed that increased perioperative transfusion quantity, higher tumor AJCC stage, elevated carcinoembryonic antigen level, elevated carbohydrate antigen 19–9 (CA19-9) level, advanced age, and elevated carbohydrate antigen 125 (CA125) level were correlated with increased individual mortality risk. The RFE-XGBoost model demonstrated excellent performance in predicting CRC patient prognosis, and the application of the SHAP method bolstered the model’s credibility and utility.

Introduction

Colorectal cancer (CRC), which originates from abnormal cell proliferation in the mucosa of the colon or rectum, is one of the most common malignant tumors of the digestive system. The incidence and mortality rates of CRC are continuously increasing, making it the third most common cancer globally and posing significant challenges to public health and healthcare systems1. According to the World Health Organization (WHO), there were approximately 1.93 million new cases of CRC and 935,000 CRC-related deaths worldwide in 2020, accounting for about 10% of all new cancer cases and 9.4% of all cancer deaths, respectively2. From 2000 to 2016, the incidence and mortality rates of CRC in China consistently increased annually, ranking second and fourth, respectively, among malignant tumors3. This trend exacerbates the burden on public health.

The Chinese Protocol of Diagnosis and Treatment of CRC (2023 edition) indicates that the current treatment for CRC primarily involves surgical resection, supplemented by preoperative neoadjuvant therapy, postoperative chemoradiotherapy, targeted therapy, and immunotherapy as part of a comprehensive treatment approach4. Standardized treatment is a crucial factor affecting the prognosis of CRC patients. Actively promoting standardized early diagnosis and treatment is essential for further improving the prognosis of CRC patients5. The specific treatment and follow-up plans need to be assessed by a clinician based on the patient’s individual situation and prognosis6,7. Therefore, accurately assessing patient prognosis risks is essential for formulating personalized treatment interventions. Ensuring adequate treatment while minimizing unnecessary iatrogenic harm and the economic burden of overintervention has become a key factor affecting the survival time and quality of life of CRC patients.

Currently, clinicians mainly assess patients’ prognostic risk based on staging by the American Joint Committee on Cancer (AJCC)8,9. However, due to tumor heterogeneity, even patients at the same stage may exhibit different survival outcomes10,11. To more accurately identify high-risk patients with adverse prognoses, various assessment tools have been developed, most of which are based on traditional logistic regression and Cox proportional hazards regression models12. Traditional statistical models struggle to handle nonlinear relationships and high-dimensional data; in contrast, machine learning (ML) models have fewer assumption constraints, enabling them to better capture complex patterns in the data, thus exhibiting superior predictive performance13. However, ML models also face challenges; when feature dimensions are too high, ML models suffer from issues such as high complexity, low generalization ability, and high computational costs14. Additionally, ML models often struggle to provide intuitive or easily understandable explanations, posing challenges for clinicians' decision-making15.

To address these issues, we collected clinically accessible data from hospitalized CRC patients and established a longitudinal cohort dataset through follow-up. For this follow-up dataset, we employed various models combined with the recursive feature elimination (RFE) method to construct and select the best-performing predictive model. Furthermore, we utilized the SHapley Additive exPlanations (SHAP) method to visualize the “black-box model” for decision-making. The RFE algorithm can eliminate redundant features, reduce model complexity, and improve model generalization ability. ML models can capture complex relationships within the data, leading to more accurate predictions. SHAP can explain the model’s prediction results, aiding in the understanding of the underlying principles of the model’s operation. By integrating RFE algorithms, ML models, and SHAP, a predictive model with high accuracy and strong interpretability can be constructed16,17,18. Therefore, this study employed the aforementioned methods for modelling, aiming to enhance the accuracy and interpretability of the CRC prognostic model, thus providing more reliable support for personalized treatment and clinical decision-making.

Materials and methods

Study design and data source

This study aimed to develop and validate a prognostic model for CRC based on a five-year follow-up dataset of CRC patients. CRC patients who underwent surgical treatment at Yixing Hospital were selected as the study subjects, and a total of 470 patients were included at baseline. The inclusion criteria were as follows: (1) patients who were hospitalized and underwent surgical treatment between January 2006 and December 2010; and (2) patients who were diagnosed with primary CRC by histopathology. The exclusion criteria were as follows: (1) patients who had received chemotherapy, radiotherapy, or immunotherapy prior to the operation; (2) patients with malignant tumors of other organs or tissues or other serious systemic diseases (e.g., liver or kidney dysfunction, haematological diseases, infections or inflammatory diseases); and (3) patients who were lost to follow-up. The collected data included demographic information, clinical blood test indicators, histopathological results, and additional treatment-related information. All data were obtained from the medical records documented in the clinical electronic medical record system during the patients' hospitalization. The blood test indicators specifically referred to the laboratory results of the last blood sample collected before surgery. Follow-up for survival time and status of these patients was completed before December 30, 2015. The endpoint was overall survival (OS), calculated from the date of diagnosis to the date of death or the last follow-up. For the purpose of the analysis, patients were divided into two groups based on their five-year survival status: those who died within five years and those who survived for at least five years.

Data cleaning and splitting

Based on clinical availability and modeling requirements, a sample was deleted if more than 30% of its feature variables were missing, and a feature variable was deleted if its value was missing in more than 30% of the samples. The 30% threshold is commonly used in data science and ML practice to retain a sufficient number of samples and features while avoiding excessive missing data that could compromise data quality, reliability, and model stability19,20. Eventually, a clinical follow-up dataset containing 411 patients with 73 feature variables was established. The specific variables collected are shown in Table S1. The proportion of missing data in the follow-up dataset is shown in Fig. S1 and Table S2. For feature variables with a missing value rate of less than 30%, Little's MCAR (Missing Completely At Random) test was used to assess the randomness of the missing data. The p-value was 0.922 (greater than 0.05), which is consistent with the data being missing completely at random (MCAR). For this missing pattern, multiple imputation was used to fill in the gaps21. Multiple imputation generated several complete datasets, each representing a different estimate of the missing values22. The Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC), both widely accepted model selection criteria, were used to balance goodness of fit and model complexity23,24,25. Among the datasets generated by multiple imputation, the one with the smallest AIC and BIC values was selected as the modeling dataset for subsequent model construction. Meanwhile, additional datasets were generated using Random Forest imputation and K-Nearest Neighbors (KNN) imputation for subsequent sensitivity analysis. The modeling dataset was randomly split into training and validation sets at a ratio of 7:3. Feature selection and model training were conducted on the training set, while model evaluation was performed on the validation set to verify the performance of the models developed during the training phase.
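The missingness filter and the 7:3 split described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the column names and toy records are hypothetical, missing values are represented as `None`, samples are filtered before features (the text does not specify the order), and the imputation step itself is omitted.

```python
import random

def drop_sparse(rows, features, threshold=0.30):
    """Apply the missingness threshold to samples, then to features.

    `rows` is a list of dicts; a missing value is represented by None.
    Filtering samples before features is an assumption of this sketch.
    """
    # Keep samples in which at most `threshold` of the features are missing.
    kept_rows = [
        r for r in rows
        if sum(r[f] is None for f in features) / len(features) <= threshold
    ]
    # Keep features that are missing in at most `threshold` of those samples.
    kept_features = [
        f for f in features
        if sum(r[f] is None for r in kept_rows) / len(kept_rows) <= threshold
    ]
    return kept_rows, kept_features

def split_train_validation(rows, train_fraction=0.7, seed=0):
    """Shuffle a copy of `rows` and split it into training and validation parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Toy records with hypothetical column names (not the study data).
toy_rows = [
    {"age": 64, "cea": 3.1, "ca125": None, "albumin": 40.0},
    {"age": None, "cea": None, "ca125": None, "albumin": 38.0},
    {"age": 70, "cea": 5.0, "ca125": None, "albumin": 35.0},
    {"age": 58, "cea": 2.2, "ca125": 12.0, "albumin": 42.0},
]
kept_rows, kept_features = drop_sparse(toy_rows, ["age", "cea", "ca125", "albumin"])
train_rows, validation_rows = split_train_validation(kept_rows)
```

In the toy data, the second record is dropped because three of its four values are missing, after which `ca125` is dropped because it is missing in two of the three remaining records.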

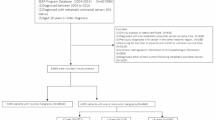

Feature selection and model building

Based on the characteristics of the dataset, five models were selected to construct prognostic models: the Cox proportional hazards model, decision tree (DT), random forest (RF), support vector machine (SVM), and extreme gradient boosting (XGBoost). The Cox proportional hazards model was used as the baseline for model comparison. The steps of feature selection and model training were as follows: (1) Each model was fitted using all feature variables, and the hyperparameters were optimized through a grid search. During the grid search, ten-fold cross-validation was employed, with accuracy used as the evaluation metric to select the optimal hyperparameter combination. (2) After hyperparameter tuning, feature selection was performed separately for each model, resulting in different feature variables being included in each final model. For the Cox proportional hazards model, the Least Absolute Shrinkage and Selection Operator (Lasso) was used for variable selection. The Lasso method introduces an L1 norm penalty that encourages sparsity in the model coefficients, thereby achieving both variable selection and regularization. For the other four ML models, RFE was used for feature selection. RFE is a wrapper-based feature selection method that iteratively identifies and removes the least important features based on the feature importance ranking provided by the model. RFE was run separately with each model, with ten-fold cross-validation accuracy as the evaluation metric, so that a different optimal feature subset was selected for each of these four ML models. (3) Each model was refitted and trained on the optimal feature subset obtained for it through feature selection. Parameters were adjusted, and the models were finalized to complete the training process. The specific process of model construction is shown in Fig. 1.
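The elimination loop at the heart of RFE can be illustrated with a small self-contained sketch. Several things here are assumptions made for illustration and are not the study's setup: a logistic regression fitted by gradient descent stands in for the tree-based and SVM models, the absolute coefficient is used as the importance score (reasonable only because the synthetic features share a scale), and the cross-validated choice of subset size is omitted.

```python
import math
import random

def fit_logistic(X, y, epochs=300, lr=0.1):
    """Fit logistic regression by batch gradient descent; return (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            z = b + sum(wj * xj for wj, xj in zip(w, xi))
            z = max(-30.0, min(30.0, z))  # keep exp() in a safe range
            err = 1.0 / (1.0 + math.exp(-z)) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def rfe_elimination_order(X, y, names):
    """Refit on the surviving features and drop the weakest one each round.

    Returns feature names ordered from first eliminated to last survivor.
    """
    active = list(range(len(names)))
    order = []
    while len(active) > 1:
        X_active = [[row[j] for j in active] for row in X]
        w, _ = fit_logistic(X_active, y)
        # Importance is |coefficient|; the least important feature is removed.
        weakest = min(range(len(active)), key=lambda k: abs(w[k]))
        order.append(names[active.pop(weakest)])
    order.append(names[active[0]])
    return order

# Synthetic data: only the first column carries signal (not the study data).
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(200)]
y = [1 if row[0] > 0 else 0 for row in X]
order = rfe_elimination_order(X, y, ["signal", "noise_a", "noise_b"])
```

Because the model is refitted after every removal, the ranking can change as features drop out, which is what distinguishes a wrapper method such as RFE from a one-pass filter.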

Model evaluation and interpretation

The models were comprehensively evaluated using various metrics to select the optimal classifier. The evaluation metrics included sensitivity, specificity, positive predictive value, negative predictive value, F1 score, Brier score, and accuracy. For each evaluation indicator, 1000 bootstrap samples were used to calculate its 95% confidence interval. Model discrimination was assessed using receiver operating characteristic (ROC) curves, calibration was evaluated using calibration curves, and clinical utility was determined using decision curve analysis (DCA) curves. The selected optimal model was interpreted using the SHAP method. Based on Shapley value theory, the SHAP method improves model interpretability by assigning an importance value to each feature, revealing each feature's contribution to the model's output and prediction results26. This approach clearly demonstrates how each feature influences the model’s predictions, thereby increasing the model's credibility. In this study, the decision process of the model was visualized by drawing variable importance plots, SHAP summary plots, and SHAP decision plots. Additionally, to evaluate the impact of multiple imputation of missing values on model performance robustness, sensitivity analyses were conducted using complete datasets without missing values, datasets imputed with Random Forest, and datasets imputed with KNN imputation. Furthermore, to assess potential implications of missing treatment-specific information, model performance was stratified by AJCC stages, allowing for a comprehensive evaluation of the model's predictive capability across different disease progression levels. This stratified analysis provided insights into the model's reliability and generalizability across various patient subgroups, while accounting for potential information gaps in treatment documentation.
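For reference, the threshold-based metrics, the Brier score, the AUC, and a bootstrap confidence interval can be computed as in the sketch below. It assumes binary labels with 1 denoting death, a 0.5 probability cutoff, and a percentile bootstrap; the text does not state which cutoff or bootstrap variant was used, and the example labels and probabilities are made up.

```python
import random

def classification_metrics(y_true, y_prob, cutoff=0.5):
    """Threshold-based metrics plus the Brier score for binary labels."""
    y_pred = [int(p >= cutoff) for p in y_prob]
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    ratio = lambda a, b: a / b if b else float("nan")
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        # Brier score: mean squared gap between probability and outcome.
        "brier": sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true),
    }

def auc(y_true, y_prob):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [p for t, p in zip(y_true, y_prob) if t == 1]
    neg = [p for t, p in zip(y_true, y_prob) if t == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def bootstrap_ci(y_true, y_prob, metric, n_boot=1000, seed=0):
    """Percentile 95% interval for one metric from resampled patients."""
    rng = random.Random(seed)
    n = len(y_true)
    values = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        value = classification_metrics(
            [y_true[i] for i in idx], [y_prob[i] for i in idx]
        )[metric]
        if value == value:  # skip NaN from degenerate resamples
            values.append(value)
    values.sort()
    low = values[int(0.025 * len(values))]
    high = values[min(len(values) - 1, int(0.975 * len(values)))]
    return low, high

# Made-up labels and predicted probabilities, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_prob = [0.9, 0.8, 0.4, 0.2, 0.1, 0.6, 0.7, 0.3]
metrics = classification_metrics(y_true, y_prob)
accuracy_ci = bootstrap_ci(y_true, y_prob, "accuracy")
```

A lower Brier score indicates better-calibrated probabilities, whereas the other metrics depend on the chosen cutoff.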

Statistical analysis

Categorical variables are described as percentages, and between-group comparisons were made using the chi-square test. Continuous variables are described using the median and interquartile range. For continuous variables following a normal distribution, differences were analyzed using the t-test; for skewed continuous variables, the Mann–Whitney U test was used. The significance level was set at a P value of less than 0.05. All analyses in this study were conducted using Python V3.11.4 and R V4.2.2. Statistical testing was performed using the “stats” package (version 4.2.2) and “coin” package (version 1.4.3) in R, and missing values were imputed using the “mice” package (version 3.16.0), “missForest” package (version 1.5), and “VIM” package (version 6.2.2). Several packages in Python were used for data modeling, including “pandas” (version 1.5.3), “numpy” (version 1.24.3), “scikit-learn” (version 1.2.2), “xgboost” (version 1.7.3), and “lifelines” (version 0.27.7). Additionally, the “matplotlib” (version 3.7.1) and "shap" (version 0.42.1) packages were used for model interpretation and data visualization.

Ethics approval

This study was approved by the Ethics Committee of both Yixing Hospital and the Affiliated Hospital of Xuzhou Medical University in accordance with the Declaration of Helsinki (Grant No: IRB-2017-TECHNOLOGY-068). Informed consent was obtained from all the participants.

Results

Patient characteristics

This study ultimately included 411 CRC patients who underwent surgical treatment at our hospital and completed a 5-year follow-up. The differences in age, sex, and pathological stage between included and excluded patients were not statistically significant, indicating that the included population was not biased towards low-risk or high-risk cases (Supplementary Table S3). Among the included patients, 166 were female (40.389%) and 245 were male (59.611%). The median age of the patients was 64 years. Colon cancer accounted for 46.473% of the cases, while rectal cancer accounted for 53.527%. Patients in stages I, II, III, and IV accounted for 21.410%, 38.200%, 36.740%, and 3.650%, respectively. Compared with the surviving group, the deceased group had higher proportions of patients with a history of blood transfusion, low tumor differentiation, and advanced TNM and AJCC stages; a higher median age, median admission weight, and perioperative transfusion quantity; and higher values of carcinoembryonic antigen (CEA), carbohydrate antigen 125 (CA125), carbohydrate antigen 19–9 (CA19-9), percentage of neutrophils, red cell volume distribution width, prothrombin time, and fibrinogen. Conversely, red blood cell count, hemoglobin concentration, hematocrit, lymphocyte count, thrombin time, serum prealbumin, serum sodium, and serum albumin were all lower in the deceased group than in the surviving group (Supplementary Table S1).

Feature selection and model training

For the Cox proportional hazards model, a Lasso regression model with survival time as the dependent variable was established for feature selection. The model achieved the minimum mean squared error (MSE) and optimal predictive performance on the training set when 18 feature variables were selected (Supplementary Fig. S2). For the DT, RF, SVM, and XGBoost models, feature selection was performed using the RFE algorithm based on each model. The RFE algorithm iteratively selected features for these four models by evaluating accuracy with ten-fold cross-validation, ultimately choosing 5, 15, 34, and 16 feature variables, respectively. The results of the RFE iterations for each model are shown in Supplementary Fig. S3. During the model training process, grid search was used to optimize the hyperparameters. The search ranges and optimal values of the hyperparameters are shown in Table S4.

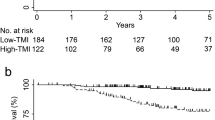

Model performance evaluation

The performance of the five models was primarily evaluated by calculating their accuracy, F1 score, sensitivity, specificity, positive predictive value, negative predictive value, and Brier score on the validation set (Table 1). The XGBoost model performed best in terms of accuracy (0.83 [95% CI 0.76–0.90]), F1 score (0.81 [95% CI 0.72–0.88]), sensitivity (0.75 [95% CI 0.64–0.86]), and negative predictive value (0.80 [95% CI 0.69–0.88]), surpassing the other four models. Its positive predictive value was slightly lower than that of the RF model, and its specificity was slightly lower than that of the RF and DT models. However, the area under the receiver operating characteristic curve (AUC) of the XGBoost model (0.89 [95% CI 0.82–0.94]) was the highest among the five models, indicating good discrimination ability (Fig. 2A). Its Brier score (0.13 [95% CI 0.09–0.17]) was the lowest, indicating the closest agreement between the predicted and actual probabilities and more stable performance than the other models (Fig. 2B). Additionally, the DCA curve demonstrated that the XGBoost model had a greater net clinical benefit, indicating its potential for clinical application (Supplementary Fig. S4). The performance of each model on the training set is shown in Table 2. Given its excellent and stable performance on both the training and validation sets, the XGBoost model was selected as the optimal model for prognosis prediction in this study. Additionally, sensitivity analysis for the best model indicated that the impact of missing data and different imputation methods on model performance was within an acceptable range, further demonstrating the robustness of the model (Supplementary Table S5). The performance evaluation stratified by AJCC stage, with hyperparameters held fixed, demonstrated stable model performance in stages II and III. A slight performance decline was observed in stage I, which could be attributed to class imbalance, as the hyperparameters were not further optimized for this specific stage. Stage IV was excluded from the stratified evaluation because it contained only positive samples. Detailed stratified performance metrics are presented in Supplementary Table S6.
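The net clinical benefit plotted in a decision curve follows the standard definition: the true-positive rate minus the false-positive rate weighted by the odds of the threshold probability. The sketch below applies that definition to made-up labels and probabilities. It is not the study's code, and the function names are illustrative.

```python
def net_benefit(y_true, y_prob, threshold):
    """Net benefit of treating patients whose predicted risk >= threshold."""
    n = len(y_true)
    tp = sum(t == 1 and p >= threshold for t, p in zip(y_true, y_prob))
    fp = sum(t == 0 and p >= threshold for t, p in zip(y_true, y_prob))
    # False positives are penalized by the odds of the threshold probability.
    return tp / n - fp / n * threshold / (1 - threshold)

def net_benefit_treat_all(y_true, threshold):
    """Reference strategy: treat everyone regardless of predicted risk."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1 - prevalence) * threshold / (1 - threshold)

# Made-up labels (1 = death) and predicted probabilities.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_prob = [0.9, 0.8, 0.4, 0.2, 0.1, 0.6, 0.7, 0.3]
model_nb = net_benefit(y_true, y_prob, threshold=0.5)
treat_all_nb = net_benefit_treat_all(y_true, threshold=0.5)
```

A decision curve repeats this calculation over a range of thresholds and compares the model with the treat-all strategy and with treating no one, whose net benefit is zero by definition.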

Global interpretation of the optimal model

To enhance the interpretability of ML models, this study employed the SHAP method to explain the factors influencing the prognosis of CRC patients. The results revealed that the top six factors most strongly associated with CRC prognosis risk were perioperative transfusion quantity, tumor AJCC stage, CEA level, CA19-9 level, age, and CA125 level (Fig. 3A). To further explore the actual impact of these risk factors on CRC prognosis, we used SHAP summary plots to illustrate the contribution of each feature to the overall model output (Fig. 3B). Higher values of perioperative transfusion quantity, AJCC stage, CEA, CA19-9, age, and CA125 were associated with higher SHAP values, indicating that individuals with higher values of these features had a greater risk of death; thus, they can be interpreted as risk factors for CRC prognosis.

SHAP representation of the XGBoost model. (A) Importance ranking of the features predicted by the model. An evaluation based on the average absolute SHAP values revealed the importance of 16 feature variables in the XGBoost model. (B) The influence of each feature on the model output. In each feature row, each point represents a sample, where the x-axis represents its SHAP value for the given feature, and the color represents the magnitude of its feature value, with red indicating higher feature values and blue indicating lower feature values. PTQ: perioperative transfusion quantity; CEA: carcinoembryonic antigen; CA199: carbohydrate antigen 19–9; CA125: carbohydrate antigen 125; MCV: mean corpuscular volume; LDH: lactate dehydrogenase; P: serum phosphorus; Na: serum sodium; AFU: α-L-fucosidase; bas percentage: basophil granulocyte percentage; PDW: platelet distribution width; PT: prothrombin time; APTT: activated partial thromboplastin time.

Personalized decision process of the optimal model

Furthermore, we visualized the model decision process for two independent samples using SHAP decision plots (Fig. 4). From Fig. 4A, it can be observed that an AJCC stage of 1 and a perioperative transfusion quantity of 0 move the prediction line lower than the average prediction, resulting in a negative effect on the prediction of a positive event (death), which can be considered protective factors. Conversely, an age of 76 years and an MCV of 92.1 move the prediction line higher than the average prediction, resulting in a positive effect on the prediction of a positive event (death), which can be considered risk factors. The final model, based on the cumulative output SHAP values of 16 feature variables, predicted a negative outcome (survival), with a predicted survival probability of 0.889. Similarly, Fig. 4B shows that an AJCC stage of 3 and a CA19-9 value of 29.3 were prognostic risk factors, while an age of 61 years was a protective factor. The final model’s cumulative output SHAP value predicted a positive outcome (death), with a predicted death probability of 0.784. The SHAP decision plots elucidate the influence of different feature values on the outcomes during the model decision and prediction process.
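The additive property that a decision plot relies on (baseline output plus per-feature contributions equals the individual prediction) can be checked with a brute-force Shapley computation on a toy function. Everything in this sketch is hypothetical: `toy_risk` and its coefficients are invented, absent features are replaced by a single reference patient, and the exhaustive enumeration is only feasible for a handful of features. It is not the tree-specific algorithm that the shap package uses for XGBoost models.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values of `model` at `x` relative to `baseline`.

    Features outside a coalition take their baseline value.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                coalition = set(subset)
                # Marginal contribution of feature i to this coalition.
                total += weight * (value(coalition | {i}) - value(coalition))
        phi.append(total)
    return phi

def toy_risk(z):
    """Hypothetical risk score with one interaction (not the fitted model)."""
    stage, transfusion, age = z
    return 0.1 * stage + 0.05 * transfusion + 0.002 * age + 0.02 * stage * transfusion

patient = [3, 2, 76]     # AJCC stage, transfusion quantity, age (made up)
reference = [2, 0, 64]   # reference patient defining the baseline output
phi = shapley_values(toy_risk, patient, reference)
reconstructed = toy_risk(reference) + sum(phi)
```

Here `reconstructed` matches `toy_risk(patient)` up to floating-point error, mirroring how the prediction line in a decision plot starts at the baseline and ends at the individual prediction.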

SHAP decision plot of the XGBoost model. (A and B) Individual predictions of two patients. In the graph, the gray vertical line indicates the baseline prediction of the model, while the colored prediction lines represent the impact of each feature of the sample on the final prediction. The movement of the colored prediction lines demonstrates how individual feature values move the output prediction above or below the average prediction, thereby identifying the magnitude and direction of the influence of feature values on the prediction results. The feature values are provided alongside the prediction lines for reference. PTQ: perioperative transfusion quantity; CEA: carcinoembryonic antigen; CA199: carbohydrate antigen 19–9; CA125: carbohydrate antigen 125; MCV: mean corpuscular volume; LDH: lactate dehydrogenase; P: serum phosphorus; Na: serum sodium; AFU: α-L-fucosidase; bas percentage: basophil granulocyte percentage; PDW: platelet distribution width; PT: prothrombin time; APTT: activated partial thromboplastin time.

Discussion

In this study, we employed feature selection combined with ML models and integrated the SHAP method to construct and validate a highly accurate and interpretable predictive model for postoperative survival status in CRC patients, aiming to guide personalized treatment and clinical decisions. Among the five prognostic models, the model constructed using XGBoost combined with the RFE algorithm exhibited the best predictive performance, with an accuracy of 0.83 (95% CI 0.76–0.90), an F1 score of 0.81 (95% CI 0.72–0.88), and an AUC of 0.89 (95% CI 0.82–0.94), outperforming the other four models. Additionally, this model demonstrated good calibration and clinical applicability. In addition, the analysis based on the SHAP method effectively highlights the key characteristics of the ML model in predicting the prognosis of CRC patients. The SHAP summary plots and decision plots of XGBoost intuitively help clinicians understand these key features.

It is worth mentioning that our modeling is based on highly accessible data extracted from real-world clinical electronic medical record systems. These systems contain a wealth of obtainable data, which may have significant value for constructing disease prognosis models. Several studies have discussed the practical value of real-world data in disease diagnosis and prognosis prediction. For example, Yoshida et al. reported that the use of real-world data could more accurately predict the risk of developing cardiovascular diseases27. Margue et al. conducted research using prospective real-world data and ML to develop an individual postoperative disease-free survival (DFS) prediction model28. This model provided accurate individual DFS predictions, outperforming traditional prognostic scores and offering more reliable support for personalized treatment.

The optimal model constructed in this study is the RFE-XGBoost model, which has several advantages. XGBoost, as a powerful gradient-boosting tree algorithm, performs well in handling large-scale datasets and complex problems. It can effectively manage various types of features, including numerical and categorical ones, reducing the complexity of feature preprocessing and achieving more accurate predictive performance. Previous studies have demonstrated the successful application of the XGBoost model in disease prediction. For instance, Li et al. utilized the XGBoost model to more accurately predict the metastatic status of breast cancer29. Additionally, Tian and Yan found that the XGBoost model demonstrated excellent accuracy and robustness in predicting the prognosis of patients with chronic heart failure30.

However, ML models also face specific challenges, such as the tendency of the XGBoost model to overfit when the feature dimension is high, as well as computational complexity. To address these challenges, this study employed the RFE algorithm for feature selection. By combining RFE and XGBoost, RFE optimized the feature set by reducing the number of features, thus reducing the complexity of the model and the risk of overfitting. These selected key features provided a more accurate and effective feature set for the XGBoost model, allowing it to focus more on learning important features. This combination further improved the predictive accuracy and generalization ability of the model. Previous studies have demonstrated the successful application of the combination of RFE and the XGBoost model. For instance, Deng et al. utilized the RFE-XGBoost model in a multiclassification task of CRC-related causes of death and reported that the RFE-based feature selection method improved the model's performance in classifying CRC-related causes of death using clinical and omics data, or other data with highly redundant and imbalanced features31. Similarly, Zhang et al. employed this algorithm for spinal disease diagnosis, compared different combinations of feature selection methods and models, and found that the RFE-XGBoost model achieved the highest accuracy and F1 score32. These research examples indicate that the RFE-XGBoost combination model has broad application prospects in disease prediction, providing strong support for improving the accuracy and efficiency of disease diagnosis and prognosis prediction.

Furthermore, a major challenge for predictive models is how to intuitively present decisions. To address this issue, this study applied the SHAP framework to XGBoost's ‘black box’ tree ensemble model to reveal the specific decision process of the model. The SHAP method elucidates the magnitude and direction of the influence of feature variables on the model's output, providing clinical insights with practical implications. In this study, SHAP analysis showed that perioperative transfusion quantity and AJCC stage were relatively important risk factors for predicting the prognosis of CRC patients. Studies have shown that perioperative blood transfusion has a significant negative impact on long-term prognosis after CRC surgery and increases the likelihood of short-term complications33. McSorley et al. arrived at similar conclusions, indicating an association between perioperative blood transfusion and postoperative inflammation, complications, and poorer survival rates in CRC surgery patients34. There may also be a synergistic effect between perioperative blood transfusion and infectious complications, leading to proinflammatory activation conducive to tumor recurrence35. In addition, AJCC staging directly indicates the patient's prognosis by reflecting the extent of tumor progression8,9.

The optimal model ultimately included 16 feature variables encompassing various aspects of patient information, including age, histopathological results, tumor markers, routine blood tests, blood biochemistry, and coagulation function indicators. These features serve as crucial inputs for the predictive model and further guide patient prognosis. Previous studies have demonstrated the relevance of age, pathological results, and tumor markers to the prognosis of CRC, where older age, later stage, and elevated tumor markers typically indicate a poor prognosis36,37,38. Additionally, tumor location is associated with prognosis, with rectal cancer having a worse prognosis than colon cancer39. Furthermore, routine blood tests, blood biochemistry, and coagulation function tests also play a role in the prognostic assessment of CRC patients. These clinical laboratory indicators provide information about the overall health status and physiological function of patients. Such supplementary information can assist clinicians in better evaluating the overall condition of CRC patients and their tolerance to treatment, thereby enhancing prognosis prediction. Therefore, in the study of CRC prognosis, comprehensive consideration of clinical laboratory test indicators and other clinical examination information can provide a more thorough understanding of the overall condition of patients, achieving a more accurate individualized assessment.

In general, this study has significant practical implications. The establishment of the model and the identification of key features provide potential applications for personalized treatment and patient management. By accurately predicting patient prognosis, physicians can formulate personalized treatment plans more effectively, thereby improving treatment outcomes and patient survival rates. Moreover, the model, combined with the additional insights provided by the SHAP method, helps physicians to more fully consider patient conditions and risks in clinical decision-making, making the process more scientific and reliable.

Meanwhile, this study has several limitations. First, the data were derived from a small, single-center cohort, which limits the external validity and generalizability of our findings; without external validation, the performance of the models in other populations and settings remains uncertain. Second, the model has not been tested or applied in clinical settings, so its practical utility and validity have not been verified. Third, a small proportion of the data was missing and was handled by imputation. Although sensitivity analyses indicated that imputation had minimal effect on the model's robustness, it may still have influenced model performance. Fourth, because of the limitations of the collected information, this study did not adequately account for differences in treatment approaches across AJCC stages. Treatment strategies often vary substantially across disease stages and can significantly influence patient outcomes, so the lack of detailed treatment information may have constrained the model's ability to capture stage-specific survival patterns and to identify prognostic heterogeneity within each stage. To address these limitations, future studies should incorporate comprehensive treatment-specific data across AJCC stages, validate the findings in larger multicenter datasets, and improve the model's performance within each stage. Integrating detailed treatment-related variables would better reflect their stage-specific impact on prognosis and thereby improve the model's clinical utility.
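
As an illustration of the stage-stratified assessment suggested above, the following is a minimal sketch of how predictions could be evaluated separately within each AJCC stage. It is not the analysis performed in this study: the function name `stratified_metrics`, the label coding (1 = death, 0 = survival), and the ten toy records are all hypothetical and serve only to show the bookkeeping.

```python
from collections import defaultdict


def stratified_metrics(stages, y_true, y_pred):
    """Per-stage accuracy, sensitivity and specificity for binary labels.

    Assumed coding (hypothetical): 1 = death, 0 = survival.
    A metric is None when its denominator is zero in that stage.
    """
    counts = defaultdict(lambda: {"tp": 0, "tn": 0, "fp": 0, "fn": 0})
    for stage, truth, pred in zip(stages, y_true, y_pred):
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "tn" if pred == 0 else "fp"
        counts[stage][key] += 1

    results = {}
    for stage in sorted(counts):
        c = counts[stage]
        n = sum(c.values())
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[stage] = {
            "n": n,
            "accuracy": (c["tp"] + c["tn"]) / n,
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return results


# Synthetic toy records, NOT study data.
stages = ["I", "I", "II", "II", "II", "III", "III", "III", "IV", "IV"]
y_true = [0, 0, 0, 1, 0, 1, 1, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

report = stratified_metrics(stages, y_true, y_pred)
for stage, metrics in report.items():
    print(stage, metrics)
```

In a small cohort, some strata may contain only survivors or only deaths, which is why the sketch returns `None` rather than a misleading value when a denominator is zero; per-stage estimates from few patients should be read with corresponding caution.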

Conclusion

In this study, we established an RFE-XGBoost model based on clinically available data to predict the postoperative survival status of CRC patients. Compared with the other four models, this model demonstrated superior predictive performance. SHAP elucidated the impact of key features on the prediction results, enhancing the credibility and practicality of the model; features such as perioperative transfusion quantity and AJCC stage were particularly important for the prognosis of CRC patients. This study provides an effective modeling approach for predicting the prognosis of CRC patients and offers a valuable reference for its future application in clinical practice. However, further optimization and validation of the model are needed before it can be used to support clinical practice and improve the treatment outcomes and survival rates of CRC patients.

Data availability

The data that support the findings of this study are not openly available because of their sensitivity but are available from the corresponding author upon reasonable request.

References

Hossain, M. S. et al. Colorectal cancer: A review of carcinogenesis, global epidemiology, current challenges, risk factors, preventive and treatment strategies. Cancers (Basel) https://doi.org/10.3390/cancers14071732 (2022).

Sung, H. et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 71, 209–249. https://doi.org/10.3322/caac.21660 (2021).

Zheng, R. et al. Cancer incidence and mortality in China, 2016. J. Natl. Cancer Center 2, 1–9. https://doi.org/10.1016/j.jncc.2022.02.002 (2022).

[Chinese Protocol of Diagnosis and Treatment of Colorectal Cancer (2023 edition)]. Zhonghua Wai Ke Za Zhi 61, 617–644 (2023).

[Expert consensus on the early diagnosis and treatment of colorectal cancer in China (2023 edition)]. Zhonghua Yi Xue Za Zhi 103, 3896–3908 (2023).

Abbass, M. & Abbas, M. Preoperative workup, staging, and treatment planning of colorectal cancer. Dig. Dis. Interv. 07, 003–009 (2023).

Melli, F. et al. Evaluation of prognostic factors and clinicopathological patterns of recurrence after curative surgery for colorectal cancer. World J. Gastrointest. Surg. 13, 50–75 (2021).

Benson, A. B. et al. Colon cancer, version 2.2021, NCCN clinical practice guidelines in oncology. J. Natl. Compr. Cancer Netw. 19, 329–359 (2021).

Benson, A. B. et al. Rectal cancer, version 2.2022, NCCN clinical practice guidelines in oncology. J. Natl. Compr. Cancer Netw. 20, 1139–1167 (2022).

Dagogo-Jack, I. & Shaw, A. T. Tumour heterogeneity and resistance to cancer therapies. Nat. Rev. Clin. Oncol. 15, 81–94 (2018).

Fu, J. et al. Development and validation of prognostic nomograms based on De Ritis ratio and clinicopathological features for patients with stage II/III colorectal cancer. BMC Cancer 23, 620 (2023).

Mahar, A. L. et al. Personalizing prognosis in colorectal cancer: A systematic review of the quality and nature of clinical prognostic tools for survival outcomes. J. Surg. Oncol. 116, 969–982 (2017).

Tian, Y. et al. Spatially varying effects of predictors for the survival prediction of nonmetastatic colorectal cancer. BMC Cancer 18, 1084 (2018).

Tufail, S., Riggs, H., Tariq, M. & Sarwat, A. Advancements and challenges in machine learning: A comprehensive review of models, libraries, applications, and algorithms. Electron. (Basel) 12, 1789 (2023).

Hakkoum, H., Abnane, I. & Idri, A. Interpretability in the medical field: A systematic mapping and review study. Appl. Soft. Comput. 117, 108391 (2022).

Fan, Z. et al. Construction and validation of prognostic models in critically ill patients with sepsis-associated acute kidney injury: Interpretable machine learning approach. J. Transl. Med. 21, 406 (2023).

Liu, L., Bi, B., Cao, L., Gui, M. & Ju, F. Predictive model and risk analysis for peripheral vascular disease in type 2 diabetes mellitus patients using machine learning and Shapley additive explanation. Front. Endocrinol. (Lausanne) 15, 1320335 (2024).

Ozkara, B. B. et al. Development of machine learning models for predicting outcome in patients with distal medium vessel occlusions: A retrospective study. Quant. Imaging Med. Surg. 13, 5815–5830 (2023).

Lin, W. & Tsai, C. Missing value imputation: A review and analysis of the literature (2006–2017). Artif. Intell. Rev. 53, 1487–1509 (2020).

Mera-Gaona, M., Neumann, U., Vargas-Canas, R. & López, D. M. Evaluating the impact of multivariate imputation by MICE in feature selection. PLoS One 16, e0254720 (2021).

Pedersen, A. B. et al. Missing data and multiple imputation in clinical epidemiological research. Clin. Epidemiol. 9, 157–166 (2017).

Sterne, J. A. et al. Multiple imputation for missing data in epidemiological and clinical research: potential and pitfalls. BMJ 338, b2393 (2009).

Akaike, H. A new look at the statistical model identification. IEEE Trans. Automat. Contr. 19, 716–723 (1974).

Schwarz, G. Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978).

Chaurasia, A. & Harel, O. Using AIC in multiple linear regression framework with multiply imputed data. Health Serv. Outcome 12, 219–233 (2012).

Nohara, Y., Matsumoto, K., Soejima, H. & Nakashima, N. Explanation of machine learning models using Shapley additive explanation and application for real data in hospital. Comput. Meth. Prog. Bio. 214, 106584 (2022).

Yoshida, S. et al. Development and validation of ischemic heart disease and stroke prognostic models using large-scale real-world data from Japan. Environ. Health Prev. 28, 16 (2023).

Margue, G. et al. UroPredict: Machine learning model on real-world data for prediction of kidney cancer recurrence (UroCCR-120). NPJ Precis. Oncol. 8, 45 (2024).

Li, Q. et al. XGBoost-based and tumor-immune characterized gene signature for the prediction of metastatic status in breast cancer. J. Transl. Med. 20, 177 (2022).

Tian, J. et al. Machine learning prognosis model based on patient-reported outcomes for chronic heart failure patients after discharge. Health Qual Life Outcomes 21, 31 (2023).

Deng, F., Zhao, L., Yu, N., Lin, Y. & Zhang, L. Union with recursive feature elimination: A feature selection framework to improve the classification performance of multicategory causes of death in colorectal cancer. Lab. Invest. 104, 100320 (2023).

Zhang, B., Dong, X., Hu, Y., Jiang, X. & Li, G. Classification and prediction of spinal disease based on the SMOTE-RFE-XGBoost model. PeerJ Comput. Sci. 9, e1280 (2023).

Pang, Q. Y., An, R. & Liu, H. L. Perioperative transfusion and the prognosis of colorectal cancer surgery: A systematic review and meta-analysis. World J. Surg. Oncol. 17, 7 (2019).

McSorley, S. T. et al. Perioperative blood transfusion is associated with postoperative systemic inflammatory response and poorer outcomes following surgery for colorectal cancer. Ann. Surg. Oncol. 27, 833–843 (2020).

Puértolas, N. et al. Effect of perioperative blood transfusions and infectious complications on inflammatory activation and long-term survival following gastric cancer resection. Cancers (Basel) 15, 144 (2022).

Li, C. et al. Prediction models of colorectal cancer prognosis incorporating perioperative longitudinal serum tumor markers: A retrospective longitudinal cohort study. BMC Med. 21, 63 (2023).

Jin, L. J. et al. Analysis of factors potentially predicting prognosis of colorectal cancer. World J. Gastrointest. Oncol. 11, 1206–1217 (2019).

Yang, L. et al. Comparisons of metastatic patterns of colorectal cancer among patients by age group: A population-based study. Aging (Albany NY) 10, 4107–4119 (2018).

Kang, S. I. et al. The prognostic implications of primary tumor location on recurrence in early-stage colorectal cancer with no associated risk factors. Int. J. Colorectal. Dis. 33, 719–726 (2018).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 82173475, 12001470), the China Postdoctoral Science Foundation (No. 2020M671607), the 2023 Qing Lan Project (Chen Yansu), and the College Student Innovation and Entrepreneurship Training Program of Jiangsu Province, China (No. 202310313070Y).

Author information

Contributions

YS.C. and LS.X. designed the research study. BA.X., M.Y., and M.Y. analysed the data and conducted the research. BA.X. and M.Y. wrote the paper. Y.M., WM.W., CL.Y., and LL.B. contributed to the data collection. YS.C. and LS.X. provided suggestions on the study and revised the manuscript. All the authors have read and approved the final manuscript for publication.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xiao, B., Yang, M., Meng, Y. et al. Construction of a prognostic prediction model for colorectal cancer based on 5-year clinical follow-up data. Sci Rep 15, 2701 (2025). https://doi.org/10.1038/s41598-025-86872-5

DOI: https://doi.org/10.1038/s41598-025-86872-5