Abstract

This article deals with the prediction of buckling damage of steel equal angle structural members using a surrogate model that combines machine learning with a metaheuristic optimization technique. In particular, a hybrid Artificial Intelligence (AI)-based model involving an Artificial Neural Network (ANN) and Particle Swarm Optimization (PSO) was developed and calibrated for the problem at hand. For this purpose, a database of compression tests on steel equal angle structural members was constructed from available resources. It contains geometry variables (length, width, thickness), mechanical properties of the material (yield strength), initial imperfections (i.e. residual peak stress and initial geometric imperfections) and the critical buckling load of the columns. The hybrid PSOANN model was adopted because its prediction capability is higher than that of an ANN trained by a traditional technique, i.e. scaled conjugate gradient (SCG). Indeed, the ANN trained by PSO delivered better performance in terms of RMSE, MAE, ErrorStD, R2 and Slope than the ANN trained by SCG: RMSE decreases from 0.141 to 0.055, MAE decreases from 0.108 to 0.042, and R2 increases from 0.749 to 0.959 when switching from the SCG-trained ANN to the hybrid PSOANN. Moreover, a Partial Dependence (PD) investigation was performed to interpret the “black-box” PSOANN model.

Introduction

Structural elements under compression are widely used in diverse projects because of their efficiency, for example in truss systems1 or reinforced concrete columns2,3,4. Instability of such members carries a significant risk of damage to the structure; see2 for an example of buckling damage of the steel bar in a reinforced concrete column. Iasinski’s work5 and Johnson’s formula6 made it possible to estimate critical loads for structural elements with low and medium slenderness. However, these formulas apply only to isotropic, homogeneous materials and rely on many assumptions about the structural element: cross-section geometry, length, boundary conditions, concentric axial loading, absence of initial stress, etc. In reality, these conditions are generally not satisfied. Deviations from them reduce the buckling resistance of structural members under compression compared with the original design7,8,9.

In terms of modeling, theoretical investigations mainly focus on simply supported boundary conditions10. With the development of numerical analysis, iterative algorithms such as the arc-length method11,12,13 have been widely applied to nonlinear problems such as instability in computational mechanics. These methods can be applied within a finite element scheme14 to investigate the buckling behavior of structural members. However, finite element software has a range of drawbacks, including the choice of mesh scheme15, iteration convergence16 and the implementation of initial imperfections17. For example, for intricate shapes, automatic mesh generation may create highly skewed, distorted or poorly shaped tetrahedral elements in thin-walled structures18. Iterative solvers in FEA may struggle to converge, particularly for problems with non-linearities such as material plasticity, contact mechanics or large deformations19. In addition, simulating pre-existing stresses in welded or formed components requires advanced techniques such as importing results from a thermal analysis20.

Recently, soft computing techniques, such as surrogate models, have begun to contribute significantly to solving various structural and mechanical engineering problems. As reported in the literature, soft computing techniques can provide more reliable designs21, evaluations22 and predictions23 than conventional methods. For instance, Asteris and Kolovos24 successfully developed a surrogate model for the design of self-compacting concrete based on various mixtures of ingredients, a task that could be extremely time-consuming in laboratory experiments. In another study, Tran et al.25 improved the design of square concrete-filled steel tube columns by using an artificial neural network (ANN) model to explore the nonlinear relationship between geometry, mechanical properties and axial capacity of structural elements. Fahmy et al.26 proposed an ANN tool for the prediction and design of an orthotropic steel-deck bridge, a study that had not previously been carried out, even using professional software tools. This computational model helped the authors to evaluate many different cases that would be time-consuming, effort-intensive and costly to investigate experimentally. In addition, the work of other researchers, including27, has confirmed the efficiency of artificial intelligence techniques for the prediction of related mechanical engineering problems.

Within this context, the current work presents the development of a surrogate model based on the combination of Particle Swarm Optimization (PSO) and ANN to predict buckling damage to steel equal angle structural members. The hybrid PSOANN model was proposed because of its higher prediction capability than the single ANN model. The database and selection of variables are presented in Sect. 2.1, while Sect. 2.2 introduces the ANN and PSO algorithms. Section 3 presents the results and a discussion thereof, including the PSO optimization process and Partial Dependence (PD) investigation to explore the relationship between variables and output response. Finally, the dependence of the output response on input variables is shown through sensitivity analysis.

Materials and methods

Database and selection of variables

The selected geometry variables (length, width, thickness) were chosen because they directly influence the structural behavior under compression. These parameters are critical in defining the cross-sectional properties and slenderness ratio of steel equal angle members, which are known to significantly affect buckling performance28. Besides, yield strength was selected as a key material property because it defines the elastic limit of the material, directly influencing the transition to plastic buckling behavior29. Last but not least, initial imperfections, such as residual stress peak and initial geometric deviations, were included because they are well-documented to reduce the buckling capacity of members30.

In this paper, 66 configurations of compression tests on steel equal angle structural members were collected from the available literature31. In Ban et al.31, an experimental program was conducted to test 420 MPa high-strength steel equal angle columns (see Fig. 1a) under axial loading. The boundary conditions of the tests were pinned-pinned, as shown in Fig. 1c. The minimum, maximum, average, standard deviation, coefficient of variation and the 25% and 75% quantiles of the input variables are summarized in Table 1. It should be noted that the proposed model is valid only within the range of variables shown in Table 1. High-strength steel was selected because it exhibits better mechanical behavior than normal-strength steel, and therefore higher durability32. The main geometry parameters of the structural elements are the length l, the thickness t, the width w, the free outstanding width b of the angle legs, and four initial geometric imperfections v1, v2, v3, v4, as shown in Fig. 1b. The residual stress was also measured and reported in the analysis33. The mechanical properties of the constituent materials are expressed by the yield strength, also measured for each configuration, as indicated in Table 1. The columns were designed with slenderness ratios ranging from 30 to 80, which covers a wide range, from medium to long columns. Further, the correlation matrix of the database is given in Table 2, providing an initial (linear) evaluation of the relationship between the input variables and the output response.
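The linear correlation coefficients of the kind reported in Table 2 can be illustrated with a short Python sketch. It assumes the Pearson coefficient is meant, and the column names and numbers below are hypothetical toy values, not entries of the database used in the study.

```python
import math

def pearson(x, y):
    """Pearson linear correlation coefficient between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def correlation_matrix(columns):
    """columns: dict mapping a variable name to its list of values."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}

# Hypothetical toy values (thickness, length, critical load), for illustration only.
toy = {
    "t": [8.0, 10.0, 12.0, 14.0, 16.0],
    "l": [900.0, 1200.0, 1000.0, 1500.0, 1100.0],
    "P_cr": [410.0, 480.0, 640.0, 650.0, 880.0],
}
corr = correlation_matrix(toy)
```

Such a matrix only captures linear trends, which is why the text treats it as an initial evaluation.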

The entire dataset was divided into training (70%) and testing (30%) subsets. Data points were randomly assigned to the two subsets so that the variable ranges are evenly represented in both. This randomness avoids bias toward specific parameter values in either subset and thereby reduces the risk of overfitting. Moreover, to avoid bias in the training process of the ANN model, all variables were scaled to the range from 0 to 1, which also reduces the risk of overfitting. After training, the model’s performance was assessed on the independent testing set, which was never used during training.
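A minimal Python sketch of the random 70/30 split and the scaling to [0, 1] is given below. The function names and the fixed seed are illustrative assumptions. The exact subset sizes (46 and 20 here, from rounding 70% of 66) and whether scaling used the full database or the training subset only are not stated in the text.

```python
import random

def min_max_scale(column):
    """Scale a list of values linearly so that min -> 0 and max -> 1."""
    lo, hi = min(column), max(column)
    return [(v - lo) / (hi - lo) for v in column]

def split_indices(n_samples, train_fraction=0.7, seed=0):
    """Randomly assign sample indices to training and testing subsets."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)          # seed is an arbitrary assumption
    n_train = round(train_fraction * n_samples)
    return idx[:n_train], idx[n_train:]

train_idx, test_idx = split_indices(66)       # 66 configurations in the database
scaled = min_max_scale([30.0, 45.0, 80.0])    # hypothetical values of one variable
```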

Methods used

Artificial neural network

In this work, as the database contained a large number of input variables (i.e. 10), ANN was selected as the soft computing technique. As reported in the literature, ANN is highly efficient for high-dimensional problems, for instance in structural engineering25. Figure 2 shows a representation of an ANN, including the input, hidden and output layers, which are the three basic elements of an ANN model. These layers are interconnected via artificial neurons (i.e. computational nodes), and training the model consists of computing the weight parameters of these connections. For a single-output prediction problem, the ANN model approximates the following non-linear function:

\[Y = f(X)\]

where X and Y represent the input and output vectors, respectively. The function f can be expressed as follows:

\[f(X) = f_0\left(M\, f_h\left(W X + b\right) + b_0\right)\]

where W and M represent the weight matrices of the hidden and output layers, respectively, \(f_h\) and \(f_0\) represent the activation functions of the hidden and output layers, respectively, and b and \(b_0\) represent the bias vectors of the hidden and output layers, respectively. This relationship is illustrated in Fig. 2.
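The single-hidden-layer mapping defined by W, b, M and b0 can be sketched in plain Python as follows. The sketch uses a sigmoid for the hidden layer and a linear output, which is the choice reported later in the article. The small weight values are hypothetical and chosen only so that the result is easy to check by hand.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W, b, M, b0):
    """Hidden layer: f_h(W x + b) with a sigmoid f_h; output: linear f_0."""
    hidden = [sigmoid(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
              for row, b_i in zip(W, b)]
    return sum(m_i * h_i for m_i, h_i in zip(M, hidden)) + b0

# Hypothetical 2-input, 2-neuron network with zero hidden weights:
# every hidden neuron outputs sigmoid(0) = 0.5, so y = 0.5 + 0.5 + 0.5.
W = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]
M = [1.0, 1.0]
b0 = 0.5
y = forward([0.3, 0.7], W, b, M, b0)
```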

There are numerous algorithms available for training an ANN. Some, such as Levenberg-Marquardt backpropagation, require a large amount of memory for many classification problems34, and the convergence rate of this approach is slow35. To tackle these challenges, other approaches have been developed, such as training based on the principle of gradient descent36, e.g. the first-order conjugate gradient algorithm. However, because a line search is performed at each iteration, this strategy is still costly. In this study, the ANN model was trained both with the scaled conjugate gradient (SCG) algorithm36 and with the PSO approach. This comparison enables us to investigate the performance of the PSO metaheuristic optimization technique.

Particle swarm optimization

Kennedy and Eberhart developed this efficient swarm intelligence technique for addressing difficult optimization problems, based on the social behavior of animals (e.g., a flock of birds or a school of fish)37. The basic idea is to move a swarm of particles iteratively to discover the global best position in a search space. The particles travel across the search space, evaluating candidate positions in search of the optimum. The particles’ final positions provide the solution to the problem at hand.

Assume that the population size, or number of particles, in a D-dimensional search space is m. The position and velocity of particle i (i = 1, 2,…, m) are represented by \({x_i}\) and \({v_i}\), respectively. Each particle keeps track of the best position it has found so far, pBest, and of the best position found by all particles in the swarm, gBest. Figure 3 depicts the PSO method.

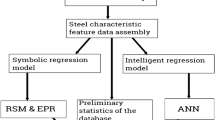
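As a point of reference, a commonly used inertia-weight form of the PSO update can be written as follows. The symbols \(\omega\) (inertia weight), \(c_1\) and \(c_2\) (personal and global learning coefficients), \(r_1\) and \(r_2\) (random numbers drawn uniformly from [0, 1]) and the iteration index k are notational assumptions and may differ from the formulation used in the study:

\[v_i^{k+1} = \omega\, v_i^{k} + c_1 r_1 \left(pBest_i - x_i^{k}\right) + c_2 r_2 \left(gBest - x_i^{k}\right), \qquad x_i^{k+1} = x_i^{k} + v_i^{k+1}\]

The first term keeps part of the previous velocity, the second pulls the particle toward its own best position and the third pulls it toward the best position of the swarm.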

Figure 4 presents the flowchart of the methodology of the current study. The raw data points are analyzed statistically to derive the correlation matrix. The entire dataset is then divided into training (70%) and testing (30%) subsets. The ANN model is trained by optimizing its weights and biases using the PSO global optimization technique. The prediction performance of the model is then validated using quality metrics such as R2, RMSE and MAE. Finally, a sensitivity analysis is carried out for the proposed model and its variables.

Performance indicators

In this work, several performance indicators including the Root Mean Squared Error (RMSE), Mean Absolute Error (MAE) and coefficient of determination (R2) have been used to assess the quality of the prediction model, compared to experimental data. Details of these performance indicators and their formulas can be found in38,39.
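For readers who want the definitions at hand, a minimal Python sketch of the three indicators follows. It assumes the usual textbook definitions, with R2 computed as one minus the ratio of the residual to the total sum of squares. The cited references should be consulted for the exact forms used in the study.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(p - t) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical targets and predictions, for illustration only.
targets = [1.0, 2.0, 3.0]
predictions = [1.0, 2.0, 4.0]
scores = (rmse(targets, predictions), mae(targets, predictions), r2(targets, predictions))
```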

Results and discussion

Optimization of ANN’s weight parameters using PSO

In this section, the weight parameters of the proposed ANN model are optimized using the PSO technique. It is worth noting that the prediction results depend strongly on the architecture of the ANN model40. With a fixed number of inputs and outputs, the parameters to be chosen are the number of hidden layers and the number of neurons in each of them41. As demonstrated by many research works, one hidden layer can be enough to deal with complex non-linear relationships in a prediction problem. For example, the authors of42 employed an ANN model with a single hidden layer to predict the axial capacity of square concrete-filled steel tubular columns, and43 used such a model when investigating seismic slope stability. For these reasons, a single-hidden-layer ANN model was adopted in this work to reduce the computational cost. The number of computational nodes in the hidden layer was chosen to be twice the number of inputs, as recommended by several research works44. Consequently, the selected architecture of the ANN model in this study was one hidden layer containing 20 neurons. A sigmoid activation function was employed for the hidden layer and a linear activation function for the output layer45. The mean square error was used as the cost function. In the current study, Matlab was employed for the training and post-processing of the model, on a Dell computer with 16 GB of RAM and a 2.9 GHz Intel Core i5 processor.
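With 10 inputs, 20 hidden neurons and one output, this architecture has 10 × 20 + 20 + 20 × 1 + 1 = 241 weights and biases, which is the dimension of the space a metaheuristic has to search. The Python sketch below counts these parameters and shows one possible way to map a flat parameter vector back to W, b, M and b0. The ordering of the entries in the flat vector is an assumption made for illustration.

```python
def n_parameters(n_in, n_hidden, n_out=1):
    """Hidden weights + hidden biases + output weights + output biases."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out

def unpack(theta, n_in, n_hidden):
    """Split a flat vector into (W, b, M, b0) for a single-output network.
    Assumed layout: rows of W first, then b, then M, then b0."""
    k = n_in * n_hidden
    W = [theta[i * n_in:(i + 1) * n_in] for i in range(n_hidden)]
    b = theta[k:k + n_hidden]
    M = theta[k + n_hidden:k + 2 * n_hidden]
    b0 = theta[k + 2 * n_hidden]
    return W, b, M, b0

dim = n_parameters(10, 20)                      # 10 inputs, 20 hidden neurons
W, b, M, b0 = unpack(list(range(dim)), 10, 20)  # placeholder values 0..240
```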

On the other hand, a key point when applying evolutionary algorithms to train AI models is to calibrate the population size with respect to the problem dimension46. In many evolutionary algorithms, for instance Differential Evolution, it is strongly suggested that the population should be 5 to 10 times greater than the number of predictors47. However, a large population size does not always lead to good model performance48. In this work, the population size for PSO was chosen as 50. The inertia weight was chosen as 0.1, the personal learning coefficient as 2, the global learning coefficient as 4 and the velocity limit as 10%. Parameter values of this kind are often used to train AI models using PSO, for example in49.

Figure 5. Cost functions in terms of (a) RMSE, (b) MAE and (c) R2 during the optimization of ANN’s weight parameters by using PSO, for training and testing phases, respectively.

In this work, a maximum of 100 iterations was employed as the stopping criterion for the PSO optimization. Figure 5 presents the cost functions (RMSE, MAE and R2, respectively) during training and testing. The choice of 100 iterations is shown to be adequate to obtain optimized results for all three performance indicators. During the training phase, the proposed model exhibited good performance in terms of the three quality indicators. It should be noted that the testing data were not seen by the model during training. Since the performance indicators of the testing data did not move in the wrong direction as training progressed, no overfitting occurred during the training phase. The robustness and efficiency of PSO during the training phase were thus confirmed.
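The training procedure can be sketched in Python as below. This is not the authors' Matlab implementation: it is a minimal illustration that reuses the reported settings (50 particles, 100 iterations, inertia weight 0.1, learning coefficients 2 and 4) as defaults. The initial search bounds, the reading of the 10% velocity limit as 10% of the search range, the small 2-4-1 network and the synthetic data are all assumptions.

```python
import math
import random

def forward(theta, x, n_in, n_hidden):
    """Sigmoid hidden layer and linear output; weights read from a flat vector."""
    k = n_in * n_hidden
    out = theta[k + 2 * n_hidden]                       # output bias
    for i in range(n_hidden):
        z = theta[k + i] + sum(theta[i * n_in + j] * x[j] for j in range(n_in))
        out += theta[k + n_hidden + i] / (1.0 + math.exp(-z))
    return out

def mse(theta, X, Y, n_in, n_hidden):
    return sum((forward(theta, x, n_in, n_hidden) - y) ** 2 for x, y in zip(X, Y)) / len(Y)

def pso_train(cost, dim, n_particles=50, n_iter=100, w=0.1, c1=2.0, c2=4.0,
              bound=1.0, v_frac=0.1, seed=0):
    rng = random.Random(seed)
    v_max = v_frac * 2.0 * bound                        # assumed meaning of "10%"
    xs = [[rng.uniform(-bound, bound) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    p_best = [x[:] for x in xs]
    p_cost = [cost(x) for x in xs]
    g = min(range(n_particles), key=p_cost.__getitem__)
    g_best, g_cost = p_best[g][:], p_cost[g]
    history = [g_cost]                                  # best cost per iteration
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                v = (w * vs[i][d]
                     + c1 * rng.random() * (p_best[i][d] - xs[i][d])
                     + c2 * rng.random() * (g_best[d] - xs[i][d]))
                vs[i][d] = max(-v_max, min(v_max, v))   # velocity clamping
                xs[i][d] += vs[i][d]
            c = cost(xs[i])
            if c < p_cost[i]:
                p_best[i], p_cost[i] = xs[i][:], c
                if c < g_cost:
                    g_best, g_cost = xs[i][:], c
        history.append(g_cost)
    return g_best, history

# Synthetic data standing in for the scaled database (hypothetical).
data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(20)]
Y = [0.6 * a - 0.3 * b + 0.2 for a, b in X]
theta, history = pso_train(lambda t: mse(t, X, Y, 2, 4), dim=2 * 4 + 4 + 4 + 1)
```

The `history` list plays the role of the training cost curve: because only improvements of the global best are recorded, it can never increase from one iteration to the next.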

Prediction capability

In this section, the prediction capability of two ANN models, trained by scaled conjugate gradient and by PSO, respectively, is presented for comparison purposes. Figure 6 presents the predicted output against the expected data in regression form, when training the ANN with SCG and PSO, respectively. All quantitative information is summarized in Table 3, including the values of RMSE, MAE, ErrorMean, ErrorStD, R2 and Slope for the training and testing phases. For the training dataset, the ANN trained by PSO yielded better performance in terms of RMSE, MAE, ErrorMean, ErrorStD, R2 and Slope than the ANN trained by SCG. Similar results were obtained for the testing part, where the PSOANN model exhibited the best prediction results for five statistical criteria: RMSE decreases from 0.141 to 0.055, MAE decreases from 0.108 to 0.042, R2 increases from 0.749 to 0.959, ErrorStD decreases from 0.144 to 0.056 and Slope increases from 0.922 to 0.926 when moving from the SCG-trained ANN to the hybrid PSOANN. Thus, based on both error analysis and assessment of prediction quality, PSOANN is the more efficient of the two models for predicting the buckling of steel equal angles.
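The remaining criteria in Table 3 can be illustrated with a short Python sketch. The definitions are assumptions, since the text does not spell them out: the error is taken as prediction minus target, ErrorStD as the sample standard deviation of the errors, and Slope as the least-squares slope of predictions regressed on targets.

```python
import math

def error_stats(y_true, y_pred):
    """Mean and sample standard deviation of the errors (prediction - target)."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    n = len(errors)
    mean = sum(errors) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / (n - 1))
    return mean, std

def regression_slope(y_true, y_pred):
    """Least-squares slope of the line fitted to (target, prediction) pairs."""
    n = len(y_true)
    mt, mp = sum(y_true) / n, sum(y_pred) / n
    num = sum((t - mt) * (p - mp) for t, p in zip(y_true, y_pred))
    den = sum((t - mt) ** 2 for t in y_true)
    return num / den

# Hypothetical predictions with a constant offset of 0.1.
targets = [0.0, 1.0, 2.0, 3.0]
predictions = [0.1, 1.1, 2.1, 3.1]
err_mean, err_std = error_stats(targets, predictions)
slope = regression_slope(targets, predictions)
```

A slope close to 1 together with a small error mean and standard deviation indicates predictions that follow the targets without systematic bias.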

Sensitivity analysis and discussion

In this section, Partial Dependence (PD)50 was applied to investigate the marginal effect of the input variables on the predictions of the PSOANN model presented in the previous section. As shown in many investigations, PD can determine the nature of the relationship between output and input (linear, monotonic or more complex)51. In other words, PD shows how the average prediction changes when an input is changed52. In this project, the PD code was implemented directly in Matlab, as it is well suited to matrix computation. However, it should be noted that the PD technique has drawbacks. In particular, PD assumes that the input variable being analyzed is independent of the others, which may not be true in real-world datasets where variables are often correlated. This can lead to misleading results, particularly for structural mechanics problems where geometry and material properties are interdependent. Most recently, SHAP (Shapley Additive Explanations) has received attention because it provides a complementary approach to PD, addressing many of its limitations53,54. For instance, SHAP could highlight how initial imperfections and thickness interact to affect the critical buckling load.
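The idea behind PD can be sketched in a few lines of Python: one input is forced to a grid value for every sample, the model is evaluated, and the predictions are averaged. The function `toy_model` below is a hypothetical stand-in for the trained PSOANN, and the data are invented for illustration.

```python
def partial_dependence(model, X, feature, grid):
    """Average prediction over the dataset with one feature set to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in X:
            modified = list(row)
            modified[feature] = value      # overwrite the analyzed input only
            total += model(modified)
        curve.append(total / len(X))
    return curve

def toy_model(x):
    """Hypothetical model: linear negative effect of x[0], quadratic effect of x[1]."""
    return 0.5 - 0.2 * x[0] + 0.8 * x[1] ** 2

X = [[0.1, 0.2], [0.5, 0.9], [0.8, 0.4]]
grid = [0.0, 0.5, 1.0]
pd_x0 = partial_dependence(toy_model, X, 0, grid)
```

For this additive toy model the PD curve of the first input is a straight line with slope −0.2. Averaging over all rows is also where the independence assumption enters, since each row is evaluated with a value of the analyzed input that it may never take in practice.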

Based on the PSOANN model developed previously (weights and biases), PD allows us to interpret the “black-box” PSOANN model, as shown in Figs. 7 and 8. Figure 7a shows that the relationship between the output and the length l can be fitted by a linear equation with a negative slope of −0.175. This means that the buckling capacity of the columns decreases with increasing column length. Figure 7b presents the relationship between the output and the thickness t. In this case, a nonlinear positive effect is obtained, i.e. the buckling capacity increases with increasing thickness, following a quadratic equation. Within the range of values considered in this study, thickness also produces the largest variation of PD. For the b/t ratio, yield strength, residual peak stress and initial imperfection v4, a positive effect was obtained, whereas a negative effect was observed for the remaining variables, i.e. the w/t ratio and the initial imperfections v1, v2 and v3. Nonetheless, the variation of PD for the w/t ratio and the five initial imperfections was small compared with that for length, thickness and b/t ratio.

The percentage of sensitivity (i.e. level of influence) of an input variable is calculated as the integral of its respective PD curve. Ten obtained values of the area were scaled into the range of [0, 1], sorted and plotted in Fig. 9b in a bar graph, together with the linear correlation coefficient obtained directly from the database shown in Fig. 9a (also see Table 2). Thickness exhibits the most influence (positive effect) on the buckling capacity of steel equal angles, as identified by both the linear correlation coefficient and PSOANN model (through PD investigation). We can also see that the influence of thickness by far surpasses the contribution of other inputs.
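A possible reading of this sensitivity measure is sketched below in Python. The text does not specify the integration rule or how the areas were scaled, so the trapezoidal rule, the use of absolute areas and the normalization by their sum are all assumptions, and the PD curves are hypothetical.

```python
def trapezoid_area(xs, ys):
    """Trapezoidal-rule integral of ys over xs."""
    return sum(0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i])
               for i in range(len(xs) - 1))

def sensitivity_shares(pd_curves, grid):
    """pd_curves: dict name -> PD values on a common grid. Shares sum to 1."""
    areas = {name: abs(trapezoid_area(grid, ys)) for name, ys in pd_curves.items()}
    total = sum(areas.values())
    return {name: area / total for name, area in areas.items()}

# Hypothetical PD curves on the scaled input range [0, 1].
grid = [0.0, 1.0]
curves = {"a": [1.0, 1.0], "b": [0.0, 2.0], "c": [2.0, 2.0]}
shares = sensitivity_shares(curves, grid)
```

Here the areas are 1, 1 and 2, giving shares of 25%, 25% and 50%.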

As obtained by PD for the PSOANN model, l, b/t and fy are, after thickness, the variables with the most influence on the buckling capacity of steel equal angles. However, their sensitivity reaches a maximum of only 12%, for the length l. On the other hand, b/t, w/t, fy, σr and v2 exhibit linear correlation coefficients from 0.17 to 0.25, which are not significant, especially in view of the number of entries in the database. Nonetheless, PSOANN and the linear correlation coefficient are in close agreement regarding the effect of the input variables on the buckling capacity of columns.

The integration of the model’s predictions into current design codes or standards depends on several factors, including the model’s validation, its alignment with regulatory requirements, and the comprehensiveness of the underlying data. It should be noted that design codes typically require extensive real-world validation across diverse scenarios to ensure reliability and consistency. Besides, the model proposed in the present study was trained on a specific dataset, which may not cover all possible structural configurations, loading conditions or material types. For integration, the model’s variables must also align with the formats and parameters used in design codes (e.g., safety factors, design equations).

In short, the present study introduces a hybrid PSOANN model, in which PSO significantly improves the training process by helping to avoid local minima and improving performance metrics such as RMSE and R2, as shown in Fig. 6 and Table 3. PSO provides a robust global optimization approach compared with an ANN trained by a gradient-based technique alone. Unlike techniques such as scaled conjugate gradient, PSO searches the solution space globally, which favors better model generalization. Next, by performing PD analysis, the current work reveals the influence of individual input variables on the buckling load prediction. This step enhances user confidence in deploying machine learning models for structural design and assessment.

Conclusions

In this work, an ANN model whose weights and biases are optimized by PSO is proposed to predict the critical buckling load of steel equal-angle structural members. The model was shown to be efficient when compared with an ANN trained by a traditional technique such as SCG. The hybrid PSOANN is a potential surrogate model for estimating the buckling capacity of columns, reducing the cost and time of laboratory experiments. In addition, the PSOANN model allows us to investigate the dependence of the output on the input variables by using PD. This information can be helpful in structural engineering; for example, materials whose properties or presence in the structure do not significantly influence the performance of the final design, such as buckling resistance, strength or durability, can be minimized to reduce production cost. For instance, reducing the need for additional coatings, reinforcements or filler materials can speed up fabrication and reduce the cost of additional processing or handling.

Despite its achievements, the study has some limitations. First, the dataset used for training may not represent all possible geometric and material configurations. Additionally, the proposed machine learning model poses challenges for integration into design codes and engineering practices that prioritize simplicity and transparency. Furthermore, the computational intensity of the PSOANN framework may limit its applicability for real-time or large-scale applications without further optimization.

In future work, a larger database should be investigated to cover a broader range of values. The feasibility of using surrogate modeling (e.g. ANN-based models) to study the nonlinear buckling of columns should also be evaluated, together with other types of damage of structural members. Besides, SHAP analysis should be employed to further investigate the interaction between variables. Finally, a statistical framework should be applied in subsequent studies to examine the dependence of the prediction output on the input variables.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Wang, W., Kodur, V., Yang, X. & Li, G. Experimental study on local buckling of axially compressed steel stub columns at elevated temperatures. Thin-Walled Struct. 82, 33–45 (2014).

Giamundo, V., Lignola, G. P., Prota, A. & Manfredi, G. Analytical evaluation of FRP wrapping effectiveness in restraining reinforcement bar buckling. J. Struct. Eng. 140, (2014).

Megahed, K., Mahmoud, N. S. & Abd-Rabou, S. E. M. Application of machine learning models in the capacity prediction of RCFST columns. Sci. Rep. 13, 20878 (2023).

Wu, F., Tang, F., Lu, R. & Cheng, M. Predicting compressive strength of RCFST columns under different loading scenarios using machine learning optimization. Sci. Rep. 13, 16571 (2023).

Kollar, L. Structural Stability in Engineering Practice (CRC, 1999).

Beer, F. P. & Johnston, E. R. Mechanics of Materials (McGraw-Hill, 1992).

Kim, D. K. et al. Strength and residual stress evaluation of stub columns fabricated from 800 MPa high-strength steel. J. Constr. Steel Res. 102, 111–120 (2014).

Shi, G., Zhou, W. J., Bai, Y. & Lin, C. C. Local buckling of 460 MPa high strength steel welded section stub columns under axial compression. J. Constr. Steel Res. 100, 60–70 (2014).

Liu, Y. & Liang, Y. Satin Bowerbird optimizer-neural network for approximating the capacity of CFST columns under compression. Sci. Rep. 14, 8342 (2024).

Saetiew, W. & Chucheepsakul, S. Post-buckling of linearly tapered column made of nonlinear elastic materials obeying the generalized Ludwick constitutive law. Int. J. Mech. Sci. 65, 83–96 (2012).

Crisfield, M. A. A faster modified newton-raphson iteration. Comput. Methods Appl. Mech. Eng. 20, 267–278 (1979).

Chang, T. Y. & Sawamiphakdi, K. Large deflection and post-buckling analysis of shell structures. Comput. Methods Appl. Mech. Eng. 32, 311–326 (1982).

Stoykov, S. Buckling analysis of geometrically nonlinear curved beams. J. Comput. Appl. Math. 340, 653–663 (2018).

Baydoun, I., Savin, É., Cottereau, R., Clouteau, D. & Guilleminot, J. Kinetic modeling of multiple scattering of elastic waves in heterogeneous anisotropic media. Wave Motion. 51, 1325–1348 (2014).

Jiang, D., Bechle, N. J., Landis, C. M. & Kyriakides, S. Buckling and recovery of NiTi tubes under axial compression. Int. J. Solids Struct. 80, 52–63 (2016).

Valeš, J. & Kala, Z. Mesh convergence study of solid FE model for buckling analysis. AIP Conf. Proc. 150005. (1978).

Stoffel, M., Bamer, F. & Markert, B. Artificial neural networks and intelligent finite elements in non-linear structural mechanics. Thin-Walled Struct. 131, 102–106 (2018).

Nguyen, P., Doškář, M., Pakravan, A. & Krysl, P. Modification of the quadratic 10-node tetrahedron for thin structures and stiff materials under large-strain hyperelastic deformation. Int. J. Numer. Methods Eng. 114, 619–636 (2018).

Peña, J. M., LaTorre, A. & Jérusalem, A. SoftFEM: the Soft Finite element Method. Int. J. Numer. Methods Eng. 118, 606–630 (2019).

Karalis, D. G. Increasing the efficiency of computational welding mechanics by combining solid and shell elements. Mater. Today Commun. 22, 100836 (2020).

Sebaaly, H., Varma, S. & Maina, J. W. Optimizing asphalt mix design process using artificial neural network and genetic algorithm. Constr. Build. Mater. 168, 660–670 (2018).

Ye, F. et al. Artificial neural network based optimization for hydrogen purification performance of pressure swing adsorption. Int. J. Hydrog. Energy. 44, 5334–5344 (2019).

Sadowski, Ł., Piechówka-Mielnik, M., Widziszowski, T., Gardynik, A. & Mackiewicz, S. Hybrid ultrasonic-neural prediction of the compressive strength of environmentally friendly concrete screeds with high volume of waste quartz mineral dust. J. Clean. Prod. 212, 727–740 (2019).

Asteris, P. G. & Kolovos, K. G. Self-compacting concrete strength prediction using surrogate models. Neural Comput. Appl. 31, 409–424 (2019).

Tran, V. L., Thai, D. K. & Kim, S. E. Application of ANN in predicting ACC of SCFST column. Compos. Struct. 228, 111332 (2019).

Fahmy, A. S., El-Madawy, M. E. T. & Atef Gobran, Y. Using artificial neural networks in the design of orthotropic bridge decks. Alex. Eng. J. 55, 3195–3203 (2016).

Le, B. A., Yvonnet, J. & He, Q. C. Computational homogenization of nonlinear elastic materials using neural networks. Int. J. Numer. Methods Eng. 104, 1061–1084 (2015).

Kaveh, A., Eskandari, A. & Movasat, M. Buckling resistance prediction of high-strength steel columns using Metaheuristic-trained Artificial neural networks. Structures 56, 104853 (2023).

Mojtabaei, S. M., Becque, J., Hajirasouliha, I. & Khandan, R. Predicting the buckling behaviour of thin-walled structural elements using machine learning methods. Thin-Walled Struct. 184, 110518 (2023).

Hou, Z., Hu, S. & Wang, W. Interpretable machine learning models for predicting probabilistic axial buckling strength of steel circular hollow section members considering discreteness of geometries and material. Adv. Struct. Eng. https://doi.org/10.1177/13694332241289175 (2024).

Ban, H. Y., Shi, G., Shi, Y. J. & Wang, Y. Q. Column buckling tests of 420 MPa high strength steel single equal angles. Int. J. Str. Stab. Dyn. 13, 1250069 (2013).

Ma, T. Y., Liu, X., Hu, Y. F., Chung, K. F. & Li, G. Q. Structural behaviour of slender columns of high strength S690 steel welded H-sections under compression. Eng. Struct. 157, 75–85 (2018).

Huiyong, B. & Gang, S. Wang Yuanqing. Residual stress tests of high-strength steel equal angles. J. Struct. Eng. 138, 1446–1454 (2012).

Narayanakumar, S. & Raja, K. A BP Artificial neural network model for Earthquake Magnitude Prediction in Himalayas, India. Circuits Syst. 7, 3456–3468 (2016).

Zweiri, Y. H., Whidborne, J. F. & Sceviratne, L. D. A three-term Backpropagation Algorithm. Neurocomputing 50, 305–318 (2002).

Schmidhuber, J. Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015).

Shi, Y. & Eberhart, R. A modified particle swarm optimizer. in IEEE International Conference on Evolutionary Computation Proceedings. IEEE World Congress on Computational Intelligence (Cat. No.98TH8360) 69–73 (1998). https://doi.org/10.1109/ICEC.1998.699146

Chai, T. & Draxler, R. R. Root mean square error (RMSE) or mean absolute error (MAE)?–Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 7, 1247–1250 (2014).

Willmott, C. J. & Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 30, 79–82 (2005).

Sarir, P., Chen, J., Asteris, P. G., Armaghani, D. J. & Tahir, M. M. Developing GEP tree-based, neuro-swarm, and whale optimization models for evaluation of bearing capacity of concrete-filled steel tube columns. Eng. Comput. https://doi.org/10.1007/s00366-019-00808-y (2019).

Sheela, K. G. & Deepa, S. N. Review on methods to fix number of hidden neurons in neural networks. Math. Probl. Eng. https://doi.org/10.1155/2013/425740 (2013).

Ren, Q., Li, M., Zhang, M., Shen, Y. & Si, W. Prediction of Ultimate Axial Capacity of Square Concrete-Filled Steel Tubular Short Columns using a Hybrid Intelligent Algorithm. Appl. Sci. 9, 2802 (2019).

Gordan, B., Jahed Armaghani, D., Hajihassani, M. & Monjezi, M. Prediction of seismic slope stability through combination of particle swarm optimization and neural network. Eng. Comput. 32, 85–97 (2016).

Kuri-Morales, A. Closed determination of the number of neurons in the hidden layer of a multi-layered perceptron network. Soft Comput. 21, 597–609 (2017).

Abambres, M., Rajana, K., Tsavdaridis, K. D. & Ribeiro, T. P. Neural network-based formula for the buckling load prediction of I-Section Cellular Steel beams. Computers 8, 2 (2019).

Javed, H. et al. On the efficacy of ensemble of Constraint Handling techniques in Self-Adaptive Differential Evolution. Mathematics 7, 635 (2019).

Piotrowski, A. P. Review of Differential Evolution population size. Swarm Evol. Comput. 32, 1–24 (2017).

Chen, T., Tang, K., Chen, G. & Yao, X. A Large Population Size Can Be Unhelpful in Evolutionary Algorithms. arXiv (2012).

Momeni, E., Jahed Armaghani, D., Hajihassani, M. & Mohd Amin, M. F. Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 60, 50–63 (2015).

Zhao, Q. & Hastie, T. Causal interpretations of Black-Box models. J. Bus. Econ. Stat., 1–10 (2019).

Qi, C. et al. Towards Intelligent Mining for Backfill: a genetic programming-based method for strength forecasting of cemented paste backfill. Miner. Eng. 133, 69–79 (2019).

Goldstein, A., Kapelner, A., Bleich, J. & Pitkin, E. Peeking inside the black box: visualizing statistical learning with plots of individual conditional expectation. J. Comput. Graph. Stat. 24, 44–65 (2015).

Nguyen, V. H. et al. Optimization of milling conditions for AISI 4140 steel using an integrated machine learning-multi objective optimization-multi criteria decision making framework. Measurement 242, 115837 (2025).

Sun, Y., Zhang, L., Yao, M. & Zhang, J. Neural network models and shapley additive explanations for a beam-ring structure. Chaos Solitons Fractals. 185, 115114 (2024).

Funding

This research was funded by Phenikaa University under grant number PU2022-1-A-01.

Author information

Contributions

Nang Xuan Ho, Tien-Thinh Le are responsible for analysis and preparing the first draft of the manuscript. The-Hung Dinh and Van-Hai Nguyen are responsible for material preparation and data collection. All authors contributed to the study conception and design, reviewed all previous versions of the manuscript, and read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ho, N.X., Le, TT., Dinh, TH. et al. Prediction of buckling damage of steel equal angle structural members using hybrid machine learning techniques. Sci Rep 15, 4696 (2025). https://doi.org/10.1038/s41598-025-87869-w

DOI: https://doi.org/10.1038/s41598-025-87869-w
