Abstract

While DNA barcoding methods are an increasingly important tool in biological conservation, the resources required to construct reference libraries frequently reduce their efficacy. One efficient way of sourcing taxonomically validated DNA for reference libraries is to use museum collections. However, the DNA degradation intrinsic to historical museum specimens can, if not addressed in the wet lab, lead to low-quality data and severely limit scientific output. Several DNA extraction and library build methods designed to work with degraded DNA have been developed, although the ability to implement these methods at scale and at low cost has yet to be formally addressed. Here, the performance of widely used DNA extraction and library build methods is compared using museum specimens. We find that while our selected DNA extraction methods do not differ significantly in DNA yield, the Santa Cruz Reaction (SCR) library build method is not only the most effective at retrieving degraded DNA from museum specimens but is also easily implemented at high throughput and low cost. These results highlight the importance of lab protocol for data yield. An optimised “sample to sequencing” high-throughput protocol that incorporates SCR is included to allow easy uptake by the wider scientific community.


Introduction

DNA-based identification is an increasingly important component of biology, as it allows rapid and accurate species identification from a range of sample types. However, the potential of this approach is limited by the availability of high-quality reference databases1,2. Barcode reference sequence databases need not only good sequence accuracy but also broad coverage of taxa across the range of candidate species, with sequences derived from expertly identified specimens3.

Specimens from museum collections are well placed to make an important contribution in this context given that museums are estimated to house more than a billion specimens, covering the vast majority of described species4,5,6.

The potential for museum collections to act as “storehouses of DNA” has been well appreciated for decades7, but it is only with the advent of cheaper DNA extraction and sequencing methods, coupled with increased automation, that they are starting to realise that potential8. DNA recovered from museum specimens is of lower quality than that found in fresh tissues, so specialist methods are required to work at scale across multiple taxonomic groups and a range of specimen ages. Of particular importance is that the DNA in collection specimens is highly fragmented9, making PCR-based methods extremely difficult to use, especially when the required dataset spans a wide taxonomic range10.

To develop a DNA barcoding protocol that could operate at the scale required to fill gaps in the European barcode reference library, was suitable for use with museum specimens at low cost, and had the potential for automation to allow high throughput and speed, we tested the performance of two DNA extraction methods11,12 and three library build methods: two commercially available kits and a recently published DIY method, the Santa Cruz Reaction (SCR)13. Performance was assessed using metrics associated with the quality and quantity of DNA recovered, and the ability of each protocol to be carried out at scale and at low cost is considered.

Methods

Preliminary investigation of DNA extraction methods

Methods previously used on museum specimens can be broadly split into those that use precipitation and those that use magnetic beads or silica columns to capture the DNA. As our overarching goal was to develop a low-cost, high-throughput method, we did not test a commonly used ancient DNA (aDNA) specific extraction method14, given its reliance on (i) large-volume centrifugation (reducing the potential for scaling and high throughput) and (ii) relatively expensive large-volume silica columns. However, binding buffer D14 was used with silica beads following15, as beads offer similar DNA retention while allowing upscaling. We did not test ethanol/isopropanol precipitation methods because their large-volume centrifugation steps require expensive equipment and cannot be scaled up sufficiently for high throughput.

Initial tests used the Ultra Low Range (ULR) Ladder (ThermoFisher cat. # 10597012), which has 10–300 bp bands, reflecting the size distribution typical of museum specimens9,16. For each tested method, a mock lysate was produced using 0.5 µL of ladder (250 ng input DNA) and 90 µL of lysis Buffer C17 (200 mM Tris pH 8, 25 mM EDTA pH 8, 0.05% (vol/vol) Tween 20, 0.4 mg/mL Proteinase K), excluding the Proteinase K. Each method was then followed as published with four replicates, and the resulting DNA extract was quantified using a Qubit 4 Fluorometer (dsDNA assay kit) and a TapeStation 4200 (Agilent) with D1000 tapes. The best-performing column and magnetic bead methods, as measured by the percentage recovery of input DNA ≥ 35 bp, were further refined with the ULR ladder and then taken forward for testing with museum specimens.
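The recovery metric can be illustrated with a minimal sketch. It assumes that recovered mass is the Qubit concentration multiplied by the eluate volume, and that per-band masses for the ≥ 35 bp calculation can be read from TapeStation region tables. The function names and all example values are hypothetical and are not data from this study.

```python
INPUT_NG = 250.0  # mass of ULR ladder in each mock lysate (0.5 µL), as stated in the text


def percent_recovery(conc_ng_per_ul: float, eluate_ul: float, input_ng: float = INPUT_NG) -> float:
    """Percentage of input DNA recovered, assuming recovered mass = concentration x eluate volume."""
    if input_ng <= 0:
        raise ValueError("input mass must be positive")
    return 100.0 * conc_ng_per_ul * eluate_ul / input_ng


def percent_recovery_min_length(recovered_ng: dict, input_ng: dict, min_bp: int = 35) -> float:
    """Recovery restricted to ladder bands >= min_bp.

    Both arguments map band size (bp) -> mass (ng). How these per-band masses are
    obtained (e.g. from TapeStation region tables) is an assumption of this sketch.
    """
    recovered = sum(ng for bp, ng in recovered_ng.items() if bp >= min_bp)
    available = sum(ng for bp, ng in input_ng.items() if bp >= min_bp)
    return 100.0 * recovered / available


# Hypothetical example: 6 ng/µL in a 30 µL eluate from 250 ng of input
print(percent_recovery(6.0, 30.0))  # 72.0
```

Restricting the calculation to bands of at least 35 bp prevents very short fragments from inflating the apparent recovery of a method.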

Specimens

Two species with high-quality reference genomes were selected, covering a range of specimen ages: four specimens of the beetle Rhagonycha fulva collected between 1953 and 2011 (NHMUK011517159, coll. 1953; NHMUK011517162, coll. 1972; NHMUK011517258, coll. 2005; NHMUK014422990, coll. 2011) and one specimen of the bumblebee Bombus pascuorum collected in 1964 (NHMUK014564519). All were sourced from the collections of the Natural History Museum, London (NHMUK). We focus on insect species given (i) their utility as indicators of ecosystem health, (ii) their global prevalence and high species diversity, (iii) their ubiquity in natural history collections, and (iv) the prediction that genomic studies incorporating insect species will increase over the next decade5. The number of samples was not statistically predetermined but instead depended on the availability of samples and the feasibility of performing the experiment. A previous study has suggested that DNA preservation is largely consistent across insect specimens under the same curatorial stewardship9, so numerous replicates were not required in this instance. This limited sample size also reduced the ethical issues associated with sampling museum specimens solely for developmental purposes, in line with institutional guidelines.

For each insect specimen, one leg (bumblebee) or two legs (beetles) were detached with sterile forceps and lysed overnight at 56 °C in 90 µL of lysis Buffer C17. The lysate was then divided into two 45 µL aliquots: the first was processed following DNA extraction method 1 and the second following DNA extraction method 2 below:

DNA extraction methods

DNA extraction method 1 – Rohland (R)

Lysate was extracted following12, DNA purification option B, with a 10:1 ratio of binding buffer D and the following modifications: 45 µL of lysate was processed following the method for 150 µL of lysate, and beads were dried for approximately 5 min, until visibly dry.

DNA extraction method 2 – Patzold (P)

Lysate was extracted using a Monarch PCR & DNA Cleanup Kit (New England Biolabs) following a modified version of the method of Patzold et al.11. Lysate was made up to 50 µL with ultra-pure water. 100 µL of DNA Cleanup Binding Buffer was added, followed by 300 µL of 100% ethanol. After gentle mixing by flicking, the total volume was passed through a spin column, 700 µL at a time. Columns were washed twice with 500 µL of wash buffer, and the flow-through was discarded after each spin.

Columns were centrifuged for 1 min to dry the membrane and then transferred to clean 1.5 mL low-bind Eppendorf tubes. DNA was eluted with 2 × 15 µL of elution buffer, each with a 1 min incubation at room temperature.

All centrifugation steps were performed at 16,000 × g for 30 s, unless stated otherwise.

DNA was then quantified using Qubit dsDNA HS reagents and assessed on an Agilent TapeStation with High Sensitivity D1000 ScreenTapes.

Library build methods

Illumina libraries were prepared from each DNA extraction via the following methods:

Library method 1: NEBNext Ultra II DNA Library Prep Kit (New England Biolabs) - NEB

Steps 1 to 3b of the manufacturer’s protocol (NEBNext® Ultra™ II DNA Library Prep Kit for Illumina®, for use with NEBNext Multiplex Oligos for Illumina (Unique Dual Index UMI Adaptors DNA Set 1, NEB #E7395)) were followed, with the following exceptions:

- Half volumes of all reagents were used.
- All SPRI bead clean-ups used a 1.2x bead ratio to best retain small fragments.

After the post-ligation clean-up, libraries were amplified using P5 and P7 Illumina primers and AmpliTaq Gold™ master mix according to the manufacturer’s instructions. AmpliTaq Gold™ was used instead of NEBNext Q5 master mix because it is uracil tolerant, following consultation with NEB technical support. Cycle number was determined by input concentration, as recommended in the NEBNext Ultra II protocol. Cleaned libraries were assessed on a TapeStation 4200 (Agilent) with D1000 tapes. Three samples contained large amounts of adaptor dimer and were therefore cleaned with 1x SPRI beads.

Library method 2: xGen™ ssDNA & Low-Input DNA Library Prep Kit (IDT) - IDT

Libraries were prepared following the manufacturer’s instructions with one exception: indexing PCR was performed with AmpliTaq Gold™ master mix according to the manufacturer’s instructions. AmpliTaq Gold™ was used instead of the reagents supplied with the kit because it is uracil tolerant, following consultation with IDT technical support. Cycle number was determined by input concentration, as recommended in the xGen protocol. Cleaned libraries were assessed on a TapeStation 4200 (Agilent) with D1000 tapes.

Library method 3: Santa Cruz Reaction (SCR) - SCR

Libraries were prepared following13, with the following modifications:

i) Indexing PCR cycle number was estimated from the DNA input mass rather than by qPCR, as follows:

| DNA input (ng) | PCR cycles |
|---|---|
| 2–4.9 | 10 |
| 5–19.9 | 8 |
| 20–29.9 | 6 |
| 30–41 | 4 |

ii) The entire purified product from the SCR library build was indexed, rather than only 2 µL.
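The cycle table can be expressed as a simple lookup. This sketch assumes half-open bins (for example, 2 ≤ x < 5 ng), so that inputs falling between the printed bounds, such as 4.95 ng, map to the adjacent bin. It returns None for inputs outside 2–41 ng because the table does not cover them. The names are hypothetical.

```python
from typing import Optional

# (lower bound inclusive, upper bound exclusive, indexing PCR cycles), from the table above.
# Half-open bins are an assumption that closes the 0.1 ng gaps between printed ranges.
CYCLE_BINS = [(2.0, 5.0, 10), (5.0, 20.0, 8), (20.0, 30.0, 6), (30.0, 41.0, 4)]


def indexing_cycles(dna_ng: float) -> Optional[int]:
    """Return the indexing PCR cycle number for a DNA input mass, or None if not covered."""
    for low, high, cycles in CYCLE_BINS:
        if low <= dna_ng < high:
            return cycles
    _, last_high, last_cycles = CYCLE_BINS[-1]
    if dna_ng == last_high:  # the printed table includes 41 ng in the top bin
        return last_cycles
    return None


for mass in (3.0, 12.5, 25.0, 41.0, 60.0):
    print(mass, indexing_cycles(mass))
```

Because the cycle number falls as input mass rises, libraries with different inputs are brought towards similar final yields without a qPCR step.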

Indexing PCR products were cleaned using 1.2x Quantabio SparQ beads following the manufacturer’s protocol “post PCR amplification clean-up 1 C”. Cleaned library DNA concentration was assessed on a TapeStation 4200 (Agilent) with D1000 tapes. Three samples showed no discernible DNA content, and indexing PCR was repeated for an additional 4 cycles. The commercially available kit most similar to SCR (SRSLY™, Claret Bioscience) was not included here because of its similar performance to SCR13 and its higher cost (~10x more expensive).

All 36 libraries were normalised and pooled (equimolar amounts calculated using outputs from both Qubit and TapeStation) before being sequenced on a NextSeq 500 Mid Output 2 × 75 bp paired-end kit at the Natural History Museum sequencing facility.
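The text does not state exactly how the Qubit and TapeStation outputs were combined. A common approach, sketched here under that assumption, converts the Qubit mass concentration to molarity using the TapeStation mean fragment size (with approximately 660 g/mol per base pair of dsDNA) and then takes an equal number of femtomoles from each library. Library names and values are hypothetical.

```python
AVG_BP_MASS = 660.0  # approximate g/mol per base pair of double-stranded DNA


def library_nm(conc_ng_per_ul: float, mean_size_bp: float) -> float:
    """Convert a mass concentration (ng/µL) to molarity (nM) using the mean fragment size."""
    return conc_ng_per_ul * 1e6 / (AVG_BP_MASS * mean_size_bp)


def pooling_volumes(libraries: dict, fmol_each: float) -> dict:
    """Volume (µL) of each library that supplies fmol_each femtomoles (1 nM = 1 fmol/µL).

    libraries maps a hypothetical library name -> (Qubit ng/µL, TapeStation mean size in bp).
    """
    return {
        name: fmol_each / library_nm(conc, size)
        for name, (conc, size) in libraries.items()
    }


# Hypothetical values, not measurements from this study (about 2.0 µL and 1.0 µL)
print(pooling_volumes({"libA": (1.32, 200.0), "libB": (2.64, 200.0)}, 20.0))
```

Using the mean fragment size matters for degraded material: at the same ng/µL, a library of short inserts contains more molecules than one of long inserts.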

Computational analysis

Samples were demultiplexed using bcl2fastq2. Adapters were trimmed and paired-end reads collapsed from the demultiplexed raw FASTQ files using AdapterRemoval18 (v2.0; --mm 3 --minlength 30). For IDT samples, 10 bases were trimmed from the 5’ and 3’ ends of each read, following the manufacturer’s protocol. Only collapsed reads were retained for alignment.
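The IDT-specific trimming step removes a fixed number of bases from each end of a read. The sketch below applies it to a single read held as sequence and quality strings. Applying the same 30 bp minimum length afterwards, and the function name, are assumptions for illustration and do not describe the exact pipeline used.

```python
from typing import Optional, Tuple


def trim_read(seq: str, qual: str, trim5: int = 10, trim3: int = 10,
              min_length: int = 30) -> Optional[Tuple[str, str]]:
    """Remove trim5 bases from the 5' end and trim3 bases from the 3' end of a read.

    Returns the trimmed (sequence, quality) pair, or None if fewer than
    min_length bases remain (a cut-off mirroring --minlength 30).
    """
    if len(seq) != len(qual):
        raise ValueError("sequence and quality strings differ in length")
    end = max(len(seq) - trim3, 0)  # guard against reads shorter than trim3
    trimmed_seq, trimmed_qual = seq[trim5:end], qual[trim5:end]
    if len(trimmed_seq) < min_length:
        return None
    return trimmed_seq, trimmed_qual


read = "A" * 10 + "C" * 30 + "G" * 10
print(trim_read(read, "I" * len(read)))
```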

Alignment was performed using bwa19 (v0.7.17) against the relevant species’ mitochondrial and nuclear reference genomes, with seeding effectively disabled (-l 1024) and an initial low-quality threshold (-q 10). Genomes were accessed from NCBI (accession numbers GCA_905340355.1, HG996561, GCA_905332965 and HG995285) and are all recently published assemblies from the Darwin Tree of Life Project (R. fulva20; B. pascuorum21). Output SAM files were converted to BAM files using samtools22, and reads were then sorted, filtered for mapping quality (MAPQ ≥ 30) and deduplicated (see Supplementary Table 1 for alignment details).

To allow comparisons across specimens, library build methods and DNA extraction methods, several summary statistics were calculated. Endogenous content (Q30 unique mapped reads/total sequenced reads), duplication rate (Q30 unique mapped reads/Q30 total mapped reads) and collapsed read proportion (total collapsed reads/total sequenced reads) were calculated from the read count outputs of samtools. Average read length and GC content for each sample were obtained using Qualimap23, and samtools stats $i | grep ^RL | cut -f 2 was used to obtain read length distributions. Preseq24 lc_extrap was used to calculate library complexity from unfiltered, sorted BAM files.
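The three ratios can be written directly as functions of the read counts. In this sketch the field names are hypothetical. It also assumes that all counts are expressed in the same units (reads rather than read pairs), which the text does not specify.

```python
from dataclasses import dataclass


@dataclass
class ReadCounts:
    total_sequenced: int    # all reads entering AdapterRemoval (same units assumed throughout)
    total_collapsed: int    # reads produced by collapsing overlapping pairs
    q30_mapped: int         # reads mapped with MAPQ >= 30, before duplicate removal
    q30_unique_mapped: int  # reads mapped with MAPQ >= 30, after duplicate removal


def summary_stats(c: ReadCounts) -> dict:
    """Compute the three ratios defined in the text from read counts."""
    if c.q30_unique_mapped > c.q30_mapped:
        raise ValueError("unique reads cannot exceed mapped reads")
    return {
        "endogenous_content": c.q30_unique_mapped / c.total_sequenced,
        # Ratio as defined in the text: the fraction of Q30 mapped reads that are unique,
        # so 1 minus this value is the fraction removed as duplicates.
        "duplication_rate": c.q30_unique_mapped / c.q30_mapped,
        "collapsed_proportion": c.total_collapsed / c.total_sequenced,
    }


# Hypothetical counts, not values from this study
print(summary_stats(ReadCounts(total_sequenced=1_000_000, total_collapsed=800_000,
                               q30_mapped=200_000, q30_unique_mapped=150_000)))
```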

Statistical analysis and data visualisation was performed in R v4.1.1 (R Core Team, 2021).

Results

Normalised costs for each DNA extraction and library build method are given in Supplementary Table 2. In short, there is little cost difference between the two extraction methods. SCR is 15x cheaper than the next cheapest method (IDT), although we did not consider the potential to use smaller reagent volumes than those recommended for each kit.

Wet lab

Testing DNA extractions

Based on Qubit quantification of the ULR ladder, the best-performing silica column method was that of Patzold et al.11, which recovered an average of 74% of input DNA (range: 61–89%), followed by that of Korlević et al.17, which recovered an average of 67% of input DNA (range: 60–77%).

The best-performing magnetic bead method was that of Rohland et al.12, which recovered an average of 45% of input DNA (range: 41–48%), followed by that of Tin et al.25, which recovered an average of 38% of input DNA (range: 37–40%).

Although the silica columns recovered more total DNA, TapeStation profiles suggest that this much higher recovery was driven by retention of the 10 bp and 20 bp fragments, which together account for 40% of the input DNA in the ULR ladder.

Sequence data

DNA extraction comparison

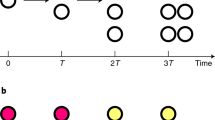

Between the two DNA extraction methods carried forward to sequencing, no significant difference was identified in endogenous content, aligned Q30 unique read length distribution, library complexity (calculated using preseq) or collapsed read proportion (Fig. 1; Table 1; Supplementary Table 1).

Library build comparison

Library building protocol significantly affected all calculated summary statistics, with the Santa Cruz Reaction13 showing the best performance of the three treatments (Fig. 1; Table 1; Supplementary Table 1).

Fig. 1. The effect of DNA extraction and library building protocols on (a) endogenous content; (b) collapsed read proportion; (c) library complexity (as calculated using preseq); and (d) aligned Q30 unique read length distribution. See Supplementary Table 1 for detailed alignment statistics.

Endogenous content was significantly higher in libraries built using the SCR method (Fig. 1(a); ANOVA: p < 0.01, R² = 0.745).
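The R² values reported with these ANOVAs are the share of total variance explained by the model. The analysis was run in R, so the following is only a simplified one-factor sketch in Python that compares library methods alone. The reported models may include further terms, such as specimen or extraction method. The p-value would come from the F distribution with (k − 1, n − k) degrees of freedom, for example via R's anova() or scipy.stats.f.sf. The group values below are hypothetical.

```python
from statistics import fmean


def one_way_anova(groups):
    """Return (F statistic, R^2) for a one-way ANOVA over lists of observations.

    R^2 is the between-group sum of squares divided by the total sum of squares.
    """
    values = [x for g in groups for x in g]
    grand_mean = fmean(values)
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    k, n = len(groups), len(values)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    r_squared = ss_between / (ss_between + ss_within)
    return f_stat, r_squared


# Hypothetical endogenous-content values for three library methods
print(one_way_anova([[0.10, 0.12, 0.11], [0.20, 0.22, 0.19], [0.35, 0.33, 0.36]]))
```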

Read length differed significantly across library building methods (p < 0.01; R² = 0.773; Table 1). Read length distributions for each sample are shown in Fig. 1(b). Samples processed with SCR show a read length distribution that indicates a higher propensity for short, degraded DNA to be sequenced than the profiles of both IDT and NEB, whose results indicate that more long reads are incorporated into built libraries and subsequently sequenced. Specimen also significantly influenced read length, indicating that the preservation history and DNA fragmentation profile unique to each specimen affect the read length of sequenced DNA regardless of DNA extraction or library treatment.
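samtools stats reports read lengths in lines beginning with RL, with the length in the second column and the read count in the third. The following sketch parses that text into a length distribution and computes a count-weighted mean length. It assumes the stats output has already been captured as a string, and the function names are hypothetical.

```python
def read_length_distribution(stats_text: str) -> dict:
    """Map read length -> count from the RL lines of samtools stats output."""
    dist = {}
    for line in stats_text.splitlines():
        if line.startswith("RL\t"):  # excludes the FRL/LRL sections and comment lines
            _, length, count = line.split("\t")[:3]
            dist[int(length)] = int(count)
    return dist


def mean_read_length(dist: dict) -> float:
    """Count-weighted mean read length."""
    total = sum(dist.values())
    return sum(length * n for length, n in dist.items()) / total


example = "# Read lengths\nRL\t30\t2\nRL\t60\t2\nFRL\t30\t1\n"
print(mean_read_length(read_length_distribution(example)))  # 45.0
```

A distribution shifted towards short lengths, as seen for the SCR libraries, pulls this mean down.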

Library complexity differed across library building methods (Fig. 1c), with SCR libraries showing greater complexity (number of predicted unique reads) than both IDT and NEB libraries. DNA extraction method had no effect on library complexity.

The proportion of collapsed reads differed significantly across library building methods (Fig. 1d; ANOVA: p < 0.05; R² = 0.221), with SCR samples showing a greater proportion of collapsed reads than both IDT and NEB samples. DNA extraction method had no effect on the proportion of collapsed reads.

At scale

Following the results of this study, the protocol developed and shared here has been successfully implemented at scale by the authors. Over 40 96-well plates have been run over the course of a year, generating over 300 billion paired-end reads (20 Illumina NovaSeq X Plus 25B 2 × 150 bp PE flow cells and 5 Illumina NovaSeq X Plus 10B 2 × 150 bp PE flow cells). Steps have also been taken to consider and mitigate potential issues associated with ancient DNA26 and cross-contamination. These steps included (i) separating pre- and post-PCR steps to minimise PCR contamination during extraction, (ii) testing for cross-contamination within a well plate using alternating positive and negative controls, and (iii) reducing the binding buffer volume, which lowers the potential for cross-contamination during extraction without affecting efficiency (detailed in the SI). Although using an aDNA-specific clean lab for all pre-PCR steps would be optimal, the high throughput required and the limited PCR contamination observed both within and between samples reduce its necessity in this instance. However, when extremely degraded, precious or ancient samples are included, it remains essential to perform all pre-PCR steps in a dedicated ancient DNA laboratory27.

Discussion

The Santa Cruz Reaction (SCR) library build is found to generate indexed DNA libraries that allow significantly more of the DNA endogenous to each museum specimen to be sequenced and aligned (Fig. 1). SCR libraries are more complex than those from the other tested library building methods, and their read length distribution indicates that SCR incorporates more short reads (indicative of degraded DNA) into indexed libraries. IDT shows intermediate performance, whilst NEB performs worst of the three library building methods. DNA extraction method is not seen to influence the quality or quantity of sequence data produced, and no relationship between DNA extraction and library build methods is identified.

At high throughput, the column-based DNA extraction method11 is both more costly and more time consuming28 than the bead-based method12. The bead-based method is therefore preferable for low-cost, high-throughput work.

The performance of SCR here is not surprising, given that it was developed to incorporate the short, deaminated reads commonly found in degraded museum specimens13. The method has previously been shown to perform similarly to the aDNA-specific ssDNA2.0 and BEST library build methods29,30, with SCR performance only dropping off when input DNA mass is extremely low and deamination is prevalent13, properties not widely seen in historical museum specimens. The worst-performing library build method tested in this study (NEB) is not explicitly designed for degraded DNA from museum specimens but for DNA from modern samples, and the DNA fragmentation and deamination previously identified in museum specimens likely limit its performance9,31. These results highlight the importance of considering the expected DNA preservation of specimens before choosing wet-lab protocols.

The SCR method can be implemented rapidly, cheaply and at high throughput because of its low reagent cost and short protocol (Supplementary Table 2). It also outperforms the other high-throughput library build methods tested here. Given the extensive time and effort taken to increase DNA yields whilst reducing cost, we present a complete step-by-step protocol “from sample to sequencing” in the supplementary text, allowing quick and easy implementation by other researchers looking to incorporate museum specimens into their research. We demonstrate that this optimised protocol can be easily and efficiently upscaled, with a single lab technician producing 760 libraries per month using SCR with minimal automation, generating over 300 billion DNA sequence reads over the last year.

Conclusion

The Santa Cruz Reaction (SCR) library build13 is shown to outperform off-the-shelf library build methods. These results, alongside the speed and cost of the method, indicate that SCR should be strongly considered for future large-scale museomics projects where sequence data of high quantity and quality are required at low cost. An optimised “sample to sequencing” high-throughput protocol that incorporates a bead-based DNA extraction and SCR is provided.

Data availability

Sequence data supporting the findings of this study are deposited on Zenodo: https://zenodo.org/records/13642777.

Change history

19 March 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-94879-1

References

Brandies, P., Peel, E., Hogg, C. J. & Belov, K. The value of reference genomes in the conservation of threatened species. Genes (Basel) 10, 846 (2019).

Church, D. M. et al. Modernizing reference genome assemblies. PLoS Biol. 9, e1001091 (2011).

Santos, B. F. et al. Enhancing DNA barcode reference libraries by harvesting terrestrial arthropods at the Smithsonian’s National Museum of Natural History. Biodivers. Data J. 11, e100904. https://doi.org/10.3897/BDJ.11.E100904 (2023).

Yeates, D. K., Zwick, A. & Mikheyev, A. S. Museums are biobanks: unlocking the genetic potential of the three billion specimens in the world’s biological collections. Curr. Opin. Insect Sci. 18, 83–88 (2016).

Johnson, K. R., Owens, I. F. P. & Global Collection Group. A global approach for natural history museum collections. Science 379, 1192–1194 (2023).

Wheeler, Q. D. et al. Mapping the biosphere: exploring species to understand the origin, organization and sustainability of biodiversity. Syst. Biodivers. 10, 1–20 (2012).

Graves, G. R. & Braun, M. J. Museums: storehouses of DNA? Science 255, 1335–1336 (1992).

Jensen, E. L. et al. Ancient and historical DNA in conservation policy. Trends Ecol. Evol. 37, 420–429. https://doi.org/10.1016/j.tree.2021.12.010 (2022).

Mullin, V. E. et al. First large-scale quantification study of DNA preservation in insects from natural history collections using genome-wide sequencing. Methods Ecol. Evol. 14, 360–371. https://doi.org/10.1111/2041-210X.13945 (2023).

D’Ercole, J., Prosser, S. W. J. & Hebert, P. D. N. A SMRT approach for targeted amplicon sequencing of museum specimens (Lepidoptera)—patterns of nucleotide misincorporation. PeerJ 9, e10420 (2021).

Patzold, F., Zilli, A. & Hundsdoerfer, A. K. Advantages of an easy-to-use DNA extraction method for minimal-destructive analysis of collection specimens. PLoS One 15, e0235222. https://doi.org/10.1371/JOURNAL.PONE.0235222 (2020).

Rohland, N., Glocke, I., Aximu-Petri, A. & Meyer, M. Extraction of highly degraded DNA from ancient bones, teeth and sediments for high-throughput sequencing. Nat. Protoc. 13, 2447–2461 (2018).

Kapp, J. D., Green, R. E. & Shapiro, B. A fast and efficient single-stranded genomic library preparation method optimized for ancient DNA. J. Hered. 112, 241–249. https://doi.org/10.1093/jhered/esab012 (2021).

Dabney, J. et al. Complete mitochondrial genome sequence of a Middle Pleistocene cave bear reconstructed from ultrashort DNA fragments. Proc. Natl. Acad. Sci. U S A 110, 15758–15763. https://doi.org/10.1073/pnas.1314445110 (2013).

Rohland, N. & Hofreiter, M. Ancient DNA extraction from bones and teeth. Nat. Protoc. 2, 1756–1762. https://doi.org/10.1038/nprot.2007.247 (2007).

Dabney, J., Meyer, M. & Pääbo, S. Ancient DNA damage. Cold Spring Harb. Perspect. Biol. 5, 1–8. https://doi.org/10.1101/cshperspect.a012567 (2013).

Korlević, P. et al. A minimally morphologically destructive approach for DNA retrieval and whole-genome shotgun sequencing of pinned historic Dipteran vector species. Genome Biol. Evol. 13, evab226 (2021).

Schubert, M., Lindgreen, S. & Orlando, L. AdapterRemoval v2: rapid adapter trimming, identification, and read merging. BMC Res. Notes. 9, 88. https://doi.org/10.1186/s13104-016-1900-2 (2016).

Li, H. & Durbin, R. Fast and accurate short read alignment with Burrows–Wheeler transform. Bioinformatics 25, 1754–1760 (2009).

Crowley, L. M. & Darwin Tree of Life Consortium. The genome sequence of the common red soldier beetle, Rhagonycha fulva (Scopoli, 1763). Wellcome Open Res. 6, 243 (2021).

Crowley, L. M. et al. The genome sequence of the Common Carder Bee, Bombus pascuorum (Scopoli, 1763). Wellcome Open Res. 8 (2023).

Li, H. et al. The sequence Alignment/Map format and SAMtools. Bioinformatics 25, 2078–2079. https://doi.org/10.1093/BIOINFORMATICS/BTP352 (2009).

Okonechnikov, K., Conesa, A. & García-Alcalde, F. Qualimap 2: advanced multi-sample quality control for high-throughput sequencing data. Bioinformatics 32, 292–294. https://doi.org/10.1093/bioinformatics/btv566 (2016).

Daley, T. & Smith, A. D. Predicting the molecular complexity of sequencing libraries. Nat. Methods 10, 325–327. https://doi.org/10.1038/NMETH.2375 (2013).

Tin, M. M. Y., Economo, E. P. & Mikheyev, A. S. Sequencing degraded DNA from non-destructively sampled museum specimens for RAD-tagging and low-coverage shotgun phylogenetics. PLoS One 9, e96793 (2014).

Orlando, L. et al. Ancient DNA analysis. Nat. Rev. Methods Primers 1, 14 (2021).

Fulton, T. L. Setting up an ancient DNA laboratory. In Ancient DNA: Methods and Protocols, 1–11 (2012).

Marotz, C. et al. DNA extraction for streamlined metagenomics of diverse environmental samples. Biotechniques 62, 290–293. https://doi.org/10.2144/000114559 (2017).

Gansauge, M-T. et al. Single-stranded DNA library preparation from highly degraded DNA using T4 DNA ligase. Nucleic Acids Res. 45, e79. https://doi.org/10.1093/nar/gkx033 (2017).

Carøe, C. et al. Single-tube library preparation for degraded DNA. Methods Ecol. Evol. 9, 410–419 (2018).

Wales, N. et al. New insights on single-stranded versus double-stranded DNA library preparation for ancient DNA. Biotechniques 59, 368–371. https://doi.org/10.2144/000114364 (2015).

Acknowledgements

This study was partially funded by Science Internal Funding (SIF) that is available to internal researchers at the Natural History Museum, London. IB was supported by NERC grant NE/P012574/1, and WM supported with funding provided by the Calleva Foundation. We’d like to thank curatorial staff, researchers, and members of the Core Research Labs at the NHM, most notably Michael Geiser, Joe Monks, Piotr Cuber, Jo Wilbraham, Juliet Brodie, Raju Misra, Gavin Broad, Silvia Salatino, Selina Brace, Pia Aanstad, Matt Clark, Owain Powell and Josh Kapp for their assistance with the study.

Author information


Contributions

BP and IB conceived the project. BP sourced the specimens. AH performed all lab work. WM performed all computational analysis and data visualisation. WM and AH wrote the paper. BP and IB provided suggestions and edits.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the legend of Figure 1. Furthermore, Supplementary Material file 1 published with the original version of this Article was a previous version. Full information regarding the corrections made can be found in the correction for this Article.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Marsh, W., Hall, A., Barnes, I. et al. Facilitating high throughput collections-based genomics: a comparison of DNA extraction and library building methods. Sci Rep 15, 6013 (2025). https://doi.org/10.1038/s41598-025-88443-0


DOI: https://doi.org/10.1038/s41598-025-88443-0