Abstract

The detection and recognition of vehicles are crucial components of environmental perception in autonomous driving. Commonly used sensors include cameras and LiDAR. The performance of camera-based data collection is susceptible to environmental interference, whereas LiDAR, while unaffected by lighting conditions, can only achieve coarse-grained vehicle classification. This study introduces a novel method for fine-grained vehicle model recognition using LiDAR in low-light conditions. The approach involves collecting vehicle model data with LiDAR, performing projection transformation, enhancing the data using contrast limited adaptive histogram equalization combined with Gamma correction, and implementing vehicle model recognition based on EfficientNet. Experimental results demonstrate that the proposed method achieves an accuracy of 98.88% in fine-grained vehicle model recognition and an F1-score of 98.86%, showcasing excellent performance.

Introduction

With the advancement of science and technology, intelligent vehicles and autonomous driving are increasingly becoming part of everyday life1. Intelligent vehicles are multidisciplinary integrated systems2,3, typically comprising three main components: environmental perception, behavior decision-making, and motion planning and control. Among these, environmental perception is the prerequisite and foundation for intelligent vehicles, and its performance directly impacts the quality of vehicle decision-making and control4. As significant participants in traffic environments, vehicles are crucial targets of detection and identification in environmental perception tasks5, and recognizing them plays a vital role in improving traffic safety and operational efficiency6,7. Recognizing vehicle types in real scenarios, such as underground garages, indoor parking lots, and intersections, can not only assist traffic management and law enforcement but also provide necessary environmental perception information for unmanned vehicles8. In recent years, with the rapid development of computer technology and sensor hardware, many new techniques and methods have been applied to the field of vehicle detection and recognition9,10,11. From the perspective of sensors, common methods for automatic vehicle type recognition and classification are mainly based on video images12,13,14 and LiDAR15,16,17.

Vehicle detection and recognition now reach high accuracy in favorable road environments18,19. However, the performance of camera-based detection and recognition degrades considerably in harsh conditions such as nighttime, rain, snow, and fog20, whereas LiDAR continues to work well even in low-light situations21. As one of the primary sensors equipped in most new energy vehicles, LiDAR detects obstacles by transmitting and receiving laser echo signals, thereby providing perception information about the traffic environment through point clouds22,23. In comparison to cameras, LiDAR is unaffected by lighting conditions and adapts well to various adverse weather conditions. Moreover, the point cloud it collects contains multi-channel data, which can either be processed directly from a three-dimensional perspective24 or be converted into two-dimensional images for more efficient processing25. This has led to its wide use in many fields, including intelligent vehicle systems26. Jin et al.27 proposed a robust vehicle detection method based on LiDAR: an improved Density-Based Spatial Clustering of Applications with Noise (DBSCAN) algorithm dealt effectively with the uneven distribution of point clouds and the resulting deterioration of clustering quality, and an advanced PointNet built with deep learning technology stably completed vehicle target recognition. Zhou et al.28 developed a new framework based on a Convolutional Neural Network and LiDAR data, achieving vehicle detection from roadside LiDAR data. Wu et al.29 fused features obtained by projecting 3D LiDAR data into image space with region features from camera images, accurately classifying various vehicle types and pedestrians using Faster R-CNN. Wu et al.30 proposed an improved DBSCAN method named 3D-SDBSCAN for distinguishing vehicle points from snowflakes in LiDAR data, effectively solving weather occlusion problems.
The aforementioned methods effectively leverage the high precision and robustness of LiDAR; however, they only provide coarse-grained recognition and classification for vehicles. These methods can merely differentiate between various objects such as cars, trucks, or pedestrians, without achieving fine-grained recognition and classification for specific vehicle types.

To achieve precise recognition of vehicle types, scholars have proposed numerous deep learning-based methods31,32 that use video images captured by cameras for car identification. Ke et al.33 introduced a dense attention network-based approach for fine-grained detection and recognition of vehicle types. Liu et al.34 presented the Multi-task Visual Language Model for vehicle recognition, while Joseph Sanjaya et al.35 conducted experiments to validate the effectiveness of the EfficientNet architecture in car image recognition. However, images captured at night often suffer from low brightness or quality, and the features used for recognition and classification may not be distinct enough. To address these issues, researchers have proposed various image preprocessing methods to enhance image quality and improve recognition accuracy. Histogram equalization36,37,38 enhances image contrast by redistributing pixel values so that the histogram approaches a uniform distribution, thereby improving the visual effect. However, global histogram equalization can reduce image details and ignores local contrast enhancement. Median filtering39 suppresses noise by replacing each pixel value with the median of its neighboring pixels, although some detail is lost in the process. It is also relatively slow to compute and cannot remove strong noise effectively. Dark channel prior40 restores image details and contrast by removing over-bright areas in an image to enhance the visual effect; however, its low efficiency makes it unsuitable for real-time processing. Retinex41, which is based on the processing mechanism of the human retina, separates the reflection component from the illumination component of an image to recover details and contrast; however, its color retention is weak, especially for brighter images.
Although the aforementioned methods can improve the accuracy of car image recognition and classification to a certain extent, in extremely low-light environments such as underground garages, enhancing camera-captured images may not be feasible.

Aiming to address the issue of LiDAR’s inability to achieve precise recognition of car models and the limitation of camera images in extremely low-light conditions, this paper proposes a novel approach that combines LiDAR point cloud data with EfficientNet and image enhancement technology to accomplish fine-grained recognition of car models. The main contributions of this study are as follows:

1) Leveraging LiDAR-collected car point cloud data, which undergo rotation, translation, and angle projection, we realize car classification based on data augmentation techniques and the EfficientNet architecture. This provides a new processing method for fine-grained recognition of car models in the field of unmanned driving perception.

2) We propose a data augmentation method that combines Contrast Limited Adaptive Histogram Equalization (CLAHE) with Gamma correction to enhance low-brightness datasets transformed from point clouds. This enhances vehicle recognition accuracy and demonstrates its effectiveness across multiple models.

3) Testing and evaluation on our self-collected dataset demonstrate the practicality of the proposed method for fine-grained recognition of car models under dim conditions, achieving an overall recognition accuracy of 98.88% and an F1-score of 98.86%.

Methodology

Aiming to address the challenge of recognizing vehicle types accurately under low-light conditions, which poses significant inconvenience to traffic safety and human activities, this paper proposes a refined method for fine-grained vehicle type recognition based on EfficientNet using LiDAR point cloud data and image enhancement techniques. This approach effectively enhances the accuracy of vehicle recognition in dim conditions and exhibits enhanced practicality.

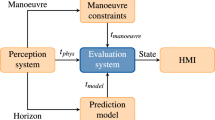

The overall framework of the proposed method is shown in Fig. 1. After data acquisition using LiDAR, the specific processing workflow includes three core steps:

1) Point Cloud Processing Module: Convert the LiDAR-captured point cloud data into common JPEG images.

2) Data Enhancement: Use CLAHE and Gamma correction techniques to process the converted images, enhancing contrast and details to improve recognition capability.

3) EfficientNet Classification: Classify the enhanced images using the EfficientNet network to achieve accurate vehicle recognition.

Point cloud processing: from PCD to JPG

Point cloud data is widely used in fields such as remote sensing target detection and autonomous driving. However, due to its large data volume and inherent sparsity, processing and recognizing point clouds directly, particularly for 3D object detection tasks, poses significant challenges in terms of computational complexity, memory requirements, and real-time performance. Converting multi-channel lidar point cloud data into dual-channel images on the x and y planes serves as a dimensionality reduction step, which significantly reduces the data volume while preserving critical spatial information. This transformation allows the use of well-established image processing techniques and pre-trained deep learning models, such as the EfficientNet network, to achieve higher recognition accuracy without the need to design complex 3D-specific architectures from scratch.

Although 3D vehicle detection methods can theoretically provide higher precision, they often come with increased computational costs, higher model complexity, and greater training data demands, which may not be suitable for real-time applications or resource-constrained environments. By leveraging image-based processing, we can effectively balance accuracy and efficiency, enabling the system to achieve robust recognition performance even under limited computational resources. Furthermore, this approach facilitates the integration of advanced image enhancement techniques to improve the quality of data and further enhance recognition accuracy. Therefore, converting point cloud data into dual-channel images represents a practical and efficient alternative, particularly when considering real-time applications and the challenges associated with raw 3D data processing.

Point cloud data typically contains a large number of three-dimensional coordinate points, providing information on the distribution of objects in 3D space. Preprocessing involves denoising and downsampling the point cloud data to reduce computational load and enhance processing efficiency. To facilitate subsequent processing, the point cloud data must be rotated and translated to align with a unified coordinate system. First, calculate the centroid of the point cloud data and translate it to the origin. The centroid calculation formula is as follows:

\[ \bar{P} = \frac{1}{N}\sum\limits_{i=1}^{N} {P_i} \tag{1} \]

where \(\bar{P}\) is the centroid, N is the number of points, and \({P_i}\) is the coordinate of the i-th point.

Next, compute the principal direction of the point cloud data and generate a rotation matrix to align the point cloud with the coordinate axes. To convert 3D point cloud data into 2D images, project the point cloud data onto a 2D plane. The angle projection method maps each point in 3D space to 2D coordinates by calculating the projection angle:

where \({\theta _y}\) and \({\theta _z}\) represent the projection angles on the y-axis and z-axis, respectively.
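The translation and angle-projection steps can be illustrated with a minimal sketch. It is not the implementation used in this study: it assumes the projection angles take the form θ_y = atan2(y, x) and θ_z = atan2(z, x) with the sensor at the origin looking along +x, it omits the principal-direction rotation, it stores the range of each point as the pixel value, and the function names and image size are hypothetical.

```python
import math


def centroid(points):
    """Centroid of the cloud: the mean of the N points P_i."""
    n = len(points)
    return tuple(sum(p[axis] for p in points) / n for axis in range(3))


def translate_to_origin(points):
    """Shift every point so that the centroid lies at the origin."""
    cx, cy, cz = centroid(points)
    return [(x - cx, y - cy, z - cz) for x, y, z in points]


def angle_project(points, width=64, height=32):
    """Rasterize points by their (assumed) projection angles.

    Assumption: theta_y = atan2(y, x) and theta_z = atan2(z, x), with the
    sensor at the origin looking along +x. Each pixel stores the range.
    """
    theta_y = [math.atan2(y, x) for x, y, _ in points]
    theta_z = [math.atan2(z, x) for x, _, z in points]
    y_min, z_max = min(theta_y), max(theta_z)
    y_span = (max(theta_y) - y_min) or 1.0  # avoid division by zero
    z_span = (z_max - min(theta_z)) or 1.0
    image = [[0.0] * width for _ in range(height)]
    for (x, y, z), ty, tz in zip(points, theta_y, theta_z):
        col = int((ty - y_min) / y_span * (width - 1))
        row = int((z_max - tz) / z_span * (height - 1))  # row 0 is the top
        image[row][col] = math.sqrt(x * x + y * y + z * z)
    return image


# Four illustrative points two meters in front of the sensor.
cloud = [(2.0, -1.0, 0.0), (2.0, 1.0, 0.0), (2.0, 0.0, 1.0), (2.0, 0.0, -1.0)]
centered = translate_to_origin(cloud)
image = angle_project(cloud, width=8, height=4)
```

Pixels that receive no point stay at zero, which is the source of the blank spots that the interpolation step fills.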

After projecting the point cloud data onto the 2D plane, some blank spots may appear. To fill these gaps, interpolation is applied to the image. Using the barycentric coordinate interpolation method, each pixel’s value is interpolated based on the barycentric coordinates within its adjacent triangle. The barycentric coordinate formula is as follows:

where u, v, and w are the three weights of the barycentric coordinates.
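As an illustration of barycentric interpolation, the sketch below assumes the standard form in which a pixel position P inside a triangle with vertices A, B, and C satisfies P = uA + vB + wC with u + v + w = 1, and the interpolated value is the same weighted sum of the vertex values. The triangle, the values, and the function names are hypothetical.

```python
def barycentric_weights(p, a, b, c):
    """Return (u, v, w) with p = u*a + v*b + w*c and u + v + w = 1."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    u = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    v = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return u, v, 1.0 - u - v


def interpolate(p, vertices, values):
    """Weighted sum of the three vertex values at position p."""
    u, v, w = barycentric_weights(p, *vertices)
    return u * values[0] + v * values[1] + w * values[2]


# Illustrative triangle of three filled pixels and an empty pixel inside it.
triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
filled = interpolate((1.0, 1.0), triangle, [10.0, 20.0, 30.0])
```

For this example the weights are (0.5, 0.25, 0.25), so the empty pixel receives 17.5.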

To meet specified size requirements, the generated images are scaled and padded. First, scale the image proportionally to the target size, then pad the edges to fit the specified dimensions. As shown in Fig. 2, these steps are integrated to efficiently convert large amounts of point cloud data into image data, facilitating subsequent image enhancement and deep learning model training.
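The scale-then-pad step can be sketched as follows. The 224-pixel target is taken from the training input size reported later in the paper and is only an assumption for this stage; the centered padding, the zero fill value, and the function names are likewise hypothetical.

```python
def letterbox_geometry(src_w, src_h, target=224):
    """Proportional scaling followed by centered padding to target x target.

    Returns the scaled width and height and the (left, top, right, bottom)
    padding needed to reach the target size.
    """
    scale = min(target / src_w, target / src_h)
    new_w = max(1, round(src_w * scale))
    new_h = max(1, round(src_h * scale))
    pad_x, pad_y = target - new_w, target - new_h
    left, top = pad_x // 2, pad_y // 2
    return new_w, new_h, (left, top, pad_x - left, pad_y - top)


def pad_image(image, pads, fill=0):
    """Pad a row-major image (list of rows) with a constant fill value."""
    left, top, right, bottom = pads
    width = len(image[0]) + left + right
    rows = [[fill] * width for _ in range(top)]
    rows += [[fill] * left + list(row) + [fill] * right for row in image]
    rows += [[fill] * width for _ in range(bottom)]
    return rows


geometry = letterbox_geometry(640, 320)  # a wide 640 x 320 projection
```

Scaling by the smaller of the two ratios keeps the aspect ratio of the vehicle silhouette, so the padding absorbs the remaining difference.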

Data enhancement: CLAHE + Gamma correction

Data enhancement techniques play a crucial role in improving image visibility and contrast, especially under poor lighting and environmental conditions. In this study, we use a combination of contrast-limited adaptive histogram equalization and Gamma correction to enhance images generated from point cloud data. This method significantly improves visual effects, thereby increasing the recognition accuracy of deep learning models.

CLAHE involves calculating the number of pixels at each gray level, and then using the cumulative distribution function to represent the cumulative probability of pixels at a given gray level, as shown in Eq. (5).

\[ Fcd(k) = \sum\limits_{i=0}^{k} p(i), \quad k = 0, 1, \ldots, L - 1 \tag{5} \]

where k is the current gray level, \(L\) is the total number of gray levels, and \(p(i)\) is the probability of gray level \(i\).

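By normalizing \(Fcd(k)\), the histogram equalization transformation function \(T(k)\) is obtained, as shown in Eq. (6).

\[ T(k) = \mathrm{round}\left( \frac{Fcd(k) - Fc{d_{\hbox{min} }}}{1 - Fc{d_{\hbox{min} }}} \times (L - 1) \right) \tag{6} \]

where \(Fc{d_{\hbox{min} }}\) is the minimum value of \(Fcd(k)\) and \(L\) is the number of gray levels.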
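The equalization mapping can be illustrated with a minimal sketch. It assumes the common normalization in which the cumulative distribution is shifted by its minimum nonzero value, divided by one minus that minimum, and scaled to the top gray level, and it applies the mapping globally to a flat list of pixels. CLAHE applies the same idea per tile with a clipped histogram, which this sketch does not do. The function name and the sample pixels are hypothetical.

```python
def equalization_lut(pixels, levels=256):
    """Lookup table built from the cumulative distribution of gray levels."""
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1
    total = len(pixels)
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running / total)  # cumulative probability up to this level
    cdf_min = min(c for c in cdf if c > 0)
    if cdf_min == 1.0:  # single gray level: nothing to stretch
        return list(range(levels))
    return [max(0, round((c - cdf_min) / (1.0 - cdf_min) * (levels - 1)))
            for c in cdf]


# A dark illustrative patch whose gray levels only span 10 to 30.
pixels = [10, 10, 10, 20, 30, 30, 30, 30]
lut = equalization_lut(pixels)
equalized = [lut[v] for v in pixels]  # [0, 0, 0, 51, 255, 255, 255, 255]
```

The narrow input range of 10 to 30 is stretched over the full 0 to 255 range, which is the contrast gain that equalization provides on dark images.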

To address the issue of excessive noise amplification in homogeneous regions of the image, a contrast limiting function is introduced, as shown in Eq. (7).

where \({B_{\hbox{min} }}\) and \({B_{\hbox{max} }}\) are the minimum and maximum contrast values set according to the characteristics of vehicle images, and the function returns the contrast-limited output value. Finally, the contrast limiting function is applied to each pixel in the image to obtain the equalized image.

Gamma correction is a nonlinear operation used to adjust the brightness of an image. By changing the Gamma value of an image, it can be made brighter or darker to better match the human visual characteristics. The Gamma correction formula is as follows:

\[ {I_{out}} = I_{{in}}^{\gamma } \tag{8} \]

where \({I_{out}}\) and \({I_{in}}\) represent the output and input pixel values, respectively, and γ is the Gamma value.

By selecting an appropriate Gamma value, the details and contrast of the image can be enhanced. Typically, the Gamma value ranges from 0.5 to 2.5. The specific value needs to be adjusted based on the characteristics and application requirements of the image. In the method proposed in this paper, the selected Gamma value is 1.5.
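Gamma correction on 8-bit images is commonly implemented as a lookup table. The sketch below assumes pixel values are normalized to [0, 1] before the power is applied and rescaled afterwards; the function names are hypothetical. Under this convention a Gamma value above 1 darkens mid-tones and a value below 1 brightens them, while some implementations use 1/γ as the exponent, which reverses the direction, so the convention should be checked before reusing a reported value such as 1.5.

```python
def gamma_lut(gamma, levels=256):
    """Lookup table for out = top * (in / top) ** gamma on integer pixels."""
    top = levels - 1
    return [round(top * (value / top) ** gamma) for value in range(levels)]


def apply_lut(image, lut):
    """Apply a lookup table to a row-major image (list of rows)."""
    return [[lut[value] for value in row] for row in image]


darker = gamma_lut(1.5)          # exponent used directly
brighter = gamma_lut(1.0 / 1.5)  # inverse-exponent convention
corrected = apply_lut([[0, 128, 255]], darker)
```

Both tables leave 0 and 255 unchanged and differ only in how they redistribute the intermediate gray levels.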

In practical applications, CLAHE is first applied to the input image to enhance contrast, followed by Gamma correction to adjust brightness. This significantly improves the visual quality of the image. Figure 3 shows a comparison of images before and after applying the combination of CLAHE and Gamma correction.

As shown in Fig. 3, the combination of CLAHE and Gamma correction has a significant impact on the original images converted from vehicle point clouds. The contrast of the original image was 28.73, and the brightness was 32.03. After enhancement, the image’s contrast increased to 39.67, and the brightness to 44.72. The overall image became brighter, and the contrast was significantly enhanced, demonstrating the effectiveness of CLAHE and Gamma correction in adjusting image contrast and brightness.
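The paper does not state how brightness and contrast are measured. One common choice, assumed here purely for illustration, is the mean gray level for brightness and the standard deviation of gray levels (RMS contrast) for contrast; the function name and the sample image are hypothetical.

```python
import statistics


def brightness_and_contrast(image):
    """Mean gray level and population standard deviation of a gray image."""
    pixels = [value for row in image for value in row]
    return statistics.fmean(pixels), statistics.pstdev(pixels)


# Illustrative 2 x 2 image, not data from this study.
brightness, contrast = brightness_and_contrast([[0, 100], [100, 200]])
```

Computing both measures before and after enhancement gives a simple numerical check that the image became brighter and that its gray levels spread out.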

Vehicle recognition: based on EfficientNet-B0 network

Convolutional Neural Networks (CNNs) are a prevalent type of deep learning network widely used in image classification, object detection, and image segmentation. As shown in Fig. 4, we chose the EfficientNet-B0 network for vehicle recognition in this study.

EfficientNet, proposed by Google, is a highly efficient and accurate convolutional neural network. Its design significantly improves model performance and efficiency through a compound scaling method that adjusts the network’s depth, width, and resolution. The largest model in the EfficientNet series has 66 million parameters and achieves a top-1 accuracy of 84.3% on ImageNet, with a smaller network structure and faster runtime than the best models of its time.

The core idea of EfficientNet is to adjust the network's three dimensions (depth, width, and resolution) simultaneously through compound scaling, with EfficientNet-B0 serving as the baseline network. Traditionally, model scaling focused on a single dimension, such as increasing the number of layers or the input image resolution, which could lead to wasted computational resources or performance bottlenecks. Compound scaling instead scales all three dimensions together, balancing model complexity against computational cost to achieve higher performance.
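Compound scaling can be made concrete with a short calculation. The sketch below uses the coefficients reported in the original EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution, chosen so that α·β²·γ² is approximately 2) and a compound coefficient φ, with φ = 0 corresponding to the B0 baseline. The function name is hypothetical, and released models may round or adjust the resulting multipliers.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution coefficients


def compound_scale(phi):
    """Multipliers relative to the baseline for compound coefficient phi."""
    return {
        "depth": ALPHA ** phi,
        "width": BETA ** phi,
        "resolution": GAMMA ** phi,
        # FLOPs grow with depth * width^2 * resolution^2.
        "flops": (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi,
    }


baseline = compound_scale(0)  # every multiplier equals 1.0
one_step = compound_scale(1)  # FLOPs grow by roughly a factor of 2
```

Because the FLOPs multiplier is close to 2 per unit of φ, each step of φ roughly doubles the computational budget while keeping the three dimensions in balance.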

As illustrated in Fig. 4, EfficientNet-B0 consists of multiple convolutional layers, pooling layers, and fully connected layers. Its main features include:

MBConv Modules: EfficientNet-B0 extensively uses Mobile Inverted Bottleneck Convolution modules. These modules enhance the model’s expressive power and performance while maintaining low computational costs. MBConv is similar to the Inverted Residual Block in the MobileNetV3 network but with differences. One difference is the activation function used; MBConv in EfficientNet uses the Swish activation function. Additionally, each MBConv includes a Squeeze-and-Excitation (SE) module. Figure 5 depicts the MBConv structure of EfficientNet-B0.

As shown in Fig. 5, the MBConv structure mainly consists of a 1 × 1 convolution for dimensionality increase, followed by Batch Normalization (BN) and Swish; a k × k depthwise convolution, with typical kernel sizes of 3 × 3 and 5 × 5; an SE module; and a 1 × 1 convolution for dimensionality reduction, followed by BN and a Dropout layer.

The SE module, depicted in Fig. 6, comprises a global average pooling layer and two fully connected layers. The number of nodes in the first fully connected layer is one-fourth of the number of channels of the feature matrix input to the MBConv, and this layer uses the Swish activation function. The number of nodes in the second fully connected layer equals the number of channels of the feature matrix output by the depthwise convolutional layer, and this layer uses the Sigmoid activation function.
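The data flow through the SE module can be sketched in a few lines. This is an illustration rather than the network code: for simplicity it assumes the MBConv input and the depthwise output have the same number of channels, so the hidden layer has one-fourth of the channels being gated, and the zero-valued weights only stand in for learned parameters. All names are hypothetical.

```python
import math


def swish(x):
    return x / (1.0 + math.exp(-x))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(vector, weights, biases):
    """Fully connected layer: one row of weights per output node."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, biases)]


def squeeze_excite(feature_maps, w1, b1, w2, b2):
    """Rescale each channel by a gate computed from global channel statistics."""
    # Squeeze: global average pooling gives one number per channel.
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
                for ch in feature_maps]
    # Excite: reduce with Swish, then expand with Sigmoid to gates in (0, 1).
    hidden = [swish(h) for h in dense(squeezed, w1, b1)]
    gates = [sigmoid(g) for g in dense(hidden, w2, b2)]
    return [[[g * v for v in row] for row in ch]
            for ch, g in zip(feature_maps, gates)]


# Four 2 x 2 channels, one hidden node (one-fourth of four channels).
maps = [[[float(c), 2.0], [4.0, 8.0]] for c in range(4)]
w1, b1 = [[0.0] * 4], [0.0]
w2, b2 = [[0.0] for _ in range(4)], [0.0] * 4
gated = squeeze_excite(maps, w1, b1, w2, b2)
```

With all weights at zero every gate equals 0.5, so each channel is simply halved; trained weights would instead emphasize informative channels and suppress the others.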

Experiments

Data collection and processing

In this section, we validate the effectiveness of our proposed method through real-world experiments. The experiments utilized a LiDAR sensor manufactured by RoboSense (China), specifically the M1 solid-state LiDAR. Table 1 presents the detailed parameters of the LiDAR.

The M1 is mounted on the actual platform within a dim working environment, as depicted on the left side of Fig. 7. A point cloud dataset required for the experiment is then acquired under low-light conditions. This dataset encompasses point cloud data from three distinct vehicle models: BYD Song Pro, Nissan Xuan Yi, and Toyota Corolla. Each vehicle type comprises 1000 samples of point cloud data, accompanied by an additional 1000 samples representing the environmental background. Consequently, the overall dataset consists of 4000 samples.

To improve the model’s generalization and robustness, we pre-processed the point cloud data, including converting point clouds to images (PCD to JPG) and enhancing the images using CLAHE and Gamma correction. Figure 7 (right) shows the vehicle JPG dataset after point cloud conversion and image enhancement.

Experimental setup

The experiments were conducted on a workstation equipped with an NVIDIA RTX 4070 GPU and an Intel i7-13700KF CPU, running Windows 11. The primary software environment included Python 3.10, PyTorch 2.2.1 + CUDA 11.8, Open3D 0.18.0, and OpenCV 4.9.0.

During training, the batch size was set to 32, the learning rate to 0.001, and the number of epochs to 10. The enhanced vehicle JPG dataset was loaded and pre-processed, resizing the training and test set images to (224, 224), converting them to tensors, and performing normalization and standardization.

The dataset was split into a training set (80% of the total dataset) and a test set (20%). DataLoader was used to package the training and test sets into iterable data loaders. The training set data loader was set to shuffle the data (shuffle = True) to increase randomness. During model training, the cross-entropy loss function was used, and the Adam optimizer was selected.
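The 80/20 split can be sketched with the standard library alone. The sketch assumes a purely random, non-stratified split over the 4000 samples with a fixed seed for repeatability; the paper does not state either detail, and the function name and the placeholder sample tuples are hypothetical.

```python
import math
import random


def split_dataset(samples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the samples and cut it into train and test parts."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder (index, class) pairs: 4 classes with 1000 samples each.
samples = [(index, index // 1000) for index in range(4000)]
train_set, test_set = split_dataset(samples)
batches_per_epoch = math.ceil(len(train_set) / 32)  # batch size of 32
```

With these settings the training set holds 3200 samples, which corresponds to 100 batches per epoch at a batch size of 32, and the test set holds the remaining 800.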

Evaluation metrics

To comprehensively evaluate the model’s performance, we used four metrics: accuracy, precision, recall, and F1 score. The formulas are as follows:

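\[ Accuracy = \frac{TP + TN}{TP + TN + FP + FN} \tag{9} \]

\[ Precision = \frac{TP}{TP + FP} \tag{10} \]

\[ Recall = \frac{TP}{TP + FN} \tag{11} \]

\[ F1 = \frac{2 \times Precision \times Recall}{Precision + Recall} \tag{12} \]

where TP denotes true positives, the number of correct positive predictions; TN denotes true negatives, the number of correct negative predictions; FP denotes false positives, the number of incorrect positive predictions; and FN denotes false negatives, the number of incorrect negative predictions.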
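For a multi-class task, these quantities are usually computed per class from a confusion matrix by treating each class in turn as the positive class. The sketch below follows that one-vs-rest convention, which is an assumption, since the paper does not state how the per-class values are aggregated. The confusion matrix is a made-up two-class example, not a result from this study, and the function names are hypothetical.

```python
def per_class_metrics(confusion):
    """confusion[i][j] = samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[r][c] for r in range(n)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"accuracy": (tp + tn) / total, "precision": precision,
                        "recall": recall, "f1": f1})
    return results


def overall_accuracy(confusion):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total


example = [[5, 0], [1, 4]]  # illustrative counts only
metrics = per_class_metrics(example)
```

Averaging the per-class precision, recall, and F1 values with equal weight gives the macro-averaged scores, which is one common way to report a single figure per metric.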

Results

Classification performance of the proposed method on various types of samples

Table 2 shows the classification performance of the proposed method on various types of samples. The experimental results demonstrate that the proposed method performs excellently in the classification tasks for different vehicle point cloud data, achieving an overall recognition accuracy of 98.88%, with precision and recall of 98.89% and 98.85%, respectively, and an F1-score of 98.86%. Specifically, the recognition accuracy for BYD Song Pro and Toyota Corolla reaches 100%. Misclassification mainly occurs between Nissan Xuan Yi and background images. The F1-score indicates successful recognition of vehicle samples, while errors in recognizing backgrounds are relatively higher, possibly because the diverse nature of the background samples distracts the model's attention.

Comparison of classification performance between different models

We evaluated the performance of six models: InceptionV3, DenseNet, MobileNetV2, ShuffleNetV2, SENet, and EfficientNet, using the four evaluation metrics and the confusion matrices for the recognition results of each vehicle type. The experimental results are shown in Table 3 and Fig. 8.

In terms of overall performance, EfficientNet significantly outperforms the other models across all evaluation metrics, achieving an F1-score of 98.86% and an overall classification accuracy of 98.88%. The confusion matrix analysis reveals that EfficientNet not only provides high precision in distinguishing between the tested vehicle models (Toyota Corolla, Nissan Xuan Yi, and BYD Song Pro) but also avoids misclassifications between different car types. This superior performance can be attributed to EfficientNet's compound scaling approach, which balances network depth, width, and resolution to achieve higher model efficiency and expressiveness.

SENet, ranking second in overall performance, achieves an F1-score of 96.30%, showcasing strong capabilities in vehicle type recognition. Its squeeze-and-excitation modules help emphasize meaningful features in the dataset, though its performance slightly lags behind EfficientNet in fine-grained classification tasks. DenseNet also demonstrates competitive results, with an F1-score of 95.58%. Its densely connected architecture enables feature reuse, enhancing recognition accuracy.

Conversely, lightweight models such as ShuffleNetV2 and MobileNetV2, while offering faster inference times and lower computational complexity, exhibit reduced classification accuracy, with F1-scores of 92.81% and 90.96%, respectively. These models face challenges in distinguishing between fine-grained vehicle features, which may explain the observed performance gap. InceptionV3, despite being widely adopted in image recognition tasks, records the poorest performance in this study, with an F1-score of 84.35%. This underperformance may stem from its architectural constraints, which are less suited for tasks demanding high expressiveness in distinguishing between similar vehicle models.

This study highlights the importance of selecting a model that balances precision and efficiency based on specific application requirements. By leveraging EfficientNet’s high accuracy and efficient design, our proposed recognition method is well-suited for deployment in real-world applications such as underground parking garages, where computational resources may be limited, and accurate vehicle model recognition is critical.

Accuracy comparison before and after image enhancement

Table 4 shows that after applying data enhancement techniques, the classification accuracy of all models improved. This indicates that the enhancement methods used in this study effectively improve the models' image processing capabilities, enabling them to better extract useful features from the images. For EfficientNet, training on the enhanced images instead of the original point cloud-converted images increased classification accuracy from 96.79% to 98.88%, an improvement of 2.09 percentage points, which, though small, is still meaningful. This may be because EfficientNet already performs well, and the image enhancement techniques further optimized its image processing capabilities. For InceptionV3, classification accuracy improved from 70.88% to 84.62%, an increase of 13.74 percentage points, showing that image enhancement techniques played an important role in boosting its performance. This may be because InceptionV3 has relatively weak image processing capabilities, and image enhancement techniques can compensate for its deficiencies. These results indicate that the image enhancement methods used in this study help improve the models' generalization ability and robustness, enhancing recognition accuracy.

Evaluation of recognition results at different distances

The experimental datasets for the above three sections were all collected when the LiDAR and the target vehicle were 2 m apart. To verify the validity of the datasets and the feasibility of the recognition method proposed in this study, we evaluated the recognition results of multiple networks at other collection distances. The experimental results are shown in Table 5.

As shown in the table, there are certain differences in the recognition accuracy of each model at 2-meter and 5-meter distances. Among them, the EfficientNet model achieved the highest accuracy under both conditions, with 98.88% at 2 m and 97.75% at 5 m, indicating its strong generalization ability across different distances. SENet and DenseNet followed in accuracy and showed relatively stable performance, with minimal changes in accuracy at the two distances.

From the comparison of recognition accuracy at different distances, all models demonstrated slightly lower accuracy at the 5-meter distance compared to the 2-meter distance, though the overall differences were small. This suggests that the proposed method can adapt to vehicle recognition tasks under varying distance conditions. However, it also reflects that increasing the target distance may impact the model’s ability to extract detailed features.

Complexity and computational cost evaluation of different network models

To comprehensively evaluate the applicability of different network models in the target recognition task, this study compared the complexity and computational cost of the six network models mentioned above. The experimental results are shown in Table 6.

By comprehensively evaluating the models' recognition performance, complexity, and computational costs, EfficientNet stood out due to its excellent recognition accuracy (98.88%), low complexity (4.013 M parameters, 0.421 GFLOPs), and reasonable inference time (5.14 ms), demonstrating the best overall performance in this study.

In contrast, although SENet and DenseNet also performed well in terms of recognition accuracy, their large model sizes or long inference times significantly increase computational and storage burdens. ShuffleNetV2 and MobileNetV2 exhibited outstanding performance in lightweight design and inference efficiency but showed relatively low recognition accuracy, which may limit their applicability in scenarios requiring high precision. InceptionV3, on the other hand, did not meet expectations. Its complex architecture and high computational cost did not result in a significant improvement in recognition performance, making it unsuitable for this study’s requirements.

Discussion

The proposed method of vehicle recognition using LiDAR point cloud, combined with image enhancement and EfficientNet, demonstrates significant advantages in low-light environments. Firstly, the experimental data solely rely on LiDAR without dependence on other sensors, ensuring that the recognition performance remains unaffected by adverse weather conditions or poor lighting conditions. By converting point cloud data into images and applying image enhancement techniques such as CLAHE and Gamma correction, the quality of the images is significantly enhanced leading to improved classification accuracy. Experimental results indicate that when processing the enhanced image data, EfficientNet achieves superior performance with a classification accuracy of 98.88%. This novel approach of converting point clouds into images for enhancement and recognition not only showcases its effectiveness but also exhibits exceptional robustness in classifying different car models, thereby validating its practical applicability.

Despite the promising results, there are some limitations to this method. Firstly, due to the constraints of data collection efficiency, the dataset only includes point cloud data for three types of vehicles, which may limit the model's generalization ability to other vehicle types. In addition, the dataset was created by collecting point cloud data from a stationary vehicle at a fixed distance from the LiDAR sensor. This setup allows for accurate, low-noise data acquisition, ensuring high-quality baseline data for algorithm development. However, we acknowledge that it represents an idealized scenario compared to real-world environments where vehicles are often in motion, introducing challenges such as motion distortions, occlusions, and incomplete point cloud data. In real-world scenarios, moving vehicles may result in blurred or fragmented point cloud captures, which could degrade recognition performance. Secondly, although the method achieves good recognition performance, the operations, processing, and computational complexity involved are relatively high, potentially hindering real-time applications. Additionally, this study primarily focuses on data processing from a single sensor, without considering the potential advantages of multi-sensor fusion.

To address these potential issues, future iterations of this research will consider augmenting the dataset to include dynamic scenarios where vehicles are in motion and occlusion effects are present. Advanced data preprocessing techniques, such as motion compensation algorithms, can be applied to mitigate motion-induced distortions. For instance, point cloud alignment techniques can be employed to stitch fragmented data into coherent shapes, while occlusion-aware algorithms can improve robustness by reconstructing missing data based on context.

Looking ahead, there are several directions in which this research can be further improved and expanded: First, increasing the diversity of vehicle types and the number of samples in the dataset will enhance the model’s generalization ability. Collecting data under different environmental conditions, including varying distances and dynamic settings, will also contribute to improving robustness. Second, from another perspective, introducing small-sample learning techniques will enable the model to recognize new vehicle models with limited training data, thereby adapting to the frequent introduction of new vehicle designs in the real world. Third, integrating motion compensation and data augmentation techniques will address real-world challenges such as motion distortions and occlusions. Finally, considering practical applications, developing a lightweight model suitable for edge computing environments will be crucial for achieving real-time deployment in autonomous driving systems and other resource-constrained applications.

Conclusion

In this paper, we propose a novel approach that combines image enhancement and EfficientNet to achieve fine-grained vehicle recognition using LiDAR point clouds in low-light conditions. By converting the point cloud data into images and applying CLAHE and Gamma correction, we enhance the quality of the input data before classification with the EfficientNet model. Experimental results validate the effectiveness of the approach, demonstrating a recognition accuracy of 98.88% and an F1-score of 98.86%. Because the method relies solely on LiDAR data, it is unaffected by lighting conditions, and it thereby provides a new perspective and auxiliary technique for vehicle type recognition in intelligent transportation.
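To make the enhancement step concrete, the sketch below applies Gamma correction to an 8-bit grayscale image through a lookup table. It is a minimal illustration, not the implementation used in this study: the function name `gamma_correct`, the convention out = 255 · (in / 255)^gamma, and the example gamma value are assumptions, and the CLAHE stage is not shown because it would normally be delegated to an image processing library.

```python
from typing import List


def gamma_correct(image: List[List[int]], gamma: float) -> List[List[int]]:
    """Apply Gamma correction to an 8-bit grayscale image.

    Assumed convention: out = 255 * (in / 255) ** gamma, so gamma < 1
    brightens dark regions and gamma > 1 darkens the image.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")

    # Precompute the mapping once for all 256 possible intensities.
    lut = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [[lut[pixel] for pixel in row] for row in image]


# A dark projected row is brightened with an illustrative gamma of 0.5.
projected = [[0, 64, 255]]
enhanced = gamma_correct(projected, gamma=0.5)  # -> [[0, 128, 255]]
```

With this convention, a mid-dark intensity of 64 is lifted to 128 while pure black and pure white are left unchanged, which is the behaviour one would want when raising the visibility of faint returns in a projected point cloud image.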

Data availability

The experimental results of this study are presented in the article. The automotive point cloud dataset collected using LiDAR in the current study is available from the corresponding author upon reasonable request.

References

Chen, Q., Xie, Y., Guo, S., Bai, J. & Shu, Q. Sensing system of environmental perception technologies for driverless vehicle: A review of state of the art and challenges. Sens. Actuators A: Phys. 319. https://doi.org/10.1016/j.sna.2021.112566 (March 2021).

Khan, M. A. et al. Level-5 autonomous driving—Are we there yet? A review of research literature. ACM Comput. Surv. https://doi.org/10.1145/3485767 (Feb. 2023).

Wang, Z. et al. A review of vehicle detection techniques for Intelligent vehicles. IEEE Trans. Neural Networks Learn. Syst. 34 (8), 3811–3831. https://doi.org/10.1109/TNNLS.2021.3128968 (Aug 2023).

Zhao, J. et al. Autonomous driving system: A comprehensive survey. Expert Syst. Appl. 242. https://doi.org/10.1016/j.eswa.2023.122836 (May 2024).

Xia, X. et al. An automated driving systems data acquisition and analytics platform. Transp. Res. Part C: Emerg. Technol. 151. https://doi.org/10.1016/j.trc.2023.104120 (June 2023).

Luo, R. et al. Deep learning approach for long-term prediction of electric vehicle (EV) charging station availability. 2021 IEEE Int. Intell. Transp. Syst. Conf. (ITSC), Indianapolis, IN, USA, 3334–3339. https://doi.org/10.1109/ITSC48978.2021.9564633 (2021).

Luo, R., Song, Y., Huang, L., Zhang, Y. & Su, R. AST-GIN: Attribute-augmented spatiotemporal graph informer network for electric vehicle charging station availability forecasting. Sensors 23 (2023). https://doi.org/10.3390/s23041975

Shen, J., Liu, N., Sun, H., Li, D. & Zhang, Y. Lightweight deep network with context information and attention mechanism for vehicle detection in aerial image. IEEE Geosci. Remote Sens. Lett., 19, pp. 1–5 (2022). https://doi.org/10.1109/LGRS.2022.3153115

Fayyad, J., Jaradat, M. A., Gruyer, D. & Najjaran, H. Deep learning sensor fusion for autonomous vehicle perception and localization: A review. Sensors 20(15), 4220 (2020). https://doi.org/10.3390/s20154220

Kim, J. A., Sung, J. Y. & Park, S. H. Comparison of faster-RCNN, YOLO, and SSD for real-time vehicle type recognition. In 2020 IEEE International Conference on Consumer Electronics - Asia (ICCE-Asia), Seoul, Korea (South), pp. 1–4 (2020). https://doi.org/10.1109/ICCE-Asia49877.2020.9277040

Wang, M., Zhao, L. & Yue, Y. PA3DNet: 3-D vehicle detection with pseudo shape segmentation and adaptive camera-LiDAR fusion. IEEE Trans. Ind. Informat., 19(11), 10693–10703 (2023). https://doi.org/10.1109/TII.2023.3241585

Švorc, D., Tichý, T. & Růžička, M. An infrared video detection and categorization system based on machine learning. Neural Netw. World, 31(4), (2021). https://doi.org/10.14311/NNW.2021.31.014

Kang, L., Lu, Z., Meng, L. & Gao, Z. YOLO-FA: Type-1 fuzzy attention based YOLO detector for vehicle detection. Expert Syst. Appl. 237. https://doi.org/10.1016/j.eswa.2023.121209 (March 2024).

Trivedi, J. D., Mandalapu, S. D. & Dave, D. H. Vision-based real-time vehicle detection and vehicle speed measurement using morphology and binary logical operation. J. Industrial Inform. Integr. 27. https://doi.org/10.1016/j.jii.2021.100280 (May 2022).

Lee, E., Jeon, H. J., Choi, J., Choi, H. T. & Lee, S. Development of vehicle-recognition method on water surfaces using LiDAR data: SPD2 (spherically stratified point projection with diameter and distance). Def. Technol. 36, 95–104. https://doi.org/10.1016/j.dt.2023.09.013 (June 2024).

Gupta, A., Jain, S., Choudhary, P. & Parida, M. Dynamic object detection using sparse LiDAR data for autonomous machine driving and road safety applications. Expert Syst. Appl. 255, Part B (2024). https://doi.org/10.1016/j.eswa.2024.124636

Lin, C., Wang, Y., Gong, B. & Liu, H. Vehicle detection and tracking using low-channel roadside LiDAR. Measurement 218. https://doi.org/10.1016/j.measurement.2023.113159 (Aug. 2023).

Berwo, M. et al. Deep learning techniques for vehicle detection and classification from images/videos: A survey. Sensors, 23(10), 4832 (2023). https://doi.org/10.3390/s23104832

Tan, S. H., Chuah, J. H., Chow, C. O. & Kanesan, J. Spatially recalibrated convolutional neural network for vehicle type recognition. IEEE Access, 11, 142525–142537 (2023). https://doi.org/10.1109/ACCESS.2023.3342109

Yuan, J., Liu, T., Xia, H. & Zou, X. A novel dense generative net based on satellite remote sensing images for vehicle classification under foggy weather conditions. IEEE Trans. Geosci. Remote Sens. 61, 1–10 (2023). https://doi.org/10.1109/TGRS.2023.3336546

Zhang, Y., Carballo, A., Yang, H. & Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 196, 146–177 (Feb. 2023). https://doi.org/10.1016/j.isprsjprs.2022.12.021

Li, Y. & Ibanez-Guzman, J. Lidar for autonomous driving: The principles, challenges, and trends for automotive lidar and perception systems. IEEE. Signal. Process. Mag. 37(4), 50–61. https://doi.org/10.1109/MSP.2020.2973615 (July 2020).

Wu, Y., Wang, Y., Zhang, S. & Ogai, H. Deep 3D object detection networks using LiDAR data: A review. IEEE Sensors J. 21(2), 1152–1171 (2021). https://doi.org/10.1109/JSEN.2020.3020626

Mao, J. et al. 3D object detection for autonomous driving: A comprehensive survey. Int. J. Comput. Vis. 131(8), 1909–1963 (2023).

Dong, Q. et al. Pavement crack detection based on point cloud data and data fusion. Philos. Trans. R. Soc. A. 381(2254), 20220165 (2023).

Fernandes, D. et al. Point-cloud based 3D object detection and classification methods for self-driving applications: A survey and taxonomy. Inform. Fusion 68, 161–191. https://doi.org/10.1016/j.inffus.2020.11.002 (Apr. 2021).

Jin, X., Yang, H., He, X., Liu, G., Wang, Q. & Yan, Z. Robust LiDAR-based vehicle detection for on-road autonomous driving. Remote Sensing 15(12), 3160 (2023). https://doi.org/10.3390/rs15123160

Zhou, S., Xu, H., Zhang, G., Ma, T. & Yang, Y. Leveraging deep convolutional neural networks pre-trained on autonomous driving data for vehicle detection from roadside LiDAR data. IEEE Trans. Intell. Transp. Syst. 23(11), 22367–22377 (2022). https://doi.org/10.1109/TITS.2022.3183889

Wu, Q., Li, X., Wang, K. & Bilal, H. Regional feature fusion for on-road detection of objects using camera and 3D-LiDAR in high-speed autonomous vehicles. Soft Comput. 27, 18195–18213. https://doi.org/10.1007/s00500-023-09278-3 (Oct. 2023).

Wu, J., Xu, H., Zheng, J. & Zhao, J. Automatic vehicle detection with roadside LiDAR data under rainy and snowy conditions. IEEE Intell. Transp. Syst. Mag. 13(1), 197–209 (2021). https://doi.org/10.1109/MITS.2019.2926362

Kuutti, S., Bowden, R., Jin, Y., Barber, P. & Fallah, S. A survey of deep learning applications to autonomous vehicle control. IEEE Trans. Intell. Transp. Syst. 22(2), 712–733 (2021). https://doi.org/10.1109/TITS.2019.2962338

Shen, J. et al. An anchor-free lightweight deep convolutional network for vehicle detection in aerial images. IEEE Trans. Intell. Transp. Syst., 23(12), 24330–24342 (2022). https://doi.org/10.1109/TITS.2022.3203715

Ke, X. & Zhang, Y. Fine-grained vehicle type detection and recognition based on dense attention network. Neurocomputing 399, 247–257. https://doi.org/10.1016/j.neucom.2020.02.101 (July 2020).

Liu, W., Zhang, S., Zhou, L., Luo, N. & Xu, M. A semi-supervised mixture model of visual language multitask for vehicle recognition. Appl. Soft Comput. 159. https://doi.org/10.1016/j.asoc.2024.111619 (July 2024).

Sanjaya, J., Ayub, M. & Toba, H. Comparative study of convolutional neural networks-based algorithm for fine-grained car recognition. Comput. Sci., 18–25. https://doi.org/10.5220/0010743800003113

Dhal, K. G. et al. Histogram equalization variants as optimization problems: A review. Arch. Comput. Methods Eng. 1471–1496. https://doi.org/10.1007/s11831-020-09425-1 (April 2020).

Al-Shemarry, M. S. & Li, Y. Developing learning-based preprocessing methods for detecting complicated vehicle licence plates. IEEE Access 8, 170951–170966 (2020). https://doi.org/10.1109/ACCESS.2020.3024625

Wu, B. F. & Juang, J. H. Adaptive vehicle detector approach for complex environments. IEEE Trans. Intell. Transp. Syst. 13(2), 817–827. https://doi.org/10.1109/TITS.2011.2181366 (June 2012).

El-Khoreby, M. A. et al. Vehicle detection and counting using adaptive background model based on approximate median filter and triangulation threshold techniques. Control Comp., 346–357 (Sep. 2020). https://doi.org/10.3103/S0146411620040057

Chen, R., Sheng, Y., Wei, S. & Tang, D. Research on safe distance measuring method of front vehicle in foggy environment. In 2019 Third World Conference on Smart Trends in Systems Security and Sustainablity (WorldS4), London, UK, pp. 333–338 (2019). https://doi.org/10.1109/WorldS4.2019.8903942

Alluhaidan, M. S. & Abdel-Qader, I. Visibility enhancement in poor weather-tracking of vehicles. In Proceedings of the International Conference on Scientific Computing (CSC), The Steering Committee of The World Congress in Computer Science, Computer Engineering and Applied Computing (WorldComp), 183–188 (2018).

Huang, Y. et al. Senet: Spatial information enhancement for semantic segmentation neural networks. Vis. Comput. 40, 3427–3440. https://doi.org/10.1007/s00371-023-03043-1 (2024).

Canese, L. et al. Sensing and detection of traffic signs using CNNs: An assessment on their performance. Sensors 22, 8830. https://doi.org/10.3390/s22228830 (2022).

Shen, X. & Wei, X. A real-time subway driver action sensoring and detection based on lightweight ShuffleNetV2 network. Sensors 23, 9503. https://doi.org/10.3390/s23239503 (2023).

Ngoc, H. T. et al. Steering angle prediction for autonomous vehicles using deep transfer learning. J. Adv. Inform. Technol. 15(1), 138–146 (2024).

Kheder, M. Q. & Mohammed, A. A. Transfer learning based traffic light detection and recognition using CNN inception-V3 model. Iraqi J. Sci., 6258–6275 (2023).

Author information

Contributions

Ruan Guanqiang designed the overall experimental concept and prepared most of the experimental equipment and materials. Tao Hu designed the program and algorithm, performed the experiments, and wrote the vast majority of the manuscript. Chenglin Ding verified the experimental design and analyzed part of the data. Kuo Yang collated some of the diagrams and wrote parts of the manuscript. Kong Fanhao collated part of the experimental data. Cheng Jinrun prepared the site and some materials for the experiment. Yan Rong sorted out part of the experimental data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ruan, G., Hu, T., Ding, C. et al. Fine-grained vehicle recognition under low light conditions using EfficientNet and image enhancement on LiDAR point cloud data. Sci Rep 15, 4691 (2025). https://doi.org/10.1038/s41598-025-89002-3

DOI: https://doi.org/10.1038/s41598-025-89002-3
