Abstract

Resistor networks are crucial in various fields, and solving problems on these is challenging. Existing numerical methods often suffer from limitations in accuracy and computational efficiency. In this paper, a structured zeroing neural network (SZNNCRN) for solving the mathematical model of time-varying cobweb resistance networks is proposed to address these challenges. Firstly, a SZNNCRN model is designed to solve the time-varying Laplacian equation system, which is a mathematical model representing the relationship between voltage and current in a cobweb resistance network. By leveraging the hidden structural attributes of a Laplacian matrix, the study devises optimized algorithms for the neural network models, which markedly improve computational efficiency. Subsequently, theoretical analyses validate the model’s global exponential convergence, while numerical simulation results further corroborate its convergence and accuracy. Finally, the model is applied to calculate the equivalent resistance within a cobweb resistive network and for path planning on cobweb maps.

Similar content being viewed by others

Introduction

Resistor networks have essential applications in various fields of natural science and electronic circuit engineering. People used resistor networks’ reliability, repeatability, and controllability to precisely control the current flow in the system, enabling deep exploration of the electrical and magnetic properties of nanomaterials and devices1. The impact of nonlinear topological circuits on optical and electronic devices was investigated, grounded in the principles of linear topological circuits2. In condensed matter physics, resistor network models were employed to analyze and calculate the influence of defects on the surface of topological insulators, including changes in resistance due to defects3. Andre Geim won the Nobel Prize for pioneering graphene network research4,5. In organic electronics, resistor networks were essential for studying defect regions in organic thin films6. Equivalent circuit models and modeling methods were utilized to analyze and optimize the diffraction patterns of printed circuit board gratings7 and microstrip reflectarray antennas8. Materials science used numerical calculation methods based on resistor network models to research solid oxide fuel cells9. To locate the damage in carbon-reinforced materials (CFRP), resistor network theory was utilized to simulate and calculate resistance changes10. Utilizing an electrical analysis strategy grounded in resistance network principles allows for an in-depth investigation into the positional distortion issues encountered during the localization process of \(\gamma\)-ray imaging systems, enabling effective resolution of these challenges11. Therefore, the study of resistive networks holds significant importance and value.

Calculating potential and equivalent resistance are pivotal issues in resistor networks. Gustav Kirchhoff pioneered research on this topic in 184712. Wu skillfully expressed the resistivity between any two arbitrary nodes in a finite regular lattice network using the sophisticated Laplacian op-erator13. Nevertheless, this approach encounters difficulties with irregular lattices. Consequently, Lzmailian, et al. drawing on the sophisticated properties of the Laplacian matrix with notable insight, successfully adapted this method to impedance networks14. Following their work, many researchers have explored theoretical solution methods for resistor networks, deepening the knowledge within this field15,16,17,18,19,20,21. Despite these advancements, a notable oversight persists in these investigations of resistor networks: the significant fact that resistivity is a time-varying parameter has yet to be adequately addressed.

Neural networks demonstrate powerful capabilities in applications22,23,24,25,26. In particular, a recurrent neural network based on vector error, known as the zeroing neural network (also referred to as the Zhang neural network), proposed by Zhang in 200227, has received extensive application and development. For example, The proposed noise-resistant zeroing neural network significantly improves the accuracy and operational efficiency of the algorithm in noisy environments28,29,30. It has been successfully applied to rehabilitation robotics, achieving a technological breakthrough31,32,33. Discrete-time recurrent neural networks have made significant progress in numerical stability, robustness, and accuracy34,35,36,37, and these breakthroughs have led to successful applications in intelligent human-machine control, signal processing, and image processing38,39,40. Compared to traditional algorithms, zeroing neural network algorithms demonstrate superior ability in solving time-varying problems. This distinctive attribute makes it well-suited for solving dynamic resistor networks. Recent advancements in ZNN have significantly enhanced its robustness and applicability. For example, fuzzy-enhanced robust discrete-time ZNN (DZNN) improves the handling of uncertainties in dynamic systems41, while triple-integral noise-resistant RNN enhances robustness in noisy environments42. Additionally, discrete-time advanced zeroing neurodynamics offers higher precision for complex time-varying problems43. These developments underscore ZNN’s growing relevance in solving real-world challenges. Recently, Gradient neural networks (GNN) have also shown promise in addressing time-varying problems. For instance, Zhang et al. proposed GNN models with robust finite-time convergence for time-varying systems of linear equations44 and time-varying matrix inversion45. These advancements highlight the potential of GNN in dynamic systems. However, we chose ZNN for our study due to its extensive application and alignment with the structural characteristics of cobweb resistor networks.

ZNN’s versatility is evident across diverse fields. It has been applied to multi-robot tracking and formation for precise control46, mobile localization for improved accuracy in dynamic environments47, and inter-robot management for optimizing sensing and measurement48. In Medical IoT, ZNN enhances data processing and device management49, and in portfolio management, it optimizes risk-profit tradeoffs under transaction costs50. These applications highlight ZNN’s effectiveness in addressing complex, time-varying problems. Based on the unique structural features of cobweb resistance networks, this paper develops a structured zeroing neural network algorithm for efficiently solving dynamic cobweb resistance network problems. The main contributions of this paper are summarized as follows:

-

1.

This paper designs the SZNNCRN model for solving the mathematical model of dynamic cobweb resistor networks. This algorithm not only enriches the existing methodology for solving resistor networks but also effectively addresses the dynamic cobweb resistor network solutions, thereby making it closer to practical application scenarios.

-

2.

By deeply exploring the structural properties of the Laplacian matrix, the fast algorithm proposed in this paper significantly saves computational resources and substantially enhances the computational efficiency of SZNNCRN, enabling it to handle larger-scale dynamic cobweb resistor network problems.

-

3.

Comparative experiments fully demonstrate the computational advantages of the SZNNCRN method. Meanwhile, theoretical analysis rigorously proves the convergence of the time-varying SZNNCRN, further validating the reliability and stability of this method.

-

4.

The equivalent resistances obtained using the SZNNCRN method are highly consistent with exact results, which indirectly proves the accuracy of this method. Additionally, this method has been successfully applied to path planning, demonstrating its broad practical value and application prospects.

The structure of this paper is organized as follows: The first section reviews the construction of the cobweb resistor network and designs the dynamic cobweb resistor network. The second section proposes a structured zeroing neural network model applicable to the dynamic cobweb resistance network. The third section develops a fast algorithm for neural unit computation. The fourth section presents theoretical analyses. The fifth section contains numerical simulations. The final section demonstrates two applications of the SZNNCRN model.

Mathematical model and equivalent resistance for cobweb resistance network

Cobweb resistance network

The cobweb resistor network provided by N. Sh. Lzmailian, R. Kenna, and F. Y. Wu is reviewed firstly14.

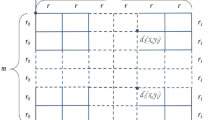

The cobweb grid is a wheel lattice composed of n spokes and m concentric circles, with a total of \(mn+1\) nodes. The resistors in the latitude and longitude directions are denoted as r and s, respectively. Figure 1 shows that the cobweb network presents a cobweb structure with \(m=5\), \(n=8\), radial resistance r, and latitudinal resistance s. To calculate the resistance of the cobweb network, the central node O is chosen as node 0, with the Laplacian potential set to zero.

Derived from Kirchhoff’s Law, a Laplacian matrix equation, i.e., the mathematical model of the cobweb resistor network

is obtained14, where V and \(\mathcal {I}\) are mn-dimensional vectors, i.e.,

\(V_i\) represents the potential of the i-th node and \(\mathcal {I}_i\) represents the current of the i-th node, \(\Delta _{mn}\) is a mn-dimensional matrix,

each element \(c_{i,j} (i, j = 1, 2, \ldots ,mn)\) of matrix \(\Delta _{mn}\) represents the inverse of the resistance value between nodes i and j, \(E_n\) and \(E_m\) are the \(n \times n\) and \(m \times m\) identity matrices, respectively, r and s are real numbers (denoted by \(\mathbb {R}\)), \(L_n^\text {per}\) is the Laplacian operator of a \(1\text {D}\) lattice with periodic boundary condition,

and \(L_m^\text {DN}\) is the \(1\text {D}\) lattice Laplacian operator with Dirichlet-Neumann boundary condition,

r and s represent the longitudinal and latitudinal resistivity, respectively.

As there is no source or sink of current, the current satisfies the following constraint condition

Dynamic cobweb resistance network

Most electrically conductive materials’ resistivity tends to vary with time51. In this paper, a linear model is used to represent this change. According to (1), the mathematical model of the dynamic cobweb resistor network is

where \(r_0\) and \(s_0\) denote the initial longitudinal and latitudinal resistivity, respectively. \(\rho\) represents the rate of change of resistivity over time, while t stands for the time variable.

The mathematical model of dynamic cobweb resistor network is

where

and V(t) represents the time-varying potential.

Equivalent resistance

The equivalent resistance \(R_{\alpha ,\beta }\) between any two nodes \(\alpha\) and \(\beta\) is defined as follows: Connect nodes \(\alpha\) and \(\beta\) to an external battery, with no other nodes connected to an external source. Measure the current \(\mathcal {I}_{\alpha ,\beta }\) flowing through the battery. The potentials at nodes \(\alpha\) and \(\beta\) be \(V_{\alpha }\) and \(V_{\beta }\), respectively. According to Ohm’s law, \(R_{\alpha ,\beta }\) can be calculated

When the center point O is excluded, the resistance \({R^{\text {cob}}}\) \(({r_1},{r_2})\) between arbitrary two points \({r_1} = \left( {{x_1},{y_1}} \right)\) and \({r_2} = \left( {{x_2},{y_2}} \right)\) in the cobweb network is14

where

When one point in the cobweb network is the center point O, and another point is \(P = \left( {x,y} \right)\), the resistance \({R^{\text {cob}}}(O,P)\) is14

where

Structured zeroing neural network for solving time-varying Laplacian linear equation system

First, the vector-valued error function is defined

where \(\Delta _{mn}(t)\) is given in (2), \(V(t) \in \mathbb {R}^{mn}\). If the error function E(t) is zero for all elements, then V(t) becomes the exact solution of Eq. (1).

To ensure \(\frac{dE(t)}{dt}\) tends toward zero, the negative of the gradient is used as the descent direction27,

where the parameter \(\Gamma (t) \in {\mathbb {R}^{mn}}\) is a positive definite matrix used to control the convergence rate and \({{\mathcal {F}} }\left( \cdot \right) :{\mathbb {R}^{mn}} \rightarrow {\mathbb {R}^{mn}}\) is a vector-valued activation function.

To ensure the SZNNCRN model possesses good convergence capabilities, we take \(\Gamma (t) = \gamma {[\Delta _{mn}(t){\Delta _{mn}^T(t)} + \lambda {E_{mn}}]^k}\), which the convergence rate can be controlled by adjusting the design parameters \(\gamma , \lambda , k,\)

where \(E_{mn}\) is a unit matrix of order mn, and the design parameters \(\lambda ,\gamma ,k\) satisfy \(\gamma > 0, \lambda \ge 1,k \ge 1\). The activation function \({{\mathcal {F}}}\left( \cdot \right)\) significantly impacts the performance of a neural network. The following are two widely utilized types of activation functions.

-

1.

Monotonically increasing odd activation function:

-

(a)

Linear activation function (LAF)52

$$\begin{aligned} f({u}) = {u}. \end{aligned}$$ -

(b)

Biploar sigmoid activation function (BSAF)52

$$\begin{aligned} f(u) = \frac{{1 - \exp ( - \zeta u)}}{{1 + \exp ( - \zeta u)}}, \end{aligned}$$where \(\zeta \ge 1.\)

-

(c)

Power sigmoid activation function (PSAF)52

$$\begin{aligned} f(u) = \left\{ \begin{array}{ll} u^p, & \text {if } |u| \ge 1 \\ \frac{{1 + \exp (-q)}}{{1 - \exp (-q)}} \frac{{1 - \exp (-q u)}}{{1 + \exp (-q u)}}, & \text {if } |u| < 1 \end{array} \right. , \end{aligned}$$where \(q > 2,p = 2s + 1,s = 1,2,3 \ldots\)

-

(d)

Smooth power sigmoid function (SPSF)52

$$\begin{aligned} f({u}) = \frac{1}{2}{u} + \frac{{1 + \exp ( - q)}}{{1 - \exp ( - q)}}\frac{{1 - \exp ( - qu)}}{{1 + \exp ( - qu)}}, \end{aligned}$$where \(q > 2,p = 2s + 1,s = 1,2,3 \ldots\)

-

(a)

-

2.

Activation function satisfy the following form: \({{\mathcal {F}}}\left( \cdot \right) = x \cdot \Psi \left( x \right) , \forall x, \Psi \left( x \right) > 0\).

-

(a)

Hyperbolic Tangent (Tanh)53

$$\begin{aligned} f(u) = \tanh (u). \end{aligned}$$ -

(b)

Swish53

$$\begin{aligned} f(u) = {u} \cdot \text {sigmoid}(u). \end{aligned}$$ -

(c)

Gaussian error linear unit (GELU)53

$$\begin{aligned} f(u) = {u} \cdot \Phi (u), \end{aligned}$$where \(\Phi (u)\) is the standard normal cumulative distribution function.

-

(d)

Mish53

$$\begin{aligned} f(u) = u \cdot \tanh (\ln (1 + {e^u})). \end{aligned}$$

-

(a)

Remark 1

The SZNNCRN model is a recurrent neural network, it follows from Eq. (11) that the dynamics of the i-th (with \(i=1,2,\dots , mn)\) neuron can be formulated as

where

where \(V_i\) denotes the i-th entry of the neural state vector V(t) and the i-th neuron of the model (11), \(p_{i,j}\), \(q_{i,j}\), and \(\mu _{i,j}\) represent the i, j-th elements of matrices \(E_{mn}- \Delta _{mn}(t)\), \(\left[ \Delta _{mn}(t) \Delta _{mn}(t)^T + \lambda E_{mn} \right] ^k\), and \(\dot{\Delta _{mn}(t)}V(t)\), respectively. \(\eta _{j,k}\) represents the j, k-th element of vector \(\Delta _{mn}(t) V(t)\). \(b_i\) represents the i-th element of \({{\mathcal {I}}}_{mn}\). \(f(\cdot )\) represents the element of \(\mathcal {F}(\cdot )\), respectively. A schematic diagram of the neural network structure of the SZNNCRN model (11) is illustrated in Fig. 2.

Fast algorithm for SZNNCRN

Fast algorithms play a crucial role in computer science. The notable advantage is their ability to significantly enhance time efficiency, reduce the occupation of computational resources, and effectively address the requirements of large-scale data processing. This section aims to thoroughly explore and fully exploit the unique structural properties of \(\Delta _{mn}(t)\) to design an efficient algorithm tailored for SZNNCRN.

Fast algorithm for \(\Delta _{mn}(t)V(t)\)

Given the matrix \(\Delta _{mn}(t)\) and a time-varying vector V(t), for a moment \(v = V (t^*) = [V_1(t),V_2(t),\dots ,V_{mn}(t)]^T\), to compute the product \(\Delta _{mn}(t)v\), \((L_n^\text {per} \otimes {E_m})v\) and \((L_m^\text {DN} \otimes {E_n})v\) can be computed separately. \(L_n^\text {per} \otimes {E_m}\) is a circulant matrix determined by the elements of the first row. \({E_n} \otimes L_m^\text {DN}\) is a block diagonal matrix. Relying on the structure of these matrices, inspired by fast algorithms for specially structured matrices54,55,56,57,58,59,60,61,62,63,64, efficient methods for computing the matrix-vector multiplication will subsequently be presented. \(L_n^\text {per} \otimes {E_m}\) is generated by circularly shifting the first column c, where c is of the form

The efficient method for computing \((L_n^\text {per} \otimes {E_m})v\) is presented in the following algorithm.

The product \(({E_n} \otimes L_m^\text {DN})v\) is essentially the independent product of n sub-blocks \(L_m^\text {DN}\) with the vector \(\xi _i\),

where \({\xi _i} = {[{v_{(i-1)m + 1}},{v_{(i-1)m + 2}},...,{v_{(i-1)m + m}}]}, \quad i = 1,2, \ldots , n.\)

Therefore, if \(z_i=L_m^\text {DN}\xi _i\) is computed, \(({E_n} \otimes L_m^\text {DN})v\) can be obtained by sequentially combining the n \(z_i\)’s.

Based on Algorithms 1 and 2, and euqation

the efficient computation of \(\Delta _{mn}V(t)\) is achieved. The calculation of \(\mathop {{\Delta _{mn}}(t)}\limits ^ \bullet V(t)\) requires first computing the derivative of \(\Delta _{mn}\), while the remaining steps are consistent with the computation of \(\Delta _{mn}V(t)\).

Fast algorithm for \({\left[ {\Delta _{mn}(t){\Delta _{mn}^T(t)} + \lambda {E_{mn}}} \right] ^k}{{\mathcal {F}}}\left( \cdot \right)\)

Given the matrix \({\left[ {\Delta _{mn}(t){\Delta _{mn}^T(t)} + \lambda {E_{mn}}} \right] ^k}\) and activated error vector \({{\mathcal {F}}}\left( \cdot \right)\) such that \({{\mathcal {F}}}\left( \cdot \right) = {\left[ {{f_1}\left( \cdot \right) ,{f_2}\left( \cdot \right) , \cdots {f_{mn}}\left( \cdot \right) } \right] ^T}\). Based on the hidden structural characteristics of the matrix, a fast algorithm for computing \({\left[ {\Delta _{mn}(t){\Delta _{mn}^T(t)} + \lambda {E_{mn}}} \right] ^k}{{\mathcal {F}}}\left( \cdot \right)\) will be designed as follows.

The Laplacian matrix \(\Delta _{mn}(t)\) is similar to a diagonal matrix by the transition matrix,

where \({F_n} = \left( {{f_{j,k}}} \right) _{j,k = 1}^n\) is the fast Fourier transform (FFT) matrix,

\(S_m^{VI}\) is type VI discrete sine transform(DST-VI),

the matrices \(L_n^{\text {per}}\) and \(L_m^{\text {DN}}\) are similar to the diagonal matrices \({\Lambda _n}\) and \({\Lambda _m}\), respectively,

the matrices \(\Delta _{mn}(t)\) is similar to the diagonal matrice \({\Lambda _{mn}}\),

Based on Eq. (10), \(\Delta _{mn}(t)\) can expressed as

Then, the k-th power of the matrix \(\Delta _{mn}(t){\Delta ^T _{mn}(t)} + \lambda {E_{mn}}\) can be obtained

where \(\mathrm{M (t)} = {\left( {\Lambda _{mn}^2(t) + \lambda {E_{mn}}} \right) }\) is a diagonal matrix,

To calculate matrix-vector multiplication \({\left[ {\Delta _{mn}(t){\Delta ^T _{mn}(t)} + \lambda {E_{mn}}} \right] ^k}{{\mathcal {F}}}\left( \cdot \right)\), \({\delta } = \left( {{E_{mn}} \otimes {{\left( {S_m^{VI}} \right) }^{ - 1}}} \right) {{\mathcal {F}}}\left( \cdot \right)\) is first calculated, i.e.

where

when \({\delta }_j={{{\left( {S_m^{VI}} \right) }^{ - 1}}{\chi _j}}\) is computed, \(\left( {{E_n} \otimes {{\left( {S_m^{VI}} \right) }^{ - 1}}} \right) {{\mathcal {F}}}\left( \cdot \right)\) can be obtained by sequentially combing the n’s. It is worth noting that \({{{\left( {S_m^{VI}} \right) }^{ - 1}}{\chi _j}}\) can be efficiently computed by IDST-VI.

Based on the decomposition of the matrix \(\Delta _{mn} (t)\), perform the calculations from right to left sequentially. \(b = \left( {F_n^{ - 1} \otimes {I_m}} \right) \delta\) will be quick calculation by m-times \(\text {IFFT}({\vartheta }_j)\), where

Then, the product of matrix \(\mathrm M^k(t)\) and vector b is the element-wise vector-vector multiplication.

Similarly, following the same vector decomposition method of (12) and (13), by sequentially executing DST-VI and FFT, and arranging the obtained vectors in order, a fast algorithm for \({\left[ {\Delta _{mn}(t){\Delta ^T _{mn}(t)} + \lambda {E_{mn}}} \right] ^k}{{\mathcal {F}}}\left( \cdot \right)\) is achieved.

Comparison of computational efficiency

Since the complexities of Algorithm 1 and Algorithm 2 can be done in \(O(N\text {log}_2N)\) and O(N) operations, respectively, where \(N = mn\)65, the matrix-vector multiplication module \(\Delta _{mn}v\) can be obtaioned in \(O(N\text {log}_2N)\), which is lower than \(O(N^2)\) the direct computation in MATLAB. Algorithm 3 based on FFT, DST-VI, IFFT, and IDST-VI66 has a complexity of \(O(N\text {log}_2N)\), while the complexity of direct computation is \(O(N^3)\). Hence, the right of implicit dynamical Eq. (11) is performed efficiently in \(O(N\text {log}_2N)\) operations instead of \(O(N^3)\) in original MATLAB computation. The SZNNCRN solver reduces computational complexity and saves storage space, thereby enabling the implementation of large-scale resistive network computations.

Numerical simulations were conducted on resistor networks of different scales using the SZNNCRN and IMRNN52 (i.e. Eq. (11)) methods, respectively. The same design parameters were employed for both models: \(\gamma = 3, \lambda = 2\), \(k = 2\), a linear activation function was selected, and the simulation duration was set to \(t = 0.3\) seconds, when the model has converged. Resistor networks of different sizes were calculated, in which the longitudinal resistivity is \(r(t) = 1 + 0.004 t\) and the longitudinal resistivity is \(s(t) = 1 + 0.004 t\). Figure 3 presents a comparative analysis of the runtime performance between IMRNN and SZNNCRN, depicted as a line graph. Under identical hardware configurations, parameter settings, and other operational conditions, the computational efficiency of SZNNCRN surpasses that of IMRNN by approximately \(400-3000\) orders of magnitude. Furthermore, as the network size escalates, the advantage of SZNNCRN in terms of computational speed becomes increasingly pronounced.

Figure 4 compares the computational speed of the SZNNCRN method against the ZNN27 and SZDN67 methods, which are commonly used for solving general linear systems of equations. The detailed parameter configurations are provided in Table 1, where t represents the time parameter after the model reaches a steady state. In the experiments, various sizes of resistor networks were considered for computation, and the runtime comparisons among different methods across different scales were presented in the form of line charts. Analysis of the charts reveals the following: For resistor networks of various sizes, the SZNNCRN method consistently demonstrates superior computational speed compared to the ZNN and SZDN methods. As the scale of computation increases, the advantage of the SZNNCRN method in computational efficiency becomes increasingly pronounced.

Tables 2 and 3 present the CPU runtime required for solving dynamic cobweb resistor networks of various sizes using different methods. Through analysis of the information in the table, the following conclusions can be drawn:

-

1.

The runtime of the SZNNCEN method consistently outperforms other methods, demonstrating higher computational efficiency.

-

2.

As the computational scale gradually increases, the computational efficiency of the SZNNCRN method becomes increasingly prominent.

-

3.

Under the same computational resources and time constraints, the SZNNCRN method is capable of effectively handling larger-scale dynamic cobweb resistor network models, indicating its robust capability in dealing with complex problems.

Convergence analysis

Theorem 1

For a lattice \({\Delta _{mn} (t)} \in {\mathbb {R}^{mn}}\) of the resistive network and the current vector \(\mathcal {I} \in {\mathbb {R}^{mn}}\), if a monotonically increasing odd activation function \({{\mathcal {F}}}\left( \cdot \right)\) is used, then the state vector V(t) of the SZNNCRN, starting from any randomly generated initial state V(0), always globally converges to the theoretical solution \({V^*} (t) = \Delta _{mn}^{ - 1}(t){{\mathcal {I}} }\) of Eq. (11).

Proof

Given a dynamic cobweb lattice \({\Delta _{mn}(t)} \in {\mathbb {R}^{mn}}\) and current vector \({{\mathcal {I}}} \in {\mathbb {R}^{mn}}\), let \(E(t) = V(t) - {V^*}(t) = V(t) - \Delta _{mn}^{ - 1}(t){{\mathcal {I}}}\) represents the error between the state V(t) and theoretical solution \({V^*}(t)\). As E(t) approaches zero, the state vector V(t) can infinitely approach the theoretical solution \({V^*}(t)\). Moreover,

In connection with Eq. (11), can obtain

Define the following Lyapunov candidate function L(E(t), t)

then

It is evident that, under the condition of \(E(t) \ne 0\), the time derivative of the function L(E(t), t) with respect to t can be derived

Observe that the inequality

holds when matrix A is a symmetric semi-definite and matrix B is a symmetric, where \(\lambda _{\min }(A)\) represents the minimum eigenvalue of the matrix \(A\), and \(\lambda _{\max }(B)\) represents the maximum eigenvalue of the matrix \(B\).

\({\Delta ^T _{mn}(t)}\) and \({\Delta _{mn}(t)}\) have the same eigenvalues, thus, it be can conclude that

where \(\alpha (t) = {\lambda _{\min }}({\Delta _{mn}(t)})\).

Let \(f( \cdot )\) be the elemental function of the monotonically increasing odd activation function \({{\mathcal {F}}}\left( \cdot \right)\). Hence, \(f( \cdot )\) satisfies the following conditions

Given that \(e( \cdot )\) is the elemental function of the error function \(E\left( \cdot \right)\), it can deduce that

In conclusion

Taking into account the inequality above and Lyapunov’s stability theorem, it can ascertained that for any arbitrary initial point V(0), E(t) globally converges to zero.

Specifically, when the activation function \({{\mathcal {F}}}\left( \cdot \right)\) is a Linear activation function (LAF), Eq. (14) becomes

Upon analyzing the aforementioned differential inequality

It can be inferred that

When the SZNNCRN model utilizes a linear activation function, its convergence rate is

\(\square\)

Theorem 2

For a lattice \({\Delta _{mn}(t)} \in {\mathbb {R}^{mn}}\) of the resistive network and the current vector \(\mathcal {I} \in {\mathbb {R}^{mn}}\), if a activation function \({{\mathcal {F}}}\left( \cdot \right) = x \cdot \psi \left( x \right) , \forall x, \Psi \left( x \right) > 0\) is used, then the state vector V(t) of the SZNNCRN, starting from any randomly generated initial state V(0), always globally converges to the theoretical solution \({V^*}(t) = \Delta _{mn}^{ - 1}(t){{\mathcal {I}}}\) of Eq. (11).

Proof

Let \(f( \cdot )\) be the elemental function of the activation function \({{\mathcal {F}}}\left( \cdot \right)\), which takes the form \({f}\left( \cdot \right) = x \cdot \varphi \left( x \right)\), where \(\varphi \left( x \right) > 0\) and \(\varphi \left( x \right)\) is the elemental function of \(\Psi \left( x \right)\). Refer to Eq. (15), the following is obtained,

It can be deduced that

Given the inequality above and Lyapunov’s stability theorem, it can ascertain that for any arbitrary initial point V(0), E(t) globally converges to zero. \(\square\)

Numerical examples

In this segment, Utilizing Eq. (11), Algorithms 1, 2, and 3, the proposed SZNNCRN model is numerically simulated. These simulations further corroborated the model’s superior convergence performance, assisting in selecting optimal parameters.

Figure 5 depicts the temporal evolution of the potential V(t) in a \(2 \times 3\) resistor network with different activation functions and the rate of change of resistivity \(\rho\), the six curves shown represent the elements of V(t). The network’s design parameters are \(\gamma =10\), \(\lambda =3\), and \(k=2\). The figure illustrates the evolution of the electric potential V(t) over time under various conditions. Specifically, when different activation functions are employed, the curves depicting the change in electric potential over time exhibit consistency. However, when \(\rho\) varies, the corresponding curves of the electric potential’s temporal evolution undergo noticeable alterations.

Figure 6 simulates the temporal evolution of the error function \({\left\| {{\Delta _{mn}(t)}V(t) - \mathcal {I}} \right\| _2}\) in a \(3 \times 3\) resistor network with resistors of values \(r=1+0.004t\) and \(s=100(1+0.004t)\). The network’s design parameters are \(\gamma =10\), \(\lambda =3\), and \(k=2\). The network is initialized using an initial potential V(0), and a spectrum of activation functions is utilized. The figure illustrates the convergence of the error under these different activation functions. Both types of activation functions are capable of reducing the error to zero. Under the parameter conditions depicted in Fig. 6, the convergence rates of SPSF, PSAF, BSAF, and LAF are observed to surpass those of the other activation functions evaluated.

Figure 7a, b present analysis of a \(3 \times 3\) resistor network, which employs resistors with values \(r=1+0.004t\) and \(s=100(1+0.004t)\) and utilizes the Linear activation function (LAF). These figures depict the effects of varying design parameters \(\gamma , \lambda ,\) and k on the convergence performance of the model. The other parameters of the subgraph are designed as follows: In Fig. 7a \(\lambda = 3, k = 2\), In Fig. 7b \(\gamma = 10, k = 2\), In Fig. 7c \(\gamma = 3, \lambda = 5\). The analysis reveals that, given the limitations of the hardware, an increase in these design parameters leads to an enhancement in the convergence speed. It should be noted that while the convergence rate is affected by the selection of design parameters, the final convergence outcome remains consistent.

Figure 8 simulates the time evolution of the error function \({\left\| {{\Delta _{mn}(t)}V(t) - \mathcal {I}} \right\| _2}\) under different initial states in a \(3 \times 3\) resistor network with resistors of values \(r=1+0.004t\) and \(s=100(1+0.004t)\). The design parameters of the network are \(\gamma =10\), \(\lambda =3\) and \(k=2\). The figure illustrates the convergence of the error for different initial states. From any initial state, the error converges to zero, indicating that the convergence of the model is not affected by the initial state.

Applications

Solving for equivalent resistance

The numerical method SZNNCRN is applied to solve the Laplacian matrix Eq. (1) to precisely determine the potential distribution \(V^*\). Additionally, building upon formula (6), the method is further applied to calculate the equivalent resistance between any two points in the cobweb resistance network. Hence, the SZNNCRN technique is efficient in calculating the potential distribution and extends its applicability to the determination of the equivalent resistance value.

Without loss of generality, the activation function in the SZNNCRN model uses a linear activation function (LAF) with the design parameters taken as \(\lambda = 3, k = 5,\gamma = 10\), respectively. Table 4 compares the equivalent resistance obtained by the exact formula (7) and SZNNCRN (11) method. It is showed that the solution results of SZNNCRN methd are consistent with the results obtained from the exact formulas.

The SZNNCRN method offers a practical and feasible approach for directly calculating the equivalent resistance of cobweb resistor networks. In contrast to exact formulas, this method efficiently circumvents intricate numerical derivation steps, yielding precise numerical solutions. Moreover, it can determine the numerical equivalent resistance for specific networks where an exact solution is unavailable.

An application in path planning

Path planning68,69,70 is a major research focus of artificial intelligence and robotics71,72,73. In practical applications, path planning often conducts with specialized environments74,75,76,77, which brings new challenges for the process. This aection performs preliminary path planning on the cobweb maps.

The cobweb resistor network exhibits distinctive structural and nodal distribution features. Within this network, the entry and exit points of the current align with the nodes possessing the highest and lowest potentials, respectively. The potential experiences an irregular natural potential descent from regions of high potential to areas of low potential. This characteristic is harnessed for path planning: utilizing the potential solution results obtained from the SZNNCRN model, the potential gradient can be computed. This computation identifies the direction in which the potential decreases most rapidly. Subsequently, this direction is employed to determine the optimal path. The starting point corresponds to the node with the highest potential, while the endpoint corresponds to the node with the lowest potential. In the presence of obstacles, they are mapped onto the potential distribution view. The potential value corresponding to the location of the obstacle is set to a significantly high value to avoid traversal.

Leveraging the principles of potential distribution and gradient descent for path generation constitutes the core concept of this application study on path planning. First, based on the observed cobweb environment with obstacles, a grid-based discretization approach was applied, accurately positioning the obstacles at the network nodes. Secondly, neglecting the existence of obstacles, a resistor network model precisely corresponding to the shape of the physical grid was constructed. Next, utilizing the SZNNCRN method, the potential values at each node within the resistor network were calculated. Subsequently, a detailed distribution map of the potentials at all nodes is constructed, visually representing their spatial distribution characteristics across the network. Then, to account for the obstacles’ impact, weighted potential processing was implemented for the nodes corresponding to the obstacles. This resulted in a weighted potential distribution map that better reflected the realities of the environment. Subsequently, based on this weighted distribution map, the gradient descent algorithm was employed to computationally determine the optimal path for robot path planning within this complex environment. Finally, the optimal path computed was mapped onto a cobweb-like environmental map incorporating obstacles, enabling efficient navigation for robots and related mobile entities within complex environments. The specific steps and flowchart of the pathfinding algorithm are presented in Algorithm 4.

In Fig. 9, a \(30\times 16\) cobweb resistance network with \(r = s = 1\), the current is directed towards \(d_1(19,13)\) and the output is \(d_2(5,4)\), adding arbitrary obstacles, as an initial environmental map. Based on the above Algorithm 4 and Flowchart, the potential V is calculated, and the potential distribution is shown in Fig. 10. In Fig. 11, the robot begins at the starting point and follows the path corresponding to the steepest potential descent within the graph, aiming to reach the endpoint eventually. The path planning for this network map is depicted in Fig. 12.

Conclusions

The present study designs a structural zeroing neural network algorithm for solving the Laplacian system of cobweb resistor networks. The algorithm ingeniously harnesses the unique structural properties of the Laplacian matrix, developing an efficient computational method for neurons that is specifically attuned to such problems. Comparative analysis with existing algorithms highlights the significant speed advantage of the proposed method. Rigorous theoretical analysis demonstrates the convergence of the method, and numerical experiments further validate the accuracy of the theoretical findings. Building on this foundation, the method is applied to calculate the equivalent resistance between any two points within the cobweb resistor network. It is creatly extended to robot path planning in specialized environments. However, due to hardware limitations, our method currently lacks the practical computational capacity for ultra-large-scale resistance networks. Additionally, while our approach is specifically tailored for the spider web resistance network, it is not directly applicable to other types of resistance networks. In the future, we will continue to explore other fast algorithms for resistive networks.

Data availability

All data generated or analysed during this study are included in this article.

References

Willke, P., Kotzott, T., Pruschke, T. & Wenderoth, M. Magnetotransport on the nano scale. Nat. Commun. 8, 15283 (2017).

Hadad, Y., Soric, J., Khanikaev, A. B. & Alu, A. Magnetotransport on the nano scale. Nat. Electron. 1, 178–182 (2018).

Lupke, F. et al. Electrical resistance of individual defects at a topological insulator surface. Nat. Commun. 8, 15704 (2017).

Novoselov, K. S. et al. Electric field effect in atomically thin carbon films. Science 306, 666–669 (2004).

Novoselov, K. S. et al. Two-dimensional gas of massless Dirac fermions in graphene. Nature 438, 197–200 (2005).

Darwish, M., Boysan, H., Liewald, C., Nickel, B. & Gagliardi, A. A resistor network simulation model for laser-scanning photo-current microscopy to quantify low conductance regions in organic thin films. Organ. Electron. 62, 474–480 (2018).

Xu, G., Eleftheriades, G. V. & Hum, S. V. Analysis and design of general printed circuit board metagratings with an equivalent circuit model approach. IEEE Trans. Antennas Propag. 69, 4657–4669 (2021).

Hum, S. V. & Du, B. Equivalent circuit modeling for reflect arrays using Floquet modal expansion. IEEE Trans. Antennas Propag. 65, 1131–1140 (2017).

Rhazaoui, K., Cai, Q., Adjiman, C. S. & Brandon, N. P. Towards the \(3\)D modeling of the effective conductivity of solid oxide fuel cell electrodes: I. Model development. Chem. Eng. Sci. 99, 161–170 (2013).

Zhang, D. et al. Impact damage localization and mode identification of CFRPS panels using an electric resistance change method. Compos. Struct. 276, 114587 (2021).

Jeon, S. J., Lee, J. S., Kim, Y. J. & Choi, Y. W. Electrical analysis method for \(\gamma\)-ray imaging system based on resistive network readout. IEEE Trans. Nucl. Sci. 68, 2392–2399 (2021).

Kirchhoff, G. Uber die auflösung der gleichungen, auf welche man bei der untersuchung der linearen verteilung galvanischer ströme geführt wird. Ann. Phys. 148, 497–508 (1847).

Wu, F. Y. The Potts model on a Cayley tree. J. Phys. A Math. Gen. 37, 6653 (2004).

Izmailian, N. S., Kenna, R. & Wu, F. Y. The two-point resistance of a resistor network: A new formulation and application to the cobweb network. J. Phys. A Math. Theor. 3, 1751–8113 (2014).

Zhou, Y., Zheng, Y., Jiang, X. & Jiang, Z. Fast algorithm and new potential formula represented by Chebyshev polynomials for an \(m \times n\) globe network. Sci. Rep. 12, 21260 (2022).

Jiang, Z., Zhou, Y., Jiang, X. & Zheng, Y. Analytical potential formulae and fast algorithm for a horn torus resistor network. Phys. Rev. E 107, 044123 (2023).

Zhou, Y., Jiang, X., Zheng, Y. & Jiang, Z. Exact novel formulas and fast algorithm of potential for a hammock resistor network. AIP Adv. 13, 095127 (2023).

Zhao, W., Zheng, Y., Jiang, X. & Jiang, Z. Two optimized novel potential formulas and numerical algorithms for \(m \times n\) cobweb and fan resistor networks. Sci. Rep. 23, 12417 (2023).

Jiang, X., Zhang, G., Zheng, Y. & Jiang, Z. Explicit potential function and fast algorithm for computing potentials in \(\alpha \times \beta\) conic surface resistor network. Expert Syst. Appl. 238, 122157 (2024).

Chair, N. Trigonometrical sums connected with the chiral Potts model, Verlinde dimension formula, two-dimensional resistor network, and number theory. Ann. Phys. 341, 56–76 (2014).

Chair, N. The effective resistance of the \(n\)-cycle graph with four nearest neighbors. J. Stat. Phys. 154, 1177–1190 (2014).

Bapst, V. et al. Unveiling the predictive power of static structure in glassy systems. J. Stat. Phys. 16, 448–454 (2020).

Wei, P., Wang, X. & Wei, Y. Neural network models for time-varying tensor complementarity problems. Neurocomputing 523, 18–32 (2023).

Wang, X., Che, M. & Wei, Y. Neural networks based approach solving multi-linear systems with \(m\)-tensors. Neurocomputing 351, 33–42 (2019).

Gao, Z. et al. Non-intrusive reduced order modeling of convection dominated flows using artificial neural networks with application to Rayleigh-Taylor instability. Comput. Phys. 30, 97–123 (2021).

Wei, X., Don, W., Gao, Z. & Hesthaven, J. An edge detector based on artificial neural network with application to hybrid compact-Weno finite difference scheme. J. Sci. Comput. 83, 1–21 (2020).

Zhang, Y., Jiang, D., Wang, J. & Hesthaven, J. A recurrent neural network for solving Sylvester equation with time-varying coefficients. IEEE Trans. Neural Netw. 13, 1053–1063 (2002).

Sun, Z., Li, F., Zhang, B., Sun, Y. & Jin, L. Different modified zeroing neural dynamics with inherent tolerance to noises for time-varying reciprocal problems: A control-theoretic approach. Neurocomputing 337, 165–179 (2019).

Sun, Z., Li, F., Zhang, B., Sun, Y. & Jin, L. Different modified zeroing neural dynamics with inherent tolerance to noises for time-varying reciprocal problems: A control-theoretic approach. Neurocomputing 337, 165–179 (2019).

Sun, Z. et al. Noise-suppressing zeroing neural network for online solving time-varying nonlinear optimization problem: A control-based approach. Neural Comput. Appl. 32, 11505–11520 (2020).

Lian, Y. et al. Neural dynamics for cooperative motion control of omnidirectional mobile manipulators in the presence of noises: A distributed approach. IEEE/CAAJ. Autom. Sin. 11, 1–16 (2024).

Sun, Z., Tang, S., Jin, L., Zhang, J. & Yu, J. Nonconvex activation noise-suppressing neural network for time-varying quadratic programming: Application to omnidirectional mobile manipulator. Neural Comput. Appl. 19, 10786–10798 (2023).

Sun, Z., Tang, S., Zhang, J. & Yu, J. Nonconvex noise-tolerant neural model for repetitive motion of omnidirectional mobile manipulators. IEEE/CAA J. Autom. Sin. 10, 1766–1768 (2024).

Shi, Y., Jin, L., Li, S., Qiang, J. & Gerontitis, D. K. Novel discrete-time recurrent neural networks handling discrete-form time-variant multi-augmented Sylvester matrix problems and manipulator application. IEEE Trans. Neural Netw. Learn. Syst. 33, 587–599 (2020).

Shi, Y. & Zhang, Y. New discrete-time models of zeroing neural network solving systems of time-variant linear and nonlinear inequalities. IEEE Trans. Syst. Man Cybern. Syst. 50, 565–576 (2017).

Shi, Y., Jin, L., Li, S. & Qiang, J. Proposing, developing and verification of a novel discrete-time zeroing neural network for solving future augmented Sylvester matrix equation. J. Franklin Inst. 357, 3636–3655 (2020).

Jin, L. & Zhang, Y. Discrete-time Zhang neural network of \(O(\tau ^3)\) pattern for time-varying matrix pseudoinversion with application to manipulator motion generation. Neurocomputing 142, 165–173 (2014).

Shi, Y. et al. Real-time tracking control and efficiency analyses for Stewart platform based on discrete-time recurrent neural network. IEEE Trans. Syst. 54, 5099–5111 (2024).

Jin, L., Du, W., Ma, D., Jiao, L. & Li, S. Pseudoinverse-free recurrent neural dynamics for time-dependent system of linear equations with constraints on variable and its derivatives. IEEE Trans. Syst. Man Cybern. Syst. 54, 4966–4975 (2024).

Jin, L., Li, Y., Zhang, X. & Luo, X. Fuzzy \(k\)-winner-take-all network for competitive coordination in multirobot systems. IEEE/CAA J. Autom. Sin. 32, 2005–2016 (2023).

Qiu, B., Guo, J., Mao, M. & Tan, N. A fuzzy-enhanced robust DZNN model for future multi-constrained nonlinear optimization with robotic manipulator control. In IEEE Transactions on Fuzzy Systems (2023).

Yu, H., Cheng, G. & Qiu, B. A triple-integral noise-resistant RNN for time-dependent constrained nonlinear optimization applied to manipulator control. In IEEE Transactions on Industrial Electronics (2024).

Qiu, B., Guo, J., Li, X., Zhang, Z. & Zhang, Y. Discrete-time advanced zeroing neurodynamic algorithm applied to future equality-constrained nonlinear optimization with various noises. IEEE Trans. Cybern. 52, 3539–3552 (2020).

Zhang, Y., Liao, B. & Geng, G. GNN model with robust finite-time convergence for time-varying systems of linear equations. IEEE Trans. Syst. Man Cybern. Syst. 54, 4786–4797 (2024).

Zhang, Y., Li, S., Weng, J. & Liao, B. GNN model for time-varying matrix inversion with robust finite-time convergence. IEEE Trans. Neural Netw. Learn. Syst. 35, 559–569 (2024).

Li, X. et al. A varying-parameter complementary neural network for multi-robot tracking and formation via model predictive control. Neurocomputing 609, 128384 (2024).

Tang, Z. & Zhang, Y. Continuous and discrete gradient-Zhang neuronet (GZN) with analyses for time-variant overdetermined linear equation system solving as well as mobile localization applications. Neurocomputing 561, 126883 (2023).

Liao, B., Cheng, H., Qian, X., Cao, X. & Li, S. Inter-robot management via neighboring robot sensing and measurement using a zeroing neural dynamics approach. Expert Syst. Appl. 244, 122938 (2024).

Jin, J., Wu, M., Ouyang, Y., Li, K., & Chen, C. A novel dynamic hill cipher and its applications on medical IoT. IEEE Internet Things J. (2025).

Cao, X. et al. Decomposition based neural dynamics for portfolio management with tradeoffs of risks and profits under transaction costs. Neural Netw. 10, 107090 (2024).

Greiner, W. Classical Electrodynamics. (Springer, 2012).

Wu, W. & Zheng, B. Improved recurrent neural networks for solving Moore-Penrose inverse of real-time full-rank matrix. Neurocomputing 418, 221–231 (2020).

Jentzen, A., Kuckuck, B. & Wurstemberger, P. V. Mathematical introduction to deep learning: Methods, implementations, and theory. arXiv preprint[SPACE]arXiv:2310.20360 (2023).

Fu, Y., Jiang, X., Jiang, Z. & Jhang, S. Properties of a class of perturbed Toeplitz periodic tridiagonal matrices. Comput. Appl. Math. 39, 1–19 (2020).

Fu, Y., Jiang, X., Jiang, Z. & Jhang, S. Inverses and eigenpairs of tridiagonal Toeplitz matrix with opposite-bordered rows. J. Appl. Anal. Comput 10, 1599–1613 (2020).

Wang, J., Zheng, Y. & Jiang, Z. Norm equalities and inequalities for tridiagonal perturbed Toeplitz operator matrices. Anal. Comput. 13, 671–683 (2023).

Wei, Y., Jiang, X., Jiang, Z. & Shon, S. On inverses and eigenpairs of periodic tridiagonal Toeplitz matrices with perturbed corners. J. Appl. Anal. Comput 10, 178–191 (2020).

Wei, Y., Zheng, Y., Jiang, Z. & Shon, S. The inverses and eigenpairs of tridiagonal Toeplitz matrices with perturbed rows. J. Appl. Math. Comput. 68, 1–14 (2022).

Wei, Y., Zheng, Y., Jiang, Z. & Shon, S. A study of determinants and inverses for periodic tridiagonal Toeplitz matrices with perturbed corners involving mersenne numbers. Mathematics 7, 893 (2019).

Wei, Y., Jiang, X., Jiang, Z. & Shon, S. Determinants and inverses of perturbed periodic tridiagonal Toeplitz matrices. Adv. Differ. Equ. 1–11 (2019).

Jiang, Z., Wang, W., Zheng, Y., Zuo, B. & Niu, B. Interesting explicit expressions of determinants and inverse matrices for Foeplitz and Foeplitz matrices. Mathematics 7, 939 (2019).

Meng, Q., Zheng, Y. & Jiang, Z. Exact determinants and inverses of \((2,3,3)\)-Loeplitz and \((2,3,3)\)-Foeplitz matrices. Comput. Appl. Math. 41, 1 (2022).

Meng, Q., Zheng, Y. & Jiang, Z. Determinants and inverses of weighted Loeplitz and weighted Foeplitz matrices and their applications in data encryption. J. Appl. Math. Comput. 68, 3999–4015 (2022).

Meng, Q., Zheng, Y. & Jiang, Z. Interesting determinants and inverses of skew Loeplitz and Foeplitz matrices. J. Appl. Anal. Comput. 11, 2947–2958 (2021).

Zhang, X., Zheng, Y., Jiang, Z. & Byun, H. Fast algorithms for perturbed Toeplitz-plus-Hankel system based on discrete cosine transform and their applications. Jpn. J. Indus. Appl. Math. 41, 567–583 (2024).

Pei, S. C. & Ye, M. H. The discrete fractional cosine and sine transforms. IEEE Trans. Signal Process. 49, 1198–1207 (2001).

Jin, L., Liufu, L., Lu, H. & Zhang, Z. Saturation-allowed neural dynamics applied to perturbed time-dependent system of linear equations and robots. IEEE Trans. Indus. Electron. 68, 9844–9854 (2020).

Zhu, Z., Yin, Y. & Lyu, H. Automatic collision avoidance algorithm based on route-plan-guided artificial potential field method. Ocean Eng. 271, 113737 (2023).

Pan, Z., Zhang, C., Xia, Y., Xiong, H. & Shao, X. An improved artificial potential field method for path planning and formation control of the multi-UAV systems. IEEE Trans. Circuits Syst. II Exp. Briefs 69, 1129–1133 (2021).

Ji, Y. et al. Tripfield: A \(3\)D potential field model and its applications to local path planning of autonomous vehicles. IEEE Trans. Intell. Transport. Syst. 24, 3541–3554 (2023).

Liu, L., Xu, W., Yang, X., Liu, H. & Wang, P. Path planning techniques for mobile robots: Review and prospect. Expert Syst. Appl. 227, 120254 (2023).

Patle, B. K., Pandey, A., Parhi, D. R. K. & Jagadeesh, A. J. D. T. A review: On path planning strategies for navigation of mobile robot. Defence Technol. 15, 582–606 (2019).

Yang, L. et al. Path planning technique for mobile robots: A review. Machines 11, 980 (2023).

Aybars, U. & \(\check{{\rm G}}\), U. R. Path planning on a cuboid using genetic algorithms. Inf. Sci.178, 3275–3287 (2008).

Fu, Z., Zhao, Y. Z., Qian, Z. Y. & Cao, Q. X. Wall-climbing robot path planning for testing cylindrical oilcan weld based on Voronoi diagram. In 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems . 2749–2753 (IEEE, 2006).

Mazaheri, H., Goli, S. & Nourollah, A. Path planning in three-dimensional space based on butterfly optimization algorithm. Sci. Rep. 14, 2332 (2024).

Kulathunga, G. A reinforcement learning based path planning approach in \(3\)D environment. Proc. Comput. Sci. 212, 152–160 (2022).

Acknowledgements

The research was Supported by the National Natural Science Foundation of China (Grant No. 12101284), the Natural Science Foundation of Shandong Province (Grant No. ZR2022MA092), and the Department of Education of Shandong Province (Grant No. 2023KJ214).

Author information

Authors and Affiliations

Contributions

Xiao-Yu, Jiang and Yan-Peng, Zheng conceived the project, performed and analyzed formulae calculations. De-Xin, Meng Validated the correctness of the calculations. Zhao-Lin, Jiang Presented a fast algorithm. All authors contributed equally to the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jiang, X., Meng, D., Zheng, Y. et al. A dynamic cobweb resistance network solution based on a structured zeroing neural network and its applications. Sci Rep 15, 5222 (2025). https://doi.org/10.1038/s41598-025-89471-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-89471-6

This article is cited by

-

Research on the Equivalent Resistance Between the Arbitrary-Stage Nodes in the 2 × 6 × m Cascaded Cobweb Resistor Network

Circuits, Systems, and Signal Processing (2025)