Abstract

The accurate diagnosis of retinal diseases, such as Diabetic Macular Edema (DME) and Age-related Macular Degeneration (AMD), is essential for preventing vision loss. Optical Coherence Tomography (OCT) imaging plays a crucial role in identifying these conditions, especially given the increasing prevalence of AMD. This study introduces a novel Reinforcement-Based Leveraging Transfer Learning (RBLTL) framework, which integrates reinforcement Q-learning with transfer learning using pre-trained models, including InceptionV3, DenseNet201, and InceptionResNetV2. The RBLTL framework dynamically optimizes hyperparameters, improving classification accuracy and generalization while mitigating overfitting. Experimental evaluations demonstrate remarkable performance, achieving testing accuracies of 98.75%, 98.90%, and 99.20% across three scenarios for multiclass OCT image classification. These results highlight the effectiveness of the RBLTL framework in categorizing OCT images for conditions like DME and AMD, establishing it as a reliable and versatile approach for automated medical image classification with significant implications for clinical diagnostics.


Introduction

In the modern era, significant advances in Artificial Intelligence (AI) have driven progress in intelligent healthcare applications across many medical sectors1,2. Integrating AI into healthcare has also contributed to collaborations aimed at sustainable development objectives. These intelligent medical applications have made substantial contributions to detecting pathological differences that can be difficult for physicians to diagnose3.

Currently, ophthalmologists frequently rely on Optical Coherence Tomography (OCT) images to diagnose a range of eye conditions. This technology produces high-quality images that offer detailed information about the structure and function of the human retina. OCT is a leading standard for identifying and evaluating macular conditions such as Diabetic Macular Edema (DME) and Age-related Macular Degeneration (AMD).

AMD is divided into two main types, neovascular and non-neovascular AMD, and further classification is possible based on specific disease characteristics. The non-neovascular type, commonly referred to as ‘dry’ AMD, accounts for the majority of cases, approximately 80–85%, and usually has a more favourable visual prognosis. Neovascular AMD, commonly known as ‘wet’ AMD, accounts for roughly 15–20% of cases but is responsible for about 80% of the severe vision loss associated with AMD. AMD is a widespread eye condition and one of the primary causes of vision loss in individuals aged 50 and above. In 2015, the global prevalence of AMD was recorded at 6.2 million people, and the prevalence of AMD in the United States is expected to more than double by 2050, from 2.07 million in 2010 to an estimated 5.44 million.

DME is a complication of diabetic retinopathy, which affects people with diabetes. DME occurs when fluid leaks into the macula, causing it to swell and disrupting central vision. People with diabetes, particularly those with poor blood sugar control, are at risk; managing diabetes through medical check-ups, maintaining healthy blood sugar levels, and controlling other risk factors can reduce the risk of DME. These eye conditions can cause visual impairment and, in severe cases, blindness. OCT is essential for capturing the detailed images that allow early detection of these conditions, which in turn enables timely interventions and personalized treatment plans that lead to improved patient outcomes4,5,6.

The remarkable capabilities of OCT have transformed the field of ophthalmology, empowering healthcare professionals to provide more precise diagnoses and enhanced treatment options for individuals suffering from retinal conditions2,7.

Nevertheless, the medical field faces a shortage of accessible labelled data, because annotation requires clinical expertise. Several Deep Learning (DL) techniques have been devised to address this issue8. Alternative approaches reduce the need for full supervision; these include unsupervised and semi-supervised learning, multi-instance learning, and Leveraging Transfer Learning (LTL)9. LTL has become increasingly popular in recent years because it can efficiently share model knowledge across tasks, even tasks that are not directly related, with minimal retraining. Several methods have been employed to identify retinal OCT lesions over the last decade10. These methods use image processing techniques, such as image data generation, image resizing, and hyper-parameter fine-tuning, to extract relevant image features. For image classification, established Reinforcement Learning (RL) approaches have also been employed, often combined with efficient image pre-processing and data augmentation.

The proposed Reinforcement-Based Leveraging Transfer Learning (RBLTL) framework directly addresses these gaps by integrating reinforcement Q-learning with pre-trained transfer learning models. Unlike traditional transfer learning methods, the RBLTL framework dynamically optimizes hyperparameters during training, allowing for improved generalization and robustness across diverse datasets. Reinforcement Q-learning ensures an adaptive learning process that minimizes overfitting and maximizes classification accuracy. Additionally, leveraging advanced pre-trained models such as InceptionV3, DenseNet201, and InceptionResNetV2 enables the extraction of high-quality features critical for distinguishing between Normal, DME, Drusen, and AMD classes.

This study offers the following contributions:

- Exploring and analyzing reinforcement-based leveraging transfer learning for multiclass OCT image classification, focusing on AMD and related conditions.
- Identifying optimal transfer learning approaches and reinforcement Q-learning strategies to address key challenges in OCT image classification.
- Investigating classification-based approaches to reduce the complexities of multiclass OCT image diagnosis.

By addressing the limitations of existing methodologies, the RBLTL framework provides a robust and adaptive solution for OCT image classification, paving the way for improved diagnostic reliability and applicability in ophthalmology. The research objective is therefore to explore reinforcement-based models and their appropriate hyperparameters by applying transfer learning, with a focus on categorizing OCT images associated with Normal, DME, Drusen, and AMD. The transfer learning process employed reinforcement-based models incorporating several pre-trained networks: the 43-layer NasNetLarge model11, the 53-layer MobileNetV2 model12, InceptionV3 and InceptionResNetV2 with 48 and 164 layers, respectively13, and DenseNet models with 121 and 201 layers (DenseNet121 and DenseNet201)14. Reinforcement-based transfer learning performed well in categorizing OCT images associated with DME and AMD.

Motivation for using Q-learning

The motivation for using Q-learning in this paper is twofold: (1) to explore performance-oriented strategies for OCT tasks, ensuring high classification accuracy and thereby improving diagnostic reliability; and (2) to integrate reinforcement Q-learning with transfer learning to improve OCT image classification, mitigating overfitting and improving generalization across diverse datasets. This integration improves the model’s accuracy and adaptability, making it more effective in real-world diagnostic scenarios and advancing the accuracy and reliability of AI-driven diagnostics in ophthalmology.

The rest of this study is structured as follows: Sect. 2 reviews related work, Sect. 3 describes the research methods and steps, and Sect. 4 presents the experiments and results. Section 5 presents a discussion. Finally, Sect. 6 concludes the paper.

Related work

The research study extensively explores the intersection of three prominent research fields: (1) OCT Imaging, (2) Transfer Learning in Medical Imaging, and (3) Machine Learning in Image Classification. This section provides a comprehensive review of existing works within these domains.

OCT imaging

Optical Coherence Tomography (OCT) is a non-invasive imaging technique widely used for diagnosing retinal diseases such as Diabetic Macular Edema (DME) and Age-related Macular Degeneration (AMD). Prior studies have established OCT as a powerful technique for the non-invasive evaluation of diverse retinal abnormalities15. The researchers16 tackled the challenge of automatically categorizing OCT images for detecting DME. They introduced an accessible and effective approach that uses OCT image data and integrated tensors for the reliable identification of DME. The study assessed features derived from thickness profiles and cysts, using a dataset of 55 patients diagnosed with DME and 53 individuals with normal OCT scans, sourced from the Duke Dataset. A machine learning classifier evaluated with a leave-one-out strategy achieved the highest accuracy, reaching 79%.

In an alternative approach, the authors17 introduced the Binary Residual Feature Fusion (BARF) technique, incorporating Monte Carlo (MC) dropout. Their emphasis was on medical image classification, and they demonstrated the technique’s effectiveness in identifying abnormalities in OCT images. The study18 introduced a DL method based on depthwise separable convolution, tailored for glaucoma classification from SD-OCT images. The author19 presented Deep OCT, an interpretable CNN built on Block-Matching and 3D Filtering (BM3D) principles. This lightweight architecture achieved high accuracy with fewer parameters than existing state-of-the-art pre-trained models. The authors20 introduced the Label Smoothing Generative Adversarial Network (LSGAN), a DL method developed specifically for classifying retinal OCT images. LSGAN, a Generative Adversarial Network (GAN)-based model, comprises three components: a generator, a discriminator, and a classifier. Its chief objective is to create synthetic images and add them to the authentic images in the training dataset, thereby improving the model’s overall efficacy. A comprehensive analysis of recent relevant studies, including a description of their datasets, is provided in Table 1.

Transfer learning in medical imaging

Transfer learning has emerged as a powerful technique for medical image classification, leveraging pre-trained models such as VGG19 and ResNet50. The authors21 implemented an ensemble learning strategy combining two sophisticated neural networks, Xception and InceptionResNetV2, with a customized optimization technique. Two experiments were central to their AMD screening. The first classified images into two clinically relevant categories: no or early-stage AMD, and intermediate or advanced AMD. The second classified images into four groups: no AMD, early-stage AMD, intermediate AMD, and advanced AMD. On the AREDS image dataset, the proposed ensemble achieved precision above 95% in the binary experiment and from 86% to 95% in the multi-class experiment. The authors22 presented an innovative and effective Universal Attention Unit (UAU) designed specifically for feed-forward CNNs. The resulting lightweight classification model, UAU Net, was developed and thoroughly evaluated on a retinal OCT dataset from UCSD comprising 108,312 images from 4,686 patients and covering CNV, DME, Drusen, and normal cases. The researchers23 proposed a fully automated DL methodology for feature extraction that supports end-to-end analysis of OCT scan images. Li and collaborators24 and the study25 designed a compact convolutional attention module, presented at ECCV 2018, that integrates a channel attention module (CAM) and a spatial attention module (SAM). The authors26 treated the classification task as an anomaly detection problem.
They applied a Gaussian Mixture Model (GMM) to characterize the features of typical OCT images and then flagged images with pathological characteristics as anomalies; the final diagnosis for an OCT volume depended on the number of detected anomalies. Recently, the OCT research community has shown a growing interest in DL techniques27. DL methods learn relevant features for classification automatically, eliminating the need for manually crafted, static feature extraction techniques28.

Machine learning in image classification

Machine Learning has gained attention for its ability to optimize training processes dynamically. Recent advances in machine learning and deep learning techniques have significantly enhanced the early detection and precise diagnosis of age-related macular degeneration (AMD) and other retinal diseases. The authors introduced a novel computer-aided diagnosis (CAD) framework leveraging local and global appearance markers from fundus images, achieving high accuracy, sensitivity, and specificity in AMD classification29. Other researchers used generative adversarial networks (GANs) to generate realistic indocyanine green angiography (ICGA) images from color fundus photographs, improving AMD classification accuracy without the invasiveness of traditional ICGA30. Similarly, the authors developed a hybrid CNN-LSTM model, AMDNet23, incorporating advanced preprocessing techniques to achieve superior performance in AMD detection31. Another study used DeepAlienorNet, a model that enhances AMD diagnosis using heatmaps for interpretability32. Additionally, the authors explored federated learning (FL) to train deep learning models for AMD classification, addressing data privacy concerns and achieving performance comparable to centralized models33.

Further expanding the capabilities of deep learning in retinal diagnostics, the literature emphasizes the integration of various imaging techniques for comprehensive assessment of geographic atrophy (GA) secondary to AMD34. The authors proposed a vision transformer (ViT) model for multi-label classification of retinal diseases, leveraging the self-attention mechanism to outperform traditional CNNs35. Researchers demonstrated the potential of ultra-wide-field fundus imaging (UFI) combined with deep learning for accurate and efficient retinal disease diagnosis, with ResNet152 achieving high performance36. The authors also proposed a hybrid SqueezeNet-vision transformer (SViT) model for classifying retinal diseases using OCT images, addressing computational complexity and data requirements while achieving high classification accuracy. Collectively, these studies underscore the transformative impact of advanced deep learning models in enhancing the accuracy, efficiency, and applicability of retinal disease diagnosis36.

For the identification of DME, a satisfactory accuracy of 78% was attained with an approach based on the variation in OCT structure depth. The authors37 implemented a computationally efficient single-layer Convolutional Neural Network (CNN) to categorize retinal anomalies in OCT scans. After training on a publicly available OCT dataset of 83,484 images, the model reached an accuracy of 95.66% on a multi-class task covering Choroidal Neovascularization (CNV), DME, Drusen, and normal conditions. The studies38,39 used various methods, such as local texture analysis, ResNet50, DenseNet169, and the Histogram of Oriented Gradients (HOG), to extract bottleneck features from OCT images, and compared the efficiency of hand-crafted and Deep Neural Network (DNN)-based methods for this purpose.

The dataset used for this analysis comprised 32,339 images in four groups (Normal, Drusen, DME, and CNV). The DNN-based approaches, DenseNet-169 and ResNet-50, proved more effective, reaching accuracies of 88% and 89%, respectively, compared with 50% and 42% for the hand-crafted feature models (HOG and LBP, respectively). The DNN-based approaches also performed better on the less-represented category. In the studies40,41, the authors employed a diagnostic tool to screen individuals for prevalent, treatable retinal diseases that can lead to blindness; the tool was based on a DL framework that used transfer learning during model training. In the studies42,43, a CNN-based OCT-NET model was used for the automated classification of OCT scan images. OCT-NET was evaluated on OCT scans intended specifically for DME diagnosis using leave-one-out cross-validation, achieving 93.75% accuracy, sensitivity, and specificity.

In this study, the RBLTL framework uniquely combines reinforcement Q-learning with pre-trained transfer learning models to enhance classification performance for OCT images. Unlike traditional transfer learning approaches, which rely solely on pre-trained networks, our method incorporates reinforcement Q-learning to dynamically optimize the learning process, improving model adaptability and robustness by mitigating overfitting and enhancing generalization across diverse datasets. This adaptive learning approach explores optimal hyperparameters and performance-oriented strategies tailored specifically for OCT image classification tasks, resulting in superior diagnostic reliability and accuracy for multiclass classifications involving categories such as Normal, DME, Drusen, and AMD. Furthermore, the framework evaluates and benchmarks several advanced pre-trained models, including NasNetLarge, MobileNetV2, InceptionV3, InceptionResNetV2, DenseNet121, and DenseNet201, highlighting their strengths while demonstrating the significant performance boost achieved through reinforcement-based learning strategies. By addressing critical challenges such as overfitting and ensuring high generalization, the RBLTL framework enhances the practical applicability of AI-driven diagnostics in ophthalmology, bridging the gap between experimental research and real-world clinical settings.

Materials and methods

The research methodology comprised several key steps, including a dataset overview and the pre-processing of OCT images related to DME and AMD. The first step was to select a pre-trained neural network suitable for transfer learning. Reinforcement-based techniques were then combined with transfer learning to classify OCT images associated with DME and AMD.

Dataset overview

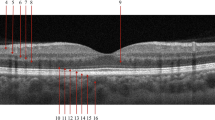

Table 2 provides further information on the composition of the DME, CNV, Drusen, and Normal training and testing datasets. The dataset used in this study was assembled from multiple reputable institutions, including the Medical Centre Ophthalmology Associates, Shanghai First People’s Hospital, Beijing Tongren Eye Centre, the California Retinal Research Foundation, and the Shiley Eye Institute at the University of California San Diego. The data span July 1, 2013, to March 1, 2017, and comprise OCT retinal images depicting conditions such as Age-related Macular Degeneration (AMD) and Diabetic Macular Edema (DME). Ethical approvals for data collection and sharing were obtained by the original dataset authors, as stated in46, ensuring adherence to ethical standards in medical research. To mitigate potential biases, the images were carefully organized into subfolders representing four categories: Normal, Choroidal Neovascularization (CNV), DME, and Drusen. The training dataset consists of 83,484 images: 37,205 CNV, 11,348 DME, 8,616 Drusen, and 26,315 Normal. A testing dataset of 1,000 images, 250 per class, was used to evaluate the network’s performance. The datasets were balanced as far as the available data allowed. Class imbalance was further mitigated with data augmentation techniques, such as rotation, flipping, and scaling, to enhance the representation of under-represented classes, which supported robust training and improved the model’s ability to generalize across all categories. Careful preprocessing, including image resizing and normalization, was also applied to maintain uniformity and improve classification accuracy. This rigorous approach to dataset preparation enhances the reliability of the proposed RBLTL framework for real-world diagnostic applications. Fig. 1 illustrates the various image types, and Fig. 2 provides further visual representations.
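The class counts above can be turned into a quick imbalance check. The Python sketch below computes each class’s share of the 83,484 training images and, as one common complement to augmentation, inverse-frequency class weights using the n_samples / (n_classes × count) heuristic (the one scikit-learn calls "balanced"). Class weighting is an illustrative assumption, not a step described in this study, and `class_summary` is a hypothetical helper name.

```python
# Training-set class counts as reported in this section.
TRAIN_COUNTS = {"CNV": 37_205, "DME": 11_348, "DRUSEN": 8_616, "NORMAL": 26_315}


def class_summary(counts):
    """Return the total image count and, per class, a tuple
    (share of the training set, inverse-frequency weight).

    The weight total / (n_classes * count) makes every class contribute
    equally to a class-weighted loss.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    summary = {
        name: (n / total, total / (n_classes * n)) for name, n in counts.items()
    }
    return total, summary


total, summary = class_summary(TRAIN_COUNTS)
print(f"total images: {total}")
for name, (share, weight) in summary.items():
    print(f"{name:7s} share={share:6.2%} weight={weight:.2f}")
```

With these counts, Drusen receives a weight of roughly 2.42 and CNV roughly 0.56, so a weighted loss would count each Drusen image about four times as heavily as each CNV image.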

Data augmentation

To save computational resources, namely memory and time, 60,500 of the 83,484 training images are used. Augmenting the existing data produced a new dataset with different orientations, dimensions, and grayscale values; such an augmented dataset is often regarded as a closer approximation to an ideal training set. The new training dataset is generated with three augmentation strategies, rotation, scaling, and translation, which are illustrated in Fig. 3. Rotation is a common technique for augmenting image data: the image is rotated clockwise by an angle between 0 and 360 degrees, which moves pixels and leaves blank regions that must be filled. This investigation used a specific rotation angle. Scaling systematically varies the size of the image frame. Translation shifts an image horizontally (along its width), vertically (along its height), or both; here, the images were shifted by 25% horizontally and 25% vertically.
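The three augmentation strategies can be sketched with plain NumPy, assuming images are stored as H × W (× C) arrays. To stay dependency-free, this sketch restricts rotation to multiples of 90° via `np.rot90`; arbitrary angles, as described above, would need an interpolating routine such as `scipy.ndimage.rotate`. The zoom factor and all function names are illustrative assumptions; only the 25% horizontal and vertical shift comes from the text.

```python
import numpy as np


def rotate(img, quarter_turns=1):
    """Rotate clockwise by 90 degrees * quarter_turns (np.rot90 turns counter-clockwise)."""
    return np.rot90(img, k=-quarter_turns, axes=(0, 1))


def scale(img, factor):
    """Zoom about the image centre by `factor` using nearest-neighbour sampling,
    keeping the H x W size; samples falling outside the frame are clamped to the edge."""
    h, w = img.shape[:2]
    rows = np.clip(((np.arange(h) - h / 2) / factor + h / 2).astype(int), 0, h - 1)
    cols = np.clip(((np.arange(w) - w / 2) / factor + w / 2).astype(int), 0, w - 1)
    return img[rows[:, None], cols[None, :]]


def translate(img, frac_x=0.25, frac_y=0.25, fill=0):
    """Shift right by frac_x of the width and down by frac_y of the height;
    vacated pixels are set to `fill`. Only non-negative fractions are supported."""
    h, w = img.shape[:2]
    dx, dy = int(round(frac_x * w)), int(round(frac_y * h))
    out = np.full_like(img, fill)
    out[dy:, dx:] = img[: h - dy, : w - dx]
    return out


def augment(img, quarter_turns=1, zoom=1.2):
    """Return one rotated, one scaled and one translated copy of `img`."""
    return [rotate(img, quarter_turns), scale(img, zoom), translate(img)]


example = np.arange(1, 17).reshape(4, 4)
for variant in augment(example):
    print(variant)
```

For square inputs such as the 224 × 224 images used here, all three outputs keep the input shape; for non-square images, a quarter turn swaps height and width.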

Pre-processing

Each OCT retina image is resized to 224 × 224 × 3, where 3 is the number of colour channels, so that it is suitable as input for reinforcement-based leveraging transfer learning, as shown in Fig. 4. This step also involves checking image quality and ensuring that the model is trained on the most informative regions of the images. The images are pre-processed with several techniques to enhance their quality; in RBLTL, pre-processing relies mainly on cropping and scaling. Fig. 5 shows the reinforcement-based leveraging transfer learning procedure as a flow diagram.
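A minimal sketch of the resizing step, assuming each grayscale OCT B-scan is a 2-D NumPy array. Nearest-neighbour sampling and min-max scaling to [0, 1] are assumptions chosen for brevity; the text does not specify the interpolation or normalization used, and Keras applications also ship model-specific `preprocess_input` functions. The function name `to_model_input` and the 496 × 512 scan size are hypothetical.

```python
import numpy as np


def to_model_input(oct_image, size=224):
    """Resize a grayscale (H x W) or 3-channel (H x W x 3) OCT scan to
    size x size x 3 with nearest-neighbour sampling, then min-max scale to [0, 1]."""
    img = np.asarray(oct_image, dtype=np.float32)
    if img.ndim == 2:                                  # grayscale B-scan: add channel axis
        img = img[:, :, None]
    h, w = img.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)    # nearest source row per output row
    cols = (np.arange(size) * w / size).astype(int)    # nearest source column per output column
    img = img[rows[:, None], cols[None, :]]
    if img.shape[2] == 1:                              # replicate to the 3 channels CNNs expect
        img = np.repeat(img, 3, axis=2)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)


rng = np.random.default_rng(0)
scan = rng.integers(0, 256, size=(496, 512)).astype(np.uint8)  # hypothetical raw scan
x = to_model_input(scan)
print(x.shape, x.dtype, x.min(), x.max())
```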

Multi-model transfer learning pre-trained models

In machine learning, Transfer Learning (TL) repurposes a model originally designed for one task as the foundation for a model adapted to a different task46. Supervised Learning (SL) and transfer learning are expected to become prominent commercial use cases of machine learning. The first phase of transfer learning is to select a pre-trained model as the foundation for a predictive model47. Many pre-trained networks have been trained on a subset of the ImageNet database, the dataset used in the ImageNet Large-Scale Visual Recognition Challenge. Trained on more than one million images, these networks classify images into 1,000 object categories, such as laptops, water glasses, pens, and various animals. Importantly, starting from pre-trained weights typically makes training considerably faster than training the same network without pre-training. The idea of leveraging transfer learning, illustrated in Fig. 6, is to take a model trained on an extensive dataset such as ImageNet47 and apply it to tasks with relatively small datasets. In practice, a pre-trained network such as InceptionV3, InceptionResNetV2, or DenseNet201, initially trained on a large dataset for a specific task such as ImageNet classification, is adopted and then fine-tuned for a different but related task. This can save significant training time and data compared with training a neural network from scratch.

Fine-tuning a pre-trained model using CNN-based transfer learning

We fine-tuned the pre-trained models InceptionV3, InceptionResNetV2, and DenseNet201 to classify OCT images of AMD and DME. Fine-tuning a network is slower and requires more effort than simple feature extraction; however, because the network can learn to extract a different set of features, the final network is often more accurate. Fine-tuning starts from the deeper layers of the pre-trained Convolutional Neural Networks (CNNs) used for transfer learning, such as InceptionV3, InceptionResNetV2, and DenseNet201, which were initially trained on a large dataset for tasks such as ImageNet classification47.
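The difference between feature extraction (pre-trained layers frozen) and fine-tuning (pre-trained layers also updated) comes down to which parameters receive gradient updates. The NumPy sketch below illustrates this on a toy two-layer network whose first layer `W1` stands in for a pre-trained CNN; the random data, layer sizes, learning rate, and the `train` helper are all hypothetical and are not the study’s setup. In Keras, the same switch is usually made by setting `layer.trainable = False` on the layers to be frozen.

```python
import numpy as np


def train(X, y, W1, W2, n_classes, lr=0.05, epochs=200, freeze_backbone=True):
    """Full-batch gradient descent on a toy 2-layer classifier.

    h = relu(X @ W1) plays the role of the pre-trained feature extractor and
    logits = h @ W2 is the new classification head. W1 is updated only when
    freeze_backbone is False (fine-tuning). Returns updated copies and the loss curve.
    """
    W1, W2 = W1.copy(), W2.copy()
    Y = np.eye(n_classes)[y]                      # one-hot targets
    losses = []
    for _ in range(epochs):
        pre = X @ W1
        h = np.maximum(pre, 0.0)                  # ReLU features
        logits = h @ W2
        logits -= logits.max(axis=1, keepdims=True)
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)         # softmax probabilities
        losses.append(-np.mean(np.log(p[np.arange(len(y)), y] + 1e-12)))
        g_logits = (p - Y) / len(y)               # gradient of mean cross-entropy w.r.t. logits
        g_W2 = h.T @ g_logits
        if not freeze_backbone:                   # back-propagate into the "pre-trained" layer
            g_pre = (g_logits @ W2.T) * (pre > 0)
            W1 -= lr * (X.T @ g_pre)
        W2 -= lr * g_W2
    return W1, W2, losses


# Hypothetical toy data: 10-dimensional inputs, 4 classes.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = X[:, :4].argmax(axis=1)
W1_pretrained = rng.normal(scale=0.5, size=(10, 32))  # stand-in for pre-trained weights
W2_init = np.zeros((32, 4))

W1_frozen, _, loss_frozen = train(X, y, W1_pretrained, W2_init, 4, freeze_backbone=True)
W1_tuned, _, loss_tuned = train(X, y, W1_pretrained, W2_init, 4, freeze_backbone=False)
print(loss_frozen[0], loss_frozen[-1], loss_tuned[-1])
```

Freezing keeps `W1` bit-for-bit unchanged and trains only the head, which is cheaper; unfreezing lets the backbone adapt its features to the new data, which matches the trade-off described above.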

A novel RBLTL method for OCT image classification

In pathological diagnosis, RBLTL aims to faithfully replicate the real setting of centralized decision-making. The framework consists of two distinct stages. In the first stage, transfer learning, a pre-trained network serves as an intermediary that links the images to Reinforcement Q-learning and overcomes problems that arise across different models. This makes it possible to mimic the work of experienced pathologists and to gain insight from their collective experience. In the second stage, reinforcement learning, a Q-learning method with strong generalizability is applied, putting a centralized decision-making process in place. A schematic of the conceptual framework of the proposed RBLTL method is shown in Fig. 6.

The first step in implementing transfer learning is to construct the proposed model from a pre-trained network, which gives RBLTL a thorough foundation for Reinforcement Q-learning. Convolution, pooling, and parameter updates are applied to all of the pre-trained networks, and, as is common practice, the whole network, including the initial layers and feature maps, is optimized and fine-tuned during training.

In this stage, the pre-trained model (InceptionV3, InceptionResNetV2, or DenseNet201, all of which have already been trained) takes an Optical Coherence Tomography (OCT) image \(I\) as input and produces the features \(F_{extracted}\), i.e., \(F_{extracted} = f_{pre}(I)\), where \(f_{pre}\) denotes the pre-trained network. In the transfer learning stage, the SoftMax function then turns these features into a probability distribution over the classes, so that each class’s probability is expressed in terms of the extracted features:

\(P_{class}(y \mid F_{extracted}) = \frac{\exp(F_y)}{\sum_{j=1}^{N} \exp(F_j)}\)

Here, \(P_{class}(y \mid F_{extracted})\) is the conditional probability of class \(y\) given the extracted features, \(F_y\) is the unnormalized logit score for class \(y\), and \(N\) is the total number of classes.
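The SoftMax step can be written in a few lines of NumPy. This is a generic, numerically stable sketch of \(P_{class}(y \mid F_{extracted}) = \exp(F_y) / \sum_j \exp(F_j)\); the logit values and the class order are hypothetical.

```python
import numpy as np


def class_probabilities(logits):
    """P_class(y | F_extracted) = exp(F_y) / sum_j exp(F_j), over the last axis.

    Subtracting the largest logit first avoids overflow in exp() and leaves the
    result unchanged, because the common factor cancels in the ratio.
    """
    z = np.asarray(logits, dtype=float)
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


CLASSES = ["CNV", "DME", "DRUSEN", "NORMAL"]        # assumed class order
probs = class_probabilities([2.0, 0.5, 0.1, 1.0])   # hypothetical logits F_y, N = 4
print(CLASSES[int(probs.argmax())], probs.round(3))
```

The predicted class is simply the one with the largest probability, which is also the one with the largest logit, since SoftMax preserves ordering.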

Reinforcement learning

Reinforcement learning is a family of machine learning algorithms grounded in the principles of Markov Decision Processes (MDPs). In these algorithms, the learner, referred to as an agent, interacts with an environment and receives rewards or penalties for its actions; in this study, the task the agent attempts to solve is classification. Over multiple iterations, the agent learns an optimal policy, that is, a strategy for choosing actions that maximizes the total accumulated reward48.

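To define the value function and the Q-function in reinforcement learning, we consider the tuple \([S, A, T, r, \delta]\). Here, \(S\) is a finite set of states (the features extracted from the pre-trained models), \(A\) is a finite set of actions (assigning an image to one of the categories AMD, DME, CNV, and NORMAL), \(T\) is the transition dynamics, \(r\) is the expected reward function (which provides feedback in the form of accuracy and loss), and \(\delta \in (0, 1)\) is the discount factor. The value function gives the optimal discounted sum of rewards that can be obtained from state \(s\):

\(V^{*}(s) = \max_{a \in A} \Big[ r(s, a) + \delta \sum_{s' \in S} T(s, a, s')\, V^{*}(s') \Big]\)

where \(T(s, a, s')\) is the probability of moving from state \(s\) to state \(s'\) after taking action \(a\).

The Q-function, on the other hand, gives the discounted reward for taking action \(a\) in state \(s\) and acting optimally thereafter:

\(Q^{*}(s, a) = r(s, a) + \delta \sum_{s' \in S} T(s, a, s') \max_{a' \in A} Q^{*}(s', a')\), so that \(V^{*}(s) = \max_{a} Q^{*}(s, a)\).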
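When \(T\) and \(r\) are known, the value function and Q-function can be computed directly by value iteration, repeatedly applying \(Q(s, a) = r(s, a) + \delta \sum_{s'} T(s, a, s') V(s')\) and \(V(s) = \max_a Q(s, a)\). The sketch below runs this on a hypothetical two-state, two-action MDP, not on the OCT problem. It also shows why model-free methods are needed in practice: value iteration requires \(T\) and \(r\), which a reinforcement learner typically does not know.

```python
import numpy as np


def value_iteration(T, r, delta, tol=1e-10, max_iters=10_000):
    """Value iteration for a finite MDP (S, A, T, r, delta).

    T[s, a, s2] : probability of moving from state s to s2 under action a
    r[s, a]     : expected immediate reward for action a in state s
    delta       : discount factor in (0, 1)
    Returns V*(s), the Q*(s, a) table and the greedy policy argmax_a Q*(s, a).
    """
    V = np.zeros(T.shape[0])              # arbitrary initial value function
    for _ in range(max_iters):
        Q = r + delta * (T @ V)           # Q(s, a) = r(s, a) + delta * sum_s2 T(s, a, s2) V(s2)
        V_new = Q.max(axis=1)             # V(s) = max_a Q(s, a)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = r + delta * (T @ V)
    return V, Q, Q.argmax(axis=1)


# Hypothetical MDP: action 0 stays, action 1 switches state;
# staying in state 1 pays 1, every other move pays 0.
T = np.zeros((2, 2, 2))
T[0, 0, 0] = T[0, 1, 1] = T[1, 0, 1] = T[1, 1, 0] = 1.0
r = np.array([[0.0, 0.0], [1.0, 0.0]])
V, Q, policy = value_iteration(T, r, delta=0.9)
print(V, policy)
```

Here the optimal values can be checked by hand with \(\delta = 0.9\): staying in state 1 forever is worth \(1 / (1 - 0.9) = 10\), and switching from state 0 to state 1 is worth \(0.9 \times 10 = 9\).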

The reinforcement learning process starts from an arbitrary estimate that converges to the true value function. The learner knows only \(S\), \(A\), and \(\delta\); the dynamics \(T\) and the reward function \(r\) are not given in advance. Many reinforcement learning algorithms have been proposed, of which Q-learning is perhaps the best known. In Q-learning, the Q-function is initialized arbitrarily. At iteration \(i + 1\), the agent in state \(s_t\) takes action \(a_t\), and the Q-function is updated as follows:

\(Q_{i+1}(s_t, a_t) = Q_i(s_t, a_t) + \beta \big[ r_{i+1} + \delta \max_{a} Q_i(s_{t+1}, a) - Q_i(s_t, a_t) \big]\)

In this equation, \(\beta\) is the learning rate, \(r_{i+1}\) is the reward received after taking action \(a_t\) in state \(s_t\), \(\delta\) is the discount factor, and \(\max_{a} Q_i(s_{t+1}, a)\) is the maximum Q-value for the next state \(s_{t+1}\) over all possible actions.
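The Q-learning update can be sketched as a small tabular agent. In this sketch the agent only sees sampled transitions through a step function, never \(T\) or \(r\) directly, and applies \(Q(s,a) \leftarrow Q(s,a) + \beta[r + \delta \max_{a'} Q(s',a') - Q(s,a)]\) after each step. The toy environment, the epsilon-greedy exploration rate, and all helper names are hypothetical illustrations, not the study’s OCT setup.

```python
import numpy as np


def q_update(Q, s, a, reward, s_next, beta, delta):
    """Q(s,a) <- Q(s,a) + beta * (reward + delta * max_a' Q(s_next,a') - Q(s,a))."""
    td_target = reward + delta * Q[s_next].max()
    Q[s, a] += beta * (td_target - Q[s, a])


def run_q_learning(step_fn, n_states, n_actions, beta=0.5, delta=0.9,
                   epsilon=0.2, n_steps=20_000, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration.

    step_fn(s, a) -> (reward, s_next) is the agent's only access to the
    environment: it never sees the transition dynamics or reward function.
    """
    rng = np.random.default_rng(seed)
    Q = np.zeros((n_states, n_actions))          # arbitrary initial Q-function
    s = 0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            a = int(rng.integers(n_actions))     # explore: random action
        else:
            a = int(Q[s].argmax())               # exploit: current best action
        reward, s_next = step_fn(s, a)
        q_update(Q, s, a, reward, s_next, beta, delta)
        s = s_next
    return Q


def toy_step(s, a):
    """Hypothetical environment: action 0 stays, action 1 switches state;
    staying in state 1 pays 1, every other move pays 0."""
    s_next = s if a == 0 else 1 - s
    reward = 1.0 if (s == 1 and a == 0) else 0.0
    return reward, s_next


Q = run_q_learning(toy_step, n_states=2, n_actions=2)
print(np.round(Q, 2), Q.argmax(axis=1))
```

For this toy problem the optimal values can be worked out by hand with \(\delta = 0.9\): \(Q^{*} = [[8.1, 9.0], [10.0, 8.1]]\). The learned table should approach these values, and the greedy policy is to switch out of state 0 and stay in state 1.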

Integration with reinforcement learning

In the second stage, the features extracted by the pre-trained model are integrated with Reinforcement Q-learning, establishing a resilient framework for classifying OCT images associated with eye diseases. This stage involves several components that enhance the effectiveness of the proposed approach. In the RBLTL framework, transfer learning is performed with pre-trained models such as InceptionV3, InceptionResNetV2, and DenseNet201, which are known for extracting high-quality features from complex image data. These models use weights pre-trained on large-scale image datasets to capture critical features from Optical Coherence Tomography (OCT) images. However, static transfer learning alone often struggles to find optimal hyperparameters for a specific dataset and task. To address this limitation, the RBLTL framework integrates Reinforcement Q-learning to optimize training parameters such as the learning rate, dropout, and weight regularization. Through iterative exploration, Q-learning identifies the hyperparameter configurations that maximize classification accuracy while minimizing overfitting.

First, a comprehensive state representation, denoted ‘St’, is designed to encapsulate both the features ‘Fextracted’ extracted from the pre-trained model and additional clinical context ‘Ct’, such as patient medical history, so that ‘St = [Fextracted, Ct]’. This holistic state representation ensures that the proposed Q-learning agent has a well-rounded understanding of the environment when making decisions. The agent selects actions with a policy based on Q-values, using an epsilon-greedy exploration strategy to balance exploration and exploitation. Throughout the Q-learning process, ‘Q(S, A)’ is iteratively updated using the Bellman equation, a fundamental concept in reinforcement learning:

\[Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha\left[R_t + \gamma \max_{a} Q(S_{t+1}, a) - Q(S_t, A_t)\right]\]

This equation describes how the Q-values evolve as the agent learns to make decisions, taking into account the learning rate, immediate rewards, the discount factor, and temporal-difference learning.

Here, Q(St, At) is the Q-value of a specific state ‘St’ and action ‘At’. The learning rate, denoted ‘Alpha (α)’, regulates the magnitude of each update to the Q-value. ‘Rt’ denotes the immediate reward at time ‘t’, which serves as feedback on the action performed by the agent. The discount factor, denoted ‘Gamma (γ)’, determines how much the agent values future rewards relative to immediate ones. Finally, ‘St+1’ denotes the subsequent state, a crucial component of temporal-difference learning.
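The state construction and epsilon-greedy action selection described in this subsection can be sketched with NumPy as follows. The 2048-dimensional feature vector (the size of InceptionV3's global-average-pooled output), the three-element clinical context vector, the four actions, and the linear Q-function weights `W` are all hypothetical. Because ‘St’ is a continuous vector rather than a table index, some function approximator is needed for Q(St, a); a linear one is used here only for brevity.

```python
import numpy as np

def build_state(f_extracted, clinical_context):
    """S_t = [F_extracted, C_t]: concatenate CNN features with clinical context."""
    return np.concatenate([np.ravel(f_extracted), np.ravel(clinical_context)]).astype(np.float32)

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore (random action), otherwise exploit (argmax)."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))

rng = np.random.default_rng(0)
features = rng.standard_normal(2048)   # hypothetical pooled CNN features
context = np.array([0.67, 1.0, 0.0])   # hypothetical normalized clinical context
s_t = build_state(features, context)

n_actions = 4                                          # hypothetical action count
W = 0.01 * rng.standard_normal((n_actions, s_t.size))  # hypothetical linear Q weights
q_values = W @ s_t                                     # Q(S_t, a) for every action a
action = epsilon_greedy(q_values, epsilon=0.1, rng=rng)
print(s_t.shape, action)
```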

The authors improved Q-learning's performance by iteratively tuning the algorithm's hyperparameters and the network topology. The model was validated on a separate dataset, and its classification accuracy was evaluated thoroughly. Compared with a baseline model trained from scratch, without transfer learning, the application of transfer learning in this study yielded clear benefits. An overview of the proposed method is shown in Fig. 6.

Experiment and results

Dataset used for the proposed ensemble model

All experiments are conducted on Google Colaboratory using two kinds of Google processing units: a GPU (Graphics Processing Unit) and a TPU (Tensor Processing Unit). NASNetLarge, MobileNetV2, InceptionV3, InceptionResNetV2, DenseNet121, and DenseNet201 are selected as the foundational networks. The images are resized to 224 × 224, a size that balances computational efficiency against the preservation of essential image details, which is critical for accurately classifying the subtle variations in OCT images associated with conditions such as DME, AMD, and Drusen. The resized images then undergo preprocessing steps such as normalization, which ensures uniform input distributions and improves convergence during training. This approach follows best practices in the literature and enhances the performance and reliability of the proposed ensemble models. To further improve the proposed models, the network weights are initialized using a technique proposed previously in the literature.
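The resizing and normalization steps can be sketched as follows. This is an illustration under stated assumptions: nearest-neighbour interpolation stands in for whichever library resize was actually used, intensities are min-max scaled per image to [0, 1] (the text specifies only "normalization"), and the single-channel OCT scan is replicated to three channels because ImageNet pre-trained networks expect RGB input. The 496 × 512 input size is a hypothetical example.

```python
import numpy as np

def resize_nearest(img, size=(224, 224)):
    """Nearest-neighbour resize of a 2-D array (stand-in for a library resize)."""
    h, w = img.shape[:2]
    out_h, out_w = size
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return img[rows[:, None], cols[None, :]]

def preprocess(oct_scan):
    """Resize to 224x224, min-max scale to [0, 1], replicate to 3 channels."""
    x = resize_nearest(np.asarray(oct_scan, dtype=np.float32))
    lo, hi = x.min(), x.max()
    x = (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x)
    return np.repeat(x[..., None], 3, axis=-1)

scan = np.random.default_rng(0).integers(0, 256, size=(496, 512))  # hypothetical B-scan
x = preprocess(scan)
print(x.shape, float(x.min()), float(x.max()))  # (224, 224, 3) 0.0 1.0
```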

Evaluation metric

Several measures are used to give a complete assessment of the RBLTL model, following established methods in the literature. The evaluation covers sensitivity (recall), specificity, precision, F1-score, and overall accuracy for the different image classes. The following equations define how these metrics are calculated at the image level across several classes:

Sensitivity (Recall), calculated as \(\frac{TP}{TP+FN}\), measures the proportion of actual positives correctly identified. TP represents True Positives (correct positive predictions) and FN represents False Negatives (missed positives). High sensitivity indicates good identification of positive instances.

Specificity, given by \(\frac{TN}{TN+FP}\), measures the proportion of actual negatives correctly identified. TN stands for True Negatives (correct negative predictions) and FP stands for False Positives (incorrect positive predictions).

Precision, defined as \(\frac{TP}{TP+FP}\), measures the proportion of positive predictions that are correct. TP refers to True Positives and FP to False Positives. High precision indicates that most positive predictions are accurate.

F1-Score, calculated as \(\frac{2 \times TP}{2 \times TP+FN+FP}\), is the harmonic mean of precision and recall. It balances both metrics and is useful when dealing with imbalanced classes. TP is True Positives, FN is False Negatives, and FP is False Positives.

Accuracy, given by \(\frac{TP+TN}{TP+TN+FP+FN}\), measures the overall correctness of the model as the proportion of all predictions that are correct. TP is True Positives, TN is True Negatives, FP is False Positives, and FN is False Negatives. High accuracy indicates good overall performance.

In the equations above, when classifying patients with AMD and DME, TP is the number of positive images correctly classified as positive, TN is the number of negative images correctly classified as negative, FP is the number of negative images incorrectly classified as positive, and FN is the number of positive images incorrectly classified as negative. Following the methods outlined in previous references49,50, the evaluation metrics and corresponding confusion matrices are computed using the scikit-learn toolkit48, and each experiment is repeated multiple times. The mean and standard deviation over these runs are reported in the format mean ± standard deviation.
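The metric definitions above can be computed one-vs-rest from a multiclass confusion matrix, as in the NumPy sketch below. It assumes rows index the true class and columns the predicted class (the convention of scikit-learn's `confusion_matrix`). The matrix entries and the per-run accuracies are hypothetical, and the use of the sample standard deviation (`ddof=1`) for mean ± SD is an assumption, since the text does not say which estimator was used.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics for each class of a K x K confusion matrix
    (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class k, actually another class
    fn = cm.sum(axis=1) - tp  # actually class k, predicted as another class
    tn = cm.sum() - tp - fp - fn
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),  # one-vs-rest accuracy per class
    }

def overall_accuracy(cm):
    """Fraction of all images whose predicted class equals the true class."""
    cm = np.asarray(cm, dtype=float)
    return np.trace(cm) / cm.sum()

# Hypothetical 4-class matrix in the order Normal, CNV, DME, Drusen.
cm = [[95, 0, 0, 5],
      [0, 97, 3, 0],
      [0, 2, 98, 0],
      [4, 0, 0, 96]]
m = per_class_metrics(cm)
macro = {name: float(values.mean()) for name, values in m.items()}  # "average" metrics
print(round(overall_accuracy(cm), 3))  # 0.965

# Mean ± SD over repeated runs (hypothetical accuracies; sample SD assumed).
runs = np.array([0.9860, 0.9875, 0.9890, 0.9880])
mean, sd = runs.mean(), runs.std(ddof=1)
print(f"{mean:.4f} ± {sd:.4f}")
```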

Performance in image-level classification

For the image-level evaluation, the OCT dataset is randomly divided into training and testing sets, and the same training and testing sets are used for all experiments. Medical data are often limited because annotation is costly, so a crucial objective of this study is to assess whether the proposed method can mitigate data scarcity.
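One way to draw a random split once and reuse it across all experiments is to shuffle with a fixed seed, as in this sketch. The 80/20 ratio, the seed, and the file names are hypothetical; the text does not report them.

```python
import random

def fixed_split(items, test_fraction=0.2, seed=42):
    """Shuffle a copy of `items` once with a fixed seed and split it.
    Calling this again with the same seed reproduces exactly the same split."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_fraction)
    return items[n_test:], items[:n_test]

paths = [f"img_{i:05d}.jpeg" for i in range(1000)]  # hypothetical file names
train, test = fixed_split(paths)
print(len(train), len(test))  # 800 200
```

If the dataset contains several images per patient, grouping by a patient identifier before splitting would keep images of the same patient from appearing in both sets.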

Several benchmark models have exhibited substantial advancements in the context of multi-class image classification. In experiments, a novel reinforcement-based leveraging transfer learning approach is implemented, incorporating meticulously selected hyperparameters. The objective is to classify OCT images associated with AMD, DME, Drusen, and Normal conditions. The accuracy metrics obtained during the training of the RBLTL model are subsequently corroborated through the assessment of classification metrics, including precision, recall, and F1-score.

Setting hyper-parameters

Different reinforcement-based models require different algorithm hyperparameters for their training phase, and these values are set before the learning process begins. Table 3 lists the selected pre-trained models together with their algorithm hyperparameters. In this training scenario, the ‘Adam’ optimizer, which incorporates momentum, is used. The mini-batch size is set to 32 observations per iteration, the maximum number of training epochs is set by the Max-Epochs parameter, and the InitialLearnRate option sets the learning rate at the start of training. Table 4 reports, for each pre-trained model, the accuracy achieved in each experimental run, together with the median accuracy and the standard deviation (SD) of accuracy for the classification of OCT images of DME and AMD. These values are derived from four independent runs of the experiments on the training and testing datasets.

Additionally, hyperparameters such as the learning rate, batch size, and maximum number of training epochs are tuned using Q-learning's iterative, reward-based mechanism, and the Adam optimizer is employed to accelerate convergence. Sensitivity analysis showed that a lower learning rate (e.g., InitialLearnRate = 0.001) and a smaller batch size (32) noticeably improved classification stability and accuracy. Table 3 lists the hyperparameters used, and Table 4 shows consistent results across experimental runs, with small standard deviations indicating that these settings are robust.

Table 4 shows that the median accuracy on the testing dataset ranged from 0.8765 to 0.9582 using the pre-trained model with suitable hyperparameters for transfer learning. InceptionV3, InceptionResNetV2, and DenseNet201 achieved average accuracies of 0.9514, 0.9582, and 0.9560, respectively, demonstrating exceptional performance. Notably, InceptionResNetV2 had a consistently low standard deviation of 0.0026 in accuracy, indicating its robustness and consistent performance in categorizing OCT images associated with AMD and DME.

Figure 7 illustrates the gradual improvement in accuracy during the training of the proposed model with InceptionV3. Training uses the Adam optimizer with a mini-batch size of 32 observations per iteration. The number of iterations per epoch is the number of training images divided by 32, which gives 1,890 (60,500 / 32, rounded down). The maximum number of epochs is set to 14, giving a total of 26,460 iterations (1,890 iterations per epoch multiplied by 14). In Fig. 7, the yellow curve shows the continuous improvement in validation metrics on the testing set, while the blue curve shows the ongoing improvement in accuracy on the training dataset.

Figure 8 shows the continuous improvement in accuracy during the training of the proposed model with InceptionResNetV2. Training again uses the Adam optimizer with a mini-batch size of 32 observations per iteration, so the number of iterations per epoch is 1,890 (60,500 / 32, rounded down). The maximum number of epochs is set to 10, so the maximum number of iterations is 18,900 (1,890 iterations per epoch multiplied by 10). The yellow curve shows the continuous improvement in validation metrics on the testing set, while the blue curve shows the gradual improvement in training metrics on the training dataset.

Figure 9 illustrates the gradual improvement in accuracy during the training of the proposed model with DenseNet201. The same settings apply: the Adam optimizer, a mini-batch size of 32, 1,890 iterations per epoch (60,500 / 32, rounded down), and a maximum of 10 epochs, for a total of 18,900 iterations (1,890 iterations per epoch multiplied by 10). In Fig. 9, the yellow curve depicts the continuous improvement in validation metrics on the testing set, while the blue curve shows the ongoing improvement in accuracy on the training dataset.
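The iteration counts quoted for Figs. 7 to 9 follow from simple arithmetic, checked below. The helper name is hypothetical; `drop_last=True` reflects the assumption that the final partial batch (60,500 − 32 × 1,890 = 20 images) is discarded, which is what yields 1,890 rather than 1,891 iterations per epoch.

```python
import math

def training_iterations(n_train, batch_size, max_epochs, drop_last=True):
    """Return (iterations per epoch, total iterations) for mini-batch training."""
    if drop_last:
        per_epoch = n_train // batch_size            # discard the final partial batch
    else:
        per_epoch = math.ceil(n_train / batch_size)  # keep it as a smaller batch
    return per_epoch, per_epoch * max_epochs

print(training_iterations(60_500, 32, 14))  # (1890, 26460): InceptionV3
print(training_iterations(60_500, 32, 10))  # (1890, 18900): InceptionResNetV2, DenseNet201
print(training_iterations(60_500, 32, 10, drop_last=False))  # (1891, 18910)
```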

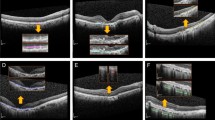

Figure 10 presents the confusion matrices for three models—InceptionV3, DenseNet201, and InceptionResNetV2—used to classify OCT images into four categories: Normal, CNV (Choroidal Neovascularization), DME (Diabetic Macular Edema), and Drusen. These matrices show how well each model performed by highlighting the correct and incorrect predictions for each class. The InceptionV3 model (Fig. 10a) performs well overall but shows some misclassifications, such as Normal images being mistaken for Drusen and occasional confusion between CNV and DME. The DenseNet201 model (Fig. 10b) improves on this with fewer errors, although similar patterns of misclassification persist. On the other hand, the InceptionResNetV2 model (Fig. 10c) demonstrates the best performance, with minimal errors and robust differentiation between classes. A closer look at the misclassifications reveals that similarities in retinal features—such as overlapping patterns between Normal and Drusen or CNV and DME—might have contributed to these errors. To address this, enhancing preprocessing techniques, such as feature normalization or focusing on key retinal regions, could help reduce confusion. Additionally, expanding the dataset to include a wider variety of examples could further improve the models’ ability to distinguish between challenging cases. The standout performance of InceptionResNetV2 is likely due to its advanced architecture, which effectively captures complex and subtle features in the images. Combining these insights with ensemble approaches could help achieve even better classification accuracy in the future.

Confusion matrices showing classification results for OCT images in four categories (Normal, CNV, DME, Drusen) using the (a) InceptionV3, (b) DenseNet201, and (c) InceptionResNetV2 models. Each matrix highlights the true positives, false positives, false negatives, and true negatives for each class; DenseNet201 and InceptionResNetV2 show higher accuracy and fewer misclassifications than InceptionV3.

Table 4 shows that the RBLTL model performs strongly on the OCT dataset. With the pre-trained models InceptionV3, DenseNet201, and InceptionResNetV2 alone, the testing accuracies are 0.9530, 0.9610, and 0.9636 for best-1, best-2, and best-3, respectively. Integrating RBLTL with these models improves accuracy on both the training and testing datasets. The largest gains in testing accuracy are 0.0345 (3.45 percentage points) for best-1, 0.0280 (2.80 percentage points) for best-2, and 0.0284 (2.84 percentage points) for best-3, a notable performance boost in multiclass classification.
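The size of the RBLTL gains can be checked from the accuracies reported in this section: the baseline testing accuracies (0.9530, 0.9610, 0.9636) and the RBLTL results for best-1, best-2, and best-3 (0.9875, 0.9890, 0.9920). The sketch below expresses each difference in percentage points.

```python
# Testing accuracies quoted in this section.
baseline = {"best-1": 0.9530, "best-2": 0.9610, "best-3": 0.9636}  # pre-trained model alone
rbltl = {"best-1": 0.9875, "best-2": 0.9890, "best-3": 0.9920}     # with RBLTL

gains_pp = {k: 100 * (rbltl[k] - baseline[k]) for k in baseline}
for k, g in gains_pp.items():
    print(f"{k}: +{g:.2f} percentage points")
# best-1: +3.45 percentage points
# best-2: +2.80 percentage points
# best-3: +2.84 percentage points
```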

Table 5 assesses the efficacy of different models on the OCT testing set. The RBLTL model performs strongly across all metrics, including classifier accuracy, average F1-score, average sensitivity, average precision, and average specificity, and reaches an accuracy of 0.9952 on the testing set. The accuracies achieved in these experiments surpass those reported by Najeeb37, Nugroho38, and Kermany18.

With knowledge transfer and appropriate hyperparameters, the proposed RBLTL model therefore shows strong capabilities in classifying OCT medical images related to DME and AMD.

In addition, Table 6 compares the RBLTL framework with state-of-the-art methods. The proposed ensemble models, which apply transfer learning with pre-trained networks (InceptionV3, DenseNet201, and InceptionResNetV2), outperform existing approaches on multiple evaluation metrics. For instance, RBLTL + InceptionResNetV2 achieves the highest accuracy (99.20%), surpassing methods such as Kermany et al. (96.10%) and Parsa Riazi (98.71%), while also delivering higher average precision (99.60%), recall (99.46%), specificity (99.34%), and F1-score (99.20%). The confidence intervals and standard deviations reported in Table 4 quantify the variability of the results across runs, and the low standard deviations (e.g., 0.0026 for DenseNet201 and 0.0016 for InceptionV3) indicate that the framework's performance is stable. Together with the comparative analysis, these results support the robustness and adaptability of the RBLTL framework for multiclass OCT image classification of complex retinal diseases such as DME and AMD.

Table 7 presents an in-depth analysis of the performance of RBLTL + InceptionV3 in the best-1 accuracy experiment conducted on Google Colab. The model achieved an accuracy of 0.9875 (98.75%), an average precision of 0.9885, an average recall of 0.9877, an average specificity of 0.9875, and an average F1-score of 0.9875. Figure 10 gives a visual overview of the classification performance of the proposed models. In Fig. 10a, the InceptionV3 model performs well overall but shows notable misclassifications, such as Normal images being confused with Drusen and occasional errors between CNV and DME.

In the best-2 accuracy experiment conducted on Google Colab, the evaluation results of RBLTL + DenseNet201 are presented in Table 6. The model achieved an accuracy of 0.9890 (98.90%), an average precision of 0.9932, an average recall of 0.9906, an average specificity of 0.9909, and an average F1-score of 0.9890. The confusion matrix for DenseNet201 in Fig. 10b shows fewer errors than InceptionV3 across all classes, particularly in distinguishing Normal from Drusen images.

In the best-3 accuracy experiment conducted on Google Colab, Table 8 presents a comprehensive analysis of the performance of RBLTL + InceptionResNetV2. The model achieved an accuracy of 0.9920 (99.20%), an average precision of 0.9960, an average recall of 0.9946, an average specificity of 0.9934, and an average F1-score of 0.9920. Finally, Fig. 10c shows that InceptionResNetV2 achieves the best results, with minimal misclassifications across all categories. The stronger performance of DenseNet201 and InceptionResNetV2 reflects their ability to learn from and generalize on the OCT dataset, making them more reliable for diagnosing retinal conditions.

Discussion

In this research, precise tuning of the algorithm hyperparameters played a pivotal role in classifying OCT images of AMD and DME with RBLTL. Its importance is borne out by the experiments, in which the InceptionV3, DenseNet201, and InceptionResNetV2 models reached classification accuracies of 98.75%, 98.90%, and 99.20%, respectively. The same effect is visible for MobileNetV2 with transfer learning: the results in Table 9 show that a judicious selection of hyperparameters can substantially enhance the performance of reinforcement-based leveraging transfer learning.

Specifically, the ‘Adam’ optimizer with an ‘InitialLearnRate’ of \(10^{-3}\) was chosen for this study because this combination consistently delivered the best accuracy on both the training and testing datasets. Figure 11 shows four sample images with their predicted labels and the associated probabilities. For these four randomly selected images, the predicted labels match the true categories and the prediction probabilities approach 100%, illustrating the strong classification capability of the reinforcement-based leveraging transfer learning model.

In this study, RBLTL proved both efficient and stable. Accurate categorization of the original images is the cornerstone of successful image classification, and this is borne out empirically here: the best reinforcement-based model achieved a classification accuracy above 99%. Consequently, when combined with suitable hyperparameters, reinforcement-based leveraging transfer learning is a highly effective approach for the precise classification of OCT images associated with DME and AMD.

While the RBLTL framework demonstrates exceptional accuracy and adaptability, certain limitations must be acknowledged. First, the dataset primarily consists of images from a limited number of institutions, which may not fully represent the diversity of retinal conditions observed globally. This could impact the model’s ability to generalize to populations with different demographic or clinical characteristics. Second, although reinforcement Q-learning helps mitigate overfitting, there remains a possibility of overfitting to the specific training data due to the high complexity of pre-trained models and the relatively small testing dataset. Lastly, clinical applicability is constrained by the need for large-scale validation studies to ensure reliability in real-world diagnostic settings, particularly across varied imaging equipment and clinical protocols.

The RBLTL framework has significant implications for advancing automated diagnostics in ophthalmology. By achieving high classification accuracy for OCT images, the framework can assist clinicians in early and accurate detection of retinal diseases such as DME and AMD, potentially reducing vision loss in affected patients. Beyond retinal imaging, this framework could be extended to other medical imaging modalities, such as CT or MRI scans, where multiclass classification and feature extraction are critical. Furthermore, its reinforcement-based optimization strategy could be applied to tasks requiring real-time adaptability, such as telemedicine diagnostics or mobile health applications. This study thus serves as a foundation for developing robust, adaptive AI systems for broader medical and clinical applications.

Conclusion

This study presents the Reinforcement-Based Leveraging Transfer Learning (RBLTL) framework for the accurate classification of Optical Coherence Tomography (OCT) images of Diabetic Macular Edema (DME) and Age-related Macular Degeneration (AMD). By integrating reinforcement Q-learning with transfer learning and leveraging pre-trained models such as InceptionV3, DenseNet201, and InceptionResNetV2, the framework achieves exceptional classification accuracies of 98.75%, 98.90%, and 99.20% for Best-1, Best-2, and Best-3 scenarios, respectively. These results surpass those reported in prior studies, highlighting the robustness and reliability of the proposed method. The RBLTL framework capitalizes on reinforcement learning’s dynamic optimization capabilities, reducing overfitting and improving generalization across datasets. Performance metrics such as precision, recall, specificity, and F1-score further validate the model’s effectiveness in OCT image classification, with low validation losses across all experiments.

Future research could focus on expanding the dataset to include more diverse populations and imaging sources to improve clinical applicability and generalization. Additionally, the framework could be extended to other medical imaging modalities, such as MRI or CT scans, to explore its adaptability to various diagnostic challenges. Real-world deployment in clinical settings and integration with telemedicine platforms for automated diagnosis are other potential avenues for further exploration.

Data availability

The datasets analysed during the current study are available in the Large Dataset of Labeled Optical Coherence Tomography (OCT) and Chest X Ray Images 2018: https://data.mendeley.com/datasets/rscbjbr9sj/2.

References

Jiang, F. et al. Artificial intelligence in healthcare: past, present and future. Stroke Vascular Neurol. 2, 4 (2017).

Alanazi, H. O., Abdullah, A. H. & Qureshi, K. N. A critical review for developing accurate and dynamic predictive models using machine learning methods in medicine and health care. J. Med. Syst. 41, 1–10 (2017).

Al-Issa, Y. & Alqudah, A. M. A lightweight hybrid deep learning system for cardiac valvular disease classification. Sci. Rep. 12(1), 14297 (2022).

Podoleanu, A. et al. Optical coherence tomography for medical imaging and nondestructive testing. in Advances in 3OM: Opto-Mechatronics, Opto-Mechanics, and Optical Metrology vol. 12170 166–174 (SPIE, 2022).

Qureshi, K. N., Alhudhaif, A., Ali, M., Qureshi, M. A. & Jeon, G. Self-assessment and deep learning-based coronavirus detection and medical diagnosis systems for healthcare. Multimedia Syst. 1–10 (2022).

Alanazi, H., Abdullah, A., Qureshi, K. & Ismail, A. Accurate and dynamic predictive model for better prediction in medicine and healthcare. Irish J. Med. Sci. 187, 501–513 (2018).

Lim, L. S., Mitchell, P., Seddon, J. M., Holz, F. G. & Wong, T. Y. Age-related macular degeneration. Lancet 379(9827), 1728–1738 (2012).

Iqbal, A. Ovarian leiomyoma associated with serous cystadenoma: a case report of an uncommon entity. https://www.researchgate.net/publication/323614212 (2023).

Ferris, F. L. III et al. Clinical classification of age-related macular degeneration. Ophthalmology 120(4), 844–851 (2013).

An, G., Akiba, M., Omodaka, K., Nakazawa, T. & Yokota, H. Hierarchical deep learning models using transfer learning for disease detection and classification based on small number of medical images. Sci. Rep. 11(1), 4250 (2021).

Cheplygina, V., De Bruijne, M. & Pluim, J. P. Not-so-supervised: a survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Med. Image. Anal. 54, 280–296 (2019).

Das, V., Dandapat, S. & Bora, P. K. A data-efficient approach for automated classification of OCT images using generative adversarial network. IEEE Sens. Lett. 4(1), 1–4 (2020).

Xiao, Z. & Kunkel, G. J. Automatic Head Overcoat Thickness Measure with NASNet-Large-Decoder Net. in Proceedings of the Future Technologies Conference (FTC) 359, 159 (Springer Nature, 2021).

Younis, A., Shixin, L., Jn, S. & Hai, Z. Real-time object detection using pre-trained deep learning models MobileNet-SSD. in Proceedings of 2020 6th International Conference on Computing and Data Engineering 44–48 (2020).

Adole, E., Edirisinghe, B., Li & Bearchell, C. Investigation of faster-RCNN inception Resnet V2 on offline Kanji handwriting characters. in Proceedings of the International Conference on Pattern Recognition and Intelligent Systems 1–5 (2020).

Chauhan, T., Palivela, H. & Tiwari, S. Optimization and fine-tuning of DenseNet model for classification of COVID-19 cases in medical imaging. Int. J. Inform. Manage. Data Insights 1(2), 100020 (2021).

He, X., Fang, L., Rabbani, H., Chen, X. & Liu, Z. Retinal optical coherence tomography image classification with label smoothing generative adversarial network. Neurocomputing 405, 37–47 (2020).

Kermany, D. S. et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172(5), 1122–1131.e9 (2018).

Serener, A. & Serte, S. Dry and wet age-related macular degeneration classification using oct images and deep learning. in 2019 Scientific Meeting on electrical-electronics & Biomedical Engineering and Computer Science (EBBT) 1–4 (IEEE, 2019).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521(7553), 436–444 (2015).

Das, V., Dandapat, S. & Bora, P. K. Automated classification of retinal OCT images using a deep multi-scale fusion CNN. IEEE Sens. J. 21(20), 23256–23265 (2021).

Li, X., Shen, X., Zhou, Y., Wang, X. & Li, T. Q. Classification of breast cancer histopathological images using interleaved DenseNet with SENet (IDSNet). PLoS ONE 15(5), e0232127 (2020).

Woo, S., Park, J., Lee, J. Y. & Kweon, I. S. CBAM: Convolutional block attention module. in Proceedings of the European conference on computer vision (ECCV) 3–19 (2018).

Sidibe, D. et al. An anomaly detection approach for the identification of DME patients using spectral domain optical coherence tomography images. Comput. Methods Programs Biomed. 139, 109–117 (2017).

Kim, K. G. Book review: deep learning. Healthc. Inf. Res. 22(4), 351 (2016).

Abdar, M. et al. A new direct and cross-based binary residual feature fusion with uncertainty-aware module for medical image classification. Inf. Sci. 577, 353–378 (2021).

Sunija, A., Kar, S., Gayathri, S., Gopi, V. P. & Palanisamy, P. Octnet: A lightweight cnn for retinal disease classification from optical coherence tomography images. Comput. Methods Programs Biomed. 200, 105877 (2021).

Altan, G. DeepOCT: an explainable deep learning architecture to analyze macular edema on OCT images. Eng. Sci. Technol. Int. J. 34, 101091 (2022).

Abd El-Khalek, A. et al. A concentrated machine learning-based classification system for age-related macular degeneration (AMD) diagnosis using fundus images. Sci. Rep. 14(1), 2434 (2024).

Chen, R. et al. Translating color fundus photography to indocyanine green angiography using deep-learning for age-related macular degeneration screening. Npj Digit. Med. 7(1), 34 (2024).

Ali, M. A. et al. AMDNet23: hybrid CNN-LSTM deep learning approach with enhanced preprocessing for age-related macular degeneration (AMD) detection. Intell. Syst. Appl. 21, 200334 (2024).

Mathieu, A. et al. DeepAlienorNet: a deep learning model to extract clinical features from colour fundus photography in age-related macular degeneration. Acta Ophthalmol. (2024).

Gholami, S. et al. Federated learning for diagnosis of age-related macular degeneration. Front. Med. 10 (2023).

Pfau, M. et al. Multimodal imaging and deep learning in geographic atrophy secondary to age‐related macular degeneration. Acta Ophthalmol. 101(8), 881–890 (2023).

Nguyen, T. D. et al. Retinal disease diagnosis using deep learning on ultra-wide-field fundus images. Diagnostics 14(1), 105 (2024).

Hemalakshmi, G., Murugappan, M., Sikkandar, M. Y., Begum, S. S. & Prakash, N. Automated retinal disease classification using hybrid transformer model (SViT) using optical coherence tomography images. Neural Comput. Appl. 1–18 (2024).

Najeeb, S. et al. Classification of retinal diseases from OCT scans using convolutional neural networks. in 10th International conference on electrical and computer engineering (ICECE) 465–468 (IEEE, 2018).

Nugroho, K. A. A comparison of handcrafted and deep neural network feature extraction for classifying optical coherence tomography (OCT) images. in 2nd international conference on informatics and computational sciences (ICICoS) 1–6 (IEEE, 2018).

Ho, W. H. et al. Artificial intelligence classification model for macular degeneration images: a robust optimization framework for residual neural networks. BMC Bioinform. 22, 1–10 (2021).

Perdomo, O., Otálora, S., González, F. A., Meriaudeau, F. & Müller, H. Oct-net: A convolutional network for automatic classification of normal and diabetic macular edema using sd-oct volumes. in IEEE 15th international symposium on biomedical imaging (ISBI 2018) 1423–1426 (IEEE, 2018).

Hussein, S. M., Al-Saadi, E. H. & Al-Sultan, A. Y. Convolutional Neural Network (CNN) for diagnosing age-related macular degeneration (AMD) in retinal images.

Govindaiah, A., Smith, R. T. & Bhuiyan, A. A new and improved method for automated screening of age-related macular degeneration using ensemble deep neural networks. in 40th Annual international conference of the IEEE engineering in medicine and biology society (EMBC) 702–705 (IEEE, 2018).

Huang, X. et al. GABNet: global attention block for retinal OCT disease classification. Front. Neurosci. 17, 1143422 (2023).

Li, R. et al. Ocular biomarkers of cognitive decline based on deep-learning retinal vessel segmentation. BMC Geriatr. 24(1), 28 (2024).

Naz, S., Hassan, T., Akram, M. U. & Khan, S. A. A practical approach to OCT based classification of diabetic macular edema. in International Conference on Signals and Systems (ICSigSys) 217–220 (IEEE, 2017).

Chen, D., Lu, Y., Li, Z. & Young, S. Performance evaluation of deep transfer learning on multi-class identification of common weed species in cotton production systems. Comput. Electron. Agric. 198, 107091 (2022).

Rahman, T. et al. Transfer learning with deep convolutional neural network (CNN) for pneumonia detection using chest X-ray. Appl. Sci. 10(9), 3233 (2020).

Pedregosa, F. et al. Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12 (2011).

Thakoor, K. et al. Hybrid 3D-2D deep learning for detection of neovascular age-related macular degeneration using optical coherence tomography B-scans and angiography volumes. in 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI) 1600–1604 (IEEE, 2021).

Hwang, D. K. et al. Artificial intelligence-based decision-making for age-related macular degeneration. Theranostics 9(1), 232 (2019).

Lu, W. et al. Deep learning-based automated classification of multi-categorical abnormalities from optical coherence tomography images. Transl. Vis. Sci. Technol. 7(6), 41 (2018).

Fang, L. et al. Attention to lesion: lesion-aware convolutional neural network for retinal optical coherence tomography image classification. IEEE Trans. Med. Imaging 38(8), 1959–1970 (2019).

Ho, W. H., Huang, T. H., Yang, P. Y., Chou, J. H. & Huan, H. S. Artificial intelligence classification model for macular degeneration images: a robust optimization framework for residual neural networks. BMC Bioinform. 22, 1–10 (2021).

Acknowledgments

The authors would like to acknowledge the support of Prince Sultan University, Saudi Arabia in paying the Article Processing Charges (APC) for this publication. The final manuscript was read and approved by all listed authors.

Author information

Contributions

Yawar Abbas (Y.A.) and Kamran Aziz (K.A.) jointly conceptualized the study and designed the methodology. Y.A. led the data acquisition and analysis phases. Both Y.A. and K.A. were primarily responsible for drafting the manuscript and revising it critically for important intellectual content.

Hassan Jalil Hadi (H.J.H.) and Naveed Ahmad (N.A.) contributed to the development of the methodology and assisted significantly in analyzing the data. They also participated actively in revising the manuscript for key intellectual content and ensured the integrity of the work.

Muhammad Usman Akhtar (M.U.A.) provided technical expertise in the software tools used in the study and contributed to the drafting and revision of the manuscript, particularly the sections dealing with software implementation and data analysis.

Mohammed Ali Alshara (M.A.A.) played a significant role in overseeing the research ethics and was pivotal in ensuring that the manuscript met research integrity standards. M.A.A. also revised the manuscript for content related to ethical considerations and data accuracy.

Prasun Chakrabarti (P.C.) contributed to refining the study's analytical frameworks and was instrumental in interpreting the analysis results. P.C. also helped enhance the discussion sections of the manuscript and reviewed the final manuscript draft.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Abbas, Y., Hadi, H.J., Aziz, K. et al. Reinforcement-based leveraging transfer learning for multiclass optical coherence tomography images classification. Sci Rep 15, 6193 (2025). https://doi.org/10.1038/s41598-025-89831-2
