Abstract

The non-Gaussian characteristic of the external disturbance poses a great challenge for system modeling and identification. This paper develops a robust recursive estimation algorithm for the errors-in-variables nonlinear system with the impulsive noise. The algorithm is formulated by minimizing the continuous logarithmic mixed p-norm criterion, and is capable of giving a robust estimation against the impulsive noise through an adjustable weight gain. The nonlinear monomials of the noisy input are estimated by the recursive expressions based on the bias correction. Furthermore, a continuous logarithmic mixed p-norm based robust hierarchical estimation algorithm is derived to reduce the computational loads. The simulation studies demonstrate the feasibility of the proposed algorithms.


Introduction

System identification establishes mathematical models or quantitative relations that reflect the characteristics of practical plants from collected data1,2. Conventional algorithms, such as the least squares algorithms3,4,5, the gradient descent algorithms6,7,8 and the maximum likelihood algorithms9,10,11,12, generally assume that the system inputs are measured accurately and that only the output data are corrupted by noise13,14,15. However, this assumption may lead to model errors because it ignores the input noise caused by sensor failures and environmental interference16,17.

For decades, the identification of errors-in-variables (EIV) systems has been an active research topic18,19,20. EIV systems are systems in which the input noise is taken into account as well as the output noise21,22,23. Zheng presented a bias-eliminated recursive least squares algorithm for linear EIV systems24. Söderström surveyed the identification approaches for EIV systems and discussed identifiability under weak assumptions25. Fan et al. proposed an instrumental variable method for EIV systems based on correlation analysis26. In these works, the output disturbances are assumed to be white Gaussian processes.

The external noise can be regarded as a summation of random kinetic energy from multiple sources, and its statistical characteristics have an important influence on parameter estimation27,28,29. The hypothesis of Gaussian noise may be inappropriate in many engineering applications due to sensor malfunction, data transmission failure and sudden cyberattacks30,31,32. In fact, the injected attack signal of a deception attack can be regarded as non-Gaussian noise33. Bi et al.34 proposed a novel adaptive fuzzy control approach for a class of uncertain nonlinear cyber-physical systems to mitigate the effects of cyberattacks. When non-Gaussian noise or outliers are encountered, the traditional least squares algorithm suffers severe performance deterioration and is no longer optimal35,36. An intuitive approach is to detect the outliers and run the estimation algorithm on the data set with the detected outliers removed, but it is difficult to guarantee that all outliers are found, especially for nonlinear systems37,38,39,40.

To cope with the identification problem with outliers, Stojanovic and Nedic constructed a least favorable function-based cost function and presented a robust recursive estimation algorithm for output error models with time-varying parameters, and successfully applied it to model the pneumatic servo drives41. Guo and Zhi developed a generalized hyperbolic secant adaptive filter, in which the disturbance is modeled by alpha-stable noise42. Yang et al. modeled the distribution of outliers by generalized hyperbolic skew Student’s t distribution and presented an expectation-maximization algorithm for the nonlinear state-space system43.

Although there are many identification methods for linear and nonlinear systems with impulsive noise, to the best of our knowledge, the identification of the EIV nonlinear system with impulsive noise has not been fully investigated. Therefore, this paper proposes robust recursive identification schemes for the EIV nonlinear system contaminated by impulsive noise. Since the input nonlinearity contains the unknown input noise, a bias correction scheme is presented to obtain unbiased estimates of the monomial basis functions of the input nonlinearity. Furthermore, a differentiable continuous logarithmic mixed p-norm cost function is formulated and the robust recursive estimation algorithms are presented. The main contributions of this paper are as follows.

- Construct a differentiable continuous logarithmic mixed p-norm cost function for the EIV nonlinear system and optimize this criterion function to resist the influence of impulsive noise and improve the robustness of the parameter estimation.

- Introduce the bias correction principle to estimate the unknown nonlinear input functions and propose a continuous logarithmic mixed p-norm based robust recursive estimation (CLMpN-RRE) algorithm for the EIV nonlinear system with impulsive noise.

- Divide the identification model into sub-models and develop a CLMpN-based robust hierarchical estimation (CLMpN-RHE) algorithm to improve the calculation efficiency.

The rest of the paper is organized as follows. Section “Problem description” discusses the system model. Section “The continuous logarithmic mixed p-norm based robust recursive estimation algorithm” derives the CLMpN-RRE algorithm based on the continuous logarithmic mixed p-norm criterion. To improve the computational efficiency, Section “The continuous logarithmic mixed p-norm based robust hierarchical estimation algorithm” presents the CLMpN-RHE algorithm by means of the model decomposition. Section “Simulation studies” provides two simulation examples to verify the effectiveness of the proposed algorithms. Section “Conclusions” offers some conclusions.

Problem description

Consider the following errors-in-variables nonlinear system:

where u(k) and y(k) are the measured input and output, w(k) and v(k) are stochastic noises with zero means and variances \(\sigma ^2\) and \(\varsigma ^2\), respectively, \({\bar{\xi }}(k):=f(\xi (k))\) is the nonlinear function of the unmeasurable true input \(\xi (k)\). Various models can be applied to describe the nonlinearity \(f(\xi (k))\). A feasible scheme is to express the nonlinearity as the combination of the monomial functions:

The polynomial functions A(z) and B(z) are defined as:

where \(z^{-1}\xi (k)=\xi (k-1)\). Due to abrupt disturbances and human errors in real systems, the collected data contain some outliers that deviate greatly from the rest of the data. To describe the characteristics of these outliers, w(k) and v(k) are assumed to be impulsive noises.

Let \({\mathbb {R}}^{n}\) be the real vector space composed of the n-dimensional vectors and \({\mathbb {R}}^{m\times n}\) be the real matrix space composed of the matrices with m rows and n columns. Define

Let \(b_0=1\). Inserting (3) into (1) gives

Based on the sum of squared-error criterion (SSEC), the identification model in (5) can be easily identified. However, the SSEC-based algorithms are sensitive to outliers, since squaring magnifies the contribution of large errors to the criterion and leads to inaccurate estimates44,45. This paper aims to develop robust recursive algorithms to estimate \({\varvec{\vartheta }}\) from measurements with impulsive noise.
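The sensitivity of the SSEC to a single outlier can be seen in the simplest possible setting. The sketch below is only an illustration and is not taken from the paper: it estimates a scalar constant, for which the SSEC minimiser is the sample mean, and the data values and the function name `least_squares_constant` are hypothetical.

```python
def least_squares_constant(samples):
    """Minimiser of sum_j (y_j - theta)^2 over a scalar theta, i.e. the sample mean."""
    return sum(samples) / len(samples)


# Ten measurements of a constant equal to 1 (illustrative values).
clean = [1.0] * 10
# The same data with one measurement replaced by an outlier.
contaminated = [1.0] * 9 + [101.0]

estimate_clean = least_squares_constant(clean)
estimate_contaminated = least_squares_constant(contaminated)

if __name__ == "__main__":
    print("clean data       :", estimate_clean)
    print("with one outlier :", estimate_contaminated)
```

One corrupted sample out of ten moves the least squares estimate from 1 to 11, which is the behaviour the robust criterion is meant to avoid.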

The continuous logarithmic mixed p-norm based robust recursive estimation algorithm

To take advantage of the characteristics of the p-norm to resist the outliers, Zayyani comprehensively considered various p norms (\(1\leqslant p\leqslant 2\)) and proposed a continuous mixed p-norm (CMpN)46, which can be defined as

where \(\lambda _k(p)\) represents the weight function and satisfies \(\int _{1}^2\lambda _k(p)\textrm{d}p=1\), \(e(k):=y(k)-{\varvec{\varphi }}^{\textrm{T}}(k){\varvec{\vartheta }}\in {\mathbb {R}}\) denotes the error and \(\textrm{E}(\cdot )\) denotes the expectation operator. However, the cost function \(J_1({\varvec{\vartheta }})\) is not differentiable at \(e(k)=0\). Moreover, the CMpN-based identification algorithm suffers from a stability problem in the impulsive noise environment. It has been found that the logarithmic transformation can decrease the effect of impulsive noise and increase the robustness of the parameter estimation47. Thus the term \(\textrm{E}\{|e(k)|^p\}\) in \(J_1({\varvec{\vartheta }})\) is replaced by \(\textrm{E}\{\ln [1+|e(k)|^p]\}\approx \frac{1}{k}\sum _{j=1}^k\ln [1+|e(j)|^p]\), which leads to the continuous logarithmic mixed p-norm criterion, defined by:

where \(e(j):=y(j)-{\varvec{\varphi }}^{\textrm{T}}(j){\varvec{\vartheta }}\) and \(\tau _0>0\) is a small positive number to ensure the differentiability of \(J_2({\varvec{\vartheta }})\). The constant \(\frac{1}{k}\) is removed in \(J_2({\varvec{\vartheta }})\) because multiplying the objective function by a constant does not affect the extreme point in optimization problems. Thus we have

where

When \(\lambda _k(p)=\frac{1}{p\ln 2}\), the equality constraint \(\int _{1}^2\lambda _k(p)\textrm{d}p=1\) is met and \(\zeta (k)\) can be computed by

where \(\tau (k):=\sqrt{\tau _0+e^2(k)}=\sqrt{\tau _0+(y(k)-{\varvec{\varphi }}^{\textrm{T}}(k){\varvec{\vartheta }})^2}\). Assume that \({\varvec{\varphi }}(k)\) is persistently exciting. From (6), we have

Define

Applying the matrix inversion formula \((\varvec{A}+\varvec{B}\varvec{C})^{-1}=\varvec{A}^{-1}-\varvec{A}^{-1}\varvec{B}(\varvec{I}+\varvec{C}\varvec{A}^{-1}\varvec{B})^{-1}\varvec{C}\varvec{A}^{-1}\) to (8), we have

where

Equation (7) can be written as

Thus we have the following recursive form:

The information vector \({\varvec{\varphi }}(k)\) contains the unknown \(\xi ^i(k)\) and \({\bar{\xi }}(k)\). To implement the algorithm in (9)–(11), their estimates should be calculated. From (2), the unmeasurable true input \(\xi (k)\) is related to the collected input u(k). Based on the bias compensation idea, one can assume that the monomial \(\xi ^i(k)\) satisfies

where \(r_i(k)\) is a correction term between the true input monomial \(\xi ^i(k)\) and the measured input monomial \(u^i(k)\). Inserting (2) into (12) gives

where \(C_i^j\) (\(1\leqslant j\leqslant i\)) denotes the combinatorial number. Thus we have

Inserting (13) into (12) yields

Since w(k) is a stochastic noise with zero mean and variance \(\sigma ^2\), we have

Taking i in (14) one by one, we can recursively obtain the estimate of the monomials \(\xi (k),\xi ^2(k),\cdots ,\xi ^m(k)\) by \(u^1(k)+r_1(k),u^2(k)+r_2(k),\cdots ,u^m(k)+r_m(k)\), respectively, e.g.,

Substituting the obtained \(i-1\) equations into (14), one obtains the estimate \({\hat{\xi }}^i(k)\) of \(\xi ^i(k)\). From (3), the estimate of the nonlinear input \({\bar{\xi }}(k)\) can be calculated by

where the estimates \({\hat{\xi }}^i(k)\) and \({\hat{c}}_i(k)\) are in the place of their true values \(\xi ^i(k)\) and \(c_i\) in (3). Thus the following recursive relations can be acquired:

Equations (14)–(25) form the continuous logarithmic mixed p-norm based robust recursive estimation (CLMpN-RRE) algorithm for EIV nonlinear system with impulsive noise in (1) and (2).
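The bias correction idea behind the monomial estimates can be checked numerically. The following is a minimal sketch under stated assumptions rather than a reproduction of (14)–(18): it assumes \(u(k)=\xi (k)+w(k)\), expands \(u^i(k)\), and replaces the noise powers by their expectations with \(\textrm{E}[w]=0\), \(\textrm{E}[w^2]=\sigma ^2\) and, as an extra assumption, \(\textrm{E}[w^3]=0\). Gaussian noise is used only to keep the check simple, and the function names and numerical values are hypothetical.

```python
import random


def corrected_monomials(u, sigma2):
    """Bias-corrected estimates of xi, xi^2 and xi^3 from one noisy sample u = xi + w.

    Assumes E[w] = 0, E[w^2] = sigma2 and E[w^3] = 0 (symmetric noise).
    """
    xi1 = u                           # E[u]   = xi
    xi2 = u ** 2 - sigma2             # E[u^2] = xi^2 + sigma2
    xi3 = u ** 3 - 3.0 * sigma2 * xi1  # E[u^3] = xi^3 + 3 * xi * sigma2
    return xi1, xi2, xi3


def average_estimates(xi, sigma, n, seed=0):
    """Average the corrected monomials over n noisy observations of a fixed xi."""
    rng = random.Random(seed)
    sums = [0.0, 0.0, 0.0]
    for _ in range(n):
        u = xi + rng.gauss(0.0, sigma)
        for i, est in enumerate(corrected_monomials(u, sigma ** 2)):
            sums[i] += est
    return [s / n for s in sums]


if __name__ == "__main__":
    xi, sigma = 1.5, 0.3
    print("true monomials     :", [xi, xi ** 2, xi ** 3])
    print("averaged estimates :", average_estimates(xi, sigma, 200_000))
```

Without the correction terms, the averages of \(u^2(k)\) and \(u^3(k)\) would be offset by \(\sigma ^2\) and \(3\xi \sigma ^2\), respectively.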

Remark 1

The gain \(\varvec{G}(k)\) in (20) depends on \({\hat{\zeta }}(k)\), which in turn depends on \({\hat{\tau }}^2(k)\) in the denominator of (24). When an outlier is encountered, the sudden increase of the error \(\epsilon (k):=y(k)-{\hat{{\varvec{\varphi }}}}^{\textrm{T}}(k){\hat{{\varvec{\vartheta }}}}(k-1)\in {\mathbb {R}}\) leads to a rapid decay of \({\hat{\zeta }}(k)\), which results in a negligible change of \(\varvec{G}(k)\). Thus the CLMpN-RRE algorithm is able to resist the impulsive noise.
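The decay of the weight with the error size can be illustrated numerically. The sketch below rests on a reconstruction, not on the paper's printed formula: it assumes that the criterion smooths \(|e|^p\) as \(\tau ^p\) with \(\tau =\sqrt{\tau _0+e^2}\), so that differentiating \(\ln (1+\tau ^p)\) gives the weight \(\zeta =\int _1^2\lambda (p)\,p\,\tau ^{p-2}/(1+\tau ^p)\,\textrm{d}p\). With \(\lambda (p)=1/(p\ln 2)\) this integral evaluates to \([\ln (1+\tau ^2)-\ln (1+\tau )]/(\tau ^2\ln 2\ln \tau )\). The function names and the value of `tau0` are illustrative.

```python
import math


def zeta_closed_form(err, tau0=1e-6):
    """Weight from the closed-form integral, assuming lambda(p) = 1 / (p ln 2)."""
    tau = math.sqrt(tau0 + err * err)
    if abs(tau - 1.0) < 1e-8:
        # Removable singularity at tau = 1 (ln tau = 0); the limit is 1 / (2 ln 2).
        return 1.0 / (2.0 * math.log(2.0))
    num = math.log1p(tau * tau) - math.log1p(tau)
    return num / (tau * tau * math.log(2.0) * math.log(tau))


def zeta_numeric(err, tau0=1e-6, n=2000):
    """Same weight by composite Simpson integration over p in [1, 2] (n must be even)."""
    tau = math.sqrt(tau0 + err * err)

    def integrand(p):
        lam = 1.0 / (p * math.log(2.0))
        return lam * p * tau ** (p - 2.0) / (1.0 + tau ** p)

    h = 1.0 / n
    total = integrand(1.0) + integrand(2.0)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * integrand(1.0 + i * h)
    return total * h / 3.0


if __name__ == "__main__":
    for err in (0.1, 1.0, 10.0, 100.0):
        print(f"|e| = {err:6.1f}  zeta = {zeta_closed_form(err):.6g}")
```

Under these assumptions the weight falls off roughly like \(1/e^2\) for large errors, so an outlier enters the gain and the covariance update with a very small weight.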

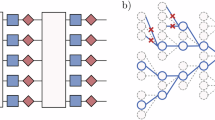

The continuous logarithmic mixed p-norm based robust hierarchical estimation algorithm

The CLMpN-RRE algorithm in (14)–(25) can efficiently identify the EIV nonlinear system with impulsive noise. To enhance the computational efficiency of the CLMpN-RRE algorithm, the following derives a CLMpN-based robust hierarchical estimation algorithm.

Let \(y_1(k):=y(k)-{\varvec{\psi }}^{\textrm{T}}(k)\varvec{c}\) and \(y_2(k):=y(k)-{\varvec{\phi }}^{\textrm{T}}(k){\varvec{\theta }}\). From (4), we have

Equations (26) and (27) are two fictitious identification sub-models. Define the cost functions

Similar to the derivation of the CLMpN-RRE algorithm, letting the gradients of the cost functions \(J_3({\varvec{\theta }})\) and \(J_3(\varvec{c})\) with respect to \({\varvec{\theta }}\) and \(\varvec{c}\) be zero vectors, respectively, and utilizing the estimates \({\hat{\xi }}^i(k)\), \(\hat{{\bar{\xi }}}(k)\) and \({\hat{c}}_i(k)\) in place of the unknown \(\xi ^i(k)\), \({\bar{\xi }}(k)\) and \(c_i\) give

Equations (14)–(18) and (28)–(39) form the continuous logarithmic mixed p-norm based robust hierarchical estimation (CLMpN-RHE) algorithm for the EIV system with impulsive noise in (1) and (2).

Remark 2

To demonstrate the reduction in computational load achieved by the CLMpN-RHE algorithm, Tables 1 and 2 list the computational loads of the CLMpN-RRE and CLMpN-RHE algorithms in terms of floating point operations.

When m is an odd number, the difference of the computational cost between two algorithms at each step is

When m is an even number, the difference is

This indicates that the CLMpN-RHE algorithm has higher computational efficiency than the CLMpN-RRE algorithm. In fact, the computational cost of the CLMpN-RRE algorithm mainly depends on the calculations of \(\varvec{L}(k)\) and \(\varvec{P}(k)\), which require approximately \(4n_0^2=4(n_1+m)^2\) operations. Similarly, the computational cost corresponding to these two terms in the CLMpN-RHE algorithm is approximately \(4(n_1^2+m^2)\). Note that \(n_1^2+m^2<(n_1+m)^2\). This explains why the CLMpN-RHE algorithm has less computational cost than the CLMpN-RRE algorithm.
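As a quick check of the saving implied by these two leading terms alone (the exact counts are those listed in Tables 1 and 2), expanding the square gives

\[4(n_1+m)^2-4(n_1^2+m^2)=8n_1m.\]

For instance, with the purely illustrative orders \(n_1=4\) and \(m=3\), the dominant terms are \(4\times 7^2=196\) and \(4\times (16+9)=100\), a difference of \(96=8\times 4\times 3\) operations per step.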

In recursive algorithms, the initial values of the parameter vectors to be estimated are usually taken as small real vectors, i.e., \({\hat{{\varvec{\theta }}}}(0)=\textbf{1}_{n_1}/p_0\) and \({\hat{\varvec{c}}}(0)=\textbf{1}_m/p_0\), where \(\textbf{1}_m\) denotes an m-dimensional column vector whose elements are 1 (i.e., \(\textbf{1}_m=[1,1,\cdots ,1]^{\textrm{T}}\in {\mathbb {R}}^m\)) and \(p_0\) is a large number, e.g., \(p_0=10^6\). The initial values \(\varvec{P}_1^{-1}(0)\) and \(\varvec{P}_2^{-1}(0)\) should be taken as small positive definite matrices, i.e., \(\varvec{P}_1^{-1}(0)=\varvec{I}_{n_1}/p_0\) and \(\varvec{P}_2^{-1}(0)=\varvec{I}_m/p_0\), where \(\varvec{I}_m\) denotes an identity matrix with m rows and m columns. In other words, \(\varvec{P}_1(0)=p_0\varvec{I}_{n_1}\) and \(\varvec{P}_2(0)=p_0\varvec{I}_m\). The initial values of the unknown variables are usually specified as zeros when \(k\leqslant 0\), i.e., \(\hat{{\bar{\xi }}}(k)=0\) (\(k\leqslant 0\)). These initial values may affect the results of the parameter estimation, but the overall effect is negligible.

The steps of implementing the CLMpN-RHE algorithm (14)–(18) and (28)–(39) are as follows.

1. Let \(k=1\), set \({\hat{{\varvec{\theta }}}}(0)=\textbf{1}_{n_1}/p_0\), \({\hat{\varvec{c}}}(0)=\textbf{1}_m/p_0\), \(\hat{{\bar{\xi }}}(k)=0\) (\(k\leqslant 0\)), \(\tau _0=1/p_0\), \(\varvec{P}_1(0)=p_0\varvec{I}_{n_1}\), \(\varvec{P}_2(0)=p_0\varvec{I}_m\), where \(p_0=10^6\). Give the data length L.

2. Record the measurements u(k) and y(k).

3. Compute \({\hat{\xi }}(k),{\hat{\xi }}^2(k),{\hat{\xi }}^3(k),\cdots ,{\hat{\xi }}^m(k)\) using (14)–(18). Compute \(\hat{{\bar{\xi }}}(k)\) using (37).

4. Construct \({\hat{{\varvec{\varphi }}}}(k)\), \({\hat{{\varvec{\phi }}}}(k)\) and \({\hat{{\varvec{\psi }}}}(k)\) using (34)–(36). Compute \({\hat{\zeta }}(k)\) and \({\hat{\tau }}(k)\) using (38) and (39).

5. Compute \(\varvec{G}_1(k)\) using (29) and \(\varvec{P}_1(k)\) using (30). Update \({\hat{{\varvec{\theta }}}}(k)\) using (28).

6. Compute \(\varvec{G}_2(k)\) using (32) and \(\varvec{P}_2(k)\) using (33). Update \({\hat{\varvec{c}}}(k)\) using (31).

7. Construct \({\hat{{\varvec{\vartheta }}}}(k)\) using (34).

8. If \(k<L\), increase k by 1 and go to Step 2; otherwise, stop and obtain the parameter estimation vector \({\hat{{\varvec{\vartheta }}}}(L)\).
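The loop structure of these steps can be sketched in code. The following is a simplified illustration under several assumptions and is not the paper's equations (28)–(39): it uses a generic model \(y(k)={\varvec{\phi }}^{\textrm{T}}(k){\varvec{\theta }}+{\varvec{\psi }}^{\textrm{T}}(k)\varvec{c}+v(k)\) whose regressors are supplied directly (the bias-corrected construction of Step 3 is omitted), a weight formula reconstructed for \(\lambda (p)=1/(p\ln 2)\), a weighted recursive least squares update for each sub-model, and the convention that the \(\varvec{c}\)-update uses the newest \({\hat{{\varvec{\theta }}}}(k)\). All function names and the toy data are hypothetical.

```python
import math
import random


def zeta(err, tau0=1e-6):
    """Robust weight (reconstructed form, assuming lambda(p) = 1 / (p ln 2))."""
    tau = math.sqrt(tau0 + err * err)
    if abs(tau - 1.0) < 1e-8:
        return 1.0 / (2.0 * math.log(2.0))
    num = math.log1p(tau * tau) - math.log1p(tau)
    return num / (tau * tau * math.log(2.0) * math.log(tau))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def weighted_rls_step(est, P, reg, target, weight):
    """One weighted RLS update, equivalent to P^{-1} <- P^{-1} + weight * reg reg^T."""
    Pr = [dot(row, reg) for row in P]              # P(k-1) reg (P is symmetric)
    denom = 1.0 + weight * dot(reg, Pr)
    gain = [weight * x / denom for x in Pr]        # gain vector
    innov = target - dot(reg, est)                 # a priori residual of the sub-model
    est = [e + g * innov for e, g in zip(est, gain)]
    n = len(reg)
    P = [[P[i][j] - gain[i] * Pr[j] for j in range(n)] for i in range(n)]
    return est, P


def robust_hierarchical_sketch(data, n1, m, p0=1e6, tau0=1e-6):
    """data: iterable of (phi, psi, y) with len(phi) == n1 and len(psi) == m."""
    theta, c = [1.0 / p0] * n1, [1.0 / p0] * m
    P1 = [[p0 if i == j else 0.0 for j in range(n1)] for i in range(n1)]
    P2 = [[p0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    for phi, psi, y in data:
        # One weight per step, computed from the overall a priori error.
        w = zeta(y - dot(phi, theta) - dot(psi, c), tau0)
        theta, P1 = weighted_rls_step(theta, P1, phi, y - dot(psi, c), w)
        c, P2 = weighted_rls_step(c, P2, psi, y - dot(phi, theta), w)
    return theta, c


def demo(n=10000, seed=1):
    """Toy data: Gaussian regressors, small Gaussian noise, every 20th sample an outlier."""
    rng = random.Random(seed)
    theta_true, c_true = [0.5, -0.3], [1.0, 0.4]
    data = []
    for k in range(n):
        phi = [rng.gauss(0.0, 1.0) for _ in theta_true]
        psi = [rng.gauss(0.0, 1.0) for _ in c_true]
        v = rng.gauss(0.0, 0.05)
        if k % 20 == 19:
            v += rng.choice((-1.0, 1.0)) * 50.0
        data.append((phi, psi, dot(phi, theta_true) + dot(psi, c_true) + v))
    return robust_hierarchical_sketch(data, 2, 2), (theta_true, c_true)


if __name__ == "__main__":
    (theta_hat, c_hat), (theta_true, c_true) = demo()
    print("theta:", theta_hat, "true:", theta_true)
    print("c    :", c_hat, "true:", c_true)
```

Each sub-model update only involves an \(n_1\times n_1\) or an \(m\times m\) covariance matrix, which is where the saving over a single \((n_1+m)\times (n_1+m)\) update comes from. Because the weights are evaluated at the a priori errors, such a reweighted recursion may converge slowly on short data records.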

The parameter estimation algorithms presented in this paper can be combined with other estimation strategies48,49,50,51,52,53,54,55 to model dynamic systems and can be applied to industrial process systems56,57,58,59,60,61,62,63,64,65 and manufacturing systems. The flowchart of identifying the errors-in-variables nonlinear systems with impulsive noise using the CLMpN-RHE algorithm is shown in Fig. 1.

Simulation studies

Example 1

Consider the following EIV nonlinear system with impulsive noise,

where the nonlinearity \({\bar{\xi }}(k)\) is a quadratic polynomial function. In simulation, the input u(k) is selected as a realization of the persistent excitation signal, w(k) and v(k) are chosen as symmetric \(\alpha\)-stable (S\(\alpha\)S) impulsive noises with the characteristic function:

where the shape coefficient \(0<\alpha \leqslant 2\) and the dispersion coefficient \(0<\tau \leqslant 1\). The S\(\alpha\)S distribution noise is heavier tailed and has a larger amplitude as \(\alpha\) decreases. When \(\alpha =2\), it corresponds to the Gaussian distribution.
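For readers who wish to reproduce such noise, S\(\alpha\)S samples can be drawn with the Chambers-Mallows-Stuck method. The sketch below is an illustration and not the paper's simulation code: it assumes the standard characteristic function \(\exp (-\tau |t|^{\alpha })\), and the function name `sas_sample` and its parameter names are hypothetical.

```python
import math
import random


def sas_sample(alpha, dispersion=1.0, rng=random):
    """Draw one symmetric alpha-stable sample (Chambers-Mallows-Stuck method).

    Assumes the characteristic function exp(-dispersion * |t|**alpha).
    Intended for moderate alpha (e.g. 1 <= alpha <= 2); very small alpha may overflow.
    """
    if not 0.0 < alpha <= 2.0:
        raise ValueError("alpha must lie in (0, 2]")
    v = rng.uniform(-math.pi / 2.0, math.pi / 2.0)   # uniform phase
    w = rng.expovariate(1.0)                          # unit-mean exponential
    while w == 0.0:                                   # guard against division by zero
        w = rng.expovariate(1.0)
    x = (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)
         * (math.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))
    return dispersion ** (1.0 / alpha) * x


if __name__ == "__main__":
    rng = random.Random(0)
    for alpha in (1.2, 1.6, 2.0):
        samples = [sas_sample(alpha, 1.0, rng) for _ in range(5000)]
        print(f"alpha = {alpha}: largest |sample| = {max(abs(s) for s in samples):.2f}")
```

With this parameterization, \(\alpha =2\) gives a Gaussian variable with variance \(2\tau\) and \(\alpha =1\) gives a Cauchy variable, which provides two simple sanity checks on the generator.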

Figure 2 depicts the S\(\alpha\)S impulsive noise process when \(\alpha =1.6\). To check the performance of the CLMpN-RRE algorithm, Fig. 3 shows the CLMpN-RRE estimates and errors, where the parameter estimation error is defined by the root mean square relative error \(\delta :=\Vert {\hat{{\varvec{\vartheta }}}}(k)-{\varvec{\vartheta }}\Vert /\Vert {\varvec{\vartheta }}\Vert \times 100\%\). It turns out that the CLMpN-RRE algorithm provides an estimation error that decreases monotonically as k increases and returns satisfactory estimates.

Figure 2. The impulsive noise versus k for Example 1.

Figure 3. The CLMpN-RRE estimation errors versus k for Example 1.
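The error measure \(\delta\) used in these figures is straightforward to compute. A minimal sketch follows, in which the function name and the sample vectors are hypothetical.

```python
import math


def relative_error_percent(estimate, true_value):
    """delta = ||estimate - true|| / ||true|| * 100, using the Euclidean norm."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(estimate, true_value)))
    den = math.sqrt(sum(b * b for b in true_value))
    return 100.0 * num / den


if __name__ == "__main__":
    print(relative_error_percent([3.3, 4.4], [3.0, 4.0]))
```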

To test the influence of the shape parameter \(\alpha\) on the CLMpN-RRE algorithm, Fig. 4 shows the CLMpN-RRE estimates and errors when \(\alpha\) takes three different values 1.2, 1.6 and 2.0. The results suggest that the CLMpN-RRE error curves keep declining as \(\alpha\) gets larger, and the CLMpN-RRE algorithm has the fastest convergence rate under the Gaussian noise (\(\alpha =2\)), which shows that the CLMpN-RRE algorithm is robust to both the impulsive disturbance and the Gaussian one.

Figure 4. The CLMpN-RRE estimation errors \(\delta\) versus k under different \(\alpha\) for Example 1 (\(\sigma ^2=0.30^2\)).

Example 2

Consider the following EIV nonlinear system with impulsive noise,

where the nonlinearity \({\bar{\xi }}(k)\) is a cubic polynomial function. Under the same simulation conditions as in Example 1, Fig. 5 displays the comparison of the CLMpN-RRE and CLMpN-RHE estimates. It illustrates that the CLMpN-RRE algorithm converges slightly faster than the CLMpN-RHE algorithm, but the CLMpN-RHE algorithm has higher computational efficiency (see Tables 1 and 2).

Figure 5. The CLMpN-RHE and CLMpN-RRE errors versus k for Example 2.

To examine the sensitivity of the estimation results to variations in the parameter values, each parameter is randomly perturbed with an amplitude of 0.1 around its original true value, and Monte Carlo simulations of the CLMpN-RHE algorithm with 50 different realizations are conducted. The mean values and variances of the CLMpN-RHE estimates and errors are shown in Table 3. As can be seen, when the parameter values change, the CLMpN-RHE estimates remain close to their true values, which indicates that the CLMpN-RHE estimates are not sensitive to variations of the parameters.

To validate the superiority of the proposed algorithm, Fig. 6 compares the estimation errors of the CLMpN-RHE algorithm and the bias-correction recursive least squares (BC-RLS) algorithm18. As can be seen, the CLMpN-RHE algorithm has higher parameter estimation accuracy than the BC-RLS algorithm in the non-Gaussian noise environment.

Figure 6. The comparison of the estimation errors of the CLMpN-RHE algorithm and the BC-RLS algorithm for Example 2.

Figure 7 illustrates the performance of the CLMpN-RHE algorithm by comparing the predicted outputs with the actual measurements from \(k=4001\) to \(k=4200\). It reveals that the model outputs are consistent with the actual ones, and that the CLMpN-RHE algorithm can give accurate predictions.

Figure 7. The CLMpN-RHE prediction and errors for Example 2.

Conclusions

This paper seeks a solution to the identification problem of the errors-in-variables nonlinear system under the realistic hypothesis of impulsive noise disturbance. The identification algorithm utilizes the continuous logarithmic mixed p-norm as the criterion function, and the correction term yields unbiased estimates of the nonlinear monomials of the noisy input. To reduce the computational load, a continuous logarithmic mixed p-norm based robust hierarchical estimation algorithm is presented. The proposed algorithms not only have good robustness against the impulsive noise, but also guarantee the accuracy of recursive estimation.

Although the continuous logarithmic mixed p-norm based robust hierarchical estimation algorithm can be used for recursive identification of errors-in-variables nonlinear systems with impulsive noise, the proposed algorithm is derived on the premise that the basis functions of the input nonlinearity are monomials. When the basis functions are not monomials, the input nonlinearity can be approximated by monomials or polynomials using the Taylor expansion, and the proposed algorithm can also be applied to identify the errors-in-variables nonlinear system. As is well known, when the nonlinear function is weakly nonlinear, the truncated Taylor expansion can accurately represent the nonlinear function. However, when the nonlinear function is strongly nonlinear, the truncated Taylor expansion may cause large errors and low estimation accuracy. Can a high-precision estimation algorithm be developed for errors-in-variables nonlinear systems with impulsive noise when the input nonlinearity is strongly nonlinear? This remains an open problem for future work.
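The limitation of a truncated Taylor expansion can be made concrete with a small numerical example. The sketch below is only an illustration: \(\sin (x)\) is chosen as a hypothetical input nonlinearity (it does not appear in the paper) and is approximated by its third-order Taylor polynomial, which is a combination of the monomials \(x\) and \(x^3\).

```python
import math


def cubic_taylor_sin(x):
    """Third-order Taylor polynomial of sin(x) about 0."""
    return x - x ** 3 / 6.0


def truncation_error(x):
    """Absolute error of the cubic approximation at the point x."""
    return abs(math.sin(x) - cubic_taylor_sin(x))


if __name__ == "__main__":
    for x in (0.1, 0.5, 1.0, 2.0, 3.0):
        print(f"x = {x:3.1f}  truncation error = {truncation_error(x):.6f}")
```

For inputs near the expansion point the cubic model is accurate, while for inputs around 3 the error exceeds the magnitude of the function itself, which is the situation described above as strong nonlinearity.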

Data availability

All data generated or analysed during this study are included in this published article.

References

Khajuria, R., Bukya, M., Lamba, R. & Kumar, R. Optimal parameter identification of solid oxide fuel cell using modified fire hawk algorithm. Sci. Rep. 14(1), 22469 (2024).

Ji, Y. & Jiang, A. Filtering-based accelerated estimation approach for generalized time-varying systems with disturbances and colored noises. IEEE Trans. Circuits Syst. II Expr. Briefs 70(1), 206–210 (2023).

Azzollini, I. A., Bin, M., Marconi, L. & Parisini, T. Robust and scalable distributed recursive least squares. Automatica 158, 111265 (2023).

Ding, F. et al. Hierarchical gradient- and least squares-based iterative estimation algorithms for input-nonlinear output-error systems by using the over-parameterization. Int. J. Robust Nonlinear Control 34(2), 1120–1147 (2024).

Samada, S. E., Puig, V. & Nejjari, F. Zonotopic recursive least-squares parameter estimation: application to fault detection. Int. J. Adapt. Control Signal Process. 37(4), 993–1014 (2023).

Fan, Y., Liu, X. & Li, M. Data filtering-based maximum likelihood gradient-based iterative algorithm for input nonlinear Box-Jenkins systems with saturation nonlinearity. Circuits Syst. Signal Process. 43(11), 6874–6910 (2024).

Chen, J. & Zhu, Q. Accelerated gradient descent estimation for rational models by using Volterra series: structure identification and parameter estimation. IEEE Trans. Circuits Syst. II Expr. Briefs 69(3), 1497–1501 (2022).

Xu, L. & Xu, H. Adaptive multi-innovation gradient identification algorithms for a controlled autoregressive autoregressive moving average model. Circuits Syst. Signal Process. 43(6), 3718–3747 (2024).

Almahorg, K. A. & Gohary, R. H. Maximum likelihood detection in single-input double-output non-Gaussian Barrage-Jammed systems. IEEE Trans. Signal Process. 71, 979–994 (2023).

Wang, X., Ma, J. & Xiong, W. Expectation-maximization estimation algorithm for bilinear state-space systems with missing outputs using Kalman smoother. Int. J. Control Autom. Syst. 21(3), 912–923 (2023).

Liu, L., Li, F., Liu, W. & Xia, H. Sliding window iterative identification for nonlinear closed-loop systems based on the maximum likelihood principle. Int. J. Robust Nonlinear Control 35(3), 1100–1116 (2025).

Pote, R. R. & Rao, B. D. Maximum likelihood-based gridless DOA estimation using structured covariance matrix recovery and SBL with grid refinement. IEEE Trans. Signal Process. 71, 802–815 (2023).

Liu, W. & Xiong, W. Robust gradient estimation algorithm for a stochastic system with colored noise. Int. J. Control Autom. Syst. 21(2), 553–562 (2023).

Lv, L., Sun, W. & Pan, J. Two-stage and three-stage recursive gradient identification of Hammerstein nonlinear systems based on the key term separation. Int. J. Robust Nonlinear Control 34(2), 829–848 (2024).

Xia, H. F. Maximum likelihood gradient-based iterative estimation for closed-loop Hammerstein nonlinear systems. Int. J. Robust Nonlinear Control 34(3), 1864–1877 (2024).

Knox, J., Blyth, M. & Hales, A. Advancing state estimation for lithium-ion batteries with hysteresis through systematic extended Kalman filter tuning. Sci. Rep. 14(1), 12472 (2024).

Yang, S. et al. A parameter adaptive method for state of charge estimation of lithium-ion batteries with an improved extended Kalman filter. Sci. Rep. 11(1), 5805 (2021).

Hou, J. et al. Bias-correction errors-in-variables Hammerstein model Identification. IEEE Trans. Ind. Electron. 70(7), 7268–7279 (2023).

Xu, L. & Zhu, Q. A delta operator state estimation algorithm for discrete-time systems with state time-delay. IEEE Signal Process. Lett. 32, 391–395 (2025).

Fan, S. J., et al. Correlation analysis-based stochastic gradient and least squares identification methods for errors-in-variables systems using the multi-innovation. Int. J. Control Autom. Syst. 19(1), 289–230 (2021).

Zong, T. C., Li, J. H. & Lu, G. P. Bias-compensated least squares and fuzzy PSO based hierarchical identification of errors-in-variables Wiener systems. Int. J. Syst. Sci. 54(3), 633–651 (2022).

Xie, L., Huang, J. C., Tao, H. F. & Yang, H. Z. Identification of dual-rate sampled errors-in-variables systems with time delays. Optim. Control Appl. Meth. 44(5), 2316–2337 (2023).

Kreiberg, D. A confirmatory factor analysis approach for addressing the errors-in-variables problem with colored output noise. Automatica 156, 111187 (2023).

Zheng, W. X. A bias correction method for identification of linear dynamic errors-in-variables models. IEEE Trans. Automat. Control 47(7), 1142–1147 (2002).

Söderström, T. A user perspective on errors-in-variables methods in system identification. Control Eng. Pract. 89, 56–69 (2019).

Fan, S. J. et al. Recursive identification of errors-in-variables systems based on the correlation analysis. Circuits Syst. Signal Process. 39(12), 5951–5981 (2020).

Zhao, S., Wang, X. & Liu, Y. Cauchy kernel correntropy-based robust multi-innovation identification method for the nonlinear exponential autoregressive model in non-Gaussian environment. Int. J. Robust Nonlinear Control 34(11), 7174–7196 (2024).

Lubeigt, C. et al. Approximate maximum likelihood time-delay estimation for two closely spaced sources. Signal Process. 210, 109056 (2023).

Wang, Y. J. et al. Online identification of Hammerstein systems with B-spline networks. Int. J. Adapt. Control Signal Process. 38(6), 2074–2092 (2024).

Chen, J. et al. Two iterative reweighted algorithms for systems contaminated by outliers. IEEE Trans. Instrum. Meas. 72, 6504911 (2023).

Li, M. & Liu, X. The filtering-based maximum likelihood iterative estimation algorithms for a special class of nonlinear systems with autoregressive moving average noise using the hierarchical identification principle. Int. J. Adapt. Control Signal Process. 33(7), 1189–1211 (2019).

Wang, S. Y., Wang, Z. D., Dong, H. L. & Chen, Y. Recursive state estimation for stochastic nonlinear non-Gaussian systems using energy-harvesting sensors: a quadratic estimation approach. Automatica 147, 110671 (2023).

Song, H. et al. Distributed filtering based on Cauchy-kernel-based maximum correntropy subject to randomly occurring cyber-attacks. Automatica 135, 110004 (2022).

Bi, Y. et al. Adaptive decentralized finite-time fuzzy secure control for uncertain nonlinear CPSs under deception attacks. IEEE Trans. Fuzzy Syst. 31(8), 2568–2580 (2023).

Filipovic, V., Nedic, N. & Stojanovic, V. Robust identification of pneumatic servo actuators in the real situations. Forschung im Ingenieurwesen 75, 183–196 (2011).

Stojanovic, V., Nedic, N., Prsic, D. & Dubonjic, L. Optimal experiment design for identification of ARX models with constrained output in non-Gaussian noise. Appl. Math. Model. 40(13–14), 6676–6689 (2016).

Pan, J., Liu, Y. & Shu, J. Gradient-based parameter estimation for a nonlinear exponential autoregressive time-series model by using the multi-innovation. Int. J. Control Automat. Syst. 21(1), 140–150 (2023).

Wang, L. et al. The filtering based maximum likelihood recursive least squares parameter estimation algorithms for a class of nonlinear stochastic systems with colored noise. Int. J. Control Automat. Syst. 21(1), 151–160 (2022).

Wang, X., Liu, Y. & Zhao, S. Robust fixed-point Kalman smoother for bilinear state-space systems with non-Gaussian noise and parametric uncertainties. Int. J. Adapt. Control Signal Process. 38(11), 3636–3655 (2024).

Mao, Y., Xu, C. & Chen, J. Regularization based reweighted estimation algorithms for nonlinear systems in presence of outliers. Nonlinear Dyn. 112, 13131–13146 (2024).

Stojanovic, V. & Nedic, N. Identification of time-varying OE models in presence of non-Gaussian noise: Application to pneumatic servo drives. Int. J. Robust Nonlinear Control 26(18), 3974–3995 (2016).

Guo, W. Y. & Zhi, Y. F. Nonlinear spline prioritization optimization generalized hyperbolic secant adaptive filtering against alpha-stable noise. Nonlinear Dyn. 111(15), 14351–14363 (2023).

Liu, X. P. & Yang, X. Q. Identification of nonlinear state-space systems with skewed measurement noises. IEEE Trans. Circuits Syst. I Regular Pap. 69(11), 4654–4662 (2022).

Chen, B. D., Xing, L., Zhao, H. Q., Zheng, N. N. & Príncipe, J. C. Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 64(13), 3376–3387 (2016).

Li, Q. & Wang, X. The maximum correntropy criterion-based robust hierarchical estimation algorithm for linear parameter-varying systems with non-Gaussian noise. Circuits Syst. Signal Process. 41(12), 7117–7144 (2022).

Zayyani, H. Continuous mixed \(p\)-norm adaptive algorithm for system identification. IEEE Signal Process. Lett. 21(9), 1108–1110 (2014).

Lu, L. et al. Robust adaptive algorithm for smart antenna system with alpha-stable noise. IEEE Trans. Circuits Syst. II Expr. Briefs 65(11), 1783–1787 (2018).

Xu, L. et al. Decomposition and composition modeling algorithms for control systems with colored noises. Int. J. Adapt. Control Signal Process. 38(1), 255–278 (2024).

Ding, F. et al. Recursive identification methods for general stochastic systems with colored noises by using the hierarchical identification principle and the filtering identification idea. Annu. Rev. Control 57, 100942 (2024).

Ding, F. et al. Filtered generalized iterative parameter identification for equation‐error autoregressive models based on the filtering identification idea. Int. J. Adapt. Control Signal Process. 38(4), 1363–1385 (2024).

Li, J. M. et al. Online parameter optimization scheme for the kernel function-based mixture models disturbed by colored noises. IEEE Trans. Circuits Syst. II: Express Briefs, 71(8), 3960–3964 (2024).

Liu, S. Y. et al. Joint iterative state and parameter estimation for bilinear systems with autoregressive noises via the data filtering. ISA Trans. 147, 337–349 (2024).

Xing, H. M. et al. Highly-efficient filtered hierarchical identification algorithms for multiple-input multiple-output systems with colored noises. Syst. Control Lett. 186, 105762 (2024).

Xu, L. et al. Novel parameter estimation method for the systems with colored noises by using the filtering identification idea. Syst. Control Lett. 186, 105774 (2024).

Xu, L. et al. The filtering-based recursive least squares identification and convergence analysis for nonlinear feedback control systems with coloured noises. Int. J. Syst. Sci. 55(16), 3461–3484 (2024).

Liu, X. et al. Parameter estimation and model-free multi-innovation adaptive control algorithms. Int. J. Control Autom. Syst. 22(11), 3509–3524 (2024).

Fan, Y. M. et al. Data filtering-based maximum likelihood gradient-based iterative algorithm for input nonlinear Box-Jenkins systems with saturation nonlinearity. Circuits Syst. Signal Process. 43(11), 6874–6910 (2024).

Ding, F. et al. Filtered auxiliary model recursive generalized extended parameter estimation methods for Box–Jenkins systems by means of the filtering identification idea. Int. J. Robust Nonlinear Control 33(10), 5510–5535 (2023).

Zhang, X. et al. Highly computationally efficient state filter based on the delta operator. Int. J. Adapt. Control Signal Process. 33(6), 875–889 (2019).

Ji, Y. et al. An identification algorithm of generalized time-varying systems based on the Taylor series expansion and applied to a pH process. J. Process Control 128, 103007 (2023).

Ding, F. et al. Hierarchical gradient- and least squares-based iterative algorithms for input nonlinear output-error systems using the key term separation. J. Frankl. Inst. 358(9), 5113–5135 (2021).

Zhang, X. et al. Optimal adaptive filtering algorithm by using the fractional-order derivative. IEEE Signal Process. Lett. 29, 399–403 (2022).

Zhang, X. et al. Hierarchical parameter and state estimation for bilinear systems. Int. J. Syst. Sci. 51(2), 275–290 (2020).

Zhang, X. et al. State estimation for bilinear systems through minimizing the covariance matrix of the state estimation errors. Int. J. Adapt. Control Signal Process. 33(7), 1157–1173 (2019).

Xu, L. Parameter estimation for nonlinear functions related to system responses. Int. J. Control Autom. Syst. 21(6), 1780–1792 (2023).

Acknowledgements

This work was supported by the Science and Technology Project of Henan Province (No. 202102210297).

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, X., Zhu, F. Robust recursive estimation for the errors-in-variables nonlinear systems with impulsive noise. Sci Rep 15, 6031 (2025). https://doi.org/10.1038/s41598-025-89969-z