Abstract

The concept of a smart city has spread as a solution ensuring wider availability of data and services to citizens, apart from as a means to lower the environmental footprint of cities. Crowd density monitoring is a cutting-edge technology that enables smart cities to monitor and effectively manage crowd movements in real time. By utilizing advanced artificial intelligence and video analytics, valuable insights are accumulated from crowd behaviour, assisting cities in improving operational efficiency, improving public safety, and urban planning. This technology also significantly contributes to resource allocation and emergency response, contributing to smarter, safer urban environments. Crowd density classification in smart cities using deep learning (DL) employs cutting-edge NN models to interpret and analyze information from sensors such as IoT devices and CCTV cameras. This technique trains DL models on large datasets to accurately count people in a region, assisting traffic management, safety, and urban planning. By utilizing recurrent neural networks (RNNs) for time-series data and convolutional neural networks (CNNs) for image processing, the model adapts to varying crowd scenarios, lighting, and angles. This manuscript presents a Deep Convolutional Neural Network-based Crowd Density Monitoring for Intelligent Urban Planning (DCNNCDM-IUP) technique on smart cities. The proposed DCNNCDM-IUP technique utilizes DL methods to detect crowd densities, which can significantly assist in urban planning for smart cities. Initially, the DCNNCDM-IUP technique performs image preprocessing using Gaussian filtering (GF). The DCNNCDM-IUP technique utilizes the SE-DenseNet approach, which effectually learns complex feature patterns for feature extraction. Moreover, the hyperparameter selection of the SE-DenseNet approach is accomplished by using the red fox optimization (RFO) methodology. Finally, the convolutional long short-term memory (ConvLSTM) methodology recognizes varied crowd densities. A comprehensive simulation analysis is conducted to demonstrate the improved performance of the DCNNCDM-IUP technique. The experimental validation of the DCNNCDM-IUP technique portrayed a superior accuracy value of 98.40% compared to existing DL models.

Similar content being viewed by others

Introduction

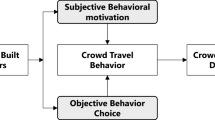

Crowd management is the capability of monitoring and directing a set of people to guarantee their safety1. Similar supporting technology is used to help move people to their destinations effectively and design a new service based on their behaviour. Crowd management is to take care of people on foot. It applies to people on public transport and in cars2. Every day, the entire solution permits the city to notice the activity of their citizens, allowing them to improve the urban schedule and resulting in improved satisfaction between city people and visitors. Through the fast development of smart securities, smart communities, and smart cities, abnormal behaviour studies have become a new point in the crowd-even investigation3. The influence of urbanization puts immense pressure on city planning and management. Miniaturization-sensing technologies and the advent of IoT devices have inspired the emergence of smart cities4. Crowd analysis is paramount in various smart city applications, viz., crowd counting, crowd tracking, and drone-based crowd analysis. In crowd examination, crowd counting is an essential everyday mission, such as gathering many walkers in an image or video sequence5.

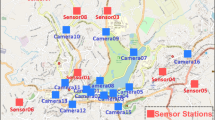

The crowd-counting work applies to many real-life situations, likely urban planning, huge gatherings, and pedestrian surveillance. Intelligent monitoring has increased tremendously in public places like hospitals, stations, shopping malls, and campuses. Among them, crowd point is the fundamental problem that disturbs the investigation of the unusual manner of the crowd6. Owing to its extensive coverage and affluent information, video-based sensing is a powerful sensing technology for crowd monitoring7. Therefore, there is a need for dedicated video processing techniques to extract crowd density parameters from raw images. Recently, it has achieved remarkable growth in video-based crowd density estimation algorithms, and video processing algorithms have shifted from classical computer vision to DL-based techniques, i.e., CNN8. Generally, DL-based techniques improved estimation accuracy significantly, but the extreme computing difficulty of DL models intensified the model’s performance, causing poor real-time performance9. Hence, enhancing the effectiveness of the crowd density estimation algorithm without any performance loss is crucial for improving public safety10. Figure 1 represents the structure of smart cities.

This manuscript presents a Deep Convolutional Neural Network-based Crowd Density Monitoring for Intelligent Urban Planning (DCNNCDM-IUP) technique on smart cities. The proposed DCNNCDM-IUP technique utilizes DL methods to detect crowd densities, which can significantly assist in urban planning for smart cities. Initially, the DCNNCDM-IUP technique performs image preprocessing using Gaussian filtering (GF). The DCNNCDM-IUP technique utilizes the SE-DenseNet approach, which effectually learns complex feature patterns for feature extraction. Moreover, the hyperparameter selection of the SE-DenseNet approach is accomplished by using red fox optimization (RFO) methodology. Finally, the convolutional long short-term memory (ConvLSTM) methodology recognizes varied crowd densities. A comprehensive simulation analysis is conducted to demonstrate the improved performance of the DCNNCDM-IUP technique. The key contribution of the DCNNCDM-IUP technique is listed below.

-

The DCNNCDM-IUP model utilizes DL techniques to precisely detect crowd densities, giving valuable insights for urban planning in smart cities. Advanced methods for real-time crowd analysis assist in better decision-making and optimization of urban spaces. Furthermore, crowd monitoring aims to improve safety, resource allocation, and overall city management.

-

The GF approach is employed as an image preprocessing step to improve the quality of input data, mitigating noise and improving clarity. This preprocessing technique confirms that the subsequent DL models receive cleaner, more accurate data. Refining the image quality significantly enhances the model’s performance in detecting crowd densities.

-

The SE-DenseNet-based methodology extracts key features from images, improving the model’s capability to detect crowd densities with high accuracy. This technique employs the power of dense connections and SE blocks to capture complex patterns. As a result, it significantly improves the precision and efficiency of crowd density detection in urban environments.

-

The RFO methodology is utilized to choose optimal hyperparameters for the SE-DenseNet model, improving its performance. By effectively searching the parameter space, RFO confirms that the model achieves the optimum possible accuracy for crowd density detection. This optimization process crucially improves the overall efficiency and robustness of the DL model.

-

The integration of ConvLSTM for recognizing varying crowd densities presents a novel methodology that integrates both spatial and temporal data. This method allows the model to capture dynamic changes in crowd behaviour over time, enhancing accuracy in real-world scenarios. By using ConvLSTM, the system can predict crowd patterns in complex urban environments with greater precision. This innovative integration improves the capability of the model to monitor and manage crowd dynamics in smart cities.

The remaining sections of the article are arranged as follows: “Literature review” section offers the literature review, and “Methods and techniques” represents the proposed method. Then, “Experimental analysis and results” section elaborates on the results evaluation, and “Conclusion” section completes the work.

Literature review

Gazis and Katsiri11 presented a new middleware that uses less power computing and cost-effective devices like Raspberry Pi to analyze data from WSN. It is mainly planned for internal settings such as past museums and buildings. It helps as a removal support by observing possession and determining the reputation of exact subjects, art exhibitions, or areas. The middleware uses a simple method, the MapReduce technique, to collect and allocate data on WSN through obtainable computer nodes. Guo et al.12 developed a lightweight system called the Ghost Attention Pyramid Networks (GAPNets) method. A lightweight GhostNet was assumed to support encrypting lower-level features. Furthermore, an effective pyramid fusion unit with a 4-branch structure was constructed to get a multiscale hierarchy representation while decreasing the parameter. Lastly, a decoder produces the estimation by using a sequence of transferred convolutional blocks. The authors13 presented Aquila Optimizer with TL-based Crowd Density Analysis for Sustainable Smart Cities (AOTL-CDA3S). To attain this, the developed method first uses a weighted average filter (WAF) model to enhance the excellence of the input surrounds. Then, the proposed system uses an AO technique with the SqueezeNet method for feature extractor purposes. Lastly, an XGBoost technique has been utilized to categorize crowd densities. In14, the scale attentive aggregation network (SA2Net) has been projected. In particular, the SA2Net has dual fundamental modules, i.e. background noise suppressor (BNS) and multiscale feature aggregator (MFA). The MFA is intended in a 4-pathway infrastructure and gathers the multiscale feature to simplify the correlation among dissimilar measures. The BNS module uses the background data among the matrices of the input key and self-attention to overpower the contextual noise. Zhai et al.15 introduced a new attentive hierarchy ConvNet (AHNet). The AHNet removes the hierarchy feature by intended discriminative feature extraction and extracts the semantic feature using a coarse-to-fine method using a hierarchical mixture approach. A module of recalibrated attention (RA) was constructed in numerous stages to overwhelm the effect of contextual interference, and a feature enhancement model was made to detect head areas using innumerable measures.

Alrowais et al.16 presented a Meta-heuristics with Deep TL-Enabled Intelligent CDDC (MDTL-ICDDC) technique for the video surveillance methods. The presented model influences an SSA with NASNetLarge architecture as a feature extractor, so the hyperparameter selection method was implemented using SSA. Also, the WELM model was used to determine crowd density and identify the population. Lastly, the krill swarm algorithm (KSA) was employed for the parameter optimizer method. Lin and Hu17 presented a multi-branch method, Repmobilenet, for a multiscale spatial extraction of features. Repmobilenet trains with lightweight depthwise separable convolution block (DSBlock) that can efficiently remove features of the dense crowd for managing with scale disparity. Here, the multi-branch framework is changed into a one-branch feedforward method over mechanical reparameterization. In this method, Repmobilenet utilizes a multi-branch over-parameterized layout. Also, the technique inserted dilated convolution in the back end to enlarge the receptive area of the method. Sharma et al.18 introduced a united solution to achieve the crowd density valuation and behaviour diagnosis. The density of crowd valuation was implemented by emerging a novel CNN-based structure that reflects scale data and overwhelms the problems of scale disparities. To address this concern of scale disparity, the paper presented a module of scale-aware attention. In contrast, manifold branches of the self-attention model were connected to recover the gap of scale recognition. Selvarajan et al.19 analyze blockchain (BC) technology for improving the security of Internet of Things (IoT) systems in smart city applications. The model also integrates a BC technique with a neuro-fuzzy approach to improve data processing security, maximize monitoring unit confidence, and ensure energy-efficient task completion with enhanced security. In20, parametric design models incorporating point-to-point protocol and mode control optimization are proposed to enhance a smart transportation system. Applying multi-objective min-max functions minimizes waiting times for users. Additionally, it presents a line-following mechanism with improved connectivity, independent detection, and revitalization functions. Selvarajan and Manoharan21 integrate wireless technologies and digital twins for monitoring smart cities, improving economic progress and decision-making. The system mitigates packet exchange and retransmission using IoT and Constrained Application Protocol (CoAP). The authors in22 explore the integration of sensors and IoT for smart city monitoring, utilizing a node control process. It integrates K-means clustering and C4.5 classification approaches to secure data and ensure proper responses.

Shitharth et al.23 developed a Cyborg intelligence security model for smart city systems, utilizing QIDI for data preprocessing, CSOM for feature optimization, and RMML for intrusion classification. The framework improves attack detection performance by enhancing feature selection, learning speed, and classification accuracy, ensuring efficient security for smart city networks. Thirumalaisamy et al.24 propose the Averaged One-Dependence Estimators (AODE) classifier and the SELECT Applicable Only to Parallel Server (SELECT-APSL ASA) method for data separation. The AODE classifier, incorporated with hybrid data obfuscation, effectively separates smart city data. Khadidos et al.25 developed a new security model for smart city networks, utilizing the Collaborative Mutual Authentication (CMA) mechanism for user identity validation and the Meta-heuristic Genetic Algorithm – Random Forest (MGA-RF) technique for attack detection, ensuring enhanced network security. Xie et al.26 propose the Bidirectional Gated and Dynamic Fusion Network (BGDFNet) model for RGB-T crowd counting. The model comprises a novel Bidirectional Gated Module (BGM) method to bridge modality gaps and a Multiscale Dynamic Fusion (MSDF) module for effectual feature fusion. A crowd density map is generated through the decoder and fully connected layer. John Pradeep et al.27 utilize a Multi-CNN (MCNN) based on the VGG-19 architecture for crowd density estimation. The deeper VGG-19 model captures complex features, improving crowd counting accuracy, while the MCNN handles varying image sizes and viewpoint effects on head sizes. Kamra et al.28 explore ML models such as Mobilenet SSD, Yolov4, and Mask RCNN for crowd density estimation. It examines integrating surveillance data, lidar, and thermal sensors in real-time systems. It emphasizes big data analytics and cloud computing, focusing on future multimodal data fusion and privacy preservation developments. Hussain et al.29 propose a novel DL model by integrating Graph Convolutional and Spatio-temporal Gated Recurrent Units (GCST-GRU) to predict the next traffic state. The model captures spatial dependencies using graph convolution and temporal dependencies through the GRU neural network. Qaraqe et al.30 present PublicVision using a Swin Transformer-based DL model. A novel dataset is used to identify crowd size and violence levels, with security ensured through a Dynamic Multipoint Virtual Private Network (DMVPN), IPSec, and Firewall for data confidentiality and integrity. Sonthi et al.31 This research reviews CNN-based approaches for gender and age identification, analyzing 30 publications to predict future trends in CNN methods and transportation systems. It also evaluates two DL techniques, CNN and AlexNet, for gender classification from facial images.

Vu et al.32 employ three CNN models, namely W-Net, UASD-Net (a fusion of U-Net and Adaptive Scenario Discovery), and Congested Scene Recognition Network (CSR-Net) to assess traffic density from images captured in Vietnam. A novel methodology is also introduced to reallocate label points to generate more accurate density maps. Gündüz and Işık33 propose a real-time method for monitoring physical distancing in indoor spaces using computer vision and DL. The technique employs the YOLO object detection model pre-trained on the Microsoft COCO dataset. Alotaibi et al.34 propose the Osprey Optimization Algorithm with Deep Learning Assisted Crowd Density Detection and Classification (OOADL-CDDC) technique for smart video surveillance to detect and classify crowd densities using SE-DenseNet and attention bidirectional gated recurrent unit (ABiGRU) models. Tripathy et al.35 propose a secure, reliable service delivery strategy for smart cities by integrating fog and mist servers with an intrusion detection system. Alasiry and Qayyum36 integrate UOAL for video anomaly detection, TempoNet for motion forecasting, and BDSO for resource optimization, improving accuracy, responsiveness, and efficiency in surveillance. Lakhan et al.37 present a BC-based, energy-efficient IoE smart grid architecture for smart homes and electric vehicles, integrating a BC-energy-efficient IoE sensors data scheduling (BEDS) approach for sensor data scheduling and integrating fibre optics for data offloading. Gao et al.38 introduce a Utility-driven Destination-aware Spatial Task Assignment (UDSTA) problem and solve it by utilizing a dual-embedding-based deep Q-Network (DE-DQN) for efficient task-worker matching and route planning. An enhanced DE-Rainbow version optimizes further using Rainbow DQN. Mohammed et al.39 introduce the Pattern-Proof Malware Validation (PoPMV) approach for BC in ICPS, using LSTM and reinforcement learning to improve security, processing speed, and attack detection. Ji et al.40 propose the Cloud-Edge Large-Tiny Model Collaborative (CELTC) architecture, using the deep reinforcement learning (DRL) model to optimize surveillance data processing on offshore drilling platforms by deploying large models on the cloud and tiny models on edge devices. Jayasingh et al.41 integrate IoT devices and the YOLO model for real-time, accurate crowd counting in public spaces. Sidharta et al.42 distinguish between group and non-group pedestrians using semantic segmentation with directional-oriented density features and a shallow U-network architecture. Zhou et al.43 propose the MJPNet-S* for drone-based RGB-T/D crowd density estimation, using a trimodal fusion module and knowledge distillation to enhance accuracy and efficiency and reduce resource consumption.

While significant advancements have been made in integrating DL, IoT, and sensor technologies for smart city applications, various limitations and research gaps remain. Many existing approaches, such as those using CNNs and other ML models for crowd density estimation, mostly face challenges related to occlusions, varying environmental conditions, and the requirement for large, annotated datasets. Moreover, despite efforts to improve model accuracy, issues like real-time processing efficiency and system scalability for large-scale urban environments still pose significant challenges. Additionally, while several techniques integrate multimodal data fusion, attaining seamless integration of diverse data types (e.g., thermal, LiDAR, and video) without compromising privacy remains a concern. The energy effectiveness of smart city systems, particularly those using IoT, has not been sufficiently addressed, specifically in minimizing energy consumption during continuous data transmission and analysis. Furthermore, existing models often lack robustness against dynamic changes in traffic patterns or crowd behaviours, resulting in inaccurate predictions. Therefore, there is a requirement for more adaptable and resilient models that can handle real-world complexities such as rapidly changing environments, heterogeneous data, and privacy concerns.

Methods and techniques

This paper presents a novel DCNNCDM-IUP methodology for smart cities. The proposed method utilizes the DL approach for detecting crowd densities, which helps to aid urban planning in smart cities. Figure 2 illustrates the entire flow of the DCNNCDM-IUP technique.

Dataset

The DCNNCDM-IUP technique’s experimental evaluation is tested using a crowd-density dataset16. Table 1 depicts 1000 samples with four classes.

Image preprocessing

At the initial stage, the DCNNCDM-IUP technique undergoes image preprocessing and is performed using the GF approach44. The key advantage of choosing the GF model over other methods is its ability to minimize high-frequency noise without significantly blurring edges. Unlike median or mean filters, GFs apply a weighted average where nearby pixels have a higher influence, improving edge preservation. This technique particularly benefits object detection and feature extraction applications, where clear boundaries are crucial. Moreover, GF is computationally effectual, making it appropriate for real-time applications. Its adaptability in handling various image noise levels makes it a robust choice for preprocessing in complex visual tasks. Figure 3 depicts the workflow of the GF model.

GF is a basic image preprocessing method to mitigate noise and detail by image smoothing. This also employs a GF, considered by its bell-shaped curve, to compute the weighted average of a pixel value within a defined neighbourhood. The result is a blurred image where the variance of the Gaussian function controls the degree of blurring. By allocating weights to the pixel closer to the centre of the neighbourhood, GF is utilized to preserve edges better than other linear smoothing approaches, which makes it incredibly efficient for applications requiring noise reduction while retaining structural data.

DL model: SE-DenseNet model

The DCNNCDM-IUP technique utilizes SE-DenseNet, which effectively learns complex feature patterns by leveraging the power of dense connectivity and the SE mechanism45. DenseNet improves feature propagation and reuse by connecting each layer to every other layer in a feedforward fashion, which assists in better gradient flow and reduces the vanishing gradient problem. The SE module improves the model’s capability to selectively emphasize crucial features by adaptively recalibrating channel-wise feature responses. This integration allows SE-DenseNet to attain superior performance in tasks comprising high-level feature extraction, such as image classification and object recognition. Compared to conventional CNNs or standalone DenseNet, SE-DenseNet’s ability to adaptively focus on essential features confirms higher accuracy and robustness, particularly in complex datasets. The model’s efficient use of parameters makes it computationally efficient without compromising accuracy, presenting a balanced tradeoff for real-time applications. Figure 4 demonstrates the working flow of the SE-DenseNet model.

The basic concept of the attention module is to train the model for autonomously learning the most crucial channels within specific tasks. As a result, this technique considerably improves the attention model on the feature, eventually producing precise and more efficient predictive or classification outcomes. The channel attention mechanism (CAM), in particular, may help to focus further on salient channels during feature processing, thus improving the model’s generalization capability and overall performance. This model holds hidden value and immense application potential in many areas, namely natural language processing, image processing, and voice recognition.

Generally, the typical CAM pattern is the squeeze-and‐excitation network (SENet). The primary function is to decrease global spatial information. Consequently, the feature is learned across channels to ascertain each channel’s significance. Finally, weight is allocated to each channel through the excitation phase. \(\:h\) and \(\:w\) are the feature matrix dimensions, and \(\:c\) is the channel number. Lastly, the normalized weight is multiplied by the input feature mapping to generate the weighted feature maps.

The SENet automatically learns feature weight according to loss through the FC network. The SENet optimizes the channel weight rather than categorizing it based on the mathematical values of the feature channel. Then, criticality is used to assign weight to the feature by obtaining the degree of importance for all the channels in the feature mapping. Consequently, the NN focuses on specific feature channels through the SENet.

DenseNet is a CNN framework where all the layers take the output of each prior layer as an input. It helps the backpropagation of the gradient during training and allows the DCNN model to be trained. Feature multiplexing is a kind of DenseNet obtained by connecting features on the channel. DenseNet has an \(\:i(i+1)/2\) connection for a NN with \(\:i\) layers. It exploits less computation to obtain superior outcomes. Each prior layer is connected. The output at \(\:{i}^{th}\) layers is given below:

In Eq. (1), the quantity of network layers is \(\:i\). The outcome corresponding to \(\:{i}^{th}\) layer is \(\:{X}_{i}\). \(\:{H}_{i}\) is a set of nonlinear transformations, such as activation, batch normalization, pooling, and convolution.

In DenseNet, a small growth rate, Dense Block, and Transition Layer are utilized. It reduces the calculation and narrows the network, efficiently suppressing overfitting problems. Classical CNN relies on pooling and convolution to minimize the feature map dimensionality. In DenseNet, the layer is a dense connection, necessitating the feature map retention of a similar dimension for forward propagation. Therefore, the Transition Layer and Dense Block better solve these problems.

The feature vector is first zero-padded through the Zero‐Padding layer to control the feature vector lengths and later passed through the convolutional layers. Decreased feature map dimensions enhance computation efficacy. Moreover, the Transition Layer decreases the feature map dimension while reducing the feature number.

DL model optimization

Moreover, the hyperparameter selection of the SE-DenseNet approach is accomplished by employing the RFO technique46. This model presents various advantages over conventional methods. RFO employs the power of decision trees to handle the complex, nonlinear relationships between hyperparameters, making it highly effective for optimizing models such as SE-DenseNet. It is robust against overfitting and can give more accurate hyperparameter configurations by computing diverse feature interactions. RFO is more computationally efficient and adaptive than grid or random search, as it concentrates on the most promising regions of the hyperparameter space. Furthermore, RFO automatically handles high-dimensional optimization problems, making it ideal for DL models with many hyperparameters like SE-DenseNet. This approach ensures the optimum configuration for improved performance without excessive computational cost. Figure 5 illustrates the structure of the RFO method.

The red fox is a predator that hunts farm and wild animals, and it is hunted by the humans who interrupt it in this attack. Since the better individual survives to pursue the various territories, the relations between individuals, predators, and prey in a similar pack are mathematically mimicked. Foxes have a hunting strategy that is considered for both local and global searches. Along with these two stages, a population control mechanism has been further suggested, which simulates the group leaving for a different place or being killed by the hunter. These two better individuals are chosen to replace and reproduce the weaker component in all the iterations. This allows us to achieve the global extrema, reducing the risk of being trapped in local extrema. The steps of the proposed algorithm are given below:

Step 1—Initial Population: The population is generated by the number of foxes selected, which is constant in all the iterations. All the components are characterized as \(\:{\left({\overline{x}}_{i}^{j}\right)}^{t}\), where \(\:i\) is the individual identification in the population, \(\:j\) is the coordinate in size of the solution space, and \(\:t\) denotes the existing iteration. The individual that offers the better solution is recorded, creating the population of the initial iteration according to the assessment of the population produced by the objective function (OF).

Step 2—Global Search: The pack members move towards the remote location, looking for food, and later, the gathered data is given to other members to ensure their development and survival. Therefore, the optimum individuals are recorded, and the Euclidean distance is evaluated as follows:

Individual moves to the best red foxes using:

Where \(\:\alpha\:\) is a random integer for each individual in all the iterations, which belongs to the range \(\:\left[0,\:d\left((\overline{{x}_{i}}{)}^{t},({\overline{x}}_{best}{)}^{t}\right)\right]\), the novel population is now assessed by the OF. If the optimum value is better, the individual takes the newest position or returns to the initial location. This is simulated by the path the other group members should follow once the information is transferred by the components that have searched the surrounding territories, where they keep finding feasible locations. Also, the model is used to predict the reproduction of optimum individuals or the death of the worst individual from the population.

Step 3—Local Search: Once the fox spots the target, they try to approach it slowly in a circular movement until they are closer enough to catch it. This behaviour is modelled through the \(\:\mu\:\) parameter. During the approach movement, this is randomly produced within \(\:\left[\text{0,1}\right]\) and simulates the fox probability being realized by the target. If \(\:\mu\:>0.75\), the fox methods the target, or it leaves. The observation and approach movement of all the individuals to the prey has been estimated by the modified Cochleoid equation, and \(\:{\varphi\:}_{0}\) model the angle of vision of all the individuals and is generated randomly within \(\:\left[\text{0,2}\pi\:\right]\) for each individual.

In Eq. (4), \(\:\theta\:\) denotes the random integer within \(\:\left[\text{0,1}\right]\) and characterizes the impact of atmospheric conditions. The movement is modelled by Eq. (14) for the spatial coordinates, where all the angular values \(\:{\varphi\:}_{n-1}\) are randomly produced within \(\:\left[\text{0,2}\pi\:\right].\)

Step 4—Reproduction and Pack Abandonment: the worst individual in the population is chosen based on the OF for the dynamic control of the population to introduce slight modifications to the pack. Since these are the worst individuals, it is considered that they have been killed by the hunter or moved territory, but the worst individual is replaced by a newer one to make sure that the population is constant. At all the iterations, the two better individuals (the alpha couple) are chosen, \(\:{\left({\overline{X}}^{\left(1\right)}\right)}^{t}\) and \(\:{\left({\overline{X}}^{\left(2\right)}\right)}^{t}\), and the centre of diameter and habitat are evaluated:

A random variable \(\:\kappa\:\) is produced in \(\:\left[\text{0,1}\right]\) that determines the individual’s replacement for all the iterations. If \(\:\kappa\:\ge\:0.45\), the new individual is presented into the population, or the alpha couple reproduces. Initially, new member moves towards other areas, being capable of reproducing. Next, a new individual is the result of the alpha couple reproduction as

Step 5—Evaluate all the individuals based on the OF— the best value \(\:{x}_{best}\) is recorded.

Algorithm 1 illustrates the RFO model.

The RFO approach derives an FF to achieve better classifier outcomes. It describes a positive integer to characterize the more significant outcomes of the solution candidate. Now, reducing the classifier error rate is regarded as an FF.

Classification method: ConvLSTM

Finally, the recognition of varied crowd densities is accomplished by the ConvLSTM47. This approach integrates the merits of CNN and LSTM networks. This hybrid architecture allows ConvLSTM to capture spatial patterns through CNN layers and effectually model temporal dependencies in crowd dynamics via LSTM units. Unlike conventional CNNs, which concentrate solely on spatial features, ConvLSTM is specifically appropriate for crowd density recognition as it handles sequential data and temporal changes, such as crowd movement over time. This makes it more accurate in dynamic environments where crowd density fluctuates. Additionally, ConvLSTM needs fewer parameters than entirely separate CNN and LSTM models, presenting computational efficiency while maintaining high accuracy. Its capability to process video frames or time-series data makes it superior for real-time crowd monitoring and analysis compared to other methods that may face difficulty with temporal information. Figure 6 specifies the structure of the ConvLSTM technique.

As a traditional DL model, the DFN comprises an input, hidden layer (HL), and output layer. This is suitable to find output values based on the input value. However, it is not applicable to predict the time series data. As a result, an RNN is introduced to train the time series data. An RNN is a DL method where hidden nodes are linked to a directed edge to form the cyclic structure known as a directed cycle. It helps predict data series, viz., text and voice. In the next step, the state of the prior time step is utilized, and the resultant values are affected by the previous state. The LSTM among different RNN models is applied to prevent the vanishing gradients. In addition, the LSTM is better than the DFN for understanding the features. Many DL models have been introduced for training data time series. LSTM is more prevalent among them.

The cell state is a conveyor belt. Thus, the gradient of input values is relatively propagated well.

In the equations, \(\:\sigma\:\) and \(\:\text{t}\text{a}\text{n}\text{h}\) are the sigmoid and hyperbolic tangent functions, \(\:W\) denotes the weight for all the layers, \(\:{x}_{t}\) indicates the input at the \(\:t\) time step\(\:,\) and \(\:b\) shows the bias. In Eqs. (13) and (14), \(\:\odot\:\) indicates the Hadamard product operators. The forget gate (\(\:{f}_{t})\) forgets the prior data. The values are attained by taking the sigmoid once it receives \(\:{h}_{t-1}\), and the value \(\:{x}_{t}\) denotes the forget gate transmits. The sigmoid output function ranges within [0,1]. The data from the prior state is overlooked if the value is\(\:\:0,\:\)and the data from the preceding state is retained if it is \(\:1\). The input gate \(\:{i}_{t}\odot\:{g}_{t}\) stores the existing data. It takes \(\:{h}_{t-1}\) and \(\:{x}_{t}\) and exploits the sigmoid function. Then, the values that take the Hadamard product and \(\:tanh\) functions are passed through the input gate. Each represents the direction and intensity of storing existing data since \(\:{i}_{t}\) ranges from \(\:0\) to \(\:1\), and the \(\:{g}_{t}\) ranges from \(\:-1\) to \(\:1\).

The 2D images (data) must be utilized as input to consider spatial connection in learning. 2D data have the feature that increases the size; the data dimension is considerably augmented during training, viz., evaluating the model weight. Thus, one of the DL models, a CNN considering the image features, may be utilized in the LSTM model. The widely adopted strategy includes an LRCN, where the \(\:2D\) feature vectors are extracted first through the CNN and used as an LSTM input. Next, the input dataset is an image (2D). However, while \(\:2D\) images from various channels are used as input datasets, a \(\:3D\) tensor is utilized as input, and the feature vector extracted by the CNN undergoes a similar process within the LSTM. Thus, it is unfit for long-term data training. On the other hand, the ConvLSTM exploits various approaches. The computation amount is reduced dramatically by carrying out the internal operation of LSTM as a convolutional function.

This is mathematically modelled as follows:

When compared Eqs. (9)–(14) and Eqs. (15)–(20) are quite similar. But there are two important distinctions. Firstly, the 3D tensor includes forget gate \(\:f\), input gate \(\:i\), output gate \(\:o\), cell output \(\:C\), cell input \(\:X\), and cell state \(\:H\). Unlike the original LSTM, each element is \(\:a\:1D\) vector. Next, matrix multiplication was implemented, each replaced by a convolution operation. This implies that the weight existing in \(\:W\) might be lesser than LSTM. This is similar to the FC layer replaced by the convolution layer; it reduces the number of weights and is appropriate for effectively training an enormous quantity of data.

Experimental analysis and results

This section examines the experimental result analysis of the DCNNCDM-IUP technique. Figure 7 represents the sample images.

Figure 8 reports a set of confusion matrices produced by the DCNNCDM-IUP method on several epochs. On 500 epochs, the DCNNCDM-IUP method detected 246 samples in class 0, 213 in class 1, 234 in class 2, and 240 in class 3. Likewise, on 1000 epochs, the DCNNCDM-IUP method detected 249 samples in class 0, 232 in class 1, 241 in class 2, and 241 in class 3. In addition, on 1500 epochs, the DCNNCDM-IUP method has detected 250 samples in class 0, 232 in class 1, 244 in class 2, and 242 in class 3. Additionally, on 2000 epochs, the DCNNCDM-IUP method detected 249 samples in class 0, 216 in class 1, 240 in class 2, and 244 in class 3. Finally, on 3000 epochs, the DCNNCDM-IUP method detected 248 samples in class 0, 224 in class 1, 239 in class 2, and 240 in class 3.

Table 2; Fig. 9 indicate the overall crowd classification outcomes of the DCNNCDM-IUP technique. The results exemplify that the DCNNCDM-IUP technique correctly detected varied classes. With 500 epochs, the DCNNCDM-IUP technique offers average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F}_{score}\), and \(\:{G}_{measure}\) of 96.65%, 93.38%, 93.30%, 93.26%, and 93.30%, correspondingly. Also, with 1000 epochs, the DCNNCDM-IUP model exhibits average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F}_{score}\), and \(\:{G}_{measure}\) of 98.15%, 96.32%, 96.30%, 96.29%, and 96.30%, correspondingly. Besides, with 1500 epochs, the DCNNCDM-IUP model exhibits average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F}_{score}\), and \(\:{G}_{measure}\) of 98.40%, 96.82%, 96.80%, 96.79%, and 96.80%, correspondingly. Moreover, with 2000 epochs, the DCNNCDM-IUP model exhibits average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F}_{score}\), and \(\:{G}_{measure}\) of 97.45%, 95.03%, 94.90%, 94.85%, and 94.91%, correspondingly. Finally, with 3000 epochs, the DCNNCDM-IUP model exhibits average \(\:acc{u}_{y}\), \(\:pre{c}_{n}\), \(\:rec{a}_{l}\), \(\:{F}_{score}\), and \(\:{G}_{measure}\) of 97.55%, 95.12%, 95.10%, 95.08%, and 95.09%, correspondingly.

Figure 10 illustrates the DCNNCDM-IUP method’s training and validation accuracy outcomes. The accuracy values are calculated in the range of 0-3000 epochs. The figure emphasizes that the training and validation accuracy values exhibit a rising tendency, which indicates the method’s ability to enhance performance over various iterations. Furthermore, the training and validation accuracy remain closer over the epochs, exhibiting improved performance and indicating low minimal overfitting of the DCNNCDM-IUP method, guaranteeing consistent prediction on unseen samples.

Figure 11 shows the training and validation loss graph of the DCNNCDM-IUP technique. The loss values are calculated in the range of 0-3000 epochs. It is represented that the training and validation accuracy values validate a decreasing tendency, which notified the capability of the DCNNCDM-IUP method in balancing a tradeoff between data fitting and generalization. The progressive reduction in loss values additionally guarantees the enhanced performance of the DCNNCDM-IUP method and tunes the prediction results over time.

In Fig. 12, the precision-recall (PR) investigation of the DCNNCDM-IUP method under several epochs offers an interpretation of its performance by plotting Precision against Recall for all the classes. The figure exhibits that the DCNNCDM-IUP method constantly achieves enhanced PR values across various classes, representing its ability to maintain a significant portion of true positive predictions amongst each positive prediction (precision) while capturing a large proportion of actual positives (recall). The continual increase in PR outcomes among all classes depicts the efficiency of the DCNNCDM-IUP method in the classifier process.

In Fig. 13, the ROC curve of the DCNNCDM-IUP technique under various epochs is studied. The results imply that the DCNNCDM-IUP method obtains superior ROC outcomes over all the classes, representing the significant ability to discriminate the classes. This reliable trend of enhanced ROC values over different classes signifies the proficient performance of the DCNNCDM-IUP technique in predicting classes, highlighting the drastic nature of the classifier process.

To demonstrate the proficiency of the DCNNCDM-IUP method, a detailed comparison study is made in Table 348.

Figure 14 provides comparative \(\:pre{c}_{n}\) and \(\:rec{a}_{l}\) results of the DCNNCDM-IUP method. The results indicate that the BoW-SRP, BoW-LBP, and GLCM-SVM techniques have shown worse values of \(\:pre{c}_{n}\) and \(\:rec{a}_{l}\). Simultaneously, the GoogleNet and VGGNet models have attained slightly improved \(\:pre{c}_{n}\) and \(\:rec{a}_{l}\). Meanwhile, the MDTL-ICDDC and AICDA-SSC models have demonstrated closer values of \(\:pre{c}_{n}\) and \(\:rec{a}_{l}\). Nevertheless, the DCNNCDM-IUP technique results in enhanced performance with \(\:pre{c}_{n}\) and \(\:rec{a}_{l}\) of 96.82% and 96.80%, correspondingly.

Figure 15 provides a comparative \(\:acc{u}_{y}\) and \(\:{F}_{score}\) results of the DCNNCDM-IUP model. The outcomes specify that the BoW-SRP, BoW-LBP, and GLCM-SVM techniques have demonstrated worse values of \(\:acc{u}_{y}\) and \(\:{F}_{score}\). Simultaneously, the GoogleNet and VGGNet models have obtained slightly improved \(\:acc{u}_{y}\) and \(\:{F}_{score}\). Meanwhile, the MDTL-ICDDC and AICDA-SSC models have established closer values of \(\:acc{u}_{y}\) and \(\:{F}_{score}\). Nevertheless, the DCNNCDM-IUP method leads to superior performance with \(\:acc{u}_{y}\) and \(\:{F}_{score}\) of 98.40% and 96.79%, respectively.

Therefore, the DCNNCDM-IUP technique is applied to enhance the detection of crowd densities.

Table 4; Fig. 16 demonstrate the computational time (CT) analysis of the DCNNCDM-IUP approach with existing methodologies. Among the listed methods, the DCNNCDM-IUP model demonstrates the fastest detection time with a CT of 9.80 s, outperforming others significantly. In comparison, methods like BoW-SRP, Bow-LBP, and GLCM-SVM show higher detection times of 15.40s, 16.28s, and 17.50s, respectively. Other advanced models, including GoogleNet, VGGNet, MDTL-ICDDC, and AICDA-SSC, take even longer, with detection times ranging from 18.15s to 21.15s. This demonstrates the efficiency and speed of the DCNNCDM-IUP model in cyberthreat detection compared to its counterparts.

Conclusion

In this paper, a novel DCNNCDM-IUP method was presented for smart cities. The presented DCNNCDM-IUP method aimed to utilize the DL approach for detecting crowd densities, which, in turn, helps aid urban planning in smart cities. Initially, the DCNNCDM-IUP technique performed image preprocessing using the GF approach. The DCNNCDM-IUP technique utilized the SE-DenseNet method, which effectually learns complex feature patterns for feature extraction. Moreover, the RFO system selected the hyperparameter of the SE-DenseNet approach. Finally, the recognition of varied crowd densities was accomplished by the design of ConvLSTM. A widespread simulation analysis was conducted to illustrate the enhanced performance of the DCNNCDM-IUP technique. The experimental validation of the DCNNCDM-IUP technique portrayed a superior accuracy value of 98.40% compared to existing DL models. The limitations of the DCNNCDM-IUP technique comprise its reliance on a fixed population size and a static learning rate, which may not be optimal for dynamic or complex problem spaces. Moreover, the model’s performance can degrade in high-dimensional problems, as it may face difficulty efficiently exploring the entire solution space. The study does not address the computational cost and scalability issues when applied to massive datasets. Future work may incorporate adaptive population sizes, dynamic learning rates, and hybrid models to improve flexibility. Furthermore, exploring parallelization or distributed computing strategies could improve scalability and reduce computation time. Additionally, more robust evaluation metrics across various real-world applications provide deeper insights into the generalization capabilities of the model.

Data availability

The datasets used and analyzed during the current study available from the corresponding author on reasonable request.

References

Santana, J. R. et al. A privacy-aware crowd management system for smart cities and smart buildings. IEEE Access. 8, 135394–135405 (2020).

Solmaz, G., Baranwal, P. & Cirillo, F. CountMeIn: Adaptive Crowd Estimation with Wi-Fi in Smart Cities. In 2022 IEEE International Conference on Pervasive Computing and Communications (PerCom), 187–196 (Pisa, Italy, 2022).

Ahmed, I., Ahmad, M., Ahmad, A. & Jeon, G. IoT-based crowd monitoring system: Using SSD with transfer learning. Comput. Electr. Eng. 93, 107226 (2021).

Yu, Q. et al. Intelligent visual-IoT-enabled real-time 3D visualization for autonomous crowd management. IEEE Wirel. Commun., 28(4) 34–41 (2021).

Guastella, D. A., Campss, V. & Gleizes, M. P. A cooperative multiagent system for crowd sensing based estimation in smart cities. IEEE Access. 8, 183051–183070 (2020).

Yang, Y., Yu, J., Wang, C. & Wen, J. Risk assessment of crowdgathering in urban open public spaces supported by spatio-temporal big data. Sustainability 14(10), 6175 (2022).

Bai, L., Wu, C., Xie, F. & Wang, Y. Crowd density detection method based on crowd gathering mode and multi-column convolutional neural network. Image Vis. Comput. 105, 104084 (2021).

Fan, Z. et al. A survey of crowd counting and density estimation based on convolutional neural network. Neurocomputing 472, 224–251 (2022).

Schweizer, P. Neutrosophy for physiological data compression: In particular by neural nets using deep learning. Int. J. Neutrosophic Sci. 1(2), 74–80 (2020).

Fitwi, A., Chen, Y., Sun, H. & Harrod, R. Estimating interpersonal distance and crowd density with a single-edge camera. Computers 10, 143 (2021).

Gazis, A. & Katsiri, E. Streamline intelligent crowd monitoring with iot cloud computing middleware. Sensors 24(11), 3643 (2024).

Guo, X. et al. Crowd counting in smart cities via lightweight ghost attention pyramid network. Future Gener. Comput. Syst. 147, 328–338 (2023).

Al Duhayyim, M. et al. I. and M., Aquila optimization with transfer learning based crowd density analysis for sustainable smart cities. Appl. Sci. 12(21), 11187 (2022).

Zhai, W. et al. Scale Attentive Aggregation Network for Crowd Counting and Localization in Smart City (ACM Transactions on Sensor Networks, 2024).

Zhai, W. et al. An attentive hierarchy ConvNet for crowd counting in smart city. Clust. Comput. 26(2), 1099–1111 (2023).

Alrowais, F. et al. M., Deep transfer learning enabled intelligent object detection for crowd density analysis on video surveillance systems. Appl.Sci. 12(13), 6665 (2022).

Lin, C. & Hu, X. Efficient crowd density estimation with edge intelligence via structural reparameterization and knowledge transfer. Appl. Soft Comput. 111366 (2024).

Sharma, V. K., Mir, R. N. & Singh, C. Scale-aware CNN for crowd density estimation and crowd behavior analysis. Comput. Electr. Eng. 106, 108569 (2023).

Selvarajan, S. et al. SCBC: Smart city monitoring with blockchain using internet of things for and neuro fuzzy procedures. Math. Biosci. Eng. 20(12), 20828–20851 (2023).

Selvarajan, S., Manoharan, H., Khadidos, A. O., Khadidos, A. O. & Hasanin, T. Directive transportation in smart cities with line connectivity at distinctive points using mode control algorithm. Sci. Rep. 14(1), 17938 (2024).

Selvarajan, S. & Manoharan, H. Digital Twin and IoT for Smart City Monitoring. In Learning Techniques for the Internet of Things (131–151). Cham: Springer Nature Switzerland. (2023).

Selvarajan, S. et al. SCMC: Smart city measurement and control process for data security with data mining algorithms. Meas. Sens. 31, 100980 (2024).

Shitharth, S. et al. A conjugate self-organizing migration (CSOM) and reconciliate multi-agent Markov learning (RMML) based cyborg intelligence mechanism for smart city security. Sci. Rep. 13(1), 15681 (2023).

Thirumalaisamy, M. et al. Interaction of secure cloud network and crowd computing for smart city data obfuscation. Sens. 22(19), 7169 (2022).

Khadidos, A. O. et al. An intelligent security framework based on collaborative mutual authentication model for smart city networks. IEEE Access 10, 85289–85304 (2022).

Xie, Z. et al. Bgdfnet: bidirectional gated and dynamic fusion network for rgb-t crowd counting in smart city system. IEEE Trans. Instrum. Meas. (2024).

John Pradeep, J., Raja Arumugham, M., Santhi, S. & Kalaiselvi, S. October. Multi-convolutional neural network based crowd counting using VGG-19 architecture. In International Conference on Robotics, Control, Automation and Artificial Intelligence, 365–378. (Springer Nature Singapore, Singapore, 2023).

Kamra, V., Vaishnav, A., Verma, A., Khan, R. & Singh, S. May. A Novel approach for crowd analysis and density estimation by using machine learning techniques. In 2024 International Conference on Intelligent Systems for Cybersecurity (ISCS) 1–6. (IEEE, 2024).

Hussain, B., Afzal, M. K., Anjum, S., Rao, I. & Kim, B. S. A novel graph convolutional gated recurrent unit framework for network-based traffic prediction. IEEE Access 11, 130102–130118 (2023).

Qaraqe, M. et al. PublicVision: A secure smart surveillance system for crowd behavior recognition. IEEE Access 12, 26474–26491 (2024).

Sonthi, V. K. et al. May. A Deep learning technique for smart gender classification system. In 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE) 983–987. (IEEE, 2023).

Vu, L. Q. P., Le Bui, B. D., Nguyen, M. T. T. & Pham, N. K. Estimating traffic density using convolutional neural networks based on crowd counting. In International Conference on Future Data and Security Engineering 282–296. (Springer Nature Singapore, Singapore, 2024).

Gündüz, M. Ş. & Işık, G. A new YOLO-based method for social distancing from real-time videos. Neural Comput. Appl. 35(21), 15261–15271 (2023).

Alotaibi, S. R. et al. Integrating explainable artificial intelligence with advanced deep learning model for crowd density estimation in real-world surveillance systems. IEEE Access (2025).

Tripathy, S. S. et al. A secure mist-fog-assisted cooperative offloading framework for sustainable smart city development. Digit. Commun. Netw. (2024).

Alasiry, A. & Qayyum, M. A smart vista-lite system for anomaly detection and motion prediction for video surveillance in vibrant urban settings. J. Supercomput. 81(1), 1–49 (2025).

Lakhan, A. et al. BEDS: Blockchain energy efficient IoE sensors data scheduling for smart home and vehicle applications. Appl. Energy 369, 123535 (2024).

Gao, Y. et al. A Dual-embedding based reinforcement learning scheme for task assignment problem in spatial crowdsourcing. World Wide Web 28(1), 13 (2025).

Mohammed, M. A. et al. Securing healthcare data in industrial cyber-physical systems using combining deep learning and blockchain technology. Eng. Appl. Artif. Intell. 129,107612. (2024).

Ji, X., Gong, F., Wang, N., Xu, J. & Yan, X. Cloud-edge collaborative service architecture with large-tiny models based on deep reinforcement learning. IEEE Trans. Cloud Comput. (2025).

Jayasingh, S. K., Naik, P., Swain, S., Patra, K. J. & Kabat, M. R. Integrated crowd counting system utilizing IoT sensors, OpenCV and YOLO models for accurate people density estimation in real-time environments. In 2024 1st International Conference on Cognitive, Green and Ubiquitous Computing (IC-CGU) 1–6. (IEEE, 2024).

Sidharta, H. A., Al Kindhi, B., Mulyanto, E. & Purnomo, M. H. Semantic segmentation of pedestrian groups based on directional-oriented density features with shallow U-net Architecture. Int. J. Intell. Eng. Syst., 18(1). (2025).

Zhou, W. et al. MJPNet-S*: Multistyle joint-perception network with knowledge distillation for drone RGB-thermal crowd density estimation in smart cities. IEEE Internet Things J. (2024).

Nyemeesha, V. & Ismail, B. M. Implementation of noise and hair removals from dermoscopy images using hybrid gaussian filter. Netw. Model. Anal. Health Inf. Bioinf. 10, 1–10 (2021).

Tang, J. et al. A SE-DenseNet-LSTM model for locomotion mode recognition in lower limb exoskeleton. PeerJ Comput. Sci. 10, e1881 (2024).

Gaspar, J. S. D., Loja, M. A. R. & Barbosa, J. I. Metaheuristic optimization of functionally graded 2D and 3D discrete structures using the red fox algorithm. J. Compos. Sci. 8(6), 205 (2024).

Kim, K. S., Lee, J. B., Roh, M. I., Han, K. M. & Lee, G. H. Prediction of ocean weather based on denoising autoencoder and convolutional LSTM. J. Marine Sci. Engi. 8(10), 805 (2020).

Alsubai, S. et al. Design of artificial intelligence driven crowd density analysis for sustainable smart cities. IEEE Access (2024).

Acknowledgments

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Large Research Project under grant number RGP2/267/45. Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2025R330), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. Researchers Supporting Project number (RSPD2025R608), King Saud University, Riyadh, Saudi Arabia. The authors extend their appreciation to the Deanship of Scientific Research at Northern Border University, Arar, KSA for funding this research work through the project number “NBU FFR-2025-2899-01.

Author information

Authors and Affiliations

Contributions

Conceptualization: W.M. Data curation and Formal analysis: H.A. Investigation and Methodology: W.M., A.M. Project administration and Resources: Supervision; M.A.A., M.A. Validation and Visualization: N.A., A.M. Writing—original draft, W.M. Writing—review and editing, M.A.A., M.A. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article contains no studies with human participants performed by any authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mansouri, W., Alohali, M.A., Alqahtani, H. et al. Deep convolutional neural network-based enhanced crowd density monitoring for intelligent urban planning on smart cities. Sci Rep 15, 5759 (2025). https://doi.org/10.1038/s41598-025-90430-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-90430-4

Keywords

This article is cited by

-

A deep neural network-based green space design optimization framework for smart cities

Scientific Reports (2025)

-

Robust crowd anomaly detection via hybrid ensemble learning for real-world surveillance

Scientific Reports (2025)

-

Pedestrian flow prediction using a spatiotemporal multi-head attention graph convolutional network integrated with knowledge graph

Applied Intelligence (2025)

-

Tomorrow’s Smart Cities: Reflections on the Chinese Practices of Smart Cities and Strategy Development for the Future

Chinese Geographical Science (2025)