Abstract

In this paper, a new method for producing movie trailers is presented. In the proposed method, the problem is divided into two sub-problems: “genre identification” and “genre-based trailer production”. To solve the first sub-problem, the poster image and subtitle text processing strategy has been used in which, a convolutional neural network (CNN) model has been used to extract features related to the movie genre from its poster image. In addition to these features, the content features of the movie are described in the form of a TF-IDF vector, which is extracted through the pre-processed text of the subtitle. Using a classification and regression tree, a combination of extracted features is classified to identify the genre of the movie. After determining the genre of the movie, a CNN model is defined for each movie genre, and this model is trained based on the key sequences of movies of a particular genre. For this purpose, at the beginning of the second phase of the proposed method, the film is divided into its constituent scenes, and then two categories of visual and textual features are used to describe the characteristics of the scenes. These features are combined to determine the key scenes based on the CNN model corresponding to the movie genre. Finally, the movie trailer is created based on the time constraint specified by the user. The performance of the proposed method has been investigated from two aspects of genre recognition accuracy and trailer production quality. Based on the results, the proposed method can achieve 83.39% accuracy in detecting movie genres, which is at least 1% higher than compared methods. On the other hand, the 56.69% precision of the proposed method in trailer generation shows an improvement of at least 8% compared to the compared methods.

Similar content being viewed by others

Introduction

Movie genres are significant aspects of movies since they can serve as tags for targeted searches on multimedia platforms and facilitate tailored movie recommendations. The scope of film genres has expanded over the past few decades, and their categorization have gotten more precise. The action film, for instance, can be further classified into Kung fu, Wuxia, Police, and so on. As a result, building an automatic movie genre classifier to update the genre labels of already-released films is crucial for precise personalized recommendation and for offering a fine-grained classification of films in online multimedia platforms like Netflix and Internet movie databases like IMDB1. Major manufacturers are becoming interested in applications like social media for relevant and engaging content for particular target consumers, digital storytelling, movie trailer creation, video recommendation, and video retrieval due to the exponential expansion of video in sharing platforms. Video analysis is a crucial area of computer vision with significant use in a variety of problem-solving scenarios. Movies, one of the most popular forms of entertainment, are becoming more and more significant in the commercial economy as well as the cultural and creative domains.

Large-capacity data sets regarding the movie also emerged, including MMTF-14K2 and internet movie database (IMDB)3. Researchers have put out a number of automatic movie genre classification techniques in recent decades4,5,6. The suggested techniques mostly concentrate on examining visual elements7,8 that are taken from movie posters9,10 or trailers4,5 in order to classify the genre of movies. A video classification problem is the classification (or detection) of trailer genres. Using the information provided by the movie trailer, genre categorization attempts to group films according to their genres, such as drama and comedy. The IMDB selects a film’s final category based on suggestions from reviewers and users, while people still manually classify movies in various genres. Also, the trailer genre is used, among other things, by major movie streaming services like Netflix and Hulu for document classification and movie suggestion. As a result, there is increased interest in the capacity to automatically anticipate genre.

The comprehensive and overlapping character of these genres presents two major problems for this task when compared to other computer vision domains like object tracking or action detection. The first problem is that the genres in the media are not physically expressed. It is not enough to perceive the movie genre from a single frame or a few scenes; one needs view the complete video material. The fact that genre classification has multiple labels presents the second major obstacle. Typically, a movie features multiple genres at once11. In recent years, numerous deep learning and artificial neural network-based techniques have been introduced to solve various real-life problems like computer vision, image and video captioning, multi-label text classification (MLTC) with an improved performance12,13,14. However, such methods usually demand intensive resources and require more time to complete the executions15.

This article focuses on the drawbacks of the present moderately automatic trailer production approach. These techniques most of the time lack the genre-specific features that are very important for the successful delivery of the entertainment content to the audience. This is because they are based on the same patterns or very little details which ignore the immersive information that movie genres contain. As a result, the generated trailers may depict the movies wrongly, in terms of their tone, themes and target audience, which makes them ineffective because they can’t attract viewers.

This research aims to deal with the context of such limitations and provides a new strategy that utilizes the power of convolutional neural networks (CNNs) for genre recognition. CNNs have unique ability to capture genre-related features from data. This method of generating trailers gets its influence from the genre understanding and thereby attempts to produce trailers which are in sync with the movie genre and its target audience. The proposed research is based on the assumption that the use of the genre-specific strategy will influence the accuracy of genre recognition and the quality of the automated trailers.

In this paper, the innovation in our method is the use of a genre-based approach for extracting trailers. This means that key scenes are identified based on the film genre, using a genre-specific model for this purpose. The combination of techniques and features used in this paper is another innovation that sets it apart from other approaches. This paper contributes in three ways:

-

Providing a method for detecting movie genre, only based on subtitle and movie poster features.

-

Providing a new model for identifying key sequences in movies based on subtitle and visual features.

-

Presenting a genre-specific model for producing trailer from movies.

The continuation of the paper is as follows: In the following part, we looked at some similar research. In the third portion, the materials and technique were presented. The fourth portion provides an assessment of the findings, and the fifth section concludes.

Related works

Numerous people are now conducting study in the area of movie trailer creation. In this section, we discuss the fieldwork that has been done.

Doshi et al.16 proposed predicting movie genres using topological data analysis (TDA) discourse traits, notably homological persistence. The IMDb dataset’s movie genre identification categorization findings were enhanced by their analysis of movie data, which was indifferent to the specific metric selected. Hoang17 investigated multiple neural networks built on gated recurrent units (GRU) to identify movie genres based on narrative summaries. In addition, he used the rank approach, K-binary transformation, Naive Bayes, Word2Vec with XGBoost, and Recurrent Neural Networks classifiers for predicting movie genres.

Wehrmann et al.5 used movie trailers for multi-label genre classification using convolution through-time (CTT) modules. Five models were proposed, with three adjusting classification layers based on video content, one using audio content, and the fifth combining results from audio and video networks. Experiments were conducted on LMTD-9, a dataset of 4007 movie trailers, with the CTT-MMC-TN model achieving the best results at 74.2%. Portolese et al.18 improved their dataset to 13,394 films, categorized into 18 genres and textual feature categories, but with Portuguese synopses only. Additionally, they worked with a dataset that had been oversampled. A TF-IDF based classifier produced the best results for the original dataset, with an average F1-score of 0.478; however, a combination of multiple feature groups produced the best results for the oversampled dataset, with an average F1-score of 0.611.

Mangolin et al.19 addressed the challenge of movie genre classification using a multimodal approach. A dataset of trailer video clips, subtitles, synopses, and movie posters from 152,622 movie titles was created, labeled according to eighteen genre labels. Descriptors were extracted using various methods and evaluated using classifiers like BinaryRelevance and ML-kNN. This study found that the best results were obtained by combining LSTM and CNN classifiers on synopses and trailer frames, and 0.673 on the AUC-PR metric. JIANG20 presented a hybrid PlacesNet-LSTM model for movie trailer genre classification, combining multimodal visual and audio features with multiple machine learning techniques. The model has significant advantages for classification, compared to previous studies using SVM and CNN features. However, the model had limitations, such as time-consuming image preprocessing and room for improvement in performance for complex tasks like multi-label movie genre classification. This study also explored the impact of scene-based CNN features on classification structure.

Wi et al.21 have introduced a Gram layer for classifying movie posters into multiple genres. The layer extracted style features using feature summarizing inter-channel relations for input feature maps. It generated style weights and weighted input feature maps. The Gram layer proved to be effective in identifying movie poster characteristics and genre correlations, enabling more accurate multi-genre classification. It could be applied to various CNN architectures. Hesham et al.22 proposed an adaptive framework called Smart-Trailer (S-Trailer) to automate creating online movie trailers based on English subtitles. The framework analyzes subtitle files to extract textual features and classifies movies into genres. The system has high classification accuracy rates (0.89) and returns automated trailers with an average recall of 47%. Deep learning techniques are used to adapt the system to users’ preferences.

Tabernik et al.23 Movie2trailer was an unsupervised approach for automatic movie trailer generation, utilizing anomaly detection in shot selection. It extracted information directly from the input movie, producing high-quality trailers in less time than professional editors. The approach achieved visual attractiveness and closeness to the real trailer, revealing new horizons in anomaly detection applications. Mangolin et al.19 developed a multimodal technique for multi-label classification issues. The 152,622 movie items in the dataset—which included information like titles, synopses, and subtitles—were acquired from TMDb and carefully selected. Eighteen possible labels were to be used to select the genres. Numerous descriptors were produced, including convolutional neural networks (CNN), spectrograms, mel frequency cepstral coefficients (MFCCs), statistical spectrum descriptor (SSD), and local binary pattern (LBP) from spectrograms. ML-kNN and Binary Relevance classifiers were employed in the final model. F-score (0.674) and AUC-PR (0.725) metrics were best achieved by their final model, which combined an LSTM trained on synopses with another LSTM trained on subtitles.

Wang et al.24 offered a solution to the multi- label text classification problem by inspecting new terms and text categories for more accurate and robust classifications. Semantic words appropriate for a deep neural network and semantic representation model (DSRM-DNN) are chosen using word embeddings and clustering. Their final model uses a weighted combination of word properties, which function as the DSRM-DNN elements, to classify text elements. Low frequency words are subject to semantic words under sparse constraint and re-expressed throughout the categorization process. Yu et al.1 presented a two-module system for classifying movie trailer genres. In order to extract spatio-temporal information from trailer frames that contain the aforementioned features, the first module, called spatio-temporal, uses sophisticated convolution neural networks. The recovered spatio-temporal properties are processed by the second module, which is called the attention-based sequential module, in order to capture global high-level sequential semantic concepts.

Argaw et al.25 were the first to present a trailer generation framework that focused on shot-selection and composition within a full movie. This research presents a deep neural network based on the encoding-decoding structure and is called trailer generation transformer (TGT). The authors can effectively solve the problem of shot selection, but they cannot fully authentically capture the genre-specific elements. Smith et al.26 proposed a creative human–machine cooperation for generating trailers. However, this work bridges the gap between automation and human expertise by utilizing human intervention to complete final assembly, which makes automation limited.

Papalampidi et al.27 tackled the complexity of trailer generation by decomposing it into subtasks: narrative structure identification and sentiment classification. The unsupervised learning method they suggest comes with a refreshing view of decomposition but at the same time it might limit the control over generated content. Kakimoto et al.28 explore content editing techniques to personalize trailers to the audience. On the one hand, this work sustains audience preferences but on the other hand, it might be shallow to have a sole focus on editing techniques.

These works demonstrate that automatic trailer generation is still evolving. This research presents a new way of creating trailers using genre specific CNNs for both genre recognition and key scenes selection. Different from the old ways that normally utilize a common model or fail to take into account the specific genre of a movie, our approach adapts the trailer creation to the unique features of each movie genre. Herein lies the key advantage:

-

Genre-specific CNNs: Through using separate CNNs that are trained on data specific to each genre, our method can basically catch the sophisticated stylistic features and main scene clichés that differ from genre to genre. This is the case in spite of the fact that they might not be able to discriminate between the genres while a human being can.

-

Enhanced trailer quality: With the genre being covered, our model will choose the scenes that are most significant and representative of the specific genre. This is how the trailers are made more likely to connect with the audience intended for the movie.

Such a focus on the feature of genre is unique for the automatic trailer generation methods used at the moment, and it has the potential of enhancing the quality and success rate of automatically generated trailers.

Research methodology

Generating attractive trailers from movies requires efficient learning models and a comprehensive data set to train these models. The current section first describes the features of the used data set and then provides the details of the proposed method for automatic movie trailer generation.

Data

This section describes the specification of the dataset employed for training and testing the proposed movie trailer generation method. The goal was to gather a complete database with diverse genres of movies and useful features for both genre identification and key scenes selection.

Dataset encompasses 307 movies, each with the average duration of 73.95 min. Each sample includes the following items:

-

Visual information: Each movie is represented with video frames of size 240 × 320 pixels.

-

Audio information: The dataset includes the original English audio track for each film.

-

Textual information: Every sample includes English subtitles and an official poster that was released for the movie.

-

Genre labels: Each sample came with a respective genre label that was assigned so as to classify its category by genre (comedy, or fantasy in this case). For simplicity, the genre of each movie was defined as a single label. In other words, while some movies might belong to multiple genres, for the purpose of training and evaluation, a primary genre label was assigned to each movie sample.

-

Scene segmentation: Every film is broken down to its segments which comprise a total of 8912 scenes for the whole data set. Scene priorities are designated to each scene, “key” and “non-key” labels respectively indicate the importance of the trailer creation.

The mean number of scenes is 29.07 per movie, which is a relatively realistic range of scene amounts in feature films.

To create this dataset, we followed a meticulous process:

-

Movie selection: Our list of 307 movies was made up of different genres (comedy, fantasy, and horror), and we chose them with great care to make sure we have a range of styles, themes and production values.

-

Data acquisition: All the movies were obtained through legal channels, and this includes validating their licensing and copyright terms of use.

-

Pre-processing: Extensive pre-processing steps were applied:

-

Scenes within movies were identified by means of shot boundary detection algorithms, such as those used for the segmentation.

-

The tracks and subtitles were extracted from the movies.

-

Movie posters were gathered, and resized to the same standard format (270 × 400 pixels).

-

We had human experts giving importance labels to each scene which were then reviewed for their relevance to a trailer. Therefore, the scene importance dataset was labeled with this method to facilitate quality and accuracy.

-

The dataset size and genre balance were chosen for the purpose of providing an appropriate compromise between representativeness and computational feasibility. Though the increase of the training dataset size may lead to a better model performance, we chose to train a model with a reasonable size that still allows the model to include the most essential characteristics of every genre. The chosen genres (comedy, fantasy, and horror) are diverse and represent different filming techniques, giving our model a chance to learn those features that are genre-specific and key for scene selection in them. With this dataset we can consider it as a good basis for a training and evaluation of the proposed approach.

Proposed method

Generating attractive trailers should be done according to the style and genre of the film. In other words, key scenes in different genres may have different patterns and their identification through a common mechanism and model does not lead to a favorable result. For this reason, in the proposed method, the problem of automatic movie trailer generation is divided into two sub-problems: “genre identification” and “trailer generation based on the genre”. To solve the first sub-problem, the poster image and subtitle processing approach is used; while the second sub-problem is done through the simultaneous analysis of movie scenes and their subtitles. In both of these phases, the proposed approach utilizes the convolutional neural network (CNN) and tries to extract an attractive and comprehensive trailer from the movie by identifying the key scenes of the movie. With these explanations, the proposed method can be divided into the following main phases:

-

1.

Identifying the movie genre.

-

2.

Identifying key scenes and generating trailers based on genre.

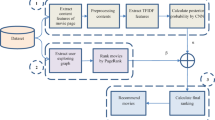

The steps of the proposed method are depicted as a diagram in Fig. 1. The steps of the first phase are shown as green blocks in this figure. Based on this figure, only the image of the movie poster and its subtitle are used to identify the genre of the movie. Therefore, the input data for the first phase (genre identification) includes the movie poster image in a specific resolution and the pre-processed subtitle text as a sequence of tokens. The pre-processing involves removing punctuation and stop words, and also stemming words. In the first phase, the processing steps imply taking visual features from the poster image via a CNN. On the other hand, the TF-IDF is a textual feature extractor from the text of the subtitles. They are the combined into a single feature vector. With that, the concatenated feature vector is then inputted into the classification and regression tree (CART) model for genre classification. The result of this phase is a genre of the film with the score that indicates the confidence of model to this classification.

After determining the genre of the movie, a CNN model specific to that genre is used to identify the key scenes of the movie. In other words, in the proposed method, a CNN model is defined for each movie genre, and this model is trained based on the key scenes of movies of a particular genre. For this purpose, at the beginning of the second phase, the film is decomposed into its constituent scenes, and then two classes of visual and textual features are used to describe the features of the scene. These two classes of features are combined to determine the key scenes based on the CNN model corresponding to the movie genre. The output format of this step (key scene selection) is a list of identified key scenes for the movie trailer. Each scene in the list is represented by its index within the movie footage, along with a confidence score reflecting the model’s belief in its importance for the specific genre. After determining the key scenes of the movie, the movie trailer is generated based on the time limit specified by the user.

Identifying the movie genre

In the first phase of the proposed method, movie genre identification is performed based on machine learning techniques. The purpose of identifying the movie genre is to use specific models for each genre so that based on them, key scenes for trailer generation can be identified more accurately and in accordance with the style of the movie. The proposed method for detecting the movie genre uses only the set of features extracted from the poster image and the subtitle of the movie. These two sets of features are often highly related to the movie genre and at the same time (unlike the features extracted from the sound or image of the movie) they entail very little processing load. Movie posters are used to convey some information about the genres through visual elements like the color palettes, theme or settings. As an example, a poster with the color scheme of darkness, gothic architecture, and characters in cloaks may give hints towards the horror genre. Furthermore, subtitle texts may also carry keywords used as references to dialogue or plot aspects specific to the genre. For instance, a regular use of words such as ‘investigation,’ ‘suspense,’ and ‘murder’ in subtitles can be pointed out as evidence of the horror genre. Thus, the first phase of the proposed method can be divided into the following partial steps:

-

1.

Describing features related to the genre based on the image of the movie poster.

-

2.

Describing content features based on the subtitle of the movie.

-

3.

Merging features and genre identification.

The rest of this section is dedicated to providing the details of each of the above partial steps.

Describing the features related to the genre based on the movie poster image

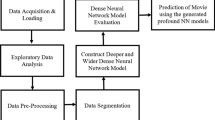

In the first step of the genre identification phase in the proposed method, the features related to the film genre are extracted through its poster image. For this purpose, a CNN is used. The structure of this neural network is shown in Fig. 2. This CNN model receives movie poster images as input. The movie genre variable is used as a target label in the training process. In this way, this CNN model is trained to be able to predict its genre based on the movie poster image. Since the standard dimension ratio in a movie poster image is 24:40; therefore, all inputs of this CNN model are resized to 270 × 400 dimensions. Each sample is described in terms of the RBG color system.

The proposed CNN model extracts features related to the movie genre in the input image through three convolution stages. In each convolution stage, a 2D convolution layer is first used to extract feature maps. Each convolutional layer uses ReLU (Rectified Linear Unit) activation functions for introducing non-linearity. We chose this architecture based on its effectiveness in capturing spatial relationships within visual data, which is crucial for analyzing movie posters. The final convolutional layer is followed by a fully connected layer with a SoftMax activation function, which outputs the probabilities of the movie belonging to each genre. The max pool layer is used to sample feature maps. As follows, each convolution step in this CNN model is formulated:

where \({\text{f}}_{{{\text{mpool}}}}\) denotes the max pool layer operator, which applies to each N × N patch and sends the maximum value of that patch as an output. Also, \({\text{Conv}}\left( {\text{x}} \right)\) describes the convolution operator on input x, and finally, \(\max \left( {[],0} \right)\) represents the behavior of the ReLU activation function, which replaces negative values with zero. According to Fig. 2, as the convolution layers progress, the layer dimensions decrease and the number of filters increases. Because with the increase in the depth of the network, the number of extracted feature maps increases, and for their detailed analysis, it is necessary to examine more states of the data patterns. It should be noted that in all convolution and pooling layers, the stride is set equal to the filter size, and in other words, there is no padding in the convolution and pooling operations. As mentioned, this CNN model is trained based on the genre label of samples to identify the relationship between the features of the poster image and the genre of the movie. However, this set of features is not enough to capture the diversity of poster design patterns, and the content features of the film are also very important. For this reason, after training the CNN model based on the genre label; in the test phase, the layers related to the classification are ignored and the activation output from the FC1 layer is considered as the output of the CNN model. This 500-length numeric vector, extracted from the movie poster by the proposed CNN model, represents the movie genre features. The Adam (Adaptive Moment Estimation) optimizer was used in CNN training. Adam helps with the effectiveness because of its efficiency, adaptivity and inertia, which result in the speed and quality of the solution found. During the training, the initial learning rate was considered as 0.001. This is a common starting point for Adam, particularly for image processing tasks with CNNs and experiments showed that, 0.001 offers a balance between exploration and exploitation during training. Also, the batch size was considered as 32 and the model was rained within 90 epochs.

Describing content features based on the subtitle of the movie

In the second step of the genre detection phase, the proposed method uses the subtitles of the films to extract the content features as TF-IDF vectors, after applying text processing techniques. We apply TF-IDF to get a set of textual features from the subtitle text. TF-IDF identifies keywords that occur commonly in a subtitle but are less frequently used in subtitles of the other genres. In that way it is possible to identify the genre-specific information which is within the textual content.

This mechanism starts with the pre-processing of the subtitles, which includes the processes of segmenting the text, removing ineffective words, and finding the stems of words. First, the subtitle is word-level analyzed. Then, each proper name and number in the text is replaced by “NameIdentifier” and “NumeralValue” keywords, respectively. In the following, all the ineffective words in the text that do not reflect any content in the text are identified and removed from it. During this process, a blacklist containing all the ineffective words of the English language is utilized. The pre-processing process is completed by finding the stems of the remaining words (except keywords). In the proposed method, the Porter stemming algorithm is used to find the stems of words. The computational steps of the Porter stemming algorithm for English texts are described in29, therefore, the details of this algorithm are omitted. Each of the processes used in the pre-processing step can be effective in reducing the length of the TF-IDF feature vector and increasing the generality of the proposed feature extraction model. Removing ineffective words helps to create a feature description model that reflects the content features of the sample, and this process eliminates redundant and useless information. Word stemming strategy makes different forms of a word (prefix and suffix) the same, which reduces the feature vector length and the overfitting risk.

After the pre-processing of the texts, the content features of the subtitles are described through the TF-IDF approach. Thus, a unique list of all stem words in the database texts is formed as \({\text{U}} = \left\{ {{\text{u}}_{1} , \ldots ,{\text{u}}_{{\text{n}}} } \right\}\). Then the number of repetitions of each word U in each subtitle is counted. \({\text{TF}}\left( {\text{d}} \right) = \left\{ {{\text{t}}_{{{\text{d}},1}} , \ldots ,{\text{t}}_{{{\text{d}},{\text{n}}}} } \right\}\) is the resulting vector for each text like d. Next, the frequency of each word U in all the database subtitles is counted to obtain a set like \({\text{IDF}} = \left\{ {{\text{f}}_{1} , \ldots ,{\text{f}}_{{\text{n}}} } \right\}\). The i-th element in the sets TF(d) and IDF shows the frequency of the root word ui in the sample d and all samples, respectively. The TF-IDF vector, which describes the importance of each concept in the film text, is obtained by combining these values. The proposed method uses the logarithmic combination of these two sets to reflect the content features more accurately. Therefore, in the end, the weight of each word such as ui for the subtitle d in the TF-IDF vector is calculated according to Eq. (2)30:

where \(\left| {\text{S}} \right|\) indicates the number of database samples, and \(\left| {\text{d}} \right|\) represents the number of words that make up the text d. Using the above relation, each sample is described in the form of a numerical vector with length n.

Merging features and genre recognition

In the third step of the genre identification phase in the proposed method, the CART model is used to classify CNN and TF-IDF features. For this purpose, first, the set of features extracted in the first step (CNN features extracted from the movie poster), and second, the TF-IDF features extracted from the subtitle, are combined into a single feature vector. This composite vector involves the display of both video and textual data in one place, which is pertinent to the purpose of genre identification. This vector is used as the input of a CART model to determine the movie genre based on it. CART is the algorithm that constructs a tree structure to evaluate the features within the vector. Every node of the tree stands for a decision rule constituted using a particular feature value. The CART model is designed to walk through the tree that is constructed from the extracted features, finally classify the movie into a particular genre that is learned by means of the deduced decision bounds.

The CART model is a machine learning model for classification and regression purposes, that builds a decision tree based on input features and divides the data into different classes the decision tree begins with a root node and splits the data recursively into different branches based on the input features until the specified stopping criteria are met. The resulting tree has a hierarchical structure. The CART model used in the proposed method is of binary type and each decision node can generate two children through a split point. The leaf nodes of the tree represent the target label of the samples that have reached that node by traversing the branches of the tree. In the proposed method, the Gini impurity criterion is used to construct tree branches. In this process, at each level of tree construction, the best split point for each node is determined by minimizing the Gini impurity of the resulting child nodes, which indicates how well the classes are separated. The functional mechanism of the CART model is explained in31. After identifying the movie genre, a learning model specific to that genre is used to identify the key scenes and generate the trailer, which is explained in the next section.

Identification of key scenes and trailer generation based on the genre

The proposed model for trailer generation uses a separate learning model to identify key scenes specific to each genre. Thus, after determining the genre of the movie in the first phase of the proposed method, the features of each scene of the movie are extracted and analyzed by the learning model corresponding to the genre of the movie to determine the importance of the scene (key or non-key scene). The proposed method for determining key scenes uses a combination of visual and textual features and classifies these features by a 1D CNN model. After identifying the key scenes, the movie trailer is generated considering the time limit based on the key scenes. Thus, the second phase of the proposed method consists of 3 partial steps, which are explained in the following section:

-

1.

Scene features description.

-

2.

Key scenes Detection based on CNN.

-

3.

Trailer generation based on key scenes.

Scene features description

In the first step of the second phase of the proposed method, the combination of subtitle features and visual features of the scene is used to describe each scene. The first feature set describing a scene is extracted through its subtitle. These features are extracted through the TF-IDF vector describing the scene. The TF-IDF vector extraction mechanism for a scene is similar to the mechanism described in “Describing content features based on the subtitle of the movie” section; With the difference that in this step, the resulting TF-IDF vector (instead of describing the content of the whole movie text) describes only the text content of the scene. To put it more clearly, in this step, the set of unique words U is generated only based on the words in the movie text. The subtitle of each scene of the current movie is considered as a separate sample. Then, to calculate the TF related to a scene, the number of repetitions of each stem word from U in the scene is counted, and the IDF shows the number of repetitions of each word in the entire current movie. Finally, (2) is used to extract the TF-IDF vector of the scene. The resulting TF-IDF vector is too long and too specific to the words used in the movie, so it cannot be used to determine key scenes. This vector requires a more complex model to learn patterns, and on the other hand, it describes sample-specific features instead of class-specific features, which increases the risk of overfitting. Because of these conditions in the proposed method, instead of using the scene TF-IDF vector, only one set of statistical features is used to describe the general features of the scene TF-IDF. These features include:

-

G1–2: Maximum and minimum weight value in TF-IDF scene.

-

G3–4: mean and standard deviation of scene TF-IDF vector values.

-

G5: The number of non-zero elements in the TF-IDF vector of the scene, which indicates the number of unique words used in the scene.

-

G6: The ratio of words that do not contribute to the meaning of the scene subtitle to the total number of words in the subtitle.

-

G7: The average of the TF-IDF vector similarity of the scene.

In this way, the features related to the subtitle of each scene are described through 7 numerical features. In the above set, the average similarity feature indicates the average cosine similarity of the current scene’s TF-IDF vector with the TF-IDF vectors of the other scenes that make up the movie. Based on this, the content importance of the scene can be described effectively. In order to calculate this feature for scene x, Eq. (3) is used:

where \({\text{F}}_{{\text{x}}}\) represents the TF-IDF vector extracted for scene x and K describes the set of scenes that make up the movie.

The second set of features used to describe each movie scene is the visual features of the scene, which can be used to identify key scenes more effectively by merging them with the features extracted from the text.

At this stage, the system tries to find the features that are most likely to be important for each scene and uses them to create an appealing trailer. The set of features used in the proposed method are as follows:

-

F1: the starting point of the scene, which is calculated by dividing the starting time of the scene (minutes) by the length of the film.

-

F2: duration of the scene in seconds.

-

F3: the sequential position of the scene in the film, which shows that the current scene is the nth scene of the film.

-

F4: The total number of scenes that make up the movie.

-

F5: Number of people with dialogue in the current scene.

-

F6–8: The standard deviation of the brightness intensity in the pixels of the current scene. In order to calculate this feature, first the standard deviation of each pixel in the scene frames of the scene is calculated and then the average values obtained for all pixels are considered. Note that this feature is calculated for each RGB color layer of the frame scene.

-

F9–11: The standard deviation of the brightness intensity of the current scene with other scenes: In this feature set, the standard deviation of the feature F6 to F8 in each scene is calculated with other scenes.

At the end of this step, the two feature sets G1–7 and F1–11 are appended to each other in the form of a numeric vector with a length of 18 to identify the key scenes based on them.

Detecting key scenes based on CNN

In the second step of the trailer generation phase, CNN models are used to determine the key scenes of the film. In the proposed method, the number of CNN models corresponds to the number of movie genres. In other words, the determination of key scenes in the movies of each genre is done by a dedicated CNN model, which is trained only based on samples belonging to that genre. Using genre-based models can be effective in identifying key scenes more accurately. Because the criteria and patterns of a key scene in each movie genre can be different from other genres, and for this reason, these differences can be modeled more accurately through special learning models. The CNN model used in the proposed model to determine the key scenes in each genre is depicted in Fig. 3.

According to Fig. 3, the proposed learning model is a 1D CNN whose input layer is fed through 18 features extracted in the previous step. This CNN model includes 3 convolution layers whose dimensions are defined as 15, 8, and 4 respectively. The number of filters in these convolution layers is set to 12, 32, and 64 respectively. The step size in all these layers is equal to 1. Each 1D convolution layer is followed by a logarithmic sigmoid function, and further, average pooling layers are used to sample the extracted feature maps. This function reduces the dimensions by half by calculating the average of both consecutive values in its input. At the end of this CNN model, fully connected layers are utilized to reduce feature dimensions so that the probability of each scene belonging to key and non-key classes can be determined using a SoftMax layer. The model of CNN employing for identifying the main scenes used the Adam optimizer during the training process. Besides, according to the experiments, the highest resulting values of 0.001, 20 and 60 were picked for learning rate, batch size and number of epochs, correspondingly.

Trailer generation based on key scenes

After classifying the movie scenes based on their keyness, a process based on iteration is used to generate the movie trailer. For this purpose, first, the key scenes identified based on the probability of belonging to the positive (key) class are sorted in descending order and the key scenes are added to the trailer based on the order of priority. This process is as follows:

-

Ranking key scenes: These scenes are ordered in the way how their prediction of being the key scenes looks like. By listing them in order of importance, this ranking will help to ensure that the scenes which are most critical to the telling of the story are offered up in the trailer.

-

Temporal ordering: What follows is the arrangement of the ranked key scenes, in terms of time, therefore maintaining the flow of scenes in the movie. It also serves to keep the time line going and does not pull the readers in different directions in terms of time.

-

Time constraint optimization: An ordering mechanism is employed to choose a set of important scenes that would not only give the maximal relevance and cohesiveness to the trailer but also fit the given time limit. This mechanism takes into account factors of scene length and scene importance.

-

Transition smoothing: To create continuity between the selected scenes when transferring from one scene to another transition is used. This refers to the technique of using fade-in, fade out and cross fading on the clips to avoid making certain cuts and thereby keeping the subject’s continuity almost perfect.

-

Audio integration: The audio tracks corresponding to the selected scenes are extracted and synchronized with the video.

-

Final trailer assembly: In the selected scenes, the synchronized audio is mixed to bring out a final trailer.

As a result, the proposed method produces trailers that are informative and contextually related to the movie, and would thus provide an accurate insight of the movie and compel the potential viewers.

Implementation results

Implementation details

MATLAB 2020a was used to implement and evaluate the proposed model. To carry out the studies and ensure robust performance evaluation, we used a cross-validation technique with 10 repeats. The proposed method was implemented in two stages. Using the proposed method, we recognized the movie genre in the first step. In the second phase, we discussed the identification of key sequences utilizing the proposed method. The proposed method focuses on automatic movie trailer generation by breaking it down into two sub-problems: genre recognition and production. To create an appealing trailer, it incorporates poster image and subtitle text processing, as well as a convolutional neural network. In the following, after explaining the evaluation metrics, the results obtained through examining the efficiency of the proposed method in genre classification and trailer generation has been presented.

Evaluation metrics

The following will outline the F-Measure, Accuracy, Precision, and Recall criteria, as metrics used to evaluate the proposed method. TP: true positive; FP: false positive; TN: true negative; FN: false negative.

Accuracy for each instance is the ratio of correct predicted output to total number of possible outputs for that instance.

Precision is a measure of the number of positive observations predicted. It is the proportion of successfully anticipated positives to total positive forecasts.

Recall is the proportion of predicted positive observations that are accurately predicted, compared to the total number of actual positive observations.

The F-Measure is the harmonic mean of the precision and recall values achieved for a specific classification model.

In the following, first the performance of the proposed method in identifying genres of the movies has been evaluated (“Performance analysis on genre identification” section, and then, its efficiency in producing genre-specific movie trailers has been examined (“Performance analysis on trailer generation” section).

Performance analysis on genre identification

The first phase of the experiments was carried out to evaluate the performance of the proposed method in movie genre classification. To comprehensively evaluate the performance of the proposed method in genre classification, we compared it with the following existing state-of-the-art approaches:

-

Mangolin et al.19: The technique adopts a pre-trained CNN model (VGG16) that is used to extract movie features from frames and then an RNN model that generates trailers. A similar two-stage approach (feature extraction and generation) is used by it, while another type of CNN architecture is used for feature extraction.

-

Placenet-LSTM20: The method involves using a pre-trained model to spot significant scenes for trailer creation. It provides a comparison of multiple scene identification methods in the trailers creation stage.

-

Jeong et al.21: This project is devoted to the movie summary and the scene segmentation, which consist of the scene recognition and the scene importance prediction. The technique is also relevant to this step of the approach as it deals with the same sub-question of what type of scenes should be chosen. We could show that our scene identifier approach is more effective and it can be done with genre-specific CNN models.

Additionally, we included two ablation cases within our own method to assess the impact of different feature sets:

-

Prop. (Subtitle): This experiment is to see whether poster features are helpful for genre classification by using the subtitle feature as vector representation with TF-IDF.

-

Prop. (Poster): In this study, the efficiency of subtitling is evaluated by performing genre classification, using only the CNN-extracted features from the poster as input data.

By comparing our proposed method with these diverse approaches and ablation cases, we gain a comprehensive understanding of the impact of combining poster and subtitle features. To put it in a nutshell, Prop. (Subtitle) and Prop. (Poster) full method demonstration becomes a strong evidence of the effectiveness of joining poster and subtitle properties to accomplish the genre classification.

Figure 4 illustrates the precision measure, the three genres (Horror, Sci-Fi, and Comedy) displayed. As you can see, precision values of 0.83, 0.82, and 0.80 have been attained by our suggested approach, Mangolin19, and Placenet-LSTM20. Furthermore, it has improved by 0.11 when compared to the Prop. (Subtitle) technique. This demonstrates that our technique uses a convolutional neural network to build an appealing trailer, poster image, and subtitle text processing that outperforms other methods.

We can see the recall criterion in Fig. 5, with the three genres (comedies, sci-fi, and horror) represented by the columns. As you can see, the recall criterion for our proposed method, Mangolin19, Placenet-LSTM20, and Jeong et al.21 are 0.83, 0.82, 0.80, and 0.77, respectively. Moreover, our method outperformed Prop. (Subtitle) by 0.11, because Prop. (Subtitle) only uses the set of features retrieved from the subtitle text to recognize the movie genre. If the approach we propose employs a convolutional neural network in addition to the characteristics derived from the subtitle and movie poster, this demonstrates that we were able to accurately recognize the number of examples of numerous movie genres using the recall criterion.

Figure 6 depicts the F-measure between the proposed approach and comparison methods. The comparable approaches of Mangolin19 and Placenet-LSTM20 have attained values of 0.82 and 0.80, respectively, while our suggested method has reached a value of 0.83 in this figure. As you can see, the F-measure is the consequence of the proposed method’s superiority in terms of precision and recall, which has generally provided excellent accuracy.

Figure 7 depicts the precision, recall, and F-measure measurements. The precision criteria for the suggested approach, Mangolin19, and Placenet-LSTM20 are, respectively, 0.83, 0.82, and 0.80. Additionally, they each have values of 0.83, 0.82, and 0.80 for the recall criterion. Additionally, their respective F-measure values are 0.83, 0.82, and 0.80. Also, comparing the proposed method with Prop. (Subtitle) and Prop. (Poster), it can be seen that Prop. (Subtitle) is somewhat better than Prop. (Poster), but the combination of these two mentioned methods in addition to the convolutional neural network It has caused our method to have high precision, recall and F-measure compared to all comparative methods.

Figure 8 displays the confusion matrix with the columns representing the ground-truth genres (Horror, Sci-Fi, and Comedy), while rows of the matrix represent predictions made by the algorithm. The results demonstrate the superiority of our method over other comparison methods: our suggested method has achieved an accuracy value of 83.39, whereas the Mangolin19 and Placenet-LSTM20 methods have reached values of 82.41 and 80.13, respectively.

Figure 9 depicts the average accuracy measure; in this chart, our suggested approach has the greatest value of 83.38, whereas the comparative methods of Mangolin19 and Placenet-LSTM20 have values of 82.41 and 80.13, respectively. Furthermore, our suggested approach provides a 1% advantage over the comparing approaches. And it has high accuracy in detecting and identifying the movie genre.

Figure 10 shows the ROC curves obtained by classification of movie genres by each method. The area under the curve (AUC) of the proposed method is 0.9084 and Prop. (Subtitle) is 0.8053 and also the comparative method of Mangolin19 is 0.8812. An increase in the area under the ROC curve indicates a decrease in the false positive rate (FPR) and an increase in the true positive rate (TPR). The graph’s output values demonstrate that, in comparison to other comparable approaches, our suggested method performs well.

In Table 1 the proposed method and Mangolin19 outperform other methods in terms of precision, recall, F-measure, accuracy, and AUC, with the latter showing better performance than the others. The proposed method and Mangolin19 have higher precision, recall, F-measure, accuracy, and AUC compared to other methods listed.

Performance analysis on trailer generation

This experiment, evaluates the efficiency of the proposed model in producing movie trailers. To evaluate the efficiency of the proposed method in generating trailers, we compared it with the following existing approaches:

-

SmartTrailer22: This paradigm explores the use of the subtitles from the English movies and extracts textual features, classifies movies into genres, and automatically generates the trailers. The comparison of our genre-specific selection of scenes and other techniques with SmartTrailer will examine how the quality metrics beyond classification accuracy can be affected as well.

-

Movie2Trailer23: This unsupervised fashion of anomaly detection is a way for automatic movie trailer generation. By comparing movie2trailer with the proposed method, we are able to see that there is a tradeoff between visual appeal and genre-specific accuracy within the trailer creation process.

Additionally, we included three ablation cases within our own method to assess the impact of different scene selection strategies.

-

Prop. (Visual features): It is a test case which analyzes the performance of subtitle features in giving crucial scenes for understanding the film. In this ablation scenario we choose key scene cut-outs only based on the visual features extracted from the camera shots. Let’s then go ahead and compare the entire suggested procedure with Prop. (Visual features) is supportive of illustration of advantages of applying data from movies subtitles along with visual clues for the identification of the important scenes.

-

Prop. (Subtitle features): Here visual features will be evaluated to identify which scenes are crucial. At this stage we choose the most important scenes from movies by analyzing them in no more than the subtitles (for instance, keywords, sentiment analysis). Evaluating the entire alternative method as compared to Prop. (Visual Features) is an attempt to show that combining visual features with subtitle features is ultimately a more efficient way of determining scene importance.

-

Prop. (No genre identification): This scenario refers to the case that trailer generation in the proposed method is performed based on a single CNN model. In other words, genre identification step is omitted in this scenario and a common CNN is used to identify key scenes for including in the trailer, for all instances. By comparing the results of the proposed method with this scenario, the influence of genre identification step on the quality of the generated trailers can be interpreted.

Figure 11 presents the precision, recall, and F-measure measures in trailer generation. In this graph, our suggested technique received a precision criteria value of 0.56, while the comparative methods of SmartTrailer22 and Movie2Trailer23 received values of 0.48 and 0.41, respectively. In the recall criterion, the suggested technique received a value of 0.45, while the comparative methods of SmartTrailer22 and Movie2Trailer23 received values of 0.42 and 0.37, respectively. Also, for the F-measure, values of 0.50, 0.45, and 0.39 were obtained, demonstrating the great performance of the technique we recommended in the precision, recall, and F-measure criteria. As shown in this figure, compared to the “Visual Features” and “Subtitle Features” cases, the proposed approach could improve the studied metrics. This proves that each of the utilized feature sets has a positive influence on the quality of generated trailers. Also, the proposed approach improves the precision, recall and F-measure compared to the “no Genre Identification” case by 13%, 6.2% and 9.1%, respectively. This can be interpreted as the positive influence of the proposed strategy for generating trailers based on the genre of the movies on the quality of the results.

Figure 12 indicates the Precision-Recall Curve (PRC), illustrating different precision values for various recall thresholds. According to the results, the area under the curve (AUC) for PRC of our proposed method exceeds those of the compared methods, signifying that our method not only identified key scenes with greater accuracy but also produced outputs closer to reality. As a result, in addition to increasing precision, it has also resulted in a higher recall value.

To evaluate the quality and effectiveness of the generated trailers a user satisfaction survey was conducted. 10 participants, with different experience and preferences in films, were selected to watch the trailers. Every subject was shown 30 one-minute-length trailers, produced by each of the compared methods. The test instances were randomly permuted and all models generated trailers for the same movies. The participants were also asked to complete a 10 Likert scale for each trailer where 1 represented low satisfaction and 10 represented high satisfaction. While rating trailers, the participants were told to rate 2 points at maximum for every of the following factors.

-

1.

Coherence: The logical continuity of the trailer.

-

2.

Engagement: The extent to which it engages the viewer and excite him or her about the content of the movie.

-

3.

Emotional Impact: To what extent does the trailer build the right feelings (fear, joy, excitement, etc.).

-

4.

Representativeness: To what extend does the trailer depict the general mood and the major messages of the film.

-

5.

Overall quality: A brief summary of the kind of trailer that has just been produced.

The average of each model was then computed and compared to the proposed model in order to evaluate the performance of the latter. Thus, the higher the average of these ratings, the more satisfied users are, which means that with the help of the given model, trailers that would be more attractive and effective are produced. The results of these rating are presented in Fig. 13. According to these results, the proposed model gained higher average scores which shows the higher quality of generated trailers in terms of user satisfaction. Additionally, the range of score variation for the proposed model is smaller than the compared approaches, showing higher reliability of the outputs generated by this model.

Table 2 depicts precision, recall, and F-measure values for various methods, including the proposed method, Prop. (Visual Features), Prop. (Subtitle Features), SmartTrailer22, and Movie2Trailer23. The proposed technique has the highest precision (0.5669) and F-measure (0.5018). These measurements provide a comparative summary of each method’s precision and recall performance.

Conclusion

The paper presented a novel use of CNNs for autonomously creating movie trailers. Two sub problems of the main research problem are addressed by the method: “genre identification” and “genre-based trailer production.” From the movie poster image, the authors use a CNN model to extract genre-related information in order to address the sub-problem of genre identification. In addition, a TF-IDF vector created from previously processed subtitle text is used to describe the content aspects of the film. To identify the genre of the film, a CART is used to classify a combination of these features. After the genre has been determined, key scenes from films in that genre are used to train a specialized CNN model for that genre. The film is segmented into scenes in the second phase, and each scene is given a unique visual and textual identity. Based on the CNN model linked to the genre of the film, these features are then integrated to determine important moments. Lastly, the user-specified time constraint is used to construct the movie trailer. Two metrics are used to assess the success of the proposed method: the quality of the trailer production and the accuracy of genre recognition. The findings show that the proposed approach outperforms other compared methods by at least 1%, achieving an accuracy of 83.39% in identifying movie genres. Additionally, the suggested approach shows a 56.69% trailer creation precision, which is at least 8% better than the methods that were compared. Ultimately, this work presents a promising way for autonomously creating movie trailers by using CNN models for scene selection and genre identification. This method produces better results than previous approaches in terms of accuracy and precision.

Data availability

All data generated or analysed during this study are included in this published article.

References

Yu, Y., Lu, Z., Li, Y. & Liu, D. ASTS: attention based spatio-temporal sequential framework for movie trailer genre classification. Multimed. Tools Appl. 80, 9749–9764 (2021).

Deldjoo, Y., Constantin, M. G., Ionescu, B., Schedl, M. & Cremonesi, P. MMTF-14K: A multifaceted movie trailer feature dataset for recommendation and retrieval. In Proceedings of the 9th ACM Multimedia Systems Conference, 450–455 (2018).

Simoes, G. S., Wehrmann, J., Barros, R. C. & Ruiz, D. D. Movie genre classification with convolutional neural networks. In 2016 International Joint Conference on Neural Networks (IJCNN), 259–266 (IEEE, 2016).

Wehrmann, J. & Barros, R. C. Movie genre classification: A multi-label approach based on convolutions through time. Appl. Soft Comput. 61, 973–982 (2017).

Zhou, H., Hermans, T., Karandikar, A. V. & Rehg, J. M. Movie genre classification via scene categorization. In Proceedings of the 18th ACM International Conference on Multimedia, 747–750 (2010).

Huang, H. Y., Shih, W. S. & Hsu, W. H. A film classifier based on low-level visual features. In 2007 IEEE 9th Workshop on Multimedia Signal Processing, 465–468 (IEEE, 2007).

Rasheed, Z., Sheikh, Y. & Shah, M. On the use of computable features for film classification. IEEE Trans. Circuits Syst. Video Technol. 15(1), 52–64 (2005).

Chu, W. T. & Guo, H. J. Movie genre classification based on poster images with deep neural networks. In Proceedings of the Workshop on Multimodal Understanding of Social, Affective and Subjective Attributes, 39–45 (2017).

Kundalia, K., Patel, Y. & Shah, M. Multi-label movie genre detection from a movie poster using knowledge transfer learning. Augment. Hum. Res. 5, 1–9 (2020).

Behrouzi, T., Toosi, R. & Akhaee, M. A. Multimodal movie genre classification using recurrent neural network. Multimed. Tools Appl. 82(4), 5763–5784 (2023).

Bhowmik, A., Kumar, S. & Bhat, N. Evolution of automatic visual description techniques-a methodological survey. Multimed. Tools Appl. 80(18), 28015–28059 (2021).

Dong, S., Wang, P. & Abbas, K. A survey on deep learning and its applications. Comput. Sci. Rev. 40, 100379 (2021).

Kumar, S. & Kumar, M. Predicting customer churn using artificial neural network. In Engineering Applications of Neural Networks: 20th International Conference, EANN 2019, Xersonisos, Crete, Greece, May 24–26, 2019, Proceedings 20, 299–306 (Springer, 2019).

Kumar, S., Kumar, N., Dev, A. & Naorem, S. Movie genre classification using binary relevance, label powerset, and machine learning classifiers. Multimed. Tools Appl. 82(1), 945–968 (2023).

Doshi, P. & Zadrozny, W. Movie genre detection using topological data analysis. In Statistical Language and Speech Processing: 6th International Conference, SLSP 2018, Mons, Belgium, October 15–16, 2018, Proceedings 6, 117–128 (Springer, 2018).

Hoang, Q. Predicting movie genres based on plot summaries. arXiv preprint https://arxiv.org/abs/1801.04813 (2018).

Portolese, G., Domingues, M. A. & Feltrim, V. D. Exploring textual features for multi-label classification of Portuguese film synopses. In Progress in Artificial Intelligence: 19th EPIA Conference on Artificial Intelligence, EPIA 2019, Vila Real, Portugal, September 3–6, 2019, Proceedings, Part II 19, 669–681 (Springer, 2019).

Mangolin, R. B. et al. A multimodal approach for multi-label movie genre classification. Multimed. Tools Appl. 81(14), 19071–19096 (2022).

Jiang, D. A hybrid PlacesNet-LSTM model for movie trailer genre classification. J. Theor. Appl. Inf. Technol. 100(14) (2022).

Wi, J. A., Jang, S. & Kim, Y. Poster-based multiple movie genre classification using inter-channel features. IEEE Access 8, 66615–66624 (2020).

Hesham, M., Hani, B., Fouad, N. & Amer, E. Smart trailer: Automatic generation of movie trailer using only subtitles. In 2018 First International Workshop on Deep and Representation Learning (IWDRL), 26–30 (IEEE, 2018).

Tabernik, D., Lukezic, A. & Grm, K. movie2trailer: Unsupervised Trailer Generation Using Anomaly Detection.

Wang, T. et al. A multi-label text classification method via dynamic semantic representation model and deep neural network. Appl. Intell. 50, 2339–2351 (2020).

Argaw, D. M., Soldan, M., Pardo, A., Zhao, C., Heilbron, F. C., Chung, J. S. & Ghanem, B. Towards automated movie trailer generation. arXiv preprint https://arxiv.org/abs/2404.03477 (2024).

Smith, J. R., Joshi, D., Huet, B., Hsu, W. & Cota, J. Harnessing AI for augmenting creativity: Application to movie trailer creation. In Proceedings of the 25th ACM International Conference on Multimedia, 1799–1808 (2017).

Papalampidi, P., Keller, F. & Lapata, M. Film trailer generation via task decomposition. arXiv preprint https://arxiv.org/abs/2111.08774 (2021).

Kakimoto, H., Wang, Y., Kawai, Y. & Sumiya, K. Extraction of movie trailer biases based on editing features for trailer generation. In 2018 IEEE International Symposium on Multimedia (ISM), 204–208 (IEEE, 2018).

Kulkarni, A. R. & Mundhe, S. D. An application of porters stemming algorithm for text mining in healthcare. Int. J. Manag. IT Eng. 7(11), 223–228 (2019).

Qaiser, S. & Ali, R. Text mining: Use of TF-IDF to examine the relevance of words to documents. Int. J. Comput. Appl. 181(1), 25–29 (2018).

Daniya, T., Geetha, M. & Kumar, K. S. Classification and regression trees with Gini index. Adv. Math. Sci. J. 9(10), 8237–8247 (2020).

Author information

Authors and Affiliations

Contributions

All authors wrote the main manuscript text. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yao, X., Du, W., Sun, L. et al. Automatic trailer generation for movies using convolutional neural network. Sci Rep 15, 7819 (2025). https://doi.org/10.1038/s41598-025-91084-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-91084-y