Abstract

Leprosy is a dermatoneurological disease and can cause irreversible nerve damage. In addition to being able to mimic different rheumatological, neurological and dermatological diseases, leprosy is underdiagnosed because several professionals present lack of training. The World Health Organization instituted active search for new leprosy cases as one of the four pillars of the zero-leprosy strategy. The Leprosy Suspicion Questionnaire (LSQ) was created aiming to be a screening tool to actively detect new cases; it is composed of 14 simple yes/no questions that can be answered with the help of a health professional or by the very patient themselves. During its development, it was noticed that the combination of marked questions was related to new case detections. To better encapsulate and being able to expand its use, we developed MaLeSQs, a Machine Learning tool whose output may be LSQ Positive when the subject is indicated for being further clinically evaluated or LSQ Negative when the subject does not present any evidence that justify being further evaluated for leprosy. To achieve a reasonable product, we trained four classifiers with different learning paradigms, Support Vectors Machine, Logistic Regression, Random Forest and XGBoost. We compared them based on sensitivity, specificity, positive predicted value, negative predicted value, and area under the ROC curve. After the training process, the Support Vectors Machine was the classifier with the most balanced metrics of 85.7% sensitivity, 69.2% specificity, 18.6% precision, 98.3% negative predicted values and an area under the ROC curve of 0.775, and it was chosen as the MaLeSQs. With Shapley values, we were able to evaluate variable importance and nerve symptoms were considered important to differentiate between subjects that potentially had leprosy from those who did not.

Similar content being viewed by others

Introduction

Leprosy is a chronic contagious disease and can cause irreversible nerve damage. Its etiological agents are Mycobacterium leprae and Mycobacterium lepromatosis that present a long incubation time of 2 to 5 years1. They mainly attack the Schwann’s cell in the peripheral nerves2 and skin cells causing dermatoneurological symptoms and signs3, which are essential for the clinical diagnosis of leprosy4.

Leprosy diagnosis faces some limitations. The disease can mimic rheumatological pathologies like inflammatory arthritis, nonspecific arthritis, and vasculitis5, diabetic and amyloid neuropathy6, or dermatological diseases like lupus7, mycosis fungoides8 and psoriasis9. Besides, leprosy is usually not suspected as it is no longer emphasized in medical curricula nowadays10. Although there is a preeminent serological immunoassay for leprosy11, there is no laboratory test that alone can make the diagnosis of the disease1. A study made in the emergency room12 with patients who have had wrong diagnosis in the referral showed that leprosy can mimic acute arterial occlusion, acute coronary syndrome, deep vein thrombosis, and venous ulcer, corroborating to the fact that several professionals present a lack of training in leprosis diagnosis, indicating a possible serious cases of undiagnosed leprosy in patients of the emergency room.

In 2019, more than 200,000 new cases of leprosy were globally reported to the World Health Organization (WHO)13. One of the four strategic pillars of WHO goal of a zero-leprosy world is an integrated active case detection14. Several active search strategies are usually employed by healthcare professionals or trained volunteers like door-to-door search15,16, household contacts evaluation17, evaluation among school children18 and search in prison population19,20.

The Leprosy Suspicion Questionnaire (LSQ) is composed of 14 questions that are normally asked during a medical appointment and cover both neurological and dermatological symptoms. The LSQ is a screening tool for the most common signs and symptoms related to leprosy from its early to late stages. By applying it in the community, we aim to inform the population about the disease (health education), in addition to selecting individuals suspected of having early neurological symptoms of the disease, significantly increasing the chance of them being recognized as having the disease (screening of early cases). The LSQ is a questionnaire for patient screening, and it proved to be an easy-to-use, low-cost tool that can be filled either by a health worker or by the very individual whose answer could indicate the possibility of being clinically evaluated by specialists. It has been developed by the team from the National Referral Center for Sanitary Dermatology and Hansen’s Disease during several active search campaigns19,20,21. After those campaigns, the studies that followed remarked different patterns between healthy individuals and new cases, where the neurological symptoms were more important compared to the cutaneous signs. A computational tool that handles patterns very well is machine learning algorithms and classifiers.

Since the dawn of artificial intelligence, different applications and studies have been developed in several usages within healthcare employing machine learning techniques. These techniques were applied to predict sepsis in ICU patients22,23, heart disease24,25,26, Parkinson’s disease stage27,28,29, cancer30,31,32,33, and so on, as well as in preventive medicine34,35,36.

Machine Learning techniques have also been useful for classification and analysis in leprosy studies37. For instance, AI4Leprosy, a diagnosis assistant running an image-based Artificial Intelligence (AI) that employed convolutional neural networks plus traditional methods as logistic regression, random forest and XGBoost38 resulting in an AUC of 98.74%. Another remarkable application of AI was the work conducted in the Brazilian North clustering vulnerable regions susceptible to a leprosy endemic using Self-Organizing Map (an unsupervised learning technique) in epidemiological data from Geographic Information System39. Also, a leprosy screening purpose application of AI was developed in Brazil whose data came from the National Notifiable Disease Information System – SINAN, but it was meant only to distinguish paucibacillary and multibacillary, not intended to screen patients in an active search for new cases40. An interesting approach was carried on predicting new cases among household contacts implementing a random forest as classifier using molecular and serological results as data entry41. However, none of the solutions were provided with the sole purpose of screening, but more intended to help doctors in diagnosis and set regions to an active search campaign.

Our work intended to apply machine learning algorithms to screen individuals for leprosy based on how they filled the LSQ, we called this tool Machine Learning for Leprosy Suspicion Questionnaire Screening (MaLeSQs). We have chosen the best one within four classifiers representing each a different paradigm: the kernel-based Support Vectors Machine, the regressor Logistic Regression, the tree Random Forest, and the boosting XGBoost. Data cleaning was performed to handle missing values, an exploratory data analysis was conducted to allow readers to better understand data and create a more solid comprehension to the machine learning approach. A pre-processing stage was made with data augmentation by crossing questions and applying the Synthetic Minority Oversampling Technique (SMOTE), correlation was handled with phi-coefficient. Boruta was implemented to clean up noisy attributes. Hyperparameter optimization was performed by exhaustive search by pairs. The metrics used to evaluate classification were sensitivity, specificity, precision, negative predicted value, receiver operating characteristic curve and the area under the curve. Shapley values42 were calculated for each classifier to better understand the classification process43 as well as bring some insights about the LSQ and its relation to the disease. We finally discuss and compare our results with other screening strategies based on the new case detection rate (NCDR) and other machine learning models built to assist leprosy diagnosis.

Methods

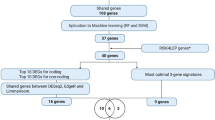

In this section we describe the methodology employed to achieve the best classifiers. We first introduce the Leprosy Suspicion Questionnaire, the studied population and the necessary adaptations made to fit old questionnaires into the registered version in Sect. 2.1 to 2.3. Secondly, we present the implementation environment employed to train MaLeSQs in Sect. 2.4, describe the preprocessing steps in Sect. 2.5 to 2.7, and the machine learning step in Sect. 2.8; these can be followed with the aid of Fig. 1.

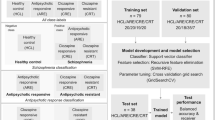

Design of machine learning algorithms. The missing data was dropped from the dataset. A data augmentation process was implemented by combining questions. The data set was split in training and test sets in the proportion of 80:20. Based on Φ coefficient (check supplementary material for definition), variables with high associations were dropped from training set and those same variables were dropped from the test set. SMOTE was applied in a copy of the training set and only the variables accepted by Boruta were kept in both training and test set. Four classifiers, Support Vectors Machine (SVM), Logistic Regression (LR), Random Forest (RF) and XGBoost (XGB), had their hyperparameters optimized (HyperOp) within a pipeline with SMOTE implemented and the trained models were used to classify the test set whose predictions were compared to their true values generating several metrics availed to compare performances.

The leprosy suspicion questionnaire

The 14 questions of the LSQ are shown in Table 1. They cover dermatological symptoms (\(\:q6\), \(\:q8\), \(\:q9\), \(\:q10\) and \(\:q13\)), neurological symptoms (\(\:q1\), \(\:q2\), \(\:q3\), \(\:q4\), \(\:q5\), \(\:q7\), \(\:q11\) and \(\:q12\)), and the contact factor of leprosy (\(\:q14\)). Those questions are going to be treated as variables in the next sections and, therefore, are graphed as such in this text. The registered questionnaire can be downloaded in https://www.crndsh.com.br/qsh both in Portuguese and English. These questions wait marked or not-marked answers. If the question was marked, it received value 1, if blank, the value was 0.

Study population

The dataset contained 1,842 instances. As it had been applied in several situations, database came from five different events. There were three different campaigns to identify leprosy patients in Ribeirão Preto region in the state of São Paulo, Brazil19,20,21 (Jardinópolis City, J; Female Prison in Ribeirão Preto, FPRP; and Center of Penitentiary Progression from Jardinópolis, CPP); a screening process made in Ribeirão Preto City during a professional training (PLTD)44; and patients from a private clinic (PC). Those campaigns pursued an active search for leprosy patients. The distribution of LSQs from each event is shown in Fig. 2. The clinical diagnosis of every participant of this study was made by a specialist in leprosy. The entire dataset was anonymized and only the variables with respect to the LSQ were maintained.

Distribution of patients and health individuals from the five campaigns whose data was available to make the present study. In gray we see that all the 22 individuals from private clinic (PC) were patients. In light orange, from the 34 individuals screened during the professional training in leprosy diagnosis (PTLD) 26 were healthy individuals and 8 were patients. In light blue, from the 487 individuals that filled the LSQ in the city of Jardinópolis (J), 423 were healthy individuals and 64 were patients. Within the 404 individuals from the female prison in Ribeirão Preto (FMRP) showed in blue in the diagram, 390 were healthy individuals whereas 14 were patients. Finally, 895 inmates from the Center of Penitentiary Progression (CPP) were screened leading to 31 new cases and 864 healthy individuals that are shown in dark blue. All the campaigns combined resulted in 139 new case detections and 1703 healthy individuals portrayed in red and orange, respectively.

From the 1,842 participants, 1,089 were male and 753 were female. The mean age of 1,839 participants (3 were missing) was 36 with a standard deviation of 15 years. The minimum age was 2 and the maximum was 94 with median of 33 years, with first quartile in 25 years and third quartile in 44 years. Within the new cases detected, 122 of the diagnosis were borderline leprosy, 7 were tuberculoid, 5 were neural, 1 was indetermined, and 4 were blank. The NCDR within the studied population was 7.5%.

Adapting LSQ

All data was provided in Excel sheets. In all five events where LSQ was applied, two different versions were used, and both were slightly different from the registered version. Both versions are in Supplementary Tables 1 and Supplementary Table 2. According to the specialist advice, the first version of the LSQ was adapted in the following way: questions 1, 2 and 3 were kept in the same order being exactly the same questions as in the registered version, question 4 was assigned an empty value because a question about muscle cramps was not part of this version, then questions 5, 4, 7, 6, 8 and 9 in this sequence, as they present the same statements of those in the registered version of the questionnaire in this very order, questions 10 and 11 were merged because in the registered version there is only one question with both of these statements, then question 12 being the same question as in the registered version, questions 13 and 14 were merged because there is one question with both assertions. To merge questions, we used an OR (˅) operator. The second version of the LSQ only went through a change in the order of the questions since they are the same questions of the registered version of the LSQ but in a different order. The order was changed as follows, questions 1, 2, 5, 4, 3, 6, 8, 7, 9, 10, 12, 13, 14 and 16; question 15 was not present in the registered version of the questionnaire. The necessary adaptations to the LSQ as described above are in Table 2. The adaptations were made directly within the last version available of Excel and a csv version of the file was exported.

Implementation environment

Most of the code was implemented in Python 3.9 on the latest available version of Jupyterlab in the Anaconda environment. The libraries employed to this study were Numpy 1.21, Pandas 1.4, Matplotlib 3.5, Sklearn 1.0, XGboost 1.5, Imblearn 0.7 e Shap 0.7. To implement Boruta, we used R language with Boruta 7.0 package. A simplified version of the notebook will be made available over demand. All ran on a machine with Windows 10 Pro 64-bits operational system in an AMD Ryzen 5 3350G processor with 16 GB RAM. The implementation of the code took around one month to complete, and the experiment took two days to run. In Table 3 we summarized the setup for the experiment.

Missing data and exploratory data analysis

Due to the necessary adaptations, and because most LSQ came from version 1, a couple of questions were almost entirely blanked. As questions 4 and 14 presented 98.2% of missing data, we had to drop both variables. So, muscle cramp was not evaluated as a symptom linked to leprosy and the contact influence implied by question 14 was set aside, leaving the opportunity for future research. Finally, the chi-square test was used to verify associations between each question and the diagnosis made by the specialists.

Data augmentation and train-test split

To enrich the dataset, a process of data augmentation was implemented. This process consisted of creating new variables based on the combination of a pair of questions using an “AND” operator. If questions qi and qj were marked (i.e., the value of 1), a new variable qiqj was created with value 1; else, the new variable qiqj would receive a value of zero. This was replicated to every question in the dataset. This data augmentation process resulted in a total of 78 variables in total.

After this process, we stratified and splitted the dataset into training and test sets in the proportion of 80:20. This resulted in 1362 healthy individuals and 111 new cases detection in the training set, and 341 healthy individuals and 28 new cases detection in the test set. All the next processing steps were performed and calculated in the training set and replicated in the test set, except for SMOTE, that was applied only in the training set.

Balancing the data and data-cleaning

The data was slightly imbalanced presenting a 12.25:1 ratio. To overcome this problem, the Synthetic Minority Oversampling Technique (SMOTE) was applied only in the training set, i.e. in the 80% data split in the previous step. SMOTE was applied in two different steps during this work. First it was used before applying Boruta to calculate feature importance as it will be described next session. Second, it was applied within a pipeline during hyperparameters optimization and model training to avoid data leakage. After SMOTE, we ended up with 1,251 synthetic samples of new cases detection in the training set. SMOTE was not applied in the 20% test set, where data was let as it was collected in the real world.

The data augmentation process implemented could result in undesirable associations between variables, spoiling the dataset to feed classification algorithms in further steps. As we had only binary variables, we applied Matthews Correlation Coefficient φ to investigate these associations in the training set. To choose a coefficient threshold to drop features we trained the four classifiers without hyperparameters optimization, using SMOTE and Boruta. We tested from moderated to strong correlation coefficients45 whose results can be checked in Supplementary Tables 4 and 5. Except for the Support Vectors Machine, that all AUC values were statistically equal, we can observe a small drop in AUC values for Logistic Regression, Random Forest and XGBoost with the increase of the correlation coefficient used as threshold to drop features. When we compare 0.65–0.70, 0.65–0.75, and 0.70–0.75 for the four classifiers, the AUC are statistically equal. Therefore, 0.75 was chosen as a threshold to maintain the greatest number of features as possible in a way that not too much information would be lost, but still improving the dataset to hyperparameter optimization. For all associations with φ ≥ 0.75 at least one of the variables was dropped from the dataset.

Also, due to data augmentation process, noisy variables might appear. To get rid of this noise, we used Boruta method to verify feature importance. But first we had to balance the training set. For this purpose, the Synthetic Minority Oversampling Technique (SMOTE) was applied. And only after SMOTE, Boruta was availed to the training set. The p-value threshold considered to keep a variable was set to 0.05. Solely the variables accepted by the algorithm were maintained on the dataset. Details on how Boruta algorithm works can be found in the Supplementary Material. Finally, the data was ready to feed the classifier models for hyperparameter optimization and training.

Machine learning stage

The classifiers and hyperparameter optimization

Four classifiers with different paradigms were employed. The one representing kernel-based models was the Support Vectors Machine (SVM); Logistic Regression (LR) represented linear classifiers; ensemble tree methods were embodied by Random Forest (RF); and to stand for boosting methods, the Extreme Gradient Boosting (XGBoost or XGB). All the classifiers were implemented within a pipeline to facilitate their combination with SMOTE during hyperparameter optimization and avoid data leaking to the validation set.

Hyperparameter optimization is an important stage. It was performed because best classification means more correct predictions, and bad predictions might mean several ill people been classified as healthy. This is not interesting at all for the screening purpose intended for the present study.

Hyperparameters were optimized exhaustively in pairs of hyperparameters with stratified cross validation with 5 splits and 20 repetitions. The score parameter to evaluate best performance in optimization was the area under the receiver operating curve (AUROC). The intervals and steps chosen for each hyperparameter for each classifier are shown in Table 4. The hyperparameters were optimized in the same order as depicted in the table. Identical superscript symbols mean that the hyperparameters were optimized at the same time. A description of each hyperparameter can be seen in Supplementary Table 3. The hyperparameters for the 4 machine learning methods are: (1) SVM: C and kernel, if the best kernel chosen was polynomial one, we would optimize degree as well (it was not the case of the present work); (2) LR: C and penalty; (3) RF: number of estimators (n_estimators) and maximum depth of trees (max_depth), minimum samples split in each node (min_samples_split) and maximum features (max_features) used in each tree, minimum samples in each leaf (min_samples_leaf) and maximum samples to draw during bootstrap (max_samples), and the maximum number of leaf nodes (max_leaf_nodes); and (4) XGBoost: were learning rate (learning_rate) and number of estimators (n_estimators), maximum depth of trees (max_depth) and minimum sum of instance weight in a child node (min_child_weight), subsample ratio of instances (subsamples) and subsample ratios of columns (colsample_bytree), L2 regularization (gamma) and the minimum loss reduction required to partition a node (lambda), and L1 regularization (alpha). To ensure that good values were chosen, we plotted the entire range (check Supplementary Fig. 3) and avoided picking hyperparameters in the extremes of the interval for the first pair of hyperparameters that were being optimized. Adjustments were not made to prevent overfitting46 and to avoid local optimum47 during this validation step.

Evaluation of classifiers performance and explaining classification

After hyperparameter optimization, the trained classifier models were applied in the test set. The classification from the models was compared to the diagnosis given by the specialists. Performance of classifiers were measured with metrics from confusion matrix, sensitivity, specificity, precision, and negative predicted values. ROC and AUROC were also reported to show the predictive power of models. We also translated the sensitivity to NCDR, being able to compare with other studies.

To better understand what is happening inside each classifier, Shapley Values were calculated. With these values, it was possible to bring important insights from the classification and better understand how each model is using the top 10 most important features from the dataset to predict the outcome.

Role of the funding source

The funder of the study had no role in the study design, data collection, data analysis, data interpretation, or writing of the report. All authors had full access to all the data in the study and had final responsibility for the decision to submit for publication.

Result

Exploratory data analysis and pre-preprocessing

A chi-square test was done to compare the LSQ results between healthy individuals and Hansen’s disease new case detections. The test shows that questions q8 (p = 1.0000), q10 (p = 0.1627) and q13 (p = 0.8880) did not present any significant statistical difference (Table 5). However, we held the three variables within the dataset to keep as most information as possible at this stage of the analysis. Also, nine questions with p-value < 0.05 strongly indicates LSQ as a useful patient screening tool because of remarkable statistical difference between groups.

The association matrix built with ϕ-coefficient can be checked on the heatmap in Supplementary Fig. 1. The process of dropping variables with ϕ > 0.75 reduced the number of variables in the dataset from 78 to 54.

To enhance computational cost during hyperparameters optimization Boruta feature selection was implemented. Only the Accepted variables were kept in the dataset resulting in a dataset with 38 variables. These results are shown in Fig. 3. All the single questions were accepted showing a different angular coefficient with respect to feature importance as compared to combination of questions. We leave this door open for future research.

Result from Boruta feature selection implemented in the dataset. The blue boxplots are the importance of the shadows (check Supplementary Material to read more about Boruta and the shadow variables), the yellow ones are the tentative, and the green ones are the accepted variables. Only the ones marked as Accepted were kept in the dataset.

Classifiers and hyperparameters optimization

Table 6 depicts the best values chosen by grid search for each hyperparameter. The best hyperparameters chosen for SVM were C = 0.0464 and the Kernel chosen was the radial basis function (rbf). The best hyperparameters for LR were C = 0.00202 and the L2 penalty. The hyperparameters for random forest were 450 estimators (trees) with the maximum depth of 5, the minimum samples split was 100, the maximum number of features was 6, the maximum samples rate was 0.3, the minimum number of samples per leaf was 10 and the maximum amount of leaf nodes was 9. For the XGBoost, the best learning rate was 0.0001 for 100 estimators, with a maximum depth of the trees of 6, the minimum child leaf weight of 5, a subsample rate of 0.5, a rate of columns chosen by tree of 0.5, a gamma equals 0.2, the best lambda was 1.0, and the best alpha was 0.02.

Performance of the classifiers

The metrics used to measure performance were from confusion matrix, and the ROC and the respective area under the curve. In Table 7 we show sensitivity, specificity, precision, and negative predicted value for each classifier. With SVM we achieved a sensitivity of 85.7%, a specificity of 69.2%, a precision of 18.6% and a negative predicted value (NPV) of 98.3%. Logistic Regression achieved a sensitivity of 60.7%, specificity of 80.7%, a precision of 20.5% and a NPV of 26.2%. Random Forest reached a sensitivity of 75.0%, a specificity of 76.0%, a precision of 20.4% and a NPV of 97.4%. Last, XGBoost got a sensitivity of 67.9%, a specificity of 77.7%, a precision of 20.0% and a NPV of 96.7%. As we searched for a balance between sensitivity and specificity, the best classifier following these metrics was SVM, even though it presented a low precision, because as this is intended for screening purposes only, it becomes very interesting to have some false positives instead of risking having more false negatives.

In Fig. 4 we show the ROC curves for each classifier with the respective area value depicted in the legend. We can state from these results that the classifier with the strongest predictive power is SVM, due to its bigger AUROC of 0.776, 1.7% bigger than the second place XGBoost, and 8.7% bigger than the worst classifier Logistic Regression.

Interpretability of models with Shapley values

Figure 5 shows the results of Shapley values for each classifier. The high value in the colorbar means marked answer for the question, and low value, a non-marked answer. From these results it is interesting to highlight two aspects. The first one is that the top 10 most important variables for all the classifiers were basically the same, i.e., q1, q2, q3, q5, q6, q7, q8, q11, q1q7 appeared within the top 10 of the four classifiers, q9 appeared in two (SVM and XGB), and q3q5 also in two (LR and RF). The second one is the counterintuitive fact that for some questions, notably q8, q9 and q11, when the individual response was positive, it had a negative impact on the model, meaning that when individuals presented those symptoms, the model tended to classify the individual as healthy. This happened because these three questions concern more advanced symptoms of the disease.

Shapley values for each classifier. In the left pictures are the individual Shapley value for every participant in the research where red means marked question and blue no marked question. In the right side of the pictures are the mean of the absolute Shapley values of the participants for the respective variable. In (a) we show the values for SVM, in (b) for Logistic Regression, in (c) for Random Forest and in (d) for XGBoost. The + 0 that appears in the bar graphs is a rounding provided by the Shap library, but their values are the ones in the x-axis.

Discussion

In past years, several works applying machine learning in healthcare questionnaires with yes-no answers have been presented to the community48,49,50,51. Many research exploited the use of feature selection52,53,54. Numerous classifications implemented SMOTE to achieve better prediction results55,56,57 and brought more interpretability to their model with Shapley Values58,59,60,61. However, none of them addressed Hansen’s Disease and the Leprosy Suspicion Questionnaire.

Leprosy is an under-diagnosed disease. In 2022, the LSQ was recognized by the Brazilian Health Ministry as a successful experience in leprosy field62 as a tool to improve diagnosis and health education. Furthermore, Brazilian government adopted the LSQ as part of its effort in active search for new leprosy cases63 and our Primary Care Units are prepared to apply it in their clinical routine making a checklist of symptoms and signs to think of and recognize leprosy. Besides, several studies and strategies for municipal health promotion addressing communities are employing LSQ as a tool for active search for new leprosy cases19,20,21,44,64,65,66,67,68,69,70.

Our results point out the strength of MaLeSQs applying machine learning algorithms on the analysis of LSQ responses for diagnosing HD. The good balance of sensitivity and specificity achieved by the classifiers, when their sum is bigger than 1.5 71, shows evidence that the test is useful, and an acceptable AUC value (0.7–0.8)72 guarantees the quality of the model. The low precision assures that even though healthy people might be alarmed by a false positive, these will look for health assistance, and health workers will be able to evaluate and educate a person about the disease. It is not necessary to fully understand the machine learning models to employ them, being able to correctly apply and instruct people about the LSQ filling is enough.

The LSQ is easy to distribute, and people can fill it by themselves or aided by a community health agent. Besides, there is no need to think on calculations to decide whether an LSQ is positive or negative, the classifier does it by itself. The model encapsulates all knowledge acquired during the campaigns in regards of the LSQ and can be easily distributed via a web application and reach far places with a relatively low cost with no need for an expensive staff to be mobilized on field to exclusively work on patient screening.

Based on the values of Table 7 it is possible to calculate the relative risk (RR). A person classified as New Case (LSQ+) by the SVM is 10.9 times more likely to carry leprosy than one classified as Healthy Individual (LSQ-). As for Logistic Regression, an LSQ + is 5.4 times more likely to be leprosy than an LSQ-. Whereas for Random Forest, an LSQ + is 7.8 times more likely to be a New Case than an LSQ-. Finally, an LSQ + by XGBoost means 6.1 times more likely to be a new case. Therefore, an LSQ + by any of the classifiers employed in this study presents a high risk (RR > 2.0) of being a new case.

During the campaigns in the CPP19, in the FPRP20 and in Jardinópolis21, they elicited positive LSQ as a screening criteria. LSQ + meant at least one marked question. Applying the same idea in the present dataset we would achieve a sensitivity of 92.9% and a specificity of 64.8%. The sum of 1.58 is above the threshold of usefulness of a test in health care, whereas applying machine learning we achieved a sum of 1.55 with SVM and 1.51 with Random Forest. Only LSQ alone is already a powerful tool to screen leprosy patients. However, the decrease of 7.2% points in sensitivity meant only 2 more subjects being classified as false negatives, and the increase of 4.4% points meant 15 more subjects being classified as true negatives (Supplementary Fig. 2 shows the confusion matrix of both cases illustrating this result). Those 15 subjects classified as LSQ- would not be called for a consultation or further investigation with clinicians and specialists, saving money and several medical-hours, considering the long time needed for diagnosing leprosy.

A systematic review of diagnostic accuracy of tests for leprosy73 included 78 studies. This review evaluated the detection of IgM antibodies against phenolic glycolipid I using ELISA, qPCR and conventional PCR. The sensitivities were 63.8% (95% CI 55.0–71.8), 78.5% (95% CI 61.6–89.2) and 75.3% (95% CI 67.9–81.5) respectively. The specificities were 91.0% (95% CI 86.9–93.9), 89.3% (95% CI 61.4–97.8) and 94.5% (95% CI 91.4–96.5) respectively. SVM achieved a higher sensitivity (85.7%) than all those traditional tests and RF presented an equivalent sensitivity (75.0%). Despite the lower specificities (69.2% for SVM and 76.0% for RF), the combination of the LSQ with machine learning algorithms does not require either blood samples, expensive equipment, or highly trained personnel, demonstrating its high applicability in guiding leprosy diagnosis.

The work closest in goals to ours was the AI4Leprosy38. Although they had good results with an AUC of 98%, a sensitivity of 89% and a specificity of 100%, they used only 222 patients, focusing most on spots on the skin, using pictures taken under controlled circumstances with DLSR cameras in a photographic studio. Whereas in this study we used the LSQ, a questionnaire with simple questions that can be filled with the aid of a health professional or by the individuals themselves. Moreover, because it was an image-based screening tool, they had to use neural networks (Inception-V4 and ResNet-50) combined with machine learning methods (elastic-net logistic regression, XGBoost and Random Forest), and cloud computing to train their model38. While our questionnaire-based screening approach was trained only on machine learning methods using a common desktop. Besides, our tool was able to differentiate healthy individuals from new cases even if they did not present any spot on the skin, focusing also on the early leprosy neurological symptoms74. MaLeSQs is an economical tool to be used in screening in remote regions or based on telemedicine75,76,77. That turns it into a low cost, accessible tool to be used by public healthcare systems attending large geographical regions. Note that MaLeSQs may be integrated alongside an image analyzer78.

We used a high prevalence study population (7.5%), what probably caused a high NCDR of 85.7% for SVM, 60.7% for LR, 75% for RF and 67.9% for XGB, i.e., the sensitivity of each algorithm. A study in Ethiopia conducted full village surveys in selected villages whose screening methods were looking for skin lesions suspected for leprosy and including all household contacts; they achieved a NCDR of 9.3 per 10,000 population79. Another study performed in Cambodia among household contacts and neighbor contacts who presented clinical signs of leprosy followed by a leprologist evaluation achieved respectively a NCDR of 25.1 per 1000 population and 8.7 per 1000 population80. A community wide screening conducted in Malaysia where medical officers went through an active case detection activity searching for abnormal skin changes achieved a NCDR of 722 per 10,000 population, n = 6/8381. Therefore, further studies might be pursued where these algorithms are used as a screening tool in the field to check if this high performance is sustainable. In addition, geostatistical data might be used to select regions with higher probabilities to find new cases.

The Shapley values for feature importance brought great insights into the interpretation of our results. Although the ratio of combining dermatological and other signs questions to neurological ones was 5:7, the ratio of questions associated within the top 10 to those symptoms selected by importance varied from 7:3 (p < 0.0001) to 8:2 (p < 0.0001), with statistical difference. We can infer that neurological symptoms had a greater statistical importance as compared to other symptoms for the classifiers when both types of symptoms are evaluated together. Besides, questions related to high degree of physical incapacity or a more advanced disease within the spectrum (notably q8, q9 and q11) were more related to negative Shapley values. Thus, those symptoms are not the best ones to find an early diagnosis82, but indicating that the association of LSQ and machine learning algorithms might be ideal to be used during screening for initial symptoms.

For further studies, a wider application of the LSQ is indicated. We limited our dataset within a Brazilian region where leprosy is endemic; then different outcomes may be expected in low endemic regions. Results may also vary in countries and regions with population outside the age range, given that peripheral neuropathy prevalence is higher amongst elderlies83, and with different degrees of the disease within the leprosy spectrum, considering that people in different degrees of the spectrum present different signs and symptoms74. In future work, we intend to validate MaLeSQs on external datasets with different populations to ensure generalizability across various regions. Unsupervised feature selection53 instead of Boruta might lead to a more balanced choice for question this part of the experiment. Frameworks that optimize SVM parameters and select optimal features like JASMA-SVM84 are interesting innovative approaches to improve performance. It would be compelling as well to compare results obtained in this study with the LSQ filled by people with diabetes, carpal tunnel syndrome, and other diseases that may affect the peripheral nervous system.

Data availability

The code developed during the study and the datasets are not publicly available since it is still being further analyzed. Some parts may be made available on reasonable request to the corresponding author.

References

Kundakci, N., Erdem, C. & Leprosy A great imitator. Clin. Dermatol. 37, 200–212 (2019).

Maymone, M. B. C. et al. Leprosy: Clinical aspects and diagnostic techniques. Contin. Med. Educ. 83, 1–14 (2020).

Sollard, D. M. et al. The continuing challenges of leprosy. Clin. Microbiol. Rev. 19, 338–381 (2006).

Guidelines for the diagnosis, treatment and prevention of leprosy. https://apps.who.int/iris/handle/10665/274127 (2018).

Salvi, S. & Chopra, A. Leprosy in a rheumatology setting: A challenging mimic to expose. Clin. Rheumatol. 32, 1557–1563. https://doi.org/10.1007/s10067-013-2276-5 (2013).

J Nascimento, O. Leprosy neuropathy: Clinical presentations. Arq. Neuropsiquiatr. 71, 661–666 (2013).

Hsieh, T. T. & Wu, Y. H. Leprosy mimicking lupus erythematosus. Dermatol. Sin. 32, 47–50. https://doi.org/10.1016/j.dsi.2013.01.004 (2014).

Rodríguez-Acosta, E. D. et al. Borderline tuberculoid leprosy mimicking mycosis fungoides. Skinmed 11, 379–381 (2013).

Vora, R. V., Pilani, A. P., Jivani, N. & Kota, R. K. Leprosy mimicking psoriasis. J. Clin. Diagn. Res.: JCDR 9, WJ01 (2015).

Alemu Belachew, W. & Naafs, B. Position statement: LEPROSY: diagnosis, treatment and follow-up. J. Eur. Acad. Dermatol. Venereol. 33, 1205–1213. https://doi.org/10.1111/jdv.15569 (2019).

Lima, F. R. et al. Serological immunoassay for Hansen’s disease diagnosis and monitoring treatment: Anti-Mce1A antibody response among Hansen’s disease patients and their household contacts in Northeastern Brazil. Front. Med. 9, 855787–855787. https://doi.org/10.3389/fmed.2022.855787 (2022).

Bernardes-Filho, F., Lima, F. R., Voltan, G., de Paula, N. A. & Frade, M. A. C. Leprosy case series in the emergency room: A warning sign for a challenging diagnosis. Brazilian J. Infect. Dis. 25, 101634. https://doi.org/10.1016/j.bjid.2021.101634 (2021).

World Health Organization. Leprosy (Hansen’s disease). Accessed 13 Sept 2022. https://www.who.int/data/gho/data/themes/topics/leprosy-hansens-disease (2021).

World Health Organization. Leprosy. Accessed 13 Sept 2022. https://www.who.int/news-room/fact-sheets/detail/leprosy (2022).

Gillini, L. et al. Implementing the global leprosy strategy 2016–2020 in Nepal: Lesson learnt from active case detection campaigns. Lepr. Rev. 89, 77–82 (2018).

Kumar, M. S. et al. Hidden leprosy cases in tribal population groups and how to reach them through a collaborative effort. Lepr. Rev. 86, 328–334 (2015).

Silva, K. K. P. et al. Serum IgA antibodies specific to M. leprae antigens as biomarkers for leprosy detection and household contact tracking. Front. Med. 8, 8:698495 (2021).

Pedrosa, V. L. et al. Leprosy among schoolchildren in the Amazon region: A cross-sectional study of active search and possible source of infection by contact tracing. PLoS Negl. Trop. Dis. 12, e0006261 (2018).

Bernardes Filho, F. et al. Leprosy in a prison population: A new active search strategy and a prospective clinical analysis. PLoS Negl. Trop. Dis. 14, e0008917 (2020).

Silva, C. M. L. et al. Innovative tracking, active search and follow-up strategies for new leprosy cases in the female prison population. PLoS Negl. Trop. Dis. 15, e0009716 (2021).

Bernardes Filho, F. et al. Active search strategies, clinicoimmunobiological determinants and training for implementation research confirm hidden leprosy in inner São Paulo, Brazil. PLoS Negl. Trop. Dis. 15, e0009495 (2021).

Nemati, S. et al. An interpretable machine learning model for accurate prediction of Sepsis in the ICU. Crit. Care Med. 46, 547–553 (2018).

Gao, J. et al. Prediction of sepsis mortality in ICU patients using machine learning methods. BMC Med. Inf. Decis. Mak. 24 https://doi.org/10.1186/s12911-024-02630-z (2024).

Shah, D., Patel, S. & Bharti, S. K. Heart disease prediction using machine learning techniques. SN Comput. Sci. 1, 345. https://doi.org/10.1007/s42979-020-00365-y (2020).

Chandrasekhar, N. & Peddakrishna, S. Enhancing heart disease prediction accuracy through machine learning techniques and optimization. Processes 11, 1210. https://doi.org/10.3390/pr11041210 (2023).

Bouqentar, M. A. et al. Early heart disease prediction using feature engineering and machine learning algorithms. Heliyon 10, e38731. https://doi.org/10.1016/j.heliyon.2024.e38731 (2024).

Huang, G. H. et al. Multiclass machine learning classification of functional brain images for Parkinson’s disease stage prediction. Stat. Anal. Data Mining: ASA Data Sci. J. 13, 508–523 (2020).

Nancy Noella, R. S. & Priyadarshini, J. Machine learning algorithms for the diagnosis of alzheimer and Parkinson disease. J. Med. Eng. Technol. 47, 35–43. https://doi.org/10.1080/03091902.2022.2097326 (2023).

Fei, X., Wang, J., Ying, S., Hu, Z. & Shi, J. Projective parameter transfer based sparse multiple empirical kernel learning machine for diagnosis of brain disease. Neurocomputing 413, 271–283. https://doi.org/10.1016/j.neucom.2020.07.008 (2020).

Sharma, A. & Rani, R. A. Systematic review of applications of machine learning in cancer prediction and diagnosis. Arch. Comput. Methods Eng. 28, 4875–4896 (2021).

Hussain, S. et al. Breast cancer risk prediction using machine learning: A systematic review. Front. Oncol. 14, 1343627. https://doi.org/10.3389/fonc.2024.1343627 (2024).

Das, A. K., Biswas, S. K., Mandal, A., Bhattacharya, A. & Sanyal, S. Machine learning based intelligent system for breast cancer prediction (MLISBCP). Expert Syst. Appl. 242, 122673. https://doi.org/10.1016/j.eswa.2023.122673 (2024).

Maimaitiyiming, A. et al. Machine learning-driven mast cell gene signatures for prognostic and therapeutic prediction in prostate cancer. Heliyon 10, e35157. https://doi.org/10.1016/j.heliyon.2024.e35157 (2024).

Yu, C. S. et al. Development of an online health care assessment for preventive medicine: A machine learning approach. J. Med. Internet. Res. 22, e18585 (2020).

Rojek, I., Kotlarz, P., Kozielski, M., Jagodziński, M. & Królikowski, Z. Development of AI-based prediction of heart attack risk as an element of preventive medicine. Electronics 13, 272. https://doi.org/10.3390/electronics13020272 (2024).

Summers, K. L., Kerut, E. K., To, F., Sheahan, C. M. & Sheahan, M. G. Machine learning-based prediction of abdominal aortic aneurysms for individualized patient care. J. Vasc. Surg. 79, 1057–1067e1052. https://doi.org/10.1016/j.jvs.2023.12.046 (2024).

Deps, P. D. et al. The potential role of artificial intelligence in the clinical management of Hansen’s disease (leprosy). Front. Med. 11, 1338598. https://doi.org/10.3389/fmed.2024.1338598 (2024).

Barbieri, R. R., Xu, Y., Setian, L. & Souza-Santos, P. T. et al. Reimagining leprosy elimination with AI analysis of a combination of skin lesion images with demographic and clinical data. Lancet Reg. Health : Amer. 9, 100192 (2022).

da Silva, R. E., Conde, V. M. G., Baia, M. J. S., Salgado, C. G. & Conde, G. A. B. in Intelligent Systems and Applications 802–823 (Springer Nature, 2020).

De Souza, M. L. M., Lopes, G. A., Branco, A. C., Fairley, J. K. & Fraga, L. A. O. Leprosy screening based on artificial intelligence: development of a Cross-Platform app. JMIR Mhealth Uhealth 9, e23718 (2021).

Gama, R. S. et al. A novel integrated molecular and serological analysis method to predict new cases of leprosy amongst household contacts. PLoS Negl. Trop. Dis. 13, e0007400 (2019).

Sun, J., Sun, C. K., Tang, Y. X., Liu, T. C. & Lu, C. J. Application of SHAP for explainable machine learning on age-based subgrouping mammography questionnaire data for positive mammography prediction and risk factor identification. Healthcare 11, 2000. https://doi.org/10.3390/healthcare11142000 (2023).

Cabitza, F., Rasoini, R. & Gensini, G. F. Unintended consequences of machine learning in medicine. JAMA 318, 517. https://doi.org/10.1001/jama.2017.7797 (2017).

Lugão, H. B. et al. in 10º Simpósio Brasileiro de Hansenologia.

Schober, P., Boer, C. & Schwarte, L. A. Correlation coefficients: Appropriate use and interpretation. Anesth. Analgesia 126, 1763–1768. https://doi.org/10.1213/ane.0000000000002864 (2018).

Aburass, S. & Rumman M. A. 1–7 (IEEE).

Chen, M. R., Yang, L. Q., Zeng, G. Q., Lu, K. D. & Huang, Y. Y. IFA-EO: An improved firefly algorithm hybridized with extremal optimization for continuous unconstrained optimization problems. Soft. Comput. 27, 2943–2964. https://doi.org/10.1007/s00500-022-07607-6 (2023).

Krishna, R., Teja, R., Neelima, N. & Peddi, N. Advanced machine learning models for depression level categorization using DSM 5 and personality traits. Proc. Comput. Sci. 235, 2783–2792. https://doi.org/10.1016/j.procs.2024.04.263 (2024).

Nakhleh, A., Spitzer, S. & Shehadeh, N. ChatGPT’s response to the diabetes knowledge questionnaire: Implications for diabetes education. Diabetes. Technol. Ther. 25, 571–573. https://doi.org/10.1089/dia.2023.0134 (2023).

Song, W. et al. Improved accuracy and efficiency of primary care fall risk screening of older adults using a machine learning approach. J. Am. Geriatr. Soc. 72, 1145–1154. https://doi.org/10.1111/jgs.18776 (2024).

Singh, D. et al. (eds) in International Conference on Innovative Computing and Communications. (eds Aboul Ella Hassanien, Oscar Castillo, Sameer Anand, & Ajay Jaiswal) 911–926 (Springer Nature, Singapore).

Esfahan, S. S., Haratian, A., Haratian, A., Shayegh, F. & Kiani S. 220–225 (IEEE).

Zhou, P. et al. Unsupervised feature selection for balanced clustering. Knowl. Based Syst. 193, 105417. https://doi.org/10.1016/j.knosys.2019.105417 (2020).

Hennebelle, A., Materwala, H. & Ismail, L. HealthEdge: A machine learning-based smart healthcare framework for prediction of type 2 diabetes in an integrated IoT, edge, and cloud computing system. Procedia Comput. Sci. 220, 331–338. https://doi.org/10.1016/j.procs.2023.03.043 (2023).

Hassanzadeh, R., Farhadian, M. & Rafieemehr, H. Hospital mortality prediction in traumatic injuries patients: Comparing different SMOTE-based machine learning algorithms. BMC Med. Res. Methodol. 23, 101. https://doi.org/10.1186/s12874-023-01920-w (2023).

Narayanan, J. & Jayashree, M. Implementation of efficient machine learning techniques for prediction of cardiac disease using SMOTE. Proc. Comput. Sci. 233, 558–569. https://doi.org/10.1016/j.procs.2024.03.245 (2024).

Sarayu, M. K., Bhanu, S. A., Deekshitha, K., Meghana, M. & Joseph, I. T. 15–20 (IEEE).

Allgaier, J., Mulansky, L., Draelos, R. L. & Pryss, R. How does the model make predictions? A systematic literature review on the explainability power of machine learning in healthcare. Artif. Intell. Med. 143, 102616. https://doi.org/10.1016/j.artmed.2023.102616 (2023).

Guleria, P., Srinivasu, P. N. & Hassaballah, M. Diabetes prediction using Shapley additive explanations and DSaaS over machine learning classifiers: A novel healthcare paradigm. Multim. Tools Appl. 83, 40677–40712. https://doi.org/10.1007/s11042-023-17212-w (2023).

Savanović, N. et al. Intrusion detection in healthcare 4.0 internet of things systems via metaheuristics optimized machine learning. Sustainability 15, 12563. https://doi.org/10.3390/su151612563 (2023).

Ali, S. et al. The enlightening role of explainable artificial intelligence in medical & healthcare domains: A systematic literature review. Comput. Biol. Med. 166, 107555. https://doi.org/10.1016/j.compbiomed.2023.107555 (2023).

Saúde, B. M. d. Experiências exitosas em hanseníase. https://bvsms.saude.gov.br/bvs/publicacoes/experiencias_exitosas_hanseniase.pdf (2022).

Saúde, B. M. D. Ministério da Saúde lança Estratégia de Busca Ativa de Casos de Hanseníase em 78 municípios brasileiros. https://www.gov.br/saude/pt-br/assuntos/noticias/2022/maio/ministerio-da-saude-lanca-estrategia-de-busca-ativa-de-casos-de-hanseniase-em-78-municipios-brasileiros (2022).

de Figueiredo, E. L. Aplicação do questionário de suspeição de hanseníase para aumentar a detecção de casos na atenção primária à saúde. Jornada Mato-Grossense De Epidemiologia Clínica 1, 120–121 (2024).

Ribeiro, K. S. M. A. et al. Relato de experiência: questionário de Suspeição Em Hanseníase. Bionorte 12, e835 (2023).

dos Santos Ritá, F., Santos, C. S., Couto, C. C., Rabelo, V. M. & Queiroz, L. A teoria vivenciada na prática: A busca ativa de hanseníase para o alcance da agenda (2030).

Sampaio, A. P. F. et al. Incapacidades físicas de Pessoas com Hanseníase. Revista Eletrônica Acervo Saúde 25, e18341–e18341 (2025).

Vitiritti, B., Lima, F. R., de Castilho, N. T., Somensi, L. B. & Ogoshi, R. C. Hidden leprosy in a low-endemic area in Southern Brazil: Changes in endemicity following an active search. Brazilian J. Infect. Dis. 28, 103853. https://doi.org/10.1016/j.bjid.2024.103853 (2024).

Souza, R. S. et al. Simulation-based training in leprosy: development and validation of a scenario for community health workers. Revista Brasileira De Enfermagem 76, e20230114 (2023).

Sy, M. F., Lubis, R. D. & Nadeak, K. Relationship between leprosy suspicion questionnaire with IgM anti-PGL-1 antibody levels in household contacts of leprosy. Bali Med. J. 13, 699–703 (2024).

Power, M., Fell, G. & Wright, M. Principles for high-quality, high-value testing. BMJ Evid.-Based Med. 18, 5–10 (2012).

Mandrekar, J. N. Receiver operating characteristic curve in diagnostic test assessment. J. Thorac. Oncol. 5, 1315–1316 (2010).

Gurung, P., Gomes, C. M., Vernal, S. & Leeflang, M. M. G. Diagnostic accuracy of tests for leprosy: A systematic review and meta-analysis. Clin. Microbiol. Infect. 25, 1315–1327. https://doi.org/10.1016/j.cmi.2019.05.020 (2019).

Chen, X., Zha, S. & Shui, T. J. Presenting symptoms of leprosy at diagnosis: Clinical evidence from a cross-sectional, population-based study. PLoS Negl. Trop. Dis. 15, e0009913. https://doi.org/10.1371/journal.pntd.0009913 (2021).

Kadum, S. Y. et al. Machine learning-based telemedicine framework to prioritize remote patients with multi-chronic diseases for emergency healthcare services. Netw. Model. Anal. Health Inf. Bioinf. 12, 11. https://doi.org/10.1007/s13721-022-00407-w (2023).

Kothamali, P. R., Banik, S., Dandyala, S. S. M. & Karne, V. K. Advancing telemedicine and healthcare systems with AI and machine learning. Int. J. Mach. Learn. Res. Cybersecur. Artif. Intell. 15, 177–207 (2024).

Sreedhar, P. S. S. et al. IGI Global. in Analyzing Current Digital Healthcare Trends Using Social Networks (eds Sukanta Kumar Baral & Richa Goel) 209–234 (2024).

Rana, M. & Bhushan, M. Machine learning and deep learning approach for medical image analysis: Diagnosis to detection. Multimed. Tools Appl. 82, 26731–26769. https://doi.org/10.1007/s11042-022-14305-w (2023).

Urgesa, K. et al. Evidence for hidden leprosy in a high leprosy-endemic setting, Eastern Ethiopia: The application of active case-finding and contact screening. PLoS Negl. Trop. Dis. 15, e0009640. https://doi.org/10.1371/journal.pntd.0009640 (2021).

Fürst, T. et al. Retrospective active case finding in Cambodia: An innovative approach to leprosy control in a low-endemic country. Acta Trop. 180, 26–32. https://doi.org/10.1016/j.actatropica.2017.12.031 (2018).

Utap, M. S. & Kiyu, A. Active case detection of leprosy among Indigenous people in Sarawak, East Malaysia. Lepr. Rev. 88, 563–567 (2017).

Rodríguez-Pérez, R. & Bajorath, J. Interpretation of machine learning models using Shapley values: Application to compound potency and multi-target activity predictions. J. Comput. Aided Mol. Des. 34, 1013–1026. https://doi.org/10.1007/s10822-020-00314-0 (2020).

Bronge, W., Lindholm, B., Elmståhl, S. & Siennicki-Lantz, A. Epidemiology and functional impact of early peripheral neuropathy signs in older adults from a general population. Gerontology 70, 257–268. https://doi.org/10.1159/000535620 (2023).

Shi, B. et al. Prediction of recurrent spontaneous abortion using evolutionary machine learning with joint self-adaptive Sime mould algorithm. Comput. Biol. Med. 148, 105885. https://doi.org/10.1016/j.compbiomed.2022.105885 (2022).

Acknowledgements

We thank the staff members and all patients who agreed to participate in this study.

Funding

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior-Brazil (CAPES)-Finance Code 001 with Ph.D. scholarship program for MS, by the National Council for Scientific and Technological Development (CNPq) with Ph.D. scholarship program for FL and by a research grant for MF (423635/2018-2), and by the Oswaldo Cruz Foundation-Ribeirão Preto (TED 163/2019–Protocol N° 515 25380.102201/2019-62/Project Fiotec: PRES-009-FIO-20). We also acknowledge the financial support of the Research and Assistance Support Foundation of the Hospital of the Medical School of Ribeirão Preto at USP (FAEPA) to the National Referral Center for Sanitary Dermatology and HD.

Author information

Authors and Affiliations

Contributions

MS implemented the code. MS, FL, MF and AR substantially contributed to manuscript conception/design and analysis/interpretation of data. HL and MF contributed to the clinical care of patients. MF, NP and CS contributed with data acquisition. MF author gave final approval of the final submitted version and any revisions, as well as provided supervision and orientation of the study. All authors contributed to the interpretation of the results and critical revision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mendonça Ramos Simões, M., Rocha Lima, F., Barbosa Lugão, H. et al. Development and validation of a machine learning approach for screening new leprosy cases based on the leprosy suspicion questionnaire. Sci Rep 15, 6912 (2025). https://doi.org/10.1038/s41598-025-91462-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-91462-6

Keywords

This article is cited by

-

Serum geoepidemiology of leprosy biomarkers in a city-wide COVID-19 survey in Brazil

BMC Infectious Diseases (2026)