Abstract

Data imputation is a critical step in data processing, directly influencing the accuracy of subsequent research. However, due to the temporal nature of ride-hailing trajectory data, traditional imputation methods often struggle to adequately consider spatiotemporal characteristics, leading to limitations in both convergence speed and accuracy. To address this issue, this study employs a prediction-based approach to enhance imputation accuracy. Given the limited feature parameters in trajectory data, traditional prediction models often fail to comprehensively capture data characteristics. Therefore, this study proposes a feature generation model based on LightGBM-GRU, combined with a SARIMA-GRU prediction model, to more thoroughly capture and enrich the data characteristics. This approach effectively imputes missing data, thereby laying a solid foundation for subsequent research.


Introduction

Trajectory data loss, frequently caused by environmental factors such as adverse weather, tunnel obstructions, or sensor failures, introduces temporal gaps that critically compromise data integrity. Such incompleteness undermines downstream spatiotemporal analytics, leading to biased conclusions or rendering advanced modeling impractical. Missingness mechanisms are categorized into three types: Missing Completely at Random (MCAR), where missingness is independent of observed and unobserved variables; Missing at Random (MAR), where it correlates with observed variables; and Missing Not at Random (MNAR), where dependencies on unobserved factors exist. Temporal autocorrelation in missingness patterns may further manifest as isolated or cascading gaps.

Conventional approaches to address missing data include deletion (discarding incomplete entries) and imputation (estimating missing values). While deletion risks information loss and temporal discontinuity—particularly detrimental to time-series analysis—imputation preserves dataset utility by reconstructing coherent sequences. Effective management of missing data is therefore essential for robust spatiotemporal analytics.

Imputation methods range from statistical techniques (e.g., mean/median substitution, Lagrange interpolation1) to model-driven approaches. While basic methods offer computational efficiency, they lack contextual awareness and precision in complex scenarios2. Algorithmic strategies include regression-based imputation (prone to covariate bias3), expectation–maximization (EM4, scalable but memory-intensive), and k-nearest neighbors (KNN5, reliant on similarity metrics with optimization challenges). Missing Value Completion (MVC6) achieves high accuracy at the expense of throughput, while multiple imputation (MI) trades computational overhead for robustness. Model-based predictions risk overfitting with limited training data.
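To make the contrast between simple statistical filling and interpolation concrete, the following minimal pure-Python sketch fills gaps (marked `None`) in a speed series either with the mean of the observed values or by Lagrange interpolation through neighboring observations. It is an illustration only, not the implementation used in refs. 1 or 2; the function names and the `window` parameter (how many observed points to use on each side of a gap) are hypothetical choices.

```python
def mean_impute(series):
    """Replace each missing value (None) with the mean of the observed values."""
    observed = [v for v in series if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in series]


def lagrange_impute(series, window=2):
    """Fill each missing value by Lagrange interpolation through up to
    `window` observed points on each side (window is a hypothetical choice).
    Only originally observed values are used as interpolation nodes."""
    n = len(series)
    filled = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = [j for j in range(i - 1, -1, -1) if series[j] is not None][:window]
        right = [j for j in range(i + 1, n) if series[j] is not None][:window]
        nodes = left + right
        estimate = 0.0
        for j in nodes:
            # Lagrange basis polynomial L_j evaluated at the missing index i
            basis = 1.0
            for m in nodes:
                if m != j:
                    basis *= (i - m) / (j - m)
            estimate += series[j] * basis
        filled[i] = estimate
    return filled
```

Mean substitution ignores where the gap sits in time, whereas interpolation follows the local trend; neither uses periodic or cross-feature information.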

Ride-hailing trajectories exhibit strong spatiotemporal dependencies that simplistic imputation fails to capture, while their low feature dimensionality restricts comprehensive pattern characterization. To address these limitations, we propose a hybrid feature-driven imputation framework combining LightGBM (gradient-boosted feature engineering), SARIMA (temporal decomposition), and GRU (nonlinear sequence modeling)—the LG-SG model—to enable high-fidelity data recovery for downstream analytical tasks.

Theoretical basis

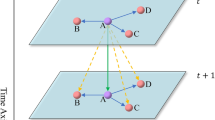

The proposed LG-SG framework integrates two synergistic modules: (1) a LightGBM-GRU architecture for feature generation and (2) a SARIMA-GRU hybrid model for spatiotemporal prediction.

In the feature generation stage, LightGBM addresses sparse feature representation by learning hierarchical interactions from limited input data (e.g., temporal intervals, velocity), enhancing discriminative capacity through gradient-boosted tree ensembles. Its memory-efficient design accelerates large-scale training while generating enriched feature vectors. These outputs are further refined via GRU’s gated recurrent layers, which capture nonlinear temporal dependencies to mitigate oversimplification in traditional tree-based methods.

For spatiotemporal prediction, SARIMA decomposes trajectory data into cyclical and trend components, while GRU models residual nonlinear patterns through its simplified gating mechanisms. This hybrid architecture combines SARIMA's interpretable temporal decomposition with GRU's gating, which mitigates the vanishing-gradient problem of standard RNNs, enabling robust imputation of missing values in complex time-series applications.

Light gradient boosting machine

Ensemble learning improves model robustness by integrating diverse machine learning algorithms, mitigating the inherent limitations of individual models in classification and anomaly detection tasks. It trains multiple weak learners and aggregates their outputs with a fusion strategy suited to the task: averaging suits learners of comparable accuracy, majority voting is the usual choice for classification, and stacking trains a meta-learner on the base learners' outputs.

Prominent methodologies (Bagging, Boosting, and Stacking) target distinct performance dimensions. Boosting algorithms iteratively construct a strong learner by correcting the errors of previous learners, systematically balancing the bias-variance trade-off. The evolution of ensemble techniques has driven major advances in machine learning applications. Table 1 summarizes the development history of boosting algorithms.

Xinran He et al. pioneered GBDT application in CTR prediction, automating feature extraction and interaction discovery to enhance linear classifier performance10.

The Light Gradient Boosting Machine (LightGBM), developed through continuous refinement, achieves accuracy comparable to XGBoost while reducing computation time by approximately tenfold and memory usage by around threefold. These performance advantages are primarily enabled by the following strategies.

1. LightGBM utilizes a histogram-based algorithm11 that discretizes features through value binning, using bin medians as histogram indices for split point selection (Fig. 1). This approach reduces memory usage and computational costs while providing implicit regularization to enhance model robustness.

2. Gradient-based One-Side Sampling (GOSS) prioritizes under-trained instances: it keeps all instances with large gradients, randomly samples those with small gradients, and re-weights the sampled instances so that the data distribution is approximately preserved.

3. Exclusive Feature Bundling (EFB) compresses sparse features into dense representations through mutual-exclusion analysis: features that rarely take nonzero values at the same time are grouped into bundles, and the bundling problem is solved by greedy, graph-based optimization12.
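The histogram-based split search (strategy 1) and GOSS (strategy 2) can be illustrated on a single feature with squared loss, where each instance contributes its gradient and a unit Hessian. The sketch below is a simplification, not LightGBM's implementation: it assumes equal-width bins, the unregularized gain \(G_L^2/n_L + G_R^2/n_R - G^2/n\), and hypothetical default fractions `a` and `b` for GOSS; EFB is not shown.

```python
import random


def build_histogram(values, grads, n_bins):
    """Discretize one feature into equal-width bins and accumulate the
    gradient sum and instance count of each bin."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature
    grad_sum = [0.0] * n_bins
    count = [0] * n_bins
    for v, g in zip(values, grads):
        b = min(int((v - lo) / width), n_bins - 1)
        grad_sum[b] += g
        count[b] += 1
    return grad_sum, count, lo, width


def best_histogram_split(values, grads, n_bins=16):
    """Scan bin boundaries (not every raw value) for the split maximizing
    G_L^2/n_L + G_R^2/n_R - G^2/n (squared loss, unit Hessians)."""
    grad_sum, count, lo, width = build_histogram(values, grads, n_bins)
    total_g, total_n = sum(grad_sum), sum(count)
    best_gain, best_threshold = 0.0, None
    g_left, n_left = 0.0, 0
    for b in range(n_bins - 1):
        g_left += grad_sum[b]
        n_left += count[b]
        n_right = total_n - n_left
        if n_left == 0 or n_right == 0:
            continue
        g_right = total_g - g_left
        gain = g_left**2 / n_left + g_right**2 / n_right - total_g**2 / total_n
        if gain > best_gain:
            best_gain, best_threshold = gain, lo + (b + 1) * width
    return best_threshold, best_gain


def goss_sample(grads, a=0.2, b=0.1, seed=0):
    """GOSS: keep the top a-fraction of instances by |gradient|, randomly
    sample a b-fraction of the rest, and up-weight the sampled
    small-gradient instances by (1 - a) / b. Returns {index: weight}."""
    rng = random.Random(seed)
    n = len(grads)
    order = sorted(range(n), key=lambda i: abs(grads[i]), reverse=True)
    top_k, rand_k = int(a * n), int(b * n)
    weights = {i: 1.0 for i in order[:top_k]}
    for i in rng.sample(order[top_k:], rand_k):
        weights[i] = (1 - a) / b
    return weights
```

Only the number of bins, not the number of distinct values, controls the cost of the split scan; in a full implementation the GOSS weights would multiply the gradients before the histograms are built.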

LightGBM operates on standard Boosting frameworks, with implementation specifics visualized in Figs. 2 and 3.

In the LightGBM algorithm, the loss function is critically important because it determines how each decision tree is fitted. Let \(L\left(y,H\left(\text{x}\right)\right)\) denote the loss function and \({\text{H}}_{\text{t}}(\text{x})\) the gradient boosting model after \(\text{t}\) iterations. In the \(\text{t}\)-th iteration, the negative gradient of the loss function with respect to the prediction of the \((\text{t}-1)\)-th model serves as the (pseudo-)residual that the new tree is trained to fit; for squared-error loss it equals the ordinary residual. This is shown in Eq. (1):

\[{r}_{t,i}=-{\left[\frac{\partial L\left({y}_{i},H\left({x}_{i}\right)\right)}{\partial H\left({x}_{i}\right)}\right]}_{H={H}_{t-1}} \tag{1}\]

In this context, \(h\left(\text{x},{\uptheta }_{\text{t}}\right)\) represents the prediction of the regression tree trained in iteration \(\text{t}\), i.e., the output of the decision tree model for input \(\text{x}\), and \({\uptheta }_{\text{t}}\) denotes the parameters of decision tree \(\text{t}\). In each iteration, \({\uptheta }_{\text{t}}\) is obtained by fitting the tree to the residuals, as in Eq. (3):

\[{\uptheta }_{t}=\underset{\uptheta }{\text{arg min}}\sum_{i=1}^{n}{\left({r}_{t,i}-h\left({x}_{i},\uptheta \right)\right)}^{2} \tag{3}\]

The final prediction \({\text{H}}_{\text{T}}(\text{x})\) is the linear sum of the predictions of all iterations, \(h\left(\text{x},{\uptheta }_{\text{t}}\right)\), as shown in Eq. (4):

\[{H}_{T}\left(x\right)=\sum_{t=1}^{T}h\left(x,{\uptheta }_{t}\right) \tag{4}\]
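The boosting loop can be made concrete for squared-error loss, where the negative gradient in Eq. (1) is exactly the residual \(y - H_{t-1}(x)\). The sketch below fits depth-1 regression trees (stumps) to those residuals by least squares and sums them. It adds a shrinkage factor `learning_rate`, which is not part of the plain summation described above but is a standard boosting parameter (LightGBM exposes one too); all function names are illustrative.

```python
def fit_stump(xs, residuals):
    """Least-squares depth-1 tree h(x; theta), theta = (threshold, left, right).
    Requires at least two distinct x values."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lv, rv)
    _, threshold, lv, rv = best
    return threshold, lv, rv


def predict_stump(theta, x):
    threshold, lv, rv = theta
    return lv if x <= threshold else rv


def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    """Squared-error gradient boosting with stumps; returns the model H_T."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        # Negative gradient of 0.5 * (y - H)^2 at H_{t-1} is the residual
        residuals = [y - p for y, p in zip(ys, preds)]
        theta = fit_stump(xs, residuals)
        trees.append(theta)
        preds = [p + learning_rate * predict_stump(theta, x)
                 for p, x in zip(preds, xs)]

    def model(x):
        # H_T(x): base value plus the shrunken sum of all tree outputs
        return base + learning_rate * sum(predict_stump(t, x) for t in trees)

    return model
```

For example, `gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])` returns a model whose outputs approach 1 on the left half and 5 on the right half as trees are added.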

Seasonal auto-regressive integrated moving average model

SARIMA (Seasonal Autoregressive Integrated Moving Average), an extension of ARIMA incorporating seasonal differencing, is designed for time-series data with pronounced periodic patterns (e.g., traffic flow). Following the Box-Jenkins framework, it integrates historical data, decomposes seasonal trends via STL filtering, and transforms non-stationary sequences into stationary components for robust forecasting13.

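The workflow involves stabilizing non-stationary data through differencing to remove seasonal/local trends, followed by predictive equation construction. Implementation includes preprocessing, stationarity testing, differencing, Bayesian Information Criterion (BIC)-based model order selection, parameter estimation, and residual diagnostics14. Formally expressed as SARIMA(p,d,q)(P,D,Q,S), the model employs multiplicative seasonality: non-seasonal parameters (p,d,q) govern short-term dynamics, while (P,D,Q,S) capture periodic trends, with S defining seasonal cycle length. This dual architecture concurrently models transient and cyclical patterns, as mathematically defined below15:

\[{\varphi }_{p}(B)\,{\Phi }_{P}({B}^{S})\,{(1-B)}^{d}\,{(1-{B}^{S})}^{D}\,{y}_{t}={\theta }_{q}(B)\,{\Theta }_{Q}({B}^{S})\,{\varepsilon }_{t}\]

In the equation, \(B\) is the lag operator (\(B{y}_{t}={y}_{t-1}\)); \({\varphi }_{p}(B)\) and \({\theta }_{q}(B)\) are the non-seasonal autoregressive and moving average polynomials of orders \(p\) and \(q\); \({\Phi }_{P}({B}^{S})\) and \({\Theta }_{Q}({B}^{S})\) are the seasonal autoregressive and moving average polynomials of orders \(P\) and \(Q\); \({(1-B)}^{d}\) and \({(1-{B}^{S})}^{D}\) are the non-seasonal and seasonal differencing operators; \({y}_{t}\) is the observed series; and \({\varepsilon }_{t}\) is a white-noise error term.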
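The differencing operators \((1-B)^d(1-B^S)^D\) on the left-hand side of the SARIMA model are easy to illustrate. The sketch below applies them to a plain list; on a toy series with a period-4 pattern plus a linear trend, one seasonal difference removes the pattern and one regular difference removes the remaining constant. The function names and toy data are illustrative assumptions.

```python
def difference(series, lag=1):
    """Apply (1 - B^lag): returns y_t - y_{t-lag} for t >= lag."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


def sarima_difference(series, d=0, D=0, S=1):
    """Apply (1 - B)^d (1 - B^S)^D; the operators commute, so order is free.
    Each application shortens the series by its lag."""
    out = list(series)
    for _ in range(D):
        out = difference(out, lag=S)   # seasonal differencing
    for _ in range(d):
        out = difference(out, lag=1)   # non-seasonal differencing
    return out


pattern = [1, 3, 2, 5]                       # hypothetical period-4 profile
y = [pattern[t % 4] + t for t in range(12)]  # pattern plus a linear trend
```

Here `sarima_difference(y, D=1, S=4)` yields a constant series of 4s (the trend increment over one season), and adding `d=1` reduces it to zeros.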

Gated recurrent neural network

The rise of deep learning has revolutionized predictive analytics, with gated architectures like LSTM and GRU becoming mainstream for time series forecasting. LSTM excels in modeling nonlinear, non-stationary sequences, while GRU (Cho et al.16) simplifies the architecture using two gating mechanisms, achieving comparable performance with reduced computational complexity.

These architectures are particularly effective for traffic prediction tasks due to their ability to model spatiotemporal dependencies through recurrent neural connections. GRU’s update and reset gates address recurrent neural network (RNN) limitations by regulating gradient flow, thereby enhancing stability and enabling efficient real-time forecasting. Figure 4 schematically illustrates GRU’s operational framework, highlighting its streamlined architecture optimized for sequential data analysis.

The operation of the GRU is described by Eqs. (10)–(13)16:

\[{z}_{t}=\sigma \left({W}_{XZ}\cdot {x}_{t}+{W}_{HZ}\cdot {h}_{t-1}+{b}_{z}\right) \tag{10}\]

\[{r}_{t}=\sigma \left({W}_{XR}\cdot {x}_{t}+{W}_{HR}\cdot {h}_{t-1}+{b}_{r}\right) \tag{11}\]

\[{\tilde{h}}_{t}=\text{tanh}\left({W}_{XH}\cdot {x}_{t}+{W}_{HH}\cdot \left({r}_{t}\odot {h}_{t-1}\right)+{b}_{h}\right) \tag{12}\]

\[{h}_{t}={z}_{t}\odot {h}_{t-1}+\left(1-{z}_{t}\right)\odot {\tilde{h}}_{t} \tag{13}\]

In these equations, \(\cdot\) denotes matrix multiplication and \(\odot\) denotes element-wise multiplication. \({z}_{t}\) is the update gate, \({r}_{t}\) the reset gate, \({x}_{t}\) the input at time step \(t\), \({h}_{t-1}\) the hidden state (output) at time step \(t-1\), \({\tilde{h}}_{t}\) the candidate hidden state at the current time step, \(\sigma\) the sigmoid activation function, and \(\text{tanh}\) the hyperbolic tangent activation function; \({W}_{XZ},{W}_{HZ},{W}_{XR},{W}_{HR},{W}_{XH},{W}_{HH}\) are weight matrices and \({b}_{z},{b}_{r},{b}_{h}\) are bias vectors. The iterative process of GRU is as follows17: when the current input \({x}_{t}\) enters the gated unit, it is combined with the hidden state \({h}_{t-1}\) from time step \(t-1\) to produce the reset gate signal \({r}_{t}\) and the update gate signal \({z}_{t}\). The reset gate scales the previous hidden state when the candidate state \({\tilde{h}}_{t}\) is formed according to Eq. (12), and the update gate then blends \({h}_{t-1}\) and \({\tilde{h}}_{t}\) into the output \({h}_{t}\). Through continuous iterations of the GRU, historical information is processed efficiently, allowing the network to capture and output important time-series information and thereby reflect correlations within the data.
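A minimal pure-Python sketch of one GRU step may help trace the gating. It assumes the convention of Cho et al.16, in which the update gate weights the previous state: \(z_t=\sigma(W_{XZ}x_t+W_{HZ}h_{t-1}+b_z)\), \(r_t=\sigma(W_{XR}x_t+W_{HR}h_{t-1}+b_r)\), \(\tilde{h}_t=\tanh(W_{XH}x_t+W_{HH}(r_t\odot h_{t-1})+b_h)\), and \(h_t=z_t\odot h_{t-1}+(1-z_t)\odot\tilde{h}_t\). Many libraries swap \(z_t\) and \(1-z_t\) in the last step. The parameter names \(W_{XH}\), \(W_{HH}\), the bias names, and the dictionary keys are illustrative assumptions.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(W, x):
    """Matrix-vector product W . x, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def gate(W_x, W_h, b, x, h, act):
    """act(W_x . x + W_h . h + b), applied element-wise."""
    return [act(p + q + c) for p, q, c in zip(matvec(W_x, x), matvec(W_h, h), b)]


def gru_step(x, h_prev, p):
    """One GRU step; p maps hypothetical names to weight matrices/biases."""
    z = gate(p["W_xz"], p["W_hz"], p["b_z"], x, h_prev, sigmoid)  # update gate
    r = gate(p["W_xr"], p["W_hr"], p["b_r"], x, h_prev, sigmoid)  # reset gate
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]              # r_t ⊙ h_{t-1}
    h_tilde = gate(p["W_xh"], p["W_hh"], p["b_h"], x, reset_h, math.tanh)
    # Cho et al. convention: z_t keeps the old state, 1 - z_t admits the new one
    return [zi * hi + (1 - zi) * hti for zi, hi, hti in zip(z, h_prev, h_tilde)]


def run_gru(xs, hidden_size, p):
    """Run the cell over a sequence from a zero initial state; return h_T."""
    h = [0.0] * hidden_size
    for x in xs:
        h = gru_step(x, h, p)
    return h
```

With all weights and biases set to zero, both gates equal 0.5 and the candidate state is 0, so each step simply halves the previous hidden state, which is a quick sanity check of the wiring.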

Data imputation method based on LG-SG

Model construction

This study introduces a feature generation-based hybrid prediction framework (hereafter abbreviated as LG-SG) for robust data imputation18. The framework comprises two synergistic components: (1) a LightGBM-GRU integration for feature generation and (2) a SARIMA-GRU hybrid architecture for spatiotemporal prediction. Traditional decision tree algorithms exhibit inherent limitations in learning rare feature combinations from sparse training data, while deep learning methods—despite their capacity to derive high-level interactions through hidden vector operations—remain constrained in capturing low-order feature relationships19. To address these gaps, we develop a hybrid feature generation framework that synergizes tree-based and deep learning paradigms. This dual-strategy approach not only augments the representational capacity of input features but also generates discriminative feature expressions, thereby improving imputation accuracy.

The feature selection protocol incorporates the spatiotemporal attributes of ride-hailing datasets, prioritizing three critical dimensions: temporal intervals, velocity profiles, and travel distances. These features collectively characterize traffic dynamics across temporal, spatial, and behavioral domains, providing a robust foundation for identifying discriminative patterns to address missing data. The LightGBM-GRU feature generation workflow is implemented through the following sequential steps:

1. Data preprocessing: Address anomalies and missing values in raw datasets through noise filtering and outlier removal.

2. Temporal feature engineering: Transform temporal data into timestamp formats to enable time-window partitioning and differencing operations.

3. Numerical feature normalization: Standardize numerical features (e.g., time-series velocities) via z-score normalization, ensuring dimensional homogeneity across the input feature matrix.

4. Categorical feature embedding: Apply one-hot encoding to convert categorical variables into high-dimensional sparse vectors, followed by dimensionality reduction using feature embedding techniques.

5. LightGBM configuration: Initialize LightGBM with core hyperparameters (boosting type, learning rate, and tree-complexity parameters). Partition the dataset into training and validation subsets (70:30 ratio), then train the model to derive interaction-enhanced feature representations.

6. GRU input preparation: Combine the LightGBM-generated features with the initial features as composite inputs for the GRU network.

7. GRU architecture optimization: Optimize GRU hyperparameters (hidden units, time steps, and activation functions) through grid search, minimizing mean squared error (MSE) via backpropagation through time (BPTT).

8. Feature fusion via stacking: Perform hierarchical fusion of original and generated features using stacking ensembles, yielding an enhanced feature matrix. The workflow is schematically summarized in Fig. 5.
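Steps 3, 5, and 6 above can be sketched without any libraries as follows. This is a minimal sketch under stated assumptions: the row-major list layout, the function names, and the choice to split chronologically (rather than shuffling) for the 70:30 partition are ours, not details given in the text. The z-score statistics are fitted on the training part only, so that validation data does not leak into the scaling.

```python
import statistics


def fit_zscore(rows):
    """Column means and population standard deviations of a row-major matrix
    (a constant column gets std 1.0 to avoid division by zero)."""
    return [(statistics.fmean(col), statistics.pstdev(col) or 1.0)
            for col in zip(*rows)]


def apply_zscore(rows, stats):
    """Standardize each value as (v - mean) / std, column by column."""
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]


def chronological_split(rows, train_ratio=0.7):
    """70:30 split that preserves temporal order (no shuffling)."""
    cut = round(len(rows) * train_ratio)
    return rows[:cut], rows[cut:]


def append_feature(rows, column):
    """Append a generated feature, e.g. a LightGBM output, to each row, as
    when [T, V, S_d] becomes [T, V, S_d, X_fe_LGB]."""
    return [list(row) + [v] for row, v in zip(rows, column)]


# Hypothetical rows of [time interval, speed, travel distance]
rows = [[i, 2.0 * i, 5.0] for i in range(10)]
train, valid = chronological_split(rows)
stats = fit_zscore(train)  # fitted on the training part only
train_z, valid_z = apply_zscore(train, stats), apply_zscore(valid, stats)
```

Keeping the split chronological means the validation rows always lie after the training rows, which matches how the imputation model is later applied to unseen intervals.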

To impute missing data, we develop a hybrid SARIMA-GRU prediction model that takes the combined spatiotemporal feature vectors as input. The workflow begins with an Augmented Dickey-Fuller (ADF) test to assess the stationarity of the raw traffic data. The core SARIMA parameters (p,d,q)(P,D,Q,S) are then identified through autocorrelation function (ACF)/partial autocorrelation function (PACF) analysis and an iterative grid search. The SARIMA component applies Seasonal-Trend decomposition using LOESS (STL) to isolate cyclical trends and generate preliminary forecasts, and model adequacy is checked through residual diagnostics. The prediction residuals are then fed into a GRU network, trained by backpropagation, which predicts error-correction terms. The final prediction is the sum of the SARIMA forecast and the GRU-predicted residual. The workflow is illustrated in Fig. 6.
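The additive structure of the hybrid forecast (linear-stage forecast plus predicted residual) can be illustrated with deliberately simple stand-ins. In the sketch below, a seasonal naive forecast \(\hat{y}_t = y_{t-S}\) replaces the fitted SARIMA model and the mean of recent residuals replaces the GRU; both substitutions, and the function names, are illustrative assumptions, so only the way the two stages are combined reflects the text.

```python
def seasonal_naive(history, S):
    """Stand-in for the SARIMA stage: forecast the next value as y_{t-S}."""
    return history[-S]


def residual_correction(residuals, k=3):
    """Stand-in for the GRU stage: predict the next residual as the mean of
    the last k residuals (a GRU would learn this mapping instead)."""
    recent = residuals[-k:]
    return sum(recent) / len(recent) if recent else 0.0


def hybrid_one_step(history, S, k=3):
    """Final forecast = linear-stage forecast + predicted residual."""
    # In-sample residuals of the linear stage (needs more than S past values)
    residuals = [history[t] - history[t - S] for t in range(S, len(history))]
    return seasonal_naive(history, S) + residual_correction(residuals, k)


pattern = [1, 3, 2, 5]                             # hypothetical profile
history = [pattern[t % 4] + t for t in range(12)]  # pattern plus a trend
```

On this series the seasonal stage alone forecasts 9 for the next step but lags the trend by one season; the residual stage supplies the missing increment of 4, giving the true next value 13.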

Model evaluation

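To comprehensively assess the performance of the proposed model, four complementary evaluation metrics were selected: Mean Squared Error (MSE), Mean Absolute Error (MAE), Mean Absolute Percentage Error (MAPE), and Accuracy (ACC). These criteria quantify prediction error and overall accuracy from distinct perspectives; their mathematical formulations are detailed in ref. 20, and the error metrics are defined as follows:

\[\text{MSE}=\frac{1}{\text{n}}\sum_{\text{i}=1}^{\text{n}}{\left({\text{y}}_{\text{i}}-{\hat{\text{y}}}_{\text{i}}\right)}^{2}\]

\[\text{MAE}=\frac{1}{\text{n}}\sum_{\text{i}=1}^{\text{n}}\left|{\text{y}}_{\text{i}}-{\hat{\text{y}}}_{\text{i}}\right|\]

\[\text{MAPE}=\frac{1}{\text{n}}\sum_{\text{i}=1}^{\text{n}}\left|\frac{{\text{y}}_{\text{i}}-{\hat{\text{y}}}_{\text{i}}}{{\text{y}}_{\text{i}}}\right|\]

where \({\text{y}}_{\text{i}}\) represents the actual value, \({\hat{\text{y}}}_{\text{i}}\) denotes the predicted value, and \(\text{n}\) refers to the number of data points.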
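For reference, the four metrics can be computed as below. MSE, MAE, and MAPE follow their standard definitions, with MAPE returned as a fraction to match the reported values (e.g., 0.096). The text does not spell out ACC; the ablation figures (MAPE 0.096 with ACC 90.4%, 0.106 with 89.4%, 0.104 with 89.6%) are consistent with ACC = 1 − MAPE, so that relation is assumed here and should be checked against ref. 20.

```python
def mse(y, y_hat):
    """Mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def mae(y, y_hat):
    """Mean absolute error."""
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)


def mape(y, y_hat):
    """Mean absolute percentage error as a fraction (0.096, not 9.6%);
    undefined if any actual value is zero."""
    return sum(abs((a - b) / a) for a, b in zip(y, y_hat)) / len(y)


def acc(y, y_hat):
    """ASSUMPTION: ACC = 1 - MAPE, inferred from the reported figures."""
    return 1.0 - mape(y, y_hat)
```

Because MSE squares each error, a few large misses at abrupt speed changes raise it much more than they raise MAE or MAPE, so the metrics can rank two models differently.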

Case verification

Experimental data

This study evaluates the proposed LG-SG framework using ride-hailing GPS trajectory data from Chengdu's primary urban corridors. After preprocessing, we focus on weekday (7 November 2018) and weekend (10 November 2018) datasets, analyzing road-segment speed fluctuations at 5-min intervals over each 24-h period (00:00–24:00), which yields 288 intervals per day (24 × 60 / 5). To simulate real-world data loss, artificial gaps are introduced into the complete dataset and the LG-SG model is applied for imputation. Model efficacy is validated by comparing the imputed values with the ground-truth values that were removed.
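The aggregation to 288 five-minute intervals per day can be sketched as follows. The input format, a list of (timestamp, speed) pairs already matched to the road segment of interest, is a hypothetical assumption; the sketch only shows the binning arithmetic, (hour × 60 + minute) // 5, and the per-interval averaging, leaving empty intervals as `None` so that they appear as missing values.

```python
from datetime import datetime


def interval_index(ts, minutes=5):
    """Map a timestamp to its slot of the day (0..287 for 5-min slots)."""
    return (ts.hour * 60 + ts.minute) // minutes


def mean_speed_per_interval(records, minutes=5):
    """Average the speeds of (timestamp, speed) records per interval.
    Slots without any record stay None, i.e. they are missing values."""
    n_slots = 24 * 60 // minutes
    sums = [0.0] * n_slots
    counts = [0] * n_slots
    for ts, speed in records:
        k = interval_index(ts, minutes)
        sums[k] += speed
        counts[k] += 1
    return [sums[k] / counts[k] if counts[k] else None for k in range(n_slots)]


# Hypothetical records for one road segment on 7 November 2018
records = [(datetime(2018, 11, 7, 8, 1), 30.0),
           (datetime(2018, 11, 7, 8, 4), 40.0),
           (datetime(2018, 11, 7, 23, 59), 20.0)]
profile = mean_speed_per_interval(records)
```

Both 08:01 and 08:04 fall into slot 96 (08:00–08:05), whose mean speed is 35.0, while 23:59 lands in the last slot, 287.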

The experimental protocol targets the north–south corridor of Xinhua Avenue, inducing both single-point and full-segment missing data patterns for the selected dates. The missing-data types and their patterns are detailed in Tables 2 and 3.
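The two missing-data patterns can be simulated as below while keeping the removed ground-truth values for later comparison. The gap counts, positions, and random seed are hypothetical; the patterns actually used are those listed in Tables 2 and 3.

```python
import random


def mask_single_points(series, n_missing, seed=0):
    """Single-point pattern: remove n_missing randomly scattered values.
    Returns the masked series and the sorted removed indices."""
    rng = random.Random(seed)
    idx = set(rng.sample(range(len(series)), n_missing))
    return [None if i in idx else v for i, v in enumerate(series)], sorted(idx)


def mask_segment(series, start, length):
    """Full-segment pattern: remove a block of consecutive intervals."""
    idx = list(range(start, min(start + length, len(series))))
    masked = list(series)
    for i in idx:
        masked[i] = None
    return masked, idx


speeds = [float(v) for v in range(288)]          # placeholder daily profile
masked_pts, idx_pts = mask_single_points(speeds, 10)
masked_seg, idx_seg = mask_segment(speeds, 96, 12)  # e.g. 08:00 to 09:00
truth_seg = [speeds[i] for i in idx_seg]         # kept for evaluation
```

The imputed values at `idx_pts` or `idx_seg` can then be scored against the stored ground truth with the metrics defined above.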

Result analysis

The LG-SG framework operates through two sequential modules. The first module implements a LightGBM-GRU hybrid architecture for feature generation.

Step 1: Ingest preprocessed raw data, where the initial feature matrix \({\text{X}}_{\text{fe}}\) comprises temporal intervals, average velocity, and travel distance.

To ensure comparability, the three features in matrix \({\text{X}}_{\text{fe}}\) are normalized before model construction and computation begin. First, the initial parameters of the LightGBM algorithm are set: some parameters of the decision tree model use default values, while key hyperparameters are tuned to find the combination that minimizes model error. Tuning improves accuracy and prevents overfitting. Hyperparameters are tuned by grid search with cross-validation (GridSearchCV), and the final parameters are shown in Table 4.

Based on the parameter settings above, \({\text{X}}_{\text{fe}}\) is input into the LightGBM model to generate a new feature column, \({\text{X}}_{\text{fe\_LGB}}\), from the existing time, speed, and travel distance features.
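The GridSearchCV tuning in Step 1 evaluates every hyperparameter combination by cross-validation and keeps the best one. A dependency-free sketch of that loop is given below; `fit_predict` and `score` are hypothetical callables standing in for LightGBM training and an error metric, and the contiguous (unshuffled) folds are an assumption.

```python
import itertools


def kfold_indices(n, k):
    """Split range(n) into k contiguous folds; yield (train_idx, val_idx)."""
    fold = n // k
    for f in range(k):
        start = f * fold
        stop = n if f == k - 1 else start + fold
        val = list(range(start, stop))
        train = [i for i in range(n) if i < start or i >= stop]
        yield train, val


def grid_search_cv(param_grid, X, y, fit_predict, score, k=3):
    """Evaluate every parameter combination by k-fold CV and return
    (best_params, best_mean_score); lower scores are better."""
    names = sorted(param_grid)
    best = (None, float("inf"))
    for values in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        scores = []
        for tr, va in kfold_indices(len(X), k):
            preds = fit_predict(params, [X[i] for i in tr], [y[i] for i in tr],
                                [X[i] for i in va])
            scores.append(score([y[i] for i in va], preds))
        mean_score = sum(scores) / len(scores)
        if mean_score < best[1]:
            best = (params, mean_score)
    return best
```

The cost grows with the product of the grid sizes times k, which is why only a few hyperparameters are tuned while the rest keep default values.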

Step 2: A GRU architecture with dual hidden layers is implemented. The model employs mean squared error (MSE) as the loss function, hyperbolic tangent (tanh) for hidden state activation, and a piecewise linear approximation of the sigmoid function for recurrent gate operations. Training is conducted via the RMSProp optimizer over 1000 epochs to optimize the candidate network topology. Hyperparameter configurations for the GRU are summarized in Table 5.

Using \({\text{X}}_{\text{fe}}^{\prime}=\left[\begin{array}{llll}\text{T}& \text{V}& {\text{S}}_{\text{d}}& {\text{X}}_{\text{fe\_LGB}}\end{array}\right]\) as the input, the GRU model computes the new feature \({\text{X}}_{\text{fe\_GRU}}\).
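Step 2 relies on two components that are less familiar than the GRU cell itself: a piecewise-linear approximation of the sigmoid for the recurrent gates and the RMSProp optimizer. The sketch below shows one common form of each; the 0.2 slope of the hard sigmoid and the default `lr`, `rho`, and `eps` values are assumptions (libraries differ in these constants), not the settings in Table 5.

```python
import math


def hard_sigmoid(x):
    """Piecewise-linear sigmoid approximation: clip(0.2 * x + 0.5, 0, 1).
    Some libraries use slope 1/6 instead of 0.2."""
    return max(0.0, min(1.0, 0.2 * x + 0.5))


def rmsprop_step(theta, grad, cache, lr=0.001, rho=0.9, eps=1e-7):
    """One RMSProp update: keep an exponential moving average of squared
    gradients and divide each step by its square root."""
    new_cache = [rho * c + (1 - rho) * g * g for c, g in zip(cache, grad)]
    new_theta = [t - lr * g / (math.sqrt(c) + eps)
                 for t, g, c in zip(theta, grad, new_cache)]
    return new_theta, new_cache


# Usage: minimize f(x) = x^2 (gradient 2x) starting from x = 3
theta, cache = [3.0], [0.0]
for _ in range(2000):
    theta, cache = rmsprop_step(theta, [2 * theta[0]], cache, lr=0.01)
```

Dividing by the running root-mean-square gradient makes the step size roughly `lr` regardless of the gradient's scale, which is why RMSProp is a common choice for recurrent networks whose gradient magnitudes vary over time.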

Step 3: The stacking method is then applied to merge the features generated by the base learners, LightGBM and GRU, into the fused feature \({\text{X}}_{\text{fe\_stacking}}\). The final data matrix used for prediction is \({\text{X}}_{\text{fe}}^{\prime\prime}=\left[\begin{array}{llll}\text{T}& \text{V}& {\text{S}}_{\text{d}}& {\text{X}}_{\text{fe\_stacking}}\end{array}\right]\).
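Stacking typically builds its meta-features from out-of-fold predictions, so that each row's generated feature comes from a model that did not see that row during training. The sketch below shows that mechanism with hypothetical `fit_predict` callables standing in for LightGBM and GRU. How the meta-learner fuses these columns into \({\text{X}}_{\text{fe\_stacking}}\) is not specified in the text, so that final step is omitted.

```python
def out_of_fold_predictions(X, y, fit_predict, k=5):
    """Predict every row with a model trained on the other folds
    (contiguous folds; a strictly causal variant would train on earlier
    folds only)."""
    n = len(X)
    oof = [None] * n
    fold = n // k
    for f in range(k):
        start = f * fold
        stop = n if f == k - 1 else start + fold
        train = [i for i in range(n) if i < start or i >= stop]
        preds = fit_predict([X[i] for i in train], [y[i] for i in train],
                            [X[i] for i in range(start, stop)])
        for i, p in zip(range(start, stop), preds):
            oof[i] = p
    return oof


def stack_features(X, y, base_learners, k=5):
    """Append one out-of-fold prediction column per base learner
    (e.g. LightGBM and GRU) to the original feature rows."""
    columns = [out_of_fold_predictions(X, y, fp, k) for fp in base_learners]
    return [list(row) + [col[i] for col in columns] for i, row in enumerate(X)]
```

Using in-sample predictions instead would leak each row's target into its own meta-feature and make the fused features look more informative than they are.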

The second part involves constructing a prediction model based on SARIMA-GRU.

Step 1: Construct the SARIMA(p, d, q) (P, D, Q, s) model with the following steps.

1. The stationarity of the original data was tested with the ADF test; the results are shown in Table 6.

The augmented Dickey–Fuller (ADF) test statistic \({\text{T}}_{\text{ADF}}\) is smaller (more negative) than the critical values at the 1%, 5%, and 10% significance levels (equivalently, ∣\({\text{T}}_{\text{ADF}}\)∣ exceeds the absolute critical values), so the null hypothesis of a unit root (non-stationarity) is rejected at all three levels. The p-value (p ≈ 0) is also far below the conventional 0.05 threshold. These results confirm that the processed series is stationary.

2. Determine parameters p, d, q.

From step 1, the data series is stationary, so the order of differencing is d = 0. The non-seasonal autoregressive order p and the non-seasonal moving average order q are then determined from the autocorrelation function (ACF) and partial autocorrelation function (PACF) plots shown in Fig. 7.

As shown in Fig. 7, the ACF and PACF of the stationary trajectory series both decay quickly: the ACF coefficients become statistically insignificant after lag 3, while the PACF coefficients become insignificant after lag 1. This suggests a non-seasonal autoregressive order p = 1 and a non-seasonal moving average order q = 3, so the model is provisionally identified as SARIMA(1, 0, 3)(P, D, Q).

3. Determine parameters P, D, Q.

The seasonal autoregressive order P, the seasonal differencing order D, and the seasonal moving average order Q were determined with the Bayesian Information Criterion (BIC) combined with a grid search over 18 combinations (P, Q ∈ {0, 1, 2}; D ∈ {0, 1}). The combination with the minimum BIC was selected, giving the model SARIMA(1, 0, 3)(0, 1, 1).

4. Test the identified SARIMA model.

Model adequacy was assessed with the Ljung-Box statistic. All p-values are greater than 0.05, so the null hypothesis of no residual autocorrelation cannot be rejected. This indicates that the SARIMA model has captured the essential information in the trajectory data and can be reliably used in subsequent steps.

5. Stepwise prediction.

Through the aforementioned four steps, a fully specified SARIMA model is obtained. This model is then used to forecast the input data X, and the residuals (prediction errors) are extracted for further analysis.
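Steps 2 to 4 of the SARIMA identification can be sketched with the standard library alone: the sample ACF and PACF (via the Durbin–Levinson recursion) for reading off p and q, the Ljung-Box statistic for the residual check, and BIC-based selection over the 18 seasonal candidates. `fit_loglik` is a hypothetical callable that would fit a SARIMA model for a given (P, D, Q) and return its log-likelihood and parameter count; in practice a statistics library supplies both.

```python
import itertools
import math


def acf(x, nlags):
    """Sample autocorrelations r_0..r_nlags; values outside roughly
    +/-1.96/sqrt(n) are usually read as significant."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d) / n
    return [sum(d[t] * d[t + k] for t in range(n - k)) / n / c0
            for k in range(nlags + 1)]


def pacf(x, nlags):
    """Partial autocorrelations via the Durbin-Levinson recursion."""
    r = acf(x, nlags)
    phi_prev, out = [], [1.0]
    for k in range(1, nlags + 1):
        num = r[k] - sum(phi_prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(phi_prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                    for j in range(k - 1)] + [phi_kk]
        out.append(phi_kk)
    return out


def ljung_box_q(residuals, h):
    """Q = n(n+2) * sum_{k=1..h} r_k^2 / (n-k); compared against a
    chi-square quantile, commonly with h minus the fitted ARMA orders
    as degrees of freedom."""
    n = len(residuals)
    r = acf(residuals, h)
    return n * (n + 2) * sum(r[k] ** 2 / (n - k) for k in range(1, h + 1))


def bic(log_likelihood, n_params, n_obs):
    """BIC = k ln(n) - 2 ln(L); smaller is better."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


def seasonal_order_grid():
    """All (P, D, Q) with P, Q in {0, 1, 2} and D in {0, 1}: 18 candidates."""
    return list(itertools.product([0, 1, 2], [0, 1], [0, 1, 2]))


def select_by_bic(candidates, fit_loglik, n_obs):
    """Return the candidate with minimum BIC; fit_loglik(order) is a
    hypothetical callable returning (log_likelihood, n_params)."""
    scored = [(bic(*fit_loglik(c), n_obs), c) for c in candidates]
    return min(scored)[1]
```

BIC's penalty of ln(n) per parameter (about 5.7 for n = 288) is what makes the search favor low seasonal orders unless they buy a clearly better fit.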

Step 2: Build the GRU model.

The error data extracted from SARIMA predictions is used as input to train a GRU model, which outputs predicted error values. The final predicted values are obtained by combining the SARIMA forecasted values with the error compensation values derived from the GRU model. These predicted values are then used to fill in missing data. Figure 8 compares the true values with the imputed values, demonstrating the effectiveness of the hybrid approach.

As shown in Fig. 8, the LG-SG-based data imputation model struggles to achieve precise predictions for abrupt changes in speed values, but its overall trend aligns well with the true values. Tables 7 and 8 summarize the imputation errors across different time periods. The results indicate that the LG-SG method achieves higher accuracy under stable traffic conditions (e.g., off-peak hours) but lower accuracy during periods of high volatility (e.g., peak hours). Specifically:

1. On November 7 (weekday), frequent traffic fluctuations during the morning and evening peak hours reduced imputation accuracy compared with off-peak periods.

2. On November 10 (weekend), commuting demand during the morning peak was much lower, resulting in higher imputation accuracy during both the morning peak and the mid-morning off-peak period. However, accuracy declined during the evening peak owing to increased travel demand and more volatile road conditions.

To evaluate the model’s performance, multiple metrics were employed, including Mean Squared Error (MSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). The results are detailed in Table 9.

The results show that, compared with several commonly used forecasting methods, the proposed model achieves better imputation performance. Therefore, for ride-hailing trajectory data of this kind, the LG-SG model yields more accurate imputation than the compared methods.

Ablation experiments

This section validates the contribution of each module in the LG-SG model through systematic ablation experiments, including component removal and combination analysis, to test the prediction accuracy and effectiveness of the LG-SG model. The results are given in Table 10 and Fig. 9.

Ablation experiments systematically validate the interdependent roles of SARIMA, GRU, and LightGBM modules in the LG-SG framework for time series imputation. The full model achieves optimal performance (MSE: 32.332, MAE: 3.698, MAPE: 0.096, ACC: 90.4%), significantly outperforming both standalone and dual-module configurations. When components are isolated, critical limitations emerge: SARIMA maintains periodicity modeling (MSE = 38.523) but fails to capture nonlinear dynamics (ACC = 88.2%); GRU exhibits pronounced error accumulation in long-sequence gaps (MAPE = 0.125); LightGBM processes static features effectively (MAE = 3.937) but ignores temporal dependencies (MSE = 42.318). These results confirm that individual modules only partially address the multidimensional challenges of spatiotemporal data imputation.

Dual-module combinations reveal compensatory but incomplete synergies. LightGBM-SARIMA integrates static and temporal patterns (MSE = 34.515, ACC = 89.4%) yet incurs localized errors (MAPE = 0.106) because it lacks dynamic residual correction. SARIMA-GRU combines linear decomposition with nonlinear residual modeling (MSE = 36.258, MAPE = 0.104) but, without contextual feature fusion, still falls short of the full model (ACC = 89.6% vs. 90.4%). The LightGBM-GRU configuration, which omits SARIMA, yields the highest MSE among the dual-module variants (36.493, ACC = 88.7%), underscoring SARIMA's role in suppressing error propagation through explicit trend modeling. Notably, compared with LightGBM-SARIMA, SARIMA-GRU attains a lower MAPE (0.104 vs. 0.106) and a higher ACC (89.6% vs. 89.4%) but a higher MSE (36.258 vs. 34.515), highlighting a trade-off between relative (percentage) error and squared error, which penalizes occasional large deviations more heavily.

The LG-SG architecture overcomes these limitations through staged integration: SARIMA first decomposes trends/seasonality via differencing and moving averages; GRU then corrects residual nonlinearities through gated memory units; finally, LightGBM enhances generalization by fusing exogenous static features. This hierarchical workflow reduces MSE by 6.3%, increases ACC by 1.0 percentage point, and lowers MAPE by 9.4% compared to LightGBM-SARIMA. Component ablation quantifies their contributions: SARIMA prevents 13.2% MSE degradation in long-term sequences, GRU reduces localized MAPE errors by 10.4%, and LightGBM improves ACC by 1.2% through contextual awareness. The framework achieves a Pareto-optimal balance between precision (MSE/MAE) and robustness (ACC), establishing a replicable paradigm for multimodal temporal modeling that synergizes statistical decomposition, deep sequence learning, and ensemble feature engineering.

Discussion

When compared to global studies, the findings of this research demonstrate both alignment and innovation. For instance, Wang14 and Yan17 have shown the effectiveness of combining LightGBM with neural networks for time series forecasting, which resonates with the strengths of the LG-SG framework. Similarly, Zhang15 highlighted the benefits of hybrid models in short-term traffic flow prediction, reinforcing the notion that integrating multiple modeling techniques enhances accuracy and robustness. However, this study distinguishes itself by introducing a multi-layered architecture that combines SARIMA, LightGBM, and GRU, effectively capturing both static and dynamic patterns in shared mobility trajectory data. This unique integration not only improves the model’s interpretability but also enhances its predictive power in complex scenarios.

Despite these advancements, certain limitations persist. The LG-SG model’s performance during high volatility periods remains a challenge, as it struggles to maintain high accuracy in the face of sudden or irregular data fluctuations. This issue aligns with findings from Zhou22, who noted that hybrid models often face difficulties in handling abrupt changes in data. Additionally, the study relied on a dataset that did not incorporate external factors such as weather conditions or event information, which could have provided valuable context for improving predictions. These limitations underscore the need for further refinement and expansion of the model.

Future studies could focus on enhancing the LG-SG model’s robustness in extreme spatiotemporal fluctuations by incorporating advanced techniques such as attention mechanisms or Transformer architectures. Exploring the integration of external data sources, such as weather forecasts or event schedules, could also improve the model’s predictive accuracy. Furthermore, extending the applicability of the LG-SG model to different domains, such as urban planning or logistics, would provide insights into its adaptability across diverse contexts. Incorporating dynamic and multi-modal data, such as real-time traffic conditions or public transit information, could further validate the model’s scalability and effectiveness in handling complex, real-world scenarios. By addressing these areas, the LG-SG model could continue to serve as a valuable benchmark, demonstrating the potential of hybrid approaches in advancing time series analysis and its applications.

Conclusion

This study proposes a novel data imputation framework, LG-SG, which integrates LightGBM, SARIMA, and GRU models to address missing data. By leveraging the complementary strengths of these algorithms, the framework constructs an enhanced feature generation mechanism that improves the completeness and accuracy of imputed data. Specifically, SARIMA is used for initial prediction and error extraction, while GRU refines these predictions through error compensation; combining SARIMA predictions with GRU-based error correction yields accurate imputed values. Simulation experiments, in which single-point and continuous gaps were introduced into complete weekday and weekend datasets, show that the model outperforms the compared methods under these missing-data conditions.

Building on these technical advantages, this study further explores the practical application value of the model in traffic management and provides critical policy recommendations for decision-makers. First, the establishment of data standardization and sharing frameworks is identified as a key measure to enhance the availability and quality of traffic data, thereby supporting more efficient and accurate traffic management. Second, integrating advanced data imputation methods, such as LG-SG, into real-time traffic prediction systems could significantly improve the accuracy of traffic forecasting while optimizing decision-making processes and facilitating the rational allocation of resources for infrastructure development and maintenance.

The implementation of these recommendations is expected to deliver substantial benefits to key stakeholders. Government agencies involved in urban planning and traffic management would gain access to more accurate data-driven insights, enabling improved traffic flow management, optimized signal control, and enhanced responsiveness during peak or disrupted traffic conditions. Transport service providers, including ride-hailing platforms, could leverage the LG-SG model to predict demand more accurately, optimize routes, and enhance operational efficiency. Additionally, end-users, such as commuters and drivers, would experience reduced travel times, decreased congestion, and an overall improvement in transportation experiences. Furthermore, the research findings provide valuable insights for data scientists and researchers, fostering further innovation in spatiotemporal data analysis and contributing to the development of smarter and more sustainable transportation systems.

By combining the LG-SG data imputation model with these policy recommendations, this study both deepens the understanding of advanced data imputation techniques and offers actionable guidance for developing smarter, more sustainable, and more efficient urban transportation systems.

Data availability

The datasets generated and/or analyzed during the current study can be purchased from the following repository: https://www.goofish.com/item?spm=a21ybx.search.searchFeedList.1.21173da6TLcKs3&id=814568319820&categoryId=202036301.

Author information

Contributions

H.X.: Conceptualization of the research, methodology development, and model construction (LightGBM-GRU and SARIMA-GRU); data collection and preprocessing; drafting of the manuscript. X.S.: Data analysis, model validation, and optimization; review and editing of the manuscript; interpretation of results. J.L.: Data curation and case study design; visualization of experimental outcomes; assistance with data preprocessing. X.Y.: Supervision, funding acquisition, and critical review of the manuscript; academic guidance on predictive model design and spatiotemporal analysis.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xiao, H., Shen, X., Li, J. et al. A method for filling traffic data based on feature-based combination prediction model. Sci Rep 15, 8441 (2025). https://doi.org/10.1038/s41598-025-92547-y

DOI: https://doi.org/10.1038/s41598-025-92547-y