Abstract

Diabetic Retinopathy (DR) demands precise hemorrhage detection for early diagnosis, yet manual identification faces challenges due to hemorrhagic lesions’ varied sizes, complex shapes, and color similarities to surrounding tissues, which obscure boundaries and reduce contrast. To address this, we propose SAM-ada-Res, a novel dual-encoder model integrating a pre-trained Segment Anything Model (SAM) and ResNet101. SAM captures global semantic context to distinguish ambiguous lesions from vessels, while ResNet101 extracts fine-grained details through its deep hierarchical layers. Feature maps from both encoders are fused via channel-wise concatenation, enabling the decoder to localize lesions with high precision. A lightweight Adapter fine-tunes SAM for retinal tasks without retraining its backbone, ensuring task-specific adaptation. Evaluated on three datasets (OIA-DDR, IDRiD, JYFY-HE), SAM-ada-Res outperforms state-of-the-art methods in nDice (0.6040 on JYFY-HE) and nIoU (0.4182 on IDRiD), demonstrating superior generalization and robustness. An online platform further streamlines clinical deployment, enhancing diagnostic efficiency. By synergizing SAM’s generalizable vision capabilities with ResNet’s localized feature extraction, SAM-ada-Res overcomes key challenges in DR hemorrhage detection, offering a robust tool for early intervention. This work bridges technical innovation and clinical practicality, advancing automated DR diagnosis.

Similar content being viewed by others

Introduction

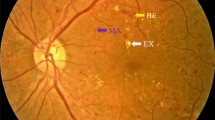

According to the current clinical practice guidelines for Diabetic Retinopathy (DR), the quantity of retinal hemorrhages (HE) in fundus images is considered one of the key indicators for assessing the progression stage of DR. However, the existing diagnostic methods for DR, particularly the manual identification and quantification of HE by ophthalmologists, face several significant challenges that limit their efficiency and precision.

The manual identification of HE in fundus images by ophthalmologists is a complex and demanding task. It requires clinicians to possess high-level professional skills to accurately distinguish HE from other retinal features and artifacts. This process is not only time-consuming but also highly susceptible to human error, especially when dealing with large volumes of images or subtle hemorrhages that are difficult to detect. Moreover, inter-observer variability among different ophthalmologists can lead to inconsistent diagnoses, further complicating the assessment of disease progression.

The manual process of identifying and counting retinal HE in each quadrant of fundus images is extremely labor-intensive. It places a significant burden on ophthalmologists, limiting the number of patients that can be screened and diagnosed within a given timeframe. This is particularly problematic in regions with limited healthcare resources or high patient volumes, where timely diagnosis and treatment are essential to prevent the progression of DR.

Given these challenges, there is a pressing need in the field of retinal lesion analysis for intelligent auxiliary diagnostic tools1,2,3 that can accurately and efficiently identify and quantify retinal hemorrhages in fundus images. These tools should not only alleviate the burden on ophthalmologists but also improve the precision and consistency of DR diagnosis4,5.

In recent years, the breakthrough progress of Large Language Models (LLM) in the field of natural language processing has sparked widespread attention towards the application of Large Vision Models (LVM) in medical image analysis research. These vision models, based on deep learning algorithms, can learn and extract features from massive amounts of image or video data, thereby achieving tasks such as image classification, semantic segmentation, and object detection. LVM typically have a vast number of parameters, allowing them to capture complex visual features and, through pre-training on large-scale datasets, possess strong generalization and multitask processing capabilities. Recently emerged large vision models such as segment anything model (SAM)6 and its latest version, SAM27, are generic segmentation models trained on over ten million images and more than one billion segmentation masks, demonstrating significant potential for few-shot or even zero-shot learning across various image segmentation tasks. However, to make LVM more effectively serve specific application scenarios, they usually require fine-tuning on smaller domain-specific datasets to optimize model performance and ensure applicability to new tasks. Especially when facing the challenge of detecting HE in DR, where high-quality image data and their semantic annotations are relatively scarce, directly adopting unadjusted LVM is unlikely to achieve satisfactory detection outcomes. Therefore, how to effectively transfer the knowledge learned by LVM from large datasets to HE segmentation tasks becomes a crucial research problem that needs to be addressed. Exploring effective knowledge transfer strategies not only helps overcome the issue of data scarcity in DR diagnosis but also promises to enhance the accuracy and efficiency of early DR detection8, providing strong technical support for improving patient outcomes.

Due to the fact that SAM was trained exclusively on natural images, it lacks specific knowledge related to medical imaging, leading to sub-optimal performance on multi-modal and multi-object medical datasets. To address this issue, Cheng et al. proposed a large vision model tailored for medical image segmentation tasks, SAM-Med2D9, which utilized a large-scale medical image dataset comprising over four million images and nearly twenty million Masks to fine-tune the three prompt modes (point, bounding box, and mask) of SAM, catering to the needs of different scenarios in medical image segmentation tasks. However, the segmentation results produced by SAM-Med2D still require improvement for objects with complex shapes/boundaries, small sizes, or low contrast. Since SAM’s segmentation capability is highly dependent on prompts, the quality of segmentation decreases and adaptability to specific scenes worsens when high-quality prompts are lacking. This limits the effective transfer of SAM to downstream tasks. To tackle this problem, Li et al. introduced nnSAM10, aiming to combine the strengths of nnUNet and SAM. nnSAM leverages only the encoder part of SAM to integrate its feature extraction advantages, while delegating dataset-specific tasks to nnUNet, achieving superior segmentation accuracy even in few-shot scenarios. However, the parameters of the SAM encoder were frozen during training, used merely as a plug-and-play component, which means that nnSAM did not fully exploit the potential of large segmentation models. To further address this limitation, Xiong et al. proposed SAM2-Unet11 based on SAM2, utilizing the Hiera backbone network of SAM2 as the encoder and extending SAM2 to medical image segmentation tasks through adapter fine-tuning. Chen et al. also introduced SAM-Adapter12 and SAM2-Adapter13 via adapter fine-tuning, differing from SAM2-Unet in that SAM-Adapter not only fine-tunes the image encoder but also retrains the mask decoder of SAM. Structurally, SAM-Adapter is better suited for scenarios requiring the use of SAM’s characteristics, whereas SAM2-Unet, by removing most of the modules from SAM2, is easier to use and offers greater potential for expansion. These advancements highlight the ongoing efforts to optimize large vision models for medical image analysis, aiming to improve the accuracy and efficiency of medical diagnoses.

Although the application of SAM and SAM2 in medical image segmentation tasks is growing, the focus has primarily been on modalities such as CT, MRI, and X-ray imaging, as well as segmentation of the optic disc, cup, and retinal vasculature, with limited attention given to the segmentation of HE, which are irregular in shape and size14,15. Inspired by nnSAM and SAM2-Unet, this study proposes SAM-ada-Res, a HE segmentation model for DR based on fine-tuning SAM2. SAM-ada-Res employs a backbone network consisting of the image encoder from SAM2 and Residual Networks (ResNet), coupled with a standard UNet decoder to generate segmentation results. Given that fundus image are composed of RGB images and share similarities with natural images, the ResNet pre-trained on the ImageNet dataset can effectively extract rich local features from the images. Meanwhile, the self-attention mechanism within the SAM2 encoder can capture long-range dependencies in the images, providing global context to the model. By combining the outputs of the two encoders, SAM-ada-Res integrates the advantages of both in processing spatial and sequential data, enhancing the model’s capabilities in feature extraction and global understanding. This allows the model to maintain strong local analytical abilities while also leveraging global information to further improve performance.

To address the inherent limitations of foundational large models, that the training data for SAM2 cannot cover all possible scenarios, this study introduces an Adapter fine-tuning approach. This method effectively utilizes the multi-resolution and hierarchical characteristics of SAM2, successfully applying it to the task of HE segmentation in DR, achieving more accurate and robust segmentation outcomes.

The rest of the paper is organized as follows. Section “Methods” elaborates the proposed SAM-ada-Res. Section “Experiment results” reports the experiments in detail and discusses the experimental results. Finally, a brief conclusion is drawn in section “Conclusion”.

Methods

The overall architecture of SAM-ada-Res, as illustrated in Fig. 1, adopts a dual-encoder fusion strategy that integrates the SAM2 encoder and the ResNet encoder, along with a U-Net decoder. This innovative design is aimed at capturing both high-level semantics and low-level details to achieve precise HE localization in DR images.

The SAM2 encoder is made up of the Hiera backbone network from the pre-trained image encoder of the large segmentation model SAM2. This backbone is designed to capture high-level semantic information from input images, providing global context awareness essential for distinguishing hemorrhagic regions from surrounding tissues. On the other hand, the ResNet encoder adopts the ResNet101 architecture, which consists of 101 layers deep, enabling it to learn complex features and retain detailed information from the input data. The depth of this network makes it particularly adept at recognizing subtle patterns and textures critical for medical image recognition tasks where accuracy is paramount.

Embeddings extracted from both encoders are concatenated and fed into the decoder. This fusion process ensures that the model benefits from both the global contextual understanding provided by the SAM2 encoder and the fine-grained detail extraction capabilities of the ResNet encoder. By combining these two types of information, SAM-ada-Res can effectively localize HE even when they exhibit irregular shapes or sizes, or when boundaries are not clearly defined.

To integrate specific knowledge about the retinal image segmentation task with the general knowledge learned by the large model SAM into downstream tasks, this study introduces a lightweight Adapter framework to input task-related knowledge into SAM, enabling targeted application of SAM in downstream tasks. The Adapter includes a linear layer for down-sampling, followed by a GeLU activation function, another linear layer for up-sampling, and concludes with a final GeLU activation function. During the training phase, the weights of the pre-trained Hiera backbone network remain frozen, ensuring that the learned general knowledge is preserved while only updating the weight parameters within the Adapter to incorporate task-specific details.

This design of SAM-ada-Res not only leverages the extensive knowledge acquired by the large pre-trained model SAM2 but also adapts it specifically to the unique challenges of retinal hemorrhage segmentation. By integrating the deep feature extraction capabilities of ResNet101 with the global context-awareness provided by the SAM2 encoder, and fine-tuning with task-specific knowledge through the Adapter framework, SAM-ada-Res is poised to deliver enhanced performance in the critical task of identifying and segmenting HE lesions in DR, thus supporting earlier and more accurate diagnosis and treatment.

The decoder in the SAM-ada-Res architecture employs a standard U-Net structure, designed for effectively reconstruct high-resolution images from the encoded representations. Each module within the decoder consists of an interpolation block followed by two “Conv-BN-ReLU” blocks. The interpolation block is specifically utilized for up-sampling feature maps, aiming to restore the spatial resolution lost during the encoding process and thereby preserving essential detail information. Within each “Conv-BN-ReLU” block, “Conv” denotes a \(\:3\times\:3\) convolutional layer used to capture local patterns and features, “BN” stands for batch normalization which helps in accelerating the training process and improving the stability of the neural network, and “ReLU” is the activation function that introduces non-linearity into the model, enhancing its ability to learn complex patterns. This design ensures that the decoder not only refines and enhances the feature representations received from the encoders but also accurately reconstructs detailed aspects of the original image.

The final layer of the decoder outputs through a \(\:3\times\:3\) convolutional segmentation head, which produces the segmentation mask as the output. This segmentation head is crucial as it refines the predicted masks, ensuring that the boundaries between different segments are clear and accurate.

For the training objective, a combination of weighted Dice loss (Dice_loss) and binary cross-entropy loss (BCE_loss) is utilized. The Dice loss is particularly effective for segmentation tasks because it measures the overlap between the predicted and ground truth segmentation masks, making it well-suited for imbalanced datasets where certain classes may be underrepresented. The binary cross-entropy loss, on the other hand, penalizes incorrect predictions, with the penalty based on how far the prediction is from the actual label. By combining these two losses, the model can be optimized not only to accurately predict the presence or absence of features (through BCE_loss) but also to ensure that the segmentation closely matches the ground truth (through Dice_loss), leading to more precise and reliable segmentation outcomes.

where \({y_{true}}\) represents the true segmentation labels (ground truth), and \({y_{pred}}\) represents the segmentation predictions generated by the model.

This approach ensures that the SAM-ada-Res model is robust and performs well on the challenging task of HE segmentation, providing valuable support for medical professionals in diagnosing conditions such as DR.

Experiment results

Datasets

This study utilized three hemorrhagic lesion datasets: two publicly accessible datasets (OIA-DDR16 and IDRiD17), alongside a privately held dataset (JYFY-HE). To enhance the quantity and diversity of the training samples, we applied augmentation techniques including rotations at 90°, 180°, and 270°, as well as horizontal and vertical flips. Given that the original fundus images possessed high resolutions (e.g., 2400 × 2400), which posed significant computational demands, all images and their corresponding masks were resized to 512 × 512 using bicubic interpolation to preserve structural details while reducing memory overhead. Furthermore, pixel values were scaled to [0, 1] by dividing by 255. Detailed information regarding the number of images within each dataset is summarized in Table 1.

Experimental setup

All datasets were processed with a batch size of 4, using the Adam optimizer with a weight decay parameter of 1e−3. The initial learning rate was 1e-4, and the model was trained for 100 epochs. All experiments were implemented using PyTorch and conducted on a Nvidia L40 GPU with 48GB memory.

When evaluating SAM-ada-Res, this study compared it with three well-known medical image segmentation models: AgileFormer18, G-CASCADE19, and Rolling-Unet20. Additionally, two recent medical image segmentation models based on Mamba21, namely Mamba-Unet22 and VM-Unet23, were also included in the comparison to provide a comprehensive assessment. For each model, this study followed the official code implementations and used the recommended settings to ensure consistency in the training conditions.

This study adopted two standardized quantitative metrics, the normalized Dice coefficient (nDice) and the normalized Intersection over Union (nIou)24, to measure the accuracy of model in detecting hemorrhagic lesions from individual images.

where I denotes the total number of images in the test set, \(T{P_i}\) represents the number of pixels correctly predicted as the target class in the i-th image, \(F{P_i}\) represents the number of background class pixels incorrectly predicted as the target class in the i-th image, and \(F{N_i}\) represents the number of target class pixels incorrectly predicted as the background class in the i-th image.

Additionally, to visually demonstrate the recognition results and assess model performance, this study conducted a visualization comparison. First, five fundus images were randomly selected from the test sets of the OIA-DDR, JYFY-HE, and IDRiD datasets as test images. Second, these selected images were input into the trained models for prediction. Third, the model predictions were compared with the ground truth labels. In this comparison, incorrectly identified locations are marked in red, while missed detection are marked in blue. This marking scheme helps clearly highlight the discrepancies in the model’s recognition process. Finally, the original fundus images, ground truth labels, and annotated prediction results are displayed side by side to provide a clear visual assessment of the model’s performance. This visualization not only aids researchers in understanding the strengths and limitations of the model but also facilitates clinical practitioners in quickly evaluating the model’s applicability in real-world scenarios.

Quantitative comparison

Table 2 presents a comprehensive comparison of quantitative metrics for SAM-ada-Res and other medical image segmentation methods, highlighting their respective performances on different datasets. The results demonstrate that SAM-ada-Res exhibits superior performance across various models, particularly on the JYFY-HE and IDRiD datasets, where it achieves higher nIou and nDice values. These metrics are crucial indicators of segmentation accuracy and robustness, suggesting that SAM-ada-Res is highly effective in segmenting complex hemorrhagic regions in DR images.

In contrast, other methods show varying degrees of performance on different datasets. For instance, G-CASCADE demonstrates the highest nIou value on the OIA-DDR dataset, indicating its potential advantages in specific scenarios. However, its performance on the JYFY-HE and IDRiD datasets is relatively lower compared to SAM-ada-Res. This suggests that while G-CASCADE may have certain strengths in handling certain types of data, it may not be as versatile and effective in segmenting complex hemorrhagic regions across diverse datasets as SAM-ada-Res. Mamba-Unet and VM-Unet perform poorly on the OIA-DDR dataset, especially VM-Unet, which has very low nIou and nDice values. This indicates significant limitations in their ability to accurately segment hemorrhagic regions in this particular dataset. However, VM-Unet performs well on the IDRiD dataset, suggesting that these models may have better adaptability to specific types of data but lack the generalizability and robustness required for handling complex hemorrhagic regions in various DR images.

Overall, the technical advancements of SAM-ada-Res, particularly its dual-encoding architecture, provide it with a distinct advantage in handling complex hemorrhagic regions in DR images. Its superior performance across multiple datasets underscores its potential as a leading method for accurate and reliable segmentation of HE, which is crucial for the diagnosis and treatment of DR.

Visual comparison

Figures 2, 3 and 4 respectively display the visualization results of SAM-ada-Res compared to other medical image segmentation methods. This study selected the two medical image segmentation methods with the highest quantitative metric rankings, AgileFormer and G-CASCADE, for comparison with SAM-ada-Res.

For the larger OIA-DDR dataset, as shown in Fig. 2, AgileFormer exhibits more false positives compared to SAM-ada-Res and G-CASCADE, often misclassifying normal retinal areas as hemorrhages. SAM-ada-Res, on the other hand, has more false negatives compared to G-CASCADE, tending to miss hemorrhages and classify them as normal retinal areas.

For the smallest dataset, JYFY-HE, the results in the first and second rows of Fig. 3 show that AgileFormer and G-CASCADE are more prone to missing detection compared to SAM-ada-Res. The results in the third, fourth, and fifth rows of Fig. 3 indicate that AgileFormer and G-CASCADE are also more likely to produce false positives compared to SAM-ada-Res.

For the IDRiD dataset, the results in the second, fourth, and fifth rows of Fig. 4 show that AgileFormer and G-CASCADE exhibit more false positives compared to SAM-ada-Res. In all three models, the occurrence of false positives is higher than that of missed detection.

Ablation study

To further validate the effectiveness of SAM-ada-Res, this study conducted ablation experiments comparing SAM-ada-Res with models using different encoder configurations: ResNet alone, Adapter fine-tuned SAM2 (SAM-ada), and Low-Rank Adaptation (LoRA) fine-tuned SAM2 (SAM-lora). LoRA is a commonly used method for fine-tuning large models, involving the insertion of a parallel weight matrix \(\Delta {\text{W}} \in {{\mathbb{R}}^{{\text{d}} \times {\text{k}}}}\) alongside the pre-trained weights of the large model. This parallel matrix \(\Delta {\text{W}}\) can be decomposed into a down-projection matrix \({\text{A}} \in {{\mathbb{R}}^{{\text{r}} \times {\text{k}}}}\) and an up-projection matrix \({\text{B}} \in {{\mathbb{R}}^{{\text{d}} \times {\text{r}}}}\), where \({\text{r}} \ll {\text{min}}\left( {{\text{d}},{\text{k}}} \right)\), with only these matrices being updated during training, thus achieving fine-tuning with minimal additional parameters.

The results, as shown in Table 3, indicate that the SAM-ada-Res model, which combines the ResNet and Adapter fine-tuned SAM2 encoders, achieved the best segmentation results across all datasets. The model using the SAM-lora and ResNet encoders (SAM-lora-Res) achieved the second-best results. These findings suggest that the synergistic effect of rich local features and global context information contributes to improved segmentation performance. Furthermore, the Adapter fine-tuning approach appears to be more suitable for these three datasets compared to the LoRA method.

HE intelligent segmentation online tool

Based on the SAM-ada-Res model, this study has developed an intelligent HE segmentation online tool specifically tailored to serve as a convenient auxiliary diagnostic aid for ophthalmologists. As shown in Fig. 5, users can select the fundus image to be analyzed by clicking the “Choose File” button. After selecting the image, users click the “Upload and Analyze” button, and the system automatically invokes the SAM-ada-Res model to process the uploaded fundus image, generating the segmentation results. This seamless interaction minimizes the technical complexity involved in handling sophisticated AI models, allowing practitioners to focus more on clinical decision-making rather than on the intricacies of data processing.

The segmentation results, as shown in Fig. 6, provide comprehensive insights into the distribution and extent of hemorrhagic regions within the fundus image. Specifically, the tool not only marks hemorrhagic regions across the entire fundus but also offers a detailed count of these regions within each quadrant. This functionality enables doctors to quickly locate hemorrhagic regions and assess the distribution of lesions, thereby providing crucial reference information for diagnosis.

The design of the tool places a strong emphasis on user experience, ensuring that the workflow is simple and intuitive. By encapsulating the complex technical processes in the backend, users need only follow a few straightforward steps to obtain accurate segmentation results. This streamlined approach not only accelerates the diagnostic process but also reduces the cognitive load on healthcare professionals, thereby increasing their productivity and effectiveness.

Conclusion

This study proposes a novel dual-encoder architecture, SAM-ada-Res, designed for the automatic detection and segmentation of HE in DR. The architecture integrates the strengths of a pre-trained SAM2 encoder and a traditional ResNet101 encoder, effectively combining their feature representations to significantly enhance the accuracy of HE identification. Specifically, the SAM2 encoder leverages its powerful image understanding capabilities to capture complex visual patterns, while the ResNet101 encoder, with its deep network structure, efficiently extracts multi-level image features. The fusion of these two encoders, followed by processing through a decoder, results in segmentation outputs that exhibit improved boundary clarity and superior performance in detecting small lesions.

Experimental results demonstrate that SAM-ada-Res achieves excellent segmentation performance on both public and internal datasets, showing good adaptability to HE of various sizes and shapes. Notably, tests on the JYFY-HE dataset reveal that the model can effectively identify HE, with visualization comparisons showing a high degree of consistency between the model’s predictions and the ground truth labels. The number of false positives and false negatives is significantly reduced.

SAM-ada-Res not only serves as an effective tool for the early diagnosis of DR but also provides a new solution approach for other medical image analysis tasks. Future work will focus on further optimizing the model’s generalization capabilities and computational efficiency, exploring a variety of data augmentation techniques25,26 to enhance model robustness, and considering the application of this method to a broader range of medical imaging scenarios. Through continuous technological iteration and clinical validation, it is anticipated that SAM-ada-Res will play a more significant role in practical medical practice, contributing to the advancement of precision medicine.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Wei, Y. et al. Exploring the causal relationships between type 2 diabetes and neurological disorders using a Mendelian randomization strategy. Medicine 103, e40412. https://doi.org/10.1097/md.0000000000040412 (2024).

Bilal, A., Liu, X., Shafiq, M., Ahmed, Z. & Long, H. NIMEQ-SACNet: A novel self-attention precision medicine model for vision-threatening diabetic retinopathy using image data. Comput. Biol. Med. 171, 108099. https://doi.org/10.1016/j.compbiomed.2024.108099 (2024).

Bilal, A. et al. Improved support vector machine based on CNN-SVD for vision-threatening diabetic retinopathy detection and classification. PloS One 19, e0295951. https://doi.org/10.1371/journal.pone.0295951 (2024).

Bilal, A., Sun, G., Li, Y., Mazhar, S. & Khan, A. Q. Diabetic retinopathy detection and classification using mixed models for a disease grading database. IEEE Access. 9, 23544–23553. https://doi.org/10.1109/ACCESS.2021.3056186 (2021).

Bilal, A., Zhu, L., Deng, A., Lu, H. & Wu, N. AI-Based automatic detection and classification of diabetic retinopathy using U-Net and deep learning. Symmetry 14, 1427 (2022).

Kirillov, A. et al. In IEEE/CVF International Conference on Computer Vision (ICCV) 3992–4003 (2023).

Ravi, N. et al. SAM 2: segment anything in images and videos. arXiv preprint arXiv:2408.00714. https://doi.org/10.48550/arXiv.2408.00714 (2024).

Bilal, A. et al. U-Net-based automatic detection of diabetic retinopathy from fundus images. Comput. Methods Biomech. Biomed. Eng. Imaging Visualiz. 10(6), 663–674. https://doi.org/10.1080/21681163.2021.2021111 (2022).

Cheng, J. L. et al. SAM-Med2D. ArXiv Preprint arXiv:2308 16184. https://doi.org/10.48550/arXiv.2308.16184 (2023).

Li, Y., Jing, B., Li, Z., Wang, J. & Zhang, Y. Plug-and-play segment anything model improves NnUNet performance. Med. Phys. https://doi.org/10.1002/mp.17481 (2024).

Xiong, X. Y. et al. SAM2-UNet: segment anything 2 makes strong encoder for natural and medical image segmentation. arXiv preprint arXiv:2408.08870. https://doi.org/10.48550/arXiv.2408.08870 (2024).

Chen, T. R. et al. In IEEE/CVF International Conference on Computer Vision Workshops (ICCVW) 3359–3367 (2023).

Chen, T. et al. SAM2-Adapter: evaluating & adapting segment anything 2 in downstream tasks: camouflage, Shadow, medical image segmentation, and more. ArXiv Preprint arXiv:2408 04579. https://doi.org/10.48550/arXiv.2408.04579 (2024).

Li, J. et al. Integrated image-based deep learning and language models for primary diabetes care. Nat. Med. 30, 2886–2896. https://doi.org/10.1038/s41591-024-03139-8 (2024).

Jiang, H. et al. Real-time microaneurysm lesion segmentation with gaze-map-guided foundation model for early detection of diabetic retinopathy. IEEE J. Biomedical Health Inf.. https://doi.org/10.1109/jbhi.2024.3377592 (2024).

Li, T. et al. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 501, 511–522. https://doi.org/10.1016/j.ins.2019.06.011 (2019).

Porwal, P. et al. IDRiD: diabetic retinopathy—segmentation and grading challenge. Med. Image Anal. 59, 101561. https://doi.org/10.1016/j.media.2019.101561 (2020).

Qiu, P., Yang, J., Kumar, S., Ghosh, S. & Sotiras, A. AgileFormer: spatially agile transformer UNet for medical image segmentation. arXiv preprint arXiv:2404.00122. https://doi.org/10.48550/arXiv.2404.00122 (2024).

Rahman, M. M. & Marculescu, R. In IEEE/CVF Winter Conference on Applications of Computer Vision 7713–7722 (Waikoloa, 2024).

Liu, Y. et al. In AAAI Conference on Artificial Intelligence, vol. 38 3819–3827 (2024).

Gu, A. & Dao, T. Mamba linear-time sequence modeling with selective state spaces. arXiv preprint arXiv:2312.00752. https://doi.org/10.48550/arXiv.2312.00752 (2023).

Wang, Z., Zheng, J., Zhang, Y., Cui, G. & LI, L. Mamba-UNet: UNet-Like Pure Visual Mamba for Medical Image Segmentation. arXiv preprint arXiv:2402.05079. https://doi.org/10.48550/arXiv.2402.05079 (2024).

Ruan, J. & Xiang, S. VM-UNet: vision Mamba UNet for medical image segmentation. ArXiv Preprint arXiv:2402 02491. https://doi.org/10.48550/arXiv.2402.02491 (2024).

Dai, Y., Wu, Y., Zhou, F. & Barnard, K. IEEE Winter Conference on Applications of Computer Vision (WACV) 949–958 (Waikoloa, 2021).

Jia, Y., Chen, G. & Chi, H. Retinal fundus image super-resolution based on generative adversarial network guided with vascular structure prior. Sci. Rep. 14, 22786. https://doi.org/10.1038/s41598-024-74186-x (2024).

Muksimova, S., Umirzakova, S., Mardieva, S. & Cho, Y. I. Enhancing medical image denoising with innovative Teacher-Student Model-Based approaches for precision diagnostics. Sens. (Basel Switzerl.) 23, 896. https://doi.org/10.3390/s23239502 (2023).

Acknowledgements

The study was supported by PhD Research Foundation of Affiliated Hospital of Jining Medical University(Grant No. 2024-RC(BS)-004).

Author information

Authors and Affiliations

Contributions

S.T. conceived the experiments, S.T. and Q. W. performed the experiments, and analyzed the results. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tang, S., Wu, Q. Research on recognition of diabetic retinopathy hemorrhage lesions based on fine tuning of segment anything model. Sci Rep 15, 10292 (2025). https://doi.org/10.1038/s41598-025-92665-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-92665-7