Abstract

Remote sensing images are essential in various fields, but their high-resolution (HR) acquisition is often limited by factors such as sensor resolution and high costs. To address this challenge, we propose the Multi-image Remote Sensing Super-Resolution with Enhanced Spatio-temporal Feature Interaction Fusion Network (\(\hbox {ESTF}^2\)N). This model is a deep neural network based on end-to-end. The main innovations of the \(\hbox {ESTF}^2\)N network model include the following aspects. Firstly, through the Attention-Based Feature Encoder (ABFE) module, the spatial features of low-resolution (LR) images are precisely extracted. Combined with the Channel Attention Block (CAB) module, global information guidance and weighting are provided for the input features, effectively strengthening the spatial feature extraction capability of ABFE. Secondly, in terms of temporal feature modeling, we designed the Residual Temporal Attention Block (RTAB). This module effectively weights k LR images of the same location captured at different times via a global residual temporal connection mechanism, fully exploiting their similarities and temporal dependencies, and enhancing the cross-layer information transmission. The ConvGRU-RTAB Fusion Module (CRFM) captures the temporal features using RTAB based on ABFE and fuses the spatial and temporal features. Finally, the Decoder module enlarges the resolution of the fused features to achieve high quality super resolution image reconstruction. The comparative experiment results show that our model achieves notable improvements in the cPSNR metric, with values of 49.69 dB and 51.57 dB in the NIR and RED bands of the PROBA-V dataset, respectively. The visual quality of the reconstructed images surpasses that of state-of-the-art methods, including TR-MISR and MAST etc.

Similar content being viewed by others

Introduction

In the field of image super-resolution, there are two main research directions: Single-image super-resolution (SISR) and multi-image super-resolution (MISR). SISR is the process of reconstructing a high-resolution (HR) image from a single low-resolution (LR) image. Since there is only one LR image as input, this method requires inferring the details of the HR image from the limited information. In recent years, Chen Z et al.1 introduced a dual aggregation transformer network that improves super-resolution image quality by aggregating feature and spatial information. Li A et al.2 introduced a feature-modulated Transformer network that enhances single-image super-resolution by leveraging global representation and cross-refinement of high-frequency priors. These two Transformer-based models rely on large-scale datasets for training when dealing with image super-resolution, which may lead to low inductive bias in modeling local visual cues. The performance of the models can suffer if they are trained without sufficient data. Zhang X et al.3 employed partial channel shift to improve the network’s feature extraction capability, thereby enhancing the recovery of image details. However, this method may cause information loss to some extent when processing images with complex textures. SRFormer4 Balances channel and spatial information by aligning the Permuted self-attention (PSA) module and employs the substitution operation to improve the model’s detail-capturing capability. However, it may still have a high computational cost compared to some lightweight super-resolution models.

MISR is a technique for generating HR images by combining multiple LR images. These LR images can be obtained from different sensors in the same area, from different points in time, or from different angles and viewpoints. By modeling and aligning multiple image frames in a certain dimension, the information shared between successive frames is used to improve the resolution of the image. Multi-image remote sensing superresolution techniques have been used in disaster monitoring5,6, land cover7,8,9 and vegetation growth10,11 etc. It has great potential for various applications. Recently, SinSR12 introduced a single-step image super-resolution method based on a diffusion model, which generates HR images in a single pass, thereby reducing the complexity associated with multi-step processing. Image Processing GNN13 is an image processing model based on a graph neural network (GNN). Using the flexibility of graphs, the model assigns higher degrees of nodes to regions with rich image details, treats images as a collection of pixel nodes rather than block nodes, and effectively collects pixel information at local and global scales through a flexible graph structure. However, the complexity of the model may be higher than that of traditional convolutional networks. Low-Res Leads the Way14 improves the generalization ability of the model through self-supervised learning and reduces the dependence on specific datasets. Wang L et al.15 introduced a method to learn coupled dictionaries from unpaired data, which captures shared features in multiple images and enhances super-resolution performance. Most current deep learning-based MISR methods, on the other hand, adopt an encoder-decoder architecture, integrating alignment, encoder, fusion, and decoder modules to accomplish specific tasks. The \(\hbox {ESTF}^2\)N (Mult-image Remote Sensing Super-Resolution with Enhanced Spatio-temporal Feature Interaction Fusion Network) network model proposed in this paper also follows this structure and aims to improve the accuracy and efficiency of image reconstruction.

In remote sensing applications, MISR tasks face two key challenges. First, the timespan required to capture multiple images can be lengthy, introducing uncontrollable environmental factors and potentially ambiguous image ordering. As a result, efficiently processing multiple LR images with weak temporal correlation becomes a significant challenge. Most existing MISR methods currently treat images as sequential frames, making the results highly sensitive to the input sequence order. This sensitivity can severely impact super-resolution reconstruction if the image order is disrupted. The second problem lies in the inefficient utilization of multiple images. In real-world environments, different views of the same scene may affect the extraction of effective information due to clarity differences. Some existing deep learning-based methods require high image sharpness and thus directly discard images that are not sharp enough, resulting in the loss of valuable information.

To address the aforementioned issues, this paper presents the \(\hbox {ESTF}^2\)N network model, which extracts temporal and spatial features from non-sequential images. The model performs spatio-temporal feature fusion, improving feature extraction for LR images, and minimizing information loss. The network consists of three main components: Initially, the LR input images are transformed into a set of feature maps, which are subsequently fused through a loop structure to generate a unified scene representation. Finally, the scene representation is ultimately reconstructed as a single HR image. In the initial stage, a reference image and an LR remote sensing image are selected for alignment, and the spatial features of the LR image are attended to by the Unit1, Unit2 and Channel Attention Block (CAB) modules. In the second stage, spatio-temporal feature fusion of multi-frame LR images is realized using the Convolutional Gated Recurrent Unit (ConvGRU) module and the Residual Temporal Attention Block (RTAB) module. The ConvGRU module efficiently captures the local dynamics within the image frames, while the RTAB module further captures the global inter-frame correlation between image frames to fully utilize the information interaction across time series and space. In the third stage, HR images are reconstructed from the fused feature maps by using deconvolution.

The primary contributions of this paper are summarized as follows.

-

1.

We propose the Attention-Based Feature Encoder (ABFE) module to fully extract spatial features from remote sensing images. This spatial feature extraction can effectively capture the small changes of features and spatial texture, providing rich contextual information for subsequent image reconstruction;

-

2.

We propose the spatio-temporal feature fusion module ConvGRU-RTAB Fusion Module (CRFM), which performs temporal feature extraction as well as spatio-temporal feature fusion among the extracted spatial features. Not only can it capture the change information on the time series in the LR image, but also effectively fuse the local spatial features of different time frames with the global spatio-temporal structure, thus significantly improving the super-resolution effect.

The structure of this paper is organized as follows. Section 2 offers a review of related research and existing image super-resolution techniques; Section 3 details the architecture of the proposed model; Section 4 presents the experimental results on the PROBA-V dataset and Section 5 concludes the paper.

Related work

Reconstruction-based approach

Within the domain of image super-resolution, reconstruction-based methods are widely used to generate HR images. The Bicubic interpolation algorithm is one of the most common techniques, which is based on a weighted average of pixel neighborhoods and calculates the new pixel values by interpolating the surrounding 16 pixels three times. This approach incorporates the luminance information from neighboring pixels and is able to smooth the image and reduce jagged effects and distortion when generating HR images. Compared to neighbor and bilinear interpolation, Bicubic interpolation provides a finer recovery of image details and is able to preserve the edge information of the image to some extent. However, it may still lead to blurring phenomena and difficulty capturing high-frequency details. This problem is especially obvious in complex scenes. In contrast, the IBP16 method repeatedly adjusts the HR image through an iterative process, which utilizes the error of re-projection between the LR image and the current HR estimate for feedback correction. Although IBP can effectively improve image quality, its computational complexity is high and can lead to instability and difficulty in convergence when initial estimation is poor. For this reason, the BTV17 method was developed. It combines bilateral filtering and smoothing strategies, with the aim of preserving edge information and denoising at the same time. By minimizing the full variational loss of the image, BTV is able to smooth the background while reducing the effect of noise. However, this method may still induce artifacts or over-smoothing.

Learning-based approach

In recent years, methods based on learning techniques have shown significant advantages in the field of image super-resolution. RCAN18 improves the focus on features by incorporating residual learning and channel attention mechanisms. Its structure contains multiple residual blocks and channel attention modules, which enable the network to dynamically adjust the weights of different channels, thus prioritizing the attention to key features in feature extraction. This mechanism significantly improves the quality of super-resolution image reconstruction, especially in capturing fine details and textures. In addition, VSR-DUF19 utilizes the temporal information of consecutive frames in a video sequence and employs a dynamic upsampling filter for super-resolution reconstruction. The method learns how to up-sample and reconstruct HR images more efficiently by analyzing the dynamic relationship between multiple LR frames, which improves the reconstruction results and temporal consistency. However, it requires high quality and stability of the input video and may face the problem of temporal inconsistency when processing dynamic scenes. HighRes-Net20 on the other hand, generates HR images layer by layer through a multilayer network structure to capture more detailed information. The framework recovers LR images layer by layer and effectively utilizes contextual information through inter-layer connections, thus enhancing the ability to capture high-frequency details. DeepSUM21 and its improved version, DeepSUM++22, achieve super-resolution reconstruction by fusing features from multiple LR images, effectively integrating redundant information between images to enhance reconstruction quality and clarity. DeepSUM++ further optimizes both the network architecture and training strategy, enabling it to adapt to various input conditions and improving the detail and accuracy of the reconstruction. TR-MISR23 Combines temporal and spatial information to achieve multi-image super-resolution through temporal residual modeling, which utilizes LR images from multiple time points to share information, thus enhancing the reconstruction effect. Although this method provides more consistent images in the temporal dimension, it requires higher synchronization and alignment of time series images, and the processing is more complicated. MAST24 on the other hand, adopts the Transformer25 architecture to fuse multiple LR images through multiple attentional mechanisms, demonstrating flexibility and accuracy in dealing with long-range dependencies. However, the computational complexity of MAST is higher, especially on large-scale datasets, where the training time is significantly increased and the requirements on hardware resources are higher.

In this context, our proposed \(\hbox {ESTF}^2\)N network model achieves greater adaptability without relying on a specific degradation model. \(\hbox {ESTF}^2\)N captures and exploits inter-frame temporal correlation with spatial details more comprehensively through RTAB and ConvGRU. Our approach further enhances the transfer of cross-frame information through the RTAB module to capture more detailed information at different time points, effectively improving the reconstruction accuracy of the model on the PROBA-V dataset.

Network structure

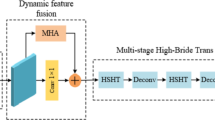

MISR aims to recover an HR image using a set of k LR images of the same scene, captured within a defined time window. Unlike single-image super-resolution, MISR can take advantage of both spatial and temporal correlations, theoretically yielding better reconstruction results. In this section, we will introduce the structure of \(\hbox {ESTF}^2\)N in detail. The general structure of the model is shown in Fig. 1. The small figure next to each module is an example of the feature map extracted by the module, which is used to evaluate whether the designed network module can meet the features extraction requirements.

The model consists of the ABFE, CRFM, and Decoder components. Firstly, in the ABFE part, the input to the model is k LR images and the corresponding HR reference images. The model extracts the deep features of each input image through a series of encoder units. Each encoder unit corresponds to a LR input image (l1 to lk), and compares it with the reference image to output feature maps (m1 to mk). The display of these feature maps is intended to intuitively reflect the role and effect of each module in the feature extraction process and to help verify the effectiveness of the network design in capturing and processing image details. These features will be passed on to downstream modules for further processing. Next, in the CRFM part, the model combines a ConvGRU and a RTAB to capture and fuse the temporal coherence and spatial details between multiple frames of images through joint modeling of spatio-temporal information. The ConvGRU unit is used to capture the temporal dependencies between consecutive frames and generate the feature map h1, while the RTAB module performs feature enhancement in the spatial dimension to generate the feature map h2. The outputs of the two are integrated together through a feature concatenation operation to form a complete spatio-temporal feature representation. Finally, in the Decoder section, the fused features are decoded to produce the final HR image. The decoder recovers the detailed HR image through stepwise upsampling and convolution operations.

Define k th LR images of the same scene of the model as \(l_k\in R^{c\times h\times w}\) , c , h and w are the channel depth, height and width of the input LR image, respectively. According to the images provided by the PROBA-V dataset, c is 1, which means that the input LR image is a grayscale image with only one channel. The true HR of the same scene is indicated as \(H\in R^{c\times h\times w}\), \(\lambda\) is the scaling factor, and in the experiment \(\lambda\) is set to 3. The output predicted by the model is denoted as \(H = F^\lambda \left( \{ l_1, \ldots , l_k \} \right)\). It should be noted that the model’s output retains its original dimension of \(1 \times 1 \times \lambda H \times \lambda W\). The colored visualization shown in figures is solely for enhancing interpretability and does not alter the actual output dimensions. Each module is described in the subsequent subsections.

ABFE

The core task of ABFE is to extract meaningful spatial features from the input LR image to provide rich image information support for subsequent image super-resolution reconstruction. Figure 2 shows the schematic structure of an encoder unit in ABFE. The small figure next to each module is the feature map extracted by that module. Unit1 is responsible for the initial feature extraction to ensure that the underlying information of the input image can be fully encoded. And Unit2 makes the feature representation richer and deeper by further processing and fusing the initial features. The organic combination of the two enables the \(\hbox {ESTF}^2\)N network to perform well in complex multi-image super-resolution reconstruction tasks.

Unit1 & unit2

The Unit1 module is implemented to extract the key features of the input image. Figure 3 illustrates the detailed structure of the Unit1 module. The small figure next to each module shows the feature maps extracted by that module. The Unit1 module consists of an initial convolutional layer, two residual blocks, and a final convolutional layer. The initial convolutional layer is composed of a convolutional layer followed by a parametrically corrected linear unit (PReLU) activation function. The initial convolutional layer uses a convolutional kernel with \(k^{\prime }=3\) and the number of output channels is \(c=64\). The residual block has an input channel number of 64, where the convolution operation uses a 3\(\times\)3 convolutional kernel. Each convolutional layer is followed by an activation function, which introduces an nonlinear transformations and automatically adjusts the negative slope through parameterization to improve the network’s expressive power. The final convolutional layer has 64 input channels which come from the output of the previous residual block. The output channels are also set to 64, maintaining consistency in the number of channels in the feature map. The convolution kernel size is 3\(\times\)3 with a padding size of 1, ensuring that the spatial size of the feature map remains unchanged after convolution. The initial feature is extracted by the convolution operation and the non-linear activation function, which is represented by the formula 1.

Where W denotes the convolution kernel, x denotes the input feature map, b is the bias, and \(*\) denotes the convolution operation.

Residual blocks address the gradient vanishing problem in deep networks utilizing residual connections. Each residual block includes two convolutional layers and a residual connection, allowing the network to learn more complex features while retaining the original input information. The features are controlled by a convolutional layer that adjusts the feature dimensions to the desired number of output channels. For the ith residual block, the output yi can be represented by formula 2.

where F denotes the residual function, \(x_i\) is the input and \(W_i\) is the weight.

The goal of the Unit1 design is to efficiently extract spatial features from the input image. Through this process, it captures spatial details such as edges and textures while enhancing key structural information in the image. In contrast, Unit2 is designed similarly to Unit1, but with twice as many input channels as Unit1’s output channels. This adjustment enables Unit2 to extract more feature information, jointly extracting LR image and reference image features. The Unit2 module also consists of an initial convolutional layer, several residual blocks, and a final convolutional layer. Through additional processing and fusion of input features, the overall feature representation capability of the network is significantly enhanced. In real experiments, this design of multilevel feature extraction and fusion performs well in experimental results and has significant advantages in processing complex scenes and detail recovery.

CAB

After the input features have passed through Unit1 and Unit2, the global information is used to guide the enhancement of the feature for each channel. We aim to re-evaluate and weight the importance of each channel during the feature extraction process, based on global context information. This adaptive weighting can significantly enhance the network’s sensitivity to the target features, thus improving the overall feature representation. Unlike conventional deep learning-based MISR methods that rely on high-quality input images, CAB adaptively enhances the feature representation of less sharp images by emphasizing critical spatial structures. This ensures that valuable information is retained even from images with lower sharpness, mitigating the limitations imposed by strict image quality requirements.

As illustrated in Fig. 4, the module consists of three main components: global pooling layers, fully connected layers, and channel weighting operation. The input feature map, denoted as \(X\in \mathbb {R}^{B\times C\times H\times W}\), where B represents the batch size, first undergoes global average pooling. This operation aggregates the spatial information from each channel to produce a global feature vector. Next, to generate adaptive weights for each channel, we introduce two fully connected layers. The first fully connected layer reduces the dimension of the feature vector from C to \(\frac{C}{r}\), where ris the reduction ratio, which takes the value of 16. This operation effectively reduces the amount of computation and the number of parameters. Then, the nonlinear characteristics are introduced by the ReLU activation function. The second fully connected layer restores the dimension of the feature vector to C and limits the output to between 0 and 1 by the Sigmoid activation function. Thus, the calculation of the fully connected layer can be expressed as the formulas 3 to 5.

where \(W_1\) and \(W_2\) are the weight matrices of the two fully connected layers respectively, \(\sigma\) denotes the Sigmoid activation function and z is the generated channel weight. Finally, the generated channel weights z are remapped to the dimensions of the input feature maps and multiplied element by element with the input feature maps to achieve channel weighting. Where \(X_{c, i, j}^{\prime }\) is the weighted feature map.

CRFM

CRFM combines ConvGRU and RTAB to capture dynamic features in the temporal dimension by modeling the temporal information of LR images. The module also establishes an effective fusion mechanism between spatial features and temporal features to ensure that the image information from different time points is fully utilized during HR reconstruction, and the model’s ability to express complex scenes is improved.

ConvGRU

ConvGRU is used to process spatial features in time-series data and perform feature fusion in the temporal dimension. Traditional image fusion methods such as pixel-level weighted average-based strategies often ignore the spatio-temporal dependencies in the image sequences, making it difficult to fully utilize the information of all images. In contrast, ConvGRU explicitly models these dependencies through its gated recurrent structure, enabling more effective preservation and refinement of information across multiple frames. ConvGRU takes advantage of the combination of convolutional neural networks (CNN)26 and recurrent neural networks (RNN)27. It can not only effectively process the time series characteristics of images, but also extract rich spatial features through convolutional layers. The ConvGRU module consists of multiple stacked ConvGRU units to model temporal characteristics of LR image sequences. In the ConvGRU module, the input features \(X_t\) at each time step t are sequentially processed by multiple ConvGRU units, each responsible for computing the hidden state \(H_t\) of a single time step. The computation within a ConvGRU unit involves the input feature \(X_t\) of the current time step and the hidden state \(H_{t-1}\) from the previous time step. These are regulated through three gates: the Reset Gate, Update Gate, and Output Gate (which computes the candidate hidden state). 2D convolutions are employed to preserve spatial features. Figure 5 illustrates the structure of the ConvGRU unit, which controls hidden state updates via gating mechanisms and performs feature fusion across the temporal dimension. The small picture next to the module is a characteristic diagram of the module’s input and output.

By stacking multiple ConvGRU units, the entire ConvGRU28 module enables features to propagate not only along the temporal dimension but also recursively across layers, thereby extracting richer spatio-temporal features. The inter-layer connections in the ConvGRU module further enhance information flow, allowing features at each time step to fuse more effectively in deeper architectures after processing by the units. This improves super-resolution reconstruction. In this study, the ConvGRU module comprises 2 layers of ConvGRU units, each with 64 hidden channels and 3\(\times\)3 convolutional kernels to ensure local feature extraction capability.

For each time step t, the input feature map X[ : , t, : , : , : ] of the current layer and the hidden state \(H_{t-1}\) from the previous time step are fed into the ConvGRU unit. The updated hidden state \(H_{t}\) is calculated as formula 6.

Formula 6 shows the input feature map \(X_{t}\) in the current time step and the hidden state \(H_{t-1}\) from the previous time step are concatenated along the channel dimension to form the combined feature matrix \(S_t\).

Formula 7 shows the Reset Gate \(R_{t}\) is computed to regulate the contribution of the previous hidden state \(H_{t-1}\) to the current time step, determining whether partial historical information should be forgotten. \(W_r\) denotes the convolution kernel for the Reset Gate, \(b_r\) is the bias term, and \(\sigma\) is the Sigmoid activation function.

Formula 8 shows the Update Gate \(Z_{t}\) controls the blending ratio between the old information \(H_{t}\) from the previous hidden state \(H_{t-1}\) and the new information \(\tilde{H}_t\) from the candidate hidden state. \(W_z\) represents the convolution kernel for the Update Gate, and \(b_z\) is the bias term.

Formula 9 shows under the influence of the Reset Gate \(R_t\), the candidate hidden state \(\tilde{H}_t\) for the current time step is computed to generate the final hidden state. tanh is the hyperbolic tangent activation function, \(W_h\) is the convolution kernel for candidate state computation, \(b_h\) is the bias term, and \(\odot\) denotes element-wise multiplication.

Formula 10 shows final hidden state \(H_t\) selectively combines the previous hidden state \(H_{t-1}\) and the candidate hidden state \(\tilde{H}_t\) through the Update Gate \(Z_t\).

At each layer, the hidden states of the output \(h_t\) for all time steps are stacked to form the output feature map of the current layer. This process is repeated across all layers of the ConvGRU unit until all features are processed, generating a fused feature representation. By accumulating features across multiple frames, ConvGRU effectively aggregates temporal information, thereby enhancing reconstruction quality in super-resolution tasks.

In order to fuse the features of multiple LR images into a single HR image representation, we employ a weighted summation strategy. Since the PROBA-V Kelvin dataset contains multiple frames of LR images of the same location captured at different time points, and the time dimension provides a wealth of information, the feature map at each time step is multiplied by the corresponding weight factor. Then we perform weighted summation and normalization on the time step dimension. This weighted fusion strategy enables the network to dynamically adjust the contribution of each frame, ensuring that frames with richer information exert greater influence on the final HR image, thereby improving reconstruction quality.

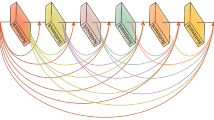

RTAB

Conventional upsampling methods typically rely on basic interpolation techniques, which often fail to effectively utilize the temporal information within the input image sequence. To overcome this challenge, we propose a novel RTAB module. Given the similarities among T input LR images, emphasizing their relevance during fusion is likely to lead to a higher-quality prediction. The module framework is shown in Fig. 6. The small figure next to each module shows the feature maps extracted by that module.

The RTAB module takes T LR images as input. For each input sample \(LR_k\), certain LR images exhibit greater similarity to one another. As a result, when merging T LR images, giving them more relevance will result in a higher-quality prediction. In this case, the use of RTAB not only conveys low-frequency information across more layers, but also allows for conscious weighting with different input times. Unlike conventional MISR approaches that assume strong temporal correlation among LR images, RTAB explicitly models global inter-frame dependencies, allowing the network to effectively handle weakly correlated remote sensing images. By adaptively weighting frames with higher correlation and reducing the influence of temporally inconsistent images, RTAB ensures stable super-resolution performance even when the temporal consistency is weak. In RTAB, a sequence of convolutional and non-linear activation operations is applied to the input tensor with the shape H\(\times\)W\(\times\)T\(\times\)F. Both local and global correlations are utilized to derive separate attention statistics for each feature F. Consequently, the features of each tensor are rescaled, allowing the network to focus on the most influential parts. In addition, the residual connection mechanism enables the model to effectively preserve key information from low-frequency features. It avoids these low-frequency features from being ignored, thus improving the overall performance and characterization ability of the model.

In Fig. 6, the first layer is a two-dimensional convolution layer (2D Conv) with weighted normalization. It extracts spatial features from the underlying layer and generates an initial feature map to enhance the ability to capture spatio-temporal information in the subsequent layers. The second convolutional layer further extracts features with the activation function ReLU to capture deeper spatial features and increase the non-linear expressive capacity of the model. The third layer is a global pooling operation that downsamples the feature mapping to a fixed size, extracts the global feature information at the channel level, and achieves dimensionality reduction through another 2D Conv. Next, by using the ReLU activation function and convolution operation again, we complete the upscaling operation and generate a channel weight matrix.The fourth layer normalizes the channel weights through the Sigmoid activation function and performs a channel-wise element-wise multiplication operation with the original feature map to achieve channel-wise weighting of the features. Finally, in the residual connection path, the scaled feature mapping is added to the initial input feature mapping to realize the residual fusion of information. This structure enables the RTAB module not only to capture spatially important information but also to further enhance the aggregation effect of features through temporal weighting, providing strong spatio-temporal feature integration.

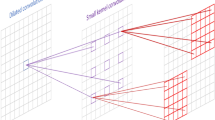

Decoder

The decoder module is a key component that converts the feature maps extracted and fused in the network into super-resolution images. The Decoder module adopts a combination of deconvolution and convolution layers to achieve mapping from LR feature maps to HR images. Figure 7 shows the detailed structure of the Decoder module. The small diagram next to each module shows a diagram of the features extracted by the module. These feature diagrams do not represent the final image size.

The feature map output from the fusion module goes through the ConvTranspose2d deconvolution layer, which enlarges the input LR feature maps up to 3 times, improving the accuracy of the final stage of the model. In this module, the input feature map size is (B,64,128,128). Where B represents batchsize, 64 refers to the number of channels, and the width and height of the feature map are 128\(\times\)128. The parameters of the deconvolution layer are configured as follows: the number of input channels is 64, the number of output channels is 64, the size of the convolution kernel is 9, and the step size is 3. Through the operation of this deconvolution layer, the size of the feature map will be enlarged to three times of its original size , from 128\(\times\)128 to 384\(\times\)384. The operation of the deconvolution layer can be expressed as formula 11.

where \(W_{\text{ deconv }}\) is the weight parameter of the deconvolution layer and PReLU is the parametric rectified linear unit.

The feature map produced by the deconvolution layer requires additional processing to generate the final super-resolution image. For this purpose we use a Conv2d convolutional layer which is configured with the following parameters: the input consists of 64 channels, while the output has a single channel. The convolutional kernel has a size of 3, with a padding of 1. The effect of this convolutional layer is to reduce the feature graph from 64 channels to 1, while keeping the length and width of the feature diagrams unchanged. This process can be expressed as formula 12.

where, \(\ W_{\textrm{final}}\) is the weight parameter of the final convolutional layer.

Experiments and discussions

Data sets

The datasets used for training and evaluation in this study comprise images collected by the PROBA-V29 Earth observation satellite. Released in 2019 as part of a super-resolution competition organized by the European Space Agency (ESA), the dataset includes images from two spectral bands: red (RED) and near-infrared (NIR). The images are encoded with 14-bit depth, ensuring detailed and rich information. The LR images are 128 \(\times\) 128 pixels, the corresponding HR target images are enlarged to 384 \(\times\) 384 pixels, achieving a 3x upsampling ratio. Regarding spatial resolution, each pixel in the LR images corresponds to 300 meters on the ground, while each pixel in the HR images represents 100 meters.

Since the pixels at the edges usually contain less useful information and are susceptible to noise, a binary mask tensor is assigned to each image to ensure data quality and eliminate interfering factors. The center of the binary mask tensor is 1 (meaning retain) and the edges are 0 (meaning crop), so that the pixels at the edges of the image are ignored and only the losses in the central area are calculated. This operation can improve the model’s focus on the features of the central area, thereby providing a clean sample of the image for subsequent analysis. The dataset covers a total of 1,450 geographical regions, of which 1,160 were carefully selected for the training of the algorithm model, and the remaining 290 regions were specially designed as test sets to check the generalization ability of the model. Each geographic region has an average of 19 LR images coverage, with a minimum of 9 images, ensuring data integrity from multiple perspectives.

Figure 8 shows an example image of a candidate region with super-resolution. By comparing the image effects at different resolutions, it visually demonstrates the super-resolution capability of the \(\hbox {ESTF}^2\)N model in the field of remote sensing image processing. All images in this figure have been visualized using a colormap (e.g. the ”viridis” colormap) to enhance interpretability and detail perception. This visualization process does not alter the actual data structure or dimensions. The figure represent the image resolution at different stages. Subfigure (a) is an overlapping view of the LR input image. The LR images represent the original remote sensing data, with low quality and blurred details, making it difficult to capture fine terrain features. Subfigure (b) shows the super-resolution image generated by the \(\hbox {ESTF}^2\)N model, which has a higher resolution and recovers more spatial details, thus improving the image’s visual quality. Subfigure (c) shows the target HR image, which is used as a reference to assess the similarity between the model’s output and the actual HR image.It can be seen that the model-generated image closely resembles the target image and better restores the detailed features of the terrain.

Data pre-processing

To enhance the efficiency of training and the generalizability of the model, pre-processing was applied to the PROBA-V dataset.

-

1.

The acquired k LR images are aligned using the ShiftNet20 method, which can make the same feature in different images overlap spatially;

-

2.

Randomly crop the image. Generate multiple training samples from a single image to expand the dataset;

-

3.

Select high-quality pixel regions for sampling by setting a sampling threshold, thereby improving the quality of training samples;

-

4.

The image data is transformed into a floating-point numerical format and subjected to standardization.

During training, if the displacement problem is not solved, the HR images generated by the model will be blurry, affecting the quality and accuracy of the images. By learning the translation parameters between images, the images can be aligned spatially with the reference image, thereby eliminating the errors caused by displacement. The key concept of this method is to compute the translation parameter (theta) of each image relative to the reference image using a set of LR images that require alignment, and then adjust their positions accordingly. In this way, the output image will be spatially consistent with the reference image, which is convenient for subsequent super-resolution reconstruction and other image processing tasks.

To avoid the influence of unreliable pixels during registration, ShiftNet introduces a mask mechanism during processing. Figure 9 shows the specific operations in this process. The first row shows the original LR remote sensing input images, where a color map (for example, the ”gray” colormap) has been applied to enhance detail visibility for better visualization. However, in the actual computation, mask generation is still based on the original LR remote sensing images without any colormap processing. The second row shows the corresponding mask images, which are used to mark and exclude unreliable pixel regions during alignment, thereby enhancing the accuracy of image registration. During the mask generation process, we calculated the proportion of reliable pixels in each image and set a threshold of \(85\%\), which means that only regions where the reliable pixel ratio reaches or exceeds \(85\%\) are retained for registration, while areas below this threshold are ignored to reduce registration errors caused by unreliable pixels.

In order to make the model learn a wider range of features and reduce the over-dependence of the model on training data, the images are randomly cropped. The cropped regions of a specified position and size are obtained in the image, and the default size of the cropped region is 64 pixels. These cropped images are used as the new LR images and HR feature maps. Load the sharpness scores of each image from the clearance.npy file in the dataset. These scores reflect the quality of the pixels or the sharpness of the image. First, the sharpness scores are weighted according to the beta parameters. This step adjusts the relative weight of the scores using an exponential function to better distinguish between high-quality and low-quality pixel regions. Using the weighted scores p, n images are selected at random from the image set. The probability of selection is proportional to the sharpness of the image, meaning that high definition images are more likely to be selected. High-quality pixel regions usually contain more structural information and details. Training the model in key areas can improve its accuracy and generalizability. Low-quality or noisy areas can have a negative impact on the model, causing it to learn undesirable features or reduce the model performance. Training by excluding or reducing these areas can improve the model’s ability to learn from real data. The image data is converted from unsigned 16-bit integers to 32-bit floating point numbers, followed by a normalization process. The image data are normalized to the range [0, 1], so a wider range of values can be processed in subsequent calculation or processing. Since the pixel value range of the unsigned 16-bit integer type is [0, 65535], the pixel data of LR images is divided by 65535.0 to normalize the pixel values to the floating-point number range.

Experimental setup

The model developed in this work is built on the PyTorch30 deep learning framework and is optimized by the end-to-end optimization algorithm31. The initial learning rate is configured to 0.0007, combined with the learning rate decay strategy, which means that when the validation set’s score does not improve for two successive epochs, the progressive learning rate decay factor is 0.97. The model is trained for 400 epochs using a batch size of 16. The training process takes approximately 12 hours on an NVIDIA RTX 4090 GPU equipped with 24 GB of memory. In the inference phase, our model can process about 12 scenes per second on the same graphics card.

During the data preprocessing stage, we use the Python programming language to process experimental data. We loaded the sharpness scores of each image from the clearance.npy file in the dataset to assess image quality and filter high-quality images for training. Furthermore, we read the norm.csv file in the dataset, which contains the standard cPSNR values of the target HR images for performance evaluation and normalization.

Considering the memory limitations and the goal of improving the generalization performance of the model, we randomly crop the LR image of 64\(\times\)64 pixels and its corresponding HR 192\(\times\)192 pixels image as training samples during the training process. Since the model adopts a fully convolutional structure, we take the LR image with a size of 128\(\times\)128 pixels as input during testing.

Evaluation indicators

Although traditional mean square error (MSE) is widely used, it is more sensitive to changes in image brightness. In super-resolution tasks, noise and detail loss are inevitably introduced due to the amplification process of LR images. Therefore, the brightness of the reconstructed image is often different from that of the original HR image. MSE cannot effectively distinguish between such brightness variations due to the reconstruction process and the intrinsic brightness difference of the image itself. To address this problem, we choose to use a corrected metric for the loss function, called corrected MSE (cMSE). cMSE performs luminance compensation on the reconstructed image before calculating the mean square error. This makes the average luminance of the reconstructed image consistent with the average luminance of the target image. In this way, cMSE is able to focus more on evaluating the recovery quality of the reconstructed image in terms of detail texture, edge contours, etc., without being affected by the overall brightness difference. The calculation of cMSE can be expressed as formula 13 and 14.

In formula 13 and 14, d denotes the brightness deviation (scalar), S is a binary image representing the clear pixels in H, and both images and \(\hat{H}\) are normalized to the [0,1] range. The corrected PSNR (cPSNR) can be computed using cMSE, and the calculation process can be expressed as formula 15.

Based on cMSE and cPSNR, we further define the evaluation metric for a single image. A normalized evaluation function \(z(\widehat{H})\) is introduced to represent the Score of the HR image generated by the model. The calculation process can be expressed as formula 16.

where N(H) denotes the standard value cPSNR of the target HR image H, which is provided by the norm.csv file in the data set. This formula can reflect the superiority of the generated image \(\widehat{H}\) compared to the target image H. The overall score of the final submission is calculated by averaging the \(z(\widehat{H})\) across all test regions. As shown in formula 17, submission represents the total number of images in the validation set. If score is less than 1, it indicates that the quality of the generated images is higher than the Bicubic baseline method.

Figure 10 compares the cPSNR values of the baseline method (Bicubic) and the \(\hbox {ESTF}^2\)N method in the validation set to visually highlight their differences. Each data point corresponds to a specific scene, where the x-axis reflects the cPSNR value of the baseline method, and the y-axis represents the cPSNR value of the \(\hbox {ESTF}^2\)N method. When the data point lies above the line y = x, it means that the \(Score < 1\), that is, the super-resolution effect of the \(\hbox {ESTF}^2\)N method in this scene is better than that of the baseline method. When the data point is below the straight line y = x, it means that \(Score > 1\), that is, the super-resolution effect of \(\hbox {ESTF}^2\)N in this scene is not as good as that of the baseline method. The results show that the \(\hbox {ESTF}^2\)N method shows excellent super-resolution effects in almost all scenes. In the NIR band, the super-resolution effect of the \(\hbox {ESTF}^2\)N method is better than the baseline method in 96.04% of the scenes. In the RED band, our method also shows significantly better super-resolution effects than the baseline method in 98.30% of the scenes.

Finally, to define a loss function to be minimized, the negative cPSNR is used. The calculation process can be expressed as formula 18.

Comparison with other methods

We conducted a series of comparative experiments to evaluate the performance of different super-resolution methods on the PROBA-V Kelvin dataset. Given the absence of ground truth in this dataset, we followed a standard approach by splitting the training set into training and validation subsets with a 7:3 ratio. Using the same training/validation split, we compared several representative methods, including SISR, video super-resolution (VSR), and MISR. The evaluation metrics include cPSNR, cSSIM and Score.

Table 1 shows the comparison of the cPSNR and cSSIM metrics of several methods in the validation set. In the NIR band, the cPSNR of \(\hbox {ESTF}^2\)N is 49.69 dB, which is significantly higher than state-of-the-art methods such as DeepSUM (47.84 dB) and MAST (49.63 dB). This represents an improvement of 4.25 dB compared to the Bicubic interpolation method (45.44 dB). cPSNR of \(\hbox {ESTF}^2\)N reaches 51.57 dB in the RED band, which is ahead of DeepSUM++ (50.08 dB) and TR-MISR (50.67 dB), and an increase of 4.24 dB compared to Bicubic (47.33 dB). In the cSSIM metric, \(\hbox {ESTF}^2\)N achieves 0.9894 and 0.9928 in the NIR and RED bands, respectively, showing better structural similarity, suggesting that the image is improved in detail.

This model effectively captures and fuses temporal and spatial features by introducing CRFM, which improves the accuracy of image reconstruction. The model overcomes the shortcomings of methods such as RCAN and VSR-DUF in modeling temporal information. With the ABFE, this model is able to accurately extract important spatial features in LR images. Our model shows superior reconstruction results for complex textures compared to Bicubic and IBP/BTV methods. In summary, the advantages of the \(\hbox {ESTF}^2\)N network model over the existing super-resolution methods in terms of integrated utilization of spatio-temporal features and spatial feature extraction promote the development of super-resolution reconstruction techniques for remote sensing images.

Ablation experiments

To assess the effectiveness of the \(\hbox {ESTF}^2\)N model, we performed ablation experiments focusing on the core RTAB module in the spatio-temporal fusion module and the core CAB module in the ABFE coding module. These experiments aim to systematically analyze and validate the influence of the modules of the proposed method on the overall performance of the model. By gradually removing or replacing the corresponding modules and comparing the super-resolution effect of the model in different bands.

Importance of RTAB

The core of the spatio-temporal fusion CRFM module is RTAB. The temporal attention mechanism focuses on the most informative frames in multiple consecutive remote sensing images, and the original features are retained through a residual connection. The module weights and fuses the multi-frame features to produce sharper HR images in super-resolution tasks. We trained two alternative networks for each spectral band , identical in architecture to \(\hbox {ESTF}^2\)N, but with the exclusion of the RTAB branch. These simplified networks were trained independently from the ground up, thereby demonstrating the importance of RTAB mechanism.

Table 2 demonstrates the significant degradation of the results in the absence of RTAB branching, proving the importance of choosing the best timing view to simplify the main branching super-resolution process. We found that this difference is particularly evident in the red light band. Because training failed multiple times without the RTAB module. When the RTAB module is removed, the model loses its ability to selectively emphasize frames with higher temporal consistency, resulting in degraded performance in scenarios where inter-frame temporal correlation is weak. Through this study, we have validated the crucial role of global residual branches in improving model performance.

Importance of CAB

The CAB module enhances the model’s ability to extract spatial features. As the core module in ABFE, Table 3 compares the performance differences between the complete model with the CAB module and the simplified model without the CAB module in the dataset. The CAB module further strengthens the focus on spatial features by weighting the importance between different channels through the channel attention mechanism. This allows the \(\hbox {ESTF}^2\)N model to more effectively capture subtle spectral differences and enhance the resolution of image details in the reconstruction. The experimental data show that the \(\hbox {ESTF}^2\)N network with the CAB module improves both in the metrics cPSNR and cSSIM, especially in the RED band. After the introduction of CAB module, the cPSNR of NIR band is improved from 48.24 dB to 49.69 dB, and the cSSIM is improved from 0.9845 to 0.9894, while the cPSNR of RED band is significantly improved from 47.82 dB to 50.17 dB. This result is much better than that of the version without the CAB module, which indicates that the module is particularly important for the extraction of spatial features in the RED band.

Visualization comparison

To visually demonstrate the advantages of the \(\hbox {ESTF}^2\)N model in generating HR images, we have selected three representative images from the scene. These scenes contain typical features such as rock textures, river contours, and mountain terrains. The results of comparing the visual effects of different models in these scenes are shown in Figs. 11, 12 and 13. The image on the left represents the LR input, and the ten images on the right correspond to 3x magnified views of the red boxes in the LR images. The orange areas in these magnified images highlight specific regions, allowing for a detailed comparison of local details.

The rock scene in Fig. 11 has complex texture details. Traditional methods (such as Bicubic and BTV) lose most of the texture information during reconstruction, and the resulting HR images tend to be smoothed. DeepSUM and RCAN can recover some texture, but the edge areas are still blurry, and the local details lack accuracy. In contrast, the \(\hbox {ESTF}^2\)N model can clearly restore the texture structure of the rock, especially the edges, which is close to the HR image. In the river scene in Fig. 12, the clarity of the river boundary and the texture of the watershed are the places that differ the most. The HR images generated by other methods are blurred or even have artifacts near the river boundary. However, \(\hbox {ESTF}^2\)N accurately restores the contour of the river with its powerful spatial feature extraction capabilities, and the riverbank boundary is clearer. The mountain scene in Fig. 13 shows the characteristics of the undulating terrain. Other methods make the area of the ridge appear flat, while the method in this paper can effectively restore the layering of the ridges and ravines, generating richer and more realistic texture layers. Although our method achieves good results in visual effects, there are still some shortcomings compared with HR images. In detail-rich areas such as edges and complex textures, the generated images occasionally appear slightly blurred, and there is a certain lack of brightness restoration. This shows that our method still has room for further improvement in capturing and reproducing fine structural details and color restoration.

Conclusions

In this paper, a novel deep learning network based on end-to-end \(\hbox {ESTF}^2\)N is proposed to improve the quality of super-resolution reconstruction of remote sensing multi-images. By incorporating the spatial feature extraction module, the model accurately captures spatial features from LR images, addressing the challenge of high image sharpness requirements and ensuring the full utilization of all available LR images. The introduction of a spatio-temporal feature fusion module is enough to capture the changes and temporal relationships between images, improve the model’s understanding and reconstruction of the scene, and successfully mitigating the impact of weak temporal correlation. Our extensive experiments on the PROBA-V dataset show that the \(\hbox {ESTF}^2\)N model outperforms the existing methods in both subjective visual effects and objective evaluation indexes (cPSNR and cSSIM), and these results fully demonstrate that the \(\hbox {ESTF}^2\)N model not only reduces the cost of acquiring HR remote sensing images, but also provides a new technological way for the acquisition of HR remote sensing images.

Discussion

Future research work can be centered on the following aspects. First, although the \(\hbox {ESTF}^2\)N model has achieved significant results in improving the quality of super- resolution reconstruction of multi-temporal remote sensing images, its generalization ability on different types of remote sensing datasets still needs to be further verified. In the future, the model can be considered to be applied to more types of remote sensing data to explore its applicability in different scenarios. In terms of network structure design, future research can explore a more lightweight model architecture to reduce the computational complexity and storage requirements of the model, making it more suitable for deployment in resource-constrained environments.

Data availibility

The dataset utilized in this study is the open source PROBA-V super-resolution dataset (https://zenodo.org/records/6327426). The analyzed data and specific usage guidelines are available upon request from the corresponding author.

References

Chen, Z. et al. Dual aggregation transformer for image super-resolution. In Proc. of the IEEE/CVF International Conference on Computer Vision, 12312–12321 (2023).

Li, A., Zhang, L., Liu, Y. & Zhu, C. Feature modulation transformer: Cross-refinement of global representation via high-frequency prior for image super-resolution. In Proc. of the IEEE/CVF International Conference on Computer Vision (ICCV), 12514–12524 (2023).

Zhang, X., Li, T. & Zhao, X. Boosting single image super-resolution via partial channel shifting. In Proc. of the IEEE/CVF International Conference on Computer Vision (ICCV), 13223–13232 (2023).

Zhou, Y. et al. Srformer: Permuted self-attention for single image super-resolution. In Proc. of the IEEE/CVF International Conference on Computer Vision (ICCV), 12780–12791 (2023).

Liu, F. et al. Aerial image super-resolution based on deep recursive dense network for disaster area surveillance. Personal and Ubiquitous Computing, 1–10 (2022).

Wang, H. et al. Multi-source remote sensing intelligent characterization technique-based disaster regions detection in high-altitude mountain forest areas. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2022).

Lepcha, D. C., Goyal, B., Dogra, A. & Goyal, V. Image super-resolution: A comprehensive review, recent trends, challenges and applications. Inf. Fusion 91, 230–260 (2023).

Malczewska, A. & Wielgosz, M. How does super-resolution for satellite imagery affect different types of land cover? sentinel-2 case. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 17, 340–363 (2024).

Tuohetahong, Y. et al. Climate and land use/land cover changes increasing habitat overlap among endangered crested ibis and sympatric egret/heron species. Sci. Rep. 14, 20736–20751 (2024).

Wang, X., Hu, Q., Cheng, Y. & Ma, J. Hyperspectral image super-resolution meets deep learning: A survey and perspective. IEEE/CAA J. Autom. Sin. 10, 1668–1691 (2023).

Fu, B. et al. Combination of super-resolution reconstruction and sga-net for marsh vegetation mapping using multi-resolution multispectral and hyperspectral images. Int. J. Digit. Earth 16, 2724–2761 (2023).

Wang, Y. et al. Sinsr: Diffusion-based image super-resolution in a single step. In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 25796–25805 (2024).

Tian, Y., Chen, H., Xu, C. & Wang, Y. Image processing gnn: Breaking rigidity in super-resolution. In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 24108–24117 (2024).

Chen, H. et al. Low-res leads the way: Improving generalization for super-resolution by self-supervised learning. In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 25857–25867 (2024).

Wang, L., Li, J., Wang, Y., Hu, Q. & Guo, Y. Learning coupled dictionaries from unpaired data for image super-resolution. In 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 25712–25721 (2024).

Irani, M. & Peleg, S. Improving resolution by image registration. CVGIP: Graph. Models Image Process. 53, 231–239 (1991).

Farsiu, S., Robinson, M., Elad, M. & Milanfar, P. Fast and robust multiframe super resolution. IEEE Trans. Image Process. 13, 1327–1344 (2004).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. In Proc. of the European Conference on Computer Vision (ECCV), 286–301 (2018).

Jo, Y., Oh, S. W., Kang, J. & Kim, S. J. Deep video super-resolution network using dynamic upsampling filters without explicit motion compensation. In Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 3224–3232 (2018).

Deudon, M. et al. Highres-net: Recursive fusion for multi-frame super-resolution of satellite imagery (2020).

Bordone Molini, A., Valsesia, D., Fracastoro, G. & Magli, E. Deepsum: Deep neural network for super-resolution of unregistered multitemporal images.. IEEE Trans. Geosci. Remote Sens. 58, 3644–3656 (2020).

Molini, A. B., Valsesia, D., Fracastoro, G. & Magli, E. Deepsum++: Non-local deep neural network for super-resolution of unregistered multitemporal images. In IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, 609–612 (2020).

An, T. et al. Tr-misr: Multiimage super-resolution based on feature fusion with transformers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 15, 1373–1388 (2022).

Li, J. et al. Multi-attention multi-image super-resolution transformer (mast) for remote sensing. Remote Sens. 15, 4183 (2023).

Han, K. et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 45, 87–110 (2023).

Cong, S. & Zhou, Y. A review of convolutional neural network architectures and their optimizations. Artif. Intell. Rev. 56, 1905–1969 (2023).

Caterini, A. L., Chang, D. E., Caterini, A. L. & Chang, D. E. Recurrent neural networks. Deep Neural Networks in a Mathematical Framework, 59–79 (2018).

Wang, Q., Guo, L., Ding, S., Zhang, J. & Xu, X. Sfemgn: Image denoising with shallow feature enhancement network and multi-scale convgru. In ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1–5 (2023).

Sterckx, S. et al. The proba-v mission: Image processing and calibration. Int. J. Remote Sens. 35, 2565–2588 (2014).

Imambi, S., Prakash, K. B. & Kanagachidambaresan, G. Pytorch. Programming with TensorFlow: solution for edge computing applications 87–104 (2021).

Kingma, D. P. Adam: A method for stochastic optimization. arXiv preprint http://arxiv.org/abs/1412.6980 (2014).

Acknowledgements

I wish to convey my profound gratitude to my supervisor, Prof. Shengbing Che, for his invaluable guidance, steadfast support, and insightful advice in selecting the thesis topic, defining the research methodology, and revising the final paper. Additionally, I sincerely appreciate the support and contributions of all members of Prof. Che’s research team. Tuo Yangzhuo, Wang Wanqin, Du Yafei, and Zhang Zixuan for their many discussions with me during the conduct of the research, which pushed the research forward smoothly. Thanks to the TAPU Research Fund (Project No.2024-1128-01-HNTP01) for its financial support of this research.

Funding

Thanks to the TAPU Research Fund (Project No.2024-1128-01-HNTP01) for its financial support of this research.

Author information

Authors and Affiliations

Contributions

W.L. conceived the experiments, W.L. and S.C. conducted the experiments, W.L. and W.W. analyzed the results. The remaining team members contributed to the creation of the figures and tables. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, W., Che, S., Wang, W. et al. Research on remote sensing multi-image super-resolution based on \(\hbox {ESTF}^{2}\)N. Sci Rep 15, 9501 (2025). https://doi.org/10.1038/s41598-025-93049-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-93049-7