Abstract

High Dynamic Range (HDR) images, with their expanded range of brightness and color, provide a far more realistic and immersive viewing experience compared to Low Dynamic Range (LDR) images. However, the significant increase in peak luminance and contrast inherent in HDR images often accentuates artifacts, thus limiting the effectiveness of traditional LDR-based image quality assessment (IQA) algorithms when applied to HDR content. To address this, we propose a novel blind IQA method tailored specifically for HDR images, which incorporates both the perception and inference processes of the human visual system (HVS). Our approach begins with multi-scale Retinex decomposition to generate reflectance maps with varying sensitivity, followed by the calculation of gradient similarities from these maps to model the perception process. Deep feature maps are then extracted from the last pooling layer of a pretrained VGG16 network to capture inference characteristics. These gradient similarity maps and deep feature maps are subsequently aggregated for quality prediction using support vector regression (SVR). Experimental results demonstrate that the proposed method achieves outstanding performance, outperforming other state-of-the-art HDR IQA metrics.

Similar content being viewed by others

Introduction

High dynamic range (HDR) images can potently represent real-world scene radiance, ranging from faint starlight to bright sunlight. As a means to generate more realistic image content, HDR imaging is now widely welcomed by both the industry and consumer society with the development of image technology and hardware support. However, similar to low dynamic range (LDR) images, HDR content can suffer from distortions during processes such as image acquisition, compression, and transmission, which may be caused by camera artifacts, coding compression, channel errors, and noise, among other factors1,2,3. Therefore, an efficient image quality assessment (IQA) metric is critical for evaluating the viewing experience and optimizing image processing algorithms. Nevertheless, compared to their LDR counterparts, HDR images’ higher peak luminance and contrast may have different visual impacts and pose new challenges for IQA.

IQA can be broadly divided into subjective and objective approaches. Subjective evaluation involves human observers assessing quality based on predefined criteria. Common methods include Mean Opinion Score (MOS), where images are rated on a scale; Good/Bad Classification, which categorizes images as “Good” or “Bad”; Pairwise Comparison, where the better image is selected from two options. While accurate, objective methods are labor-intensive and impractical for real-time use4. Objective IQA uses computational models to predict perceived quality and is categorized into Full-Reference (FR), Reduced-Reference (RR), and No-Reference (NR) metrics. FR compares a distorted image to a reference, RR uses partial reference information, and NR evaluates quality independently without any reference image. Among these methods, NR is particularly challenging, especially for HDR images. In practice, large-scale HDR reference images are often inaccessible. For example, HDR images may be created from a single LDR image or multi-exposure fusion (MEF), leaving no reference HDR image available. Therefore, developing robust NR methods for HDR images is crucial.

To ensure that quality predictions closely align with subjective scores, it is crucial to incorporate the perception factors of human visual system (HVS) into the design of an objective algorithm5,6. The HVS processes perception and inference hierarchically, which inspires the development of our IQA method. This metric aggregates both perception and inference features to enhance prediction accuracy for HDR images. Specifically, our contributions are as follows:

-

1.

Our blind HDR IQA metric aggregates both low-level (perception) and high-level (inference) features in a comprehensive manner.

-

2.

We apply multi-scale Retinex decomposition to generate multi-sensitivity reflectance maps for HDR images. Gradient similarities calculated from these maps represent the cognitive process and are used as perception features.

-

3.

We extract feature maps from the last pooling layer of a pre-trained VGG16 network, which captures various semantic information from the input image and serves as inference. Prior to being fed into VGG16, HDR images are preprocessed using multi-scale Retinex with color restoration to align their value range with that of the training data.

The remainder of this paper is organized as follows: Section II provides a review of related work in the field. In Section III, the proposed method is described in detail. Section IV presents experimental comparisons between our method and several state-of-the-art (SOTA) quality assessment algorithms. Finally, Section V concludes the paper.

Related work

In this section, we review two key research areas closely related to our work: HDR IQA and CNN-based deep feature methods for IQA.

Significant advancements have been made in LDR IQA technologies7,8,9,10,11,12,13,14. However, these technologies are primarily designed to evaluate gamma-coded LDR images, which differ fundamentally from HDR images, where pixel values are linear to radiance. As a result, traditional LDR quality metrics cannot be directly applied to HDR content. To bridge this gap, Aydin et al.15 introduced an encoding function (PU) to convert linear HDR values into approximately perceptually uniform ones, thereby extending classical LDR metrics such as PSNR and SSIM to HDR applications. Following this idea, Mantiuk et al.16proposed a more comprehensive PU encoding function, enabling the adaptation of legacy LDR IQA metrics, including VSI10, FSIM9, SSIM7and MS-SSIM8, to HDR content.

Despite these efforts, the development of specialized HDR IQA algorithms remains limited. HDR visual difference predictor (HDR-VDP)17,18and HDR video quality measure (HDR-VQM)19 were designed for HDR images and videos, respectively, based on modeling visual processing. Specifically, they modeled intra-ocular scattering, luminance masking, and photoreceptor response, and calculated visual differences between reference and test images for quality evaluation. Zhang et al.20 found that subjective scores were not affected by display luminance and proposed a FR metric by computing gradient similarity. Liu et al.21 introduced the Local-Global Frequency Model (LGFM), an FR model for HDR IQA that uses Gabor filters for local feature extraction and Butterworth filters for global feature detection, combining these similarity maps to produce a quality score. More recently, Cao et al.22 proposed a novel HDR IQA method that converts HDR images into stacks of LDR images using an inverse display model and evaluates them with established LDR IQA metrics. This method not only outperforms existing HDR IQA models on multiple datasets but also shows significant improvements in perceptual optimization for HDR novel view synthesis. The transmission of reference HDR images demands significant bandwidth, and HDR content is often synthesized from LDR images without corresponding reference HDR versions, making the development of NR methods for HDR IQA a more practical and competitive solution. Guan et al.23 developed an NR quality prediction model based on tensor decomposition, extracting structure and contrast features for quality prediction using support vector regression (SVR). Kottayil et al.24 proposed a CNN-based NR-IQA model for HDR images, consisting of an E-net for error estimation and a P-net for perception resistance, with block scores combined to generate a final image score. Banterle et al.25 trained a modified U-Net26 for the NR prediction of HDR-VDP-2 quality scores, eliminating the need for reference images and reducing computational costs. Chubarau et al.27 explored transfer learning and domain adaptation techniques to adapt pretrained neural networks from LDR to HDR IQA, leveraging the power of deep learning to improve model performance.

CNNs are widely used in various fields26,28, including image segmentation, image generation, object detection, etc. In these diverse image processing tasks, CNNs can autonomously learn perception mechanisms akin to those of the HVS, such as sensitivity to edges, colors, and motion, through the use of training data29,30. CNNs can automatically extract multi-level, complex features from images, demonstrating strong representational capabilities and adaptability, which simplifies model design. As a result, CNN-based IQA methods have gained widespread acceptance. However, there are only relatively small datasets with subjective scores available for most specific IQA applications. For HDR IQA, Narwaria’s data base31and Korshunov’s database32 consist only of 140 and 240 labeled HDR images, respectively. The scarcity of large, high-quality annotated data poses challenges for training deep learning models. To overcome such challenge, a feasible solution is to extract quality-related deep features by inputting images into a pretrained network, which has been trained on a large dataset and can capture complex patterns and structures in the images. Gao et al.33 proposed a FR IQA framework, codenamed DeepSim, by pooling local similarities between the features generated by each layer in the VGG net for a global quality score. Ma et al.34 fed the reference and distorted images into VGG net and averaged perception difference indices in the first 35 layers to calculate the objective quality score. Chaudhary et al.35 inputted saliency maps of the refence and tested Depth-Image-Based-Rendering (DIBR) views into VGG net for feature extraction, and then calculated cosine similarity between feature vectors for quality prediction. These studies demonstrate that pre-trained CNNs, originally developed for tasks like image classification, can be effectively repurposed for quality-related feature extraction and IQA model construction.

This study proposes a NR HDR IQA method that integrates Retinex theory to simulate human visual mechanisms for extracting low-level perception features. In parallel, it utilizes a pre-trained CNN to autonomously capture high-level inference features. By effectively combining these complementary feature domains, our method aims to provide enhanced accuracy and reliability in HDR image quality assessment.

Proposed method

Figure 1 shows the framework of our HDR IQA algorithm. The proposed methodology for blindly assessing HDR image quality involves several key steps. First, the HDR image is converted from the RGB color space to the LAB color space. In this space, the brightness map (L-channel) is processed using multi-scale Retinex (MSR) decomposition36. Gradient similarity maps are then computed between the original luminance map and reflectance maps at different scales, serving as perception features that capture gradient information across scales. Next, multi-scale Retinex with color restoration (MSRCR)37 is applied to obtain an enhanced image, ensuring that colors remain natural after enhancement. This enhanced image is then input into a pretrained VGG16 network, from which deep feature maps are extracted at the 5th pooling layer. These feature maps, referred to as inference features, provide high-level abstractions valuable for quality assessment. Finally, the perception features from the gradient similarity maps and the inference features from VGG16 are aggregated and fed into an SVR model, which predicts the image quality score. This approach effectively integrates traditional image processing techniques with modern deep learning to deliver a robust framework for accurately predicting HDR image quality.

Retinex decomposition

The word ‘Retinex’ is a blend of ‘retinal’ and ‘cortex’, reflecting the involvement of both eye and brain processing. It was initially proposed to explain color constancy under varying illumination conditions38. According to Retinex theory, an image can be represented as the product of a reflectance image and an illumination image, expressed as follows:

where \(\:x,\:y\) are spatial indices, \(\:ch\) denotes the color channel, and \(\:ch\), \(\:\mathcal{L}\), and \(\:\mathbb{R}\) represent the image, luminance, and reflectance, respectively. The illumination component of an image typically varies slowly and determines the dynamic range of the original image. In contrast, the reflectance component captures the inherent properties of the image, representing the object’s surface characteristics and details.

To simplify calculations, the equation is often converted to the logarithmic domain:

This logarithmic transformation, aligning well with the perceptual uniformity of HVS, converts the multiplicative relationship into an additive one, the whole process is shown in Fig. 2.

The decomposition process involves using a Gaussian filter as a low-pass filter to the original image, thereby obtaining the luminance component. The Gaussian filter is defined as:

where \(\:\sigma\:\) is the standard deviation of the Gaussian distribution.

MSR utilizes multiple Gaussian filters with different \(\:\sigma\:\)values to enhance the image36. The final result is obtained by weighting and combining the outputs from these different scales. The formula is as follows:

where \(\:MSR\left(x,y\right)\) is the multi-scale Retinex output, \(\:{\omega\:}_{i}\) is the weight for the \(\:i\)-th scale, \(\:{\mathbb{R}}_{i}(x,y)\) is the Retinex output for the \(\:i\)-th scale, and \(\:N\) is the number of scales. Each scale corresponds to a Gaussian filter with a specific \(\:\sigma\:\) value, allowing the method to capture details at various levels.

MSRCR is an enhancement of the basic MSR algorithm37. It ameliorates MSR with a color restoration step to preserve natural colors and avoid the gray-world effect produced by the standard Retinex.

Perception feature maps

The reflectance map captures the intrinsic properties of an image. We first decompose an image by MSR in the luminance domain. Different Gaussian filters adopted by MSR produce different reflectance maps. With the change in scale, we calculate the gradient similarity between the current reflectance map and the original luminance map. The gradient map of a scale is calculated as follows

with

Here, \(\:k\) denotes the scale number, and \(\:k=0\) means the original luminance map. \(\:{\varkappa\:}_{h}\left(x,y\right)\) and\(\:{\:\varkappa\:}_{v}\left(x,y\right)\) are the horizontal and vertical Sobel filter kernels, respectively. The MSR decomposition employs three Gaussian filter scales, with their \(\:\sigma\:\) values empirically set to 250, 80, and 10. The gradient similarity between the original image and scale \(\:N\) (\(\:N\)∈{1,2,3}) can be computed as follows

where \(\:\mathbb{C}\) is a small constant added to avoid division by zero and instability in the equation.

Since HDR content cannot be properly displayed in print, Fig. 3shows tone-mapped representations of three HDR images by built-in Matlab function. The HDR images, selected from the Narwaria database19, contain the same content but have different encoding rates. Figure 3a represents a highly compressed HDR image, where blurring and blocking artifacts are clearly visible as shown in the red and green bounding boxes. Additionally, we can find banding artifacts in the sky as indicated in the yellow bounding box. Figure 3b shows moderately compressed HDR content and reveals more details compared to Fig. 3a. For instance, the area within the red bounding box in Fig. 3a is heavily blurred or blocked, while the corresponding area in Fig. 3b shows only slight blurring at the bottom. Figure 3c, from a reference HDR image, displays perfect details without visible artifacts. The Mean Opinion Scores (MOS) are 1.077, 3.077, and 4.808 for the HDR images of Fig. 3a, b, and c, respectively, with 4.808 being the highest quality score and 1.077 the lowest.

Figure 4 displays the trend lines of mean gradient similarity values for Fig. 3. For Fig. 3a, the largest values and flattest line are observed, while Fig. 3c has the smallest similarities and highest score. This phenomenon suggests that Fig. 3a may lack detailed information, resulting in minimal differences from different Gaussian filtering. Similarly, the smallest values in Fig. 3c indicate detailed features well-preserved in the test image. These gradient similarities correlate well with HDR image quality scores. Hence, we compute the three gradient similarity maps as quality-related features.

Inference feature maps

In 1982, Marr39 proposed the theory of visual computation, providing a theoretical foundation for building computational models that simulate complex visual processing mechanisms. Convolutional Neural Networks (CNNs) have shown remarkable efficiency in replicating the receptive fields and layered processing of the visual system. Notable CNN models include AlexNet40, VGG1641, GoogLeNet42, ResNet43, DenseNet44, ViT45, etc.

CNN-based deep feature extraction and representation have demonstrated exceptional performance in image processing tasks, including image quality assessment. When there are insufficient training samples, techniques such as transfer learning or deep feature extraction are commonly used to achieve the desired outcomes. Transfer learning involves fine-tuning a pre-trained CNN model and its parameters, followed by retraining on a new dataset to develop a new model. On the other hand, deep feature extraction directly utilizes pre-trained CNN models to extract image features, which can then be applied to tasks like image quality assessment or image classification, etc. Deep feature extraction eliminates the need for retraining deep neural networks, thereby conserving both computational resources and time.

VGG16 is a widely recognized CNN architecture, introduced by the Visual Geometry Group (VGG) at the University of Oxford in 2014. As illustrated in Fig. 5, VGG16 consists of 13 convolutional layers, 3 fully connected layers and 5 pooling layers. Known for its simplicity and effectiveness, VGG16 excels at learning rich features from images, making it highly capable in tasks such as image classification and object detection. Today, the pre-trained VGG16 model on large-scale datasets, such as ImageNet46, is one of the leading choices for transfer learning or deep feature extraction. In this study, we use the pre-trained VGG16 network to extract deep features from HDR images as inference features. To accommodate input images of any size, the final three fully connected layers of VGG16 are removed. Deep features are then extracted from the last pooling layer, resulting in 512 feature maps.

To ensure that test images are compatible with the input requirements of the trained VGG16 model, they need to be mapped to the numerical range of the training dataset. Figure 6 illustrates the display effects produced by three different mapping methods applied to the same HDR image. Figure 6a is obtained using the built-in MATLAB tone-mapping function. While the image details are well preserved, there is significant color distortion, leading to a lack of naturalness. The mapped result is in 8-bit integer form. Figure 6b and c are generated using linear mapping and MSRCR, respectively, both in double-precision format. The scale settings for MSRCR are consistent with those used in MSR (part B). Compared to Fig. 6b, c display better detail in dark areas and richer color information. Additionally, using double-precision data as input enhances the accuracy and effectiveness of computations. Therefore, MSRCR is used as a preprocessing step to the images input to VGG16 network.

Figure 7 shows two HDR images with different content along with their deep feature maps. The leftmost column represents the MSRCR version of the HDR images, while the other columns show the deep feature maps extracted from the last pooling layer of VGG16. The numbers at the bottom indicate the position indices of the feature maps, and “…” denotes the feature maps not displayed. The comparison reveals significant differences between the deep feature maps at the same position indices. These deep feature maps contain rich target and semantic information related to image understanding, from which we can extract inference features for HDR image quality assessment.

Feature aggregation

Based on the previous content, we have obtained 3 gradient similarity maps representing perception characteristics and 512 deep feature maps representing inference characteristics. The deep feature maps are denoted as \(\:\mathcal{M}\in\:{\mathbb{R}}^{h\times\:w\times\:d}\), where \(\:h\) and \(\:w\) represent the height and width of the feature maps, respectively, and \(\:d\) represents the number of feature maps, with \(\:d=512\). The following steps outline the process of aggregating these two types of feature maps to generate a feature vector for quality assessment.

-

1.

Figure 4 demonstrates that there is a correlation between the average gradient similarity values at each decomposition scale of MSR and image quality. High similarity values correspond to low Mean Opinion Score (MOS) values, while low similarity values correspond to high MOS values. By integrating the gradient similarity maps across the three scales using the Hadamard operation, we obtain a two-dimensional matrix \(\:{G}_{t}\) that comprehensively describes the multi-scale visual perception characteristics:

Where ⊙ denotes the Hadamard operator.

-

2.

From Fig. 7, it can be observed that each deep feature map describes a portion of the region information of the image, serving as an essential component of the image representation. By weighting the feature maps with their summed values, we can emphasize the primary target features or semantic information.

First, we calculate the total sum of pixel values for each feature map:

where \(\:i\in\:\{\text{1,2},\dots\:,h\}, \,\, j\in\:\left\{\text{1,2},\dots\:,w\right\}, \,\,k\in\:\left\{\text{1,2},\dots\:,d\right\}\), \(\:h\) and \(\:w\) are the height and width of deep maps, respectively.

Next, we normalize this sum:

Finally, we compute the weighted feature map:

-

3.

Adjust the scale of \(\:{G}_{t}\) to the same size as the deep feature map:

Then implement the connection of the two types of information:

And calculate the Sum pooling along the channel dimension and obtain a vector with 512 elements:

-

4.

Finally, principal component analysis (PCA) is used to reduce the dimensionality of the vector:

The dimensionality of the vector is reduced to 32, preserving the most important features of the data. This reduction may effectively decrease the complexity of learning model and mitigate the risk of overfitting caused by the high dimensionality of the feature vectors.

Quality prediction

Regression models, including SVR47, Random Forest48, and Neural Network49, etc., have been extensively employed to integrate image features into quality scores. Among these approaches, SVR stands out due to its flexibility in selecting kernel functions, its robust capacity for handling high-dimensional features, and its notable generalization performance. Particularly in scenarios characterized by small sample sizes and high-dimensional data, SVR exhibits unique advantages compared to other machine learning algorithms. As with most training-testing methodologies, datasets are generally partitioned into two subsets: the training set and the testing set, typically through random sampling. During the training phase, an SVR evaluation model is developed based on the 32-dimensional feature vectors from the training set images alongside their corresponding MOS. In the subsequent testing phase, the features of images from the testing dataset are input into the trained SVR model to generate assessment scores.

Consequently, this study collects features related to HDR image quality and constructs an SVR model utilizing the Radial Basis Function (RBF) kernel to facilitate efficient evaluation of HDR image quality.

Experimental results

Databases and evaluation criteria

Databases

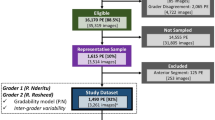

The proposed algorithm is evaluated on two public databases: Narwaria’s database31 and Korshunov’s database32.

Narwaria’s database: This database was developed using ten reference HDR images, as shown in Fig. 8, which include both indoor and natural scenes. The reference images were first tone-mapped to LDR using the iCAM06 operator. Subsequently, the tone-mapped images were JPEG compressed and then expanded to create distorted HDR images through inverse tone mapping. For each pristine content, 14 different distorted HDR images were generated by varying the JPEG compression settings and iCAM06 parameters. The database provides MOS ranging from 1 to 5, where higher values indicate better quality.

The 10 original HDR images from the Narwaria’s database (from31 ).

Korshunov’s database: This database comprises twenty pristine HDR images, encompassing a variety of scenes such as architecture, landscapes, and portraits, as shown in Fig. 9. The distorted images in this database were created using the JPEG-XT standard. A total of 240 compressed images were generated by applying different profiles and quality levels of JPEG-XT. Each image is assigned an impairment scale value ranging from 1 to 5, where higher values indicate greater impairment.

The 20 original HDR images from the Korshunov’s database (from32).

Evaluation criteria

To mitigate bias, the training-testing process of our learning method is executed 1000 times, with the median values used as the final results. In each of the 1000 iterations, the entire database is randomly divided into two parts based on image content: 80% of the samples are designated as the training set, while the remaining 20% are served as the testing set.

For performance evaluation, we employ three commonly used metrics: Root Mean Squared Error (RMSE), Pearson Linear Correlation Coefficient (PLCC), and Spearman Rank Order Correlation Coefficient (SRCC), all of which are recommended by the Video Quality Experts Group (VQEG)50. RMSE is a commonly used metric for measuring the differences between predicted and observed values. It quantifies the average magnitude of errors in a set of predictions, with lower RMSE values indicating better predictive accuracy. PLCC measures the linear correlation between two datasets, providing insight into the degree to which they vary together. The PLCC value ranges from − 1 to 1, where a value of 1 indicates perfect positive correlation, − 1 indicates perfect negative correlation, and 0 indicates no correlation. SRCC assesses the strength and direction of the association between two ranked variables. Unlike PLCC, SRCC evaluates monotonic relationships, making it robust against non-linear correlations. SRCC values range from − 1 to 1, with higher values indicating a stronger positive correlation. Prior to calculating PLCC and RMSE, it is necessary to remove the nonlinearity of the objective scores through logistic regression, defined as:

where \(\:{Q}_{p}\) is the input objective prediction score, and a1, a2, a3, a4 and a5 are the parameters to be fitted by nonlinear regression.

Results and performance

To validate the effectiveness and reliability of the algorithm, we conduct the comparative experiments against two categories of SOTA HDR IQA models. The first category involves methods that transform the linear values of HDR images into perceptually uniform values, followed by the application of established LDR IQA methods to make evaluation. Specifically, all competing LDR metrics are extended by the PU encoding of HDR images, and commonly used FR algorithms (such as PSNR, SSIM7, VIF14) and NR algorithms (such as BRISQUE11, NIQE13) are selected for comparison. The second category consists of specially designed HDR IQA methods, of which Guan’s method23, HDR-VDP-2.218, HDR-VQM19, Zhang’s method20, and Cao’s method22 are adopted as compared algorithms.

Table 1 presents the comparative results across two widely recognized public databases, with the highest-performing indices highlighted in bold. The findings from the table reveal several key insights:

-

1.

The results indicate that LDR metrics generally underperform when compared to HDR-specific metrics. This is particularly evident in the case of no-reference metrics such as PU-NIQE and PU-BRISQUE, which exhibit the poorest performance among all tested metrics.

-

2.

Our proposed method achieves the best results on Narwaria’s database, outperforming even the state-of-the-art full-reference HDR quality metrics, such as HDR-VQM and Cao’s method.

-

3.

Despite not achieving the top ranking across all indices on Korshunov’s database, our method stands out by securing the second-best performance in RMSE and SRCC, while also claiming the third best in PLCC.

-

4.

One of the most notable strengths of our method is its consistent performance across both databases. The close results between Narwaria’s and Korshunov’s databases suggest that the algorithm is highly robust.

In summary, the proposed method not only demonstrates superior prediction accuracy but also maintains a high level of consistency and robustness across different datasets.

Ablation study

The proposed method achieves HDR IQA by fusing two types of features. Specifically, gradient similarities, which represent perception features, are used to weight deep feature maps that represent inference features. To verify the complementarity of these features, we conduct experimental comparisons with and without perception feature weighting. Table 2 presents the results, where ‘Yes’ indicates that gradient similarities are combined with deep features, and ‘No’ indicates the use of deep features alone. The results show that the fusion of these two feature types significantly improves both PLCC and SRCC on the two datasets. These findings underscore the strong complementary effect between perception and inference features.

Using PCA enables dimensionality reduction of high-dimensional features. Figure 10 presents the experimental results obtained on the Narwaria and Korshunov’s datasets, with varying numbers of feature dimensions. The results indicate that on the Narwaria’s dataset, the 32-dimensional features achieve the optimal performance. For the Korshunov’s dataset, performance generally improves as the number of features increases. however, beyond 32 dimensions, the improvement trend becomes much less pronounced. Given the smaller dataset size and the need for computational efficiency, reducing the dimensionality to 32 is deemed appropriate.

Cross-dataset assessment

To evaluate the generalization capability of the proposed learning-based algorithm, we train the model on one dataset (either Narwaria or Korshunov) and conduct evaluations on the other. The MOS of both datasets were aligned following the methodology outlined in51. As presented in Table 3, the cross-dataset evaluation results are promising, with all PLCC and SSRCC values approaching around 0.85.

Further discussion

We have developed an effective HDR image quality assessment algorithm that incorporates the perception and inference characteristics of the human visual system. Compared to other state-of-the-art algorithms, our method demonstrates superior performance. However, despite its competitive edge, there are several areas that require further attention and refinement:

-

1.

The perception characteristics of the human visual system are highly complex. Our model currently captures these characteristics using gradient similarities derived from the three scales of Multi-Scale Retinex (MSR) luminance maps. However, this approach does not fully address the unique perceptual mechanisms of the human eye regarding color, structure, texture, and other factors. Future research should aim for a more comprehensive understanding of the human visual system by integrating a wider range of perceptual factors, enabling the extraction of features that are more closely correlated with perceived image quality.

-

2.

The VGG16 model effectively simulates the hierarchical processing mechanisms of the human visual system, with deep feature maps extracted from its final pooling layer offering strong representational capabilities. However, relying solely on high-level features without incorporating the characteristics of other layers may limit the full potential of VGG16’s feature extraction abilities. Future work should focus on integrating multi-layer features from VGG16 and exploring the use of more advanced CNN models to enhance the representation of image features.

-

3.

The proposed algorithm effectively assesses HDR image quality without extensive labeled data, but relies on manually extracted features. In contrast, large multimodal models (LMMs) can achieve automatic feature extraction and efficient performance in IQA, benefiting from their strengths in multimodal fusion, semantic understanding, and adaptability52,53,54. LMMs can also handle complex luminance variations and leverage zero-shot and few-shot learning, making them a promising solution for HDR IQA in future work.

-

4.

The algorithm described in this paper is implemented on a laptop equipped with an i5-12450 H CPU and 16GB of RAM. The average prediction time for each HDR image is 11.36 s, with the majority of the time spent on deep feature extraction. Using a high-performance GPU that supports parallel computing could significantly reduce this processing time, potentially enabling real-time prediction as demonstrated in55.

Conclusions

In this paper, we present a blind HDR IQA method that emulates both the low-level perception and high-level inference characteristics of the human visual system. By integrating these two types of features, the proposed method achieves efficient and accurate quality evaluation. Specifically, the luminance map of the HDR image is decomposed using MSR to generate gradient similarity maps at three different scales. These maps are then combined via the Hadamard operation to produce a visual perception map. The MSRCR-processed HDR image is subsequently fed into the VGG16 network, from which 512 deep feature maps are extracted from the final pooling layer. Finally, the visual perception map is integrated with the deep feature maps to derive quality-related features, which are used for score prediction via SVR. Experimental results on the Narwaria and Korshunov datasets demonstrate that the proposed method outperforms competing state-of-the-art algorithms.

Data availability

The data used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Wong, C. W., Su, G. M. & Wu, M. Impact analysis of baseband quantizer on coding efficiency for HDR video. IEEE Signal. Process. Lett. 23(10), 1354–1358 (2016).

Niu, Y., Wu, J., Liu, W., Guo, W. & Lau, R. W. HDR-GAN: HDR image reconstruction from multi-exposed LDR images with large motions. IEEE Trans. Image Process. 30, 3885–3896 (2021).

Panetta, K., Kezebou, L., Oludare, V., Agaian, S. & Xia, Z. TMO-net: a parameter-free tone mapping operator using generative adversarial network, and performance benchmarking on large scale HDR dataset. IEEE Access. 9, 39500–39517 (2021).

Chang, H. W., Bi, X. D. & Kai, C. Blind image quality assessment by visual neuron matrix. IEEE Signal. Process. Lett. 28, 1803–1807 (2021).

Zhai, G. & Min, X. Perceptual image quality assessment: a survey. Sci. China Inform. Sci. 63, 1–52 (2020).

Min, X., Duan, H., Sun, W., Zhu, Y. & Zhai, G. Perceptual video quality assessment: a survey. ArXiv Preprint arXiv:240203413 (2024).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004).

Wang, Z., Simoncelli, E. P. & Bovik, A. C. Multiscale structural similarity for image quality assessment. Asilomar Conf. Signals Syst. Comput. 37th 2, 1398–1402 (2003).

Zhang, L., Zhang, L., Mou, X. & Zhang, D. FSIM: a feature similarity index for image quality assessment. IEEE Trans. Image Process. 20(8), 2378–2386 (2011).

Zhang, L., Shen, Y. & Li, H. VSI: a visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 23(10), 4270–4281 (2014).

Mittal, A., Moorthy, A. K. & Bovik, A. C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 21(12), 4695–4708 (2012).

Jiang, Q. et al. Unified no-reference quality assessment of singly and multiply distorted stereoscopic images. IEEE Trans. Image Process. 28(4), 1866–1881 (2019).

Mittal, A., Soundararajan, R. & Bovik, A. C. Making a completely blind image quality analyzer. IEEE Signal. Process. Lett. 20(3), 209–212 (2012).

Sheikh, H. R. & Bovik, A. C. Image information and visual quality. IEEE Trans. Image Process. 15(2), 430–444 (2006).

Aydin, T. O., Mantiuk, R. & Seidel, H. P. Extending quality metrics to full luminance range images. Hum. Vis. Electron. Imaging XIII. 6806, 109–118 (2008).

Mantiuk, R. K. & Azimi, M. PU21: a novel perceptionly uniform encoding for adapting existing quality metrics for HDR. Picture Coding Symposium (PCS). 1–5 (2021).

Mantiuk, R. K., Kim, K. J., Rempel, A. G. & Heidrich, W. HDR-VDP-2: a calibrated visual metric for visibility and quality predictions in all luminance conditions. ACM Trans. Graph. 30 (4), 1–14 (2011).

Narwaria, M., Mantiuk, R. K., Silva, M. P. D. & Callet, P. L. HDR-VDP-2.2: a calibrated method for objective quality prediction of high-dynamic range and standard images. J. Electron. Imaging. 24 (1), 010501 (2015).

Narwaria, M., Silva, M. P. D. & Callet, P. L. HDR-VQM: an objective quality measure for high dynamic range video. Signal. Process. : Image Commun. 35(1), 46–60 (2015).

Zhang, K., Fang, Y., Chen, W., Xu, Y. & Zhao, T. A display-independent quality assessment for HDR images. IEEE Signal. Process. Lett. 29, 464–468 (2022).

Liu, Y., Ni, Z., Wang, S., Wang, H. & Kwong, S. High dynamic range image quality assessment based on frequency disparity. IEEE Trans. Circuits Syst. Video Technol. 33(8), 4435–44400 (2023).

Cao, P., Mantiuk, R. K. & Ma, K. Perceptual assessment and optimization of HDR image rendering. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR). 22433–22443 (2024).

Guan, F. et al. No-reference HDR image quality assessment method based on tensor space. IEEE Int. Conf. Acoust. Speech Signal. Process. (ICASSP). 1218–1222 (2018).

Kottayil, N. K., Valenzise, G., Dufaux, F. & Cheng, I. Blind quality estimation by disentangling perception and noisy features in high dynamic range images. IEEE Trans. Image Process. 27(3), 1512–1525 (2017).

Banterle, F., Artusi, A., Moreo, A., Carrara, F. Nor-Vdpnet: a no-reference high dynamic range quality metric trained on hdr-vdp 2. IEEE Int. Conf. Image Process. (ICIP). 126–130 (2020).

Ronneberger, O., Fischer, P. & Thomas, B. U-net: convolutional networks for biomedical image segmentation. Med. Image Comput. Comput. Assist. Interv Conf. 234, 241 (2015).

Chubarau, A., Yoo, H., Akhavan, T. & Clark, J. Adapting pretrained networks for image quality assessment on high dynamic range displays. ArXiv Preprint arXiv:240500670 (2024).

Shiri, F. M., Perumal, T., Mustapha, N. & Mohamed, R. A comprehensive overview and comparative analysis on deep learning models: CNN, RNN, LSTM, GRU. arXiv preprint arXiv:2305.17473 (2023).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature. 521(7553), 436–444 (2015).

Narwaria, M., Da Silva, M., Le Callet, P. & Pepion, R. Tone mapping-based high-dynamic range image compression: study of optimization criterion and perception quality. Opt. Eng. 52(10), 102008–102001 (2013).

Korshunov, P. et al. Subjective quality assessment database of HDR images compressed with JPEG XT. Proc. 7th Int. Workshop Qual. Multimedia Experience (QoMEX). 1–6 (2015).

Gao, F. et al. Deepsim: deep similarity for image quality assessment. Neurocomputing 257, 104–114 (2017).

Ma, X. & Jiang, X. Multimedia image quality assessment based on deep feature extraction. Multimedia Tools Appl. 79(47), 35209–35220 (2020).

Chaudhary, S., Mazumder, A., Mumtaz, D., Jakhetiya, V. & Subudhi, B. N. Perception quality assessment of DIBR synthesized views using saliency based deep features. IEEE Int. Conf. Image Process. (ICIP). 2628–2632 (2021).

Jobson, D. J., Rahman, Z. & Woodell, G. A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6(7), 965–976 (1997).

Jobson, D. J., Rahman, Z. & Woodell, G. A. Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 6(3), 451–462 (1997).

Land, E. H. An alternative technique for the computation of the designator in the Retinex theory of color vision. Proc. Natl. Acad. Sci. USA. 83(10), 3078–3079 (1986).

Marr, D. Vision: a computational investigation into the human representation and processing of visual information. (2013). https://doi.org/10.7551/mitpress/9780262514620.001.0001

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. (NIPS). 1097–1105 (2012).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Int. Conf. Learn. Represent. (ICLR). arXiv preprint arXiv:1409.1556 (2014).

Szegedy, C. et al. Going deeper with convolutions. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). 1–9 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). 770–778 (2016).

Huang, G., Liu, Z., Laurens, V. & Weinberger, K. Q. Densely connected convolutional networks. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). 2261–2269 (2017).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. Int. Conf. Learn. Represent. (ICLR). arXiv preprint arXiv:2010.11929 (2020).

Deng, J. et al. Imagenet: a large-scale hierarchical image database. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR). 248–255 (2009).

Scholkopf, B. & Smola, A. J. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond (MIT Press, 2018).

Breiman, L. Random For. Mach. Learn. 45, 5–32 (2021).

McCloskey, M. & Cohen, N. J. Catastrophic interference in connectionist networks: the sequential learning problem. Psychol. Learn. Motiv. 24, 109–165 (1989).

VQEG. Final report from the video quality experts group on the validation of objective models of video quality assessment. (2000). http://www.vqeg.org/

Mikhailiuk, A., Perez-Ortiz, M., Suen, W. & Mantiuk, R. UPIQ: unified photometric image quality dataset. Apollo Univ. Camb. Repository https://doi.org/10.17863/CAM.62443 (2020).

Zhu, H. et al. Adaptive image quality assessment via teaching large multimodal model to compare. ArXiv Preprint arXiv:240519298 (2024).

Cao, Y., Min, X., Gao, Y., Sun, W. & Zhai, G. AGAV-rater: adapting large multimodal model for AI-generated audio-visual quality assessment. ArXiv Preprint arXiv:250118314 (2025).

Chen, C. et al. Q-ground: image quality grounding with large multi-modality models. Proc. 32nd ACM Int. Conf. Multimedia. 486–495 (2024).

Banterle, F. et al. Nor-vdpnet++: real-time no-reference image quality metrics. IEEE Access. 11, 34544–34553 (2023).

Author information

Authors and Affiliations

Contributions

D. W. was responsible for methodology development, data collection, data analysis, writing the original draft, revising the manuscript, and overseeing the overall project management. X. J. was in charge of the primary research conceptualization and design, conducting validation, and contributing to manuscript review and editing. Q. Y. led the design and execution of experiments, assisted in visualizing results, and contributed to manuscript review and editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wan, D., Jiang, X. & Yu, Q. Blind HDR image quality assessment based on aggregating perception and inference features. Sci Rep 15, 10808 (2025). https://doi.org/10.1038/s41598-025-94005-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-94005-1