Abstract

The air jet loom is an energy-intensive machine, so reducing the air consumption of its relay nozzles significantly lowers the energy demand of the air compressor. This paper proposes a Convolutional Neural Network (CNN)-Attention regression model to predict the air consumption of the relay nozzle, with accuracy and efficiency enhanced by an Improved Football Team Training Algorithm (IFTTA). We first present the architecture of the CNN-Attention model for predicting the air consumption of relay nozzles. The hyperparameters of the CNN-Attention model are then tuned automatically using the IFTTA, which imitates the collaboration in football team training. Finally, experimental validation is performed. The IFTTA-CNN-Attention model achieves the lowest mean absolute error (MAE) of 0.8686 and root mean square error (RMSE) of 1.1027, and the highest coefficient of determination (R2) of 0.9941. An in-depth analysis of the predicted data reveals that the outlet diameter is the most sensitive factor affecting the airflow rate, followed by the inlet diameter and cone angle of the relay nozzle. These findings contribute to optimizing the design of relay nozzles, resulting in lower electricity usage and environmental impact in the textile industry.

Introduction

Modern looms play a pivotal role in textile engineering by inserting the weft into the warp. Among the various loom types (rapier looms, water-jet looms, and air-jet looms), the air jet loom stands out for its remarkable efficiency, automation and adaptability to various fabrics1. Its weft insertion system involves key components such as the main nozzle and the relay nozzles alongside a profiled reed. The weft insertion process demands a continuous supply of compressed air from an air compressor to propel the weft through the warp shed. In particular, multiple relay nozzles along the profiled reed operate alternately. Typically, an air jet loom consumes 40–100 m3/h of air at 0.6 MPa pressure, varying with fabric type and weft insertion speed2. This compressed air usage and the associated electricity costs contribute significantly to the overall manufacturing expenses and carbon dioxide emissions of a textile factory. For instance, a textile company operating 202 looms faces substantial electricity bills and carbon emissions3.

The air jet loom is an intermittent-operation weaving machine that consumes the most energy in a textile factory, and reducing its air consumption is a challenge. To address this, researchers have focused on optimizing the air consumption of air jet looms. Adanur4 pioneered this work, highlighting the need to maintain the pressure of the main and relay nozzles to minimize air usage. Göktepe and Bozkan5 furthered this point, emphasizing the importance of reducing the drive time of the solenoid valves in the air jet loom to obtain high production rates. Other studies have looked into critical factors influencing air consumption, such as blowing duration, nozzle structure and weft arrival time. Haq et al.6 analyzed these factors and provided insights into their impact on air usage. Hussian et al.7 further explored the air consumption patterns of weft insertion systems, considering variables such as air pressure, valve drive time and profiled reed configuration. Jabbar et al.8 employed response surface analysis to identify the key factors affecting compressed air consumption in air jet looms. Zegan et al.9 optimized air pressure and loom speed using a similar method, demonstrating the importance of such optimization for energy conservation. Hossain et al.10 provided a comprehensive analysis of the factors contributing to air usage in air jet looms and offered strategies for mitigating them. They highlighted that relay nozzle positioning, specifically its height and angle, plays a crucial role in reducing air pressure during the weft insertion process. Innovative relay nozzle designs have also shown promising results. Grassi et al.11 developed a convergent relay nozzle that could save up to 30% of the energy in an air jet loom. Khiani et al.12 furthered this research, studying the impact on air consumption of factors such as the distance between air jet looms, the number of joints, and the pressure of the main and relay nozzles. Girase et al.13 also emphasized the importance of adjusting the air pressure and operation time of the relay valves to increase weft insertion efficiency and minimize energy consumption. Salama et al.14 proposed a strategy that adjusts both the air pressure and the blowing time of the valves to optimize energy savings. Existing prediction techniques for air consumption in air jet looms rely primarily on traditional statistical models. However, these techniques often suffer from limited accuracy and require large sample sizes. Further research should explore innovative designs and more advanced machine learning or deep learning techniques to improve the accuracy and efficiency of air jet looms.

With the accelerated evolution of computational fluid dynamics (CFD) methods, researchers have been actively exploring ways to enhance weft insertion efficiency and decrease the air consumption of relay nozzles. Adámek et al.15 pioneered this work by optimizing the exit shape of the relay nozzle. Belforte et al.16 analyzed the airflow patterns from relay nozzles with varying emission cross-sections. Delcour et al.17 studied the transient characteristics of the jet generated by relay nozzles, further refining this understanding. Li et al.18 demonstrated that a star-shaped relay nozzle offered superior performance in terms of air consumption, airflow clustering, and weft stability compared to traditional shapes. Xiao et al.19 designed a new tri-elliptical center-array relay nozzle that boosted the axial velocity while mitigating the fluctuation amplitude. Additionally, multiple loom manufacturers have released new relay nozzle designs aiming to minimize air consumption. Notably, Picanol20 designed a 16-hole relay nozzle to minimize air usage. Despite these advancements, CFD simulations are often time-consuming, and exploring the nonlinear relationship between air consumption and the structural parameters of the relay nozzle remains a challenge.

Artificial intelligence (AI) has emerged as an innovative tool in industry, leading to significant efficiency gains and energy conservation. Pereira et al.21 examined the application of artificial intelligence in the textile sector, introducing a fresh perspective on artificial neural network (ANN) models for predicting fabric quality. Elkateb22 proposed an ANN to forecast mechanical properties such as tensile strength, bending stiffness and elongation in plain woven fabric. This model used four input variables: weft density, yarn count, polyester fiber blend ratio and cotton fiber blend ratio. Almetwally et al.23 used a distinct ANN to predict twist loss in woven yarns produced by air jet looms. Azevedo et al.24 went a step further, introducing multiple machine learning models to forecast faults during fabric production on air jet looms. They focused specifically on loom downtime caused by weft breaks, warp breaks, and yarn bursts, analyzing these factors using three regression models. Despite the promise of AI methods, the accuracy of these machine learning models hinges on carefully selected parameters and an adequate sample size. Future research should aim to optimize these models to ensure reliable predictions and further enhance efficiency and energy conservation in the air jet loom industry.

Enhancing prediction accuracy has been a key focus across various disciplines, leading to the development of advanced deep learning models. Convolutional Neural Networks (CNN), Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) neural networks have emerged in this pursuit25,27. Within deep learning, the CNN is primarily used for image recognition and natural language processing. Cong and colleagues25 have extensively explored CNN models in various domains, covering aspects such as fast computation, regularization, activation functions, loss functions, layer design, and optimization strategies. Similarly, Yao et al.26 presented an LSTM regression model optimized with the Whale Optimization Algorithm (WOA) to predict the deformation behavior of surrounding rock formations. Furthermore, Mirzavand et al.27 introduced an interpretable GRU model tailored for forecasting air pollution levels, leveraging techniques such as Layer-wise Relevance Propagation (LRP) to streamline training and reduce complexity. Despite its effectiveness in processing large datasets, a CNN may struggle to capture features from small samples. Combining a CNN with an attention mechanism can identify salient features in the input parameters and improve accuracy. Liu et al.28 integrated CNN and LSTM architectures with an attention mechanism to estimate nonlinear uncertain systems. Similarly, Guan et al.29 developed a hybrid CNN-Attention regression model with the Grasshopper Optimization Algorithm (GOA) to enhance accuracy. Wang et al.30 proposed a hybrid CNN-LSTM network for accurately predicting the thermal performance of green roofs. Pan et al.31 integrated CNN, LSTM, and a self-attention mechanism. These hybrid models have outperformed their single-model counterparts. However, they require human expertise and intensive effort to tune the hyperparameters so as to ensure relatively high accuracy.

In general, success in tuning hyperparameters depends on the choice of an appropriate optimization algorithm. Researchers have developed various optimization algorithms to obtain the best hyperparameters of deep learning models, such as Particle Swarm Optimization (PSO)32, Grey Wolf Optimization (GWO)33, the Whale Optimization Algorithm (WOA)34, the Red-billed Blue Magpie Optimizer (RBMO)35, and Bayesian optimization (BO)36. PSO is a well-known population-based algorithm in which particles within a swarm collectively explore and exploit the search space to find optimal solutions. Its limitations are becoming trapped in local optima and premature convergence. Singh et al.37 proposed an improved PSO (IPSO) algorithm to find the best hyperparameters of the CNN model simultaneously. Aljohani et al.38 combined a CNN and an attention mechanism with a Grey Wolf Optimization (GWO) algorithm to distinguish between normal and abusive content in Arabic. Bayesian optimization (BO) is an iterative, model-based optimization algorithm that is sample-efficient but costly in computation time. Zhang et al.39 proposed a hybrid deep learning model and optimized its parameters using the BO algorithm. One of the main challenges in applying BO to real-world hyperparameter tuning of deep learning models is specifying the prior: even for experts with relevant experience, it is hard to pin down exactly what this prior looks like. Recently, a Football Team Training Algorithm (FTTA) was developed that simulates the training phases of football players and has a wide range of applications, such as path planning40. The FTTA offers simplicity, high efficiency, and fast search speed. However, it often suffers from low convergence accuracy and insufficient global optimization capability for complicated deep learning models41. Thus, the reviewed optimization algorithms often suffer from low convergence accuracy and insufficient global optimization capability for complicated hybrid models.

To cope with the above problems, this work combines a CNN-Attention model with an improved football team training optimizer to accurately predict the air consumption of the relay nozzle in an air jet loom. The contribution of this research is three-fold. (1) A hybrid CNN-Attention model is proposed for accurately predicting the air consumption of the relay nozzle in an air jet loom. The CNN layer retrieves spatial features from the input data, while the self-attention mechanism captures the correlation between features. (2) To establish an automatic hyperparameter tuning framework for deep learning, an improved football team training optimizer (IFTTA) is integrated into the CNN-Attention model. A small sample is used for the regression analysis, which reduces training time without reducing prediction accuracy. (3) The effectiveness and robustness of the IFTTA-CNN-Attention model are compared with those of the IPSO-, Bayesian- and FTTA-optimized CNN-Attention models. The experimental results show that the IFTTA-CNN-Attention model has superior accuracy and robustness with the least computation time for the air consumption estimation task. The IFTTA-CNN-Attention technique can be extended to other industrial disciplines.

Overall set-up of experimental apparatus

In air jet weaving technology, the relay nozzle plays a crucial role in maintaining the speed of the weft yarn to ensure smooth weaving. Specifically, the elliptical auxiliary nozzle is designed to improve insertion efficiency and prevent disturbance of the weft in the profiled reed. The structural parameters of the relay nozzle affect air consumption during the weaving process. Figure 1 illustrates the structure of the relay nozzle, highlighting key parameters such as the major axis d1 (mm) and minor axis d2 (mm) of the elliptical holes, the inlet diameter D1 (mm), the straight pipe diameter D2 (mm), the cone angle α (°), and the rotation angle β (°). These parameters are optimized to minimize air consumption in the air jet loom. 3D printing has revolutionized manufacturing by enabling the rapid creation of complex structures without molds. Figure 1 also depicts five types of 3D-printed relay nozzles, numbered E1, E2, E3, E4 and E5.

In the weft insertion system, the primary measure of air consumption is the flow rate through a relay nozzle. Reducing the flow rate of the relay nozzle makes it possible to decrease air and electricity usage in air jet looms. The growing number of sensors in use has enabled the gathering of extensive airflow rate data, which can then be used to predict air consumption with deep learning models. This study employed a MEMS flow sensor to precisely measure the volume of air passing through the relay nozzles. A comprehensive analysis was conducted using 120 relay nozzles as training samples for deep learning. The experimental setup consists of a Siemens LMS data acquisition system (SCADAS), a MEMS flow sensor (model FS4008), assorted 3D-printed relay nozzles featuring elliptical exits, a pressure gauge, and a pump. The data acquisition software was Simcenter Testlab version 2021 (https://plm.sw.siemens.com/en-US/simcenter/physical-testing/testlab/). All experimental devices are shown in Fig. 2.

The Siemens LMS data acquisition system collects data from the MEMS sensor and converts the analogue voltage signals into digital signals. To ensure accuracy, an air pump was used to generate high-pressure air, which is monitored by a pressure gauge at the relay nozzle inlet to maintain the operating pressure at 0.3 MPa. At the exit of the relay nozzle, a single MEMS mass flow sensor is mounted to guarantee high precision and swift response during real-time data acquisition. In the Siemens LMS data acquisition setup for the MEMS sensor, the channel setup includes the configuration of the channel group ID, and the first channel group is used to acquire analogue signals. The measurement direction of the sensor is set to “+\(x\)”, which means that the flow rate is only measured in the positive \(x\) direction. In the Tracking Setup, the Tracking Duration is set to 10 s, meaning that the system tracks and records data for 10 s. In the Acquisition Setup, the Bandwidth is set to 1024 Hz to capture subtle signal variations and ensure high time resolution. These settings ensure that the MEMS mass flow sensor can measure flow data accurately and in real time, while the data acquisition system provides sufficient bandwidth and time resolution to accommodate the flow rate fluctuations that may be encountered in the experiment.

The voltage output of the sensor ranges from 0.5 V to 4.5 V, and the flow rate ranges from 0 to 40 L/min. The analogue voltage was converted into an air flow rate (L/min). The relationship between the analogue voltage signal and the airflow rate of the relay nozzle is defined as follows:

where V (V) is the output analogue voltage of the MEMS transducer and Q (L/min) is the measured air flow rate.
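As a minimal sketch of this conversion, the snippet below assumes the sensor response is linear across the stated ranges (0.5 to 4.5 V mapped onto 0 to 40 L/min). The linearity, the function name `voltage_to_flow` and the range check are assumptions made for illustration, not the exact equation used in this study.

```python
def voltage_to_flow(v, v_min=0.5, v_max=4.5, q_max=40.0):
    """Map an analogue voltage (V) to a flow rate (L/min).

    Assumes a linear sensor response between (v_min, 0) and (v_max, q_max);
    this is an illustrative assumption, not a calibrated sensor model.
    """
    if not v_min <= v <= v_max:
        raise ValueError(f"voltage {v} V is outside the range {v_min}-{v_max} V")
    return (v - v_min) / (v_max - v_min) * q_max


if __name__ == "__main__":
    for volts in (0.5, 2.5, 4.5):
        print(volts, "V ->", voltage_to_flow(volts), "L/min")
```

Under this assumption, each volt above the 0.5 V offset corresponds to 10 L/min.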

The computer utilizes the voltage signal from the MEMS sensors to calculate the flow rate through the relay nozzle. Following measurement of the flow rate for each 3D printed relay nozzle, machine learning techniques are applied to analyze and predict air consumption of relay nozzle.

Methodology

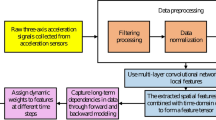

A novel approach was used to predict the air consumption of the relay nozzle, combining a convolutional layer with an attention mechanism. The architecture comprises a data processing unit, a CNN unit and an attention mechanism. Input features are extracted by the CNN unit, while the self-attention mechanism assigns adaptive weights to these features. The final layer of the CNN-Attention model outputs the predicted results. The hyperparameters of the model were tuned automatically using an improved football team training algorithm, resulting in high accuracy.

Convolutional neural network

The primary function of a convolutional neural network (CNN) is to extract important features from input data through a series of convolutional and pooling layers, followed by a fully connected layer. This process relies on weight sharing and filters to derive valuable information from raw data. The CNN model here consists of multiple one-dimensional convolutional (1D-CNN) layers. The pooling layer compresses the data and removes unnecessary information. The fully connected layer aggregates the features to obtain the prediction result. Despite its effectiveness in processing large datasets, a CNN may struggle to capture features from small samples. To overcome this limitation, a self-attention mechanism is integrated into the CNN model, allowing it to focus on key features and improve its prediction accuracy.

Self-attention mechanism

The attention mechanism operates much like human attention, emphasizing important areas while reducing attention to less relevant ones. By assigning greater weight to crucial information and less weight to less important details, it sharpens the focus on valuable data. The self-attention mechanism is an advancement of the attention mechanism: it reduces the reliance on external information by prioritizing the internal correlations within the input features. It can improve accuracy by using a probability distribution over the key information, making up for the information loss incurred in the CNN layer. Figure 3 illustrates the weight matrix of the self-attention mechanism for the input channels. The self-attention mechanism is incorporated into the fully connected layers, enabling dynamic adjustment of the significance of each feature. A single attention head is used. The embedding dimension equals the output dimension of the last CNN layer, which is obtained automatically by an optimization algorithm such as Bayesian optimization, IPSO, or FTTA.

In this section, the self-attention weights are calculated using a scaled dot-product attention layer to process information and allocate relevance. The formula is as follows:

where \(Q, K \in R^{n \times d_{k}}\) and \(V \in R^{n \times d_{v}}\) are matrices, \(h\) is the input, \(A_{attn}(h)\) is the result after attention weighting, and \(\sqrt{d_{k}}\) is a scaling factor. The scaling keeps the dot product of \(Q\) and \(K\) from growing too large, which ensures that the attention remains a soft distribution.
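The scaled dot-product attention described above can be sketched in a few lines of plain Python. This uses the standard form softmax(QK^T / sqrt(d_k)) V and is meant only as an illustration; the helper names `softmax` and `scaled_dot_product_attention` are hypothetical, and the learned projections that produce \(Q\), \(K\) and \(V\) from the input \(h\) are omitted.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V.

    Q and K are n x d_k nested lists, V is an n x d_v nested list.
    """
    scale = math.sqrt(len(K[0]))
    weights = []
    for q in Q:
        # Similarity of this query with every key, divided by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in K]
        weights.append(softmax(scores))
    d_v = len(V[0])
    output = [
        [sum(w * v[c] for w, v in zip(w_row, V)) for c in range(d_v)]
        for w_row in weights
    ]
    return output, weights


if __name__ == "__main__":
    Q = [[1.0, 0.0], [0.0, 1.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[1.0, 2.0], [3.0, 4.0]]
    out, w = scaled_dot_product_attention(Q, K, V)
    print(w)    # each row sums to 1
    print(out)  # each row is a weighted average of the rows of V
```

Each row of the weight matrix is a probability distribution, so every output row is a convex combination of the value vectors.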

A hybrid CNN-Attention model was created to improve the robustness and accuracy of air consumption prediction with small sample sizes. Figure 4 depicts the architecture of the CNN-Attention model, which is made up of five parts: input layer, CNN layer, attention layer, fully connected layer and output. The input layer is one-dimensional data consisting of six structural features of the relay nozzle. Latin hypercube sampling was used to randomly sample the structural features of the relay nozzle, including inlet diameter \(D1\), straight pipe diameter \(D2\), major axis \(A1\), minor axis \(A2\) and cone angle \(\alpha\). The output parameter is the air flow rate of the relay nozzle. The fully connected layer contains numerous neurons and is therefore susceptible to overfitting. To prevent this, a dropout layer was introduced, which reduces the correlation between neurons by randomly deactivating some of them. This method boosts generalization capability while reducing the risk of overfitting.
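The Latin hypercube sampling step mentioned above can be illustrated with a short, self-contained sketch. The function name `latin_hypercube` and the parameter bounds in the example are hypothetical placeholders chosen for illustration; they are not the ranges used in this study.

```python
import random


def latin_hypercube(n_samples, bounds, seed=0):
    """Draw n_samples points so that each dimension is stratified.

    bounds is a list of (low, high) pairs, one per feature. Each feature
    range is split into n_samples equal strata and exactly one point is
    drawn from every stratum; strata are then shuffled independently per
    feature to pair them at random across dimensions.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        points = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(points)
        columns.append([low + p * (high - low) for p in points])
    # Transpose from per-feature columns to per-sample rows.
    return [list(row) for row in zip(*columns)]


if __name__ == "__main__":
    # Hypothetical bounds: two diameters in mm and a cone angle in degrees.
    example_bounds = [(3.0, 5.0), (2.0, 4.0), (5.0, 15.0)]
    for sample in latin_hypercube(5, example_bounds):
        print([round(value, 3) for value in sample])
```

Compared with plain uniform sampling, this stratification spreads a small number of samples more evenly over each feature range, which suits the small-sample setting described here.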

Football team training algorithm for optimization hyperparameters of models

In deep learning, hyperparameters are set prior to network training. The choice of hyperparameters directly affects the network's performance and has a significant influence on the quality of the regression model. We used an improved football team training algorithm (IFTTA) for hyperparameter tuning. It builds on the football team training algorithm (FTTA), a novel optimization algorithm proposed by Tian et al.40. The FTTA simulates the players' activities during a football training session. In general, a football training session is divided into three phases: collective training, group training and extra individual training.

Collective training

During the initial stage of collective training, the players work as a group under the supervision of the coaches. The players are divided into four categories: followers, discoverers, thinkers and volatilities. In each iteration, each player's role may change randomly, which keeps the team dynamic and versatile. The characteristics and contributions of each player type are essential to achieving the goal of this stage. The equations describing the four types of players are defined as follows:

where \(F_{i}^{k}\) represents the current player, \(k\) is the current iteration number, \(i\) is the player number, and \(F_{i,j}^{k}\) is the player's value in dimension \(j\). The current best and worst players are defined as \(F_{Best}^{k}\) and \(F_{Worst}^{k}\), respectively, \(F_{i,j}^{k} new\) is the state of the player in dimension \(j\) after training, and \(t(k)\) is the \(t\)-distribution function.

Group training

Following collective training, the team enters group training, where the players are divided into Strikers, Midfielders, Defenders and Goalkeepers. The coach's decision-making process is simulated through the MGEM adaptive clustering method (MixGaussEM). This method organizes the team members and maximizes each player's capabilities within their respective position. With this classification, the team can execute its plan for optimal success on the field. The specific classification form is as follows:

Next, group training involves three states: Optimal learning, Random learning, and Random communication. Each state is determined by the learning probability Pstudy and the communication probability Pcomu, with players selecting states randomly during each training. The formulas for each state are defined as:

where \(F_{Random}^{k,team_{l}}\) is a random player in group \(l\), \(team_{l}\) represents group \(l\), \(F_{Random,j}^{k,team_{l}}\) is the value in dimension \(j\) of a random player in group \(l\), and \(rand\) is a normally distributed random number.

During the training procedure, players may unintentionally learn values in other dimensions from their peers. Although the possibility of such errors is low, they do occur. The probability of error is defined as Perror:

Extra individual training

Following group training, extra individual training is needed to further enhance each player's performance. The fitness function is recalculated, and a better fitness value replaces a poorer one to update the player's overall state. Once updated, the coach selects the top player to practice and lead group practice sessions, thereby raising the training standard for all team members. Updating a player's state involves an evaluation process aimed at maximizing the player's potential and facilitating overall team success. The player's state update formula is defined as:

where \(k\) is the iteration number, and Gauss and Cauchy denote common mutation operators with different search ranges. Cauchy mutation has a larger range than Gaussian mutation, which can cause it to miss the optimal solution because of large step-size jumps. Gaussian mutation, on the other hand, is more effective in smaller ranges, as it generates smaller fluctuations that enhance its local search capability.

Improved football team training optimization algorithm

The FTTA suffers from low convergence accuracy and insufficient global optimization capability in the later stages of iteration. To address this, an improved football team training algorithm (IFTTA) is proposed with enhancements for more effective global optimization. Initially, the players have a lower skill level, and the IFTTA gives top players a probability of achieving significant improvement. The IFTTA introduces the Golden Sine strategy in collective training and uses an adaptive t-distribution during extra individual training, preventing the FTTA from getting stuck in local optima. By incorporating the Golden Sine operator and the adaptive t-distribution mutation, the IFTTA strikes a balance between global search and local exploration. During training, an individual represents a vector of CNN-Attention hyperparameters. The IFTTA uses collective training as its fundamental idea, emphasizing mutual learning and imitation within the population. By leveraging information from the best- and worst-performing individuals, the IFTTA continuously adjusts individual positions to guide the population towards the optimal solutions. This collaborative approach enables exploration of the solution space. The general flow chart of the IFTTA-CNN-Attention model is illustrated in Fig. 5.

Figure 6 shows a schematic diagram of how the IFTTA simulates a football team's training session. At the start of the session, the players do collective training under the supervision of the coaches. All players are divided into four categories: Followers, Discoverers, Thinkers and Volatilities. In each iteration, volatilities randomly swap their locations using the Golden Sine operator. During collective training, the various types of players gravitate toward the best value, as shown in Fig. 6a. Following collective training, the process reaches the stage of group training, which is divided into three states: optimal learning, random learning and random communication.

Group training is illustrated in Fig. 6b. In the optimal learning state, the players directly learn the ability value of the best player in the group with a dynamic probability Pstudy. In the random learning state, a player learns the ability value of a random player in the group with the dynamic probability Pstudy. In the random communication state, players communicate with any other player in the group according to the dynamic communication probability Pcomu. An error may arise in which players mistakenly learn the contents of other dimensions; its probability is defined as Perror. In the final stage, the IFTTA employs t-distribution mutation to update the players' states during extra individual training.

Collective training

The Golden Sine operator was developed to improve global exploration ability by incorporating the golden ratio coefficient and the sine function. Following the mathematical principle of the Golden Sine operator, players are guided to refined positions within reduced search spaces, which promotes the efficiency and accuracy of the search agents. The formula of the Golden Sine operator is defined as:

where \(\tau =\left(\sqrt{5}-1\right)/2\) is the golden ratio, \({r}_{1}\) is a random number within the range \([0,2\pi ]\), \({r}_{2}\) is a random number within the range \([0,\pi ]\), and \({x}_{1},{x}_{2}\) are golden ratio coefficients that limit the search space of individuals, guiding players towards a better value.
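As a hedged illustration of the operator described above, the sketch below follows the commonly published Golden Sine update, x_new = x·|sin r1| − r2·sin r1·|x1·best − x2·x|, with x1 and x2 obtained by a golden-section split of [−π, π]. This standard form is assumed here and may differ in detail from the variant used in this work; the names `TAU`, `X1`, `X2` and `golden_sine_update` are hypothetical, as is the choice to draw r1 and r2 once per player.

```python
import math
import random

TAU = (math.sqrt(5) - 1) / 2              # golden ratio coefficient, about 0.618
X1 = -math.pi + (1 - TAU) * 2 * math.pi   # golden-section points of [-pi, pi]
X2 = -math.pi + TAU * 2 * math.pi


def golden_sine_update(position, best, rng=random):
    """Move a player towards the best player with a Golden Sine step.

    position and best are equal-length lists of floats; rng is any object
    that provides uniform(a, b).
    """
    r1 = rng.uniform(0.0, 2.0 * math.pi)
    r2 = rng.uniform(0.0, math.pi)
    return [
        x * abs(math.sin(r1)) - r2 * math.sin(r1) * abs(X1 * b - X2 * x)
        for x, b in zip(position, best)
    ]


if __name__ == "__main__":
    random.seed(0)
    print(golden_sine_update([0.2, -0.5, 1.0], [0.0, 0.1, 0.8]))
```

Because x1 and x2 are fixed by the golden section, the only random quantities per update are r1 and r2, which keeps the operator cheap to evaluate.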

Group training

During the group training phase, the IFTTA incorporates dynamic learning rates Pstudy and Pcomu that decay linearly with the current iteration count relative to the maximum iteration count. This gradual decrease in learning rate allows efficient exploration of the solution space in the initial stages and precise fine-tuning in later stages. By preventing premature convergence, the IFTTA can navigate complex optimization problems more effectively. Ultimately, this adaptive approach helps the FTTA converge quickly on optimal solutions. The formula of the dynamic learning rates is as follows:

where \(Iter\) is the current iteration number and \(Max\_iteration\) is the maximum iteration number.
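A linear decay of this kind can be sketched as follows. The start and end values in the example (0.8 and 0.2) are purely hypothetical, since the initial probabilities are not restated here, and the function name `linear_decay` is illustrative.

```python
def linear_decay(p_start, p_end, iteration, max_iteration):
    """Linearly interpolate a probability from p_start to p_end.

    iteration runs from 0 to max_iteration; the start and end values
    are assumptions supplied by the caller.
    """
    fraction = iteration / max_iteration
    return p_start + (p_end - p_start) * fraction


if __name__ == "__main__":
    max_iteration = 100
    for it in (0, 25, 50, 75, 100):
        p_study = linear_decay(0.8, 0.2, it, max_iteration)  # hypothetical range
        print(it, round(p_study, 3))
```

A larger probability early on encourages learning from other players (exploration), while the smaller value near the end favours fine-tuning of the current solution.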

Integrating this strategy improves the efficiency of the FTTA by balancing exploration and exploitation. It prevents the FTTA from getting stuck in the early stages because of inadequate learning rates, while enabling it to fine-tune with lower learning rates as it progresses.

Extra individual training

After completing group training, the IFTTA conducts extra individual training. Because the FTTA tends to fall into local convergence, a mutation operation is implemented to escape local traps. Extra individual training updates the player's position with an adaptive t-distribution mutation, which helps improve overall performance and optimization outcomes. The t-distribution mutation is defined as follows:

where \(t\left(\alpha \right)\) is a t-distribution function with the non-linear inertia weight \(\alpha\) as its degrees of freedom, \({F}_{Best}^{k}initial\) is the search agent location at initialization, \({F}_{Best}^{k}old\) is the optimal search agent location for an individual before the kth iteration, and \({F}_{Best}^{k}new\) is the optimal search agent location for an individual after the kth iteration.

The non-linear inertia weight \(\alpha\) adjusts the probability density function of the t-distribution, controlling the search step length of individuals in each iteration. This adjustment affects both the global search capability and the convergence speed. The formula of the non-linear inertia weight \(\alpha\) is as follows:

where \(m\) is a positive integer, Iter is the iteration number for training, and SearchAgents is the population size. Adjusting the value of \(m\) amplifies the function output. Additionally, the non-linear inertia weight \(\alpha\) helps improve the accuracy of the FTTA.

The IFTTA employs t-distribution mutation in extra individual training, which requires boundary control to ensure that the positions remain within reasonable limits. In the early iterations, the t-distribution mutation behaves like Cauchy mutation, facilitating global exploration. As the number of iterations increases, however, it becomes difficult for players to improve their abilities. In the later iterations, the t-distribution mutation behaves more like Gaussian mutation, leading to reduced fluctuation and enhanced local search ability.
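The transition from Cauchy-like to Gaussian-like behaviour can be checked numerically with a small sketch. It draws Student-t samples as a standard normal divided by the square root of a scaled chi-square variable, and applies a commonly used mutation form, x + x·t(df), followed by clamping to the bounds. This mutation form, the function names and the bounds are assumptions for illustration; the exact update and the schedule of \(\alpha\) used in this work are not reproduced.

```python
import math
import random


def student_t(df, rng):
    """Draw one Student-t sample with df degrees of freedom."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    return z / math.sqrt(chi2 / df)


def t_mutation(position, df, lower, upper, rng):
    """Perturb each coordinate by x * t(df), then clamp to [lower, upper]."""
    mutated = []
    for x, lo, hi in zip(position, lower, upper):
        candidate = x + x * student_t(df, rng)
        mutated.append(min(max(candidate, lo), hi))  # boundary control
    return mutated


def tail_fraction(df, n=5000, threshold=3.0, seed=1):
    """Fraction of samples with |t| above threshold (heavier tail, larger value)."""
    rng = random.Random(seed)
    return sum(abs(student_t(df, rng)) > threshold for _ in range(n)) / n


if __name__ == "__main__":
    print("df=1  (Cauchy-like):  ", tail_fraction(1.0))
    print("df=50 (Gaussian-like):", tail_fraction(50.0))
    print(t_mutation([0.5, -1.0], 1.0, [-2.0, -2.0], [2.0, 2.0], random.Random(0)))
```

With one degree of freedom the t-distribution coincides with the Cauchy distribution, so large jumps are frequent; as the degrees of freedom grow, the samples concentrate near zero and the mutation becomes a fine local perturbation.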

Moreover, the hyperparameters of the CNN-Attention model, such as the learning rate, convolutional kernel size, and number of convolutional kernels, are encoded as individuals in the IFTTA algorithm and optimized. The fitness function of IFTTA is the root mean square error (RMSE) between the predicted and actual values. In this way, the IFTTA algorithm improves the overall performance and prediction accuracy of the model.

Evaluation indicators

Deep learning studies usually employ statistical metrics to quantitatively evaluate the effectiveness and accuracy of a model. Here, the root mean square error (RMSE), mean absolute error (MAE), Theil's inequality coefficient (TIC), coefficient of determination (R2), and correlation coefficient (CR) were computed to evaluate the accuracy of the proposed models. The TIC is also used to verify the error between the fitted and real values. These metrics provide a quantitative assessment of each model's accuracy and performance and make it possible to find the best hyperparameters of the CNN-Attention models. They are expressed as follows:

where \(\hat{y}_{i}\) is the predicted value, \(\overline{Y}_{i}\) is the mean of the predicted flow rates, \(y_{i}\) is the actual air flow rate, \(\overline{y}_{i}\) is the mean of the actual values, and \(N\) is the number of observations in the training and testing sets. The smaller the RMSE, MAE, and TIC, the higher the accuracy of the model. Conversely, the closer R2 and CR are to 1, the more accurate the prediction.
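
As a concrete reading of these five indicators, the following sketch computes them with the textbook definitions. It assumes the common bounded form of Theil's inequality coefficient (RMSE divided by the sum of the root mean squares of the predicted and actual series), R2 as one minus the ratio of residual to total sum of squares, and CR as the Pearson correlation; the exact expressions used in the paper may differ. The function name `regression_metrics` and the sample numbers are illustrative only.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return RMSE, MAE, TIC, R2 and CR for two equal-length sequences."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - a for a, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    mean_pred = sum(y_pred) / n

    sse = sum(e * e for e in errors)
    rmse = math.sqrt(sse / n)
    mae = sum(abs(e) for e in errors) / n

    # Theil's inequality coefficient in its common bounded (0 to 1) form.
    tic = rmse / (math.sqrt(sum(p * p for p in y_pred) / n)
                  + math.sqrt(sum(a * a for a in y_true) / n))

    # Coefficient of determination: 1 - SS_res / SS_tot.
    ss_tot = sum((a - mean_true) ** 2 for a in y_true)
    r2 = 1.0 - sse / ss_tot

    # Pearson correlation between actual and predicted values.
    cov = sum((a - mean_true) * (p - mean_pred) for a, p in zip(y_true, y_pred))
    ss_pred = sum((p - mean_pred) ** 2 for p in y_pred)
    cr = cov / math.sqrt(ss_tot * ss_pred)

    return {"RMSE": rmse, "MAE": mae, "TIC": tic, "R2": r2, "CR": cr}


if __name__ == "__main__":
    actual = [15.0, 22.4, 22.6, 40.6]     # made-up flow rates in L/min
    predicted = [15.5, 21.9, 23.1, 39.8]  # made-up predictions for illustration
    for name, value in regression_metrics(actual, predicted).items():
        print(f"{name}: {value:.4f}")
```

The function assumes that neither series is constant, since R2 and CR are undefined when the total or predicted sum of squares is zero.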

Results and discussion

This section describes the dataset in detail and reports the evaluation metrics of the models. The proposed method was implemented on a PC running Windows 10 with an Intel(R) Core(TM) i5-9300H CPU @ 2.40 GHz and 32 GB RAM. The regression model was developed in Python 3.9 using Anaconda and the PyCharm development tools. The PyTorch deep learning framework and the Keras library were used in the investigation.

The airflow rate experiments were conducted in a laboratory at a constant temperature of 28 °C and a humidity of \(36\%\). At least 120 types of 3D-printed relay nozzles were measured with a Siemens LMS data acquisition system. Of the data, 70% were designated for training, 10% for validation, and 20% for testing the CNN-Attention network model. After measurement, the raw voltage data were filtered with a rectangular window function to remove both abrupt and prolonged oscillations. For each relay nozzle, the measurement was repeated five times and the average value was taken. Figure 1 shows the E1, E2, E3, E4 and E5 type relay nozzles, which are designated as No.2, No.4, No.9, No.11 and No.12, respectively. The output voltages of 1.98 V, 2.71 V, 2.08 V, 2.60 V and 3.19 V were converted into flow rates and are listed in Table 1.
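
The averaging of repeated measurements and the 70/10/20 partition can be sketched as follows. The sketch assumes a seeded random shuffle before splitting, which the text does not specify, and the helper names `average_repeats` and `split_indices` as well as the sample voltages are hypothetical.

```python
import random
import statistics


def average_repeats(readings):
    """Average the repeated voltage readings of one relay nozzle."""
    return statistics.fmean(readings)


def split_indices(n_samples, train=0.7, val=0.1, seed=0):
    """Shuffle sample indices and split them into train/validation/test lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # assumed: random assignment of samples
    n_train = round(train * n_samples)
    n_val = round(val * n_samples)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])


if __name__ == "__main__":
    # Five made-up repeated readings (in volts) for a single nozzle.
    print(average_repeats([1.97, 1.99, 1.98, 1.98, 1.98]))
    train_idx, val_idx, test_idx = split_indices(120)
    print(len(train_idx), len(val_idx), len(test_idx))
```

Whatever remains after the training and validation shares is assigned to the test set, so the three subsets always cover every sample exactly once.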

As seen in Table 1, a comparison of the relay nozzles reveals that the airflow rate increases dramatically with increasing outlet diameter. The airflow rate of the No.2 relay nozzle is 14.96 L/min. The No.4 relay nozzle reached 22.42 L/min as the outlet diameter grew to 1.4 mm and 0.8 mm. However, the airflow rate changes only from 22.42 L/min to 22.59 L/min between the No.4 and No.6 relay nozzles. The No.8 relay nozzle has the highest airflow rate, 40.56 L/min. An in-depth analysis of the airflow rates in each group indicates that a change in outlet diameter has a considerable effect on the airflow rate. In particular, the difference in airflow rate between the No.6 and No.8 relay nozzles is worth further exploration. These results demonstrate that the outlet diameter of the relay nozzle is the most sensitive factor affecting the airflow rate, followed by the inlet diameter and spray angle. They also illustrate the non-linear relationship between the airflow rate and the structural parameters of the relay nozzle.

Before training the deep learning model, data normalization is essential because deep learning is sensitive to the scale of the input data. This work uses min-max scaling for data normalization, which eliminates the scale differences between input variables. The core component of the CNN-Attention model is the CNN layer, while the key component of the Transformer is the self-attention mechanism. The Transformer consists of an encoder and a decoder. The encoder is composed of multiple identical stacked layers, each containing a self-attention mechanism and a feed-forward neural network. The parameters of the CNN, CNN-Attention and Transformer networks are listed in Table 2. The maximum number of training epochs is 200. Furthermore, the learning rate descent factor is 0.01, the batch size is 32, and a dropout ratio of 0.1 is used to prevent overfitting. The Adam optimizer was used for training. A piecewise learning rate decay strategy adjusted the learning rate partway through training to improve model performance.
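
The min-max scaling mentioned above maps each input variable to the range [0, 1] through (x − min) / (max − min). A minimal sketch follows; it assumes that the bounds are fitted on the training rows only and then reused for the other subsets, which is a common practice rather than something stated in the text. The helper names and the feature values are hypothetical.

```python
def fit_min_max(rows):
    """Return per-column (min, max) pairs computed from the training rows."""
    columns = list(zip(*rows))
    return [(min(col), max(col)) for col in columns]


def apply_min_max(rows, bounds):
    """Scale each column to [0, 1] using previously fitted bounds."""
    scaled = []
    for row in rows:
        scaled.append([
            (x - lo) / (hi - lo) if hi > lo else 0.0  # constant column maps to 0
            for x, (lo, hi) in zip(row, bounds)
        ])
    return scaled


if __name__ == "__main__":
    # Made-up rows: [outlet diameter (mm), inlet diameter (mm), cone angle (deg)].
    train_rows = [[1.0, 3.0, 6.0], [1.4, 3.5, 8.0], [1.8, 4.0, 10.0]]
    bounds = fit_min_max(train_rows)
    print(apply_min_max(train_rows, bounds))
```

Reusing the training bounds keeps validation and test data from influencing the scaling, at the cost that unseen values may fall slightly outside [0, 1].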

To automatically search for the best hyperparameters of the CNN-Attention model, an IFTTA optimization algorithm was developed. The configuration of the IFTTA algorithm is listed in Table 3. The number of search agents m is 5, the maximum number of iterations is 100, and the dimension of the hyperparameter vector is 5. The convolutional kernel sizes, the numbers of convolutional kernels and the learning rate were assembled into a vector and optimized. The best hyperparameters found by the IFTTA algorithm are as follows: the convolutional kernel sizes of the three layers are 5, 2 and 2, respectively; the learning rate is 0.007; and the optimal numbers of output channels of the first, second and third convolutional layers are 200, 72 and 54, respectively.

To evaluate the benefits of the IFTTA, Particle Swarm Optimization (PSO), improved Particle Swarm Optimization (IPSO), Bayesian optimization and the FTTA were also used to automatically search for the hyperparameters of the CNN-Attention model. Figure 7 shows the fitness function (RMSE) against the iteration number. As seen in Fig. 7, the IFTTA begins to converge at the 13th iteration, where it reaches the minimum fitness value. The FTTA converges after the 18th iteration, the Bayesian algorithm after the 28th iteration, and the IPSO algorithm after the 47th iteration. The PSO and IPSO algorithms, by contrast, have not converged after 20 iterations. The RMSE values of PSO are high, indicating that its prediction accuracy is insufficient. The computation times are 228 s and 265 s for the IFTTA-CNN-Attention and FTTA-CNN-Attention models, respectively, and 280 s for the Bayes-CNN-Attention model. The computation times are 460 s and 520 s for the IPSO-CNN-Attention and PSO-CNN-Attention models, respectively. These comparative experiments verify both the accuracy and the CPU running time. The IFTTA optimization algorithm achieves the best performance, clearly exceeding the traditional PSO, IPSO and Bayesian optimization algorithms.

Figure 8 plots the training loss curves of the CNN, CNN-Attention, FTTA-CNN-Attention and IFTTA-CNN-Attention models. To observe how the loss function of each regression model varies during training, 200 epochs are plotted. As can be seen from Fig. 8, the CNN model is almost stable after 175 iterations, and its loss is the largest. The loss of the CNN-Attention model stabilizes after 150 iterations, and training for 200 iterations gives the best overall result. The losses of both the FTTA-CNN-Attention and IFTTA-CNN-Attention models are stable after 25 iterations, but the loss of the FTTA-CNN-Attention model is larger than that of the IFTTA-CNN-Attention model. If the maximum number of training epochs is too low, the loss function fluctuates strongly. If it is too high, the model may overfit the training data, i.e., perform very well on the training set but poorly on the test set. The faster convergence of the IFTTA-based model arises because the IFTTA algorithm adopts the adaptive t-distribution mutation during the extra individual training phase and incorporates the number of iterations into the degrees-of-freedom parameter of the t-distribution. As a result, it exhibits excellent global search ability in the initial stage and, as the number of iterations increases, good local optimization ability, which significantly improves the convergence speed and the efficiency of solving for the hyperparameters.

Table 4 lists the evaluation metrics RMSE, MAE, TIC, R2, CR, standard error (\(\sigma\)) and confidence interval (CI) of the benchmark models. As can be seen in Table 4, the IFTTA-CNN-Attention model performs well on all evaluation metrics and achieves the best accuracy. Its R2 value of 0.9941 shows an extremely high degree of fit, explaining about 99.41% of the variability in the airflow rate data. Its MAE of 0.8686 and RMSE of 1.1027 are the lowest among the CNN, CNN-Attention, Transformer, Bayes-CNN-Attention, IPSO-CNN-Attention and FTTA-CNN-Attention models. The correlation coefficient (CR) of the IFTTA-CNN-Attention model is 0.9972, indicating that the predicted values are in excellent agreement with the actual values. Furthermore, the Theil's inequality coefficient (TIC) of the FTTA-CNN-Attention model is merely 0.1255. Thus, taking all evaluation criteria into account, the IFTTA-CNN-Attention model is the most precise and robust for forecasting the air consumption of relay nozzles in air jet looms.

Figure 9 shows the results predicted by the CNN, Transformer, Bayesian-CNN-Attention, IPSO-CNN-Attention, FTTA-CNN-Attention and IFTTA-CNN-Attention models. As shown in Fig. 9a, the CNN model exhibits some predictive ability, but the prediction error is large at some sample points. Although the predicted values follow a similar trend to the actual values in most cases, the CNN model is not as accurate as the other models in regions where the air consumption varies drastically. This is because the CNN model has not fully learned the nonlinear input features, so it trains poorly and tends to underfit. As seen in Fig. 9b, the predictions of the Transformer model reflect the general trends of the actual data, but there are obvious deviations at some points, especially at the peaks and valleys of the data. In Fig. 9c, the differences between the predicted and actual values are small for the Bayesian-CNN-Attention model. In Fig. 9e, the values predicted by the FTTA-CNN-Attention model agree with the actual values in most cases. In Fig. 9f, the IFTTA-CNN-Attention model effectively captures the overall trends in the data. Notably, the model performs exceptionally well across all sample points, especially where the air consumption changes abruptly. Overall, the IFTTA-CNN-Attention model performs best in air consumption prediction. This is because the player positions determine the optimization direction in the FTTA algorithm; the IFTTA updates the best player's position using the Golden Sine algorithm in collective training, enabling ordinary individuals to exchange information with the optimal individual. Hence, the IFTTA-CNN-Attention model is less likely to become trapped in a local optimum.

Figure 10 shows the fitted lines between the predicted and actual data for the CNN, Transformer, Bayesian-CNN-Attention, IPSO-CNN-Attention, FTTA-CNN-Attention and IFTTA-CNN-Attention models. In Fig. 10, the horizontal axis represents the actual air consumption values, while the vertical axis indicates the air consumption values predicted by each regression model. The two dashed lines mark a deviation of ± 3 L/min between the predicted and actual data. As can be seen in Fig. 10a, many predicted points deviate from the diagonal and fall outside the ± 3 L/min error range. The color bar indicates that the maximum absolute error of the CNN model is the highest, at 37%. In Fig. 10b, many points lie outside the error band and the errors range between 0 and 24%, indicating that the Transformer model has insufficient fitting ability. In Fig. 10c, the predicted values lie closer to the diagonal line, with errors between 0 and 19%. As shown in Fig. 10d, the IPSO-CNN-Attention model has fewer points outside the ± 3 L/min error range.

As seen in Fig. 10e, the predicted values of the FTTA-CNN-Attention model lie very close to the diagonal; the error is smaller and more uniform, with the absolute error concentrated between 0 and 7%. In Fig. 10f, the IFTTA-CNN-Attention model has the smallest absolute error, within 4.2%. It is widely recognized that accuracy is satisfactory when the predicted values closely align with the observed values. Therefore, the prediction accuracy of the IFTTA-CNN-Attention model is the highest. The reason is that the FTTA algorithm allows players to learn directly from the best player with a certain probability, and the IFTTA algorithm additionally adopts dynamic learning factors during the group training phase that depend on the iteration number. As the number of iterations grows, the dynamic factors gradually decrease. In the early phases, the solution space can be explored broadly, while in the later phases, fine-grained local optimization can be performed, which avoids premature convergence and improves the global search capability as much as possible.

Figure 11 shows the deviation of the predicted results on the testing set for the various models. In Fig. 11a, each bar indicates the standard deviation (STD) of a model, and the scattered dots show the individual errors between the actual and predicted values in the testing set. The scatter points of all models follow normal distributions. The black, red, blue and green bars represent the STD of the CNN, Transformer, Bayesian-CNN-Attention and IPSO-CNN-Attention models, respectively. The black bar is the longest, with a maximum deviation of more than 20%. The maximum deviations of the Transformer and Bayesian-CNN-Attention models exceed 10%. The purple bar represents the STD of the FTTA-CNN-Attention model, and the yellow bar that of the IFTTA-CNN-Attention model. The standard deviations of the CNN, Transformer, Bayesian-CNN-Attention, IPSO-CNN-Attention, FTTA-CNN-Attention and IFTTA-CNN-Attention models are 4.665, 4.188, 2.218, 2.251, 1.402 and 1.091, respectively.

Figure 11b shows the 95% confidence interval (CI) calculated for all values within the range of observations. The CIs are [1.978, 4.909] and [−1.341, 1.339] for the CNN and Transformer models, respectively. The CIs of the Bayesian-CNN-Attention, IPSO-CNN-Attention and FTTA-CNN-Attention models are [1.628, 3.652], [0.224, 1.665] and [−0.073, 0.822], respectively. As seen in Fig. 11b, several points fall outside the 95% CI for the CNN and Transformer models, indicating that the CNN model's prediction accuracy is inadequate and unstable. The Bayesian-CNN-Attention and IPSO-CNN-Attention models have wider intervals than the FTTA-CNN-Attention model. The 95% CI of the IFTTA-CNN-Attention model is [−0.657, 0.041]. Its error distribution is tightly clustered, with the smallest standard deviation and the narrowest 95% CI, highlighting its strong stability and consistency. This improvement stems from the self-attention mechanism, which focuses on the important features of the input data, and from the hyperparameters found by the IFTTA optimization, whose new search strategies improve the convergence speed and prediction accuracy.
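
The text does not state how the standard deviation and the 95% CI of the prediction errors were computed. One plausible reading, sketched below under that assumption, takes the sample standard deviation of the errors and a normal-approximation interval for the mean error, mean ± 1.96·σ/√n. The function name `error_summary` and the sample values are hypothetical.

```python
import math
import statistics


def error_summary(y_true, y_pred, z=1.96):
    """Return mean error, sample standard deviation and a normal-approximation CI."""
    errors = [p - a for a, p in zip(y_true, y_pred)]
    mean_err = statistics.fmean(errors)
    std_err = statistics.stdev(errors)  # sample standard deviation (n - 1)
    half_width = z * std_err / math.sqrt(len(errors))
    return mean_err, std_err, (mean_err - half_width, mean_err + half_width)


if __name__ == "__main__":
    actual = [15.0, 22.4, 22.6, 40.6, 30.2]     # made-up flow rates in L/min
    predicted = [15.5, 21.9, 23.1, 39.8, 30.0]  # made-up predictions
    mean_err, std_err, ci = error_summary(actual, predicted)
    print(f"mean error {mean_err:.3f}, STD {std_err:.3f}, "
          f"95% CI [{ci[0]:.3f}, {ci[1]:.3f}]")
```

For small test sets, a Student-t quantile in place of 1.96 would give a slightly wider interval. An interval centred near zero indicates little systematic bias, and a narrow interval indicates consistent errors.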

Figure 12 shows boxplots of the RMSE results calculated for dataset sizes of 110, 120 and 140, which are used to assess the stability of the CNN-Attention model. The prediction accuracy of the CNN-Attention model can be improved using both FTTA and IFTTA. The boxplot, a univariate outlier detection technique, depicts the RMSE values. For benchmarking, the dataset size is varied between runs of the deep learning model. As seen in Fig. 12, the black line indicates the median, the upper whisker line indicates the 75th percentile and the lower whisker line indicates the 25th percentile. The black bottom and top lines represent the minimum and maximum observed values, respectively. As the amount of data increases, the RMSE on the testing set gradually decreases, mitigating the negative impact of high-dimensional data. For the FTTA-CNN-Attention model with 110, 120 and 140 samples, the median RMSE values are 3.6115, 2.2799 and 1.3510, respectively. For the IFTTA-CNN-Attention model, the median values are 2.6140, 1.8244 and 1.1330. When the dataset size exceeds 110 samples, the determination coefficient R2 exceeds 95% when the CNN-Attention network is used to predict the air consumption of the relay nozzle. As a result, a modest sample size is sufficient for deep learning when a multi-layer CNN is employed in conjunction with an attention network.

For a more intuitive comparison of model performance, Kiviat diagrams are shown in Fig. 13. A Kiviat diagram is a two-dimensional plot of three or more quantitative variables on axes starting from the same point. It is an intuitive and convenient graphical method for displaying multivariate evaluation metrics, here the CR, R2, TIC, MAE and RMSE. The TIC evaluates the relative errors of the predictions, with smaller values indicating better model performance. The closer CR and R2 are to 1, the more consistent the actual and predicted values. As can be seen from Fig. 13, the baseline CNN and Transformer models have higher TIC, MAE and RMSE values. The optimized CNN-Attention models have a lower RMSE and higher R2 and CR. This indicates that the accuracy of the CNN model is the worst. The IFTTA-CNN-Attention model has the lowest TIC (less than 0.03), indicating the highest predictive accuracy. It also has the highest CR and R2, both greater than 0.99 and extremely close to 1. The pentagon of the IFTTA-CNN-Attention model spreads furthest out, indicating that this model performs best. The reason is that the IFTTA-CNN-Attention model uses the Golden Sine search algorithm in the collective training phase, which achieves a good distribution of individuals in the search space while avoiding excessive concentration or dispersion among players. By leading players toward better values, the IFTTA algorithm can effectively explore the optimal hyperparameters during the training process.

Figure 14 shows the Taylor diagrams of the various models. A Taylor diagram illustrates three statistical metrics: the centered root mean square error (CRMSE), the correlation coefficient (CR), and the standard deviation (\(\sigma\)). The red concentric circles denote the centered root mean square difference, the angular position along the quarter circle represents the correlation coefficient, and the radial distance represents the standard deviation. In a Taylor diagram, a model's proximity to the reference point (red triangle) indicates greater accuracy in predicting values and better suitability. The CNN model is represented by a purple rhombus, the Bayesian-CNN-Attention model by a green square, the IPSO-CNN-Attention model by a blue circle, the FTTA-CNN-Attention model by a cyan star, the IFTTA-CNN-Attention model by a yellow diamond and the Transformer model by an orange hexagon. Fig. 14 clearly shows that the CNN model is the farthest from the observation point. The Transformer model clearly outperforms the standard CNN-Attention model. The Bayesian-CNN-Attention model is closer to the observation point than the Transformer model. The FTTA-CNN-Attention model is nearly identical to the Bayesian-CNN-Attention model. The proposed IFTTA-CNN-Attention model is the nearest to the observation point (red triangle) in the Taylor diagrams. This indicates that the IFTTA-CNN-Attention model has the highest accuracy and best performance. This is owing to the dynamic learning rates Pstudy and Pcomu, which update the participants in group learning, and to the adaptive t-distribution mutation operator, which perturbs each individual's position during the extra individual training phase. Together they enable the IFTTA algorithm to excel at global exploration in the early stage and at local exploitation in the later stages. As a result, the IFTTA algorithm can identify the optimum hyperparameters for the hybrid CNN-Attention model.
Hence, the IFTTA-CNN-Attention model can be used to predict the air consumption of the relay nozzle, which has strong nonlinear relationships with its structural parameters. In the future, the IFTTA-CNN-Attention regression model can be used to optimize the design of the relay nozzle or to precisely control the output airflow volume of the air compressor in the air jet loom. In a factory with more than a hundred air jet looms, a large amount of the electricity consumed by the jet system could thereby be saved.
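
Returning to the Taylor diagram of Fig. 14, the three statistics it displays are linked by the law-of-cosines identity CRMSE² = σ_o² + σ_p² − 2·σ_o·σ_p·CR, which is why a single point can encode all of them. The sketch below computes the statistics with population (divide-by-N) standard deviations and checks the identity numerically; the function name `taylor_statistics` and the sample data are hypothetical.

```python
import math


def taylor_statistics(y_true, y_pred):
    """Return (sigma_obs, sigma_pred, correlation, centered RMSE)."""
    n = len(y_true)
    mean_o = sum(y_true) / n
    mean_p = sum(y_pred) / n
    dev_o = [a - mean_o for a in y_true]  # observed anomalies
    dev_p = [p - mean_p for p in y_pred]  # predicted anomalies
    sigma_o = math.sqrt(sum(d * d for d in dev_o) / n)
    sigma_p = math.sqrt(sum(d * d for d in dev_p) / n)
    corr = sum(a * b for a, b in zip(dev_o, dev_p)) / (n * sigma_o * sigma_p)
    crmse = math.sqrt(sum((b - a) ** 2 for a, b in zip(dev_o, dev_p)) / n)
    return sigma_o, sigma_p, corr, crmse


if __name__ == "__main__":
    actual = [15.0, 22.4, 22.6, 40.6, 30.2]     # made-up flow rates in L/min
    predicted = [15.5, 21.9, 23.1, 39.8, 30.0]  # made-up predictions
    s_o, s_p, r, e = taylor_statistics(actual, predicted)
    rhs = s_o ** 2 + s_p ** 2 - 2.0 * s_o * s_p * r
    print(f"CRMSE^2 = {e ** 2:.6f}, identity right-hand side = {rhs:.6f}")
```

A model plotted close to the reference point therefore has a standard deviation close to that of the observations, a correlation close to 1, and a small CRMSE at the same time.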

Conclusion

In summary, traditional regression methods fail to account for the nonlinear relationships and variable interactions that are commonly present in the structural data of relay nozzles in air jet looms. In this paper, a hybrid IFTTA-CNN-Attention model was developed to predict the air consumption of the relay nozzle in the air jet loom. An in-depth analysis reveals that the major-axis and minor-axis diameters at the outlet are the most sensitive factors affecting the airflow rate, followed by the inlet diameter and cone angle of the relay nozzle.

Compared with the baseline CNN and Transformer models, the hybrid CNN-Attention model can extract prominent structural features of the relay nozzles from small samples. In the IFTTA-CNN-Attention model, the error distribution is tightly clustered, with the smallest standard deviation and the narrowest 95% CI, highlighting its high accuracy and strong consistency. As a result, a small sample size is sufficient for deep learning when a multi-layer CNN is employed in conjunction with an attention mechanism network.

Comparison with the Bayesian, IPSO and FTTA optimization algorithms shows that the proposed IFTTA-CNN-Attention model has significant advantages. First, the IFTTA-CNN-Attention model has the highest prediction accuracy, with an R2 value of 0.9941, indicating its superior ability to capture the underlying trend of the air consumption data. The other evaluation metrics (MAE, RMSE, TIC) had the lowest values, in particular an MAE of 0.8686, an RMSE of 1.1027 and a TIC of 0.1225, indicating that the prediction error was extremely small. Furthermore, the computation time of the IFTTA-CNN-Attention model is 228 s, which is less than that of the traditional PSO, Bayesian optimized CNN-Attention and Transformer models. The results indicate that the IFTTA can effectively solve for the hyperparameters of the hybrid CNN-Attention model, enhancing both computational efficiency and accuracy. Hence, the proposed IFTTA-CNN-Attention model is appropriate for predicting the air consumption of the relay nozzle in the air jet loom, and it has the potential for widespread use in other complex regression tasks.

Data availability

The data are available from the corresponding author upon reasonable request for academic purposes.

References

Dong, G. et al. Progress of air flow field and fiber motion analysis in textile manufacturing process: A review. Text. Res. J. https://doi.org/10.1177/00405175241231826 (2024).

Rahaman, M. T., Pranta, A. D., Repon, M. R., Ahmed, M. S. & Islam, T. Green production and consumption of textiles and apparel: Importance, fabrication, challenges and future prospects. J. Open Innov.: Technol. Market Complex. https://doi.org/10.1016/j.joitmc.2024.100280

Haque, M. E., Rahman, M. B., Kafi, W., Kaiser, M. S. U. & Dey, A. Minimization of air consumption and potential savings of textile denim fabric manufacturing process. J. Text. Sci. Technol. 9 (1), 69–83. https://doi.org/10.4236/jtst.2023.91005 (2023).

Adanur, S. & Turel, T. Effects of air and yarn characteristics in air-jet filling insertion: Part II: Yarn velocity measurements with a profiled reed. Text. Res. J. 74(8), 657–661. https://doi.org/10.1177/004051750407400801 (2004).

Göktepe, Ö. & Bozkan, O. Study on reduction of air consumption on air-jet weaving machines. Text. Res. J. 78(9), 816–824. https://doi.org/10.1177/0040517508090493 (2008).

Haq, U. N. & Hossain, M. M. A review on reduction of air consumption in air jet loom: the possible setting points. J. Asian Sci. Res. 7(3), 52–62. https://doi.org/10.18488/journal.2.2017.73.52.62 (2017).

Hussain, T., Jabbar, A. & Ahmed, S. Comparison of regression and adaptive neuro-fuzzy models for predicting the compressed air consumption in air-jet weaving. Fibers Polym. 15, 390–395. https://doi.org/10.1007/s12221-014-0390-x (2014).

Jabbar, A., Ahmed, S., Hussain, T., Haleem, N. & Ahmed, F. Statistical model for predicting compressed air consumption on air-jet looms. J. Eng. Fibers Fabrics. 9(3), 155892501400900306. https://doi.org/10.1177/155892501400900306 (2014).

Zegan, M. & Ayele, M. Optimization of air pressure and speed of air jet loom; impact on fabric tensile and tear strength properties using response surface methodology. J. Nat. Fibers 19(15), 10227–10240. https://doi.org/10.1080/15440478.2021.1993492 (2022).

Hossain, M. M., Suchona, H. A., Nasrin, M. T. & Hossain, I. An investigation of air consumption of air-Jet Loom. J. Asian Sci. Res. 11(1), 1. https://doi.org/10.18488/journal.2.2021.111.1.14 (2021).

Grassi, C., Schröter, A., Gloy, Y. S. & Gries, T. Reducing environmental impact in air jet weaving technology. Int. J. Cloth. Sci. Technol. 28(3), 283–292. https://doi.org/10.1108/ijcst-03-2016-0037 (2016).

Khiani, R. K., Peerzada, M. H. & Abbasi, S. A. Air consumption analysis of air-jet weaving. Mehran Univ. Res. J. Eng. Technol. 35(3), 453–458. https://doi.org/10.22581/muet1982.1603.15 (2016).

Girase, A. P. et al. Impact of air pressure and valve timing on weft breakage rate in air-jet weaving. Melliand Int. 24(4), 193–195. https://doi.org/10.1109/mercon.2017.7980480 (2018).

Salama, M. Stastical analysis of some loom parameters affecting yarn stops on air jet loom. MEJ-Mansoura Eng. J. 16(2), 71–81. https://doi.org/10.21608/bfemu.2021.188269 (2021).

Adámek, K. Numerical modelling of air flow in air jet weaving system. Int. J. Polym. Mater. Polym. Biomater. 47(4), 613–623. https://doi.org/10.1080/00914030008031316 (2000).

Belforte, G., Mattiazzo, G., Testore, F. & Visconte, C. Experimental investigation on air-jet loom sub-nozzles for weft yarn insertion. Text. Res. J. 81(8), 791–797. https://doi.org/10.1177/0040517510391694 (2011).

Delcour, L., Van Langenhove, L. & Degroote, J. Towards simulation of force and velocity fluctuations due to turbulence in the relay nozzle jet of an air jet loom. Text. Res. J. 91(9–10), 990–1008. https://doi.org/10.1177/0040517520968285 (2021).

Li, S., Shen, M. & Bai, C. Influence of structure parameter of auxiliary nozzle in air-jet loom on characteristics of flow field. J. Text. Res. 40, 161–167 (2019).

Xiao, S., Shen, M., Yang, Q., Wang, Z. & Zhou, J. Characteristics of instantaneous intersecting airflow from main nozzle and various relay nozzles in an air jet loom. J. Text. Inst. 1–13. https://doi.org/10.1080/00405000.2023.2262115 (2023).

Messiry, M. E. & Mohamed, A. Numerical study of flows for slub yarn transmission in an air jet. Text. Res. J. 93(1–2), 242–254. https://doi.org/10.1177/00405175221117613 (2023).

Pereira, F. et al. A review in the use of artificial intelligence in textile industry. In Innovations in Mechatronics Engineering 377–392. https://doi.org/10.1007/978-3-030-79168-1_34 (2022).

Elkateb, S. N. Prediction of mechanical properties of woven fabrics by ANN. Fibres Text. East. Eur. 30(4), 54–59. https://doi.org/10.2478/ftee-2022-0036 (2022).

Almetwally, A. & El-sisy, W. Predicting the performance of Air-Jet weaving machine in relation to filling yarns’ twist loss using (ANN) and linear regression Model. J. Home Econ.-Menofia Univ. 33(02), 235–248 (2023).

Azevedo, J. et al. Predicting yarn breaks in textile fabrics: A machine learning approach. Proc. Comput. Sci. 207, 2301–2310. https://doi.org/10.1016/j.procs.2022.09.289 (2022).

Cong, S. & Zhou, Y. A review of convolutional neural network architectures and their optimizations. Artif. Intell. Rev. 56(3), 1905–1969. https://doi.org/10.1007/s10462-022-10213-5 (2023).

Yao, J., Nie, J. & Li, C. Research on prediction of surrounding rock deformation and optimization of construction parameters of high ground stress tunnel based on WOA-LSTM. Sci. Rep. 14(1), 27396. https://doi.org/10.1038/s41598-024-79059-xs (2024).

Mirzavand Borujeni, S. et al. Explainable sequence-to-sequence GRU neural network for pollution forecasting. Sci. Rep. 13(1), 9940. https://doi.org/10.1038/s41598-023-35963-2 (2023).

Liu, L. et al. Multi-layer CNN-LSTM network with self-attention mechanism for robust estimation of nonlinear uncertain systems. Front. NeuroSci. 18, 1379495. https://doi.org/10.3389/fnins.2024.1379495 (2024).

Guan, W. et al. Research on CNN-attention regression prediction method based on GOA optimization. International conference on the Efficiency and Performance Engineering Network (Springer Nature Switzerland, Cham, 2024) 209–219. https://doi.org/10.1007/978-3-031-70235-8_19.

Wang, J. et al. Advanced thermal prediction for green roofs: CNN-LSTM model with SSA optimization. Energy Build. 322, 114745. https://doi.org/10.1016/j.enbuild.2024.114745 (2024).

Pan, S. et al. Oil well production prediction based on CNN-LSTM model with self-attention mechanism. Energy 284, 128701. https://doi.org/10.1016/j.energy.2023.128701 (2023).

Saini, P. & Nagpal, B. PSO-CNN-Bi-LSTM: A hybrid optimization-enabled deep learning model for smart farming. Environ. Model. Assess. 29(3), 517–534. https://doi.org/10.1007/s10666-023-09920-2 (2024).

Singh, T. et al. Grey Wolf optimization based CNN-LSTM network for the prediction of energy consumption in smart home environment. IEEE Access 11, 114917–114935. https://doi.org/10.1109/access.2023.3311751 (2023).

Cui, X., Zhu, J., Jia, L., Wang, J. & Wu, Y. A novel heat load prediction model of district heating system based on hybrid whale optimization algorithm (WOA) and CNN-LSTM with attention mechanisms. Energy 312(15), 133536. https://doi.org/10.1016/j.energy.2024.133536 (2024).

Fu, S. et al. Red-billed blue magpie optimizer: a novel metaheuristic algorithm for 2D/3D UAV path planning and engineering design problems. Artif. Intell. Rev. 57(6), 1–89. https://doi.org/10.1007/s10462-024-10716-3 (2024).

Bharadiya, J. P. A review of Bayesian machine learning principles, methods, and applications. Int. J. Innovative Sci. Res. Technol. 8(5), 36 (2023).

Singh, P., Chaudhury, S. & Panigrahi, B. K. Hybrid MPSO-CNN: Multi-level particle swarm optimized hyperparameters of convolutional neural network. Swarm Evol. Comput. 63, 100863. https://doi.org/10.1016/j.swevo.2021.100863 (2021).

Aljohani, A. et al. A hybrid combination of CNN attention with optimized random forest with grey Wolf optimizer to discriminate between Arabic hateful, abusive tweets. J. King Saud Univ.-Comput. Inform. Sci. 36 (2), 101961. https://doi.org/10.1016/j.jksuci.2024.101961 (2024).

Zhang, C. et al. Bottom hole pressure prediction based on hybrid neural networks and bayesian optimization. Pet. Sci. 20(6), 3712–3722. https://doi.org/10.1016/j.petsci.2023.07.009 (2023).

Tian, Z. & Gai, M. Football team training algorithm: A novel sport-inspired meta-heuristic optimization algorithm for global optimization. Expert Syst. Appl. 245, 123088. https://doi.org/10.1016/j.eswa.2023.123088 (2024).

Li, W. et al. Unmanned aerial vehicle path planning method based on football team training algorithm. 2024 7th International Conference on Computer Information Science and Application Technology (CISAT) 25–29 (IEEE, 2024). https://doi.org/10.1109/cisat62382.2024.10695324

Acknowledgements

This work was funded by the Chinese National Science Foundation (Grant Nos. 51505344 and 11872046), the Shandong Province Key Research and Development Plan, Major Science and Technology Innovation Engineering project (No. 2024CXGC010215), the Project of Outstanding Young and Middle-aged Scientific and Technological Innovation Team in Universities of Hubei Province (No. T2022015), and the Hubei Key Laboratory of Digital Textile Equipment Open Subjects (No. 2020001).

Author information

Contributions

S.M. devised the main idea of the IFTTA-CNN-Attention model and wrote the original draft. S.N. programmed the CNN-Attention model. C.Y. handled the methodology and literature review. X.X. carried out validation and visualization. Y.X. measured the airflow rates and processed the data. W.Z. performed technical analysis and reviewed the draft. Y.L. supervised and administered the project. All authors discussed the results and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Min, S., Ning, S., Yongbo, C. et al. An advanced CNN-attention model with IFTTA optimization for prediction air consumption of relay nozzles. Sci Rep 15, 9849 (2025). https://doi.org/10.1038/s41598-025-94418-y

DOI: https://doi.org/10.1038/s41598-025-94418-y