Abstract

Permeability estimation plays an essential role in reservoir assessment and hydrocarbon extraction. Various methods exist to evaluate a formation and estimate its permeability, but in some cases the evaluation is not performed or is not performed correctly. Machine learning is increasingly applied in many fields. This study presents a novel method that uses mud loss data and deep learning to estimate formation permeability with appropriate accuracy. To implement and validate the methodology, pilot cases were considered that include reservoir and drilling parameter values (depth, formation type, formation thickness, mud density, mud viscosity, and formation permeability). Mud loss was assumed to occur because of the differential pressure between the bottom-hole pressure and the formation pressure. Mud loss rate data were generated for different sets of reservoir and drilling parameter values using a reservoir simulator and were then evaluated by calculating correlation coefficients to check their validity and their agreement with real conditions. These data can be used to estimate formation permeability. A one-dimensional convolutional neural network (1D-CNN) was trained on the data as a regression model that combines convolution, flatten, dropout, and fully connected layers, and it estimated permeability with high accuracy (training data R2 = 0.970, testing data R2 = 0.964). A second deep learning method, the deep jointly informed neural network (DJINN), which combines neural networks and decision trees, provided a more accurate model than the 1D-CNN (training data R2 = 0.978, testing data R2 = 0.972). These results suggest a new application for mud loss data: data acquired while drilling can be used to predict formation permeability and provide petroleum engineers with insights for more accurate design.

Introduction

Formation permeability is a critical parameter in petroleum engineering, influencing fluid flow within reservoirs and impacting hydrocarbon recovery efficiency. Traditional methods for estimating permeability, such as core sampling and well testing, can be costly and time-consuming, often yielding limited spatial coverage of reservoir properties. Moreover, the inherent heterogeneity of geological formations can lead to significant discrepancies in permeability values, complicating reservoir characterization. As a result, there is an increasing need for innovative approaches that leverage advanced data analytics and machine learning techniques to enhance the accuracy and efficiency of permeability estimation from available well log data and other indirect measurements1.

Understanding and evaluating formation permeability is essential for several reasons:

1. Hydrocarbon flow: Formation permeability directly affects the movement of oil and gas within the reservoir. High permeability allows for easier flow of hydrocarbons to the wellbore, which is essential for efficient extraction. Conversely, low permeability can hinder production rates and increase extraction costs1.

2. Reservoir management: Understanding permeability is vital for effective reservoir management and development strategies. Engineers use permeability data to predict reservoir behavior under various production scenarios, optimize well placement, and enhance recovery techniques2.

3. Enhanced oil recovery (EOR): In enhanced oil recovery methods, knowledge of formation permeability is crucial for selecting appropriate techniques, such as water flooding or gas injection. The effectiveness of these methods often depends on the permeability characteristics of the reservoir rock3.

4. Modeling and simulation: Accurate permeability measurements are essential for creating reliable reservoir models and simulations. These models help engineers forecast production performance, evaluate the economic viability of projects, and make informed decisions regarding drilling and completion strategies4.

Therefore, the precise measurement of this parameter is of utmost importance. The prediction of reservoir permeability has been of key interest in the industry because investment decisions based on the volume of hydrocarbon resources depend on its accuracy5. As a result, considerable time and money have been allocated to take advantage of technological advances in data collection from cores and well testing, among other activities, to help reduce the uncertainty in permeability data and improve the prediction of reservoir performance6. On the other hand, such conventional methods often face problems such as a lack of data in some regions, which makes it impossible to determine formation permeability.

Machine learning (ML) is a subset of artificial intelligence (AI) that focuses on the development of algorithms and statistical models that enable computers to perform tasks without explicit programming. Instead of being programmed with specific instructions, ML systems learn from data, identifying patterns and making decisions based on that information. In the past decade, advances in computer science, especially in AI and ML, have made it possible to extract useful information from real-world data collected in oil industry applications and to employ it for better reservoir characterization7,8,9,10. ML is broadly acknowledged to improve our understanding of wells11, production, and reservoir areas12. Specifically, ML is widely used in reservoir management and has produced important results in permeability, porosity, and tortuosity prediction13, modeling the minimum miscibility pressure of CO2-oil systems14, shale gas production forecasting15, reservoir characterization16, predicting formation damage in oil fields17, digital 3D core reconstruction18,19,20, well test interpretation21,22, shale gas production optimization23,24, well log processing25, modeling wax deposition of crude oils26, and history matching27,28. This has motivated numerous researchers to gradually abandon multiple linear regression models and empirical correlations in favor of ML when forecasting significant reservoir petrophysical properties29.

ML approach for permeability prediction

The artificial neural network (ANN) is a well-known approach for predicting permeability from available data30. In recent years, several authors have studied reservoir characterization problems from different aspects, including soft computing methods 31,32,33. These studies showed that soft computing models outperform regression models. The advantage of soft computing methods lies in the fact that underlying uncertainties or heterogeneities are not explicitly included in regression methods32.

Permeability can be evaluated by interpreting in situ measurements taken by formation testers, well-testing equipment, and well-logging tools. Transient well testing yields the average permeability-thickness product and therefore provides a wealth of information about the flow capacity of the reservoir. Another worthwhile method for measuring the absolute permeability of reservoirs is to conduct flow experiments using representative core samples34. With a reliable and accurate permeability estimate, geoscientists can manage the production process effectively.

Several approaches have been proposed to estimate permeability. Mohaghegh et al.35 presented three main approaches to permeability estimation from well-log data: analytical, statistical, and computational tools. Chehrazi and Rezaee36 introduced a classification scheme for permeability prediction models, including analytical models, soft computing models, and porous phase models using well-log data. Rezaee et al.37 presented the results of a research project that investigated permeability prediction for the Precipice Sandstone of the Surat Basin, in which machine learning techniques were used to estimate permeability from multiple wireline logs. Tembely et al.38 emphasized the important role of feature engineering in predicting physical properties using machine and deep learning; their proposed framework, which integrates various learning, rock imaging, and modeling algorithms, is capable of rapidly and accurately estimating petrophysical properties to facilitate reservoir simulation and characterization. Okon et al.39 presented an ANN model, developed from logs from fifteen fields, to forecast the physical properties of reservoirs, namely porosity, permeability, and water saturation. Yasin et al.40 applied a joint inversion technique based on a multilayer linear calculator and a particle swarm optimization algorithm to estimate the spatial variation of important petrophysical parameters (e.g. porosity, permeability, and saturation) and essential geo-mechanical properties (Poisson’s ratio and Young’s modulus) for downhole zones using seismic data. Anifowose et al.41 conducted a rigorous parametric study to compare the accuracy of ML techniques in estimating the permeability of a carbonate reservoir in the Middle East by integrating seismic attributes and wireline data. Akande et al.42 studied the predictability and impact of feature engineering on the precision of support vector machines in estimating carbonate reservoir permeability from well-log data. Bruce et al.43 applied an ANN to permeability estimation from wireline logs. El Ouahed et al.44 proposed combining the ANN with fuzzy logic to fully characterize a fractured reservoir using well-log data. Al Khalifah et al.45 used an ANN and genetic algorithms to estimate core permeability measured in laboratory experiments.

The studies cited in the previous paragraph are summarized in Table 1, which compares them by method and type of data. The researchers used statistical tools and artificial intelligence to estimate formation permeability, and the data they used included well log data, rock imaging data, seismic data, and core data.

Lost circulation vs. formation permeability

Lost circulation is a prevalent drilling problem, especially in formations with high permeability and natural or induced fractures 46,47. It can occur in a variety of formations, ranging from shallow, unconsolidated geological layers to well-consolidated layers that are disrupted by the hydrostatic pressure of the drilling fluid 48,49. Two conditions are necessary for lost circulation to occur in a borehole. First, the pressure at the bottom of the well must exceed the pore pressure; second, there must be a flow path for the lost fluid50. The underground pathways that cause lost circulation can be divided into the following classes:

- Cavernous formations: Some formations along the drilling path contain caverns and voids; when such formations are drilled, a large amount of mud is lost (Fig. 1a).

- Natural fractures: A natural fracture network created by tectonic activity can act as a conduit for losses into the formation; the amount lost depends on several factors, which are mentioned below (Fig. 1b).

- Induced fractures (e.g. quick tripping or blowouts): In this mechanism, drilling operations such as tripping and blowouts increase the bottom-hole pressure, and fractures are induced. Because some formations have low strength, the additional stress applied to the formation fractures it (Fig. 1c).

- Highly permeable formations: In permeable formations, a large amount of drilling fluid leaks into the formation because of the difference between the bottom-hole pressure and the formation pressure (Fig. 1d).

Fig. 1 Schematic classification of lost circulation51.

Fractures are an important cause of drilling fluid loss to formations; the severity of lost circulation depends on fracture opening width, fracture density, fracture orientation, fracture distribution, fracture network, etc.52,53,54.

The loss rate indicates the paths of lost circulation and shows which remedial technique should be employed to counteract the loss. Lost circulation severity can be divided into four classes as follows 55,56,57:

- Seepage losses: less than 1 m³/h.

- Partial losses: 1–10 m³/h.

- Severe losses: more than 15 m³/h.

- Complete losses: no fluid comes out of the annulus.

This study proposes, for the first time, a novel method that puts mud loss data to another important use. To this end, synthetic data derived from a commercial reservoir simulator is used as input to train and build the AI models. Deep Jointly Informed Neural Networks (DJINN) and Convolutional Neural Networks (CNN) are used to estimate formation permeability from formation type, formation thickness, mud density, mud viscosity, drilling depth, and the simulated mud loss rate data.

Methodology

The model development diagram is shown in Fig. 2 and the method preparation is discussed in Sects. 2.1 to 2.5. The model development flowchart begins with data generation where all of the parameters related to mud loss are generated. The generated data is then subjected to statistical analysis. Next, the data undergoes preprocessing to make it suitable for modeling. The modeling phase begins with initializing the hyper-parameters for the deep learning model. When hyper-parameters are initialized, the models are trained with an adaptive moment estimation (Adam) optimizer. The hyper-parameters are adjusted and iterated using the trial-and-error method until the model shows good performance metrics with a minimum error.

Data generation

As shown in Fig. 3, the drilling fluid loss process is similar to fluid injection into a porous medium. According to Darcy’s law, the loss rate depends on the bottom-hole pressure, the formation pressure, the viscosity of the drilling fluid, and the formation permeability. In this study, reservoir simulator software (Eclipse E100) was used to simulate the drilling fluid loss process and generate mud loss data.
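As a rough illustration of the Darcy's-law dependence described above, the sketch below uses the textbook steady-state radial-flow form for an incompressible fluid, q = 2πkhΔp / (μ ln(r_e/r_w)). It is not the Eclipse E100 model used in this study; the function names, the SI units, the drainage and wellbore radii, and all numerical values are assumptions chosen for illustration only.

```python
import math

MD_TO_M2 = 9.869233e-16  # one millidarcy expressed in square metres


def radial_darcy_loss_rate(k_m2, h_m, dp_pa, mu_pa_s, r_e_m, r_w_m):
    """Steady-state radial Darcy flow rate (m^3/s) from the wellbore into a layer.

    k_m2: permeability, h_m: layer thickness, dp_pa: bottom-hole pressure
    minus formation pressure, mu_pa_s: fluid viscosity, r_e_m / r_w_m:
    outer and wellbore radii (hypothetical geometry).
    """
    if r_e_m <= r_w_m:
        raise ValueError("outer radius must exceed wellbore radius")
    return 2.0 * math.pi * k_m2 * h_m * dp_pa / (mu_pa_s * math.log(r_e_m / r_w_m))


def permeability_from_loss_rate(q_m3_s, h_m, dp_pa, mu_pa_s, r_e_m, r_w_m):
    """Invert the same relation to recover permeability (m^2) from a loss rate."""
    if r_e_m <= r_w_m:
        raise ValueError("outer radius must exceed wellbore radius")
    return q_m3_s * mu_pa_s * math.log(r_e_m / r_w_m) / (2.0 * math.pi * h_m * dp_pa)


# Hypothetical inputs: 100 md layer, 10 m thick, 2 MPa overbalance, 20 cP mud.
geometry = dict(h_m=10.0, dp_pa=2.0e6, mu_pa_s=0.02, r_e_m=100.0, r_w_m=0.1)
k = 100.0 * MD_TO_M2
q = radial_darcy_loss_rate(k, **geometry)
k_back = permeability_from_loss_rate(q, **geometry)
print(f"loss rate = {q * 3600.0:.3f} m^3/h, recovered k = {k_back / MD_TO_M2:.1f} md")
```

In this idealized form the loss rate is proportional to permeability and to the pressure difference, and inversely proportional to viscosity, which is why a measured loss rate carries information about permeability once the other drilling parameters are known.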

The data available in the drilling process include mud weight, mud viscosity, drilling depth, mud loss rate, formation type, and the thickness of the drilled formation up to that depth. Therefore, mud loss data were generated according to the following assumptions in the 810 data series:

1. Lost circulation limits: 1–250 bbl/hr.

2. Fluid type: water-based mud.

3. Mud viscosity increases with increasing mud weight.

4. Mud weight generally increases with increasing depth.

5. 10 layers with different pore pressures.

Statistical analysis of generated data

Analysis of the mud loss dataset used descriptive and inferential statistics and focused on univariate analysis. The data are summarized by the distribution of each parameter in Table 2, and Fig. 4 shows the histogram of each variable.

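The correlation coefficient (CC) is used to test the linear association between parameters. It can be expressed as follows:

\[CC=\frac{\sum_{i=1}^{n}\left({x}_{m,i}-{\overline{x}}_{m}\right)\left({x}_{p,i}-{\overline{x}}_{p}\right)}{\sqrt{\sum_{i=1}^{n}{\left({x}_{m,i}-{\overline{x}}_{m}\right)}^{2}\sum_{i=1}^{n}{\left({x}_{p,i}-{\overline{x}}_{p}\right)}^{2}}} \tag{1}\]

where n represents the number of data points, \({x}_{m}\) and \({x}_{p}\) denote the measured and predicted parameters, respectively, and \({\overline{x}}_{m}\) and \({\overline{x}}_{p}\) signify their average values58.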

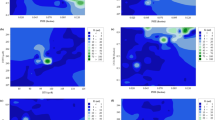

Figure 5 shows the CC matrix for the analyzed variables. According to these data, mud loss rate is the main parameter that influences permeability, while the other parameters have insignificant effects. The CC matrix also shows that the generated data are comparable to operational data; e.g. there is a high correlation between fluid viscosity and fluid density, similar to the relation between these parameters under real conditions.
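A correlation matrix of this kind can be computed with a few lines of code. The sketch below implements the Pearson correlation coefficient in plain Python and applies it pairwise to a small table; the column names and values are hypothetical toy numbers, not the 810 simulated data series of this study.

```python
import math


def pearson_cc(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0.0 or s_yy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return s_xy / math.sqrt(s_xx * s_yy)


def cc_matrix(columns):
    """Pairwise CC for a dict of named columns, returned as a nested dict."""
    names = list(columns)
    return {a: {b: pearson_cc(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical toy table (illustrative values only).
data = {
    "mud_density": [1.10, 1.15, 1.20, 1.25, 1.30],
    "mud_viscosity": [20.0, 22.0, 25.0, 27.0, 30.0],
    "loss_rate": [5.0, 40.0, 12.0, 90.0, 30.0],
}
matrix = cc_matrix(data)
for name, row in matrix.items():
    print(name, {other: round(value, 3) for other, value in row.items()})
```

A value near 1 or -1 indicates a strong linear association, while a value near 0 only rules out a linear one; a non-linear dependence can still be present.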

Figure 6 shows the relationships between pairs of variables. Some pairs are clearly linearly related, e.g. depth vs formation type and drilling fluid viscosity vs density. Other pairs are clearly non-linear, because random drilling conditions were assumed and because no linear relationship exists between these parameters under real conditions.

Data arrangement

Mud loss data passed through three steps before being fed to the deep learning models: organizing the categorical data, pre-processing the data with a normalization scaler, and splitting the data into training and testing sets.

The categorical variable organized in the data collection stage is the formation type, which was assigned the values 1 to 10. Then, min-max normalization was performed to rescale each variable to the range 0 to 1. Such normalized data is used to train the neural network models since it enhances the learning process and reduces computational cost 59. The formula used for normalization is as follows:

\[{x}_{norm}=\frac{{x}_{i}-{x}_{min}}{{x}_{max}-{x}_{min}} \tag{2}\]

In Eq. (2), \({x}_{i}\) is the value of the variable for the ith observation, \({x}_{min}\) is the minimum value of the variable, and \({x}_{max}\) is the maximum value of the variable. Finally, the processed data were split into training and testing sets using an 80:20 ratio.
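The scaling in Eq. (2) and the 80:20 split can be sketched as follows. This is a minimal plain-Python illustration, not the pre-processing code used in the study: the helper names, the random shuffle, and the seed are assumptions, and the depth values are hypothetical.

```python
import random


def min_max_scale(values):
    """Rescale a sequence to [0, 1] using (x - x_min) / (x_max - x_min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant column")
    return [(v - lo) / (hi - lo) for v in values]


def train_test_split(rows, train_fraction=0.8, seed=0):
    """Shuffle row indices reproducibly and split them by train_fraction."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    cut = int(round(train_fraction * len(rows)))
    train = [rows[i] for i in indices[:cut]]
    test = [rows[i] for i in indices[cut:]]
    return train, test


# Hypothetical depth column in metres.
depth = [1000.0, 1500.0, 2000.0, 2500.0, 3000.0]
scaled = min_max_scale(depth)

# Row identifiers standing in for the 810 generated data series.
rows = list(range(810))
train_rows, test_rows = train_test_split(rows)
print(scaled, len(train_rows), len(test_rows))
```

One common design choice, not stated in the source, is to compute the minimum and maximum on the training set only and reuse them for the test set, so that no information from the test data leaks into training.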

Convolutional neural networks (CNN)

In the past decade, convolutional neural networks have been responsible for breakthroughs in computer vision and image processing 60,61,62, obtaining state-of-the-art results on a range of benchmark and real-world tasks. More recently, one-dimensional CNNs have shown great promise in processing structured linguistic data in tasks such as machine translation 63,64 and document classification 65,66. In 2018, Bai et al. 67 indicated that, for many sequence modeling tasks, 1D-CNNs using current best practices such as dilated convolution often perform better than recurrent neural network architectures.

A convolutional neural network is a type of feedforward neural network that consists of multiple convolution stages that perform the task of feature extraction and a single output stage that combines the extracted high-level features to predict the desired output 68. Figure 7 indicates a sample of the 1D-CNN architecture for the forecasting model.

In this study, the network comprised one 1D convolution layer, one flatten layer, two dropout layers with a rate of 0.2, and two fully connected layers, as listed in Table 3. The exponential linear unit (ELU) is applied as the activation function in the convolution and fully connected layers.
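To make the layer sequence concrete, the sketch below runs one forward pass through a convolution layer, a flatten step, and two fully connected layers in plain Python. It is only an illustration of the data flow: the number of filters, the kernel width, the hidden size, the random weights, the linear output unit, and the treatment of the six inputs as a single-channel sequence are all assumptions, since the Table 3 settings are not reproduced here. Dropout is omitted because it acts only during training and is the identity at inference.

```python
import math
import random


def elu(x, alpha=1.0):
    """Exponential linear unit activation."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def conv1d(sequence, kernels, biases):
    """'Valid' 1D convolution of a single-channel sequence, followed by ELU."""
    maps = []
    for kernel, bias in zip(kernels, biases):
        width = len(kernel)
        maps.append([
            elu(sum(kernel[j] * sequence[i + j] for j in range(width)) + bias)
            for i in range(len(sequence) - width + 1)
        ])
    return maps


def flatten(maps):
    """Concatenate all feature maps into one vector."""
    return [value for fmap in maps for value in fmap]


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer; one weight row per output unit."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def init_params(n_inputs=6, n_filters=4, kernel_width=2, n_hidden=8, seed=0):
    """Random illustrative weights (hypothetical sizes, not the trained model)."""
    rng = random.Random(seed)
    n_flat = n_filters * (n_inputs - kernel_width + 1)
    return {
        "conv_k": [[rng.uniform(-0.5, 0.5) for _ in range(kernel_width)] for _ in range(n_filters)],
        "conv_b": [0.0] * n_filters,
        "fc1_w": [[rng.uniform(-0.5, 0.5) for _ in range(n_flat)] for _ in range(n_hidden)],
        "fc1_b": [0.0] * n_hidden,
        "fc2_w": [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]],
        "fc2_b": [0.0],
    }


def forward(sequence, params):
    """Conv1D -> flatten -> dense (ELU) -> dense (linear output, assumed)."""
    hidden = flatten(conv1d(sequence, params["conv_k"], params["conv_b"]))
    hidden = dense(hidden, params["fc1_w"], params["fc1_b"], activation=elu)
    return dense(hidden, params["fc2_w"], params["fc2_b"])[0]


params = init_params()
sample = [0.2, 0.5, 0.1, 0.4, 0.3, 0.6]  # six normalized inputs (hypothetical)
prediction = forward(sample, params)
print(prediction)
```

With untrained random weights the output is meaningless as a permeability value; the point is only how a length-6 input becomes 4 feature maps of length 5, a flattened vector of 20 values, and finally a single regression output.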

Deep jointly informed neural networks (DJINN)

The DJINN algorithm determines the appropriate deep neural network architecture and initializes the weights using the dependency structure of the decision tree trained on the data. The algorithm can be divided into three steps: building a set of decision trees, mapping the tree to a neural network, and fine-tuning the neural network through backpropagation 69.

Decision tree construction

The first step of the DJINN algorithm is to build a model based on a decision tree. This can be a single decision tree generating one neural network or an ensemble of trees, such as random forests70, which will create a set of neural networks. The depth of the tree is often limited to avoid creating neural networks that are too large; maximum tree depth is a hyper-parameter that must be tuned for each data set69.

Mapping decision trees to deep neural networks

The DJINN algorithm selects a deep neural network architecture and a set of initial weights based on the structure of the decision tree. The mapping is not intended to reproduce the decision tree; instead, it uses the decision paths as a guide for the network architecture and weight initialization. Neural networks are initialized layer by layer, whereas decision trees are typically stored by decision path. A path starts at the top branch of the tree and follows each decision to the left and then to the right until it reaches a leaf (prediction). Because of the way trees are stored, it is difficult to navigate a tree by depth, but easy to traverse it recursively. Mapping from a tree to a neural network is easiest if the structure of the tree is known before the neural network weights are initialized. Therefore, the decision paths are traversed twice: first to determine the structure and then to initialize the weights 69.
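The two recursive traversals can be illustrated with a toy tree. The sketch below is not the DJINN implementation: the nested-dict tree format, the helper names, and in particular the rule used to turn branch counts into layer widths are simplifying assumptions made for illustration. The first pass counts branch nodes at each depth to fix the structure; the second pass collects the (depth, feature) pairs that a weight initialization would need.

```python
def count_branches_by_depth(node, depth=0, counts=None):
    """First pass: recursively count branch (split) nodes at each tree depth."""
    if counts is None:
        counts = {}
    if "feature" in node:  # branch node; leaves carry only a "value"
        counts[depth] = counts.get(depth, 0) + 1
        count_branches_by_depth(node["left"], depth + 1, counts)
        count_branches_by_depth(node["right"], depth + 1, counts)
    return counts


def collect_splits(node, depth=0, splits=None):
    """Second pass: record (depth, feature index) for every branch, left first."""
    if splits is None:
        splits = []
    if "feature" in node:
        splits.append((depth, node["feature"]))
        collect_splits(node["left"], depth + 1, splits)
        collect_splits(node["right"], depth + 1, splits)
    return splits


def layer_widths(n_inputs, n_outputs, counts):
    """Assumed rule: each hidden layer keeps the previous layer's neurons
    and adds one neuron per branch found at the corresponding depth."""
    widths = [n_inputs]
    for depth in sorted(counts):
        widths.append(widths[-1] + counts[depth])
    widths.append(n_outputs)
    return widths


# Hypothetical tree over six inputs; feature 5 stands for the mud loss rate.
tree = {
    "feature": 5,
    "left": {
        "feature": 3,
        "left": {"value": 50.0},
        "right": {"feature": 0, "left": {"value": 120.0}, "right": {"value": 200.0}},
    },
    "right": {"value": 600.0},
}

counts = count_branches_by_depth(tree)
splits = collect_splits(tree)
widths = layer_widths(n_inputs=6, n_outputs=1, counts=counts)
print(counts, splits, widths)
```

Separating the two passes mirrors the reasoning in the text: the layer sizes must be known before any weight matrix can be allocated, so structure is determined first and the split information is used afterwards.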

Optimizing the neural networks

As soon as the tree is mapped to an initialized neural network, the weights are tuned using backpropagation. The deep neural network is trained with Google’s deep learning software TensorFlow. The activation function used in each hidden layer is the rectified linear unit, which generally works well in deep neural networks71,72 and can retain the values of neurons in previous hidden layers. The Adam optimizer73 is used to minimize the cost function (mean squared error (MSE) for regression, cross-entropy with logits for classification)74.
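For readers unfamiliar with Adam, the sketch below applies the standard Adam update rule to minimize an MSE cost for a two-parameter linear model in plain Python. It stands in for the TensorFlow training loop only conceptually: the model, the data, the learning rate, and the number of steps are assumptions chosen so that the example is small and self-contained.

```python
import math


def adam_fit_line(xs, ys, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=3000):
    """Fit y = w * x + b by minimizing MSE with the Adam update rule."""
    params = [0.0, 0.0]  # [w, b]
    m = [0.0, 0.0]       # first-moment (mean) estimates of the gradient
    v = [0.0, 0.0]       # second-moment (uncentered variance) estimates
    n = len(xs)
    for t in range(1, steps + 1):
        residuals = [params[0] * x + params[1] - y for x, y in zip(xs, ys)]
        grads = [
            2.0 / n * sum(r * x for r, x in zip(residuals, xs)),  # dMSE/dw
            2.0 / n * sum(residuals),                             # dMSE/db
        ]
        for i in range(2):
            m[i] = beta1 * m[i] + (1.0 - beta1) * grads[i]
            v[i] = beta2 * v[i] + (1.0 - beta2) * grads[i] ** 2
            m_hat = m[i] / (1.0 - beta1 ** t)  # bias-corrected moments
            v_hat = v[i] / (1.0 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    mse = sum((params[0] * x + params[1] - y) ** 2 for x, y in zip(xs, ys)) / n
    return params[0], params[1], mse


# Hypothetical noise-free data generated from y = 2x + 1.
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [1.0, 1.5, 2.0, 2.5, 3.0]
w, b, mse = adam_fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, mse = {mse:.2e}")
```

In a deep network the same update is applied to every weight, with the gradients supplied by backpropagation instead of the closed-form expressions used here.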

Model performance evaluation

It is necessary to recognize the criteria associated with evaluating model performance. In this work, root mean squared error, mean absolute error, mean absolute percentage error, R-squared, and relative error were used as statistical indicators to evaluate the performance of the models.

Root mean squared error (RMSE)

The root mean squared error measures how well the network output matches the desired output; smaller RMSE values indicate better performance. It is defined as follows 75:

\[RMSE=\sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({x}_{m,i}-{x}_{p,i}\right)}^{2}}\]

Mean absolute error (MAE)

The mean absolute error is the average of the absolute differences between the predicted and actual values. It is appropriate when the errors follow a uniform distribution. Furthermore, MAE is the most natural and accurate measure of the average level of error 58.

\[MAE=\frac{1}{n}\sum_{i=1}^{n}\left|{x}_{m,i}-{x}_{p,i}\right|\]

Mean absolute percentage error (MAPE)

The mean absolute percentage error is calculated by dividing the absolute error of each observation by the corresponding observed value and then averaging these percentages. This approach is useful when the scale of the predicted variable matters in assessing the accuracy of the prediction 76,77. MAPE indicates the size of the forecast error relative to the actual value.

\[MAPE=\frac{100}{n}\sum_{i=1}^{n}\left|\frac{{x}_{m,i}-{x}_{p,i}}{{x}_{m,i}}\right|\]

R-squared (R2)

An important index of the quality of a regression model is \({R}^{2}\), which typically ranges from 0 to 1. \({R}^{2}\) is defined as follows58:

\[{R}^{2}=1-\frac{\sum_{i=1}^{n}{\left({x}_{m,i}-{x}_{p,i}\right)}^{2}}{\sum_{i=1}^{n}{\left({x}_{m,i}-{\overline{x}}_{m}\right)}^{2}}\]

where n represents the number of observations, \({x}_{m}\) and \({x}_{p}\) denote the measured and predicted parameters, respectively, and \({\overline{x}}_{m}\) signifies the average of the measured parameters. The same notation applies to the RMSE, MAE, and MAPE definitions.

Relative error (RE)

The relative error is the ratio of the difference between the predicted and measured values to the measured value. If \({x}_{m}\) is the measured value of a quantity and \({x}_{p}\) is its predicted value, the relative error is calculated as follows78:

\[RE=\frac{{x}_{p}-{x}_{m}}{{x}_{m}}\]
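The five indicators described in this section can be computed as in the following plain-Python sketch. The function names and the toy permeability values are hypothetical, and the sign convention of the relative error (predicted minus measured, divided by measured) is an assumption based on the verbal definition.

```python
import math


def rmse(measured, predicted):
    """Root mean squared error."""
    n = len(measured)
    return math.sqrt(sum((m - p) ** 2 for m, p in zip(measured, predicted)) / n)


def mae(measured, predicted):
    """Mean absolute error."""
    n = len(measured)
    return sum(abs(m - p) for m, p in zip(measured, predicted)) / n


def mape(measured, predicted):
    """Mean absolute percentage error, in percent (measured values must be non-zero)."""
    n = len(measured)
    return 100.0 / n * sum(abs((m - p) / m) for m, p in zip(measured, predicted))


def r_squared(measured, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_m = sum(measured) / len(measured)
    ss_res = sum((m - p) ** 2 for m, p in zip(measured, predicted))
    ss_tot = sum((m - mean_m) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


def relative_error(measured_value, predicted_value):
    """Relative error of one prediction (sign convention assumed)."""
    return (predicted_value - measured_value) / measured_value


# Hypothetical permeability values in md.
measured = [100.0, 200.0, 400.0, 800.0]
predicted = [110.0, 190.0, 420.0, 760.0]

print("RMSE:", rmse(measured, predicted))
print("MAE :", mae(measured, predicted))
print("MAPE:", mape(measured, predicted))
print("R2  :", r_squared(measured, predicted))
print("RE  :", [relative_error(m, p) for m, p in zip(measured, predicted)])
```

Because the relative error divides by the measured value, the same absolute error weighs more heavily on small permeability values than on large ones.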

Results and discussion

Based on the methods described above, the structural parameters of the CNN and DJINN models for predicting formation permeability were determined, and the models were trained and tested. Measured versus predicted values of permeability (md) for the training and testing data are displayed as cross plots in Fig. 8 and Fig. 9. R2 is a measure of forecast accuracy: it represents the proportion of the variance in the dependent variable that can be explained by the selected independent variables. If R2 = 1, the permeability of the formation can be predicted without error from the selected independent variables.

As can be seen from Table 4, the 1D-CNN prediction model has high accuracy on both training and test data (for training data: R2 = 0.968, RMSE = 50.78, MAE = 37.50, MAPE = 16.39; for test data: R2 = 0.962, RMSE = 58.17, MAE = 42.95, MAPE = 11.29). As shown in Table 5, the DJINN prediction model also has high accuracy on both training and test data (for training data: R2 = 0.973, RMSE = 46.15, MAE = 34.34, MAPE = 9.57; for test data: R2 = 0.970, RMSE = 51.39, MAE = 39.56, MAPE = 13.53).

Figure 10 and Fig. 11 show the relative error for the 1D-CNN and DJINN models. According to these figures, the accuracy for data with low values is lower than the accuracy for data with high values. Therefore, these models are better suited to predicting data with high values.

Figure 12 compares the computational error on training and test data for the two algorithms. It indicates that the DJINN model achieves lower RMSE and MAE and higher R2 than the 1D-CNN model on both training and test data; for MAPE, the values reported in Tables 4 and 5 favor DJINN on the training data only. Overall, DJINN is the better algorithm for predicting formation permeability.

Table 6 compares the results of this study with those of recent permeability estimation studies. While most studies have used well log data, seismic data, rock imaging data, or core data to estimate formation permeability with analytical, statistical, computational, and artificial intelligence tools, this study uses deep learning algorithms to estimate formation permeability from mud loss data.

Conclusions

Permeability is a key parameter in reservoir characterization. Various methods exist to evaluate a formation and estimate its permeability, but in some cases the evaluation is not performed or is not performed correctly.

This study estimated formation permeability using drilling fluid data and two deep learning algorithms. Drilling data including depth, formation type, fluid density, fluid viscosity, formation thickness, and mud loss rate were generated with reservoir simulator software under conditions similar to the real world and were used to train two deep learning algorithms, 1D-CNN and DJINN.

The results show that DJINN (R2 equals 0.973 on training data and 0.970 on test data) is a more accurate model than 1D-CNN (R2 equals 0.968 on training data and 0.962 on test data) in modeling this problem. Therefore, this study presents a novel method that uses mud loss data to estimate formation permeability accurately with deep learning algorithms (Supplementary table S1).

Data availability

"The authors confirm that the data supporting the findings of this study are available within the article and its supplementary materials."

Change history

26 May 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-03476-9

References

Dake, L. P. Fundamentals of reservoir engineering (Elsevier, 1983).

Egbogah, E., White, L. & Mirkin, M. Steam stimulated enhanced oil recovery. Paper SPI 2400S, retrieved from www. Rocando. com (2003).

Lake, L. W., Johns, R., Rossen, B. & Pope, G. A. Fundamentals of enhanced oil recovery (Society of Petroleum Engineers Richardson, 2014).

Zhao, Y., Wang, C., Zhang, Y. & Liu, Q. Experimental study of adsorption effects on shale permeability. Nat. Resour. Res. 28, 1575–1586 (2019).

Hussain, M. et al. Reservoir characterization of basal sand zone of lower Goru Formation by petrophysical studies of geophysical logs. J. Geol. Soc. India 89, 331–338 (2017).

Ahmed, N., Kausar, T., Khalid, P. & Akram, S. Assessment of reservoir rock properties from rock physics modeling and petrophysical analysis of borehole logging data to lessen uncertainty in formation characterization in Ratana Gas Field, northern Potwar, Pakistan. J. Geol. Soc. India 91, 736–742 (2018).

Anifowose, F. & Abdulraheem, A. Fuzzy logic-driven and SVM-driven hybrid computational intelligence models applied to oil and gas reservoir characterization. J. Natural Gas Sci. Eng. 3, 505–517 (2011).

Sircar, A., Yadav, K., Rayavarapu, K., Bist, N. & Oza, H. Application of machine learning and artificial intelligence in oil and gas industry. Pet. Res. 6, 379–391 (2021).

Mohaghegh, S., Arefi, R., Ameri, S., Aminiand, K. & Nutter, R. Petroleum reservoir characterization with the aid of artificial neural networks. J. Petrol. Sci. Eng. 16, 263–274 (1996).

Anifowose, F., Adeniye, S. & Abdulraheem, A. Recent advances in the application of computational intelligence techniques in oil and gas reservoir characterisation: a comparative study. J. Exp. Theor. Artif. Intell. 26, 551–570 (2014).

Noshi, C. I. & Schubert, J. J. The role of machine learning in drilling operations; a review. In SPE/AAPG Eastern Regional Meeting (OnePetro, 2018).

Purbey, R. et al. Machine learning and data mining assisted petroleum reservoir engineering: a comprehensive review. Int. J. Oil, Gas Coal Technol. 30, 359–387 (2022).

Graczyk, K. M. & Matyka, M. Predicting porosity, permeability, and tortuosity of porous media from images by deep learning. Sci. Rep. 10, 21488 (2020).

Lv, Q. et al. Modelling minimum miscibility pressure of CO2-crude oil systems using deep learning, tree-based, and thermodynamic models: Application to CO2 sequestration and enhanced oil recovery. Separation Purification Technol. https://doi.org/10.1016/j.seppur.2022.123086 (2023).

Hui, G., Chen, S., He, Y., Wang, H. & Gu, F. Machine learning-based production forecast for shale gas in unconventional reservoirs via integration of geological and operational factors. J. Nat. Gas Sci. Eng. 94, 104045 (2021).

Liu, X., Ge, Q., Chen, X., Li, J. & Chen, Y. Extreme learning machine for multivariate reservoir characterization. J. Petrol. Sci. Eng. 205, 108869 (2021).

Larestani, A., Mousavi, S. P., Hadavimoghaddam, F. & Hemmati-Sarapardeh, A. Predicting formation damage of oil fields due to mineral scaling during water-flooding operations: Gradient boosting decision tree and cascade-forward back-propagation network. J. Petrol. Sci. Eng. 208, 109315 (2022).

Bai, Y., Berezovsky, V. & Popov, V. Digital core 3D reconstruction based on micro-CT images via a deep learning method. In 2020 International Conference on High Performance Big Data and Intelligent Systems (HPBD&IS) (IEEE, 2020).

Najafi, A. et al. Upscaling permeability anisotropy in digital sandstones using convolutional neural networks. J. Nat. Gas Sci. Eng. 96, 104263 (2021).

Telvari, S., Sayyafzadeh, M., Siavashi, J. & Sharifi, M. Prediction of two-phase flow properties for digital sandstones using 3D convolutional neural networks. Adv. Water Resour. 176, 104442 (2023).

Xue, L. et al. An automated data-driven pressure transient analysis of water-drive gas reservoir through the coupled machine learning and ensemble Kalman filter method. J. Petrol. Sci. Eng. 208, 109492 (2022).

Khazali, N. & Sharifi, M. New approach for interpreting pressure and flow rate data from permanent downhole gauges, least square support vector machine approach. J. Petrol. Sci. Eng. 180, 62–77 (2019).

Wang, H. et al. A novel shale gas production prediction model based on machine learning and its application in optimization of multistage fractured horizontal wells. Front. Earth Sci. https://doi.org/10.3389/feart.2021.726537 (2021).

Qiao, L., Wang, H., Lu, S., Liu, Y. & He, T. Novel self-adaptive shale gas production proxy model and its practical application. ACS Omega 7, 8294–8305 (2022).

Wu, P.-Y., Jain, V., Kulkarni, M. S. & Abubakar, A. Machine learning-based method for automated well-log processing and interpretation. In 2018 SEG International Exposition and Annual Meeting (OnePetro, 2018).

Amiri-Ramsheh, B., Zabihi, R. & Hemmati-Sarapardeh, A. Modeling wax deposition of crude oils using cascade forward and generalized regression neural networks Application to crude oil production. Geoenergy Sci. Eng. https://doi.org/10.1016/j.geoen.2023.211613 (2023).

Yousefzadeh, R. & Ahmadi, M. Improved history matching of channelized reservoirs using a novel deep learning-based parametrization method. Geoenergy Sci. Eng. 229, 212113 (2023).

Yousefzadeh, R. & Ahmadi, M. Fast marching method assisted permeability upscaling using a hybrid deep learning method coupled with particle swarm optimization. Geoenergy Sci. Eng. 230, 212211 (2023).

Otchere, D. A., Ganat, T. O. A., Gholami, R. & Ridha, S. Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: Comparative analysis of ANN and SVM models. J. Petrol. Sci. Eng. 200, 108182 (2021).

P. Wong, F. Aminzadeh, M. Nikravesh, Soft computing for reservoir characterization and modeling, Physica, 2013.

Ouenes, A. Practical application of fuzzy logic and neural networks to fractured reservoir characterization. Comput. Geosci. 26, 953–962 (2000).

Nikravesh, M., Zadeh, L. A. & Aminzadeh, F. Soft computing and intelligent data analysis in oil exploration (Elsevier, 2003).

Rezaee, M. R., Kadkhodaie-Ilkhchi, A. & Alizadeh, P. M. Intelligent approaches for the synthesis of petrophysical logs. J. Geophys. Eng. 5, 12–26 (2008).

Sander, R., Pan, Z. & Connell, L. D. Laboratory measurement of low permeability unconventional gas reservoir rocks: A review of experimental methods. J. Nat. Gas Sci. Eng. 37, 248–279 (2017).

S. Mohaghegh, B. Balan, S. Ameri, State-of-the-art in permeability determination from well log data: Part 2-verifiable, accurate permeability predictions, the touch-stone of all models, in SPE Eastern Regional Meeting, OnePetro, 1995.

Chehrazi, A. & Rezaee, R. A systematic method for permeability prediction, a Petro-Facies approach. J. Petrol. Sci. Eng. 82, 1–16 (2012).

Rezaee, R. & Ekundayo, J. Permeability prediction using machine learning methods for the CO2 injectivity of the Precipice Sandstone in Surat Basin, Australia. Energies 15, 2053 (2022).

Tembely, M., AlSumaiti, A. M. & Alameri, W. S. Machine and deep learning for estimating the permeability of complex carbonate rock from X-ray micro-computed tomography. Energy Rep. 7, 1460–1472 (2021).

Okon, A. N., Adewole, S. E. & Uguma, E. M. Artificial neural network model for reservoir petrophysical properties: porosity, permeability and water saturation prediction. Modeling Earth Syst. Environ. 7, 2373–2390 (2021).

Yasin, Q., Sohail, G. M., Ding, Y., Ismail, A. & Du, Q. Estimation of petrophysical parameters from seismic inversion by combining particle swarm optimization and multilayer linear calculator. Nat. Resour. Res. 29, 3291–3317 (2020).

Anifowose, F., Abdulraheem, A. & Al-Shuhail, A. A parametric study of machine learning techniques in petroleum reservoir permeability prediction by integrating seismic attributes and wireline data. J. Petrol. Sci. Eng. 176, 762–774 (2019).

Akande, K. O., Owolabi, T. O. & Olatunji, S. O. Investigating the effect of correlation-based feature selection on the performance of support vector machines in reservoir characterization. J. Nat. Gas Sci. Eng. 22, 515–522 (2015).

Bruce, A. G. et al. A state-of-the-art review of neural networks for permeability prediction. The APPEA Journal 40, 341–354 (2000).

Elkatatny, S., Mahmoud, M., Tariq, Z. & Abdulraheem, A. New insights into the prediction of heterogeneous carbonate reservoir permeability from well logs using artificial intelligence network. Neural Comput. Appl. 30, 2673–2683 (2018).

Al Khalifah, H., Glover, P. & Lorinczi, P. Permeability prediction and diagenesis in tight carbonates using machine learning techniques. Marine Pet. Geol. 112, 104096 (2020).

T. Nayberg, B. Petty, Laboratory study of lost circulation materials for use in oil-base drilling muds, in SPE Deep Drilling and Production Symposium, OnePetro, 1986.

S. Mirabbasi, M. Ameri, F. Biglari, A. Shirzadi, Geomechanical Study on Strengthening a Wellbore with Multiple Natural Fractures: A Poroelastic Numerical Simulation, in 82nd EAGE Annual Conference & Exhibition, European Association of Geoscientists & Engineers, 2021.

Moore, P. Drilling Practices Manual 2nd edition (PennWell Books, 1986).

Mirabbasi, S. M., Ameri, M. J., Biglari, F. R. & Shirzadi, A. Thermo-poroelastic wellbore strengthening modeling: An analytical approach based on fracture mechanics. J. Petrol. Sci. Eng. 195, 107492 (2020).

Osisanya, S. Course notes on drilling and production laboratory (University of Oklahoma, Oklahoma (Spring), 2002).

M. Alsaba, R. Nygaard, G. Hareland, O. Contreras, Review of lost circulation materials and treatments with an updated classification, in AADE National Technical Conference and Exhibition, Houston, TX, 2014.

Fan, C. et al. Formation stages and evolution patterns of structural fractures in marine shale: case study of the Lower Silurian Longmaxi Formation in the Changning area of the southern Sichuan Basin, China. Energy Fuels 34, 9524–9539 (2020).

Fan, C. et al. Quantitative prediction and spatial analysis of structural fractures in deep shale gas reservoirs within complex structural zones: A case study of the Longmaxi Formation in the Luzhou area, southern Sichuan Basin, China. J. Asian Earth Sci. 263, 106025 (2024).

Li, J. et al. Shale pore characteristics and their impact on the gas-bearing properties of the Longmaxi Formation in the Luzhou area. Sci. Rep. 14, 16896 (2024).

B.O. Company, Various Daily Reports, Final Reports, and Tests for 2007, 2008, 2009, 2010, 2011 and 2012, in, Several Drilled Wells, Basra’s Oil Fields Basra, 2012.

Mirabbasi, S. M., Ameri, M. J., Alsaba, M., Karami, M. & Zargarbashi, A. The evolution of lost circulation prevention and mitigation based on wellbore strengthening theory: A review on experimental issues. J. Petrol. Sci. Eng. https://doi.org/10.1016/j.petrol.2022.110149 (2022).

Karami, M., Ameri, M. J., Mirabbasi, S. M. & Nasiri, A. Wellbore strengthening evaluation with core fracturing apparatus: An experimental and field test study based on preventive approach. J. Petrol. Sci. Eng. 208, 109276 (2022).

Willmott, C. J. Some comments on the evaluation of model performance. Bull. Am. Meteor. Soc. 63, 1309–1313 (1982).

Chollet, F. Deep Learning with Python (Manning Publications, 2017).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Commun. ACM 60, 84–90 (2017).

K. Simonyan, A. Zisserman, Very deep convolutional networks for large-scale image recognition, Preprint at https://arXiv.org/abs/1409.1556 (2014).

K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in Proceedings of the IEEE conference on computer vision and pattern recognition, 770–778, 2016.

J. Gehring, M. Auli, D. Grangier, D. Yarats, Y.N. Dauphin, Convolutional sequence to sequence learning, in International conference on machine learning, PMLR, 1243–1252, 2017.

J. Gehring, M. Auli, D. Grangier, Y.N. Dauphin, A convolutional encoder model for neural machine translation, Preprint at https://arXiv.org/abs/1611.02344 (2016).

A. Conneau, H. Schwenk, L. Barrault, Y. Lecun, Very deep convolutional networks for text classification, Preprint at https://arXiv.org/abs/1606.01781 (2016).

R. Johnson, T. Zhang, Deep pyramid convolutional neural networks for text categorization, in Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2017.

S. Bai, J.Z. Kolter, V. Koltun, An empirical evaluation of generic convolutional and recurrent networks for sequence modeling, Preprint at https://arXiv.org/abs/1803.01271 (2018).

Shenfield, A. & Howarth, M. A novel deep learning model for the detection and identification of rolling element-bearing faults. Sensors 20, 5112 (2020).

Humbird, K. D., Peterson, J. L. & McClarren, R. G. Deep neural network initialization with decision trees. IEEE Trans. Neural Networks Learning Syst. 30, 1286–1295 (2018).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

V. Nair, G.E. Hinton, Rectified linear units improve restricted boltzmann machines, in Proceedings of the 27th international conference on machine learning (ICML-10), 2010.

G.E. Dahl, T.N. Sainath, G.E. Hinton, Improving deep neural networks for LVCSR using rectified linear units and dropout, in 2013 IEEE international conference on acoustics, speech and signal processing, IEEE 2013.

D.P. Kingma, J. Ba, Adam: A method for stochastic optimization, Preprint at https://arXiv.org/abs/1412.6980 (2014).

De Boer, P.-T., Kroese, D. P., Mannor, S. & Rubinstein, R. Y. A tutorial on the cross-entropy method. Ann. Oper. Res. 134, 19–67 (2005).

Kuo, J.-T., Hsieh, M.-H., Lung, W.-S. & She, N. Using artificial neural network for reservoir eutrophication prediction. Ecol. Model. 200, 171–177 (2007).

McKenzie, J. Mean absolute percentage error and bias in economic forecasting. Econ. Lett. 113, 259–262 (2011).

De Myttenaere, A., Golden, B., Le Grand, B. & Rossi, F. Mean absolute percentage error for regression models. Neurocomputing 192, 38–48 (2016).

X.R. Li, Z. Zhao, Relative error measures for evaluation of estimation algorithms, in 2005 7th international conference on information fusion, IEEE, 2005.

Author information

Contributions

Yaser Abdollahfard authored the main manuscript text, produced Python code for result output and modeling, and created artificial data using reservoir simulation software. Dr. Seyyed Morteza Mirabbasi revised the manuscript technically as drilling engineer. Dr. Mohammad Ahmadi revised the manuscript as corresponding author. Dr. Abdolhosein Hemmati revised the manuscript as data scientist. Dr. Sefatollah Ashorian revised the manuscript as a final technical check reviser.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: The original version of this Article contained an error in the Author contributions section. As a result, it now reads: “Yaser Abdollahfard authored the main manuscript text, produced Python code for result output and modeling, and created artificial data using reservoir simulation software. Dr. Seyyed Morteza Mirabbasi revised the manuscript technically as drilling engineer. Dr. Mohammad Ahmadi revised the manuscript as corresponding author. Dr. Abdolhosein Hemmati revised the manuscript as data scientist. Dr. Sefatollah Ashorian revised the manuscript as a final technical check reviser.”

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abdollahfard, Y., Mirabbasi, S.M., Ahmadi, M. et al. Formation permeability estimation using mud loss data by deep learning. Sci Rep 15, 15251 (2025). https://doi.org/10.1038/s41598-025-94617-7

DOI: https://doi.org/10.1038/s41598-025-94617-7
