Abstract

Crowd monitoring in the context of smart libraries is of great significance for resource optimization and service improvement. However, existing models struggle to achieve real-time performance and accuracy in high-density, enclosed environments.This study addresses these limitations in the following way: Firstly, pedestrian flow videos from the side view angle were collected at different time periods on the second floor of the library. The videos were frame-extracted into images and manually annotated, resulting in a high-quality dataset consisting of 5350 images (3745 for the training set, 1070 for the test set, and 535 for the validation set). Then, a lightweight convolutional data augmentation module DensityNet was designed to enhance the model’s feature extraction ability in crowded and occluded scenes. Subsequently, model pruning and knowledge distillation techniques were combined to reduce model complexity and enhance real-time detection, making it suitable for the computing requirements of edge devices. Finally, a region detection algorithm was designed to better adapt to the demand for crowd monitoring in high-density and view limited dynamic environments by extending the detection trigger time, providing an accurate and contactless solution for crowd flow monitoring in smart libraries. The experimental results show that the improved YOLOv8n model has an average accuracy in high-density scenes? mAP@0.5 ?Reaching 0.99, close to 0.991 of the original model, while mAP@0.5 At 0.95, it reached 0.861, an increase of 0.014 compared to the model before pruning; In terms of real-time performance, the frame rate (FPS) has significantly increased to 254, the computational load has decreased to 4.0 GFLOP, and the parameter count has been reduced to 2.04M, meeting the real-time detection needs of peak pedestrian flow environments in smart libraries. The model proposed by this research institute can be integrated into an intelligent library management system to achieve efficient crowd monitoring and resource optimization.

Similar content being viewed by others

Introduction

The rapid development of smart libraries has driven the digital and intelligent transformation of library services1,2,3. Leveraging information technology and big data analytics, smart libraries can gain deeper insights into user needs, optimize resource allocation, and thereby improve operational efficiency and service quality4. In this process, data analysis is increasingly becoming a key tool for supporting management decisions. As one of the core metrics, visitor flow monitoring not only directly reflects the usage of resources and space utilization but also provides a scientific basis for service optimization and the formulation of management strategies5. Therefore, achieving accurate visitor flow detection is a crucial step towards intelligent management in smart libraries6.

Currently, most libraries rely on access control systems and video detection to collect crowd flow data7. However, although access control systems based on card swiping or facial recognition can provide more accurate entry and exit data, they are prone to congestion during peak hours and have lag in data export, making it difficult to meet real-time monitoring needs8. In contrast, video analysis technology has gradually become an effective complementary solution by detecting and tracking pedestrians in surveillance footage, providing more dynamic and real-time pedestrian flow data9,10. Video analysis methods rely on deep learning techniques, such as convolutional neural networks (CNN) and Transformer object detection models, and have made significant progress in recent years11. However, due to the spatial layout of the library, existing monitoring equipment often can only obtain video data from a single perspective. In densely populated and heavily obstructed environments, detection performance is still greatly affected12,13. Specifically, the targets in the side view exhibit varying degrees of deformation and occlusion, leading to a significant decrease in the accuracy of traditional pedestrian detection methods in this scene. At the same time, the interactive motion of dense crowds increases the difficulty of target tracking and counting. In addition, although mainstream object detection models such as Faster R-CNN and YOLO perform well in the field of object recognition, they are difficult to balance between high accuracy and real-time performance. Some lightweight improvement schemes, although improving detection speed, often struggle to maintain sufficient detection accuracy in high-density scenes. Therefore, achieving accurate and real-time monitoring of pedestrian flow in a high-density and limited viewing angle dynamic environment for smart libraries remains an important challenge in the current field.

To address this challenge, this paper proposes a visitor flow monitoring method based on an improved YOLOv8n model. The main contributions of this work can be summarized as follows:

-

1.

A dataset for high-density and occluded scenarios from a side view in a library setting is constructed, and a novel data collection approach is provided. The proposed method has been validated on this dataset, with the validation results demonstrating its superior performance.

-

2.

A lightweight Dense-Stream YOLOv8n model for high-density visitor flow monitoring is introduced. Experimental results show that this model outperforms existing mainstream models in this task, exhibiting higher real-time performance and accuracy.

-

3.

A highly adaptable benchmark area counting algorithm is designed for complex layouts in specific library settings, which effectively addresses occlusion issues under side views. Additionally, this algorithm demonstrates good scalability, making it applicable to other similar scenarios.

-

4.

New methods and technical support for big data monitoring and analysis in libraries are provided, contributing to enhanced data-driven management and service levels.

Related research

With the rapid development of smart libraries, achieving accurate and real-time visitor flow monitoring in high-density and perspective-limited dynamic environments has gradually become a key research direction14,15. However, most of the currently applied methods often struggle to balance accuracy and real-time performance when dealing with the high-density and perspective-limited dynamic environments of libraries16. To address these shortcomings, the application of deep learning techniques and lightweight models has increasingly become a central focus of research in recent years17.

Traditional counting methods

Traditional methods such as manual counting, sensor counting, and access control systems are still used for crowd monitoring in libraries, but these methods have significant limitations in practical applications. Table 1 summarizes the characteristics and drawbacks of these methods to provide a clearer illustration of their limitations in the context of smart libraries.

Early visitor flow monitoring primarily relied on manual counting, which, although simple and easy to implement, was inefficient and prone to significant errors, failing to meet the requirements for precision and efficiency in smart libraries18. Subsequently, sensor-based counting was gradually introduced, improving the level of automation to some extent. However, the accuracy of these systems could be easily affected by variations in individuals’ body sizes and movement speeds.

In recent years, access control systems based on card swiping and facial recognition have been widely adopted, enabling more accurate counting and supporting management19. Nevertheless, these systems tend to cause congestion during peak entry and exit times and typically rely on offline data export, resulting in poor real-time performance. Additionally, their monitoring accuracy can be influenced by the installation location and configuration, especially in high-density scenarios where it may be difficult to comprehensively capture all entry and exit data20.

Video analysis technology, through the real-time processing of camera footage, achieves contactless and continuous monitoring, demonstrating greater adaptability to various environments21. However, due to layout constraints in some libraries, cameras often can only capture images from a single side perspective, leading to occlusion issues in high-density and dynamic crowds, which in turn affects the accuracy of visitor flow monitoring. Particularly in high-density dynamic environments with limited perspectives, traditional video detection algorithms still struggle to achieve the required levels of recognition accuracy and processing efficiency22.

Crowded scene detection method under deep learning

In recent years, with the rise of Convolutional Neural Networks (CNNs), deep learning has made breakthrough progress in the field of object detection in crowded scenes. Early object detection methods were mainly based on single-stage and two-stage detectors. Among them, the YOLO series model proposed by Redmon et al.;achieved real-time detection by transforming the object detection problem into a single regression problem23; Ren et al. proposed the Faster R-CNN framework and introduced Region Proposal Network (RPN), which significantly improved the accuracy and efficiency of detection24. However, its accuracy in complex scenarios still has certain limitations25,26,27,28,29.

To improve detection performance, researchers have proposed a series of improvement methods: CenterNet proposed by Zhou et al. improves the detection accuracy of small targets by locating the center point of the target,avoids the instability of bounding box regression in traditional methods, and demonstrates stronger robustness30 ; Luo et al.introduced attention mechanism to enable the model to focus on the features of key regions, improving the detection ability of pedestrians in complex scenes31;Zhao et al. proposed the MS2ship dataset for object detection in drone imagery, providing high-quality training data for small object detection in maritime environments32. However, these methods have achieved good detection results in specific application scenarios, but there are still problems such as decreased detection accuracy and insufficient generalization ability when facing dense occlusion, dynamic changes, and multi-scale targets in complex environments.

With the increasing complexity of object detection tasks, researchers have proposed many advanced models that combine contextual information, multi-scale analysis, and other strategies, resulting in improved accuracy and speed of object detection. For example, Liang et al. proposed a method combining Swin Transformer (SwinT) with Faster R-CNN, which utilizes window attention mechanism to extract global contextual information and improve the robustness of pedestrian detection in highly occluded scenes33; Li et al. addressed the issue of occlusion in underwater fish detection and optimized YOLOv8 using RT-DETR structure to improve detection performance in complex scenes34. Although these methods demonstrate strong detection capabilities in crowded scenarios, they often rely on high computing resources and still need further optimization to adapt to their deployment on edge devices. In addition, the combination of 3D LiDAR and Internet of Things (IoT) technology used by Guefrachi et al. can provide richer environmental information, but the hardware cost is high and its applicability in indoor environments is still limited35; Meuser et al. focused on Edge AI and explored the potential of model training and reasoning on the edge side. Although they provided a new research direction for the combination of target detection and edge computing, they still faced challenges such as model architecture constraints, computing resource constraints and data privacy36.

Therefore, in intelligent environments such as smart libraries, smart agriculture, and transportation hubs, achieving high-density, side view real-time pedestrian flow monitoring still faces many challenges, especially in the case of limited computing resources37. How to improve the model’s lightweight and real-time performance while ensuring detection accuracy remains an urgent problem to be solved . To address this issue, an improved YOLOv8n model was proposed and its functionality was enhanced by integrating the DensityNet module, using model pruning and knowledge distillation techniques.

Model lightweighting

As deep learning models are applied across various scenarios, model lightweighting has become a critical direction for improving real-time detection efficiency. The design of lightweight convolutional modules enables deep learning models to perform more efficient crowd monitoring on resource-constrained edge devices38. Pruning techniques, as a common optimization approach, reduce model complexity by removing redundant parameters, thereby significantly enhancing computational efficiency39. Studies on applying pruning techniques in YOLO models have shown that pruning can effectively reduce computational overhead, making the model more suitable for deployment on embedded devices in high-density scenarios. Furthermore, combining knowledge distillation strategies, where a teacher model guides the training of a student model, can further improve the detection accuracy and robustness of lightweight models in high-density scenarios, offering superior performance in practical deployments40.

Data collection and preprocessing

Data collection

This study continuously collected crowd flow video data over multiple days and different time periods on the second floor of Nanchang University Library using a side-angle top-down shooting method. Based on the specific layout of the library’s entrances and exits, the camera equipment was installed at positions and angles that could clearly capture the dynamics of people entering and exiting (see Fig. 1), ensuring coverage of scene changes with varying crowd densities at different times. This collection process recorded human activity data in various dynamic environments, providing a rich and representative data foundation for subsequent model training.

Data preprocessing and partitioning

To further support model training, the collected video data underwent several preprocessing steps. First, video frame extraction was applied to the captured library crowd flow videos, extracting one frame every five seconds and saving it in “.jpg” format, converting the dynamic video into a sequence of static images. This allows the model to train on static images for object detection, more accurately capturing the dynamic details of individuals. Next, the extracted image set was filtered to remove frames with no people or redundant information, retaining only effective images with varying crowd densities to ensure the diversity and representativeness of the dataset. Finally, the selected images were manually annotated using the LabelImg tool, marking the positions and categories of individuals in each image, providing high-quality labeled data for model training. The high-quality dataset generated through this preprocessing workflow lays a solid foundation for subsequent model training and performance optimization.

After annotation, the obtained image dataset was divided into training, testing, and validation sets in a ratio of 7:2:1, resulting in 3745 images for the training set, 1070 images for the testing set, and 535 images for the validation set. Each dataset covers multiple time periods and different crowd densities to ensure that the model learns from a sufficient number of samples during training, capturing features under various conditions. Additionally, during validation and testing, the model’s generalization ability can be comprehensively evaluated, further enhancing the overall performance of the model.

Constructing Dense-Stream YOLOv8n model

YOLOv8n model

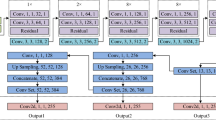

YOLO (You Only Look Once) is an efficient deep learning object detection model that simplifies detection into a regression problem, enabling rapid identification and tracking of various objects. YOLO not only excels in terms of accuracy and speed but also offers multiple functional features and variants to accommodate different scenario requirements41,42,43.Although YOLO has achieved significant success in various applications, it still requires further improvements to meet the resource constraints and real-time performance demands in high-density, occluded environments such as those found in library settings.To address these challenges, this paper introduces the Dense-Stream YOLOv8n model, which enhances the YOLOv8n model through model pruning and knowledge distillation. These modifications aim to balance detection accuracy and real-time performance, enabling efficient monitoring of visitor flow at library entrances and exits. The architecture of the proposed Dense-Stream YOLOv8n model is illustrated in Fig. 2.

Design DensityNet data augmentation module

To effectively address the occlusion issues caused by high-density crowds and side-angle data collection in the dynamic scenes of libraries, this study integrated a lightweight convolutional enhancement module, DensityNet, into the YOLOv8n model to improve its ability to extract features from pedestrian images. By comparing the original image (Fig. 3a) with the image processed by DensityNet (Fig. 3b), it is evident that after DensityNet processing, the model captures the contours and detailed features of pedestrians more accurately, making the key features of pedestrians clearer.

The core structure of the Convolutional Enhancement Module, DensityNet, includes convolutional layers, batch normalization layers, ReLU activation functions, and skip connections. Firstly, DensityNet uses a 3\(\times\)3 convolutional layer to extract local features, enhancing the model’s sensitivity to detailed variations. Secondly, the batch normalization layer is employed to standardize the feature distribution, reducing training instability caused by differences in input data. The standardized output is then transformed through a ReLU activation function, which introduces non-linearity and improves the model’s responsiveness to key features. To further preserve the original image information, DensityNet employs skip connections to perform a weighted addition of the convolutional output and the input image features. The expression for the skip connection is:

Here, \(y_{\text {conv}}\) represents the convolutional enhancement output feature, x is the input image feature, and the coefficient 0.05 is used to balance the proportion of convolutional features and the original image features. The final output \(y_{\text {out}}\), obtained after the skip connection, is converted into an image format, making the enhanced image features more recognizable and helping to improve the model’s real-time pedestrian detection performance in high-density scenarios.

Model pruning

To meet the real-time detection requirements in high-density, view-limited dynamic environments of smart libraries, this study optimized the YOLOv8n model through pruning to reduce computational complexity and enhance detection efficiency on device endpoints, meeting the real-time requirements during peak crowd flow periods. Pruning, as a method of compressing deep learning models, significantly reduces model computational costs and storage requirements by removing redundant or low-importance model parameters, enabling efficient inference on resource-constrained devices.

During the pruning process, first, the model undergoes sparsity regularization training to guide the weight distribution of the model towards sparsity, weakening the impact of non-important parameters, making subsequent pruning easier. Specifically, sparsity regularization is introduced to specific layers of the model (such as BatchNorm layers), adjusting the original loss function to:

Here, \(\lambda\) is the sparsity coefficient that controls the strength of the sparsity regularization term on the weights, and \(\sum \nolimits _i \left| w_i \right|\) is the sum of the absolute values of the weights to be pruned. This process gradually reduces the absolute values of low-importance weights, achieving the reduction of redundant parameters.

During the weight screening phase, the model further performs global pruning based on the sparsity distribution of the weights. Specifically, the weights of the entire model are sorted in ascending order by their absolute values. Let the model weights be \(| w_i|\). A pruning threshold \(\tau\) is determined globally based on the specified sparsity rate p which is the value at the position corresponding to the top \(p\%\) of the weights in the sorted list. The formula is expressed as:

Here, \(\text {Quantile}\left( \left\{ \left| w_i \right| \right\} , p \right)\) represents the percentile of the absolute values of the weights sorted at the proportion p. Ultimately, weights below this threshold are set to zero, achieving the pruning of redundant parameters. The formula is expressed as:

After the pruning strategy involving sparsity regularization and global screening, the model parameters are streamlined. Subsequent fine-tuning training will be conducted to recover the accuracy, ensuring that the compressed model maintains effective detection and real-time performance in the high-density, view-limited dynamic environment of smart libraries.

Knowledge distillation

To enhance the performance of the pruned model, this study adopts the knowledge distillation method by constructing a teacher model YOLOv8l and a student model YOLOv8n, to achieve knowledge transfer. The teacher model YOLOv8l has the complete original model architecture and parameters, capable of providing rich features and accurate predictions. In contrast, the student model YOLOv8n is a lightweight model that has undergone data augmentation and pruning optimization. The knowledge distillation process mainly includes two parts of loss: logits distillation and feature distillation, to guide the learning of the student model, making it as close as possible to the performance of YOLOv8l in the reduced scale.

First, logits distillation is based on the similarity of the output probability distributions between YOLOv8l and the improved YOLOv8n, using the Kullback-Leibler divergence to measure the difference between the two44. The formula is as follows:

Here, \(P_T\) and \(P_S\) represent the output probability distributions of the teacher model and the student model, respectively. By minimizing the logits distillation loss, the improved YOLOv8n can better mimic the prediction behavior of the teacher model.

Secondly, feature distillation focuses on aligning the intermediate feature layers of YOLOv8l and the improved YOLOv8n, calculating the difference between the two using mean squared error (MSE) to enable the student model to learn the rich feature representations from the teacher model. The feature distillation loss is defined as:

Here, \(F_T\) and \(F_S\) are the feature outputs of the teacher model and the student model at the ii-th layer, respectively. Through feature distillation, the improved YOLOv8n can effectively acquire the deep-level information from YOLOv8l, enhancing its feature representation capability during inference.

The overall loss function of the distillation process is the weighted sum of the logits distillation loss and the feature distillation loss, which is given by:

Here, \(\alpha\) and \(\beta\) are the weighting factors that balance the contributions of the logits and feature distillation losses.

Through this knowledge distillation process, the lightweight model YOLOv8n, after data augmentation and pruning optimization, can approach the accuracy and robustness of the teacher model YOLOv8l while maintaining a small scale and low computational complexity. This makes it effectively adaptable to the real-time detection needs in the high-density, view-limited dynamic environment of smart libraries.

Reference area counting algorithm

To meet the needs of personnel entry and exit judgment and precise counting in the high-density, view-limited dynamic environment of smart libraries, this study proposes a baseline region counting algorithm. By improving the traditional baseline detection method45 to baseline region detection, the counting accuracy in occluded and dense environments is enhanced, thereby improving the monitoring precision in dynamic scenes of personnel entry and exit, achieving more reliable crowd flow monitoring. The flowchart of this algorithm is shown in Fig. 4.

The algorithm first expands the baseline to a baseline region to provide a longer detection trigger time, thus better adapting to high-density, view-limited dynamic environments. The position of the baseline region is optimized by the ratio parameters a and b . Specifically, these parameters represent the relative positions of the left and right boundaries of the baseline region within the video frame, with values ranging from 0 to 1. The specific values of the parameters need to be adjusted according to the actual scene layout and the installation position of the camera equipment to ensure that the baseline region effectively covers areas that people must pass through. The positioning of the baseline region can be expressed by the following formula:

Here, W and H are the width and height of the video frame, respectively; a and b are the ratio parameters for the left and right boundaries of the baseline region.

During the object detection process, the algorithm uses the improved lightweight model YOLOv8n to obtain the center position of each detected object and records the position information in the track_history dictionary. By analyzing the trajectory data, the movement direction of each target can be determined, providing support for subsequent counting. When a target is detected entering from the left, i.e., the coordinates of the target in the previous frame satisfy the following condition relative to the boundary coordinates of the baseline region, it is marked as “in”:

When a target is detected entering from the right, i.e., the coordinates of the target in the previous frame satisfy the following condition relative to the boundary coordinates of the baseline region, it is marked as “out”:

The pseudocode for this process is shown in Table 2:

Analysis of results and evaluation of the models

Experimental environment

The hardware environment consists of Windows 11, a processor of 12th Gen Intel(R) Core(TM) i5-12400F @ 2.50 GHz, and a GPU of NVIDIA GeForce RTX 4060. The development environment is PyCharm Community Edition 2023.3.4, with the interpreter being Python 3.8.19. The libraries imported include Ultralytics 8.0.135, OpenCV 4.6.0.66, and Numpy 1.24.4, among others.The main parameters set during training in this study are shown in Table 3:

In the experiment, a learning rate annealing strategy was adopted, with an initial learning rate set at Lr0=0.001 and gradually decaying to Lrf=0.01 to prevent unstable convergence in the later stages of training. The early stop strategy is set to patience=30, which means that no increase was observed for 30 consecutive 0.9* mAP@0.5 +0.1* mAP@0.5 , the training will be terminated early. In addition, in terms of optimizer selection, SGD, which performs more stably in high-density object detection tasks, was chosen as the optimizer. Through the above optimization strategy and hyperparameter optimization strategy, Dense Stream YOLOv8n can maintain excellent detection accuracy while ensuring lightweight, ensuring the stability and generalization ability of the model in high-density occlusion scenes.

Ablation experiment

To verify the effectiveness of various optimization modules in improving the detection performance of the model, this experiment progressively introduces the DensityNet module, model compression (pruning), and knowledge distillation strategies on the basis of the YOLOv8n model, designing multiple ablation studies. The comparison models include the original YOLOv8n, YOLOv8n-DensityNet, YOLOv8n-DensityNet-compress, and Dense-Stream YOLOv8n, to systematically analyze the contribution of each optimization module to the model’s performance.The experimental results are shown in Table 4. From the data in the table, it can be observed that the various optimization strategies have had varying degrees of impact on the model’s detection accuracy, inference speed, and computational load.

Specifically, the original YOLOv8n model achieves mAP@0.5(IoU=0.5) and mAP@0.5:0.95(Average mAP of IoU=0.5 to 0.95) of 0.991 and 0.898, respectively, with a frame rate (FPS) of 189.5 and a computational load of 8.1 GFLOPs, and a parameter count of 3.01M. After introducing the lightweight convolution module DensityNet, the YOLOv8n-DensityNet model maintains an mAP@0.5 of 0.991, with a slight drop in mAP@0.5:0.95 to 0.896, but the FPS increases to 191.7, and the parameter count remains at 3.01M. This indicates that the DensityNet module enhances detection accuracy and preserves detailed features while having a limited impact on computational resources.

Additionally, it improves the convergence speed of model training.On this basis, the YOLOv8n-DensityNet model is pruned using compression strategies to obtain the YOLOv8n-DensityNet-compress model. After pruning, the model’s mAP@0.5 slightly decreases to 0.989, and mAP@0.5:0.95 drops to 0.847, but the frame rate (FPS) significantly increases to 253.4, the parameter count reduces to 2.04M, and the computational load (GFLOPs) decreases to 4.0. This result shows that although there is a slight decrease in mAP, the loss in accuracy is within an acceptable range, and there is a significant improvement in inference speed and resource efficiency.

Furthermore, by introducing knowledge distillation strategies to the YOLOv8n-DensityNet-compress model, the Dense-Stream YOLOv8n model is obtained. Experimental data show that this model achieves mAP@0.5 and mAP@0.5:0.95 of 0.99 and 0.861, respectively, with the FPS further increasing to 254.0, and the GFLOPs and parameter count remaining at 4.0 and 2.04M. The introduction of knowledge distillation strategies ensures low computational cost while further enhancing the model’s generalization ability, significantly optimizing its detection performance in high-density scenarios.

To more intuitively demonstrate the detection effects of pruning and knowledge distillation strategies in high-density occlusion scenarios, Fig. 5b,c show the detection result heatmaps of the original YOLOv8n model and the optimized Dense-Stream YOLOv8n model in high-density crowds. As shown in Fig. 5c, the optimized model can more accurately focus on the key parts of side-by-side individuals, improving detection performance in occluded situations. In contrast, the original model performs less effectively in handling similar occlusion scenarios (see Fig. 5b). Figure 5a shows the original image of the actual scene for comparative analysis. The experimental results further validate that the Dense-Stream YOLOv8n model, with the support of pruning and knowledge distillation strategies, can achieve superior detection performance in high-density, occluded environments.

Additionally, to comprehensively analyze the effects of various optimization strategies, this experiment also plots precision-recall curves (Fig. 6a) and loss function curves (box_loss, dfl_loss, cls_loss) (Fig. 6b) to assist in verifying the improvements in detection performance brought by each optimization module. Additionally, to comprehensively analyze the effects of various optimization strategies, this experiment also plots precision-recall curves (Fig. 6a) and loss function curves (box_loss, dfl_loss, cls_loss) (Fig. 6b) to assist in verifying the improvements in detection performance brought by each optimization module. Additionally, to comprehensively analyze the effects of various optimization strategies, this experiment also plots precision-recall curves (Fig. 6a) and loss function curves (box_loss, dfl_loss, cls_loss) (Fig. 6b) to assist in verifying the improvements in detection performance brought by each optimization module.

From the precision-recall curves (Fig. 6a), it can be observed that after introducing the lightweight convolution module DensityNet, YOLOv8n-DensityNet shows improvements in both detection speed and accuracy compared to the original YOLOv8n model. After incorporating the pruning compression strategy, the mAP@0.5 of YOLOv8n-DensityNet-compress further improves in high-density scenarios, indicating that the pruning operation effectively reduces redundant parameters, enhances inference speed, and makes the model suitable for real-time detection on edge devices. With the addition of the knowledge distillation strategy, Dense-Stream YOLOv8n performs optimally in high-density scenarios, further validating the critical role of distillation strategies in enhancing model generalization and robustness.

In terms of the loss functions (Fig. 6b), as each optimization strategy is progressively introduced, the box_loss, dfl_loss, and cls_loss in both the training and validation processes gradually decrease and stabilize around 200 epochs. This trend indicates that the model achieves good convergence under the optimization of each strategy. Among them, Dense-Stream YOLOv8n performs best in terms of box_loss and dfl_loss, further confirming that the introduction of distillation strategies allows the model to maintain low detection errors while improving detection accuracy.

At the same time, this experiment compared and analyzed the accuracy recall curves (see Fig. 7a,b) and F1 score curves (see Fig. 7c,d) of YOLOv8n and Dense Stream YOLOv8n during the training process to evaluate the impact of parameter reduction on model performance.

From Fig. 7a,b, it can be seen that compared to YOLOv8n, Dense Stream YOLOv8n only showed a decrease of 0.01 in the highest F1 score and 0.023 in the lowest F1 score with a reduction of about 50% in parameter quantity, indicating that the model still maintains high stability in balancing accuracy and recall. Meanwhile, from Fig. 7c,d, it can be observed that under the same training conditions, Dense Stream YOLOv8n exhibits mAP@0.5 Only a decrease of 0.002 further validates that the model can maintain excellent detection performance while reducing computational costs. These experimental results indicate that Dense Stream YOLOv8n can maintain detection accuracy close to YOLOv8n while significantly reducing parameter size, fully demonstrating the effectiveness and efficiency of its lightweight design.

In summary, through the improvements introduced by the DensityNet module, pruning compression strategy, and knowledge distillation strategy, the YOLOv8n model has achieved a good balance between accuracy, inference speed, and model complexity. Especially in high-density dynamic scenarios, the pruning and knowledge distillation strategies have significantly enhanced the model’s real-time performance and robustness, validating the effectiveness of the proposed optimization schemes.

Simulation

To verify the practical application effects of the improved YOLOv8n model and the baseline region counting algorithm in high-density, view-limited dynamic library environments, simulation experiments were designed and conducted to systematically evaluate the performance of the optimization strategies in this scenario.

This study randomly selected 10 densely populated video clips from peak hours and 10 non-densely populated video clips from off-peak hours from previously collected videos of the Nanchang University Library. To ensure the representativeness and rigor of the results, each video segment was trimmed to 1-2 minutes, retaining the segments with the most characteristic human flow, to fully reflect the typical human flow environment in actual applications. Such processing can more effectively test the detection accuracy and robustness of the model and algorithm under different human flow densities, providing more realistic and reliable sample data for the subsequent analysis of detection performance.

During the sample video detection process, the actual number of people entering and exiting, the number of people detected as entering and exiting, and the processing speed (FPS) were recorded to analyze the model’s detection accuracy and real-time performance under different human flow densities, Please refer to Supplementary Video 1 (detection effects after trimming at triple speed for the entire day) and Supplementary Video 2 (detection effects at normal speed for a specific time period). To further illustrate the model’s performance in dense and non-dense human flow scenarios, Fig. 8a,b show the real-time detection results in high-density and low-density scenarios, respectively.

To further analyze the consistency of the improved model in classification tasks, Fig. 9a,b show the confusion matrices of the original YOLOv8n model and the optimized Dense-Stream YOLOv8n model. It can be observed that both models exhibit similar classification performance on the “person” and “background” categories, achieving high classification accuracy. This indicates that the optimized model, while reducing computational complexity and improving inference speed, can maintain classification performance comparable to the original model, ensuring detection stability and accuracy in high-density environments.

At the same time, in order to evaluate the generalization performance of the model in different scenarios, the detection ability of the original YOLOv8n and the optimized Dense Stream YOLOv8n was compared and tested on two datasets, ShanghaiTech Crowd Counting Dataset (Fig.10a,b) and AudioVisual (Fig.10c,d).

From Fig.10, it can be seen that the lightweight Dense Stream YOLOv8n performs better in pedestrian detection in side view scenes in certain situations. This phenomenon can be attributed to the fact that the model learned the feature extraction ability of the YOLOv8L model with high accuracy during the knowledge distillation process, allowing Dense Stream YOLOv8n to maintain high detection accuracy even with reduced parameter count. This result further validates the generalization ability of Dense Stream YOLOv8n on different datasets, indicating that its lightweight design has strong adaptability while ensuring detection accuracy, and can be extended to a wider range of intelligent monitoring scenarios.

Additionally, this study recorded the processing speeds in both CPU and NVIDIA RTX 4060 GPU environments to evaluate the real-time performance of the model. Table 5 summarizes the detection results for the two types of videos, including key metrics such as the number of people entering and exiting, detection accuracy, and processing speed.

The experimental results show that the model exhibits extremely high detection accuracy in both high-density and low-density human flow environments, reaching 99.41% and 99.88% respectively, effectively verifying its adaptability in complex human flow environments. In terms of processing speed, the Fps on NVIDIA RTX 4060 GPU reached around 34 FPS, fully meeting the real-time monitoring needs of high-density dynamic scenes in smart libraries. Although the CPU processing speed is relatively low (5.14 FPS for dense scenes and 5.53 FPS for non dense scenes), recording its frame rate is to demonstrate the applicability of the model in resource constrained environments; For application scenarios that do not require strict real-time performance, CPU processing still has certain reference value. Despite occasional extreme occlusion and environmental complexity challenges, the model still exhibits good stability overall in practical applications.

Comparative experiment

To further validate the superior performance of Dense-Stream YOLOv8n in detecting high-density and side-view visitor flows in dynamic smart library environments, this study selected several commonly used object detection models as comparative benchmarks. These include DDOD (Disentangle Your Dense Object Detector)46, Faster R-CNN (Fast Region-based Convolutional Network), SSD (Single Shot Multibox Detector), YOLOv11n, and the original YOLOv8n model. The models were evaluated based on metrics such as mean Average Precision (mAP@0.5 and mAP@0.95), inference frames per second (FPS), computational complexity (GFLOPs), and the number of parameters. The experimental results are summarized in Table 6.

Based on the experimental results in Table 6, Dense-Stream YOLOv8n demonstrates superior performance across multiple key metrics. In terms of mean Average Precision (mAP), it achieves an mAP@0.5 of 0.990, which is nearly on par with YOLOv8n and SSD at 0.991, and slightly higher than other models such as Faster R-CNN (0.981), DDOD (0.984), and YOLOv11n (0.859). This indicates its competitive accuracy. Additionally, for the mAP@0.5:0.95 metric, Dense-Stream YOLOv8n reaches 0.861, which, although lower than SSD (0.896) and YOLOv8n (0.898), remains within an acceptable range. Compared to DDOD (0.846), Faster R-CNN (0.835), and YOLOv11n (0.606), its accuracy is improved by 1.8%, 3.1%, and 29.61%, respectively. This suggests that Dense-Stream YOLOv8n has good adaptability across various density scenarios, making it suitable for real-world visitor flow monitoring tasks in smart libraries.

In terms of inference speed, Dense-Stream YOLOv8n achieves a frame rate (FPS) of 254.0, significantly higher than the other benchmark models. Specifically, DDOD and Faster R-CNN have FPS rates of 22.5 and 18.3, respectively, while SSD has an FPS of 39.7. In comparison, the original YOLOv8n and YOLOv11n achieve 189.5 FPS and 243.9 FPS, respectively, which, although good, are still lower than Dense-Stream YOLOv8n. This significant improvement in performance clearly indicates that Dense-Stream YOLOv8n has a substantial advantage in real-time monitoring capabilities, effectively meeting the demands of high-density visitor flow environments in smart libraries.

Furthermore, in terms of computational complexity (GFLOPs) and the number of parameters, Dense-Stream YOLOv8n has values of 4.0 GFLOPs and 2.04M parameters, respectively. Compared to other models such as Faster R-CNN (203 GFLOPs, 43.61M parameters) and SSD (30.49 GFLOPs, 23.74M parameters), it exhibits a lightweight characteristic, effectively reducing resource consumption. This makes it well-suited for deployment on edge devices, which is crucial for real-time monitoring in high-density visitor flow environments.

In summary, through comparisons with other models, Dense-Stream YOLOv8n demonstrates superior performance in high-density, side-view visitor flow monitoring in smart libraries. It provides strong support for achieving accurate and real-time visitor flow monitoring. The model’s balanced performance in accuracy, real-time capability, and resource efficiency makes it an excellent choice for practical applications in smart library settings.

Discussion

Performance advantages and comparative analysis of dense-stream YOLOv8n model

The proposed Dense-Stream YOLOv8n model in this study demonstrates significant performance advantages in object detection, notably outperforming existing modified YOLOv8 models. For instance, the FPS of the improved YOLOv8 model proposed by Lei et al. is 41.4647, and the FPS of the improved YOLOv8 model proposed by Safaldin et al.is 30.048 , whereas the FPS of our method reaches 254.0, significantly enhancing real-time detection capabilities. In terms of accuracy, Dense-Stream YOLOv8n achieves an mAP@0.5 of 0.99 and an mAP@0.5:0.95 of 0.861, which surpasses the GR-YOLO proposed by Li et al. (mAP@0.5 of 0.855 and mAP@0.5:0.95 of 0.600) and other modified models49.

Moreover, the model size of Dense-Stream YOLOv8n is only 2.04M, which is significantly smaller than the 13.3MB improved YOLOv8 model proposed by Wang et al.50. This results in lower storage requirements and higher computational efficiency, making it more suitable for embedded and edge device applications.

In addition to outperforming existing modified YOLOv8 models, Dense-Stream YOLOv8n also significantly outperforms lightweight networks such as PFEL-Net and PeleeNet. Compared to PFEL-Net51, Dense-Stream YOLOv8n has a clear advantage in accuracy. Although PFEL-Net has a smaller model size (0.9MB), Dense-Stream YOLOv8n (2.04M) achieves an mAP@0.5 of 0.99, far exceeding PFEL-Net’s 0.656. Furthermore, PeleeNet is limited in detection accuracy in complex scenarios, especially for small objects and occluded scenes52. In contrast, Dense-Stream YOLOv8n, with the introduction of the DensityNet module, effectively enhances the detection capability for small objects and occluded scenes.

Therefore, Dense-Stream YOLOv8n not only ensures high real-time performance but also offers superior accuracy and broader applicability. Overall, Dense-Stream YOLOv8n achieves an excellent balance between precision, speed, and resource consumption, making it well-suited for object detection tasks in complex and dynamic environments.

Limitations

Although the Dense Stream YOLOv8n model has shown good performance in detecting side view angles in high-density populations, it still has certain limitations in extreme occlusion environments and complex lighting conditions. Specifically, when the target is severely occluded, the model may have difficulty accurately distinguishing individuals, resulting in missed or false detections, especially in scenes with dense pedestrian overlap, where detection accuracy significantly decreases. In addition, changes in lighting conditions may affect the extraction of target features, leading to instability in the detection capability of the model under different lighting conditions. At the same time, although this study optimized the detection performance of the model in side view scenes, when applied to top-down or other non ideal viewing environments, changes in the shape of the target may lead to a decrease in the model’s generalization ability, thereby affecting the robustness and stability of detection.

To further improve the generalization ability of Dense Stream YOLOv8n in complex scenes, future research can improve it from multiple perspectives and multimodal data expansion, feature enhancement, and structural optimization53. On the one hand, multimodal data fusion provides richer feature representations for occluded targets, thereby enhancing the model’s understanding of target morphology and spatial information54. On the other hand, introducing more advanced attention mechanisms, such as Swin Transformer structure or self attention based feature enhancement methods, can effectively improve the ability to capture global information in the object detection process, enabling the model to locate targets more accurately in occlusion situations55. Taking into account these optimization directions, not only can the detection capability of Dense Stream YOLOv8n be improved under different perspectives and occlusion conditions, but it also provides a more adaptive solution for future real-time object detection intelligent systems in complex environments.

Conclusions

This study proposes a lightweight pedestrian flow detection method based on an improved YOLOv8n model, Dense Stream YOLOv8n, aimed at addressing the challenges of pedestrian flow monitoring in high-density and side view dynamic environments of smart libraries. This method introduces a lightweight convolutional enhancement module DensityNet, pruning, and knowledge distillation techniques to significantly reduce computational complexity while improving detection accuracy, making it suitable for real-time requirements on edge devices. The experimental results show that Dense Stream YOLOv8n has an average accuracy in high-density scenes (mAP@0.5) reaching 0.99, close to the original YOLOv8n (0.991), while at the same time mAP@0.5:0.95 reaches 0.861. In terms of real-time performance, the frame rate (FPS) of Dense Stream YOLOv8n has been increased to 254, which is 34.0% higher than the original YOLOv8n (189.5 FPS). At the same time, the computational load has been reduced to 4.0 GFLOP and the parameter count has been reduced to 2.04M, significantly reducing computational resource consumption and making it more suitable for edge devices with limited computation. In addition, simulation experiments and generalization tests have shown that this method exhibits high detection stability under different crowd density conditions, especially in dynamic environments with occlusion in the side view angle, and still maintains good detection performance. The comparison with different detection models further confirms that Dense Stream YOLOv8n achieves a good balance between detection accuracy, inference speed, and computational resource consumption. The method proposed in this study provides theoretical and technical support for integrated solutions in intelligent environments such as smart library management systems and smart city infrastructure.

Data availability

The data used in this study is not publicly available, so please contact 9109222234@email.ncu.edu.cn.

References

Zimmerman, T. & Chang, H. C. Getting smarter: Definition, scope, and implications of smart libraries. In Proceedings of the 18th ACM/IEEE on Joint Conference on Digital Libraries 403–404. https://doi.org/10.1145/3197026.3203906 (2018).

Shi, X., Tang, K. & Lu, H. Smart library book sorting application with intelligence computer vision technology. Libr. Hi Tech 39, 220–232. https://doi.org/10.1108/LHT-10-2019-0211 (2021).

Farkhari, F., CheshmehSohrabi, M. & Karshenas, H. Smart library: Reflections on concepts, aspects and technologies. J. Inf. Sci. https://doi.org/10.1177/01655515241260715 (2024).

Qolomany, B. et al. Leveraging machine learning and big data for smart buildings: A comprehensive survey. IEEE Access 7, 90316–90356. https://doi.org/10.1109/ACCESS.2019.2926642 (2019).

Zhou, Z., Ding, J., Liu, Y., Jin, D. & Li, Y. Towards generative modeling of urban flow through knowledge-enhanced denoising diffusion. In Proceedings of the 31st ACM International Conference on Advances in Geographic Information Systems 1–12. https://doi.org/10.1145/3589132.3625641 (2023).

Qu, M. Exploring patron behavior in an academic library: A wi-fi-connection data analysis. Educ. Inf. Technol. 29, 11235–11256. https://doi.org/10.1007/s10639-023-12248-9 (2024).

Abdou, M. & Erradi, A. Crowd counting: a survey of machine learning approaches. In 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT) 48–54 (IEEE, 2020). https://doi.org/10.1109/ICIoT48696.2020.9089594.

Zheng, J. & Yao, D. Intelligent pedestrian flow monitoring systems in shopping areas. In 2010 2nd International Symposium on Information Engineering and Electronic Commerce 1–4 (IEEE, 2010). https://doi.org/10.1109/IEEC.2010.5533215.

Gao, M. et al. Deep learning for video object segmentation: A review. Artif. Intell. Rev. 56, 457–531. https://doi.org/10.1007/s10462-022-10176-7 (2023).

Cob-Parro, A. C., Losada-Gutiérrez, C., Marrón-Romera, M., Gardel-Vicente, A. & Bravo-Muñoz, I. A new framework for deep learning video based human action recognition on the edge. Expert Syst. Appl. 238, 122220. https://doi.org/10.1016/j.eswa.2023.122220 (2024).

Jiang, Z. et al. Social nstransformers: Low-quality pedestrian trajectory prediction. IEEE Trans. Artif. Intell. https://doi.org/10.1109/TAI.2024.3421175 (2024).

Li, Z., Li, Y. & Wang, X. Current researches and trends of crowd counting in the field of deep learning. In 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), vol. 1, 1326–1329 (IEEE, 2020). https://doi.org/10.1109/ITNEC48623.2020.9084927.

Niu, J., Yang, Y. & Yue, T. Current status and development trend of crowd counting. In 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), vol. 4, 904–909 (IEEE, 2021). https://doi.org/10.1109/IMCEC51613.2021.9482237.

Pouw, C. A. et al. Benchmarking high-fidelity pedestrian tracking systems for research, real-time monitoring and crowd control. arXiv preprint arXiv:2108.11719https://doi.org/10.17815/CD.2021.134 (2021).

Baqui, M., Samad, M. D. & Löhner, R. A novel framework for automated monitoring and analysis of high density pedestrian flow. J. Intell. Transp. Syst. 24, 585–597. https://doi.org/10.1080/15472450.2019.1643724 (2020).

Ning, C., Menglu, L., Hao, Y., Xueping, S. & Yunhong, L. Survey of pedestrian detection with occlusion. Complex Intell. Syst. 7, 577–587. https://doi.org/10.1007/s40747-020-00206-8 (2021).

Wang, C. H. et al. Lightweight deep learning: An overview. IEEE Consum. Electron. Mag. https://doi.org/10.1109/MCE.2022.3181759 (2022).

Feng, Y., Duives, D., Daamen, W. & Hoogendoorn, S. Data collection methods for studying pedestrian behaviour: A systematic review. Build. Environ. 187, 107329. https://doi.org/10.1016/j.buildenv.2020.107329 (2021).

Jing, Y., Mao, L. & Xu, J. Research on a library seat management system. In 2019 2nd International Conference on Information Systems and Computer Aided Education (ICISCAE) 297–301 (IEEE, 2019). https://doi.org/10.1109/ICISCAE48440.2019.221639.

Molyneaux, N., Scarinci, R. & Bierlaire, M. Design and analysis of control strategies for pedestrian flows. Transportation 48, 1767–1807. https://doi.org/10.1007/s11116-020-10111-1 (2021).

Cheong, K. H. et al. Practical automated video analytics for crowd monitoring and counting. IEEE Access 7, 183252–183261. https://doi.org/10.1109/ACCESS.2019.2958255 (2019).

Jin, C. J., Shi, X., Hui, T., Li, D. & Ma, K. The automatic detection of pedestrians under the high-density conditions by deep learning techniques. J. Adv. Transp. 2021, 1396326. https://doi.org/10.1155/2021/1396326 (2021).

Jiang, P., Ergu, D., Liu, F., Cai, Y. & Ma, B. A review of yolo algorithm developments. Procedia Comput. Sci. 199, 1066–1073. https://doi.org/10.1016/j.procs.2022.01.135 (2022).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39, 1137–1149. https://doi.org/10.1109/TPAMI.2016.2577031 (2016).

Dollar, P., Wojek, C., Schiele, B. & Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 34, 743–761. https://doi.org/10.1109/TPAMI.2011.155 (2011).

Liang, Y. et al. Research on water meter reading recognition based on deep learning. Sci. Rep. 12, 12861. https://doi.org/10.1038/s41598-022-17255-3 (2022).

Jiang, Z. et al. Noise interference reduction in vision module of intelligent plant cultivation robot using better cycle gan. IEEE Sens. J. 22, 11045–11055. https://doi.org/10.1109/JSEN.2022.3164915 (2022).

Gawande, U., Hajari, K. & Golhar, Y. Pedestrian detection and tracking in video surveillance system: issues, comprehensive review, and challenges. In Recent Trends in Computational Intelligence 1–24 (2020).

Geng, S., Yu, Q., Wang, H. & Song, Z. Airhf-net: An adaptive interaction representation hierarchical fusion network for occluded person re-identification. Sci. Rep. 14, 27242. https://doi.org/10.1038/s41598-024-76781-4 (2024).

Zhou, X., Wang, D. & Krähenbühl, P. Objects as points. arXiv preprint arXiv:1904.07850https://doi.org/10.48550/arXiv.1904.07850 (2019).

Lv, H., Yan, H., Liu, K., Zhou, Z. & Jing, J. Yolov5-ac: Attention mechanism-based lightweight yolov5 for track pedestrian detection. Sensors 22, 5903. https://doi.org/10.3390/s22155903 (2022).

Zhao, C., Liu, R. W., Qu, J. & Gao, R. Deep learning-based object detection in maritime unmanned aerial vehicle imagery: Review and experimental comparisons. Eng. Appl. Artif. Intell. 128, 107513. https://doi.org/10.1016/j.engappai.2023.107513 (2024).

Liang, J. A. & Ding, J. J. Swin transformer for pedestrian and occluded pedestrian detection. In 2024 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5. https://doi.org/10.1109/ISCAS58744.2024.10558302 (IEEE, 2024).

Li, E. et al. Fish detection under occlusion using modified you only look once v8 integrating real-time detection transformer features. Appl. Sci. 13, 12645. https://doi.org/10.3390/app132312645 (2023).

Guefrachi, N., Shi, J., Ghazzai, H. & Alsharoa, A. Leveraging 3d lidar sensors to enable enhanced urban safety and public health: Pedestrian monitoring and abnormal activity detection. arXiv preprint arXiv:2404.10978 (2024).

Meuser, T. et al. Revisiting edge ai: Opportunities and challenges. IEEE Internet Comput. 28, 49–59. https://doi.org/10.1109/MIC.2024.3383758 (2024).

Jiang, Z., Guo, Y., Jiang, K., Hu, M. & Zhu, Z. Optimization of intelligent plant cultivation robot system in object detection. IEEE Sens. J. 21, 19279–19288. https://doi.org/10.1109/JSEN.2021.3077272 (2021).

Chen, F., Li, S., Han, J., Ren, F. & Yang, Z. Review of lightweight deep convolutional neural networks. Arch. Comput. Methods Eng. 31, 1915–1937. https://doi.org/10.1007/s11831-023-10032-z (2024).

Liu, Z., Sun, M., Zhou, T., Huang, G. & Darrell, T. Rethinking the value of network pruning. arXiv preprint arXiv:1810.05270https://doi.org/10.48550/arXiv.1810.05270 (2018).

Gou, J., Yu, B., Maybank, S. J. & Tao, D. Knowledge distillation: A survey. Int. J. Comput. Vis. 129, 1789–1819. https://doi.org/10.1007/s11263-021-01453-z (2021).

Zhou, Y. A yolo-nl object detector for real-time detection. Expert Syst. Appl. 238, 122256. https://doi.org/10.1016/j.eswa.2023.122256 (2024).

Chen, C. et al. Yolo-based uav technology: A review of the research and its applications. Drones 7, 190. https://doi.org/10.3390/drones7030190 (2023).

Gu, Z. et al. Tomato fruit detection and phenotype calculation method based on the improved rtdetr model. Comput. Electron. Agric. 227, 109524. https://doi.org/10.1016/j.compag.2024.109524 (2024).

Hershey, J. R. & Olsen, P. A. Approximating the kullback leibler divergence between gaussian mixture models. In 2007 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP’07), vol. 4, IV–317 (IEEE, 2007). https://doi.org/10.1109/ICASSP.2007.366913.

Peng, F., Zheng, L., Cui, X. & Wang, Z. Traffic flow statistics algorithm based on yolov3. In 2021 International Conference on Communications, Information System and Computer Engineering (CISCE) 627–630 (IEEE, 2021). https://doi.org/10.1109/CISCE52179.2021.9445932.

Chen, Z. et al. Disentangle your dense object detector. In Proceedings of the 29th ACM International Conference on Multimedia 4939–4948. https://doi.org/10.1145/3474085.3475351 (2021).

Lei, S., Yi, H. & Sarmiento, J. S. Synchronous end-to-end vehicle pedestrian detection algorithm based on improved yolov8 in complex scenarios. Sensors 24, 6116. https://doi.org/10.3390/s24186116 (2024).

Safaldin, M., Zaghden, N. & Mejdoub, M. An improved yolov8 to detect moving objects. IEEE Access https://doi.org/10.1109/ACCESS.2024.3393835 (2024).

Li, N. et al. Dense pedestrian detection based on gr-yolo. Sensors 24, 4747. https://doi.org/10.3390/s24144747 (2024).

Wang, B., Li, Y. Y., Xu, W., Wang, H. & Hu, L. Vehicle-pedestrian detection method based on improved yolov8. Electronics 13, 2149. https://doi.org/10.3390/electronics13112149 (2024).

Shao, Z. et al. Real-time and accurate uav pedestrian detection for social distancing monitoring in covid-19 pandemic. IEEE Trans. Multimed. 24, 2069–2083. https://doi.org/10.1109/TMM.2021.3075566 (2021).

Tang, J., Lai, H., Gao, G. & Wang, T. Pfel-net: A lightweight network to enhance feature for multi-scale pedestrian detection. J. King Saud Univ.-Comput. Inf. Sci. 36, 102198. https://doi.org/10.1016/j.jksuci.2024.102198 (2024).

Song, L. et al. Stacked homography transformations for multi-view pedestrian detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision 6049–6057 (2021).

Wang, Y. et al. Multi-modal 3d object detection in autonomous driving: A survey. Int. J. Comput. Vis. 131, 2122–2152. https://doi.org/10.1007/s11263-023-01784-z (2023).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision 10012–10022. https://doi.org/10.48550/arXiv.2103.14030 (2021).

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 62366023).

Author information

Authors and Affiliations

Contributions

Zini Chen: Performed the experimental work, Data processing and analysis, Writing-Origin draft, Writing-Review; Xuming Xie: Supervision, Data acquisition; Taorong Qiu: Conceptualization, Grammar proofreading; Leiyue Yao: Funding support, Wring-Review.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Consent to participate

All peoples confirms informed consent was obtained to publish the information.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, Z., Xie, X., Qiu, T. et al. Dense-stream YOLOv8n: a lightweight framework for real-time crowd monitoring in smart libraries. Sci Rep 15, 11618 (2025). https://doi.org/10.1038/s41598-025-94659-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-025-94659-x