Abstract

The limited availability of high-quality SAR images severely affects the accuracy and robustness of target detection, classification, and segmentation. To address this problem, we introduce a novel diffusion-model-based image generation method that requires only one training sample to generate realistic SAR images. We propose a single-scale architecture to avoid the accumulation of image noise. In addition, we design an attention module for the sampling layers of the generator to improve feature extraction, and we propose an information-guided attention module to suppress redundant information. Ship targets were selected as the research objects, and the proposed method was tested on an open-source dataset. We also built our own Sentinel-1 dataset to provide more challenging test scenes. The experimental results show that our method outperforms the classical method SinGAN. Specifically, the SIFID decreases from 4.80 × 10^(-4) to 1.66 × 10^(-7), the SSIM increases from 0.07 to 0.51, and the LPIPS decreases from 0.61 to 0.23. Compared with ExSinGAN, generation diversity increases by 27.35%.


Introduction

Synthetic aperture radar (SAR) is an important means of Earth observation owing to its all-weather, day-and-night imaging capability. In the era of intelligent remote sensing big data, SAR automatic target recognition (ATR)1 has been applied in many fields, such as target detection2, segmentation3, and scene fusion4. Most of these algorithms are data-driven and rely heavily on datasets5. However, obtaining SAR images remains challenging6; for example, high-quality ship images with wake trails, which are essential for studying the effects of ocean currents, are scarce7,8. The number and quality of training samples determine the performance ceiling9, and the lack of high-quality SAR datasets has constrained downstream tasks. Thus, there is a pressing need for SAR image generation methods to support the development of intelligent technologies. With the development of generative artificial intelligence (AI), diffusion models have made great progress; however, they are seldom used in remote sensing.

Unlike natural images captured by optical cameras, SAR images are formed by a coherent imaging process, which produces speckle, sea clutter, and shadows10,11; these effects also complicate image generation. SAR image generation has long been an active research topic, and traditional methods have focused on breakthroughs in image quality. For example, Li and Bao12 generated high-resolution SAR images of ground scenes by retrieving unambiguous full-spectrum signals. Jackson and Moses13 combined model parameters with physical scene characteristics to study low-frequency SAR image generation. The Technical University of Denmark introduced a tool, financially supported by TERMA A/S, that generates realistic SAR images from computer-aided design (CAD) models14. Hydrodynamic modeling has also been applied to SAR sea target image generation15,16. These methods rely on rigorous mathematical modeling and complex generation processes but produce images of low diversity, which limits their transferability.

Furthermore, Dong et al.17 used random flipping, multiplication, rotation, and translation to improve image classification accuracy. However, such augmentation does not change the underlying pixel distribution, which limits its benefit. With the development of deep learning and generative AI, feature extraction from SAR images has improved significantly18. Generative methods based on convolutional neural networks (CNNs) have made some advances19,20,21,22, and other approaches combine image features with CNNs for image generation22,23. Although these methods improve image quality, they do so by learning a distribution mapping between two image domains. This end-to-end approach lacks effective conditional supervision and is therefore vulnerable to overfitting and training failure.

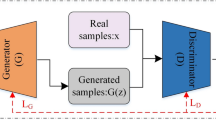

Significant advances in SAR image generation emerged with the introduction of generative adversarial networks (GANs)24. As a subset of generative AI, GANs perform image generation by training a pair of adversarial models25,26 and have been widely used to generate remote sensing data27,28,29. In SAR image generation, Ju et al.30 proposed SARGAN, which can generate realistic SAR images containing ships, land, and background. Song et al.31 implemented an adversarial autoencoder for SAR image generation. Cao et al.5 investigated a demand-driven SAR target sample generation method for imbalanced data learning. GAN-based SAR image generation has thus become an effective way to compensate for data shortages6,32,33,34,35. Unfortunately, most of these methods rely on datasets with simple textures and low noise levels36, and they either lack robustness when generating images under strong interference or require large amounts of training data.

In recent years, with the progress of models such as DALL·E 2 37 and Stable Diffusion38, diffusion models (DMs) have become mainstream in generative AI applications. DM-based remote sensing image generation has become an active research topic, but the technique has seen few applications in the SAR field. Owing to their rigorous mathematical framework, DMs have been shown to outperform GANs in image synthesis39. Despite this success, DMs still require long training times for remote sensing image generation27,40.

In summary, data augmentation of SAR images from a small number of samples, or from a single sample, is highly valuable. However, existing methods still require large amounts of high-quality data to obtain effective results. Single-sample generation methods, which have evolved from GANs41 to DMs42, can address this problem. This article introduces a DM-based SAR image generation method that requires only one image sample to generate high-quality and diverse SAR images. The contributions of this article are as follows:

(1) We propose a single SAR denoising diffusion probabilistic model (SSDDPM) and perform experiments on open-source and private SAR ship datasets. First, a single image is sliced into patches, a diffusion model learns their statistical distribution, and the SAR image is generated by aggregating the patch information. Notably, we use only one single-scale generator to predict the diffusion noise, which avoids the accumulation of redundant information. Compared with competing algorithms, the SSDDPM improves both generation quality and diversity: it achieves a 71.53% improvement in image quality, as measured by the SIFID, and a 27.35% increase in diversity, as measured by the IS.

(2) We design an attention-based noise estimate generation model (ANEM) as the SSDDPM generator, which reconstructs the noise information used in reverse diffusion. The ANEM is a three-layer encoder-decoder network in which we propose a dual-stream attention-gated sampling module (DAGM) for the sampling layers. The DAGM enhances feature extraction during sampling and avoids information loss. Ablation experiments show that the DAGM improves the SIFID metric by 259.32%.

(3) To address the uneven distribution of information across SAR patches, we propose an information-guided attention module (IGAM) to filter out redundant information. An information-guided operator is designed to balance the spatial and channel attention weights. The IGAM is applied in the copy-and-crop connections of the ANEM, establishing a feature mapping between the encoder and decoder in the latent space. Ablation experiments show that the IGAM improves the SIFID metric by 297.46%.

Related work on single-image generation

Generating an image by learning from a single sample is both ambitious and indispensable43. With the development of generative AI, it has become easier to use a single image as the training sample for image generation tasks. To the best of our knowledge, single-sample generation algorithms have few applications in remote sensing, and almost no studies have used them to generate SAR images. Table 1 summarizes representative GAN- and DM-based methods for single-sample image generation.

Single generative adversarial networks

Shaham et al. first proposed SinGAN, an unconditional generative model that learns from a single natural image41. Deng et al.44 designed haze-aware representation distillation layers for single-image dehazing. Sun et al.45 applied a pixel attention mechanism to image super-resolution. Lin et al.46 used two unpaired images to perform unsupervised image-to-image translation (UI2I). Hinz et al.47 proposed ConSinGAN to speed up training on single images. Since then, GAN-based single-sample image generation methods have been widely applied48,49,50,51,52,53. These methods rely on adversarial training, which is prone to mode collapse in practice, and the pyramid (multiscale) training architecture further increases training instability.

Single diffusion model networks

DMs have made considerable progress in computer vision, with many applications in image generation, image editing, and image translation, and they offer improved approaches to single-sample generation. Although most previous studies have focused on GANs, DMs have proven highly competitive in single-sample generation. Dhariwal and Nichol39 demonstrated that diffusion models can achieve higher sample quality than the state-of-the-art generative models of the time. Nikankin et al.42 first applied this technique to single-sample video generation. Wang et al.56 introduced SinDiffusion and examined how the model scale and the receptive field affect generation; this work focuses on capturing internal statistics to generate images from a single sample. Zhang54 investigated text-guided image generation under single-sample conditions. Kulikov et al.55 used a multiscale diffusion process to learn the internal statistics of training images. DMs have significantly improved the quality of generated samples, but there is still room for improvement in the noise prediction module, especially for specific tasks such as SAR image generation.

Methods

In this section, we present the proposed SSDDPM framework, which aims to establish an effective mapping between real and generated images. First, patches are obtained from a single image, and a diffusion model learns the patch distribution in order to reconstruct the image information. In this process, we propose an ANEM to predict the diffusion noise; its backbone consists of an encoder-decoder network, DAGMs, and IGAMs.

Overall learning imaging framework

Conventional single-sample generation approaches use multiscale generative models, in which a separate generator must be trained at each scale. Generation begins at the smallest scale and progresses gradually to larger scales41,44. Increasing the number of scales can cause excessive noise accumulation, leading to generation failure56. For SAR images, which typically contain small targets against backgrounds with speckle noise, a progressive architecture can introduce additional noise and destabilize the generation process.

To address this issue, we developed a novel denoising diffusion model named the SSDDPM, inspired by previous studies42,57. Our diffusion model operates at a single scale, which simplifies training because only one generator is needed to drive the diffusion process along the time dimension. The training structure is depicted in Fig. 1.

The SSDDPM divides the input image into patches and feeds them into the diffusion network during the forward diffusion stage. This allows the model to extract internal statistics from a single image and to predict patches. Owing to the rigorous mathematical derivation of the DDPM, the sole task of the generator (G) in the diffusion process is to train a noise predictor \(\theta\). The inputs to the noise predictor are \(x_t\) and the timestep \(t\), and the output is the predicted noise \(\epsilon_\theta\) of the current patch, which establishes a valid mapping between \(\epsilon_\theta\) and the patch. We use the ANEM to predict \(\epsilon_\theta\), as described in Sect. 3.2.

The diffusion part consists of two processes: forward noise addition and reverse denoising generation. First, we define the number of diffusion steps \(T\). Given a patch \(x_0\) and a time-embedded \(t\sim\mathrm{Uniform}(\{1,\dots,T\})\), we sample \(\epsilon\sim\mathcal{N}(0,I)\) from a Gaussian distribution, so that the noisy image \(x_t\) at step \(t\) of the forward process can be expressed as follows:

\[x_t=\sqrt{\bar{\alpha}_t}\,x_0+\sqrt{1-\bar{\alpha}_t}\,\epsilon \qquad (1)\]

where \(\bar{\alpha}_t\) is a noise-schedule coefficient that decays as \(t\) increases. The diffusion objective is to recover the original image \(x_0\) by predicting the added noise, so that an accurate noise estimate can be used in reverse denoising. This requires training a model \(\theta\) to predict the noise \(\epsilon_\theta\). The objective function is expressed as follows:

\[\mathcal{L}(\theta)=\mathbb{E}_{x_0,t,\epsilon}\left[\left\|\epsilon-\epsilon_\theta(x_t,t)\right\|^2\right] \qquad (2)\]

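Then, the DDPM is used for reverse patch prediction. With \(\alpha_t=1-\beta_t\), where \(\beta_t\) is the noise variance added at step \(t\) and \(\bar{\alpha}_t=\prod_{s=1}^{t}\alpha_s\), and with \(\sigma_t\) denoting the noise scale of the reverse step, \(x_{t-1}\) is obtained from the preceding state \(x_t\) as follows:

\[x_{t-1}=\frac{1}{\sqrt{\alpha_t}}\left(x_t-\frac{1-\alpha_t}{\sqrt{1-\bar{\alpha}_t}}\,\epsilon_\theta(x_t,t)\right)+\sigma_t z_t \qquad (3)\]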

where \(z_t\sim\mathcal{N}(0,I)\) denotes the Gaussian noise injected at timestep \(t\). The generator G provides the predicted noise \(\epsilon_\theta\), and the predicted patch \(x_0\) is obtained by iterating the reverse denoising step in (3) from \(t=T\) down to \(t=1\). Finally, the patches are integrated to obtain the generated image.
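To make the forward noising, the training objective, and the reverse step concrete, here is a minimal pure-Python sketch of the standard DDPM versions of these three operations on a flattened patch. The linear β schedule (1e-4 to 0.02), the choice \(\sigma_t^2=\beta_t\), and all function names are assumptions borrowed from the original DDPM formulation, not details confirmed for the SSDDPM; `predict_noise` is a hypothetical stand-in for the ANEM.

```python
import math
import random


def make_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (assumed); returns betas and cumulative alpha-bars."""
    betas = [beta_start + i * (beta_end - beta_start) / (T - 1) for i in range(T)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)  # abar_t = prod_{s<=t} (1 - beta_s)
    return betas, alpha_bars


def q_sample(x0, t, alpha_bars, eps):
    """Forward noising x_t = sqrt(abar_t) x_0 + sqrt(1 - abar_t) eps, with t 1-indexed."""
    a = alpha_bars[t - 1]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]


def simple_loss(eps, eps_pred):
    """Mean squared error between the true and the predicted noise."""
    return sum((e - p) ** 2 for e, p in zip(eps, eps_pred)) / len(eps)


def p_sample_step(xt, t, eps_pred, betas, alpha_bars, rng):
    """One ancestral reverse step x_t -> x_{t-1} using the predicted noise."""
    beta = betas[t - 1]
    coef = beta / math.sqrt(1.0 - alpha_bars[t - 1])
    mean = [(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(xt, eps_pred)]
    if t == 1:
        return mean  # no noise is injected at the final step
    sigma = math.sqrt(beta)  # sigma_t^2 = beta_t, one common choice
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]


def sample(n, T, predict_noise, rng):
    """Run the reverse chain from x_T ~ N(0, I) for a flattened patch of n values."""
    betas, alpha_bars = make_schedule(T)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for t in range(T, 0, -1):
        x = p_sample_step(x, t, predict_noise(x, t), betas, alpha_bars, rng)
    return x


# Sanity check: with the true noise at t = 1, the reverse step recovers x_0.
betas, alpha_bars = make_schedule(500)
rng = random.Random(0)
x0 = [0.5] * 16
eps = [rng.gauss(0.0, 1.0) for _ in x0]
x_rec = p_sample_step(q_sample(x0, 1, alpha_bars, eps), 1, eps, betas, alpha_bars, rng)
```

Feeding the exact noise back in at \(t=1\) undoes the forward step, which is a quick check that the coefficients of the forward and reverse formulas agree.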

Attention-based noise estimate generation model (ANEM)

In the diffusion model, the noise prediction generator G determines the quality of the predicted noise, which in turn affects the generated images. UNet, a dominant framework in image segmentation, inspired this study: its clean and efficient encoder-decoder paradigm is widely used as the backbone in diffusion models. However, in UNet, too many sampling layers lead to a large receptive field, which may reduce diversity56: the model tends to generate samples that are identical to the original image.

Thus, as shown in Fig. 2, we propose the ANEM for noise prediction. The ANEM backbone consists of input, output, encoder, and decoder blocks. The encoder and decoder each contain three feature learning layers and two sampling layers; we limit the number of sampling layers to obtain the best generation diversity56. In the encoding stage, the inputs are an image patch \(x_t\) and a time-embedded \(t\), and a 2D convolutional preprocessing block produces a feature map of size H×W×C. Downsampling then yields feature maps of different sizes, creating feature learning layers at three resolution scales that map the input image to high-level feature representations.

In the encoder, the ANEM uses a three-layer cascaded residual block (ResBlock) structure for the feature learning layers, which extracts valid information at different resolution scales. In each ResBlock, the input and the time embedding are projected by a convolutional layer and a linear layer, respectively, and the results are summed. The resulting embedded feature map is passed through a convolution module with a residual connection to obtain the output. The calculation is expressed as follows:

where \(\:F\) and \(\:{F}^{{\prime\:}}\) represent inputs and outputs, respectively, \(\:Identity\) represents a residual connection, \(\:EFM\) represents an embedded feature map, and \(\:FC\) represents a fully connected layer for dimension matching.

We apply batch normalization (BN) and the SiLU activation, \(SiLU(x)=x\,\sigma(x)\) with \(\sigma\) the sigmoid function (5), before each convolution. BN accelerates training and mitigates vanishing gradients in the activation function. SiLU is a smooth activation with low computational cost, which helps maintain the model's inference speed. Moreover, identity connections preserve more comprehensive image features and prevent the performance degradation that would otherwise result from increasing the convolutional depth.
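As a minimal sketch of the pre-activation ordering described above, the following applies BN and then SiLU to a 1-D list of activations. It omits BN's learned scale and shift, uses the statistics of the current call instead of running averages, and leaves out the convolution itself; the function names are illustrative, not taken from the authors' code.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def silu(x):
    """SiLU (swish): x * sigmoid(x)."""
    return x * sigmoid(x)


def batch_norm(values, eps=1e-5):
    """Normalize activations to zero mean and unit variance (no learned scale/shift)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def bn_silu(values):
    """Pre-activation: BN first, then SiLU; a convolution would follow in the network."""
    return [silu(v) for v in batch_norm(values)]


activations = bn_silu([1.0, 2.0, 3.0, 4.0])
```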

Notably, we propose a DAGM for the sampling layers. The ANEM uses four such modules in total, which address the information loss caused by size changes during resampling. The DAGM uses two parallel convolutional layers (dual streams) to obtain different feature maps. These parallel feature maps replace dropout, which mitigates overfitting and mode collapse while avoiding the performance degradation caused by randomly dropping neurons. One stream forms the initial sampling result, and the other extracts high-level information through an attention module. Finally, an output gate58,59 aggregates the two streams, as follows:

where \(F\) is the input feature map. We choose EMA66 as the attention module because it is lightweight and enhances feature representation. \(\otimes\sigma\) forms the output gate that aggregates the dual-stream information, where \(\otimes\) denotes elementwise multiplication and \(\sigma(\cdot)\) is the sigmoid activation function:

\[\sigma(x)=\frac{1}{1+e^{-x}} \qquad (7)\]

In the decoder, the structure mirrors that of the encoder, except that the DAGM performs upsampling, doubling the size of the feature map. This is intended to avoid the checkerboard artifacts typically caused by deconvolution and interpolation-based upsampling. Each feature learning layer in the decoder receives a copy-and-crop connection for information supplementation, and we use an IGAM to filter this information. The IGAM is described in Sect. 3.3.
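The following sketch shows one plausible reading of the DAGM output gate, in the downsampling case: the first stream produces the resampled features, and the second stream, after attention, is squashed by the sigmoid and multiplied elementwise with the first. The 2×2 average pooling stands in for a strided convolution, `attention` is a placeholder for EMA, and the helper names are hypothetical; the authors' exact gate may differ.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def avg_pool_2x(fmap):
    """Hypothetical stand-in for a stride-2 convolution: 2x2 average pooling of an H x W map."""
    h, w = len(fmap), len(fmap[0])
    return [[(fmap[i][j] + fmap[i][j + 1] + fmap[i + 1][j] + fmap[i + 1][j + 1]) / 4.0
             for j in range(0, w - 1, 2)]
            for i in range(0, h - 1, 2)]


def dagm_gate(fmap, stream_a, stream_b, attention):
    """Dual-stream gate: stream_a(F) * sigmoid(attention(stream_b(F))), elementwise."""
    a = stream_a(fmap)
    g = attention(stream_b(fmap))
    return [[x * sigmoid(y) for x, y in zip(row_a, row_g)] for row_a, row_g in zip(a, g)]


ones = [[1.0] * 4 for _ in range(4)]
identity = lambda m: m  # placeholder for the EMA attention module
gated = dagm_gate(ones, avg_pool_2x, avg_pool_2x, identity)
```

Because the gate lies in (0, 1), it can only attenuate the first stream, so the attention branch acts as a learned soft mask rather than an additive correction.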

Information-guided attention module (IGAM)

In traditional encoder-decoder models, copy-and-crop connections are mostly used to link the encoder to the decoder (Fig. 3(a))60, providing a useful mechanism for transferring information to the decoder. Unfortunately, in SAR single-image diffusion, a large proportion of the patch set is dominated by SAR speckle noise. This noise causes the encoder features to contain a large amount of redundant information that overwhelms the SAR target and ultimately leads to generation failure. In other words, speckle noise introduces excessive, unnecessary information, making it difficult to establish a meaningful connection between accurate noise prediction and the patches.

To solve this problem, we propose the IGAM (Fig. 4), which is applied in the ANEM decoder to the copy-and-crop feature map (Fig. 3(b)).

The IGAM consists of a spatial attention module (SAM), a channel attention module (CAM), and an information-guided module (IGM). The SAM captures the contribution of each location in the spatial dimension, allowing the model to focus on regions of interest during training. The CAM models the importance of each channel of the feature map, enhancing or suppressing individual channels. The IGM adjusts the SAM and CAM weights before feature fusion, and its information-guided operator outputs the enhanced features.

Spatial attention module (SAM)

First, max pooling and mean pooling are applied to the input feature map along the channel dimension, yielding two feature maps of size \(H\times W\times 1\). Max pooling primarily extracts high-frequency spatial information, whereas mean pooling extracts global information. The two maps are concatenated (\(H\times W\times 2\)) and fed into a Conv2D layer to obtain a balanced spatial attention map (\(H\times W\times 1\)). Finally, the values are normalized by the sigmoid activation function to obtain the spatial attention weights (\(H\times W\times 1\)):

\[SAM(F)=\sigma\left(Conv2D\left(Cat\left[MP(F),\,MaP(F)\right]\right)\right) \qquad (8)\]

where \(\:F\) is the input, \(\:Cat\) represents concatenation to combine the feature map, \(\:MP\) is max pooling and \(\:MaP\) is mean pooling. \(\:\sigma\:(\cdot)\) is the sigmoid activation function.

We use both \(MP\) and \(MaP\) to obtain a richer representation of spatial information, and concatenation preserves both descriptors. The convolutional layer then processes the combined feature maps jointly, so that the attention weights are computed from more balanced information.
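The SAM pipeline can be sketched in pure Python on an H×W×C nested list. The kernel size of the Conv2D layer is not stated, so this sketch replaces it with a hypothetical 1×1 convolution whose two weights (`w_max`, `w_mean`) and `bias` mix the max-pooled and mean-pooled maps at each location; a larger kernel would also mix neighboring locations.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def spatial_attention(fmap, w_max=0.5, w_mean=0.5, bias=0.0):
    """fmap: H x W x C nested list. Returns an H x W map of weights in (0, 1)."""
    out = []
    for row in fmap:
        out_row = []
        for pixel in row:  # pixel is the length-C channel vector at one location
            mp = max(pixel)                 # MP: max over channels
            map_ = sum(pixel) / len(pixel)  # MaP: mean over channels
            # Hypothetical 1x1 conv over the two concatenated maps, then sigmoid.
            out_row.append(sigmoid(w_max * mp + w_mean * map_ + bias))
        out.append(out_row)
    return out


zeros = [[[0.0] * 3 for _ in range(2)] for _ in range(2)]
weights = spatial_attention(zeros)
```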

Channel attention module (CAM)

First, mean pooling and max pooling are applied to the input feature map over the spatial dimensions, producing two \(1\times 1\times C\) vectors. Each vector passes through two Conv1D layers, and the two resulting \(1\times 1\times C\) vectors are summed to obtain the reconstruction vector. Mean pooling captures global information, whereas max pooling suppresses invalid information and highlights key information. The summed vector is passed through the sigmoid activation function to obtain the channel attention weights as follows:

For the two convolution layers used in (9), after the first convolution, the length of the vector is reduced to 1/4 of the original length. After the SiLU activation function, the other convolution is used to return the vector to \(\:(1\times\:1\times\:C\)).
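A corresponding sketch of the CAM follows. It treats each Conv1D as a 1×1 convolution over the channel vector (equivalently a dense layer), reduces C to C/4 and back with SiLU in between, and shares the two layers between the mean-pooled and max-pooled branches. The weight sharing, the dense-layer reading, and the weight matrices `w_down` and `w_up` are assumptions for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def silu(x):
    return x * sigmoid(x)


def dense(vec, weights):
    """weights: one row per output, each of length len(vec) (a 1x1 convolution)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def channel_attention(fmap, w_down, w_up):
    """fmap: H x W x C nested list; w_down: (C/4) x C; w_up: C x (C/4). Returns C weights."""
    pixels = [p for row in fmap for p in row]
    c = len(pixels[0])
    mean_vec = [sum(p[k] for p in pixels) / len(pixels) for k in range(c)]  # mean pooling
    max_vec = [max(p[k] for p in pixels) for k in range(c)]                 # max pooling

    def branch(v):
        # C -> C/4, SiLU, C/4 -> C
        return dense([silu(h) for h in dense(v, w_down)], w_up)

    summed = [a + b for a, b in zip(branch(mean_vec), branch(max_vec))]
    return [sigmoid(s) for s in summed]


w_down = [[0.25] * 4]           # C = 4 reduced to C/4 = 1
w_up = [[1.0] for _ in range(4)]  # back to C = 4
flat = channel_attention([[[0.0] * 4 for _ in range(2)] for _ in range(2)], w_down, w_up)
```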

Information-guided module (IGM)

An imbalance among SAR patches leads to model degradation. To combine SAM and CAM attention, common strategies use direct addition (Fig. 3(a))60. However, in SAR single-sample generation, the statistical patterns of patches vary widely and are unevenly distributed, which inevitably leads to large differences between the SAM and CAM weights. Specifically, if too few patches contain SAR targets, generation can fail; conversely, if too many patches contain only background information, the generated samples contain a large amount of redundant noise.

Therefore, we argue that patches with different information content should be weighted differently under information guidance. The proposed IGM, the core of the IGAM, contains an information-guided operator that aggregates the SAM and CAM outputs. First, a sigmoid activation function normalizes the outputs to a consistent magnitude. Then, the guided operator computes the weights of the SAM and CAM outputs separately. For a feature map \(F\) (\(H\times W\times C\)), given \(SAM(F_i)\) and \(CAM(F_i)\), the total weight \(W_T\) is defined as:

where \(\:SAM\left({F}_{i}\right)\) represents each element in \(\:SAM\left(F\right)\) and \(\:CAM\left({F}_{i}\right)\) represents each element in \(\:CAM\left(F\right)\). On this basis, the SAM weights (\(\:{W}_{S}\)) and CAM weights (\(\:{W}_{C}\)) are obtained as follows:

Ultimately, \(W_S\) and \(W_C\) form the information weight vectors in the spatial and channel dimensions. Together, they guide the aggregation of the SAM and CAM features, and the final IGAM output is obtained as follows:

In general, the proposed IGAM embeds a new information-guided learning mechanism. For a given feature map, the IGAM learns information under different attention modes, so an independent feature mapping can be established for each patch. Across the patch set, this helps the ANEM learn differences in the background distribution, thereby improving the diversity of the predicted noise. It also makes patches that contain target information easier to highlight, leading to higher-quality generated samples.
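Under one hypothetical reading of the IGM, \(W_T\) sums every element of the SAM and CAM maps, \(W_S\) and \(W_C\) are each module's share of that total, and the output adds the two attention-weighted copies of \(F\) scaled by those shares. The function `igm_fuse` and this normalization are illustrative assumptions, not the authors' definitions.

```python
def igm_fuse(fmap, sam, cam):
    """Hypothetical information-guided fusion.

    fmap: H x W x C nested list; sam: H x W spatial weights; cam: length-C channel weights.
    Assumes W_T = sum(SAM) + sum(CAM), W_S = sum(SAM) / W_T, W_C = sum(CAM) / W_T.
    """
    s_total = sum(sum(row) for row in sam)
    c_total = sum(cam)
    w_t = s_total + c_total
    w_s, w_c = s_total / w_t, c_total / w_t
    fused = [[[w_s * sam[i][j] * x + w_c * cam[k] * x for k, x in enumerate(pixel)]
              for j, pixel in enumerate(row)]
             for i, row in enumerate(fmap)]
    return fused, (w_s, w_c)


fmap = [[[1.0, 1.0] for _ in range(2)] for _ in range(2)]
fused, (w_s, w_c) = igm_fuse(fmap, [[0.5, 0.5], [0.5, 0.5]], [0.5, 0.5])
```

Under this literal reading, \(\sum SAM\) scales with H×W while \(\sum CAM\) scales with C, so the split between \(W_S\) and \(W_C\) also depends on the feature-map shape; the authors' normalization may differ.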

Experiments and analysis

In this section, we introduce the data and evaluation metrics used in the experiments. We then compare our method with recent state-of-the-art single-sample generation methods in terms of generation quality and generation diversity, and we present ablation experiments.


Dataset collection

Owing to the lack of SAR single-sample generation datasets, we select images from open-source datasets as training samples. To validate the proposed SSDDPM, we consider the effects of sea clutter, ship textures, wake trails, and so on, and construct 2 datasets comprising 8 typical scenes. To support the data requirements of downstream tasks such as SAR target detection, classification, and segmentation, a unified image format is adopted; for Dataset 2, we map the single-channel images to the RGB color space. Details of the SAR images are shown in Fig. 5.

Dataset 1 is drawn from the SAR-Ship dataset proposed by Wang et al.61, a standard ship detection dataset that consists of 43,819 ship chips of 256 × 256 pixels acquired in 5 imaging modes (UFS, FS1, etc.). We select four scenes from this dataset to form Dataset 1. The selected scenes were acquired by GaoFen-3 and contain different numbers of vessels and levels of noise.

Dataset 2 is self-constructed from a single Sentinel-1 scene. The image is in single look complex (SLC) format, with a 250 km swath, 15 m resolution, and the Interferometric Wide (IW) swath imaging mode. Single cross-polarization images are used. After the necessary filtering with remote sensing image processing software, we crop four scenes from the image to form Dataset 2. Compared with Dataset 1, Dataset 2 has smaller targets and more sea clutter, which more closely matches real sea conditions. Moreover, we select ships with wake trails to test single-sample generation of complex textures. The original Sentinel-1 data are shown in Fig. 6.

Learning parameters and equipment

The proposed method is implemented in Python 3.10 with PyTorch 1.11 (CUDA 11.3) as the backend framework. The hardware consists of a graphics workstation with an AMD Ryzen 7 5800X 8-core processor (3.8 GHz), 32 GB RAM, and an NVIDIA GeForce RTX 3090 GPU. We use CUDA and the cuDNN library to accelerate training.

The scene images used for learning are \(256\times 256\) pixels. Based on extensive experimental validation, we randomly crop 15,000 patches from the original image to form the training patch dataset. The batch size is 4, each batch constitutes one training step, and training runs for 120,000 steps. The initial learning rate and the number of diffusion steps are set to 0.0002 and 500, respectively.
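The training configuration implies some simple arithmetic: with 15,000 patches and a batch size of 4, one pass over the patch set takes 3,750 steps, so 120,000 steps correspond to 32 passes. The sketch below checks this and shows uniform random cropping; `PATCH_SIZE = 64` is a hypothetical value, since the crop size is not given here, and the helper name is illustrative.

```python
import random

IMAGE_SIZE = 256      # scene size used for learning
NUM_PATCHES = 15_000  # randomly cropped training patches
BATCH_SIZE = 4
TRAIN_STEPS = 120_000
PATCH_SIZE = 64       # hypothetical: the crop size is not stated


def random_crop_origins(n, image_size, patch_size, rng):
    """Top-left corners of n random crops that lie fully inside the image."""
    hi = image_size - patch_size
    return [(rng.randint(0, hi), rng.randint(0, hi)) for _ in range(n)]


origins = random_crop_origins(NUM_PATCHES, IMAGE_SIZE, PATCH_SIZE, random.Random(0))
steps_per_epoch = NUM_PATCHES // BATCH_SIZE      # batches per pass over the patches
epochs = TRAIN_STEPS * BATCH_SIZE / NUM_PATCHES  # passes over the patch set
```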

Comparison methods

(1) SinGAN41: A classical single-sample generation method using unsupervised learning. The sample generation task is accomplished by constructing a GAN with multiple scales.

(2) ExSinGAN52: An improved approach to SinGAN using triple representations of images. Three modular GANs are proposed that learn the distributions of structure, semantics, and texture, making the generative model more comprehensible.

(3) SinDiffusion56: A single-sample generation method based on a diffusion model. It analyzes the relationship between the receptive field and sample generation and, on that basis, proposes a DM with a single-scale generative architecture.

(4) SinFusion42: Presented in the same year as SinDiffusion, it uses a fully convolutional chain of ConvNeXt blocks as the backbone network for image and video generation. By training on large crops, the generated outputs retain the global structure of the input image.

(5) SinDDM55: A multiscale diffusion model that combines the power and flexibility of DDMs with the multiscale structure of SinGAN. A lightweight, fully convolutional denoiser conditioned on both the noise level and the scale drives the reverse diffusion process.

Evaluation metric and parameter setting

To evaluate the methods quantitatively, structural similarity (SSIM)62, single image FID (SIFID)41, and learned perceptual image patch similarity (LPIPS)63 were chosen as metrics of generation quality, and the inception score (IS)64 was used to evaluate the diversity of the generated samples. For each metric, we report the mean over 50 generated samples.

The SIFID and LPIPS are sample quality metrics based on pretrained networks: the generated and real images are input into a pretrained network, and the distance between their features is calculated. The SIFID is a widely accepted quality metric for single-sample generation. A single image is fed into the Inception network65 to obtain the statistical distribution of an intermediate layer's features, and the Fréchet inception distance (FID)66 between the intermediate feature distributions of the generated and real images is then computed. The LPIPS measures the perceptual similarity between images using a linearly weighted distance on deep network features, and it yields image differences similar to those perceived by the human visual system.
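For intuition about the SIFID computation, the sketch below computes the Fréchet distance \(\|\mu_a-\mu_b\|^2+\mathrm{Tr}\big(\Sigma_a+\Sigma_b-2(\Sigma_a\Sigma_b)^{1/2}\big)\) between Gaussians fitted to two sets of feature vectors. In SIFID, each set would be the per-location Inception features of one image; here the features are supplied directly, and the covariances are restricted to be diagonal (with population variances) so that the matrix square root reduces to an elementwise one. Both restrictions are simplifications of the real metric, and the function name is illustrative.

```python
import math


def frechet_distance_diag(feats_a, feats_b):
    """Frechet distance between diagonal-covariance Gaussians fitted to two feature sets.

    feats_*: lists of feature vectors (lists of floats) with the same dimension.
    """
    def stats(feats):
        n, d = len(feats), len(feats[0])
        mu = [sum(f[k] for f in feats) / n for k in range(d)]
        var = [sum((f[k] - mu[k]) ** 2 for f in feats) / n for k in range(d)]
        return mu, var

    mu_a, var_a = stats(feats_a)
    mu_b, var_b = stats(feats_b)
    mean_term = sum((x - y) ** 2 for x, y in zip(mu_a, mu_b))
    # For diagonal covariances, var_a + var_b - 2 sqrt(var_a var_b) = (sqrt(var_a) - sqrt(var_b))^2.
    cov_term = sum((math.sqrt(x) - math.sqrt(y)) ** 2 for x, y in zip(var_a, var_b))
    return mean_term + cov_term


real = [[0.0, 0.0], [2.0, 2.0]]
shifted = [[1.0, 1.0], [3.0, 3.0]]
distance = frechet_distance_diag(real, shifted)
```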

The SSIM is an image similarity metric based on pixel statistics that integrates luminance, contrast, and structure and thus aligns closely with human visual perception. It is calculated as shown in (13):

\[SSIM(x,y)=\frac{(2\mu_x\mu_y+C_1)(2\sigma_{xy}+C_2)}{(\mu_x^2+\mu_y^2+C_1)(\sigma_x^2+\sigma_y^2+C_2)} \qquad (13)\]

where \(x\) and \(y\) represent the real and generated images, respectively, \(\mu_x\) and \(\mu_y\) denote their means, \(\sigma_x\) and \(\sigma_y\) their standard deviations, \(\sigma_x^2\) and \(\sigma_y^2\) their variances, \(\sigma_{xy}\) their covariance, and \(C_1\) and \(C_2\) are small constants that stabilize the division.
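A minimal single-window SSIM sketch follows, using the conventional constants \(C_1=(0.01L)^2\) and \(C_2=(0.03L)^2\) for data range \(L\). Standard implementations instead compute SSIM in local (typically Gaussian-weighted) windows and average the results, so this global version is a simplification for illustration; the function name is hypothetical.

```python
def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM over two equally sized flattened images (lists of floats)."""
    n = len(x)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den


img = [float(i) for i in range(16)]
inverted = [15.0 - v for v in img]  # same statistics, negatively correlated structure
```

Because the inverted image has the same mean and variance but negative covariance, only the structure term drops, which illustrates why SSIM captures structural agreement rather than intensity statistics alone.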

We use the IS, a no-reference metric computed from the distribution of predicted image categories, to evaluate the diversity of the generated images:

\[IS=\exp\left(\mathbb{E}_{x\sim p_g}\left[D_{KL}\left(p(y\mid x)\,\|\,\hat{p}(y)\right)\right]\right) \qquad (14)\]

where \(\:x\sim\:{p}_{g}\) represents the generated samples, \(\:p(y\mid\:x)\) represents the InceptionV3 output, and \(\:\widehat{p}\left(y\right)\) represents the mean of the probability distributions of all generated samples.
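The IS computation can be sketched directly from class-probability vectors. In practice, \(p(y\mid x)\) comes from the softmax output of InceptionV3; here the vectors are passed in directly, and the function name is illustrative.

```python
import math


def inception_score(probs):
    """probs: list of class-probability vectors p(y|x), one per generated sample."""
    n, k = len(probs), len(probs[0])
    p_hat = [sum(p[c] for p in probs) / n for c in range(k)]  # marginal p_hat(y)

    def kl(p, q):
        # Terms with p_c = 0 contribute nothing to the KL divergence.
        return sum(pc * math.log(pc / qc) for pc, qc in zip(p, q) if pc > 0)

    return math.exp(sum(kl(p, p_hat) for p in probs) / n)


confident_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
identical = [[0.5, 0.5], [0.5, 0.5]]
```

The score reaches the number of classes when every sample is confidently assigned to a different class, and falls to 1 when all samples share the same prediction.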


Comparison of image generation quality

The results of the proposed SSDDPM are presented in Figs. 7 and 8. For each scene, we selected the four best samples from all the generated images. Column 1 of each figure shows the real image, and columns 2–5 show generated images. Subjectively, our method generates SAR images that are highly similar to the original images. More importantly, the images generated by the SSDDPM exhibit greater diversity, which is beneficial for applications such as sample augmentation.

To validate the effectiveness of the SSDDPM, we trained the competing algorithms and summarize representative generated samples in Fig. 9. Again, we display the subjectively best generated image for each method. The results cover images from Datasets 1 and 2, with an image size of 256 × 256. Figure 9 shows that our method produces visibly better results than the competing algorithms.

Generation results for dataset 1

In terms of the subjective results, the SSDDPM adapted well to the four scenes in dataset 1. Specifically, it generated images that better capture the subtle variations in the original image. For example, as the number of ships increased (scenes 1–3), our model retained its ability to capture target details, and the generated ship targets are highly consistent with those in the original image.

Generation results for dataset 2

Because this dataset consists of raw images without additional postprocessing, image generation is more challenging. First, as the targets become smaller, the proposed method maintains excellent generative ability, whereas some methods that worked on dataset 1 failed to generate images for dataset 2 (e.g., SinDiffusion). Moreover, the background generation of many models deteriorated, and many SAR artifacts appeared (e.g., SinFusion in scene 8). In contrast, the ability of the SSDDPM to generate background noise in dataset 2 did not change with the number of ships (e.g., SSDDPM in scene 7).

Quantitative analysis of generation effects

To evaluate the quality of image generation quantitatively, we generated 50 images with each method and computed the mean value of each metric; the results are presented in Table 2. Red text marks the best result for a given metric, and blue text marks the second-best result.

As shown in Table 2, the proposed method is optimal for most metrics in scene 5. Compared with the traditional SinGAN, it decreases the SIFID from \(4.80\times10^{-4}\) to \(1.66\times10^{-7}\), increases the SSIM from 0.07 to 0.51, and decreases the LPIPS from 0.61 to 0.23. Compared with the DM-based methods, our results are competitive. In particular, among single-scale generative frameworks, the SSDDPM reduces the SIFID by 45% and 9% compared with SinDiffusion and SinFusion, respectively.

Overall, the proposed method achieves 7 best results and 1 second-best result for the SIFID, 3 best and 4 second-best results for the SSIM, and 5 best and 3 second-best results for the LPIPS. Because the evaluation metrics are computed in different ways, no method can be expected to achieve the best result on every metric in every scene. We therefore counted, for each method, the total number of first- and second-best results as an indicator of advantage; the details are shown in Fig. 10. Our method obtained 23 such indicators of advantage, 91.67% and 109.09% more than the second- and third-best methods, respectively. Thus, our image generation quality metrics were better overall than those of the competing methods.
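The advantage indicator described above can be sketched as follows. This is a hypothetical helper, not the authors' code: it takes one metric's per-scene scores for all methods, ranks the methods in each scene, and counts first- and second-place finishes. Breaking ties by column order is an assumption. Summing the counts over SIFID and LPIPS (lower is better) and SSIM (higher is better) gives per-method totals of the kind compared in Fig. 10.

```python
import numpy as np

def count_advantages(scores: np.ndarray, higher_is_better: bool) -> np.ndarray:
    """Count first- and second-place finishes per method for one metric.

    scores: shape (n_scenes, n_methods).
    Returns an int array of length n_methods. Ties are broken by column
    order (stable sort), which is an illustrative assumption.
    """
    keys = -scores if higher_is_better else scores
    order = np.argsort(keys, axis=1, kind="stable")   # best method first
    top2 = order[:, :2]                                # first and second place
    return np.bincount(top2.ravel(), minlength=scores.shape[1])
```

For instance, if a runner-up method had 12 such finishes, SSDDPM's 23 would be (23 − 12)/12 ≈ 91.67% higher.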

To compare the differences in generation quality between the SSDDPM and the other methods more concretely, we used the scene 5 results to calculate the percentage improvement of the SSDDPM over each benchmark method; the results are shown in Table 3. Compared with SinFusion (second-best in terms of the SIFID), our method yields a 71.53% lower SIFID. Similarly, it improves the SSIM by 8.51% relative to SinDiffusion and reduces the LPIPS by 20.69% relative to ExSinGAN.
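The percentage improvements discussed here are relative changes with respect to a baseline method. A minimal sketch, assuming the convention (ours − baseline)/baseline, applied to the scene 5 SinGAN and SSDDPM values quoted in this section. For SIFID and LPIPS, where lower is better, a negative change is an improvement.

```python
def relative_change(baseline: float, ours: float) -> float:
    """Percentage change of `ours` relative to `baseline` (positive = increase)."""
    return 100.0 * (ours - baseline) / baseline

# Scene 5 values for SinGAN (baseline) and SSDDPM, as quoted in the text.
for name, base, new in [("SIFID", 4.80e-4, 1.66e-7),
                        ("SSIM", 0.07, 0.51),
                        ("LPIPS", 0.61, 0.23)]:
    print(f"{name}: {relative_change(base, new):+.2f}%")
# SIFID: -99.97%
# SSIM: +628.57%
# LPIPS: -62.30%
```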

In addition, Table 4 reports the signal-to-noise ratio (SNR) of the SAR images, together with the SNR distance between each generated image and the input image. The SSDDPM achieved the best results across all seven scenes, indicating that the SNR of its generated images is the closest to that of the real images. This further demonstrates the ability of the proposed method to map SAR features comprehensively in terms of texture, shape, and noise.
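The SNR definition behind Table 4 is not given in this section. As a hedged illustration, the sketch below uses one common convention for speckled SAR amplitude images, the mean-to-standard-deviation ratio expressed in decibels, and takes the "distance" to be the absolute difference between the generated and real SNRs. Both choices are assumptions rather than the authors' stated definitions.

```python
import numpy as np

def snr_db(img: np.ndarray) -> float:
    """Mean-to-standard-deviation SNR in dB: 20 * log10(mean / std).

    Assumed convention for SAR amplitude images; not necessarily the
    definition used for Table 4.
    """
    img = np.asarray(img, dtype=np.float64)
    return float(20.0 * np.log10(img.mean() / img.std()))

def snr_distance(real: np.ndarray, generated: np.ndarray) -> float:
    """Absolute SNR gap (dB) between a generated image and the real input."""
    return abs(snr_db(generated) - snr_db(real))
```

Under this convention, a smaller distance means the generated image reproduces the speckle level of the input more faithfully.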

Generation details of ship targets and sea clutter

Generation details for SAR ship targets

An essential goal of SAR single-sample generation is that the distribution of the generated images matches that of the original image. SAR ship images contain varying sea clutter, which makes generation difficult when the target information is complex. Balancing the generation of ship detail against background information is therefore a key challenge in model design.

We therefore use the scene 6 results to analyze the generated ship details, as shown in Fig. 11. Our images show better performance in generating ship information, especially the ship wake (blue box), which is continuous and realistic. Other methods generate broken or truncated wakes and unrealistic ship targets (red boxes), and some methods fail to generate images at all (SinGAN). Furthermore, Fig. 12 shows the power spectra of the scene 6 results: the power spectrum distribution of our result is more uniform and closer to that of the original image, which confirms the effect of the proposed method from the frequency-domain perspective.
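A frequency-domain comparison such as the one in Fig. 12 can be sketched with NumPy's FFT. This sketch assumes an azimuthally averaged log power spectrum, which is one common way to summarise a 2-D spectrum as a curve; the exact power spectrum conversion used for the figure is not specified here, and `n_bins` is an arbitrary illustrative parameter.

```python
import numpy as np

def radial_power_spectrum(img: np.ndarray, n_bins: int = 64) -> np.ndarray:
    """Azimuthally averaged log10 power spectrum of a 2-D image."""
    img = np.asarray(img, dtype=np.float64)
    img = img - img.mean()                            # remove the DC component
    power = np.abs(np.fft.fftshift(np.fft.fft2(img))) ** 2
    h, w = power.shape
    yy, xx = np.indices((h, w))
    # fftshift places the zero frequency at index n // 2 along each axis.
    r = np.hypot(yy - h // 2, xx - w // 2)
    edges = np.linspace(0.0, r.max(), n_bins + 1)
    idx = np.clip(np.digitize(r.ravel(), edges) - 1, 0, n_bins - 1)
    sums = np.bincount(idx, weights=power.ravel(), minlength=n_bins)
    counts = np.bincount(idx, minlength=n_bins)
    return np.log10(sums / np.maximum(counts, 1) + 1e-12)
```

Comparing the curves of a real and a generated image (for example, by their pointwise difference) indicates whether the generator reproduces the texture energy at each spatial frequency.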

Generation details for sea clutter

We paid particular attention to the generation of background information, especially sea clutter, because it affects both image realism and the robustness of downstream tasks. We cropped a batch of background patches (yellow boxes) from Fig. 11 and computed their gray-level probability distributions, as shown in Fig. 13. The background generated by the SSDDPM is closer to the real image: the gray-level probability distribution curves of the real image and the SSDDPM image are well aligned. This demonstrates that the proposed approach generates background information effectively.
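The gray-level probability distributions used here amount to normalised histograms of the cropped background patches. A minimal sketch, assuming 8-bit patches. The `l1_gap` helper is a hypothetical summary (half the L1 distance, i.e. the total variation distance) added only for illustration, since the comparison in the text is visual.

```python
import numpy as np

def gray_level_pdf(patch: np.ndarray, levels: int = 256) -> np.ndarray:
    """Empirical probability of each gray level in an 8-bit image patch."""
    values = np.asarray(patch, dtype=np.uint8).ravel()   # assumes values in 0..255
    hist = np.bincount(values, minlength=levels)
    return hist / hist.sum()

def l1_gap(p: np.ndarray, q: np.ndarray) -> float:
    """Total variation distance between two distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * float(np.abs(p - q).sum())
```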

Comparison of image generation diversity

Image generation diversity is meaningful only when generation quality is adequate, because generating noisy masses can also produce high diversity scores. Therefore, based on Fig. 10, we chose ExSinGAN and SinFusion, which show high generation quality, as the benchmark methods for evaluating the diversity of the SSDDPM. First, as shown in Figs. 7 and 8, the SSDDPM generates diverse samples while maintaining generation quality. Table 5 shows that the SSDDPM achieved 5 best and 3 second-best IS results, demonstrating strong diversity. In scene 6, the SSDDPM achieves a 27.35% improvement over ExSinGAN. In scene 8, although the diversity metric decreases by 11.00%, the generation quality improves by 50% (LPIPS in scene 8), so this does not change our overall judgment. Notably, the numbers of advantage indicators (8 for SSDDPM and 4 for SinFusion) suggest that single-scale generation methods can enhance diversity.

In summary, both subjective and objective comparisons show that the SSDDPM achieves better visual quality and better scores on the comprehensive metrics in individual scenes. The top three methods for the tasks in this article are SSDDPM, ExSinGAN, and SinFusion. ExSinGAN addresses the shortcomings of SinGAN and therefore achieves better results. SinDiffusion enhances diversity by limiting the receptive field and adopting a single-scale architecture61; however, its lower network complexity leads to less effective results. Although SinDDM employs a diffusion-based approach, its multiscale architecture and the overlapping diffusion noise applied across scales constrain model learning and limit its ability to generate small targets (scenes 5 and 8 in Fig. 9). The proposed SSDDPM addresses these issues by adopting a single scale to enhance diversity, while the proposed ANEM module exploits the strengths of the DAGM and IGAM to ensure image generation quality.

Ablation experiment

We have demonstrated the superiority of the SSDDPM in terms of image generation quality and diversity. We attribute its efficiency relative to similar methods to the ANEM module, in which the DAGM and IGAM play core roles. To further demonstrate the effects of these two components, we designed ablation experiments on scene 6; the results are shown in Fig. 14.

The comparison shows that the full SSDDPM, with the complete ANEM, is optimal on all metrics. When the DAGM or the IGAM was removed, model performance deteriorated markedly: the SIFID increased by 259.32% and 297.46%, the SSIM decreased by 39.13% and 21.74%, and the LPIPS increased by 13.51% and 21.62%, respectively. This indicates that both modules contribute to efficient image generation.

Discussion

Influence of diffusion steps

Unlike natural images, SAR images contain considerable noise, so beyond a certain point additional diffusion steps only increase the computational cost. As shown in Fig. 15, the LPIPS performance of the model remains stable between 400 and 800 steps; we therefore set the number of diffusion steps to 500. The required number of diffusion steps varies with image properties, and there appears to be a quantifiable relationship between the two. We will continue to investigate this problem to minimize the training cost.
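The role of the number of diffusion steps \(T\) can be seen in the DDPM forward process of Ho et al. (2020), \(q(x_t\mid x_0)=\mathcal{N}\big(\sqrt{\bar\alpha_t}\,x_0,\,(1-\bar\alpha_t)\mathbf{I}\big)\) with \(\bar\alpha_t=\prod_{s\le t}(1-\beta_s)\). The sketch assumes the linear \(\beta\) schedule from \(10^{-4}\) to 0.02 used by Ho et al. (who used \(T=1000\)), here applied with \(T=500\); whether SSDDPM uses this particular schedule is not stated in this section.

```python
import numpy as np

def linear_alpha_bar(T: int = 500, beta_start: float = 1e-4,
                     beta_end: float = 0.02) -> np.ndarray:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s) for a linear beta schedule (t = 0..T-1)."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray,
             rng: np.random.Generator) -> np.ndarray:
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
```

With these settings \(\bar\alpha_T\) is roughly 0.006, so \(x_T\) is already close to pure Gaussian noise.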

Effect of different bands

To investigate the impact of different SAR frequency bands on SSDDPM, we conducted experiments using X-band and L-band SAR images. The X-band images were obtained from the MSTAR dataset, and the L-band data were sourced from the literature67. As shown in Figs. 16 and 17, SSDDPM effectively learns the features of images from both high-frequency and low-frequency SAR systems. Table 6 shows that the proposed method achieves the best results in terms of SIFID, SSIM, and LPIPS, indicating strong generalization capability.

The SSDDPM model is proposed for SAR image generation and requires only one training sample for SAR image data augmentation and enhancement. We design a diffusion model for SAR image generation that divides the image into patches to capture its internal statistics, and the patch distribution is learned using a DM. The advantage of this architecture is that only one generator needs to be trained, which effectively avoids the accumulation of redundant information. To better predict the noise parameters in the diffusion stage, we design the ANEM, DAGM and IGAM. The ANEM consists mainly of encoding and decoding networks. Following existing studies, we limit the number of feature extraction layers to three for efficiency and use ResBlocks. In addition, the DAGM is designed as a dual-stream attention sampling module to avoid information loss during sampling. Considering that SAR images contain considerable noise and complex textures, we design the IGAM, whose core is the proposed IGM, which effectively balances the weights of spatial and channel attention. We insert the IGAM into the copy-and-crop part of the ANEM, establishing an efficient mapping between the encoder and decoder.
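Learning internal patch statistics from a single image starts from the set of patches that the image contains. The sketch below is a hypothetical illustration of explicit patch extraction; the patch size of 16 and stride of 8 are arbitrary choices. In single-image diffusion models the patch-level view can also be imposed implicitly through a limited receptive field rather than by explicit cropping, and this section does not say which approach SSDDPM uses.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def extract_patches(img: np.ndarray, patch: int = 16, stride: int = 8) -> np.ndarray:
    """Return overlapping square patches of shape (n_patches, patch, patch).

    sliding_window_view builds all patch x patch windows without copying;
    slicing with ::stride keeps one window every `stride` pixels per axis.
    """
    windows = sliding_window_view(img, (patch, patch))[::stride, ::stride]
    return windows.reshape(-1, patch, patch)   # row-major order of window positions
```

For a 256 × 256 image these settings yield 31 × 31 = 961 overlapping patches, whose empirical distribution is what a patch-level generator would learn.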

Furthermore, we analyzed the impact of these modules on image generation quality. The ANEM improves noise prediction, the DAGM prevents information omission, and the IGAM filters out redundant information. Together, these designs improve the quality of SAR image data enhancement. As shown in Figs. 9 and 10, our method significantly improves the quality of the generated images. In addition, the ablation experiments (Fig. 14) show that the combined use of the DAGM and IGAM yields the best model performance, which we attribute to the two modules maximizing the preservation of detailed information within the ANEM. Figure 9 indicates that our method meets the needs of data enhancement and interpretation on both the public dataset and the self-built dataset, and that it is adept at both learning gray values and reproducing texture. Given the characteristics of SAR images, we also focused on ship details (Fig. 11) and the reproduction of sea clutter (Fig. 13); the strong performance in both is attributed to the adaptive information guidance strategy of the IGAM. In terms of local details, the SSDDPM is superior to the other methods compared.

However, the SSDDPM still has considerable room for improvement. First, although the DDPM has a rigorous mathematical derivation, this also increases the number of parameters that need to be predicted, which can degrade performance. In future research, we will address this problem through algorithmic optimization, for example by using the denoising diffusion implicit model (DDIM) to increase speed. Second, our generation process is not controllable; in future studies, we will consider incorporating a controllable generation algorithm into SAR single-sample generation to enable editing of a single SAR image. Finally, we will focus on generating SAR data with different polarization combinations, to explore the practical value of SAR image generation methods in multi-band combination scenarios.

Application

To validate the effectiveness of SSDDPM in downstream tasks, we performed SAR ship wake and aircraft target detection. First, detection models for ship wakes and aircraft targets were trained using YoloV868. The generated ship and aircraft images were then used as test data. As shown in Fig. 18, the targets in the images generated by SSDDPM are detected effectively, with the detector responding to both wakes and densely packed aircraft. This suggests that SSDDPM is a meaningful tool for downstream tasks that require data augmentation, especially in scenarios with insufficient samples.

Conclusion

In this article, to address the problems of insufficient quality and diversity in SAR image generation, we propose a novel single-sample SAR image generation method, the SSDDPM. Image reconstruction is achieved by using a DM to learn the statistical patterns of patches. To ensure generation quality with the single-scale architecture, we design an ANEM to predict the diffusion noise; the ANEM contains a DAGM and an IGAM. For SAR ship scenes, the SSDDPM achieved the best performance among the competing algorithms. Compared with the classical GAN method SinGAN, the proposed method decreases the SIFID from \(4.80\times10^{-4}\) to \(1.66\times10^{-7}\). Compared with the DM method SinFusion, the SSDDPM decreases the SIFID from \(4.68\times10^{-6}\) to \(2.16\times10^{-6}\).

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Zha, C. et al. SAR ship detection based on salience region extraction and multi-branch attention. Int. J. Appl. Earth Obs Geoinf. 123, 103489 (2023).

Zhou, J., Shi, Z., Cheng, X. & Fu, Q. Automatic target recognition of SAR images based on global scattering center model. IEEE Trans. Geosci. Remote Sens. 49, 3713–3729 (2011).

Wu, Z. et al. CCNR: cross-regional context and noise regularization for SAR image segmentation. Int. J. Appl. Earth Obs Geoinf. 121, 103363 (2023).

Wu, W. et al. Quantifying the sensitivity of SAR and optical images three-level fusions in land cover classification to registration errors. Int. J. Appl. Earth Obs Geoinf. 112, 102868 (2022).

Cao, C. et al. A demand-driven SAR target sample generation method for imbalanced data learning. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2022).

Sun, Y. et al. Attribute-guided generative adversarial network with improved episode training strategy for few-shot SAR image generation. IEEE J. Sel. Top. Appl. Earth Obs Remote Sens. 16, 1785–1801 (2023).

Ding, K. et al. Towards real-time detection of ships and wakes with lightweight deep learning model in Gaofen-3 SAR images. Remote Sens. Environ. 284, 113345 (2023).

Xu, C., Qi, R., Wang, X. & Tao, M. Instability of energy spectrum disturbance for ship turbulent wakes: SAR imaging simulation and analysis. Ocean. Eng. 292, 116502 (2024).

Wang, Y., Wang, C. & Zhang, H. Ship classification in high-resolution SAR images using deep learning of small datasets. Sensors 18, 2929 (2018).

Pauciullo, A., De Maio, A., Perna, S., Reale, D. & Fornaro, G. Detection of partially coherent scatterers in multidimensional SAR tomography: a theoretical study. IEEE Trans. Geosci. Remote Sens. 52, 7534–7548 (2014).

Choi, J. H., Lee, M. J., Jeong, N. H., Lee, G. & Kim, K. T. Fusion of target and shadow regions for improved SAR ATR. IEEE Trans. Geosci. Remote Sens. 60, 1–17 (2022).

Li, Z. & Bao, Z. A novel approach for wide-swath and high‐resolution SAR image generation from distributed small spaceborne SAR systems. Int. J. Remote Sens. 27, 1015–1033 (2006).

Jackson, J. A. & Moses, R. L. A model for generating synthetic VHF SAR forest clutter images. IEEE Trans. Aerosp. Electron. Syst. 45, 1138–1152 (2009).

Kusk, A., Abulaitijiang, A. & Dall, J. Synthetic SAR image generation using sensor, terrain and target models in Proceedings of EUSAR 2016: 11th European conference on synthetic aperture radar 1–5 (VDE, 2016).

Wang, J. K., Zhang, M., Cai, Z. H. & Chen, J. L. SAR imaging simulation of ship-generated internal wave wake in stratified ocean. J. Electromagn. Waves Appl. 31, 1101–1114 (2017).

Wang, L., Zhang, M. & Wang, J. Synthetic aperture radar image simulation of the internal waves excited by a submerged object in a stratified ocean. Waves Random Complex. Media. 30, 177–191 (2018).

Dong, M., Cui, Y., Jing, X., Liu, X. & Li, J. End-to-end target detection and classification with data augmentation in SAR images in 2019 IEEE international conference on computational electromagnetics (ICCEM) 1–3 (IEEE, 2019).

Bandi, A., Adapa, P. V. S. R. & Kuchi, Y. E. V. P. K. The power of generative AI: a review of requirements, models, input–output formats, evaluation metrics, and challenges. Future Internet. 15, 260 (2023).

Wang, P. & Patel, V. M. Generating high quality visible images from SAR images using CNNs in 2018 IEEE radar conference (RadarConf18) 570–575 (IEEE, 2018).

Bhamidipati, S. R. M., Srivatsa, C., Gowda, C. K. S. & Vadada, S. Generation of SAR images using deep learning. SN Comput. Sci. 1, 1–9 (2020).

Ding, J., Chen, B., Liu, H. & Huang, M. Convolutional neural network with data augmentation for SAR target recognition. IEEE Geosci. Remote Sens. Lett. 13, 364–368 (2016).

Lv, J. & Liu, Y. Data augmentation based on attributed scattering centers to train robust CNN for SAR ATR. IEEE Access. 7, 25459–25473 (2019).

Jia, H., Wang, Y., Fu, S. & Xu, F. SAR image generation by integrating differentiable SAR renderer with neural networks in IGARSS 2023–2023 IEEE international geoscience and remote sensing symposium 2057–2060 (IEEE, 2023).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM. 63, 139–144 (2020).

Zhang, H. et al. StackGAN++: realistic image synthesis with stacked generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1947–1962 (2018).

Wang, G., Ye, J. C., Mueller, K. & Fessler, J. A. Image reconstruction is a new frontier of machine learning. IEEE Trans. Med. Imaging. 37, 1289–1296 (2018).

Yuan, Z. et al. Efficient and controllable remote sensing fake sample generation based on diffusion model. IEEE Trans. Geosci. Remote Sens. 61, 1–12 (2023).

Rui, X., Cao, Y., Yuan, X., Kang, Y. & Song, W. DisasterGAN: generative adversarial networks for remote sensing disaster image generation. Remote Sens. 13, 4284 (2021).

Jozdani, S., Chen, D., Pouliot, D. & Johnson, B. A. A review and meta-analysis of generative adversarial networks and their applications in remote sensing. Int. J. Appl. Earth Obs Geoinf. 108, 102734 (2022).

Ju, M., Niu, B. & Hu, Q. SARGAN: a novel SAR image generation method for SAR ship detection task. IEEE Sens. J. 23, 28500–28512 (2023).

Song, Q., Xu, F., Zhu, X. X. & Jin, Y. Q. Learning to generate SAR images with adversarial autoencoder. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2022).

Du, S., Hong, J., Wang, Y. & Qi, Y. A high-quality multicategory SAR images generation method with multiconstraint GAN for ATR. IEEE Geosci. Remote Sens. Lett. 19, 1–5 (2021).

Gao, H., Wang, C., Xiang, D., Ye, J. & Wang, G. TSPol-ASLIC: adaptive superpixel generation with local iterative clustering for time-series quad- and dual-polarization SAR data. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2021).

Liu, J., Wang, Q., Cheng, J., Xiang, D. & Jing, W. Multitask learning-based for SAR image superpixel generation. Remote Sens. 14, 899 (2022).

Xia, W., Liu, Z. & Li, Y. SAR-PeGA: a generation method of adversarial examples for SAR image target recognition network. IEEE Trans. Aerosp. Electron. Syst. 59, 1910–1920 (2022).

Zhang, J. et al. Application of deep generative networks for SAR/ISAR: a review. Artif. Intell. Rev. 56, 11905–11983 (2023).

Ramesh, A., Dhariwal, P., Nichol, A., Chu, C. & Chen, M. Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125, (2022).

Rombach, R., Blattmann, A., Lorenz, D., Esser, P. & Ommer, B. High-resolution image synthesis with latent diffusion models in 2022 IEEE/CVF conference on computer vision and pattern recognition (CVPR) 10674–10685 (IEEE, 2022).

Dhariwal, P. & Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 34, 8780–8794 (2021).

Cao, Z. H. et al. DDRF: denoising diffusion model for remote sensing image fusion. arXiv preprint arXiv:2304.04774, (2023).

Shaham, T. R., Dekel, T. & Michaeli, T. SinGAN: learning a generative model from a single natural image in 2019 IEEE/CVF international conference on computer vision (ICCV) 4569–4579 (IEEE, 2019).

Nikankin, Y., Haim, N. & Irani, M. SinFusion: training diffusion models on a single image or video. arXiv preprint arXiv:2211.11743, (2022).

Asano, Y. M., Rupprecht, C. & Vedaldi, A. A critical analysis of self-supervision, or what we can learn from a single image. arXiv preprint arXiv:1904.13132, (2019).

Deng, Q., Huang, Z., Tsai, C. C. & Lin, C. W. HardGAN: a haze-aware representation distillation GAN for single image dehazing in European conference on computer vision (eds. Vedaldi, A., Bischof, H., Brox, T. & Frahm, J. M.) 722–738 (Springer International Publishing, 2020).

Sun, W. & Liu, B. D. ESinGAN: enhanced single-image GAN using pixel attention mechanism for image super-resolution in 2020 15th IEEE international conference on signal processing (ICSP) 181–186 (IEEE, 2020).

Lin, J., Pang, Y., Xia, Y., Chen, Z. & Luo, J. TuiGAN: learning versatile image-to-image translation with two unpaired images in Computer vision–ECCV 2020: 16th European conference, proceedings, part IV 18–35 (Springer International Publishing, 2020).

Hinz, T., Fisher, M., Wang, O. & Wermter, S. Improved techniques for training single-image GANs in IEEE winter conference on applications of computer vision (WACV) 1300–1309 (IEEE, 2021).

Chen, J., Xu, Q., Kang, Q. & Zhou, M. MOGAN: morphologic-structure-aware generative learning from a single image. arXiv preprint arXiv:2103.02997, (2024).

Yoo, J. SinIR: efficient general image manipulation with single image reconstruction in International conference on machine learning 12040–12050 (PMLR, 2021).

Sushko, V., Zhang, D., Gall, J. & Khoreva, A. Generating novel scene compositions from single images and videos. arXiv preprint arXiv:2103.13389, (2024).

Zheng, Z., Xie, J. & Li, P. Patchwise generative convnet: training energy-based models from a single natural image for internal learning in 2021 IEEE/CVF conference on computer vision and pattern recognition (CVPR) 2961–2970 (IEEE, 2021).

Zhang, Z. C., Han, C. Y. & Guo, T. D. ExSinGAN: learning an explainable generative model from a single image. arXiv preprint arXiv:2105.07350, (2021).

Li, Z., Wang, Q., Snavely, N. & Kanazawa, A. InfiniteNature-Zero: learning perpetual view generation of natural scenes from single images in European conference on computer vision (eds. Avidan, S., Brostow, G., Cissé, M., Farinella, G. M. & Hassner, T.) 515–534 (Springer Nature Switzerland, 2022).

Zhang, Z. et al. SINE: SINgle image editing with text-to-image diffusion models in 2023 IEEE/CVF conference on computer vision and pattern recognition (CVPR) 6027–6037 (IEEE, 2023).

Kulikov, V., Yadin, S., Kleiner, M. & Michaeli, T. SinDDM: a single image denoising diffusion model in International conference on machine learning 17920–17930 (PMLR, 2023).

Wang, W. et al. SinDiffusion: learning a diffusion model from a single natural image. arXiv preprint arXiv:2211.12445, (2022).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 33, 6840–6851 (2020).

Dey, R. & Salem, F. M. Gate-variants of gated recurrent unit (GRU) neural networks in 2017 IEEE 60th international midwest symposium on circuits and systems (MWSCAS) 1597–1600 (IEEE, 2017).

Fu, R., Zhang, Z. & Li, L. Using LSTM and GRU neural network methods for traffic flow prediction in 31st youth academic annual conference of Chinese association of automation (YAC) 324–328 (IEEE, 2016).

Wang, D. et al. ADS-Net: an attention-based deeply supervised network for remote sensing image change detection. Int. J. Appl. Earth Obs Geoinf. 101, 102348 (2021).

Wang, Y., Wang, C., Zhang, H., Dong, Y. & Wei, S. A SAR dataset of ship detection for deep learning under complex backgrounds. Remote Sens. 11, 765 (2019).

Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004).

Zhang, R., Isola, P., Efros, A. A., Shechtman, E. & Wang, O. The unreasonable effectiveness of deep features as a perceptual metric in 2018 IEEE/CVF conference on computer vision and pattern recognition 586–595 (IEEE, 2018).

Barratt, S. & Sharma, R. A note on the inception score. arXiv preprint arXiv:1801.01973, (2018).

Szegedy, C. et al. Going deeper with convolutions in 2015 IEEE conference on computer vision and pattern recognition (CVPR) 1–9 (IEEE, 2015).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium in Advances in neural information processing systems 30 (NIPS 2017) 6629–6640 (ACM, 2017).

Dierking, W. & Busche, T. Sea ice monitoring by L-band SAR: an assessment based on literature and comparisons of JERS-1 and ERS-1 imagery. IEEE Trans. Geosci. Remote Sens. 44, 957–970 (2006).

Zhang, X. & Zuo, G. Small target detection in UAV view based on improved YOLOv8 algorithm. Sci. Rep. 15, 421. https://doi.org/10.1038/s41598-024-84747-9 (2025).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grants 62005320 and 61975044).

Author information


Contributions

H.C. and J.W. wrote the main manuscript text, and H.Y. and Z.L. completed the experiments and data analysis. All authors reviewed and revised the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, J., Yang, H., Liu, Z. et al. SSDDPM: A single SAR image generation method based on denoising diffusion probabilistic model. Sci Rep 15, 10867 (2025). https://doi.org/10.1038/s41598-025-95106-7


DOI: https://doi.org/10.1038/s41598-025-95106-7