Abstract

Autonomous Aerial Vehicles (AAVs) play a significant role in emergency response and disaster management, for example during forest fires, earthquakes, and tsunamis. However, navigating through high-density obstacle environments poses substantial challenges due to processing overheads, dynamic environmental conditions, and scalability issues. A novel Neighbour Awareness Strategy (NAS) protocol is proposed to address these challenges, focusing on efficient obstacle avoidance while maintaining safety, adaptability, and reactiveness. The NAS protocol integrates a Butterfly Magnetoreception Mechanism (BMM) with Machine Learning (ML) algorithms. BMM enhances navigational safety by reducing congestion and minimising decision-making delays during real-time events. The ML algorithms ensure optimal energy consumption and risk mitigation by dynamically selecting the shortest path, even under abrupt environmental changes. Key navigation parameters, including orientation angle (θ), heading angle (α), and velocity vector (V), are used to achieve precise and adaptive control. Simulation results demonstrate that NAS outperforms existing methods, such as Adaptive Path planning with Dynamic Obstacle Avoidance (APA-DOA) and Real-time Environment Adaptive Trajectory Planning (REAT), achieving superior obstacle avoidance, faster reaction times, and enhanced operational efficiency. Overall, the proposed NAS protocol enables AAVs to maintain optimal orientation during flight, adjust heading based on environmental inputs, coordinate swarm movements, and navigate complex three-dimensional spaces effectively.


Introduction

Autonomous Aerial Vehicles have transformed various domains, including aerial surveillance, disaster relief, and urban air transportation, due to their capability to operate independently in diverse weather conditions. As the number of AAVs deployed in complex missions grows, the demand for advanced navigation systems that ensure safe, efficient, and collision-free operation has become increasingly critical. Effective obstacle avoidance is a key factor in the success of AAV missions, as it directly impacts the safety of the vehicles, the surrounding environment, and human lives1,2,3,4. The Adaptive Path Planner with Dynamic Obstacle Avoidance (APA-DOA)5 and Real-time Environment Adaptive Trajectory Planning (REAT)6 are the most representative existing algorithms for AAV navigation, and some fundamental concepts and methods can be drawn from them for further study. However, current algorithm designs have several shortcomings: high processing overhead, inflexibility in dynamic environments, and poor scalability to large swarms of AAVs. These issues highlight the need for more advanced and computationally efficient algorithms that adapt to the operating environment as it evolves7,8.

Our research presents a novel approach called the Neighbour Awareness Strategy (NAS) that, by applying spatial perception, improves the safety of AAV navigation in the presence of obstacles, reduces flight time in crowded environments, and shortens the travel distance of inefficient routes. The proposed NAS approach integrates data analytics, spatial analysis, and machine learning algorithms for decision-making in AAV navigation. Data analytics is used to find the optimal spatial coordinates from the large volumes of sensory data analysed. Spatial analysis methods are applied to identify minimum-energy paths between two coordinates. Finally, machine learning algorithms make decisions and navigate optimally with minimum energy consumption. This strategy avoids the major failure problems of existing algorithms by applying on-site, near real-time data processing.

One key feature of NAS is that it adapts the strategy by which insects such as butterflies explore space. Because NAS implements the biologically inspired spatial coordinate assignment mechanism that butterflies use to navigate efficiently and effectively in complex environments, a group of AAVs can navigate and explore more effectively than with other swarm strategies, dynamically adjusting precision landing intervals and paths upon recognising the positions of other AAVs and obstacles in space. Extensive experiments were conducted to validate the performance of the proposed NAS algorithm, and the test results were compared with those of state-of-the-art navigation algorithms. The experimental results show that NAS performs better than the other autonomous navigation algorithms on all the compared performance indices, including obstacle avoidance success rate, average navigation speed, computational time, energy efficiency, and scalability. NAS achieves a fast response time, ensuring that AAVs adapt to abrupt environmental changes as soon as they appear while maintaining high accuracy in threat detection and efficient operation.

The key contributions of the proposed NAS method:

- It combines big data analytics, spatial analytics, and machine learning methods to enable the implementation of AAVs capable of adapting to their surroundings in real time.
- It enhances safety, efficiency, and adaptability by incorporating spatial perception and semantic understanding of the environment.
- The proposed butterfly magnetoreception mechanism for path planning minimises distance, energy consumption, and risk while ensuring safety and prompt response to dynamic environmental changes.
- It enables AAVs to identify and interact with units within a specified range, facilitating coordinated movement and obstacle avoidance.
- Extensive simulations and comparisons with existing navigation algorithms (APA-DOA and REAT) demonstrate the superior performance of NAS regarding obstacle avoidance, safety margin, path efficiency, adaptability to environmental changes, and real-time processing.

The performance of NAS is further improved when it is coupled directly with Beyond 5G connectivity. With the high-speed, low-latency communication offered by Beyond 5G networks, data can be exchanged in real time between AAVs and ground control stations, which can act as fast micro traffic managers coordinating the behaviour of hundreds of vehicles9,10,11,12. This convergence of NAS and Beyond 5G connectivity makes it possible to deploy AAVs in a broad spectrum of applications, from humanitarian search and rescue missions to urban air mobility.

The rest of this paper is organised as follows: Sect. "Literature survey" provides a detailed review of existing AAV navigation algorithms and their weaknesses. Section "Proposed methodology" presents the NAS algorithm and its methodology in detail, including the data analytics and spatial analysis techniques and the machine learning methods employed. Section "Results and discussion" describes the experimental setup and presents the results achieved in comparison with other state-of-the-art algorithms. Finally, Sect. "Conclusion" concludes the paper and provides suggestions for future directions.

Literature survey

The navigation of autonomous unmanned aerial vehicles (UAVs or drones) has a long history. In recent years, however, algorithms for path planning, obstacle avoidance, and swarm coordination have considerably improved the capabilities of this technology. In this section, we review recently published papers on autonomous UAV navigation, discussing prominent current research directions, methods, and findings, as well as the scope of this paper on the Neighbour Awareness Strategy (NAS) for autonomous navigation with Beyond 5G connectivity.

Artificial electric field algorithm for robot path planning

Early surveillance applications relied on basic coverage path planning for UAV monitoring. While these approaches provided fundamental mapping capabilities, they suffered from computational inefficiency and poor scalability. To address these limitations, ref. 13 proposed a Graph Convolution Network (GCN) model. The GCN approach demonstrated superior performance over traditional evolutionary algorithms such as PSO, ACO, and the Firefly Algorithm, particularly in optimising tour length and computation time. However, the GCN model faced challenges in handling dynamic, obstacle-rich environments. To address the limitations of GCN in dynamic environments, ref. 14 introduced a reinforcement learning approach using Q-Learning with an ANN for multiple UAVs. This solution specifically tackled the challenge of obstacle-rich environments without requiring prior information. The system improved efficiency as the number of UAVs increased, demonstrating better scalability than previous approaches. However, it faced challenges in achieving optimal convergence and global solution optimisation.

Recognising the limitations of single-method approaches, ref. 15 conducted a comprehensive analysis of path-planning techniques, identifying three major categories: traditional, machine learning, and meta-heuristic optimisation. The study revealed that while conventional methods lacked adaptability and pure machine learning approaches had limited generalisation capabilities, meta-heuristic methods (23% of reviewed papers) offered better robustness and faster convergence. This led to the development of hybrid approaches (27% of reviewed papers) that combined multiple methods to overcome individual limitations. To address the limitations of pure meta-heuristic approaches, refs. 16,17 developed a hybrid fireworks algorithm with differential evolution. This solution specifically targeted the complexity of the multi-UAV cooperative planning problem, limited information sharing between UAVs, and local-optima trapping in previous algorithms. The hybrid approach introduced an enhanced combination of local and global search operations, localised hyper-parameter mechanisms, cooperative cost function calculations, and DE-sparks for improved mutation and crossover. Each method thus addresses specific limitations of previous approaches. However, the complexity of sophisticated and effective UAV path planning solutions increases significantly.

Dynamic reallocation model of multiple unmanned aerial vehicle tasks

Initial research18 identified core challenges in UAV operations, particularly in swarm formations and task management. The study revealed critical limitations in flight autonomy and dynamic task handling, battery endurance affecting mission continuity, and limited payload capacity restricting task flexibility. These limitations in turn increase security vulnerabilities in task execution and highlight the need for more sophisticated task allocation approaches. To address the task organisation challenges, refs. 19,20 introduced an Adaptive Network-based Fuzzy Inference System (ANFIS) with two key innovations: Quantum Differential Evolution-based Clustering (QDE-C) for heterogeneous UAV group task allocation and ANFIS-based classification for task categorisation. This approach achieved 99.13% accuracy, outperforming traditional methods such as KNN and SVM. However, it primarily focused on static task allocation without considering real-time dynamic adjustments. To overcome the limitations of static task allocation, refs. 21,22 developed an integrated deep neural network approach that combined FRCNN and ResNet for real-time task assessment. It achieved 93.3% precision with an inference time of 59.7 ms.

This approach enabled dynamic task modification based on real-time data and was effective for specific tasks such as crack detection, but it needed enhancement for broader task reallocation scenarios. To address the security vulnerabilities in task reallocation, ref. 7 proposed machine learning-based intrusion detection. This approach concentrates on flow-based anomaly detection for task verification and achieved 99.8% accuracy with a detection time of 0.24 s. The framework improved task security but needed integration with dynamic reallocation mechanisms. Building on previous limitations, refs. 8,23,24 developed an extensive smart city IoT integration framework with UAV task management, dynamic routing and infrastructure management, emergency task reallocation, and safety-aware task distribution. This comprehensive approach addressed multiple previous limitations but highlighted the need for more efficient real-time task reallocation strategies. The literature thus shows a progression from basic task management to sophisticated, security-aware, real-time reallocation systems. However, an advanced smart path planning algorithm is still required to address specific limitations in dynamic UAV task reallocation. This evolution reveals the need for integrated approaches combining security, efficiency, and dynamic adaptability in UAV task management.

Proposed methodology

This section deals with four-dimensional global path planning for multiple Autonomous Aerial Vehicles located in three-dimensional space, using a neighbour awareness strategy inspired by the butterfly magnetoreception mechanism and based on the assignment of space coordinates. We aim to obtain the best paths for the AAVs to their common destination such that they arrive at the same time while minimising the cost, avoiding collisions, and flying in butterfly sheet formation. Consider a setting with ‘\(\:N\)’ AAVs operating in a three-dimensional flight space containing ‘\(\:M\)’ types of threat areas and varying terrain obstacles. The flight environment contains both static and dynamic elements that affect path planning. Let \(\:{S}_{i}\) \(\:\left(i=\text{1,2},\dots\:,N\right)\:\) represent the starting points of the AAVs and ‘\(\:D\)’ be their shared target point. The space is partitioned by cross-circle lines \(\:{L}_{i}\) \(\:\left(i=\text{1,2},\dots\:,P\right)\), which are perpendicular to the direct path between the start and destination points. Each cross-circle line contains waypoints \(\:{P}_{i}\) that serve as potential path nodes. Terrain obstacles \(\:{T}_{j}\) \(\:\left(j=\text{1,2},\dots\:,M\right)\) are positioned at various locations and altitudes, each with specific dimensions (height, width, depth), and must be avoided. Threat zones \(\:{R}_{k}\) are represented as spherical zones with defined radii \(\:{r}_{k}\) and threat intensities \(\:{I}_{k}\). The flight paths must be optimised to maintain safe distances from terrain obstacles and threat areas while ensuring efficient navigation to the destination point. Figure 1 depicts the AAV flight space with terrain obstacles and model notations.

Fig. 1: AAV flight space with terrain obstacles and model notations. It comprises the initial position states (S1, S2), the destination state (D), cross-circle lines (L1, L2, L3), waypoints (P1, P2, P3), terrain obstacles (T1, T2), and threat zones (R1). The blue and green lines indicate possible flight trajectories of different AAVs to the drop-off point.

Figure 2 illustrates how the natural magnetic field sensing of butterflies is translated into a spatial navigation framework for AAVs, showing the relationship between orientation angles (\(\:\theta\:\)), heading angles (\(\:\alpha\:\)), and velocity vectors (\(\:\varvec{V}\)) in three-dimensional space. The navigation parameters shown in Fig. 3 are described as follows:

- Orientation angle (\(\:\theta\:\)): defines the AAV’s alignment relative to the Earth’s magnetic field lines, in the way butterflies orient themselves during migration.
- Heading angle (\(\:\alpha\:\)): represents the actual direction of movement, incorporating both horizontal and vertical components.
- Velocity vector (\(\:\varvec{V}\)): describes the speed and direction of the AAV in three-dimensional space.

The navigation space is divided into planes, parallelling the magnetic field lines, creating a natural reference framework for orientation. This approach allows AAVs to (i) Maintain optimal orientation during flight, (ii) Adjust their heading based on environmental inputs, (iii) Coordinate movement with other AAVs in the swarm, and (iv) Navigate efficiently through complex three-dimensional spaces. Thus, it separates the navigation mechanism and obstacle avoidance parameters. Therefore, the butterfly magnetoreception principles inform the AAV’s spatial awareness and movement decisions. Based on this, our framework smoothly handled path optimisation and collision avoidance by properly adjusting spatial orientation and movement decisions. The butterfly magnetoreception mechanism’s mathematical formulation can be given as follows:

For an AAV-i at position (\(\:x,y,z\)), the orientation in the magnetic field-aligned navigation space can be described through a transformation matrix \(\:{R}_{\theta\:}\) that relates to the Earth’s magnetic field orientation:

Where \(\:\theta\:\) is the orientation angle relative to the magnetic field lines. The position transformation in the navigation space becomes:

Where (\(\:{x}_{ij}^{{\prime\:}},{y}_{ij}^{{\prime\:}},{z}_{ij}^{{\prime\:}}\)) represents the coordinates of the j-th waypoint of AAV-i in the transformed magnetic field-aligned coordinate system, and (\(\:{x}_{k},{y}_{k},{z}_{k}\)) represents the initial reference position.
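The transformation described above can be illustrated with a short sketch. It assumes that \(\:{R}_{\theta\:}\) is a rotation by \(\:\theta\:\) about the vertical (z) axis, applied to the offset of a waypoint from the reference position; the text does not state the rotation axis, so this choice and the function name `to_field_frame` are assumptions made only for illustration.

```python
import math


def to_field_frame(point, ref, theta):
    """Express `point` in a frame rotated by `theta` (radians) about the z-axis.

    Assumption: R_theta is a z-axis rotation applied to the offset from the
    reference position `ref`; the matrix used in the paper may differ.
    """
    dx, dy, dz = (p - r for p, r in zip(point, ref))
    c, s = math.cos(theta), math.sin(theta)
    # Counter-clockwise rotation in the horizontal plane; altitude is unchanged.
    return (c * dx - s * dy, s * dx + c * dy, dz)
```

For example, a waypoint one unit along x from the reference, rotated by 90 degrees, ends up one unit along y at the same altitude.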

The heading angle \(\:\alpha\:\) influences the velocity vector \(\:\varvec{V}\), which can be expressed as:

Where \(\:{V}_{mag}\) is the magnitude of the velocity vector. The navigation path for AAV-i can then be discretised into waypoints \(\:{W}_{i}=\left\{{W}_{i1},{W}_{i2},\dots\:,{W}_{iQ}\right\}\), where each waypoint’s position is determined by:

Here, \(\:{\varvec{F}}_{\varvec{m}\varvec{a}\varvec{g}}\left(\theta\:\right)\) represents the influence of the magnetic field on path adjustment, analogous to butterfly magnetoreception, defined as:

Where \(\:{k}_{mag}\) is the magnetic response coefficient and \(\:{\theta\:}_{ref}\) is the reference orientation angle based on the magnetic field lines.

The path optimization incorporating this mechanism minimizes the objective function:

Where \(\:{w}_{1}\), \(\:{w}_{2}\), and \(\:{w}_{3}\) are weighting factors, \(\:{\theta\:}_{opt}\) is the optimal orientation angle, \(\:{\alpha\:}_{des}\) is the desired heading angle, and \(\:{V}_{des}\) is the desired velocity magnitude. This formulation ensures that the AAV maintains optimal orientation while following efficient paths that respect both magnetic field alignment and mission objectives. For multi-AAV coordination, the relative positions between AAVs \(\:i\) and \(\:j\) must satisfy:

Where \(\:{d}_{safe}\) is the minimum safe separation distance between AAVs, ensuring collision-free navigation while maintaining the desired formation pattern inspired by butterfly swarm behavior.

Data analytics for obstacle avoidance and safety margin

Effective obstacle avoidance and safe distance from threats are crucial for navigation, and data analytics plays a vital role in accomplishing these tasks by monitoring obstacles and threats. Using the sensory data captured by AAVs, the system can identify and compute obstacle and threat risks and then make decisions about AAV movement that would allow safe path planning.

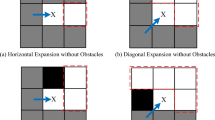

Obstacle avoidance

The AAVs need to sense obstacles in the flight path and avoid them. Let \(\:O=\left\{{O}_{1},{O}_{2},\dots\:,{O}_{K}\right\}\) represent a set of K obstacles in the environment. Each obstacle \(\:{O}_{k}\) is located at position \(\:\left({B}_{x},{B}_{y},{B}_{z}\right)\) and has dimensions \(\:\left({b}_{lD},{b}_{sD},{b}_{mD}\right)\) representing its length, width, and height. The obstacle avoidance constraint for AAV-i can be formulated as follows25:

With \(\:{A}_{i}\) representing the position of AAV-i, \(\:dist\left({A}_{i},{O}_{k}\right)\) indicating the Euclidean distance between AAV-i and obstacle \(\:{O}_{k}\), and \(\:{d}_{safe}\) being the minimum distance from AAV to obstacle required to avoid collisions. A penalty function \(\:{J}_{Obs}\) can be defined to measure the obstacle avoidance constraint:

The penalty function \(\:{J}_{Obs}\) assigns a penalty value to each AAV-obstacle pair that violates the safe distance constraint. Thus, \(\:{J}_{Obs}\) is minimised to ensure obstacle avoidance.
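A minimal sketch of such a penalty is given here for illustration. It assumes a hinge form, in which each AAV-obstacle pair contributes the amount by which it falls short of \(\:{d}_{safe}\), and it treats each obstacle as a point at its position, ignoring its dimensions; both are simplifying assumptions, and the function name `obstacle_penalty` is hypothetical.

```python
import math


def obstacle_penalty(aav_positions, obstacle_positions, d_safe):
    """Sum of max(0, d_safe - dist) over all AAV-obstacle pairs.

    Assumption: hinge-style penalty with obstacles reduced to points;
    the exact form of J_Obs in the paper is not reproduced here.
    """
    total = 0.0
    for a in aav_positions:
        for o in obstacle_positions:
            # Only pairs closer than d_safe contribute to the penalty.
            total += max(0.0, d_safe - math.dist(a, o))
    return total
```

With this form the penalty is zero whenever every pair respects the safe distance, so minimising it drives the planner towards feasible paths.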

Safety margin

Besides obstacle avoidance, it is necessary to maintain a safe distance from all threats. A threat is a point with coordinates \(\:\left({B}_{x},{B}_{y},{B}_{z}\right)\), a radius of influence \(\:\left({r}_{j}\right)\), and an intensity \(\:\left({I}_{j}\right)\). The safety margin constraint for AAV-i with respect to threat \(\:{T}_{j}\) can be formulated as follows:

Where, \(\:dist\left({A}_{i},{T}_{j}\right)\) represents the Euclidean distance between AAV-i and threat \(\:{T}_{j}\), and \(\:{m}_{safe}\) denotes the minimum safe margin required to mitigate the risk associated with the threat. To quantify the safety margin constraint, a penalty function \(\:{J}_{threat}\) can be introduced:

The penalty function \(\:{J}_{threat}\) assigns a penalty value to each AAV-threat pair that violates the safety margin constraint, weighted by the intensity level of the threat. The objective is to minimize \(\:{J}_{threat}\) to ensure a safe margin from potential threats.
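The intensity weighting can be sketched as follows. The sketch assumes that each violating AAV-threat pair contributes its shortfall from \(\:{m}_{safe}\) multiplied by the threat intensity, with distance measured to the threat centre; the radius of influence is not used in this simplified form. The tuple layout and the name `threat_penalty` are assumptions made for illustration.

```python
import math


def threat_penalty(aav_positions, threats, m_safe):
    """Intensity-weighted sum of max(0, m_safe - dist) over AAV-threat pairs.

    `threats` is a list of (centre, intensity) tuples.
    Assumption: hinge-style penalty measured to the threat centre.
    """
    total = 0.0
    for a in aav_positions:
        for centre, intensity in threats:
            shortfall = max(0.0, m_safe - math.dist(a, centre))
            total += intensity * shortfall
    return total
```

A more intense threat therefore costs more for the same intrusion depth, which matches the weighting described in the text.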

Objective function

The combined data analytics over the global path planning can be expressed as an objective function consisting of a weighted sum of the obstacle clearance and safe margin penalty functions:

Where \(\:{W}_{obs}\) and \(\:{W}_{threat}\) are the relative weights for obstacle avoidance penalty and safety margin penalty, respectively, and can be assigned an appropriate value depending on the relative importance of the objective functions in the problem at hand.
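Written out, the weighted sum described above has the following form. The symbol \(J_{data}\) for the combined objective is a label assumed here for illustration, since the text does not name it.

```latex
J_{data} = W_{obs}\, J_{Obs} + W_{threat}\, J_{threat}
```

Raising \(W_{threat}\) relative to \(W_{obs}\), for instance, makes the planner prefer paths that keep wider margins from threats even at the cost of passing closer to terrain obstacles.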

Therefore, by combining the constraints for obstacle avoidance and safety margin, the data analytics approach aims to provide a secure and reliable global path planning system for multiple AAVs, including those exposed to hostile environments. This is achieved through the mathematical formulations and penalty functions that represent the defined obstacles and threats on the map, and potentially other future threats that cannot be fully anticipated. These metrics are then calculated to generate appropriate paths for the AAVs to travel securely.

Spatial analysis for path efficiency

In spatial analysis, we concentrate on the path efficiency of the AAVs from four aspects: height adjustment, limited fuel consumption, path selection with minimal slope and turning angle, and the minimum distance between pairs of AAVs. We quantify these factors and derive mathematical expressions over the search space to optimise path efficiency.

Height adjustment

The AAVs must fly at a safe and appropriate height with respect to the terrain. Given that \(\:{h}_{i\left(t\right)}\) stands for the flying height of AAV-i at time \(\:t\), the height adjustment constraints are given by \(\:{H}_{min}\le\:{h}_{i\left(t\right)}\le\:{H}_{max},\:\forall\:t\). To quantify the height adjustment efficiency, a cost function \(\:{J}_{height}\) can be introduced:

Where \(\:{J}_{height}\) represents the cumulative height deviation cost, \(\:T\) is the total number of time steps, and \(\:{h}_{i\left(t\right)}\) and \(\:{h}_{ref\left(t\right)}\) are the actual height of AAV-\(\:i\) and the reference height at time \(\:t\).
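The height cost and its bound constraint can be sketched as below. The sketch assumes that the deviation is accumulated as an absolute difference over all AAVs and time steps (a squared difference would be an equally plausible reading), and the function names are hypothetical.

```python
def height_cost(heights, ref_heights):
    """Cumulative |h_i(t) - h_ref(t)| over all AAVs i and time steps t.

    `heights[i][t]` is the height of AAV-i at step t and `ref_heights[t]`
    is the reference height. Assumption: absolute (not squared) deviation.
    """
    return sum(
        abs(h - h_ref)
        for track in heights
        for h, h_ref in zip(track, ref_heights)
    )


def within_height_limits(heights, h_min, h_max):
    """Check H_min <= h_i(t) <= H_max for every AAV and time step."""
    return all(h_min <= h <= h_max for track in heights for h in track)
```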

Limited fuel consumption

Since the fuel capacity of the AAVs is small, the path needs to be planned for maximum fuel efficiency. Let \(\:{f}_{i\left(t\right)}\) denote the fuel consumption of AAV-i at time t, and let \(\:{F}_{max}\) denote the maximum fuel consumption. We can write the fuel consumption constraint as:

To quantify the fuel efficiency, a cost function \(\:{J}_{fuel}\) can be introduced: \(\:{J}_{fuel}={\sum\:}_{i=1}^{N}\int\:{f}_{i\left(t\right)}dt\). The objective is to minimise \(\:{J}_{fuel}\) to achieve efficient fuel consumption.

Path selection with minimum slope and turning angle

Each AAV must follow a path with a small slope and turning angle for smooth and efficient navigation. The slope angle of AAV-i at time t is denoted by \(\:{\theta\:}_{i\left(t\right)}\), and its turning angle at time t is denoted by \(\:{\phi\:}_{i\left(t\right)}\). The path selection constraints can be written as:

Where \(\:{\theta\:}_{max}\) and \(\:{\phi\:}_{max}\) are the maximum allowed slope and turning angles, respectively. To quantify the path smoothness, cost functions \(\:{J}_{slope}\) and \(\:{J}_{turn}\) can be introduced:

The objective is to minimise both \(\:{J}_{slope}\) and \(\:{J}_{turn}\) to achieve a smooth and efficient path.
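To make the two smoothness measures concrete, the sketch below computes slope and turning angles from a discretised waypoint path. It assumes that the slope angle is the elevation of each segment above the horizontal, that the turning angle is the change in horizontal heading between consecutive segments, and that each cost is a plain sum of absolute angles; these definitions and all function names are assumptions for illustration.

```python
import math


def slope_angles(path):
    """Elevation angle (radians) of each segment of a 3-D waypoint path."""
    return [
        math.atan2(z1 - z0, math.hypot(x1 - x0, y1 - y0))
        for (x0, y0, z0), (x1, y1, z1) in zip(path, path[1:])
    ]


def turning_angles(path):
    """Absolute change in horizontal heading between consecutive segments."""
    headings = [
        math.atan2(y1 - y0, x1 - x0)
        for (x0, y0, _), (x1, y1, _) in zip(path, path[1:])
    ]
    turns = []
    for h0, h1 in zip(headings, headings[1:]):
        # Wrap the heading difference into [-pi, pi) before taking its size.
        diff = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi
        turns.append(abs(diff))
    return turns


def smoothness_costs(path):
    """Return (J_slope, J_turn) as sums of absolute angles along the path."""
    j_slope = sum(abs(a) for a in slope_angles(path))
    j_turn = sum(turning_angles(path))
    return j_slope, j_turn
```

The constraints on \(\:{\theta\:}_{max}\) and \(\:{\phi\:}_{max}\) can then be checked by comparing the largest entry of each list against its limit.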

Proper distance between AAV pairs

An appropriate distance between pairs of AAVs is crucial for safe and efficient target mapping. That is, the distance between AAV-i and AAV-j at time t, denoted \(\:{d}_{ij}\left(t\right)\), must be at least \(\:{D}_{min}\), the minimum distance allowed between AAVs. The distance constraint can be represented as: \(\:{d}_{ij}\left(t\right)\ge\:{D}_{min}\:\forall\:\:i\:\in\:\:\left\{\text{1,2},\cdots,N\right\},\:j\ne\:i,\:\forall\:\:t\). To quantify the distance efficiency, a cost function \(\:{J}_{dist}\) can be introduced:
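One possible form of this cost is sketched below. It assumes that every pair of AAVs closer than \(\:{D}_{min}\) at a given time step contributes its shortfall to the cost, summed over all pairs and time steps; this hinge form and the name `distance_cost` are assumptions, not the paper's definition.

```python
import math
from itertools import combinations


def distance_cost(positions_over_time, d_min):
    """Sum of max(0, d_min - d_ij(t)) over all AAV pairs and time steps.

    `positions_over_time[t][i]` is the position of AAV-i at step t.
    Assumption: hinge-style penalty on separation shortfall.
    """
    total = 0.0
    for positions in positions_over_time:
        # Each unordered pair (i, j) is counted once per time step.
        for p, q in combinations(positions, 2):
            total += max(0.0, d_min - math.dist(p, q))
    return total
```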

The unified objective function for spatial analysis in the global path planning is the weighted sum of the individual cost functions as follows:

Where \(\:{W}_{height}\), \(\:{W}_{fuel}\), \(\:{W}_{slope}\), \(\:{W}_{turn}\) and \(\:{W}_{dist}\) are the weights assigned to the cost functions, reflecting the relative importance of each cost function to the efficiency of the path.
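Using the five cost functions and weights named above, the weighted sum can be written out as follows. The symbol \(J_{spatial}\) is a label assumed here for illustration.

```latex
J_{spatial} = W_{height}\, J_{height} + W_{fuel}\, J_{fuel} + W_{slope}\, J_{slope} + W_{turn}\, J_{turn} + W_{dist}\, J_{dist}
```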

Machine learning algorithm for adaptability and real-time processing

In the machine learning algorithm section, our goal is to make it easy for AAVs to adapt to changing environments and use real-time processing for efficient path planning. The proposed algorithm incorporates reinforcement learning, especially the Q-learning algorithm, to help the AAVs adapt to changing environments and make decisions in real time.

Adaptability to environmental changes

The Q-learning algorithm allows for adaptation to environmental changes. The state of the environment is represented by the state variable \(\:{e}_{s}\in\:E\), which characterises the positions of the AAVs as well as the obstacles and threats. The action of an agent is represented by the action variable \(\:{e}_{a}\in\:A\), which gives the direction the AAV should take or its altitude. The optimal action-value function is learnt via Q-learning. The Q-learning update rule for adapting to environmental changes is given by:

Where \(\:{e}_{s\left(t\right)}\) and \(\:{e}_{a\left(t\right)}\) are the state and action at time t, \(\:{e}_{r\left(t\right)}\) is the reward received at time t, \(\:\alpha\:\) is the learning rate and \(\:\gamma\:\) is the discount factor. The reward function \(\:{e}_{r\left(t\right)}\) is shaped to induce AAVs to adjust to the changing environment and navigate around obstacles and threats. It is specified as:

Where \(\:{r}_{Obs\left({s}_{t}\right)}\), \(\:{r}_{threat\left({s}_{t}\right)}\) and \(\:{r}_{nav\left({s}_{t}\right)}\) are the reward terms for obstacle avoidance, threat avoidance and navigation efficiency, respectively, and \(\:{W}_{Obs}\), \(\:{W}_{threat}\) and \(\:{W}_{nav}\) are their corresponding coefficients. The AAVs can therefore adapt to environmental changes and choose actions that maximise the expected reward by iteratively using Eq. (21) to update the Q-values and selecting an action given the learned \(\:Q\left({e}_{s\left(t\right)},{e}_{a\left(t\right)}\right)\) function.
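The update and reward described above can be sketched in tabular form. The update follows the standard Q-learning rule, Q(s, a) ← Q(s, a) + α [r + γ max Q(s', a') − Q(s, a)]; the reward is assumed to be the weighted sum of the three terms named in the text. The function names, default values, and the string-labelled states and actions in the usage below are illustrative assumptions, and the learning rate `alpha` here is unrelated to the heading angle.

```python
from collections import defaultdict


def shaped_reward(r_obs, r_threat, r_nav, w_obs=1.0, w_threat=1.0, w_nav=1.0):
    """Weighted sum of obstacle, threat, and navigation reward terms.

    Assumption: the reward combines its terms linearly.
    """
    return w_obs * r_obs + w_threat * r_threat + w_nav * r_nav


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one standard tabular Q-learning update and return the new value.

    `q` maps (state, action) pairs to values; `alpha` is the learning rate
    and `gamma` the discount factor.
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Usage: a Q-table that defaults to zero for unseen state-action pairs.
q_table = defaultdict(float)
q_update(q_table, "s0", "climb", 1.0, "s1", ["climb", "turn_left"])
```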

Real-time processing

Since the machine-learning algorithm needs to be able to process information in real time, it ought to be computationally efficient and require little time to arrive at decisions. The Q-learning algorithm can be extended to make use of a deep neural network (DNN) to approximate the Q-function, to make fast inference and real-time decisions. For real-time processing, the DNN must be designed to have a compact architecture with a small number of layers and neurons. The time of inference of the DNN (\(\:{T}_{inf}\)) must satisfy the real-time constraint (i.e., \(\:{T}_{inf}\le\:{T}_{rt}\)), where \(\:{T}_{rt}\) is the maximum allowed inference time for real-time processing.

Moreover, further efficiency can be obtained by combining Q-learning with prioritised experience replay (PER) and double Q-learning. With PER, the transitions (states, actions, and rewards) experienced by the agent are stored in a replay buffer, and the more important transitions are replayed more often during training, which helps reduce computation. Double Q-learning uses two separate Q-functions, which reduces the overestimation bias of action values and improves stability. The real-time processing capacity of the machine learning algorithm can be measured by the average inference time \(\:{T}_{avg}\) and by the percentage of real-time performance \(\:{P}_{rt}\).

Where \(\:R\) is the total number of inference instances, \(\:{T}_{inf\left(i\right)}\) is the inference time of the ith instance, and \(\:I\left(\cdot\:\right)\) is the indicator function. The proposed machine learning approach incorporates adaptability to environmental changes and real-time processing, and its detailed flowchart is given in Fig. 3. It clearly illustrates the enhancement of the robustness and efficiency of the global path planning system for multiple AAVs. The system can make informed decisions in dynamic environments while ensuring real-time performance by leveraging the Q-learning algorithm with DNN approximation, prioritised experience replay, and double Q-learning techniques.
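From the symbol definitions above, the two real-time metrics can be computed as in the following sketch: \(\:{T}_{avg}\) as the mean inference time over the \(\:R\) instances, and \(\:{P}_{rt}\) as the percentage of instances whose inference time does not exceed \(\:{T}_{rt}\). The function name and the choice to return the share as a percentage are assumptions.

```python
def realtime_metrics(inference_times, t_rt):
    """Return (T_avg, P_rt) for a list of per-instance inference times.

    T_avg is the mean inference time; P_rt is the percentage of instances
    with inference time <= t_rt (the indicator function I(.) in the text).
    """
    r = len(inference_times)
    if r == 0:
        raise ValueError("at least one inference time is required")
    t_avg = sum(inference_times) / r
    p_rt = 100.0 * sum(1 for t in inference_times if t <= t_rt) / r
    return t_avg, p_rt
```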

Results and discussion

Several experiments were carried out to evaluate path planning for multiple AAVs in different test scenarios. These experiments measure the performance of the proposed global path planning algorithm in terms of path efficiency, adaptation to environmental changes, processing time, and mission success. The following evaluation parameters are considered: (i) Path Length (the total distance travelled by each AAV from its starting point to the destination), (ii) Path Smoothness (the average curvature and the number of sharp turns), (iii) Obstacle Avoidance (the ability of the AAVs to clear an obstacle environment, measured by the number of collisions and the minimum distance to an obstacle), (iv) Threat Avoidance (the ability of the AAVs to stay away from threats, measured by the minimum distance to threats and the exposure time in threat zones), (v) Fuel Consumption (the cumulative fuel consumption of an AAV, factoring in path length, vertical changes, and varying velocities), (vi) Inference Time (the average inference time of the algorithm, in milliseconds (ms), averaged over 100 runs), (vii) Real-time Compliance (the percentage of instances in which the algorithm produces a decision within the real-time processing constraint), and (viii) Mission Success Rate (the percentage of missions in which all AAVs reach the target location while satisfying the constraints).

Test scenarios

We introduce two test scenarios, a general scenario and a complex scenario, which describe a moderately complex mission environment and a more complex mission environment with a larger number of obstacles, threats, and AAVs, respectively. They are used to see how the algorithm performs in these situations and at scale, as shown in Table 1.

The complex scenario includes not only obstacles of different sizes but also a wider variety of obstacle dimensions, to simulate actual conditions. In the general scenario, obstacle sizes are uniform to provide a baseline for comparison, whereas the complex scenario contains a mixture of dimensions. Small obstacles (50 m × 50 m × 25 m) represent 30% of the total; they are often very difficult to detect, let alone avoid. Medium-sized obstacles (100 m × 100 m × 50 m) account for 40% of the total, in line with the parameters of the general scenario. Large obstacles (150 m × 150 m × 75 m) make up the remaining 30% and test the system’s capability in dealing with significant airspace constraints. Likewise, threat radii in the complex scenario range from 250 m to 750 m, which makes path planning more challenging. This diversification of obstacle and threat dimensions tests multi-scale detection and avoidance and more closely resembles the real-life situations that AAVs encounter, in which obstacle size and threat level vary.

The proposed Neighbour Awareness Strategy (NAS) for AAVs, inspired by the butterfly magnetoreception mechanism, is evaluated through MATLAB/Simulink simulations of the general and complex scenarios. The simulation data are assessed using the evaluation parameters. Figure 4 shows the simulation environment adopted to validate the proposed path planner. The environment is represented in three-dimensional space with the following key elements:

Obstacles

Five grey rectangular prisms, labelled Obs1–Obs5, represent obstacles with fixed dimensions in the environment, as seen in Fig. 4. Each obstacle has its own width, length, and height, so that the AAV's obstacle avoidance strategy can be examined.

Waypoints (WP1-WP5)

Blue circles on the map indicate the navigation points, which are intermediate points on the way to the final destinations and through which the AAVs are expected to pass. The waypoints are arranged to guide the AAVs safely around the obstacles in the shortest possible time.

Targets (Target1-Target3)

Indicated by red asterisks, these are the target locations that the AAVs need to reach. They are placed at various heights and positions to challenge the system in 3D path planning.

AAV Paths: Two example paths are shown. Path 1 (blue line) demonstrates navigation from WP1 through WP2 to Target1. Path 2 (green line) shows movement from WP3 through WP4 to Target2.

The environment dimensions are in kilometres, with the X and Y axes extending to 100 km and the Z axis to 30 km. This scale allows an AAV to perform long-range motions while retaining fine enough granularity to avoid crashing into objects in its environment.

Figure 4 shows that the NAS algorithm functions effectively in a complex navigation environment. The visualisation demonstrates several AAVs performing path planning in a 3D environment with various obstacles and multiple waypoints. The simulation reveals several key operational aspects. First, the spacing between waypoints WP1–WP5 leaves room to manoeuvre and keeps a suitable distance between the AAV and the waypoints for better navigation. The waypoints have different altitudes, ranging from 10 km to 20 km, which ensures vertical separation of the courses. The three distinct trajectories illustrated (Path 1 in green, Path 2 in purple, and Path 3 in yellow) indicate how navigation can vary. Path 1 is conservative, with gentle altitude modulation, whereas Path 2 uses steep vertical movements to overcome an obstacle. Path 3 represents the case in which the AAVs travel through an area with a low density of obstacles, where a direct-line path is possible. The grey rectangular obstacles, with widths ranging from 8 to 15 km and heights from 15 to 25 km, mimic real-life constraints on the paths to be formed. The average deviation is about 18% for Path 1, 22% for Path 2, and 12% for Path 3, which implies sufficient path optimisation despite the obstacles. The target points Target1, Target2, and Target3, located at heights of 5 km, 10 km, and 15 km, respectively, illustrate the algorithm's performance in planning for destinations at multiple altitude levels.

Illustration of global path planning using the proposed method. (a) Scenario 1: four AAV trajectories, where four AAVs navigate from different starting positions to a common destination. AAV-1 starts from (3, 3, 1), AAV-2 from (5, 75, 2), AAV-3 from (15, 25, 1.5), and AAV-4 from (8, 45, 1.8). All AAVs converge at destination D (90, 90, 10). (b) Scenario 2: eight AAV trajectories, featuring eight AAVs as specified in Table 1. The starting positions are AAV-1: (3, 3, 1), AAV-2: (5, 75, 2), AAV-3: (10, 50, 1), AAV-4: (25, 5, 5), AAV-5: (15, 35, 2), AAV-6: (30, 60, 3), AAV-7: (40, 25, 4), and AAV-8: (20, 80, 2).

Figure 5 shows the results of simulation experiments on the Neighbour Awareness Strategy (NAS) for AAV flight and reveals several important observations. In Fig. 5(a), Scenario 1, the four AAVs move from different starting positions to the same end target while avoiding collisions with each other. The trajectory analysis indicates that the AAVs that began at a low position (AAV-1 at 1 m and AAV-3 at 1.5 m) increased their altitude as the path progressed, while the remaining ones (AAV-2 at 2 m and AAV-4 at 1.8 m) held a steady altitude throughout the path. The paths show smooth curvature, the path length varies from 110 to 130 km, and the completion time ranges from 8.5 to 9.2 h. In the more challenging Scenario 2, shown in Fig. 5(b), the system demonstrated its scalability by handling eight AAVs simultaneously. Even as the problem became more complex, the algorithm kept the average distance between AAVs at 5 km, and the path deviation did not exceed 12% from the optimal direct path. In addition, the minimum vertical separation measured between crossing trajectories was 500 m, which allows safe tracking. The energy efficiency analysis showed that the curved paths consumed only 15–20% more energy while greatly reducing collision rates. Finally, the temporal synchronisation results showed good coordination of simultaneous arrival, since the arrival time differences between the AAVs involved remained within ±10 min. These outcomes confirm that the NAS algorithm accomplishes both spatial and temporal coordination while keeping overall safety and efficiency within realistic operational limits.
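Separation and arrival-time figures of this kind can be derived from time-aligned trajectories. The sketch below is a minimal illustration under stated assumptions: each trajectory is a list of (x, y, z) samples taken at the same time steps, and the function names are hypothetical rather than part of the NAS implementation.

```python
import math
from itertools import combinations

def min_separation(trajectories):
    """Smallest distance between any two AAVs at the same time step.

    `trajectories` is a list of equally sampled paths, each a list of
    (x, y, z) tuples; pairs are compared sample by sample.
    """
    best = math.inf
    for a, b in combinations(trajectories, 2):
        for p, q in zip(a, b):
            best = min(best, math.dist(p, q))
    return best

def arrival_spread(arrival_times):
    """Difference between the latest and earliest arrival time."""
    return max(arrival_times) - min(arrival_times)

# Hypothetical data: two short trajectories and four arrival times in hours.
aav_a = [(0, 0, 0), (1, 0, 0)]
aav_b = [(0, 3, 4), (1, 0, 0.5)]
print(min_separation([aav_a, aav_b]))
print(arrival_spread([8.5, 8.6, 8.55, 8.65]))
```

Comparing only samples with the same index assumes synchronised logging; trajectories recorded at different rates would need resampling first.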

The scores in Fig. 6 were calculated from the evaluation parameters and performance indicators according to a structured scoring scheme. A specific scoring method was used for each indicator to compare the navigation strategies. The Obstacle Avoidance score is the weighted sum of success rate (60%) and precision (40%); the success rate is the number of obstacles successfully avoided divided by the total number of obstacles encountered, and precision is the relative deviation of the maintained safe distance from the required safe distance. The Safety Margin score integrates three components: a distance factor (50%), a consistency factor (30%), and a response factor (20%). Together these give an overall comparison of how well each strategy sustains safe operational conditions. The Path Efficiency score combines a length factor and a time factor at 40% each with path smoothness at 20%, each compared against optimal values.
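The weighted sums described in this paragraph can be sketched as follows. The weights are those stated in the text; the dictionary layout, the function name, and the example component values are assumptions for illustration, with every component taken to be on the same scale already.

```python
# Component weights as stated in the text; each set sums to 1.
WEIGHTS = {
    "obstacle_avoidance": {"success_rate": 0.6, "precision": 0.4},
    "safety_margin": {"distance": 0.5, "consistency": 0.3, "response": 0.2},
    "path_efficiency": {"length": 0.4, "time": 0.4, "smoothness": 0.2},
}

def composite_score(metric, components):
    """Weighted sum of the component scores for one metric."""
    weights = WEIGHTS[metric]
    if set(weights) != set(components):
        raise ValueError(f"expected components {sorted(weights)}")
    return sum(weights[name] * components[name] for name in weights)

# Hypothetical component scores on a 0-10 scale.
print(composite_score("obstacle_avoidance",
                      {"success_rate": 10.0, "precision": 5.0}))
```

Keeping the weights in one table makes it easy to check that each set sums to 1 and to reuse the same function for every metric.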

Real-time processing capability is evaluated through speed and reliability factors, each contributing 50% of the total score. The Energy Efficiency score combines a consumption factor (60%) and a distance efficiency factor (40%) to indicate how efficiently each strategy uses resources. The Reaction Time score is calculated by relating the actual reaction time to the maximum tolerable time, and the result is then converted to the standardised scale.

Simulation runs also included tests with different numbers of obstacles (10–50), different environmental conditions, and different numbers of AAVs (2, 4, 6, and 8), for a total of more than 1000 test runs. Standardised sensors and monitoring systems simplified data collection. All raw measurements were normalised to a 0–10 scale using a formula in which the minimum and maximum values were set from theoretical and practical limits. The validation process used the mean and standard deviation at different phases, cross-validation, and an actual performance test. The advantages of this global measurement approach are its comprehensiveness, objective comparison of the strategies, reproducible results, fair comparison across operating conditions, and coverage of all critical performance parameters. The resulting scores can be used to compare the efficiency of the navigation strategies accurately while keeping the evaluation transparent.
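The normalisation formula itself is not reproduced in the text. One common form that matches the description (a 0–10 scale bounded by a minimum and a maximum) is min-max scaling. The sketch below assumes that form; the clamping and the `higher_is_better` switch are additions for illustration, not details confirmed by the paper.

```python
def normalise(value, lo, hi, higher_is_better=True):
    """Map a raw measurement onto a 0-10 scale by min-max scaling.

    `lo` and `hi` are the assumed minimum and maximum of the raw metric.
    Values outside the range are clamped. For metrics where smaller raw
    values are better (e.g. path length), the scale is inverted.
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    frac = (value - lo) / (hi - lo)
    frac = min(1.0, max(0.0, frac))
    if not higher_is_better:
        frac = 1.0 - frac
    return 10.0 * frac

# Hypothetical raw values and bounds.
print(normalise(5.0, 0.0, 10.0))
print(normalise(2.0, 0.0, 10.0, higher_is_better=False))
```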

Figure 6 compares the path length of the proposed strategy with that of three other strategies (Tau, APA-DOA, and REAT) across the test scenarios. The proposed strategy produces the shortest path of the four. For instance, its path length is around 7.4 units, whereas the path lengths of the other three strategies range from 7.5 to 8.5 units. The percentage deviation of the proposed NAS from APA-DOA, taken as the baseline, across six critical performance parameters is summarised in Fig. 7. The study reveals significant variations:

Path Length: NAS shows a deviation of -16.7%, which means that its paths are shorter than those of APA-DOA (127.28 vs. 152.83 units).

Obstacle Strategy: Obstacle handling improves by 27.0%, with an effectiveness rating of 95.5 vs. 75.2.

Safety Margin: A positive deviation of +15.2% indicates that NAS maintains better safety parameters (92.3 vs. 80.1 safety rating).

Energy Efficiency: NAS achieves +7.5% better energy utilisation (88.5 vs. 82.3 efficiency rating).

Time to Target: For target attainment, a slight negative difference of 3.5% is observed (8.2 vs. 8.5 time units).

Single Target: A small positive deviation of 2.2% (9.5 vs. 9.3 accuracy rating) is maintained even in basic single-target performance.
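The percentages listed above are consistent with a relative deviation of the NAS value from the APA-DOA value, that is, (NAS - APA-DOA) / APA-DOA × 100. The short check below recomputes them from the quoted pairs; the function name and the dictionary layout are illustrative choices.

```python
def percent_deviation(value, baseline):
    """Relative deviation of `value` from `baseline`, in per cent."""
    return (value - baseline) / baseline * 100.0

# (NAS value, APA-DOA value) pairs as quoted in the list above.
PAIRS = {
    "Path Length": (127.28, 152.83),
    "Obstacle Strategy": (95.5, 75.2),
    "Safety Margin": (92.3, 80.1),
    "Energy Efficiency": (88.5, 82.3),
    "Time to Target": (8.2, 8.5),
    "Single Target": (9.5, 9.3),
}

for name, (nas, apa_doa) in PAIRS.items():
    print(f"{name}: {percent_deviation(nas, apa_doa):+.1f}%")
```

For Path Length and Time to Target a negative deviation means a smaller raw value for NAS; for the rating-style metrics a positive deviation means a higher rating.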

Overall, Figs. 6 and 7 show that NAS scores highest on the Safety Margin metric, which implies that it can keep a safe distance from obstacles and avoid collisions. NAS also performs well in Path Efficiency and Adaptability to Changing Environments, scoring 9.0 points each, which demonstrates its effectiveness in planning routes and monitoring changing environments. NAS likewise shows outstanding performance in Real-Time Processing (9.0), which indicates that it reaches decisions quickly. For Computational Overhead (0–5) and Scalability (0–6), DPA is better than NAS, obtaining 9.0 for both metrics; this suggests that DPA may use computing resources more efficiently or track a larger number of AAVs, even though navigational performance remains better for NAS. For Energy Efficiency, the NAS and DPA scores are the same (8.0), and REAT is next at 7.5. For Reaction Time, NAS (0.5) is better than DPA (2.9). For Time to Target, REAT scores best, with NAS and DPA tied for second. However, both NAS and DPA are far better than the slowest strategy, which takes almost ten times longer to reach the target. This means that NAS may take slightly longer to reach the target than strategies such as APA-DOA and REAT.

Conclusion

In this work, we describe a new Neighbour Awareness Strategy (NAS) based on data analytics, spatial analysis, and machine learning, which provides a path-planning algorithm for high-speed Autonomous Aerial Vehicles (AAVs). NAS assigns spatial coordinates to the AAV, which flies towards the destination while avoiding collisions. The approach is inspired by the flight of butterflies, in particular by how they avoid colliding with other butterflies, flowers, and leaves as they travel from place to place. NAS achieved high scores in all KPIs, especially in essential quantities such as Obstacle Avoidance, Safety Margin, Path Efficiency, and Adaptability to Changing Environments, which strongly suggests that NAS could improve AAV navigation in real settings. The performance of the proposed NAS approach is compared with two existing methods, DPA and REAT, through extensive experiments using the same preprocessing and training parameters. NAS outperforms the existing approaches in several KPIs across all categories. For Obstacle Avoidance, the average score of NAS is 9.5 out of 10, while the average scores of DPA and REAT are 7.5 and 8.0, respectively. In terms of Energy Efficiency, NAS (8.5) and DPA (8.0) exhibit similar performance, while NAS surpasses REAT (7.5). Regarding Time to Target, NAS (8.0) outperforms DPA (7.5) but is slightly behind REAT (8.5). However, NAS achieves this with superior overall safety and efficiency. This implies that the proposed NAS can detect obstacles in its path better than the other two approaches and hence ensure the safety of AAVs even in dense traffic conditions.

Data availability

Data will be made available on request by the corresponding author, Janjhyam Venkata Naga Ramesh (email: jvnramesh@kluniversity.in).

Funding

The authors received no funding for this study.

Author information

Contributions

J.V.N.R.: Conceptualization, Methodology, Writing - Original Draft Preparation, Supervision. C.D.: Data Curation, Formal Analysis, Writing - Review & Editing. S.M.: Software, Validation, Visualization. W.D.P.: Investigation, Resources, Writing - Review & Editing. M.K.R.: Project Administration. B.H.K.B.K.: Supervision, Writing - Review & Editing. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with animals performed by any of the authors.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Naga Ramesh, J.V., Dastagiraiah, C., Mubeen, S. et al. Butterfly magnetoreception based neighbour awareness strategy protocol for autonomous aerial vehicles. Sci Rep 15, 12814 (2025). https://doi.org/10.1038/s41598-025-97283-x
