Abstract

Point Cloud can be considered as non-Euclidean structure data since it is disordered and irregular. When training on point cloud, it is difficult to apply spatial discrete convolution directly. In this paper, we propose a novel three-dimensional spatial convolution operator called frame points attention convolution (FPAC). FPAC pre-defines a set of frame points in space and quantifies the correlation between the input local points and the frame points through an attention mechanism. FPAC then combines the quantified correlations with the weights of the frame points to generate spatially continuous filters. The convolution weights for different local areas in the filters are calculated dynamically, without relying on generative models or probabilistic assumptions. Furthermore, FPAC is reformulated to reduce the internal dimensions during training, which reduces memory consumption and significantly improves training speed. Several optimization measures are also implemented to further enhance the performance of FPAC. We built three common point cloud task networks using FPAC and conducted experiments to train these networks on widely used datasets. Experimental results show that the method proposed in this work is competitive with state-of-the-art methods for point cloud tasks.

Similar content being viewed by others

Introduction

In recent years, deep learning1 has dramatically advanced the state of the art in several research domains, including computer vision, speech recognition, and natural language processing. In the computer vision domain, convolutional neural networks (CNNs) have been widely applied in image classification, semantic segmentation, and other applications due to their powerful feature extraction capabilities. With the development of 3D scanning technology, it has become easier to obtain 3D information of objects, three-dimensional information analysis and understanding have become a research hotspot. Previous studies have achieved notable progress in various 3D vision tasks, including point cloud classification, segmentation, registration2, object detection, geometry reasoning3 and others4. Point Cloud is a type of non-Euclidean structure data with an irregular spatial structure. Therefore, CNNs cannot be directly applied.

In contrast, images as Euclidean structure data5 have dense and regular feature arrangements in Euclidean space. There are no gaps between adjacent pixels in an image. Therefore, when defining the convolution filter for an image, we only need to set a small number of discrete weights according to the regularly-shaped local area (e.g., a rectangle) in the image. Point Clouds do not have the same dense features as regular data in terms of spatial structure. The space between two adjacent points theoretically contains an unlimited number of points. Figure 1 illustrates this difference. Figure 1a shows a local area on an image, which has the same size as the filter. In the figure, a number corresponds to a feature, while different colors represent the weights of the filter. Figure 1b, c show what it is like when we apply a filter of the same size to a set of cloud points.

First, when using this filter, the points \(x_1\) and \(x_2\) are located in the same cell of the filter. Therefore, these two points cannot be distinguished in the Point Cloud. The points \(x_4\) and \(x_7\) reside on the edges between two cells. Therefore, the values of the filter weights cannot be determined for these two points. This example shows that we cannot define a regularly shaped filter with a fixed set of discrete weights to extract features from the points in a point cloud.

Second, the information of the pixels in an image is stored in a fixed order, typically using a matrix data structure. However, the points in a Point Cloud can be stored in any order. In Fig. 1b, c, the labeling order of the points represents their storing order. These two sets of points in Fig. 1b, c form the same Point Cloud. However, when we define the filter, we can only define the weights in the filter according to the existing order of the points. We cannot apply the filter to another point cloud that is essentially identical but with a different order of points.

Therefore, unlike training on Euclidean data such as images, we cannot directly apply a regularly shaped filter with a fixed set of discrete weights to perform convolutional operations on point cloud data.

The attention mechanism6,7 has been widely used in various types of deep learning in recent years, such as image processing and natural language processing. It defines a correlation index between pieces of information, enabling more correlated information to exert a higher influence on the final training result.

In this work, we propose a new scheme called Frame Point Attention Convolution (FPAC) for performing 3D point cloud convolution and extracting features from individual points. We also exploit FPAC to build three types of point cloud deep learning task networks. FPAC defines a continuous convolution filter in space and provides the convolutional weight for any point in the point cloud. Figure 2 illustrates how FPAC is applied to a point cloud. In the figure, the (a) shows a set of cloud points (grey points) forming a point cloud. FPAC defines a local area (shown in the dashed circle) and a set of virtual, user-defined points in the area, which we call frame points. There are five frame points in this example, as shown in the (b). Each frame point contains information about its coordinates and the associated convolution weights (different colors of the frame points represent different weight values). A method called Frame Points Attention (FPA) is designed as an attention mechanism to measure the correlation between cloud points and frame points, which are quantified as attention weights. The convolution weights of the frame points and the attention weights between the frame points and cloud points are combined to generate the convolution weight for each cloud point in the local area, as illustrated by (c) in Figure 2, where different colors of the cloud points represent different convolution weight values applied to these points. The same process can be applied to generate the convolution weight for any point in the point cloud (shown in (d) in Fig. 2). Note that a convolution weight can be generated for any physical location in space, not just for the points in the point cloud.

Although we differentiate the points into frame points and cloud points in FPAC, they both are points in the physical space. We define the convolution weights for frame points and then generate the convolution weights for cloud points through the attention mechanism. Since FPAC can generate convolution weights for any physical points in space, the convolution weights defined for the frame points should be consistent with those generated for cloud points. Namely, the convolution weights defined for a frame point should be the same as the weights generated for a cloud point when the cloud point and the frame point are in the same physical position. In this work, we propose a method to make the weights defined for frame points and the weights generated for cloud points converge.

When there are a large number of output channels, the native implementation of FPAC consumes a large amount of memory, making it difficult to run the network constructed with FPAC on a GPU. In this work, we optimize the implementation of FPAC and significantly reduce its memory consumption. We also investigate the impact of frame point selection strategies and neighborhood selection strategies on the effectiveness of feature extraction.

We conducted the following experiments to evaluate different aspects of FPAC. First, we evaluated the performance of the classification and segmentation networks built with FPAC. The experimental results show that the classification and segmentation networks proposed in this work can achieve excellent performance in the three tasks of the point cloud model and obtain accuracy comparable to the state-of-the-art on various benchmarks. Second, we investigated the impact of frame point selection. Third, we conducted ablation studies regarding the effectiveness of individual components in FPAC. Finally, we verified the generalization ability of FPAC by applying it to image data.

The main contributions of our work are summarized as follows:

1. We propose and systematically derive the Frame Point Attention Convolution (FPAC) process for performing convolution operations in a point cloud. 2. We propose a novel method to ensure the consistency of the convolution kernel in 3D space. 3. We conduct a thorough analysis of the memory consumption involved in performing convolution operations in a point cloud and propose a method to reduce this consumption. 4. We conduct extensive experiments to evaluate the effectiveness of the proposed FPAC.

The rest of this paper is organized as follows. Related work is discussed in the second section. The FPAC scheme is presented in detail in section “Methodology”. The implementation of the network architecture with FPAC is presented in the fourth section. The experiments are presented in the fifth section. Finally, this paper is concluded in section “Conclusion”.

Related work

The powerful feature abstraction ability of CNNs has been widely verified in 2D computer vision applications8,9,10. However, there are still many challenges in extending convolution to 3D computer vision. Images have a regular Euclidean data structure. Due to the disorder, sparsity, and non-uniformity of point clouds, defining a 3D convolution filter is not as simple and clear as in 2D.

Early work borrowed from the success of two-dimensional convolutions, attempting to convert point clouds into regular Euclidean data structures. Some works11,12 rendered the point cloud model into a two-dimensional image with multiple views and applied two-dimensional convolution. This method overcomes occlusion between parts of the three-dimensional object. Other works13,14,15 converted the point cloud model into a voxel model, a three-dimensional regular Euclidean data structure. 3DCNN can directly extract spatial shape features from the voxel model. Some methods16,17,18 avoid a large number of void calculations in the point cloud model through unique data structures. However, these methods result in a loss of detailed information during data structure conversion, and the computational and storage requirements are immense, which limits the input scale of the model. Additionally, due to the transformation of the data structure, it is difficult to apply these methods to fine-grained tasks such as semantic segmentation.

PointNet19 proposes the idea of directly training point cloud data. It performs spatial feature transformation between points using shared MLPs, which not only reduces the number of parameters but also ensures the consistency of feature transformation at each point. A symmetric aggregation function is used for global feature aggregation, addressing the problem of point cloud disorder. However, since PointNet only performs global feature aggregation, some local features are ignored. PointNet++20 extends PointNet into a hierarchical local aggregation network, retaining local features at different scales. Both PointNet and PointNet++ transform features using MLPs, ignoring the relationships between points, which reduces the robustness of the model.

Based on the exploration of PointNet++, several improvements have been proposed. One type of method constructs graph relations locally in the point cloud and uses graph convolution for local feature aggregation. ECC21 proposed a method to construct a graph from the point cloud and calculate the weight of the filter in the local area of the point cloud by using the labels on the graph edges. FeaStNet22 defines a fixed number of filters in the local area of the point cloud, then uses MLP to adaptively output the relationship between features and filters. This method solves the difficulty of matching irregular data to filters directly. PointGCN23 is an application of GCN24 to point clouds. It performs graph convolution on point clouds using Chebyshev polynomials. DGCNN25 realized that constructing the feature graph in the feature space is different. However, the feature graph construction in high-dimensional space poses a challenge in computation and memory, making it impossible to use DGCNN to build a very deep network. Graph Attention Networks (GAT)26 describe the correlation between graph vertices through the attention mechanism. GAC27 introduced GAT to point cloud tasks. HAPGN28 used gated networks to assign different importance to different representation spaces. ABEM29 introduces error feedback to EdgeConv25 to control the feature output. Graph convolution methods focus more on the differences in node (point) features, which may ignore some spatial relation features.

The adaptive 3D filter generation method is another type of improvement to PointNet++. PointCNN30 alleviates the disorder of point clouds through a special feature sorting method. A-CNN31 determines the input order of the convolution through the neighborhood point projection on the tangent plane of the central point. However, they cannot achieve complete permutation invariance. PointConv32 posits that the weight of the three-dimensional spatial convolution at the neighborhood point is only related to the three-dimensional coordinates of the neighborhood point, and the local inverse density can be used to balance the density difference of the point cloud, thus improving the filter’s robustness to density variations. However, when transforming similar spatial coordinates, it is more likely to output similar weights. Therefore, it only fits well into the convolution filter in which the weight values of two weights have smaller changes when the coordinates of these two points are closer.

KPConv33 describes a linear relationship between the distance from feature coordinates to kernel points. This relationship, combined with the weights of the kernel points, determines the weights for the features in the filter. This method uses a truncated linear kernel function to estimate convolutional weights for local points, where weights are inversely proportional to the distance from the kernel’s center. KPConv overemphasizes the distance effect on convolutional weights and neglects local relative positional relationships, limiting its effectiveness.

Recent researches34,35,36,37 have utilized Transformers and generative pre-training, recent advancements in natural language processing, to achieve satisfactory results in point cloud feature extraction by stacking self-attentive codecs or incorporating additional data for model pre-training. However, these methods do not enhance basic local feature encoding and introduce significant computational and storage overheads to compute multi-head attention.

A major distinction between our approach and traditional point cloud spatial convolution methods (e.g., PointCNN30, PointConv32, RS-CNN38) is the method of weight computation for high-dimensional feature transformations. Conventional methods rely on low-dimensional heuristic relationships to fit the convolutional weights. In contrast, our approach employs a predefined weight framework and uses an attention mechanism to calculate the convolutional weights for each point. The removal of heuristic constraints gives the framework’s weights a higher degree of freedom. This increased flexibility enables the generation of more diverse convolutional filters. As a result, the convolution operation can extract local shape features more comprehensively. These improvements ultimately enhance the overall effectiveness of the model.

Methodology

Regular form and equivalent form of two-dimensional convolution on regular data. (a) is the conventional form of two-dimensional convolution. It defines multiple filters with the same shape as the local area to extract features from the image. (b) is the equivalent form, which defines the weights according to the input and output channels of each pixel. The filters in (a) are equivalent to the weights in (a). The two forms can be converted to each other.

Equivalent form of convolution

In this section, we first introduce the general convolution representation on regular data and then present its equivalent form, which facilitates performing the convolution operation on irregular data.

General convolution representation on regular data

Given the input data \(f\left( x\right)\) with \(C_{in}\) input channels, Eq. (1) defines the convolution of one of the output channels, where \(g\left( x\right)\) is the convolution kernel for that channel, \(\mathbb {R}\) is the set of all real numbers.

For regular data, Eq. (1) can also be expressed as a discrete function. For example, Eq. (1) can be expressed as a two-dimensional discrete function for image data. In convolutional neural networks for images, when a filter performs a convolution operation on a local area, the position of each pixel is fixed. Therefore, the weights in the filter that correspond to each position in the local area can be defined. The convolution operation can be converted to calculating the weighted sum between the local area features and the filter. The convolution of the i-th output channel can be expressed as Eq. (2), where \(f_{local}\in \mathbb {R}^{\left\| local \right\| \times C_{in}}\) is the local feature set, and \(W_i\in \mathbb {R}^{\left\| local \right\| \times C_{in}}\) is the filters of convolution.

The feature \(f_{out}\left( x\right)\) for \(C_{out}\) output channels can be expressed as Eq. (3):

This process is illustrated in Fig. 3a.

Equivalent representation of convolution on regular data

For the convolution of regular data, we can segment the weights in the filter based on the local area and express the convolution operation in an equivalent form.

\(W_n\in \mathbb {R}^{C_{in}\times C_{out}}\) denotes the filter weight corresponding to the n-th unit of the local area with \(C_{in}\) input channels and \(C_{out}\) output channels. Therefore, Eq. (4) represents the equivalent form of the convolution, where \(f_n\) is the feature of the n-th unit and \(\otimes\) represents the tensor product.

Figure 3b illustrates the operations performed by Eq. (4). The equivalent form in Eq. (4) differs from the general convolution form in Eq. (2). In Eq. (2), the unit of the convolution function is a local area, while in Eq. (4), the unit is a single input object consisting of the corresponding cells of the local area in each input channel. As a result, the convolution weight is bound to a single input object, not to a set of points in a local area. This approach facilitates the performance of convolution operations on irregular data.

Representation of point cloud convolution

Unlike regular data, the sparseness, irregularity, and disorder of point clouds make it challenging to define filter weights that correspond to the positions of local cloud points precisely. In this section, we propose the FPAC process, which aims to generate a spatially continuous filter for point cloud convolution.

Define a point cloud \(P=\{x_i|i=1,2,\cdots ,m\}\), where m is the number of points in P. Each point \(x_i\in \mathbb {R}^3\) represents the 3D coordinates of point i. Let \(f_i\) denote the input feature of point i in the current convolution layer. The point set \(local_i\) consists of s points surrounding point i in a local area.

According to Eq. (4), the key to defining filters for irregular data lies in the definition of \(W_n\), which is closely related to the position of the input \(f_n\). As shown in Fig. 4, \(\widetilde{g}\left( x_n\right)\) is defined as the mapping from the coordinates of the input feature \(f_n\) to the filter weight \(W_n\), where \(x_n\) are the coordinates of point n. To maintain the translation invariance of the feature, the coordinates of the input points can be replaced with the local coordinates of the feature:

Equation (4) can be modified to Eq. (6).

The process following Eq. (6) is illustrated in Fig. 4. The key now is to define \(g\left( x\right)\) in Eq. (6). The method proposed in32 uses MLP to fit \(g\left( x\right)\) directly without constraints. However, as discussed in related work, this fitting method has drawbacks. In this paper, we propose the frame point attentions (FPA) method to define \(\widetilde{g}\left( x_n\right)\). FPA specifies a set of virtual points (called frame points) \(x_{frame}=\{x_k | k=1, 2, \cdots , v \}\) from the local area space and defines a set of weights \(W_k\in \mathbb {R}^{C_{in} \times C_{out}}\) corresponding to the k-th frame point. The virtual frame points, together with their weights, constitute the frame of the filter.

However, the number and coordinates of the frame points may not match the actual cloud points in the local area. Therefore, the weights assigned to the frame points cannot be directly used in the convolution of the local area. To address this, we utilize the equivalent form of the convolution to construct a shared attention mechanism between the real points and the virtual frame points, through which the weights corresponding to the real points are obtained.

Frame points attention

The attention scores between local points and frame points are evaluated based on their three-dimensional feature relationships. Since 3D spatial features directly capture the positional relationships between points, this approach reduces the potential distortion and contamination of attention scores caused by the abstraction of high-dimensional feature spaces.

With the advancement of Transformer technology, Scaled Dot-Product Attention has been widely adopted across various tasks, establishing itself as the dominant paradigm for modern attention computation. However, Scaled Dot-Product Attention is primarily designed for high-dimensional feature embeddings (typically exceeding 128 dimensions). When applied to low-dimensional input features, the available information is often insufficient, making it challenging to effectively assess correlation scores between tokens.

Given that the input dimension of Frame Points Attention (FPA) is relatively low, typically limited to three-dimensional spatial coordinates, FPA employs an MLP-based affine transformation to compute attention scores, following the Additive Attention mechanism.

The attention correlation weight between the local points \(x_n\) and the virtual frame points \(x_k\) (denoted as \(a_{nk}\) can be computed as follows. Let \(\alpha \left( x_{query}, x_{key}\right) \in \mathbb {R}\) represent the correlation function between points \(x_{query}\) and \(x_{key}\).

Considering a local area of the point cloud, the attention score between the local point \(x_n\) and the k-th frame point \(x_k\) is given by \(\widetilde{a_{nk}} = \alpha \left( x_n, x_k\right)\). To ensure transitional invariance of attention across different locals, we compute the attention scores using the local coordinates of point \((x_n)\), as defined in Eq. (7). Specifically, the function \(m_1: \mathbb {R}^6 \rightarrow \mathbb {R}\) maps two 3D coordinates (represented by six real numbers) to a single real-valued attention score. The symbol \(\parallel\) denotes the concatenation operation.

Next, we apply the softmax normalization to \(\widetilde{a_{nk}}\) across all frame points (i.e., over the index k) to obtain the attention relation weight \(a_{nk}\):

The FPA calculation process, as described in Eq. (8), is illustrated in Fig. 5. This process involves performing convolution between five points in a point cloud and five defined frame points. Steps (I), (II), and (III) represent the broadcast concatenation operation, the MLP processing in Eq. (7), and the softmax function in Eq. (8), respectively.

However, we argue that a linear combination is insufficient to capture the complex relationship between frame point weights and attention correlation as defined in our work. Therefore, we adopt a nonlinear combination of attention weights and frame weights to construct the filter weight of FPA as follows.

\(m_2:\mathbb {R}^{C_{in}\times C_{out}}\rightarrow \mathbb {R}^{C_{in}\times C_{out}}\) denotes the nonlinear transformation of the weight corresponding to the frame point, which can be implemented by MLP. The linear combination of the attention relation weight \(a_{nk}\) and the \(m_2\) transformation of weight constitutes \(g\left( x\right)\) in FPA. Therefore, \(W_n\) (i.e. the filter weight corresponding to the n-th point) can be formulated as Eq. (9).

The mappings \(m_1\) and \(m_2\) are shared for processing different local areas in the point cloud. The coordinates \(x_{frame}\) and the weights \(W_{k}\) of the virtual frame point are predetermined, which means that the filter \(g\left( x_n-x_i\right)\) is shared across different local areas. In the local area of the point cloud, the filter weight \(W_n\) is independent of the input order of the points, as it is calculated based on the coordinates of the points. It is determined solely by the local coordinates \(x_n-x_i\) to maintain permutation invariance.

Substituting \(g\left( x\right)\) in Eq. (9) into Eq. (6), we can obtain the FPAC operator:

This process is shown in Fig. 6a.

Uniqueness of FPA weights

In this work, we preset the weights (\(W_k\)) of the frame points (\(x_k\)), and then apply Eq. (9) and these frame point weights to calculate the weight for any point in the Euclidian space. Since the frame points are also the points in the space, the predefined weights \(W_k\) for the frame point \(x_k\) should be consistent with the weight \(g\left( x_k\right)\) calculated by FPA. Namely, there should be only one unique set of weights \(W_n\) corresponding to any point \(x_n\) in the local area. In order to achieve this, we define the following loss function:

The loss function defined in Eq. (11) constrains \(g\left( x\right)\) to meet the condition of the consistency between \(W_k\) and \(g\left( x_k\right)\). During the training of the model, \(loss_w\) ensures that the weights calculated by FPA at the positions of the frame points (i.e., \(g\left( x_k\right)\)) converge to the preset weights for those frame points (i.e., \(W_k\)), which we refer to as the frame constraint of \(g\left( x\right)\).

Selection of frame points

The selection of frame points significantly impacts the ability to satisfy the $g(x)$ frame constraint and affects the diversity of computed filters. FPAC generates filter weights for specific spatial positions by leveraging the attention relationships between spatial locations and frame points within continuous local areas. To accommodate variations in point count and density across different local areas in point clouds, the selection of frame points should ensure a uniform distribution of influence within the local space.

Based on these considerations, we establish the following principles for frame point selection: Uniform influence across all points within the local area; invariance to spatial rotations; confinement of the local area to a spherical region with a radius of $r$.

Based on these criteria, the center point \(\left( 0,0,0\right)\) and the vertices of l regular polyhedrons are chosen as the frame points, where the radius of the circumscribed spheres of the regular polyhedrons is \(\left\{ cr\left| c\in \left\{ \frac{1}{l},\frac{2}{l},\cdots ,1\right\} \right. \right\}\) and l is the number of layers of the frame. Figure 7 shows two examples of frame points: \(l=2\) in Figure 7a and \(l=3\) in Fig. 7b. In both cases, the frame points are the vertices of regular icosahedra.

These frame points are evenly distributed on the surface and at the center of n spheres, each centered at \(x_i\) with a radius of cr. These points uniformly influence the attention of all points in the local area. When the frame points are rotated about the spherical center, they remain evenly distributed on the surface of the sphere. In each layer of the network and each training iteration, the frame points randomly rotate around the spherical center to maintain rotation invariance.

It is noteworthy that the selection of frame points primarily focuses on facilitating the learning of diverse filters for continuous local areas, independent of the actual points present in the local areas. Specifically, even in the absence of points within a local area, the Frame Points Attention mechanism can still learn and generate filters for the continuous space of the region. This independence ensures that the learning process is not influenced by variations in the number, density, or shape of points within local areas in the point cloud.

Optimzing memory consumption in FPAC

Mainstream deep learning frameworks accelerate neural network training through vectorization and parallel computing on GPUs39,40. This requires all variables and parameters of the operators discussed in previous sections to be stored in GPU memory. In a single layer of FPAC, the weight values \(g\left( x\right)\) vary across different local areas of the point cloud. When FPAC is run on a GPU, the parameters and variables needed for each local area must be stored in GPU memory.

Assume each mini-batch contains 16 samples, each with 1024 points, the maximum number of points in a local area is 32, there are 15 frame points, the input channel is 256, and the output channel is 1024. According to Eq. (13), the tensor size, calculated as \(B\times m\times s\times v\times C_{in}\times C_{out}\), will be enormous: \(16\times 1024\times 32\times 15\times 256\times 1024=2,061,584,302,080\), at the most demanding point in the network. If single-precision float is used for storage, this tensor will consume about 7.5 TB of memory, making GPU acceleration infeasible.

We find that in the FPA tensor, the large size of \(C_{in}\times C_{out}\) is the primary factor contributing to high memory consumption. This term originates from the size of the convolution weight \(W_k\) defined by the frame point. Thus, we modify the \(m_2\) transformation from \(m_2: \mathbb {R}^{C_{in}\times C_{out}}\rightarrow \mathbb {R}^{C_{in}\times C_{out}}\) to \(\widetilde{m_2}: \mathbb {R}^{C_{in}\times C_{out}}\rightarrow \mathbb {R}^{mid}\). This modification reduces the dimension of \(W_k\) to mid during the non-linear transformation.

We define \(\overline{g}\left( x \right)\) as the FPA weight function after the dimensionality reduction:

According to Eq. (15), the size of the FPA tensor in the local area is reduced from \(s\times v\times C_{in}\times C_{out}\) to \(s\times v\times mid\). This reduction means that the memory consumption is now only a fraction (i.e., \(\frac{mid}{C_{in}\times C_{out}}\); for example, \(mid=32\), \(C_{in}=256\), \(C_{out}=1024\)) of the original FPA’s memory usage.

Since \(\overline{W_n}\in \mathbb {R}^{mid}\), matrix multiplication with \(f_n\) as per the equivalent form of convolution is not possible. Thus, the convolution needs to be modified. Let \(f_{local_i}\in \mathbb {R}^{s\times C_{in}}\) represent the feature set of s points in the local area, and \(\overline{W_{local_i}}\in \mathbb {R}^{s\times mid}\) represent the vector of s convolution weights. We define the function \(\overline{Conv}\left( local_i\right) \in \mathbb {R}^{C_{in}\times mid}\) as follows:

\(\overline{Conv}\left( local_i\right)\) can be seen as performing a convolution between the local features and the mid-dimensional weights across different channels, resulting in an output feature of dimension \(C_{in}\times mid\).

The output dimension of PFAC is \(C_{out}\), differing from the feature dimension of \(\overline{Conv}\left( local_i\right)\). To match the output dimensions of FPAC, we define the mapping function \(m_r: \mathbb {R}^{C_{in}\times mid}\rightarrow \mathbb {R}^{C_{out}}\).

We can prove that Eqs. (14) and (10) are equivalent through Theorem 1.

Theorem 1

The calculations in Eqs. (14) and (10) are equivalent.

Proof

Let \(\widetilde{m_2}\left( W_{k}\right)\) be the input of the last layer of \(m_2\), and \(E_{m_2}\in \mathbb {R}^{mid\times \left( C_{in}\times C_{out}\right) }\) be the weight of the last layer of \(m_2\). Then Eq. (10) can be modified as:

We modify the tensor product to the cumulative form:

Let \(m_r: \mathbb {R}^{C_{in}\times m i d}\rightarrow \mathbb {R}^{C_{out}}\), Eq. (16) can be reformulated as:

where \(m_1:\mathbb {R}^6\rightarrow R\), \(\widetilde{m_2}:\mathbb {R}^{C_{in}\times C_{out}}\rightarrow \mathbb {R}^{mid}\) and \(m_r:\mathbb {R}^{C_{in}\times mid}\rightarrow \mathbb {R}^{C_{out}}\) are \(1\times 1\) convolution, \(x_{local_i} \in \mathbb {R}^{\left\| local_i \right\| \times 3 }\) is the collection of neighbor points. \(\square\)

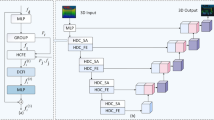

Equation (14) represents the more efficient memory implementation of FPAC. The computation process is shown in of Figure 6b. The MLP is implemented as \(1\times 1\) convolution, which takes the full advantage of the deep learning framework and the parallelization of GPU to improve the network efficiency.

Moreover, since the memory efficient implementation no longer outputs the convolution weight of dimension \(C_{in}\times C_{out}\) to participate in the calculation of \(loss_w\) directly, \(W_{k}\) in the loss function \(loss_w\) can be replaced by the \(\widetilde{m_2}\left( W_{k}\right)\) in the efficient implementation. This way, we can obtain \(loss_w\) during the parallel computation of the forward propagation in the network.

where \(W_{\bar{k}}\) is the weights of \(\bar{k}\)-th frame point, \(a_{\bar{k} k}\) is the attention weight of \(\bar{k}\)-th and k-th frame points

Implementation of network architecture

Residual block

To train a deeper network, we designed a Residual Block for FPAC, inspired by ResNet. The internal architecture is shown in Fig. 8. FPAC serves as the main path in the residual block. In the shortcut connection, a \(1\times 1\) convolution is an optional module. If the feature dimension changes in the main path, the feature dimension in the shortcut path is adjusted via the convolution shortcut to match the output dimension of the main path. Since FPAC aggregates local features at the center point, the local feature set in the shortcut path also needs to be aggregated. We use the maxpooling function, widely recognized as effective in literature, for feature aggregation in the shortcut path. The outputs of the main and shortcut paths are then combined to form the output of the Residual Block.

Network architecture

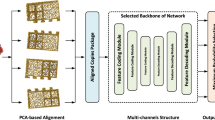

We utilized FPAC to develop hierarchical deep learning models for point cloud shape classification and semantic segmentation tasks. Figure 9 illustrates the architecture, where the height of each rectangle denotes the number of points (referred to as the resolution), and the width represents the feature dimension.

The network architecture for classification and segmentation. The number below the feature tensor represents the dimension of the feature. The length of the tensor indicates the resolution of the feature. The first half of the architecture is feature abstraction. The classification network inputs the abstract features into an FC network to obtain the classification probability. The segmentation network inputs the abstract features into the feature propagation part, and obtains the part label of each point.

As illustrated in Fig. 9, the feature abstraction process (ranging from dimensions 3 to 1024) for both classification and segmentation tasks is identical within the network. In each layer, points are uniformly sampled from the point cloud using Farthest Point Sampling (FPS), with the sampled points serving as the centers of local areas. The local area of a center point is then identified using the ball query (or kNN). Within each local area, the FPAC Residual Block (represented by dark blue rectangles) abstracts and aggregates the local features. The point cloud’s resolution decreases layer by layer, while the feature dimension increases. At the end of this abstraction, the entire point cloud’s features are condensed into a high-dimensional representation (1024 in the figure) with a resolution of 1. For the shape classification task, this abstracted feature is fed into a fully connected classification network (shown at the bottom of the figure) to output category probabilities.

For the semantic segmentation model, the abstracted feature undergoes feature propagation within the network (occurring after the green rectangle labeled 1024 in Fig. 9). The concept of feature propagation is akin to that in U-Net41. However, unlike U-Net, which employs deconvolution to incrementally restore resolution, using the ball query for neighborhood selection can result in overlapping local areas, making deconvolution unsuitable. Instead, we utilize linear interpolation to restore spatial resolution. Specifically, to determine the feature value of a point (e.g., point A), we identify a few (e.g., 3) of the closest neighboring points to A that have already been assigned feature values. We then assign the feature value of point A as the weighted sum of the feature values of these neighboring points, with the weights being the inverse of the distances between the points.

Similar to U-Net, the interpolated features are concatenated with the features of the same resolution obtained during the feature abstraction stage (as indicated by the dotted arrows in Fig. 9). This concatenated feature represents the combination of abstracted and interpolated features. The concatenated feature then passes through an MLP (depicted as the orange block in Fig. 9) for feature transformation. These transformed features are further processed through interpolation in the subsequent layer of feature propagation. In the final layer of the semantic segmentation model, the spatial resolution of the features is restored to match that of the original point cloud model. The last three layers of MLPs transform the feature dimensions to align with the number of part categories in the segmentation task, thereby enabling each point in the point cloud model to be labeled with a part category.

Experiments

In this section, we present experiments to evaluate the effectiveness of FPAC across various aspects, including model accuracy, frame point selection, neighborhood selection, and robustness. We also demonstrate FPAC’s applicability to regular data, validating it as a convolutional operation. Additionally, we perform ablation studies to assess the contribution of different components within the FPAC network. Our implementation of FPAC, based on PyTorch, is available as open-source at https://github.com/lly007/FPAC.

This study evaluates FPAC-based models on three representative point cloud tasks. Classification tasks are used to validate the feature encoding capability of FPAC. Part segmentation tasks measure the model’s performance in fine-grained feature extraction. Scene semantic segmentation tasks, which involve large-scale datasets with significant sensor noise, test the model’s robustness in handling real-world scanned data.

To conduct these evaluations, we selected three widely used datasets: ModelNet40 for classification, ShapeNet Core Part for part segmentation, and S3DIS (Stanford 3D Large-scale Indoor Spaces) for scene semantic segmentation. These datasets are standard benchmarks in point cloud research, which are widely used in related studies19,20,31,32,34,38 on point cloud feature encoding. Using these datasets allows for a fair comparison with previous studies.

Classification

Classification is a common and crucial task for evaluating point cloud feature abstraction performance. To compare with state-of-the-art methods, we used the ModelNet40 benchmark, which is widely used for this purpose. The ModelNet project14 offers a comprehensive set of 3D CAD models, with ModelNet40 being a 40-class subset. ModelNet40 includes 12,311 CAD models across 40 categories, with 9,843 for training and 2,468 for testing. The data format used is a point cloud model with 1024 points sampled from each CAD model.

To enhance the network’s robustness against model rotation and jitter, and to reduce overfitting on the training set, we employed data augmentation as described in19. This includes rotating the point cloud along the Z-axis and adding Gaussian noise for small random jitter. The number of input points is set to 1024, with point coordinates as the only input. The results are presented in Table 1. As shown, our method achieves the highest accuracy compared to existing methods with a smaller number of parameters. For instance, FPAC’s accuracy is 1.0% higher than KPConv, while FPAC has significantly fewer parameters (1.94 M vs. 6.15 M). Although PointCNN has the fewest parameters (with FPAC being second), its accuracy is 1.7% lower than FPAC’s. Additionally, compared to 3DmFV, FPAC uses only 42.2% of the parameters and improves accuracy by 2.3%. FPAC is also efficient in inference runtime, being only slightly slower than PointNet and PointNet++. The simpler feature abstraction in PointNet and PointNet++ contributes to their lower accuracy. Comparisons with PointTransformer34 and PointBERT35 show that FPAC performs highly competitively with the most recent Transformer-based and pre-training-based methods.

Part segmentation

The part segmentation task involves performing semantic segmentation on manually annotated data, requiring the model to have a strong capability for fine-grained analysis. The ShapeNet dataset45, commonly used in the literature19,20, is employed for experiments in this section. ShapeNet contains 16,681 3D shapes across 16 categories, with each shape composed of 50 part types. Each point in the dataset is labeled with a part type.

The experimental model follows the architecture of the semantic segmentation network described in section “Network architecture”. The segmentation network takes the coordinates of 2048 points as input. In the final layer of Feature Propagation, a one-hot vector indicating the shape category is appended to the feature of each point. Following the approach of PointNet++, we constructed single-scale grouping (SSG) and multi-scale grouping (MSG) segmentation networks based on FPAC. Two metrics were used to evaluate performance: category average mean Intersection over Union (category mIoU) and instances mIoU. The results are shown in Table 2.

The experimental results in Table 2 indicate that the FPAC-SSG model achieves higher category mIoU compared to other models on ShapeNet benchmark. However, its instance mIoU is slightly lower than that of PointConv, with a difference of approximately 0.3%. The use of MSG further improves the feature encoding performance. Specifically, the MSG model increases category mIoU and instance mIoU by 0.5% and 0.7%, respectively, compared to the SSG model. Additionally, the MSG model outperforms all listed benchmarks in both metrics. These findings confirm the strong capability of FPAC in fine-grained point cloud segmentation tasks.

Scene segmentation

Compared to shape classification and part segmentation, scene segmentation is more complex due to the use of real scanned point cloud data. Real scene data is typically larger in scale and often contains noise and errors. Additionally, scene semantic segmentation poses significant challenges to a model’s ability to process large-scale point clouds effectively. The S3DIS dataset, as a large-scale point cloud benchmark, further amplifies these challenges, requiring models to handle extensive spatial structures, high point density, and complex semantic variations. In this section, we evaluate the performance of the FPAC-based semantic segmentation model using the Stanford 3D Large-scale Indoor Spaces (S3DIS) dataset.

The S3DIS50 dataset consists of 271 rooms spread across six areas, with area 5 being entirely separate from the other five. We used the preprocessing method from20 for the input data. To compare with existing work, we trained the model on all areas except area 5 and tested it on area 5. Note that, unlike methods that use voxel grid paths to assist local feature abstraction51 or local consistency constraints27 (which can improve mIoU), our method only uses the spatial features of points for semantic segmentation. Performance is evaluated using two metrics: overall accuracy and instance mIoU. The experimental results are presented in Table 3.

The results demonstrate that the FPAC-based semantic segmentation network outperforms the latest methods in the literature for scene segmentation. These findings indicate that FPAC is effective for fine-grained tasks with large-scale point cloud data and robust to noise in real scene data.

The selection of frame points

To study the impact of frame point selection on effectiveness, we conducted comparative experiments with various frame point selection strategies. The influence of frame point selection primarily arises from two factors: the number of points v and the number of frame layers l. Theoretically, the more frame points selected, the more weight values are available for attention reference, enabling the generation of more diverse filters. This diversity enhances the convolution’s feature extraction capabilities, making it more comprehensive. Multi-layered frames help evenly distribute the spatial influence of the frames, benefiting the calculation of attention for points far from the frame points. However, increasing the number of points and frame layers also increases computation and memory usage, making the model harder to converge.

To evaluate the impact of frame point selection on model accuracy, we selected eight groups of frame points for our experiments. The groups are as follows: (a) point \(\left( 0,0,0\right)\) (1 point in total); (b) 4 vertices and point \(\left( 0,0,0\right)\) of a regular tetrahedron with \(R=1\) (5 points in total); (c) \(4 \times 2\) vertices and point \(\left( 0,0,0\right)\) of two regular tetrahedra with \(R=r\) and \(R=0.5r\) (9 points in total); (d) \(4 \times 3\) vertices and point \(\left( 0,0,0\right)\) of three regular tetrahedrons of \(R=r\), \(R=\frac{2}{3}r\) and \(R=\frac{1}{3}r\) (13 points in total); (e) \(8 \times 2\) vertices and point \(\left( 0,0,0\right)\) of two regular hexahedrons with \(R=r\) and \(R=0.5r\) (17 points in total); (f) \(8 \times 3\) vertices and point \(\left( 0,0,0\right)\) of three regular hexahedrons with radius \(R=r\), \(R=\frac{2}{3}r\) and \(R=\frac{1}{3}r\) (25 points in total); (g) \(6 \times 2\) vertices and point \(\left( 0,0,0\right)\) of two regular octahedra with \(R=r\) and \(R=0.5r\) (13 points in total); (h) 12 vertices and point \(\left( 0,0,0\right)\) of the icosahedron with \(R=r\) (13 points in total); Here, R is the circumscribed sphere radius of a regular polyhedron, and r is the neighborhood radius.

To prevent memory overflow caused by large v values, we constructed a 2-layer classification network using FPAC and evaluated its performance on the ModelNet40 benchmark. The experimental results are summarized in Table 4.

In the table, v represents the number of frame points, l denotes the layer of the regular polyhedron where the frame points are located, and share in local indicates the percentage ratio of frame points to the total points in are local area.

Table 4 presents the experimental results, which show that increasing the number of frame points (v) improves model performance. This improvement occurs because a higher number of frame points increases the density of spatial discrete convolution kernels, thereby enhancing feature extraction. However, the results also reveal that once v exceeds a threshold (approximately 17 in this study), further increases in frame points do not lead to significant accuracy gains. Instead, they add unnecessary computational cost.

In Groups d, g, and h, where the total number of frame points remains constant but the number of frame layers (l) varies, the results suggest that increasing frame layers initially benefits accuracy. For example, Group h achieves higher accuracy than Group g when l increases from 1 to 2. This improvement arises because multi-layer frame points better distribute attention among neighborhood points near the center of the local area. However, when l increases from 2 to 3, as observed in Group h, accuracy declines slightly. This decline occurs because the outer layer points become too sparse, reducing their ability to calculate attention effectively. Furthermore, a comparison between Group d and Group f shows that increasing the total number of frame points (from 13 to 25) while keeping the number of layers constant results in improved accuracy. These results highlight the importance of optimizing the spatial distribution of frame points to improve the model’s performance.

The analysis confirms that increasing the number of frame points is an effective way to enhance FPA performance. However, once the number of frame points exceeds a threshold (approximately 25% of local points), the model reaches a bottleneck, and further increases in frame points do not significantly improve feature encoding. The results also demonstrate that distributing frame points across multiple layers of a regular polyhedron leads to better performance. This distribution allows frame points to exert more uniform influence over local regions, effectively capturing attention in both central and peripheral areas. Consequently, this approach improves the encoding quality and effectiveness of the FPA.

Furthermore, we investigated strategies for selecting frame points by sampling directly within local areas, including the farthest point sampling (FPS) for uniform sampling, random sampling (RS), and adaptive sampling (AS) methods56. Through these methods, we sampled 17 frame points within local areas and compared them with group e. The experimental results are documented in Table 5.

The experimental results indicate that neither FPS nor adaptive sampling methods demonstrated superior performance, despite the introduction of additional parameters or computational overhead. Although random sampling did not introduce further computational overhead, it exhibited a slight decrease in accuracy compared to our method, which may be attributed to the instability inherent in random sampling. Considering computational efficiency, we conclude that the vertices of a regular polyhedron represent the most suitable strategy for selecting framework points.

Interpretability of FPAC

This section visualizes the attention scores of frame points to improve the interpretability of FPAC. We selected a local area from a point cloud object and generated 9 frame points. Then, we chose several local points and visualized the attention scores (before softmax) between them and the frame points. This helps to identify which frame points contribute significantly to the convolutional weights of the selected local points. The results are shown in Fig. 10. In the figure, square markers represent frame points, blue dots denote the local area, and black pentagrams indicate the selected local points for visualization. The color of the frame points reflects their attention scores toward the pentagram points.

We observed that the number of frame points with significant influence varies. In (a), 4 frame points have a strong impact, while in (b), there are 3, in (c), 3, and in (d), 5. This suggests that methods33 using a fixed number of weight anchors and simple kernel functions to estimate convolutional weights may be insufficient. Frame points closer to the pentagram points generally have higher attention scores, such as frame point 2 in (a) and frame point 9 in (d). However, this is not always the case. For example, in (a), frame points 6 and 8, in (b), frame point 6, which are farther away, also show significant attention scores. This might be because the pentagram point in (a) is on the connecting rod of the chair backrest, while frame points 6 and 8 are near the lower end of the rod, influencing the upper points. In (c), frame point 2, which is the farthest from the pentagram, still exhibits a certain degree of influence. This can be attributed to its closer proximity to the rod. In (d), the pentagram point is located at the corner where the chair cushion and the rod intersect, requiring multiple frame points to jointly contribute to the weight computation. Our experiments demonstrate that FPAC effectively captures and leverages the spatial features and relationships of frame points when computing convolution weights. By dynamically adjusting the weight contributions of frame points, FPAC enhances the model’s effectiveness.

Visualization of FPA convolutional filter weights. The first row shows the filter weights generated by FPA, while the second row displays those obtained through linear interpolation (LI). The color of each point represents the spatial convolutional weight generated by FPA, with the color scale indicating the magnitude of the weight: warmer colors (closer to red) correspond to larger weight values, signifying a stronger ability to extract shape features from those points. Larger points represent the frame points, specifically the 8 vertices and the center point of the hexahedron. The similarity in color between a point and the frame points reflects the degree of attention the convolutional weights assign to those frame points.

Furthermore, we visualize the weights generated by Frame Points Attention (FPA), focusing on two aspects: the features of filter weights and the attentions between filters and frame points. Figure 11 shows the filter weights generated by FPA in local areas of the point cloud, alongside convolution weights generated by linear interpolation for comparison.

As shown in Fig. 11, the weights generated by the FPA filter outperform those of the linear interpolation filter in emphasizing unique local features: (a) distinguishing between the surface and edges of a chair; (b) capturing the distinct curvature of a cup’s waistline compared to its general surface; (c) highlighting the defining edge lines of a lampshade; (d) accentuating the right-angled edges of a monitor; and (e) differentiating between the two layers of wings that share a similar shape but differ significantly in positional height. The weights generated by FPA are particularly effective at extracting diverse features, including surface features (e.g., (a) and (b)), edge line features (e.g., (c) and (d)), and features of similar shapes in different locations (e.g., (e)). In contrast, the weights produced by linear interpolation, while capable of capturing some differences in these features, exhibit smooth transitions across continuous positions, making them less effective at emphasizing non-smooth shape features. The FPA-generated filter weights, by comparison, exhibit greater diversity and adaptability.

Observing the attention between FPA weights and frame points, we notice a certain relationship between weight attention and Euclidean distance. Unlike linear interpolation, this relationship is non-linear, providing more diversity in FPA filters. In contrast, the linear method is merely a special case of FPA. In fact, if necessary, FPA can generate filters similar to the linear interpolation method, changing more smoothly and continuously in space. We illustrate this in Fig. 12.

Robustness

We also conducted the experiments to evaluate the robustness of FPAC in terms of (i) the sensitivity of the model to the number of input points, (ii) point perturbations, and (iii) object rotation.

Sensitivity of the model to the number of input points

In this experiment, we randomly sampled the original ModelNet40 dataset to include 1024, 768, 512, and 128 points, and downsampled the model using FPS. Since 512 and 128 points are too few for a deep network, we constructed the network with only one FPAC layer and aggregated the features globally only once. The experimental results are shown in Fig. 13a.

The model accuracy is similar when the number of input points is 1024 or 768. When 50% of the points are missing (512 points), the accuracy drops by only 4.1% with random sampling and 2% with FPS sampling. However, when the number of points drops further, the accuracy drops significantly.

Point perturbations

Ten sets of Gaussian noise, all with a mean of zero but standard deviations ranging from 0.01 to 0.1 in increments of 0.01, were independently added to the points in the point cloud.

The experimental results are shown in Fig. 13b. The figure shows that as the noise level increases, the accuracy gradually decreases, which is expected. The decrease is slow and smooth, indicating that our method is robust to noise. Even with a 5% increase in random perturbations, we still achieve model accuracy of over 80%.

Object rotation

In this section, we assess the model’s robustness to rotations in point cloud objects. Rotating a point cloud object can be considered as observing it from different viewpoints, which mimics viewpoint variations of a LiDAR sensor. Therefore, evaluating the model’s robustness to rotation is equivalent to assessing its ability to handle viewpoint changes. To conduct this analysis, we applied random rotations to the objects and recorded the classification performance of the model on the transformed point clouds, as presented in Table 6. For comparative analysis, we also included the experimental results of PointNet++. The experiment is conducted on the ModelNet40 benchmark.

The experimental findings indicate that FPAC demonstrates strong robustness to object rotations, with only a 0.3% reduction in accuracy under random rotations. We attribute this to the enhanced capability of the frame attention mechanism in capturing rigid transformations. Compared to PointNet++, FPAC exhibits a lower performance degradation under rotation, further validating its effectiveness in handling viewpoint variations.

FPAC on regular dataset

FPAC is designed to work with irregular datasets such as point clouds. To verify that FPAC is a true convolution operator, we applied it to CIFAR-10 [40] to test its validity on a regular dataset. CIFAR-10 is a dataset of 60,000 RGB color images with 10 classifications and \(32\times 32\) resolution. The local area of an image can be regarded as a set of 2D points. The coordinates of the pixels in the local area can be defined and normalized using the center point as the origin. We uniformly select some points as frame points in the local area and define their corresponding weights. The feature calculation of FPAC is similar to that for 3D point clouds. We only need to replace \(\widetilde{m_1}: \mathbb {R}^6\rightarrow \mathbb {R}\) with \(\widetilde{m_1}: \mathbb {R}^4\rightarrow \mathbb {R}\) and the input feature \(\left( x,y,z\right)\) of the first layer with \(\left( R,G,B\right)\). We used AlexNet as the network architecture. The accuracy of FPAC on the CIFAR-10 dataset is about 86%, which is very close to the performance achieved by CNNs on the dataset. This result verifies that FPAC is a true convolution operator.

Ablation studies

In this subsection, we conducted the ablation studies to verify the effectiveness of various parts of the FPAC model, including frame points, attention mechanism and the value of the middle channel. The results are shown in Table 7.

Frame points

In this experiment, we replaced the FPA component in the network with convolutional weights directly generated by an MLP from the coordinates of neighborhood points, while keeping all other experimental settings identical to those in previous subsections. We then compared the classification accuracies achieved by this modified network and our FPAC model. The results indicate that removing frame points reduces the model’s classification accuracy from 93.9% to 91.6%, demonstrating the effectiveness of incorporating frame points. The improvement can be attributed to the increased diversity of filters induced by the weights associated with frame points.

Attention mechanism

In this section, we evaluate the impact of the attention mechanism on model performance to gain deeper insights into and validate its effectiveness in enhancing the model’s capability. Specifically, we compare three experimental settings: additive attention, scaled dot-product attention, and a model without an attention mechanism.

In the model without an attention mechanism, the \(\widetilde{m_1}\) operation (in Eq. (7)), which calculates the attention weights in the model, is replaced by Eq. (19) below. This means that the influence of frame points on the real points is only related to the Euclidean distance between them: the longer the distance, the weaker the influence.

where \(d\left( x_j,x_k \right)\) is the distance between \(x_j\) and \(x_k\).

Experimental results demonstrate that employing an attention mechanism to compute the weight relationships between local points and frame points significantly enhances the model’s effectiveness. Furthermore, the findings validate that the additive attention adopted in this study achieves more precise attention score computation compared to scaled dot-product attention, thereby further improving the model’s accuracy.

Residual block

This section evaluates the impact of residual blocks. Residual connections enable the effective training of deeper networks. To assess this effect, we conducted comparative experiments by removing residual connections and constructing classification models with varying depths. The accuracy of these models was then compared against those with residual connections. The experimental results are presented in Table 8.

The results indicate that increasing the depth of the model improves its effectiveness until a depth bottleneck is reached. For models without residual connections, the depth bottleneck occurs at three layers, and models with more than three layers do not demonstrate significant performance advantages. This suggests that networks without residual connections exceeding three layers are difficult to train effectively. In contrast, models with residual connections reach a depth bottleneck at five layers, where deeper models yield significant performance improvements.

Additionally, by observing the accuracy of three-layer models, we found that models with residual connections exhibit a slight performance advantage even before reaching the depth bottleneck.

Frame constraint

In this section, we evaluate the frame constraint loss function. The primary purpose of the frame constraint loss is to ensure that the weights generated by the FPA at frame point locations align with the weights of the frame points themselves. This alignment requires the FPA to compute accurate weight values at frame point locations, thereby enhancing the consistency and reliability of the FPA-generated weights. By reducing conflicts between the frame point weights and the FPA, the frame constraint loss accelerates the convergence of model training.

To assess its impact, we conducted comparative experiments by including and excluding the frame constraint loss during model training. The training processes of both groups were analyzed, and the results are presented in Fig. 14.

The experimental results demonstrate that models with frame constraints converge in approximately 180 epochs, significantly faster than models without frame constraints, which require around 260 epochs. Furthermore, models with frame constraints achieve approximately 4% higher classification accuracy, indicating improved performance and effectiveness. These findings strongly validate the design motivation behind the frame constraint.

The value of the middle channel

The memory-efficient implementation of FPAC replaces the \(C_{in} \times C_{out}\) dimensional weights in feature convolution with weights of a lower mid dimension. A major concern with this approach is the potential loss of feature information when the mid dimension is too small, which could create a bottleneck in feature encoding. Conversely, increasing the mid dimension significantly may result in higher computational and memory overhead.

This section investigates the optimal range of the mid dimension to minimize information loss without excessive computational costs. A simple two-layer classification network was constructed for the experiments. The mid dimension was varied across six values: 8, 16, 32, 48, 64, and 128. Each model was trained and evaluated independently, and the experimental results are presented in Fig. 15.

The results show that small mid values, such as 8 and 16, cause significant information loss. This loss creates a feature encoding bottleneck and negatively impacts model performance. When the mid dimension reaches 32, the bottleneck effect is eliminated. Further increasing the mid dimension beyond 32 does not lead to noticeable improvements in model effectiveness.

These findings suggest that the mid dimension can be effectively set within the range of 32–64. Larger mid values are unnecessary and not recommended, as they increase computational and memory costs proportional to the size of model tensors.

Neighborhood selection methods

The choice of neighborhood selection method plays a critical role in determining model accuracy by influencing local feature extraction. To analyze this impact, we conducted experiments comparing the ball query and k-Nearest Neighbors (kNN) methods. For the kNN method, experiments were performed with different numbers of neighborhood points (\(N=8,16{,}32{,}64{,}128\)). For the ball query method, experiments were conducted using varying radii (\(r=0.1,0.2,0.4\)) in the first layer of the ModelNet40 dataset. Table 9 summarizes the experimental results.

The results show that small local areas (\(N=8,16,32\) or \(r=0.1\)) constrain feature encoding effectiveness. This constraint arises because small areas lack sufficient semantic information to represent features comprehensively. Increasing the size of the local area to a threshold (\(N=64\), \(r=0.2\)) significantly improves accuracy by capturing more meaningful feature semantics. In contrast, overly large local areas (\(N=128\), \(r=0.4\)) reduce feature encoding effectiveness. This reduction is caused by the inclusion of multiple overlapping semantic features, which decreases focus and introduces ambiguity in feature encoding.

The ball query method outperforms kNN in both feature encoding performance and memory efficiency. This performance advantage is attributed to the robustness of ball query in handling density variations across local areas. Based on these results, the ball query method is identified as a more effective approach for neighborhood selection.

Discussion

FPAC is a local feature abstraction operator designed for sparse 3D point clouds, typically accommodating fewer than 10,000 points. High-density point clouds or large-scale scenes require preprocessing, such as sparsification through random or farthest point sampling, or partitioning into 3D grid chunks, to reduce the input size. This preprocessing highlights FPAC’s current limitations in directly handling dense point clouds due to the computational demands of large data volumes.

Furthermore, FPAC-based networks require careful hyperparameter tuning, including adjustments to frame point structure, neighborhood discovery methods, and layer count. As shown in our experiments, excessive layers lead to increased computational costs without significant performance gains. To balance accuracy and efficiency, we recommend limiting the feature encoding layers to a maximum of six.

While FPAC effectively encodes sparse point clouds, its scalability to large-scale dense point clouds remains a challenge, warranting further exploration in future research.

Conclusion

This paper introduces FPAC, a convolution operator designed to effectively learn 3D point clouds. FPAC computes convolution weights using frame points and an attention mechanism, incorporating a frame constraint to ensure weight consistency. The filter, formed by the computed weights, is continuous in space, allowing FPAC to generate weights for any position in the 3D point cloud model. Furthermore, we reformulated FPAC for a memory-efficient implementation, enabling its application to larger models. Extensive experiments were conducted to evaluate FPAC’s effectiveness, and the results demonstrate that FPAC-based classification networks achieve state-of-the-art performance compared to existing methods. These findings validate FPAC’s effectiveness in spatial feature extraction for point clouds.

Moving forward, we aim to explore several research directions. First, we plan to extend FPAC to non-Euclidean data, such as graphs, to further investigate its generalizability. Additionally, we will explore its integration into more complex 3D vision tasks, such as scene understanding and dynamic point cloud processing. Finally, optimizing FPAC for real-time applications will be another key focus, enhancing its practical usability in large-scale 3D data analysis.

Data availability

All data generated or analysed during this study are included in this published article. ModelNet dataset14 is accessible from https://modelnet.cs.princeton.edu/, ShapeNet dataset45 is accessible from https://shapenet.org/, S3DIS dataset50 is accessible from https://github.com/alexsax/2D-3D-Semantics.

References

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Zhang, J., Huang, S., Liu, J., Zhu, X. & Xu, F. Pyrf-pcr: A robust three-stage 3d point cloud registration for outdoor scene. IEEE Trans. Intel. Veh. 9, 1270–1281. https://doi.org/10.1109/TIV.2023.3327098 (2024).

Zhang, J., Su, Q., Tang, B., Wang, C. & Li, Y. Dpsnet: Multitask learning using geometry reasoning for scene depth and semantics. IEEE Trans. Neural Netw. Learn. Syst. 34, 2710–2721. https://doi.org/10.1109/TNNLS.2021.3107362 (2023).

Zhang, J., Liu, Y., Ding, G., Tang, B. & Chen, Y. Adaptive decomposition and extraction network of individual fingerprint features for specific emitter identification. IEEE Trans. Inf. Forensics Secur. 19, 8515–8528. https://doi.org/10.1109/TIFS.2024.3427361 (2024).

Bronstein, M. M., Bruna, J., LeCun, Y., Szlam, A. & Vandergheynst, P. Geometric deep learning: Going beyond Euclidean data. IEEE Signal Process. Mag. 34, 18–42 (2017).

Mnih, V., Heess, N., Graves, A. & koray kavukcuoglu. Recurrent models of visual attention. In Advances in Neural Information Processing Systems, Vol. 27 2204–2212 (2014).

Vaswani, A. et al. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Vol. 30 5998–6008 (2017).

Zhang, W., He, X. & Lu, W. Exploring discriminative representations for image emotion recognition with cnns. IEEE Trans. Multimed. 22, 515–523 (2020).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2818–2826 (2016).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778 (2016).

Le, T., Bui, G. & Duan, Y. A multi-view recurrent neural network for 3d mesh segmentation. Comput. Graph. 66, 103–112 (2017).

Qi, C. R. et al. Volumetric and multi-view cnns for object classification on 3d data. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5648–5656 (2016).

Maturana, D. & Scherer, S. Voxnet: A 3d convolutional neural network for real-time object recognition. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 922–928 (2015).

Wu, Z. et al. 3d shapenets: A deep representation for volumetric shapes. In 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1912–1920 (2015).

Ben-Shabat, Y., Lindenbaum, M. & Fischer, A. 3dmfv: Three-dimensional point cloud classification in real-time using convolutional neural networks. IEEE Robot. Autom. Lett. 3, 3145–3152 (2018).

Wang, P.-S., Liu, Y., Guo, Y.-X., Sun, C.-Y. & Tong, X. O-cnn: octree-based convolutional neural networks for 3d shape analysis. ACM Trans. Graph. 36, 72 (2017).

Riegler, G., Ulusoy, A. O. & Geiger, A. Octnet: Learning deep 3d representations at high resolutions. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6620–6629 (2017).

Klokov, R. & Lempitsky, V. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models. In 2017 IEEE International Conference on Computer Vision (ICCV), 863–872 (2017).

Charles, R. Q., Su, H., Kaichun, M. & Guibas, L. J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 77–85 (2017).

Qi, C. R., Yi, L., Su, H. & Guibas, L. J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 30, 5099–5108 (2017).

Simonovsky, M. & Komodakis, N. Dynamic edge-conditioned filters in convolutional neural networks on graphs. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 29–38 (2017).

Verma, N., Boyer, E. & Verbeek, J. Feastnet: Feature-steered graph convolutions for 3d shape analysis. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2598–2606 (2018).

Zhang, Y. & Rabbat, M. A graph-cnn for 3d point cloud classification. In 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6279–6283 (2018).

Kipf, T. N. & Welling, M. Semi-supervised classification with graph convolutional networks. In ICLR 2016 : International Conference on Learning Representations 2016 (2016).

Wang, Y. et al. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. 38, 146 (2019).

Veličković, P. et al. Graph attention networks. In ICLR 2018 : International Conference on Learning Representations 2018 (2018).

Wang, L., Huang, Y., Hou, Y., Zhang, S. & Shan, J. Graph attention convolution for point cloud semantic segmentation. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 10296–10305 (2019).

Chen, C., Qian, S., Fang, Q. & Xu, C. Hapgn: Hierarchical attentive pooling graph network for point cloud segmentation. IEEE Trans. Multimed. 1–1 (2020).

Qiu, S., Anwar, S. & Barnes, N. Geometric back-projection network for point cloud classification. IEEE Transactions on Multimedia 1–1 (2021).

Li, Y. et al. Pointcnn: convolution on x-transformed points. In NIPS’18 Proceedings of the 32nd International Conference on Neural Information Processing Systems, Vol. 31, 828–838 (2018).

Komarichev, A., Zhong, Z. & Hua, J. A-cnn: Annularly convolutional neural networks on point clouds. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 7421–7430 (2019).

Wu, W., Qi, Z. & Fuxin, L. Pointconv: Deep convolutional networks on 3d point clouds. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 9621–9630 (2019).

Thomas, H. et al. Kpconv: Flexible and deformable convolution for point clouds. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 6411–6420 (2019).

Zhao, H., Jiang, L., Jia, J., Torr, P. H. & Koltun, V. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 16259–16268 (2021).

Yu, X. et al. Point-bert: Pre-training 3d point cloud transformers with masked point modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 19313–19322 (2022).

Huang, Z., Zhao, Z., Li, B. & Han, J. Lcpformer: Towards effective 3d point cloud analysis via local context propagation in transformers. IEEE Trans. Circuits Syst. Video Technol. 33, 4985–4996 (2023).

Robert, D., Raguet, H. & Landrieu, L. Efficient 3d semantic segmentation with superpoint transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 17195–17204 (2023).

Liu, Y., Fan, B., Xiang, S. & Pan, C. Relation-shape convolutional neural network for point cloud analysis. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 8895–8904 (2019).

Paszke, A. et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32, 8026–8037 (2019).

Abadi, M. et al. Tensorflow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Conference on Operating Systems Design and Implementation, 265–283 (2016).

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 234–241 (2015).

Te, G., Hu, W., Zheng, A. & Guo, Z. Rgcnn: Regularized graph cnn for point cloud segmentation. In Proceedings of the 26th ACM International Conference on Multimedia, 746–754 (2018).

Pan, G., Liu, P., Wang, J., Ying, R. & Wen, F. 3dti-net: Learn 3d transform-invariant feature using hierarchical graph cnn. In Pacific Rim International Conference on Artificial Intelligence, 37–51 (2019).

Shen, Y., Feng, C., Yang, Y. & Tian, D. Mining point cloud local structures by kernel correlation and graph pooling. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4548–4557 (2018).

Chang, A. X. et al. Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012 (2015).

Li, J., Chen, B. M. & Lee, G. H. So-net: Self-organizing network for point cloud analysis. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 9397–9406 (2018).

Atzmon, M., Maron, H. & Lipman, Y. Point convolutional neural networks by extension operators. ACM Trans. Graph. 37, 71 (2018).

Su, H. et al. Splatnet: Sparse lattice networks for point cloud processing. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2530–2539 (2018).

Xu, Y., Fan, T., Xu, M., Zeng, L. & Qiao, Y. Spidercnn: Deep learning on point sets with parameterized convolutional filters. In Proceedings of the European Conference on Computer Vision (ECCV), Vol. 11212 90–105 (2018).

Armeni, I. et al. 3d semantic parsing of large-scale indoor spaces. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1534–1543. https://doi.org/10.1109/CVPR.2016.170 (2016).