Abstract

The advancement of transformer neural networks has significantly enhanced the performance of sentence similarity models. However, these models often struggle with highly discriminative tasks and generate sub-optimal representations of complex documents such as peer-reviewed scientific literature. With the increased reliance on retrieval augmentation and search, representing structurally and thematically-varied research documents as concise and descriptive vectors is crucial. This study improves upon the vector embeddings of scientific text by assembling domain-specific datasets using co-citations as a similarity metric, focusing on biomedical domains. We introduce a novel Mixture of Experts (MoE) extension pipeline applied to pretrained BERT models, where every multi-layer perceptron section is copied into distinct experts. Our MoE variants are trained to classify whether two publications are cited together (co-cited) in a third paper based on their scientific abstracts across multiple biological domains. Notably, because of our unique routing scheme based on special tokens, the throughput of our extended MoE system is exactly the same as regular transformers. This holds promise for versatile and efficient One-Size-Fits-All transformer networks for encoding heterogeneous biomedical inputs. Our methodology marks advancements in representation learning and holds promise for enhancing vector database search and compilation.

Similar content being viewed by others

Introduction

The remarkable success of transformer-based large language models (LLMs)1 has significantly increased our confidence in their abilities and outputs. Nowadays, LLMs are treated as de facto knowledge bases and have been adopted on a mass scale with the release of services like ChatGPT and open-source counterparts like Llama, Mistral, and DeepSeek-V32,3,4. However, despite their widespread use, challenges persist, particularly concerning the accuracy and reliability of these models. For example, common issues like LLM hallucinations5,6 highlight the ongoing need for improvement. The ability to generate reliable vector embeddings and perform precise classification is crucial, especially for technologies that rely on information retrieval and web search.

One approach to further curate transformer latent spaces is to utilize contrastive learning to create sentence similarity models, initially revolutionizing sentiment analysis with broader applications in vector search7,8,9. More recently, the E5 line of models has demonstrated strong performance by applying contrastive learning on mean-pooled embeddings derived from the CCPairs dataset10. This resulted in a strong sentence similarity model that still has the top spot on the Massive Text Embedding Benchmark (MTEB) leaderboard at the time of writing10,11. However, as we showcase below, even strong sentence similarity models like E5 miss out-of-distribution domain-specific nuances12,13, resulting in sub-optimal representations of many important documents, including scientific literature.

Fortunately, several advancements have paved the way toward effective sentence similarity models over an arbitrary number of domains. Work from the metascience community has introduced co-citation networks as a practical way to gather many similar papers14,15,16,17,18,19,20,21,22. While this degree of similarity may not be perfect, co-citations have been shown to imply a high degree of similarity between papers21. Another promising advancement comes from the deep learning community with Mixture of Experts (MoE) models. Their learned input-dependent routing of information constitutes a promising multidomain / multitask learning architecture without significant added overhead23. Taking advantage of these methods, we propose the following MoE extension framework to build discriminative vector representations of input documents across diverse domains:

-

1.

Domain-specific fine-tuning Apply contrastive fine-tuning methods to pretrained BERT (Bidirectional Encoder Representation Transformers) models using a predefined similarity heuristic, tailoring them to learn and understand domain-specific nuance.

-

2.

Universal applicability through mixture of experts (MoE) Introduce a scalable method of seeding MoE models from dense pretrained transformers, aiming for a versatile “One-Size-Fits-All” model.

In this study, we conduct a case analysis on biomedical scientific literature - building a strong sentence similarity model that leverages co-citations as a similarity heuristic to differentiate niche literature across diverse domains from their textual abstracts alone. Our results show that the MoE extension framework improves LLMs performance in identifying semantically similar or niche intradisciplinary texts, showcasing a scalable method to produce effective vector representations that generalize across a wide range of scientific literature. Our methods substantially outperform general pretrained models and fine-tuned sentence similarity models, including science-oriented BERT models and Llama3.

Methods

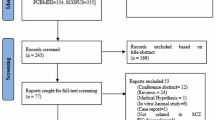

Data compilation

We used co-citations as a similarity heuristic to generate sufficiently large training datasets for contrastive learning over scientific domains. Co-citations represent instances where two papers are cited together in a third paper. Our strategy enabled the production of large training datasets from small amounts of data due to the nonlinear scaling of citation graphs, as a single paper citing N other papers produces \(N\atopwithdelims ()2\) co-citation pairs. For context, a dataset of 10,000 individual papers can produce well over 125,000 co-citation pairs. While this measurement of similarity is not perfect, co-citations have generally been shown to imply a high degree of similarity between papers21. We assume for our modeling purposes that two co-cited papers are more similar than two random papers, even if they are from the same field.

To build our dataset, we randomly chose five biomedical subfields with little overlap. The domains of choice include papers related to cardiovascular disease (CVD), chronic obstructive pulmonary disease (COPD), parasitic diseases, autoimmune diseases, and skin cancers. PubMed Central was queried with Medical Subject Heading (MeSH) terms for each domain, requiring at least one citation and an abstract present between 2010 and 2022. This means that within the time period, we kept the co-citation pairs of the possible \(N\atopwithdelims ()2\) co-citations per paper that were returned from the same common MeSH terms. We sampled preferentially from samples co-cited more times when constructing our final dataset.

For evaluation, we constructed “negative” examples of abstract pairs that were not co-cited. The training dataset was split randomly in a 99:1 ratio followed by deduplication. We built negative pairs by pairing abstracts that had not been co-cited and had both been cited at least 15 times. This criteria allowed us to construct a representative evaluation set for binary classification with balanced classes, with 1’s for co-cited pairs and 0 if not. The exact dataset counts are outlined in Table 1.

Transformer neural networks

The transformer architecture is adept at sequential processing and is state-of-the-art for various natural language processing (NLP) and vision tasks24,25,26,27,28,29,30. A transformer block comprised a self-attention layer and multi-layer perception (MLP) interleaved with skip connections. Full transformers were made of T transformer blocks stacked together1.

Prior to the transformer blocks is the token embedding process, where tokenization maps an input string into a list of L integers from a dictionary. These integers served as the indices for a matrix \(W_e\), where each row is a learnable representative vector for that token, making \(W_e\in \mathbb {R}^{v\times d}\) where v is the total number of unique tokens in the vocabulary and d an arbitrarily chosen hidden dimension. The initial embedding is \(\mathbb {R}^{L\times d}\).

Each block in the transformer then transforms this embedding, i.e., the \(i^{th}\) transformer block maps the embedding \(X^{(i-1)} = [x_1^{(i-1)}, ..., x_L^{(i-1)}]^\top \in \mathbb {R}^{L\times d}\) to \(X^{(i)} = [x_1^{(i)}, ..., x_L^{(i)}]^\top \in \mathbb {R}^{L \times d}\)1,31,32. \(X^{(T)}\) is the last hidden state of the network. The first part of this map is self-attention, which mixes information across the vectors, followed by the MLP which mixes information across d31,33.

Including the MLP, the entire transformer block can be written as:

where \(b_1\) and \(b_2\) are biases associated with learned linear transformations \(W_1 \in \mathbb {R}^{d\times I}\) and \(W_2 \in \mathbb {R}^{I\times d}\), where \(I > d\). The activation function \(\sigma\), e.g., ReLU or GeLU, introduces non-linearity1. More recently, biases are not included, which improves training stability, throughput, and final performance. Additionally, improvements like SwiGLU activation functions and rotary positional embeddings are also commonly utilized3,4,34,35.

GPT (Generative Pretrained Transformer) models, such as OpenAI’s GPT series (GPT-3, GPT-4, etc.), are designed for generative tasks and use transformer decoders36,37,38. They employ causal (unidirectional) attention, meaning each token attends only to previous tokens in the sequence, enabling autoregressive generation during inference. This allows them to predict the next word in a sequence without direct access to future words.

In contrast, BERT models utilize transformer encoders with bidirectional attention, meaning they can attend to all tokens within an input simultaneously. This structure enables them to capture additional contextual dependencies, making them well-suited for tasks like text classification and sentence similarity39. Unlike GPT models, BERT is trained using a masked language modeling (MLM) objective, where some tokens are randomly hidden, requiring the model to predict them based on the surrounding context.

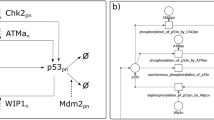

Mixture of Experts

Mixture of Experts (MoE) models add a linear layer or router network to each transformer block, which outputs logits from \(H^{(i)}\). These logits route \(H^{(i)}\) to multiple equivalent copies of the MLP section with different weights called experts40. In many transformer variants, this routing is typically done on a per-token basis, allowing for experts to specify in language classes like punctuation, nouns, numbers, etc41. We chose sentence-wise routing of the entire \(H^{(i)}\) so that we could purposely structure our experts for specific domains42.

Controlling the routing of \(H^{(i)}\), allowed for a one-size-fits-all approach to text classification where one expert per transformer layer was an expert in a specific domain. For faster fine-tuning, we utilized pretrained models for our novel MoE extension approach (Fig. 1). As such, each MLP section was copied into five identical components to be differentiated during training. We also removed the learned router entirely and routed examples to a specific expert based on which domain the text comes from. The final MoE models had five experts each, where all COPD inputs were routed to a single expert and all CVD inputs to another, etc. To further enhance the nuance behind the representations built from our model, and to allow for the attention layers to distinguish which type of input was fed to the model, we added special tokens for each domain, e.g., [CVD], [COPD], etc. The token embedding for these new special tokens was seeded with the pretrained weight from the [CLS] token, and the [CLS] token was replaced with the correct domain token during tokenization. As such, the domain tokens were equivalent to the [CLS] token before further training.

Visualization of our MoE extension pipeline, where the MLP of each transformer network is copied into equivalent experts to further differentiate during training. Additionally, domain-specific special tokens are seeded from the pretrained Token Embedding Matrix (TEM) using the [CLS] token, which is replaced with the correct domain token upon tokenization.

Models of choice

We chose the recent ModernBERT base model as the pretrained model of choice for our experiments35. This bidirectional model employs efficient implementations of masking and attention to speed up training and inference while reducing memory costs. Local attention was used in most layers, with global attention at every third layer. We trained ModernBERT directly without modification and with MoE extension. The ModernBERT models offer much higher NLP benchmark performance per parameter than the first generation of BERT models following the 2019 release and subsequent fine-tuning releases35.

To benchmark against our training scheme, we compiled several popular BERT-like models, BERT models fine-tuned on scientific literature, sentence similarity models, and a recent SOTA GPT-like transformer (Table 2). ModernBERT, BERT, and RoBERTa have all been solely pretrained with MLM objectives35,39,43. SciBERT, BioBERT, and PubmedBERT have all been trained further on a scientific corpus with additional MLM44,45,46. all-MiniLM-L6-v2 (Mini), MPNet, and E5 have been fine-tuned using contrastive learning for sentence similarity and embedding-based tasks10,47,48,49,50,51,52,53. Llama-3.2 is a state-of-the-art “small” generative language model that has seen wide use and success in local and open-source use cases3. We also benchmarked against a basic term frequency-inverse document frequency (TF-IDF), acting as a baseline for expected performance54.

All transformer models were downloaded and used with the Huggingface [SPSVERBc1SPS] package, leveraging custom embedding classes for efficient resource management. The TF-IDF scheme was fit on each domain separately using Python’s scikit-learn implementation with 4,096 maximum features54,55. The wide diversity in representation learning models allowed for an effective comparison to our training scheme.

Training strategy

To minimize training and inference time, we chose to use abstracts rather than entire papers as the text input to the model. Abstracts represent a human-generated summarized version of a paper and, as a result, include much of the relevant textual information contained in a paper. We trained regular ModernBERT\(_{base}\) models (single expert or SE models) on one domain at a time, on every domain (SE\(_{all}\)), and our MoE extended model (MoE\(_{all}\)) on every domain.

The training objective was to summarize two paired mini-batches of abstracts separately. The abstract of index i in each batch was a co-cited abstract pair. The last hidden state of the model \(H^{(L)}\) was mean pooled to build fixed-length vector representations of each batch. Then, we compared these embeddings with the variant of the Multiple Negative Rankings (MNR) loss used to train cdsBERT56,57. MNR Loss is a loss function that has seen significant success with sentence embedding problems58 and was highly successful in our local experiments. Our variant used dot products as an inter-batch similarity heuristic and constructed the targets based on the average intra-batch dot products. The loss was formulated as follows:

where b is the batch size, \(B_i \in \mathbb {R}^{b \times d}\) is mini-batch i and H is the cross-entropy. Batches \(B_1, B_2\) must be paired such that element \(i=i\) are “similar,” in our case co-cited, and assumed to be dissimilar for other indices \(i \ne j\) of a paired batch. This can be easily achieved by passing two paired batches to the model in two forward passes and combining their gradients for one backward pass in a standard autograd library. We used PyTorch for our experiments59.

The advantage of MNR losses and their variants is precisely this property requiring only positive/similar text pairs, generating negative/dissimilar text pairs from the other indices \(i \ne j\) of the mini-batch. As a result, MNR loss removed the need to generate dissimilar text pairs for our training dataset under the assumption that the random chance of finding a similar paper randomly that is co-cited, is sufficiently small. Indices \(i \ne j\) during single-domain training would be randomly paired papers from the same field, while during multi-domain training, it could be two random papers from different fields or the same. In either case, this approach satisfied our modeling assumptions that two co-cited papers were more similar than two random papers.

During training, we randomly switched the order of the two input abstract pairs to prevent any bias in how they were fed to the loss function. A batch size \(b=16\) was chosen for computational throughput and minimizing the chance for multiple positive abstract pairs showing up in a mini-batch. We trained models with a cosine learning rate scheduler with warm up using a learning rate of \(1e^{-4}\), and performed periodic validation to measure training progress. Training was halted when a patience of 5 was exceeded for the evaluation set \(F1_{max}\).

Evaluation strategy

All models were evaluated on the evaluation sets separately for each domain, as well as averaged together. We used cosine similarity between two vectors extracted from an abstract pair to classify the abstracts as co-cited (similar) or not, given a threshold, shown in Fig. 2. Cosine similarity is a common vector similarity measure ranging from -1 to 1, where -1 is exactly the opposite and 1 occurs for a pair of the same vector. We thresholded the cosine similarly to create a decision boundary and measured the \(F1_{max}\) to evaluate performance60,61. \(F1_{max}\) is the maximum F1 score calculated for all possible thresholds for a reported metric, calculated using a precision-recall curve. While typically used for imbalanced multilabel classification, randomly choosing a binary threshold for the reported F1 would not be a fair comparison of different models. For example, one model may perform much better with a cosine similarity threshold at 0.5 for abstract text similarity compared to 0.4, or vice versa. We also reported average precision and recall at the optimal threshold found for \(F1_{max}\), the ROC-AUC, as well as the average cosine similarity ratio between positive examples and negative examples. This ratio showcases the average discriminative power of a model, where a higher ratio implies that positive and negative examples are more separable.

Results

The results for all domains averaged together are shown in Table 3. On average, our full approach with MoE extension and contrastive learning (MoE\(_{all}\)), showcased the highest performance with an \(F1_{max}\) of 0.8875 across all domains. For single domain evaluation, we expected single expert (SE) models that were fine-tuned for only that domain, for example, SE\(_{cancer}\) for the parasitic diseases, to perform the best. For skin cancer (Table 5), COPD (Table 6), CVD (Table 7), and parasitic (Table 8) literature this was the case. This trend did not hold in the autoimmune domain (Table 4), with MoE\(_{all}\) narrowly outperforming SE\(_{autoimmune}\). Of note, MoE\(_{all}\) and SE\(_{all}\) failed to perform better than base sentence similarity models on the CVD abstracts, where only SE\(_{cvd}\) outperformed MPnet, E5, and Mini. Statistical analysis of all pairwise model performance can be found in the supplemental materials (Supplemental Fig. 1–6).

Discussion

Our work advances the use of transformer language models with a focus on improving their domain-specific understanding and document-wide comprehension from summaries (abstracts). We have shown that popular pretrained models cannot distinguish the differences in highly discriminative scientific literature despite further fine-tuning for sentence similarity tasks with contrastive learning or additional MLM on scientific papers. For example, when examining the COPD domain results (Table 6), all evaluated pretrained models showcase random or near random performance, with no model achieving even a 0.7 \(F1_{max}\). We assume this phenotype is even worse in prompting scenarios when considering common prompt construction and formatting. If a user were to ask ChatGPT if multiple scientific documents were similar or potentially co-cited, they would have to paste the documents together in the same input. When considering the self-attention mechanism, the semantic similarity of related tokens across the multiple documents may prevent effective distinction between the documents, as portions of each document will attend highly to each other even if they are “different” as defined by a desired discrimination. Therefore, a document-wise embedding approach is much more tractable, enforcing that multiple documents are input separately and embedded in a close vector space if similar enough.

As vector databases become more prevalent for search and retrieval tasks, the quality of these numerical representations becomes increasingly important. Our innovative framework, which incorporates contrastive learning through a custom MNR variant, novel special tokens, and MoE seeding, extension, and forced routing techniques, significantly enhances vector-based classification compared to pretrained transformers. We leveraged co-citation networks to construct large datasets of similar abstracts and applied our framework to scientific literature. This created nuanced representations with a specific focus on discriminative biomedical domains.

Specifically, MoE\(_{all}\) and SE\(_{all}\) performed the best on average, with 0.8875 and 0.8770 \(F1_{max}\), respectively. Whereas SE models trained on a single domain were the best performers for that domain. This was the case for all domains except for autoimmune, where MoE\(_{all}\) narrowly outperformed SE\(_{autoimmune}\)with a \(F1_{max}\) of 0.8908 vs. 0.8904. MoE\(_{all}\) routinely outperformed SE versions of the models with respect to the vector ratio metrics, often 1.7 vs. 1.5 between MoE\(_{all}\) and SE\(_{all}\), highlighting that the MoE extensions increased average separation of co-cited papers against others in the same field. Interestingly, the TF-IDF scheme often had the highest vector ratio, implying the highest average separation. Due to its subpar \(F1_{max}\) and low threshold for binary classification, we can conclude that TF-IDF may be “over-confident” in general, placing small text motifs in a unique vector-space, perhaps missing the nuance that trained language models can capture.

Importantly, the SE\(_{all}\) model with no MoE extension also performed exceedingly well, almost performing on par with MoE\(_{all}\) in \(F1_{max}\) and ratio. In our previous experiments and preprint, we used SciBERT for the classification of co-cited scientific documents62. With a SciBERT base model, the SE models trained on each domain outperformed the MoE version by a large margin, whereas the MoE version outperformed SE\(_{all}\) by an even larger margin. We attribute this difference in performance to ModernBERT, a new optimized language model with excellent representations, as being a stronger base model for experimentation. As a result, the MoE extension had less of an effect than previous experiments using SciBERT.

Compellingly, when looking at the SE performance for models trained on one domain but evaluated on all domains (Table 3), they outperform many of the base models. For example, SE\(_{cancer}\) outperforms even the sentence similarity models, which is not surprising considering that the parasitic data comprises a large portion of the overall dataset size. More interestingly, the SE\(_{autoimmune}\) outperforms Llama-3.2, and SE\(_{cvd}\), SE\(_{copd}\), and SE\(_{cancer}\) outperform all of the MLM-trained BERT models. This implies that when training on one scientific domain, performance is not significantly hampered across other unrelated domains. This is observed especially between the SE models and the weights they were seeded from, ModernBERT\(_{base}\), with a 0.02 - 0.08 higher \(F1_{max}\) and 0.2 - 0.4 increase in vector ratio.

Of course, our training scheme has limitations as well. For datasets that are already easily discriminated, such extensive fine-tuning may harm the overall performance. We support this notion when looking at the CVD domain results. The pretrained models had much higher natural scores, implying that these documents are already fairly separable, with a strong 2.7 ratio from MPnet, 2.8 ratio from Mini, and 3.8 ratio for TF-IDF. This led to MPnet, E5, and Mini outperforming MoE\(_{all}\) and SE\(_{all}\). It is also unclear if our scheme is resilient to datasets with vector ratios that the pretrained models disagree with. For example, even though we see low \(F1_{max}\) across the base pretrained models, they all result in a ratio above 1.0 for every dataset. This means, based on MLM (or contrastive learning for the sentence similarity models), that the model already “agreed” that the co-cited papers were more semantically similar than other pairs that are not co-cited. This is the evidence behind co-citations as a measurement for similarity, but it also opens the door for future work. We believe it would be fruitful to explore our scheme on a paired dataset where the “natural” semantic similarity after MLM was less than 1.0 but is paired by some similarity heuristic. Many molecular sequences, like proteins, share this property, where pretrained transformers often lack true semantic embeddings after MLM alone63,64.

Of note, routing each example to a single expert based on the domain of the input means that the active parameters for the model are exactly equivalent to the model before MoE extension. In other words, the forward pass FLOPs are exactly equivalent to the original pretrained model. For homogeneous (all examples from one domain) inference batches, the throughput of the MoE extended version of the model vs. the original will be exactly the same as long as there is enough VRAM to store the additional inactive weights. Even without the necessary VRAM, portions of the model could be stored in the CPU memory when doing inference for a certain task, and then they could be switched for another subsequent task. Unfortunately, with heterogeneous batches during inference or training, the forward and backward pass will be slower based on how many experts need to be called. While requiring the same amount of FLOPs, they are not perfectly parallelized in the forward pass using naive implementations of switch versions for MoE. Issues like this may be alleviated in the future by efficient MoE parallelization efforts like DeepSeek’s DeepEP project4,65. For the backward pass, it is necessarily slower as more weights and gradients are involved. Even with naive implementations, we conclude from the strong performance across all five diverse evaluated domains that compute may be better spent on an N expert-wide MoE network than fine-tuning N equivalent networks, especially when considering domain overlap and the possibility of merging and LoRA methodologies.

We believe that there are many potential applications of the MoE extension framework coupled with contrastive and/or other fine-tuning methods. One compelling avenue is named entity recognition66. Because experts are only routed examples from a specific user-defined domain, an expert may produce informative hidden states surrounding niche and nuanced terms. We suspect the token-wise embeddings from intermediate experts or from the last hidden state may present strong correlations with downstream NER tasks due to specific domain embedding structures. Once pooled, the fixed-length vector embeddings may offer a useful platform for retrieval tasks, including Retrieval Augmented Generation (RAG). Pre-embedded datasets will be much more separable for intra-domain searches from prompted LLM systems, capable of returning closely related content based on domain-specific context. Even inter-domain searches may be ideally separable if the system was trained like MoE\(_{all}\), with an MNR-like loss on multiple domains at once. One application that could benefit from separable inter-domain embeddings would be clinical or medical notes, where token-wise or pooled embeddings are fed to experts trained on other notes from a particular medical specialty or practice.

Another exciting application lies in mechanistic interpretability. We chose sentence-wise routing over other reasonable MoE routing schemas to keep expert weights completely separate for distinct domains. We believe this type of scheme is an ideal playground for mechanistic interpretability, examining nuanced and niche concepts learned for specific domains. There are many relevant questions here: How are the expert weights structured? Do sparse autoencoders reveal distinct neuron activations for niche concepts consistent across domains and experts67? Do the expert MLPs act like specified Hopfield networks68,69? It may also be possible to conduct weight merging or ensembling to increase performance and reduce VRAM costs70. Such research may also want to augment the attention layers in a domain-specific way. To accommodate this, we have included code to apply Low Rank Adaptation (LoRA) to attention layers, allowing for researchers to train domain-specific adapters alongside the MoE extended MLPs71.

Overall, our use of co-citation networks enables rapid and efficient dataset compilation for training transformers on niche scientific domains. The fine-tuning of base BERT models through contrastive learning with an MNR-inspired loss significantly improves sentence similarity capabilities. The MoE approach further expands these capabilities, suggesting the feasibility of a universal model for text classification and vector embeddings across various domains through MoE seeding and enforced routing. Given efficient inference considerations, one could embed large datasets such as the entire Semantic Scholar database without any additional overhead for using a MoE model. Using the building blocks of our approach, effective BERT models with specialized knowledge across multiple fields, vocabularies, or tasks can be developed.

Data availability

Links to all training data, model weights, and code can be found at Github [Gleghorn Lab, Mixture of Experts Sentence Similarity].

References

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems (eds. Guyon, I. et al.) vol. 30 (Curran Associates, Inc., 2017).

Touvron, H. et al. Llama 2: Open foundation and fine-tuned chat models. https://doi.org/10.48550/arXiv.2307.09288. arXiv:2307.09288.

Grattafiori, A. et al. The llama 3 herd of models. https://doi.org/10.48550/arXiv.2407.21783. ArXiv:2407.21783 (2024).

DeepSeek-AI et al. Deepseek-v3 technical report. https://doi.org/10.48550/arXiv.2412.19437. ArXiv:2412.19437 (2024).

Branco, R., Branco, A., António Rodrigues, J. & Silva, J. R. Shortcutted commonsense: Data spuriousness in deep learning of commonsense reasoning. In (eds. Moens, M.-F. et al.) Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing 1504–1521 (Association for Computational Linguistics, 2021). https://doi.org/10.18653/v1/2021.emnlp-main.113.

Guerreiro, N. M., Voita, E. & Martins, A. Looking for a needle in a haystack: A comprehensive study of hallucinations in neural machine translation. In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics (eds. Vlachos, A. & Augenstein, I.) 1059–1075 (Association for Computational Linguistics, 2023). https://doi.org/10.18653/v1/2023.eacl-main.75.

Reimers, N. & Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In (eds. Inui, K., Jiang, J., Ng, V. & Wan, X.) Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP) 3982–3992 (Association for Computational Linguistics, 2019). https://doi.org/10.18653/v1/D19-1410.

Quan, Z. et al. An efficient framework for sentence similarity modeling https://doi.org/10.1109/TASLP.2019.2899494 (2019).

Yao, H., Liu, H. & Zhang, P. A novel sentence similarity model with word embedding based on convolutional neural network. https://doi.org/10.1002/cpe.4415, https://onlinelibrary.wiley.com/doi/pdf/10.1002/cpe.4415 (2018).

Wang, L. et al. Text embeddings by weakly-supervised contrastive pre-training. https://doi.org/10.48550/arXiv.2212.03533. ArXiv:2212.03533 (2024).

Muennighoff, N., Tazi, N., Magne, L. & Reimers, N. Mteb: Massive text embedding benchmark. https://doi.org/10.48550/arXiv.2210.07316. ArXiv:2210.07316 (2023).

Giorgi, J., Nitski, O., Wang, B. & Bader, G. DeCLUTR: Deep contrastive learning for unsupervised textual representations. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) (eds. Zong, C., Xia, F., Li, W. & Navigli, R.) 879–895 (Association for Computational Linguistics, 2021). https://doi.org/10.18653/v1/2021.acl-long.72.

Deka, P., Jurek-Loughrey, A. & Deepak. Unsupervised Keyword Combination Query Generation from Online Health Related Content for Evidence-based Fact Checking. https://doi.org/10.1145/3487664.3487701 (2021).

Abrishami, A. & Aliakbary, S. Predicting Citation Counts Based on Deep Neural Network Learning Techniques https://doi.org/10.1016/j.joi.2019.02.011 (2019).

Zhang, T., Chi, H. & Ouyang, Z. Detecting research focus and research fronts in the medical big data field using co-word and co-citation analysis. In 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS) 313–320. https://doi.org/10.1109/HPCC/SmartCity/DSS.2018.00072 (2018).

Brizan, D. G., Gallagher, K., Jahangir, A. & Brown, T. Predicting citation patterns: Defining and determining influence. https://doi.org/10.1007/s11192-016-1950-1 (2016).

Malkawi, R., Daradkeh, M., El-Hassan, A. & Petrov, P. A semantic similarity-based identification method for implicit citation functions and sentiments information. https://doi.org/10.3390/info13110546 (2022).

Rodriguez-Prieto, O., Araujo, L. & Martinez-Romo, J. Discovering related scientific literature beyond semantic similarity: A new co-citation approach. https://doi.org/10.1007/s11192-019-03125-9 (2019).

Eto, M. Extended co-citation search: Graph-based document retrieval on a co-citation network containing citation context information. https://doi.org/10.1016/j.ipm.2019.05.007 (2019).

Galke, L., Mai, F., Vagliano, I. & Scherp, A. Multi-modal adversarial autoencoders for recommendations of citations and subject labels. In Proceedings of the 26th Conference on User Modeling, Adaptation and Personalization, UMAP ’18, 197–205 (Association for Computing Machinery, 2018).https://doi.org/10.1145/3209219.3209236.

Colavizza, G., Boyack, K. W., van Eck, N. J. & Waltman, L. The closer the better: Similarity of publication pairs at different cocitation levels. https://doi.org/10.1002/asi.23981(2018). https://asistdl.onlinelibrary.wiley.com/doi/pdf/10.1002/asi.23981.

Gipp, B. & Beel, J. Citation proximity analysis (cpa): A new approach for identifying related work based on co-citation analysis. In Computer Science (2009).

Gupta, S. et al. Sparsely activated mixture-of-experts are robust multi-task learners https://doi.org/10.48550/arXiv.2204.07689 (2022).

Beeching, E. et al. Open llm leaderboard. https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard (2023).

Clark, P. et al. Think you have solved question answering? try arc, the ai2 reasoning challenge (2018). arXiv:1803.05457.

Zellers, R., Holtzman, A., Bisk, Y., Farhadi, A. & Choi, Y. Hellaswag: Can a machine really finish your sentence? (2019). arXiv:1905.07830.

Hendrycks, D. et al. Measuring massive multitask language understanding (2021). arXiv:2009.03300.

Lin, S., Hilton, J. & Evans, O. Truthfulqa: Measuring how models mimic human falsehoods (2022). arXiv:2109.07958.

Sakaguchi, K., Bras, R. L., Bhagavatula, C. & Choi, Y. WINOGRANDE: an adversarial winograd schema challenge at scale (2019). arXiv:1907.10641.

Cobbe, K. et al. Training verifiers to solve math word problems (2021). arXiv:2110.14168.

Sharma, P., Ash, J. T. & Misra, D. The truth is in there: Improving reasoning in language models with layer-selective rank reduction. https://doi.org/10.48550/arXiv.2312.13558. arXiv:2312.13558.

Hallee, L. & Gleghorn, J. P. Protein-protein interaction prediction is achievable with large language models. https://doi.org/10.1101/2023.06.07.544109.

Fu, D. Y. et al. Monarch mixer: A simple sub-quadratic GEMM-based architecture. https://doi.org/10.48550/arXiv.2310.12109. arXiv:2310.12109.

Shazeer, N. Glu variants improve transformer. https://doi.org/10.48550/arXiv.2002.05202. ArXiv:2002.05202 (2020).

Warner, B. et al. Smarter, better, faster, longer: A modern bidirectional encoder for fast, memory efficient, and long context finetuning and inference. https://doi.org/10.48550/arXiv.2412.13663 (2024). ArXiv:2412.13663.

Radford, A. et al. Language models are unsupervised multitask learners (2019).

Brown, T. B. et al. Language models are few-shot learners. https://doi.org/10.48550/arXiv.2005.14165. arXiv:2005.14165.

OpenAI et al. GPT-4 technical report. https://doi.org/10.48550/arXiv.2303.08774. arXiv:2303.08774.

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. https://doi.org/10.48550/arXiv.1810.04805. arXiv:1810.04805.

Shazeer, N. et al. Outrageously large neural networks: The sparsely-gated mixture-of-experts layer. https://doi.org/10.48550/arXiv.1701.06538. arXiv:1701.06538.

AI, M. Mixtral of experts. Section: news.

Zuo, S. et al. MoEBERT: from BERT to mixture-of-experts via importance-guided adaptation. https://doi.org/10.48550/arXiv.2204.07675. arXiv:2204.07675.

Liu, Y. et al. Roberta: A robustly optimized bert pretraining approach. https://doi.org/10.48550/arXiv.1907.11692 (2019). ArXiv:1907.11692.

Beltagy, I., Lo, K. & Cohan, A. SciBERT: A pretrained language model for scientific text. https://doi.org/10.48550/arXiv.1903.10676. arXiv:1903.10676.

Lee, J. et al. Biobert: a pre-trained biomedical language representation model for biomedical text mining. https://doi.org/10.1093/bioinformatics/btz682 (2020). ArXiv:1901.08746.

Gu, Y. et al. Domain-specific language model pretraining for biomedical natural language processing. https://doi.org/10.1145/3458754 (2022). ArXiv:2007.15779.

Wang, W. et al. MiniLM: Deep self-attention distillation for task-agnostic compression of pre-trained transformers. https://doi.org/10.48550/arXiv.2002.10957. arXiv:2002.10957.

Lewis, P. et al. PAQ: 65 million probably-asked questions and what you can do with them. https://doi.org/10.48550/arXiv.2102.07033. arXiv:2102.07033.

Khashabi, D. et al. GooAQ: Open question answering with diverse answer types. https://doi.org/10.48550/arXiv.2104.08727. arXiv:2104.08727.

Dunn, M. et al. SearchQA: A new qanda dataset augmented with context from a search engine. https://doi.org/10.48550/arXiv.1704.05179. arXiv:1704.05179.

Koupaee, M. & Wang, W. Y. WikiHow: A large scale text summarization dataset. https://doi.org/10.48550/arXiv.1810.09305. arXiv:1810.09305.

Henderson, M. et al. A repository of conversational datasets. https://doi.org/10.48550/arXiv.1904.06472. arXiv:1904.06472.

Song, K., Tan, X., Qin, T., Lu, J. & Liu, T.-Y. Mpnet: Masked and permuted pre-training for language understanding. https://doi.org/10.48550/arXiv.2004.09297 (2020). ArXiv:2004.09297.

Robertson, S. Understanding inverse document frequency: on theoretical arguments for idf https://doi.org/10.1108/00220410410560582 (2004).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python (2011).

Hallee, L., Rafailidis, N. & Gleghorn, J. P. cdsBERT - extending protein language models with codon awareness. https://doi.org/10.1101/2023.09.15.558027.

Shariatnia, M. M. Simple CLIP https://doi.org/10.5281/zenodo.6845731 (2021).

Henderson, M. et al. Efficient natural language response suggestion for smart reply (2017).

Paszke, A. et al. Pytorch: An imperative style, high-performance deep learning library. https://doi.org/10.48550/arXiv.1912.01703 (2019). ArXiv:1912.01703.

TorchDrug. DeepGraphLearning/torchdrug. Original-date: 2021-08-10T03:51:24Z.

Su, J. et al. SaProt: Protein language modeling with structure-aware vocabulary. https://doi.org/10.1101/2023.10.01.560349.

Hallee, L., Kapur, R., Patel, A., Gleghorn, J. P. & Khomtchouk, B. Contrastive learning and mixture of experts enables precise vector embeddings. https://doi.org/10.48550/arXiv.2401.15713 (2024). ArXiv:2401.15713.

Li, F.-Z., Amini, A. P., Yue, Y., Yang, K. K. & Lu, A. X. Feature reuse and scaling: Understanding transfer learning with protein language models https://doi.org/10.1101/2024.02.05.578959 (2024).

Hallee, L., Rafailidis, N., Horger, C., Hong, D. & Gleghorn, J. P. Annotation vocabulary (might be) all you need. https://doi.org/10.1101/2024.07.30.605924 (2024).

DeepSeek. Deepep: An efficient expert-parallel communication library (2025).

Wang, K . et al. NERO: a biomedical named-entity (recognition) ontology with a large, annotated corpus reveals meaningful associations through text embedding. npj Syst Biol Appl. 7, 38. https://doi.org/10.1038/s41540-021-00200-x (2021).

Cunningham, H., Ewart, A., Riggs, L., Huben, R. & Sharkey, L. Sparse autoencoders find highly interpretable features in language models (2023). arXiv:2309.08600.

Karakida, R., Ota, T. & Taki, M. Hierarchical associative memory, parallelized mlp-mixer, and symmetry breaking. https://doi.org/10.48550/arXiv.2406.12220(2024). ArXiv:2406.12220.

Ota, T. & Taki, M. imixer: hierarchical hopfield network implies an invertible, implicit and iterative mlp-mixer. https://doi.org/10.48550/arXiv.2304.13061 (2024). ArXiv:2304.13061.

Yang, E. et al. Model merging in llms, mllms, and beyond: Methods, theories, applications and opportunities. https://doi.org/10.48550/arXiv.2408.07666 (2024). ArXiv:2408.07666.

Hu, E. J. et al. Lora: Low-rank adaptation of large language models. https://doi.org/10.48550/arXiv.2106.09685 (2021). ArXiv:2106.09685.

Acknowledgements

The authors thank Katherine M. Nelson, Ph.D., for reviewing and commenting on drafts of the manuscript. This work was partly supported by grants from the University of Delaware Graduate College through the Unidel Distinguished Graduate Scholar Award (LH), the National Institutes of Health R01HL133163 (JPG), R01HL145147 (JPG), R01DK132090 (BBK), and Indiana University (BBK). Figures created in https://BioRender.com.

Author information

Authors and Affiliations

Contributions

Conceptualization (RK, AP, BBK, LH, JPG), Co-citation Methodology (RK, AP, BBK), MoE Methodology (LH, JPG), Data Curation (RK, AP, BBK), Investigation (LH, RK, AP, BBK), Formal Analysis (LH, RK, AP, JPG, BBK), Writing - Original Draft (LH, RK, AP, BBK), Writing - Review & Editing (LH, JPG, BBK), Supervision (JPG, BBK), Project Administration (JPG, BBK), Funding acquisition (LH, JPG, BBK).

Corresponding authors

Ethics declarations

Conflict of interest

LH and JPG are co-founders of and have an equity stake in Synthyra, PBLLC.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hallee, L., Kapur, R., Patel, A. et al. Contrastive learning and mixture of experts enables precise vector embeddings in biological databases. Sci Rep 15, 14953 (2025). https://doi.org/10.1038/s41598-025-98185-8

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-98185-8