Abstract

The burgeoning application of Large Language Models (LLMs) in Natural Language Processing (NLP) has prompted scrutiny of their domain-specific knowledge processing, especially in the construction industry. Despite high demand, there is a scarcity of evaluative studies for LLMs in this area. This paper introduces the “Arch-Eval” framework, a comprehensive tool for assessing LLMs across the architectural domain, encompassing design, engineering, and planning knowledge. It employs a standardized dataset with a minimum of 875 questions tested over seven iterations to ensure reliable assessment outcomes. Through experiments using the “Arch-Eval” framework on 14 different LLMs, we evaluated two key metrics: Stability (to quantify the consistency of LLM responses under random variations) and Accuracy (the correctness of the information provided by LLMs). The results reveal significant differences in the performance of these models in the domain of architectural knowledge question-answering. Our findings show that the average accuracy difference between Chain-of-Thought (COT) evaluation and Answer-Only (AO) evaluation is less than 3%, but the response time for COT is significantly longer, extending to 26 times that of AO (62.23 seconds per question vs. 2.38 seconds per question). Advancing LLM utility in construction necessitates future research focusing on domain customization, reasoning enhancement, and multimodal interaction improvements.


Introduction

Artificial intelligence has significantly transformed NLP (Natural Language Processing)1, largely due to the emergence of Large Language Models (LLMs). These models have opened new horizons for intelligent support in professional fields, including architecture. Originating with BERT2, LLMs have made significant strides in diverse language tasks, from text classification to machine translation, by pre-training on vast textual datasets to capture nuanced linguistic patterns. Models such as XLNet3 and RoBERTa4 further enhanced comprehension capabilities, and the progression culminated in 2023 with GPT-45. This model is notable for its multimodal capabilities: it can process both images and text, and it set a new standard by achieving near-human performance on specialized benchmarks. These developments underscore LLMs’ potential in natural language understanding and generation, as well as their expanding role in handling complex, multimodal challenges, signaling a paradigm shift in AI-assisted professional services6.

With the advances in LLM technology, its application in the architectural field has been drawing the attention of related professionals, particularly for automated document generation and regulatory text parsing7,8. A review has summarized the integration of NLP in construction, highlighting its utility in documentation, safety management, Building Information Modeling (BIM) automation, and risk assessment9. The performance of NLP tasks was enhanced by the creation of a specialized corpus and the fine-tuning of models10, providing innovative approaches for document automation and regulatory interpretation. An extensive analysis has been conducted on the application of TM/NLP in construction management, highlighting its crucial role in the advancement of construction automation11. Beyond textual analysis, the application of LLMs has extended to image processing and architectural style analysis. Text-to-image technology has been used to integrate architects’ personal styles and a diverse range of architectural styles into architectural visualization, which has significantly enhanced the efficiency and personalization of the design process12. Despite these contributions, the application of LLMs in construction remains in its infancy, with existing research not fully addressing the practical deployment challenges. This presents a clear avenue for future research to enhance the precision and dependability of LLMs in construction and to overcome technical hurdles related to industry-specific demands.

Despite the new opportunities for intelligent assistance that LLMs bring to various professional fields, the complexity and specialized nature of architecture present unique challenges for their application. Traditionally, architectural design and construction processes rely on architects’ profound expertise and practical experience, which are guided by extensive theoretical knowledge and accumulated practice. The vast and intricate knowledge system of architecture, encompassing numerous regulations, standards, and design principles, increases the difficulty for LLMs to understand and apply this knowledge effectively. Therefore, establishing a framework is crucial for ensuring the effective application of LLMs in architectural research. Utilizing such a framework facilitates a comprehensive assessment of LLMs’ capabilities in knowledge comprehension and inferential reasoning specific to the architectural domain. It enables the identification of the models’ applicative potential, the precise identification of their shortcomings, and offers a foundation for informing the trajectory of subsequent model development and educational paradigms.

Related work

An examination of academic databases, including Google Scholar, Scopus, and CNKI, indicates a rapid progression in the deployment of LLMs within the discipline of NLP. However, the application of LLMs within the architectural sector has yet to be thoroughly investigated. In the NLP domain, a series of standardized benchmarks has been proposed to serve as critical instruments for the holistic assessment of language models’ competencies across diverse tasks.

The GLUE13 benchmark exemplifies this, offering a multi-task assessment platform that evaluates models on a range of Natural Language Understanding (NLU) tasks such as sentiment analysis and natural language inference, thereby assessing their linguistic competence. The subsequent iteration, SuperGLUE14, elevated the benchmark’s rigor, fostering model advancement in sophisticated reasoning and interpretative capabilities through the introduction of more arduous tasks. Furthermore, the XNLI15 benchmark contributed a cohesive evaluative framework for cross-lingual model performance, covering 15 languages, which is instrumental for the development of globally adaptive NLP systems. These benchmarks collectively drive the evolution of NLP models in multi-task learning, intricate reasoning, and multilingual comprehension, underscoring their scientific significance and utility in the field.

In the domain of Chinese NLP, well-regarded evaluation benchmarks such as the CLUE benchmark16 and the C-Eval benchmark17 have been established. The CLUE benchmark encompasses a spectrum of tasks including text categorization and named entity recognition, which serve to rigorously evaluate the models’ linguistic comprehension capabilities. C-Eval expands upon this by constructing a multi-tiered, interdisciplinary framework that assesses a variety of linguistic competencies ranging from foundational to sophisticated tasks, such as idiomatic expression understanding and comprehensive reading assessment. Hence, it offers a nuanced lens through which to scrutinize the performance of LLMs. Although these benchmarks provide a comprehensive comparative evaluation of models’ processing capabilities within the Chinese linguistic sphere, there is still a discernible gap in the in-depth examination of domain-specific knowledge and in the assessment of models’ generalization capabilities in real-world deployment contexts.

Benchmarks for domain-specific LLM assessment have been introduced sequentially, each tailored to evaluate expertise within particular fields. For instance, FinEval18, which targets financial knowledge assessment, employs a multidisciplinary approach through multiple-choice questions that span finance, economics, and accounting to effectively gauge LLMs’ domain-specific proficiency. The CBLUE benchmarks19 concentrated on language understanding tasks within the biomedical domain, encompassing named entity recognition and information extraction. Meanwhile, the AGIEval benchmark20 evaluated model performance on standardized human-centric tests, such as college entrance exams and professional licensing exams, which are indicative of general cognitive abilities.

The collective development of these benchmarks has significantly advanced the research and practical application of LLM technology. The methodologies, though insightful, may not be directly transferable to architecture, due to the unique specializations and complex factors involved in the field. Architectural assessment necessitates a multifaceted evaluation that captures LLMs’ capabilities in mathematical computation, design concept interpretation, and historical analysis, among other skills. This calls for bespoke assessment frameworks and metrics that are specifically calibrated to the architectural domain’s specific requirements. In conclusion, despite the notable progress of LLMs within the domain of NLP and their incipient impact in select professional spheres, their deployment and assessment within the architectural discipline remain in the early phases. Therefore, the establishment of an evaluative benchmark specifically for LLMs in architecture is imperative for advancing both the scholarly inquiry and practical integration of LLMs in the field of architectural studies.

Approach

Overview

Existing benchmarks for knowledge assessment of LLMs are notable for their diversity, complexity, and standardization, but they have limitations such as domain-specificity, dataset bias, and selection of assessment metrics. The unique assessment needs of LLMs in the construction domain require us to focus not only on the generic performance of the models, but also to gain a deeper understanding of their application potential and practical effectiveness in specific domains. Therefore, the principles for constructing an LLM assessment system oriented towards the architectural profession are given as follows:

1. Domain specialization and comprehensiveness in architectural assessment: The evaluation framework must be meticulously crafted to address the unique demands and expertise inherent to the architectural domain, ensuring that the metrics and methodologies employed are intrinsically aligned with the professional standards of architecture. Concurrently, the system should aspire to encompass a holistic view of the critical knowledge domains within architectural studies.

2. Data set authority: The assessment system must consider the practical use of building models in real construction scenarios to ensure that the indicators are meaningful and applicable in actual practice.

3. Architectural practice applicability: The assessment system must be closely aligned with the actual application needs of LLM in architectural research, ensuring that the assessment indicators are significant and effectively reflect the models’ effectiveness and potential for practical application.

Based on the requirements of the above three principles, the research methodology is divided into three parts: Knowledge System Construction, Preliminary Experiment, and Formal Experiment (Figure 1). The construction of the architectural knowledge system provides a basis for classifying, collecting, and organizing the dataset; the questions are designed to simulate real-world architectural and planning problems and are closely related to actual work needs. The Preliminary Experiment is used to confirm the size of the test set for a single test and the number of tests for a single LLM, balancing test accuracy against cost-effectiveness. The Formal Experiment phase addresses two assessment objectives, ‘stability’ and ‘accuracy’, and uses two assessment methods, Answer-only (AO) Evaluation and Chain-of-thought (COT) Evaluation, to assess the comprehensive capabilities of LLMs.

Architectural knowledge framework for evaluation

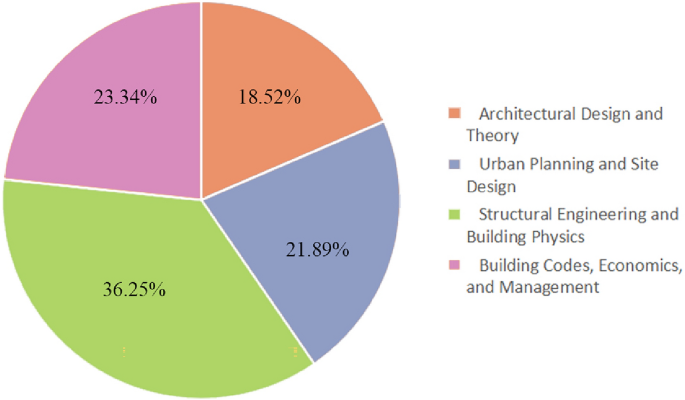

In this section, a comprehensive architectural knowledge system is proposed with four core domains: Architectural Design and Theory; Structural Engineering and Building Physics; Architectural Regulations, Economic and Business Management; and Urban Planning and Site Design. The system encompasses a broad spectrum of architectural disciplines including architectural design, urban and rural planning, structural engineering, and environmental science, and further extends to a diverse array of architectural technologies spanning from antiquity to the modern era.

(1) Architectural design and theory. The Architectural Design and Theory area includes the core courses in architecture such as architectural style, planning principles, environmental adaptability, and sustainable design. These courses are essential to the understanding and practice of architectural design, covering everything from the aesthetic principles of architectural color and interior design, to the safety requirements for building fire prevention and various design codes, to the practical considerations of residential and public building design. Such a broad and in-depth knowledge system requires LLMs to possess an understanding of the deep theoretical foundations of the architectural discipline as well as an accurate grasp of the details of practical applications.

(2) Structural engineering and building physics. This domain, centered on the structural integrity and environmental interaction of buildings, evaluates LLMs’ grasp of structural engineering, materials science, architectural physics, and environmental engineering principles. It aims to assess the LLMs’ ability to address structural engineering and architectural physics challenges, thereby offering informed decision support to architectural professionals during the design, construction, and maintenance phases. The model’s capabilities are scrutinized in explaining foundational design principles, analyzing seismic resilience strategies, selecting suitable structural systems for diverse building requirements, optimizing daylighting and artificial lighting, managing building water and fire safety systems, devising energy-efficient electrical systems, and ensuring that HVAC systems perform well in both energy efficiency and occupant comfort. Additionally, it evaluates thermal and acoustic performance control within the built environment.

(3) Building codes, economics, and management. This category encompasses legal regulations, professional ethics, project management, and practical operations within the construction industry. It evaluates compliance, project management proficiency, and hands-on skills in architectural practice. Through the assessment of these representative subjects, a comprehensive understanding of LLMs’ applicability in the realms of architectural regulations and economic and business management can be achieved. LLMs are expected to accurately interpret the standard processes of construction work execution and acceptance, grasp the composition and control methods of basic construction costs, understand the key steps in real estate development, adhere to the regulations of construction engineering supervision, have a thorough understanding of the relevant laws and regulations governing engineering survey and design, and understand the regulatory requirements of urban and rural planning. Such capabilities provide robust support to architectural professionals in adhering to legal regulations, conducting economic benefit analyses, and making business management decisions.

(4) Urban planning and site design. Urban Planning and Site Design is a pivotal subfield within architecture and urban planning, dedicated to the creation of functional, sustainable, and aesthetic urban spaces. This category encompasses a range of disciplines related to urban development, ecological conservation, infrastructure construction, and site planning, aimed at evaluating LLMs’ comprehensive understanding and application of urban planning principles, environmental integration, and site design skills. The key disciplines include urban sociology, urban ecology, and municipal public utilities, which explore the social functions and ecological impacts of urban spaces, as well as how to improve the quality of life for urban residents through efficient municipal facilities. The assessment provides a thorough understanding of the LLMs’ capabilities in urban planning and site design, offering scientific and rational decision support for urban planning and site design professionals.

A comprehensive assessment of LLMs’ competencies in the field of architecture research is facilitated by the classifications mentioned above. Subjects within each category are strategically chosen for their representativeness, ensuring a wide-ranging and in-depth evaluation (Figure 2). The taxonomy elucidates the model’s strengths and weaknesses in different architectural fields and guides the trajectory of future research and development endeavors.

Preliminary experiment

The purpose of the preliminary experimental phase is to explore the fundamental performance of LLMs in the field of research on architecture and to determine the appropriate size of the test set. The phase comprises two sub-studies:

- Preliminary experiment ①: This sub-study assesses the performance of LLMs on identical questions. Two distinct deployment modes are involved: a locally deployed model (LLM1) and an online model (LLM2). A test set (Dataset 1) of 500 questions is randomly selected from the total pool, and each model is queried with it seven times to compare the stability of the responses (i.e., whether the answers given on multiple occasions are consistent).

- Preliminary experiment ②: The objective is to ascertain the optimal size of the test set to economically evaluate the performance of LLMs. Test sets of five sizes (100, 200, 500, 1000, and 1500 questions) are established, labeled Dataset 2 through Dataset 6. To reduce randomness in responses, each set size is replicated seven times (for example, there are seven test sets of 100 questions each, named Dataset 2-1, Dataset 2-2, ..., Dataset 2-7). Subsequently, the sample variance of the accuracy rates across the seven replicates of each size is assessed to determine an efficient and stable test set size.
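The procedure in Preliminary experiment ② can be sketched as follows. This is a minimal illustration rather than the authors' code: the function names `accuracy` and `variance_by_size`, the dictionary layout (question id mapped to an answer letter), and the fixed random seed are all assumptions introduced here.

```python
import random
import statistics


def accuracy(answers, key, question_ids):
    """Fraction of the given questions that the model answered correctly."""
    correct = sum(1 for q in question_ids if answers[q] == key[q])
    return correct / len(question_ids)


def variance_by_size(answers, key, sizes, replicates=7, seed=0):
    """Sample variance of accuracy across replicate test sets, for each size.

    answers: hypothetical dict mapping question id -> model's answer letter
    key:     hypothetical dict mapping question id -> correct answer letter
    """
    rng = random.Random(seed)
    pool = sorted(key)
    result = {}
    for size in sizes:
        # Draw `replicates` independent test sets of this size from the pool.
        accs = [
            accuracy(answers, key, rng.sample(pool, size))
            for _ in range(replicates)
        ]
        # statistics.variance uses the n - 1 (sample) denominator.
        result[size] = statistics.variance(accs)
    return result
```

Plotting the returned variances against test set size gives the kind of curve from which a stable size can be read off. Here the model's answers are looked up from a stored dictionary; in practice each replicate would require querying the model.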

Main experiment

In the formal experiment, two objectives for evaluating LLMs were defined: stability and accuracy.

- Stability: It is imperative that the LLM delivers uniform responses to the same query multiple times, signifying a consistent and predictable comprehension of specific inputs by the model. This attribute is essential for mitigating discrepancies arising from stochastic elements or model variability, especially within the domain of architecture, which demands accurate information. Stability is examined by drawing new test sets of the optimal size established in Preliminary Experiment ② (referred to as Dataset 8-1 through Dataset 8-7). On these test sets, the consistency of each LLM’s responses is gauged through the sample variance of accuracy rates, thus quantifying how steady the model’s answer reliability is.

- Accuracy: The precision of information is paramount for reducing the risks stemming from misinformation. In this paper, two distinct questioning methodologies of AO and COT are proposed to assess the LLMs. The average correctness of responses garnered from test sets with diverse content is used as a standard to provide an equitable metric for gauging the proficiency of the model’s replies.
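The two objectives can be restated compactly. The notation below is introduced here for illustration and is not taken from the source: \(a_i\) denotes a model's accuracy on the \(i\)-th test set and \(n\) the number of test sets (seven in this study). The sketch assumes the usual unbiased estimator with an \(n-1\) denominator, since the text names the sample variance without spelling out the formula.

\[
\bar{a} = \frac{1}{n}\sum_{i=1}^{n} a_i ,
\qquad
s^2 = \frac{1}{n-1}\sum_{i=1}^{n} \left(a_i - \bar{a}\right)^2 .
\]

Under this reading, \(\bar{a}\) is the accuracy score and \(s^2\) is the stability score, with a smaller \(s^2\) indicating a more stable model.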

Answer-only (AO) evaluation

The AO Evaluation is designed to evaluate the proficiency of the LLM in directly responding to questions (Figure 3), with a specific focus on the accuracy and efficiency of the model in dispensing specialized knowledge pertaining to architectural design, history, structural engineering, and environmental adaptability. The approach examines the model’s grasp of technical jargon and concepts, as well as its proficiency in providing clear and swift answers in practice. The AO Evaluation is valuable in gauging and enhancing the applicability of the model within the realms of architectural practice and theoretical inquiry. It serves to validate the model’s capability to deliver responses that align with professional benchmarks and to ensure a precise understanding and application of architectural concepts and terminology.

Chain-of-thought (COT) evaluation

The COT Evaluation requires that the model not only articulates the answer but also presents the inferential trajectory from question to response (Figure 4), including a comprehensive dissection of architectural design principles, structural engineering challenges, and environmental adaptability tactics. The evaluation paradigm demonstrates the cognitive processes and reasoning sequences of the model when confronted with intricate architectural inquiries, which can facilitate an assessment of its intellectual capacity and the efficacy of its strategic approaches within the field of architecture.
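To make the contrast between the two questioning modes concrete, the sketch below builds an AO prompt and a COT prompt for one single-choice question and extracts the chosen option from a reply. The prompt wording, the `item` layout, and the `Answer:` convention are all hypothetical; the source does not reproduce the prompts actually used (they are referenced only through Figures 3 and 4).

```python
import re

# Hypothetical prompt templates; the wording is an assumption for illustration.
AO_TEMPLATE = (
    "The following is a single-choice question on architecture.\n"
    "{question}\nA. {A}\nB. {B}\nC. {C}\nD. {D}\n"
    "Reply with the letter of the correct option only."
)
COT_TEMPLATE = (
    "The following is a single-choice question on architecture.\n"
    "{question}\nA. {A}\nB. {B}\nC. {C}\nD. {D}\n"
    "Reason step by step, then finish with a line 'Answer: <letter>'."
)


def build_prompt(item, mode):
    """Format one question for Answer-only ("AO") or Chain-of-thought ("COT")."""
    if mode not in ("AO", "COT"):
        raise ValueError("mode must be 'AO' or 'COT'")
    template = AO_TEMPLATE if mode == "AO" else COT_TEMPLATE
    return template.format(question=item["question"], **item["options"])


def extract_choice(reply, mode):
    """Return the chosen option letter, or None if none can be found."""
    if mode == "COT":
        # Use the last 'Answer: X' line so intermediate reasoning is ignored.
        found = re.findall(r"Answer:\s*([ABCD])", reply)
        return found[-1] if found else None
    match = re.search(r"\b([ABCD])\b", reply)
    return match.group(1) if match else None
```

The AO extraction here is deliberately naive (it takes the first standalone capital letter A to D), so real replies would likely need stricter parsing.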

In addition to the standard experimental protocol, several models that performed best among those released by different development teams are selected and tested with the Chain-of-Thought (COT) methodology. The accuracy rates of the COT and Answer-only (AO) approaches are then compared, and the difference in mean accuracy between the two approaches is presented graphically. To quantitatively evaluate the influence of AO and COT on the LLM’s response efficacy, a paired sample t-test is applied to test whether the observed differences in mean accuracy are statistically significant.
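A paired sample t-test of this kind can be sketched with the standard library alone. The function names and the way accuracies are paired (one AO and one COT accuracy per test set) are assumptions made for illustration; the critical values are the standard two-sided Student's t values at the 0.05 level for small degrees of freedom.

```python
import math
import statistics

# Two-sided critical values of Student's t at alpha = 0.05, keyed by
# degrees of freedom (standard table values, listed for df 1 to 10 only).
T_CRIT_05 = {
    1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
    6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228,
}


def paired_t(ao, cot):
    """Return (t statistic, degrees of freedom) for paired accuracy lists."""
    if len(ao) != len(cot) or len(ao) < 2:
        raise ValueError("need two equally long lists with at least 2 pairs")
    diffs = [c - a for a, c in zip(ao, cot)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


def significant_at_05(t, df):
    """True if |t| exceeds the two-sided 0.05 critical value for df."""
    return abs(t) > T_CRIT_05[df]
```

Comparing against a critical value is equivalent to checking whether the p-value falls below 0.05; a statistics package would report the p-value directly.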

Results

Data collection and organization

The core of dataset construction is the authority and practicality of its content. Hence, the official examination questions released through public channels for the registered architects and registered planners’ examinations are primarily collected. The question bank includes a collection of actual examination questions from previous years and a selection of high-quality mock questions to cover the core knowledge points of professional qualification certification. The entire test set comprises 10,440 questions, all of which are single-choice questions with only one correct answer among four options. Additionally, paper-based examination papers and practice questions from universities are also obtained, which have been meticulously compiled by experienced teachers, ensuring the precision and widespread recognition of the questions and answers. Since these resources are typically not publicly available online, they are not easily accessible to web crawlers and provide our dataset with unique value and depth.

To ensure the scientific rigor and comprehensiveness of the test set, the sampling strategy strictly follows the distribution ratio of questions across various subjects in the entire question bank (Figure 5). This approach is designed to ensure that the test set fully covers and represents the entire knowledge system, thereby providing an accurate and balanced data foundation for assessing the performance of LLMs.
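Sampling that follows the subject distribution of the question bank can be sketched as proportional stratified sampling. The function name `stratified_sample`, the `subject` field, and the largest-remainder rounding rule are assumptions introduced here; the source states only that the sampling follows the distribution ratio across subjects.

```python
import random
from collections import defaultdict


def stratified_sample(questions, size, seed=0):
    """Draw `size` questions so each subject keeps its share of the bank.

    questions: hypothetical list of dicts, each with a "subject" field.
    Rounding uses the largest-remainder rule (an assumption of this sketch).
    """
    if size > len(questions):
        raise ValueError("sample size exceeds the question bank")
    rng = random.Random(seed)
    by_subject = defaultdict(list)
    for q in questions:
        by_subject[q["subject"]].append(q)

    total = len(questions)
    quotas = {s: size * len(qs) / total for s, qs in by_subject.items()}
    counts = {s: int(quota) for s, quota in quotas.items()}

    # Hand the remaining slots to the subjects with the largest fractions.
    leftover = size - sum(counts.values())
    by_fraction = sorted(quotas, key=lambda s: quotas[s] - counts[s], reverse=True)
    for s in by_fraction[:leftover]:
        counts[s] += 1

    sample = []
    for s, qs in by_subject.items():
        sample.extend(rng.sample(qs, counts[s]))
    return sample
```

For example, a bank that is 60% design questions yields a test set that is approximately 60% design questions, whatever the requested size.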

Models

To investigate the latest applications and advancements of LLMs in the Chinese architectural knowledge domain, a comprehensive evaluation of 14 high-performance LLMs that support Chinese input was conducted (Table 1). The selection of these models was based on their widespread use and high frequency in the Chinese domain, as well as the support from professional development and operation teams behind them. This not only ensures the authority and professionalism of the models but also guarantees their potential for future continuous development and optimization.

Preliminary experimental results

All experiments in this paper were conducted in the following configured environment, with the relevant configurations and their parameters shown in Table 2.

Preliminary experiment ①

Preliminary Experiment ① selected two LLMs, Qwen-14B-Chat and GPT-3.5-turbo, for testing. Qwen-14B-Chat is a locally deployed LLM, while GPT-3.5-turbo is an online LLM, representing two different deployment methods of LLMs. A test set (Dataset 1) of 500 randomly sampled questions was drawn from the total pool. Both models were asked the same questions 7 times, using the AO method to streamline the questioning process and reduce the time for question and answer. The experimental results (Figure 6) indicate that Qwen-14B-Chat’s answers were completely consistent across the 7 attempts, whereas GPT-3.5-turbo gave different responses. GPT-3.5-turbo’s overall output was nevertheless essentially stable across the 7 responses, with accuracy differences \(\le 0.8\%\). There was no clear linear relationship between the rounds and accuracy, meaning that the accuracy of GPT-3.5-turbo did not significantly improve during the testing period. The reason might be that the online model GPT-3.5-turbo employs unsupervised learning techniques to adjust its parameters, leading to different outputs at different times. As a locally deployed model, Qwen-14B-Chat likely uses the same environment and parameter settings each time it runs, resulting in relatively stable output.
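The two quantities discussed above, whether repeated answers are identical and how far accuracy moves between rounds, can be computed as in the following sketch. The function name `consistency_report` and the data layout (one dictionary of answers per round) are assumptions made for illustration.

```python
def consistency_report(runs, key):
    """Summarise how consistent repeated runs on the same questions are.

    runs: hypothetical list of dicts (one per round), question id -> answer
    key:  hypothetical dict, question id -> correct answer
    """
    question_ids = list(key)
    # A question counts as consistent if every round gave the same answer.
    identical = sum(
        1 for q in question_ids if len({run[q] for run in runs}) == 1
    )
    accuracies = [
        sum(run[q] == key[q] for q in question_ids) / len(question_ids)
        for run in runs
    ]
    return {
        "identical_fraction": identical / len(question_ids),
        "accuracy_spread": max(accuracies) - min(accuracies),
    }
```

In these terms, a fully consistent model has an `identical_fraction` of 1.0, and the reported GPT-3.5-turbo behaviour corresponds to an `accuracy_spread` of at most 0.008.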

Preliminary experiment ②

Preliminary Experiment ② aimed to determine a test set size that keeps results stable without compromising the accuracy and representativeness of the test results, so that the number of questions can be reduced to save time in subsequent experiments. In this section, we methodically increased the test set size, starting with 100 questions and increasing to 200, 500, 1000, and ultimately 1500 questions, to monitor the sample variance of accuracy.

The test results are shown in Figure 7. The sample variance of the model’s accuracy stabilizes and remains at a low level once the test set size reaches approximately 875 questions. This finding suggests that the influence of test set size on the model’s evaluation outcomes is minimized at 875 questions, thus ensuring the stability and reliability of the assessment. Hence, the test set size is set to 875 questions for all subsequent experiments.

Main experiment results

Stability assessment of LLMs

To assess the stability of LLMs, we evaluated each model on test sets of the size determined in the preceding preliminary experiments. Stability was quantified by calculating the sample variance of the test results. The magnitude of the sample variance reflects the fluctuation in the LLM’s performance across different test sets: a larger variance indicates greater fluctuation in the LLM’s test results and thus lower stability, whereas a smaller variance implies more consistent test outcomes and thus higher stability.

According to the experimental data in Figure 8, the online model GPT-4-turbo exhibited the highest overall stability, with a sample variance of 0.000060. Chatglm2-6B and Qwen1.5-7B-Chat were next in line, also demonstrating commendable stability, with sample variances of 0.000068 and 0.000081, respectively. Other models, including Qwen-7B-Chat and Baichuan2-7B-Chat, also displayed good stability in certain specialized domains.

The charts further delineated model stability across different subjects: in the subjects of ‘Design’ and ‘Urban Planning’, the GPT-3.5-turbo, Qwen-14B-Chat, and Baichuan2-7B-Chat models had relatively low sample variances, suggesting more stable performance. In the subjects of ‘Management’ and ‘Structure’, the Qwen-7B-Chat, Baichuan2-7B-Chat, and Qwen1.5-14B-Chat models exhibited lower sample variances, signifying superior stability within these disciplines.

Accuracy assessment of LLMs

For the accuracy assessment of LLMs, the mean accuracy rates derived from the stability assessment were used as the basis for evaluation. The results indicate that the Qwen1.5-14B-Chat model particularly excelled in terms of accuracy, surpassing the previously leading GPT-4-turbo. Moreover, other models in the ‘Qwen’ series also demonstrated remarkable performance (Figure 9).

It is particularly noteworthy that in the domain of Building Codes, Economics, and Management, all assessed models generally exhibited a high level of accuracy, suggesting that LLMs already possess relatively mature application potential in this area. In the subject of Design, however, the models did not reach the average level of performance they achieved in other areas, and this gap was particularly prominent for the top-ranking models. This not only reveals the potential limitations of LLMs in this field but also provides an important reference for future model optimization and fine-tuning.

Comparison of AO and COT

In research on prompting methods for LLMs, special attention has been paid to the impact of prompt variations on obtaining the desired answers. To gain an in-depth understanding of the phenomenon, we selected several models from different development teams that had shown outstanding performance and tested them using the Chain of Thought (COT) answering approach. Subsequently, we compared the accuracy rates of COT with those of Answer Only (AO). By calculating the difference in the mean accuracy rates between the two answering methods, we visualized the discrepancy in the results and employed hypothesis testing for an objective statistical validation.

The difference in output accuracy rates between COT (Chain of Thought) and AO (Answer Only) is depicted in Figure 10. Among the 5 tested LLMs, AO outperformed COT in 4. Across the individual domains, COT outperformed AO in only 5 of the 20 sub-tests. This indicates that the COT answering method is not necessarily superior to AO, in contrast to the widespread belief that COT is more accurate than AO.

In addition, the different answering methods did affect the LLMs’ outputs. The difference in the mean accuracy rates between the two answering methods was smaller than 3%, but whether this impact was positive or negative was unclear. To verify this further, we took as the null hypothesis that there is no significant difference in the accuracy rates of LLMs when answering in AO and COT modes (Figure 11). Paired sample t-tests were performed on the sample data, with a p-value threshold of 0.05. The test results indicated that, in most cases, there was no statistically significant difference between the results obtained by the COT and AO answering methods.

During the experiments, we also observed that the models required significantly more time to respond using the COT approach than using the AO method. Specifically, for the Qwen1.5-14B-Chat model, the mean response time for AO was 2.38 seconds per question, whereas for COT it was 62.23 seconds per question. This finding underscores the importance of incorporating response time as a critical metric alongside accuracy when evaluating the efficiency of LLMs.
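As a rough worked illustration of what these response times imply, the arithmetic below assumes that questions are answered one after another and that the mean per-question times reported for Qwen1.5-14B-Chat hold across a full test set of 875 questions. Neither assumption is stated in the source for this purpose.

\[
\frac{62.23}{2.38} \approx 26.1,
\qquad
875 \times 2.38\ \text{s} = 2082.5\ \text{s} \approx 34.7\ \text{min},
\qquad
875 \times 62.23\ \text{s} = 54451.25\ \text{s} \approx 15.1\ \text{h}.
\]

Under these assumptions, a single pass over one test set takes about half an hour with AO and more than fifteen hours with COT.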

This study acknowledges the existing challenges in balancing reasoning path length and computational efficiency within current COT methodologies. Recent studies have demonstrated the feasibility of streamlining COT processes through approaches such as dynamic pruning of redundant reasoning steps (COT-Influx)21 and the implementation of iterative optimization preference mechanisms22. Building upon these advancements, our future work will focus on developing lightweight reasoning path generation strategies for COT, aiming to enhance comprehensive performance in practical applications while maintaining computational efficiency.

Conclusion

Through a systematic approach that combined the synthesis of architectural knowledge with preliminary and formal experiments, this research formulated an evaluation framework for gauging the efficacy of LLMs within the architectural domain. The conclusions drawn from this study are as follows:

(1) We first summarized the components of the architectural knowledge system, establishing a solid foundation for constructing the test set. Based on preliminary experiments designed to ensure the stability and reliability of the evaluation, we then determined the minimum test set size for a single evaluation to be 875 questions, tested over seven iterations. In the formal experiments, we assessed LLMs on two key metrics, stability and accuracy, measured by the sample variance and the mean of the accuracy rates, respectively.

(2) We conducted an in-depth evaluation of 14 large language models widely used in the Chinese architectural domain. The study revealed significant differences in the generalization capabilities of language models across subfields of architecture. In Building Codes, Economics, and Management, all models achieved higher accuracy rates, which may suggest that they have undergone more in-depth training or optimization in these domains. Conversely, in architectural design and theory, even the higher-performing models exhibited below-average accuracy rates, implying that even advanced language models require domain-specific fine-tuning to improve precision.

(3) The experiments also explored the impact of two evaluation methods, Answer-Only (AO) and Chain-of-Thought (COT), on model output. Although COT can expose the model’s reasoning process, there was no significant difference in the accuracy of architectural knowledge responses between the AO and COT methods among the 14 LLMs tested, and COT took considerably longer.
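The two metrics named in conclusion (1) can be illustrated with a short sketch, assuming accuracy is taken as the mean and stability as the sample variance of a model's accuracy rates over repeated runs. The seven run values are hypothetical and the function name `summarize` is an assumption; neither comes from the paper.

```python
import statistics

# Hypothetical accuracy rates (%) of one model over seven evaluation runs.
# Invented for illustration; not taken from the paper's results.
accuracy_runs = [61.2, 60.8, 62.1, 61.5, 60.9, 61.7, 61.3]


def summarize(runs):
    """Return (accuracy, stability) as the mean and sample variance of the runs."""
    if len(runs) < 2:
        raise ValueError("sample variance needs at least two runs")
    return statistics.mean(runs), statistics.variance(runs)


accuracy, stability = summarize(accuracy_runs)
print(f"accuracy (mean): {accuracy:.2f}%")
print(f"stability (sample variance): {stability:.3f}")
```

Under this reading, a lower sample variance indicates a more stable model, so two models with the same mean accuracy can still be ranked by how consistently they reach it.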

Through in-depth research and exploration, we anticipate more profound applications of LLMs in the architecture field. However, this study has limitations in the refinement of dataset annotation, particularly the incomplete labeling of problems as quantitative or qualitative, which may affect the comparative evaluation of the AO and COT methods. Architectural problems are inherently hybrid, mixing description and numbers, and the boundary between conceptual analysis (e.g., design philosophy evaluation) and computational tasks (e.g., structural simulation) often blurs. Future investigations will therefore need to develop a collaborative annotation framework with architectural engineers to establish scientifically rigorous classification protocols. Future research should also focus on fine-tuning models with domain-specific datasets from the architecture sector. These efforts will continuously enhance the model’s task comprehension and output stability in specialized scenarios such as architectural plan generation, engineering parameter calculation, and construction progress scheduling.

In addition, future work will explore strategies to optimize the COT method to reduce response time while improving accuracy. A hybrid evaluation approach that combines quantitative and qualitative evaluations will provide a more comprehensive perspective on the performance of LLMs. The integration of multimodal data is also noteworthy, as the fusion of visual, spatial, and textual information within LLMs will significantly enhance the model’s ability to handle complex tasks. For example, combining images with text in design visualization, or integrating spatial layouts with engineering parameters in project planning, can provide more efficient and accurate intelligent support for the architecture field. This increased domain adaptability will enable large language models to become truly trusted intelligent collaborators for architecture professionals, injecting innovative momentum into the construction industry and ultimately creating greater social value.

Data availability

The data that support the findings of this study are available from the corresponding author, Shimin Li, upon reasonable request.

References

Bubeck, S. et al. Sparks of artificial general intelligence: Early experiments with GPT-4. arXiv preprint arXiv:2303.12712 (2023).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018).

Yang, Z. et al. XLNet: Generalized autoregressive pretraining for language understanding. Advances in Neural Information Processing Systems 32 (2019).

Liu, Z., Lin, W., Shi, Y. & Zhao, J. A robustly optimized BERT pre-training approach with post-training. In China National Conference on Chinese Computational Linguistics. 471–484 (Springer).

Achiam, J. et al. GPT-4 technical report. arXiv preprint arXiv:2303.08774 (2023).

Naveed, H. et al. A comprehensive overview of large language models. arXiv preprint arXiv:2307.06435 (2023).

Olender, M. L., de la Torre Hernández, J. M., Athanasiou, L. S., Nezami, F. R. & Edelman, E. R. Artificial intelligence to generate medical images: augmenting the cardiologist’s visual clinical workflow. Eur. Heart J. Digit. Health 2, 539–544. https://doi.org/10.1093/ehjdh/ztab052 (2021).

Jo, H., Lee, J.-K., Lee, Y.-C. & Choo, S. Generative artificial intelligence and building design: early photorealistic render visualization of façades using local identity-trained models. J. Comput. Des. Eng. 11, 85–105 (2024).

Ding, Y., Ma, J. & Luo, X. Applications of natural language processing in construction. Autom. Constr. 136, 104169 (2022).

Zheng, Z., Lu, X.-Z., Chen, K.-Y., Zhou, Y.-C. & Lin, J.-R. Pretrained domain-specific language model for natural language processing tasks in the AEC domain. Computers in Industry 142, 103733 (2022).

Shamshiri, A., Ryu, K. R. & Park, J. Y. Text mining and natural language processing in construction. Autom. Constr. 158, 105200 (2024).

Lee, J.-K., Yoo, Y. & Cha, S. H. Generative early architectural visualizations: Incorporating architect’s style-trained models. J. Comput. Des. Eng. https://doi.org/10.1093/jcde/qwae065 (2024).

Wang, A. et al. GLUE: A multi-task benchmark and analysis platform for natural language understanding. arXiv preprint arXiv:1804.07461 (2018).

Sarlin, P.-E., DeTone, D., Malisiewicz, T. & Rabinovich, A. SuperGlue: Learning feature matching with graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4938–4947.

Conneau, A. et al. XNLI: Evaluating cross-lingual sentence representations. arXiv preprint arXiv:1809.05053 (2018).

Xu, L. et al. CLUE: A Chinese language understanding evaluation benchmark. arXiv preprint arXiv:2004.05986 (2020).

Huang, Y. et al. C-Eval: A multi-level multi-discipline Chinese evaluation suite for foundation models. Advances in Neural Information Processing Systems 36 (2024).

Zhang, L. et al. FinEval: A Chinese financial domain knowledge evaluation benchmark for large language models. arXiv preprint arXiv:2308.09975 (2023).

Zhang, N. et al. CBLUE: A Chinese biomedical language understanding evaluation benchmark. arXiv preprint arXiv:2106.08087 (2021).

Zhong, W. et al. AGIEval: A human-centric benchmark for evaluating foundation models. arXiv preprint arXiv:2304.06364 (2023).

Huang, X., Zhang, L. L., Cheng, K.-T., Yang, F. & Yang, M. Fewer is more: Boosting LLM reasoning with reinforced context pruning. arXiv preprint arXiv:2312.08901 (2023).

Pang, R. Y. et al. Iterative reasoning preference optimization. arXiv preprint arXiv:2404.19733 (2024).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 51968002) and the Interdisciplinary Scientific Research Foundation of Guangxi University (2022JCC027).

Author information

Contributions

J.W. conceived the experiments and administered the project. M.J., H.X., and Y.Z. developed the methodology. J.F., S.L., and Y.Z. conducted the investigation. M.J. and J.F. developed software. J.W., M.J., J.F., and Y.Z. curated the data. M.J. and S.L. visualized data. J.W., M.J., and S.L. wrote the original draft. J.W., S.L., and H.X. reviewed and edited the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wu, J., Jiang, M., Fan, J. et al. Arch-Eval benchmark for assessing chinese architectural domain knowledge in large language models. Sci Rep 15, 13485 (2025). https://doi.org/10.1038/s41598-025-98236-0

DOI: https://doi.org/10.1038/s41598-025-98236-0
