Abstract

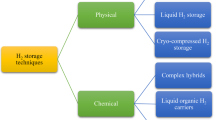

Hydrogen storage is a crucial technology for ensuring a sustainable energy transition. Underground Hydrogen Storage (UHS) in depleted hydrocarbon reservoirs, aquifers, and salt caverns provides a viable large-scale solution. However, hydrogen dispersion in cushion gases such as nitrogen (N2), methane (CH4), and carbon dioxide (CO2) leads to contamination, reduced purity, and increased purification costs. Existing experimental and numerical methods for predicting hydrogen dispersion coefficients (KL) are often limited by high costs, lengthy processing times, and insufficient accuracy under dynamic reservoir conditions. This study addresses these challenges by integrating experimental data with advanced machine learning (ML) techniques to model hydrogen dispersion. Various ML models, including Random Forest (RF), Least Squares Boosting (LSBoost), Bayesian Regression, Linear Regression (LR), Artificial Neural Networks (ANNs), and Support Vector Machines (SVMs), were employed to quantify KL as a function of pressure (P) and displacement velocity (Um). Among these methods, RF outperformed the others, achieving an R2 of 0.9965 for test data and 0.9999 for training data, with RMSE values of 0.023 and 0.001, respectively. The findings highlight the potential of ML-driven approaches in optimizing UHS operations by enhancing predictive accuracy, reducing computational costs, and mitigating hydrogen contamination risks.


Introduction

The storage of gas in underground formations, particularly in natural reservoirs, has emerged as a key technology for energy management and environmental sustainability. In the gas storage process, cushion gases play a crucial role in enhancing reservoir stability and efficiency1. Among the critical factors influencing the overall performance of this process is the gas dispersion coefficient, which quantifies gas distribution within porous media. This study explores the concept and importance of the dispersion coefficient in the presence of cushion gases2.

The global shift towards renewable energy sources, particularly wind and solar power, is progressing at a remarkable pace3. However, the intermittent nature of these energy sources poses significant challenges in maintaining a stable balance between energy supply and demand. To address these challenges, the development of reliable energy storage technologies is essential for ensuring consistent and dependable energy availability4.

One promising solution for large-scale energy storage is hydrogen, which can be produced during periods of surplus renewable energy and stored for later use5. Underground hydrogen storage (UHS) in geological formations, such as depleted oil and gas reservoirs, is being considered an efficient method for large-scale hydrogen and energy storage6,7. These geological formations offer vast storage capacities and are often supported by readily available geological data, making it feasible to repurpose existing infrastructure for hydrogen storage6,8,9. Nevertheless, UHS systems necessitate the use of cushion gases such as nitrogen (N2), methane (CH4), or carbon dioxide (CO2) to maintain minimum pressure, alter wettability, prevent water ingress, and regulate volume during the injection and retrieval of hydrogen6,10,11,12.

Cushion gases, which are essential for maintaining reservoir pressure, significantly enhance gas transfer and storage efficiency. These gases interact with the primary stored gas, affecting the dispersion coefficient, so understanding their interactions is crucial. Cushion gases, being denser than hydrogen, remain in the reservoir without directly impacting the hydrogen extraction process13. The required amount of cushion gas varies depending on the geological formation type. For example, in depleted oil and gas reservoirs, cushion gas may account for 40–70% of the total volume, while aquifers may need up to 80% cushion gas, highlighting the economic considerations in selecting suitable cushion gases for UHS systems14,15.

A challenge in UHS systems is the potential mixing of hydrogen with cushion gases, leading to contamination and costly purification. Accurate reservoir simulations are needed to predict mixing behavior, relying on dispersion coefficients (KL) between hydrogen and cushion gases to understand gas behavior within the reservoir16,17,18,19. The dispersion coefficient influences gas mixing and distribution, affecting fluid behavior and extraction efficiency20. It is influenced by reservoir rock type, gas composition, and the physicochemical properties of the interacting fluids21,22. Analyzing the dispersion coefficient is crucial for optimal reservoir design and reducing energy losses23.

Despite its significance, there is a notable lack of reliable data on the dispersion coefficient for hydrogen mixed with cushion gases under realistic storage conditions. Many studies have either neglected the effects of mixing or relied on estimated KL values, which may not accurately represent real-world behavior24. This knowledge gap poses challenges in accurately modeling UHS systems and developing optimized storage strategies.

Recent research has introduced innovative techniques for measuring dispersion coefficients in rock cores, with a particular focus on hydrogen mixed with nitrogen, methane, and carbon dioxide. Using Fontainebleau sandstone as the porous medium, these studies conducted dispersion measurements under varying pressures and temperatures to replicate UHS conditions. The resulting data are critical for selecting appropriate cushion gases, enhancing the design and simulation of UHS systems, advancing the understanding of gas mixing dynamics, and optimizing hydrogen storage for large-scale applications25.

Environmental parameters such as temperature, pressure, and the surface properties of the porous medium significantly influence the dispersion coefficient26. For example, increased pressure may suppress gas dispersion, while temperature variations can alter the molecular behavior of gases, resulting in changes in this coefficient27. Additionally, the type of cushion gas plays a crucial role in determining the dispersion coefficient28. Lighter gases, such as hydrogen (H₂), enhance dispersion due to their high diffusivity, whereas heavier gases, like carbon dioxide (CO₂), tend to reduce the coefficient. This highlights cushion gas selection as a critical engineering challenge29,30.

The dispersion coefficient can be determined through experimental methods and numerical simulations, such as gas injection experiments and computational fluid dynamics (CFD) models, which offer valuable insights into gas behavior under storage conditions31. While cushion gases enhance reservoir efficiency, they also bring challenges like operational costs and environmental impacts32. Studies suggest that optimizing gas selection and operational processes can address these issues. Analyzing the dispersion coefficient with cushion gases aids in designing more efficient reservoirs, reducing environmental effects. Future research should focus on refining models and solutions to optimize gas dispersion for sustainable energy storage.

Artificial neural network (ANN) models that have been used effectively for prediction and modeling in related work can also be applied to hydrogen diffusion in cushion gases33. The transparency and interpretability of such models are a further advantage when explaining ML-based predictions34. For example, ANN models previously employed to predict CO2 penetration suggest that neural networks can likewise be used to predict hydrogen diffusion in cushion gases, particularly given the importance of pressure and temperature35. Because temperature and pressure play a determining role in those studies, they are also expected to be influential variables in the present study36.

Despite previous studies on hydrogen dispersion in underground environments, a lack of precise data persists regarding real reservoir conditions and the impact of operational parameters on the dispersion coefficient (KL). Most prior research has primarily relied on numerical methods or limited experimental approaches, with the integration of ML and experimental data remaining largely unexplored. This study addresses this gap by employing an advanced ML model that combines experimental and analytical data to enhance the accuracy of hydrogen dispersion coefficient predictions in the presence of cushion gases. By systematically analyzing the mixing behavior of hydrogen and cushion gases under reservoir conditions, this research identifies key influencing factors, such as pressure and displacement velocity, and develops a predictive framework for time-dependent interactions during hydrogen injection and withdrawal. The proposed ML-based approach not only optimizes gas mixing behavior but also reduces the complexity and costs associated with conventional laboratory methods. These findings contribute to the advancement of UHS strategies, facilitating improved system performance and minimizing hydrogen contamination risks.

Gas dispersion and storage studies

Nuclear magnetic resonance

Nuclear Magnetic Resonance (NMR) is a well-established technique used to analyze the compositions of effluent gases and fluids during dispersion experiments through rock cores. This technique operates by applying an external magnetic field to active nuclei, specifically ¹H protons, causing them to precess at the Larmor frequency. The resulting precession generates detectable signals, enabling the differentiation of various molecular environments based on their chemical shifts. Typically, tetramethylsilane (TMS) is used as a reference with a shift of 0 ppm37. A secondary oscillating magnetic field is applied to displace the magnetization vector from the z-axis, inducing a measurable signal known as Free Induction Decay (FID), which subsequently decays over time. By applying a Fourier transform to the FID, an NMR spectrum is obtained, revealing distinct chemical shifts corresponding to different molecules, such as CH4 and H2, thus facilitating the analysis of their mixture composition38.

For accurate detection of chemical shifts, a homogeneous magnetic field is required, which can be achieved using benchtop NMR spectrometers with Halbach magnet arrays. These mobile spectrometers have been widely utilized across various fields, including research on cemented paste backfill materials, hydrate inhibitors, oilfield emulsions, and rock core dispersion behavior. Such applications demonstrate the versatility of NMR spectroscopy in analyzing gas mixtures, which is essential for investigating gas behavior during UHS39,40,41.

Gas dispersion in porous reservoirs

Dispersion in porous media like rock cores involves the spreading and mixing of fluids as they move through the interconnected pore network. This is influenced by two key mechanisms: molecular diffusion, where molecules move randomly, and mechanical advection, which refers to mixing caused by velocity differences in the flow. The Péclet number (Pe) is a crucial parameter used to identify the dominant flow mechanism, with diffusion dominating at low Pe values (Pe < 0.1) and advection becoming dominant at higher Pe values (Pe > 10). For intermediate Pe values, both diffusion and advection contribute to the dispersion42.

\[ Pe = \frac{u_{m}\, d_{p}}{D} \]

where um is the mean interstitial velocity through the porous medium, D is the relevant diffusion coefficient, and dp is a characteristic length scale of the medium, such as the grain or particle diameter.
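As a minimal sketch of how the Péclet number separates the transport regimes, the snippet below assumes the common definition Pe = um·dp/D implied by the symbol definitions above and applies the thresholds quoted in the text (Pe < 0.1 and Pe > 10). The velocity and the diffusion coefficient are illustrative assumptions, not values measured in the study; the grain diameter matches the 250 μm average reported for the Fontainebleau sandstone.

```python
def peclet_number(u_m: float, d_p: float, D: float) -> float:
    """Pe = u_m * d_p / D, with all quantities in consistent SI units."""
    return u_m * d_p / D


def transport_regime(pe: float) -> str:
    """Classify the regime using the thresholds quoted in the text."""
    if pe < 0.1:
        return "diffusion-dominated"
    if pe > 10:
        return "advection-dominated"
    return "mixed (diffusion and advection)"


# Illustrative inputs only (assumed, not measured values from the study).
u_m = 2.0e-2 / 60.0   # 2 cm/min expressed in m/s
d_p = 250e-6          # 250 micrometre grain diameter, in m
D = 1.0e-6            # assumed diffusion coefficient, in m^2/s

pe = peclet_number(u_m, d_p, D)
print(f"Pe = {pe:.4f} -> {transport_regime(pe)}")
```

Because Pe scales linearly with velocity, sweeping `u_m` over the experimental range is a quick way to see which regime a given measurement is likely to fall in for an assumed `D`.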

To mathematically model dispersion in porous media, the advection-dispersion (AD) equation is commonly employed, combining advective and diffusive transport processes. In experimental setups, a gas pulse, such as hydrogen, is injected into a stream of cushion gas flowing through a rock core43.

In one dimension, the AD equation reads:

\[ \frac{\partial C}{\partial t} = K_{L}\frac{\partial^{2} C}{\partial x^{2}} - u_{m}\frac{\partial C}{\partial x} \]

where C represents the concentration of the dispersing component in the fluid phase, KL is the longitudinal dispersion coefficient along the direction of bulk flow (x direction), um is the mean interstitial velocity, and t is time. The effluent breakthrough curves generated during these experiments provide valuable data on the dispersion dynamics. By comparing the breakthrough curves to the analytical solution of the AD equation, researchers can determine the longitudinal dispersion coefficient (KL), which provides insights into the dispersion characteristics of the porous material.
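The idea of matching a breakthrough curve to an analytical solution can be sketched as follows. This is only an illustration under stated assumptions: it uses the textbook infinite-domain solution for an instantaneous pulse, C(x, t) = M / sqrt(4πKLt) · exp(−(x − um·t)² / (4KLt)), which may differ from the boundary conditions used in the cited experiments, and it recovers KL from a synthetic curve by a simple grid search. All numerical values and function names are hypothetical.

```python
import math


def pulse_concentration(x, t, u_m, k_l, mass=1.0):
    """Infinite-domain solution of the 1D AD equation for an instantaneous pulse."""
    spread = 4.0 * k_l * t
    return mass / math.sqrt(math.pi * spread) * math.exp(-((x - u_m * t) ** 2) / spread)


def fit_k_l(times, observed, x, u_m, candidates):
    """Return the candidate K_L with the smallest sum of squared residuals."""
    def sse(k_l):
        return sum((pulse_concentration(x, t, u_m, k_l) - c) ** 2
                   for t, c in zip(times, observed))
    return min(candidates, key=sse)


# Synthetic breakthrough curve at the core outlet (all values are assumed).
core_length = 0.10      # m
velocity = 5.0e-4       # m/s
true_k_l = 2.0e-6       # m^2/s
times = [20.0 * i for i in range(1, 31)]            # 20 s to 600 s
curve = [pulse_concentration(core_length, t, velocity, true_k_l) for t in times]

candidates = [0.5e-6 * i for i in range(1, 21)]     # 0.5e-6 ... 1.0e-5 m^2/s
estimate = fit_k_l(times, curve, core_length, velocity, candidates)
print(f"Estimated K_L = {estimate:.2e} m^2/s")
```

With measured data, the grid search would typically be replaced by a nonlinear least-squares routine, and the solution would be chosen to match the actual inlet and outlet conditions of the apparatus.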

Literature review

Machine learning (ML) has emerged as a transformative tool in analyzing gas dispersion coefficients and optimizing related processes in underground storage systems. By processing vast datasets from laboratory experiments and numerical simulations, ML models with advanced algorithms uncover complex patterns and accurately predict how dispersion varies with environmental conditions and gas properties44,45. Furthermore, these models enable rapid evaluation of scenarios, facilitating the selection of cushion gases and reservoir conditions that minimize dispersion and enhance system efficiency46. This approach not only reduces experimental costs and time but also accelerates the development of innovative gas storage technologies with reduced environmental impact47,48.

In 2024, Dąbrowski49 explored the dispersion behavior of hydrogen and methane in rock cores and investigated their mixing effects in UHS within saline aquifers. The study focused on measuring dispersion coefficients for hydrogen displacing methane and vice versa, as these processes are essential for simulating the evolution of mixing zones in storage reservoirs. Using a core flooding system equipped with Raman spectroscopy, gas mixing behaviors were measured under controlled conditions with Berea sandstone cores to validate system accuracy and account for entry and exit effects. The findings revealed differing dispersion coefficients for hydrogen and methane based on displacement direction and identified unique dispersion behaviors for pure hydrogen and hydrogen/methane blends in four reservoir structures in Poland, aiding in the selection of optimal storage sites. Similarly, Kobeissi et al.2 studied the dispersion behavior of hydrogen in cushion gases such as N2, CH4, and CO2 to evaluate gas mixing impacts in UHS within porous media. They measured the dispersion coefficient (KL) between hydrogen and cushion gases using a core flooding apparatus with Fontainebleau sandstone as the test material. KL values were examined as functions of displacement velocity (0.2–8.4 cm/min) and system pressure (50–100 bar), demonstrating significant dependency on these parameters. Scaling KL values with diffusion coefficients highlighted the unique behavior of liquid CO2. This research underscores the critical need to understand hydrogen-cushion gas mixing to reduce hydrogen contamination risks and associated purification costs. Together, these studies offer valuable insights for optimizing UHS methods and improving reservoir simulations by controlling gas mixing dynamics.

In 2023, Li et al.50 introduced a hybrid deep probabilistic learning-based model, DPL_H2Plume, for predicting hydrogen plume concentration in refueling stations, addressing overconfidence issues in existing models that hinder decision-making. By integrating deep learning with Variational Bayesian Inference, the model achieved high prediction accuracy (R2 = 0.97) and a fast inference time (3.32 s), outperforming state-of-the-art models, especially in predicting plume boundaries. In 2023, Kanti et al.51 focused on improving the thermal performance and hydrogen storage rate of LaNi5 metal hydride reactors using graphene oxide (GO) nanofluids as heat transfer fluids. Their 2D numerical model, developed in COMSOL Multiphysics, demonstrated that GO nanofluids reduced hydrogen storage time by 61.7% compared to water, showcasing their potential for optimizing reactor performance. Additionally, in 2023, Asif et al.23 investigated the CO2-Enhanced Coalbed Methane (CO2-ECBM) process, emphasizing methane displacement by CO2 under high-pressure conditions. They introduced the concept of “caucus time” to analyze sorption kinetics, revealing that CO2’s adsorption capacity surpasses methane’s by 2 to 2.3 times. Critical pressures for retrograde adsorption were identified, and dispersion modeling highlighted the economic importance of optimizing operational pressures and flow rates. Together, these studies advance the fields of hydrogen storage and enhanced methane recovery by integrating innovative modeling and experimental approaches.

In 2022, Maniglio et al.52 developed a methodology to evaluate the impact of dispersive mixing on the efficiency of UHS systems, focusing on hydrogen loss during storage due to diffusive and dispersive phenomena in depleted gas reservoirs. The study emphasized that large-scale reservoir heterogeneity significantly influences hydrogen dispersion, outweighing molecular diffusion, core-scale dispersion effects, and numerical diffusion effects depending on grid resolution. Additionally, reservoir-scale heterogeneities were found to have a greater impact on hydrogen dispersivity than pore-scale heterogeneities, questioning the need for experimental campaigns on reservoir rock samples. The methodology offers a practical workflow to estimate the impact of large-scale heterogeneities, providing valuable insights for pilot applications by tailoring the approach to specific asset scales and storage strategies. In parallel, Blaylock and Klebanoff53 examined hydrogen dispersion during releases from 250-bar storage tanks on vessels modeled using CFD under varying exit velocities and wind conditions. The results revealed that hydrogen dispersion is momentum-driven at high release speeds (800–900 m/s), with minimal wind influence, while at lower speeds (10 m/s), hydrogen becomes entrained by wind and disperses laterally. Wind with a downward component was shown to direct hydrogen downward, despite its buoyancy. These findings contribute to improving safety systems for hydrogen-powered vessels, addressing key challenges in hydrogen storage and dispersion dynamics.

In 2021, Liu et al.54 reviewed research on CO2-enhanced gas recovery (EGR) and CO2–CH4 dispersion in depleted natural gas reservoirs. They identified that temperature and flow rate promote dispersion, whereas pressure and residual water salinity suppress it under supercritical conditions. The CO2–CH4 dispersion coefficient decreases in highly permeable media, and the effect of residual water on dispersion requires further investigation. The review also pointed out the scarcity of research on impurities such as N2 and ethane in supercritical CO2. While field-scale simulations confirm EGR’s feasibility, factors such as permeability heterogeneity and water saturation should be considered in future studies to improve recovery efficiency.

Methodology

A simplified schematic of the experimental apparatus used in the study by Kobeissi et al.2 is illustrated in Fig. 1. This apparatus was specifically designed to investigate the dispersion of hydrogen with potential cushion gases (N2, CH4, or CO2) through vertically aligned rock cores under reservoir-relevant conditions, including pressure, temperature, and flow rate.

The experimental setup described by Kobeissi et al.2 was developed to accurately measure the hydrogen dispersion coefficient (KL) in the presence of cushion gases under these conditions. The key experimental parameters, including gas composition, rock properties, and flow conditions, were meticulously controlled to ensure the reproducibility and reliability of the measurements.

In their study, N2, CH4, and CO2 were selected as cushion gases due to their industrial relevance for UHS. Each gas was used in its high-purity form (> 99.99%) to ensure consistency in experimental results and eliminate interference from trace contaminants. The gases were injected separately to evaluate their specific effects on hydrogen dispersion behavior.

The overall experimental procedure involves the use of Fontainebleau sandstone as the porous medium, as this rock is considered a standard reference material for gas transport studies in porous media due to its well-characterized mineralogical and petrophysical properties. The rock samples used in these experiments exhibited the following characteristics:

- Porosity: 7.5–8.3% (measured by helium pycnometry).
- Permeability: 120–150 millidarcies (determined using a gas permeameter).
- Mineralogical composition: Predominantly quartz (> 99%), with minor amounts of feldspar and iron oxides.
- Grain size distribution: Uniform, with an average grain diameter of 250 μm.

These properties create a homogeneous porous network, minimizing the effects of lithological heterogeneities on diffusion measurements.

The experiments were conducted over a wide range of reservoir-like flow conditions to evaluate the variability in hydrogen transport dynamics. The experimental parameters included:

- Displacement velocity (Um): Ranging from 0.2 to 8.4 cm/min to assess the influence of convection on diffusion.
- Pressure (P): Controlled between 50 and 100 bar, representing typical operational conditions for UHS.
- Temperature: 323 K (50 °C) to simulate subsurface conditions.
- Gas composition: Initial hydrogen concentration of 80%, with the remaining 20% consisting of cushion gases, replicating real mixing conditions in underground storage scenarios.
- Core sample dimensions: 10 cm in length, 3.8 cm in diameter, prepared using standard cleaning and drying procedures to remove residual fluids and ensure uniform experimental conditions.

A pulse injection setup was utilized to determine the dispersion coefficient (KL)2.

The experimental apparatus, designed by Kobeissi et al.2, was developed to replicate reservoir conditions and investigate gas dispersion mechanisms with precise control over pressure, temperature, and flow rate. To reduce CO2 interaction errors, the rock core was wrapped in protective layers, while a Viton sleeve and core holder ensured structural stability. A syringe pump enabled accurate pressurization, enhancing experimental reliability. Although such setups yield valuable real-world data, they are resource-intensive. In contrast, ML methods efficiently analyze large datasets, identify patterns, and predict gas dispersion behavior under various conditions, offering a cost-effective and scalable alternative to extensive physical experimentation.

Data collection & processing

In this study, the data required to develop accurate and efficient ML models were collected from previously published research. Specifically, the data were extracted from the dataset presented by Kobeissi et al.2, which was obtained from experimental tests conducted under controlled laboratory conditions. The aim was to ensure comprehensive coverage of the relevant conditions. Figure 2 illustrates the details of the extracted data. The dataset comprises 360 independent data points, enabling the analysis of variable behavior under different conditions. These data include the dispersion coefficients (KL) between hydrogen and three cushion gases (CH4, CO2, N2), which were processed as a function of pressure and displacement velocity for reservoir models.

The data were pre-processed statistically and quantitatively, and their correlation, sensitivity analysis, evaluation, and validation were examined. Subsequently, ML models were implemented on training and testing datasets, and the performance of the models was assessed. The extensive dataset ensures that the ML models can effectively predict and analyze complex scenarios. The necessary statistical information is detailed in Table 1.

Figure 2 presents box plots depicting the statistical characteristics of KL, pressure (bar), and um (cm/min). The KL values range from 0.078 to 0.908, with a median of 0.344 and a mean of 0.393. The first (Q1) and third (Q3) quartiles are 0.176 and 0.583, respectively, indicating the spread of data around the median. The pressure varies between 50 and 100 bar, with a median of 75 bar and a mean of 71.667 bar. The velocity (um) ranges from 0.209 to 8.368 cm/min, with a median of 2.720 cm/min and a mean of 3.371 cm/min. The variance values for KL, pressure, and velocity are 0.056, 433.333, and 6.902, respectively, reflecting the degree of dispersion in the dataset. The skewness values indicate a slight positive asymmetry in all three parameters, while the kurtosis values are negative, suggesting a relatively flat distribution compared to a normal distribution.
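The descriptive statistics quoted above (quartiles, variance, skewness, and kurtosis) can be reproduced for any column of the dataset along the following lines. This is a sketch, not the authors' code: the sample values are hypothetical, the quartile convention (`method="inclusive"`) is an assumption, and the kurtosis is taken to be the excess kurtosis, so that a negative value indicates a flatter-than-normal distribution.

```python
import statistics


def skewness(values):
    """Population skewness: third standardized moment."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return sum(((v - mean) / std) ** 3 for v in values) / len(values)


def excess_kurtosis(values):
    """Population excess kurtosis: fourth standardized moment minus 3."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return sum(((v - mean) / std) ** 4 for v in values) / len(values) - 3.0


# Hypothetical K_L sample for illustration; not the study's dataset.
sample = [0.08, 0.12, 0.18, 0.25, 0.34, 0.41, 0.58, 0.70, 0.91]

q1, q2, q3 = statistics.quantiles(sample, n=4, method="inclusive")
summary = {
    "min": min(sample), "Q1": q1, "median": q2, "Q3": q3, "max": max(sample),
    "mean": statistics.fmean(sample), "variance": statistics.variance(sample),
    "skewness": skewness(sample), "excess_kurtosis": excess_kurtosis(sample),
}
for name, value in summary.items():
    print(f"{name:>16}: {value:.3f}")
```

For this toy sample the mean exceeds the median, so the skewness is positive, and the excess kurtosis is negative, mirroring the qualitative pattern described for the real data.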

Figure 3 provides an explanation of the Relevancy Factor, which is a measure used to assess the degree of association between a variable, feature, or model and a specific target or dataset. One of the common methods for evaluating this association is the Pearson correlation coefficient (𝑟), which quantifies the linear dependence between two variables. The value of this coefficient lies in the range [−1, 1], where 𝑟 = 1 indicates a perfect positive correlation, 𝑟 = −1 represents a perfect negative correlation, and 𝑟 ≈ 0 suggests no linear relationship between the variables:

\[ r = \frac{\sum_{i=1}^{n}\left(X_{i}-\bar{X}\right)\left(Y_{i}-\bar{Y}\right)}{\sqrt{\sum_{i=1}^{n}\left(X_{i}-\bar{X}\right)^{2}}\,\sqrt{\sum_{i=1}^{n}\left(Y_{i}-\bar{Y}\right)^{2}}} \]

where \(X_{i}\) and \(Y_{i}\) are the values of the input and output variables, \(\bar{X}\) and \(\bar{Y}\) are the mean values of the respective variables, and \(n\) is the number of data points. The numerator is proportional to the covariance between the two variables, and the denominator is proportional to the product of the standard deviations of the input and output variables; the common factor cancels in the ratio.

In machine learning and petroleum engineering, the Pearson correlation coefficient is used to identify key variables in prediction and optimization processes. For example, analyzing the correlation between porosity and permeability with oil production or assessing the impact of pressure and temperature on recovery rates can help develop more accurate models. Therefore, integrating the Relevancy Factor with statistical methods such as Pearson correlation plays a crucial role in optimizing and predicting engineering processes.
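A short sketch of how a Pearson-based relevancy factor could be computed for each input is given below. It uses the standard definition of 𝑟 (sum of products of deviations divided by the square root of the product of the sums of squared deviations). The records are hypothetical and serve only to show the calculation, not to reproduce Fig. 3.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical records for illustration (not the study's data).
velocity = [0.2, 1.0, 2.7, 4.5, 6.0, 8.4]        # cm/min
pressure = [50, 100, 75, 50, 100, 75]            # bar
k_l = [0.08, 0.15, 0.33, 0.52, 0.66, 0.90]       # dispersion coefficient

relevancy = {
    "velocity": pearson_r(velocity, k_l),
    "pressure": pearson_r(pressure, k_l),
}
for name, r in relevancy.items():
    print(f"r({name}, K_L) = {r:+.3f}")
```

Note that 𝑟 only captures linear association; an input with a strong nonlinear effect on KL can still show a small relevancy factor.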

Machine learning methods

Bayesian

The Bayesian approach is a fundamental machine learning technique that applies Bayesian probability principles to data modeling and learning. This method combines prior knowledge with observed data to refine predictions. At its core, Bayes’ Theorem establishes the relationship between conditional probabilities (Fig. 4). Bayes’ Theorem is expressed as:

\[ P\left(H|D\right) = \frac{P\left(D|H\right)\,P\left(H\right)}{P\left(D\right)} \]

In this equation, \(P\left(H|D\right)\) represents the posterior probability, which reflects the likelihood of the hypothesis \(H\) given the observed evidence \(D\). The term \(P\left(D|H\right)\), known as the likelihood, measures the probability of observing the data \(D\) assuming that \(H\) is true. \(P\left(H\right)\) denotes the prior probability, encapsulating the initial belief about \(H\) before considering the evidence, while \(P\left(D\right)\) is the marginal likelihood, serving as a normalization constant55.

In the context of ML, \(H\) typically refers to the model or its parameters, and \(D\) corresponds to the training dataset. The objective is to compute the posterior probability \(P\left(H|D\right)\) to infer the model or estimate its parameters. Bayesian methods are generally divided into parametric and non-parametric Bayesian learning56. In parametric Bayesian learning, the model is described by a fixed, finite set of unknown parameters that are assigned a prior distribution. For example, if \(\theta\) represents the model parameters, the posterior distribution is given as:

\[ P\left(\theta|D\right) = \frac{P\left(D|\theta\right)\,P\left(\theta\right)}{P\left(D\right)} \]
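To make the update rule concrete, the sketch below applies Bayes' theorem, posterior = likelihood × prior / evidence, to a discrete set of hypotheses. The two hypotheses and all probabilities are invented for illustration; the Bayesian regression used in the study works with continuous parameter distributions rather than a two-element table.

```python
def posterior(prior, likelihood):
    """Apply Bayes' theorem over a discrete set of hypotheses.

    prior[h] is P(H=h); likelihood[h] is P(D | H=h).
    """
    evidence = sum(prior[h] * likelihood[h] for h in prior)   # P(D)
    return {h: prior[h] * likelihood[h] / evidence for h in prior}


# Hypothetical two-hypothesis example with assumed probabilities.
prior = {"high_dispersion": 0.3, "low_dispersion": 0.7}
likelihood = {"high_dispersion": 0.8, "low_dispersion": 0.2}

post = posterior(prior, likelihood)
for hypothesis, probability in post.items():
    print(f"P({hypothesis} | D) = {probability:.3f}")
```

Here the evidence term is 0.3 × 0.8 + 0.7 × 0.2 = 0.38, so the data shift the belief in the first hypothesis from 0.30 to about 0.63.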

The Bayesian approach offers both advantages and limitations. Among its key strengths are the integration of prior knowledge with observed data, the provision of probabilistic distributions instead of fixed values, and its effectiveness in situations with limited datasets. However, it also has notable challenges, including high computational demands and sensitivity to the choice of the prior distribution57.

Linear regression

Linear Regression is a fundamental and extensively utilized supervised learning algorithm in ML. It aims to establish a linear relationship between independent variables (features) and a dependent variable (target) by fitting a straight line to the observed data58,59,60. The primary goal of this method is to predict the dependent variable’s value based on the given independent variables. The mathematical formulation of linear regression is generally expressed as:

\[ y = \beta_{0} + \beta_{1}x_{1} + \beta_{2}x_{2} + \dots + \beta_{p}x_{p} + \epsilon \]

where \(y\) is the dependent variable, \(x_{1}, x_{2}, \dots, x_{p}\) are the independent variables, \(\beta_{0}\) is the intercept, \(\beta_{1}, \beta_{2}, \dots, \beta_{p}\) are the coefficients, and \(\epsilon\) is the error term. In matrix form, it can be written as:

\[ y = X\beta + \epsilon \]

Here, \(y\), \(X\), \(\beta\), and \(\epsilon\) are the matrices and vectors representing the data, coefficients, and errors. The primary objective of linear regression is to reduce the discrepancy between the predicted and actual values, commonly quantified through the Mean Squared Error (MSE). The MSE formula is:

\[ \mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_{i} - \widehat{y}_{i}\right)^{2} \]

where \(y_{i}\) represents the actual values, \(\widehat{y}_{i}\) represents the predicted values, and \(n\) is the number of observations.

The parameters of the model \(\left(\beta\right)\) are estimated using the Ordinary Least Squares (OLS) method. The OLS solution is derived as:

\[ \widehat{\beta} = \left(X^{T}X\right)^{-1}X^{T}y \]

Once the model is trained, predictions for new data points are made using the following formula:

\[ \widehat{y} = x^{T}\widehat{\beta} \]

Here, \(x\) is the feature vector of the new data point, including a leading 1 for the intercept.
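The OLS workflow described above (build the design matrix, solve the normal equations, predict, and score with the MSE) can be sketched without external libraries as follows. The two features stand in for pressure and displacement velocity, and the targets are generated from an assumed linear law purely so that the recovered coefficients can be checked; none of the numbers come from the study.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def fit_ols(features, targets):
    """Estimate beta from the normal equations (X^T X) beta = X^T y."""
    X = [[1.0] + list(row) for row in features]      # leading 1 for the intercept
    p = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(X, targets)) for i in range(p)]
    return solve_linear_system(xtx, xty)


def predict(beta, row):
    """Prediction y_hat = beta_0 + sum_j beta_j x_j for one feature vector."""
    return beta[0] + sum(b * x for b, x in zip(beta[1:], row))


def mse(beta, features, targets):
    """Mean squared error of the fitted model on the given data."""
    errors = [(y - predict(beta, row)) ** 2 for row, y in zip(features, targets)]
    return sum(errors) / len(errors)


# Synthetic data generated from y = 0.05 + 0.001*P + 0.1*Um (assumed values).
features = [(50, 0.2), (50, 4.0), (75, 1.0), (75, 8.4), (100, 2.7), (100, 6.0)]
targets = [0.05 + 0.001 * p + 0.1 * u for p, u in features]

beta = fit_ols(features, targets)
print("beta =", [round(b, 6) for b in beta])
print("MSE  =", mse(beta, features, targets))
```

Solving the normal equations directly is adequate for a handful of well-scaled features; library implementations usually rely on QR or SVD factorizations, which are more robust when the features are strongly correlated.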

Artificial neural network

Artificial Neural Networks (ANNs) are a key technique in ML, inspired by the structure and functionality of biological neural systems61,62. They are utilized for tasks such as pattern recognition, data classification, prediction, and modeling of complex relationships between inputs and outputs. ANNs consist of multiple interconnected layers of nodes (neurons), with each neuron processing the input data and transmitting it to subsequent layers. The architecture of an ANN typically includes three main components: the input layer, hidden layers, and the output layer. The input layer takes in raw data, with each neuron representing a specific feature of the dataset63. Hidden layers apply transformations to the input data, while the output layer generates the final outputs, such as predictions or classifications (Fig. 5).

For a single neuron in a hidden or output layer, the pre-activation value \(z\) is calculated as:

\[ z = \sum_{i} w_{i}x_{i} + b \]

Here, \(x_{i}\) are the inputs to the neuron, \(w_{i}\) are the weights associated with the inputs, \(b\) is the bias term, and \(z\) is the pre-activation value.
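A single neuron's computation can be illustrated in a few lines, assuming the usual weighted-sum form z = Σ wᵢxᵢ + b. The activation function (tanh) and all numerical values are assumptions chosen for the example; the text does not specify which activation was used in the study.

```python
import math


def pre_activation(inputs, weights, bias):
    """z = sum_i w_i * x_i + b for a single neuron."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def neuron_output(inputs, weights, bias, activation=math.tanh):
    """Apply an activation function to the pre-activation value."""
    return activation(pre_activation(inputs, weights, bias))


# Illustrative values: two scaled inputs (e.g. pressure and velocity).
x = [0.5, 0.8]
w = [0.4, -0.3]
b = 0.1

z = pre_activation(x, w, b)      # 0.4*0.5 - 0.3*0.8 + 0.1
a = neuron_output(x, w, b)
print(f"z = {z:.4f}, tanh(z) = {a:.4f}")
```

A full network repeats this computation for every neuron in a layer and feeds the resulting activations to the next layer.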

Support vector machine

Support Vector Machines (SVMs) are powerful supervised ML algorithms widely employed for tasks such as classification, regression, and outlier detection64. They are especially effective in high-dimensional spaces and can manage both linear and nonlinear classification problems65. The primary objective of an SVM is to identify a hyperplane that maximally separates different classes of data points in the feature space66.

The central idea of SVM is to determine a hyperplane that maximizes the margin between two classes. This margin is defined as the distance between the hyperplane and the nearest data points from each class, known as support vectors. For a training dataset represented as \(\left\{\left(x_{i}, y_{i}\right)\right\}\), where \(x_{i}\) is the feature vector and \(y_{i}\in\left\{-1, +1\right\}\) is the class label, the hyperplane is mathematically defined as:

\[ w \cdot x + b = 0 \]

where \(w\) represents the weight vector, \(x\) is the input feature vector, and \(b\) is the bias term. The hyperplane acts as the decision boundary, while the support vectors are the data points closest to this boundary.

In cases where the data can be separated linearly, SVM aims to find the optimal hyperplane that maximizes the margin between the two classes. The equations that define the margin boundaries are:

\[ w \cdot x + b = +1 \quad \text{and} \quad w \cdot x + b = -1 \]

This method guarantees that the hyperplane provides the greatest separation, while keeping the nearest points (support vectors) at the margin’s boundary.
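As a rough illustration of the hyperplane and margin ideas above, the following Python sketch evaluates the decision value w · x + b for a given point and computes the margin width, which for the standard formulation with boundaries at ±1 equals 2/‖w‖. The weight vector, bias, and sample points are hypothetical; in practice they would come from training an SVM.

```python
import math
from typing import Sequence


def decision_value(w: Sequence[float], x: Sequence[float], b: float) -> float:
    """Signed score w . x + b; zero on the separating hyperplane."""
    return sum(w_i * x_i for w_i, x_i in zip(w, x)) + b


def classify(w: Sequence[float], x: Sequence[float], b: float) -> int:
    """Assign class +1 or -1 according to the side of the hyperplane."""
    return 1 if decision_value(w, x, b) >= 0 else -1


def margin_width(w: Sequence[float]) -> float:
    """Distance between the two margin boundaries, 2 / ||w||."""
    return 2.0 / math.sqrt(sum(w_i * w_i for w_i in w))


# Hypothetical trained parameters (assumed for illustration only).
w = [3.0, 4.0]
b = -1.0

on_margin = [0.4, 0.2]  # lies on the +1 margin boundary for these parameters
origin = [0.0, 0.0]     # lies on the negative side

print(decision_value(w, on_margin, b), classify(w, origin, b), margin_width(w))
```

Points whose decision value has magnitude 1 sit on a margin boundary and play the role of support vectors.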

Least squares boosting

Least Squares Boosting (LSBoost) is an ML technique that combines boosting with least squares regression to enhance prediction accuracy67. Although primarily used for regression tasks, it can also be adapted for classification problems. LSBoost improves upon traditional boosting methods by concentrating on reducing the least squares error of the model. The process starts with a simple initial model, often the mean of the target values. During each boosting iteration, residuals are calculated as the difference between the actual values and the model’s current predictions. A new weak learner, typically a decision tree, is trained to predict these residuals, with the goal of minimizing the least squares error. The model is updated by adding the predictions of the weak learner, scaled by a learning rate. After a set number of iterations or when the model’s performance becomes stable, the final model is formed by combining the predictions from all weak learners68.

The initial prediction is computed as:

\[ \widehat{y}\left(0\right) = \frac{1}{N}\sum_{i=1}^{N}{y}_{i} \]

Where \(\:{y}_{i}\) is the actual target value for the \(\:{i}^{th}\) instance and \(\:N\) is the total number of instances.

At iteration \(\:m\), the residuals are calculated as:

\[ {r}_{i}\left(m\right) = {y}_{i} - {\widehat{y}}_{i}\left(m\right) \]

Where \(\:{\widehat{y}}_{i}\left(m\right)\) represents the prediction for the \(\:{i}^{th}\) instance at iteration \(\:m\).

A weak learner is then fit to these residuals, aiming to minimize the least squares error, expressed as:

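\[ \min_{{f}_{m}} \sum_{i=1}^{N}{\left({r}_{i}\left(m\right) - {f}_{m}\left({x}_{i}\right)\right)}^{2} \]

Where \(\:{r}_{i}\left(m\right)\) is the residual of the \(\:{i}^{th}\) instance at iteration \(\:m\) and \(\:{f}_{m}\left({x}_{i}\right)\) is the prediction of the weak learner for the \(\:{i}^{th}\) instance.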

The model is updated by adding the predictions of the weak learner, scaled by a learning rate \(\:\alpha\:\):

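\[ {\widehat{y}}_{i}\left(m+1\right) = {\widehat{y}}_{i}\left(m\right) + \alpha\,{f}_{m}\left({x}_{i}\right) \]

Where \(\:\alpha\:\) is the learning rate (also known as the shrinkage parameter).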

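After \(\:M\) iterations, the final prediction is given by:

\[ \widehat{y}\left(x\right) = \widehat{y}\left(0\right) + \alpha \sum_{m=0}^{M-1}{f}_{m}\left(x\right) \]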
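The boosting loop described in this section (mean initialization, residual computation, least squares fit of a weak learner, and a shrunken update) can be sketched as follows. This is a minimal single-feature illustration that uses one-split regression stumps as weak learners; the function names, the stump learner, and the toy data are assumptions for the example and do not reproduce the MATLAB LSBoost configuration used in this study.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Least squares one-split stump: returns (threshold, left_value, right_value)."""
    values = sorted(set(xs))
    if len(values) < 2:
        raise ValueError("need at least two distinct x values")
    best = None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2.0
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = mean(left), mean(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def stump_predict(stump, x):
    t, lv, rv = stump
    return lv if x <= t else rv


def lsboost_fit(xs, ys, n_rounds=100, alpha=0.1):
    """Return (initial prediction, learning rate, list of fitted stumps)."""
    f0 = mean(ys)                      # initial model: mean of the targets
    preds = [f0] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)   # weak learner fit to the residuals
        stumps.append(stump)
        preds = [p + alpha * stump_predict(stump, x) for p, x in zip(preds, xs)]
    return f0, alpha, stumps


def lsboost_predict(model, x):
    f0, alpha, stumps = model
    return f0 + alpha * sum(stump_predict(s, x) for s in stumps)


# Toy data (not from the study): a smooth nonlinear target.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [x * x for x in xs]

model = lsboost_fit(xs, ys)
mse_baseline = mean((y - mean(ys)) ** 2 for y in ys)
mse_boosted = mean((y - lsboost_predict(model, x)) ** 2 for x, y in zip(xs, ys))
print(mse_baseline, mse_boosted)
```

A smaller learning rate generally needs more rounds to reach the same training error, which is the usual trade-off when tuning the shrinkage parameter.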

Random forest

Random Forest (RF) is a commonly used ML method that performs exceptionally well in classification and regression tasks, particularly with large and complex datasets. Its effectiveness in reducing variance and preventing overfitting contributes to its widespread use69. RF is an ensemble approach that constructs multiple decision trees, with each tree trained independently on a random subset of the data. The final prediction is made by combining the outputs of these individual trees (Fig. 6).

The RF algorithm begins by creating random samples from the training data using bootstrap sampling (sampling with replacement). Each decision tree is trained on a different random subset of the data. To ensure diversity among the trees, a random set of features is selected at each node of the tree. During prediction, the outputs from all the trees are aggregated. For classification tasks, the final prediction is based on majority voting, while for regression tasks, the predictions are averaged70.

In classification, the final prediction for a new sample \(\:x\) is computed using the following formula, where \(\:{T}_{1},\:\:{T}_{2},\:\dots\:,\:\:{T}_{n}\) represent the decision trees and \(\:{C}_{1},\:\:{C}_{2},\:\dots\:,\:\:{C}_{n}\) are the possible classes:

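\[ \widehat{y}\left(x\right) = \underset{{C}_{i}}{\arg\max} \sum_{j=1}^{n} I\left({T}_{j}\left(x\right) = {C}_{i}\right) \]

Here, \(\:I\) is an indicator function that equals 1 if \(\:{T}_{j}\left(x\right)\) equals \(\:{C}_{i}\) and 0 otherwise.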

For regression tasks, the final prediction for a new sample \(\:x\) is calculated as the average of the predictions from all the decision trees:

\[ \widehat{y}\left(x\right) = \frac{1}{n}\sum_{j=1}^{n}{T}_{j}\left(x\right) \]

Where \(\:{T}_{j}\left(x\right)\) is the predicted value from decision tree \(\:{T}_{j}\) for the sample \(\:x\).
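The bootstrap sampling and aggregation steps of RF can be illustrated with a short Python sketch. The "trees" below are hypothetical stand-in functions rather than trained decision trees, and the helper names are assumptions for the example; the intent is only to show majority voting for classification and averaging for regression.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement, as done for each tree."""
    return [rng.choice(data) for _ in data]


def rf_classify(trees, x):
    """Majority vote over the class labels predicted by the trees."""
    votes = Counter(tree(x) for tree in trees)
    return votes.most_common(1)[0][0]


def rf_regress(trees, x):
    """Average of the numeric predictions of the trees."""
    return mean([tree(x) for tree in trees])


# Hypothetical stand-ins for trained trees (illustration only).
class_trees = [
    lambda x: "high" if x > 1.0 else "low",
    lambda x: "high" if x > 2.0 else "low",
    lambda x: "high" if x > 3.0 else "low",
]
reg_trees = [lambda x: 1.0 * x, lambda x: 2.0 * x, lambda x: 3.0 * x]

rng = random.Random(0)
data = [10, 20, 30, 40]
sample = bootstrap_sample(data, rng)

label = rf_classify(class_trees, 2.5)   # two of three trees vote "high"
value = rf_regress(reg_trees, 2.0)      # mean of 2.0, 4.0, 6.0
print(sample, label, value)
```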

Results and discussion

Data division into training and testing sets

At the beginning of this study, all collected data were thoroughly analyzed. The dispersion coefficients (KL) between hydrogen and three cushion gases (CH4, CO2, N2), processed as a function of pressure and displacement velocity for reservoir models, were then selected for calculations using ML algorithms in MATLAB R2021b. Subsequently, the data were divided into two separate sets, one for training and the other for testing, in order to evaluate the performance of the algorithms. The proposed algorithms were executed at different training-to-testing data ratios, and the accuracy of each method was calculated and presented graphically.

Random selection of input data based on these ratios can significantly affect the final accuracy of the algorithms. Therefore, each method was evaluated through ten independent executions, and the average R2 values obtained from these executions were reported as the final results. This evaluation approach provides an accurate representation of the performance and accuracy of each algorithm.
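The repeated-split evaluation described above can be sketched as follows. The sketch assumes that the ratio denotes the fraction of samples used for training, uses a simple least squares line as a stand-in for the ML models, and runs on synthetic data; all names and values are illustrative assumptions rather than the study's MATLAB workflow.

```python
import random
from statistics import mean


def r2_score(y_true, y_pred):
    y_bar = mean(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - y_bar) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return slope, y_bar - slope * x_bar


def mean_test_r2(xs, ys, train_fraction, n_runs=10, seed=0):
    """Average test R2 over n_runs independent random train/test splits."""
    rng = random.Random(seed)
    n_train = max(2, round(train_fraction * len(xs)))
    scores = []
    for _ in range(n_runs):
        idx = list(range(len(xs)))
        rng.shuffle(idx)
        train, test = idx[:n_train], idx[n_train:]
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        preds = [slope * xs[i] + intercept for i in test]
        scores.append(r2_score([ys[i] for i in test], preds))
    return mean(scores)


# Synthetic, nearly linear data (not from the study).
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 + (0.1 if i % 2 == 0 else -0.1) for i, x in enumerate(xs)]

score = mean_test_r2(xs, ys, train_fraction=0.7)
print(score)
```

Averaging over several random splits reduces the influence of any single favorable or unfavorable partition on the reported R2.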

Table 2 reports the final R2 values for each training-to-testing ratio within the range of 0.1 to 0.9. These values are calculated independently for each ML method and are presented for the testing data, the training data, and the combined training/testing data. Based on the results in the training/testing rows, optimal models can be selected.

The optimal performance of each ML method is shown graphically in Fig. 7, using both training and testing data. This figure provides a detailed evaluation and comparison of the accuracy of each method. Accordingly, the Train/Test ratio was set between 0.2 and 0.9, while the R-squared values were limited to the range of 0.90 to 1. These settings facilitated a more thorough analysis of the results and assisted in identifying the optimal conditions for each method.

Based on the results presented in Fig. 7, the R2 values indicate that the highest accuracy of the algorithms is achieved under specific conditions of the training-to-test data ratio. Specifically, these ratios are as follows for the different algorithms: 0.2 for Bayesian, 0.2 for LR, 0.9 for LSBoost, 0.6 for ANN, 0.3 for RF, and 0.5 for SVM. These findings highlight the sensitivity of algorithm performance to the training-to-test data ratio and emphasize the importance of fine-tuning this parameter throughout the modeling process.

Subsequently, the R2 values for each ML method will be examined in greater detail, providing a comprehensive analysis of their performance.

Performance of each method in the training and testing phases

The outcomes from the regression analyses and R2 values are displayed in graphical form, where data from both the training (Train) and testing (Test) sets are shown together. These plots effectively illustrate how well the model fits the data, with the final R2 value serving as an accurate indicator of the model’s performance. This approach is especially useful for assessing the model’s accuracy in predicting test data and allows for a direct comparison of its performance across both training and testing datasets.

Figure 8 shows the R2 values obtained for the predicted hydrogen dispersion coefficient. The marginal distribution plots, positioned along the horizontal and vertical axes, display the distribution of data around the main plot and provide information about data density and distribution patterns. They enable the examination of symmetry and skewness and support the analysis of relationships between variables and the assessment of predictive accuracy.

Table 3 provides the regression equations used to predict the output based on a particular input variable. These equations were developed using different ML techniques to model and simulate the mathematical relationships between input and output variables. In this study, the equations are tailored to analyze and predict the behavior of various systems. By applying these methods, highly accurate models have been developed to define the relationship between inputs and outputs, which can be effectively utilized for decision-making and process optimization.

Statistical criteria in measuring the accuracy of machine learning methods

The effectiveness and performance of the methods discussed in this article were assessed and compared using three statistical metrics: the root mean square error (RMSE), the mean absolute deviation (MAD), and the coefficient of determination (R2). The following equations outline the calculation of each measure. Table 4 displays the performance values for each method.

Root Mean Square Error (RMSE) is a statistical measure used to assess the accuracy of predictive models. It quantifies the difference between the predicted and actual values as the square root of the average squared error. The RMSE value ranges from zero to infinity, with values closer to zero indicating a more accurate model.

\[ RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i} - {\widehat{y}}_{i}\right)}^{2}} \]

The Mean Absolute Deviation (MAD) is a measure used to evaluate the accuracy of predictive models by calculating the average of the absolute differences between the actual and predicted values. A smaller MAD value indicates higher model accuracy, with a MAD of zero representing a perfect match between the model’s predictions and the actual data. This metric is particularly useful for comparing different models’ performance and optimizing them for better results.

\[ MAD = \frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i} - {\widehat{y}}_{i}\right| \]

In this formula, \(\:{y}_{i}\) represents the actual value for sample \(\:i\), and \(\:{\widehat{y}}_{i}\) is the predicted value for the same sample. \(\:n\) denotes the total number of samples. Taking absolute values prevents positive and negative errors from cancelling each other and, because the errors are not squared, reduces the impact of large errors compared with RMSE.

R2 is a statistical metric commonly used to assess the performance of prediction models and is considered a crucial indicator for model evaluation. Also referred to as the coefficient of determination, R2 reflects the proportion of variance in the dependent variable that is explained by the model’s predictions:

\[ {R}^{2} = 1 - \frac{\sum_{i=1}^{n}{\left({y}_{i} - {\widehat{y}}_{i}\right)}^{2}}{\sum_{i=1}^{n}{\left({y}_{i} - \bar{y}\right)}^{2}} \]

Here, \(\bar{y}\) is the mean of the actual values.

An R2 value nearing 1 signifies that the model has effectively accounted for most of the variations in the dependent variable, with highly accurate predictions. Conversely, an R2 value close to 0 indicates that the model has not captured much of the variability in the dependent variable, resulting in predictions that significantly deviate from the actual outcomes.
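For reference, the three metrics discussed in this section can be computed with the short Python sketch below. It uses the standard definitions of RMSE, MAD (mean absolute difference between actual and predicted values), and R2; the sample values are made up for illustration and are not results from this study.

```python
import math
from statistics import mean


def rmse(y_true, y_pred):
    """Square root of the mean squared error."""
    return math.sqrt(mean([(y - p) ** 2 for y, p in zip(y_true, y_pred)]))


def mad(y_true, y_pred):
    """Mean of the absolute differences between actual and predicted values."""
    return mean([abs(y - p) for y, p in zip(y_true, y_pred)])


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - y_bar) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up actual and predicted values (illustration only).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]

print(rmse(y_true, y_pred), mad(y_true, y_pred), r2(y_true, y_pred))
```

Because RMSE squares the errors before averaging, it is never smaller than MAD on the same data, and the gap widens when a few errors are much larger than the rest.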

Figure 9 provides a visual representation of the error data from Table 4, enabling an easy comparison of the different methods.

Table 5 displays the results for the most effective method (RF) in predicting the hydrogen dispersion coefficient. The model evaluation criteria reported in the table are explained below:

Coefficient of Determination (R2):

- For test data (Test): A value of 0.9965 indicates very high prediction accuracy.
- For training data (Train): A value of 0.9999 shows that the model also performs very accurately during training.
- For all data (All): A value of 0.9983 reflects the overall balance and efficiency of the model.

Mean Absolute Deviation (MAD):

- For test data (Test): A value of 0.019 indicates a low average difference between predicted and actual values.
- For training data (Train): A value of 0.001 demonstrates higher accuracy during the training phase.

Root Mean Squared Error (RMSE):

- For test data (Test): A value of 0.023 represents relatively low prediction error.
- For training data (Train): A value of 0.001 highlights the model’s very high accuracy on training data.

These results support the selection of RF as the best approach, offering excellent performance in data prediction and analysis.

The field deployment of ML models for predicting hydrogen dispersion coefficients in UHS requires careful consideration of data acquisition, model adaptability, and operational challenges. In real-field applications, implementing the developed ML models necessitates the integration of reservoir monitoring systems, such as pressure and flow rate sensors, to provide continuous and reliable input data.

One of the key challenges in deploying ML models in industrial settings is the inherent heterogeneity of geological formations, which may introduce uncertainties in model predictions. Unlike controlled laboratory environments, field conditions involve complex interactions between cushion gases and reservoir rock, requiring adaptive model calibration.

From an industrial perspective, the developed ML models can be leveraged to streamline operational workflows in UHS facilities. By reducing the reliance on expensive and time-consuming experimental evaluations, these models facilitate rapid scenario analysis, enabling proactive adjustments in storage strategies. Additionally, their application extends to automated control systems, where predictive analytics can optimize gas injection and withdrawal cycles, minimizing energy losses and improving overall storage efficiency.

Based on the results obtained from the R2 index, it was determined that the best model for predicting and evaluating H2 distribution is the RF machine learning model. This model has provided the most accurate predictions, and the results confirm its high reliability. However, for a more precise evaluation and to identify which of the cushion gases, including CH4, CO2, and N2, is the most important and sensitive parameter in hydrogen distribution, it is necessary to analyze these gases using machine learning techniques.

The final results show that the RF algorithm is the most accurate and efficient method compared to other algorithms. To evaluate each gas separately, the use of an optimized algorithm, such as RF, is essential.

Figure 10 indicates that CO2 achieves the highest coefficient of determination (R2) among the cushion gases. This represents the highest accuracy and alignment of CO2 in predicting the distribution of H2 in underground storage environments. Based on this, CO2 can be considered an optimal option for improving prediction processes in hydrogen storage systems. Utilizing CO2 can therefore improve the accuracy and efficiency of hydrogen distribution prediction models.

Limitations and future directions

Despite the promising outcomes of this study in predicting hydrogen dispersion coefficients in UHS systems using ML, certain limitations must be acknowledged. One major constraint is the reliance on experimental data obtained under controlled laboratory conditions, which may not fully capture the complexities of real reservoir environments. Geological heterogeneity, variations in temperature and pressure, and dynamic reservoir interactions could introduce deviations from the predicted dispersion behavior. Additionally, while the selected ML models demonstrate high accuracy, their performance is inherently dependent on the quality and representativeness of the training dataset. Limited availability of field-scale data may affect the model’s ability to generalize its predictions to large-scale industrial applications.

Another limitation pertains to the simplifications made in defining the input parameters. The study primarily considers pressure and displacement velocity as key variables influencing the dispersion coefficient; however, additional factors such as reservoir wettability, gas composition, rock-fluid interactions, capillary effects, and molecular diffusion mechanisms may further impact hydrogen dispersion dynamics. Incorporating these parameters into future models could enhance predictive robustness. Furthermore, the study does not fully explore the temporal variations in gas dispersion, which can be crucial in long-term UHS operations. Future studies should incorporate time-dependent modeling approaches to capture transient effects on gas mixing and storage efficiency.

To address these limitations, future research should focus on expanding the dataset by incorporating field-scale hydrogen storage experiments and real-time monitoring data from operational UHS sites. Developing hybrid modeling frameworks that integrate ML techniques with physics-based numerical simulations could improve model interpretability and applicability in heterogeneous reservoirs. Additionally, optimizing ML algorithms through feature selection and hyperparameter tuning can further enhance predictive accuracy. Future work should also explore the economic and environmental implications of ML-driven hydrogen storage optimization, ensuring that the proposed methodologies align with industry standards and regulatory requirements.

Moreover, practical implementation of these models in industrial settings requires validation through pilot-scale projects and collaboration with energy storage operators. Conducting large-scale sensitivity analyses and feasibility studies will be crucial in demonstrating the effectiveness of ML models in real-world applications. Future research should also focus on developing automated control systems that leverage ML predictions to optimize hydrogen injection and withdrawal cycles, thereby reducing energy losses and improving overall storage efficiency. By addressing these research gaps, ML-based approaches can be effectively translated into scalable solutions for efficient and reliable underground hydrogen storage, ultimately supporting the transition to a sustainable energy economy.

Conclusions

Underground Hydrogen Storage (UHS) is a promising large-scale hydrogen storage solution that supports a sustainable energy transition by reducing reliance on fossil fuels. Utilizing depleted hydrocarbon reservoirs, aquifers, and salt caverns offers practical benefits, but challenges such as hydrogen dispersion in cushion gases (N2, CH4, and CO2) remain critical. This mixing can lead to contamination, reduced hydrogen purity, and increased processing costs, making it essential to develop effective predictive models. This study addresses these challenges by leveraging machine learning (ML) techniques to predict the hydrogen dispersion coefficient (KL) under reservoir conditions, enhancing the efficiency and feasibility of UHS systems.

This study introduces a novel approach for accurately predicting the hydrogen dispersion coefficient in the presence of cushion gases by integrating machine learning techniques with experimental data. Compared to previous studies, the proposed model has demonstrated superior accuracy in predicting key parameters influencing hydrogen dispersion while addressing the limitations of traditional numerical and experimental models. The findings of this research directly contribute to optimizing hydrogen storage reservoir design, reducing purification costs, and improving overall storage efficiency. Given these innovations, this study represents a significant step toward the development of advanced predictive methods for large-scale hydrogen storage systems.

Among the ML models evaluated, Random Forest (RF) demonstrated the highest predictive accuracy, achieving an R2 of 0.9965 for test data and 0.9999 for training data, with minimal prediction errors (RMSE = 0.023 and 0.001, respectively). The results highlight the effectiveness of data-driven approaches in modeling complex gas dispersion behavior, offering significant advantages over conventional experimental and numerical methods.

The key findings of this study include:

- Hydrogen dispersion behavior varies significantly depending on the type of cushion gas. Among them, CO2 exhibits the lowest dispersion coefficient (KL) due to its higher density and lower diffusivity compared to other gases.
- Pressure and displacement velocity (Um) are the most influential factors affecting hydrogen dispersion. These parameters directly impact gas mixing dynamics in UHS systems, determining the extent of hydrogen spread and distribution within the storage environment.
- ML enables fast and cost-effective prediction of the dispersion coefficient (KL). This approach reduces reliance on expensive and time-consuming laboratory experiments, allowing for more accurate modeling of various storage conditions.

These findings provide a solid foundation for optimizing UHS by reducing hydrogen contamination, improving storage efficiency, and selecting the most suitable cushion gases. Future research should focus on integrating field-scale data to enhance model accuracy, incorporating key reservoir parameters such as wettability and capillary effects, and refining ML algorithms for real-time prediction and adaptive control.

This study underscores the transformative potential of ML in UHS, demonstrating its ability to enhance predictive accuracy, streamline operations, and reduce costs. The integration of advanced methods like RF with traditional reservoir studies offers a robust framework for addressing hydrogen storage challenges. These advancements support the economic viability of UHS and contribute to a cleaner, more sustainable energy future, aligning with global environmental and energy goals.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- β: Coefficients
- ε: Error term
- θ: Model parameters
- AD: Advection-Dispersion
- B: Bias term
- \(K_L\): Dispersion coefficient
- P: Pressure
- \(T_j\): Decision trees
- Um: Displacement velocity
- ANN: Artificial Neural Network
- CFD: Computational Fluid Dynamics
- EGR: Enhanced Gas Recovery
- FEP: Fluorinated Ethylene Propylene
- FID: Free Induction Decay
- GEP: Gene Expression Programming
- GO: Graphene Oxide
- HTF: Heat Transfer Fluid
- IAST: Ideal Adsorption Solution Theory
- LSBoost: Least Squares Boosting
- ML: Machine Learning
- MAD: Mean Absolute Deviation
- NMR: Nuclear Magnetic Resonance
- OLS: Ordinary Least Squares
- RF: Random Forest
- RMSE: Root Mean Square Error
- SVM: Support Vector Machines
- TMS: Tetramethylsilane
- UHS: Underground Hydrogen Storage

References

Jahanbakhsh, A., Potapov-Crighton, A. L., Mosallanezhad, A., Kaloorazi, N. T. & Maroto-Valer, M. M. Underground hydrogen storage: A UK perspective. Renew. Sustain. Energy Rev. 189, 114001. https://doi.org/10.1016/j.rser.2023.114001 (2024).

Kobeissi, S., Ling, N. N., Yang, K., May, E. F. & Johns, M. L. Dispersion of hydrogen in different potential cushion gases. Int. J. Hydrog. Energy. 60, 940–948. https://doi.org/10.1016/j.ijhydene.2024.02.151 (2024).

Maleki, M., Kazemzadeh, Y., Dehghan Monfared, A., Hasan-Zadeh, A. & Abbasi, S. Bio‐enhanced oil recovery (BEOR) methods: All‐important review of the occasions and challenges. Can. J. Chem. Eng. https://doi.org/10.1002/cjce.25216 (2024).

Banos, R. et al. Optimization methods applied to renewable and sustainable energy: A review. Renew. Sustain. Energy Rev. 15 (4), 1753–1766. https://doi.org/10.1016/j.rser.2010.12.008 (2011).

Amin, M. et al. Hydrogen production through renewable and non-renewable energy processes and their impact on climate change. Int. J. Hydrog. Energy. 47 (77), 33112–33134. https://doi.org/10.1016/j.ijhydene.2022.07.172 (2022).

Maleki, M., Dehghani, M. R., Akbari, A., Kazemzadeh, Y. & Ranjbar, A. Investigation of wettability and IFT alteration during hydrogen storage using machine learning. Heliyon 10 (19). https://doi.org/10.1016/j.heliyon.2024.e38679 (2024).

Muhammed, N. S. et al. Hydrogen storage in depleted gas reservoirs: A comprehensive review. Fuel 337, 127032. https://doi.org/10.1016/j.fuel.2022.127032 (2023).

Amid, A., Mignard, D. & Wilkinson, M. Seasonal storage of hydrogen in a depleted natural gas reservoir. Int. J. Hydrog. Energy. 41 (12), 5549–5558. https://doi.org/10.1016/j.ijhydene.2016.02.036 (2016).

Portarapillo, M. & Di Benedetto, A. Risk assessment of the large-scale hydrogen storage in salt caverns. Energies 14 (10), 2856. https://doi.org/10.3390/en14102856 (2021).

Heinemann, N., Scafidi, J., Pickup, G. & Wilkinson, M. Hydrogen storage in saline aquifers-the role of cushion gas, in AGU Fall Meeting 2021 (AGU). https://doi.org/10.1016/j.ijhydene.2021.09.174 (2021).

Tarkowski, R. Underground hydrogen storage: characteristics and prospects. Renew. Sustain. Energy Rev. 105, 86–94. https://doi.org/10.1016/j.rser.2019.01.051 (2019).

Kanaani, M., Sedaee, B. & Asadian-Pakfar, M. Role of cushion gas on underground hydrogen storage in depleted oil reservoirs. J. Energy Storage. 45, 103783. https://doi.org/10.1016/j.est.2021.103783 (2022).

Du, S., Bai, M., Shi, Y., Zha, Y. & Yan, D. A Review of the Utilization of CO2 as a Cushion Gas in Underground Natural Gas Storage. Processes 12 (7), 1489. https://doi.org/10.3390/pr12071489 (2024).

Zamehrian, M. & Sedaee, B. Underground hydrogen storage in a partially depleted gas condensate reservoir: influence of cushion gas. J. Petrol. Sci. Eng. 212, 110304. https://doi.org/10.1016/j.petrol.2022.110304 (2022).

Muhammed, N. S., Haq, B. & Al Shehri, D. Role of methane as a cushion gas for hydrogen storage in depleted gas reservoirs. Int. J. Hydrog. Energy. 48 (76), 29663–29681. https://doi.org/10.1016/j.ijhydene.2023.04.173 (2023).

Bo, Z., Hörning, S., Underschultz, J. R., Garnett, A. & Hurter, S. Effects of geological heterogeneity on gas mixing during underground hydrogen storage (UHS) in braided-fluvial reservoirs. Fuel 357, 129949. https://doi.org/10.1016/j.fuel.2023.129949 (2024).

Feldmann, F., Hagemann, B., Ganzer, L. & Panfilov, M. Numerical simulation of hydrodynamic and gas mixing processes in underground hydrogen storages. Environ. Earth Sci. 75, 1–15. https://doi.org/10.1007/s12665-016-5948-z (2016).

Zivar, D., Kumar, S. & Foroozesh, J. Underground hydrogen storage: A comprehensive review. Int. J. Hydrog. Energy. 46 (45), 23436–23462. https://doi.org/10.1016/j.ijhydene.2020.08.138 (2021).

Ghasemi, K., Akbari, A., Jahani, S. & Kazemzadeh, Y. A critical review of life cycle assessment and environmental impact of the well drilling process. Can. J. Chem. Eng., https://doi.org/10.1002/cjce.25539

Meng, Y., Jiang, J., Wu, J. & Wang, D. A Physics-Enhanced Neural Network for estimating longitudinal dispersion coefficient and average solute transport velocity in porous media. Geophysical Research Letters 51 (17), e2024GL110683. https://doi.org/10.1029/2024GL110683 (2024).

Singh, H. Jacobi collocation method for the fractional advection-dispersion equation arising in porous media. Numerical Methods for Partial Differential Equations 38 (3), 636–653. https://doi.org/10.1002/num.22674 (2022).

Hu, X. et al. Numerical study on leakage dispersion pattern and hazardous area of Ammonia storage tanks. Energy Technol. 12 (3), 2301067. https://doi.org/10.1002/ente.202301067 (2024).

Asif, M. et al. Influence of competitive adsorption, diffusion, and dispersion of CH4 and CO2 gases during the CO2-ECBM process. Fuel 358, 130065. https://doi.org/10.1016/j.fuel.2023.130065 (2024).

Shi, Z., Jessen, K. & Tsotsis, T. T. Impacts of the subsurface storage of natural gas and hydrogen mixtures. Int. J. Hydrog. Energy. 45 (15), 8757–8773. https://doi.org/10.1016/j.ijhydene.2020.01.044 (2020).

Yang, K. et al. Measurement of hydrogen dispersion in rock cores using benchtop NMR. Int. J. Hydrog. Energy. 48 (45), 17251–17260. https://doi.org/10.1016/j.ijhydene.2023.01.197 (2023).

Edo, C., González-Pleiter, M., Leganés, F., Fernández-Piñas, F. & Rosal, R. Fate of microplastics in wastewater treatment plants and their environmental dispersion with effluent and sludge. Environ. Pollut. 259, 113837. https://doi.org/10.1016/j.envpol.2019.113837 (2020).

Wang, G. et al. Modeling venting behavior of lithium-ion batteries during thermal runaway propagation by coupling CFD and thermal resistance network. Appl. Energy. 334, 120660. https://doi.org/10.1016/j.apenergy.2023.120660 (2023).

Sadeghi, S. & Sedaee, B. Mechanistic simulation of cushion gas and working gas mixing during underground natural gas storage. J. Energy Storage. 46, 103885. https://doi.org/10.1016/j.est.2021.103885 (2022).

Shoushtari, S., Namdar, H. & Jafari, A. Utilization of CO2 and N2 as cushion gas in underground gas storage process: A review. J. Energy Storage. 67, 107596. https://doi.org/10.1016/j.est.2023.107596 (2023).

Ebrahimi, A., Kazemzadeh, Y. & Akbari, A. Impact of the Marangoni phenomenon on the different Enhanced Oil Recovery methods. Heliyon 10 (21). https://doi.org/10.1016/j.heliyon.2024.e39919 (2024).

Pantusheva, M., Mitkov, R., Hristov, P. O. & Petrova-Antonova, D. Air pollution dispersion modelling in urban environment using CFD: a systematic review. Atmosphere 13 (10), 1640. https://doi.org/10.3390/atmos13101640 (2022).

Hamza, A. et al. CO2 enhanced gas recovery and sequestration in depleted gas reservoirs: A review. J. Petrol. Sci. Eng. 196, 107685. https://doi.org/10.1016/j.petrol.2020.107685 (2021).

Agwu, O. E., Alatefi, S., Alkouh, A., Azim, R. A. & Wee, S. C. Carbon capture using ionic liquids: an explicit data driven model for carbon (IV) oxide solubility Estimation. J. Clean. Prod. 472, 143508. https://doi.org/10.1016/j.jclepro.2024.143508 (2024).

Alatefi, S., Agwu, O. E. & Alkouh, A. Explicit and explainable artificial intelligent model for prediction of CO2 molecular diffusion coefficient in heavy crude oils and bitumen. Results Eng. 24, 103328. https://doi.org/10.1016/j.rineng.2024.103328 (2024).

Alkouh, A., Elraies, K., Agwu, O. E., Alatefi, S. & Azim, R. A. Explicit Data-Based model for predicting Oil-Based mud viscosity at downhole conditions. ACS Omega. 9 (6), 6684–6695. https://doi.org/10.1021/acsomega.3c07815 (2024).

Alatefi, S., Agwu, O. E., Azim, R. A., Alkouh, A. & Dzulkarnain, I. Development of multiple explicit data-driven models for accurate prediction of CO2 minimum miscibility pressure. Chem. Eng. Res. Des. 205, 672–694. https://doi.org/10.1016/j.cherd.2024.04.033 (2024).

Hahn, E. L. Nuclear induction due to free Larmor precession. Physical Review 77 (2), 297. https://doi.org/10.1103/PhysRev.77.297.2 (1950).

Harris, R. K., Becker, E. D., Cabral de Menezes, S. M., Goodfellow, R. & Granger, P. NMR nomenclature. Nuclear spin properties and conventions for chemical shifts (IUPAC Recommendations 2001). Pure and Applied Chemistry 73 (11), 1795–1818. https://doi.org/10.1351/pac200173111795 (2001).

Nasharuddin, R. et al. Cemented paste backfill compressive strength enhancement via systematic water chemistry optimisation. Constr. Build. Mater. 347, 128499. https://doi.org/10.1016/j.conbuildmat.2022.128499 (2022).

Shilliday, E. R. et al. Quantitative measurement of mono-ethylene glycol (MEG) content using low-field nuclear magnetic resonance (NMR). J. Nat. Gas Sci. Eng. 101, 104520. https://doi.org/10.1016/j.jngse.2022.104520 (2022).

Ling, N. et al. Quantifying the effect of salinity on oilfield water-in-oil emulsion stability. Energy Fuels. 32 (9), 10042–10049. https://doi.org/10.1021/acs.energyfuels.8b02143 (2018).

Perkins, T. K. & Johnston, O. A review of diffusion and dispersion in porous media. Soc. Petrol. Eng. J. 3 (01), 70–84. https://doi.org/10.2118/480-PA (1963).

Delgado, J. A critical review of dispersion in packed beds. Heat Mass Transf. 42, 279–310. https://doi.org/10.1007/s00231-005-0019-0 (2006).

Gadylshin, K., Lisitsa, V., Gadylshina, K., Vishnevsky, D. & Novikov, M. Machine learning-based numerical dispersion mitigation in seismic modelling, in Computational Science and Its Applications–ICCSA 2021: 21st International Conference, Cagliari, Italy, September 13–16, 2021, Proceedings, Part I (Springer), 34–47. https://doi.org/10.1007/978-3-030-86653-2_3 (2021).

Akbari, A., Ranjbar, A., Kazemzadeh, Y., Mohammadinia, F. & Borhani, A. Estimation of minimum miscible pressure in carbon dioxide gas injection using machine learning methods. J. Petroleum Explor. Prod. Technol. 15 (2), 25. https://doi.org/10.1007/s13202-024-01915-3 (2025).

Helland, J. O., Friis, H. A., Assadi, M. & Nagy, S. Machine learning for underground gas storage with cushion CO2 using data from reservoir simulation. IOP Conf. Ser.: Mater. Sci. Eng. 1294 (1), 012058. https://doi.org/10.1088/1757-899X/1294/1/012058 (2023).

Helland, J. O., Friis, H. A., Assadi, M., Klimkowski, Ł. & Nagy, S. Prediction of optimal production time during underground CH4 storage with cushion CO2 using reservoir simulations and artificial neural networks. Energy Fuels. 37 (23), 19022–19038. https://doi.org/10.1021/acs.energyfuels.3c03382 (2023).

Karami, A., Akbari, A., Kazemzadeh, Y. & Nikravesh, H. Enhancing hydraulic fracturing efficiency through machine learning. J. Petroleum Explor. Prod. Technol. 15 (2), 1–16. https://doi.org/10.1007/s13202-024-01914-4 (2025).

Dąbrowski, K. Laboratory determination of hydrogen/methane dispersion in rock cores for underground hydrogen storage. Energy Rep. 11, 4290–4296. https://doi.org/10.1016/j.egyr.2024.04.011 (2024).

Li, J. et al. Real-time hydrogen release and dispersion modelling of hydrogen refuelling station by using deep learning probability approach. Int. J. Hydrog. Energy. 51, 794–806. https://doi.org/10.1016/j.ijhydene.2023.04.126 (2024).

Kanti, P. K., Shrivastav, A. P., Sharma, P. & Maiya, M. Thermal performance enhancement of metal hydride reactor for hydrogen storage with graphene oxide nanofluid: model prediction with machine learning. Int. J. Hydrog. Energy. 52, 470–484. https://doi.org/10.1016/j.ijhydene.2023.03.361 (2024).

Maniglio, M., Pizzolato, A., Panfili, P. & Cominelli, A. A simple and practical approach to estimate dispersive mixing in underground hydrogen storage systems. In SPE Annual Technical Conference and Exhibition, D011S020R001 (SPE, 2022). https://doi.org/10.2118/210251-MS

Blaylock, M. & Klebanoff, L. Hydrogen gas dispersion studies for hydrogen fuel cell vessels I: vent mast releases. Int. J. Hydrog. Energy. 47 (50), 21506–21516. https://doi.org/10.1016/j.ijhydene.2022.04.262 (2022).

Liu, S., Yuan, L., Zhao, C., Zhang, Y. & Song, Y. A review of research on the dispersion process and CO2 enhanced natural gas recovery in depleted gas reservoir. J. Petrol. Sci. Eng. 208, 109682. https://doi.org/10.1016/j.petrol.2021.109682 (2022).

Rios Insua, D., Naveiro, R., Gallego, V. & Poulos, J. Adversarial machine learning: Bayesian perspectives. J. Am. Stat. Assoc. 118 (543), 2195–2206. https://doi.org/10.1080/01621459.2023.2183129 (2023).

Gao, H. et al. Revolutionizing membrane design using machine learning-Bayesian optimization. Environ. Sci. Technol. 56 (4), 2572–2581. https://doi.org/10.1021/acs.est.1c04373 (2021).

Qiu, J., Jammalamadaka, S. R. & Ning, N. Multivariate time series analysis from a Bayesian machine learning perspective. Ann. Math. Artif. Intell. 88 (10), 1061–1082. https://doi.org/10.1007/s10472-020-09710-6 (2020).

Maulud, D. & Abdulazeez, A. M. A review on linear regression comprehensive in machine learning. J. Appl. Sci. Technol. Trends. 1 (2), 140–147 (2020). https://jastt.org/index.php/jasttpath/article/view/57

Jumin, E. et al. Machine learning versus linear regression modelling approach for accurate ozone concentrations prediction. Eng. Appl. Comput. Fluid Mech. 14 (1), 713–725. https://doi.org/10.1080/19942060.2020.1758792 (2020).

Pentoś, K., Mbah, J. T., Pieczarka, K., Niedbała, G. & Wojciechowski, T. Evaluation of multiple linear regression and machine learning approaches to predict soil compaction and shear stress based on electrical parameters. Appl. Sci. 12 (17), 8791. https://doi.org/10.3390/app12178791 (2022).

Otchere, D. A., Ganat, T. O. A., Gholami, R. & Ridha, S. Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: comparative analysis of ANN and SVM models. J. Petrol. Sci. Eng. 200, 108182. https://doi.org/10.1016/j.petrol.2020.108182 (2021).

Ahmad, M., Al Mehedi, M. A., Yazdan, M. M. S. & Kumar, R. Development of machine learning flood model using artificial neural network (ANN) at Var River. Liquids 2 (3), 147–160. https://doi.org/10.3390/liquids2030010 (2022).

Choi, R. Y., Coyner, A. S., Kalpathy-Cramer, J., Chiang, M. F. & Campbell, J. P. Introduction to machine learning, neural networks, and deep learning. Translational Vis. Sci. Technol. 9 (2), 14–14. https://doi.org/10.1167/tvst.9.2.14 (2020).

Abdullah, D. M. & Abdulazeez, A. M. Machine learning applications based on SVM classification a review. Qubahan Acad. J. 1 (2), 81–90 (2021). https://journal.qubahan.com/index.php/qaj/article/view/50

Mahesh, B. Machine learning algorithms - a review. Int. J. Sci. Res. (IJSR) 9 (1), 381–386 (2020). https://www.researchgate.net/profile/Batta-Mahesh/publication/344717762_Machine_Learning_Algorithms_-A_Review/links/5f8b2365299bf1b53e2d243a/Machine-Learning-Algorithms-A-Review.pdf?eid=5082902844932096t

Pisner, D. A. & Schnyer, D. M. Support vector machine. In Machine Learning, 101–121 (Elsevier, 2020).

Zhang, Y. & Xu, X. Solid particle erosion rate predictions through LSBoost. Powder Technol. 388, 517–525. https://doi.org/10.1016/j.powtec.2021.04.072 (2021).

Zhang, Y. & Xu, X. Modulus of elasticity predictions through LSBoost for concrete of normal and high strength. Mater. Chem. Phys. 283, 126007. https://doi.org/10.1016/j.matchemphys.2022.126007 (2022).

Schonlau, M. & Zou, R. Y. The random forest algorithm for statistical learning. Stata J. 20 (1), 3–29. https://doi.org/10.1177/1536867X20909688 (2020).

Liu, Y., Wang, Y. & Zhang, J. New machine learning algorithm: random forest. In Information Computing and Applications: Third International Conference, ICICA 2012, Chengde, China, September 14–16, 2012, Proceedings, 246–252 (Springer, 2012). https://doi.org/10.1007/978-3-642-34062-8_32

Contributions

A.A. and M.M. wrote the main manuscript text and prepared the figures. Y.K. and A.R. supervised the work, edited the manuscript, and carried out the article analysis. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Akbari, A., Maleki, M., Kazemzadeh, Y. et al. Calculation of hydrogen dispersion in cushion gases using machine learning. Sci Rep 15, 13718 (2025). https://doi.org/10.1038/s41598-025-98613-9

DOI: https://doi.org/10.1038/s41598-025-98613-9
