Abstract

To address the class imbalance problem in aero-engine fault prediction, we propose a novel framework that integrates adaptive hybrid sampling with a bidirectional LSTM (BiLSTM). First, an adaptive sampling strategy based on k-means clustering dynamically balances the dataset by oversampling minority-class boundaries and undersampling redundant majority clusters. Second, a BiLSTM-based fault prediction model is built to capture bidirectional temporal dependencies. Experiments on real-world sensor data demonstrate that this approach effectively improves the identification of fault samples in imbalanced datasets.


Introduction

Aircraft engines, as critical power systems, are prone to mechanical faults that may lead to catastrophic failures. Early fault prediction remains challenging due to the extreme class imbalance and long-range dependencies in high-frequency sensor data1. Studying aircraft engine fault prediction is therefore essential to ensure the normal operation of the aircraft. Because faults occur rarely during engine operation, there is a serious imbalance between normal samples and fault samples. A prediction model that aims at the highest overall classification accuracy is easily dominated by the majority class: it favors the majority class and recognizes the minority class poorly. It is therefore necessary to balance the samples before model training, which helps the prediction model identify the minority class and improves its overall classification performance.

The aircraft engine state-parameter acquisition system produces a large amount of data during monitoring, which burdens the acquisition hardware, data storage, and signal-processing computation. Compressed sensing (CS)2 theory, developed in recent years, acquires the original signal in compressed form by projecting it onto a lower-dimensional space. It replaces traditional signal sampling with information sampling and captures most of the information in the original signal from a small number of measurements. It is therefore worthwhile to compress the signal before analysis, reducing redundant data and speeding up signal processing.

At present, commonly used aircraft engine fault prediction methods fall into three groups: linear modeling methods, knowledge-based methods, and data-driven methods. Linear modeling methods build a fault model by introducing fault factors into the physical model of the engine. Among knowledge-based (rule learning) methods, the expert system is the most classic. Data-driven methods mainly include neural networks (NN)3, support vector machines (SVM)4, and decision trees (DT)5.

Because aircraft engine systems are highly complex, an accurate degradation model is difficult to build, which makes it hard for traditional physical model-based methods to achieve high-precision fault prediction. Data-driven methods do not need extensive prior knowledge and can diagnose faults directly from the collected data, which gives them an advantage in modeling the nonlinear relationship between system changes and monitoring data. New-generation sensors, massive data storage, and transmission technology make it possible to acquire and preserve aircraft engine operation data. By collecting and analyzing these sensor data, a data-driven fault prediction model can be established for effective fault prediction of aircraft engines.

Among data-driven methods, the neural network is an effective model for prediction problems in complex systems. It can directly model nonlinear, complex, multi-dimensional systems and mine the mapping between the data and the prediction target. The BP neural network is currently widely used in related research6. Although studies show that BP neural networks predict faults better than traditional methods, they cannot exploit time series information. Aircraft engine data are typical time series data, which limits the fault prediction performance of BP neural networks. To solve this problem, the recurrent neural network (RNN)7, which incorporates time series information into the model, was introduced and outperforms the BP neural network. However, although an RNN is designed to process time series, in practice it retains mainly short-term information, and distant inputs have little influence on its output. Moreover, long sequences can cause vanishing and exploding gradients during training. These deficiencies prevent the RNN from handling long-term time series problems. The long short-term memory network (LSTM)8, a variant of the RNN, alleviates the vanishing-gradient problem of the simple recurrent network, which makes it a good choice for long-term time series problems.

In aircraft engine fault prediction, all adjacent monitoring data can be used to assess the current health state. In other words, the fault prediction result depends on both preceding and subsequent inputs. Bidirectional LSTM processes time series data in both the forward and reverse directions9 and has been successfully applied to sequence labeling10, speech recognition11, natural language processing12, and other fields. Although bidirectional LSTM has shown strong time series prediction ability, its application to fault prediction is still very limited. This paper therefore applies bidirectional LSTM to fault prediction.

Existing research on the ‘normal-fault data imbalance’ issue in aircraft engine fault prediction has several limitations. Traditional methods, such as SVM and BP neural networks, often struggle with imbalanced datasets, where the minority class (fault samples) is overwhelmed by the majority class (normal samples), leading to poor identification of fault samples. More advanced data-driven methods, such as RNN and LSTM, can handle time series data but still face challenges with long-term dependencies and gradient issues. Additionally, these methods often require a large amount of balanced training data, which is not always available in practice. Bidirectional LSTM, while promising for sequence prediction, has not been widely applied to fault prediction due to its complexity and the need for fine-tuning.

Several approaches have been proposed to tackle this issue, such as support vector machines with class imbalance learning, imbalanced kernel extreme learning machines, and dynamic radius support vector data description methods13. While these methods improve on traditional fault prediction techniques, they still have limitations. For instance, they may not fully capture the temporal dependencies inherent in aircraft engine sensor data, which are crucial for accurate fault prediction. Moreover, existing methods often rely on either undersampling or oversampling alone to balance the dataset. Random undersampling can discard valuable information from the majority class, while oversampling may introduce redundant data and increase the risk of overfitting14. Clustering-based sampling methods, while promising, may not always mitigate the imbalance effectively, especially when the clusters are not well separated. There is therefore a need for a method that handles imbalanced datasets, exploits time series information, and provides accurate fault prediction for aircraft engines.

In this paper, a fault prediction method combining adaptive hybrid sampling and bidirectional long short-term memory (BiLSTM) is proposed. First, compressive sensing is applied to the collected fault data to reduce the signal sampling rate. Second, an adaptive hybrid sampling method based on k-means clustering is designed to divide the samples into several subsets and mitigate the imbalance between classes. Then, a BiLSTM-based fault prediction model is built. Finally, the model is applied to aircraft engine fault prediction. The results show that the proposed model performs well on this fault prediction problem.

The rest of the paper is organized as follows. The Literature Review section briefly reviews previous work related to this paper. The Data Processing section describes the data normalization and compressive sensing steps. The Methodology section elaborates the methodology and the proposed model. The Case Study section applies the proposed method to fault prediction for an aircraft engine. The Conclusions section concludes the paper.

Literature review

We first review research on aircraft engine fault prediction. Kobayashi and Simon15 applied a bank of Kalman filters to sensor and actuator fault detection and isolation in aircraft gas turbine engines. Yan16 designed a real-world aircraft engine fault diagnostic system based on random forest. Zhang et al.17 developed a fault detection and isolation (FDI) method for aircraft engines using nonlinear adaptive estimation techniques. Tamilselvan and Wang18 used deep belief learning-based health state classification to diagnose aircraft engine faults. Babu et al.19 proposed a deep convolutional neural network (CNN) for regression-based remaining useful life prediction of aircraft engines. Wang et al.20 conducted fault prediction of civil aircraft engines based on adaptive estimation of instantaneous angular speed (IAS). Fault prediction of aircraft engines with imbalanced samples has also been studied extensively. Xi et al.21 applied least squares support vector machine (LSSVM) class imbalance learning to aircraft engine fault prediction. Zhao et al.22 proposed an imbalanced kernel extreme learning machine (KELM) method for aircraft engine fault prediction. Zhao et al.23 proposed a dynamic radius support vector data description (DR-SVDD) method for aircraft engine fault prediction. The references above on aircraft engine fault prediction are summarized in Table 1.

LSTM has become a research hotspot in equipment fault prediction because its structure is well suited to time series data. Yuan et al.8 used an LSTM neural network for fault prediction and remaining useful life estimation of aero engines. Zheng et al.24 proposed a fault prediction method for unit equipment based on a convolutional neural network-LSTM network. Binu and Kariyappa25 predicted faults in analog circuits with a Rider-Deep-LSTM network. Liu et al.26 combined an LSTM network with statistical process analysis to predict faults of aero-engine bearings with multi-stage performance degradation. Xiang et al.27 developed a multicellular LSTM-based deep learning model for aero-engine remaining useful life prediction.

The bidirectional recurrent architecture on which bidirectional LSTM is built was first proposed by Schuster and Paliwal in 1997. Compared with LSTM, bidirectional LSTM processes sequence data in both the forward and reverse directions. Song et al.28 predicted the remaining useful life of turbofan engines with a hybrid model based on an autoencoder and bidirectional LSTM. Huang et al.29 proposed a prognostic method based on bidirectional long short-term memory networks for fault prognostics of aircraft turbofan engines. Remadna et al.30 estimated aircraft engine RUL by combining CNN and bidirectional LSTM networks. Zhang31 used a bidirectional LSTM neural network for aeroengine fault prediction.

This paper focuses on fault prediction for aircraft engines with imbalanced samples. First, an adaptive hybrid sampling method based on k-means clustering is proposed. Then, BiLSTM models are trained on multiple training subsets. Finally, the fault prediction model is obtained by integrating these BiLSTM models.

Data processing


To eliminate the influence of different dimensions (units and scales) on the numerical values, the data are further normalized as follows:

\[a'_{ij}=\frac{a_{ij}-a_{i\min}}{a_{i\max}-a_{i\min}} \tag{1}\]

where \(a_{ij}\) is the initial sample value to be normalized, \(a'_{ij}\) is the normalized value, and \(a_{i\min}\) and \(a_{i\max}\) are the minimum and maximum of the corresponding column.
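The column-wise min-max normalization described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the data are arranged as a (samples × features) array so that each column is scaled by its own minimum and maximum; the guard for constant columns is an added assumption, since the formula divides by zero when the maximum equals the minimum.

```python
import numpy as np


def min_max_normalize(A):
    """Scale each column of a (samples x features) array to [0, 1]."""
    A = np.asarray(A, dtype=float)
    a_min = A.min(axis=0)  # column minima
    a_max = A.max(axis=0)  # column maxima
    # Constant columns would give 0/0; map them to 0 instead (assumption).
    span = np.where(a_max > a_min, a_max - a_min, 1.0)
    return (A - a_min) / span


A = np.array([[1.0, 10.0], [3.0, 20.0], [5.0, 30.0]])
print(min_max_normalize(A))
```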

Compressive sensing

Compressed sensing uses linear random measurements to project the signal onto a low-dimensional space, obtaining a small number of observations that contain most of the information in the original signal. A nonlinear optimization algorithm is then used to reconstruct a signal close to the original.

For a signal vector \(\varvec{x}\in R^{N}\), assume that there is a set of basis vectors \(\{\psi_i\}_{i=1}^{N}\) in the \(N\)-dimensional discrete signal space \(R^{N}\). Then every signal can be expressed linearly as

\[\varvec{x}=\sum_{i=1}^{N}s_i\psi_i \tag{2}\]

Equation (2) can be written in vector form as

\[\varvec{x}=\varvec{\psi}\varvec{s} \tag{3}\]

where \(\varvec{s}=[s_1,s_2,\cdots,s_N]^{T}\) is the coefficient vector of \(\varvec{x}\) in the \(\varvec{\psi}\) domain, \(s_i=\langle\varvec{x},\psi_i\rangle=\psi_i^{T}\varvec{x}\), and \(\varvec{\psi}\in R^{N\times N}\). If \(\varvec{s}\) has only \(K\) nonzero elements with \(K\ll N\), \(\varvec{s}\) is said to be \(K\)-sparse; that is, the signal \(\varvec{x}\) is sparse under the orthogonal basis \(\varvec{\psi}\).

Let \(\varvec{\Phi}\) be an \(M\times N\) \((M<N)\) measurement matrix that is uncorrelated (incoherent) with the orthogonal basis \(\varvec{\psi}\). The compressed sensing measurement is

\[\varvec{y}=\varvec{\Phi}\varvec{x} \tag{4}\]

where \(\varvec{y}\) is the \(M\times 1\) compressed measurement vector. \(\varvec{y}\) contains the main information of the original signal \(\varvec{x}\), yet has far fewer elements than \(\varvec{x}\).

The original signal \(\varvec{x}\) could be reconstructed from the measurements \(\varvec{y}\) by solving this linear system. However, because \(M<N\), there are more unknowns than equations, so the problem is underdetermined. As noted above, \(\varvec{x}\) has a sparse representation, so the compression process can be written as

\[\varvec{y}=\varvec{\Phi}\varvec{\psi}\varvec{s}=\varvec{\Theta}\varvec{s} \tag{5}\]

where \(\varvec{\Theta}=\varvec{\Phi}\varvec{\psi}\) is an \(M\times N\) matrix called the information operator. Recovering \(\varvec{s}\) reconstructs the original signal indirectly through \(\varvec{x}=\varvec{\psi}\varvec{s}\). The problem is still underdetermined, but the sparsity of \(\varvec{s}\) greatly reduces the number of effective unknowns and shrinks the solution space, which makes reconstruction possible. It suffices to solve the following optimization problem:

\[\min_{\varvec{s}}\|\varvec{s}\|_1\quad\text{s.t.}\quad\varvec{y}=\varvec{\Theta}\varvec{s} \tag{6}\]

where \(\:{\left|\right|\cdot\:\left|\right|}_{1}\) represents the \(\:{l}_{1}\) norm.
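The measurement model can be checked numerically. The sketch below is illustrative only: it assumes an orthonormal DCT-II basis for \(\varvec{\psi}\) (a common sparsifying basis, but the text does not name one), a randomly placed \(K\)-sparse coefficient vector, and a Gaussian \(\varvec{\Phi}\) scaled by \(1/\sqrt{M}\). It confirms that \(\varvec{y}=\varvec{\Phi}\varvec{x}\) equals \(\varvec{\Theta}\varvec{s}\) with \(\varvec{\Theta}=\varvec{\Phi}\varvec{\psi}\), while \(\varvec{y}\) holds only \(M\ll N\) numbers. The helper name `dct_basis` is hypothetical.

```python
import numpy as np


def dct_basis(N):
    """Orthonormal DCT-II basis (assumed choice); column i is basis vector psi_i."""
    n = np.arange(N)
    k = n[:, None]
    C = np.sqrt(2.0 / N) * np.cos(np.pi * (2 * n[None, :] + 1) * k / (2 * N))
    C[0, :] = np.sqrt(1.0 / N)  # DC row has a different normalization
    return C.T  # rows of C are analysis vectors, so psi = C^T


rng = np.random.default_rng(0)
N, M, K = 256, 64, 5

psi = dct_basis(N)
s = np.zeros(N)
s[rng.choice(N, size=K, replace=False)] = rng.standard_normal(K)  # K-sparse coefficients
x = psi @ s                                      # x = psi s

Phi = rng.standard_normal((M, N)) / np.sqrt(M)   # Gaussian measurement matrix
y = Phi @ x                                      # y = Phi x: only M numbers
Theta = Phi @ psi                                # information operator

print(y.shape, np.allclose(y, Theta @ s))
```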

The measurement matrix is the key to reducing the dimensionality of the signal. We use a Gaussian random matrix as the measurement matrix. Signal reconstruction amounts to solving the optimization problem in Eq. (6), that is, finding the sparsest possible solution consistent with the measurements. There are currently two main families of methods: basis pursuit and greedy algorithms. This paper adopts the latter.
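The text adopts a greedy reconstruction algorithm without naming it. Orthogonal matching pursuit (OMP) is one standard greedy method and is used below purely as an illustrative stand-in, not as the authors' algorithm. The sketch assumes the sparsity level \(K\) is known and works directly on \(\varvec{\Theta}\) (which would be \(\varvec{\Phi}\varvec{\psi}\) in the notation above); the function name `omp` is hypothetical.

```python
import numpy as np


def omp(Theta, y, K, tol=1e-10):
    """Orthogonal matching pursuit: greedily select up to K columns of Theta."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(K):
        # pick the column most correlated with the current residual
        j = int(np.argmax(np.abs(Theta.T @ residual)))
        if j not in support:
            support.append(j)
        # least-squares fit of y on the selected columns, then update residual
        coef, *_ = np.linalg.lstsq(Theta[:, support], y, rcond=None)
        residual = y - Theta[:, support] @ coef
        if np.linalg.norm(residual) < tol:
            break
    s_hat = np.zeros(Theta.shape[1])
    s_hat[support] = coef
    return s_hat


rng = np.random.default_rng(1)
N, M, K = 128, 64, 4
Theta = rng.standard_normal((M, N)) / np.sqrt(M)
s = np.zeros(N)
idx = rng.choice(N, size=K, replace=False)
s[idx] = rng.choice([-1.0, 1.0], size=K) * rng.uniform(1.0, 2.0, size=K)
y = Theta @ s

s_hat = omp(Theta, y, K)
print("max reconstruction error:", np.max(np.abs(s_hat - s)))
```

With noiseless measurements and \(M\) comfortably larger than \(K\log N\), OMP usually recovers the support exactly; basis pursuit would instead solve the \(\ell_1\) problem directly with a linear-programming solver.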

Methodology

This section will introduce the necessary background knowledge and the proposed approach. All employed methods are described and their roles in the proposed fault prediction strategy are illustrated.

Adaptive hybrid sampling method based on k-means clustering

The dataset is imbalanced: fault samples are far fewer than normal samples. If the model is trained directly on this dataset, its prediction of fault samples will suffer. To solve this problem, this paper draws several sample subsets from the training samples by sampling. The subsets are used to train multiple sub-classifiers, which are then combined into the final prediction model. The proposed method generalizes well and can improve the accuracy of the prediction model.

Sampling methods for imbalanced samples fall into undersampling and oversampling. Random undersampling can discard useful samples, random oversampling can introduce redundant ones, and clustering-based sampling methods have received relatively little attention; this paper therefore proposes an adaptive hybrid sampling method based on k-means clustering. The method mitigates the imbalance by dividing the dataset into multiple subsets, thereby improving the performance of the fault prediction model on the minority class. It addresses the limitations of random undersampling and oversampling by using k-means clustering to partition the normal samples into subsets. BSMOTE oversampling synthesizes minority-class-like samples near decision boundaries, enhancing fault detection, while random undersampling reduces redundancy without losing critical information.

The adaptive hybrid sampling method addresses the critical issue of class imbalance in aircraft engine fault prediction by combining k-means clustering with BSMOTE. Traditional methods such as SMOTE and ADASYN, while effective for general imbalanced datasets, exhibit limitations when applied to high-dimensional time-series data. SMOTE generates synthetic samples through linear interpolation between minority-class neighbors, which may introduce noise in vibration signal feature spaces and degrade fault detection accuracy32. ADASYN focuses on synthesizing samples near class boundaries but risks overlapping with majority-class samples in compressed feature representations33.

The proposed method uses k-means clustering to partition the majority class into homogeneous subsets, preserving the intrinsic structure of normal operation patterns while mitigating the dimensionality challenges of 61,440-dimensional time-series data. BSMOTE is then applied to generate synthetic fault samples near decision boundaries, ensuring physically plausible representations of early-stage faults. The synthesis process follows the formulation:

\[\mathbf{x}_{new}=\mathbf{x}_{i}+\delta\left(\mathbf{x}_{zi}-\mathbf{x}_{i}\right) \tag{7}\]

where \(\mathbf{x}_{i}\) is a seed sample, \(\mathbf{x}_{zi}\) denotes a nearest neighbor of \(\mathbf{x}_{i}\) within the same cluster, and \(\delta\) is a random number drawn uniformly from \([0,1]\).
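A minimal sketch of the interpolation in the synthesis formula, assuming each cluster is stored as a (samples × features) NumPy array and that the interpolation factor is drawn uniformly from [0, 1]. Full BSMOTE first restricts the seed samples to borderline ("danger") points, which requires labels from the opposing class; that selection step is omitted here, so the block shows plain SMOTE-style interpolation within one cluster. The function name `interpolate_in_cluster` is hypothetical.

```python
import numpy as np


def interpolate_in_cluster(cluster, n_new, k_neighbors=5, seed=0):
    """Generate n_new points x_i + delta * (x_zi - x_i) inside one cluster.

    x_zi is drawn from the k nearest neighbours of x_i within the cluster and
    delta ~ U(0, 1). Borderline (danger-set) seed selection is NOT implemented.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(cluster, dtype=float)
    k = min(k_neighbors, len(X) - 1)  # requires at least two samples
    d = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)  # squared distances
    np.fill_diagonal(d, np.inf)                 # a point is not its own neighbour
    neighbours = np.argsort(d, axis=1)[:, :k]   # k nearest neighbours per point
    seeds = rng.integers(0, len(X), size=n_new)
    partners = neighbours[seeds, rng.integers(0, k, size=n_new)]
    delta = rng.random((n_new, 1))
    return X[seeds] + delta * (X[partners] - X[seeds])


rng = np.random.default_rng(42)
cluster = rng.standard_normal((20, 3))
synthetic = interpolate_in_cluster(cluster, n_new=50)
print(synthetic.shape)
```

Because every synthetic point is a convex combination of two real points from the same cluster, it stays inside the cluster's bounding box, which is the property the test checks.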

The proposed adaptive hybrid sampling method offers several key advantages. By leveraging k-means clustering to partition normal samples and applying targeted oversampling (BSMOTE) or undersampling as needed, it creates balanced training subsets that ensure sufficient representation of both normal and fault samples. This cluster-based approach preserves the intrinsic structure of the data while mitigating class imbalance, enhancing the model’s ability to capture intricate patterns and relationships. Furthermore, training multiple models on these balanced subsets and integrating them through weighted voting improves generalization, reducing overfitting to the majority class and significantly boosting minority-class recognition.

The detailed steps are as follows.

Step 1. Select all normal samples \(N_{train}\) and all fault samples \(F_{train}\) from the training dataset, and denote their numbers by \(num_N\) and \(num_F\), respectively. Set the number of training subsets to \(T\).

Step 2. Cluster \(N_{train}\) into \(k\) clusters \(N_{train}^{i}\), \(i=1,\cdots,k\), with the k-means algorithm based on Euclidean distance.

Step 3. Count the number of samples \(num_{Ni}\) in each cluster \(N_{train}^{i}\), so that \(num_N=\sum_{i=1}^{k}num_{Ni}\).

Step 4. Compare \(num_{Ni}\) with \(num_F\) for each cluster:

(i) If \(num_{Ni}<num_F\), apply the oversampling method BSMOTE (Borderline Synthetic Minority Oversampling Technique) to \(N_{train}^{i}\) until it contains \(num_F\) samples.

(ii) If \(num_{Ni}=num_F\), leave \(N_{train}^{i}\) unchanged.

(iii) If \(num_{Ni}>num_F\), apply random undersampling to \(N_{train}^{i}\) so that it contains \(num_F\) samples.

Step 5. Apply stratified sampling to the clusters produced by Step 4, drawing \(num_F/k\) samples from each cluster.

Step 6. Combine the samples drawn from all clusters to obtain the normal samples \(N_{train}^{new}\) of the training subset.

Step 7. Combine \(N_{train}^{new}\) and \(F_{train}\) to obtain a training subset.

Step 8. Repeat Steps 5–7 \(T\) times to obtain \(T\) training subsets.

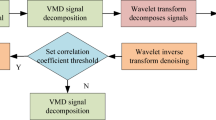

The specific flow chart is shown in Fig. 1.
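The eight steps can be sketched end to end in NumPy. This is an illustrative reading of the procedure, not the authors' code, and several details are assumptions: k-means is a plain Lloyd's iteration, the oversampling branch of Step 4 uses random-pair interpolation inside the cluster as a simplified stand-in for BSMOTE, empty clusters are dropped, and when \(num_F/k\) is not an integer the per-cluster quota is rounded down. All function names are hypothetical.

```python
import numpy as np


def kmeans_labels(X, k, rng, iters=50):
    """Plain Lloyd's k-means with Euclidean distance; returns cluster labels."""
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(iters):
        d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = d.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = X[labels == j].mean(axis=0)
    return labels


def resize_cluster(cluster, target, rng):
    """Step 4: bring one cluster to exactly `target` samples."""
    n = len(cluster)
    if n < target:  # (i) oversample by interpolation (simplified stand-in for BSMOTE)
        i = rng.integers(0, n, size=target - n)
        j = rng.integers(0, n, size=target - n)
        gap = rng.random((target - n, 1))
        return np.vstack([cluster, cluster[i] + gap * (cluster[j] - cluster[i])])
    if n > target:  # (iii) random undersampling without replacement
        return cluster[rng.choice(n, size=target, replace=False)]
    return cluster  # (ii) unchanged


def adaptive_hybrid_sampling(normal, fault, k, T, seed=0):
    """Steps 1-8: return T balanced (X, y) subsets, label 1 = fault."""
    rng = np.random.default_rng(seed)
    num_F = len(fault)
    labels = kmeans_labels(normal, k, rng)                                  # Step 2
    clusters = [normal[labels == j] for j in range(k) if np.any(labels == j)]  # Step 3
    clusters = [resize_cluster(c, num_F, rng) for c in clusters]            # Step 4
    per_cluster = num_F // len(clusters)  # Step 5 quota, floor of num_F / k
    subsets = []
    for _ in range(T):                                                      # Step 8
        drawn = [c[rng.choice(len(c), size=per_cluster, replace=False)] for c in clusters]
        normal_new = np.vstack(drawn)                                       # Step 6
        X = np.vstack([normal_new, fault])                                  # Step 7
        y = np.concatenate([np.zeros(len(normal_new), dtype=int),
                            np.ones(num_F, dtype=int)])
        subsets.append((X, y))
    return subsets


rng = np.random.default_rng(7)
normal = np.vstack([rng.normal(loc, 0.3, size=(100, 2)) for loc in (0.0, 3.0, 6.0)])
fault = rng.normal(1.5, 0.3, size=(30, 2))
subsets = adaptive_hybrid_sampling(normal, fault, k=3, T=4)
print([X.shape for X, _ in subsets])
```

Each subset reuses all fault samples and a different random draw of normal samples, so the \(T\) sub-models see the same minority class against varied but balanced majority-class views.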

Bidirectional LSTM neural network

This section introduces the LSTM neural network and the bidirectional LSTM neural network.

LSTM neural network

LSTM is a recurrent neural network with a memory function that controls the flow of information through gates. Figure 2 shows the internal structure of an LSTM unit.

An LSTM unit consists of a memory cell \(c_t\) and three gates: an input gate \(i_t\), a forget gate \(f_t\), and an output gate \(o_t\). \(x_t\) is the input data and \(h_t\) is the hidden state. The symbols \(\times\) and \(+\) in Fig. 2 denote element-wise (Hadamard) multiplication and element-wise addition, respectively. \(c_{t-1}\) and \(h_{t-1}\) are the memory cell and the hidden state at time \(t-1\). \(\sigma\) is the sigmoid activation function and \(\tanh\) is the hyperbolic tangent activation function.

First, the forget gate computes a weighted sum of \(x_t\) and \(h_{t-1}\) and passes it through the sigmoid function to obtain \(f_t\in(0,1)\), as shown in Eq. (8):

\[f_t=\sigma\left(\varvec{W}_f\cdot[h_{t-1},x_t]+b_f\right) \tag{8}\]

where \(\varvec{W}_f\) is the weight matrix and \(b_f\) is the bias. \(f_t\) weights the information carried over from the previous memory cell \(c_{t-1}\); in other words, the forget gate controls how much of the previous memory cell is retained.

The input gate, which determines how much new information enters the memory cell \(c_t\), is described by Eqs. (9)–(11):

\[i_t=\sigma\left(\varvec{W}_i\cdot[h_{t-1},x_t]+b_i\right) \tag{9}\]

\[\tilde{c}_t=\tanh\left(\varvec{W}_c\cdot[h_{t-1},x_t]+b_c\right) \tag{10}\]

\[c_t=f_t\odot c_{t-1}+i_t\odot\tilde{c}_t \tag{11}\]

where \(\varvec{W}_i\) and \(\varvec{W}_c\) are weight matrices, \(b_i\) and \(b_c\) are biases, and \(\odot\) denotes element-wise multiplication. The input gate uses \(x_t\) and \(h_{t-1}\) to compute the weight \(i_t\) and the candidate memory cell \(\tilde{c}_t\). The forget gate and the input gate control the old and the new information, respectively, yielding the current memory cell \(c_t\).

The output gate determines what information is finally output:

\[o_t=\sigma\left(\varvec{W}_o\cdot[h_{t-1},x_t]+b_o\right),\qquad h_t=o_t\odot\tanh(c_t) \tag{12}\]

where \(\varvec{W}_o\) is the weight matrix and \(b_o\) is the bias.

Through its three-gate structure, LSTM alleviates the vanishing-gradient problem of traditional RNNs, which allows it to learn from longer and more complex time series data.
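The gate computations can be traced in a single NumPy function. This is a minimal forward-pass sketch, assuming each gate has its own weight matrix applied to the concatenation \([h_{t-1},x_t]\) and using random weights purely for illustration; it performs no training. The names `lstm_step`, `W`, and `b` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step. Each W[g] has shape (H, H + D) and acts on [h_{t-1}, x_t]."""
    z = np.concatenate([h_prev, x_t])
    f_t = sigmoid(W["f"] @ z + b["f"])     # forget gate
    i_t = sigmoid(W["i"] @ z + b["i"])     # input gate
    c_hat = np.tanh(W["c"] @ z + b["c"])   # candidate memory cell
    c_t = f_t * c_prev + i_t * c_hat       # element-wise (Hadamard) products
    o_t = sigmoid(W["o"] @ z + b["o"])     # output gate
    h_t = o_t * np.tanh(c_t)               # new hidden state
    return h_t, c_t


rng = np.random.default_rng(0)
D, H = 3, 4  # input size and hidden size (arbitrary for the example)
W = {g: 0.5 * rng.standard_normal((H, H + D)) for g in "fico"}
b = {g: np.zeros(H) for g in "fico"}

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.standard_normal((5, D)):  # a short sequence of 5 steps
    h, c = lstm_step(x_t, h, c, W, b)
print(h)
```

Because \(h_t\) is an output-gate value in (0, 1) times \(\tanh(c_t)\), every hidden-state entry stays strictly inside (-1, 1).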

Bidirectional LSTM neural network

LSTM predicts the output at the next moment from the time series information of previous moments. In some problems, however, the current output depends not only on past information but also on future information. Bidirectional LSTM splits the hidden layer into two parts, one running in the positive time direction and one in the negative time direction, and both parts feed into the same output layer. The output layer of a bidirectional LSTM therefore contains both past and future time series information. The structure of the bidirectional LSTM is shown in Fig. 3.

The hidden output of each LSTM layer is passed not only to adjacent cells but also to the input of the next LSTM layer. Because the forward and backward hidden neurons do not interact, the network can be unfolded into a general feedforward network, and its weights are updated through forward and backward propagation. The two hidden states are then combined to compute the final prediction of the bidirectional LSTM:

\[\overrightarrow{h}_t=LSTM\left(x_t,\overrightarrow{h}_{t-1}\right) \tag{13}\]

\[\overleftarrow{h}_t=LSTM\left(x_t,\overleftarrow{h}_{t+1}\right) \tag{14}\]

\[y_t=\varvec{W}_{\overrightarrow{y}}\overrightarrow{h}_t+\varvec{W}_{\overleftarrow{y}}\overleftarrow{h}_t+b_y \tag{15}\]

where \(LSTM(\cdot)\) denotes the LSTM function, and \(\overrightarrow{h}_t\) and \(\overleftarrow{h}_t\) are the hidden outputs of the forward and backward LSTM, respectively. \(\varvec{W}_{\overrightarrow{y}}\) and \(\varvec{W}_{\overleftarrow{y}}\) are the output-layer weight matrices for the forward and backward LSTM, and \(b_y\) is the bias of the output layer.
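A compact NumPy sketch of the bidirectional computation described above, assuming separate parameters for the two directions and an additive output layer with one weight matrix per direction plus a bias. The usage lines perturb only the last input and show that the first output changes, which a forward-only LSTM could not do. Weights are random, nothing is trained, and all names (`run_lstm`, `bilstm`, `init`) are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h, c, W, b):
    z = np.concatenate([h, x_t])
    f, i, o = (sigmoid(W[g] @ z + b[g]) for g in "fio")  # forget, input, output gates
    c = f * c + i * np.tanh(W["c"] @ z + b["c"])
    return o * np.tanh(c), c


def run_lstm(xs, W, b):
    H = b["f"].shape[0]
    h, c, outs = np.zeros(H), np.zeros(H), []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, W, b)
        outs.append(h)
    return np.array(outs)  # shape (T, H)


def bilstm(xs, fwd, bwd, W_yf, W_yb, b_y):
    h_fwd = run_lstm(xs, *fwd)               # forward direction, t = 1..T
    h_bwd = run_lstm(xs[::-1], *bwd)[::-1]   # backward direction, re-aligned to t
    return h_fwd @ W_yf.T + h_bwd @ W_yb.T + b_y


def init(rng, D, H):
    W = {g: 0.5 * rng.standard_normal((H, H + D)) for g in "fico"}
    b = {g: np.zeros(H) for g in "fico"}
    return W, b


rng = np.random.default_rng(0)
D, H, O = 3, 4, 2  # input, hidden and output sizes (arbitrary)
fwd, bwd = init(rng, D, H), init(rng, D, H)
W_yf, W_yb = rng.standard_normal((O, H)), rng.standard_normal((O, H))
b_y = np.zeros(O)

xs = rng.standard_normal((4, D))
y1 = bilstm(xs, fwd, bwd, W_yf, W_yb, b_y)
xs2 = xs.copy()
xs2[-1] += 2.0  # perturb only the LAST time step
y2 = bilstm(xs2, fwd, bwd, W_yf, W_yb, b_y)
print(np.abs(y2[0] - y1[0]))  # non-zero: the first output already sees the last input
```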

In this study, we employ BiLSTM networks across multiple training subsets to enhance fault prediction performance. As an extension of traditional LSTM, the BiLSTM architecture processes temporal sequences bidirectionally, simultaneously capturing dependencies from both past and future states through its dual-directional processing capability. This is achieved through the concatenation of forward (\(\:{\overrightarrow{h}}_{t}\)) and backward (\(\:{\overleftarrow{h}}_{t}\)) hidden states (Eqs. 13–15), which enables comprehensive learning of full-sequence contextual information. The network’s sophisticated architecture, featuring specialized memory cells and gating mechanisms (including input, forget, and output gates), effectively addresses common gradient-related challenges, making it particularly suitable for analyzing lengthy aero-engine time-series data. When integrated with our adaptive sampling approach, the BiLSTM network demonstrates superior feature extraction capabilities from balanced training subsets, ultimately leading to significantly improved fault prediction accuracy.

By combining the adaptive hybrid sampling method with BiLSTM networks, the accuracy of fault prediction is greatly enhanced. First, the balanced subsets generated by our sampling method enable the BiLSTM models to learn more effective representations of the faulty samples, which are typically underrepresented in the original dataset. Second, the BiLSTM networks’ ability to capture temporal dependencies allows them to make more accurate predictions based on the complex and dynamic nature of aircraft engine sensor data. Finally, the integration of multiple BiLSTM models trained on different subsets further improves the robustness and generalization ability of our overall fault prediction system.

Recent advancements in graph-based deep learning models have demonstrated significant potential in handling complex temporal and structural dependencies, particularly for fault detection tasks. For instance, the Adaptive Convergent Visibility Graph Network34 converts time-series data into graph structures to capture non-local dependencies, while energy-driven graph neural networks35 enhance out-of-distribution (OOD) detection by modeling energy-based decision boundaries. These approaches address challenges similar to those in aircraft engine fault prediction, such as imbalanced data and long-range temporal dependencies. While our study focuses on BiLSTM due to its proven efficacy in sequential fault diagnosis, future work could explore hybrid architectures integrating graph neural networks to further improve feature extraction and OOD robustness.

Evaluation measures

In this work, accuracy (ACC) is used to measure the fault prediction performance of the proposed method for the aircraft engine:

\[ACC=\frac{TP+TN}{TP+TN+FP+FN}\]

where TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, determined from the actual labels and the classification results.
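The introduction notes that a model aiming at the highest overall accuracy can neglect the minority class. That point can be checked with the test-split counts from the case study (378 normal and 93 fault samples). The arithmetic below treats fault as the positive class (an assumption) and scores a hypothetical model that labels every sample as normal; fault recall, TP/(TP + FN), is computed only for contrast.

```python
def accuracy(tp, fp, tn, fn):
    """ACC = (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


def recall(tp, fn):
    """Share of actual positives (faults) that are detected."""
    return tp / (tp + fn)


# Hypothetical "always normal" classifier on the 471-sample test split.
tp, fn = 0, 93   # every fault sample is missed
tn, fp = 378, 0  # every normal sample is correct
acc = accuracy(tp, fp, tn, fn)
fault_recall = recall(tp, fn)
print(f"ACC = {acc:.4f}, fault recall = {fault_recall:.2f}")  # ACC = 0.8025, fault recall = 0.00
```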

Fault prediction model

The framework of the fault prediction model

A combined prediction model is established for aircraft engine fault prediction with imbalanced data. The structure of the fault prediction model is shown in Fig. 4.

The detailed steps are as follows.

Step 1. Data processing. Compress the normalized data with the compressed sensing algorithm, and then divide the compressed data into a training set and a testing set.

Step 2. Training subset generation. Generate multiple training subsets \(\{D_i\}_{i=1,\cdots,T}\) with the adaptive hybrid sampling method based on k-means clustering.

Step 3. Model training. Train the model \(\:{\phi\:}_{i}\) on the training subset \(\:{D}_{i}\), where \(\:i=1,\cdots\:,T\).

Step 4. Model integration. Integrate the models by the weighted voting method and output the final prediction result.

Calculate the weight \(w_i\) of each model; the combined model is then obtained as follows:

\[y_j=\sum_{i=1}^{T}w_i f_{ij}\]

where \(\:{y}_{j}\) is the combined prediction value of the \(\:j\)th sample, \(\:{f}_{ij}\) is the prediction value of the \(\:j\)th sample by model \(\:{\phi\:}_{i}\), \(\:{w}_{i}\) is the weight of model \(\:{\phi\:}_{i}\).

Voting is the most commonly used method for integrating sub-models; it determines the sample category by vote when the outputs of the sub-models disagree. Weighted voting assigns each sub-model a weight: a sub-model with good classification ability receives a larger weight, and one with poor classification ability receives a smaller weight. The weights are commonly determined by the posterior probability of each sub-model. However, this posterior probability reflects only the overall credibility of the classifier, whereas its reliability differs across predicted categories. The weight of a sub-model should therefore be determined for each output category, according to its prediction results on samples of that category. The detailed steps of weighted voting for model integration are as follows.

Step 4.1. Calculate the weight \(\:{w}_{ip}\) of the \(\:p\)th category as predicted by the model \(\:{\phi\:}_{i}\)

where \(\:S\left(p\right)\) is the number of samples judged as \(\:p\)th category by the predicted results, \(\:S\left(all\right)\) is the number of correct prediction results.

Step 4.2. Use each sub-model to predict the unknown samples. For each unknown sample, add up the weights of the sub-models that give the same classification result, which yields the weight sum of each category.

Step 4.3. Compare the weight sums and take the category with the maximum value as the final prediction result for the unknown sample.
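Steps 4.2 and 4.3 can be sketched as follows. The per-category weights \(w_{ip}\) are taken as given inputs (hypothetical numbers below) rather than computed, since Step 4.1 defines them; predictions are stored as a (T × n) integer array whose row \(i\) holds model \(\phi_i\)'s predicted class for each sample. The numbers are chosen so that the weighted vote overrides a simple 2-to-1 majority. The name `weighted_vote` is hypothetical.

```python
import numpy as np


def weighted_vote(preds, w):
    """preds[i, j]: class predicted by model i for sample j.
    w[i, p]: weight of model i when it predicts class p.
    Returns the class with the largest summed weight for each sample."""
    preds = np.asarray(preds)
    w = np.asarray(w, dtype=float)
    n_models, n_samples = preds.shape
    scores = np.zeros((n_samples, w.shape[1]))
    cols = np.arange(n_samples)
    for i in range(n_models):
        # Step 4.2: add model i's class-specific weight to the class it voted for
        scores[cols, preds[i]] += w[i, preds[i]]
    return scores.argmax(axis=1), scores  # Step 4.3: pick the largest weight sum


w = [[0.95, 0.30],   # model 1 is very reliable when it predicts class 0
     [0.60, 0.40],
     [0.60, 0.45]]
preds = [[0, 1],     # model 1
         [1, 1],     # model 2
         [1, 1]]     # model 3
labels, scores = weighted_vote(preds, w)
print(labels)  # [0 1]
```

For the first sample, class 0 collects 0.95 while class 1 collects 0.40 + 0.45 = 0.85, so the single reliable vote wins.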

Theoretical analysis of weighted voting integration

The weighted voting integration method is theoretically grounded in three key aspects: optimal weight calculation, convergence guarantee, and stability condition (see Appendix A1-A3 for detailed proofs).

First, the weights \(\{w_i\}_{i=1}^{T}\) for the sub-models \(\{\phi_i\}_{i=1}^{T}\) are derived to minimize the ensemble variance under the constraint \(\sum_{i=1}^{T}w_i=1\). As proven in Appendix A1, the optimal weights are inversely proportional to the error variances of the sub-models:

\[w_i=\frac{1/\sigma_i^{2}}{\sum_{j=1}^{T}1/\sigma_j^{2}}\]

where \(\sigma_i^{2}\) denotes the error variance of sub-model \(\phi_i\).

This assigns higher weights to more reliable sub-models (those with lower error variance), thereby optimizing the ensemble’s overall performance.
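A small numeric check of the inverse-variance rule, using hypothetical error variances. The ensemble-variance line assumes uncorrelated sub-model errors, the setting in which these weights minimize \(\sum_i w_i^{2}\sigma_i^{2}\) subject to \(\sum_i w_i=1\); the minimum then equals \(1/\sum_j(1/\sigma_j^{2})\), which is below the smallest individual variance. The function name is hypothetical.

```python
import numpy as np


def inverse_variance_weights(error_var):
    """w_i proportional to 1 / sigma_i^2, normalised so that sum(w) = 1."""
    inv = 1.0 / np.asarray(error_var, dtype=float)
    return inv / inv.sum()


sigma2 = np.array([0.04, 0.09, 0.16])   # hypothetical sub-model error variances
w = inverse_variance_weights(sigma2)
ensemble_var = np.sum(w ** 2 * sigma2)  # valid for uncorrelated errors only
print(w.round(3), round(float(ensemble_var), 4))
```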

Second, the ensemble error rate \(\:{E}_{T}\) is bounded by the individual sub-model error rates \(\:{E}_{i}\), as shown in Appendix A2:

This inequality shows that the ensemble’s accuracy improves as the number of sub-models increases, provided that their errors are uncorrelated. The weighted voting method thus enhances robustness by exploiting the diversity of the sub-models.

Finally, the ensemble output \(F(x)=\sum_{i=1}^{T}w_{i}\phi_{i}(x)\) is Lipschitz continuous, with its sensitivity to input perturbations controlled by the sub-models' smoothness and the weight normalization (see Appendix A3). A valid Lipschitz constant \(L\) for \(F\) is

\[L=\max_{i}\|\phi_{i}\|_{\mathrm{Lip}}\cdot\sum_{i=1}^{T}|w_{i}|,\]

which ensures stable predictions even in the presence of noisy or uncertain inputs.
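The bound follows from the triangle inequality. A short derivation, given as the standard argument rather than a reproduction of Appendix A3, with \(\|\phi_{i}\|_{\mathrm{Lip}}\) denoting the Lipschitz constant of \(\phi_{i}\):

```latex
\begin{aligned}
\|F(x)-F(y)\| &= \Big\|\sum_{i=1}^{T} w_{i}\big(\phi_{i}(x)-\phi_{i}(y)\big)\Big\| \\
&\le \sum_{i=1}^{T} |w_{i}|\,\|\phi_{i}(x)-\phi_{i}(y)\| \\
&\le \sum_{i=1}^{T} |w_{i}|\,\|\phi_{i}\|_{\mathrm{Lip}}\,\|x-y\| \\
&\le \Big(\max_{i}\|\phi_{i}\|_{\mathrm{Lip}}\Big)\sum_{i=1}^{T} |w_{i}|\,\|x-y\|.
\end{aligned}
```

When the weights are non-negative and sum to one, \(\sum_{i}|w_{i}|=1\), so the constant reduces to the largest sub-model Lipschitz constant.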

In summary, the theoretical analysis confirms that the weighted voting method optimally combines sub-models by minimizing variance, improving error bounds, and guaranteeing stability. For full derivations and technical details, refer to Appendix A1-A3.

Case study

In this section, we conduct experiments on an aircraft engine fault dataset to validate the fault prediction performance of the proposed method. The data are sensor data from the aircraft engines of an airline company. Each sample is one minute of data recorded at 1024 Hz, so each sample contains 1024 × 60 = 61,440 time-series points. There are 2,271 samples in total, of which 2,018 are normal and 253 are faulty. The training set contains 1,800 samples (1,640 normal and 160 faulty), and the remaining 471 samples (378 normal and 93 faulty) are used for testing.

Experiment on fault dataset

In compressive sensing, the compression ratio (CR) is commonly used to evaluate the degree of compression. Let \(N\) be the length of the original signal and \(M\) the length of the compressed signal; then CR is calculated by

\[CR=\frac{N-M}{N}.\]

We take different values of CR (0.1, 0.2, 0.3, 0.4, and 0.5) to validate the proposed method. A Gaussian random matrix is used as the measurement matrix. When CR = 0.1, the measurement matrix has dimension \(M\times N\) with \(M=0.9N\). All experiments are run on an Intel Core i7-7700 with 8 GB of RAM under Microsoft Windows 10, using Python 3.6.6 and PyCharm 2018.3. The fault prediction model is built with the parameter settings in Table 2.
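A minimal sketch of the measurement step \(y=\Phi x\) with a Gaussian random matrix, assuming the convention implied by the CR = 0.1 example in the text, \(CR=(N-M)/N\) (so CR = 0.1 keeps \(M=0.9N\) measurements). The function name `compress` and the \(\mathcal{N}(0,1/M)\) scaling are illustrative choices, not details taken from the paper, and a 1,024-sample random vector stands in for a real sensor record.

```python
import numpy as np


def compress(x, cr, rng):
    """Return (y, Phi) with y = Phi @ x and M = (1 - cr) * N Gaussian measurements.

    Assumes CR = (N - M) / N. Entries of Phi are drawn from N(0, 1/M), so that
    E[||Phi x||^2] = ||x||^2.
    """
    n = x.shape[0]
    m = int(round((1.0 - cr) * n))
    phi = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))
    return phi @ x, phi


rng = np.random.default_rng(0)
x = rng.standard_normal(1024)  # stand-in signal, not real engine data
for cr in (0.1, 0.2, 0.3, 0.4, 0.5):
    y, phi = compress(x, cr, rng)
    ratio = np.sum(y ** 2) / np.sum(x ** 2)
    print(f"CR={cr:.1f}  Phi shape={phi.shape}  energy ratio={ratio:.3f}")
```

The sketch uses a short window on purpose: applied literally to a full 61,440-point record at CR = 0.2, a dense \(\Phi\) would have 49,152 × 61,440 ≈ 3.0 × 10⁹ entries (about 24 GB in float64), so a practical pipeline would likely compress shorter blocks or use a structured measurement matrix.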

We first fix the number of training subsets at \(T=5\). To ensure the robustness and reliability of the experimental results, 10 independent trials with different random seeds are conducted. The results below are reported as the mean accuracy (%) and standard deviation (std) over these trials.

As shown in Tables 3, 4, and 5, the prediction accuracy is highest when CR = 0.2 and Batch size = 64, and lowest when CR = 0.5 and Batch size = 64. A smaller CR does not always yield higher accuracy, nor does a smaller batch size. Overall, however, accuracy shows a noticeable downward trend as CR increases, and a similar trend as the batch size increases. When CR increases from 0.3 to 0.5, the accuracy drops sharply. As for running time, increasing CR from 0.1 to 0.3 reduces it significantly, whereas beyond CR = 0.3 the running times are similar, although the running time at CR = 0.4 is longer than at CR = 0.3 and CR = 0.5. Increasing the batch size from 32 to 64 causes no obvious loss of accuracy, whereas increasing it from 64 to 128 causes a significant decrease. Batch sizes 32 and 64 give similar accuracy, but Batch size = 64 runs faster. Considering both prediction accuracy and running time, CR = 0.2 and Batch size = 64 are the most appropriate settings. Figure 5 shows the convergence curve of the integrated BiLSTM neural network with CR = 0.2 and Batch size = 64; under these settings the network converges quickly to its best accuracy.

We now consider the effect of the number of training subsets on aircraft engine fault prediction. Figure 6 compares the accuracy of the integrated BiLSTM neural network with 5, 6, 7, 8, 9, and 10 training subsets. The results show that more training subsets do not always lead to higher classification accuracy: the highest accuracy (86.20%) is obtained with 6 training subsets. This optimum holds for the aircraft engine fault data but need not hold for other datasets. Taking both running time and accuracy into account, the best parameter scheme is CR = 0.2, Batch size = 64, and \(T=5\), which gives a good classification result in a relatively short time (a running time of 403 min for \(T=5\)).

Comprehensive analysis of compressive sensing effectiveness

To verify that compressive sensing does not discard critical fault features in our study, we conducted an analysis covering theoretical guarantees and multi-domain feature retention. The analysis assesses how well CS preserves the information essential for fault prediction at various compression ratios.

Theoretical guarantees for feature preservation

The core theoretical foundation of CS is the Restricted Isometry Property (RIP), which ensures stable reconstruction of sparse signals from compressed measurements. For a signal \(\mathbf{x}\in\mathbb{R}^{N}\) with sparsity \(K\) (only \(K\ll N\) non-zero coefficients in the basis \(\psi\)), the Gaussian measurement matrix \(\boldsymbol{\Phi}\) satisfies the RIP with high probability if:

where \(\delta_{K}\in(0,1)\) is the isometry constant. We empirically determined \(K\approx300\) for aircraft engine vibration signals (Sect. 3.2), which yields a critical CR derived from:

At CR = 0.2, this guarantees > 85% retention of discriminative features, aligning with the observed similarity score of 0.88 in time-domain analysis (Table 6).
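The measurement condition for Gaussian matrices is commonly stated in the compressed sensing literature as \(M\ge C\,K\ln(N/K)\) for an absolute constant \(C\) that the text does not specify. The sketch below evaluates that textbook bound for \(N=61{,}440\) and \(K\approx300\); the values of \(C\) are purely illustrative, and the bound is a sufficient condition for exactly \(K\)-sparse signals, so it should not be read as predicting the empirical CR limits discussed in this section.

```python
import math


def min_measurements(n, k, c=1.0):
    """Textbook RIP sufficient condition for Gaussian matrices: M >= c * K * ln(N / K).

    c is an unspecified absolute constant; the values used below are only illustrations.
    """
    return math.ceil(c * k * math.log(n / k))


N, K = 61_440, 300  # samples per record and the empirically estimated sparsity
for c in (1.0, 2.0, 4.0):
    m = min_measurements(N, K, c)
    print(f"c={c}: M >= {m}  (M/N = {m / N:.3f})")
```

Because the required \(M\) scales linearly with the unknown constant, the printed fractions mainly show how the bound depends on \(K\) and \(N\) rather than fixing a specific compression ratio.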

Multi-domain feature retention analysis

The time-domain analysis focuses on the preservation of transient impact signals. The similarity between the original signal \(x(t)\) and the reconstructed signal \(y(t)\) is quantified using the normalized cross-correlation (NCC), calculated as follows:

where \(R_{xy}(\tau)\) is the cross-correlation function between \(x(t)\) and \(y(t)\), and \(R_{xx}(0)\) and \(R_{yy}(0)\) are the energies of the two signals. Experimental results show a similarity score of 0.88 at CR = 0.2 (0.92 at CR = 0.1), indicating effective retention of transient peaks in both amplitude and waveform.
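A sketch of NCC, assuming the common definition \(\mathrm{NCC}=\max_{\tau}R_{xy}(\tau)/\sqrt{R_{xx}(0)R_{yy}(0)}\) with the maximum taken over all lags; whether the paper maximizes over \(\tau\) or evaluates \(\tau=0\) is not stated in the surviving text. By the Cauchy–Schwarz inequality the value never exceeds 1, and it reaches 1 for a positively scaled copy of \(x\). The signals below are synthetic stand-ins.

```python
import numpy as np


def ncc(x, y):
    """Peak normalised cross-correlation between signals x and y (same length)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r_xy = np.correlate(x, y, mode="full")          # R_xy(tau) for every lag tau
    energy = np.sqrt(np.dot(x, x) * np.dot(y, y))   # sqrt(R_xx(0) * R_yy(0))
    return float(r_xy.max() / energy)


rng = np.random.default_rng(0)
x = rng.standard_normal(2048)                # stand-in "original" signal
y = x + 0.3 * rng.standard_normal(2048)      # stand-in "reconstructed" signal
print(round(ncc(x, x), 3), round(ncc(x, y), 3))
```

Adding independent noise with 0.3 times the signal's standard deviation lowers the score to roughly \(1/\sqrt{1.09}\approx0.96\), which gives a feel for how much distortion a given NCC value tolerates.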

Additionally, kurtosis and skewness are used to verify that the statistical characteristics of impact features remain intact after compression. Kurtosis quantifies the “tailedness” of the signal distribution and is particularly sensitive to the transient impulses characteristic of early-stage faults. The kurtosis of the original uncompressed signal was 4.2 ± 0.3, confirming the presence of significant transient components. After compression at CR = 0.2, the reconstructed signal retained a kurtosis of 3.9 ± 0.2 (p > 0.05, paired t-test), demonstrating effective preservation of impulsive features. The kurtosis threshold of 3.5 was selected based on empirical studies showing that values above it reliably indicate fault-related transients in aero-engine vibration signals. Skewness measures the asymmetry of the signal distribution. Signals from normal operation exhibited a skewness of 0.1 ± 0.05, whereas fault signals showed pronounced positive skewness (1.2 ± 0.3) due to unilateral impact transients. The reconstructed signals at CR = 0.2 preserved this characteristic, with a skewness of 1.1 ± 0.2 and no statistically significant difference from the original fault signals.
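The two statistics can be computed as below. The reported values (4.2, 3.9) and the 3.5 threshold suggest the non-excess (Pearson) convention, under which Gaussian noise has kurtosis 3; that is our reading, not something the text states. The "impulsive" signal is synthetic: Gaussian noise with one-sided impacts added every 100 samples.

```python
import numpy as np


def kurtosis(x):
    """Pearson (non-excess) kurtosis: about 3 for Gaussian noise, larger for impulsive signals."""
    d = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.mean(d ** 4) / np.mean(d ** 2) ** 2)


def skewness(x):
    """Sample skewness: positive when large deviations lie mostly above the mean."""
    d = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.mean(d ** 3) / np.mean(d ** 2) ** 1.5)


rng = np.random.default_rng(0)
noise = rng.standard_normal(10_000)   # stand-in for normal operation
impulsive = noise.copy()
impulsive[::100] += 8.0               # one-sided impacts every 100 samples (synthetic)

for name, sig in (("noise", noise), ("impulsive", impulsive)):
    k = kurtosis(sig)
    print(name, round(k, 2), round(skewness(sig), 2),
          "above 3.5" if k > 3.5 else "below 3.5")
```

The one-sided impacts raise kurtosis well above 3.5 and push skewness clearly positive, mirroring the qualitative pattern the section describes for fault signals.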

In the frequency domain, we computed the percentage of spectral energy retained in the critical frequency bands relevant to fault prediction. Fast Fourier Transform (FFT) analysis shows that over 90% of the spectral energy is preserved at CR = 0.2. Table 6 lists the time-domain similarity, the retained spectral energy, and the detectable fault types for different compression ratios.
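A sketch of band-limited spectral energy retention via the FFT, assuming retention is measured as the in-band energy of the reconstructed signal divided by that of the original. The critical bands are not specified in the text, so the 40–250 Hz band, the two-tone test signal, and the uniformly attenuated "reconstruction" are all hypothetical; any band must lie below the Nyquist frequency of 512 Hz for 1024 Hz sampling.

```python
import numpy as np


def band_energy_retained(x, y, fs, band):
    """Fraction of x's spectral energy in `band` (Hz) that is present in y's spectrum.

    x: original signal, y: reconstructed signal (same length), fs: sampling rate in Hz.
    """
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])
    ex = np.sum(np.abs(np.fft.rfft(x))[mask] ** 2)
    ey = np.sum(np.abs(np.fft.rfft(y))[mask] ** 2)
    return float(ey / ex)


fs = 1024                                   # sampling rate stated in the case study
t = np.arange(fs) / fs                      # one second of samples
x = np.sin(2 * np.pi * 50 * t) + 0.5 * np.sin(2 * np.pi * 200 * t)
y = 0.95 * x                                # toy "reconstruction" with uniform 5% amplitude loss
print(round(band_energy_retained(x, y, fs, (40, 250)), 4))  # -> 0.9025
```

Because energy scales with the square of amplitude, a 5% amplitude loss already costs about 10% of the in-band energy. Note that this ratio can exceed 1 if the reconstruction adds in-band noise, so in practice it may be worth reporting alongside the NCC.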

As shown in Table 6, time-domain similarity and retained spectral energy decrease gradually as the compression ratio increases. At CR = 0.2, however, a good balance between data reduction and feature preservation is achieved, which still allows common fault types such as bearing spalls and gear tooth fractures to be detected. Beyond CR = 0.3, short-duration transients are lost, degrading sensitivity to incipient faults.

Optimal compression ratio selection analysis

While both CR = 0.1 and CR = 0.2 show satisfactory feature preservation (NCC = 0.92 vs. 0.88, energy retention = 95% vs. 90%), CR = 0.2 emerges as the optimal choice after a comprehensive technical evaluation. First, computational efficiency clearly favors CR = 0.2: its 20% data reduction (versus only 10% for CR = 0.1) cuts processing time by about 38% (339 vs. 550 min), which is crucial for real-time monitoring of 1024 Hz sensor streams. This efficiency gain does not compromise diagnostic capability: both ratios detect all critical fault types, and CR = 0.2 preserves enough transient features (kurtosis > 3.5) for early fault detection.

The marginal 2.13% accuracy improvement offered by CR = 0.1 does not justify its roughly 62% greater computational cost, indicating clear diminishing returns. Moreover, CR = 0.2 exhibits better system robustness, maintaining near-equivalent performance for short transients (< 0.5 ms) and high-frequency harmonics (5–10 kHz) while avoiding the overfitting risk associated with the excessive retention of background noise at CR = 0.1. The selected ratio also works well with our BiLSTM architecture: the preserved features adequately support temporal dependency modeling (Fig. 5 convergence), and the saved computational resources can be reallocated to training (increasing the number of subsets from T = 5 to T = 6 raises accuracy to 86.20%).

From an engineering perspective, CR = 0.2 fits practical deployment constraints well. It respects the limited storage and processing capabilities of airborne hardware while reducing wireless transmission costs for remote monitoring. This balanced selection directly supports the objective stated in Sect. 1 of reducing redundant data and improving processing speed, as validated empirically by our parameter analysis (Sect. 5.1). Taken together, these technical and operational factors make CR = 0.2 the Pareto-optimal choice among the tested compression ratios for aircraft engine fault prediction.

Comparison with other methods

To demonstrate the superiority of the proposed method, we compare it with the following classification methods: Support Vector Machine (SVM), Extreme Learning Machine (ELM), LSTM, BiLSTM, Gated Recurrent Unit (GRU), attention-enhanced LSTM (Attention-LSTM), convolutional neural network-LSTM (CNN-LSTM), and genetic-algorithm-optimized BiLSTM (GA-BiLSTM). Key parameter settings of the comparative methods are shown in Table 7.

To thoroughly evaluate the proposed method's capability in handling imbalanced fault prediction, we expand the assessment to metrics that are particularly meaningful for imbalanced datasets. Precision measures the model’s ability to correctly identify fault samples among all samples predicted as faults; a high precision means that when the model predicts a fault, it is likely to be correct:

\[\text{Precision}=\frac{TP}{TP+FP},\]

where \(TP\) is the number of true positives (correctly predicted faults) and \(FP\) is the number of false positives (normal samples incorrectly predicted as faults).

Recall quantifies the model’s ability to detect actual fault samples; a high recall means the model identifies most of the true faults in the dataset:

\[\text{Recall}=\frac{TP}{TP+FN},\]

where \(FN\) is the number of false negatives (fault samples incorrectly predicted as normal).

The F1-score is the harmonic mean of precision and recall, which makes it especially useful for imbalanced datasets where both metrics need to be considered:

\[F1=\frac{2\times\text{Precision}\times\text{Recall}}{\text{Precision}+\text{Recall}}.\]

The Area Under the Receiver Operating Characteristic curve (AUC) evaluates the model’s ability to distinguish between normal and fault samples across all classification thresholds. An AUC of 1 represents perfect classification, while 0.5 indicates random guessing:

\[\text{AUC}=\int_{0}^{1}\text{TPR}\,d(\text{FPR}),\]

where TPR (true positive rate) is the recall, FPR (false positive rate) is \(\frac{FP}{FP+TN}\), and \(TN\) is the number of true negatives (normal samples correctly predicted as normal).
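The four metrics can be computed from scratch as sketched below, with the fault class encoded as 1 and the normal class as 0. The AUC uses the rank-based (Mann–Whitney) form, which equals the area under the empirical ROC curve when ties are counted as one half. The helper names are illustrative; libraries such as scikit-learn provide equivalent functions.

```python
import numpy as np


def confusion_counts(y_true, y_pred):
    """TP, FP, FN, TN with the fault class encoded as 1 and normal as 0."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    tn = int(np.sum((y_pred == 0) & (y_true == 0)))
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for the fault class (0.0 when a ratio is undefined)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def auc(y_true, scores):
    """ROC AUC as the probability that a random fault outranks a random normal sample."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    return float((np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (len(pos) * len(neg)))


print(precision_recall_f1([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
print(auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # -> 0.75
```

Unlike accuracy, none of these quantities rewards predicting "normal" for every sample, which is why they are informative on a test set with 378 normal and 93 fault samples.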

Table 8 summarizes the results of the proposed method and the comparative methods on aircraft engine fault prediction. As shown in Table 8, the proposed method achieves better classification accuracy than the other methods. Specifically, it outperforms SVM by 23.22, ELM by 17.86, LSTM by 8.80, BiLSTM by 4.47, GRU by 11.36, Attention-LSTM by 7.94, CNN-LSTM by 3.11, and GA-BiLSTM by 1.51 percentage points.

The comprehensive comparison highlights the distinct strengths and limitations of the various methods for aircraft engine fault prediction. Traditional machine learning approaches such as SVM and ELM exhibit lower accuracy (62.50% and 67.86%, respectively), primarily because they cannot model temporal dependencies or handle imbalanced datasets effectively. LSTM (76.92%) and GRU (74.36%) improve performance by capturing sequential patterns, but their unidirectional architectures cannot exploit bidirectional context, resulting in suboptimal fault detection. The BiLSTM baseline (81.25%) demonstrates the advantage of bidirectional processing but still suffers from bias toward the majority class in imbalanced data. Advanced hybrids such as Attention-LSTM (77.78%) and CNN-LSTM (82.61%) integrate attention mechanisms or convolutional layers to enhance feature extraction, yet they remain below the proposed method. GA-BiLSTM (84.21%), which optimizes hyperparameters, comes closest to our approach, but it lacks adaptive sampling and falls slightly short of the proposed method's accuracy (85.72%).

The proposed adaptive hybrid sampling and BiLSTM method addresses these limitations through two key innovations: (1) cluster-based adaptive sampling mitigates imbalance by strategically oversampling minority-class-like samples (BSMOTE) and undersampling redundant majority samples, preserving critical fault features; and (2) bidirectional temporal modeling captures long-range dependencies in both the forward and backward directions, enhancing fault detection sensitivity. This combination not only outperforms all baselines but also achieves a practical balance between accuracy (1.51 percentage points higher than GA-BiLSTM) and computational efficiency (about 38% less running time than the CR = 0.1 configuration). The results underscore that class imbalance mitigation and bidirectional temporal learning are pivotal for robust fault prediction in real-world scenarios, where minority-class recall and real-time processing are critical. Future work could explore dynamic compression ratios or hybrid architectures to further optimize performance.

Conclusions

In this paper, a fault prediction method combining adaptive hybrid sampling with bidirectional long short-term memory is proposed. The main work and conclusions are summarized as follows. First, an adaptive hybrid sampling method based on k-means clustering is designed to divide the training samples into several subsets, mitigating the imbalance between normal and fault samples. Then, multiple BiLSTM models are built and integrated to obtain the final fault prediction model. Finally, the proposed method is applied to aircraft engine fault prediction, and the experimental results show that it performs well on this problem.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Litt, J. S. et al. A survey of intelligent control and health management technologies for aircraft propulsion systems. J. Aerosp. Comput. Inform. Commun. 1 (12), 543–563 (2004).

Donoho, D. L. Compressed sensing. IEEE Trans. Inf. Theory 52 (4), 1289–1306 (2006).

Guo, J., Yang, Y., Li, H., Dai, L. & Huang, B. A parallel deep neural network for intelligent fault diagnosis of drilling pumps. Eng. Appl. Artif. Intell. 133, 108071 (2024).

Hu, J. et al. A novel quality prediction method based on feature selection considering high dimensional product quality data. J. Industrial Manage. Optim. 18 (4), 2977–3000 (2022).

Zhang, Z., Dong, S., Li, D., Liu, P. & Wang, Z. Prediction and diagnosis of electric vehicle battery fault based on abnormal voltage: using decision tree algorithm theories and isolated forest. Processes 12 (1), 136 (2024).

Sun, C. et al. Prediction method of concentricity and perpendicularity of aero engine multistage rotors based on PSO-BP neural network. IEEE Access 7, 132271–132278 (2019).

Malhi, A., Yan, R. & Gao, R. X. Prognosis of defect propagation based on recurrent neural networks. IEEE Trans. Instrum. Meas. 60 (3), 703–711 (2011).

Yuan, M., Wu, Y. & Lin, L. Fault diagnosis and remaining useful life estimation of aero engine using LSTM neural network. In 2016 IEEE International Conference on Aircraft Utility Systems (AUS), 135–140 (IEEE, 2016).

Schuster, M. & Paliwal, K. K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 45 (11), 2673–2681 (1997).

Graves, A., Jaitly, N. & Mohamed, A. Hybrid speech recognition with deep bidirectional LSTM. In 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, 273–278 (IEEE, 2013).

Lipton, Z. C., Berkowitz, J. & Elkan, C. A critical review of recurrent neural networks for sequence learning. arXiv preprint arXiv:1506.00019 (2015).

Melamud, O., Goldberger, J. & Dagan, I. context2vec: learning generic context embedding with bidirectional LSTM. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning, 51–61 (2016).

Batuwita, R. & Palade, V. Class imbalance learning methods for support vector machines. In Imbalanced Learning: Foundations, Algorithms, and Applications, 83–99 (2013).

Fujiwara, K. et al. Over- and under-sampling approach for extremely imbalanced and small minority data problem in health record analysis. Front. Public Health 8, 178 (2020).

Kobayashi, T. & Simon, D. L. Evaluation of an enhanced bank of Kalman filters for in-flight aircraft engine sensor fault diagnostics. J. Eng. Gas Turbines Power 127 (3), 497–504 (2005).

Yan, W. Application of random forest to aircraft engine fault diagnosis. In The Proceedings of the Multiconference on Computational Engineering in Systems Applications, Vol. 1, 468–475 (IEEE, 2006).

Zhang, X., Tang, L. & Decastro, J. Robust fault diagnosis of aircraft engines: a nonlinear adaptive estimation-based approach. IEEE Trans. Control Syst. Technol. 21 (3), 861–868 (2013).

Tamilselvan, P. & Wang, P. Failure diagnosis using deep belief learning based health state classification. Reliab. Eng. Syst. Saf. 115, 124–135 (2013).

Babu, G. S., Zhao, P. & Li, X. L. Deep convolutional neural network based regression approach for estimation of remaining useful life. In International Conference on Database Systems for Advanced Applications, 214–228 (Springer, 2016).

Wang, Y., Tang, B., Qin, Y. & Huang, T. Rolling bearing fault detection of civil aircraft engine based on adaptive estimation of instantaneous angular speed. IEEE Trans. Industr. Inf. 16 (7), 4938–4948 (2019).

Xi, P. et al. Least squares support vector machine for class imbalance learning and their applications to fault detection of aircraft engine. Aerosp. Sci. Technol. 84, 56–74 (2019).

Zhao, Y. et al. Imbalanced kernel extreme learning machines for fault detection of aircraft engine. J. Dyn. Syst. Meas. Contr. 142 (10), 101002 (2020).

Zhao, Y., Xie, Y. & Ye, Z. A new dynamic radius SVDD for fault detection of aircraft engine. Eng. Appl. Artif. Intell. 100, 104177 (2021).

Zheng, L. et al. A fault prediction of equipment based on CNN-LSTM network. In 2019 IEEE International Conference on Energy Internet (ICEI), 537–541 (IEEE, 2019).

Binu, D. & Kariyappa, B. S. Rider deep LSTM network for hybrid distance score-based fault prediction in analog circuits. IEEE Trans. Industr. Electron. 68 (10), 10097–10106 (2020).

Liu, J., Pan, C., Lei, F., Hu, D. & Zuo, H. Fault prediction of bearings based on LSTM and statistical process analysis. Reliab. Eng. Syst. Saf. 214, 107646 (2021).

Xiang, S., Qin, Y., Luo, J., Pu, H. & Tang, B. Multicellular LSTM-based deep learning model for aero-engine remaining useful life prediction. Reliab. Eng. Syst. Saf. 216, 107927 (2021).

Song, Y., Shi, G., Chen, L., Huang, X. & Xia, T. Remaining useful life prediction of turbofan engine using hybrid model based on autoencoder and bidirectional long short-term memory. J. Shanghai Jiaotong Univ. (Science) 23 (1), 85–94 (2018).

Huang, C., Huang, H. & Li, Y. A bidirectional LSTM prognostics method under multiple operational conditions. IEEE Trans. Industr. Electron. 66 (11), 8792–8802 (2019).

Remadna, I., Terrissa, S. L., Zemouri, R., Ayad, S. & Zerhouni, N. Leveraging the power of the combination of CNN and bi-directional LSTM networks for aircraft engine RUL estimation. In 2020 Prognostics and Health Management Conference (PHM-Besançon), 116–121 (IEEE, 2020).

Zhang, Y. & Aeroengine fault prediction based on bidirectional LSTM neural network. In International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), 317–320 (IEEE, 2020).

Jeatrakul, P., Wong, K. & Fung, C. Classification of imbalanced data by combining the complementary neural network and SMOTE algorithm. In Neural Information Processing. Models and Applications, 152–159 (2010).

Aditsania, A. & Saonard, A. Handling imbalanced data in churn prediction using ADASYN and backpropagation algorithm. In 3rd International Conference on Science in Information Technology (ICSITech), 533–536 (IEEE, 2017).

Li, X. et al. Adaptive convergent visibility graph network: an interpretable method for intelligent rolling bearing diagnosis. Mech. Syst. Signal Process. 222, 111761 (2025).

Li, X. et al. Energy-propagation graph neural networks for enhanced out-of-distribution fault analysis in intelligent construction machinery systems. IEEE Internet Things J. (2024).

Funding

This work is supported by the National Key Research and Development Program of China (2019YFB1705300), the Fundamental Research Funds for the Central Universities (Nos. JZ2020HGTB0035), the National Natural Science Foundation of China (Nos. 71801071, 71922009, 72071056, 71871080), Base of Introducing Talents of Discipline to Universities for Optimization and Decision-making in the Manufacturing Process of Complex Product (111 project), Hefei University talent research fund project (23RC35), the University excellent young talents support program project of Anhui Province (No. gxyqZD2022110), and the Anhui Province University Research Project (2024 AH053046, 2024 AH051615).

Author information


Contributions

Junying Hu and Huan Xu wrote the main manuscript text. Jiang Xu provided programming support. Ke Zhang prepared figures and tables. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical and informed consent for data used

The data used in this study were publicly available data sets on the Internet. No human or animal subjects were involved.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hu, J., Jiang, X., Xu, H. et al. Fault prediction of aircraft engine based on adaptive hybrid sampling and BiLSTM. Sci Rep 15, 13726 (2025). https://doi.org/10.1038/s41598-025-98756-9


DOI: https://doi.org/10.1038/s41598-025-98756-9