Abstract

Source images and predicted target images differ in their image features, which creates difficulties when heterogeneous transfer learning is applied to image recognition; for example, noise appears during recognition and must be reduced. To address this, the Image Feature Data Association Fusion Heterogeneous Transfer Learning Model is proposed. Its Image Feature Data Learning and Definition of Image Feature Data Consistency modules adopt the normalization layer of a neural network to extract 3 types of features, namely, global features, feature space, and feature labels, and the Rudin-Osher-Fatemi method is used for noise reduction. A correlation coefficient is computed for image feature vectors, and effective correlation mapping matrices are constructed through multi-dimensional vectorized correlation. The feature vectors and correlation coefficients are then aggregated using the Batch Normalization Layer to assess correlations between image features. Furthermore, to keep the variance between the features of the source images and the target images at a minimum and the common space of the transfer mapping features at a maximum, the Definition of Image Feature Data Consistency module controls parameter separability by designing a constraint matrix with a minimum variance score for the source images and the target images. The transfer mapping matrix is then regularized to create the loss function that consistently trains the image features and constructs the heterogeneous transfer learning module. Once the transfer learning weight matrix is obtained, a consistency constraint strategy for the image features is introduced to update the image features in real time, and a Gaussian kernel function is employed to control the noise generated in transfer learning.
The results indicate that, in the Image Feature Data Learning module with the Rudin-Osher-Fatemi denoising strategy, the SNR is greater than 35 dB and the edges in the image feature map are clearer and contain less noise.

Introduction

How successfully heterogeneous transfer learning models can be applied to image recognition usually depends on the similarity of image features1,2,3,4,5. In general, however, the features or feature space of source images and predicted target images are not consistent when feature mapping is performed between them, so research on heterogeneous transfer learning has high practical value for this problem. In the feature extraction and recognition of some captured images, a transfer process cannot accurately match source image features with target image features of the same kind or dimensions (the spatial structure of images), and heterogeneous transfer learning therefore plays a vital role6,7,8,9. In image recognition, the distribution of image features in transfer learning differs when the source image features or feature space differ from those of the target images, and the pre-processing effect declines sharply when a large gap exists in image features. Several types of gaps may undermine the success rate of transfer matching between the features of the source images and the target images. Because of these feature differences, minimizing the gaps plays a significant role in successful image recognition. For example, when correlations between the features of the source images and the predicted target images are calculated and used, the gap drops dramatically, which substantially improves the overall performance of the transfer learning model. Consequently, accurate image recognition requires the difference between the source images and the predicted target images to be as small as possible when a transfer learning model is applied.

In further research and applications of heterogeneous feature transfer learning, 3 methods are mainly used, namely, function-based transfer learning3,4,8,9,13,14,15, subspace transfer learning11,12, and fuzzy inference transfer learning10,16. Function-based transfer learning improves the learning of a new task by transferring knowledge from a related task that has already been learned, using the available knowledge and model parameters to support the training of a new model; thus, the model’s learning efficiency and speed increase. Subspace transfer learning uses data in the source domain to assist in training the model in the target domain: it aligns the feature spaces of the source domain and the target domain so that knowledge in the source domain can be better transferred to the target domain, which effectively improves the model’s generalization capability. Fuzzy inference transfer learning, on the other hand, transfers knowledge between different domains and has advantages in handling imprecise or fuzzy data; it can better deal with uncertainty, vagueness, and imprecision in the data, thus also improving the model’s generalization capability.

However, the difficulties that need to be resolved when heterogeneous transfer learning is applied to image recognition fall into 3 groups: (1) the image features follow different distributions, (2) the heterogeneity of image features is hard to determine reliably, and (3) the noise is difficult to control.

In this research, the image features, feature space, and feature labels are clearly defined by establishing and computing the associations among them, and the discrepancy between image features is controlled by introducing a noise control strategy.

The research focuses on resolving 3 problems: feature learning from source images and predicted target images, feature data label consistency in transfer learning, and feature label updating. The Image Feature Data Learning (IFDL) module uses the Rudin-Osher-Fatemi (ROF) method to control noise. Once the global features, feature space, and feature labels of the source images and the predicted target images are defined, the association between the 4 sets of vectors is established, and the reliability of their definitions is confirmed through correlation verification. The Definition of Image Feature Data Consistency (DIFDC) module establishes the association between the source images and the predicted target images and controls the differences between the 3 kinds of feature sets, namely, features, feature space, and feature labels, so that the features of the source images are accurately transferred to and matched with the features of the predicted target images. Consistent transfer learning is thus completed, and the features, feature space, and feature labels of the images are updated to control noise. The proposed method, the Image Feature Data Association Fusion Heterogeneous Transfer Learning Model (IFDAFHTL), combines the 2 modules with a noise-reduction scheme. More recent research can be found in26,27,28,29,30.

The construction of a heterogeneous transfer learning model

Image feature data learning (IFDL) in source and predicted target images

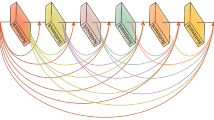

To constrain the differences in the distribution of features between the source images and the predicted target images, the IFDL is introduced to enhance correlations between the 2 types of image features. It consists of 2 sub-units, namely, Hierarchical Representation of Image Features (HRIF) and Image Feature Association Learning (IFAL). The HRIF has a 3-layer structure, comprising description layers for the image features, the image feature space, and the image feature labels, and is capable of training the image features, feature space, and feature labels of source images and predicted target images. The IFAL, on the other hand, completes 3 tasks: association operation, association aggregation, and evaluation of the association effect between the features of the source images and those of the recognized target images. The internal structure and process flow of the IFDL module are shown in Fig. 1.

The description layer of the image features acquires the global features of the source images and predicted target images. First, Convolutional Neural Networks (CNN) extract image features through forward learning. Then, Rudin-Osher-Fatemi (ROF) denoising is applied to the extracted image features17. Finally, the global features of the source images and predicted target images are obtained. The ROF method uses a derivative operation governed by a parameter to control the smoothness of the image feature gradient, which effectively protects the edge features in the image features.
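To illustrate the kind of edge-preserving smoothing that ROF performs, the following is a minimal sketch on a 1-D signal rather than on CNN feature maps. It minimizes a smoothed version of the ROF energy by plain gradient descent; the function names `rof_denoise_1d` and `total_variation`, the parameters `weight`, `eps`, and `iters`, and the test signal are all assumptions made for illustration and are not taken from the paper.

```python
import math


def rof_denoise_1d(signal, weight=0.5, eps=1e-2, iters=500):
    """Gradient descent on a smoothed 1-D ROF energy (illustrative only).

    Energy: 0.5 * sum((u - f)^2) + weight * sum(sqrt((u[i+1] - u[i])^2 + eps))
    """
    f = list(signal)
    u = list(signal)
    n = len(u)
    # Step size 1/L, where L bounds the curvature of the smoothed energy.
    step = 1.0 / (1.0 + 4.0 * weight / math.sqrt(eps))
    for _ in range(iters):
        # Data-fidelity gradient keeps u close to the noisy input f.
        grad = [u[i] - f[i] for i in range(n)]
        for i in range(n - 1):
            d = u[i + 1] - u[i]
            # Derivative of the smoothed absolute difference.
            g = weight * d / math.sqrt(d * d + eps)
            grad[i] -= g
            grad[i + 1] += g
        u = [u[i] - step * grad[i] for i in range(n)]
    return u


def total_variation(u):
    """Sum of absolute differences between neighbouring samples."""
    return sum(abs(u[i + 1] - u[i]) for i in range(len(u) - 1))


# Hypothetical noisy step edge: flat near 0, then flat near 1.
noisy = [0.1, -0.1, 0.1, -0.1, 0.1, 0.9, 1.1, 0.9, 1.1, 0.9]
clean = rof_denoise_1d(noisy)
```

In this toy setting the oscillations inside each flat region are strongly damped while the jump between the two regions survives, which is the behaviour the text attributes to ROF. A larger `weight` smooths more aggressively at the cost of shrinking the edge contrast.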

The description layer of the image feature space is responsible for applying constraints to the global feature space of source images and predicted target images. After the convolutional kernel defined by the image feature space is normalized, a combination of a Batch Normalization Layer (BNL) and a Convolutional Layer (ConvL) is introduced and trained, again through forward learning, to extract the feature space of source images and predicted target images. The BNL keeps the distributions of the input features of source images and predicted target images stable, so learning and training efficiency improve and the optimal image feature space is obtained with quick convergence. In addition, the BNL helps prevent vanishing gradients when the model is trained with a saturating nonlinear activation function. In combination with a normalized convolutional kernel, real-time regularization can be achieved without repeated initialization of the model parameters.
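The stabilizing effect of batch normalization described above can be seen in a small sketch. It applies the standard batch-normalization formula to two hypothetical feature batches with very different scales; the function name `batch_norm`, the parameters `gamma`, `beta`, and `eps`, and the sample values are assumptions for illustration, and the paper's actual BNL operates on convolutional feature maps.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Standard batch normalization over a 1-D batch of activations."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


# Hypothetical source and target activations on very different scales.
source_feats = [10.0, 12.0, 14.0, 16.0]
target_feats = [0.1, 0.2, 0.3, 0.4]

norm_source = batch_norm(source_feats)
norm_target = batch_norm(target_feats)
```

After normalization both batches have approximately zero mean and unit variance, so later layers see comparable input distributions regardless of the original scale.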

The description layer of the image feature labels acquires the feature labels of the source images and predicted target images. Using the global features and feature space of the source images and predicted target images extracted by the previous 2 layers, a reverse learning process is used to train the 2 models in the description layer of the image features and the description layer of the feature space. This verifies that the global features and feature space of the 2 types of images can be acquired. An Improved Nonparallel Support Vector Machine (INPSVM) is introduced to classify the global features and feature space and then to complete the definitions of the 2 types of image feature labels, so that the defined labels contain the complete image features and feature space18. Together, these 3 layers constitute the HRIF sub-unit.

The main tasks accomplished by the IFAL sub-unit are: (1) Establish the association between the source image and the predicted target image in terms of global features, feature space, and feature labels, and obtain the corresponding 3 correlation coefficients. (2) Construct the association between global features and feature space, global features and feature labels, and feature space and feature labels of the source images and the predicted target images, respectively, and obtain the corresponding 3 correlation coefficients. (3) Combine the six correlation coefficients. The correlation coefficients are aggregated directly using the BNL in the CNN to obtain 4 correlations of the source and predicted target images: between global features, between global features and feature space, between global features and feature labels, and between feature space and feature labels. The influencing factors involved in the 4 correlations are shown in Fig. 1. (4) Obtain the rankings of the 4 correlations, as well as the outputs of the feature mapping from the source images to the predicted target images, by constructing and training a network module that combines a Sigmoid activation function, a Fully Connected (FC) layer, and multidimensional feature mapping (MIFMM). (5) Conclude that the global features, feature space, and feature labels extracted from the source images and predicted target images have high accuracy once the rankings of the 4 correlations are attained; otherwise, rerun the IFDL until accurate image features are obtained.

Let the source and predicted target images be denoted by \(X\) and \(Y\), respectively. Let the global features of the images be denoted by \(W(X)\) and \(W(Y)\), the feature spaces by \(V(X)\) and \(V(Y)\), and the feature labels by \(B(X)\) and \(B(Y)\). Let the correlation coefficients between the source image and the predicted target image in terms of global features, feature space, and feature labels be represented by \({A_1}(X,Y)\), \({A_2}(X,Y)\), and \({A_3}(X,Y)\), respectively. Let the correlation coefficients between global features and feature space, global features and feature labels, and feature space and feature labels of the source image and the predicted target image be denoted by \(C(W,V)\), \(C(W,B)\), and \(C(V,B)\), respectively. The global features, feature space, and feature labels of the images are obtained through training of the constructed network model, and the correlation coefficients are acquired from a similarity calculation on the vectors. Because the correlation coefficients take image feature vectors as parameters, a metric such as Euclidean distance or cosine similarity would yield only the correlations between feature vectors within the independent levels of the HRIF sub-unit, whereas the correlation of the feature vectors across the three-layer structure must also be defined. Therefore, the definition of the correlation coefficient for association vectors19 is used to establish the correlation of feature vectors within the same layer and among different layers, respectively. Equation (1) is attained using the definition of the correlation coefficient between different spaces:

where \({\left| \cdots \right|^2}\) denotes the squared difference operation over all the constituent elements of the feature vectors, \({\left\| \cdots \right\|_2}\) denotes the \({l_2}\)-norm operation over all the constituent elements of the feature vectors, and \({H_1}\) to \({H_6}\) denote the mapping matrices that establish the correlation between the corresponding 2 feature vectors in each of the six correlation coefficients, through which the correlation scores of the feature vectors can be computed. \({H_1}\) to \({H_6}\) are then found from the definition of the correlation of multidimensional vectors. The matrices \(W(X)\), \(W(Y)\), \(V(X)\), \(V(Y)\), \(B(X)\), \(B(Y)\) and \({H_1}\) to \({H_6}\) are presented by

According to the definition of the correlation of multidimensional vectors, six equalities can be established, namely, \(W(X)=W(Y) \times {H_1}\), \(V(X)=V(Y) \times {H_2}\), \(B(X)=B(Y) \times {H_3}\), \((W(X),V(X))=(W(Y),V(Y)) \times {H_4}\), \((W(X),B(X))=(W(Y),B(Y)) \times {H_5}\), and \((V(X),B(X))=(V(Y),B(Y)) \times {H_6}\). If all elements of \({H_1}\) are non-zero, \({H_1}\) represents a definite correlation between \(W(X)\) and \(W(Y)\); the more elements of \({H_1}\) that are non-zero, the stronger the correlation between \(W(X)\) and \(W(Y)\). If all elements of \({H_1}\) are zero, \(W(X)\) and \(W(Y)\) are not correlated. The correlation of the other five sets of parameters is determined in the same way, from whether all elements of \({H_2}\) to \({H_6}\) are non-zero or from how many of them are zero. Based on this principle, \({H_1}\) to \({H_6}\) can be determined using an iterative polynomial solver, or a deep learning network model can be used to define the input and output vectors, after which \({H_1}\) to \({H_6}\) are determined by training.
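As a rough illustration of how a mapping matrix such as \({H_1}\) could be found iteratively from \(W(X)=W(Y) \times {H_1}\), the sketch below fits the matrix by gradient descent on the squared residual and then reports the fraction of non-zero entries, following the non-zero-count heuristic described above. Plain gradient descent stands in here for the iterative solver or trained network mentioned in the text; the helper names `matmul`, `fit_mapping`, and `nonzero_fraction`, the tolerance `tol`, and the toy matrices are assumptions, not the paper's procedure.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def fit_mapping(w_y, w_x, lr=0.1, iters=500):
    """Fit H in w_x ~= w_y @ H by gradient descent on 0.5 * ||w_y @ H - w_x||^2."""
    rows, cols = len(w_y[0]), len(w_x[0])
    h = [[0.0] * cols for _ in range(rows)]
    w_y_t = [list(col) for col in zip(*w_y)]
    for _ in range(iters):
        pred = matmul(w_y, h)
        resid = [[pred[i][j] - w_x[i][j] for j in range(cols)]
                 for i in range(len(w_x))]
        grad = matmul(w_y_t, resid)
        h = [[h[i][j] - lr * grad[i][j] for j in range(cols)]
             for i in range(rows)]
    return h


def nonzero_fraction(h, tol=1e-6):
    """Share of entries whose magnitude exceeds tol (a proxy for correlation strength)."""
    flat = [v for row in h for v in row]
    return sum(abs(v) > tol for v in flat) / len(flat)


# Hypothetical target features W(Y) and source features W(X) = W(Y) x H1.
w_y = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_x = [[2.0, 0.0], [0.0, 3.0], [2.0, 3.0]]

h1 = fit_mapping(w_y, w_x)
strength = nonzero_fraction(h1)
```

For this toy data the fitted matrix is close to diagonal, so half of its entries are effectively zero. In practice the step size `lr` has to be small relative to the largest eigenvalue of the Gram matrix of `w_y`, otherwise the iteration diverges.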

With the six correlation coefficients defined, the BNL in the CNN is used to combine feature values with correlation coefficients. First, the global feature correlation \({S_W}(X,Y)\), the feature space correlation \({S_V}(X,Y)\), and the feature label correlation \({S_B}(X,Y)\) of the source images and the predicted target images are determined. These 3 correlations are trained by aggregating the correlations between the global features, feature space, and feature labels of the source image and the predicted target image, respectively. The training parameters share the same attributes and use the Sigmoid activation function and the arithmetic rules of the BNL. The training process can be expressed by

In Eq. (3), Sigmoid denotes the sigmoid activation function, which is nonlinear, and its main input parameters are listed. BNL denotes processing with a normalized convolutional kernel, and its main input parameters are also listed. The → symbol describes the training process.

The training of the 3 cross-feature correlations, namely, the correlation between image features and feature space \({S_{W - V}}(X,Y)\), the correlation between image features and feature labels \({S_{W - B}}(X,Y)\), and the correlation between feature space and feature labels \({S_{V - B}}(X,Y)\), involves not only parameters such as the corresponding global features, feature space, feature labels, correlation mapping matrices, and correlation coefficients, but also the influencing factors of the 3 correlations, namely, \({S_W}(X,Y)\), \({S_V}(X,Y)\), and \({S_B}(X,Y)\). Thus, more relevant parameters need to be added to the Sigmoid function and the BNL for the training process, which is defined by

Finally, the Sigmoid activation function and a fully connected (FC) structure are used to train on the 4 correlations, namely, \({S_W}(X,Y)\), \({S_{W - V}}(X,Y)\), \({S_{W - B}}(X,Y)\), and \({S_{V - B}}(X,Y)\), to evaluate the correlation effect. The results are then obtained and ranked. The feature mapping from the source images to the predicted target images can also be rendered by using multidimensional feature mapping.

The primary objective of the IFDL is to achieve higher accuracy for the three types of features, namely, global features, feature space, and feature labels, of both the source images and the predicted target images. The IFDL determines the accuracy of the 4 types of features. In designing the IFDL based on 4 different feature types, the differences in the 3 datasets constructed by the heterogeneous transfer model, namely, image features, feature space, and feature labels, are taken into account in advance. A noise control strategy is introduced in the global feature extraction stage for the source images and the predicted target images. These mechanisms lay a good foundation for constructing the main part of the subsequent heterogeneous transfer model. However, the IFDL does not completely resolve the problems of controlling the distribution differences of features between the source images and the predicted target images and of determining whether the feature difference between them is heterogeneous.

Image feature data consistency learning and feature data updating

Heterogeneous transfer learning models map features between a source image and a predicted target image in the image recognition process, usually by using Principal Component Analysis (PCA)20. PCA is used to detect the differences between the image features and the image feature space of the source images and the predicted target images. The feature analysis of a source image enforces consistency with the spatial dimensions of the predicted target image features by adjusting the feature space dimensions of the source images, which causes a loss of image feature information before the source image features are mapped to and matched with the predicted target image features. The main idea here is to resolve the design of different mapping matrices for the source image features and the predicted target image features, and then to clearly define the distributional differences among the 3 kinds of datasets, namely, image features, feature space, and feature labels, of the source and predicted target images. When the source and predicted target images differ in image feature classes and in feature space dimensions, a decision strategy for the common space of the mapped features is introduced by designing a mapping matrix, which helps the transfer learning model accurately distinguish and select the common feature space at runtime. Then, the model extracts the predicted target image features and defines the feature labels by training. The independence and separability of the 3 kinds of datasets between the source images and the predicted target images can be achieved by controlling the variance that exists between the 3 kinds of features of the 2 types of images; these variances need to be minimized. By combining the minimized variances of the 3 feature datasets with the maximized common feature space of the constructed mapping features, the distributional differences in the 3 feature datasets between the 2 types of images are analyzed and determined. Finally, the loss function of the transfer learning model is designed to ensure the consistent learning of the 3 types of image feature datasets. Based on the parameters defined in Eq. (1), Eq. (5) presents the construction and solution of the model:

Equation (5) constrains the differences in the 3 kinds of feature datasets, namely, image features, feature space, and feature labels, between the source images and the predicted target images, and ultimately ensures that the feature labels of the source images and the predicted target images are consistent, that is, the 2 types of image feature labels are coherent. This achieves the first stage of consistency between the 2 types of image features and feature space. \(K(W(X,Y),V(X,Y),B(X,Y),P(X),P(Y))\) is defined as the control parameter for the differences in the 3 kinds of datasets between the source images and the predicted target images, namely, the variance of the 3 kinds of datasets, and serves as the final control parameter of the transfer learning model solution. \(P(X)\) and \(P(Y)\) represent the mapping matrices for the source images and the predicted target images, which complete the accurate definition of the differences between the 3 datasets. With the function that determines the differences between the 3 datasets, the accurate selection of the common feature space is ensured when the source image is mapped to the predicted target image. \(K(W(X,Y),P(X),P(Y))\), \(K(V(X,Y),P(X),P(Y))\), and \(K(B(X,Y),P(X),P(Y))\) represent the variances of the 3 kinds of datasets. Listing these 3 parameters separately is the basis for the independence and separability of the 3 datasets, and the solution is reached by driving the variance of the 3 kinds of features to its minimum. \(L(W(X,Y),V(X,Y),B(X,Y),P(X),P(Y))\) is a control parameter for the independence and separability of the 3 kinds of feature datasets; its solution is obtained by calculating the maximum score of the mapped common space of the 3 kinds of feature datasets, and it is used to analyze and determine the differences among them. \(\alpha (P(X),P(Y))\) is a term that regularizes the mapping matrices of the source images and the predicted target images, respectively. It controls the overfitting that occurs in the transfer learning model and is an important component of the model's loss function.

The solution for the minimum variance of the image features \(K(W(X,Y),P(X),P(Y))\) between the source images and the predicted target images can be expressed by

Equation (6) determines the minimum variance of the image features between the source images and the predicted target images. The variance is calculated from the average difference between the 2 types of image features over all classes. For a more comprehensive and accurate comparison of the differences, let \(W({X_i})\) be the \(i\)-th class feature of the source image, \(i \in I\), and let \(W({Y_j})\) be the \(j\)-th class feature of the predicted target image, \(j \in J\). \({\left\| \cdots \right\|^2}\) represents the squared vector norm; \(tr\) denotes the trace of the matrix, that is, the sum of the eigenvalues; \(\tau\) denotes the matrix transpose; \(P(X,Y)\) represents the loss control matrix of the image feature mapping, which takes into account both the source image features and the predicted target image features in the mapping space; \({D_1}(X,Y)\) represents the minimum variance constraint matrix of the source image features and predicted target image features; and \({\left\| \cdots \right\|_2}\) designates the \({l_2}\)-norm operation.
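Eq. (6) itself is not reproduced here, so the following is only a sketch of one common way to measure such a discrepancy: the squared distance between the mean source feature and the mean target feature after each is mapped into a common space by its own matrix. The function names `project`, `mean_vector`, and `mean_discrepancy`, the separate matrices `p_source` and `p_target`, and the toy data are assumptions for illustration and should not be read as the paper's exact formula.

```python
def project(p, x):
    """Map feature vector x into the common space via P^T x (p has len(x) rows)."""
    return [sum(p[r][c] * x[r] for r in range(len(x)))
            for c in range(len(p[0]))]


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def mean_discrepancy(source, target, p_source, p_target):
    """Squared distance between mean mapped source and target features."""
    ms = mean_vector([project(p_source, x) for x in source])
    mt = mean_vector([project(p_target, y) for y in target])
    return sum((a - b) ** 2 for a, b in zip(ms, mt))


# Heterogeneous toy features: 3-D source, 2-D target, 2-D common space.
source = [[1.0, 0.0, 0.0], [3.0, 0.0, 0.0]]
p_source = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
p_target = [[1.0, 0.0], [0.0, 1.0]]

aligned = mean_discrepancy(source, [[2.0, 0.0], [2.0, 0.0]], p_source, p_target)
shifted = mean_discrepancy(source, [[0.0, 0.0], [0.0, 0.0]], p_source, p_target)
```

The score is zero when the mapped means coincide and grows as they move apart, which is the quantity a minimum-variance constraint of this kind would drive down during training.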

Similarly, the solutions for \(K(V(X,Y),P(X),P(Y))\) and \(K(B(X,Y),P(X),P(Y))\) can be expressed by

The solution of the control parameter for the independence and separability of the source images from the predicted target images, \(L(W(X,Y),V(X,Y),B(X,Y),P(X),P(Y))\), can be expressed by

Equation (8) analyzes and determines the differences available in the 3 kinds of datasets extracted from the source images and the predicted target images based on the results of Eqs. (6) and (7) in the maximum common space of the source images mapped to the predicted target images, which provides the foundation for the subsequent construction of the loss function of the transfer learning model.

The regularized solution \(\alpha (P(X),P(Y))\) to the mapping matrix of the source images and the predicted target images can be expressed by

Equation (9) regularizes the mapping matrices of the 2 types of images, which contain parameters of the 3 types of datasets: image features, feature space, and feature labels. It is obtained by constructing the loss function with \({l_2}\)-norm arithmetic. \(\lambda\) represents the regularization weight, determined based on Lagrange multipliers, and \(\left\| \cdots \right\|_{2}^{2}\) represents the squared \({l_2}\) norm of a vector.
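A squared-\({l_2}\) penalty of this kind can be sketched in a few lines. Because Eq. (9) is not reproduced here, the form below, a single weight `lam` multiplying the sum of the squared entries of both mapping matrices, is an assumption, as are the function names `squared_l2` and `mapping_regularizer` and the example matrices.

```python
def squared_l2(matrix):
    """Sum of squared entries of a matrix given as a list of rows."""
    return sum(v * v for row in matrix for v in row)


def mapping_regularizer(p_x, p_y, lam=0.5):
    """Assumed penalty: lam * (||P(X)||^2 + ||P(Y)||^2)."""
    return lam * (squared_l2(p_x) + squared_l2(p_y))


# Hypothetical mapping matrices for the source and target images.
penalty = mapping_regularizer([[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0]], lam=0.5)
```

Adding such a term to the loss discourages large entries in the mapping matrices, which is the overfitting control the text describes; the weight trades off fit against the size of the mappings.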

Combined with the objective function described in Eq. (5), using the operation process and operation results from Eqs. (6) and (8), the loss function of the transfer learning model is constructed, and the loss function of the consistent training for the 3 kinds of datasets in the 2 types of images can be expressed by

Equation (10) is used to construct images based on the extracted source image features after training the input source images to the predicted target images for transfer learning. The reconstructed image is the target image to be predicted.

The construction process of the DIFDC is presented above and can extract 3 kinds of datasets, namely, image features, feature space, and feature labels of the source images and the predicted target images, and complete the recognition process of the input source image.

In the DIFDC, \(W({X_i})\) is defined as the \(i\)-th class feature data of the source image, \(V({X_i})\) as the \(i\)-th class feature space data of the source image, and \(B({X_i})\) as the \(i\)-th class feature label data of the source image, for \(i \in I\). Likewise, \(W({Y_j})\) is defined as the \(j\)-th class feature data of the predicted target image, \(V({Y_j})\) as the \(j\)-th class feature space data of the predicted target image, and \(B({Y_j})\) as the \(j\)-th class feature label data of the predicted target image, for \(j \in J\). The source image feature data set \(Z(X)\) and the predicted target image feature data set \(Z(Y)\) can be denoted as:

Equation (11) defines the complete information of the 3 kinds of image feature datasets and lists their categories in the 2 types of images, which facilitates real-time updating of the 3 kinds of image feature datasets for learning and training in the DIFDC. The key control element for real-time updating of image features, feature space, and feature labels is the transfer design matrix of the transfer learning model. Let the transfer design matrix be denoted by \(Q({X_i} \to {Y_j})\), let the weighting matrix in the transfer learning process be represented by \({\sigma _l}({X_i} \to {Y_j})\), and let \(l\) index the \(l\)-th weight allocation in model transfer learning, \(l \in L\). The transfer design matrix with autonomous updates can then be expressed by

The parameter \(Q({X_i} \to {Y_j})\) enforces constraint consistency of the corresponding 3 image feature datasets between the source images and the predicted target images during DIFDC transfer learning training. The constraint consistency is achieved by normalizing the weight matrix \({\sigma _l}({X_i} \to {Y_j})\), which ensures that the corresponding 3 feature datasets of the source and predicted heterogeneous target images have a higher degree of similarity. The parameter \({\sigma _l}({X_i} \to {Y_j})\) establishes a relationship between the 3 types of image feature datasets of the 2 types of images, ensuring consistency among them during DIFDC transfer learning training. It is found by constructing a mathematical model using a Gaussian kernel function21, in which the parameter \(\mu\) acts as a smoothing control factor for the 3 image feature datasets and serves to control the noise. \({\left\| {Z(X) - Z(Y)} \right\|_2}\) represents the Euclidean distance between the 3 complete image feature datasets of the source images and the predicted target images, computed using the \({l_2}\) norm.
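The role of the Gaussian kernel and of weight normalization can be illustrated with a short sketch. The exact expression in the paper is not reproduced here, so the kernel form \(\exp(-\|Z(X)-Z(Y)\|_2^2 / (2\mu^2))\), the sum-to-one normalization, the function names `gaussian_weight` and `normalized_weights`, and the sample vectors are all assumptions.

```python
import math


def gaussian_weight(z_x, z_y, mu=1.0):
    """Gaussian kernel of the Euclidean distance between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(z_x, z_y))
    return math.exp(-sq_dist / (2.0 * mu ** 2))


def normalized_weights(z_x, candidates, mu=1.0):
    """Kernel weights from one source vector to several targets, scaled to sum to 1."""
    raw = [gaussian_weight(z_x, z_y, mu) for z_y in candidates]
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical flattened feature sets Z(X) and three candidate Z(Y).
z_source = [1.0, 2.0, 3.0]
z_targets = [[1.0, 2.0, 3.0], [2.0, 2.0, 3.0], [4.0, 6.0, 3.0]]

weights = normalized_weights(z_source, z_targets, mu=1.0)
```

Targets that lie closer to the source in feature space receive larger weights, and a larger `mu` flattens the weights so that distant or noisy targets are penalized less sharply.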

Based on the major components and key processing strategies for constructing the DIFDC discussed above, its main features are as follows. When the source image and the predicted target image are mapped into a shared feature space and their image features do not belong to the same category, the inevitable differences between the source image and predicted target image feature datasets can be effectively controlled. When the image features belong to the same category, the possible differences between the source image and predicted target image feature datasets can likewise be effectively controlled. The internal structure and process flow of the DIFDC are shown in Fig. 2.

The model structure and the implementation of the algorithm

The proposed model, the Image Feature Data Association Fusion Heterogeneous Transfer Learning Model (IFDAFHTL), is composed of 2 modules, the IFDL and the DIFDC. The DIFDC embodies heterogeneous transfer learning, and the IFDL is the key strategy invoked by the DIFDC operation. The IFDL can pinpoint the differences in the 3 kinds of datasets, namely, image features, feature space, and feature labels, of the source and predicted target images. Before transfer learning training, the IFDL effectively constrains the range of the distribution of differences in the common feature space of the transfer learning mapping and controls the noise by minimizing the constraints on the 3 types of feature datasets. Once the transfer learning mapping matches the maximum extension of the common feature space, the DIFDC ensures that the corresponding 3 kinds of image feature datasets in the source and predicted target images remain consistent throughout transfer learning, and it further improves the control of the difference distribution when the transfer learning mapping is matched to the common feature space. The association strategy between the 3 image feature datasets in the source images and the predicted target images is used in both the IFDL and the DIFDC. The framework and image recognition process of the IFDAFHTL model are shown in Fig. 3.

The input and output of the IFDAFHTL Algorithm.

Inputs: the sample set of source images, the sample set of the predicted target image library, the IFDL module, the DIFDC module, and the hyperparameters.

Output: source image edge features, predicted target image edge features, edge features matched by mapping between the source images and the predicted target images, and recognized images.

The steps of the IFDAFHTL:

Step 1: Input the source image sample set, call the predicted target image sample library, start the IFDL and the DIFDC, and input the hyperparameters;

Step 2: Extract the 3 types of feature datasets, namely, global edge features, feature space, and feature labels, from the source and predicted target images, respectively. Use the IFDL to complete the noise control processing. Use Eqs. (1) and (2) to calculate 3 sets of correlations between the source images and the predicted target images in terms of global edge features, feature space, and feature labels, and then calculate 3 sets of correlations between the 3 types of feature datasets. Obtain six correlation coefficients for the six sets. Obtain 4 correlation parameters using the BN layer by aggregating the 3 feature datasets, the six sets of correlations, and the six correlation coefficients through grouping. Apply Eqs. (3) and (4) and the linear Sigmoid activation function to obtain and rank the outcomes of the 4 correlations, and then utilize the MIFMM multidimensional feature mapping model to output the result of feature mapping matching from the source image to the predicted target image. Check whether the correlations and the feature mapping matching results are satisfactory.

Step 3: If the results are not satisfactory, the IFDL is restarted until the evaluation and matching results become satisfactory. The learning and training of all machine learning network models applied in the IFDL are also completed.

Step 4: The edge features, feature space, and feature labels of the source and predicted target images are extracted using the DIFDC to obtain a set of edge features, a set of feature space, and a set of feature labels, a total of 3 sets of image feature datasets for the source and predicted target images. Equations (6) and (7) resolve the variance minimization for each group of feature datasets. Equation (8) is used to determine the maximum common feature space of the transfer mapping for the 3 groups of image feature datasets. Equation (9) is used to obtain the substantial edge features, feature space, and feature labels of the source images, and the IFDL is run to validate these 3 source image feature datasets. If the validation fails, the DIFDC is rerun to repeat the previous processing in this step until the validation passes. By applying Eqs. (10–12), the accurate 3 source image feature datasets are input to train the constructed DIFDC. The predicted target image edge features, feature space, and feature labels that have been processed by minimizing variance are input to validate the trained DIFDC to ensure that the DIFDC is reliable and stable. If the verification fails, the IFDL is enabled to verify the edge features, feature space, and feature labels of the source images and the predicted target images, and if it fails again, the DIFDC is rerun to repeat the previous processing in this step until the verification passes. At this point, the training of all the machine learning network models applied in the DIFDC is completed. The IFDL is further revalidated, which also leads to the solution of Eq. (5).

Step 5: The model in Fig. 3 is activated to complete the classification of the exact source image edge features extracted in the third step, and a new image is reconstructed using the inverse convolution operation. The resulting reconstructed image is the target resultant image for recognition. Applying the Single-scale Structural Similarity Index (SS-SSIM), a comparative analysis is performed between the reconstructed images and the target images in the predicted target image library. If the structural similarity ratio of the 2 images is close to 1, the image recognition process is completed. If it is less than 0.8, the process from the first to the fourth step is rerun.

Step 6: Output all the required results.
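The acceptance check in Step 5 can be illustrated with a minimal sketch. It assumes the standard SSIM formula evaluated once over whole-image statistics (a single window) rather than over local windows, uses the conventional constants 0.01 and 0.03, and treats any score of at least 0.8 as acceptable. The function names `global_ssim` and `step5_decision` and the pixel values are hypothetical and are not taken from the paper.

```python
def global_ssim(x, y, dynamic_range=255.0):
    """SSIM computed from whole-image statistics of two flat pixel lists."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("images must be non-empty and of equal size")
    c1 = (0.01 * dynamic_range) ** 2  # stabilizer for the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizer for the contrast/structure term
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    numerator = (2 * mean_x * mean_y + c1) * (2 * cov_xy + c2)
    denominator = (mean_x ** 2 + mean_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def step5_decision(ssim_value, rerun_below=0.8):
    """Return 'rerun' when SSIM is under the threshold, else 'accept'.

    Assumption: scores between 0.8 and 1 are accepted; the text only fixes
    the two ends ("close to 1" and "less than 0.8").
    """
    return "rerun" if ssim_value < rerun_below else "accept"


# Hypothetical grayscale pixel values, for illustration only.
reconstructed = [12, 40, 200, 180, 90, 60]
target = [10, 42, 198, 183, 88, 61]
score = global_ssim(reconstructed, target)
print(round(score, 4), step5_decision(score))
```

A windowed SSIM, averaged over local patches, would be closer to common practice for full images; the single-window form is used here only to keep the decision rule visible.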

Experiments and discussion

Experiments

To verify the reliability and accuracy of the proposed model in image recognition, four source input images are selected from four benchmark datasets: a laptop computer image from Office-31, a bicycle image from Caltech-256, a zebra image from NUS-WIDE, and a flower image from ImageNet. Office-31 contains 31 object categories in three domains and covers objects commonly encountered in office settings, such as keyboards, file cabinets, and laptops. Caltech-256 contains 30,607 real-world images of different sizes spanning 257 classes, and each class is represented by at least 80 images. NUS-WIDE contains 269,648 web images with a total of 5,018 tags collected from Flickr. ImageNet contains 14,197,122 images annotated according to the WordNet hierarchy. The image sets in these four datasets that correspond to the four source images are set as the predicted target image library.

The experiments were run on an AMD Ryzen 5 5600G processor with Radeon Graphics (3.90 GHz) and 16.0 GB of RAM. The operating system was 64-bit Windows 10, and the experimental software was MATLAB 2014a.

This article uses ImageNet to pre-train the experimental network model with a learning rate of 0.0001, a batch size of 256, the Adam optimizer, and 150 iterations.

Four experiments are conducted to validate the performance of the proposed model.

Experiment 1 examines the noise control applied to the features that the IFDL extracts from the source images and predicted target images, and verifies its effect on the extracted image features to investigate whether the ROF denoising strategy works well. Then, the smoothness control factor \(\mu\) is tested to determine the effect of the image features on noise control in transfer learning of the DIFDC.

Experiment 2 tests the feature extraction and feature data association performance of the images in the IFDL. First, the correlations between global features, between global features and feature space, between global features and feature labels, and between feature space and feature labels of the source image and the predicted target image are calculated to check whether the six correlation coefficients \({H_1} - {H_6}\) are valid, and the rankings of the 4 correlation parameters \(S_{W}(X,Y)\), \(S_{W - V}(X,Y)\), \(S_{W - B}(X,Y)\), and \(S_{V - B}(X,Y)\) are checked. Then, the IFDL’s feature extraction performance on the image edges is tested against a Deep Belief Network (DBN) [22], Deep Stacked Denoising Sparse Autoencoders (DS-DSA) [23], and a Logarithmic Differential Convolutional Neural Network (LDiffCNN) [24]. The 4 deep learning models are compared to determine which one extracts image features most accurately.

Experiment 3 tests the transfer learning performance of the DIFDC. First, comparative experiments on the differences in edge features between the source images and the predicted target images are conducted for the case where differences exist in the edge feature space between the source images and the predicted target images. Second, the impact of the key control parameters in the DIFDC on the accuracy of the transfer learning mapping is validated.

Experiment 4 performs a comparison among the 5 algorithms.

Results and performance evaluation

Experiment 1

The ROF denoising strategy is introduced in the IFDL to control noise during image feature extraction. The Signal-to-Noise Ratio (SNR) is applied as the noise control evaluation metric when extracting image features from the subject targets in the 4 different image categories. The larger the SNR value, the better the noise control effect on the images. Table 1 presents the experimentally detected noise control effect.

The noise control effect with the ROF denoising strategy is better than without it. In the feature extraction of the 4 different image categories, the SNR scores differ little, which indicates that the ROF denoising strategy is stable.
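As an illustration of the metric, the following sketch computes an SNR in decibels as the ratio of reference signal power to residual noise power. This is one common definition and is an assumption here, since the exact SNR formula used for Table 1 is not restated in this section; the function name `snr_db` and the sample values are hypothetical.

```python
import math


def snr_db(reference, estimate):
    """SNR in dB: 10 * log10(signal power / noise power)."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    signal_power = sum(r * r for r in reference)
    noise_power = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    if noise_power == 0:
        return math.inf  # the estimate reproduces the reference exactly
    return 10.0 * math.log10(signal_power / noise_power)


# Hypothetical values: a flat reference and two estimates of it.
reference = [10.0, 10.0, 10.0, 10.0]
noisy = [12.0, 8.0, 12.0, 8.0]
denoised = [11.0, 9.0, 11.0, 9.0]
print(snr_db(reference, noisy), snr_db(reference, denoised))
```

Under this definition, halving the residual amplitude raises the SNR by about 6 dB, which is why a larger SNR value corresponds to better noise control.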

To perform an autonomous update of the matrix with transfer learning of the DIFDC, the Gaussian kernel function is introduced to design the weight matrix \({\sigma _l}({X_i} \to {Y_j})\). The smoothness control factor \(\mu\) of image features plays a vital role in image noise control. The experiment selects 4 different image categories as the source images and 4 image datasets as the predicted target image library. The target images estimated by transfer learning mapping are obtained, and the ideal \(\mu\) score is detected together with the SNR metric for controlling noise. Table 2 presents the outcomes.

Table 2 shows that when \(\mu =0.00\), the score \({\sigma _l}({X_i} \to {Y_j})\) is invalid and the implementation cannot detect the SNR value. When \(\mu =0.10 \to 0.60\), the detected SNR value shows an increasing trend, and when \(\mu =0.60\), the maximum value of SNR is detected in the 4 images estimated by transfer mapping. When \(\mu =0.70 \to 1.00\), the detected SNR value shows a decreasing trend. Therefore, the DIFDC has the image noise control functionality.
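Why \(\mu =0.00\) yields an invalid weight can be seen from a generic Gaussian (RBF) kernel, in which the smoothness factor appears in the denominator of the exponent. The sketch below is an assumption for illustration: the exact form of \({\sigma _l}({X_i} \to {Y_j})\) is defined by the model's own equations, and the names `gaussian_weight` and `weight_matrix` are hypothetical.

```python
import math


def gaussian_weight(x_i, y_j, mu):
    """Generic Gaussian kernel weight exp(-||x_i - y_j||^2 / (2 * mu^2))."""
    if mu <= 0:
        # The exponent divides by mu^2, so the weight is undefined at mu = 0.
        raise ValueError("mu must be positive")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x_i, y_j))
    return math.exp(-squared_distance / (2.0 * mu * mu))


def weight_matrix(source_features, target_features, mu):
    """Pairwise kernel weights between source and target feature vectors."""
    return [[gaussian_weight(x, y, mu) for y in target_features]
            for x in source_features]


# Hypothetical 2-D feature vectors, for illustration only.
source = [[0.0, 0.0], [1.0, 0.5]]
target = [[0.0, 0.1], [0.9, 0.5]]
for row in weight_matrix(source, target, mu=0.6):
    print([round(w, 4) for w in row])
```

In this generic form, a small factor makes the weights fall off sharply with distance, whereas a large factor flattens them toward 1, which is consistent with an intermediate value giving the best trade-off.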

When the noise control strategies are used in both the IFDL and the DIFDC, the average SNR after transfer mapping and image recognition for the 4 different image categories in Tables 1 and 2 reaches 48.33 dB, and the results are better than the image noise control effect when the ROF and the Gaussian kernel function are applied singly. This indicates that the proposed IFDAFHTL model possesses good noise control capability.

Experiment 2

The IFDL can not only extract the features of the source images and predicted target images concurrently, but also implement the association operation between global features, feature space, and feature labels and evaluate its results. The key parameters involved are the mapping matrices of the six correlation coefficients, \({H_1} - {H_6}\), and the values of the 4 correlation parameters, \(S_{W}(X,Y)\), \(S_{W - V}(X,Y)\), \(S_{W - B}(X,Y)\), and \(S_{V - B}(X,Y)\), as well as their ranking results. The dimension of each \({H_1} - {H_6}\) matrix is the same as that of the 4 images, which are all 945 × 630 pixels, which means that the number of vectors in each \({H_1} - {H_6}\) matrix is 945 × 630. If the vector elements in an \({H_1} - {H_6}\) matrix are not all 0, a correlation can be calculated between the features of the six image datasets \(W(X)\), \(W(Y)\), \(V(X)\), \(V(Y)\), \(B(X)\), and \(B(Y)\). The more nonzero vector elements the matrix contains, the greater the correlation between the image feature datasets. The 4 correlation parameters \(S_{W}(X,Y)\), \(S_{W - V}(X,Y)\), \(S_{W - B}(X,Y)\), and \(S_{V - B}(X,Y)\) take values ranging from 0.00 to 1.00. The larger the value, the stronger the correlation between the image feature datasets. The results of the 4 correlations are sorted. The computed correlations of the detected image features are presented in Tables 3 and 4, respectively.

Table 3 indicates that none of the \({H_1} - {H_6}\) matrices consists entirely of zero vector elements, and the nonzero vector elements in the matrices are biased. This suggests that the correlation between the six image features \(W(X)\), \(W(Y)\), \(V(X)\), \(V(Y)\), \(B(X)\), and \(B(Y)\) is relatively strong. The \({H_1} - {H_6}\) mapping matrix is determined comprehensively, and the correlations are found among the 6 image features.
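The nonzero-element criterion for the \({H_1} - {H_6}\) matrices can be expressed as a simple count. The following sketch is illustrative only: the helper name `nonzero_fraction` and the toy matrix are hypothetical, and the fraction of nonzero elements is used here as a stand-in indicator rather than as the paper's correlation formula.

```python
def nonzero_fraction(matrix):
    """Fraction of elements in a 2-D list that are not zero."""
    total = sum(len(row) for row in matrix)
    if total == 0:
        raise ValueError("matrix must contain at least one element")
    nonzero = sum(1 for row in matrix for value in row if value != 0)
    return nonzero / total


# Number of elements in one mapping matrix for a 945 x 630 pixel image.
elements_per_matrix = 945 * 630

# Hypothetical 3 x 4 toy matrix, not data from Table 3.
toy_matrix = [
    [0.0, 0.4, 0.0, 0.9],
    [0.2, 0.0, 0.7, 0.1],
    [0.5, 0.3, 0.0, 0.8],
]
print(elements_per_matrix, nonzero_fraction(toy_matrix))
```

A matrix whose fraction is 0 carries no usable correlation under this criterion, while a fraction close to 1 corresponds to the strongly correlated case described for Table 3.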

Table 4 presents the correlation evaluations of the global features between the source images and the predicted target images, \(S_{W}(X,Y)\); the correlation evaluations between the image features and the feature space, \(S_{W - V}(X,Y)\); the correlation evaluations between the image features and the feature labels, \(S_{W - B}(X,Y)\); and the correlation evaluations between the feature space and the feature labels, \(S_{V - B}(X,Y)\). All results are greater than 0.90, and \(S_{W}(X,Y)\) is the highest at 0.96. The IFDL takes image feature extraction as the key point, and the 3 correlation parameters \(S_{W - V}(X,Y)\), \(S_{W - B}(X,Y)\), and \(S_{V - B}(X,Y)\) are defined based on \(S_{W}(X,Y)\). The connection between image features, feature space, and feature labels is very close, which effectively improves the correlations between the 3 image features.

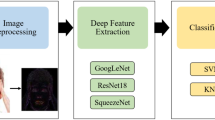

In the experiment on extracting image features with the IFDL, the image feature extraction methods used for comparison are the deep learning models DBN, DS-DSA, and LDiffCNN. The IFDL is an improved model constructed with Convolutional Neural Networks (CNN) as the basic framework. The experimental data of the image features extracted using the above 4 deep learning models are shown in Table 5 and Fig. 4.

Figure 4 The extracted image features using 4 deep learning models.

Table 5 shows the evaluation indexes for the edge features of the 2 image categories: Average Precision (AP) and F-measure. The AP combines the 2 quality evaluation indexes of the image edge features, Precision (P) and Recall (R), and is the integral of the PR curve. The F-measure balances the Precision and Recall scores of the image edge features. The F-measure ranges from 0 to 1, and the closer the value is to 1, the more precise the extracted image edge features are.
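A minimal sketch of the two indexes follows, assuming the balanced F-measure (equal weight on Precision and Recall) and a trapezoidal approximation of the area under the PR curve. The function names and the sample PR points are hypothetical and are not values from Table 5.

```python
def f_measure(precision, recall):
    """Balanced F-measure: harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


def average_precision(recalls, precisions):
    """Area under the PR curve by the trapezoidal rule."""
    points = sorted(zip(recalls, precisions))  # order by increasing recall
    area = 0.0
    for (r0, p0), (r1, p1) in zip(points, points[1:]):
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area


# Hypothetical PR curve samples, for illustration only.
recalls = [0.0, 0.5, 1.0]
precisions = [1.0, 1.0, 0.0]
print(average_precision(recalls, precisions), f_measure(0.9, 0.8))
```

Because the harmonic mean is dominated by the smaller of its two arguments, a high F-measure requires both Precision and Recall to be high, which is the balancing behaviour described above.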

Table 5 and Fig. 4 indicate that the AP and F-measure values computed using the IFDL are slightly better than those of the 3 deep learning models DBN, DS-DSA, and LDiffCNN. The extracted image feature maps have accurate edge information and contain less noise. In the edge feature extraction of the image categories, the evaluation metrics indicate that the operation of the IFDL is stable. The experimental data of the other 3 deep learning models show that these classical models also have strong advantages. The AP and F-measure values of the experimental results indicate that all 4 deep learning models have good gradient convergence. The IFDL employs CNN to construct the basic framework, which contains the processing mechanisms of the ROF noise control strategy, the normalization of the BN image feature space, and the label definition of the fused INPSVM image features. Constraints are imposed on the differences existing between image features, feature space, and feature labels. As a result, the edge features of the extracted images are more desirable, which provides a sound basis for the subsequent image recognition of heterogeneous transfer learning models.

Experiment 3

First, mapping experiments on the edge features from the source images to the target images are designed. Specifically, the experiments cover 2 cases, in which differences exist between the edge features and between the edge feature spaces of the source images and the target images, respectively. When the mapping from the source image to the predicted image is completed, the variances \(K(W)\), \(K(V)\), and \(K(B)\), which correspond to the image features, image feature spaces, and image feature labels of the 2 types of images, are obtained, and the common feature space of the mapping becomes maximum. Then, the impact of the key control parameters of the image feature consistency learning module designed in this paper on the accuracy of the transfer learning mapping is verified. The critical control parameters include \(K(W)\), \(K(V)\), and \(K(B)\); the separability parameter \(L\) for the source and predicted images; and the control parameter \(\lambda\) of the mapping matrix regularization and the loss function of the transfer learning model. Their impact is likewise measured by the accuracy of image edge feature classification. The experiments jointly run the source image and predicted target image feature modules and verify the correlations between the image edge features of the 2 modules. The experimental outcomes are shown in Table 6 and Fig. 5, respectively.

Table 6 presents the values of \(K(W)\), \(K(V)\), and \(K(B)\) after the source images are mapped to the edge features of the recognized target images. The accuracy of the image edge features, the image edge feature space, and the image edge feature labels is determined by the ratios of the edge features, the edge feature space, and the edge feature labels. Figure 5 gives the \(K(W)\), \(K(V)\), and \(K(B)\) values set in the range between 0.00 and 0.10. \(L\) is set in the range between 0% and 100%, and \(\lambda\) is set in the range between 0.00 and 0.10. The parameters are designated as the hyper-parameters for the image features of the consistent learning module. The classification accuracy of the image edge features of the testing module is computed by using different parameter values after the source images are mapped to the predicted edge features of the target images. Then, SVM is used to obtain the classification results of the image edge features.

Table 6 demonstrates that the variances of the image edge features, edge feature space, and edge feature labels between the source images and the predicted images are in the range between 0.01 and 0.03, and the image feature consistency of the learning module designed has a high accuracy to extract image edge features for heterogeneous transfer learning. The maximum common edge feature space can be obtained when the source image is mapped to the edge feature space of the predicted target image for transfer learning. The differences in edge features, edge feature space, and edge feature labels between the source images and the predicted target images are effectively constrained while ensuring that no loss occurs on the edge features.

Figure 5 demonstrates that when the variance of the image edge features, edge feature space, and edge feature labels between the source images and the predicted images is between 0.01 and 0.03, the accuracy of the extracted image edge features is higher and the results of the edge feature classification are more accurate, which is basically in line with the experimental data in Table 6. Theoretically, when the variances \(K(W)\), \(K(V)\), and \(K(B)\) are 0.00, the classification results of the image edge features should be the most accurate, but the detection results show the opposite. This illustrates that the transfer learning of the module is based on the actual input source image for training and does not completely depend on the hyperparameters of the module. The hyperparameter \(L\) presents normal separability in the 0–100% range when iterative transfer learning is trained, and the accuracy of image edge feature extraction increases sequentially. The mapping matrix regularization and the loss function control parameters in the transfer learning module share the \(\lambda\) hyperparameter. When \(\lambda\) takes a value of 0.01–0.03, the accuracy of image edge feature extraction is higher and the results of edge feature classification are more accurate.

Experiment 4

This experiment compares the 5 algorithms MTSL-DRDR [11], TATD [4], SRW [8], DTR [9], and HDARMST [12] with the proposed algorithm, IFDAFHTL. The evaluation metrics are Accuracy, Precision, Recall, Structural Similarity Index Measure (SSIM), and Peak Signal-to-Noise Ratio (PSNR).
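For orientation, the sketch below shows how Accuracy, Precision, Recall, and PSNR are commonly computed. It simplifies the setting to binary confusion counts and to 8-bit images with a peak value of 255; both are assumptions made for illustration, and the function names and sample values are hypothetical rather than taken from Table 7.

```python
import math


def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, and recall from binary confusion counts."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("at least one sample is required")
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def psnr_db(reference, estimate, peak=255.0):
    """PSNR in dB: 10 * log10(peak^2 / mean squared error)."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak * peak / mse)


# Hypothetical counts and pixel values, for illustration only.
print(classification_metrics(tp=8, fp=2, fn=2, tn=8))
print(psnr_db([100.0, 120.0, 140.0], [101.0, 119.0, 142.0]))
```

For a multi-class recognition task, Precision and Recall would typically be computed per class and then averaged; the binary form is used here only to keep the definitions compact.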

The MTSL-DRDR, TATD, SRW, DTR, and HDARMST construct network models and set the model hyperparameters according to the scheme proposed in Ref. The hyperparameters of the convolutional neural network involved in the IFDAFHTL are set as in the ResNet [25] model. The key hyperparameter \(\lambda\) is set to 0.01. The correlation coefficients between the edge features of the source images and the predicted images, the variances of the image edge features, edge feature space, and edge feature labels between the source image and the predicted image, the weight matrix for the transfer learning process, and the other parameters take their values according to the model design. The experimental statistics are shown in Table 7.

Table 7 shows that the IFDAFHTL has a slight advantage in image recognition compared with the available 5 transfer learning algorithms. The IFDL module in the IFDAFHTL increases the feature correlations between the source images and the predicted target images, and the DIFDC module effectively controls the feature differences between the source images and the predicted target images. Meanwhile, both IFDL and DIFDC perform image noise control well.

When the vector elements in all the correlation coefficient mapping matrices are not 0, the correlations between the image feature data are above 0.93. The IFDL has good convergence, with a minimum average accuracy of 92% for the image features it extracts and a minimum F-measure of 0.93. When the smoothness control factor of the DIFDC module is 0.60, the SNR value is above 36 dB, which shows that the Gaussian kernel function is effective for image noise control. When the variance of the detected image features is not more than 0.04, the minimum accuracy of the extracted image features is 90%. When the variance of the image features is less than 0.04 and the control parameter of the mapping matrix regularization and the loss function is in the range of 0.01–0.03, the accuracy of the extracted image edge features is the highest. The accuracy of the extracted image edge features is also the highest when the separability of the image feature data is 100%. When both the IFDL and DIFDC denoising strategies are applied, the IFDAFHTL has a mean SNR of 48.33 dB and minimum values of 93% for Image Recognition Accuracy, 91% for Precision, 86% for Recall, 0.87 for SSIM, and 41.97 for PSNR.

Conclusion

Source images and predicted target images differ in image features. When heterogeneous transfer learning is applied some difficulties and further issues appear. For example, noise in image recognition appears and is required to be reduced. The Image Feature Data Learning and the Definition of Image Feature Data Consistency modules adopt the normalization layer of a neural network to extract 3 types of features, namely, global features, feature space, and feature labels. A noise reduction method, Rudin-Osher-Fatemi, is implemented. Thus, the Image Feature Data Association Fusion Heterogeneous Transfer Learning Model is proposed.

Also, a correlation coefficient is computed for image feature vectors, and effective correlation mapping matrices are constructed through multi-dimensional vectorized correlation. Then, the feature vectors and correlation coefficients are aggregated using the Batch Normalization Layer to assess correlations between image features. Furthermore, to check the variance between the features of the source images and the target images to be minimum and the common space of the transfer mapping features to be maximum, the Definition of Image Feature Data Consistency deals with controlling parameter separability by designing the constraint matrix with minimum variance score for the source images and the target images. Finally, the regularization of the transfer mapping matrix is carried out to create the loss function to consistently train the image features to construct the heterogeneous transfer learning module. When the transfer learning weight matrix is attained, the consistent constraint strategy of the image features is introduced to update image features in real time. Besides, the Gaussian kernel function is employed to control the generated noise in transfer learning.

A heterogeneous transfer learning model with 2 modules is proposed. The modules perform feature learning of the source images and predicted target images and provide image features to the transfer learning module, and the model contains the ROF noise control strategy. The model is compared with 5 algorithms available in the literature on four benchmark datasets. The proposed method, IFDAFHTL, excels over the available algorithms regarding several metrics. The 4 groups of vectors, namely, local features, global features and feature space, global features and feature labels, and feature space and feature labels, are explicitly defined for the source images and the predicted target images. The accuracy of the image feature datasets is improved by calculating the correlations between the 4 groups of vectors. Then, correlation coefficients and rankings are utilized to improve the outcomes.

More specifically, the research results contain very comprehensive feature parameters when the correlations of image feature datasets are included. However, some research results consider the establishment of a correlation between image feature labels. The factors leading to the differences between the source image and target image feature datasets are fully considered, and the transfer learning training using the source image or predicted target image feature datasets is improved.

The results indicate that the SNR is greater than 35 dB and the edges in the image feature map are clearer and contain less noise in the Image Feature Data Learning module with the Rudin-Osher-Fatemi denoising strategy.

Future work plans to apply the IFDAFHTL not only in image recognition but also in computer image vision, computer network routing control, automation control, and other areas of artificial intelligence control. The IFDL can also be applied to the correlation between cross-domain feature datasets for the fusion of images, text, voice letters, and other kinds of datasets.

Data availability

Data is provided within the manuscript or supplementary information files.

References

Oscar, D. & Khoshgoftaar, T. M. A survey on heterogeneous transfer Learning[J]. J. Big Data. 4, 29–48 (2017).

Zhao, P. et al. A Cross-Media heterogeneous transfer learning for preventing Over-Adaption[J]. Appl. Soft Comput. 85, 819–837 (2019).

Lang, H. T. et al. Multisource heterogeneous transfer learning via feature augmentation for ship classification in SAR Imagery[J]. IEEE Trans. Geosci. Remote Sens. 60, 8814–8833 (2022).

Pierluigi, Z. R. et al. Learning good features to transfer across tasks and Domains[J]. IEEE Trans. Pattern Anal. Mach. Intell. 45, 9981–9995 (2023).

Wang, P. P. et al. Log GT: Cross-system log anomaly detection via heterogeneous graph feature and transfer learning[J]. Expert Syst. Appl. 251, 569–587 (2024).

Zhou, J. T. Y., Pan, S. J. L. & Tsang, L. W. A deep learning framework for hybrid heterogeneous transfer learning[J]. Artif. Intell. 275, 310–328 (2019).

Jia, J. et al. Transferable heterogeneous feature subspace learning for JPEG mismatched Steganalysis[J]. Pattern Recogn. 100, 105–123 (2020).

Xiao, Q., Zhang, Y. & Yang, Q. Selective random walk for transfer learning in heterogeneous label Spaces[J]. IEEE Trans. Pattern Anal. Mach. Intell. 46, 4476–4488 (2024).

Liu, Z. H. et al. Discriminative transfer regression for Low-Rank and sparse subspace Learning[J]. Eng. Appl. Artif. Intell. 133, 8445–8463 (2024).

Lou, Q. D. et al. Rules-Based heterogeneous feature transfer learning using fuzzy Inference[J]. IEEE Trans. Fuzzy Syst. 32, 306–321 (2022).

Liu, Z. H. et al. Manifold transfer subspace learning based on double relaxed discriminative Regression[J]. Artif. Intell. Rev. 56, 959–981 (2023).

Liu, Y. F., Du, B., Chen, Y. Y. & Zhang, L. F. Robust multiple subspaces transfer for heterogeneous domain Adaptation[J]. Pattern Recogn. 152, 473–491 (2024).

Diwakar, M., Singh, P. & Shankar, A. Multi-Modal medical image fusion framework using Co-Occurrence filter and local extrema in NSST Domain[J]. Biomed. Signal Process. Control. 68, 2788–2806 (2021).

Jie, Y. H. et al. TSJNet: A Multi-modality Target and Semantic Awareness Joint-driven Image Fusion Network[C]. IEEE Conference on Computer Vision and Pattern Recognition, 2024:1–10. (2024).

Diwakar, M. et al. Directive clustering contrast-based multi-modality medical image fusion for smart healthcare system[J]. Netw. Model. Anal. Health Inf. Bioinf. 11, 3647–3663 (2022).

Jiang, X. W. et al. iFuzzyTL: interpretable fuzzy transfer learning for SSVEP BCI System[J]. Hum Comput Interact. 10, 2267–2285 (2024).

Xu, J. J. & Osher, S. Iterative regularization and nonlinear inverse scale space applied to Wavelet-Based Denoising[J]. IEEE Trans. Image Process. 16, 534–544 (2007).

Liu, L. M., Chu, M. X., Gong, R. F. & Zhang, L. An improved nonparallel support vector Machine[J]. IEEE Trans. Neural Networks Learn. Syst. 32, 5129–5143 (2022).

Rusakov, D. A. A misadventure of the correlation Coefficient[J]. Trends Neurosci. 46, 94–96 (2023).

Greenacre, M. et al. Principal component Analysis[J]. Nat. Reviews Methods Primers. 100, 273–291 (2022).

Alvarez, S. A. Gaussian RBF centered kernel alignment (CKA) in the Large-Bandwidth Limit[J]. IEEE Trans. Pattern Anal. Mach. Intell. 45, 6587–6593 (2023).

Yu, J. B. & Liu, G. L. Knowledge Transfer-Based sparse deep belief Network[J]. IEEE Trans. Cybernetics. 53, 7572–7583 (2023).

Dinesh, P. S. & Manikandan, M. Fully convolutional deep stacked denoising sparse auto encoder network for partial face Reconstruction[J]. Pattern Recogn. 130, 8783–8801 (2022).

Yasin, M., Sarıgül, M. & Avci, M. Logarithmic learning differential convolutional neural Network[J]. Neural Netw. 172, 6114–6132 (2024).

Zhang, H. S. et al. Stabilize deep ResNet with a Sharp scaling factor [J]. Mach. Learn. 111, 3359–3392 (2022).

Diwakar, M., Singh, P. & Shankar, A. Multi-modal medical image fusion framework using co-occurrence filter and local extrema in NSST domain. Biomed. Signal Process. Control. 68, 102788. https://doi.org/10.1016/j.bspc.2021.102788 (2021).

Das, M. & Gupta, D. B. AshwiniAn end-to-end content-aware generative adversarial network-based method for multimodal medical image fusion, Data Analytics for Intelligent Systems, IOP Publishing,2024, pp 7–10 https://doi.org/10.1088/978-0-7503-5417-2ch7

Jie, Y., Xu, Y., Li, X. & Tan, H. TSJNet: A Multi-modality Target and Semantic Awareness Joint-driven Image Fusion Network, e-print 2402.01212, arXiv, (2024). https://arxiv.org/abs/2402.01212

Rashmi Dhaundiyal et al. J. Phys. : Conf. Ser. 1478 012024, doi:https://doi.org/10.1088/1742-6596/1478/1/012024. (2020).

Diwakar, M. et al. Directive clustering contrast-based multi-modality medical image fusion for the smart healthcare system. Netw. Model. Anal. Health Inf. Bioinforma. 11, 15. https://doi.org/10.1007/s13721-021-00342-2 (2022).

Acknowledgements

We thank all the researchers who provided references for this study. This study was funded by the Key Project of Jingmen Science and Technology Plan (No. 2023YFZD056), the Research Center of Functional Printing and Packaging Materials and Technology, Jingchu University of Technology, the Intelligent Sensing and Perception Research Team, Jingchu University of Technology (No. KY20241101), and the Outstanding Young and Middle-Aged Scientific and Technological Innovation Team of Colleges and Universities in Hubei Province (grant number T2022038).

Author information

Contributions

Wen-Fei Tian, experimental testing and translation of papers. Ming Chen, theoretical research and experimental testing. Zhong Shu, theoretical research and experimental testing. Xue-Jun Tian, theoretical research guidance.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tian, WF., Chen, M., Shu, Z. et al. A construction of heterogeneous transfer learning model based on associative fusion of image feature data. Sci Rep 15, 14880 (2025). https://doi.org/10.1038/s41598-025-99163-w

DOI: https://doi.org/10.1038/s41598-025-99163-w