Abstract

In contemporary society, career readiness is of great importance to individuals, directly influencing initial employment, job satisfaction, and the sense of career identity. Framed within multidimensional item response theory and text mining, this study explores assessment methods for high school students’ career readiness by revising the “Career Readiness Questionnaire – Adolescent Version” and applying text mining techniques. Study 1 collected 1261 valid responses through cluster sampling. Using Bayesian multivariate item response theory parameter estimation procedures and the R language, the career readiness measurement tool was revised, yielding a concise scale that meets psychometric requirements. The findings indicated that the construct of “career readiness” is better suited to the multidimensional graded response model than to the bifactor model. The discrimination parameters fell within the range [1.59, 3.84], the difficulty parameters within [−2.91, 2.24], and the item information function peaks within [0.24, 2.35]. After the six items with the lowest peaks were removed (Items 4, 5, 6, 31, 32, and 33), the remaining 30 items composed the concise Chinese version of the “Career Readiness Questionnaire – Adolescent Version,” with discrimination parameters within [1.45, 3.38], difficulty parameters within [−3.31, 1.76], and item information function peaks within [0.50, 2.64]. Building on the valid participants from Study 1, Study 2 matched questionnaire data with essay texts, resulting in 1012 valid participants. Using text mining, machine learning models were constructed to predict high school students’ career readiness from essay texts. The results of Study 2 showed that the revised lexicon was more accurate in feature extraction. Building upon this, the machine learning models based on essay text performed well in predicting career readiness, with random forest outperforming the other algorithms. This study provides a novel approach for schools and parents to understand the state of career readiness among high school students, offering a convenient and effective tool for educational activities related to students’ career development.

Introduction

In a competitive contemporary society, parents aspire for their children to attain a leading position in both academics and careers. Career success is crucial for individuals and is closely related to various facets of life (Gao and Ding, 2022; Zhan, 2020).

Brown and Lent (2012) characterized career readiness as the diverse attitudes, behaviours, and abilities required to master occupational tasks, transitions, and challenges. Patton and Skorikov (2007) conceptualized career readiness as a component of the career identity commitment process, emphasizing its critical role in the late adolescence of youth and transition into adult careers. Career readiness is correlated with various factors influencing professional success (Salomon et al. 2024). For instance, Lau et al. (2020) discovered a direct correlation between a positive self-concept and job preparation skills. A longitudinal study conducted by Liu (2016) revealed that the dimensions of career readiness, including readiness in abilities, mindset, and behaviour, significantly predict the initial employment quality of vocational school graduates. Furthermore, Gysbers (2013) asserted that students who are well prepared for their careers show more active engagement, greater psychological resilience, and a firm commitment to a self-defined professional future, thereby adding meaning and purpose to their lives. Most previous research on career readiness has focused on college students or other groups of students about to enter the workforce, such as nursing students (He et al. 2021), college athletes (August, 2020), students in specialized art schools (Li and Qin, 2021), and special students (Binghashayan et al. 2022; Lombardi et al. 2023). However, high school students have been largely excluded from the scope of career readiness studies. High school students are at the turning point of entering adult society and need to accumulate and prepare adequately to ensure future career success (Super, 1980; Castellano et al. 2017; Kenny et al. 2023). Consequently, the level of career readiness among high school students is considered a pivotal factor in determining their ability to smoothly adapt to adult society (Crespo et al. 2013; Hirschi et al. 2009).

Item response theory (IRT) is a modern measurement theory that emerged after classical test theory (CTT) and has widespread applications in psychological and educational measurement. Compared with CTT, IRT has distinct advantages in handling data and in item-level analysis (Embretson, 1996; Hirsch et al. 2023). The core assumption of IRT, which distinguishes it from CTT, is that an individual’s performance on a specific item is determined by his or her level on a latent ability. IRT uses item characteristic curves (ICCs) to depict the probability of a given response to an item across levels of ability. However, traditional IRT models are unidimensional, assuming that an individual’s performance is influenced by only one latent ability. In practice, an individual’s performance is often affected by multiple latent abilities (Reckase, 2006; Kang and Xin, 2010; Alam et al. 2023). Hence, multidimensional item response theory (MIRT) emerged. MIRT integrates factor analysis and unidimensional IRT, describing the relationships between item parameters, the multidimensional latent traits of respondents, and response patterns to items through nonlinear functions. Compared with unidimensional IRT, MIRT can better handle multidimensional data and enhance measurement accuracy and precision by considering the correlations between dimensions. This advantage is particularly pronounced when the total number of items is limited and each dimension has only a few items (Wang et al. 2006; Quansah et al. 2024).

In the development and revision of measurement scales, the successful application and advancement of MIRT have effectively addressed the limitations of unidimensional models on multidimensional data, providing a powerful tool for more efficient measurement (Jiménez et al. 2023; Sepehrinia et al. 2024). Dodeen and Al-Darmaki (2016) revised the UAE National Marital Satisfaction Scale, and the results of the shortened revised scale were very close to those of the original scale. Zang et al. (2012) utilized IRT to revise the Parent–Youth Companion Attachment Scale, resulting in a new scale with significantly increased test information peak functions and greater reliability and effectively examining the attachment status of Miao ethnic junior high school students in China. Ma et al. (2023) revised the Multidimensional-Multi-Attribution Causality Scale based on MIRT, and the revised scale exhibited improved psychometric properties. Sarah et al. (2018) demonstrated that the results of the multidimensional graded response model supported the scoring process of the two subscales of the Cushing QoL questionnaire, contributing to the enhancement of scale quality in the field of health sciences. Sukhawaha et al. (2016) used MIRT and exploratory factor analysis to develop a scale with 35 items and 4 factors (stressors, pessimism, suicide, and depression) and validated it through confirmatory MIRT analysis of 450 adolescents, which effectively distinguished individuals with suicide attempts from those without suicidal tendencies. These previous studies affirm the effectiveness of IRT-based methods in the development of psychological scales.

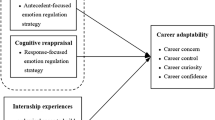

In the era of big data, the online space has accumulated rich individual behaviour and expression data, providing developmental opportunities for psychological science (Idris and Ken, 2018; Yue et al. 2013; Raghavendra et al. 2024). With the prevalence of social media and online learning platforms, researchers can collect more open and real text data to delve into individual behaviours and psychological traits (Feldman and Sanger, 2008; Morrow, 2024; Macanovic and Przepiorka, 2024). By utilizing dictionaries such as the Linguistic Inquiry and Word Count (LIWC), researchers can extract lexical features from text posted by Facebook users to predict their Big Five personality traits (Markovikj et al. 2013; Tay et al. 2020). In China, researchers have noted the extensive user populations on online social media platforms such as Weibo and Zhihu. They used the Chinese version of the LIWC to extract lexical features to analyse user-generated content and delve into users’ psychological states (Wang et al. 2016). They explored the thematic characteristics of public mental health topics on online platforms and analysed the anxiety and depression of the public (Cheng et al. 2017; Mi et al. 2021). Additionally, researchers have employed text, such as essays, to predict personality traits. Hirsh and Peterson (2009) studied the relationship between word usage and the Big Five personality traits through participants’ self-narrative materials. Zhang et al. (2017) utilized the life design paradigm and designed essay test materials named “My Career Story” to predict career adaptability in college students. Luo et al. (2021) researched the shy traits and shy language style models of primary school students through their essays, diaries, and comments. Overall, the integration of text mining and psychological research is deepening, and the scope of research is continually expanding.

Previous research has indicated that the development or revision of career readiness scales predominantly relies on CTT. For instance, Lei et al. (2000) investigated the psychological state of career readiness and its influencing factors among university students in Chongqing. Bai (2021) analysed the current status of career readiness among maritime graduates. Vanessa et al. (2022) aimed to enhance students’ career readiness through career guidance, while Tian (2022) conducted a study on the career attitudes of new students in rural high schools in Hangzhou. The use of IRT to develop or refine career readiness scales is still relatively unexplored. Therefore, this study aimed to thoroughly investigate the assessment tools for high school students’ career readiness using MIRT and text mining. By enhancing the quality of measurement tools through the utilization of MIRT, coupled with the integration of text mining, this study aims to explore the optimal machine learning model for achieving automated predictions of career readiness. The ultimate goal is to enhance the convenience and effectiveness of career readiness measurements.

Study 1 Revising the ‘Career Readiness Questionnaire - Adolescent Version’ using multidimensional item response theory

Method

Participants

Using randomized cluster sampling, we selected second-year high school students from a province, involving 37 classes and a total of 1585 individuals. Following the school’s approval and informed consent from the students and their guardians, we conducted online group assessments on a class-by-class basis. A total of 324 participants were excluded due to invalid responses (questionnaires in which 99% of answers selected the same option), leaving 1261 valid participants (mean age = 16.23 years, SD = 0.69). Among them, 607 were male (48.1%) and 654 were female (51.9%).

Instruments

Career Readiness Scale-Adolescent Version (CRS-A)

Marciniak et al. (2020) developed the CRS-A, which comprises 36 items organized into 12 dimensions: occupational expertise (OE), labour market knowledge (LMK), soft skills (SS), career involvement (CI), career confidence (CCo), career clarity (CCl), social support—school (SS-S), social support—family (SS-Fa), social support—friends (SS-Fr), networking (Nw), career exploration (CE), and self-exploration (SE). Each dimension consists of 3 items, and there is no reverse scoring. The items were measured on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Occupational Identity Scale (OIS)

The OIS was developed by Mauer and Gysbers (1990) and consists of 18 items with no reverse scoring. It employs a 5-point scoring system (1 = strongly disagree, 5 = strongly agree). An example item is as follows: “Deciding on a career is a long-term and challenging issue for me.” In this assessment, the Cronbach’s α coefficient for the OIS was 0.92.

Career Decision Self-Efficacy Scale (CDSES)

The subscale within the CDSES, developed by Lent (2013) to measure career decision self-efficacy, comprises 8 items. There is no reverse scoring, and it utilizes a 10-point scoring system (1 = not confident at all, 10 = extremely confident). An example item is as follows: “How confident are you in your ability to find a career that suits your personality?” In this assessment, the Cronbach’s α coefficient for this subscale was 0.96.

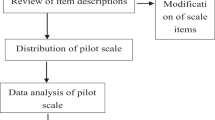

Research process

Initially, two bilingual translators translated the original scale into Chinese and performed multiple back-translations. Four psychology professors and psychology master’s students were then invited to evaluate and modify the content. Subsequently, an equivalent measurement tool with the same number of items and scoring methods as the original scale was developed. The initial scale was then administered, and the data were randomly divided into two halves. One half (Dataset A) was used for MIRT analysis, in which a multidimensional graded response model and a bifactor model were constructed and their results compared. In this process, items with inappropriate discrimination, difficulty, or item information functions (IIFs) were deleted. The other half (Dataset B) was used for validation analysis, in which the model was re-evaluated based on item discrimination, difficulty, and IIFs. This led to the formation of the formal scale. Finally, criterion-related validity analysis was employed to further examine the validity of the revised scale (Yao, 2003).
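The random division into Dataset A and Dataset B can be sketched as follows. This is a minimal illustration only: the function name `split_half`, the fixed seed, and the use of Python are assumptions, because the source does not report how the division was implemented or the exact size of each half.

```python
import random


def split_half(participant_ids, seed=2024):
    """Shuffle the IDs reproducibly and cut the list into two halves.

    `seed` is an arbitrary illustrative value, not one reported in the study.
    """
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # local RNG, global state is untouched
    half = len(ids) // 2
    return ids[:half], ids[half:]


# 1261 valid participants, identified here by hypothetical integer IDs
dataset_a, dataset_b = split_half(range(1261))
```

With an odd sample size the two halves differ by one participant; which half receives the extra case is a design choice of this sketch.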

Analytical approach

Data management and analysis were conducted with SPSS 26.0. The Bayesian multivariate item response theory (BMIRT) developed by Yao (2003) was employed for MIRT analysis. Additionally, the “mirt” package in the R programming language was utilized for estimating the ICCs and IIFs (Chalmers, 2012).

Results

Parameter Estimation Results Based on Dataset A

Comparison between the Multidimensional Graded Response Model (MGRM) and bifactor model

Considering the dimensionality of the original scale, this study compared two candidate models using fit statistics based on information criteria. We established the MGRM and the bifactor model and compared them using the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Generally, the model with the minimum AIC is selected as optimal (Cheng et al. 2015). The BIC imposes a stronger penalty on model complexity than does the AIC. The results showed that the AIC of the MGRM was 43658.03 and the BIC was 79428.02, while the AIC of the bifactor model was 45303.01 and the BIC was 84030.02. Both the AIC and the BIC were lower for the MGRM than for the bifactor model, indicating better fit. Therefore, the MGRM was selected for further in-depth analysis.
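For reference, the two criteria follow the standard definitions AIC = 2k − 2 ln L and BIC = k ln(n) − 2 ln L, where k is the number of estimated parameters, n is the sample size, and L is the maximized likelihood. The sketch below implements these definitions and selects the model with the smaller value from the figures reported above. The helper names are illustrative and are not part of BMIRT; the log-likelihoods and parameter counts of the fitted models are not reported in the source, so only the published AIC and BIC values are compared.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln L."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k ln(n) - 2 ln L."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


# AIC and BIC values as reported in the text for Dataset A
reported = {
    "MGRM": {"AIC": 43658.03, "BIC": 79428.02},
    "bifactor": {"AIC": 45303.01, "BIC": 84030.02},
}

# Smaller is better for both criteria
best_by_aic = min(reported, key=lambda name: reported[name]["AIC"])
best_by_bic = min(reported, key=lambda name: reported[name]["BIC"])
```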

MGRM parameter estimation results for Dataset A

(1) Item Difficulty and Item Discrimination

The model comparison indicated that the MGRM outperforms the bifactor model. The MGRM assumes that each item’s response is influenced by only one ability, so each item has a single discrimination parameter on its corresponding dimension. In BMIRT, the Q-matrix defines which dimension each item measures, facilitating the estimation of model parameters (Tu et al. 2011).

According to IRT, excessively low item discrimination suggests limited differentiation among participants with different abilities, while overly high discrimination can adversely affect the overall test results. Therefore, following the recommendations of Yang et al. (2008), a criterion for discrimination (a) was adopted, in which items with a ≤ 0.3 or a ≥ 4 were removed. For difficulty (b), guidance from Zan et al. (2008) was considered, leading to the removal of items with b ≤ −4 or b ≥ 4. Table 1 presents the parameter estimation results for all items. Notably, all the items’ discrimination parameter estimates fell within the range of [1.59, 3.84], and the difficulty parameter estimates were within the range of [−2.91, 2.24]. This indicates that the distribution ranges for both the discrimination and difficulty parameters are within acceptable limits. Therefore, no items were removed at this stage.
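The screening rule above can be expressed as a simple filter. The sketch below is illustrative: the function name, the tuple layout, and the three items with their parameter values are hypothetical, and it assumes that the difficulty criterion is applied to every threshold of a graded item.

```python
def flag_items(items, a_bounds=(0.3, 4.0), b_bounds=(-4.0, 4.0)):
    """Return the IDs of items that meet a removal criterion.

    An item is flagged when a <= 0.3 or a >= 4, or when any of its
    thresholds satisfies b <= -4 or b >= 4.
    """
    a_low, a_high = a_bounds
    b_low, b_high = b_bounds
    flagged = []
    for item_id, a, thresholds in items:
        bad_a = a <= a_low or a >= a_high
        bad_b = any(b <= b_low or b >= b_high for b in thresholds)
        if bad_a or bad_b:
            flagged.append(item_id)
    return flagged


# Hypothetical estimates: (item ID, discrimination, four thresholds of a 5-point item)
example_items = [
    ("item_A", 1.80, [-2.00, -1.00, 0.20, 1.90]),  # within both ranges
    ("item_B", 0.25, [-1.00, 0.00, 1.00, 2.00]),   # discrimination too low
    ("item_C", 2.10, [-4.50, -1.00, 0.50, 1.50]),  # one threshold too extreme
]
flagged = flag_items(example_items)
```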

Table 1 MGRM Parameter Estimation Results for Dataset A.

(2) Item Information Functions

The IIF is employed to illustrate the degree of discrimination that a test or scale provides to participants within a specific θ range. Higher information values indicate greater precision and reliability (Dodeen and Al-Darmaki, 2016). As depicted in Fig. 1 and Table 2, the distribution of the maximum information function peaks for the 36 items fell within the range of [0.24, 2.35]. For instance, Items 13, 15, and 14 exhibited higher maximum information function peaks than did the other items (Items 6, 5, and 33), indicating their superior performance in providing more informative measurements. Considering a balance between item information functions, item content, and the original dimensional structure of the scale, six items with the lowest maximum information function peaks were removed (Items 4, 5, 6, 31, 32, and 33). The remaining 30 items formed the Chinese abridged version of the “Career Readiness Questionnaire-Adolescent Version”.
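To make the notion of an information peak concrete, the following sketch computes the item information function of a single graded item and locates its maximum on a grid of θ values. It assumes the logistic form of Samejima’s graded response model without the 1.7 scaling constant, and it treats each item as loading on one dimension, so that the unidimensional formula applies within that dimension. The function names, the grid, and the parameter values are hypothetical; the study itself estimated these curves with BMIRT and the “mirt” package.

```python
import math


def grm_item_information(theta, a, thresholds):
    """Item information of a graded response item at ability `theta`.

    `thresholds` must be in increasing order. The boundary curves are
    P*_k = 1 / (1 + exp(-a (theta - b_k))), padded with 1 and 0, and the
    information is the sum over categories of (dP_k/dtheta)^2 / P_k.
    """
    cum = [1.0] + [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds] + [0.0]
    info = 0.0
    for k in range(len(cum) - 1):
        p = cum[k] - cum[k + 1]  # probability of category k
        dp = a * (cum[k] * (1 - cum[k]) - cum[k + 1] * (1 - cum[k + 1]))
        if p > 0:
            info += dp * dp / p
    return info


def peak_information(a, thresholds, lo=-4.0, hi=4.0, step=0.01):
    """Return (theta, information) at the maximum over an evenly spaced grid."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n_steps + 1)]
    best_theta = max(grid, key=lambda t: grm_item_information(t, a, thresholds))
    return best_theta, grm_item_information(best_theta, a, thresholds)


# Hypothetical 5-category item
theta_at_peak, peak = peak_information(a=2.0, thresholds=[-1.5, -0.5, 0.5, 1.5])
```

For a two-category item the formula reduces to a²P(1 − P), which peaks at a²/4 when θ equals the threshold; this gives a quick sanity check on the implementation.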

Validation analysis of the parameter estimation results for Dataset A based on Dataset B

MGRM parameter estimation results for Dataset B

(1) Item Difficulty and Item Discrimination

To validate the reliability of the results obtained from constructing the MGRM based on Dataset A, we further conducted parameter estimation analysis on Dataset B. Based on the parameter estimation results and the IIF analysis on Dataset A, a total of 6 items were removed, involving 2 dimensions. Thus, in the subsequent confirmatory parameter estimation, 30 items were retained, encompassing 10 dimensions. Table 3 displays the results, showing that the discrimination parameter estimates for all items in the abbreviated scale fell within [1.45, 3.38], and the difficulty parameter estimates fell within [−3.31, 1.76]. In Dataset B, both the discrimination and difficulty parameter distributions fell within the acceptable ranges (Yang et al. 2008; Zan et al. 2008). Therefore, it can be inferred that the results based on Dataset A are reliable.

Table 3 MGRM Parameter Estimation Results for Dataset B.

(2) Item Information Functions

The results of the IIF based on Dataset B are presented in Table 4 and Fig. 2. The peak values of the maximum information functions for the 30 items in the abbreviated scale ranged from [0.50, 2.64], which is higher than the range of the 36 items in the original scale. Notably, in the analysis conducted on Dataset A, original Items 13, 15, and 14 ranked in the top three in terms of maximum information. In the analysis of the abbreviated scale, due to the removal of some items, the original Items 13, 14, and 15, now renumbered as 10, 11, and 12, respectively, continued to rank among the top three in providing the most information. The maximum amount of information provided by the retained items remained relatively stable. For instance, the maximum information of original Item 10, now renumbered 7, increased from 0.64 to 0.65, and the maximum information of Item 25, now renumbered 22, decreased from 1.44 to 1.26. It is worth noting that all items provided maximum information greater than or equal to 0.5, and there were no items that significantly fell below the others (Items 5 and 6 in the original scale had noticeably lower maximum information than other items). Based on these comparisons, this study considers the performance of the 30 items in the abbreviated scale to be excellent and suitable for subsequent research.

Results of the ability parameter estimation of the participants

Using the MGRM on Dataset B, the ability parameters of the participants were estimated. As indicated in Table 5, the average ability scores of all the participants across the 10 dimensions ranged from 0.14 to 0.27. Specifically, among the 10 career readiness dimensions, the participants exhibited the highest abilities in the CCl, SS-S, and OE dimensions (0.27, 0.25, and 0.25, respectively) and the lowest abilities in the SS-Fr, Nw, and SS-Fa dimensions (0.14, 0.15, and 0.18, respectively). Additionally, one of the advantages of MIRT is the ability to estimate participants’ abilities across multiple dimensions. This enables researchers to conduct in-depth analyses of each participant’s abilities in various test dimensions, thereby achieving the function of cognitive diagnosis. Table 5 reports the ability scores of some participants in Dataset B across the 10 dimensions. Participant “7023747” performed well in all dimensions, while participants “7023742” and “7023718” performed poorly in all dimensions. Participant “7023733” performed poorly in five dimensions (OE, SS, CCl, SS-Fr, and Nw) but performed well in the other five (CI, CCo, SS-S, SS-Fa, and SE).

Criterion-related validity analysis

Career readiness, occupational identity, and career decision self-efficacy exhibit distinct characteristics to a certain extent. However, previous research indicates a certain degree of correlation between occupational identity, career decision self-efficacy, and career readiness (Nam et al. 2008; Praskova et al. 2015). Therefore, we used the OIS and CDSES as criterion measures to comprehensively validate the performance of the revised scale.

The results of the criterion-related validity analysis are presented in Table 6. The domain score of the revised career readiness scale exhibited a significant correlation with the total scores of the occupational identity scale and the career decision self-efficacy scale (r1 = 0.305, r2 = 0.682). The correlations between the domain score of the revised career readiness scale and various dimensions of the occupational identity scale ranged from 0.203 to 0.346, indicating strong validity. Moreover, the internal correlations among the dimensions of the revised career readiness scale ranged from 0.283 to 0.702, with an average of 0.459. The correlations between the dimensions of the revised career readiness scale and dimensions of the occupational identity scale ranged from 0.016 to 0.422, with an average of 0.190. Last, the correlations between dimensions of the revised career readiness scale and the total score of the career decision self-efficacy scale ranged from 0.215 to 0.682, with an average of 0.442. These findings suggested that while career readiness has connections with occupational identity and career decision self-efficacy, it also demonstrates distinctiveness.

Study 2 Predicting high school students’ career readiness based on text mining

Method

Participants

The participants were drawn from the same source as in Study 1, but data collection occurred at different times. First, after obtaining authorization from the school and informed consent from the students and their guardians, we systematically collected and organized essay texts written by participants from December 2021 to March 2022. These essays covered various themes, including appreciation, comparison, and the Paralympic spirit. Subsequently, based on the pool of valid participants from Study 1 (1261 individuals), we matched the questionnaire data with the essay texts. Only participants with complete sets of questionnaire and essay data were included in the subsequent analyses. Ultimately, we obtained data from 1012 valid participants (mean age = 16.21 years, SD = 0.71), comprising 455 males (45.0%) and 557 females (55.0%).

The results of the chi-square test indicated a statistically significant association between sex and whether a participant’s questionnaire data could be matched with essay data (χ² = 50.49, df = 1, p < 0.001); that is, matching was not independent of sex. This distribution may reflect differences between male and female high school students in cooperation and completion attitudes towards tasks outside of academic work.

Procedures and processes

Sample grouping

Based on the revised “Career Readiness Questionnaire-Adolescent Version,” we initially calculated participants’ domain scores. Using the Z score for grouping is a common method; for instance, Luo et al. (2021) employed a Z score of 1 as the cut-off to categorize elementary school students into “shy” and “ordinary” groups. Considering existing research and the issues addressed in this study, we chose a Z score of −1 as the grouping boundary. Individuals with Z scores less than or equal to −1 were classified into the “low career readiness group” and labelled 0, indicating a need for improvement in their career readiness. Individuals with Z scores greater than −1 were categorized into the “high career readiness group” and labelled as 1, signifying relatively high career readiness (Dai, 2022, p.92).
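The grouping rule can be sketched as follows. The function name and the example scores are hypothetical, and the use of the sample standard deviation is an assumption, because the source does not state which estimator was used to standardize the domain scores.

```python
from statistics import mean, stdev


def label_by_z(scores, cutoff=-1.0):
    """Label each score 0 (low readiness) if z <= cutoff, otherwise 1 (high readiness)."""
    m = mean(scores)
    s = stdev(scores)  # sample SD; an assumption of this sketch
    return [0 if (x - m) / s <= cutoff else 1 for x in scores]


# Hypothetical domain scores for five participants
labels = label_by_z([10, 20, 20, 20, 30])
```

Because the cut-off sits one standard deviation below the mean, the low group is expected to be much smaller than the high group, which is worth keeping in mind when reading accuracy figures for the classifiers.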

Career readiness dictionary selection and revision

In text analysis, a dictionary typically refers to a dataset containing a series of words along with their related information, such as part of speech and emotional polarity. These dictionaries encompass categories to which all words belong, accompanied by a comprehensive list of words (Zhang, 2015). The “TextMind” Dictionary is the core lexicon of the “TextMind” Chinese psychological analysis system, integrating the commonalities and specificities of the LIWC2007 and the Chinese CLIWC lexicon (Lin, 2021). This dictionary is specifically designed for text analysis of simplified Chinese texts in mainland China, comprising 102 vocabulary categories with a total of 12,175 words. The dictionary focuses on simplified Chinese text analysis with high inclusiveness and ecological validity. Given the need of this study, we chose to utilize the “TextMind” Dictionary for the subsequent analysis.

Additionally, to ensure the effective extraction of features from high school students’ texts, we formatted and organized the collected textual data. Using a self-developed word frequency counting program, we calculated the frequency of all vocabulary words and arranged them in descending order. Words that appeared frequently in the essay texts but were not in the original “TextMind” Dictionary were selected and subsequently included. This process encompassed the expansion of vocabulary across 102 word classes in the original dictionary, culminating in a comprehensive revision of the entire lexicon.

Feature extraction

In this study, we collected data from 1012 valid participants; the essay texts had an average word count of 735 (SD = 73.20). We used the “jieba” library in Python to segment all participants’ textual data. The revised dictionary was then used to count, for each participant’s text, the frequency of words in each word category. This process yielded 102 vocabulary feature variables for subsequent feature selection and model construction.
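The counting step can be illustrated with a short sketch. It assumes that each essay has already been segmented into a list of tokens (for example with `jieba.lcut(text)`), that the lexicon is available as a mapping from category name to a set of words, and that raw counts rather than relative frequencies are used. The function name, the two categories, and the sample tokens are hypothetical.

```python
from collections import Counter


def category_frequencies(tokens, lexicon):
    """Count how many tokens of one essay fall into each word category.

    `lexicon` maps a category name to the set of words in that category.
    A word listed under several categories is counted in each of them.
    """
    counts = Counter(tokens)
    return {
        category: sum(counts[word] for word in words)
        for category, words in lexicon.items()
    }


# Hypothetical two-category lexicon and a pre-segmented sentence
toy_lexicon = {"pronoun": {"我"}, "work": {"医生", "努力"}}
toy_tokens = ["我", "想", "成为", "医生", "我", "努力"]
features = category_frequencies(toy_tokens, toy_lexicon)
```

Applied to the full revised dictionary, the same function would return one value per word category for each participant, giving the feature matrix used in the later screening steps.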

Feature screening

This study aimed to identify differences in vocabulary usage among high school students with high and low career readiness skills and construct a model for prediction. Therefore, the key components of this study include the removal of interfering features and the selection of important vocabulary features that can be used to effectively distinguish between the two skill levels.

First, a preliminary screening of features extracted from the revised career readiness lexicon was conducted to exclude features that may interfere with the research concept. The revised lexicon comprises 102 vocabulary categories (VCs), with VC1~VC80 being the first 80 categories. Most of these categories play a crucial role in sentence structure and meaning integrity, making them relevant to the research concept of “career readiness.” Therefore, VC1~VC80 were considered important vocabulary features and were included in the model. VC81~VC91 represent 11 punctuation marks, and VC92~VC102 are statistical features generated by the Chinese TextMind system, which are irrelevant to the research objectives and were excluded from the model.

Subsequently, content validity was employed to investigate whether the revised lexicon adequately reflected the studied concept. The content validity was measured using the content validity index (CVI), including the item-level content validity index (I-CVI) and scale-level content validity index (S-CVI) (Liu, 2010; Lou, 2022). Three master’s students in psychology and two master’s students in education assessed the 80 vocabulary categories VC1~VC80. The task of the evaluators was to judge the extent to which each vocabulary category reflected the concept of “career readiness” and to score it on the evaluation form. The evaluation form used a 4-point scale, where “1” indicates no relevance, “2” indicates weak relevance, “3” indicates moderate relevance, and “4” indicates strong relevance.

Finally, to determine the most effective vocabulary features for distinguishing between the two groups of high and low career readiness, the chi-square test was applied to further screen the 80 vocabulary features VC1~VC80 (Kastrin et al. 2010). The chi-square test is commonly used for feature selection and measures the dependence between a specific feature and a category (Forman, 2003). Higher scores indicate a stronger dependence between a category and a given feature, while features with lower scores have less information and are considered for removal.
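As an illustration of chi-square scoring for frequency features, the sketch below compares the summed frequency of a feature in each group with the value expected if the feature were independent of group membership. This is the convention followed by the `chi2` scorer in scikit-learn’s `feature_selection` module; whether the authors used this exact variant is not stated, so the code is an assumption, and the function name and toy data are hypothetical.

```python
def chi2_score(feature_values, labels):
    """Chi-square statistic between one non-negative feature and the class labels.

    Observed: total feature frequency within each class.
    Expected: overall feature total multiplied by the share of samples in that class.
    """
    n = len(labels)
    total = sum(feature_values)
    if total == 0:
        return 0.0  # a feature that never occurs carries no information
    score = 0.0
    for cls in sorted(set(labels)):
        observed = sum(v for v, y in zip(feature_values, labels) if y == cls)
        expected = total * sum(1 for y in labels if y == cls) / n
        score += (observed - expected) ** 2 / expected
    return score


# Toy data: four participants, two per group
toy_labels = [1, 1, 0, 0]
score_informative = chi2_score([3, 3, 1, 1], toy_labels)  # frequency differs by group
score_flat = chi2_score([2, 2, 2, 2], toy_labels)         # identical in both groups
```

Features would then be ranked by score and the lowest-scoring ones considered for removal.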

Model construction and evaluation

Using machine learning algorithms, a predictive model for high school students’ career readiness was constructed based on the filtered features, and the optimal model was selected by considering various evaluation metrics. In psychological modelling, it is common to explore multiple machine learning methods on a specific dataset, continually refining and tuning them. Ultimately, one or more optimal models are determined based on specific model evaluation metrics (Su et al. 2021). Therefore, using Python 3.9, this study constructed classification models with five algorithms: naive Bayes, decision tree, support vector machine (SVM), K-nearest neighbour (KNN), and random forest. These algorithms are widely applied in relevant psychological research and have demonstrated good predictive performance (Farnadi et al. 2013; Marouf et al. 2019; Liu and Niu, 2016).

This study employed the following model evaluation metrics: accuracy, recall, precision, F1-score, and log-loss. The model evaluation metrics are calculated using the “sklearn.metrics” library in the Python 3.9 environment. The interpretation of these metrics is complemented by the confusion matrix presented in Table 7 (Li et al. 2023).

Accuracy is the proportion of correctly predicted outcomes in the entire sample, counting both true positives and true negatives. Recall represents the percentage of samples belonging to a specific category that are accurately categorized as such. In the context of this study, recall in the high career readiness group denotes the percentage of individuals actually belonging to this group who are correctly labelled as part of it. This metric, when applied to positive instances, is also known as “sensitivity.” Conversely, in the low career readiness group, recall represents the percentage of individuals actually belonging to this group who are accurately identified as such. When applied to negative instances, this metric is alternatively referred to as “specificity.” The aggregate recall across both categories yields the model’s overall recall. Precision quantifies the proportion of samples genuinely belonging to the positive category among all those labelled as positive. The F1-score combines precision and recall as their harmonic mean. The log-loss, or cross-entropy loss, assesses the divergence between the true and predicted probability distributions, thereby measuring the classification model’s performance, with lower values indicating superior performance.
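These definitions can be written out directly from the cells of a confusion matrix. The sketch below treats the high career readiness group (label 1) as the positive class; the function names and the example counts are hypothetical and are not results from the study, which computed its metrics with “sklearn.metrics”.

```python
import math


def classification_metrics(tp, fp, fn, tn):
    """Compute the metrics described above from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)        # sensitivity: recall of the positive class
    specificity = tn / (tn + fp)   # recall of the negative class
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": 2 * precision * recall / (precision + recall),
    }


def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; `p_pred` holds predicted probabilities of label 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)


# Hypothetical counts for illustration only
example = classification_metrics(tp=80, fp=10, fn=20, tn=90)
```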

Results

Career readiness dictionary revision

High-frequency vocabulary filtering was conducted in the Python 3.9 environment. The results showed that the cumulative word frequency of the first 8073 words (ranked by frequency) in the high school student essays reached 90% of the total cumulative word frequency (total cumulative word frequency: 262,486 times; first 90% word frequency: 236,237 times). Beyond this point, each additional 1000 words increased the cumulative word frequency by no more than 1%. Based on this distribution feature, we chose the first 8073 words as high-frequency words and compared them with the “TextMind” Dictionary. The results showed that 3760 words exactly matched the “TextMind” Dictionary. After removing 559 meaningless words and 33 duplicate words from the remaining 4313 words, we categorized the remaining 3721 words into 102 word categories, forming the revised Career Readiness Dictionary, which includes 102 word categories and 15,896 words. Figure 3a, b presents the frequency statistics of the word categories in the original dictionary and the revised Career Readiness Dictionary in the form of Pareto charts.
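
The 90% cut-off can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the authors' script: the function name `high_frequency_words` and the toy token list are hypothetical, and word segmentation of the essays is assumed to have been done already.

```python
from collections import Counter


def high_frequency_words(tokens, coverage=0.90):
    """Return the most frequent words whose cumulative frequency first
    reaches `coverage` of all token occurrences."""
    counts = Counter(tokens)
    total = sum(counts.values())
    selected, cumulative = [], 0
    # Walk through the words from most to least frequent.
    for word, freq in counts.most_common():
        if cumulative / total >= coverage:
            break
        selected.append(word)
        cumulative += freq
    return selected, cumulative, total


# Toy corpus (hypothetical): 10 tokens, 4 distinct words.
tokens = ["career"] * 5 + ["plan"] * 3 + ["study"] + ["dream"]
selected, cumulative, total = high_frequency_words(tokens, coverage=0.90)
print(selected, cumulative, total)
```

In this toy corpus the three most frequent words cover 9 of the 10 tokens, so the fourth word falls outside the high-frequency list. The words retained this way would then be compared against the existing dictionary entries.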

In the revised dictionary, the word frequency of each word category increased. Although the top 10 word categories in the original dictionary and the revised dictionary were the same, there were some differences in their relative positions. For example, emotional process words were positioned after cognitive process words in the revised dictionary, while they were positioned before cognitive process words in the original dictionary. Similarly, the position of money words relative to social process words was adjusted in the revised dictionary. This indicates that the revision both expanded the original dictionary and adjusted the relative weight of its categories, so that the revised dictionary better reflects the linguistic features of “career readiness”.

Career readiness feature extraction

Content validity

The I-CVI reflects the agreement among experts in rating a particular item. It is calculated as the number of experts who gave a score of 3 or 4 divided by the total number of experts. When the number of experts is less than or equal to 5, the I-CVI should be equal to 1. When the number of experts is more than 5, the I-CVI should be at least 0.78. Therefore, based on the I-CVI of a specific item, decisions can be made about whether to retain or discard that item. The S-CVI is calculated as the average of all I-CVIs and should be at least 0.80 (Polit et al. 2007). The evaluators’ ratings for the dictionary used in this study are shown in Table 8. The I-CVI for each of the 80 word categories retained in the dictionary after the initial screening is 1, which means that the I-CVI = 1 > 0.78, meeting the required standard. The S-CVI = 1 × 80/80 = 1 > 0.80. Therefore, it can be considered that the revised dictionary has good content validity.
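
The two indices can be computed in a few lines. The sketch below follows the calculation rules stated above; the ratings are hypothetical 4-point scores from six imaginary experts and do not come from Table 8.

```python
def item_cvi(ratings):
    """I-CVI: share of experts who rated the item 3 or 4 on a 4-point scale."""
    return sum(r >= 3 for r in ratings) / len(ratings)


def scale_cvi(all_ratings):
    """S-CVI: average of the I-CVIs across all items."""
    icvis = [item_cvi(r) for r in all_ratings]
    return sum(icvis) / len(icvis)


def meets_icvi_standard(ratings):
    """Apply the retention rule from the text: I-CVI must equal 1 with
    five or fewer experts, and be at least 0.78 with more than five."""
    required = 1.0 if len(ratings) <= 5 else 0.78
    return item_cvi(ratings) >= required


# Hypothetical ratings: three word categories, six experts each.
ratings = [
    [4, 4, 3, 4, 3, 4],  # all experts rate 3 or 4 -> I-CVI = 1.0
    [4, 3, 2, 4, 4, 3],  # five of six             -> I-CVI = 5/6
    [2, 3, 1, 4, 2, 3],  # three of six            -> I-CVI = 0.5
]

icvis = [item_cvi(r) for r in ratings]
retained = [r for r in ratings if meets_icvi_standard(r)]
print(icvis)
print(len(retained), scale_cvi(retained))
```

Here the third category falls below 0.78 and would be discarded, after which the S-CVI is averaged over the retained categories only.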

Feature screening

Based on the revised dictionary, the word frequency of each vocabulary category in the essay text was calculated. The results of the word frequency feature extraction from the essay text are shown in Fig. 4. Then, a chi-square statistic was computed for each feature using the chi-square test from the “sklearn.feature_selection” module. The top 30 vocabulary features that could distinguish between the two groups of texts were selected for the construction of the machine learning prediction model. The final dictionary included in the prediction model consisted of 30 vocabulary categories (Table 9) with a total of 9416 words.
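
A minimal sketch of this screening step with scikit-learn is shown below. It assumes that the selection was done with `SelectKBest` together with `chi2`, which the paper does not state explicitly, and it runs on synthetic counts for 80 word categories in which only the first five differ between the two groups.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2

# Synthetic stand-in for the word-category frequencies (assumption):
# 200 essays x 80 categories, non-negative counts as chi2 requires.
rng = np.random.default_rng(0)
n_essays, n_categories = 200, 80
y = rng.integers(0, 2, size=n_essays)          # 1 = high, 0 = low readiness
rates = np.full((n_essays, n_categories), 3.0)
rates[y == 1, :5] = 10.0                       # five informative categories
X = rng.poisson(rates)

# Score every category against the group label and keep the 30 best.
selector = SelectKBest(score_func=chi2, k=30)
X_selected = selector.fit_transform(X, y)
selected_idx = selector.get_support(indices=True)

print(X_selected.shape)
print(selected_idx[:5])
```

The per-feature statistics are available afterwards in `selector.scores_`, which can be useful for reporting why a category was kept.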

Career readiness prediction results and evaluation

At the model prediction stage, we constructed five classification models and used five model evaluation metrics to select the optimal prediction model. A comparison of the prediction results for the different models is shown in Table 10. Taking all indicators into account, the random forest model outperformed the other models in all aspects and was therefore the best performer in the prediction task. The naive Bayes model also demonstrated high performance, ranking second after random forest. In contrast, the KNN model showed poor performance in terms of accuracy and recall, with values of only 0.542 and 0.553, respectively.

Discussion

Considering the issue of cultural differences, this study undertook a localized revision of the career readiness measurement tool based on the MIRT, making it more suitable for assessing the career readiness status of Chinese high school students (Marciniak et al. 2020). Furthermore, text mining and machine learning methods were employed to construct predictive models for both scale data and textual data, aiming to achieve the automatic prediction of career readiness level.

In this study, we revised the “Career Readiness Questionnaire–Adolescent Version” using the MIRT. Through a combination of factor analysis and item response theory, we achieved a more accurate measurement of career readiness among Chinese high school students. We selected the MGRM rather than the bifactor model for parameter estimation because it fitted the concept of career readiness better (Jiang et al. 2016; Xie, 2015). Based on the IIF, the tool provided more item information for measuring individuals with moderate to low career readiness abilities, making it effective in distinguishing between individuals with lower to moderate career readiness abilities and those with higher career readiness abilities. Additionally, considering the balance among item information functions, item content, and the original scale’s dimensional structure, we chose to eliminate the six items with the lowest information function peaks (Dodeen and Al-Darmaki, 2016). These items belonged to the labour market knowledge and occupational exploration dimensions. This decision was influenced by the fact that high school students often devote more time to academic studies, and their career planning is often not solely determined by themselves but influenced by their parents and teachers (Zhao, 2023). Although all these items demonstrated acceptable discrimination and difficulty, their item information differed from that of the other items. This may be related to the level of career education in the schools and regions where the research sample is located. Future researchers may explore the trade-off between these two dimensions more deeply in a broader and more diverse sample, especially covering samples from different regions and schools, to better understand the performance of these items in different contexts.

Furthermore, this study highlights that, compared to existing measurement tools related to “career readiness” in China, the revised measurement tool in this study is more comprehensive in its dimensional considerations. For instance, it includes the social support dimension, which might not have been covered in similar studies (Liu, 2016; Wang et al. 2014; Wang, 2022). This indicates progress in the development and revision of career readiness measurement tools, providing valuable references for further research and practice.

In terms of model construction, Study 2 automated the prediction of high school students’ career readiness abilities based on self-report data and essay text data using text mining and machine learning methods. We selected the “TextMind” Dictionary and employed the chi-square test to select key features (Kastrin et al. 2010). Ultimately, random forest was determined to be the optimal model, which is consistent with the results of previous studies that also utilized random forest for psychological feature prediction (Lushi et al. 2017; Luo et al. 2021). Compared to other algorithms, the random forest algorithm performed best, providing robust methodological support for future similar research. This study integrated text mining with psychology, constructing prediction models for individuals based on self-report data and text data. The study compared the actual performance of different machine learning algorithms in predicting high school students’ career readiness, aiming to achieve automated prediction of career readiness and expand the field of psychological research (Luo et al. 2021). Overall, our research provides data support at multiple levels for understanding and assessing high school students’ career readiness. The revised questionnaire tool is more culturally aligned with the Chinese context, and the application of text mining and machine learning allows for a more comprehensive and dynamic understanding of individuals’ career readiness abilities. This is crucial for schools and parents to provide more scientific educational guidance and promote the development of adolescent career education.

However, this study has several limitations. First, despite the localization revision of the tool, there is a need for further exploration of the impact of cultural differences on the measurement results (Wang et al. 2020; Cheng and Liu, 2016; Zhang et al. 2018). Additionally, due to practical constraints, the study did not adequately consider the issue of sample representativeness. Therefore, caution should be taken when generalizing and applying the results. Future research may delve into the influences of cultural differences between East and West, North and South, and urban and rural areas on career readiness utilizing larger and more diverse samples (Wen et al. 2015). Second, an insufficient amount of data might affect the robustness and generalizability of machine learning models (Jollans et al. 2019). Subsequent research could explore text data features more extensively on a larger and more diverse sample. Finally, this study faced the issue of “imbalanced sample category distribution”, where the proportion of the low career readiness group was much smaller than that of the high career readiness group. This may significantly interfere with the accuracy of predictive models, as many standard classification algorithms, such as decision trees and support vector machines, are more suitable for datasets with balanced sample distributions (He and Garcia, 2009). Future research could explore solutions to this problem through data preprocessing, cost-sensitive models and ensemble methods (López et al. 2013; McCarthy et al. 2005).
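
Two of the remedies mentioned for class imbalance can be sketched in plain Python. The example below is illustrative only and was not part of the study: `balanced_class_weights` uses the common heuristic of weighting each class by n_samples / (n_classes × class count), which a cost-sensitive learner could take as input, and `random_oversample` is a simple data-preprocessing approach that duplicates minority-class samples. Both function names and the toy labels are hypothetical.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency:
    n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority-class samples until every
    class is as large as the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, cnt in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == cls]
        for _ in range(target - cnt):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels


# Toy data (hypothetical): 8 high-readiness essays, 2 low-readiness essays.
labels = ["high"] * 8 + ["low"] * 2
samples = [f"essay_{i}" for i in range(10)]

weights = balanced_class_weights(labels)
new_samples, new_labels = random_oversample(samples, labels)
print(weights)
print(Counter(new_labels))
```

Oversampling should be applied to the training split only, so that duplicated essays never appear in the data used for evaluation.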

Conclusion

This study draws the following conclusions:

(1) The concept of “career readiness” is more suitable for the multidimensional graded response model than for the bifactor model. Using a measurement tool revised based on MIRT allows for effective measurement of high school students’ career readiness, providing a more accurate reflection of individual levels of career readiness.

(2) Based on the revised TextMind Dictionary, more accurate feature extraction can be achieved for high school students’ essay texts. The chi-square test can be used to select word class features that better represent career readiness. The various models constructed showed good predictive performance, with the random forest model performing the best. This indicates the effectiveness of machine learning models constructed based on essay text data in predicting high school students’ career readiness.

Data availability

The data collected and reported in this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical considerations.

References

Alam T, Kirk N, Hirsch E, Briggs‐Gowan M, Wakschlag LS, Roy AK, Wiggins JL (2023) Characterizing the spectrum of irritability in preadolescence: Dimensional and pragmatic applications. International Journal of Methods in Psychiatric Research 32(S1):e1988

August RA (2020) Understanding career readiness in college student-athletes and identifying associated personal qualities. Journal of Career Development 47(2):177–192

Bai JJ (2021) A practical study on social work intervention in the career preparation of graduates of marine majors. Dissertation, Dalian Maritime University

Binghashayan NS, Yeo KJ, Kosnin AM (2022) Relationship between Student’s Self-Determination, Parental Involvement, Special Education Teachers’ Support, and College and Career Readiness among Secondary Students with Learning Disabilities in Riyadh, Kingdom of Saudi Arabia. Sustainability (21):14221

Brown SD, Lent RW (2012) Career development and counseling: putting theory and research to work. Career Development Quarterly 40(4):376–377

Castellano ME, Richardson GB, Sundell K, Stone JR (2017) Preparing students for college and career in the United States: the effects of career-themed programs of study on high school performance. Vocations and Learning 10(1):47–70

Chalmers RP (2012) MIRT: A multidimensional item response theory package for the R environment. Journal of Statistical Software 48(6):1–29

Cheng H, Liu AS (2016) Revision of the Chinese version of attention-related cognitive errors scale. Chinese Journal of Clinical Psychology 24(05):874–877

Cheng Q, Li TM, Kwok CL, Zhu T, Yip PS (2017) Assessing suicide risk and emotional distress in Chinese social media: a text mining and machine learning study. Journal of Medical Internet Research 19(7):e243

Cheng W, Zhang ZS, He ZJ (2015) Information criterion-based source number estimation methods with comparison. Journal of Xi’an Jiaotong University, 24(05):874–877

Crespo C, Jose PE, Kielpikowski M, Pryor J (2013) “On solid ground”: Family and school connectedness promotes adolescents’ future orientation. Journal of Adolescence 36(5):993–1002

Dai H (2022) Psychometrics. Higher Education Press, Shanghai

Dodeen H, Al-Darmaki F (2016) The application of item response theory in developing and validating a shortened version of the Emirate Marital Satisfaction Scale. Psychological Assessment 28(12):1625–1633

Embretson SE (1996) The new rules of measurement. Psychological Assessment 8(4):341

Farnadi G, Zoghbi S, Moens MF, Cock MD (2013) Recognising personality traits using Facebook status updates. In Proceedings of the International AAAI Conference on Web and Social Media 7(No. 2):14–18

Feldman R, Sanger J (2008) The text mining handbook: advanced approaches to analyzing unstructured data. Computational Linguistics 34(1):125–127

Forman G (2003) An extensive empirical study of feature selection metrics for text classification. Journal of Machine Learning Research 3:1289–1305

Gao SY, Ding RC (2022) How do secondary schools prepare students for future employment? Findings from the OECD Career Readiness Program. Shanghai Education (08):54–59

Gysbers NC (2013) Career-ready students: a goal of comprehensive school counseling programs. The Career Development Quarterly 61(3):283–288

He H, Garcia EA (2009) Learning from Imbalanced Data. IEEE Transactions on Knowledge and Data Engineering 21(9):1263–1284

He LF, Huang HY, Li C, Li SY (2021) Work readiness of newly graduated nurses: a review. Chinese Journal of Nursing 56(02):305–309

Hirsch E, Alam T, Kirk N, Bevans KB, Briggs‐Gowan M, Wakschlag LS, Roy AK (2023) Developmentally specified characterization of the irritability spectrum at early school age: Implications for pragmatic mental health screening. International Journal of Methods in Psychiatric Research 32(S1):e1985

Hirschi A, Niles SG, Akos P (2009) Engagement in adolescent career preparation: Social support, personality and the development of choice decidedness and congruence. Journal of Adolescence 34(1):173–182

Hirsh JB, Peterson JB (2009) Personality and language use in self-narratives. Journal of Research in Personality 43(3):524–527

Idris A, Ken K (2018) Big data in psychology: A framework for research advancement. The American Psychologist 73(7):899–917

Jiang S, Wang C, Weiss DJ (2016) Sample size requirements for estimation of item parameters in the multidimensional graded response model. Frontiers in Psychology 7:109

Jiménez S, Moral de la Rubia J, Varela-Garay RM, Merino-Soto C, Toledano-Toledano F (2023) Resilience measurement scale in family caregivers of children with cancer: Multidimensional item response theory modeling. Frontiers in Psychiatry 13:985456

Jollans L, Boyle R, Artiges E, Banaschewski T, Desrivières S, Grigis A, Whelan R (2019) Quantifying performance of machine learning methods for neuroimaging data. NeuroImage 199:351–365

Kang CH, Xin T (2010) New developments in test theory: multidimensional item response theory. Advances in Psychological Science 18(03):530–536

Kastrin A, Peterlin B, Hristovski D (2010) Chi-square-based scoring function for categorization of MEDLINE citations. Methods of Information in Medicine 49(4):371–378

Kenny ME, Wu X, Guterres KM, Gordon P, Schmidtberger R, Masters A, … & Cunningham S (2023) Youth perspectives on decent education and college and career readiness. Journal of Career Assessment, 10690727231217108

Lau PL, Anctil T, Ee GT, Jaafar JLS, Kin TG (2020) Self‐concept, attitudes toward career counseling, and work readiness of Malaysian vocational students. The Career Development Quarterly 68(1):18–31

Lei KQ, Zhang M, Wang ZY (2000) A study on the psychological status and causes of career preparation of college students in Chongqing. Journal of Southwest University (Social Sciences Edition) (02):70–74

Lent RW (2013) Career‐life preparedness: revisiting career planning and adjustment in the new workplace. The Career Development Quarterly 61(1):2–14

Li HF, Qin Y (2021) On the current situation and influencing factors of college art majors’s preparation for job hunting: a case study of Department of Fine Arts in Guilin Normal College. Journal of Guilin Normal College 35(01):60–65

Lin XB (2021) Outcome related factors in counseling process: Text-mining and Set-theory based method research. Dissertation, Central China Normal University

Liu BQ, Niu Y (2016) Gender recognition of Chinese micro-blog users based on emotion features. Computer Engineering & Science 38(09):1917–1923

Liu K (2010) How to test content validity. Journal of Nurses Training 25(01):37–39

Liu X (2016) Analysis on Impact of employment preparation on initial employment quality of higher vocational college students-based on 489 tracking data. Vocational and Technical Education 37(27):49–55

Li WK, Liu YC, Liu ZY, Huang WJ, Hu XM (2023) A calibrated confusion matrix based on positive and background data. Natural Science Journal of Hainan University 41(03):293–302

Lombardi AR, Rifenbark GG, Rogers HJ, Swaminathan H, Taconet A, Mazzotti VL, … & Langdon S (2023) Establishing construct validity of a measure of adolescent perceptions of college and career readiness. Career Development and Transition for Exceptional Individuals (1):4–14

López V, Fernández A, García S, Palade V, Herrera F (2013) An insight into classification with imbalanced data: Empirical results and current trends on using data intrinsic characteristics. Information Sciences 250:113–141

Lushi C, Tao G, Michal K, David S, Davidson RL (2017) Building a profile of subjective well-being for social media users. PloS One 12(11):e0187278

Luo F, Jiang LM, Tian XT, Xiao MG, Ma YZ, Zhang S (2021) Shyness prediction and language style model construction of elementary school students. Acta Psychologica Sinica 53(02):155–169

Lou S (2022) Improvement of pupils’ learning motivation: intervention based on growth thinking. Dissertation, Northwest Normal University

Macanovic A, Przepiorka W (2024) A systematic evaluation of text mining methods for short texts: Mapping individuals’ internal states from online posts. Behavior Research Methods, 1-22

Marciniak J, Hirschi A, Johnston CS, Haenggli M (2020) Measuring career preparedness among adolescents: Development and validation of the Career Resources Questionnaire–Adolescent Version. Journal of Career Assessment 29(1):106907272094383

Markovikj D, Gievska S, Kosinski M, Stillwell D (2013) Mining Facebook data for predictive personality modeling. AAAI International Conference on Weblogs & Social Media 7(No. 2):23–26

Marouf AA, Hasan MK, Mahmud H (2019) Identifying Neuroticism from user generated content of social media based on psycholinguistic cues. 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE) (pp. 1-5). IEEE

Ma RT, Ai T, Zhang F, Chen Q (2023) Psychometric evaluation of the multidimensional-multiattributional causality Scale based on multidimensional item response theory. Journal of Mathematics Education 32(04):41–49

Mauer EB, Gysbers NC (1990) Identifying career concerns of entering university freshmen using My Vocational Situation. The Career Development Quarterly 39(2):155–165

McCarthy K, Zabar B, Weiss G (2005) Does cost-sensitive learning beat sampling for classifying rare classes? In Proceedings of the 1st International Workshop on Utility-based Data Mining (pp. 69-77)

Mi GW, Xian ZQ, Wang L, Lyu DS (2021) Public psychological health information needs during the COVID-19-take the social Q&A Platform “Zhihu” as an example. Journal of Modern Information 41(06):108–117

Morrow E (2024) Scamming higher ed: An analysis of phishing content and trends. Computers in Human Behavior 158:108274

Nam J, Wang J, Zhang G (2008) Managerial career concerns and risk management. The Journal of Risk and Insurance 75(3):785–809

Patton W, Skorikov VB (2007) Career Development in Childhood and Adolescence (Vol. 2). BRILL

Polit DF, Beck CT, Owen SV (2007) Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Research in Nursing & Health 30(4):459–467

Praskova A, Creed PA, Hood M (2015) The development and initial validation of a career calling scale for emerging adults. Journal of Career Assessment 23(1):91–106

Quansah F, Agormedah EK, Ankomah F, Srem‐Sai M, Nugba RM, Hagan JE Jr, Schack T (2024) Revalidation of teachers’ sense of efficacy scale using Ghanaian in‐service teachers: Multidimensional item response theory with factor analyses. Psychology in the Schools

Raghavendra AH, Bala PK, Mukherjee A (2024) Text mining analysis of retail and consumer service leaders’ sustainability narratives: Are they actually true? Journal of Retailing and Consumer Services 80:103921

Reckase MD (2006) 18 Multidimensional Item Response Theory. Handbook of Statistics 26:607–642

Salomon I, Pals AM, Elsey T, Golding JM, Sheehan EA (2024) Exploring how undergraduate psychology majors seek information and experiences for career preparation. Teaching of Psychology 1:71–80

Sarah D, Jitske T, Felt JM (2018) Assessment of health surveys: fitting a multidimensional graded response model. Psychology, Health & Medicine 23(sup1):13–31

Sepehrinia M, Farahani H, Watson P, Amini N (2024) Psychometric properties and item response theory analysis of the Persian version of the social pain questionnaire. Frontiers in Psychology 15:1372229

Sukhawaha S, Arunpongpaisal S, Hurst C (2016) Development and psychometric properties of the Suicidality of Adolescent Screening Scale (SASS) using multidimensional item response theory. Psychiatry Research 243:431–438

Super DE (1980) A life-span, life-space approach to career development. Journal of Vocational Behavior 16(3):282–298

Su Y, Liu MM, Zhao N, Liu XQ, Zhu TS (2021) Identifying psychological indexes based on social media data: A machine learning method. Advances in Psychological Science 29(04):571–585

Tay L, Woo SE, Hickman L, Saef RM (2020) Psychometric and Validity Issues in Machine Learning Approaches to Personality Assessment: A Focus on Social Media Text Mining. European Journal of Personality 34(5):826–844

Tian H (2022) A study on the relationship between family rearing attitudes and career readiness among rural high school freshmen. College Entrance Examination (17):158–160

Tu DB, Cai Y, Dai HQ, Ding SL (2011) Parameters Estimation of MIRT Model and Its Application in Psychological Tests. Acta Psychologica Sinica 11:1329–1340

Vanessa D, Jill H, Tristram H (2022) Increasing students’ career readiness through career guidance: measuring the impact with a validated measure. British Journal of Guidance & Counselling 50(2):260–272

Wang JY, Gan SQ, Zhao N, Liu TL, Zhu TS (2016) Chinese mood variation analysis based on Sina Weibo. Journal of University of Chinese Academy of Sciences 33(06):815–824

Wang QK, Qian H, Huang MY (2014) The Research on Employment Preparation Situation and Countermeasures of College Students. China University Students Career Guide (22):13–18

Wang WC, Yao G, Tsai YJ, Wang JD, Hsieh CL (2006) Validating, improving reliability, and estimating correlation of the four subscales in the WHOQOL-BREF using multidimensional Rasch analysis. Quality of Life Research 15(4):607–620

Wang Y, Lei T, Liu X (2020) Chinese system usability scale: Translation, revision, psychological measurement. International Journal of Human-Computer Interaction 36(10):953–963

Wang ZYZ (2022) The development reliability and validity of the evaluation scale of College students’ Employment Readiness. Journal of Mudanjiang Normal University (Social Sciences Edition) (06):82–90

Wen L, Liang C, Ying Y, Shuai Z (2015) Psychometric properties of the formal characteristics of behavior-temperament inventory in Chinese samples. The Spanish Journal of Psychology 18:E73

Xie RH (2015) The Parameter Estimation of Multidimensional Item Response Theory Graded Response Model. Dissertation, Southwest University

Yang YB, Miao DM, Tian JQ, Xiao LJ, Su H, Hong X (2008) Item analysis of the Chinese Soldier Personality Questionnaire Using Item Response Theory. Acta Psychologica Sinica (05):611–617

Yao LH (2003) BMIRT: Bayesian multivariate item response theory. CTB/McGraw-Hill, Monterey, CA

Yue GA, Dong YH, Chen H, Lai KS (2013) Online text sentiment analysis techniques and its applications. Advances in Psychological Science 21(10):1711–1719

Zang YH, Zhao SY, Chen W, Pan Y, Zhang Y (2012) Modifying parents peer attachment scale with Item Response Theory. Journal of Guizhou Normal University(Natural Sciences) 30(02):22–27

Zan LL, Liu BL, Liu ZX (2008) Development of internet addiction disorder diagnostic scale for middle school students. Chinese Journal of Clinical Psychology (02):123–125

Zhang LH, Tian Y, Chu PP, Wen X, Zhang SJ, Shi JR, Wang P (2017) Career adaptability evaluation method: the item response theory model based on text mining. Paper presented at the 20th National Psychological Academic Conference, 3 November 2017

Zhang XY (2015) LIWC: A text analysis tool based on word count. Journal of Southwest Minzu University(Humanities and Social Sciences Edition) 36(04):101–104

Zhang X, Zuo B, Zhao Y (2018) Revision of Chinese Version of Fatalism Scale. Chinese Journal of Clinical Psychology 26(05):914–917

Zhan YJ (2020) Research on Career Planning Capability Status Quo and Countermeasures of Secondary Vocational Students-Take GuangZhou S Secondary Vocational College as an Example. Dissertation, Guangzhou University

Zhao X (2023) A Study on the Relationship between Social Support, Self-Identity and Career Exploration among High School Students and Educational Countermeasures. Dissertation, Shanxi University

Acknowledgements

Open access funding was provided by the 2023 Shandong Provincial Graduate Quality Education Teaching Resource Project (SDJAL2023009), Experimental Teaching and Teaching Laboratory Construction Research Project of Shandong Province in 2024 (No.67), the Third Batch of First-Class Undergraduate Courses in Shandong Province (Psychometrics), Experimental Teaching and Teaching Laboratory Construction Research Project of Shandong Normal University in 2024 (2024ZS05), 2024 Teaching Reform Research Project of Shandong Normal University (2024ZJ41), 2023 Graduate Education and Teaching Reform Research Project of Shandong Normal University (No. 18) and the Project of 2023 Shandong Normal University Graduate Student Course in English (Big Data Psychology and Applications).

Author information

Contributions

PW, XJW, and YXZ conducted the research and wrote the main manuscript. YXZ, MZZ, and KXY collected the data and performed the data analyses. KXY, FG, FXZ, and JCM participated in the data analysis and revised the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments. The protocol was approved by the Ethics Committee of Shandong Normal University.

Informed consent

Informed consent was obtained from all participants for inclusion in the study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, P., Zheng, Y., Zhang, M. et al. Methods for measuring career readiness of high school students: based on multidimensional item response theory and text mining. Humanit Soc Sci Commun 11, 922 (2024). https://doi.org/10.1057/s41599-024-03436-0

DOI: https://doi.org/10.1057/s41599-024-03436-0