Abstract

Bibliometrics can help program directors to conduct objective and fair assessments of scholar impact, progress, and collaboration, as well as benchmark performance against peers and programs. However, different academic search engines use different methodologies to provide bibliometric information, so intermixing results from multiple search engines might contribute to inequitable decision-making. Google Scholar and Scopus provide useful bibliometric information for scholars, including the h-index. However, a search of the literature revealed that h-index was higher in Google Scholar than Scopus in other scholar populations; therefore, we hypothesized that h-index might also be higher in Google Scholar than Scopus for translational science (TS) trainees. Trained investigators gathered scholarly profile information from Google Scholar and Scopus for all trainees from NIH-supported TS PhD and TS Training (TST) Programs for predoctoral and postdoctoral trainees. Investigators calculated number of citations/year and m-quotient using the data contained therein. M-quotient was defined as h-index divided by “n,” where “n” equaled the number of years since first publication. Investigators used the Wilcoxon Signed Rank test to compare bibliometrics (citations, citations/year, h-index, and m-quotient) from both sources for TS students and trainees. A total of 38 trainees (13 TS PhD students and 26 TST trainees, with one person counted in both groups) had active profiles in both Google Scholar and Scopus. Of the TST trainees, 21 were predoctoral and five were postdoctoral trainees. All four metrics (citations, citations/year, h-index, and m-quotient) were significantly higher (p < 0.05) in Google Scholar than Scopus for the entire study population, TS PhD students, TST trainees, and TST predoctoral trainees. All four bibliometrics were also numerically higher (but not significantly higher) in Google Scholar than Scopus for TST postdoctoral trainees.
This is the first study to compare bibliometrics in Google Scholar and Scopus among translational science trainees. We discovered higher overall citation counts in Google Scholar. Significant differences between Google Scholar and Scopus in bibliometrics, such as h-index, could impact the decisions made by program directors if the results are intermixed. Stakeholders should be consistent in their choice of academic search engine and avoid cross-engine comparisons, as failure to do so might contribute to inequitable decision-making.

Introduction

Bibliometrics can help program leaders to conduct objective and fair assessments of scholar impact, progress, and collaboration, as well as benchmark performance against peers and programs. However, different academic search engines use different methodologies to collect bibliometric information, so intermixing results from multiple search engines might contribute to inequitable decision-making.

Bibliometrics, including h-index, are often used to assess overall impact of a researcher’s work and are sometimes used in decision-making for resource allocation or academic progression (Maurya and Kumar, 2022). Additionally, h-index is often reviewed and considered during the job recruitment and grant review processes (Mondal et al., 2023).

The h-index is important because it balances number of publications against citation count. According to Koltun and Hafner, the h-index captures a scientist’s impact in a single number, can be computed at all career levels, and is easily interpreted. Institutions have used h-index to recruit members for fellowship programs, award grants to researchers, hire researchers for top positions in universities, and even for the Nobel Prize (Koltun and Hafner, 2021). Thus, as Koltun and Hafner note, h-index has greatly shaped the progress of the scientific community (Koltun and Hafner, 2021).
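For readers unfamiliar with the calculation, the h-index is the largest number h such that a scholar has h publications with at least h citations each. The following is a minimal Python sketch of that definition; the function name and the citation counts are hypothetical illustrations and are not data from this study.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts for two scholars with five papers each.
steady = [6, 5, 5, 4, 4]     # citations spread evenly across papers
one_hit = [100, 1, 0, 0, 0]  # a single highly cited paper

print(h_index(steady))   # 4
print(h_index(one_hit))  # 1
```

The second scholar has far more total citations but a lower h-index, which shows how the metric weighs publication count against citation count.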

Google Scholar and Scopus were launched in 2004 and have quickly become two of the most popular academic search engines to track bibliometrics for researchers; however, the academic search engines differ in a variety of ways, including cost of use, content curation processes, and scope of coverage (Aziz et al., 2009a). These differences between Google Scholar and Scopus bibliometrics could substantially affect the decisions made by leaders when evaluating programs. Therefore, a thorough understanding of the differences in h-index between two popular academic search engines is necessary for those charged with evaluating and comparing scholars and programs.

A study involving two NIH-Funded translational science training programs

The Institute for Integration of Medicine and Science (IIMS) at the University of Texas Health San Antonio (UTHSA) established the Translational Science Training (TST) TL1/T32 Program and the Translational Science (TS) PhD Program in 2009 and 2011, respectively. These programs are funded by the United States government’s National Institutes of Health (NIH), National Center for Advancing Translational Sciences (NCATS), through the Clinical and Translational Science Award (CTSA) Program.

The mission and vision of the TST Program is to build and optimize predoctoral and postdoctoral training programs to help trainees overcome the rate-limiting steps of translational science. We aim to improve health and reduce disparities by providing trainees with a program to help them develop the skills in team science, innovation, and scientific rigor necessary for a successful career in translational science. Research education and training in translational science will sustain development of a highly skilled workforce that efficiently advances patient-focused preclinical, clinical, clinical implementation, and public health research.

The TS PhD Program, one of only eight in the United States, provides in-depth, rigorous, and individualized multidisciplinary and multi-institutional research education and training that prepares scientists to integrate information from multiple domains and conduct independent and team-based research in TS. The program has a unique structure where students can simultaneously enroll in the graduate schools at three Hispanic-Serving Institutions and have access to supervisors and coursework at all universities.

Both the TST and TS PhD programs track trainee publications using Google Scholar and Scopus and report those to NIH/NCATS through Research Performance Progress Reports (RPPRs). These indexes provide useful bibliometric information for trainees, including the h-index; however, when program personnel used both to collect information for translational science trainees, we discovered h-index was often higher in Google Scholar than Scopus. A search of the literature revealed this is a known phenomenon across multiple disciplines (Maurya and Kumar, 2022; Aziz et al., 2009a; Anker et al., 2019; De Groote and Raszewski, 2012); therefore, we hypothesized that h-index might also be higher in Google Scholar than in Scopus for translational science trainees. As far as the authors are aware, this is the first study to evaluate differences in translational science bibliometrics between Google Scholar and Scopus. A thorough understanding of the discrepancies between Google Scholar and Scopus allows program directors to represent the value of training programs accurately. Additionally, program directors should be aware of the discrepancies between academic search engines when using these to evaluate trainees, mentors, and programs. The objective of this study was to assess and compare bibliometric information in two academic search engines (Google Scholar and Scopus) for NIH-supported translational science trainees.

Methods

Trained investigators gathered scholarly profile information from Google Scholar and Scopus for all trainees from the date they started in an NIH/CTSA-supported TS PhD or TST Program until the date of this analysis (8/3/2023). NIH requires that trainees be followed for at least 10 years after completion of their program, so this approach included the maximum possible time to capture their scholarly activity during and after their time in-program. A total of 141 trainees participated in these programs. All 141 trainees were considered for study inclusion. Trainee profiles were included when the person had an affiliation with the institution or its CTSA partners. If it could not be determined with confidence that a profile belonged to the individual of interest, that person’s information was not included in this analysis. Trainees were divided into two groups: TS PhD students and TST trainees. TST trainees were further subdivided into two subgroups: TST predoctoral and TST postdoctoral trainees.

Google Scholar and Scopus both contained number of publications, number of citations, h-index, and first publication year. Investigators calculated number of citations/year and m-quotient using the data contained therein. M-quotient was defined as h-index divided by “n,” where “n” equaled the number of years since first publication. For trainees with active profiles in both Google Scholar and Scopus, investigators used the Wilcoxon Signed Rank test (for non-normally distributed, paired, numerical data) to evaluate possible differences in number of citations, citations/year, h-index, and m-quotient between the two sources.
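The two derived metrics can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the investigators' actual procedure: the `Profile` class, the function names, and the example values are hypothetical; “n” is assumed to be a whole-year difference floored at 1 to avoid division by zero; and citations/year is assumed to use the same “n” as its denominator, which the text does not state explicitly.

```python
from dataclasses import dataclass

ANALYSIS_YEAR = 2023  # year of the analysis date reported in the Methods


@dataclass
class Profile:
    """Fields reported by both sources (the values used below are hypothetical)."""
    citations: int
    h_index: int
    first_publication_year: int


def years_since_first_publication(profile, analysis_year=ANALYSIS_YEAR):
    # Assumption: n is a whole-year difference, floored at 1 so that a scholar
    # who first published in the analysis year does not cause division by zero.
    return max(analysis_year - profile.first_publication_year, 1)


def citations_per_year(profile, analysis_year=ANALYSIS_YEAR):
    # Assumption: the denominator is the same n used for the m-quotient.
    return profile.citations / years_since_first_publication(profile, analysis_year)


def m_quotient(profile, analysis_year=ANALYSIS_YEAR):
    # m-quotient = h-index / n, as defined in the Methods.
    return profile.h_index / years_since_first_publication(profile, analysis_year)


# Hypothetical trainee whose two profiles disagree.
google_scholar = Profile(citations=120, h_index=6, first_publication_year=2018)
scopus = Profile(citations=90, h_index=5, first_publication_year=2018)

for name, profile in [("Google Scholar", google_scholar), ("Scopus", scopus)]:
    print(name, citations_per_year(profile), m_quotient(profile))
```

Because both derived metrics divide by the same “n” for a given trainee, any gap in raw citations or h-index between the two sources carries over proportionally to citations/year and m-quotient, provided both profiles report the same first publication year.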

Results

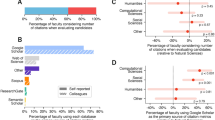

A total of 38 (13 TS PhD students and 26 TST trainees) of 141 trainees (27%) had active profiles in both Google Scholar and Scopus that could be matched with confidence, and were therefore eligible for this study. One eligible person was both a TS PhD student and a TST predoctoral trainee; this person was included in both groups (hence the sum of 39). Of the TST trainees, 21 were predoctoral and five were postdoctoral trainees.

All four metrics (citations, citations/year, h-index, and m-quotient) were significantly higher (p < 0.05) in Google Scholar than Scopus for the entire study population, TS PhD students, TST trainees, and TST predoctoral trainees (Table 1). The comparison for TST postdoctoral trainees (only five individuals) lacked sufficient study power to detect a significant difference; nevertheless, all four bibliometrics were also numerically higher in Google Scholar than Scopus for these postdoctoral trainees.
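The limited power of a five-person comparison can be illustrated directly. Under an exact two-sided Wilcoxon signed-rank test on n untied, non-zero paired differences, the smallest attainable p-value is 2/2^n, which is 0.0625 for n = 5, so even five pairs that all favor one source cannot reach p < 0.05. The Python sketch below uses only the standard library and hypothetical h-index values; the function name is an assumption for illustration, and the text does not state whether the authors' software reported exact or approximate p-values.

```python
def wilcoxon_signed_rank_exact(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples.

    Zero differences are dropped and tied absolute differences receive
    average ranks. Returns (statistic, p_value), where the statistic is the
    smaller of the positive and negative rank sums.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))

    # Store doubled ranks so that tied (half-integer) ranks stay integers.
    doubled_ranks = [0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            doubled_ranks[order[k]] = i + j + 2  # 2 * average of ranks i+1..j+1
        i = j + 1

    total = sum(doubled_ranks)
    w_plus = sum(r for r, d in zip(doubled_ranks, diffs) if d > 0)
    statistic = min(w_plus, total - w_plus)

    # Null distribution: each rank is positive or negative with probability 1/2.
    counts = {0: 1}
    for r in doubled_ranks:
        updated = {}
        for s, c in counts.items():
            updated[s] = updated.get(s, 0) + c
            updated[s + r] = updated.get(s + r, 0) + c
        counts = updated

    extreme = sum(c for s, c in counts.items() if min(s, total - s) <= statistic)
    return statistic / 2, extreme / 2 ** n


# Hypothetical h-index values for five trainees, all higher in Google Scholar.
google_scholar = [5, 7, 9, 12, 15]
scopus = [4, 5, 6, 8, 10]

statistic, p_value = wilcoxon_signed_rank_exact(google_scholar, scopus)
print(statistic, p_value)  # 0.0 0.0625
```

With six such pairs the same test would give 2/64 = 0.03125, which is why a numerically consistent difference can fall short of significance in the smallest subgroup.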

Study limitations

It is important to recognize that our study has limitations, such as possible selection bias, as only trainees with an affiliation with the institution were included. Additionally, this study did not include contextual factors such as trainee history, area of study, or authorship trends in these analyses. Finally, as Google Scholar and Scopus continue to improve, certain updates may not be reflected in this work. Despite these limitations, the current work provides valuable insights on the variance in h-index and other bibliometrics when using Google Scholar and Scopus.

Discussion

To our knowledge, this is the first study to compare bibliometrics in Google Scholar and Scopus among translational science trainees. We discovered overall higher values for all four metrics for these trainees in Google Scholar. This is consistent with previous studies found in the literature (Maurya and Kumar, 2022; Aziz et al., 2009a; Anker et al., 2019; De Groote and Raszewski, 2012). For example, when comparing the Google Scholar and Scopus profiles of Nobel Prize Laureates for Chemistry (22) and Economic Sciences (15), Maurya and Kumar reported that Google Scholar had higher h-index and citation counts (Maurya and Kumar, 2022). In another study evaluating citation counts among Google Scholar, Scopus, and Web of Science for general medicine journals, Kulkarni et al. found that articles indexed in Google Scholar and Scopus received higher citation counts than those indexed in Web of Science (Kulkarni et al., 2009a). However, upon multivariate analysis, only group-authored manuscripts maintained this trend, with Google Scholar demonstrating slightly fewer citations than Scopus or Web of Science (Kulkarni et al., 2009a). Both studies also mentioned lower citation accuracy for Google Scholar when compared to other options (Maurya and Kumar, 2022; Kulkarni et al., 2009a).

There are several reasons for variance between academic search engines like Google Scholar and Scopus. Previous research has demonstrated that Google Scholar includes more content and citations when compared to Scopus (Maurya and Kumar, 2022; Harzing and Alakangas, 2016). Content is added to Google Scholar using automatic robots, or “crawlers”, which continuously search the internet for indexable items and bibliographic data (Google Scholar, 2023). This contributes to Google Scholar’s broader coverage and inclusion of items from a variety of sources and time periods across the internet, including some that would be considered non-scholarly sources like personal websites, student handbooks, magazines, dissertations, and blogs (Maurya and Kumar, 2022; De Groote and Raszewski, 2012; Harzing and Alakangas, 2016; Google Scholar, 2023; Sauvayre, 2022). Additionally, Google Scholar does not have a content authentication board or similar committee (Maurya and Kumar, 2022; Mondal et al., 2023; Harzing and Alakangas, 2016; Khan et al., 2013). For websites to be indexed in Google Scholar, their content must be primarily scholarly articles and they must make the abstract or full text of the articles available and easy to see (Google Scholar, 2023). Further, websites that require the user to sign in or log in, download additional software, interact with popups, or use links to view the abstract or paper are not included (Google Scholar, 2023).

Bibliographic data is identified and extracted from items added to Google Scholar by automatic software called “parsers” (Google Scholar, 2023). The automatic nature of the data extraction and mistakes in bibliographic data can contribute to errors such as inclusion of duplicate publications, duplicate and false positive citations, incorrect author names, or incorrect titles (Anker et al., 2019; Sauvayre, 2022; Myers and Kahn, 2021; Haddaway et al., 2015). In addition, researchers can add works manually to their Google Scholar profile if needed (Google Scholar, 2023), creating a gateway to potentially claiming work that is not theirs. Upon review of the senior author’s Google Scholar profile, it was confirmed that Google Scholar counts preprints and published abstracts as distinct from their corresponding published journal articles (Maurya and Kumar, 2022; Google Scholar, 2023; Martin-Martin et al., 2018). While this does contribute to higher publication counts, many of these inclusions have few or no citations (Harzing and Alakangas, 2016). Google Scholar also indexes large volumes of non-English literature and older articles (Anker et al., 2019; Google Scholar, 2023; Martin-Martin et al., 2018), which can lead to both higher publication counts and higher citation counts. Finally, for proprietary reasons, Google Scholar does not publicly disclose which publishers it has agreements with or the algorithms and methods used in data linking (Aziz et al., 2009a).

Scopus has a Content Selection and Advisory Board; it is estimated that about 25% of content submitted to Scopus is accepted for indexing (Maurya and Kumar, 2022; Khan et al., 2013). Scopus receives its content directly from publishers, includes published journal articles, conference proceedings, preprints, and books, and is updated daily (Aziz et al., 2009a). Scopus creates profiles for researchers using automatic algorithmic data processing instead of having scholars construct their own profiles. A new scholar profile is created automatically when Scopus links two or more articles to the scholar. This can cause researchers to accumulate multiple profiles over time if they change institutions. Individuals and third parties can merge profiles by clicking the “request to merge authors” tab in the author search results view or the “edit profile” tab when viewing a specific profile.

The discovery that academic search engines differ in h-index and other bibliometrics has several important implications, including possible different interpretations of a scholar’s impact, productivity, and influence depending on the search engine used. Also, intermixing bibliometrics from multiple search engines could result in suboptimal or even unfair comparisons of scholars and programs. Since Scopus focuses on what it deems to be high-quality peer-reviewed journals, and Google Scholar indexes a broader range of documents, work outside those mainstream peer-reviewed journals might be reflected more in Google Scholar than Scopus (Aziz et al., 2009a; Harzing and Alakangas, 2016). Furthermore, if only one of the search engines counts self-citations, then that one might report higher citation counts. The user should choose an academic search engine based upon specific goals, whether it is publishing in peer-reviewed journals, minimizing self-citations, or other metrics. Some platforms might be more valuable for academic leaders who wish to compare scholars and programs, while other platforms might better serve scholars aiming to highlight their most favorable bibliometric indicators. Ultimately, lack of standardized methods to collect and count citations could lead to different interpretations regarding the value of scholars and programs. These findings add to growing concerns that relying too heavily on a single bibliometric, like h-index, is potentially problematic, and a more holistic approach should be employed.

Conclusion

In this study of translational science trainees from one NIH/CTSA hub in South Texas, four scholarly metrics (citations, citations/year, h-index, and m-quotient) were higher in Google Scholar than Scopus. Understanding and recognizing that there are differences in bibliometrics in these two resources is important, especially when conducting comparative evaluations of peers and programs. Stakeholders should be consistent in their choice of academic search engine and avoid cross-engine comparisons, as failure to do so might contribute to inequitable decision-making.

Data availability

The data for this study are available directly from the online sources: Google Scholar (https://scholar.google.com/) and Scopus (https://www.elsevier.com/products/scopus). In addition, those who are interested may obtain the analytic data file used for this data analysis from the corresponding author.

References

Google Scholar (2023) Inclusion guidelines for webmasters. https://scholar.google.com/intl/en/scholar/inclusion.html. Last accessed 9/30/23

Anker MS, Hadzibegovic S, Lena A, Haverkamp W (2019) The difference in referencing in Web of Science, Scopus, and Google Scholar. ESC Heart Fail 6:1291–1312

De Groote SL, Raszewski R (2012) Coverage of Google Scholar, Scopus, and Web of Science: a case study of the h-index in nursing. Nurs Outlook 60:391–400

Haddaway NR, Collins AM, Coughlin D, Kirk S (2015) The role of Google Scholar in evidence reviews and its applicability to grey literature searching. PLoS ONE 10:e0138237

Harzing A, Alakangas S (2016) Google Scholar, Scopus and the Web of Science: a longitudinal and cross-disciplinary comparison. Scientometrics 106:787–804

Khan NR, Thompson CJ, Taylor DR, Gabrick KS, Choudhri AF, Boop FR, Klimo Jr P (2013) Part II: Should the h-index be modified? An analysis of the m-quotient, contemporary h-index, authorship value, and impact factor. World Neurosurg 80:766–774

Koltun V, Hafner D (2021) The h-index is no longer an effective correlate of scientific reputation. PLoS ONE 16(6):e0253397

Kulkarni AV, Aziz B, Shams I, Busse JW (2009a) Comparisons of citations in Web of Science, Scopus, and Google Scholar for articles published in general medical journals. JAMA 302:1092–1096

Martin-Martin A, Orduna-Malea E, Thelwall M, Delgado Lopez-Cozar E (2018) Google Scholar, Web of Science, and Scopus: a systematic comparison of citations in 252 subject areas. J Informetrics 12:1160–1177

Maurya A, Kumar A (2022) Comparing the h-index (Hirsch index) of Nobel Laureates Scopus and Google Scholar profiles in chemistry and economics sciences. Webology 19:142–151

Mondal H, Deepak KK, Gupta M, Kumar R (2023) The h-index: understanding its predictors, significance, and criticism. J Family Med Primary Care 12(11):2531–2537

Myers BA, Kahn KL (2021) Practical publication metrics for academics. Clin Transl Sci 14:1705–1712

Sauvayre R (2022) Types of errors hiding in Google Scholar data. J Med Internet Res 24:e28354

Acknowledgements

YPC and CRF were Program Directors for the TST and TS PhD programs, supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through Grants T32 TR004544, T32 TR004545, and UM1 TR004538, during the conduct of this study. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Author information

Contributions

Study concept and design: CRF. Statistical analysis: LDD, CRF. Interpretation of data: all authors. Drafting of the manuscript: LDD, CMG, and CRF. Critical revision of the manuscript for important intellectual content: all authors. Study supervision: CRF.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This work did not require approval by an institutional review board (IRB) as all data are publicly available.

Informed Consent

This work did not require informed consent, as all data are publicly available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Davis, L.D., Gilmore, C.M., Vargus, A. et al. Comparison of h-index and other bibliometrics in Google Scholar and Scopus for articles published by translational science trainees. Humanit Soc Sci Commun 12, 153 (2025). https://doi.org/10.1057/s41599-025-04462-2
