Abstract

There is a strong tendency in prevailing discussions about artificial intelligence (AI) to focus predominantly on human-centered concerns, thereby neglecting the broader impacts of this technology. This paper presents a categorization of AI risks highlighted in public discourse, as reflected in written online media accounts, to provide a background for its primary focus: exploring the dimensions of AI threats that receive insufficient attention. Particular emphasis is placed on the neglected issues of animal welfare and the psychological impacts on humans, the latter of which surprisingly remains inadequately addressed despite the prevailing anthropocentric perspective of the public conversation. This work also considers other underexplored dangers of AI development for the environment and, hypothetically, for sentient AI. The methodology of this study is grounded in the manual selection and close thematic and discourse-analytical examination of online articles published in the aftermath of the AI surge following ChatGPT’s launch in late 2022. This qualitative approach is specifically designed to overcome the limitations of the automated, surface-level evaluations typically used in media reviews, aiming to capture insights and nuances often missed by the mechanistic, algorithm-driven methods prevalent in contemporary research. Through this detail-oriented investigation, a categorization of the dominant themes in the discourse on AI hazards was developed in order to identify its overlooked aspects. Building on this evaluation, the paper argues for expanding the risk assessment frameworks of public thinking toward a morally more inclusive approach. It calls for a more comprehensive acknowledgment of the potential harm that the progress of AI technology may cause to non-human animals, the environment, and, more theoretically, artificial agents that might attain sentience.
Furthermore, it calls for a more balanced allocation of attention among prospective threats to humans, prioritizing psychological consequences, thereby offering a more sophisticated and effective strategy for tackling the diverse spectrum of perils presented by AI.

Introduction

This paper examines the ongoing public discourse on the risks associated with AI, as reflected in written online media coverage. Specifically, a classification framework of AI threats consisting of 37 + 1 categories is introduced, derived from a thematic and discourse analysis of how these dangers are portrayed in online media articles. The key purpose of this study is to reveal that the current discussion surrounding AI threats overlooks multiple critical areas: the psychological effects of AI on humans, the dangers posed to non-human animals (referred to simply as animals), the environment, and artificial agents that might, as the technology develops, evolve into self-aware and/or sentient entities.

The structure of this paper is as follows. Following this introductory section, which outlines the fundamental ideas elaborated later, the second section presents the theoretical background of the investigation, focusing on the thematic and discourse analysis of written online media coverage of AI risks. Building on that analysis, the third section outlines the categorization of these threats. The fourth section evaluates the findings and conveys the principal aim of this study: identifying blind spots within the public discourse and highlighting the need to broaden its focus beyond exclusively human concerns. Within the domain of anthropocentric perspectives, it is argued that attention must be redirected toward specific elements, particularly psychological implications. Additionally, it is maintained that a more inclusive approach to risk assessment is crucial, one that considers the interests of non-human entities, foremost among them a vast range of animals, and recognizes them as subjects of moral concern due to their capacity for suffering. The concluding fifth section summarizes the findings and proposes a significant shift in how AI perils are discussed, advocating for a more comprehensive framework that thoroughly addresses the diverse threats posed by AI advancements.

Significance and challenges of AI risk assessment

Many hold the view that the survival of living organisms, as well as the quality of life the Earth offers to the beings inhabiting it, is of fundamental importance. It therefore appears essential to consider, and seek to prevent, any circumstances that could threaten the continued existence of the natural world, diminish living conditions on our planet, or cause suffering to any sentient entity. This holds true even though a significant portion of society seems to underestimate the cognitive abilities of animals relative to what scientific evidence indicates (Leach et al. 2023). Nevertheless, the recognition of sentience across a broad spectrum of animals is increasingly reflected in legal and cultural frameworks (Treaty of Lisbon 2007, 49; Animal Welfare Sentience Act 2022; Andrews et al. 2024).

These apprehensions arise in relation to every novel, highly potent technology invented by humanity. However, with advancements like AI, which has immense potential to become extremely powerful and versatile and is already altering the way we live (Salvi and Singh 2023, pp. 5441–43), these concerns are particularly well-founded and relevant.

In order to effectively address the potential risks posed by AI, it is first necessary to ascertain the nature of the various dangers we might encounter, a task that is inherently challenging. Merely considering the expression ‘superintelligent AI,’ which denotes an artificial agent whose cognitive capabilities considerably surpass human intellect, can quickly lead to the conclusion that attempts to foresee the approaching adversities are futile. With the mental faculties of Homo sapiens, it is, by the very meaning of the term superintelligent, impossible to anticipate all the actions such an entity might undertake (Bostrom 2014, p. 52). Nevertheless, given the immense stakes, efforts must be made to predict the scenarios that might unfold.

Some hazards in this conversation are notably easier to forecast. Besides frequent discussions of employment shifts resulting from a new wave of automation and its possible consequences, both the scientific literature and everyday narratives seem to place excessive emphasis on scenarios in which artificial agents might annihilate or enslave humanity (Turchin and Denkenberger 2020, pp. 147–48). Clearly, these topics draw greater attention than their more down-to-earth counterparts. Be that as it may, it appears evident that additional steps should be taken to reveal the less severe but intuitively more realistic and, fortunately, potentially also more predictable outcomes of AI technology.

Need for expanding and refocusing the AI risk framework

There seems to be a middle ground between the far-fetched, extremely severe, even catastrophic scenarios threatening the very existence of human civilization and the rather obvious fears, such as automation-driven job losses. A much stronger emphasis must be put on the dangers that fall into this zone of insufficient focus across both academic research and public opinion. The issues of psychological damage inflicted on humans, along with the technology’s sustainability concerns, undoubtedly belong to this area. Yet, an even more alarming oversight is the almost complete neglect of the suffering caused to animals by AI, which demands urgent attention.

Various and significant risks are posed to the human psyche, including but not limited to the erosion of mental health and emotional well-being through manipulative relationships and deceptive content, digital addiction, social isolation, and diminished human functions (Shanmugasundaram and Tamilarasu 2023; Ienca 2023). In the case of animals, the most pressing concerns arise from AI-driven gains in factory farming efficiency, which could exacerbate already appalling conditions for animals, alongside algorithmic bias against animals that may entrench their exploitation in the social fabric (Singer and Tse 2023, pp. 541–547). Regarding ecological impact and sustainability, the associated perils include energy consumption and, in particular, the greenhouse gas emissions it induces, the technology’s water footprint, and the demand for specific materials such as lithium or cobalt, to name a few, across the life cycle of an AI system (Ligozat et al. 2022).

This study seeks to draw attention to insufficiently addressed or utterly disregarded threats and stresses the importance of prioritizing the most realistic among them. That said, with each new development we witness, and are likely to see in the near future, it becomes increasingly challenging to determine which ideas conceal genuine threats and which should still be regarded as unfounded speculation. Therefore, even possibilities that strike one as extremely unlikely must, given the tremendous risks they carry, be taken into account to some degree, even while closer attention is paid to more probable eventualities. For instance, Bostrom argues that the potential amount and severity of suffering that artificial agents might endure in the future is so monumental that it would far exceed the aggregated agony of all biological organisms that have ever inhabited our planet (Bostrom 2014, pp. 101–103). Despite the highly speculative nature and extremely low likelihood of these scenarios, the immense stakes of what are referred to as ‘astronomical suffering’ and ‘mind crime’, and especially of the two combined (Bostrom 2014, p. 152; Gloor and Althaus 2016; Sotala and Gloor 2017), provide valid grounds for their inclusion in this analysis. Nonetheless, this work argues that greater focus should be directed toward developments affecting human psychology, animals, and the environment.

Exploring the ongoing discourse from a different angle, it must be pointed out that both the public discussion and the ethical debate surrounding the dangers of AI are predominantly human-centered, in the sense that inquiries and reports on the topic tend to focus exclusively on the technology’s impact on humans (Owe and Baum 2021; Rigley et al. 2023, pp. 844–848). One flaw of this perspective is that it overlooks the fact that humankind constitutes only a tiny proportion of the total animal population on Earth, not to mention other forms of biological life.

Risk assessment must factor in the interests of entities capable of experiencing subjective sensations such as joy and suffering. For a substantial segment of the animal kingdom, the capacity for pain perception is clearly established, encompassing mammals, birds (Low 2012), and, arguably, fish (Balcombe 2017, pp. 71–85; Braithwaite 2010). This investigation also extends its scope beyond biological beings to other dimensions. Specifically, it addresses, though with less emphasis, the speculative issue of artificial agents that might reach a state of sentience (James and Scott 2008). Adopting Bentham’s stance, sentience is considered a necessary and sufficient condition for an agent to have interests and, thereby, also a sufficient condition for possessing some kind of moral status (Bortolotti et al. 2013). Additionally, the concept of the natural environment as a moral subject, a notion that aligns with several ethical frameworks, will be addressed (Palmer, McShane, and Sandler 2014).

Analysis of the media coverage of AI risks

This section will introduce the methodology for identifying focal points and blind spots—with the latter serving as the central focus of this study—in the public perception of AI risks, examined through the online media that both shapes and represents public opinion.

Written online media as public reflection

The press serves as a paradoxical medium, with journalists both representing and actively shaping public opinion and collective societal attitudes (McLuhan 1994, p. 213).

Since, for the general public, one of the primary sources of information today is online news coverage, the issues addressed in digital media strongly shape people’s opinions and bring certain areas into focus (Zhou and Moy 2007, pp. 81–84; Shrum 2017, pp. 9–10; Sun et al. 2020, p. 1). The topic of AI is no exception to this influence (Chuan et al. 2019, p. 339).

Accordingly, through their cultural influence, journalists affect the trajectory along which these tendencies unfold, in this particular instance the integration of AI into the fabric of society. The relationship is two-sided, however, in the sense that journalists also represent society through their personal news decisions (Patterson and Donsbach 1996); the questions they discuss therefore reflect the concerns and topics of everyday people.

Complementing this, Neri and Cozman (2020) demonstrated that experts in the spotlight often drive AI risk perception, playing pivotal roles in shaping and moderating the discourse, particularly through social media platforms. Nevertheless, this paper does not delve deeply into this phenomenon or into the complex dynamics between laypeople and journalists. Instead, in line with the assertions of the previous paragraph, it is assumed that columnists either represent broader societal views or direct public attention to prevalent issues and, as a result, their narratives reflect or converge on the most frequently discussed and most captivating or unsettling matters among the general public. Accordingly, this paper starts from the premise that an exploration of written online media coverage will yield meaningful insights into public opinion. This analysis extends beyond established digital news outlets to include a variety of online media sources, as non-traditional media forums serve a comparable function and follow a similar format.

Limitations of prior research on AI risks in media

Research has already explored how AI technology and the threats it poses are portrayed in the news media, notably in the studies by Chuan et al. (2019), Sun et al. (2020), Nguyen and Hekman (2022), and Nguyen (2023). However, these studies covered longer timeframes that preceded the recent AI surge, which was undoubtedly triggered by the launch of the large language model (LLM) ChatGPT at the end of 2022, significantly enhancing public awareness of generative AI technologies (Waters 2023; Roe and Perkins 2023, p. 2). Accordingly, these ‘pre-ChatGPT’ media analyses could not capture the most recent perspectives in the discourse, simply because of the timeframe of their investigation. Furthermore, while those authors’ findings on the prevalence of topics such as bias, surveillance, job losses, and cyberattacks in public dialog are consistent with my own results, my greater emphasis on the dimension of risks, and the consequently more comprehensive exploration of AI threats, provide deeper and novel insights into the matter, which are also more current due to the later date range.

It is also important to acknowledge the research conducted by Xian et al. (2024), which explored news articles from a timeframe partly overlapping with the scope of this study. However, their attention to the dangers surrounding AI was comparatively limited; this paper therefore offers a more detailed understanding of this aspect.

Overview of the methodology

A summary of the procedure is provided in Fig. 1 to give a quick and transparent overview of the method used.

In the first phase, articles were collected by conducting an online search using predefined keywords related to AI and risks. A substantial number of articles were found, but only those presenting general discussions of AI threats were selected, and those focusing on specific aspects were excluded.

As a typical example, the publication “15 AI risks businesses must confront and how to address them” (Pratt 2024) was not selected due to its narrow focus on business-related concerns, while “The 15 Biggest Risks Of Artificial Intelligence” (Marr 2023) was chosen for its broader perspective.

This process yielded 56 online media articles published between November 2022 and October 2024, all offering an overview of the dangers of AI.

Preliminary experiments were carried out to assess whether automated text analysis could identify the risks addressed by the authors in the articles and then structure them into categories. However, these approaches failed to yield the expected results in terms of comprehensiveness and of precise sorting of the perils along the lines of the narratives presented. Consequently, a manual methodology was adopted for further investigation.

A discourse analytical approach was employed to explore the nuanced, context-driven nature of language in texts. This approach extends beyond the surface meaning of words, considers the broader context of verbal expression, and focuses on uncovering the underlying dynamics that shape the authors’ communication. Discourse here refers to the construction of meaning beyond individual statements, addressing the implicit structures and influences behind them, including the authors’ motives and background. Guided by discourse analytical interpretation, each article was thoroughly read and critically reviewed, and all mentions of risks or harms, along with the section headings, were systematically compiled into two partially overlapping lists. This yielded 1971 excerpts, consisting primarily of quotations, which provided an extensive catalog of the perils covered in online media publications, as well as hundreds of section titles.

As an illustration, the following section headings were extracted from the article “What Are the Dangers of AI?” (Reiff 2023): Deepfakes and Misinformation; Privacy; Job Loss; Bias, Discrimination, and the Issue of “Techno-Solutionism”; Financial Volatility; The Singularity. Excerpts such as ‘AI can be used to create and to widely share material that is incorrect’ and ‘Deepfakes are an emerging concern’ were also extracted from the same article.

Building on the discourse analytical framing of the data, thematic analysis—integrating elements of framework analysis—was used to identify patterns in the excerpts. The process began with concept-driven coding. That is, from the gathered section headings, an initial set of danger groups (codes) was aggregated to include the most representative ones. As a first step, for each article, the excerpts stemming from that publication were assigned to these risk classes.

For instance, at the outset, a threat category labeled ‘Employment’ was established, and the following excerpts from the article “What Exactly Are the Dangers Posed by A.I.?” (Metz 2023) were assigned to it: ‘Job Loss’; ‘new A.I. could be job killers’; ‘they could replace some workers’. To provide another example, through discourse-oriented interpretation, the excerpt “AI recruitment tool … preferred male applicants over females” (Kaur 2024) was initially framed under the label ‘Bias and Discrimination’. This classification process ensured that categories did not merely reflect explicit labels used in the articles but also captured underlying meanings and evolving discursive constructions.
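To make the bookkeeping of this first coding pass concrete, the following Python sketch models a codebook as a mapping from code labels to the excerpts assigned to them. It is a hypothetical illustration, not the tool used in this study: the `Excerpt` class and the `assign` function are invented names, and the assignment itself remains a manual interpretive judgment, represented here simply by passing the chosen code explicitly. The excerpts are the ones quoted above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Excerpt:
    """A quoted passage and the article it was extracted from."""
    text: str
    source: str


# Hypothetical codebook: each code label maps to the excerpts a coder assigned to it.
# Initial (concept-driven) codes would come from the articles' section headings.
codebook: dict[str, list[Excerpt]] = defaultdict(list)


def assign(code: str, excerpt: Excerpt) -> None:
    """Record a coder's manual decision that `excerpt` belongs under `code`."""
    codebook[code].append(excerpt)


# Excerpts quoted in the text above.
for text in ["Job Loss", "new A.I. could be job killers", "they could replace some workers"]:
    assign("Employment", Excerpt(text, source="Metz 2023"))

assign(
    "Bias and Discrimination",
    Excerpt("AI recruitment tool … preferred male applicants over females", source="Kaur 2024"),
)
```

Keeping the source alongside each excerpt reflects the point made above that excerpts were repeatedly reread in the context of their original articles.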

Afterward, over an extended series of iterative cycles, the codes were refined into categories that captured the media narratives increasingly accurately. This process involved splitting, combining, and adjusting the existing groups and, through the application of thematic coding, reassigning the excerpts to the newly created classes at each stage, following repeated readings of the quotations and their context within the original articles.

For example, over the course of roughly a dozen iterations, the original ‘Legal’ category was ultimately divided, with most of its elements sorted into the subsets ‘Accountability and Liability’ and ‘Intellectual Property and Copyright.’
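The split-and-reassign step can likewise be sketched in Python. In this hypothetical example, `split_category` removes a parent code and redistributes its excerpts through a caller-supplied decision function, which stands in for the coder's rereading of each excerpt in context; excerpts that fit none of the new subsets are returned for review in the next iteration. The excerpt strings are placeholders invented for illustration, not data from the study, and the keyword rule inside `decide` exists only to keep the example runnable.

```python
from typing import Callable, Optional


def split_category(
    codebook: dict[str, list[str]],
    parent: str,
    decide: Callable[[str], Optional[str]],
) -> list[str]:
    """Remove `parent` from `codebook` and reassign each of its excerpts.

    `decide` returns the new code for an excerpt, or None when the excerpt
    fits none of the new subsets; such excerpts are returned so that they
    can be revisited in the next coding iteration.
    """
    unassigned: list[str] = []
    for excerpt in codebook.pop(parent):
        new_code = decide(excerpt)
        if new_code is None:
            unassigned.append(excerpt)
        else:
            codebook.setdefault(new_code, []).append(excerpt)
    return unassigned


# Placeholder excerpts, invented for illustration only.
codebook = {
    "Legal": [
        "who is liable when an AI system causes harm",
        "models trained on copyrighted works",
        "an excerpt that fits neither subset",
    ]
}


def decide(excerpt: str) -> Optional[str]:
    # Stand-in for the coder's judgment; keyword matching is used only to keep
    # the example runnable and is not how excerpts were coded in the study.
    if "liable" in excerpt:
        return "Accountability and Liability"
    if "copyright" in excerpt:
        return "Intellectual Property and Copyright"
    return None


leftover = split_category(codebook, "Legal", decide)
```

Returning the unassigned excerpts, rather than silently dropping them, mirrors the description above: only most of the ‘Legal’ elements found a place in the two new subsets.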

This process eventually resulted in a multi-level hierarchical classification system consisting of 37 thematic clusters, effectively covering the media narratives to which all the excerpts were assigned.

The following subsections will present a detailed account of the methodology.

Selection of media articles for review

A systematic online search was conducted using predefined keywords related to ‘AI’ and ‘risks’ to identify relevant articles for analysis. This approach also mirrors one of the primary ways in which the mainstream audience seeks information on the topic online, thereby enabling the identification of articles likely to align with those encountered by the general public.

Publications were not limited to online news portals; non-conventional media platforms, including opinion sites, blogs, networking sites, and academic and institutional websites, were also considered, since all the chosen articles serve a purpose corresponding to, and share a format comparable with, those published by traditional online news outlets. For a lay audience searching online for broad-perspective publications on AI risks, the selected pieces from these sources are just as accessible and as likely to be found as those on established digital news channels.

Only online media articles published from November 2022 onward were considered, with the latest examined piece dating from October 2024. This interval is highly relevant because, during this period, the hype around AI reached what may well have been its highest peak in years, following the public launch of the LLM ChatGPT in November 2022, which revealed the capabilities of the technology to the global public, transformed public attitudes toward AI tools, and initiated a new phase that calls for fresh insights. Undoubtedly, the current limitations of generative AI were also exposed, but the frenzy during the investigated period suggests that the technology’s potential surpassed the prior expectations of mainstream society, potentially turning some earlier speculative fears into more tangible realities.

The candidate articles were thoroughly reviewed, and those discussing AI hazards in a broad context, rather than concentrating on a single specific aspect, were selected. This decision was grounded in the assumption, neither supported nor contradicted by the literature, that these are the pieces internet readers with a general curiosity about the topic are more likely to discover and read than domain-oriented ones (such as those centering on cyber risks or threats to businesses). Thus, they are regarded as more accurately reflecting what the average reader is likely to have encountered.

Given the manually curated approach employed, evaluating the vast number of articles addressing specific aspects of AI risk was not feasible, as a comprehensive, non-automated analysis of those publications would have required an unmanageable amount of effort. Fortunately, the number of articles discussing AI dangers in broad terms, gathered through the online search process, fell within a range that was manageable with the applied methodology.

The exclusive reliance on general-interest articles limits the scope of this study, while topic-specific online media pieces could serve as a valuable source for further research. Despite this, it is assumed that these comprehensive publications provide a clear overview of the most important themes in the public discussion.

Comparison of automated and manual text analysis

A manual analysis was deliberately employed: although it could only cover a smaller selection of articles, it enabled a deeper and more nuanced exploration than automated large-scale dataset analyses allow. While the methodological approach of the investigation inherently limits the number of articles that can be examined, algorithmic assessment of a large number of publications reduces the accuracy of the evaluation, overlooks critical details, and yields less nuanced findings, which was unacceptable for the task at hand: the categorization of AI risks based on the narratives in which they are presented in online publications.

Research on automated text analysis (Grimmer and Stewart 2013, pp. 268–271; Mahrt and Scharkow 2013, pp. 25–30; Zamith and Lewis 2015, p. 315; Günther and Quandt 2016, p. 86) suggests that while algorithmic evaluations might enhance efficiency and prove useful in many cases, they have considerable limitations. Mahrt and Scharkow (2013, p. 29) also indicate that, in some cases, the analysis of a smaller dataset can yield more insightful conclusions than that of a larger one.

Primarily due to the complexity and indeterminacy of human language (Grimmer and Stewart 2013, p. 268; Humphreys and Wang 2018, p. 1277), automated methods might lead to outcomes that are unreliable (Zamith and Lewis 2015, p. 315), practically unverifiable (Grimmer and Stewart 2013, p. 271), prone to misinterpretation (Mahrt and Scharkow 2013, p. 29), or simply insufficient to perform specific tasks (Günther and Quandt 2016, p. 77). These concerns must be borne in mind even when such methods offer extensive sample sizes (Günther and Quandt 2016, p. 86). Consequently, automated techniques cannot replace the layered understanding gained from the close reading of texts and careful reflection, which remain essential for scholarly accuracy (Grimmer and Stewart 2013, pp. 268, 270; Günther and Quandt 2016, p. 86; Lind and Meltzer 2021, p. 934).

Programmed text processing generally struggles with deeper contextual and cultural meanings and connotations, including sarcasm, metaphors, as well as complex and figurative rhetorical arguments (Humphreys and Wang 2018, p. 1277). These mechanisms also often fail to recognize narrative patterns and connections between related concepts (Ceran et al. 2015, p. 942).

Furthermore, automated content analysis is often less effective at determining positive or negative tone (Conway 2006, p. 196), which was crucial when dealing with delicately formulated arguments in the selected publications. This distinction was essential for separating genuine risks from those mentioned only as contrasts, particularly since numerous articles emphasized both AI’s benefits and its perils. Moreover, when pieces from news media platforms are used as inputs to a model, distinguishing relevant text from advertisements poses a significant challenge for automated techniques (Günther and Quandt 2016, p. 77).

The limitations of automated techniques were also indicated by the failure of preliminary experiments with LLM-aided processing, applied both to entire news articles and to manually curated excerpts, owing to the inability to capture contextual significance and the overlap of terms describing distinct threats.

Even with this compromise between the breadth of publications and the depth of analysis, the number of hand-selected articles totaled 56, and the overall number of manually chosen and repeatedly evaluated excerpts reached nearly 2000. This dataset was considered to be sufficient to identify narrative patterns in the representation of AI hazards, that is, to identify risk categories as they thematically appear in the online media.

Thematic and discourse analysis of media articles

The thematic and discourse analysis of the 56 finally selected online media articles was conducted to identify the various hazards they discuss. In total, 1971 excerpts were extracted from the publications, comprising mostly short quotations and, in some instances, citations. These items were then systematically categorized into 37 + 1 thematic clusters. This categorization facilitated the identification of AI threat areas frequently mentioned in online media outlets, those seldom referenced, and, crucially, areas that may be overlooked in the media discourse.
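The identification of overlooked areas in this study rests on interpretation and ethical argument, not on counting alone; the Python sketch below illustrates only the tallying side of sorting categories into frequently referenced, seldom referenced, and absent ones. It assumes a list of (category, excerpt) pairs, a list of themes that a broader risk framework would be expected to cover, and an arbitrary cut-off `rare_threshold`; the function name, the threshold, and the toy input (including the ‘Animal Welfare’ theme) are hypothetical and imply no counts from this study.

```python
from collections import Counter
from typing import Iterable


def profile_categories(
    coded: Iterable[tuple[str, str]],
    expected: Iterable[str],
    rare_threshold: int,
) -> dict[str, list[str]]:
    """Group categories by how often excerpts were coded under them.

    `coded` holds (category, excerpt) pairs. `expected` lists themes that a
    broader risk framework would include; any of them with no coded excerpt
    is reported as absent. `rare_threshold` is an assumed cut-off.
    """
    counts = Counter(category for category, _ in coded)
    return {
        "frequent": sorted(c for c, n in counts.items() if n >= rare_threshold),
        "seldom": sorted(c for c, n in counts.items() if n < rare_threshold),
        "absent": sorted(c for c in set(expected) if c not in counts),
    }


# Toy input for illustration only; these are not counts from this study.
coded = [
    ("Employment", "Job Loss"),
    ("Employment", "they could replace some workers"),
    ("Bias and Discrimination", "AI recruitment tool … preferred male applicants over females"),
]
result = profile_categories(
    coded,
    expected=["Employment", "Bias and Discrimination", "Animal Welfare"],
    rare_threshold=2,
)
print(result)
# {'frequent': ['Employment'], 'seldom': ['Bias and Discrimination'], 'absent': ['Animal Welfare']}
```

The ‘absent’ group only becomes meaningful once the list of expected themes is justified on independent grounds, which is the role the morally inclusive framework plays in this paper.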

The development of the classification framework was an iterative procedure involving repeated reevaluation of excerpts within their full article context and reconsideration of previously proposed categories. This process ultimately brought the categories to their most detailed resolution, at which point they could no longer be further disentangled into distinct narratives. This is not to say that some categories could not be differentiated slightly further; doing so, however, would fail to represent the AI danger narratives as they appear in the media.

As already indicated, in contrast to the quantitative methods employed in the news media studies by Chuan et al. (2019), Nguyen and Hekman (2022), Nguyen (2023), and Xian et al. (2024), this study concentrated on a limited number of articles, purposefully chosen to address AI risks directly while remaining general-interest pieces not confined to specialized areas. Moreover, diverging from those approaches, a manual qualitative process, namely the application of thematic and discourse analysis, was chosen instead of statistical and sampling methods, topic modeling, automated content analysis, or other available procedures. This decision was motivated by the belief that automated content analysis lacks the capability to discern subtle nuances and fine differences in interpretation, as elaborated in the preceding subsection.

The study’s core methodology was rooted in thematic analysis, as it primarily focused on identifying patterns in media narratives rather than developing new theoretical constructs. Discourse analysis was applied mainly in framing the media narratives, guiding the interpretation of these patterns within their broader media and societal context. This means that thematic coding was not merely an inductive categorization process but also guided by an awareness of the rhetorical and discursive structures shaping media AI risk narratives. Additionally, elements of grounded theory contributed to the inductive generation and iterative refinement of thematic codes.

Thematic coding: integrating framework analysis and elements from grounded theory

Since thematic analysis focuses on uncovering and organizing patterns in qualitative data, it provided a structured approach for examining the ways AI risks are addressed in the selected media articles.

Thematic coding (Gibbs 2007, p. 38) was conducted by interpreting each relevant excerpt—whether discussing risks implicitly or explicitly—to extract its contextual meaning, followed by assigning a specific code that linked the passage to the identified idea.

The first set of codes (codebook) emerged after the initial reading of the corpus, aggregating risk categories from the sample’s online media articles based on their section headings, functioning as predefined indices—a method known as concept-driven coding. The strategy of compiling a list of thematic ideas and then applying these codes to the text can be described as framework analysis within thematic analysis (Ritchie and Lewis 2003, pp. 220–24; Gibbs 2007, p. 44).

The process of reviewing excerpts within the broader context of their respective articles and systematically indexing them was conducted iteratively. Whenever borderline cases between closely related groups required a decision about which label an excerpt should fall under, the definitions of the codes were revised accordingly (Gibbs 2007, p. 40).

The process of tagging excerpts with thematic codes extended beyond mere description. It involved integrating these codes into broader categories, which were sometimes later refined or subdivided, and developing analytic codes. Unlike descriptive codes, which closely reflect the authors’ explicit expressions, analytic codes provide a deeper understanding by interpreting how the author perceives an issue, drawing on implicit meanings within the text (Gibbs 2007, pp. 42–43).

While the thematic coding applied here did not fully adopt a grounded theory approach, it incorporated elements of it. Specifically, emergent coding and recursive category refinement were integrated, as the process aimed to generate insights inductively and cyclically, drawing directly from the data rather than relying on predefined theories; after all, the purpose of the investigation was to identify the narratives through which AI risks are presented in online media. Because both the original and the iteratively revised codebooks were anchored entirely in the investigated corpus, without drawing on any pre-existing scholarly frameworks on AI risks, all ideas reflected in the classification system are ‘grounded’ in the data, emerging from and supported by it (Gibbs 2007, pp. 49–50).

The comparison of this derived structure with existing classification systems and taxonomies of AI-related challenges in the literature was conducted only later, as will be presented in sections “Taxonomy and classification of AI risks in the literature” and “Comparison of the proposed categorization with existing frameworks”.

Discourse analytical approach: contextual framing and meaning construction

Given that risk communication is inherently language-centered, discourse analysis provided a framework for examining how AI risks are framed and portrayed within the media landscape (Sarangi and Candlin 2003).

The discourse analysis approach examines texts beyond their surface meaning: it considers the broader context of verbal expression, seeks to uncover underlying motives and background, and takes extralinguistic elements into account (Sarangi and Candlin 2003, p. 116). In the case of written online media, these elements might include contextual information, intertextuality, paratextual references, and visual features.

This was achieved by systematically analyzing recurring discursive patterns in AI risk narratives, examining how different risks were framed, and identifying both implicit and explicit rhetorical strategies applied by the authors to construct these danger portrayals. Additionally, intertextual references, such as links to broader societal themes—including automation, governance, and ethical responsibility—were considered to contextualize the positioning of AI threats within public discourse.

In examining individual online media articles discussing AI risks, a specific criterion was applied to identify mentions of risk: the publication had to treat the issue as an actual threat, rather than discussing it neutrally without framing it as a hazard or dismissing it as a danger not worth considering. References to an issue that did not recognize its significance as a danger did not qualify as valid mentions in this study.

Gee (2014, pp. 80–82) argues that language must be understood in context, as words derive meaning from their application rather than their mere presence. His concept of situated meanings—assembled ‘on the spot’ based on contextual cues—aligns with the focus employed in this study on examining how terms are used to convey significance.

Because the same keyword can correspond to fundamentally distinct issues, the analysis of mentions in this study went beyond identifying the presence of terms within an article. Instead, the context in which each keyword was used was closely examined to determine whether a topic was meaningfully addressed and in what sense the term was applied.

As Gee (2014, pp. 82–85) highlights, language relies on contextual references to convey meaning, reflecting and constructing reality. Therefore, examining terms within their specific contexts is crucial to uncover their significance, focusing on how language shapes meaning in discourse.

Certain matters are only implied in the articles rather than explicitly stated, as illustrated by the subtle references to existential threats in several cases. For instance, discourse analysis was used to examine how discussions might indirectly suggest that AI has the potential for consequences comparable to nuclear disasters, subtly hinting at the possibility of an apocalypse. Such implicit references were nevertheless counted as valid mentions of the relevant categories in the analysis.

Gee’s (2014, pp. 80–82) concept of situated meanings provides a valuable framework for understanding how media articles convey significant implications, such as existential threats posed by AI, through indirect references. When articles compare AI risks to nuclear disasters or allude to an apocalypse, they offer contextual cues that trigger readers to construct meanings based on their prior experiences and shared cultural knowledge, in this case, for instance, our historical understanding of nuclear bombs. Such indirect suggestions, though not explicit, evoke associations with large-scale devastation and existential risks. This mutual construction of meaning between the text and the reader justifies counting these indirect references as valid mentions of dangers.

Despite its limited sample size, this work was characterized by careful, individual attention both in selecting the articles for investigation and in processing the chosen journalistic pieces, as opposed to efficiency-driven, shallow automated analyses. This approach is intended to add value to the discourse on AI risks by capturing nuances that such automated methods tend to miss.

Taxonomy and classification of AI risks in the literature

In the following, a review of existing taxonomies and frameworks for categorizing AI threats is provided, offering a concise overview of scholarly approaches to classification. However, unlike these systems, which mainly aim to identify all potential dangers systematically, this work takes a different approach. Following the principles of thematic and discourse analysis as described above, the categorization was developed directly from the narratives emerging in articles, ensuring that the framework created reflects the public discourse on AI risks as it appears in the online media.

Numerous studies have presented comprehensive categorizations of AI risks using diverse approaches, either with the explicit goal of developing a classification system or as an integral part of the methodology for addressing the relevant concerns. While not all of the referenced works prioritized the question of risks, they all included it in their discussions in one form or another.

Some authors focused on identifying prevalent topics, or specifically the types of hazards, outlined in AI guidelines (Jia and Zhang 2022; appliedAI Institute for Europe 2023) or discussed in academic literature (Clarke and Whittlestone 2022; Hagendorff 2024), while others started from theoretical frameworks aimed at dissecting and addressing the complex perils AI presents (Yampolskiy 2016; Tegmark 2018; Cheatham et al. 2019; Russell and Norvig 2020, pp. 1037–57; Schopmans 2022; Ambartsoumean and Roman 2023; Kilian et al. 2023; Federspiel et al. 2023; Lin 2024).

Additionally, efforts were made to provide solutions to these identified threats, ranging from more abstract perspectives to practical guidelines (Turchin et al. 2019; Bécue et al. 2021; Bommasani et al. 2021; Kaminski 2022; Hendrycks and Mazeika 2022; Weidinger et al. 2022; Hendrycks et al. 2023; Shelby et al. 2023; Crabtree et al. 2024), not to mention the categorization outlined in the EU AI Act itself (European Commission 2021). In connection with regulation, and adopting the viewpoint of industry and government, Zeng et al. (2024) provide a unified taxonomy rooted in government regulations and company policies.

Beyond explicit attempts to categorize AI risks and deliver taxonomies, some works cluster risks indirectly by addressing concerns about AI’s impact from a specific angle, such as a broad societal context (Acemoglu 2021) or a narrower business-operations context (Sharma 2024).

A selection of existing assessment frameworks addressing AI risks is presented below to provide a basis for comparison with the framework developed in this study. It must be emphasized again that the classification proposed in the subsequent section was created through thematic analysis integrating elements of grounded theory: the categories did not emerge from a literature review but from the analysis of articles and excerpts, representing the narratives as they appear in online media articles.

To promote diversity and enable meaningful comparison, the first categorization system explored below bears little resemblance to the one proposed in this paper and offers a contrasting perspective, while the others exhibit varying degrees of similarity. This allows for a more reflective analysis across frameworks that differ fundamentally as well as frameworks that align in many respects.

Crabtree, McGarry, and Urquhart (2024) classify AI risks by separating them into four domains and/or systemic levels of interaction where they must be addressed: risks in the innovation environment (e.g., novelty, limited understanding of developers, regulatory ambiguity), the internal operating environment, that is, the computational system (e.g., model boundary overreach, system integration issues), the external operating environment, that is, the human system (e.g., user-induced flaws, demands prioritizing automation over accuracy leading to malfunctions), and the regulatory environment (e.g., frequent changes, expertise gaps, compliance impact on development efficiency). This framework reflects the diverse sources and stakeholders of risks throughout AI development, deployment, and regulation, and also presents how “iterable epistopics” provide practical insights into risk management.

Similar to this paper, Thomas et al. (2024) primarily focus on identifying and addressing the “hidden” harms associated with AI, while also creating a classification. However, whereas the work presented here centers on media risk narratives, Thomas et al. explore dangers and detect neglected harms in mainstream AI risk frameworks, including environmental harms, which they highlight as particularly overlooked in risk assessment approaches. Thomas et al. map AI risks across two intersecting dimensions: the scale of harm (individual, collective, societal) and AI supply chain stages (resource extraction, resource processing, deployment). The former dimension, although described with different terminology, captures the magnitude of potential impact, aligning with the framework proposed in the following section of this paper.

Finally, the most comprehensive framework of AI risks is proposed by Slattery et al. (2024). Their AI Risk Repository consolidates 777 risks derived from 43 taxonomies into a publicly accessible and modifiable database. Built through systematic reviews and expert consultations, the repository employs a best-fit framework synthesis to classify risks using two taxonomies: a Causal Taxonomy, which categorizes risks by entity (human or AI), intentionality (intentional or unintentional), and timing (pre- or post-deployment), and a Domain Taxonomy, which organizes risks into seven domains (Discrimination & toxicity; Privacy & security; Misinformation; Malicious actors & misuse; Human-computer interaction; Socioeconomic & environmental; and AI system safety, failures, & limitations), further divided into 23 subdomains. Although the framework offers a robust classification system, it does not explicitly include the impact, magnitude of potential harms, or probability of risks as formal dimensions. Nonetheless, the authors acknowledge the significance of these factors, particularly for policymaking, and suggest that integrating them could be a valuable direction for the future development of their system.

In contrast to the above-enumerated approaches, this study seeks to understand AI risks through the lens of public opinion in several respects, similar to the work presented by Chuan et al. (2019), Nguyen and Hekman (2022), Nguyen (2023), and Xian et al. (2024). However, my investigation concentrates more heavily on dangers and spans a later and exceptionally intense phase of AI development, namely the ‘post-ChatGPT era,’ characterized by widespread adoption and application of large language models (LLMs).

Categorization of AI risks

This section, building on the analysis presented in the previous section, introduces current public perspectives on the dangers associated with AI, as reflected in media portrayals.

Given that a significant proportion of the AI risks represented in the online articles are deeply interconnected, the goal was to disentangle them as much as possible according to the narratives in which they are typically discussed in media discourse. Another aim was to incorporate them into a multilevel hierarchical structure based on the various angles and layers presented in the articles.

The core themes, which form the first and top level of the hierarchy and are denoted by Roman numerals, are explored together with their subgroups in the following subsections. Because of the diverse spectrum of societal risks, the third core theme, III. Societal risks, was broken down into four focus areas marked by capital Latin letters; this second hierarchical level is therefore conditional, appearing only within that theme. At the third and lowest level of the hierarchy, the specific risk categories are identified using Arabic numerals. It must also be noted that the expression ‘core theme’ does not fit the fifth group on the first hierarchical level, V. Undervalued risks, since it did not emerge ‘organically’ as a theme addressed in the articles but was ‘artificially’ constructed to draw attention to certain undiscussed or under-discussed dangers of AI.
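The three-level labelling scheme, with its conditional second level, can be sketched as a small validator that checks whether a code label is well formed. This is a hypothetical illustration, not a tool used in the study: the slash-separated format is inferred from labels such as V/3, while the form of labels inside III (assumed here to be III/B/1), the letter assignment of the focus areas, and the name `parse_code` are assumptions. The rule that IV carries no specific categories follows the statement in this section that existential risks have no subcategories.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical label format: core theme (Roman numeral), an optional focus
# area (capital letter, only under III), and an optional specific category
# (Arabic numeral), separated by slashes, e.g. "I/2", "III/B/1", "V/3".
_LABEL = re.compile(r"^(I|II|III|IV|V)(?:/([A-Z]))?(?:/(\d+))?$")

SOCIETAL_CORE = "III"               # only theme with the second (focus-area) level
FOCUS_AREAS = {"A", "B", "C", "D"}  # four focus areas; letter assignment assumed
NO_SUBCATEGORIES = {"IV"}           # existential risks have no specific categories


class CodeLabel(NamedTuple):
    core: str
    focus: Optional[str]
    specific: Optional[int]


def parse_code(label: str) -> CodeLabel:
    """Split a code label into its hierarchy levels and check the structural rules."""
    match = _LABEL.match(label)
    if match is None:
        raise ValueError(f"malformed code label: {label!r}")
    core, focus, specific = match.groups()
    if focus is not None and core != SOCIETAL_CORE:
        raise ValueError(f"focus areas exist only under {SOCIETAL_CORE}: {label!r}")
    if focus is not None and focus not in FOCUS_AREAS:
        raise ValueError(f"unknown focus area: {label!r}")
    if specific is not None and core in NO_SUBCATEGORIES:
        raise ValueError(f"{core} has no specific categories: {label!r}")
    return CodeLabel(core, focus, int(specific) if specific is not None else None)


print(parse_code("V/3"))      # CodeLabel(core='V', focus=None, specific=3)
print(parse_code("III/B/1"))  # CodeLabel(core='III', focus='B', specific=1)
```

Keeping the structural rules in a single validator is merely one way to keep labels consistent while a codebook is revised iteratively; a label such as II/A/1 is rejected because only III has focus areas.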

Naturally, the classification proposed here is not the only possible one. However, the iterative process, involving repeated reviews of the excerpts in their original article contexts, confirmed the grouping presented in the following, as visualized in Fig. 2.

The online media narratives can be divided into two perspectives, which are not mutually exclusive: a focus on causes and a focus on consequences. This suggests an inherent, though unintentional, alignment with mainstream moral philosophy on the part of the authors (or the categorizer, or both). Regarding origins, the authors’ accounts implicitly differentiate based on human intentionality (not considering AI agency), that is, between intentional and unintentional harm. Regarding outcomes, a dimension of severity is suggested, though only indistinctly, ranging from limited, through societal, to existential scale. In each excerpt, the focus was fairly clearly on either the causes or the consequences, allowing categorization into I. Dependence risks or II. Malicious use risks for the former, and into III. Societal risks or IV. Existential risks for the latter. For clarity, no hierarchy between the two perspectives is implied; the core themes merely reflect the authors’ mode of portrayal, which was relatively distinct. Risks suggesting only limited-scale impact were sorted primarily along the intentionality dimension.
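The sorting of excerpts into the first four core themes can be summarized as a small decision function. It is a sketch under stated assumptions: the coder’s judgments (whether an excerpt foregrounds causes or consequences, whether the harm is intentional, and the implied scale) are taken as given inputs, the names `Focus`, `Scale`, and `core_theme` are hypothetical, and V. Undervalued risks is deliberately left out because it is assigned on normative rather than narrative grounds.

```python
from enum import Enum


class Focus(Enum):
    CAUSE = "cause"              # excerpt foregrounds where the risk comes from
    CONSEQUENCE = "consequence"  # excerpt foregrounds what the risk leads to


class Scale(Enum):
    LIMITED = "limited"
    SOCIETAL = "societal"
    EXISTENTIAL = "existential"


def core_theme(focus: Focus, intentional: bool, scale: Scale) -> str:
    """Return the core theme (I-IV) for one excerpt from the coder's judgments."""
    # Cause-focused excerpts, and any excerpt implying only limited-scale
    # impact, are sorted by human intentionality.
    if focus is Focus.CAUSE or scale is Scale.LIMITED:
        return "II" if intentional else "I"
    # Consequence-focused excerpts are sorted by severity.
    return "IV" if scale is Scale.EXISTENTIAL else "III"


print(core_theme(Focus.CAUSE, intentional=True, scale=Scale.SOCIETAL))            # II
print(core_theme(Focus.CONSEQUENCE, intentional=False, scale=Scale.EXISTENTIAL))  # IV
```

In this sketch intentionality plays no role in the consequence-focused branch: once an excerpt is read as being about outcomes, only severity separates III from IV.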

Only the specific categories assigned to V. Undervalued risks were isolated from the narrative-based framework, in order to shed additional light on them. No suggestion will be made as to where they should be integrated into the structure formed by the four core themes, because those themes constitute a purely descriptive, not a normative, classification that aims to capture how risks are portrayed in online media. In contrast, the fifth top-level group, V. Undervalued risks, is a strongly normative element of the framework, intended to highlight how problematic the infrequent discussion of its underlying topics in public discourse is. Although most of these risks could be thematically classified within the framework established by the first four core themes, this will not be done, as their most defining feature is their very restricted presentation, both relative to their importance and in absolute terms.

The detailed categorization of AI threats, derived from the online media analysis, is outlined in Table 1.

In addition to identifying whether a topic was simply mentioned (‘mere mentions’), it was also assessed whether the topic was discussed in detail (‘deeper coverage’) within an article. This distinction was not based solely on the number of excerpts corresponding to a given category; the average depth with which the specific risk was discussed was also considered. Consequently, in some cases an article with only a few excerpts related to a given risk class was recognized as providing ‘deeper coverage’ of the matter.
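The counting behind ‘mere mentions’ and ‘deeper coverage’, combined with the validity criterion for mentions described earlier in this section, can be sketched as follows. This is an illustrative assumption rather than the study’s procedure: each excerpt is assumed to carry the coder’s judgment of whether it treats the issue as an actual threat, the depth judgment is assumed to be recorded per article and category (since depth was not a function of excerpt counts alone), deeper coverage is treated as a subset of mentions, and the names `Excerpt` and `tally` as well as the toy data are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Excerpt:
    article_id: str
    category: str           # code label, e.g. "V/1" (illustrative)
    framed_as_threat: bool  # validity criterion: issue treated as an actual threat


def tally(excerpts, deep_pairs):
    """Count, per category, articles with a valid mention and those with deeper coverage.

    deep_pairs holds (article_id, category) pairs that the coder judged to be
    discussed in depth; the judgment itself is qualitative and made upstream.
    """
    mentioned = defaultdict(set)
    for ex in excerpts:
        if ex.framed_as_threat:  # references without a risk framing are skipped
            mentioned[ex.category].add(ex.article_id)
    counts = {}
    for category, articles in mentioned.items():
        deep = {a for a in articles if (a, category) in deep_pairs}
        counts[category] = (len(articles), len(deep))
    return counts


# Toy data (not from the study): article a3 mentions V/1 without framing it as a threat.
excerpts = [
    Excerpt("a1", "V/1", True),
    Excerpt("a1", "V/1", True),
    Excerpt("a2", "V/1", True),
    Excerpt("a3", "V/1", False),
    Excerpt("a3", "V/2", True),
]
deep_pairs = {("a1", "V/1"), ("a3", "V/1")}
print(tally(excerpts, deep_pairs))  # {'V/1': (2, 1), 'V/2': (1, 0)}
```

Counting distinct articles rather than excerpts keeps an article with many brief references from outweighing one that treats a risk in depth, in the spirit of the distinction drawn above.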

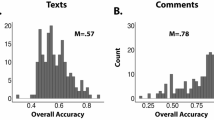

While Appendix A provides a statistical overview of the occurrence of all risk types in the articles, this section, for clarity, details only the quantitative coverage of V. Undervalued risks, as these underappreciated dangers are the key concern of this study.

Moreover, Appendix B provides a compilation of the most representative terms (‘Key terms connected’) for each separate class, taken from the reviewed articles with minimal modification for a formal and standardized presentation. Following the approach used for occurrences, this section outlines only the related terms for V. Undervalued risks.

Admittedly, some dangers might still fit into multiple categories, and this complexity is reflected in the fact that, as previously mentioned, the same terms can refer to various forms of AI risk. Consequently, identical terms may recur as keywords for different categories of hazards.

Dependence risks

The core theme I. Dependence Risks addresses concerns arising from reliance on technology, without suggesting any malevolent intent on the part of AI developers or operators, or of the AI entity itself. These risks presume that dependency might lead to defects or unmet expectations stemming from human or model flaws, often compounded by severe opacity. In more severe cases, human control might be diminished and conflicts with human interests might arise, potentially resulting in runaway operation by the artificial agent.

This core theme, however, does not address extreme cases threatening humanity’s future: in these instances, consequences of that severity were not part of the authors’ narratives. Altogether, in the portrayals of risks being categorized here, online media writers emphasize that the implications stem from technological dependency, shifting focus away from the scale of the resulting events, even if these could be considered societal or existential in scope. It is crucial to underline that this core theme captures accidental outcomes without any harmful intent by humans. To put it another way, the emphasis here lies on the origin, which is, in this case, unintentional, rather than the outcomes.

Dependence—general: Excerpts that could not be classified into the five underlying specific categories fall into this group. These address dependence on AI tools in overarching terms without specific details, implying adverse consequences.

Malfunction and underperformance: it covers technical shortcomings and AI failing to meet expectations regarding its performance.

Human error: it emphasizes human faults in using AI or, secondarily, in developing models, leading to harmful outcomes.

Transparency and explainability: it highlights hazards arising from the difficulty of understanding AI systems and interpreting their outputs.

Control loss and alignment: it addresses concerns about AI operating beyond human supervision and command, potentially conflicting with human values, and evolving in ways that pose significant risks without detailing specific adversarial outcomes.

Rogue AI: it goes beyond a mere loss of control and spans the severe dangers of AI exceeding human authority, exhibiting manipulative or adversarial behaviors, explicitly acting against human values, and evolving unpredictably, potentially causing widespread and critical disruptions. Extreme cases in which this loss of control could fundamentally and destructively transform civilization are classified instead under IV. Existential risks.

With regard to these hazards, the idea of AI as a conscious agent arises, though not in a context that raises questions about its potential to suffer. That aspect of risks will be addressed under the category V/3. AI sentience.

Malicious use risks

The core theme II. Malicious Use Risks comprises the results of humans’ intentional misuse of AI technologies for harmful purposes. It includes criminal activities, the creation of damaging content, exploitation by hostile actors, and the potential weaponization of AI or enabling weapon creation through AI.

This core theme does not deal with drastically severe scenarios that threaten the trajectory of societal development or the survival of humanity as a whole, as neither humanity-endangering outcomes nor societal-scale risks were implied in the authors’ narratives within the excerpts falling under this theme. In other words, the primary focus in these cases is on the cause—which is, in this case, intentional—rather than the effects.

Malicious use—general: Excerpts not fitting into the five specific underlying categories are included in this class. These discuss the malicious use of AI tools broadly, suggesting negative outcomes, without delving into the particulars.

Cybersecurity: it captures perils stemming from the malignant exploitation of AI to target digital systems and infrastructures, creating vulnerabilities that compromise the safety and well-being of individuals without causing direct physical harm.

Data protection and privacy: it covers menaces from unauthorized and malevolent access, use, or exposure of sensitive information, leading to the erosion of personal confidentiality and potential misuse of data. It pertains to non-physical consequences and excludes specific societal-scale intrusions into privacy, as well as narratives related to copyright or intellectual property.

Autonomous and AI-enabled weaponry: it explores the dangers of AI-powered technologies being used by hostile actors in weaponized autonomous applications or facilitating the creation and use of harmful and destructive systems. This class focuses on nefarious exploitations of AI technology causing real physical harm rather than digital impact, specifically by non-state forces, excluding military applications.

Societal risks

The core theme III. Societal Risks spans the broad spectrum of perils that arise due to AI technology on a societal scale. The emphasis in this theme shifts to the consequences rather than the cause. Nevertheless, the impacts discussed here, as described in media narratives, do not reach an existential scale.

Societal—general: Excerpts that do not align with the four focus areas or their associated specific categories are grouped into this class. These discuss the societal risks of AI broadly, suggesting negative outcomes without delving into specifics.

Sociocultural risks

It incorporates concerns related to fairness, trust, the transformation of values, education, and the reduction of human capabilities in a rapidly evolving social reality led by AI advancements.

Bias, discrimination, and inequality: it covers a wide range of risks of AI systems reinforcing existing disparities and creating unfair outcomes across various societal and institutional contexts.

Information integrity and public trust: it highlights the dangers of AI contributing to the creation and dissemination of misleading content, with significant societal consequences such as reduced societal trust and the degradation of information integrity.

Societal and cultural transformation: it addresses the widespread effects of AI on the societal status quo and stability, including shifts in cultural norms and social structures, underlining risks to both societal cohesion and democratic integrity.

Education and skill degradation: it explores the adverse impact of AI on knowledge acquisition, cognitive and general human development, and the thriving of imagination and ingenuity, focusing on concerns about diminished abilities and the potential deterioration of scholarly practices and educational frameworks.

Socioeconomic risks

It comprises the broad economy-related challenges introduced by AI to society, touching on threats arising from shifts in labor dynamics, market stability, organizational integrity, and the exploitation of workers driven by profitability considerations.

Workforce displacement: it captures the impact of AI and automation on labor force activities, including reduced demand for certain roles, transformations in the job market, and potentially large-scale unemployment.

Economic disruption and market volatility: it sheds light on the diverse economic risks associated with AI, including challenges to market stability, industry transformations, and the unpredictability of AI-driven decision-making systems in financial operations.

Organizational and reputational: it focuses on the risks businesses encounter with AI adoption, including utilization dilemmas, operational risks, financial impacts, and potential harm to public trust and organizational integrity.

Outsourcing and worker exploitation: it reflects the perilous outcomes surrounding the reliance on undervalued and often outsourced labor, where workers facilitating AI systems often endure poor conditions, inadequate compensation, and insufficient occupational norms.

Sociopolitical and strategic risks

It outlines the pitfalls posed by AI to civil liberties, democratic processes, global security, and power dynamics, showcasing concerns over its misuse in governance, information control, military applications, and the concentration of technological authority.

Political manipulation: it includes the complex set of threats posed by AI in distorting information, influencing public perception, and challenging the fairness and integrity of political systems and discourse.

Authoritarianism and privacy erosion: it covers risks connected to AI-based monitoring systems and the menaces they pose to personal autonomy and freedom, focusing on misuse by authorities or governments.

AI arms race and cyber warfare: it explores the concerns linked to AI in military and defense systems, highlighting the strategic and security hazards it presents to global stability in both conventional and digital conflicts.

Power concentration: it encompasses the threats associated with the intense accumulation or monopolization of AI control and influence, leading to imbalances in technological authority and resulting in disproportionate power structures.

Ethical* and legal risks

It examines the risks associated with legal, ethical, and corporate governance issues in AI, drawing attention to problems connected to oversight gaps, slow regulation, lack of accountability mechanisms, violation of intellectual property rights, and irresponsible industry conduct.

The asterisk is used to emphasize that the term ‘ethics’ is applied in its conventional sense—primarily considering human interests while neglecting the well-being of animals, the condition of the natural environment, and the potential experiences of artificial entities.

Ethical* and legal—general: excerpts that fall outside the scope of the four underlying specific categories are assigned to this class, exploring the risks associated with navigating the moral and legal challenges posed by the use of AI technologies, without delving into the nuances.

Regulation and oversight: it explores the challenges related to the governance of AI, focusing on the perils posed by insufficient supervision of AI development and use, the rapid pace of technological progress, and the potential for harm due to inadequate regulatory frameworks.

Accountability and liability: it points out the challenges arising from the difficulties of assigning responsibility for the outcomes of AI systems both morally and legally.

Intellectual property and copyright: it captures the ethical and legal challenges surrounding the use of creative works in AI development and in AI-generated outputs, leading to disputes over ownership and consent, and to potential negative implications for artists, writers, and the broader creative industries.

Negligent and immoral corporate practices: it addresses the risks associated with unregulated and immoral AI use by profit-oriented entities, including ethical lapses and the pursuit of profit through algorithms that endanger the well-being of individuals and the stability of communities.

Existential risks

The core theme IV. Existential risks encompasses the most severe risks AI poses to humanity, including potential civilizational collapse, human subjugation, and the extinction of the entire human race.

Essentially, these dangers can be considered the most radical culmination of either the rogue AI scenarios or those of the malicious, direct or indirect, weaponization of AI. They address the most drastic outcomes, surpassing consequences that could merely be described as societal-scale, since in these, the very survival of society is put into question. Moreover, given the high frequency of mentions and discussions surrounding these perils, they warrant a distinct subset, that is, a separate core theme within this framework.

In many cases, the risk of extinction is not explicitly mentioned in the publications, but a parallel is drawn between the most severe consequences of nuclear and AI technology, clearly conveying the fear of AI being capable of causing annihilation.

There are no specific subcategories to this set of perils, as it is usually not possible to narrow down the narrative to one specific aspect (such as marginalization or destruction of humanity) within an article.

Undervalued risks

To create core theme V. Undervalued risks, quantitatively outlined in Table 2, three underlying specific categories were removed from the classification structure presented above. This was done even though Psychological and Mental Health, as well as Environmental and Sustainability threats, could have been sorted into the core theme III. Societal risks.

Moreover, an additional group, V/4. Animal Welfare, was introduced, even though none of the reviewed articles so much as mentioned threats posed to animals. It was included nevertheless because it is firmly asserted here that animals should be part of our moral framework and of the circle of entities whose interests deserve consideration, as also argued by other researchers on AI risks (Ziesche 2021; Bossert and Hagendorff 2021; Hagendorff et al. 2023; Singer and Tse 2023; Bossert and Hagendorff 2023; Coghlan and Parker 2024; Ghose et al. 2024).

As was already indicated, although the three groups—discussed to some degree in the media—could have been categorized within the framework of earlier core themes, they remain excluded and constitute a fifth core theme due to their most defining characteristic: their extremely limited representation, both in relation to their significance and also in absolute terms.

Psychology and mental health

Overall, this category covers the perils posed by AI systems in social, emotional, and mental health contexts, including the potential for emotional dependency and manipulation, exposure to deceptive content, as well as the weakening of real-life connections leading to social isolation, with profound effects on individual well-being and societal stability.

The narratives presented in the media highlight a variety of perils that AI poses to the human psyche. However, these issues appear in only a limited number of publications (fewer than one-third of the articles in the investigated sample), which is at odds with the significance of mental health concerns. Moreover, the authors usually merely mention them without evaluating them in depth. The following provides a brief overview of the psychological threats discussed in the online publications.

As AI replaces jobs, many people may face psychological distress, and if their work provided them with purpose and fulfillment, losing it could even lead to a loss of identity (Regalbuto et al. 2023; Nolan 2023). Additionally, workers to whom the moderation of explicit material for AI training is outsourced often suffer severe trauma (O’Neil 2023).

AI systems pose considerable dangers to social, emotional, and psychological well-being, particularly in sensitive contexts. Generative AI chatbots might promote emotional dependence, reducing empathy and creating unhealthy attachments (Metz 2023; Thomas 2023; Kundu 2024). Features of AI systems that mimic human behavior encourage overtrust and emotional dependency (El Atillah 2024) and cultivate unhealthy expectations in relationships (Jones 2024). This reliance could weaken genuine human connections, leading to detachment and isolation along with a decline in social competence (Marr 2023; Bremmer 2023; Rushkoff 2022).

In potentially even more vulnerable fields, such as mental health practice, AI’s lack of human sensitivity risks harmful outcomes (Ryan-Mosley 2023), and extreme cases of chatbot interactions have already been reported to lead to suicide (Hale 2023; Sodha 2023). Recommendation algorithms tend to amplify extreme content (Ryan-Mosley 2023) and captivate users in an addictive manner (El Atillah 2024), often intensifying harmful behaviors and thoughts (Sodha 2023) and further affecting the human psyche.

Surprisingly, only a marginal fraction of the articles (in the examined sample, just one: Gow 2023) explicitly address the AI algorithms on social media platforms that are used to manipulate users and that significantly affect their mental health. A likely explanation is that, as people become accustomed to a technology that incorporates AI, they often no longer perceive it as AI-driven. Accordingly, when authors discuss the dangers, they perhaps focus on future developments rather than on existing technologies.

Key terms connected: reduced human empathy, decline in human connection, chatbots lead to unhealthy expectations, deep emotional attachment to AI, emotional dependence on AI, emotionally compelling content, addictive algorithms, psychological manipulation, AI in mental health therapy, chatbot-linked suicide, creation of a false sense of importance.

Environment and sustainability

To sum up, this class covers the significant ecological risks associated with AI systems, ranging from the extensive use of natural resources and the greenhouse gas emissions caused by the high energy demand of training and operation, together with the water consumed to cool servers running AI software, to the consequences of scaling up the technology, which could compromise sustainability and environmental stability.

In media portrayals, the risks associated with ecological and long-term viability issues center on a handful of primary concerns. These are covered in only a small fraction of publications (fewer than 20% of the articles in the examined sample), which is disproportionate to the scale and urgency of the environmental challenges. The following is a brief summary of the environmental hazards covered in online articles.

AI systems impose significant environmental costs, requiring large quantities of energy and natural resources while also producing substantial carbon emissions (Mittelstadt and Wachter 2023). Training powerful models requires massive server farms, leading to high electricity consumption (Hunt 2023; McCallum 2023) and a significant carbon footprint due to the energy-intensive nature of these computations (Baxter and Schlesinger 2023). Additionally, the cooling systems of the servers running these models use vast amounts of water, accelerating the depletion of water sources (Caballar 2024), especially in vulnerable regions (Isik et al. 2024). The development of LLMs and the high-performance computing that supports it (Ryan-Mosley 2023; Barrett and Hendrix 2023) also rely heavily on rare earth metals, further straining global resources (Rushkoff 2022). The ongoing trend of training ever larger models only amplifies these environmental challenges, placing additional pressure on the planet’s sustainability (Wai 2024).

Key terms connected: environmental impact, carbon footprint, energy consumption, electricity usage, energy-intensive platforms, carbon emissions, water use, fast depletion of water sources from vulnerable parts of the planet, massive server farms, resource-intensive datasets/models, the trend to train bigger models, consume a huge volume of hardware/natural resources, computers and servers require massive amounts of rare earth metals, a disservice to the planet.

AI sentience

In essence, this group addresses the dilemmas posed by the hypothetical possibility that AI might develop subjective, phenomenal experiences and the capacity to suffer, raising questions about the moral status of such systems.

In the overwhelming majority of publications on the risks of this technology, menaces related to AI sentience remain ignored. These concerns appear in fewer than 5% of the articles in the analyzed sample. The following offers a compilation of the threats related to AI sentience in online media.

As AI technology advances, the possibility of artificial agents achieving sentience, that is, experiencing emotions and sensations, becomes an increasingly realistic scenario. Determining whether AI deserves ethical consideration, analogous to the distinct moral statuses of humans and animals, will present a significant challenge. The risk lies in the potential mistreatment of sentient AI, whether intentional or unintentional, if appropriate rights are not granted (El Atillah 2024).

Even though Rogue AI risks and the related scenarios might indicate a level of self-awareness, the focus in that category was entirely on the impact the agent exerts on humans, not on the implications of possessing consciousness for the entity itself.

Key terms connected: AI becomes sentient, AI achieves sentience, humans mistreat sentient AI, moral considerations of sentient AI, AI systems reaching the level of sentience/consciousness/self-awareness.

Animal welfare

None of the reviewed articles mentions or suggests, even in the subtlest manner, that the interests of animals should be taken into consideration when examining the potential dangers of AI technology.

Key terms connected: none.

Evaluation of the media review, categorization, and underappreciated risks

This section evaluates the proposed AI threat categorization and the representation of its categories in online media articles, as these portrayals, in turn, reflect public opinion. The discussion begins by relating the developed classification system to other established frameworks before turning to its key concern: a detailed examination of the neglected AI risks, which forms the primary objective of this paper. Alongside possible explanations for the disregard of these topics, an indicative, rather than exhaustive, collection of potential risks will be outlined.

Reviewing online news articles is an established method for investigating overall media discourse, as demonstrated in the work of other researchers (Chuan et al. 2019; Sun et al. 2020; Nguyen and Hekman 2022; Nguyen 2023; Xian et al. 2024). Although relying solely on these sources cannot provide a comprehensive analysis of the media discourse on AI risks, since various other platforms are excluded, online media articles adequately illustrate the public dialogue on this topic. They also clearly show that the dominant narrative centers on human concerns, providing insight into the media’s approach.

The discourse fails to address the role of animals and the environment and does not even acknowledge their positions within feedback loops that could affect human civilization. Likewise, the matter of sentient AI has barely been explored. While specialized reports may cover these topics, their absence from general-interest articles may leave the general public uninformed about these pitfalls, unless individuals deliberately seek out this information out of personal interest in these matters.

Then again, this anthropocentric approach is hardly unexpected (Owe and Baum 2021; Hagendorff 2022; Rigley et al. 2023, pp. 844–848); nevertheless, it overlooks the conditions of the overwhelming majority of animals currently living on the planet on the one hand, and of our environment as a system on the other, to say nothing of the moral catastrophe that could hypothetically result from the emergence of sentience or consciousness in machines.

Comparison of the proposed categorization with existing frameworks

Building on the overview of AI risk taxonomies in the section “Taxonomy and classification of AI risks in the literature”, one parallel deserves further attention.

Many comparisons can be drawn between the structure developed by Slattery et al. (2024) and the classification framework introduced in this paper. Their theoretical framework operates in a three-dimensional matrix of entity, intentionality, and timing. The categorization derived from the media analysis also highlighted the relevance of the causal dimension of intentionality, but the core themes developed, as illustrated in Fig. 2, did not exhaust all logical possibilities of that theoretical matrix. This is because my empirical approach prioritized capturing the narrative structures articulated by the authors of the articles, and the remaining combinations were not prominently represented in the textual corpus.

The entity dimension appeared only marginally; introducing the temporal perspective, which distinguishes between pre- and post-deployment, could, however, have enriched the analysis. Having said that, to use the terminology of Slattery et al., this study focused on the Domain Taxonomy rather than the Causal Taxonomy. Therefore, adding a further causal dimension would have unnecessarily complicated the categorization into core themes.

Furthermore, Slattery et al. emphasize the importance of incorporating the magnitude or scale of impact, which supports the two-dimensional framing of media discourse based on intentionality and severity suggested in this paper. While this does not confirm that these dimensions represented the dominant narrative threads, it does indicate that separating the discourse along these lines provides a coherent and meaningful framework for analysis.

About the anthropocentricity

The representation of different types of dangers in online media articles clearly shows that the ongoing discussion regarding the technology’s potential dangers is overwhelmingly human-centered. This is reflected in the fact that only around 5% of the reviewed publications mention any entity other than humans that might be capable of suffering, namely those that ponder the hypothetical possibility of AI gaining sentience and/or consciousness. What is more, only one article in the entire sample explicitly regards artificial agents as moral subjects, whereas the interests of animals are ignored altogether. Even though Rogue AI risks might suggest a degree of self-awareness, these narratives make no reference whatsoever to the capacity of these entities to suffer.

Anthropocentricity is also evidenced by the way in which the nearly one-fifth of reviewed publications that address the technology’s environmental dangers frame this issue. While ecological and sustainability concerns might also stem from non-anthropocentric (for example, planet-focused) motivations, these articles provide no indication, and thus no basis for believing, that the authors consider the natural world an independent moral subject. In other words, the focus of these environmental concerns seems to lie in their impacts on humans.

Disregard for animals

What stands out about the human-centeredness revealed above is that none of these articles mentions, in any way, the harm that the technology may pose to non-human animals. Even the media pieces that address environmental issues do not mention wild animals or refer to any species other than Homo sapiens.

Potential explanations for the neglect of animals

This observation, namely the complete neglect of AI’s potential impact on animal welfare, aligns closely with what was stated previously regarding ethics (with an asterisk): moral considerations, as a rule, refer solely to issues in which harm may be done to human beings, either directly or indirectly. This investigation has provided evidence that our moral sentiments and the prevailing methods of risk assessment in our civilization are profoundly deficient. From a perspective that aims to consider the interests of all beings capable of experiencing physical and mental agony, our decision-making processes fall significantly short.

In Singerian terms, most people in our civilization hold a speciesist bias (Singer and Tse 2023, pp. 1–5), which is clearly reflected in the examined articles. The term speciesist bias does appear in what is, unfortunately, a rather marginal segment of AI ethics that engages with animal welfare issues (Ziesche 2021; Bossert and Hagendorff 2021; Hagendorff et al. 2023; Singer and Tse 2023; Bossert and Hagendorff 2023; Coghlan and Parker 2024; Ghose et al. 2024), and the overwhelming majority of the reviewed news coverage addresses problems rooted in AI-amplified bias. Nevertheless, the context makes it abundantly clear that the authors of these articles use the term bias in an exclusively human-centric sense.