Abstract

The continued emergence of challenges in human, animal, and environmental health (One Health sectors) requires public servants to make management and policy decisions about system-level ecological and sociological processes that are complex, poorly understood, and change over time. Relying on intuition, evidence, and experience for robust decision-making is challenging without a formal assimilation of these elements (a model), especially when the decision needs to consider potential impacts if an action is or is not taken. Models can help, but effective development and use of model-based evidence in decision-making (‘model-to-decision workflow’) can itself be challenging. To address this gap, we examined conditions that maximize the value of model-based evidence in decision-making in One Health sectors by conducting 41 semi-structured interviews with researchers, science advisors, operational managers, and policy decision-makers who have direct experience in model-to-decision workflows (‘Practitioners’). Broadly, our interview guide was structured to understand practitioner perspectives about the utility of models in health policy or management decision-making, challenges and risks with using models in this capacity, experience with using models, factors that affect trust in model-based evidence, and perspectives about conditions that lead to the most effective model-to-decision workflow. We used inductive qualitative analysis of the interview data with iterative coding to identify key themes for maximizing the value of model-based evidence in One Health applications. Our analysis describes practitioner perspectives for improved collaboration among modelers and decision-makers in public service, and priorities for increasing accessibility and value of model-based evidence in One Health decision-making. Two emergent priorities are (1) establishing different standards for development of model-based evidence before or after decisions are made, or in real-time versus preparedness phases of emergency response, and (2) investing in knowledge brokers with modeling expertise who work in teams with decision-makers.

Introduction

Every decision a person makes is based on a model. A model is an idea about how a process works based on previous experience, observation, or other data. Models may not be explicit or stated (Johnson-Laird, 2010), but they serve to simplify a complex world. Models vary dramatically from conceptual (idea) to statistical (mathematical expression relating observed data to an assumed process and/or other data) or analytical/computational (quantitative algorithm describing a process). Predictive models of complex systems describe an understanding of how systems work, often in mathematical or statistical terms, using data, knowledge, and/or expert opinion. They provide means for predicting outcomes of interest, studying different management decision impacts, and quantifying decision risk and uncertainty (Berger et al. 2021; Li et al. 2017). They can help decision-makers assimilate how multiple pieces of information determine an outcome of interest about a complex system (Berger et al. 2021; Hemming et al. 2022).

People rely daily on system-level models to reach objectives. Choosing the fastest route to a destination is one example. Such a decision may be based on either a mental model of the road system developed from previous experience or a traffic prediction mapping application based on mathematical algorithms and current data. Either way, a system-level model has been applied and there is some uncertainty. In contrast, predicting outcomes for new and complex phenomena, such as emerging disease spread, a biological invasion risk (Chen et al. 2023; Elderd et al. 2006; Pepin et al. 2022), or climatic impacts on ecosystems is more uncertain. Here public service decision-makers may turn to mathematical models when expert opinion and experience do not resolve enough uncertainty about decision outcomes. But using models to guide decisions also relies on expert opinion and experience. Also, even technical experts need to make modeling choices regarding model structure and data inputs that have uncertainty (Elderd et al. 2006) and these might not be completely objective decisions (Bedson et al. 2021). Thus, using models for guiding decisions has subjectivity from both the developer and end-user, which can lead to apprehension or lack of trust about using models to inform decisions.

Models may be particularly advantageous to decision-making about the health of humans, agriculture, wildlife, and the environment (hereafter called One Health sectors) and their interconnectedness (Adisasmito et al. 2022). Because interdisciplinary health fields require collaboration among professionals with different backgrounds, methodologies, and traditions, a model is a useful tool to bring interdisciplinary data, concepts, and knowledge into a single framework. In a policy-making context, cross-agency and cross-sector collaboration is crucial. It requires integration of interdisciplinary perspectives for making decisions about system-level ecological and sociological processes that are complex, often poorly understood, and change over time (e.g., pandemic response, agricultural biosecurity preparedness, invasive species control, ecosystem conservation). Here, traditional risk assessment approaches involving experiments in pre-defined contexts can be infeasible or insufficient for science-informed decision-making. Instead, predictive models of complex systems may provide a better understanding of how systems work, preferably using mathematical or statistical terms, and including data, knowledge, and/or expert opinion from different sectors. Decision theory has demonstrated that models can improve decisions in One Health sectors by making risks more transparent and improving outcomes (Berger et al. 2021), but challenges remain (Bedson et al. 2021).

Our objective was to identify guidance for effective development and use of predictive models in decision-making within and across One Health sectors. We refer to this process as ‘model-to-decision workflows’ to highlight our research emphasis on evidence-informed decision-making, where at least some evidence comes from predictive models. We use the term ‘model-to-decision’ for simplicity but emphasize that policy decisions draw on a variety of other evidence sources and considerations in addition to potential evidence from models (model-based evidence). Based on our own experience with developing models for use by decision-makers in One Health sectors, our beginning mindset was that: (1) decision-makers in public service face an increasing number of health and environment challenges that require integration of data and knowledge within and across One Health sectors (e.g., WHO, 2024; CDC, 2024), (2) predictive models are useful for understanding One Health systems and generating evidence for informing decisions (e.g., Keshavamurthy et al. 2022; Lloyd-Smith et al. 2009), and (3) the frequency with which model-based evidence is used for informing decisions, and the factors that affect its uptake, are unclear. These observations led us to hypothesize that model-based evidence may be underserving decision-makers in some conditions, and to pose the question: what conditions maximize the value of model-based evidence in decision-making in One Health sectors?

To better understand factors affecting the uptake of model-based evidence in decision-making in One Health sectors, we interviewed experts from a range of health and environment sectors who have experience with developing or using predictive models (‘Practitioners’) for decision-making. Broadly, our interview guide was structured to understand each participant's formal training, current professional role, familiarity with different types of models, perspectives about the utility of models in health policy or management decision-making, challenges and risks with using models in this capacity, experience with using models, factors that affect trust in model-based evidence, and perspectives about conditions that lead to the most effective model-to-decision workflow. We used qualitative inductive analysis to identify emergent themes within these areas. Our analysis provides guidance for improved collaboration among modelers and decision-makers in public service. Reflecting on results from interviewees, we describe priorities for increasing accessibility, uptake, and value with using evidence from models in One Health decision-making.

Methods

Study design

We chose a qualitative case study approach (Yin, 2018) to gain an in-depth understanding of individuals’ perceptions of models and insights into the conditions under which they find models useful in decision-making. Data were collected using semi-structured interviews, an interview approach that is well suited to exploratory research, as it allows the interviewer to follow up on and explore relevant and meaningful ideas that emerge in the course of the interview (Adeoye-Olatunde and Olenik, 2021). The semi-structured interview approach had two benefits for this research. First, it allowed us to identify and learn more about themes raised by participants that we had not included in our interview guide a priori. This was important because the interviewer did not have a deep knowledge of the New Zealand (NZ) landscape, and the interview guide targeted themes that the United States of America (US) research team had identified from the literature and from the US context they were most familiar with. Second, the semi-structured interview format enabled the interview to go into greater depth on those themes where an interviewee had the most expertise, and to explore in greater detail opinions and approaches that contrasted with those of other interviewees. This qualitative data collection approach enabled us to provide greater nuance to the themes than a quantitative survey or similar approach would have (Grigoropoulou and Small, 2022).

Interview topics were developed based on: (1) the US-based team’s experience with working at the interface of modeling science, disease management, and policy, primarily in the animal health and wildlife sectors of One Health, (2) a NZ-based researcher’s experience conducting One Health research in NZ and internationally, and (3) scientific literature on science communication, models in decision-making, and strategies for co-developing objectives among numerous stakeholders (such as structured decision-making). The interview guide was not a static research instrument; it evolved throughout the study as reflections were made about the US relative to NZ contexts (see SI for interview guide). The initial interview guide was reviewed by two US-based One Health professionals in operational decision-making roles and pre-tested by two other US-based One Health professionals in other operational decision-making roles.

Recruitment

We used snowball sampling combined with purposive sampling (Parker et al. 2019; Tongco, 2007), a non-random sampling approach in which participants suggest other participants (snowball; Parker et al. 2019) and are deliberately selected based on experience or knowledge (purposive; Tongco, 2007). We first identified an initial subset of individuals who met our inclusion criteria using our own professional network. Inclusion criteria were (a) experience informing management or policy decisions that consider science, (b) some exposure to building, interpreting, or making decisions using science from models, and (c) a professional background within or across the various One Health sectors (Public health, Agriculture, Wildlife, Environment). We aimed to include at least 2–3 participants working in each One Health sector and at each level of two further dimensions, which were not mutually exclusive with One Health sector experience: organizational context (levels: National government, Local government, University, other non-governmental organization) and professional role (levels: Science production, Science advisor to decision-maker, Operational management, Policy decision-maker) (Fig. 1). The initial subgroup consisted of 7 professionals who were thought to be well connected in their professional networks, including Chief Science Advisors, and represented a variety of contexts (1 national government agency, 2 non-profit research with a national mission, 1 non-profit operation with a national mission, 3 university), domains (3 domestic animal, 1 environment, 1 public health, and 2 wildlife health), and roles (3 science producers, 2 senior or chief scientists, and 2 decision-makers). Of the 7 initial individuals, 6 agreed to participate and all suggested additional colleagues. The process was repeated until we had at least 2–3 individuals across all dimensions of interest. We continued to add new participants after the interviews started until we reached theme saturation (no new themes) in the recommendations for effective model-to-decision workflows. This process resulted in a total of 41 interviews. Participants came from a wide variety of technical backgrounds (Fig. 2).

Main plot: Different health sectors the participants worked in across the One Health sectors. The x-axis shows all potential sector combinations with sector combinations identified using a + sign (H: humans; D: domestic animals; W: wildlife, and E: environment). Colors indicate the number of sectors the participants reported working in—black (one), gray (two), red (three), and blue (four). Inset: Number of different professional roles the participants worked in across the sectors. Roles were not mutually exclusive, with some participants having multiple roles, so the total number of roles described (N = 60, total for the bars in the inset plot) is greater than the total number of participants (N = 41).

Implementation

Prior to the study, our study protocol was submitted to the Sterling Institutional Review Board (number: 10,792) and determined to be exempt due to its very low risk for negative impacts to participants, based on the terms of the US Department of Health and Human Services’ (DHHS) Policy for Protection of Human Research Subjects at 45 C.F.R. §46.104(d), under a Category 2 exemption. Prior to conducting the interviews, each interviewee received a participant information sheet to review, which described the purpose, objectives, participant roles, and methods of the study, and an informed consent form that participants signed to acknowledge their understanding of the expectations and role of the interview process and reporting of results. Interviews lasted 45–90 min and were audio recorded. All interviews were conducted by the first author.

Transcription and qualitative data analysis

Audio files of interviews were transcribed using artificial intelligence software (Descript). Each transcript was manually verified by listening to the audio recording while viewing the AI-transcribed text and editing as necessary for accuracy. Text transcripts were then sent to participants for review (if this preference was indicated on the consent form) to check for additional errors. Content analysis of text transcripts (Hsieh and Shannon, 2005) was conducted by the first author using inductive analysis with iterative coding to identify and synthesize themes. For this, the first author first developed a spreadsheet that listed each interview question topic as column headers. For each interview question topic, the first author analyzed each participant’s transcript and identified themes. As more transcripts were analyzed for each question, a topic-consistent labeling protocol for each theme was developed. Previous transcripts were re-visited as needed to verify consistency and accuracy of each theme label. This produced a synthesized spreadsheet of all themes that could be visually searched by question topic to identify and quantify (when appropriate) themes for each question topic. We used a combination of both deductive coding and inductive coding approaches (i.e., abductive or iterative coding), where we started with deductive coding and added inductive codes as new themes arose.
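The spreadsheet-based tally described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure actually used in the study: the participant identifiers, question topics, and theme labels below are invented placeholders, and the helper names `tally_themes` and `new_themes` are hypothetical. The sketch counts each theme once per participant within a question topic, and lists themes that appear only after additional transcripts are coded, which is one simple way to check for theme saturation.

```python
from collections import Counter, defaultdict

# Hypothetical coded excerpts: (participant_id, question_topic, theme_label).
# All values are illustrative placeholders, not study data.
coded = [
    ("P01", "model utility", "comparing scenarios"),
    ("P01", "trust", "trusted relationship"),
    ("P02", "model utility", "comparing scenarios"),
    ("P02", "model utility", "shared understanding"),
    ("P03", "trust", "validated predictions"),
    ("P03", "model utility", "comparing scenarios"),
    ("P03", "model utility", "comparing scenarios"),  # repeat mention, counted once
]


def tally_themes(rows):
    """Count distinct participants per theme within each question topic."""
    seen = defaultdict(set)  # (topic, theme) -> set of participant ids
    for participant, topic, theme in rows:
        seen[(topic, theme)].add(participant)
    table = defaultdict(Counter)
    for (topic, theme), participants in seen.items():
        table[topic][theme] = len(participants)
    return table


def new_themes(table_before, table_after):
    """Return (topic, theme) pairs present after more coding but absent before."""
    before = {(topic, theme) for topic, counts in table_before.items() for theme in counts}
    after = {(topic, theme) for topic, counts in table_after.items() for theme in counts}
    return after - before


themes = tally_themes(coded)
for topic, counts in themes.items():
    print(topic, counts.most_common())
```

An empty result from `new_themes` after a batch of additional transcripts would correspond to the "no new themes" stopping rule described under Recruitment.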

We described the emergent themes under sub-headers, where we synthesized responses from participants and provided direct quotes from participants in single quotes (sometimes modified slightly to hide identity) to illustrate the themes. Concurrently, we interpreted the emergent themes relative to previous work where applicable.

Reflexivity statement

Our research team primarily consisted of researchers of European descent, residing in the US and NZ, with academic and professional backgrounds in the biomedical sciences. Our training has therefore been rooted in Western scientific frameworks, which often emphasize quantitative analysis, evidence-based methodologies, and a positivist approach to knowledge generation. Recognizing this shared background, we acknowledge that our perspectives on health and disease, as well as our interpretations of data, are shaped by these paradigms. We are mindful of how this collective background may influence the design, execution, and interpretation of our research, especially in studies that explore decision-making processes and the societal role of scientific models.

The lead author, based in the US, has a background in ecological, public health, and animal disease modeling, and has frequently collaborated with federal health agencies on infectious disease and wildlife population forecasting, including predicting the impacts of interventions. This experience has led to a strong familiarity with the policy-making process in the US context, where decision-makers may display mixed preferences for quantitative evidence from models. This understanding may have influenced how we framed questions about model utility and reliability in the interviews.

The other authors, mostly based in NZ, have experience with a range of epidemiological and ecological modeling in settings where health policies are encouraged to consider community perspectives and local stakeholder engagement alongside quantitative models. This is because researchers and governments are expected to honor Te Tiriti o Waitangi (known in English as the Treaty of Waitangi), an agreement made in 1840 between representatives of the British Crown and indigenous Māori. Honoring the treaty typically involves adhering to its core principles: partnership, participation, and protection. This background may have shaped a perspective that considers models as only one scientific tool, and these authors’ contributions may therefore reflect a stronger focus on the interpersonal dynamics between modelers and policymakers and on how models are adapted to reflect local values. However, throughout the research, we remained aware of our potential biases and assumptions and tried to ensure questions were culturally and contextually neutral.

Results

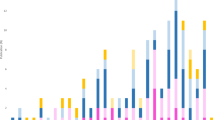

Variation in participant definitions and familiarity with models

When asked what a model is, participants most commonly said: a tool for understanding a system, a decision-support tool, and/or a simplification of real-world complexity (Fig. 3). The three types of models participants were most familiar with were computation or simulation models (including scenario trees), statistical models, and mathematical models that solve equations (Fig. 3), highlighting an emphasis on knowledge of quantitative models among the professionals interviewed.

How models are useful for informing decisions

When participants were asked how models could be useful for guiding decisions in One Health sectors, 80% (33/41) said models were useful for comparing disease control policy scenarios. The second most frequently described use was in creating a joint understanding among stakeholders. Here, the process of developing the model was seen as the most useful deliverable—it helped decision-makers understand the system well enough to make informed decisions. Models provide a robust method for assimilating relevant information and bridging information gaps that cannot be resolved through other means.

When asked to describe an experience where they were involved in a model-to-decision workflow, most participants said the model played a critical role. Critical roles described included:

- Motivating policy development through risk assessment
- Facilitating communication, collaboration, and understanding at the science-management-policy interface
- Identifying important knowledge gaps for focusing future data collection
- Providing inference for processes that cannot be directly measured
- Identifying refined resource prioritization strategies
- Building knowledge in the early stages of a new event (e.g., COVID-19 emergence) when knowledge of the system is poor
- Motivating decision-makers by rapidly building evidence about whether action is needed when experiments are infeasible and when they are unmotivated to act on sparse information
- Building agreement among stakeholders on priorities
- Quantifying and framing decision risk by providing numbers alongside intuition about the likelihood of outcomes
- Managing stakeholder expectations and social licensing for a policy by providing intelligence about how long it might take before changes are observed

Risks and challenges with using models to inform decisions

Participants reported that effective model-to-decision workflows are hindered when decision-makers are not sufficiently informed about the development, robustness evaluation, and interpretation of evidence from models. Participants thought lack of understanding can lead to misinterpretation of evidence, inability to understand what can and cannot be asked of a model, or misunderstanding of uncertainty, including a false sense of precision. A decision-maker may want to feel confident about the distinction between risk categories, yet:

‘Some models can be very bad at distinguishing unlikely from very unlikely.’ (Policy advisor and decision-maker)

Often decision-makers need intelligence on a relative (or qualitative) scale (e.g., outcome likely to be worse than X or better than Y), for example,

‘It’s [referring to model utility] on the scale of ‘government must act’. And so that was when it was super useful. And to me, the actual number, whether it was 5000 or 80,000, didn’t matter, but it was the fact that it was in thousands that mattered. It made it material. It was a ‘way worse than flu’. So we needed to act. That’s where the model came in.’ (Science advisor)

And to use the qualitative scale of effects to evaluate potential policy outcomes, for example,

‘They [models] are incredibly useful to show worst case and best case scenarios and illustrate that there are policy levers to pull that can shift you one way or another.’ (Science advisor),

yet scientists may present the information in a more nuanced format that makes it challenging for decision-makers to interpret on the time scale they need answers. Also, with increasing misinformation (Scheufele and Krause, 2019) and scientific specialization, it is challenging to bridge information gaps between the two cultures (scientists and policy-makers) (Snow, 2012). The average level of scientific literacy for decision-makers and increased scientific misinformation are obscuring the ability of decision-makers to efficiently and effectively consider scientific evidence.

‘The huge problem these days is this vast misinformation blogosphere [blogs considered collectively as an interconnected source of information] of nonsense [speaking about information that is not scientific or from rational thinking, but readily accessible in people’s immediate realm of information] that’s just got worse with COVID.’ (Science producer)

Another risk described by participants is that a user can refine a model to deliver a desired output.

‘You can construct a model and parametrize it to give you the decision that you want.’ (Operational Manager)

This can be done by inserting narrow ranges of parameter values that meet a desired outcome (e.g., prior distributions in a Bayesian analysis or parameter ranges in a sensitivity analysis of a computational or mathematical model), excluding processes one does not want effects from, or interpreting uncertainty in a way that supports one’s agenda (e.g., biased presentation of model results and conclusions about untested effects; Pepin et al. 2017; Stien, 2017). Users who do not understand how using models this way violates the scientific process are susceptible to unintentional misuse, while users who understand this well can be susceptible to intentional misuse. Example statements included:

‘I guess the key thing is making sure that decision makers are not cherry picking information they want to use - this is where one of the risks arises. …if you’ve got different models that have different results’ (Policy decision-maker)

or

‘…it felt like some of the policy people had learned enough math epi terms or had enough of a feel for roughly how things worked that they could sort of form their own assumptions about if I want to get to here, this is how the model might be able to create a narrative that gets me there.’ (Science producer—modeler)

or

‘There’s probably been examples where maybe models have been developed in order to try and push a particular policy agenda, which can be problematic or models that presuppose their own conclusions. I think you see that sometimes as well. There are certainly bad ways of building a model and that’s a risk.’ (Science production, modeler)

or

‘There’s always a conflation of ‘this is what the model says versus this is what you parameterize the model to say’. Isn’t there? And that really worries me more and more as I carry on in my career.’ (Policy advisor and decision-maker)
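The parameterization concern raised by participants can be illustrated with a deliberately simple numerical sketch. It is an assumption-laden toy example, not a model used by any participant or by this study: it uses the standard final-size relation for a basic SIR epidemic, z = 1 - exp(-R0 z), where z is the fraction of the population eventually infected, and the function names and the R0 ranges are chosen purely for illustration. Sweeping R0 over a wide plausible range and over a narrow, hand-picked range shows how the choice of range alone can determine whether the reported outcomes include ‘little or no outbreak’.

```python
import math


def final_size(r0, iterations=200):
    """Fraction eventually infected, solving z = 1 - exp(-r0 * z) by fixed-point iteration."""
    z = 0.5  # start away from the trivial root z = 0
    for _ in range(iterations):
        z = 1.0 - math.exp(-r0 * z)
    return z


def output_range(r0_low, r0_high, steps=21):
    """Smallest and largest final size over an evenly spaced sweep of R0 values."""
    values = [
        final_size(r0_low + (r0_high - r0_low) * i / (steps - 1))
        for i in range(steps)
    ]
    return min(values), max(values)


# Illustrative ranges only: a wide range that admits R0 below 1,
# and a narrow range restricted to high transmission.
wide = output_range(0.8, 3.0)
narrow = output_range(2.5, 3.0)

print("wide range of R0:   final size from %.2f to %.2f" % wide)
print("narrow range of R0: final size from %.2f to %.2f" % narrow)
```

Both sweeps share the same worst case, but only the wide sweep reveals that a negligible outbreak is also consistent with the assumed uncertainty. Reporting the input ranges alongside the outputs is one way to make this kind of choice visible to reviewers and decision-makers.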

The risk of unintentional and intentional misuse erodes trust in models, potentially among both those who understand the modeling process and those who do not. These concerns suggest that it is important to develop standards for model-to-decision workflows, including transparent and professional expert peer review, to reduce misinterpretation and misuse. One participant noted:

‘Often there was the pressure for the scientist or the researcher to be able to justify how they had arrived at conclusion A to the Nth degree and yet the policy person could ultimately choose to ignore that science and just do whatever they felt was appropriate, but not the same kind of equivalent, robust, and transparent process around their decision making.’ (Operational Manager)

Several participants thought models should not be developed and used for real-time forecasting during emergencies; in their view, the appropriate use of models is for preparedness, when time is available for appropriate rigor and consideration (i.e., during non-emergency situations). One reason is that scientists are eager to provide real-time intelligence and advocate for their own approaches, even though each approach has uncertainty and results may conflict. For example,

‘On the day we say we’ve got an outbreak, every university in NZ and some offshore will start modeling our outbreak. I’d like us to be a little bit ahead and to have had some of these conversations because I’m not gonna be in a conversation with 10 academics who all think their model is God’s gift…[while managing an emergency]’ (Policy advisor and decision-maker)

This suggests that staff dedicated to preparedness modeling may increase the use of models in management and policy decisions. Some participants thought real-time forecasting might also open the door for selecting models that provide confirmation bias or support political agendas that might not align with robust science, which erodes trust in science quality from models. Also, participants said models may have too much uncertainty to provide the level of precision that the public would hold decision-makers accountable for when different numbers play out in reality. It can be counterproductive to put

‘…scary numbers with high uncertainty in the public domain’, (Policy advisor & decision-maker)

and equally counterproductive to provide numbers with the wrong level of detail. For example,

‘And once you tell people that probably X number of million people will die, it’s very hard for them to think about other risks, right? So how do we responsibly portray what models are actually able to predict in the band [speaking about range of statistical uncertainty] and what the dependencies [potential downstream impacts] are on prioritization [of actions that may be taken]’. (Policy advisor & decision-maker)

Participants warned that modeling should be one source of evidence considered alongside other sources. However, some people may value numerical comparisons more than qualitative information, and numerical comparisons can give the semblance of clear-cut answers, when reality is more complex. Thus, participants warned that modelers should not be

‘…let out of their pens unaccompanied’, (Science producer—subject matter expert and Policy advisor)

meaning that subject-matter-expert practitioners (e.g., public health specialists, physicians, veterinarians, wildlife managers) should be working hand-in-glove with them to shape their questions and interpret the results in terms of policy, social, or health impacts. For example,

‘So that needs to be clear…just technical experts [speaking about modelers] aren’t decision makers and that’s mostly appropriate because the decision makers have to worry about a whole lot of stuff that’s not technical, right? So they have to balance up hard choices. And in a way, that’s why I think when models go to decision makers, then you start having bizarre outcomes because they’re interpreting them as another equally weighted, but uninterrogated source of advice. So the model says this and so they must be okay, whereas if the model goes to technical experts [speaking about health practitioners/subject-matter-experts] who then formulate advice that’s in a conversation with decision makers, there’s some kind of interpretation of what the model said, which is where I think my main focus has been.’ (Policy advisor and decision-maker)

Several participants referenced the outbreak of foot-and-mouth disease in the United Kingdom in 2001 as an example of why it is important to emphasize communication and not to have modelers translating modeling results into health policy intelligence (e.g., modelers directly making management recommendations to decision-makers without filtering the model results through subject-matter experts in the application of health management in the system being modeled; Kitching et al. 2006; Mansley et al. 2011). Even though NZ has set up effective model-to-decision workflows in some sectors, there is a legacy effect due to both past experiences and ongoing hurdles that have made it difficult to build trust.

Participants highlighted that models can reveal contextual details that impact other policy-making challenges because models can identify phenomena that were unanticipated. Policy impacts can vary dramatically for different groups of people or animals. For example,

‘One of the big things was around if you move anything from the mandatory to being optional in terms of protections, then people who have more privilege can take those protections. They can stay home when case numbers are up, they can buy better masks, all the rest of it. Meanwhile, people who are most vulnerable get infected. Those people are then removed from your susceptible populations. That’s doubly benefiting the people who are more privileged. So they benefit first from their initial privilege and then because they infected these other people who are now recovered and at least in the short-term, aren’t going to infect you anymore.’ (Science producer—modeler)

When variation of policy effects on different groups is accounted for, policy decision-making becomes more complex and can require longer to achieve consensus among stakeholders.

Effective use of models

When asked to describe their experience in model-to-decision workflows, participants mentioned a variety of applications. These ranged from ‘What transportation policies should we implement to improve human and environmental health?’ to ‘What is the local probability of disease freedom of bovine tuberculosis?’ We summarize two examples participants described to show common themes that led to the use of the models in practical decision-making (Boxes 1 and 2). In both cases, individuals in decision-making roles successfully championed the use of models and acted as ‘navigators’ (knowledge brokers) of the models into the decision-making ecosystem. The drive to champion models often came from long-term trusted relationships between the decision-maker and the scientists who develop models. The decision-maker who championed the models invested substantial effort in regular communication between modelers and decision-makers to ensure the models addressed context-specific needs and constraints and provided a trusted source of evidence. Thus, trusted relationships and dedicated knowledge brokers are essential for the successful uptake of modeling intelligence in policy decisions.

How models become trusted

There are many ways to build models (Silberzahn et al. 2018), each with advantages and disadvantages, so what gives professionals confidence in using results from models? Participants described a variety of metrics that gave them confidence. The most frequent answer was having a trusted interpersonal (often collaborative) relationship between scientists (and/or science advisors) and decision-makers (Gluckman et al. 2021). Also, the model predictions proved accurate (validation of the model predictions against eventual real-life outcomes) and the decision-maker was

‘brought on the journey of model development’ (Operational manager)

And

‘understood what was under the hood.’ (Operational manager)

It was also important that the model and results were peer-reviewed, rigorous statistical evaluation was conducted, and well-established scientific theories were applied (trust in the scientific method). It was equally important that the model led to a high-impact policy change (added value to the decision-maker’s bottom line). One participant said that colleagues and stakeholders considered having models essential, so the model was trusted to meet a variety of participants’ demands, for example,

‘…having them [models] and the answers they [models] provide was considered a hundred percent necessary to the investment’ (Operational manager)

Models for shaping versus supporting decisions

Models can be applied before a decision is made to shape decisions or post hoc to evaluate decisions. Most participants felt the use of models a priori is ideal but post hoc use can be valuable and appropriate when decisions need to be made more quickly than the science can be produced. Retrospective evaluation of decisions with models was seen as advantageous for providing support for further investment, providing scientific evidence for high-stakes decisions, or evaluating whether the decision should be revised to improve outcomes. Participants said it’s useful to have a model for evaluation as conditions change and to identify alternatives. However, many thought there are special considerations and increased risk for model development as post hoc decision intelligence, for example,

‘I think the risk in using a model to justify a past decision is that models have bias and scientists have biases. And I think if you’re not careful about those biases and the assumptions that you make in a model, you’d have to be quite disciplined in just making sure that you are testing the full range of model assumptions or options.’ (Science producer—modeler)

Participants said when modeling for post hoc decision intelligence it’s important to understand whether the decision could be changed with additional intelligence. They said more safeguards are needed for conducting the science objectively because of the risk of confirmation bias (Nickerson, 1988), meaning it can be difficult to design the scientific questions objectively and comprehensively when a desired outcome is known. Using multiple independent scientific groups (Li et al. 2017) may be important to safeguard against this risk.

Based on risks previously described regarding decision-makers lacking expertise in modeling, there may be an additional risk of misuse of science relative to other intelligence production techniques because, as mentioned above, one can construct and parametrize a model to produce a desired result. Using models for post hoc evaluation of decisions requires good-faith decision-making. One participant described this as

‘You need to have clean governance over decisions—you need to separate the decision-making from the people who are going to benefit from the decisions’. (Operational manager)

Other participants said that post hoc use of models requires encouraging decision-makers to ask the right (objective) questions with an understanding of what is and what isn’t scientific evidence that aligns with robust scientific practice. Otherwise, evidence claimed to be derived from science is actually misinformation. Examples of bad practice with models relayed by participants included:

‘…models that presuppose their own conclusions’ (Science producer—modeler),

or

‘…a model with that predetermined outcome in mind’ (Science producer)

or

‘…creating the science to support what you want to do—it [the model] shouldn’t be reverse engineered’ (Science producer—modeler),

or

‘…what someone referred to as policy-based evidence. I think the desire for evidence that will support the policy decision you’ve already made is quite high.’ (Science producer and advisor)

Participants agreed it was bad practice to choose one result or model from a set that has similar scientific rigor, probabilities of being true, and/or similar levels of uncertainty about the truth. Modeling science has developed a variety of methods for objectively combining information gained from multiple models with different uncertainty and divergent sources of data to produce combined and more robust model-based evidence (e.g., value of information analysis, ensemble modeling) (Li et al. 2017; Oidtman et al. 2021). Post hoc modeling needs to be about testing the value and effectiveness of a decision and evaluating the potential impacts of alternative decisions, for example,

‘I think as a scientist in a policy making space, it’s incumbent upon you to point out what the evidence says, what the range of evidence is. If a policymaker chooses to cherry pick the bit that suits them, then there is a limited amount you can do about that. But I think that you need to be presenting them, if you’re talking about modeling, presenting the model outputs as they stand and encouraging policymakers to consider asking the right questions and then seeing the answers’ (Science producer and advisor)

Because political pressure can be high to not ‘rock the boat’ (i.e., change the business rules), and humans have a natural tendency to trust evidence that best matches their understanding (confirmation bias), it is especially important to develop standards for appropriate use of models in post hoc decision-making.

Tension in the scientist–decisionmaker relationship

There is tension between professionals working where the mission is more research-focused versus public policy or government-based operational programs. Considering the frustrations expressed by “both sides”, collaboration could be improved (Power, 2018). Key frustrations from research-focused professionals include difficulty keeping up with which scientific questions are most helpful to pursue. Reasons included not understanding the government context, little transparency on who to contact for different issues (leading to relationship-building with someone at the wrong level of government for use of the science in decision-making), and not having the capacity to develop and maintain relationships among all the other responsibilities of a research scientist, for example,

‘Mid-career folks are just dropping out at the moment. Going to do other stuff because of the workload at universities. For academic scientists time is a big challenge for them which is a challenge for relationship building. So the kind of thing where I took this really strategic approach [to relationship building], but also using those informal connections, I think for a lot of academics, they just don’t have time to do that.’ (Science producer—modeler)

Participants also said there is high turnover in government positions, which leads to researchers investing less time in building relationships because when collaborators move positions, the knowledge and relationships are lost, for example,

‘One of the biggest challenges in New Zealand is most government agencies’ staff change their jobs like they change their underwear. As a result, they move around way too quickly and they never actually get anything finished. And you’re always starting again. Not just relationships, but knowledge.’ (Science producer—modeler)

Additionally, participants said government professionals can be unhelpful in connecting researchers in a meaningful way, for example,

‘We’ve got data, we’ve done all the work for you, we want to engage. We’re reaching out to them and really not getting any kind of feedback or any response from people there.’ (Science producer)

One participant said the solution to improving relationships involves actively discussing and planning for

‘…how to go about building an ecosystem where there’s a richer, more resilient network of relationships between researchers and policy makers so that policy makers can quickly take soundings from the research community in a very informal way and build those relationships that enable deeper pieces, whether the scientists are really coming together with a more proactive, long-term evidence base for more strategic work.’ (Science advisor)

Participants expressed that policy-making processes take a very long time with numerous reviews that involve input from a variety of stakeholders. An original policy proposal that was well justified by scientific evidence can be refined repeatedly by different people so the rationale is changed or lost altogether. It can be difficult to bring outside modelers in later in the process, making it important to have involvement from the beginning and a clear mechanism for incorporating new evidence. Decision-makers said that researchers often understand so little about policy processes that the gap between the two groups is difficult to bridge. Potential solutions described by participants included increasing opportunities for scientist secondments into government contexts (Gluckman et al. 2021), such as AAAS Science & Technology Policy Fellowships in the USA for early career researchers or science advisor roles for those more senior.

Modeler (or scientist) behavior can inhibit collaboration. For example:

‘It doesn’t really help the situation—saying I told you so when it does actually happen. That’s the time when we roll up our sleeves and see, how can we minimize the damage that is associated with this?’ (Science advisor)

Thus, the attitude of being patronizing or unsupportive when an adverse event occurs is counterproductive to engagement and shows a lack of sensitivity or awareness of the position of other professionals. Another comment was that scientists should:

‘park the passive aggression, and listen to hear rather than listen to respond’ (Science advisor),

meaning that the model-based evidence contributes only in part to the solutions and that modelers must be constructive and respect the contributions from others. For example,

‘Technical experts know what they know, but they very rarely know the context in which that information needs to feed into. They tend to be passive aggressive. It’s a common trait within scientists. And so when their evidence isn’t listened to, they tend to be highly critical of the people that didn’t listen to their evidence, even though their evidence was listened to. It just was other decisions, other pieces of information fed into the decision making process.’ (Science advisor)

The contribution from any one modeler will be considered in a team environment that may or may not have the capacity for direct communication between the modeler and the decision-maker. Counterproductive behaviors can be minimized with more transparent communication between modelers and decision-makers about the constraints experienced by each group and opportunities for engagement.

Relatedly, it’s important for researchers to come to the table with the attitude that

‘modeling is only one piece in the puzzle’ (Policy advisor and decision-maker)

For example, statements such as

‘The fact that science only informs policy, it will never set policy. It can’t set policy. When you think about it, we have the Treaty of Waitangi, a legal document that must be overlayed over the top of everything that we decide within the country. So that’s got to get an equal weighting to science in a lot of those discussions.’ (Science advisor)

And

‘Policy making is not a pure process. It’s messy, and a values process. It’s a judgment process ultimately, and evidence and data inputs and modeling are one group of inputs into it. But there’s a whole lot of other inputs and lenses that are brought to bear. Of course, governments, for the most part, set out to make the best decisions they can for their populations. We had the good fortune of being able to use some modeling to help shape and inform decisions and policy options.’ (Policy advisor and decision-maker),

meaning that the model results contribute only in part to the solutions. For this reason, some decision-makers are replacing the term ‘science-based decisions’ with ‘science-informed decisions’ (Gluckman et al. 2021).

Finally, similar to research-focused professionals, decision-makers are usually overburdened with little flexible time and have very tight timelines for decision-making. Participants said that these constraints generally do not align well with, for example, the participation of graduate students. Limitations for decision-makers include that most graduate students cannot solve problems fast enough nor can they communicate the results effectively. Involving graduate students in solutions for decision-makers can lead to needs not being met and a lack of willingness for a decision-maker to want to involve outside scientists in the future. Yet, the involvement of graduate students is part of the business structure for research organizations and trains the next generation of scientists to be more policy-aware when defining scientific directions.

What practitioners recommend for effective generation and use of evidence from models

Consistent with previous work, several participants said one important feature for successful model-to-decision workflows is to have a knowledge broker (Gluckman et al. 2021; Kiem et al. 2014). For example,

‘You need those people who can really walk across the bridge there and turn the outputs into understandable intelligence that paints a picture rather than a series of numbers or figures or whatever. It’s communicating here’s what this overall picture means. That intelligence.’ (Policy advisor and decision-maker)

The knowledge broker is often a science advisor but may be a subject-matter expert who serves as a trusted advisor or navigator to the decision-maker (e.g., health practitioner or health/environment policy expert in our system, Table 1). A good knowledge broker for model-to-decision workflows will be experienced in science communication to decision-makers, be able to quickly convert complex evidence into intelligence, have a strong grasp on quantitative analysis techniques, understand the decision-maker’s context, be objective about consideration of relevant intelligence, and have a trusted relationship with the decision-maker. An example comment describing beneficial traits for knowledge brokers:

‘…bring it back to the honest broker and go remembering that minister has five minutes to listen to you. Five minutes to gather as much as they can from this topic, and then make an important decision. You need to have gone through all of that literature, deduced it all down into one pager and go, if I was in your position on the basis of what I’ve read, I would do this. Now, that’s not an advocate, that’s a person that has actually just developed this balanced understanding of the literature.’ (Science advisor)

Being explicit about the roles and responsibilities of team members is important for minimizing miscommunication or unwanted communication. In decision-making for public policy, the role of science producers is to explain to the decision-maker, not the public. The role of a science advisor is to recommend decisions to the decision-maker based on a broad set of intelligence (Table 1). This can be confusing because, for example, NZ’s Education Act encourages academic freedom and a role for academics as critics and conscience of society, making it important to discuss roles when academic scientists engage with decision-makers as science producers because roles are context-dependent.

Decision-making is a values-based process (von Winterfeldt, 2013). Because decision-makers use different criteria for gaining trust in intelligence, it’s important to understand the value system used to gain confidence about information quality. This can be done when modelers work with knowledge brokers and/or decision-makers to define the problem. When identifying the decision-maker’s objectives, it is important to understand what the decision-maker’s constraints are (e.g., timelines for answers, budget, infrastructure, time they or their knowledge broker have to participate) and ensure decision-makers understand what the scientist’s constraints are (data quality needs, resources, feasible timelines, the type of answers that can be generated). During NZ’s early COVID-19 experience, this exchange was facilitated through a key knowledge broker who set up regular communication among modelers and policy developers in an incident command type of structure. All participants we interviewed who were part of this workflow commented on how effective this approach was in developing models and appropriate timelines for policy decisions. These conversations are important for determining the level of investment needed for modeling and appropriate modeling techniques. An ability to clearly understand the problem the decision-maker is trying to solve is a critical skill for building trust and adding value. When done effectively, the modeler and knowledge broker can assist and empower the decision-maker to refine questions in the most effective way for their problem. This approach is synergistic with how participants described their vision for collaboration with Māori (NZ’s Indigenous population) to address structural disparities (Box 3).

Making a communication plan to establish the preferred frequency and method of communication can help avoid inefficient delivery of products, which causes frustration. Establishing early on who the science producer should work with to access data and get input for model development is important for efficiency.

It is good practice to make sure the decision-maker understands what value modeling can and cannot bring to the problem. This helps to manage expectations and regret in the investment, which can have legacy effects for future investment. For emerging fields, it is important to articulate to decision-makers the long-term benefits of investment in terms of capacity building and readiness. Relatedly, it is useful to understand concerns the decision-maker might have about the modeling and identify a workflow that minimizes those risks. After the model has been developed and used, demonstrating the return on investment is important for continued building of trust in the use of models for future decision-making.

Discussion

Application beyond New Zealand

We focused our inquiry on NZ because NZ has a reputation for effective consideration of model-based evidence in policy decisions (McCaw and Plank, 2022; Bremer and Glavovic, 2013; Cowled et al. 2022; Mazey and Richardson, 2020; Rouse and Norton, 2017). However, many NZ professionals (66% of participants) trained in or originated from other countries, providing insight beyond NZ. At the same time, NZ has some unique features, such as its remote location and small government, suggesting some results from our study may not transfer readily to other contexts. Although several participants cited increasing scientific misinformation as a challenge for science-informed decision-making, these challenges may be lower than in other contexts (Scheufele and Krause, 2019). For example, one participant said:

‘If you look at public trust and what’s happened to it over the last 20 years, and if you look actually globally, the groups that are the most trusted are scientists. I think that 70% of people trust science and scientists, in New Zealand it’s slightly higher than that, as opposed to the media, which is right down there on the other end. And we’ve got low levels of trust in the media. So I think there’s a real opportunity actually. Most people have quite a high degree of trust in science.’ (Policy advisor and decision-maker)

Thus decision-makers in NZ might be more likely to use accurate science and scientific methodologies or have more trust in science as a starting point when faced with the potential for predictive models to help their decision. One participant did say that they believed scientific misinformation tendencies in media in the US are influencing the NZ public, suggesting this difference from other contexts might be changing. For example, when discussing science supporting animal disease control:

‘I feel a bit discouraged at the moment. I feel like the things that I and my colleagues have worked on for years carry less weight than they used to, and that’s worrying for the future problems that are going to arise.’ (Science producer),

And referring to misinformation about science in the public relative to COVID-19 control policy:

‘a lot of it honestly is coming in from American social media. And so you see the exact same arguments that you are seeing in Tennessee replaying here’ (Science producer).

Also, most participants mentioned that NZ’s small size provides a unique advantage for rapid cross-agency and science-community/agency coordination at a national scale. Several participants mentioned that finding the right scientist or agency professional is simplified in NZ by its relatively small population and government size. This potential advantage was described as:

‘In NZ, because it’s small and everybody knows each other, it is slightly easier than in other places and especially in emergencies. So, one thing NZ does well is scramble’. (Science and policy advisor)

Speaking about NZ’s response to COVID-19:

‘It wasn’t perfect but talking to my counterparts from around the world, they really struggled to scramble like we scrambled because there were much more formal mechanisms and fewer relationships to draw on. So I think if we could somehow use the mechanism we used for COVID and apply it when there’s no emergency on, we’d find some sweet spot.’ (Science and policy advisor).

In countries with larger or decentralized governments, building relationships for effective model-to-decision workflows requires more strategic planning and dedicated resources.

Study limitations

Semi-structured interviews allow for some in-depth exploration but can be limited by interviewer influence and variability in data quality, as the flexibility of this approach can lead to inconsistency in the focal topics, which makes the data challenging to analyze. These interviews also require time and skill to perform, making them resource-intensive and difficult to replicate, as each interview may differ depending on the interviewer’s techniques and approach. We addressed some of these concerns by using a single interviewer and analyst. Here, the interview guide was only practiced on US participants, yet the study was undertaken in NZ, so the guide could have missed factors specific to the NZ context. However, the guide was developed in collaboration with NZ-based researchers (first and last author), mitigating some of this. We acknowledge further limitations in our reflexivity statement.

Snowball sampling is useful for reaching specific or hard-to-find populations but has notable limitations, including sampling bias and lack of generalizability, as participants tend to recruit others within their social networks. This method risks overrepresenting certain perspectives and may raise privacy concerns, as participants are asked to share contacts who may feel pressured to join the study.

Conclusions

Priorities for improving the development and use of predictive models for informing decisions

After reflecting on results from participants, our own experience, and scientific literature, we suggest the following priorities for improving the effectiveness of the model-to-decision workflows:

- Address differing value systems: Decision-makers differ in how they value or trust different sources of information, including Indigenous knowledge/people, which can vary with each specific issue and across One Health sectors. Thus, it’s important to establish how scientific information from models is valued relative to other sources of information, and what criteria need to be met beyond scientific rigor for information to be trusted. This will help deliver results in a format that is useful to decision-makers.

- Effective communication to decision-makers: Consistent with the field of science communication (Fischoff, 2013), communication about models needs to meet people where they are. This means modelers need to understand what decision-makers know and do not know and have the flexibility to adapt their communication style (and visual assets) accordingly. Iterative improvements and designs underpin effective communication. Decision-makers are ‘time poor’, needing information and communication that is triaged, prioritized, and presented in a way that enables rapid uptake. Thus, communication about models must be clear and concise so that time-limited decision-makers can rapidly interpret outputs and limitations, but it must be delivered in a way that does not demean the knowledge level of the decision-maker.

- Effective communication to the public: Modelers who lack expert training in environmental, human, or animal health, or who are not acting as spokespersons for relevant agencies, need to be cautious in providing information and advice directly to the public. There is potential to add to misinformation and damage trust in science-informed advice through inconsistent messaging and lack of awareness about contextual challenges beyond science. It is important to work with other disciplines, particularly colleagues with medical, public health, veterinary, environmental, and cultural expertise, as appropriate, to shape consistent messages about current and future risk levels, and risk management approaches for prevention and control measures.

- Expand relationship- and network-building opportunities between modelers and knowledge brokers or decision-makers: Effective model-to-decision workflows require trusted relationships that can be accessed readily. Dedicated capacity building of broad scientific networks of modeling expertise that have trusted relationships with decision-makers is needed for cutting-edge modeling tools to be used more readily in high-impact policy decisions. Key gaps include opportunities for communication for relationship building and cross-education, and mechanisms that both expand the breadth of trusted modeling-science networks and allow next-generation modelers to be trained and incorporated.

- Ensure multiple modeling groups operate for quality assurance: Trust in modeling work will be enhanced by supporting the establishment of more than one modeling group to look at similar questions (Shea et al. 2023), allowing for multi-model comparisons (den Boon et al. 2019). Achieving this goal depends on resourcing workforce development and effective networks.

- Increase transparency about institutional and decision-making contexts: Decision-makers may have constraints that modelers are unaware of and that decision-makers do not think to communicate. Both parties need to identify these constraints for consideration in models, or even for starting the collaboration early enough that a model could be more useful. The difference in context often comes with very different jargon that complicates understanding each other’s needs and roles. The knowledge broker role is key for bridging this communication gap. It would be helpful to increase opportunities for temporary co-appointments of university-based scientists into government roles or for knowledge brokers and decision-makers to run lectures or workshops at research institutes to better expose researchers to the operational environment where science and models are used.

- Create more model-educated knowledge broker positions: Decision-makers frequently do not understand the state of the science in an area they are making decisions about, and model-based evidence can be complicated. There is a lack of knowledge brokers in government positions in One Health sectors who understand the scope of possibilities from different data sources, and what is and is not rigorous modeling science. This gap and the increase in science misinformation make it challenging for decision-makers to identify rigorous modeling intelligence. Increasing the number of professionals in government with strong quantitative backgrounds who could serve as knowledge brokers is essential for unlocking the full potential for modeling intelligence to be used in decision-making. It is naive to think these positions (and expertise) will occur organically; rather, such roles need to be identified, supported, and valued.

- Establish standards for development and use of models in different contexts (e.g., as a priori versus post hoc decision-informing tools, or for real-time response versus preparedness phases of emergency management): It can be challenging to use modeling results for decision-making partly because modelers use a variety of different modeling workflows (Silberzahn et al. 2018) and decision-makers use a variety of different decision-making processes and value systems. In general, establishing standards for how to use modeling results in decisions (see Committee on Models in the Regulatory Decision Process, 2007; Modeling, 2009; Treasury, 2015 for progress) will improve the uptake and efficiency of decision-support models. Post hoc modeling for decision evaluation versus a priori decision-informing modeling, and real-time response guidance versus pre-emptive preparedness modeling, require different methodological standards to maintain the integrity of the scientific process.

Summary

In general, our work suggests that deliberate capacity building for quantitative skills in knowledge broker positions in public service is necessary for realizing the full potential and maintaining the integrity of model-to-decision workflows. Personnel in these positions will be well-positioned to lead other priorities such as establishing robust standards for development and use of model-based evidence in decisions in One Health sectors. These actions will continue to build trust in model-based evidence, thus increasing its value in One Health applications.

Data availability

Summarized data are available upon request. We cannot share raw transcript material due to our agreement with participants.

References

Adeoye-Olatunde OA, Olenik NL (2021) Research and scholarly methods: semi-structured interviews. J Am Coll Clin Pharm 4:1358–1367

Adisasmito WB et al. (2022) One Health: a new definition for a sustainable and healthy future. PLoS Pathog 18:e1010537. https://doi.org/10.1371/journal.ppat.1010537

Anderson DP et al. (2013) A novel approach to assess the probability of disease eradication from a wild-animal reservoir host. Epidemiol Infect 141:1509–1521. https://doi.org/10.1017/S095026881200310x

Anderson DP, Gormley AM, Bosson M, Livingstone PG, Nugent G (2017) Livestock as sentinels for an infectious disease in a sympatric or adjacent-living wildlife reservoir host. Prev Vet Med 148:106–114. https://doi.org/10.1016/j.prevetmed.2017.10.015

Baker MG, Kvalsvig A, Verrall AJ, Telfar-Barnard L, Wilson N (2020a) New Zealand’s elimination strategy for the COVID-19 pandemic and what is required to make it work. N Z Med J 133:10–14

Baker MG, Wilson N, Blakely T (2020b) Elimination could be the optimal response strategy for covid-19 and other emerging pandemic diseases. Br Med J 371:m4907. https://doi.org/10.1136/bmj.m4907

Barron MC, Tompkins DM, Ramsey DS, Bosson MA (2015) The role of multiple wildlife hosts in the persistence and spread of bovine tuberculosis in New Zealand. N Z Vet J 63(Suppl 1):68–76. https://doi.org/10.1080/00480169.2014.968229

Bedson J et al. (2021) A review and agenda for integrated disease models including social and behavioural factors. Nat Hum Behav 5:834–846. https://doi.org/10.1038/s41562-021-01136-2

Berger L et al. (2021) Rational policymaking during a pandemic. Proc Natl Acad Sci USA 118:e2012704118. https://doi.org/10.1073/pnas.2012704118

Binny RN et al. (2021) Early intervention is the key to success in COVID-19 control. R Soc Open Sci 8. https://doi.org/10.1098/rsos.210488

Bremer S, Glavovic B (2013) Exploring the science–policy interface for Integrated Coastal Management in New Zealand. Ocean Coast Manag 84:107–118. https://doi.org/10.1016/j.ocecoaman.2013.08.008

Came HA, McCreanor T, Doole C, Simpson T (2017) Realising the rhetoric: refreshing public health providers’ efforts to honour Te Tiriti o Waitangi in New Zealand. Ethn Health 22:105–118. https://doi.org/10.1080/13557858.2016.1196651

Came H, O’Sullivan D, Kidd J, McCreanor T (2023) Critical Tiriti analysis: a prospective policy making tool from Aotearoa New Zealand. Ethnicities. https://doi.org/10.1177/14687968231171651

CDC (Centers for Disease Control and Prevention) (2024) Avian influenza (bird flu). https://www.cdc.gov/bird-flu/situation-summary/index.html. Accessed: Nov. 15th, 2024

Chen Z, Lemey P, Yu H (2023) Approaches and challenges to inferring the geographical source of infectious disease outbreaks using genomic data. Lancet Microbe. https://doi.org/10.1016/S2666-5247(23)00296-3

Committee on Models in the Regulatory Decision Process (2007) Models in environmental regulatory decision making. The National Academies Press

Cowled BD et al. (2022) Use of scenario tree modelling to plan freedom from infection surveillance: Mycoplasma bovis in New Zealand. Prev Vet Med 198:105523. https://doi.org/10.1016/j.prevetmed.2021.105523

den Boon S et al. (2019) Guidelines for multi-model comparisons of the impact of infectious disease interventions. BMC Med 17:163. https://doi.org/10.1186/s12916-019-1403-9

Douglas J et al. (2021a) Real-time genomics to track COVID-19 post-elimination border incursions in Aotearoa New Zealand. Emerg Infect Dis 27:2361–2368

Douglas J et al. (2021b) Phylodynamics reveals the role of human travel and contact tracing in controlling the first wave of COVID-19 in four island nations. Virus Evol 7:veab052. https://doi.org/10.1093/ve/veab052

Elderd BD, Dukic VM, Dwyer G (2006) Uncertainty in predictions of disease spread and public health responses to bioterrorism and emerging diseases. Proc Natl Acad Sci USA 103:15693–15697. https://doi.org/10.1073/pnas.0600816103

Fischhoff B (2013) The sciences of science communication. Proc Natl Acad Sci USA 110:14033–14039

Gluckman PD, Bardsley A, Kaiser M (2021) Brokerage at the science–policy interface: from conceptual framework to practical guidance. Humanit Soc Sci Commun 8:84. https://doi.org/10.1057/s41599-021-00756-3

Gormley AM, Holland EP, Barron MC, Anderson DP, Nugent G (2016) A modelling framework for predicting the optimal balance between control and surveillance effort in the local eradication of tuberculosis in New Zealand wildlife. Prev Vet Med 125:10–18. https://doi.org/10.1016/j.prevetmed.2016.01.007

Greenhalgh S, Müller K, Thomas S, Campbell ML, Harter T (2022) Raising the voice of science in complex socio-political contexts: an assessment of contested water decisions. J Environ Policy Plan 24:242–260. https://doi.org/10.1080/1523908x.2021.2007762

Grigoropoulou N, Small ML (2022) The data revolution in social science needs qualitative research. Nat Hum Behav 6:904–906. https://doi.org/10.1038/s41562-022-01333-7

Haar J, Martin WJ (2022) He aronga takirua: cultural double-shift of Māori scientists. Hum Relat 75:1001–1027. https://doi.org/10.1177/00187267211003955

Harrison S et al. (2020) One Health Aotearoa: a transdisciplinary initiative to improve human, animal and environmental health in New Zealand. One Health Outlook 2:4. https://doi.org/10.1186/s42522-020-0011-0

Hemming V et al. (2022) An introduction to decision science for conservation. Conserv Biol 36:e13868. https://doi.org/10.1111/cobi.13868

Hendy S et al. (2021) Mathematical modelling to inform New Zealand’s COVID-19 response. J R Soc N Z 51:S86–S106. https://doi.org/10.1080/03036758.2021.1876111

Hikuroa D (2017) Matauranga Maori—the ukaipo of knowledge in New Zealand. J R Soc N Z 47:5–10. https://doi.org/10.1080/03036758.2016.1252407

Hsieh HF, Shannon SE (2005) Three approaches to qualitative content analysis. Qual Health Res 15:1277–1288. https://doi.org/10.1177/1049732305276687

James A, Hendy SC, Plank MJ, Steyn N (2020) Suppression and mitigation strategies for control of COVID-19 in New Zealand. https://www.medrxiv.org/content/10.1101/2020.03.26.20044677

Jelley L et al. (2022) Genomic epidemiology of Delta SARS-CoV-2 during transition from elimination to suppression in Aotearoa New Zealand. Nat Commun 13:4035. https://doi.org/10.1038/s41467-022-31784-5

Johnson-Laird PN (2010) Mental models and human reasoning. Proc Natl Acad Sci USA 107:18243–18250. https://doi.org/10.1073/pnas.1012933107

Keshavamurthy R, Dixon S, Pazdernik KT, Charles LE (2022) Predicting infectious disease for biopreparedness and response: a systematic review of machine learning and deep learning approaches. One Health 15:100439. https://doi.org/10.1016/j.onehlt.2022.100439

Kiem AS, Verdon-Kidd DC, Austin EK (2014) Bridging the gap between end user needs and science capability: decision making under uncertainty. Clim Res 61:57–74. https://doi.org/10.3354/cr01243

Kitching RP, Thrusfield M, Taylor NM (2006) Use and abuse of mathematical models: an illustration from the 2001 foot and mouth disease epidemic in the United Kingdom. Rev Sci Tech OIE 25:293–311. https://doi.org/10.20506/rst.25.1.1665

Li SL et al. (2017) Essential information: uncertainty and optimal control of Ebola outbreaks. Proc Natl Acad Sci USA 114:5659–5664. https://doi.org/10.1073/pnas.1617482114

Livingstone PG, Hancox N, Nugent G, de Lisle GW (2015) Toward eradication: the effect of infection in wildlife on the evolution and future direction of bovine tuberculosis management in New Zealand. N Z Vet J 63:4–18. https://doi.org/10.1080/00480169.2014.971082

Lloyd-Smith JO et al. (2009) Epidemic dynamics at the human–animal interface. Science 326:1362–1367

Mansley LM, Donaldson AI, Thrusfield MV, Honhold N (2011) Destructive tension: mathematics versus experience—the progress and control of the 2001 foot and mouth disease epidemic in Great Britain. Rev Sci Tech OIE 30:483–498

Mazey S, Richardson J (2020) Lesson-drawing from New Zealand and Covid-19: the need for anticipatory policy making. Polit Q 91:561–570. https://doi.org/10.1111/1467-923x.12893

McAllister TG (2022) 50 reasons why there are no Māori in your science department. J Glob Indig 6:1–10

McAllister TG, Naepi S, Wilson E, Hikuroa D, Walker LA (2022) Under-represented and overlooked: Māori and Pasifika scientists in Aotearoa New Zealand’s universities and crown-research institutes. J R Soc N Z 52:38–53. https://doi.org/10.1080/03036758.2020.1796103

McCaw JM, Plank MJ (2022) The role of the mathematical sciences in supporting the Covid-19 response in Australia and New Zealand. ANZIAM J 64:315–337. https://doi.org/10.1017/S1446181123000123

Ministry for Primary Industries, Manatū Ahu Matua (2023) Mycoplasma bovis disease eradication programme. https://www.mpi.govt.nz/biosecurity/mycoplasma-bovis. Accessed: Dec. 11th, 2023

Council for Regulatory Environmental Modeling (2009) Guidance on the development, evaluation, and application of environmental models. U.S. Environmental Protection Agency

Nickerson RS (1998) Confirmation bias: a ubiquitous phenomenon in many guises. Rev Gen Psychol 2:175–220

Nugent G, Buddle BM, Knowles G (2015) Epidemiology and control of Mycobacterium bovis infection in brushtail possums (Trichosurus vulpecula), the primary wildlife host of bovine tuberculosis in New Zealand. N Z Vet J 63(Suppl 1):28–41. https://doi.org/10.1080/00480169.2014.963791

Nugent G, Gormley AM, Anderson DP, Crews K (2018) Roll-back eradication of bovine tuberculosis (TB) from wildlife in New Zealand: concepts, evolving approaches, and progress. Front Vet Sci 5:277. https://doi.org/10.3389/fvets.2018.00277

Office of the Prime Minister's Chief Science Advisors (2024) Chief Science Advisor Forum. https://www.pmcsa.ac.nz/who-we-are/chief-science-advisor-forum/. Accessed Jan. 12th, 2024

Oidtman RJ et al. (2021) Trade-offs between individual and ensemble forecasts of an emerging infectious disease. Nat Commun 12:5379. https://doi.org/10.1038/s41467-021-25695-0

Parker C, Scott S, Geddes A (2019) Snowball sampling. SAGE Publications Ltd

Pepin KM et al. (2022) Optimizing management of invasions in an uncertain world using dynamic spatial models. Ecol Appl 32:e2628. https://doi.org/10.1002/eap.2628

Pepin KM, Kay SL, Davis AJ (2017) Comment on: ‘Blood does not buy goodwill: allowing culling increases poaching of a large carnivore’. Proc R Soc B 284:20161459

Power N (2018) Extreme Teams: towards a greater understanding of multi-agency teamwork during major emergencies and disasters. Am Psychol 73:478–490

Ramsey DSL, Efford MG (2010) Management of bovine tuberculosis in brushtail possums in New Zealand: predictions from a spatially explicit, individual-based model. J Appl Ecol 47:911–919. https://doi.org/10.1111/j.1365-2664.2010.01839.x

Rouse HL, Norton N (2017) Challenges for freshwater science in policy development: reflections from the science-policy interface in New Zealand. N Z J Mar Fresh 51:7–20. https://doi.org/10.1080/00288330.2016.1264431

Scheufele DA, Krause NM (2019) Science audiences, misinformation, and fake news. Proc Natl Acad Sci USA 116:7662–7669. https://doi.org/10.1073/pnas.1805871115

Shea K et al. (2023) Multiple models for outbreak decision support in the face of uncertainty. Proc Natl Acad Sci USA 120:e2207537120. https://doi.org/10.1073/pnas.2207537120

Silberzahn R et al. (2018) Many analysts, one data set: making transparent how variations in analytic choices affect results. Adv Methods Pract Psychol Sci 1:337–356. https://doi.org/10.1177/2515245917747646

Snow CP (2012) The two cultures. Cambridge University Press, 179pp

Stats NZ (2021) COVID-19 lessons learnt: recommendations for improving the resilience of New Zealand’s government data system. Stats NZ Tatauranga Aotearoa, Wellington, New Zealand. https://data.govt.nz/assets/Uploads/Covid-19-lessons-learnt-full-report-Mar-2021.pdf

Stien A (2017) Blood may buy goodwill: no evidence for a positive relationship between legal culling and poaching in Wisconsin. Proc R Soc B 284:20170267

Tongco DC (2007) Purposive sampling as a tool for informant selection. Ethnobot Res Appl 5:147–158

HM Treasury (2015) The Aqua Book: guidance on producing quality analysis for government. The National Archives

von Winterfeldt D (2013) Bridging the gap between science and decision making. Proc Natl Acad Sci USA 110:14055–14061. https://doi.org/10.1073/pnas.1213532110

WHO (World Health Organization) (2024) Antimicrobial resistance. https://www.who.int/news-room/factsheets/detail/antimicrobial-resistance. Accessed: Nov. 15th, 2024

Yin RK (2018) Case study research and applications: design and methods. Sage Publications, Inc

Acknowledgements

Thanks to William Doutt, David Reinhold, Steven Kendrot, and Anthony Pita (Ngāpuhi/Ngātiwai) for their helpful comments and advice, and to all interviewed participants in NZ. DTSH is funded by a Percival Carmine Chair in Epidemiology and Public Health, and KMP acknowledges the United States Department of Agriculture, Animal and Plant Health Inspection Service (APHIS), Wildlife Services, National Wildlife Research Center sabbatical program and the APHIS National Feral Swine Damage Management Program for funding.

Author information

Contributions

KMP designed the study, conducted the interviews, analyzed the data, and wrote the first draft of the manuscript; KC provided guidance on study design and analysis using qualitative methods; RBC and DC helped develop the question guide and edited the manuscript; all other authors contributed to data collection and editing the manuscript draft.

Ethics declarations

Competing interests

The authors declare no competing interests. None of the authors are on the editorial board of Humanities and Social Sciences Communications.

Ethical approval

Our study protocol was submitted to the Sterling Institutional Review Board (number: 10792) and determined to be exempt on March 6, 2023, due to its low risk of negative impacts on participants, based on the terms of the US Department of Health and Human Services’ (DHHS) Policy for Protection of Human Research Subjects at 45 C.F.R. §46.104(d), following a Category 2 exemption.

Informed consent