Abstract

This study explores artificial intelligence (AI) algorithm transparency as a means to mitigate negative attitudes and to enhance trust in AI systems and the companies that use them. Given the growing importance of generative AI such as ChatGPT in stakeholder communications, our research aims to understand how transparency can influence trust dynamics. In particular, we propose a shift from a reputation-focused prism model to a knowledge-centric pipeline model of AI trust, emphasizing transparency as a strategic tool to reduce uncertainty and enhance knowledge. To investigate this, we conducted an online experiment using a 2 (AI algorithm transparency: High vs. Low) by 2 (Issue involvement: High vs. Low) between-subjects design. The results indicated that AI algorithm transparency significantly mitigated the negative relationship between a general negative attitude toward AI and trust in the parent company, particularly when issue involvement was high. This suggests that transparency, as both a technical feature and a communicative strategy, serves as an essential signal of trustworthiness and is capable of reducing skepticism even among those predisposed to distrust AI. Our findings extend prior literature by demonstrating that transparency not only fosters understanding but also acts as a signaling mechanism for organizational accountability. This has practical implications for organizations integrating AI, offering a viable strategy to cultivate trust. By highlighting transparency’s role in trust-building, this research underscores its potential to enhance stakeholder confidence in AI systems and support ethical AI integration across diverse contexts.

Introduction

The integration of generative artificial intelligence (AI) with chatbots such as ChatGPT is becoming increasingly prevalent across various sectors. AI chatbot adoption is largely fueled by the benefits chatbots offer in terms of efficiency, precision, and the ability to provide immediate responses (Deloitte Insights, 2021). Yet, the rapid progress of technology has historically been a source of both anxiety and promise (Dwivedi et al. 2021; Sturken et al. 2004). A recent survey in the US showed that fewer than half of adults (41%) supported the development of AI technology and 22% opposed it (Zhang and Dafoe, 2019). This ambivalence is particularly pronounced with AI, where the potential for disruption is both vast and uncertain. These apprehensions are not without merit, as the opacity of AI decision-making might result in unanticipated and perhaps adverse consequences.

Recent studies have pointed out how algorithmic systems may reinforce biases or even spur radicalization (Shin and Jitkajornwanich, 2024; Shin and Zhou, 2024), which highlights the critical importance of transparency in mitigating these unintended consequences. This study investigates how transparency in AI algorithms can play a significant role in diminishing negative perceptions and bolstering trust towards AI systems. The general negative attitude toward AI arises from the unpredictable and uncertain outcomes of AI, leading to fear of the unknown (Johnson and Verdicchio, 2017; Li and Huang, 2020; Youn and Jin, 2021). To mitigate this anxiety and promote the use of AI systems, companies and governments have explored mechanisms for building trust. For example, the European Union’s AI Act has emphasized the need for trustworthy AI to manage inherent risks associated with these systems (European Union, 2024; Laux et al. 2024). However, there is a noticeable gap in academic efforts to suggest practical strategies for building trust in AI, particularly strategies that consider the general public’s varying attitudes toward AI.

Trust-building serves as a fundamental mechanism for reducing uncertainty in human relations (Sorrentino et al. 1995). In interpersonal relations, trustworthiness is traditionally assessed through direct interactions between two parties or endorsements from mutual acquaintances. Podolny (2001) conceptualizes these trust dynamics as “pipes” and “prisms” of connected networks. The “pipes” paradigm emphasizes direct, dyadic interactions as channels through which trust is built incrementally, such as through repeated exchanges or shared experiences (Hsiao et al. 2010). This process relies on observable behaviors and consistency over time, where trust functions as a conduit between individuals or organizations (Rockmann and Bartel, 2024). In contrast, the “prisms” paradigm highlights how trust is mediated by third-party signals and reputational cues, reflecting an actor’s perceived reliability through their network position or institutional endorsements (Podolny, 2001; Rockmann and Bartel, 2024; Utz et al. 2012; Uzzi, 1996, 2018). Here, trust is not merely transmitted but refracted through social structures, enabling assessments even in the absence of direct interaction.

Applying this framework to AI systems reveals distinct challenges. While human interactions naturally foster trust through pipelines—ongoing communication, transparency, and reliability—AI lacks the embodied reciprocity that underpins such processes. Conversely, the prism effect, where trust is inferred from institutional reputation (e.g., a tech company’s brand), can compensate for this gap. Studies suggest that users often rely on the credibility of AI developers as a proxy for trustworthiness (Youn and Jin, 2021). Once the priming prism effect is established, it can be facilitated through human-AI interaction, creating a cascading impact on trust. However, this mechanism is contingent on recognizable affiliations; for startups or lesser-known entities, the absence of strong reputational signals may exacerbate public skepticism. If the corporation is not a leading participant in the market for AI systems, a pervasive pessimistic outlook toward AI could deter consumers from initially adopting the AI systems. In this case, developing pipelines of AI trust is required to enhance public trust in AI systems and mitigate unfavorable perceptions, but this approach has been underexplored.

This study proposes a move from a reputation-focused prism model to a knowledge-centric pipeline model of AI trust, emphasizing the role of AI algorithm transparency as a trust-building strategy. Reducing uncertainty is essential for establishing trust in AI systems. Previous literature indicates that transparency in AI systems can reduce uncertainty and enhance trust in AI systems (Liu, 2021) and serve as a direct pipeline effect that fosters knowledge and understanding. We expand the existing body of literature by showing that AI algorithm transparency signaling can enhance trust in AI systems even when individuals do not completely comprehend the algorithm or logical operation of the AI system. In such cases, AI transparency can still have a signaling effect, one that also extends to the organization’s accountability for the AI system.

Taken together, building on the elaboration likelihood model (ELM), we propose an empirical study to investigate how transparency of AI algorithms can lessen pervasive negative attitudes toward AI, especially for highly involved issue communication assisted by AI. We expect this study to provide a viable strategy for cultivating trust in AI systems and their parent organizations. Therefore, the results have practical implications for organizations on successful AI integration. Moreover, given the rise of misinformation and generative AI content (Shin et al. 2024; Shin et al. 2024), this study also addresses societal concerns by promoting an inclusive approach to AI adoption that avoids technological disparity.

Literature review

Effects of general negative attitudes toward AI and trust in AI

A general negative attitude toward AI can be defined as concerns and reservations that individuals have about the development and integration of AI in society (Schepman and Rodway, 2020; 2023). These concerns often stem from the potential negative consequences of AI on human life. For instance, individuals may fear that AI will lead to fewer job opportunities, negatively impact interpersonal relationships, and contribute to economic crises (Persson et al. 2021; Schepman and Rodway, 2020). Expected ethical issues also contribute to the negative attitude toward AI. Murtarelli et al. (2023) identified three key ethical challenges of AI-assisted and chatbot-based communications in the context of organization-public interaction: “the risk of the asymmetrical redistribution of informative power, the need to humanize chatbots to reduce users’ risk perceptions and increase organizational credibility, and the need to guarantee network security and protect users’ privacy” (p. 932). Negative attitudes toward new technology can vary depending on an individual’s background, with differences observed between those with humanities or technical specializations, as well as between genders (e.g., Aguirre-Urreta and Marakas, 2010). Kaya et al. (2022) indicated that negative perceptions of AI were influenced by agreeableness, anxiety related to AI setup, and anxiety about learning AI.

The general negative attitude toward AI may hinder people from trusting the AI system. Trust is an expectation held in the absence of complete information about another party (Bhattacharya et al. 1998; Blomqvist, 1997). It helps reduce the transactional costs associated with uncertainty (Gefen et al. 2003; Kesharwani and Singh Bisht, 2012; Wang et al. 2016). Previous research has consistently demonstrated that trust is a mediator influencing the adoption of new technologies across diverse contexts, including online shopping (Gefen et al. 2003), banking (Kesharwani and Singh Bisht, 2012), and social media platforms (Wang et al. 2016). Trust influences the perceived ease of use and perceived usefulness, which, in turn, affect users’ new technology adoption intentions as predicted by the technology acceptance model (TAM, Gefen et al. 2003; Kesharwani and Singh Bisht, 2012). The role of trust is also more important in leading people to engage in new technology-mediated experiences when perceiving higher risks related to the technology (e.g., Kesharwani and Singh Bisht, 2012; Wang et al. 2016).

The formation of trust based on technology-mediated experiences typically follows the direct pipeline pathway, built through repeated positive interactions (Podolny, 2001; Rockmann and Bartel, 2024), which could remedy general negative attitudes toward AI. However, these negative attitudes often prevent initial AI adoption, rendering the direct pipeline approach ineffective and making the indirect “prism” pathway through institutional reputation more significant. This prism effect operates through public trust in parent companies that deploy AI, where stakeholders base their confidence on perceptions of organizational reliability, integrity, and competence (Hon and Grunig, 1999). Such reputation-based trust serves as a crucial foundation for establishing and maintaining long-term relationships with various stakeholders, driving outcomes like consumer loyalty (Sirdeshmukh et al. 2002), purchase intention (See-To and Ho, 2014), and employee engagement (Mishra et al. 2014).

However, emerging AI services like chatbots face challenges in leveraging these prism effects due to information asymmetries. The inherent opacity in AI decision-making processes can trigger algorithm aversion, where users develop strong resistance to AI systems after encountering even minor failures, regardless of the system’s overall competence or the parent company’s reputation. This phenomenon was notably observed in Microsoft’s Tay chatbot incident, where negative user interactions rapidly corrupted the system’s behavior despite Microsoft’s established reputation. Thus, the prism effect alone proves insufficient when fundamental transparency and reliability concerns remain unaddressed in the AI system itself, which brings into focus how people elaborate on AI-related information and what role AI transparency plays.

Application of the Elaboration Likelihood Model (ELM)

The relationship between a general negative attitude toward AI and trust in AI systems and the parent company may change over time or at least may be influenced by the level of contemplation people have about the relationship. ELM illustrates individuals’ information processing and persuasion process with two distinct routes to persuasion: the central route and the peripheral route (Petty and Cacioppo, 1981). The central route hinges on careful analysis of the presented information. Individuals taking the central route engage in deep contemplation of the merits of the presented arguments, potentially leading to a long-lasting attitude change, whereas the peripheral route requires minimal cognitive effort (Bhattacherjee and Sanford, 2006). Peripheral cues, such as a celebrity endorsement, can influence attitudes without in-depth evaluation, often resulting in temporary shifts. The level of elaboration likelihood, the degree to which an individual thinks critically about the message, determines the persuasion route. Higher elaboration likelihood is associated with greater allocation of cognitive resources to the arguments. Issue-relevant elaboration often leads to a more objective assessment of the issue and the strength of the arguments.

Previous literature has used ELM as the source of theoretical insights to understand users’ trust-building process in diverse communication environments. In the context of online consumers’ initial trust-building, for instance, ELM has been applied to understand how consumers develop trust when visiting a new website for the first time. According to Zhou et al. (2016), initial trust can be built through both the central route, which is represented by the quality of the arguments (or information) presented on the website, and the peripheral route, which is influenced by the source credibility, such as the reputation of the website or company. Self-efficacy has been found to moderate the effect of argument quality on initial trust, suggesting that individuals with higher self-efficacy are more likely to be persuaded through the central route. Additionally, cultural variables such as uncertainty avoidance and individualism have direct effects on initial trust, indicating that the cultural context can influence the trust-building process (Zhou et al. 2016).

While ELM (Petty and Cacioppo, 1981, 1986) was originally developed to explain how individuals process persuasive messages, it offers valuable insights for understanding how AI transparency might enhance trust. Persuasion and trust-building share a common foundation: both involve influencing individuals to change, refine, or reaffirm their beliefs or attitudes in the face of new situations. Thus, whether the goal is shifting someone’s purchase intentions or facilitating trust formation, similar cognitive processes of elaboration and cue recognition may apply (Bhattacherjee and Sanford, 2006). In particular, disclosing how an AI algorithm processes data can serve as a “central route” argument for those motivated and able to process more technical details. Recent research indicates that users engage in deeper, central-route processing when algorithmic transparency reveals system logic, particularly when they are motivated to understand the underlying mechanics. Springer and Whittaker (2020) found that transparency feedback helped users construct mental models of system operation, a hallmark of central-route processing, which can enhance sustained trust.

Conversely, even minimal transparency can function as a “peripheral cue” for individuals who lack the motivation or expertise to parse detailed algorithmic explanations but who nonetheless perceive transparency as a signal of accountability and trustworthiness. Previous research adopting a causal perspective suggests that even calibrated or partial disclosures can compel organizations to conform to broader societal norms by enabling stakeholder scrutiny (Heimstädt and Dobusch, 2020). This finding shows the impact of the peripheral route’s emphasis on signals or heuristics (i.e., “the company appears transparent”) over deep elaboration. Hence, the ELM framework is relevant for explaining why different audience segments, characterized by varying motivations, expertise, and attitudes, might respond differently to AI transparency, with some engaging in thorough evaluation and others relying on superficial but confidence-enhancing cues.

Individual attitudes have been consistently identified as a significant determinant of trust (Jones, 1996). Previous research has explored the effects of pre-existing attitudes on trust toward received messages and technological acceptance. White et al. (2003) suggested that individuals with inherently positive attitudes are more inclined to trust affirming messages, while those with negative predispositions are likely to trust adverse messages. Within the framework of dual-process models such as ELM, strong pre-existing attitudes are conceptualized as cues. These cues either direct individuals toward the peripheral route, where they are likely to reject information that contradicts their existing beliefs, or motivate them toward the central route, reinforcing their pre-existing attitudes (Kunda, 1990; Wagner and Petty, 2022).

Pre-existing attitudes toward AI significantly influence the development of trust toward AI systems and their parent companies. In particular, pre-existing negative attitudes can lead to skepticism and lower trust, especially in AI-mediated communication and chatbot engagement. Glikson and Woolley (2020) showed the impact of early views toward AI on trust dynamics in human-AI interactions, emphasizing that negative preconceptions can impede meaningful engagement with AI technologies. Hence, we expect that when individuals with a general negative attitude toward AI encounter situations necessitating communication through AI systems, they are likely to maintain their negative perceptions and exhibit distrust toward these systems. This skepticism extends not only to the AI systems (e.g., chatbots and AI-driven customer service platforms), but also to the parent company deploying the AI systems. The parent company is the ultimate source of the communication and is expected to offer reliable responses. Thus, it is reasonable to assume that there is no justification for altering one’s previous viewpoint when interacting through AI systems.

Therefore, we propose the first hypothesis for this study as follows:

H1: Individuals with a more negative general attitude toward AI are less likely to trust (a) AI systems and (b) the parent company.

Moderating role of AI-algorithm transparency

Transparency refers to the perceived quality of intentionally communicated information (Schnackenberg and Tomlinson, 2016). The assessment of transparency hinges on several factors, including substantiality, responsiveness, and accountability (Carroll and Einwiller, 2014). Additionally, attributes such as accuracy, timeliness, balance, and clarity are considered paramount in evaluating transparency. However, the detailed meaning of transparency varies with the context of its application. Transparency in business is often associated with the disclosure of organizational information to the public (Bushman et al. 2004). Transparency in computer science, particularly concerning AI, entails highlighting the operational mechanisms of computer algorithms for specific objectives (Diakopoulos, 2020). Accordingly, transparency operates at two distinct but interrelated levels in AI systems: technical transparency concerning algorithmic functioning, and organizational transparency regarding corporate accountability. This dual-layer framework clarifies how different transparency types influence user perceptions through separate yet complementary pathways.

Rooted in computer science, AI algorithm transparency is the technical transparency pertaining to the clarity and comprehensibility of the mechanisms and processes guiding the chatbot’s operation and interaction with users. The technical transparency emphasizes the explicability of AI systems’ operational mechanisms, encompassing: (1) disclosure of training data sources and methodologies, (2) explanation of decision-making processes, and (3) clarification of system limitations (Hill et al. 2015). Such technical transparency directly addresses the “black box” problem, enabling users to develop pipeline trust through understanding system operations (Khurana et al. 2021). When users comprehend how algorithms process inputs and generate outputs, they can form more accurate mental models of system capabilities, reducing uncertainty-driven aversion (Lee and See, 2004). This technical understanding facilitates central route processing in ELM terms, as users evaluate system merits through substantive engagement with operational details (Petty and Cacioppo, 1981).

Organizational transparency is the layer reflecting corporate disclosure practices regarding AI development and deployment (Bushman et al. 2004). This includes: (1) ethical guidelines governing AI use, (2) accountability measures for system errors, and (3) responsiveness to stakeholder concerns (Carroll and Einwiller, 2014). Unlike technical transparency’s direct pipeline effects, organizational transparency operates primarily through prism mechanisms, shaping perceptions of corporate credibility that indirectly influence product trust (Schnackenberg and Tomlinson, 2016). For instance, Google’s transparency reports (Google, 2024) demonstrate organizational accountability without requiring users to understand technical specifics, functioning as peripheral cues in ELM’s persuasion model.

The interplay between these transparency dimensions is essential when examining the formation of trust. Technical transparency enables the central route by providing evaluable content about system operations, while organizational transparency facilitates the peripheral route through corporate reputation signals. This explains why comprehensive trust-building requires both: technical transparency alone cannot compensate for poor corporate accountability (as seen in cases where technically sophisticated systems face public backlash over ethical concerns), nor can organizational transparency overcome fundamental misunderstandings of system capabilities (as demonstrated by the Tay chatbot incident).

Thus, we anticipate that AI-algorithm transparency will mitigate the relationship between a general negative attitude toward AI and trust in both AI systems and the AI parent company for several reasons. First, AI-algorithm transparency is an available resource that shifts the route of persuasion from peripheral to central for individuals assessing the trustworthiness of AI systems. According to ELM, the central route of persuasion is activated when individuals are motivated and able to process information (Petty and Cacioppo, 1981). High AI-algorithm transparency may prompt individuals with a general negative attitude toward AI to reconsider their stance after they understand how AI operates, potentially mitigating their negative perceptions of the AI system. This reasoning aligns with the ELM’s prediction that high-quality arguments, such as transparent communication of the functionality and benefits of the algorithm, can enhance persuasion effectiveness by encouraging users to engage in central processing. This in-depth evaluation of the algorithm’s merits is likely to lead to increased trust in both the AI system and its parent company (e.g., Lee and See, 2004). Second, even if AI-algorithm transparency fails to lead individuals to the central route, it can be an accessible cue for evaluating the trustworthiness of the AI system and its parent company. When individuals are not motivated or able to process information deeply, they rely on peripheral cues (Petty and Cacioppo, 1986). In such cases, the mere presence of transparency, regardless of the content, can act as a peripheral cue signaling trustworthiness or credibility. Thus, even without a deep understanding, individuals may perceive an AI system more positively if it is presented as transparent, thereby influencing their trust levels. Transparency enhances the perceived trustworthiness and credibility of the source. When individuals observe that a company is willing to be transparent about its AI algorithms, they may interpret this transparency as a signal of honesty and reliability, encouraging them to pay closer attention to the message content. Therefore, we propose the following hypothesis:

H2: The relationship between individuals’ general attitude toward AI and trust in (a) the AI system and (b) the parent company is moderated by AI-algorithm transparency, such that the negative relationship is weaker for individuals in the high transparency group compared to those in the low transparency group.

Issue involvement, personal relevance, risk perception, and transparency

In the ELM framework, personal relevance is construed as the extent to which an individual perceives a message or topic as germane to their personal life, ambitions, or values (Van Lange et al. 2011). Heightened personal relevance predisposes individuals to engage in the central route of processing, characterized by a deliberate contemplation of the arguments presented (Morris et al. 2005). This study investigates the influence of personal relevance, positing that it directs individuals toward central route processing, albeit influenced by their perceived susceptibility and severity of the situation. When individuals interact with AI for insights or solutions related to their interests, the issue involvement determines their processing pathway. For trivial matters, individuals might resort to peripheral route processing, retaining their extant attitudes toward the AI system. Conversely, when involving significant risks, individuals are likely to engage in central route processing, utilizing available resources, notwithstanding their general skepticism toward AI. In this context, the transparency of AI algorithms becomes a crucial factor facilitating central route processing. Previous research has highlighted that an increased focus on health-related issues correlates with heightened perceptions of susceptibility and severity, thereby intensifying the propensity to adopt preventive actions (Cheah, 2006). This relationship holds across various health risks and cultural settings, delineating a robust link between individuals’ involvement level and risk perception (Cheah, 2006). The applicability of the persuasion principles of ELM is particularly salient in the context of AI and the importance of AI system transparency. The disclosure of the working logics of AI algorithms is theorized to lead users to central route processing, particularly when the communication issue is highly relevant to the users.

The impact of transparency as a mechanism for fostering trust is not universally applicable across all contexts. The efficacy of transparency cues may diminish in situations characterized by low user engagement or minimal issue involvement, especially in tasks perceived as simplistic or inconsequential. Moreover, when preconceived negative attitudes toward AI prevail, attempts to enhance AI algorithm transparency may paradoxically evoke skepticism rather than confidence, leading users to perceive these efforts as superficial corporate maneuvers through the lens of peripheral route processing. This observation is congruent with the ELM’s assertion that weak arguments, or in this context, perceived superficial or redundant transparency efforts, can inadvertently reinforce opposition (Petty and Cacioppo, 1986).

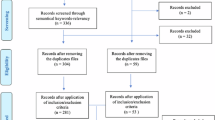

Building on these insights, we posit that the efficacy of transparency signals in AI is critically dependent on the congruence between users’ level of issue involvement and their pre-existing attitudes toward AI. In scenarios characterized by high involvement and a positive attitude toward AI, transparency measures are likely to engender trust through central route processing. In contrast, in low-involvement scenarios or where skepticism toward AI prevails, transparency initiatives might prove counterproductive, underscoring the need for communication strategies that acknowledge the psychological foundations of user engagement and trust. Consequently, we propose the following hypothesis (Fig. 1):

H3: The moderating effect of AI-algorithm transparency on the relationship between a general attitude toward AI and trust in (a) the AI system and (b) the parent company is moderated by the level of issue involvement, such that the moderating effect of AI-algorithm transparency is higher for individuals in the high issue involvement group compared to those in the low involvement group.
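One way to read H1 to H3 together is as a moderated-moderation regression. The sketch below is an illustrative formalization only. The symbols are assumptions introduced here, not notation from the study: $Y$ is trust (in the AI system or the parent company), $X$ is the general negative attitude toward AI, $T$ is a transparency indicator (1 = high, 0 = low), and $Z$ is an issue-involvement indicator (1 = high, 0 = low).

```latex
\[
\begin{aligned}
Y &= b_0 + b_1 X + b_2 T + b_3 Z + b_4 XT + b_5 XZ + b_6 TZ + b_7 XTZ + \varepsilon \\[4pt]
\frac{\partial Y}{\partial X} &= b_1 + b_4 T + b_5 Z + b_7 TZ
  && \text{(conditional slope of negative attitude)} \\[4pt]
\left.\frac{\partial Y}{\partial X}\right|_{T=1} - \left.\frac{\partial Y}{\partial X}\right|_{T=0}
  &= b_4 + b_7 Z
  && \text{(moderating effect of transparency)}
\end{aligned}
\]
```

Under this reading, H1 corresponds to a negative conditional slope of $X$, H2 to a positive slope difference $b_4 + b_7 Z$ (the negative slope is weaker under high transparency), and H3 to $b_7 > 0$ (the transparency moderation is larger under high involvement).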

Method

Online experiment

We conducted an online experiment based on a 2 × 2 between-subjects design using the QuestionPro platform. Participants were sourced from Dynata, a professional research agency, and compensated for their completed responses. Our sampling strategy was designed to reflect the demographics of the U.S. census, with a particular focus on age and gender. After registering, participants were presented with a consent form. They were then randomized into one of four groups according to a 2 × 2 factorial design that varied in AI-algorithm transparency signaling (high vs. low) and issue involvement (high vs. low). Participants viewed a 2-min video illustrating a dialog between a user and a chatbot. Following the video, they were asked to rate their trust in the AI-assisted communication as well as their trust in the company behind the AI system.

Stimuli development

We developed four video clips as stimuli, each depicting a conversation between a user and a chatbot in AI-assisted communication scenarios. These four scenarios were specifically designed to vary by AI-algorithm transparency signaling (high vs. low) and by issue involvement, either data security concerns (high-issue involvement) or shopping-related inquiries (low-issue involvement). Preliminary conversations for the scenarios were initially generated by ChatGPT. Subsequent refinement ensured uniformity in length, a consistent tone, and balanced emotional resonance across both conditions. The finalized scripts were produced as 2-min video clips, visually styled to mimic a mobile phone interface where a user interacts with a chatbot.

Manipulation of AI-algorithm transparency

We operationalized AI algorithm transparency signaling as communicative actions intended to deliver adequate insight into algorithmic processes to reassure users about the AI system’s decision-making without burdening them with overly complex technical information. The level of transparency was manipulated by altering the chatbot’s responses. In scenarios with high transparency, the chatbot not only answered the user’s questions but also provided insights into the algorithmic processes behind its responses. For instance, when a user asked, “How do you ensure my data remains protected?” the chatbot replied: “To protect your data, I operate using advanced encryption methods and comply with international data protection regulations. Regular updates and audits ensure these measures remain effective.” In contrast, scenarios with low transparency featured a chatbot that answered questions without explaining the algorithmic basis of its responses. In response to the same question, the chatbot simply stated: “We use various security measures to ensure your data remains protected.”

Manipulation of issue involvement

We differentiated issue involvement through the topics of conversation. High-issue involvement scenarios include data security concerns, a topic of significant personal relevance due to the potential consequences of data breaches. Low-issue involvement scenarios focus on shopping-related concerns, considered to be of lesser personal importance (see Footnote 1). After a series of revisions, the final scripts of the conversations were produced as 2-min video clips, visually styled to mimic a smartphone interface where a user interacts with a chatbot.

Manipulation check

To assess the effectiveness of our stimuli manipulations, participants were asked to rate their agreement with specific items immediately after viewing one of the four video clips. These items were designed to measure the perceived levels of AI-algorithm transparency signaling and issue involvement. Participants’ perceptions of the chatbot’s algorithm transparency were measured using six items. Sample items included, “The chatbot provided a clear explanation of how it arrived at its responses” and “The chatbot was transparent about the data it used to generate answers.” Analysis of these items revealed significant differences between the high-signaling condition (M = 5.06, SD = 0.82) and the low-signaling condition (M = 4.57, SD = 1.11), t(1057) = 8.08, p < 0.001. The analysis indicates that our manipulation of AI-algorithm transparency signaling was successfully perceived by the participants as intended. To evaluate the manipulation of issue involvement, participants responded to items assessing the personal relevance and impact of the concerns presented in the video clips. Sample items included, “The concerns in the video clip affect my life” and “I see a close connection between the concerns in the video clip and me.” Results showed a significant difference in perceived issue involvement between the high-involvement condition (M = 4.24, SD = 1.52) and the low-involvement condition (M = 3.89, SD = 1.42), t(1055.56) = 3.79, p < 0.001, confirming the effective differentiation of issue involvement levels among participants.
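
As a rough illustration of the manipulation-check comparisons above, the following minimal sketch computes Welch's t statistic and the Welch-Satterthwaite degrees of freedom from summary statistics alone. The means and standard deviations are the ones reported in the text; the per-condition sample sizes (`N_HIGH`, `N_LOW`) are not reported here, so a near-equal split of the 1,059 participants is assumed purely for illustration. The function name `welch_t` is hypothetical, and this is not necessarily the exact test the authors ran.

```python
import math


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Return Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the first group mean
    v2 = sd2 ** 2 / n2  # squared standard error of the second group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# ASSUMPTION: group sizes are not given in the text; a near-equal split
# of the 1,059 participants is used for illustration only.
N_HIGH, N_LOW = 530, 529

# Perceived transparency: high-signaling vs. low-signaling condition
t_transparency, df_transparency = welch_t(5.06, 0.82, N_HIGH, 4.57, 1.11, N_LOW)

# Perceived issue involvement: high-involvement vs. low-involvement condition
t_involvement, df_involvement = welch_t(4.24, 1.52, N_HIGH, 3.89, 1.42, N_LOW)

print(f"transparency: t = {t_transparency:.2f}, df = {df_transparency:.1f}")
print(f"involvement:  t = {t_involvement:.2f}, df = {df_involvement:.1f}")
```

Under these assumed group sizes the statistics land close to, but not exactly on, the reported values; small gaps are expected because the actual cell sizes and unrounded means are unknown.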

Experiment subjects profile

The sample consisted of 1,059 participants with diverse demographic characteristics. Gender representation was fairly balanced, with 47.9% (n = 507) identifying as male, 51.7% (n = 548) as female, 0.1% (n = 1) as other, and 0.3% (n = 3) preferring not to reveal their gender. Participants’ ages ranged widely, from 18 to over 75 years. In terms of education, the largest proportion held a 4-year degree (29.2%, n = 309), followed by those with some college but no degree (21.4%, n = 227) and high school graduates (18.4%, n = 195). The sample also included individuals with a master’s or professional degree (15.8%, n = 167), those with 2-year degrees (11.1%, n = 118), and a smaller proportion with a doctorate (2.4%, n = 25). Income levels were diverse, with the largest group, 19.1% (n = 202), earning between $30,000 and $49,999, followed by those earning $50,000 to $69,999 (16.5%, n = 175) and $10,000 to $29,999 (15.8%, n = 167). Ethnicity data revealed a predominantly Caucasian sample (75.0%, n = 794), with other ethnic groups represented to varying degrees, including Latino/Hispanic (6.7%, n = 71), Afro-American (7.6%, n = 80), and East Asian (3.3%, n = 35), among others. Table 1 presents details of the experiment subjects’ profiles.

Measures

General negative attitude toward AI

We assessed participants’ general negative attitudes toward AI using five items sourced from Schepman and Rodway (2023). These items were selected due to their theoretical grounding in previous empirical studies that identified common public concerns regarding AI ethics, control, and privacy (Schepman and Rodway, 2023). Sample statements included, “Organizations use artificial intelligence (AI) unethically” and “AI is used to spy on people.” The statements reflected concerns about the ethical use, errors, control, future uses, and privacy implications of AI. Responses were measured on a 7-point Likert scale, ranging from 1 (Strongly Disagree) to 7 (Strongly Agree). The scale demonstrated good reliability (Cronbach’s α = 0.83) with a mean score of 4.28 (SD = 1.24).
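
For readers less familiar with the reliability coefficient reported above, the sketch below shows one standard way to compute Cronbach's α from item-level responses: α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The response matrix `toy` is entirely hypothetical and is not the study's data; the function name `cronbach_alpha` is likewise an illustrative choice.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item ratings."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of each respondent's summed scale score
    total_var = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# HYPOTHETICAL 7-point responses from six respondents on five items
toy = [
    [5, 4, 5, 4, 5],
    [2, 3, 2, 2, 3],
    [6, 6, 7, 6, 6],
    [4, 4, 3, 4, 4],
    [3, 2, 3, 3, 2],
    [7, 6, 6, 7, 7],
]

alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.2f}")
```

Because the toy items move together almost perfectly, the resulting coefficient is very high; real scales such as the five-item measure here typically yield lower values.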

Trust

Measurement items for trust were adapted from Hon and Grunig (1999) and Ki and Hon (2007), and were tailored to the context of this study. These items are widely adopted and validated for measuring trust dimensions such as reliability, competence, and integrity in organization-public communication contexts. Trust in AI-Assisted Communication. Participants’ trust in the chatbot was assessed by seven items. A sample item was, “Users feel very confident about the chatbot service’s abilities and accuracy.” This scale showed excellent reliability (Cronbach’s α = 0.91) with a mean score of 4.65 (SD = 1.12). Trust in the AI Parent Company. Trust in the company employing the chatbot was evaluated using seven items, including the following sample item: “The company treats people like me fairly and justly.” This measure also displayed high reliability (Cronbach’s α = 0.96) with a mean score of 4.54 (SD = 1.23). Descriptive statistics for these measures across the four conditions are presented in Table 2.

Control variables

We included demographic variables as covariates to control for their effects in the data analysis. Personal experience with chatbots, such as familiarity and knowledge of AI systems, was not controlled separately, as the influence of such personal experience is embedded in the general negative attitude toward AI construct.

Results

Testing direct effects of a general negative attitude toward AI on trust in (a) the AI system (H1a) and (b) the parent company (H1b)

In examining the relationships posited in H1a and H1b, hierarchical regression analyses were conducted to predict trust in (a) the AI system and (b) the parent company based on participants’ general negative attitude toward AI, controlling for demographic variables including age, education, income, gender, and ethnicity.

The regression analysis for trust in AI systems showed that a general negative attitude toward AI significantly predicted trust in AI systems (B = −0.33, SE = 0.03, p < 0.001). Specifically, as individuals’ negative attitudes toward AI increased, their trust in AI systems decreased. The model explained a significant proportion of variance in trust in AI systems (R² = 0.21, F(6, 1058) = 172.33, p < 0.001), indicating a substantial effect. Thus, H1a is supported. Similarly, a second regression analysis for trust in the parent company showed that a general negative attitude toward AI was a significant predictor (B = −0.38, SE = 0.03, p < 0.001). An increase in a negative attitude toward AI was associated with a decrease in trust toward the parent company. The model accounted for a significant amount of variance (R² = 0.21, F(6, 1586) = 192.36, p < 0.001). Therefore, H1b is supported (see Tables 3 and 4).

Testing the moderating effects of AI-algorithm transparency (H2a & H2b) and the moderated moderating effects of issue involvement (H3a & H3b)

We employed PROCESS Macro Model 3, which is specifically designed for examining a moderated moderation model (i.e., a three-way interaction between an independent variable (X), a moderator (W), and a moderating moderator (Z); Hayes, 2022). Conducting two sets of the PROCESS analysis, we examined the moderating effects of algorithm transparency (W) and the moderated moderating effects of issue involvement (Z) on the relationship between a general negative attitude toward AI (X) and trust in (a) AI systems and (b) the parent company. Each model included demographic variables (i.e., age, gender, race, education, and income) as covariates to control for their effects on the hypothesized paths.
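
For orientation, a moderated moderation model of this kind is conventionally written as the regression below. This is a sketch in common notation rather than the authors' exact specification: the covariate symbols C_k and their coefficients c_k are labels introduced here for the five demographic controls, and the coding of W and Z is not assumed.

```latex
\begin{align}
Y &= i_Y + b_1 X + b_2 W + b_3 Z + b_4 XW + b_5 XZ + b_6 WZ + b_7 XWZ
     + \sum_{k=1}^{5} c_k C_k + e_Y \\
\theta_{X \to Y} &= b_1 + b_4 W + b_5 Z + b_7 WZ
     && \text{(conditional effect of } X \text{ at given } W, Z\text{)} \\
\theta_{XW \to Y} &= b_4 + b_7 Z
     && \text{(conditional } X \times W \text{ interaction at given } Z\text{)}
\end{align}
```

With seven focal terms and five covariates, the model has 12 predictors, which is consistent with the model F tests reported in the results having 12 and 1,046 degrees of freedom for 1,059 participants (1,059 − 12 − 1 = 1,046).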

Model effects on trust in the AI system

First, this model accounted for 22.29% of the variance in trust in the AI system (R² = 0.22, F(12, 1046) = 25.00, p < 0.001), indicating a significant model fit. However, none of the interaction effects reached statistical significance: X × W (b = −0.14, t(1046) = −0.87, n.s.), X × Z (b = −0.19, t(1046) = −1.189, n.s.), W × Z (b = −0.25, t(1046) = −0.57, n.s.), and X × W × Z (b = 0.12, t(1046) = 1.21, n.s.). The highest-order unconditional interaction (X × W × Z) did not produce a significant change in R² (ΔR² = 0.001, F(1, 1046) = 1.46, p = 0.23). Therefore, H2a and H3a are not supported.
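
The test of the highest-order interaction can be checked with simple arithmetic. The sketch below, using a hypothetical helper `f_change`, applies the standard formula for an R² change test, F = (ΔR² / df₁) / ((1 − R²) / df₂), to the rounded values reported above, and compares the result with the square of the reported t statistic (for a single added term, F equals t²). It is an illustration under rounding, not a reanalysis.

```python
def f_change(delta_r2, r2_full, df_num, df_den):
    """F statistic for the change in R^2 when df_num terms are added to a model."""
    return (delta_r2 / df_num) / ((1.0 - r2_full) / df_den)


# Reported (rounded) values for the model predicting trust in the AI system
R2_FULL = 0.2229    # 22.29% of variance explained
DELTA_R2 = 0.001    # R^2 change for the three-way interaction, as rounded in the text
T_THREE_WAY = 1.21  # reported t for X x W x Z

f_from_delta = f_change(DELTA_R2, R2_FULL, 1, 1046)
f_from_t = T_THREE_WAY ** 2  # for one added term, F = t^2

print(f"F from rounded delta R^2: {f_from_delta:.2f}")
print(f"F from t squared:         {f_from_t:.2f}")
```

The t-based value matches the reported F(1, 1046) = 1.46, while the value derived from ΔR² comes out somewhat lower, as expected when ΔR² is rounded to three decimals.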

Model effects on trust in the AI parent company

Results confirmed that this model accounted for 22.28% of the variance in trust in the parent company with a good model fit (R² = 0.22, F(12, 1046) = 24.98, p < 0.001). The interaction between a negative attitude toward AI (X) and algorithm transparency (W) was significant at the p < 0.10 level (b = −0.29, t(1046) = −1.67, p = 0.095), thus marginally supporting H2b. Moreover, the interaction between a negative attitude toward AI (X) and issue involvement (Z) was significant (b = −0.39, t(1046) = −2.26, p < 0.05). The three-way interaction among a negative attitude toward AI (X), algorithm transparency (W), and issue involvement (Z) also significantly predicted trust in the parent company (b = 0.25, t(1046) = 2.27, p < 0.05). Additionally, conditional process analysis indicated that the effect of a negative attitude toward AI (X) on trust in the parent company was moderated by both algorithm transparency (W) and issue involvement (Z). Specifically, in the high issue involvement condition (Z), the interaction between a negative attitude toward AI (X) and algorithm transparency (W) was significant (F(1, 1046) = 7.31, p < 0.01). The conditional effects across the moderator conditions (W, Z) indicated that in the low algorithm transparency and high issue involvement condition, the effect of a negative attitude toward AI (X) on trust in the parent company was significantly negative (b = −0.48, t(1046) = −9.54, p < 0.001). Therefore, H3b is supported. The interaction effects are presented in Figs. 2 and 3.

Discussion

The integration of AI systems in stakeholder communications presents both opportunities and challenges. As AI technology becomes increasingly prevalent, understanding how to foster trust in these systems is crucial for organizations. Our study sheds light on what factors influence trust in AI-assisted communication, particularly focusing on the impact of AI algorithm transparency. While transparency has been widely touted as a means to alleviate fears and anxiety associated with AI, our findings offer nuanced insights into its effectiveness. This discussion explores the interplay between general attitudes toward AI, the role of transparency, and the implications for companies seeking to build and maintain trust with their stakeholders.

Key findings and theoretical implications

Impact of pre-existing negative attitudes toward AI on trust and decision-making

The general negative attitude toward AI often centers on concerns regarding the controllability of AI systems (Clarke, 2019; Johnson and Verdicchio, 2017; Zhan et al. 2023). Many argue that AI should remain under human supervision, with humans retaining the ultimate decision-making authority. This necessity arises from the complex nature of AI decision-making processes, which can be challenging to trust and understand, especially when decisions may have significant consequences. Our study highlights that individuals with a general negative attitude toward AI tend to distrust AI systems and the parent companies that employ them for communication purposes. This finding extends earlier research by showing that individuals who already view AI negatively also extend this distrust to the parent companies behind AI systems, thereby creating a broader “halo” of skepticism. It also aligns with previous literature indicating that pre-existing attitudes can influence individuals’ reception and processing of new information. Within the framework of dual-process models such as the ELM, strong pre-existing attitudes function as cues, directing individuals either toward the peripheral route, where they are inclined to reject contradictory information, or toward the central route, reinforcing their existing attitudes (Kunda, 1990; Wagner and Petty, 2022). By applying ELM to AI contexts, we show that entrenched negative beliefs can dominate even when new, potentially trust-building information is provided.

The role of AI algorithm transparency and its impact on trust

Perceiving AI as controllable is proposed to alleviate anxiety and fears associated with AI use (Zhan et al. 2023). Building on this literature, our study supports the idea that transparency in AI algorithms positively correlates with trust in AI-assisted communication systems. This finding resonates with previous research suggesting that revealing how AI “thinks” can mitigate uncertainty (e.g., Liu, 2021; Xu et al. 2023). By revealing the inner workings of AI systems, we expected that individuals would mitigate their negative beliefs, such as concerns about being misled by the AI system or the parent company of the AI system.

However, our findings failed to identify a significant moderating effect of AI algorithm transparency on the relationship between a general negative attitude toward AI and trust in AI systems. This suggests that despite expectations based on the ELM framework (Petty and Cacioppo, 1986) that AI algorithm transparency, as an accessible resource, could motivate individuals to deeply engage with AI systems and potentially change their attitudes, individuals tended to maintain their pre-existing negative attitudes toward AI systems and were reluctant to trust them. Moreover, this tendency persisted even when processing highly involved issues such as data security concerns. Previous research has indicated that a general negative attitude toward AI systems often stems from a lack of knowledge about AI technology, in addition to emotional factors such as fear and anxiety (Huisman et al. 2021). Given that the intellectual ability to process new information is crucial for selecting the central route in the ELM framework (Morris et al. 2005; Petty and Cacioppo, 1986), AI algorithm disclosure may not serve as an available resource for individuals with a general negative attitude toward AI when logically evaluating the trustworthiness of AI systems. This may be particularly true when their attitude is linked to a lack of knowledge and experience with AI systems.

The role of AI algorithm transparency in enhancing trust in AI-parent companies

Interestingly, our findings revealed a significant effect of AI algorithm transparency in mitigating the negative impact of a general negative attitude toward AI on trust in the parent company that employs the AI system. In particular, a high level of personal relevance to the communication topic enhanced the moderating effect of AI algorithm transparency on this relationship. Despite the ineffectiveness of AI algorithm transparency in fostering trust in AI systems among those with a general negative attitude toward AI, it is worth discussing the role of AI algorithm transparency as a cue for peripheral processing in assessing trust. In this scenario, we can consider AI algorithm transparency as a signal indicating a company’s sincere efforts to provide trustworthy communication for its stakeholders by adopting AI systems. Signaling theory suggests that in contexts where information asymmetry exists, deploying communicative cues can mitigate this asymmetry (Spence, 1974). Such signals carry intrinsic information that enables receivers to assess the desired qualities of the sender. Similarly, the concept of transparency signaling refers to an organization’s efforts to demonstrate transparency (Carroll and Einwiller, 2014), which plays a crucial role in shaping the users’ perception of the organization.

Taken together, our findings show that while transparency did not significantly alter users’ trust in the AI system itself, it did mitigate distrust toward the parent organization. One potential explanation stems from the difference in how users interpret technical disclosures versus organizational signals. Even when users are presented with algorithmic details, a lack of domain knowledge or strongly embedded fears (Huisman et al. 2021) may prevent them from translating that information into higher system-level trust when they perceive AI as inherently risky. However, in line with signaling theory (Spence, 1974), transparency still demonstrates an organizational willingness to share information, conveying accountability and trustworthiness on the company’s part (Carroll and Einwiller, 2014). Moreover, this distinction supports the idea that trust in technology and trust in a parent organization are not necessarily synonymous (Glikson and Woolley, 2020). From an ELM perspective (Petty and Cacioppo, 1986), disclosing the inner workings of AI may fail to engage deeply negative users in central-route processing and leave their concerns about the system unaddressed. However, peripheral cues (i.e., a company signaling transparency) may reinforce beliefs that the organization is ethically responsible. This may explain why transparency enhances trust in the parent company despite not shifting skepticism toward the AI itself.

Drawing on insights from signaling theory, we interpret our findings to suggest that the disclosure of AI algorithms can serve as an effective transparency signal and accessible cue, leading communicators to trust the AI’s parent company even when they do not trust the AI system due to their pre-existing general negative attitude toward AI. This distinction highlights the importance of transparency not only as a technical feature but also as a strategic communicative tool that can enhance organizational trustworthiness in the eyes of skeptical stakeholders.

Theoretical implications

This study advances AI trust research by clarifying the effects of algorithmic transparency on trust in both AI systems and their parent organizations. First, our findings contribute to a deeper understanding of ELM by highlighting the complex interplay between pre-existing attitudes and message processing routes in the context of AI-assisted communication. In particular, for individuals with strong negative biases toward AI, transparency did not catalyze the central-route processing that might reshape their core attitudes. ELM posits that individuals process persuasive messages through either a central route, which involves careful and thoughtful consideration of the presented arguments, or a peripheral route, which involves a more superficial evaluation based on cues outside the message content (Petty and Cacioppo, 1986). Our study implies that for individuals with a general negative attitude toward AI, AI algorithm transparency does not necessarily engage them in central route processing to foster trust in the AI system. Instead, they may rely on peripheral cues, such as the transparency signal, to form judgments about the parent company. This insight expands the application of ELM by demonstrating that even when users do not take the central route due to negative pre-existing attitudes, peripheral cues can still play a significant role in shaping their perceptions of trust. This finding suggests that companies may benefit more from focusing on visible transparency signals than from attempting to change deeply rooted negative attitudes through detailed technical explanations.

Our study also offers important contributions to signaling theory, particularly in the context of organizational trust when communicating with stakeholders. Signaling theory suggests that in situations of information asymmetry, the sender (in this case, the company using the AI system for stakeholder communications) can use signals to convey important information to the receiver (the stakeholders) (Spence, 1974). Transparency about AI algorithms serves as such a signal, indicating the company’s commitment to openness and trustworthiness. By demonstrating that AI algorithm transparency can enhance trust in the parent company, even among individuals with a negative predisposition toward AI, our findings highlight the power of transparency as a strategic signaling tool. This finding aligns with the notion that transparency can reduce information asymmetry and build reputational trust (Carroll and Einwiller, 2014). For organizations, this means that efforts to be transparent about their AI systems can foster a more positive public perception and mitigate the skepticism often associated with AI technologies.

Integrating insights from ELM and signaling theory, our study suggests an intersectional approach to building trust in AI and the organizations that use AI systems. While ELM highlights the challenges of changing deeply held attitudes through detailed information, signaling theory provides a pathway for building trust through transparent practices. This integration points to a strategic approach where companies use transparency signals to foster peripheral trust, which can then lay the groundwork for deeper engagement and possibly more central route processing over time. Therefore, our findings provide theoretical insights that bridge ELM and signaling theory, offering a comprehensive understanding of how transparency influences trust in AI-assisted communication. This integrated perspective can guide organizations in designing effective communication strategies that build and sustain trust in their AI systems.

Overall, our study adds to the growing conversation about AI trust by showing both the strengths and the limits of algorithmic transparency in shaping stakeholder perceptions. First, we bridge ELM and signaling theory to explain why individuals with entrenched negative attitudes may continue to distrust the AI system yet still credit the parent organization’s transparency efforts. This distinction clarifies inconsistent findings in previous research suggesting that transparency sometimes fails to boost trust in AI (Glikson and Woolley, 2020) but can bolster positive impressions of the deploying organization (Carroll and Einwiller, 2014). Second, we highlight the role of issue involvement: while technical disclosures may not sway deeply skeptical audiences, high-relevance topics can intensify transparency’s impact on organizational trust. Lastly, by showing that even minimal algorithmic transparency can function as a peripheral cue, our findings broaden existing approaches that focus primarily on central-route persuasion (Petty and Cacioppo, 1986). Together, these insights offer a more integrated framework for understanding how, when, and why AI transparency initiatives succeed or fall short in building trust, particularly as misinformation and algorithmic biases emerge as critical concerns (Shin and Akhtar, 2024; Shin, 2024a; Shin, 2023).

Practical implications

Our findings offer valuable practical implications for companies adopting AI systems for stakeholder communications. By disclosing AI algorithms, companies can demonstrate their commitment to transparency in stakeholder communications. Importantly, our study suggests that individuals do not necessarily need to comprehend the intricacies of AI algorithms to build trust. Rather, the act of signaling transparency alone is sufficient to engender users’ trust in AI-assisted communication and the corresponding parent company. This relationship holds true even for individuals with a generally negative attitude toward AI. Despite these predispositions, our results show that signaling AI transparency enhances trust in AI-assisted communication and the parent company. This study implies that direct experiences or impressions of controllability, coupled with transparency signaling, can mitigate distrust in AI-assisted communication and the parent organization. Moreover, efforts to signal AI transparency can cultivate reputational trust even among those who are generally skeptical of AI.

Our findings imply that a negative attitude toward AI can be mitigated by sharing information about how AI works and by explaining why it can be beneficial even though it is not perfect. When companies acknowledge AI’s limitations and signal efforts to overcome its imperfections, the public can begin to build a trust relationship, trusting not only the AI algorithm but also the parent company. We observed this phenomenon after the release of ChatGPT 3.5 by OpenAI, when the company released its service using the term “evaluation period.”

We suggest that AI companies adopt a tiered approach to transparency. First, AI companies can implement short, user-friendly statements or transparency labels that offer broad explanations of how the AI system processes user data and generates responses. This approach can function as a peripheral cue for less-motivated users who simply need to know that safeguards are in place. Moreover, they can provide an optional deeper layer of information, such as white papers, technical FAQs, or interactive demos, for stakeholders who wish to engage in central-route processing and thoroughly understand the algorithm’s logic. AI companies can tailor the complexity and frequency of disclosures to specific user groups’ knowledge levels and issue involvement. For example, corporate clients investing in enterprise AI may require more specialized disclosures compared to individual consumers. By tailoring transparency strategies in this multi-layered manner, organizations can signal transparency and accountability while also catering to varying levels of AI skepticism, ultimately building a long-term foundation of trust.

Limitations and future research suggestions

We acknowledge that our 2 × 2 experimental design may be too simplistic for the complex phenomena of AI algorithm transparency signaling and issue involvement. However, as an initial investigation into these phenomena, our study intentionally employed a simple and clear experimental design to demonstrate fundamental relationships unequivocally. By confirming the basic effects of transparency signaling and issue involvement in a simplified context, our findings lay the groundwork for more complex investigations. Future research should build upon this foundation by exploring the optimal level of AI algorithm transparency signaling and investigating its effectiveness across more nuanced and diverse contexts of issue involvement, potentially incorporating continuous measures or additional experimental conditions.

We operationalized AI algorithm transparency at two distinct levels: high and low. In the high transparency condition, a simplistic algorithmic explanation was provided. While we observed significant effects of these transparency cues on trust in the parent company, we acknowledge that real-world AI transparency can involve multiple layers (e.g., data sources, bias audits, error metrics) and that our simplified operationalization might be seen as insufficiently granular by those with more advanced AI knowledge. Moreover, the study did not systematically account for participants’ prior AI literacy, a variable that was beyond our primary scope but is nonetheless influential in shaping how users interpret and evaluate transparency disclosures. Thus, future research might use more contextual and multi-layered transparency conditions that incorporate detailed technical data or accountability mechanisms. Such research could also stratify participants according to their AI literacy or personal attributes (Quazi and Talukder, 2011) to pinpoint optimal transparency levels for different user groups, thereby offering tailored communication strategies. By doing so, scholars could more precisely identify how varying complexities of AI algorithm explanations promote trust in diverse contexts and enhance our broader understanding of transparency’s role in fostering trustworthy AI communication systems.

Although we acknowledge the general lack of AI knowledge among participants, we have not fully explored how this knowledge gap influences trust formation and the perceived effectiveness of transparency signals. Future studies could stratify participants based on their AI expertise to determine how different levels of understanding shape perceptions of algorithmic disclosures and trust in AI systems. We expect that researchers can more precisely isolate the role of technological literacy in determining when and why transparency leads to higher trust, by capturing and analyzing these variations.

Moreover, although our sample was drawn to approximate U.S. census distributions for age, gender, and racial composition, it does not necessarily capture the full breadth of global cultural perspectives on AI trust. While 75% of our participants identified as Caucasian, reflecting general U.S. demographics, this sample composition may not demonstrate how attitudes toward AI and transparency differ in other cultural contexts (e.g., collectivist vs. individualistic societies). Future cross-cultural research could replicate or adapt our design across regions with distinct cultural norms and values, such as Asia, the Middle East, or South America, to examine whether and how transparency cues function differently under varying sociocultural conditions.

Finally, our study employed a short, 2-min video stimulus in an online experimental setting, which may not fully capture the dynamic and iterative nature of real-world AI interactions. Trust in AI systems often evolves through repeated engagements, and reliance on a single, scripted experience could limit ecological validity. Additionally, our focus on chatbot interactions may not translate directly to higher-stakes AI applications (e.g., healthcare diagnostics or autonomous vehicles) that require more detailed disclosures and carry different risk profiles. Future research should therefore consider longitudinal designs and broader application domains to verify and expand upon our findings.

Data availability

The datasets of the current study are available from the corresponding author on reasonable request.

Change history

13 October 2025

A Correction to this paper has been published: https://doi.org/10.1057/s41599-025-05917-2

Notes

In this study, the manipulation of issue involvement is central to examining the interaction effects with AI algorithm transparency on participant perceptions. To achieve this, we selected topics representing high and low issue involvement based on their personal relevance and potential impact on individuals. Data security concerns were chosen to embody high-issue involvement due to their significant implications for personal privacy, financial security, and identity protection. The inherently personal nature of data security, coupled with the severe consequences of breaches—ranging from financial loss to identity theft—ensures a heightened level of cognitive and emotional engagement with the topic. On the other hand, shopping-related concerns were utilized to represent low-issue involvement, reflecting situations with lesser personal stakes. These concerns, while relevant, typically do not affect individuals’ core personal or financial security and have relatively minimal long-term impact. This distinction in personal relevance and potential impact between the two topics allows for a comprehensive exploration of how varying levels of issue involvement influence trust in AI-assisted communication, particularly in the context of AI algorithm transparency signaling.

References

Aguirre-Urreta M, Marakas G (2010) Is it really gender? An empirical investigation into gender effects in technology adoption through the examination of individual differences. Hum Technol 6(2):155–190. https://doi.org/10.17011/ht/urn.201011173090

Bhattacharya R, Devinney TM, Pillutla MM (1998) A formal model of trust based on outcomes. Acad Manag Rev 23(3):459–472. https://doi.org/10.2307/259289

Bhattacherjee A, Sanford C (2006) Influence processes for information technology acceptance: An elaboration likelihood model. MIS Q 30(4):805–825. https://doi.org/10.2307/25148755

Blomqvist K (1997) The many faces of trust. Scand J Manag 13(3):271–286. https://doi.org/10.1016/S0956-5221(97)84644-1

Bushman RM, Piotroski JD, Smith AJ (2004) What determines corporate transparency? J Account Res 42(2):207–252. https://doi.org/10.1111/j.1475-679X.2004.00136.x

Carroll CE, Einwiller SA (2014) Disclosure alignment and transparency signaling in CSR reports. In RP Hart (Ed.), Communication and language analysis in the corporate world (pp. 249–270). University of Texas. https://doi.org/10.4018/978-1-4666-4999-6.ch015

Cheah W (2006) Issue involvement, message appeal and gonorrhea: risk perceptions in the US, England, Malaysia and Singapore. Asian J Commun 16:293–314. https://doi.org/10.1080/01292980600857831

Clarke R (2019) Why the world wants controls over Artificial Intelligence. Comput Law Secur Rev 35(4):423–433. https://doi.org/10.1016/j.clsr.2019.04.006

Deloitte Insights (2021) The future of conversational AI. Deloitte. https://www2.deloitte.com/us/en/insights/focus/signals-for-strategists/the-future-of-conversational-ai.html/#endnote-sup-2 (accessed 17 March 2024)

Diakopoulos N (2020) Accountability, transparency, and algorithms. In MD Dubber, F Pasquale, S Das (Eds.), The Oxford handbook of ethics of AI (pp. 197–213). Oxford University Press

Dwivedi YK, Hughes L, Ismagilova E, Aarts G, Coombs C, Crick T, Williams MD (2021) Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. Int J Inf Manag 57:101994. https://doi.org/10.1016/j.ijinfomgt.2019.08.002

European Union (2024) The Act Text: EU Artificial Intelligence Act. https://data.consilium.europa.eu/doc/document/ST-5662-2024-INIT/en/pdf (accessed 17 March 2024)

Gefen D, Karahanna E, Straub DW (2003) Trust and TAM in online shopping: An integrated model. MIS Q 27(1):51–90. https://doi.org/10.2307/30036519

Glikson E, Woolley AW (2020) Human trust in artificial intelligence: Review of empirical research. Acad Manag Ann 14(2):627–660. https://doi.org/10.5465/annals.2018.0057

Google (2024) YouTube Community Guidelines Enforcement. Google Transparency Report. https://transparencyreport.google.com/youtube-policy/removals?hl=en (accessed 18 March 2024)

Hill J, Ford WR, Farreras IG (2015) Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Comput Hum Behav 49:245–250. https://doi.org/10.1016/j.chb.2015.02.026

Heimstädt M, Dobusch L (2020) Transparency and accountability: Causal, critical and constructive perspectives. Organ Theory 1(4):2631787720964216

Hon LC, Grunig JE (1999) Guidelines for measuring relationships in public relations. The Institute for Public Relations, Commission on PR Measurement and Evaluation, Gainesville, FL

Hsiao KL, Chuan‐Chuan Lin J, Wang XY, Lu HP, Yu H (2010) Antecedents and consequences of trust in online product recommendations: An empirical study in social shopping. Online Inf Rev 34(6):935–953. https://doi.org/10.1108/14684521011099414

Huisman M, Ranschaert E, Parker W, Mastrodicasa D, Kočí M, Santos D, Coppola F, Morozov S, Zins M, Bohyn C, Koç U, Wu J, Veean S, Fleischmann D, Leiner T, Willemink M (2021) An international survey on AI in radiology in 1041 radiologists and radiology residents part 1: Fear of replacement, knowledge, and attitude. Eur Radiol 31:7058–7066. https://doi.org/10.1007/s00330-021-07781-5

Johnson DG, Verdicchio M (2017) AI anxiety. J Assoc Inf Sci Technol 68(9):2267–2270. https://doi.org/10.1002/asi.23867

Jones K (1996) Trust as an affective attitude. Ethics 107(1):4–25. https://doi.org/10.1086/233694

Kaya F, Aydin F, Schepman A, Rodway P, Yetişensoy O, Demir Kaya M (2022) The roles of personality traits, AI anxiety, and demographic factors in attitudes toward artificial intelligence. Int J Hum–Comput Interact, 1–18. https://doi.org/10.1080/10447318.2022.2151730

Kesharwani A, Singh Bisht S (2012) The impact of trust and perceived risk on internet banking adoption in India: An extension of technology acceptance model. Int J Bank Mark 30(4):303–322. https://doi.org/10.1108/02652321211236923

Khurana A, Alamzadeh P, Chilana PK (2021) ChatrEx: Designing explainable chatbot interfaces for enhancing usefulness, transparency, and trust. In 2021 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC) (pp. 1–11). IEEE. https://doi.org/10.1109/VL/HCC51201.2021.9576440

Ki EJ, Hon LC (2007) Testing the linkages among the organization–public relationship and attitude and behavioral intentions. J Public Relat Res 19(1):1–23. https://doi.org/10.1080/10627260709336593

Kunda Z (1990) The case for motivated reasoning. Psychol Bull 108(3):480. https://doi.org/10.1037/0033-2909.108.3.480

Laux J, Wachter S, Mittelstadt B (2024) Trustworthy artificial intelligence and the European Union AI act: On the conflation of trustworthiness and acceptability of risk. Regul Gov 18(1):3–32. https://doi.org/10.1111/rego.12512

Lee JD, See KA (2004) Trust in automation: Designing for appropriate reliance. Hum Factors 46(1):50–80. https://doi.org/10.1518/hfes.46.1.50.30392

Li J, Huang JS (2020) Dimensions of artificial intelligence anxiety based on the integrated fear acquisition theory. Technol Soc 63:101410. https://doi.org/10.1016/j.techsoc.2020.101410

Liu B (2021) In AI we trust? Effects of agency locus and transparency on uncertainty reduction in human–AI interaction. J Comput-Mediat Commun 26(6):384–402. https://doi.org/10.1093/jcmc/zmab013

Mishra K, Boynton L, Mishra A (2014) Driving employee engagement: The expanded role of internal communications. Int J Bus Commun 51(2):183–202. https://doi.org/10.1177/2329488414525399

Morris J, Woo C, Singh A (2005) Elaboration likelihood model: A missing intrinsic emotional implication. J Target Meas Anal Mark 14:79–98. https://doi.org/10.1057/PALGRAVE.JT.5740171

Murtarelli G, Collina C, Romenti S (2023) “Hi! How can I help you today?”: Investigating the quality of chatbots-millennials relationship within the fashion industry. The TQM Journal 35(3):719–733

Persson A, Laaksoharju M, Koga H (2021) We mostly think alike: Individual differences in attitude towards AI in Sweden and Japan. Rev Socionetwork Strategy 15(1):123–142. https://doi.org/10.1007/s12626-021-00071-y

Petty RE, Cacioppo JT (1981) Attitudes and persuasion: Classic and contemporary approaches. W.C. Brown Company Publishers

Petty RE, Cacioppo JT (1986) Communication and persuasion: Central and peripheral routes to attitude change. New York: Springer-Verlag. https://doi.org/10.1007/978-1-4612-4964-1

Podolny JM (2001) Networks as the pipes and prisms of the market. Am J Sociol 107(1):33–60. https://doi.org/10.1086/323038

Quazi A, Talukder M (2011) Demographic determinants of adoption of technological innovation. J Comput Inf Syst 52(1):34–42. https://doi.org/10.1080/08874417.2011.11645484

Rockmann KW, Bartel CA (2024) Interpersonal relationships in organizations: Building better pipes and looking through prisms. Ann Rev Org Psychol Org Behav 12. https://doi.org/10.1146/annurev-orgpsych-110622-061354

Schepman A, Rodway P (2020) Initial validation of the general attitudes towards Artificial Intelligence Scale. Comput Hum Behav Rep 1:100014. https://doi.org/10.1016/j.chbr.2020.100014

Schepman A, Rodway P (2023) The General Attitudes towards Artificial Intelligence Scale (GAAIS): Confirmatory validation and associations with personality, corporate distrust, and general trust. Int J Hum Comput Interact 39(13):2724–2741. https://doi.org/10.1080/10447318.2022.2085400

Schnackenberg AK, Tomlinson EC (2016) Organizational transparency: A new perspective on managing trust in organization-stakeholder relationships. J Manag 42(7):1784–1810. https://doi.org/10.1177/0149206314525202

See-To EW, Ho KK (2014) Value co-creation and purchase intention in social network sites: The role of electronic Word-of-Mouth and trust–A theoretical analysis. Comput Hum Behav 31:182–189. https://doi.org/10.1016/j.chb.2013.10.013

Shin D, Jitkajornwanich K (2024) How algorithms promote self-radicalization: Audit of Tiktok’s algorithm using a reverse engineering method. Soc Sci Comput Rev 42(4):1020–1040. https://doi.org/10.1177/08944393231225547

Shin D, Zhou S (2024) A value and diversity-aware news recommendation systems: can algorithmic gatekeeping nudge readers to view diverse news? J Mass Commun Quarterly, 10776990241246680. https://doi.org/10.1177/10776990241246680

Shin D, Koerber A, Lim JS (2024) Impact of misinformation from generative AI on user information processing: How people understand misinformation from generative AI. New Media & Society, 14614448241234040. https://doi.org/10.1177/14614448241234040

Shin D, Jitkajornwanich K, Lim JS, Spyridou A (2024) Debiasing misinformation: How do people diagnose health recommendations from AI? Online Inf Rev (ahead-of-print). https://doi.org/10.1108/OIR-04-2023-0167

Shin D, Akhtar F (2024) Algorithmic inoculation against misinformation: how to build cognitive immunity against misinformation. J Broadcast Electron Media 68(2):153–175. https://doi.org/10.1080/08838151.2024.2323712

Shin D (2024a) Artificial misinformation: Exploring human-algorithm interaction online. https://doi.org/10.1007/978-3-031-52569-8

Shin D (2023) Algorithms, humans, and interactions: How do algorithms interact with people? Designing meaningful AI experiences (1st ed.). New York: Routledge. https://doi.org/10.1201/b23083

Sirdeshmukh D, Singh J, Sabol B (2002) Consumer trust, value, and loyalty in relational exchanges. J Mark 66:15–37. https://doi.org/10.1509/jmkg.66.1.15.18449

Sorrentino RM, Holmes JG, Hanna SE, Sharp A (1995) Uncertainty orientation and trust in close relationships: Individual differences in cognitive styles. J Pers Soc Psychol 68(2):314. https://doi.org/10.1037/0022-3514.68.2.314

Spence AM (1974) Market signaling: Information transfer in hiring and related processes. Harvard University Press

Springer A, Whittaker S (2020) Progressive disclosure: When, why, and how do users want algorithmic transparency information? ACM Trans Interact Intell Syst (TiiS) 10(4):1–32

Sturken M, Thomas D, Ball-Rokeach S (Eds.) (2004) Technological Visions: The Hopes and Fears that Shape New Technologies. Temple University Press

Utz S, Kerkhof P, Van Den Bos J (2012) Consumers rule: How consumer reviews influence perceived trustworthiness of online stores. Electron Commer Res Appl 11(1):49–58. https://doi.org/10.1016/j.elerap.2011.07.010

Uzzi B (1996) The sources and consequences of embeddedness for the economic performance of organizations: The network effect. Am Sociol Rev, 674–698. Cited 9372 times according to Google Scholar as of the date of writing this revision

Uzzi B (2018) Social structure and competition in interfirm networks: The paradox of embeddedness. In The sociology of economic life (pp. 213–241). Routledge. Cited 15324 times according to Google Scholar as of the date of writing this revision

Van Lange PA, Higgins ET, Kruglanski AW (2011) Handbook of theories of social psychology. Handb Theor Soc Psychol, 1–1144. https://doi.org/10.4135/9781446249215

Wagner BC, Petty RE (2022) The elaboration likelihood model of persuasion: Thoughtful and non‐thoughtful social influence. Theories in Social Psychology, Second Edition, 120–142. https://doi.org/10.1002/9781394266616.ch5

Wang Y, Min Q, Han S (2016) Understanding the effects of trust and risk on individual behavior toward social media platforms: A meta-analysis of the empirical evidence. Comput Hum Behav 56:34–44. https://doi.org/10.1016/j.chb.2015.11.011

White MP, Pahl S, Buehner M, Haye A (2003) Trust in risky messages: The role of prior attitudes. Risk Anal: Int J 23(4):717–726. https://doi.org/10.1111/1539-6924.00350

Xu Y, Bradford N, Garg R (2023) Transparency enhances positive perceptions of social artificial intelligence. Hum Behav Emerg Technol. https://doi.org/10.1155/2023/5550418

Youn S, Jin SV (2021) “In AI we trust?” The effects of parasocial interaction and technopian versus luddite ideological views on chatbot-based customer relationship management in the emerging “feeling economy”. Comput Hum Behav 119:106721. https://doi.org/10.1016/j.chb.2021.106721

Zhan ES, Molina MD, Rheu M, Peng W (2023) What is there to fear? Understanding multi-dimensional fear of AI from a technological affordance perspective. Int J Hum–Comput Interact, 1–18. https://doi.org/10.1080/10447318.2023.2261731

Zhang B, Dafoe A (2019) Artificial intelligence: American attitudes and trends. SSRN 3312874. https://doi.org/10.2139/ssrn.3312874

Zhou T, Lu Y, Wang B (2016) Examining online consumers’ initial trust building from an elaboration likelihood model perspective. Inf Syst Front 18:265–275. https://doi.org/10.1007/s10796-014-9530-5

Acknowledgements

This research is supported by the Arthur W. Page Center’s 2023 Page/Johnson Legacy Scholar Grant (No. 2023DIG06).

Author information

Authors and Affiliations

Contributions

H. Y. Yoon contributed to the conceptualization and writing of the manuscript and the analysis of the dataset. K. Park contributed to the conceptualization and writing of the manuscript and the analysis of the dataset. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This study was reviewed and approved by the Hong Kong Baptist University Research Ethics Committee (Human Research Ethics – Non-clinical; Ref. No. REC/22-23/0680) on 5 October 2023. All procedures involving human participants were conducted in accordance with the relevant guidelines and regulations, including the Declaration of Helsinki. The scope of the approval covered the administration of an online survey to examine how algorithm transparency signaling influences trust in AI-assisted communication.

Informed consent