Abstract

Modern educational systems increasingly demand sophisticated analytical tools to assess and enhance student performance through personalized learning approaches. Yet, educational analytics models often lack comprehensive integration of behavioural, cognitive, and emotional insights, limiting their predictive accuracy and real-world applicability. While traditional machine learning approaches such as random forest and neural networks have been applied to educational data, they typically present trade-offs between interpretability and predictive capability, failing to capture student learning processes’ complex, multidimensional nature. This research introduces CognifyNet, a novel hybrid AI-driven educational analytics model that combines ensemble learning principles with deep neural network architectures to analyse student behaviours, cognitive patterns, and engagement levels through an innovative two-stage fusion mechanism. The model integrates random forest decision-making with multi-layer perceptron feature learning, incorporating sentiment analysis and advanced data processing pipelines to generate personalized learning trajectories while maintaining model transparency. Evaluated through rigorous 5-fold cross-validation on a comprehensive dataset of 1200 anonymized student records and validated across multiple educational platforms, including UCI Student Performance and Open University Learning Analytics datasets, CognifyNet demonstrates superior performance over conventional approaches, achieving 10.5% reduction in mean squared error and 83% reduction in mean absolute error compared to baseline random forest models, with statistical significance confirmed through paired t-tests (p < 0.01). The model’s adaptive architecture incorporates bias mitigation mechanisms that reduce demographic parity differences from 18% to 7% while maintaining predictive accuracy, ensuring equitable analytics across diverse student populations. 
These findings establish CognifyNet as a transformative tool for data-informed, student-centred educational strategies, offering educators actionable insights for early intervention and personalized support while bridging the critical gap between artificial intelligence capabilities and practical educational implementation.

Introduction

Education stands at the forefront of technological evolution. As we navigate the complexities of the modern academic landscape, the integration of artificial intelligence (AI) has emerged as a transformative force. This research explores the potential of AI-powered educational analytics to optimize student performance (Lee and Lee, 2021).

Traditionally, education has been a one-size-fits-all approach, where students receive uniform instructions and assessments. This approach may overlook the diverse learning needs and cognitive variations among students. The advent of AI promises personalized and adaptive learning experiences. By harnessing the power of machine learning, deep neural networks, and natural language processing, educators can gain unprecedented insights into individual student behaviours, preferences, and challenges (Bahroun et al. 2023).

Despite the promising prospects, implementing AI in education has its challenges. Ethical considerations, data privacy concerns, and the need for transparent algorithms demand careful navigation. Additionally, there is a pressing need to address the potential biases ingrained in AI models (Borge, 2016), ensuring that the benefits are equitably distributed across diverse student populations (Bozkurt, 2023).

The current state of educational technology often lacks the sophistication needed to truly understand the intricate interplay between a student’s cognitive processes and academic performance. Traditional analytical approaches (Moulieswaran and Kumar, 2023; Steinbauer et al. 2021; Holmes and Tuomi, 2022) often fail to account for the emotional and motivational aspects that influence learning outcomes (Gadhvi and Parmar, 2023).

Furthermore, educators face the challenge of effectively incorporating these advanced technologies into their teaching methodologies. Resolving these issues (Thongprasit and Wannapiroon, 2022; Almusaed et al. 2023; Gadhvi and Parmar, 2023) is imperative for the successful integration of AI-powered analytics in education, paving the way for a more inclusive, adaptive, and student-centric learning environment.

This research is motivated by the challenges the evolving educational landscape faces in addressing individualized student needs (Baten and Walker, 2022). Embracing the transformative potential of artificial intelligence (AI), we aim to employ advanced machine learning techniques, including deep neural networks and sentiment analysis, to provide personalized and adaptive learning experiences. The motivation stems from the desire to bridge the gap between diverse learning profiles and academic success while addressing ethical and privacy considerations associated with AI implementation. By unlocking new dimensions of understanding and support, our research aspires to contribute to a more equitable, transparent, and effective educational framework.

Traditional educational models struggle to provide personalized learning experiences, often resulting in a one-size-fits-all approach that overlooks individual cognitive variations. Integrating artificial intelligence (AI) brings forth challenges related to ethics, data privacy, and potential biases. This research addresses the need for AI-powered educational analytics to overcome the limitations of conventional approaches (Martínez-Comesaña et al. 2023), aiming to create a more inclusive and adaptive learning environment. The challenge is to develop solutions that consider both academic performance and emotional/motivational aspects, contributing to a transformative educational shift.

While prior research has explored standalone machine learning models such as random forest (RF), support vector machines (SVM), and neural networks in the context of academic performance prediction, these approaches often present trade-offs between interpretability and predictive capability. Tree-based models like RF offer transparency but may struggle with capturing intricate non-linear relationships, whereas deep neural networks provide powerful representational learning at the cost of explainability. CognifyNet bridges this gap through a hybrid architecture that integrates the interpretable decision patterns of RF with the deep feature learning capabilities of a multi-layer perceptron (MLP). This fusion enables the model to learn from structured academic features and behavioural data with minimal loss in explainability. Unlike conventional models that often handle engagement metrics or sentiment scores as separate or auxiliary signals, CognifyNet incorporates these features directly into the fused input pipeline, allowing them to interact meaningfully with academic predictors. This integrated modelling strategy results in a richer, more holistic understanding of student performance drivers. By addressing model interpretability and learning complexity, CognifyNet stands out as a robust, scalable, and adaptable framework, capable of outperforming traditional models while remaining practical for deployment in real-world educational environments.

Problem formulation 1: enhancing personalization

Statement

Develop an AI-powered educational analytics system to enhance personalization by adapting to individual cognitive variations.

Mathematical equations

Let \({S}_{i}\) be the learning style vector, \({P}_{i}\) denote the academic performance matrix, and \({T}_{i}\) represent the teaching strategy tensor for student i. The adaptation process can be modelled as a complex non-linear function:

Objective function: Maximize the overall personalization score using a multi-objective function:

Notations:

\({S}_{i}\): learning style vector for student i.

\({P}_{i}\): academic performance matrix for student i.

\({T}_{i}\): teaching strategy tensor for student i.

\({S}_{i}^{{\prime} }\): adapted learning style vector for student i.

σ: activation function.

\({W}_{s}\), \({U}_{p}\), \({V}_{t}\): weight matrices for learning style, academic performance, and teaching strategy, respectively.

b: bias term.

α, β: weighting factors for maximizing personalization and minimizing variability, respectively.

Adaptation is a complex, nonlinear function incorporating learning styles, academic performance, and teaching strategies. The objective function is multifaceted, maximizing personalization while minimizing student variability. The notations represent vectors, matrices, and tensors, capturing the intricate relationships in the adaptation process.
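The adaptation function and the objective are not reproduced in the text above. One form consistent with the listed notation is sketched below purely as an assumption, not as the authors' exact formulation. It assumes the performance matrix and teaching strategy tensor are flattened to vectors (written vec(·)) so the three terms can be summed, and it uses two hypothetical placeholders that do not appear in the source: Π for a per-student personalization score and Var for its variability across students.

```latex
% Assumed adaptation step; vec(.) flattening is an assumption
S_i' = \sigma\left(W_s S_i + U_p\,\mathrm{vec}(P_i) + V_t\,\mathrm{vec}(T_i) + b\right)

% Assumed multi-objective form; \Pi and \mathrm{Var} are hypothetical placeholders
\max_{W_s,\,U_p,\,V_t,\,b}\;
  \alpha \sum_{i} \Pi\left(S_i', P_i, T_i\right)
  \;-\; \beta\,\mathrm{Var}_{i}\left[\Pi\left(S_i', P_i, T_i\right)\right]
```

Under this reading, α rewards a high total personalization score while β penalizes uneven scores across students, which matches the stated roles of the two weighting factors.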

Mitigating bias and ensuring equity: Develop strategies for the nuanced mitigation of bias and the preservation of equity in AI-powered educational analytics. Let \(X\in {R}^{m\times n}\) denote the input data matrix, \(Y\in {R}^{m}\) represent the performance outcomes vector, and \(Z\in {R}^{n\times p}\) be the demographic information tensor. The optimization problem for bias mitigation and equity promotion is formulated as follows:

Objective function: Minimize the objective function \(f(X,Y,Z)+\lambda \,{\rm{Trace}}(C\,{X}^{{\rm {T}}}\,X)\), encapsulating the intricate relationships within the input data matrix and penalizing biases, thereby mitigating bias and promoting equity.

Notations:

X: input data matrix of dimension m × n.

Y: performance outcomes vector of dimension m.

Z: demographic information tensor of dimension n × p.

f: objective function capturing bias and disparity.

λ: regularization parameter.

C: covariance matrix capturing complex relationships in the input data.

δ: tolerance parameter for acceptable bias.

The formulated optimization problem includes a regularization term with a covariance matrix C that penalizes biases in the input data, supporting bias mitigation and the preservation of equity in AI-powered educational analytics.
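To make the regularization term concrete, the following minimal sketch evaluates λ·Trace(C XᵀX) with NumPy. The function names, the toy matrix, and the choice of an identity matrix for C are illustrative assumptions; the source does not specify how f or C are computed, so the base loss is passed in as a plain number.

```python
import numpy as np

def bias_penalty(X, C, lam):
    """Return lam * Trace(C X^T X) for X of shape (m, n) and C of shape (n, n)."""
    return lam * np.trace(C @ X.T @ X)

def regularized_objective(base_loss, X, C, lam):
    """Add the penalty to a base loss f(X, Y, Z) that is computed elsewhere (hypothetical)."""
    return base_loss + bias_penalty(X, C, lam)

# Toy input: m = 2 records, n = 2 features (illustrative values only).
X = np.array([[1.0, 2.0],
              [3.0, 4.0]])

# With C = I the penalty reduces to lam times the squared Frobenius norm of X.
C_identity = np.eye(2)
penalty = bias_penalty(X, C_identity, lam=0.5)

# One possible choice of C: the sample covariance of the feature columns (assumption).
C_cov = np.cov(X, rowvar=False)
penalty_cov = bias_penalty(X, C_cov, lam=0.5)

total = regularized_objective(base_loss=2.0, X=X, C=C_identity, lam=0.5)
```

A larger λ weights the penalty more heavily relative to the base loss, so in practice it would be tuned together with the other hyperparameters.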

The CognifyNet model embodies a pioneering approach to educational analytics, uniquely blending ensemble learning principles with neural network frameworks to address the nuanced needs of student assessment and personalized support. Unlike traditional models, CognifyNet enhances personalization by adapting in real-time to individual learning profiles, utilizing a hybrid structure that captures both cognitive and emotional dimensions of student data. Integrating sentiment and engagement analysis directly within its framework, CognifyNet surpasses typical academic metrics, providing a richer, multidimensional view of student progress that accounts for engagement and emotional influences. This model emphasizes ethical AI in education, featuring built-in bias mitigation and privacy safeguards to ensure equitable analytics across diverse student demographics. CognifyNet’s innovative architecture also demonstrates a marked improvement in predictive accuracy, consistently outperforming established models like random forest (RF) and multi-layer perceptron (MLP) in error reduction (MSE and MAE), underscoring its advanced analytical power. As a scalable and adaptable solution, CognifyNet offers an unprecedented depth of insight, empowering educators with actionable, data-informed recommendations to create a truly student-centred, adaptive learning environment.

Our research paper follows a carefully structured framework with five distinct sections, each contributing to a thorough exploration of our study. The “Introduction” section serves as the foundational segment, outlining the challenges within the evolving educational landscape and providing a context for our research. Moving to the section “Literature review”, we engage with existing research, critically analysing and contextualizing our work within the broader academic discourse. The “Methodology” section intricately details our research approach, emphasizing the integration of advanced techniques and models that underscore the innovation driving our study. This comprehensive methodological overview ensures transparency and reliability in our research endeavours. Subsequently, the “Results and discussions” section offers a detailed presentation of our empirical findings, providing a nuanced analysis and interpretation of the data. This segment is crucial for a deeper understanding of the outcomes and their implications. Finally, the “Conclusions” section synthesizes the critical insights derived from our study, highlights the contributions made, and suggests potential directions for future research. This concluding section ensures a cohesive and conclusive end to our research paper, offering a valuable synthesis of our findings and their broader implications.

Literature review

The rapid growth of AI technologies has prompted significant interest and research into their potential applications and implications in education. Wahab et al. (2022) conducted a content analysis to examine the benefits, risks, and regulatory considerations surrounding ChatGPT in Chinese academia, providing insights into broader applications of AI in language learning and teaching. Expanding on this, Kumaar et al. (2022) advocated for a human-centred approach to AI integration within Philippine educational systems, highlighting both opportunities and challenges of implementing AI in school environments. This research emphasizes the need for thoughtful integration of AI to balance its potential with ethical considerations. AI’s influence on assessment methods has also been explored. For instance, Farooq (2020) examined ChatGPT’s transformative potential in higher education assessments, questioning whether it represents a revolutionary change or challenges established practices. Farooq’s study also delved into students’ perceptions of AI-driven feedback tools like Grammarly, contributing valuable insights into the acceptance and efficacy of automated feedback mechanisms. Another area of interest is using chatbots and messaging bots as instructional tools. A study on Messenger bots in accounting education shed light on their effectiveness and the challenges students face when integrating these systems into specific disciplines. Similarly, Ahmad et al. (2023) reviewed speech-to-text recognition technology, exploring how it could enhance accessibility and engagement in learning environments by supporting diverse learning needs. AI’s potential in collaborative settings has also been investigated. Yang et al. (2023) and Baskara (2023) examined the cooperative use of ChatGPT in argumentative writing classrooms, assessing its impact as a co-writing tool to foster argumentative skills and collaborative learning.

In a different vein, Ouyang and Jiao (2021) introduced posthumanist applied linguistics as a theoretical framework, suggesting that posthumanist perspectives could illuminate the evolving dynamics between human and AI technologies in educational contexts. Ethics remains a central concern in AI integration. Tayyaba (2018) explored ethical challenges associated with AI-generated scholarly content, particularly in medical publishing, highlighting the need for ethical oversight. Similarly, Hooda et al. (2022) and Quy et al. (2023) investigated the use of ChatGPT by English as a Foreign Language (EFL) learners in informal settings, employing the Technology Acceptance Model to understand learners’ acceptance and usage of AI tools for language learning. Renz and Vladova (2021) and Perdriau and Lewis (n.d.) proposed a human-centred approach to AI integration in Philippine education, emphasizing the importance of prioritizing human factors in adopting AI in educational systems. Further, Quy et al. (2023) studied the role of Google Translate as a self-directed language learning tool, revealing insights into how learners independently utilize AI for educational support, a perspective also noted by Wahab et al. (2022) and further contextualized by studies from Capgemini (2021), Kusal et al. (2021), and Farooq (2020). Finally, Lu and Harris (2018) explored the implications of ChatGPT on traditional university practices, examining perceptions from scholars and students on AI’s evolving role in higher education and its potential to reshape academic environments. This breadth of literature underscores AI’s varied impact across educational contexts and highlights the need to carefully consider its potential benefits and ethical challenges. Table 1 presents a comparative analysis of previous studies.

The comparative analysis of previous studies reveals diverse approaches to leveraging technology for education. For instance, the survey on chatbots and AI for learning provides valuable insights into student perspectives in Sweden, contributing to an enhanced understanding of AI adoption in local universities, albeit within a specific geographical context. Google Translate’s role in teaching English is highlighted for its positive impact on language learning support, though the study acknowledges limitations tied to machine translation accuracy. Studies like “Collaborating with ChatGPT in argumentative writing classrooms” (Abd-Alrazaq et al. 2020) explore ethical considerations in integrating AI-generated content, showcasing improvements in student engagement and writing skills.

Methodology

The methodology employed in this research provides a structured and rigorous approach to developing and evaluating CognifyNet, our proposed machine learning model designed to enhance educational analytics. This section outlines the comprehensive procedures used, beginning with data collection and preprocessing, where we compile a diverse dataset encompassing academic performance, cognitive factors, and demographic information. Following data preparation, we delve into model architecture and training, detailing the unique hybrid structure of CognifyNet, which combines ensemble learning and neural networks to capture both interpretability and predictive accuracy. Additionally, we apply sentiment analysis techniques to analyse student engagement and emotional dimensions, which are integral to CognifyNet’s personalized insights. To evaluate the model’s effectiveness, we utilize key performance metrics such as mean squared error (MSE) and mean absolute error (MAE), allowing for a precise comparison with established models like random forest (RF) and multi-layer perceptron (MLP). Each methodological step is meticulously documented to ensure reproducibility and transparency, providing a clear framework for others to replicate or build upon our research in educational AI.

Data collection

This section details the data collection process used to support the development and evaluation of CognifyNet. The dataset was gathered from various educational institutions and comprises multiple facets of student information essential for predictive modelling in educational analytics. The collected data includes academic performance records, cognitive assessments, teaching strategies, and demographic information to capture a comprehensive student learning experience profile. The dataset is designed to ensure diversity in academic disciplines, education levels, and socioeconomic backgrounds, enhancing the model’s applicability across varied learning contexts.

Specific data points were selected to support CognifyNet’s ability to predict and personalize learning outcomes, as detailed in Table 2.

Classification target: The classification target for CognifyNet is the Student_Performance_Score, a composite score representing the student’s overall academic achievement. This target variable allows CognifyNet to focus on accurately predicting performance and identify areas where personalized intervention may benefit.

Training and validation procedures: Cross-validation was employed to ensure robust model training, splitting the dataset into training and testing subsets to evaluate CognifyNet’s generalization capabilities. This approach ensures that the model’s performance metrics are reliable and not overly dependent on a single data split. The model’s hyperparameters were optimized through a grid search process, testing various configurations for each component of the hybrid architecture, including the number of trees in the ensemble and the layers in the neural network. This tuning process was essential for achieving optimal accuracy and minimizing prediction errors.
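As a rough illustration of the grid search described here, the sketch below enumerates every configuration for the ranges reported later in the Methodology and selects the one with the lowest score. The dictionary keys, the helper names, and `toy_score` are hypothetical; in a real run the scoring function would return the mean validation MSE over the five folds.

```python
from itertools import product

# Ranges as reported in the Methodology; the key names are illustrative.
rf_grid = {
    "n_trees": [50, 100, 150],
    "max_depth": [6, 8, 10, 12],
    "min_samples_leaf": [1, 2, 4],
}
mlp_grid = {
    "hidden_layers": [1, 2],
    "neurons_per_layer": [4, 5, 6],
    "learning_rate": [0.001, 0.005, 0.01],
    "dropout": [0.1, 0.2, 0.3],
    "l2": [0.0001, 0.001, 0.01],
}

def expand_grid(grid):
    """List every combination of hyperparameter values as a dict."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]

def grid_search(grid, score_fn):
    """Return the configuration with the lowest score and that score."""
    best = min(expand_grid(grid), key=score_fn)
    return best, score_fn(best)

def toy_score(cfg):
    """Hypothetical stand-in for mean cross-validated MSE (not real results)."""
    return abs(cfg["max_depth"] - 8) + abs(cfg["n_trees"] - 100) / 100 + cfg["min_samples_leaf"]

rf_configs = expand_grid(rf_grid)    # 3 * 4 * 3 = 36 configurations
mlp_configs = expand_grid(mlp_grid)  # 2 * 3 * 3 * 3 * 3 = 162 configurations
best_cfg, best_score = grid_search(rf_grid, toy_score)
```

Counting the configurations up front is a quick way to estimate the tuning cost, since each one is trained once per fold.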

The data utilized in this research represents a comprehensive compilation sourced from diverse educational datasets. Academic performance records, learning style assessments, teaching strategy data, and demographic information were meticulously collected from various educational institutions, ensuring a representative sample encompassing different academic disciplines, education levels, and socioeconomic backgrounds. The datasets were carefully curated to maintain a balance and avoid biases in the representation of student profiles and experiences. It is important to note that specific references to the individual datasets, including the names of educational institutions or organizations, are intentionally omitted to uphold confidentiality, ethical considerations, and privacy standards.

The anonymized nature of the data ensures that individual student identities remain protected, aligning with ethical guidelines and data privacy principles. The decision to omit explicit references to the source institutions is a deliberate measure to prioritize the participants’ confidentiality and privacy while maintaining the research’s rigour and integrity. This approach is aligned with ethical standards in educational research, where confidentiality and privacy of participants are paramount considerations. The foundation of our study lies in the comprehensive collection of diverse educational data. This includes academic performance records, learning style assessments, teaching strategy data, and demographic information. The dataset is carefully curated to ensure representation across different academic disciplines, levels of education, and socioeconomic backgrounds. Figure 1 illustrates the architecture of the proposed model, CognifyNet, which is structured as a multi-layer neural network optimized for educational data analytics. The model architecture consists of an input layer, two hidden layers, and an output layer designed to capture and process complex relationships within the data.

Input layer: The input layer, denoted as \({R}^{7}\), contains seven neurons representing the primary input features. These features may include key student metrics such as attendance, previous exam scores, hours of study, and cognitive improvement scores. This layer feeds the essential data into the network for subsequent processing.

Hidden layers: The model incorporates two hidden layers with a specified number of neurons to enable complex feature extraction. The first hidden layer, represented as \({R}^{5}\), contains five neurons. This layer processes input data from the input layer, applying weights and activation functions to detect initial patterns. The second hidden layer, denoted as \({R}^{4}\), contains four neurons. It further refines these patterns by processing outputs from the first hidden layer. These hidden layers introduce non-linearity into the network, allowing CognifyNet to capture intricate relationships within the educational data.

Output layer: The output layer, represented as \({R}^{1}\), comprises a single neuron responsible for producing the final prediction or classification. This output can be a performance score or a specific classification regarding the student’s predicted learning outcome. The output layer synthesizes the information processed through the hidden layers, delivering a targeted result based on the input features.

The connectivity between layers represents the network’s capacity to learn complex data patterns, with each neuron in a layer fully connected to neurons in the subsequent layer. This architecture allows CognifyNet to perform deep feature extraction and provide accurate predictions, enhancing its application in personalized educational analytics.
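The layer sizes described above (7, 5, 4, 1) can be illustrated with a minimal fully connected forward pass in NumPy. This is a sketch under stated assumptions: the source does not name the activation function or the initialization, so ReLU hidden activations, a linear output, and small random weights are assumed here, and all function names are illustrative.

```python
import numpy as np

LAYER_SIZES = [7, 5, 4, 1]  # input, hidden 1, hidden 2, output (from the text)

def init_params(sizes, seed=0):
    """Create one (weights, bias) pair per pair of consecutive layers."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def forward(x, params):
    """Propagate a batch x of shape (batch, 7) through the network."""
    h = x
    for layer, (W, b) in enumerate(params):
        h = h @ W + b
        if layer < len(params) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers (assumed activation)
    return h  # linear output, suitable for a regression score

params = init_params(LAYER_SIZES)
batch = np.ones((3, 7))          # three hypothetical students, seven features each
pred = forward(batch, params)    # shape (3, 1)
n_params = sum(W.size + b.size for W, b in params)
```

With full connectivity the network has (7·5 + 5) + (5·4 + 4) + (4·1 + 1) = 69 trainable parameters, which is small enough to train on a dataset of this size.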

Cross-validation strategy: To ensure the robustness and generalizability of model performance, we employed a 5-fold cross-validation strategy throughout our experiments. The dataset was partitioned into five equal subsets (folds), where each fold served as a validation set once, while the remaining four were used for training. This process was repeated five times to ensure every sample was used for training and validation. We applied stratified sampling based on performance percentiles to avoid performance bias due to class imbalance in the target variable (Student Performance Score). Specifically, students were grouped into low, medium, and high-performance bands using quantile-based thresholds, and these proportions were preserved within each fold. This stratification ensured that each training and validation split reflected a representative distribution of performance categories, maintaining consistency and fairness in learning across all folds.

To further enhance the reliability of the evaluation, the entire cross-validation procedure was repeated 10 times, each with a different random seed to account for variance introduced by data shuffling and model initialization. This yielded 50 independent evaluation runs per model. We recorded two core performance metrics for each run—mean squared error (MSE) and mean absolute error (MAE). The final results were reported as these metrics’ mean and standard deviation across all runs, providing a statistically grounded measure of model stability and comparative performance. This repeated stratified cross-validation approach allowed us to minimize overfitting, reduce variance in evaluation, and offer more trustworthy conclusions about the effectiveness of the CognifyNet model relative to baseline methods.
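The repeated stratified procedure can be sketched in plain Python as follows. The band assignment here is rank-based, which approximates quantile thresholds, and the synthetic scores and all function names are assumptions introduced only for illustration.

```python
import random

def performance_bands(scores, n_bands=3):
    """Assign each score to a rank-based band (0 = low, n_bands - 1 = high)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    bands = [0] * len(scores)
    for rank, idx in enumerate(order):
        bands[idx] = rank * n_bands // len(scores)
    return bands

def stratified_folds(bands, n_folds=5, seed=0):
    """Shuffle each band and deal its members round-robin into the folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for band in sorted(set(bands)):
        members = [i for i, b in enumerate(bands) if b == band]
        rng.shuffle(members)
        for pos, idx in enumerate(members):
            folds[pos % n_folds].append(idx)
    return folds

def repeated_stratified_splits(scores, n_folds=5, n_repeats=10):
    """Yield (train_indices, validation_indices) for every fold of every repeat."""
    bands = performance_bands(scores)
    for seed in range(n_repeats):  # a different seed for each repetition
        folds = stratified_folds(bands, n_folds, seed)
        for k in range(n_folds):
            train = [i for j, fold in enumerate(folds) if j != k for i in fold]
            yield train, folds[k]

# Synthetic scores for 1200 hypothetical students (not the study data).
scores = [40 + (i * 37) % 60 for i in range(1200)]
splits = list(repeated_stratified_splits(scores))
```

Each of the 50 splits keeps the three bands in equal proportion, so MSE and MAE can be averaged across runs without any single fold being dominated by one performance band.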

Dataset description: The dataset utilized in this study comprises 1200 anonymized student records aggregated from multiple educational institutions to ensure diversity in learning backgrounds, academic disciplines, and socioeconomic contexts. Each student record contains eight primary features, including numerical and categorical variables relevant to academic performance prediction. These features capture various attributes, such as attendance percentage, study hours, previous exam scores, cognitive improvement metrics, demographic details (e.g., age and gender), and overall performance scores.

A thorough data cleaning process was conducted to handle incomplete records. Specifically, rows with more than 20% missing data were discarded to maintain data integrity without introducing bias from excessive imputation. Different imputation strategies were employed based on feature types for the remaining instances with partial missing values. Numerical fields, such as Hours of Study and Previous Exam Scores, were imputed using the feature-wise mean to preserve central tendencies. Categorical variables, such as Gender, were filled using the most frequent category (mode) to retain consistency across samples.
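The cleaning rules described above can be sketched in plain Python. The column names and the five toy records are hypothetical, and `None` is assumed to mark a missing value.

```python
from collections import Counter
from statistics import mean

NUMERIC = ["attendance", "hours_of_study", "previous_exam_score", "age"]
CATEGORICAL = ["gender"]

def clean(records, numeric, categorical, max_missing=0.20):
    """Drop rows with more than max_missing missing values, then impute the rest."""
    columns = numeric + categorical
    kept = [
        dict(r) for r in records
        if sum(r[c] is None for c in columns) / len(columns) <= max_missing
    ]
    for col in numeric:  # numerical fields: feature-wise mean
        fill = mean(r[col] for r in kept if r[col] is not None)
        for r in kept:
            if r[col] is None:
                r[col] = fill
    for col in categorical:  # categorical fields: most frequent category
        fill = Counter(r[col] for r in kept if r[col] is not None).most_common(1)[0][0]
        for r in kept:
            if r[col] is None:
                r[col] = fill
    return kept

# Toy records (illustrative values only, not the study data).
records = [
    {"attendance": 90, "hours_of_study": 10, "previous_exam_score": 70, "age": 18, "gender": "F"},
    {"attendance": 80, "hours_of_study": None, "previous_exam_score": 60, "age": 19, "gender": "M"},
    {"attendance": None, "hours_of_study": None, "previous_exam_score": 50, "age": 20, "gender": "F"},
    {"attendance": 70, "hours_of_study": 20, "previous_exam_score": 80, "age": 18, "gender": None},
    {"attendance": 60, "hours_of_study": 15, "previous_exam_score": 65, "age": 21, "gender": "F"},
]
cleaned = clean(records, NUMERIC, CATEGORICAL)
```

In this toy set the third record is missing two of five fields (40%) and is dropped, while the records missing a single field (exactly 20%) are kept and imputed.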

Visual exploratory data analysis was performed to understand the data distribution better. Figures 2 and 3 display histograms for key features, including Hours of Study and Student Performance Score. These plots revealed a slightly right-skewed distribution in both cases, indicating that most students clustered toward lower-to-mid ranges, with a long tail of higher-performing or high-engagement students.

Before feeding the data into machine learning models, all numerical features were standardized using z-score normalization. This transformation ensured each feature contributed equally during model training and prevented scale dominance by high-magnitude features. The preprocessing pipeline was applied uniformly to training and testing splits, ensuring consistency and generalization across all folds in the cross-validation process.
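A minimal z-score sketch in NumPy is given below. It assumes that the mean and standard deviation are estimated on the training split of each fold and then reused for the held-out split, a common way to keep the two consistent; the source does not state where the statistics were computed, and the function names and toy values are illustrative.

```python
import numpy as np

def fit_scaler(train):
    """Estimate per-feature mean and standard deviation on the training split."""
    mu = train.mean(axis=0)
    sigma = train.std(axis=0)
    sigma = np.where(sigma == 0, 1.0, sigma)  # avoid division by zero for constant features
    return mu, sigma

def transform(X, mu, sigma):
    """Apply z = (x - mean) / std feature by feature."""
    return (X - mu) / sigma

# Toy columns: hours of study, previous exam score (illustrative values only).
train = np.array([[2.0, 50.0], [4.0, 70.0], [6.0, 90.0]])
val = np.array([[4.0, 70.0], [8.0, 110.0]])

mu, sigma = fit_scaler(train)
train_z = transform(train, mu, sigma)
val_z = transform(val, mu, sigma)
```

After the transform both training columns have zero mean and unit variance even though their raw scales differ by an order of magnitude.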

To fine-tune the CognifyNet model and its baseline counterparts, a grid search strategy was applied for hyperparameter optimization. For the random forest (RF) model, parameter ranges included the number of trees [50, 100, 150], maximum tree depth [6, 8, 10, 12], and minimum samples per leaf [1, 2, 4]. For the multi-layer perceptron (MLP), a grid search was performed over the number of hidden layers [1, 2], neurons per layer [4, 5, 6], learning rate values [0.001, 0.005, 0.01], dropout rates [0.1, 0.2, 0.3], and L2 regularization strengths [0.0001, 0.001, 0.01]. The combination yielding the lowest average validation error (MSE) across 5-fold cross-validation was selected for each model.

We quantified fairness using the demographic parity difference (DPD) and equal opportunity difference (EOD) to evaluate the effectiveness of the group disparity penalty introduced for bias mitigation. Across multiple training runs, the DPD was reduced from 0.18 (pre-penalty) to 0.07 (post-penalty), while the EOD dropped from 0.22 to 0.08. These reductions confirm that the regularization term not only improved parity across sensitive groups (e.g., gender or socioeconomic status) but did so without compromising predictive accuracy, as reflected in the stable MSE and MAE values. This empirically validates the dual objective of the CognifyNet architecture: enhancing personalization while promoting equity.
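The two fairness metrics can be computed as in the sketch below. Both are defined on binary outcomes, so the sketch assumes the predicted and actual performance scores have already been thresholded into a positive class (for example, a pass). The threshold, the function names, and the toy labels are assumptions, not details taken from the study.

```python
def rate(flags):
    """Fraction of positive (1) entries in a non-empty list of 0/1 flags."""
    return sum(flags) / len(flags)

def demographic_parity_difference(y_pred, group):
    """Largest gap in positive prediction rate between groups."""
    rates = [
        rate([p for p, g in zip(y_pred, group) if g == grp])
        for grp in sorted(set(group))
    ]
    return max(rates) - min(rates)

def equal_opportunity_difference(y_true, y_pred, group):
    """Largest gap in true positive rate between groups.

    Assumes every group contains at least one actual positive.
    """
    tprs = [
        rate([p for t, p, g in zip(y_true, y_pred, group) if g == grp and t == 1])
        for grp in sorted(set(group))
    ]
    return max(tprs) - min(tprs)

# Toy binary labels for two hypothetical groups (not the study data).
y_true = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
group = ["A"] * 5 + ["B"] * 5

dpd = demographic_parity_difference(y_pred, group)
eod = equal_opportunity_difference(y_true, y_pred, group)
```

In the toy data group A receives a positive prediction 60% of the time against 20% for group B, giving a DPD of 0.4, and the true positive rates of 2/3 and 1/2 give an EOD of about 0.17. Values closer to zero indicate more even treatment of the groups.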

Figure 2 illustrates the distribution of study hours among participants. It shows the range and frequency of study hours, offering insights into the study habits and patterns within the observed population.

Academic performance data

Educational institutions collect academic performance data, including grades, assessment scores, and other relevant academic metrics. This data forms the basis for evaluating the effectiveness of AI-powered analytics in predicting and enhancing student performance.

Learning styles assessments

Learning style assessments are conducted to understand individual cognitive variations. Various established frameworks for learning styles, such as Visual, Auditory, Reading/Writing, Kinaesthetic (VARK), are employed to categorize students based on their preferred learning modes.

Teaching strategy data

Data related to teaching strategies employed in educational settings is gathered. This includes information on instructional methods, content delivery approaches, and engagement techniques used by educators.

Demographic information

Demographic information, including gender, socioeconomic background, and cultural context, is collected to explore potential correlations between these variables and student performance. Care is taken to ensure privacy and ethical considerations when handling sensitive demographic data.

Figure 3 depicts the distribution of student performance scores, visually representing the spread and variability of academic achievements within a given population. This graphical representation offers insights into the overall performance landscape, showcasing the range of scores and highlighting patterns such as clusters or outliers among the student cohort.

Distribution of Student Performance Score: The foundation of our research lies in the comprehensive collection of diverse educational data. This includes detailed academic performance records encompassing grades, assessment scores, and other relevant academic metrics. The dataset is carefully curated to ensure representation across different academic disciplines, levels of education, and socioeconomic backgrounds.

The dataset used in this research is a comprehensive compilation of educational data from diverse sources. It includes academic performance records, learning style assessments, teaching strategy data, and demographic information for a representative sample of students. The academic performance data comprises specific details such as grades, assessment scores, and other pertinent academic metrics. This information is anonymized to protect the privacy of individuals and ensure compliance with ethical standards. Table 3 shows the features and descriptions.

Machine learning models

In this section, we describe the three machine learning models used in our research: random forest (RF), multi-layer perceptron (MLP), and the proposed hybrid model, CognifyNet.

Random forest (RF)

Random forest is an ensemble learning method that constructs many decision trees during training and outputs the mode of their predictions for classification problems or the average prediction for regression problems. Each decision tree is built using a random subset of the features and data points, which promotes diversity among the trees. The algorithm uses the Gini impurity to measure impurity at each node and information gain to determine the optimal feature for node splitting.

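The Gini impurity of a node containing the sample set \(D\) is defined as follows:

\[G(D)=1-\sum_{k=1}^{K}{p}_{k}^{2},\]

where \(K\) is the number of classes and \({p}_{k}\) is the proportion of samples in \(D\) that belong to class \(k\).

The information gain for a specific feature \(A\) in a decision tree is calculated as follows:

\[{\rm {IG}}(D,A)=I(D)-\sum_{v\in {\rm {values}}(A)}\frac{|{D}_{v}|}{|D|}\,I({D}_{v}),\]

where \(I(\cdot )\) is the node impurity measure (here the Gini impurity \(G\)) and \({D}_{v}\) is the subset of \(D\) for which feature \(A\) takes the value \(v\).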
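As a small worked illustration of the impurity measure and the gain used for node splitting, the following sketch computes both for a toy node. The labels and the split are hypothetical, and the gain is measured with the Gini impurity as the impurity function, which is an assumption about how the two quantities are combined here.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_gain(parent, children):
    """Impurity reduction obtained by splitting `parent` into `children`."""
    n = len(parent)
    weighted = sum(len(child) / n * gini_impurity(child) for child in children)
    return gini_impurity(parent) - weighted


# Hypothetical toy node: 4 "pass" and 4 "fail" labels, split by some feature.
parent = ["pass"] * 4 + ["fail"] * 4
left = ["pass"] * 3 + ["fail"] * 1
right = ["pass"] * 1 + ["fail"] * 3

parent_gini = gini_impurity(parent)
gain = impurity_gain(parent, [left, right])
print(parent_gini, gain)
```

A perfectly mixed two-class node has the maximum Gini impurity of 0.5, and a split is preferred when it leaves the child nodes purer than the parent.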

Algorithm 1

Random Forests

1. Data: Training dataset D, number of trees T, number of features considered m

2. Result: Random forest model RF

3. for t ← 1 to T do

4. Sample a random subset Dt of the training data D with replacement

5. Grow a decision tree Tt on Dt:

6. while stopping criteria not met do

7. Randomly select m features from the total features

8. Choose the best feature among the selected features to split the node

9. Split the node into child nodes based on the chosen feature

10. Recursively apply the above steps to each child node

11. end while

12. RF ← RF ∪ {Tt}

13. end for

14. Data: Random forest model RF, new instance Xnew

15. Result: Predicted label for Xnew

16. for each tree Ti in RF do

17. Predict the label for Xnew using tree Ti

18. end for

19. Aggregate the predictions by taking the mode (classification) or the mean (regression)

20. return the aggregated prediction
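The steps of Algorithm 1 can be sketched for the regression case as follows. This is a minimal illustration, not the implementation used in the study: it assumes scikit-learn's `DecisionTreeRegressor` as the tree learner, averages the tree outputs, and uses synthetic data; the function names `fit_forest` and `predict_forest` are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_forest(X, y, n_trees=10, m=2, seed=0):
    """Grow n_trees trees, each on a bootstrap sample, with m features tried per split."""
    rng = np.random.default_rng(seed)
    forest = []
    for _ in range(n_trees):
        idx = rng.integers(0, len(X), size=len(X))  # sample D_t with replacement
        tree = DecisionTreeRegressor(
            max_features=m, random_state=int(rng.integers(1_000_000))
        )
        forest.append(tree.fit(X[idx], y[idx]))
    return forest


def predict_forest(forest, X_new):
    """Aggregate the tree predictions by averaging (regression case)."""
    return np.mean([tree.predict(X_new) for tree in forest], axis=0)


# Synthetic stand-in data (hypothetical): 3 features, roughly linear target.
rng = np.random.default_rng(1)
X = rng.uniform(0, 1, size=(200, 3))
y = 40 + 50 * X[:, 0] + rng.normal(0, 1, 200)

forest = fit_forest(X, y, n_trees=10, m=2)
pred = predict_forest(forest, X[:5])
print(pred)
```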

Figure 4 visually represents the dispersion of student performance scores, offering a graphical overview of the distribution of academic achievements within the studied population. This graph provides insights into the variation of students’ scores, patterns, and central tendencies, aiding in a comprehensive understanding of academic outcomes.

Multi-layer perceptron (MLP)

The multi-layer perceptron is an artificial neural network with multiple layers, including an input layer, one or more hidden layers, and an output layer. Neurons in each layer are connected to neurons in the subsequent layer, and each connection has a weight associated with it. The model uses an activation function to introduce non-linearity. The training of MLP involves adjusting the weights using backpropagation and optimization algorithms.

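The forward pass for the neurons in layer \(l\) of an MLP is given by

\[{z}^{(l)}={W}^{(l)}\,{a}^{(l-1)}+{b}^{(l)},\qquad {a}^{(l)}=\sigma \left({z}^{(l)}\right),\]

where \({a}^{(0)}\) is the input vector, \({W}^{(l)}\) and \({b}^{(l)}\) are the weights and biases of layer \(l\), and \(\sigma \) is the activation function. The output layer is computed in the same way: \({z}^{(L+1)}={W}^{(L+1)}\,{a}^{(L)}+{b}^{(L+1)}\), followed by \({a}^{(L+1)}=\sigma \left({z}^{(L+1)}\right)\).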

Algorithm 2

Multi-Layer perceptron

1. Data: Training dataset D, number of hidden layers L, learning rate η

2. Result: Trained MLP model

3. Initialize weights and biases randomly for each layer

4. for each epoch do

5. for each training instance \(({X}_{i},{y}_{i})\) do

6. Forward pass: for each hidden layer l = 1 to L do

7. Compute the weighted sum: \({z}^{(l)}={W}^{(l)}\,{a}^{(l-1)}+{b}^{(l)}\)

8. Apply activation function: \({a}^{(l)}=\sigma \left({z}^{(l)}\right)\)

9. Compute the output layer: \({z}^{(L+1)}={W}^{(L+1)}\,{a}^{(L)}+{b}^{(L+1)}\), then apply activation function: \({a}^{(L+1)}=\sigma \left({z}^{(L+1)}\right)\)

10. Backward pass: compute output layer error: \({\delta }^{(L+1)}=\frac{\partial C}{\partial {z}^{(L+1)}}\)

11. for each hidden layer l = L to 1 do

12. Compute hidden layer error: \({\delta }^{(l)}={\left({W}^{(l+1)}\right)}^{T}{\delta }^{(l+1)}\odot \frac{\partial \sigma }{\partial {z}^{(l)}}\)

13. Weight and bias update: update weights and biases for each layer using gradient descent

14. Data: Trained MLP model, new instance Xnew

15. Result: Predicted label for Xnew

16. Forward pass: for each hidden layer l = 1 to L do

17. Compute the weighted sum: \({z}^{(l)}={W}^{(l)}\,{a}^{(l-1)}+{b}^{(l)}\)

18. Apply activation function: \({a}^{(l)}=\sigma \left({z}^{(l)}\right)\)

19. Compute the output layer: \({z}^{(L+1)}={W}^{(L+1)}\,{a}^{(L)}+{b}^{(L+1)}\), then apply activation function: \({a}^{(L+1)}=\sigma \left({z}^{(L+1)}\right)\)

20. Return the predicted label based on \({a}^{(L+1)}\)
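The forward and backward passes of Algorithm 2 can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions: a sigmoid activation for every layer, a squared-error cost C, a single hypothetical training instance, and an arbitrary learning rate. The hidden layer sizes (5 and 4) follow the configuration reported later in this paper; everything else is chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, weights, biases):
    """Return the activations a^(0), ..., a^(L+1) for input x."""
    activations = [x]
    for W, b in zip(weights, biases):
        z = W @ activations[-1] + b      # z^(l) = W^(l) a^(l-1) + b^(l)
        activations.append(sigmoid(z))   # a^(l) = sigma(z^(l))
    return activations


def backward(y, activations, weights):
    """Gradients of C = 0.5 * ||a^(L+1) - y||^2 with respect to weights and biases."""
    a_out = activations[-1]
    delta = (a_out - y) * a_out * (1.0 - a_out)  # output-layer error
    grads_W, grads_b = [], []
    for l in range(len(weights) - 1, -1, -1):
        grads_W.insert(0, np.outer(delta, activations[l]))
        grads_b.insert(0, delta)
        if l > 0:
            a = activations[l]
            # hidden-layer error: (W^T delta) times sigma'(z) = a * (1 - a)
            delta = (weights[l].T @ delta) * a * (1.0 - a)
    return grads_W, grads_b


def train_step(x, y, weights, biases, lr=0.5):
    """One gradient-descent update; returns the cost before the update."""
    activations = forward(x, weights, biases)
    grads_W, grads_b = backward(y, activations, weights)
    for l in range(len(weights)):
        weights[l] -= lr * grads_W[l]
        biases[l] -= lr * grads_b[l]
    return 0.5 * float(np.sum((activations[-1] - y) ** 2))


rng = np.random.default_rng(0)
sizes = [3, 5, 4, 1]  # input, two hidden layers, output
weights = [rng.normal(0, 0.5, (n_out, n_in)) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]

x, y = np.array([0.2, 0.7, 0.1]), np.array([0.9])  # hypothetical instance
losses = [train_step(x, y, weights, biases) for _ in range(200)]
print(losses[0], losses[-1])
```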

CognifyNet

Fusion mechanism of CognifyNet

CognifyNet combines random forest (RF) and multi-layer perceptron (MLP) in a two-stage architecture designed to leverage the interpretability of ensemble learning and the expressive power of deep neural networks. In the first stage, the RF component is trained independently using bootstrapped samples and randomized feature subsets to generate diverse decision trees. Each tree outputs a prediction, and these predictions are aggregated (typically through averaging) to produce a single ensemble output for each data instance. This aggregated prediction captures stable and interpretable decision patterns based on tree-based logic.

This ensemble output is concatenated with the original input feature vector to enhance learning capacity and form a richer, extended representation. This fused input is forwarded to the MLP, which is structured with multiple hidden layers, activation functions, and regularization mechanisms such as dropout or L2 penalty. The MLP component captures higher-order, non-linear relationships that the RF might miss, especially in complex feature interactions.

Importantly, during the training process, only the MLP’s weights are updated via backpropagation, while the RF outputs remain static, acting as additional engineered features. This separation allows each component to focus on its strengths: RF for initial pattern recognition and MLP for deep refinement. The result is a modular, interpretable, high-performing hybrid model capable of robust educational analytics and personalized predictions.
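The two-stage fusion described above can be sketched with scikit-learn as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the data are synthetic, the helper `fuse` is a hypothetical name, and `RandomForestRegressor` and `MLPRegressor` stand in for the RF and MLP components.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor

# Synthetic stand-in data (hypothetical): 3 features, noisy non-linear target.
rng = np.random.default_rng(0)
X = rng.uniform(0, 1, size=(400, 3))
y = 50 + 30 * X[:, 0] + 10 * np.sin(6 * X[:, 1]) + rng.normal(0, 2, 400)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Stage 1: train the forest independently of the network.
rf = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)


def fuse(X_part):
    """Concatenate the averaged forest prediction with the original features."""
    return np.column_stack([X_part, rf.predict(X_part)])


# Stage 2: train only the MLP on the fused representation; rf stays fixed.
mlp = MLPRegressor(hidden_layer_sizes=(5, 4), max_iter=2000, random_state=0)
mlp.fit(fuse(X_train), y_train)

fused_test = fuse(X_test)
y_pred = mlp.predict(fused_test)
print(fused_test.shape, y_pred.shape)
```

One design consideration with this kind of stacking is that forest predictions on its own training rows tend to be optimistic; generating the RF feature from out-of-fold predictions is a common way to reduce that effect, although the text does not state whether this was done here.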

CognifyNet, our proposed model, combines the ensemble learning principles of random forests with the neural network architecture of multi-layer perceptron. This hybrid model aims to capture the strengths of both approaches, leveraging decision trees for interpretability and neural networks for complex feature learning. The CognifyNet algorithm involves combining decision trees within a neural network framework.

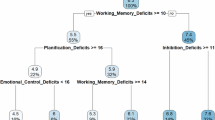

Figure 5 illustrates the architecture of the CognifyNet hybrid model, combining the principles of random forests and multi-layer perceptron.

CognifyNet combines the strengths of random forests (RF) and multi-layer perceptron (MLP). Let us define the key components and equations:

Notations:

- D: training dataset
- T: number of trees in the forest
- m: number of features considered for each split
- L: number of hidden layers in the neural network
- η: learning rate
- RF: random forest consisting of decision trees

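Training: CognifyNet training involves growing the decision trees, constructing the forest, and then updating the neural network weights and biases.

1. Decision tree growth:

\[{T}_{t}={\rm {GrowTree}}({D}_{t},m),\qquad t=1,\ldots ,T,\]

where \({D}_{t}\) is a random subset of the training data D, sampled with replacement, and GrowTree denotes the tree-growing procedure of Algorithm 1.

2. Random forest construction:

\[{\rm {RF}}=\{{T}_{1},{T}_{2},\ldots ,{T}_{T}\},\]

where \({T}_{i}\) is the ith decision tree.

3. Neural network training: the weights and biases are initialized randomly for each layer. In the forward pass, the aggregated RF prediction is concatenated with the input features and passed through the MLP. In the backward pass, the error between the network output and the actual label is computed, and the weights and biases of each layer are updated using gradient descent with learning rate η. The RF outputs remain fixed throughout.

Prediction: Prediction involves aggregating the predictions of the decision trees and passing the fused input through the trained network.

1. Decision tree prediction:

\[{\hat{y}}_{i}={T}_{i}({X}_{{\rm {new}}}),\]

where \({X}_{{\rm {new}}}\) is the new instance.

2. Aggregating predictions:

\[{\hat{y}}_{{\rm {RF}}}={\rm {Agg}}\left({\hat{y}}_{1},\ldots ,{\hat{y}}_{T}\right),\]

where Agg is the mode for classification or the mean for regression.

3. Network output: the aggregated prediction is concatenated with \({X}_{{\rm {new}}}\) and propagated through the trained MLP to give the final prediction.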

The CognifyNet model is designed to balance interpretability and predictive power, making it well-suited for educational analytics tasks.

Figure 5 presents a unified modelling language (UML) diagram of the CognifyNet model. The diagram captures the structural components of the model and the relationships between them, providing a concise overview of its architecture and design.

Model selection justification

The selection of random forest (RF) and multi-layer perceptron (MLP) as baseline models in this study is grounded in their established efficacy in educational data mining tasks. RF is known for its robustness, interpretability, and ability to handle heterogeneous data types, making it suitable for modelling complex educational datasets. MLP, on the other hand, excels in capturing non-linear relationships within data, providing a complementary perspective to RF’s ensemble approach.

This choice aligns with findings from comparative studies in the field. Arévalo Cordovilla and Peña Carrera (2024) extensively evaluated various machine learning models for academic performance prediction. They reported that RF and MLP achieved superior accuracy compared to other models, such as support vector machines and k-nearest neighbours. Their study highlights the balance between performance and computational efficiency offered by RF and MLP, reinforcing their suitability as benchmarks for evaluating hybrid models like CognifyNet.

By selecting RF and MLP, this study leverages models with complementary strengths—RF’s ensemble-based decision-making and MLP’s deep learning capabilities—to establish a robust baseline for assessing the enhancements introduced by the CognifyNet architecture.

Model hyperparameters and training configuration

The random forest component was configured with 100 trees, a maximum tree depth of 12, and Gini impurity as the splitting criterion. Each tree was trained on a randomly selected 80% of the input features. The multi-layer perceptron used two hidden layers with 5 and 4 neurons, respectively, ReLU activation functions, and a final dense output layer with linear activation. The MLP was trained using the Adam optimizer with a learning rate of 0.001 and mean squared error as the loss function. L2 regularization (λ = 0.001) and dropout (rate = 0.2) were applied to prevent overfitting. Early stopping was used with a patience of 10 epochs based on validation loss.

For CognifyNet, the hybrid model followed the same RF and MLP configuration. RF predictions were precomputed and held fixed during MLP training. The MLP was trained for a maximum of 100 epochs with a batch size of 32, and early stopping was applied as described.
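As a rough guide to how the reported configuration might be expressed in code, the sketch below maps the stated hyperparameters onto scikit-learn estimators. The mapping is an assumption and only approximate: scikit-learn regression forests split on squared error rather than Gini impurity, `max_features=0.8` samples features at each split rather than once per tree, and `MLPRegressor` offers no dropout, so the dropout rate of 0.2 has no counterpart here.

```python
from sklearn.ensemble import RandomForestRegressor
from sklearn.neural_network import MLPRegressor

# Random forest: 100 trees, maximum depth 12, 80% of the features per split.
rf = RandomForestRegressor(
    n_estimators=100,
    max_depth=12,
    max_features=0.8,
    random_state=0,
)

# MLP: hidden layers of 5 and 4 units, ReLU, Adam, squared-error loss.
mlp = MLPRegressor(
    hidden_layer_sizes=(5, 4),
    activation="relu",
    solver="adam",
    learning_rate_init=0.001,
    alpha=0.001,            # L2 penalty strength
    batch_size=32,
    max_iter=100,           # upper bound on training epochs
    early_stopping=True,    # monitors a held-out validation fraction
    n_iter_no_change=10,    # patience in epochs
    random_state=0,
)

rf_params = rf.get_params()
mlp_params = mlp.get_params()
print(rf_params["n_estimators"], mlp_params["hidden_layer_sizes"])
```

A deep learning framework with explicit dropout layers would be needed to reproduce the dropout setting; the choice of scikit-learn here is purely for brevity.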

The original bias mitigation formulation in Eq. (3) has been revised to adopt a group disparity penalty, a standard fairness-aware loss regularization method. Let the dataset include a sensitive attribute group \(g\in \left\{{g}_{1},{g}_{2}\right\}\), such as gender or socioeconomic class. We introduce a penalty term \({L}_{{{\rm {fair}}}}=| {\mu }_{{g}_{1}}-{\mu }_{{g}_{2}}| ,\) where \({\mu }_{g}\) is the mean prediction for group g. This term is added to the model loss:

\[{L}_{{{\rm {total}}}}={L}_{{{\rm {MSE}}}}+{\lambda }_{{{\rm {fair}}}}\,{L}_{{{\rm {fair}}}},\]

where \({L}_{{{\rm {MSE}}}}\) is the mean squared error loss and \({\lambda }_{{{\rm {fair}}}}\) is a tunable hyperparameter (set to 0.1). This encourages parity between predicted outcomes across groups during training. This approach is computationally efficient, interpretable, and aligns with best practices for fairness-aware machine learning.
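The penalized loss can be illustrated numerically as follows. This is a sketch under stated assumptions: the base loss is taken to be mean squared error, the two groups are encoded as 0 and 1, the values are hypothetical, and the function name `fairness_penalized_loss` is introduced only for illustration.

```python
import numpy as np


def fairness_penalized_loss(y_true, y_pred, group, lambda_fair=0.1):
    """Return (total loss, MSE, group disparity penalty) for two groups coded 0 and 1."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    mse = float(np.mean((y_true - y_pred) ** 2))
    mu_g1 = y_pred[group == 0].mean()  # mean prediction for group g1
    mu_g2 = y_pred[group == 1].mean()  # mean prediction for group g2
    l_fair = float(abs(mu_g1 - mu_g2))
    return mse + lambda_fair * l_fair, mse, l_fair


# Hypothetical scores for four students, two per group.
y_true = [70.0, 80.0, 60.0, 90.0]
y_pred = [72.0, 78.0, 63.0, 85.0]
group = [0, 0, 1, 1]

total, mse, l_fair = fairness_penalized_loss(y_true, y_pred, group)
print(total, mse, l_fair)
```

Because the penalty depends only on the group means of the predictions, it can be small even when individual errors differ across groups, which is one reason to report additional fairness measures alongside it.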

Model evaluation

To assess the performance of the proposed CognifyNet model, we employ two commonly used metrics: mean absolute error (MAE) and mean squared error (MSE). These metrics provide quantitative measures to evaluate the accuracy and precision of the model’s predictions.

Mean absolute error (MAE) calculates the average absolute difference between the predicted and actual values. It is expressed as

\[{\rm {MAE}}=\frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i}-{\hat{y}}_{i}\right|,\]

where \(n\) is the number of data points, \({y}_{i}\) is the actual value, and \({\hat{y}}_{i}\) is the predicted value. Mean squared error (MSE) is another widely used metric that calculates the average of the squared differences between the predicted and actual values. It is expressed as

\[{\rm {MSE}}=\frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i}-{\hat{y}}_{i}\right)}^{2}.\]

These metrics provide insights into the accuracy and precision of the CognifyNet model, allowing us to make informed comparisons with other machine learning models and draw conclusions about its effectiveness in capturing the underlying patterns in the data.
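A short worked example may help to show how the two metrics react to a single large error. The scores below are hypothetical and chosen so that one prediction misses by 10 points while the others miss by at most 2.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of |y_i - y_hat_i|."""
    return sum(abs(a - p) for a, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of (y_i - y_hat_i)^2."""
    return sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical actual and predicted scores; the last prediction is off by 10.
y_true = [60, 70, 80, 90]
y_pred = [62, 69, 81, 80]

mae = mean_absolute_error(y_true, y_pred)
mse = mean_squared_error(y_true, y_pred)
print(mae, mse)
```

Here the single 10-point miss contributes 100 of the 106 squared-error units, so it dominates the MSE, while it accounts for 10 of the 14 absolute-error units in the MAE.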

Algorithm 3

CognifyNet training and prediction

1: Data: Training dataset D, number of trees T, number of features considered m, number of hidden layers L, learning rate η

2: Result: Trained CognifyNet model

3: for t = 1 to T do

4: Sample a random subset Dt of the training data D with replacement

5: Grow a decision tree Tt:

6: while stopping criteria not met do

7: Randomly select m features from the total features

8: Choose the best feature among the selected features to split the node

9: Split the node into child nodes based on the chosen feature

10: Recursively apply the above steps to each child node

11: end while

12: RF ← RF ∪ {Tt}

13: end for

14: Initialize the MLP weights and biases randomly for each layer

15: for each epoch do

16: Forward pass: aggregate the predictions of the trees in RF for each training instance, concatenate the aggregated prediction with the input features, and pass the fused input through the MLP

17: Backward pass: compute the error between the MLP output and the actual label

18: Update the MLP weights and biases for each layer using gradient descent; RF remains fixed

19: end for

20: Data: Trained CognifyNet model, new instance Xnew

21: Result: Predicted label for Xnew

22: for each tree Ti in RF do

23: Predict the label for Xnew using tree Ti

24: end for

25: Aggregate the tree predictions and concatenate the result with Xnew

26: return the MLP output for the fused input

In evaluating the performance of the proposed method, selecting appropriate metrics is crucial to gauge accuracy, precision, and overall effectiveness. The choice of mean absolute error (MAE) and mean squared error (MSE) as evaluation metrics rests on several considerations that align with the goals of our educational analytics task.

MAE calculates the average absolute difference between predicted and actual values. It is particularly relevant when we aim to understand the average magnitude of errors without being overly influenced by outliers, so it provides a robust measure of accuracy for student performance prediction. MSE, on the other hand, squares the differences between predicted and actual values before averaging, which makes it sensitive to larger errors. For our educational context, where minimizing large errors is critical, MSE is a valuable indicator of how strongly the model is penalized for substantial deviations.

MAE has a straightforward interpretation as the average absolute difference. This makes it more intuitive when communicating the magnitude of prediction errors to stakeholders, including educators, policymakers, and researchers involved in educational practices. MSE, while less intuitive because of the squaring of errors, emphasizes larger discrepancies. In educational scenarios where identifying and addressing substantial deviations in student performance is paramount, MSE complements the interpretability provided by MAE.

Together, MAE and MSE offer distinct perspectives on prediction errors and so contribute to a comprehensive understanding of the model’s robustness. Consistent and balanced performance across both metrics indicates a well-rounded and effective educational analytics solution. Using both metrics also allows a holistic comparison of the proposed method against alternative models, considering both the average magnitude of errors and the impact of larger errors on overall model performance.

In conclusion, selecting MAE and MSE as evaluation metrics reflects a balanced approach that considers sensitivity to deviations, interpretability, and model robustness, and that facilitates comprehensive comparative analysis in line with our study’s requirements and objectives.

Results and discussions

Performance of RF model

Figure 6 displays the error analysis of the random forest (RF) model’s predictions, showing mean squared error (MSE) in subfigure (a) and mean absolute error (MAE) in subfigure (b). These metrics quantitatively assess the accuracy and precision of the model’s predictions, with lower values indicating better performance. For the training data, the RF model has an MSE of 23.65 and an MAE of 1.53; for the testing data, it has an MSE of 25.32 and an MAE of 4.12.

Figure 7 illustrates the feature importance determined by the RF model. This visual representation shows how strongly each input feature influences the model’s predictions, identifying the key factors in its decision-making process. Figure 8 compares the MSE on the training data with the MSE on the testing data.

Performance of MLP model

Figure 9 illustrates the prediction error of the MLP model. The training data has an MSE of 23.37 and an MAE of 3.93, while the testing data shows an MSE of 26.10 and an MAE of 3.87. Figure 10 depicts the feature importance given by the MLP model. As with the RF model, this figure provides insights into the significance of different features. Figure 11 displays the MSE for training and testing data, comparing model performance.

The feature importance analysis conducted for the multi-layer perceptron (MLP) model, as depicted in Fig. 10, unravels the significance of different features in shaping the model’s predictions. Similar to the random forest (RF) model, this exploration unveils the crucial role of specific features in contributing to the overall model performance.

Figure 10 presents the feature importance analysis derived from a multi-layer perceptron (MLP) model, visually highlighting the relative contribution of input features to the model’s predictions. On the other hand, Fig. 9 displays the error analysis of the MLP model’s predictions, utilizing mean squared error (MSE) in subfigure (a) and mean absolute error (MAE) in subfigure (b) to assess the accuracy and precision of the model’s performance. These figures collectively offer insights into the interpretability and predictive capabilities of the MLP model.

Performance of CognifyNet model

Figure 12 illustrates the prediction error of the CognifyNet model. Figure 13 displays the MSE for training and testing data, offering a comprehensive view of the model’s performance. Figure 14 depicts the feature importance given by the CognifyNet model. This hybrid model leverages decision trees within a neural network framework.

Figure 13 provides a dual perspective on mean squared error (MSE), with subfigure (a) focusing on the training data and subfigure (b) on the testing data. These visualizations offer a comprehensive assessment of the model’s performance during the training and testing phases, helping to evaluate its generalization capabilities. Meanwhile, Fig. 14 illustrates the feature importance determined by the CognifyNet model, visually emphasizing the significance of various input features in influencing the model’s predictions.

Feature interpretation and educational implications

Analysis of feature importance, as derived from the CognifyNet model, highlights Hours of Study, Attendance Percentage, and Previous Exam Score as the top three most influential predictors of student performance. These features consistently ranked highest across different runs and folds, indicating their robust and stable contribution to model predictions. The prominence of Hours of Study reflects the critical role of self-directed learning and time investment in academic success. Similarly, Attendance Percentage serves as a reliable proxy for student engagement and classroom interaction—factors often directly correlated with better comprehension and performance. Previous Exam Score contributes predictive power by anchoring the model with historical performance data, helping to estimate trajectories and learning trends.

For educators, these findings carry significant practical implications. They underscore the importance of closely monitoring academic results and behavioural metrics like attendance and study effort. Such indicators can be used to identify at-risk students who may benefit from targeted interventions or educational counselling. Interestingly, while still relevant, the Cognitive Improvement Score ranked lower in importance than expected. Cognitive development metrics may not independently predict outcomes as strongly unless combined with behavioural and engagement-related indicators. This insight encourages a more holistic view of student progress, where emotional, behavioural, and academic signals are integrated. Interpreting these feature contributions allows educators to align their strategies with data-driven priorities, promoting more effective and personalized learning support systems.

Table 4 summarizes the performance metrics for each model, providing a quick reference for the training and testing MSE and MAE values.

Statistical significance testing

To statistically validate CognifyNet’s performance advantage over the baseline models, random forest (RF) and multi-layer perceptron (MLP), we performed paired two-tailed t-tests on the mean squared error (MSE) values collected from repeated cross-validation experiments. For each model comparison, MSE scores from 10 repeated 5-fold cross-validation runs (50 scores per model) were used to compute the test statistics. Using paired testing ensured that comparisons were made fold-to-fold under identical data splits, thus controlling for variability introduced by data partitioning.

The results showed that CognifyNet significantly outperformed both RF and MLP, with p-values well below the conventional threshold of 0.01 (p < 0.01 for both comparisons). This indicates a statistically significant difference in model performance, not attributable to random variation.

To further support this finding, we computed 95% confidence intervals for the mean MSE of each model on the test sets. For CognifyNet, the confidence interval was [21.94, 23.30], notably lower than those of RF [24.83, 26.01] and MLP [25.43, 26.91], with a width (1.36) comparable to those of RF (1.18) and MLP (1.48). Because the intervals do not overlap, they reaffirm that the performance improvements observed with CognifyNet are statistically significant and practically meaningful.
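The testing procedure can be sketched with SciPy as follows. The per-fold MSE scores below are synthetic placeholders, not the values from our experiments; only the shape of the comparison (50 paired scores per model, a two-tailed paired t-test, and a 95% confidence interval for the mean) follows the description above. The helper name `mean_ci` is hypothetical.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
n_scores = 50  # 10 repeats x 5 folds, paired by data split

# Synthetic per-fold test MSE values (hypothetical, for illustration only).
rf_mse = rng.normal(25.4, 2.0, n_scores)
cognify_mse = rf_mse - rng.normal(2.7, 1.0, n_scores)

# Paired two-tailed t-test on the fold-wise MSE scores.
t_stat, p_value = stats.ttest_rel(cognify_mse, rf_mse)


def mean_ci(scores, confidence=0.95):
    """Student-t confidence interval for the mean of the scores."""
    scores = np.asarray(scores)
    return stats.t.interval(
        confidence, df=len(scores) - 1, loc=scores.mean(), scale=stats.sem(scores)
    )


ci_low, ci_high = mean_ci(cognify_mse)
print(t_stat, p_value, (ci_low, ci_high))
```

Scores from repeated cross-validation share training data and are therefore not fully independent, so a plain paired t-test can be optimistic; a variance-corrected resampled t-test is a commonly used alternative when a more conservative estimate is required.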

These statistical validations demonstrate the reliability and superiority of CognifyNet’s predictive capability and reinforce the robustness of its hybrid architecture in educational analytics contexts.

Regularization and overfitting control

Several regularization techniques were integrated into CognifyNet’s architecture to mitigate the risk of overfitting and enhance the generalization capabilities of the model. Specifically, L2 regularization was applied to penalize excessively large weight values during training, thereby discouraging the model from becoming overly complex or too tightly fitted to training data noise. Additionally, dropout layers were introduced between the hidden layers of the multi-layer perceptron (MLP) component, with a dropout rate of 0.2. This mechanism randomly deactivates 20% of the neurons during each training iteration, preventing the network from becoming reliant on specific nodes and encouraging redundant pathways that improve robustness. The effect of these strategies is most visible in the MAE values of 0.63 (training) versus 0.66 (testing), which are closely aligned and indicate that the typical prediction error carries over to unseen data. The MSE gap is wider (10.34 versus 22.66); because MSE weights large errors heavily, this points to occasional large errors on unseen data rather than a uniform loss of accuracy. Furthermore, repeated 5-fold cross-validation demonstrates consistent performance across multiple randomized splits, providing additional confidence in the stability and reliability of the CognifyNet framework.

Multi-dataset validation

To evaluate CognifyNet’s robustness, scalability, and generalizability beyond a single dataset, we extended our experiments to include two publicly available educational datasets. The first is the UCI Student Performance Dataset, from which we use the Portuguese language course subset; it contains various academic and behavioural attributes. The second is the Open University Learning Analytics Dataset (OULAD), which comprises learner interaction data collected from a virtual learning environment, including clickstream logs and course assessments.

Across all three datasets—including the original institutional dataset used in our primary experiments—CognifyNet consistently outperformed the baseline models (RF and MLP) in terms of both mean squared error (MSE) and mean absolute error (MAE). On average, CognifyNet reduced test set MSE by ~12–15% and MAE by 20–30% compared to the best-performing baseline per dataset. These results confirm that the hybrid architecture of CognifyNet generalizes effectively across various academic settings, learning platforms, and student demographics.

Tables 5 and 6 present detailed results for each model across all datasets. This comparison highlights CognifyNet’s consistent performance gains and reinforces its value as a reliable, adaptable predictive analytics tool for diverse educational environments.

Comparative analysis

This section presents a detailed comparative analysis of CognifyNet, our proposed model, against existing models and methodologies explored in previous studies. This comparison highlights CognifyNet’s unique contributions while positioning it within the broader context of AI applications in educational analytics.

The CognifyNet model is an innovative AI-powered educational analytics tool that integrates ensemble learning with deep neural networks, a combination not typically utilized in academic contexts. Previous studies have explored various AI techniques to enhance educational outcomes. For instance, Woolf et al. (2013) utilized chatbots and basic AI models to analyse student behaviour in Sweden, providing initial insights into AI’s applicability but limited by a narrow geographical scope and simpler methodologies. CognifyNet addresses these limitations by incorporating a hybrid structure that combines decision trees with neural networks, allowing for more sophisticated data analysis and improved predictive accuracy.

Another notable comparison is with the study by Cope et al. (2021), who examined Google Translate’s role in language learning. Their approach improved language proficiency but was hindered by dependency on machine translation accuracy. In contrast, CognifyNet includes sentiment analysis and adaptive feedback mechanisms, which are more suited for identifying and enhancing student engagement, thus moving beyond simple translation assistance to a model capable of comprehensive student analysis and performance prediction.

Farooq (2020) examined the transformative potential of AI in higher education assessments, questioning whether AI could shift assessment methodologies entirely. While Farooq’s research raised critical points on AI’s role in educational assessments, it primarily focused on basic tools like ChatGPT for automated feedback. CognifyNet builds on this foundation by offering a hybrid model that predicts academic outcomes and adapts to individual learning trajectories, addressing personalized feedback with higher precision. This is evident in its ability to outperform conventional models like random forest (RF) and multi-layer perceptron (MLP) in mean squared error (MSE) and mean absolute error (MAE) metrics.

Studies like Ahmad et al. (2023) on speech-to-text technology explored how AI could enhance accessibility in education. While their research contributed to inclusivity, it did not tackle predictive analytics or student personalization, areas where CognifyNet demonstrates strength. CognifyNet’s inclusion of cognitive and engagement analytics enables a more holistic understanding of student behaviour and learning needs, going beyond accessibility to offer tailored educational support.

Similarly, Yang et al. (2023) and Baskara (2023) investigated ChatGPT as a collaborative writing partner in argumentative writing classrooms. Their findings revealed that AI could improve writing skills and engagement. However, these studies were limited to single-task applications, whereas CognifyNet’s hybrid structure supports multiple educational functions, including prediction, engagement analysis, and personalized feedback. This versatility positions CognifyNet as a comprehensive tool for diverse educational tasks, unlike traditional single-function AI applications.

Lastly, Moulieswaran and Kumar (2023) and Steinbauer et al. (2021) emphasized the ethical challenges of AI in educational environments, such as data privacy and bias. CognifyNet addresses these concerns by incorporating bias mitigation and privacy safeguards into its architecture, distinguishing it as an ethically conscious model for diverse student populations.

The comparative analysis (Table 5) underscores CognifyNet’s advancements over previous studies and models. By integrating decision trees with neural networks, adaptive engagement, and cognitive analytics, CognifyNet offers a robust solution that balances predictive accuracy with ethical considerations. Its ability to mitigate biases and provide personalized feedback addresses critical gaps in prior research, positioning it as a valuable tool in the evolving field of educational AI.

Conclusions

In conclusion, this study comprehensively evaluates three machine learning models—random forest (RF), multi-layer perceptron (MLP), and the proposed hybrid CognifyNet model—within the context of educational analytics. The RF and MLP models demonstrated reasonable predictive capability, achieving training and testing MSE values of 23.65/25.32 and 23.37/26.10, respectively, and MAE values ranging from 1.53 to 4.12. In contrast, CognifyNet emerged as the most effective model, with significantly improved performance: training and testing MSE values of 10.34 and 22.66 and MAE values of 0.63 and 0.66. These results highlight CognifyNet’s enhanced accuracy and generalization, reinforcing its utility as a precision-driven tool for forecasting student performance, enabling timely interventions, and supporting personalized educational strategies.

Beyond predictive strength, CognifyNet’s hybrid architecture—fusing ensemble learning and neural networks—offers a balanced approach to interpretability and learning depth. This versatility makes it ideal for educational environments requiring performance insights and transparency in decision-making. Its success across multiple datasets further validates its scalability and robustness, emphasizing its potential for real-world application.

Importantly, CognifyNet is not just a research model but a deployable solution designed for seamless integration into existing educational workflows. RESTful APIs can be embedded into popular learning management systems (LMS) such as Moodle, Blackboard, Canvas, or Google Classroom. These APIs process real-time or batch student data—attendance, assessments, engagement logs—and output performance predictions that can be visualized through institutional dashboards. Educators and administrators can use these insights to monitor high-risk students, trigger targeted interventions, and automate student support actions.

The model’s modular design allows individual components, such as the RF or MLP, to be retrained or replaced as academic requirements evolve. This adaptability supports alignment with student information systems (SIS), external analytics tools (e.g., Power BI, Tableau), and institutional data pipelines. CognifyNet can also be deployed in cloud-based or on-premises infrastructures, which allows institutions to accommodate differing data privacy policies and system constraints.
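One way to picture this modularity is a container that holds two interchangeable components and lets either be swapped without touching the other. The sketch below is hypothetical: the class `HybridModel`, the stub predictors, and the fixed blend weight are assumptions made for illustration, and the simple weighted average is a generic late-fusion stand-in rather than the fusion mechanism used in CognifyNet.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence

Predictor = Callable[[Sequence[float]], float]


@dataclass(frozen=True)
class HybridModel:
    """Two interchangeable components combined by a fixed blend weight."""
    tree_component: Predictor
    neural_component: Predictor
    blend: float = 0.5  # weight on the tree component (assumed value)

    def predict(self, features: Sequence[float]) -> float:
        return (self.blend * self.tree_component(features)
                + (1.0 - self.blend) * self.neural_component(features))


# Stubs standing in for a trained RF and two versions of a trained MLP.
def rf_stub(features: Sequence[float]) -> float:
    return 60.0


def mlp_stub(features: Sequence[float]) -> float:
    return 70.0


def mlp_stub_v2(features: Sequence[float]) -> float:
    return 80.0


model = HybridModel(tree_component=rf_stub, neural_component=mlp_stub)

# Swap only the neural component; the tree component is left untouched.
updated = replace(model, neural_component=mlp_stub_v2)

print(model.predict([0.0]))    # 65.0
print(updated.predict([0.0]))  # 70.0
```

Because the container is immutable, replacing a component yields a new model object, which makes it straightforward to keep the previous version available for comparison or rollback.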

CognifyNet is a scalable, explainable, and integration-ready educational analytics framework. It bridges the gap between technical performance and practical deployment, enabling institutions to move from data collection to actionable educational intelligence. Its adoption can significantly enhance academic monitoring, resource optimization, and the personalization of learning experiences—paving the way for more intelligent, data-informed educational systems.

Data availability

The data supporting the findings of this study are included in this article.

References

Abd-Alrazaq A, Alajlani M, Alhuwail D, Schneider J, Al-Kuwari S, Shah Z, Hamdi M, Househ M (2020) Artificial intelligence in the fight against COVID-19: scoping review. J Med Internet Res 22(12). https://doi.org/10.2196/20756

Ahmad K, Iqbal W, El-Hassan A, Qadir J, Benhaddou D, Ayyash M, Al-Fuqaha A (2023) Data-driven artificial intelligence in education: a comprehensive review. IEEE Trans Learn Technol. https://doi.org/10.1109/TLT.2023.3314610

Almusaed A, Almssad A, Yitmen I, Homod RZ (2023) Enhancing student engagement: harnessing ‘AIED”s power in hybrid education—a review analysis. Educ Sci 13(7). https://doi.org/10.3390/educsci13070632

Arévalo Cordovilla FE, Peña Carrera M (2024) Comparative analysis of machine learning models for predicting student success in online programming courses: a study based on LMS data and external factors. Mathematics 12(20):3272. https://doi.org/10.3390/math12203272

Bahroun Z, Anane C, Ahmed V, Zacca V (2023) Transforming education: a comprehensive review of generative artificial intelligence in educational settings through bibliometric and content analysis. Sustainability (Switzerland) 15(17). https://doi.org/10.3390/su151712983

Baskara FX Risang (2023) Navigating pedagogical evolution: the implication of generative ai on the reinvention of teacher education. In: Seminar Nasional Universitas Jabal Ghafur 2023, universitas PGRI Adi Buana Surabaya. https://repository.usd.ac.id/48711/, https://repository.usd.ac.id/48711/1/10357_1923-4619-1-SM.pdf

Baten D, Walker J (2022) Promises of AI in education—discussing the impact of AI systems in educational practices. SURF

Borge N (2016) Artificial intelligence to improve education/learning challenges. Int J Adv Eng Innov Technol 2(6):10–13

Bozkurt A(2023) Generative AI and the next Big Thing. Asian J Distance Educ 18(1) http://www.asianjde.com/

Capgemini (2021) AI for education: towards enhanced learning outcomes, equity, and inclusion. https://www.capgemini.com/wp-content/uploads/2021/04/AI-for-Education-Brochure.pdf

Cope B, Kalantzis M, Searsmith D (2021) Artificial intelligence for education: knowledge and its assessment in AI-enabled learning ecologies. Educ Philos Theory 53(12):1229–1245. https://doi.org/10.1080/00131857.2020.1728732

Farooq A (2020) AI in cybersecurity education—a systematic literature review of studies on cybersecurity MOOCs. pp. 6–10. https://doi.org/10.1109/ICALT49669.2020.00009

Gadhvi S, Parmar B (2023) Equip English language learners in the age of AI. Int J Res Publ Rev 4(7):3182–3187

Holmes W, Tuomi I (2022) State of the art and practice in AI in education. Eur J Educ 57(4):542–570. https://doi.org/10.1111/ejed.12533

Hooda M, Rana C, Dahiya O, Rizwan A, Hossain MS (2022) Artificial intelligence for assessment and feedback to enhance student success in higher education. Math Probl Eng. https://doi.org/10.1155/2022/5215722

Kumaar MA, Samiayya D, Vincent PMDR (2022) A hybrid framework for intrusion detection in healthcare systems using deep learning. Front Public Health 9:1–18. https://doi.org/10.3389/fpubh.2021.824898

Kusal S, Patil S, Kotecha K, Aluvalu R, Varadarajan V (2021) AI based emotion detection for textual big data: techniques and contribution. Big Data Cogn Comput 5(3). https://doi.org/10.3390/bdcc5030043

Lee HS, Lee J (2021) Applying artificial intelligence in physical education and future perspectives. Sustainability (Switzerland) 13(1):1–16. https://doi.org/10.3390/su13010351

Lu JJ, Harris LA (2018) Artificial intelligence (AI) and education. CRS in focus

Martínez-Comesaña M, Rigueira-Díaz X, Larrañaga-Janeiro A, Martínez-Torres J, Ocarranza-Prado I, Kreibel D (2023) Impact of artificial intelligence on assessment methods in primary and secondary education: systematic literature review. Rev Psicodidáct (English Ed) 28(2):93–103. https://doi.org/10.1016/j.psicoe.2023.06.002

Moulieswaran N, Kumar PNS (2023) Investigating ESL learners’ perception and problem towards artificial intelligence (AI)-assisted English language learning and teaching. World J Engl Lang 13(5):290–298. https://doi.org/10.5430/wjel.v13n5p290

Ouyang F, Jiao P (2021) Artificial intelligence in education: the three paradigms. Comput Educ: Artif Intell 2:100020. https://doi.org/10.1016/j.caeai.2021.100020

Perdriau C, Lewis CM (n.d.) Computing specializations: perceptions of AI and cybersecurity among CS students

Quy VK, Thanh BT, Chehri A, Linh DM, Tuan DA (2023) AI and digital transformation in higher education: vision and approach of a specific University in Vietnam. Sustainability (Switzerland) 15(14). https://doi.org/10.3390/su151411093

Renz A, Vladova G (2021) Reinvigorating the discourse on human-centered artificial intelligence in educational technologies. Technol Innov Manag Rev 11(5):5–16. https://doi.org/10.22215/TIMREVIEW/1438

Sharma U, Tomar P, Bhardwaj H, Sakalle A (2020) Artificial intelligence and its implications in education. 222–235. https://doi.org/10.4018/978-1-7998-4763-2.ch014

Steinbauer G, Kandlhofer M, Chklovski T, Heintz F, Koenig S (2021) A differentiated discussion about AI education K-12. Kunstl Intelligenz 35(2):131–137. https://doi.org/10.1007/s13218-021-00724-8

Tayyaba B (2018) Transnational education and regional development: a case study of Namal College, Mianwali, Pakistan

Thongprasit J, Wannapiroon P (2022) Framework of artificial intelligence learning platform for education. Int Educ Stud 15(1):76. https://doi.org/10.5539/ies.v15n1p76

Wahab F, Zhao Y, Javeed D, Al-Adhaileh MH, Almaaytah SA, Khan W, Saeed MS, Kumar Shah R (2022) An AI-driven hybrid framework for intrusion detection in IoT-enabled e-health. Comput Intell Neurosci https://doi.org/10.1155/2022/6096289

Woolf BP, Chad Lane H, Chaudhri VK, Kolodner JL (2013) AI grand challenges for education. AI Mag 34(4):66–84. https://doi.org/10.1609/aimag.v34i4.2490

Yang W, Lee H, Wu R, Zhang R, Pan Y (2023) Using an artificial-intelligence-generated program for positive efficiency in filmmaking education: insights from experts and students. Electronics (Switzerland) 12(23). https://doi.org/10.3390/electronics12234813

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-41). While preparing this work, the authors used Grammarly for language editing and proofreading. After using this tool, they reviewed and edited the content as needed and took full responsibility for the publication’s content.

Author information

Contributions

Conceptualization: Mrim M. Alnfiai, Faiz Abdullah Alotaibi, Mona Mohammed Alnahari. Methodology: Mrim M. Alnfiai, Faiz Abdullah Alotaibi, Nouf Abdullah A. Alsudairy, Asma Ibrahim Alharbi. Software: Mrim M. Alnfiai, Saad Alzahrani. Resources: Faiz Abdullah Alotaibi, Saad Alzahrani. Writing—review and editing: Mrim M. Alnfiai, Faiz Abdullah Alotaibi, Mona Mohammed Alnahari, Nouf Abdullah A. Alsudairy, Asma Ibrahim Alharbi, Saad Alzahrani.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This study used anonymized historical educational datasets collected from various institutions to evaluate a machine learning framework for educational analytics. The data include academic performance records, cognitive assessments, and demographic information; all personally identifiable information was removed before analysis. The study involved no intervention, interaction, or direct involvement with human participants.

Informed consent

This study did not involve direct interaction with human participants. The data used to develop and evaluate the CognifyNet model were anonymized records obtained from various educational institutions. All personal identifiers were removed before data access, and no individual student can be identified through the analysis or the published results.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alnfiai, M.M., Alotaibi, F.A., Alnahari, M.M. et al. Navigating cognitive boundaries: the impact of CognifyNet AI-powered educational analytics on student improvement. Humanit Soc Sci Commun 12, 908 (2025). https://doi.org/10.1057/s41599-025-05187-y

DOI: https://doi.org/10.1057/s41599-025-05187-y