Abstract

The past decade has seen a rapid and vast adoption of social media globally and over sixty percent of people were connected online through various social media platforms as of the start of 2024. Despite many advantages social media offers, one of the most significant challenges is the rapid rise of fake news and AI-generated deepfakes across these social networks. The spread of fake news and deepfakes can lead to a series of negative impacts, such as social trust, economic consequences, public health and safety crises, as demonstrated during the COVID-19 pandemic. Hence, it is more important now than ever to develop solutions to identify such fake news and deepfakes, and curb their spread. This paper begins with a review of the literature on the definitions of fake news and deepfakes, their different types and major differences, and the ways they spread. Building on this literature research, this paper aims to analyse how fake news can be identified using machine learning models, and understand how data analytics can be leveraged to evaluate the impact of such fake news on public behaviour and trust. A fake news detection framework is developed, where TF-IDF vectorization and bag of n-grams methods are implemented to extract text features, and six typical machine learning models are used to detect fake news, with the XGBoost classifier achieving the highest accuracy using both feature extraction methods. Additionally, a convolutional neural network model is designed to detect deepfake images with two distinct architectures, namely, ResNet50 and DenseNet121. To analyse the emotional impact of fake news on public behaviour and trust, a trained natural language toolkit called VADER lexicon is used to assign sentiment polarity and emotion strength to articles. The rampant rise of deepfake technology poses huge risks to social trust and privacy issues, which impacts both individuals and society at large, and leveraging the effective use of data analytics, machine learning and AI techniques can help prevent irreparable damage and mitigate the negative impacts of deepfakes in social networks. Finally, the paper discusses some practical solutions to mitigate the negative impacts of fake news and deepfakes.

Similar content being viewed by others

Introduction

Humans are inherently poor at differentiating between fact and fiction, especially when inundated with information from various sources. This notion has been confirmed by various previous research (Egelhofer and Lecheler, 2019; Rubin, 2010). The scenario worsens if the fiction is desirable to the public (desirability bias, Fisher, 1993) or if it endorses their pre-existing opinions (confirmation bias, Nickerson, 1998). The word ‘post-truth’ defines this behaviour succinctly, where personal beliefs and public emotions are far more influential than objective facts. In fact, the word ‘post-truth’ was used frequently in articles and social media, especially during events like the Brexit referendum in the United Kingdom and during the 2016 presidential elections in the United States. Due to its frequent usage, the word was named by Oxford Dictionaries in 2016 as its word of the year (Wang, 2016). The above points highlight just one of the many reasons why fake news is very quick to spread and hard to detect. Unlike traditional news outlets, social media has low barriers to entry, making it an ideal platform for rapidly sharing false information. This is further amplified by the echo chamber effect (Jamieson and Cappella, 2008), where like-minded users reinforce each other’s beliefs, increasing the reach of misinformation. In addition, the economic benefits, such as from social media advertisements, and political benefits for big businesses and opposition parties also provide a strong motive to create, distribute, and spread fake news.

However, the public today is more aware of the negative impacts of fake news than ever before, and there is a growing recognition of the need to take extra measures to verify the authenticity of the information they consume, especially after the world witnessed the harmful effects of fake news during the COVID-19 pandemic. This paper aims to investigate how data analytics and machine learning (ML) techniques can be leveraged to detect fake news and deepfakes accurately and the key limitations of such solutions. To achieve this aim, it is important to first define what constitutes fake news and deepfakes and review the relevant research surrounding the different types of fake news and deepfakes. This paper also explores ways of evaluating the impact of such fake news on public behaviour and trust using natural language processing (NLP) and sentiment analysis, especially during crises such as the COVID-19 pandemic and the ongoing armed conflicts around the world. Specifically, this work aims to explore and address the following formulated research questions,

-

(1)

What data analytics and machine learning techniques can be used to detect fake news and deepfake images, and how effective are these techniques in terms of accuracy and other performance metrics?

-

(2)

Can NLP be used to evaluate the impact of fake news and deepfakes on public behaviour and trust on an emotional level? How effectively can NLP help in quantifying these emotional responses?

Though the above research questions focus on different aspects of fake news and deepfakes, they are interrelated, and addressing all of them is essential to understanding the complexity of the problem from different perspectives and developing effective solutions. The first research question focuses primarily on how fake news and deepfakes can be detected effectively using data analytics and machine learning techniques. The emphasis on ‘effective’ detection is important, as this is a crucial step in preventing the spread and influence of fake news. The second research question addresses the ‘why’ behind fake news detection, highlighting its importance by exploring how constant exposure to such misinformation impacts public trust and alters behaviour during critical situations, such as crises or political events. Overall, the scope of this paper mainly covers how data analytics and machine learning techniques can be used to identify the spread of fake news and deepfakes in social networks and analyse their impact on public behaviour and trust. The research contributes to existing literature in a two-fold way: (1) This paper develops a framework for fake news detection, and highlights the underlying difference between two Convolutional Neural Network (CNN) architectures and analyses their suitability for the detection of deepfake images. (2) It is one of the few studies that deeply examines the emotional impact of fake news. For instance, sentences classified as ‘negative’ through sentiment analysis can be further categorised into specific emotions, such as anger and sadness, using emotional lexicons, which ultimately help in understanding the emotional influence of fake news. In addition, this paper explores the emerging research on how Blockchain and Graph Neural Networks can potentially be used to identify the source and influential nodes behind the spread of fake news and deepfake images.

Literature review

In this section, existing literature closely related to this work are reviewed to understand the definitions and various types of fake news and deepfakes as well as the factors contributing to their spread. The literature research lays out the theoretical background and the analytical findings in this field, identifies the gaps in current knowledge and discusses how future research in the right direction can help bridge these gaps.

Definition of terms and the factors contributing to the spread of fake news and deepfakes

The term ‘news’ usually refers to recent and significant events occurring around the world. Fake news is defined as false information presented as factual news (Zhou and Zafarani, 2020). The goal of fake news varies from just attracting more website traffic to deliberately misinforming people with an intent to manipulate (Tandoc Jr et al. 2018). Fake news can be broadly classified into: ‘misinformation’, ‘disinformation’ and ‘malinformation’ (Shu et al. 2020). Researchers have distinguished different types of fake news, such as clickbait, hoax, propaganda, satire and parody (Collins et al. 2020), and have analysed the motives behind each of the common types of fake news. While these studies provide valuable insights in understanding the nature and characteristics of fake news, the typology used to classify it has been inconsistent across literature sources. This classification of types of content-based and intent-based fake news should be standardised so that a more accurate framework can be developed for understanding and analyzing fake news.

According to an article by the Wall Street Journal (Auxier, 2022), over 51% of teenagers and 33% of adults aged between 20-25 in the United States use social media as a gateway to their daily consumption of news, which makes the rise of fake news highly alarming. A survey conducted in the United States during December 2020 showed that 38.2% of responders have accidentally shared fake news, and 7% were unsure if they had done so (Watson, 2023b). Another survey conducted at the same time revealed that 54% of the responders were only somewhat sure of their ability to identify fake news, and 6.6% were not confident at all (Watson, 2023a). These statistics painted a grim reality: most people are uncertain if the news they consume and share is real or fake, which is also a reason behind the rapid spread of fake news and deepfakes in various situations. A range of research has focussed on the audience who fall for fake news, and why (Altay et al. 2022; Batailler et al. 2022; Guadagno and Guttieri, 2021; Pennycook and Rand, 2020; Weiss et al. 2020), which defines misinformation and disinformation and analyses their relation to media consumption patterns (Hameleers et al. 2022). Studies have also explored whether social media literacy makes it easier to combat fake news (Jones-Jang et al. 2021; Wei et al. 2023) and examined the underlying differences between fake and reliable news sources using search engine optimization techniques (Mazzeo and Rapisarda, 2022).

Deepfakes are multimedia content that is artificially generated or altered to misrepresent reality. Technically, deepfakes are synthetic media generated by superimposing images or videos onto another set of destination media using neural networks and generative models (Kaur et al. 2020; Masood et al. 2023). Previous research suggests that deepfakes mainly exist in the following forms,

-

Lip sync: A video is manipulated to make the subject appear to be speaking in sync with an audio recording.

-

Voice deepfakes: The voice data of a target (mostly of an influential person) is manipulated to generate synthetic audio recordings.

-

Character deepfakes: The target is mimicked by another actor using the target’s facial expressions and lip movements.

-

Face swaps: The face of a person in the source image or video is superimposed on the body of another person in the target media.

Among these, face swaps are prevalent in deepfakes (Kaur et al. 2020; Yang et al. 2019). As the source images and videos can be obtained easily online, with the advent of online news and social media, almost anyone with an internet connection can obtain some source images and videos and reverse-engineer them to generate synthesized content which can be alarmingly convincing (Vaccari and Chadwick, 2020).

While the medium differs between text-based fake news and deepfake images, a logical connection can be established between the two, particularly in terms of the motives behind their creation and some of the features used in detecting them. Both fake news and deepfake images involve creating, altering, or misrepresenting information with the intent to mislead the general public (Verdoliva, 2020). Moreover, both fake news and deepfakes are often disseminated through social media platforms, which provide low barriers to entry, minimal or no verification of posted content, and wide-reaching nature of these networks to maximise the impact of such fake news and deepfakes (Zhou and Zafarani, 2020).

As argued in Stenberg (2006) and Witten and Knudsen (2005), individuals are more likely to process and believe information with greater conviction when it is accompanied by visual proof. This rise of deepfake content can be particularly dangerous, as exposure to such content multiple times across various platforms can lead to familiarity, which in turn fosters a sense of credibility (Newman et al. 2015), even though it is completely fabricated. Extensive research has been conducted to understand the most common pathways used for spreading such fake news and deepfakes through different channels, such as how television, social media and bot networks are utilized for political disinformation campaigns (Moore, 2019). However, much of such research predominantly focused on the spread of fake news by bots on X (formerly Twitter), with less attention given to other social media platforms. There are very few studies that analysed how application programming interfaces (APIs) for social media platforms contribute to the spread of disinformation across those networks.

Existing research on detecting fake news and deepfakes

While the concept of fake news is not entirely new, the rapid rise in its creation and consumption has been significantly accelerated by social media platforms in recent years. Alongside such fake news which contains artificially doctored images/videos, using old visuals which are out-of-context to the news content is a powerful and simpler means of spreading misinformation. There are many recent real-life examples as well where out-of-context images and videos were used in this manner. According to a post on X by the Metropolitan Police, many videos circulating about renewed unrest in London – allegedly linked to an attack at a dance class in Southport – were actually taken from unrelated past protests and were being used to incite riots across the UK (BBC Bitesize, 2025). This has also spurred a growth in research aimed at proposing solutions to detect fake news and deepfakes, while identifying the key strengths and limitations of these solutions. Although there is no standardised classification for fake news detection methods, most researchers explored detection methods based on analysing the news content, analysing the social context, and developing intervention-based solutions using computational and analytical models in identifying fake news (Aïmeur et al. 2023; Sharma et al. 2019). Machine learning and deep learning models and sentiment analysis in NLP have been widely used in existing research on text-based fake news detection (Korkmaz et al. 2023, 2024). For example, an NLP-based model (Devarajan et al. 2023) comprising four layers, including publisher, social media networking, enabled edge, and cloud layers, was developed to detect fake news articles from online social media using the credibility scores aggregated from various sources. Oshikawa et al. (2018) conducted a comprehensive review and comparison of diverse NLP approaches for fake news detection, highlighting the limitations and potential of these models. These techniques collectively demonstrate the significance of NLP in addressing fake news. They are also summarised in Fig. 1.

-

(1)

The widely adopted classification models include: Logistic regression, Decision tree, Gradient boosting classifier, Random forest classifier, Naïve Bayes classifier, Support vector classifier and XGBoost classifier (Aïmeur et al. 2023; Shu et al. 2017).

-

(2)

Deep-learning models: Few studies suggest that implementing a model with neural networks, for example, a CNN or a Recurring Neural Network provides better results than a supervised classification ML model. Specifically, a model (Drif et al. 2019) which combines CNN layers followed by Long Short-Term Memory (LSTM) layers can predict fake news with better accuracy in comparison to SVM classifiers. Another study (Wang, 2017) proves that an even higher accuracy and model performance can be achieved when different metadata features are integrated with text through a hybrid CNN.

-

(3)

Sentiment analysis in NLP models: A study by Paschen suggests that the news is more probable to be fake if the headline or title of the article in question is significantly more negative (Paschen, 2020). Further studies (Giachanou et al. 2019; Kumar et al. 2020) describe how deep-learning models such as CNN and LSTM-based models produce better results compared to other models, and these findings suggest that while genuine news is generally monotonous in nature, fake news tend to stimulate excitement to readers.

A comparative analysis of five classification algorithms, including logistic regression, decision tree, Naïve Bayes, random forest, and support vector machine, are provided with their respective accuracy scores and other performance measures (Singh and Patidar, 2023). The research highlights that the decision tree classification model outperforms the other models with an accuracy of 99.59%, closely followed by the support vector machine with an accuracy of 99.58% and the random forest classifier model with an accuracy of 98.85%. The Naïve Bayes model performs the worst in this research, with an accuracy of 94.99%. The authors emphasise that this can be attributed to Naïve Bayes being a generative model and the decision tree being a discriminative model, which makes the decision tree model more suitable for predicting and classifying fake news. Another research (Ahmad et al. 2020) introduces ensemble learning models for fake news detection. As a part of their study, baseline models such as logistic regression and linear support vector machine are used, alongside ensemble learning models such as random forest, Bagging and Boosting classifiers (e.g., XGBoost). The results from this study show that the ensemble learning model with the random forest classifier exhibits the highest accuracy with an accuracy of 99%. The performances of the bagging and boosting classifiers follow closely behind with 98% accuracy each, and logistic regression performs the worst with 97% accuracy.

For this work, the supervised classification models mentioned above are implemented to compare their accuracy in detecting text-based fake news. To detect deepfake images, a CNN model is designed using two different architectures, ResNet50 and DenseNet121, to compare the performance and analyse their suitability in detecting deepfakes. ResNet50 is a residual network. meaning it uses skip connections to jump over certain layers and train deeper networks effectively. The ‘50’ in ResNet50 indicates the presence of 50 layers in the architecture. According to the MathWorks website, this network has been pre-trained on over a million images from the ImageNet database. Whereas DenseNet121 is a densely connected convolutional neural network. It uses a feed-forward connection, meaning that each layer is directly connected to every other layer in the network. The ‘121’ in DenseNet121 refers to its 121 layers in the architecture. Hence, the higher number of layers combined with the dense connectivity of different layers makes it more computationally demanding in comparison to ResNet50.

In the applications of machine learning models to detect both fake news and deepfake images, the metadata features are summarised in Table 1 to help in understanding their nature and common characteristics.

Existing research on the impact of fake news and deepfakes

The impact of fake news spans various disciplines, from economics (Clarke et al. 2020; Goldstein and Yang, 2019) to psychology (Van der Linden et al. 2020). When the Cambridge Analytics scandal broke out in 2018, people began to see the real-world impact of misinformation as social media data from about 87 million Facebook users was secretly used to sway the 2016 presidential elections and in defining the Brexit referendum (Isaak and Hanna, 2018). The Pizzagate incident in 2016 was fuelled by a conspiracy theory that led to real-world consequences (Johnson, 2018). Another example is the disinformation surrounding the COVID-19 pandemic, which resulted in fewer people wearing face masks alongside a widespread disregard for social distancing (Ioannidis, 2020; Kouzy et al. 2020; Mattiuzzi and Lippi, 2020). Numerous studies have investigated how public sentiment (Korkmaz, 2022; Korkmaz and Bulut, 2023) is influenced by fake news during times of crisis, such as during the Japan earthquake in 2011 (Takayasu et al. 2015), Hurricane Sandy in 2012 (Gupta et al. 2013), and the COVID-19 pandemic (Caceres et al. 2022). According to Vosoughi et al. (2018), people tend to respond to fake news with feelings of anxiety, resentment and surprise whereas they respond to genuine news with feelings of anticipation, despair, optimism, and confidence.

As deepfakes are a relatively new form of visual deception, existing research on their behavioural impact is relatively limited. One notable research (Vaccari and Chadwick, 2020) suggests that an increase in spreading deepfake content will result in rising uncertainty among social media users. In addition, an immediate result of this uncertainty is likely to be a reduction of trust in news on social media. Another interesting research suggests that people may have a negative desire to “watch the world burn” if the consequences do not affect them directly (Petersen et al. 2018). This mindset can be understood as one of the key drivers for spreading false information. With the rising quantity of fake news and deepfakes across various social media sources, netizens will ultimately end up believing that a ground truth cannot be established in the content they see, further eroding their trust in social media, news agencies, and even government institutions.

Several studies have examined the effects of fake news on public trust during the COVID-19 pandemic or political scenarios such as the U.S. presidential elections. With the recent rise in tools powered by large-language models (LLMs) and their integration with mobile applications - coupled with free and easy access to it, there has also been a rise of fake news generated to trigger emotions. A recent study (Korkmaz et al. 2023) uses lexicon-based sentiment analysis to understand societal perception of news generated by ChatGPT worldwide. This study analysed over 540k messages posted on X, broke down the text into individual words, and matched the emotions invoked by each word to the emotions in the NRC dictionary. The researcher in this case notes that while users perceive positive emotions towards ChatGPT as a time-saving application to appear professional and generate content, the outputs of ChatGPT cause a feeling of fear in most users.

These emotions are not necessarily restricted to the fake news generation. Another notable study (Korkmaz, 2022) analyses tweets made by foreign users of the Turkish-based home delivery service ‘Getir’ on X. The researchers collected only English tweets containing the word ‘Getir’, removed the URLs, special characters and stopwords and used Sentiment Analysis to understand user emotions associated with the service they get from using ‘Getir’, as they expanded their operations abroad across many European cities. In this case, the researchers note that 67% of tweets were positive and 28% invoked negative emotions, emphasising that even service-related tweets can invoke emotions similarly seen in response to fake or misleading news. As the context varies across scenarios under research and depends on how the researcher perceives the emotion associated with user comments on fake news on social media, the research outcomes across different articles have a large degree of variation.

Existing research on controlling the spread of fake news

As stated earlier, there are currently minimal barriers to generating and spreading fake news on social media platforms. Even as social media platforms such as Facebook, TikTok, and X invest heavily in combating fake news using proprietary methods, evaluating the truthfulness of the news content requires significant time and manual labour. This challenge is heightened when a critical event happens unexpectedly in the complex social networks (Wen et al. 2024a, b, 2025) and there is a rapid increase in information about it. At this point, verified data about the event is scarce, which makes fake news detection even tougher.

One key limitation in this context, as highlighted in the literature (Shin et al. 2023), is the difficulty in obtaining labelled fake news data for supervised machine learning techniques. As a result, a combination of unsupervised machine learning techniques is explored with NLP techniques to cluster news articles by their topics. These topics can then be analysed for their veracity, which is more effective than individually analysing each news article. For example, Bidirectional Encoder Representations from Transformers (BERT) topic modelling was applied to the clusters of news articles, and their similarity scores were measured. The dataset used for this research was news articles primarily related to the Russian-Ukrainian conflict, and the articles were summarised with BERTSUM, a novel summarization method (Liu, 2019). The results of this study indicated that the topics with higher variance in similarity scores have a higher probability of being fake.

Another unique research (Savyan and Bhanu, 2017) designed an anomaly detection method in a social media context by analysing the change in reactions to Facebook posts. The researchers argued that analysing such reactions to posts is crucial for detecting anomalous behaviour as such reactions are immediate in nature. The study classified the reactions of the users to these Facebook posts and then identified the nature of such posts through this classification. This classification was mainly done by converting the reactions to vectors and computing the cosine similarity of such vectors, and through basic K-means clustering, which can then be used to identify unusual reactions or drastic changes to the posts.

Gaps in the existing research

Although a series of studies have been conducted using supervised classification models to detect deepfake images and videos, much of the research focuses on detecting deepfake multimedia content and fake news in separate domains, analysing them in isolation. In contrast, many fake news articles on social media today are a combination of text and multimedia content, and limited emphasis has been given to design models with datasets having this combination.

While there have been significant advancements in developing models based on sentiment analysis to detect fake news, only a few studies have explored the use of emotional lexicons for fake news detection. Text-based sentiment analysis majorly classifies the sentiments associated with a text as either positive and negative. For instance, with text-based sentiment analysis, emotions like anger, fear and sadness are negative, but analysing these emotions is crucial in understanding the collective behaviour of social media users towards a specific topic (Hamed et al. 2023).

Methodology & Results

Most news articles on social media today are a mix of text and supporting images, and hence it is increasingly important to determine the veracity of both text and images. The primary aim of this research is to explore the use of data analytics, machine learning, and interpretable AI techniques to analyse and detect fake news and deepfake images in social networks. To address this, two separate frameworks for detecting text-based fake news and deepfake images have been designed. It has been emphasized in literature research that fake news has a negative psychological impact, leading to an overall lack of trust in institutions and governments, as seen with mask refusal and vaccination hesitancy during the COVID-19 pandemic (Caceres et al. 2022). This paper also experiments how NLP techniques can be used to extract the emotional effect from texts.

Detecting text-based fake news in social networks

Two datasets are obtained from Buzzfeed News and Politifact (Ahmad et al. 2020; Aljabri et al. 2022; Shu et al. 2017, 2020; Singh and Patidar, 2023), comprising a complete sample of news published in social media platforms like Facebook, X and the likes, and other websites, which were verified by journalists at Buzzfeed and Politifact respectively. Two separate files with both fake and real news were combined to be one dataset, and the text data was pre-processed,

-

Tokenization: Breaking down sentences into smaller individual words.

-

Normalisation: Cleaning the text and removing punctuations, links, and numbers.

-

Removing the stopwords (words which have no semantic meaning on their own, such as ‘to’, ‘of’, ‘the’, etc.)

-

Lemmatisation: Converting the words to their base form.

After pre-processing, the dataset was split into training (70%) and test (30%), and then the linguistic features of the text were extracted using two methods, including the Term Frequency-Inverse Document Frequency (TF-IDF) method and the Bag of n-grams method.

The TF-IDF method extracts features of the text by evaluating the importance of a word in a document. Term Frequency (TF) refers to the frequency of a word (w) compared to the total number of words in the document, while Inverse Document Frequency (IDF) refers to the logarithmic value of the ratio of the number of documents to the number of documents with the word (w). The TF-IDF method converts text into a vector space model,

In the Bag of n-grams method, the text is grouped into a sequence of n-words (or tokens). Here, n = 1 is called a unigram, n = 2 is called a bigram, and so on. This method also converts text into a vector space model, and this vector is very sparse depending on the value of ‘n’. The higher the value of ‘n’, the sparser the input features of the vector, thereby hampering the model performance. The order of the words does not matter, hence the name ‘Bag of n-grams’. The vectorised data from both methods will be trained separately.

Six classification ML models are then trained and tested to classify if a news article is fake or real, including

-

(1)

The Logistic Regression is a widely used classification model to determine the probability of a categorical outcome given an input variable, which can be expressed as

$$\begin{array}{rcl}P(Y=0| X)&=&\frac{{e}^{-({\beta }_{0}+{\beta }_{1}{x}_{1}+{\beta }_{2}{x}_{2}+\cdots +{\beta }_{\rho }{x}_{\rho })}}{1+{e}^{-({\beta }_{0}+{\beta }_{1}{x}_{1}+{\beta }_{2}{x}_{2}+\cdots +{\beta }_{\rho }{x}_{\rho })}},\\ P(Y=1| X)&=&\frac{1}{1+{e}^{-({\beta }_{0}+{\beta }_{1}{x}_{1}+{\beta }_{2}{x}_{2}+\cdots +{\beta }_{\rho }{x}_{\rho })}},\end{array}$$(2)where P(Y = 0∣X) is the probability that variable Y is 0, given the input value X, P(Y = 1∣X) is the probability that variable Y is 1, given the input value X, β0, β1, ⋯, βρ are the coefficients that the model learns upon training, and x0, x1, ⋯, xρ are inputs of X.

-

(2)

The Decision Tree Classifier is a tree-like model that splits a dataset into smaller subsets until all the data points are assigned to a class. This assignment is done based on their input features. The top of the tree, representing a decision, is referred to as the node, and a branch signifies the outcome of this decision. This process is continued until a class is assigned (0 or 1 in this case), and then a leaf is created, signifying the end of the branch.

-

(3)

The Gradient Boosting Classifier is one of the most powerful ML algorithms, which combines multiple weak learners, mostly decision trees, to create a strong model. Upon each iteration, it corrects the errors of the previous iteration and subsequently improves its performance.

-

(4)

The Random Forest Classifier is an ensemble ML algorithm primarily used for classification tasks, which builds an ensemble of decision trees. The predictions of all individual decision trees are aggregated to provide a final classification result with a higher accuracy.

-

(5)

The Support Vector Classifier works by finding a hyperplane in a high-dimensional feature space that distinctly classifies the data points. For a binary classification problem, the equation of the hyperplane defining the decision boundary can be expressed as:

$$f(x)={\omega }^{T}x+b,$$(3)where ω is the normal vector to the hyperplane, x represents the input vector feature, and b represents the distance of the hyperplane from the origin.

-

(6)

Extreme Gradient Boosting or XGBoost Classifier, is an advanced ensemble machine learning algorithm that excels in classification problems. It achieves very high accuracy and performance by combining the power of gradient boosting with various optimisation techniques. As it is constructed by an ensemble of decision trees, it does not have a simple equation. The final prediction in the XGBoost classifier can be represented with the following equation:

$$\hat{{y}_{i}}=\mathop{\sum}\limits_{j}{\theta }_{j}{x}_{ij},$$(4)where \(\hat{{y}_{i}}\) is the predicted probability of instance i, j is the number of trees, and θjxij represents the output of instance xi for the tree j.

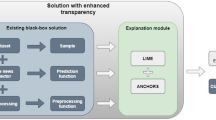

Overall, the framework adopted to design this solution is shown in Fig. 2.

The accuracy of the six ML models to detect fake news was compared in Table 2 after their features were extracted by both TF-IDF vectorisation and the Bag of n-grams method. It can be found that the XGBoost Classifier provides the highest accuracy across both TF-IDF and bag of n-grams feature extraction methods, which can be attributed to the higher efficiency and accuracy of combining multiple decision trees. Additionally, the lowest accuracy is obtained by the Decision Tree Classifier and Random Forest Classifier when the features were extracted by the TF-IDF vectorisation and the Bag of n-grams method, respectively.

In addition to analysing the accuracy scores above, analysing the confusion matrices for each of the classification algorithms will help in understanding the performance of each model with added depth. The confusion matrices for the six ML classifiers are shown in Fig. 3.

Detecting deepfake images in social networks

A dataset containing 140k real and GAN-generated deepfakes images (Alkishri et al. 2023; Shad et al. 2021; Taeb and Chi, 2022) was used to train this model. The dataset was split into train (50k), validation (10k), and test (10k) images for both real and GAN-generated deepfakes. These images should first be pre-processed with the steps below,

-

Performing Data Augmentation: This step generates batches of tensor image data to improve the accuracy of the model by exposing it to additional variations of the same images.

-

Cropping all the images to 256 × 256px, to make the dataset uniform in dimensions and to reduce the size of large images.

-

Ensuring all the images have 3 channels, that is, Red, Green, and Blue (RGB).

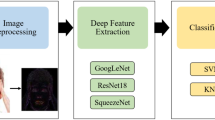

A CNN was used to identify the facial patterns from the training data. The initial step of the CNN is to convert the images into numerical data in the form of arrays. Since the dimensions of the images are consistent due to pre-processing in earlier steps, the numerical data is then provided to the CNN. Two distinct architectures, DenseNet121 and ResNet50, were used here. With both the DenseNet121 and ResNet50 architectures, 512 filters are provided as input parameters to the CNN to learn more distinct features. The CNN model with the DenseNet121 architecture is trained on the training data on 2 epochs and 200 steps per epoch, while the model with the ResNet50 architecture is trained on 10 epochs and 1562 steps per epoch. Due to the lower number of layers in the ResNet50 architecture compared to DenseNet121, and its ability to skip layers for effective model training, training the model with the ResNet50 architecture took significantly less time. Overall, the framework adopted to design this model is shown in Fig. 4.

The images in the test data were used to test the model and classify if an image is a deepfake or real. The architecture performance measures for the CNN models and other metrics such as precision, recall, and f1-scores are summarised to understand how the different architectures perform against each other in Table 3. It can be found that ResNet50 is more accurate while simultaneously being less computationally demanding. Thus, the first research question is answered by detecting fake news and deepfake images through a series of ML and deep learning models with comparable accuracy.

Evaluating the impact of fake news on public trust and behaviour

A dataset containing 44,898 articles classified as fake and real news from BuzzFeed (Shu et al. 2020) was used to compare the emotional effects, and the title and text of the articles were pre-processed. Within this dataset, 23,481 articles were labelled as fake, and the remaining 21,417 articles were labelled as real. Similarly, the text should be pre-processed first. The polarity of the news article was then detected by a trained NLTK lexicon, called Valence Aware Dictionary for sEntiment Reasoning (VADER) model. VADER can be directly applied to sentences, and it uses a dictionary to map lexical features to an emotion. This mapping returns a sentiment score consisting of 3 components: a positive score, negative score, and neutral score, and a normalised score of the 3 components is returned as a compound score. Using this compound score, the polarity of an article can be classified as positive, negative, or neutral. Specifically, if compound score > 0.01: The polarity of the article is positive; if compound score < −0.01: The polarity of the article is negative; otherwise, the polarity of the article is neutral. VADER can be used on complete sentences, which is suitable for the dataset used here. The polarity distribution across the 44,898 records was negative (20,838), positive (12,793), and neutral (11,267). In this dataset, 46.41% of the dataset has a negative polarity associated with it, which also shows that most news articles spread through social media invoke a negative sentiment in the reader, irrespective of their veracity. The next step was to measure the emotions associated with the article text. Based on the National Research Council Canada (NRC) affect lexicon and NLTK WordNet synonym sets, this dictionary contains about 27,000 words and can measure the emotions from a group of sentences. The emotions mainly detected are ‘Fear’, ‘Anger’, ‘Anticipation’, ‘Trust’, ‘Surprise’, ‘Positive’, ‘Negative’, ‘Sadness’, ‘Disgust’, and ‘Joy’. The goal here is to identify the most exhibited emotions across fake news articles. To achieve this, the words on the dataset segregated by polarity were converted to utf-8 format, and the NRCLex model was applied to the text of each dataset. The framework adopted to design this model is shown in Fig. 5.

On obtaining the polarity score of each news article through the VADER model, the dataset is further grouped by their veracity to get the distribution of real and fake articles against their respective polarities, which is described below,

-

Out of the 20,838 articles with a negative polarity: 12,613 articles were fake, and 8225 were real.

-

Out of the 12,793 articles with a positive polarity: 6814 were fake, and 5979 were real.

It is evident that among articles with a negative sentiment, the number of fake news articles is significantly higher than real news articles in comparison to their counterparts with a positive sentiment. This proves that fake news invokes a negative sentiment more often than real news, which is in line with the results from existing research.

The probability density graphs are shown in Fig. 6a and b, therby proving that there is a higher probability of a news article being fake if it invokes a negative sentiment as compared to a news article with a positive sentiment, especially for polarity scores lesser than − 0.5. The above deductions help in partially answering the second research question: by deducing that if individuals respond with a negative sentiment to an article on social media, there is a higher probability that the article is fake. However, the above results do not specify the specific emotions in play here. For instance, the emotions ‘sadness’, ‘anger’, and ‘fear’ belong to a negative sentiment, but the impact of each of these emotions is different. Hence, the NRCLex model helps analyse the emotions invoked in detail to completely answer the research question. After applying the NRCLex model on both datasets with positive and negative polarity articles, the results depict that negative articles invoked emotions like ‘fear’, ‘anger’, ‘sadness’ and the like, as summarised in Fig. 6c. On the contrary, positive articles invoked emotions such as ‘positive’, ‘trust’, ‘joy’, ‘surprise’, ‘anticipation’ and similar emotions, which are summarised in Fig. 6d.

As evident from Fig. 6c and d, emotions such as ‘disgust’, ‘sadness’, ‘anger’, and ‘fear’ were the least exhibited emotions in positive articles, and ‘joy’, ‘anticipation’, ‘disgust’, ‘surprise’ and ‘trust’ were least exhibited with negative articles, which are consistent with the findings from other researchers. Hence, the second research question is answered by analysing the emotional impact of fake news on public behaviour using data analytics.

Discussions

Discussion on the analytical results

Upon analysing the accuracy scores of the supervised classification models (Table 2), it is evident that the models perform slightly better when the TF-IDF vectorisation method is chosen over the bag of n-grams method. This improvement is likely because the bag of n-grams method emphasises words based on their frequency alone, while the TF-IDF method makes rare words more important and disregards common words, making the TF-IDF method a more effective method for feature extraction. The results of the deepfake detection model recorded in Table 3 suggest that ResNet50 is quicker to train and more suited to detect deepfakes as compared to DenseNet121. This could be attributed to the higher computational requirements by the DenseNet121 architecture owing to the higher number of layers. Figures 2 and 4 also exhibit possible overfitting of the model due to the lower validation accuracy score after the second epoch of training.

The high accuracy scores of the XGBoost classification algorithm can be attributed to its working principle, which identifies and fixes errors during each iteration. XGBoost employs several classification and regression trees that combine multiple weak learners, assign a greater negative weight to incorrectly classified data points, and consequently recognize misclassified data better with each successive iteration. Another major takeaway from the results shown by the text-based detection model is that the writing style of a social media post plays a major role here. In simpler terms, this means that if the model is trained on news articles with a specific writing style labelled as ‘real’, and if fake news content is written in this style, then there is a higher probability that the model will classify the news as real. Therefore, it is more important not to solely rely on the results of such models, and add additional checks in place before flagging a social media article as fake or real.

The emotional impact of fake news on public behaviour can also be analysed by data analytics and NLP techniques, and the practical solutions to address the impact of fake news and deepfakes on public behaviour and trust can be summarized as follows:

-

(1)

Improving media literacy and education: Promoting media literacy can educate users on the existence and risks of sharing and consuming deepfakes. Teaching individuals to analyse the media content critically and to verify the authenticity of sources will significantly reduce the spread of fake news and deepfakes.

-

(2)

Collaboration across institutions: When tech companies, researchers, and government agencies collaborate to share knowledge, datasets, and expertise, the spread of fake news and deepfake images can be combated more effectively.

-

(3)

Supporting and promoting independent fact-checking organisations: Fact-checking organisations can play a crucial role in identifying false content. They can perform a combination of tasks, such as manually correcting the content, tagging false content with warnings, and demoting false content in ranking algorithms, thereby reducing its visibility.

-

(4)

Updating and enforcing usage policies and content moderation: Social media companies should enforce clear, robust policies to deter the creation and spread of deepfake content. Guidelines should explicitly prohibit the malicious use of deepfakes, and users should be provided with mechanisms to report potentially fake content for review and moderation.

Discussion on the long-term impact of fake news and deepfakes on public trust and behaviour

There is no doubt that continued exposure to fake news will result reduction of trust and an increase in uncertainty among the general public in the long term. A research experiment by Vaccari and Chadwick (2020) tried to understand and quantify this impact by exposing about 2005 research participants to two deceptive versions and the unedited version of the BuzzFeed video featuring former US president Barack Obama and American actor Jordan Peele. The results of this research supported the hypothesis: watching a deepfake video that contains misinformation and is not labelled as fake is more likely to cause uncertainty.

Looking in the long term, the uncertainty caused due to continued exposure to fake news may lead to declining civic culture, which may potentially stimulate problematic behaviour. As the trust among society keeps declining and individuals become less cooperative, this drives an increase in the number of high-conflict situations (Balliet and Van Lange, 2013). As social media users find themselves trusting even less of the news they read online, they subsequently end up being less collaborative and less responsible in re-sharing the news themselves. In the long term, the populace would end up not trusting anything they see online, which may drive the attitude that anything can be posted online. This will further exacerbate the trust people have in others and will lead netizens to avoid reading the news altogether to avoid the stress and uncertainty that comes with it (Wenzel, 2019).

Discussion on further areas of research

Alongside improving on existing research with the above solutions, a promising research area for validating the reliability of article sources focuses on blockchain-based methods. Although limited in number, existing research includes methods that solely leverage blockchain to mitigate fake news spread and other methods that suggest an ensemble of blockchain methods with other techniques such as AI, data mining, graph theory, and crowdsourcing. These solutions are either still in their early stages of research or testing (DiCicco and Agarwal, 2020), and currently target general news articles, rather than fake news specifically originating from social media. Furthermore, there is potential to more closely integrate different types of content, such as examining cases in which accurate news is paired with fake images or vice versa, thereby contributing to a more holistic strategy for combating misinformation and enhancing trust in the digital ecosystem. This research direction is interesting as blockchain technology is decentralised and tamper-proof, which makes it well-suited for tracking the origin and propagation path of a fake news article, and subsequently helping in mitigating their spread.

In addition to the research on blockchain technology, adopting Graph Neural Networks (GNNs) to detect fake news and deepfakes is another emerging area of interest. GNNs utilize deep learning models on graph structure data, which allows them to work effectively with data that can be represented as graphs, such as social networks, rather than only Euclidean space data (Phan et al. 2023). The increasing interest in this field is evidenced by an over 40% increase in the number of research papers from 2019 to 2020 discussing the role of GNNs in fake news detection. The FakeNewsNet dataset used in this work (non-Euclidean data) is also used to analyse the propagation path of fake news across social media (Han et al. 2021), achieving an accuracy of 79.2%. Another research (Hamid et al. 2020) explores a method to identify the root users spreading misinformation with a good model performance (average ROC of 95%) by analysing the tweets on conspiracy theories related to COVID-19 and 5G networks, and capturing their textual and structural aspects. Increasing attention in this research direction will also prove to be vital in developing better fake news detection systems that identify the root user responsible for spreading such misinformation and place appropriate restrictions. To effectively address the challenges posed by fake news and deepfakes, which harm individuals, institutions, and society at large, global and multidisciplinary efforts are essential in the era of AI (Lazer et al. 2018). These efforts should involve collaboration across fields, such as psychology, sociology, politics, computer science, and mathematics. In addition, it is crucial to establish a contemporary information ecosystem that prioritizes and fosters truth within the evolving landscape of online social platforms through interdisciplinary research. Moreover, real-time analytics and monitoring approaches need to be considered in future work due to the dynamic nature of fake news and deepfakes.

Conclusion

While the concept of fake news is not new, the rampant spread of misinformation and its negative impact on people and society are more pronounced than ever. Furthermore, social bots and spammers are increasingly becoming better at projecting fake news in a convincing manner, and the use of generative models is becoming more prevalent and versatile in creating deepfakes. As a result, it is crucial to develop effective fake news and deepfake detection solutions using data analytics, machine learning, and AI techniques. This paper aims to develop prospective solutions and contribute to the existing body of research in this field. At the outset, the paper provided the research context by defining fake news, its various types, and the key factors behind its spread. To detect text-based fake news, six classification models were applied, with the accuracy ranging from approximately 91% to 99%, depending on the feature extraction method used. For detecting deepfake images, a CNN model was designed using two architectures: DenseNet121 and ResNet50, which achieved the accuracy of approximately 87% and 98% respectively. In designing such fake news and deepfakes detection models, it is equally important to understand the ‘why’ behind these efforts. As highlighted in the literature, exposure to fake news and deepfakes negatively affects public trust and behaviour, a concern that this paper aimed to explore further. By leveraging NLP techniques, including sentiment analysis and emotional lexicons, it was evident that articles or social media posts exhibiting negative emotions such as fear, anger, or sadness were more likely to be fake. This conclusion aligns with real-world events, such as during the COVID-19 pandemic, when fake news contributed to public vaccine hesitancy and the use of face masks in some countries. These findings are especially relevant during critical events, where there is an increased flow of misinformation from unverified sources. This makes early detection of fake news and deepfakes essential to prevent its widespread circulation and to avoid the “authentication effect” (Phan et al. 2023). Finally, this paper discussed the challenges and limitations of the research outcomes and explored ongoing advancements and further areas of research in developing more effective fake news and deepfake detection solutions.

Data availability

The datasets generated during and/or analysed during the current study are available upon request.

References

Ahmad I, Yousaf M, Yousaf S, Ahmad MO (2020) Fake news detection using machine learning ensemble methods. Complexity 2020:1–11

Aïmeur E, Amri S, Brassard G (2023) Fake news, disinformation and misinformation in social media: a review. Social Network Analysis and Mining 13(1):30

Aljabri M, Alomari DM, Aboulnour M (2022) Fake news detection using machine learning models. In 2022 14th International Conference on Computational Intelligence and Communication Networks, pages 473–477. IEEE

Alkishri W, Widyarto S, Yousif JH (2023) Detecting deepfake face manipulation using a hybrid approach of convolutional neural networks and generative adversarial networks with frequency domain fingerprint removal. Available at SSRN 4487525

Altay S, Hacquin A-S, Mercier H (2022) Why do so few people share fake news? it hurts their reputation. New Media & Society 24(6):1303–1324

Auxier B (2022) Digital, social media power Gen Z teens’ news consumption, The Wall Street Journal

Balliet D, Van Lange PA (2013) Trust, conflict, and cooperation: a meta-analysis. Psychological Bulletin 139(5):1090

Batailler C, Brannon SM, Teas PE, Gawronski B (2022) A signal detection approach to understanding the identification of fake news. Perspectives on Psychological Science 17(1):78–98

BBC Bitesize (2025) Timeline of How Online Misinformation Fuelled UK Riots

Caceres MMF, Sosa JP, Lawrence JA, Sestacovschi C, Tidd-Johnson A, Rasool MHU (2022) The impact of misinformation on the covid-19 pandemic. AIMS Public Health 9(2):262

Clarke J, Chen H, Du D, Hu YJ (2020) Fake news, investor attention, and market reaction. Information Systems Research 32(1):35–52

Collins B, Hoang DT, Nguyen NT, wang D (2020) Fake news types and detection models on social media a state-of-the-art survey. In Intelligent Information and Database Systems: 12th Asian Conference, ACIIDS 2020, Phuket, Thailand, March 23–26, 2020, Proceedings 12, pages 562–573. Springer

Devarajan GG, Nagarajan SM, Amanullah, SI, Mary, SSA, Bashir AK (2023) AI-assisted deep NLP-based approach for prediction of fake news from social media users. IEEE Transactions on Computational Social Systems

DiCicco, KW, Agarwal N (2020) Blockchain technology-based solutions to fight misinformation: a survey. Disinformation, Misinformation, and Fake News in Social Media: Emerging Research Challenges and Opportunities, pages 267–281

Drif A, Hamida ZF, Giordano S (2019) Fake news detection method based on text-features. France, International Academy, Research, and Industry Association (IARIA), pages 27–32

Egelhofer JL, Lecheler S (2019) Fake news as a two-dimensional phenomenon: A framework and research agenda. Annals of the International Communication Association 43(2):97–116

Fisher RJ (1993) Social desirability bias and the validity of indirect questioning. Journal of Consumer Research 20(2):303–315

Giachanou A, Rosso P, Crestani F (2019) Leveraging emotional signals for credibility detection. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, pages 877–880

Goldstein I, Yang L (2019) Good disclosure, bad disclosure. Journal of Financial Economics 131(1):118–138

Guadagno RE, Guttieri K (2021) Fake news and information warfare: An examination of the political and psychological processes from the digital sphere to the real world. In Research anthology on fake news, political warfare, and combatting the spread of misinformation, pages 218–242. IGI Global

Gupta A, Lamba H, Kumaraguru P, Joshi A (2013) Faking sandy: characterizing and identifying fake images on twitter during hurricane sandy. In Proceedings of the 22nd International Conference on World Wide Web, pages 729–736

Hamed SK, Ab Aziz MJ, Yaakub MR (2023) Fake news detection model on social media by leveraging sentiment analysis of news content and emotion analysis of users’ comments. Sensors 23(4):1748

Hameleers M, Brosius A, de Vreese CH (2022) Whom to trust? Media exposure patterns of citizens with perceptions of misinformation and disinformation related to the news media. European Journal of Communication 37(3):237–268

Hamid A, Shiekh N, Said N, Ahmad K, Gul A, Hassan L et al. (2020) Fake news detection in social media using graph neural networks and NLP techniques: A COVID-19 use-case. arXiv preprint arXiv:2012.07517

Han Y, Karunasekera S, Leckie C (2021) Continual learning for fake news detection from social media. In International Conference on Artificial Neural Networks, pages 372–384. Springer

Ioannidis JP (2020) Coronavirus disease 2019: the harms of exaggerated information and non-evidence-based measures. European Journal of Clinical Investigation, 50(4)

Isaak J, Hanna MJ (2018) User data privacy: Facebook, Cambridge Analytica, and privacy protection. Computer 51(8):56–59

Jamieson KH, Cappella JN (2008) Echo chamber: Rush Limbaugh and the conservative media establishment. Oxford University Press

Johnson J (2018) The self-radicalization of white men:“fake news” and the affective networking of paranoia. Communication Culture & Critique 11(1):100–115

Jones-Jang SM, Mortensen T, Liu J (2021) Does media literacy help identification of fake news? Information literacy helps, but other literacies don’t. American Behavioral Scientist 65(2):371–388

Kaur S, Kumar P, Kumaraguru P (2020) Deepfakes: temporal sequential analysis to detect face-swapped video clips using convolutional long short-term memory. Journal of Electronic Imaging 29(3):033013

Korkmaz A (2022) Social media interaction of foreign users in Getir of Turkey’s second unicorn: Twitter sentiment analysis. Journal of Management and Economics Research 20(4):447–462

Korkmaz A, Bulut S (2023) Social media sentiment analysis for solar eclipse with text mining. Acta Infologica 7(1):187–196

Korkmaz A, Aktürk C, Talan T (2023) Analyzing the user’s sentiments of ChatGPT using Twitter data. Iraqi Journal for Computer Science and Mathematics 4(2):202–214

Korkmaz A, Bulut S, Kosunalp S, Iliev T (2024) Investigating Remote Work Trends in Post-COVID-19: A Twitter-based Analysis. IEEE Access

Kouzy R, Abi Jaoude J, Kraitem A, El Alam MB, Karam B, Adib E et al. (2020) Coronavirus goes viral: quantifying the COVID-19 misinformation epidemic on Twitter. Cureus, 12(3)

Kumar S, Asthana R, Upadhyay S, Upreti N, Akbar M (2020) Fake news detection using deep learning models: A novel approach. Transactions on Emerging Telecommunications Technologies 31(2):e3767

Lazer DM, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F (2018) The science of fake news. Science 359(6380):1094–1096

Liu Y (2019). Fine-tune Bert for extractive summarization. arXiv preprint arXiv:1903.10318

Masood M, Nawaz M, Malik KM, Javed A, Irtaza A, Malik H (2023) Deepfakes generation and detection: State-of-the-art, open challenges, countermeasures, and way forward. Applied intelligence 53(4):3974–4026

Mattiuzzi, C, Lippi G (2020) Which lessons shall we learn from the 2019 novel coronavirus outbreak? Annals of Translational Medicine, 8(3)

Mazzeo V, Rapisarda A (2022) Investigating fake and reliable news sources using complex networks analysis. Frontiers in Physics 10:886544

Moore C (2019) Russia and disinformation: Maskirovka

Newman EJ, Garry M, Unkelbach C, Bernstein DM, Lindsay DS, Nash RA (2015) Truthiness and falsiness of trivia claims depend on judgmental contexts. Journal of Experimental Psychology: Learning, Memory, and Cognition 41(5):1337

Nickerson RS (1998) Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology 2(2):175–220

Oshikawa R, Qian J, Wang WY (2020) A Survey on Natural Language Processing for Fake News Detection. In Proceedings of the Twelfth Language Resources and Evaluation Conference, pp. 6086–6093

Paschen J (2020) Investigating the emotional appeal of fake news using artificial intelligence and human contributions. Journal of Product & Brand Management 29(2):223–233

Pennycook G, Rand DG (2020) Who falls for fake news? the roles of bullshit receptivity, overclaiming, familiarity, and analytic thinking. Journal of Personality 88(2):185–200

Petersen MB, Osmundsen M, Arceneaux K (2018) A “need for chaos” and the sharing of hostile political rumors in advanced democracies. PsyArXiv Preprints

Phan HT, Nguyen NT, Hwang D (2023) Fake news detection: A survey of graph neural network methods. Applied Soft Computing, 110235

Rubin VL (2010) On deception and deception detection: Content analysis of computer-mediated stated beliefs. Proceedings of the American Society for Information Science and Technology 47(1):1–10

Savyan P, Bhanu SMS (2017) Behaviour profiling of reactions in Facebook posts for anomaly detection. In 2017 Ninth International Conference on Advanced Computing, pages 220–226. IEEE

Shad HS, Rizvee MM, Roza NT, Hoq S, Monirujjaman Khan M, Singh A et al. (2021) Comparative analysis of deepfake image detection method using convolutional neural network. Computational Intelligence and Neuroscience, 2021

Sharma K, Qian F, Jiang H, Ruchansky N, Zhang M, Liu Y (2019) Combating fake news: A survey on identification and mitigation techniques. ACM Transactions on Intelligent Systems and Technology 10(3):1–42

Shin Y, Sojdehei Y, Zheng L, Blanchard B (2023) Content-based unsupervised fake news detection on Ukraine-Russia war. SMU Data Science Review 7(1):3

Shu K, Sliva A, Wang S, Tang J, Liu H (2017) Fake news detection on social media: A data mining perspective. ACM SIGKDD Explorations Newsletter 19(1):22–36

Shu K, Mahudeswaran D, Wang S, Lee D, Liu H (2020) Fakenewsnet: A data repository with news content, social context, and spatiotemporal information for studying fake news on social media. Big Data 8(3):171–188

Singh A, Patidar S (2023) Fake news detection using supervised machine learning classification algorithms. In Inventive Computation and Information Technologies: Proceedings of ICICIT 2022, 919–933. Springer

Stenberg G (2006) Conceptual and perceptual factors in the picture superiority effect. European Journal of Cognitive Psychology 18(6):813–847

Taeb M, Chi H (2022) Comparison of deepfake detection techniques through deep learning. Journal of Cybersecurity and Privacy 2(1):89–106

Takayasu M, Sato K, Sano Y, Yamada K, Miura W, Takayasu H (2015) Rumor diffusion and convergence during the 3.11 earthquake: a Twitter case study. PLoS one 10(4):e0121443

Tandoc Jr EC, Lim ZW, Ling R (2018) Defining “fake news” a typology of scholarly definitions. Digital Journalism 6(2):137–153

Vaccari C, Chadwick A (2020) Deepfakes and disinformation: Exploring the impact of synthetic political video on deception, uncertainty, and trust in news. Social Media+ Society 6(1):2056305120903408

Van der Linden S, Roozenbeek J et al. (2020) Psychological inoculation against fake news. The Psychology of Fake News: Accepting, Sharing, and Correcting Misinformation, 147–169

Verdoliva L (2020) Media forensics and deepfakes: an overview. IEEE Journal of Selected Topics in Signal Processing 14(5):910–932

Vosoughi S, Roy D, Aral S (2018) The spread of true and false news online. Science 359(6380):1146–1151

Wang AB (2016) ‘Post-truth’ named 2016 word of the year by Oxford Dictionaries

Wang WY (2017) “liar, liar pants on fire”: A new benchmark dataset for fake news detection. arXiv preprint arXiv:1705.00648

Watson A (2023a) Confidence in ability to recognise fake news US

Watson A (2023b) Fake news shared on social media in US

Wei L, Gong J, Xu J, Abidin NEZ, Apuke OD (2023) Do social media literacy skills help in combating fake news spread? modelling the moderating role of social media literacy skills in the relationship between rational choice factors and fake news sharing behaviour. Telematics and Informatics 76:101910

Weiss AP, Alwan A, Garcia EP, Garcia J (2020) Surveying fake news: Assessing university faculty’s fragmented definition of fake news and its impact on teaching critical thinking. International Journal for Educational Integrity 16:1–30

Wen T, Chen Y-W, Lambiotte R (2024) Collective effect of self-learning and social learning on language dynamics: a naming game approach in social networks. Journal of the Royal Society Interface 21(221):20240406

Wen T, Zheng R, Wu T, Liu Z, Zhou M, Syed TA et al. (2024b) Formulating opinion dynamics from belief formation, diffusion and updating in social network group decision-making: Towards developing a holistic framework. European Journal of Operational Research

Wen T, Chen, Y-W, Syed TA, Ghataoura D (2025) Examining communication network behaviors, structure and dynamics in an organizational hierarchy: A social network analysis approach. Information Processing & Management, 62(1):103927

Wenzel A (2019) To verify or to disengage: Coping with “fake news” and ambiguity. International Journal of Communication 13:19

Witten IB, Knudsen EI (2005) Why seeing is believing: merging auditory and visual worlds. Neuron 48(3):489–496

Yang X, Li Y Lyu S (2019) Exposing deep fakes using inconsistent head poses. In ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing, 8261–8265. IEEE

Zhou X, Zafarani R (2020) A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Computing Surveys (CSUR) 53(5):1–40

Author information

Authors and Affiliations

Contributions

T.M.A., T.W., and Y.W.C. conceptualised and designed the research. T.M.A. derived analytical results, performed numerical calculations, and analysed the data. All the authors wrote, reviewed, and approved the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

This article does not contain any studies with human participants performed by any of the authors.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abraham, T.M., Wen, T., Wu, T. et al. Leveraging data analytics for detection and impact evaluation of fake news and deepfakes in social networks. Humanit Soc Sci Commun 12, 1040 (2025). https://doi.org/10.1057/s41599-025-05389-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1057/s41599-025-05389-4