Abstract

The accelerated shift to digital learning in higher education, driven by the COVID-19 pandemic, significantly impacted student satisfaction and technology adoption. Utilizing the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) methodology, this systematic review and meta-analysis assesses the impact of this transition on student satisfaction, measured through the Net Promoter Score (NPS). Analysis across multiple educational programs reveals that postgraduate programs focused on educational innovation received a more favorable response to technological integration compared to undergraduate programs, which demonstrated lower NPS scores and encountered greater adaptation challenges. The findings highlight the necessity of conducting experimental research to further understand causal relationships between technology use and student satisfaction, considering diverse academic contexts, pedagogical strategies, and accessibility of resources. This research advances the discourse on digital transformation in education by emphasizing the importance of tailored technology-mediated strategies that address diverse student needs.


Introduction

The COVID-19 pandemic, characterized as a pandemic by the World Health Organization (WHO) on March 11, 2020, caused unprecedented disruption across all societal sectors, significantly affecting higher education (Li et al. 2023; Pardo-Jaramillo et al. 2023). Originating in Wuhan, China, in late December 2019, the virus rapidly spread internationally, prompting governments worldwide to enforce stringent containment measures. Consequently, educational institutions were compelled to swiftly transition to online learning platforms. By mid-2020, this sudden shift had impacted nearly 1.6 billion learners across over 190 countries, revealing considerable vulnerabilities and adaptability challenges in existing educational systems (UNESCO, 2020).

While the rapid pivot to digital learning environments demonstrated resilience within higher education, it also underscored disparities in technology access, digital literacy, and institutional capacities to maintain educational quality remotely (Patrinos et al. 2022). The widespread adoption of video conferencing and learning-management tools such as Zoom, Microsoft Teams, and Google Classroom reshaped pedagogical practices, significantly influencing learning behaviors (Chen et al. 2023) and student-teacher interactions (Zhao et al. 2022). Despite these technological adaptations, concerns persisted regarding educational equity, student engagement, mental health, and the effectiveness of online learning compared to traditional face-to-face instruction (Bilal et al. 2022).

Given these transformative changes and their impact on student engagement, our research systematically examines how the COVID-19 pandemic affected student satisfaction in higher education. We employ the Net Promoter Score (NPS) as a quantitative measure of student satisfaction across different educational modalities, specifically comparing traditional and digital learning environments. Our systematic literature review addresses the following research questions (RQs):

- RQ1: What methodologies have been predominantly used in evaluating student satisfaction via NPS in online learning during COVID-19?
- RQ2: What outcomes related to technology-mediated learning have been reported by these studies?
- RQ3: What significant gaps in knowledge remain regarding student satisfaction during the shift to virtual education?

This study further explores how technological tools differentially impacted undergraduate and graduate programs, aiming to provide novel insights for optimizing digital learning environments tailored to diverse student groups. Drawing upon Customer Experience (CX) and Student Experience (SX) theories, we seek to bridge existing literature gaps by offering a comprehensive understanding of educational technology’s role in crisis-driven learning contexts. This theoretical framing underscores the relevance of our research questions and the robustness of our methodological approach.

To systematically address these research questions, we conducted an exploratory review following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. This approach ensures transparency, reproducibility, and rigor in synthesizing scientific evidence from literature published between 2020 and 2024 that employed NPS to measure student satisfaction in virtual education settings during the pandemic. Clearly defined inclusion and exclusion criteria enhanced the relevance and quality of the selected studies. A key innovation of this research is its application of the NPS—a metric traditionally utilized in customer experience research—to the higher education context. This novel perspective provides valuable insights into student perceptions and levels of satisfaction regarding online learning tools.

This research contributes to existing literature by demonstrating the utility of NPS as a robust evaluative tool for student satisfaction in higher education, effectively bridging customer experience metrics and educational assessment. Through systematic analysis, we identify significant differences in undergraduate versus graduate experiences, highlighting that graduate programs generally benefited more from educational innovation and technological integration. These findings provide actionable insights for institutions navigating post-pandemic transitions toward hybrid and digital learning models, emphasizing the importance of equitable, flexible, and effective strategies tailored to diverse student needs.

The remainder of this paper is structured as follows: Section “State of the art” critically reviews existing literature related to technology adoption in higher education and its impact on student satisfaction, contextualizing our research within broader academic discourse. Section “Methodology” outlines our systematic review methodology, detailing the selection and analysis processes. Section “Results and Analysis” presents findings from the literature review, illuminating the effects of technological tools on student experiences during the pandemic. Section “Discussion, research agenda, and limitations” provides a discussion of these findings, examining implications for educational practice and policy. Finally, Section “Conclusions” offers conclusions and proposes a future research agenda.

State of the art

This section of the manuscript provides an overview of the theoretical foundations underpinning our study, focusing on CX, SX, and the NPS. Each concept contributes unique yet interconnected insights into how technological tools can enhance student satisfaction in higher education, particularly during the transformative conditions brought on by the COVID-19 pandemic.

Customer experience

Pine and Gilmore (1998) advanced the concept of the experience economy, distinguishing experiences from traditional goods and services by defining them as intentionally designed, memorable events. This foundational perspective laid essential groundwork for the development of the CX concept, now a critical element in today’s service-oriented economy. The application of CX principles within higher education can significantly impact student satisfaction, especially through the purposeful design of engaging and responsive learning experiences (de Melo Pereira et al. 2015).

The academic discourse surrounding the definition of CX has been marked by ongoing debate and complexity. Becker and Jaakkola (2020), together with Roy et al. (2022), highlight varying interpretations, ranging from company-designed offerings to customers’ personal responses during interactions with organizations. This complexity is further amplified by overlaps between CX and related concepts such as customer engagement and service quality, posing a multifaceted challenge for scholarly consensus. McColl-Kennedy et al. (2015) note that discussions around CX are largely informed by practical rather than purely theoretical insights, leading to an understanding of CX centered on critical interaction points and the visualization of customer touchpoints through service blueprints. We align with the definition proposed by LaSalle and Britton (2003), subsequently expanded by Gentile et al. (2007), viewing CX as customer reactions arising from interactions with organizations and their offerings. This definition emphasizes both the reactive dimension of CX and its strategic importance for fostering customer-centricity. A similar strategic alignment appears in corporate sustainability discussions, suggesting that integrating customer-centric and purpose-driven strategies not only promotes organizational sustainability but also enhances customer satisfaction through a nuanced understanding and fulfillment of expectations (Pardo-Jaramillo et al. 2020; Pardo-Jaramillo, Gomez, et al. 2025; Pardo-Jaramillo et al. 2025).

Effective CX management demonstrably influences customer retention and organizational financial performance (Ascarza et al. 2017; Becker and Jaakkola, 2020; Hogan et al. 2002). Gentile et al. (2007) provide a comprehensive multidimensional CX framework, integrating sensory, emotional, cognitive, pragmatic, lifestyle, and relational dimensions. This enriched perspective goes beyond transactional interactions, emphasizing deeper, meaningful customer engagement. Recent scholarly attention has expanded into digital contexts, dissecting CX nuances within sectors such as e-commerce and hospitality (Akin, 2024; Kim and Fung So, 2024; Luo et al. 2024; Youssofi et al. 2023). These studies underscore the importance of managing interconnected touchpoints across diverse service platforms, extending insights to the realm of educational experiences (Holz et al. 2023).

Student experience

Building upon foundational CX concepts, the notion of Student Experience (SX) conceptualizes students as customers of educational services, drawing clear parallels with CX principles as outlined by LaSalle and Britton (2003) and Hamdan et al. (2021). This perspective positions students as active participants interacting with diverse services provided by educational institutions. Matus et al. (2021) systematically review existing literature, defining SX as a specialized category of CX characterized by consumption and interactions within the academic ecosystem. This approach emphasizes critical factors influencing student satisfaction, such as academic support, student engagement, and perceived educational value (Pinar et al. 2024).

Recent advances in pedagogical strategies and educational technologies have significantly influenced SX. Studies by Iqbal et al. (2024) and Karlsen et al. (2024) examine innovative educational approaches, including in-situ simulation training and blended learning environments, highlighting their impact on enhancing SX. Similarly, Pettersson et al. (2024) explore the use of digital resources for self-directed study, emphasizing technology’s role in improving student learning outcomes.

The SX literature also addresses specific contextual factors affecting students’ experiences. Nolan and Owen (2024) investigate how equality, diversity, and inclusion influence medical students’ experiences, while Bono et al. (2024) examine anxiety triggered by the abrupt shift to online learning due to the COVID-19 pandemic. These studies underscore the importance of emotional and psychological dimensions in fostering inclusive, supportive educational environments. Additionally, technological adoption (Liu et al. 2023) and access to internet resources (Dilan Boztas et al. 2023) emerge as essential factors for ensuring equitable, effective educational experiences. Liu et al. (2024) further stress the significance of addressing students’ emotional and semantic needs in digital education. Collectively, these insights highlight the necessity of carefully integrating technological tools to enhance—rather than detract from—educational experiences, setting a clear rationale for evaluating these tools using metrics such as the Net Promoter Score.

Net promoter score

Building upon the preceding discussion on SX, which emphasizes the complex interactions students experience within academia, we turn now to a critical metric for evaluating broader aspects of satisfaction and loyalty: the Net Promoter Score (NPS). Introduced by Reichheld (2003) and widely adopted in both business and academic contexts, NPS provides a straightforward yet powerful means of measuring the likelihood that customers—or, in the educational context, students—would recommend a service or institution to others (Tariq Ismail Al-Nuaimi et al. 2016).

The widespread adoption and utility of NPS among Fortune 1000 companies underscore its practical relevance and value (Bendle et al. 2019). However, ongoing debates persist regarding its methodological limitations, particularly concerning respondent segmentation (Baehre et al. 2022a; Baehre et al. 2022b; Grisaffe, 2007). Some studies question NPS’s correlation with sales growth (Dawes, 2022; Keiningham et al. 2007; Morgan and Rego, 2006), whereas others confirm significant predictive relationships (Baehre et al. 2022a). These findings highlight the necessity of employing multiple metrics and considering contextual factors when evaluating customer satisfaction and loyalty. Despite these debates, NPS’s utility in predicting customer retention and business performance is widely recognized (de Haan et al. 2015), positioning it as a valuable, though complementary, metric within broader evaluative frameworks.

In this study, we employ NPS to rigorously quantify the impact of technological disruptions due to COVID-19 on student experiences in higher education. By integrating NPS, we bridge theoretical frameworks with practical application, effectively synthesizing diverse research findings into a coherent understanding of student satisfaction amid rapidly evolving educational environments. Grounding our analysis within robust theoretical frameworks of CX, SX, and NPS, this section sets the stage for examining technology’s transformative impacts on education, guiding our methodological approach, and ensuring a holistic understanding of student experiences.

Methodology

We conducted a systematic exploratory review following the guidelines provided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA; Page et al. 2021). Additionally, we adopted recommendations for systematic exploratory reviews proposed by Tricco et al. (2018), complemented by frameworks outlined by Arksey and O’Malley (2005) and Levac et al. (2010). These guidelines collectively informed the formulation of research questions, identification of relevant literature, and selection of pertinent studies.

Our methodological approach explicitly outlined each step of the selection process, including search strategies, databases queried, and clearly defined inclusion and exclusion criteria. To maintain rigor, we conducted a risk-of-bias assessment using standardized tools, ensuring consistent evaluation of study quality. Statistical methods were applied to quantify measures of effect, ensuring reliability and clarity in our meta-analysis.

While 12 studies initially met our systematic review criteria, only 5 provided sufficient data (counts of promoters and detractors) necessary for quantitative meta-analysis, specifically enabling comparisons between undergraduate and postgraduate programs. Each selected study adhered rigorously to predefined criteria, guaranteeing consistency and quality of outcomes relevant to our research questions. Given the expected heterogeneity and limited number of studies, we chose a random-effects model to enhance generalizability, as recommended by Borenstein et al. (2009) and Davey et al. (2011).

To further ensure comprehensiveness, two independent reviewers conducted the study selection process. Discrepancies were resolved through discussion or consultation with a third reviewer. Additionally, we conducted extensive literature searches using keyword strategies, reference-list examination, and expert consultations to minimize the risk of omitting significant publications. The Quality Appraisal for Diverse Studies (QuADS) tool was employed to systematically identify and mitigate potential biases, further enhancing credibility and reliability.

The following subsections detail our methodological process, underscoring the rigor and transparency applied in examining the impact of technology adoption on student satisfaction during the COVID-19 pandemic.

Search strategy

Our review established strict inclusion criteria, focusing on observational studies evaluating student satisfaction via NPS within virtual learning environments during COVID-19. Specifically, we included studies published from 2020 to February 2024, written in English or Spanish, indexed in Scopus or Web of Science databases. We excluded narrative reviews, editorials, prior systematic reviews, and articles lacking abstracts or full-text accessibility. Additionally, studies that did not employ NPS as an evaluative measure were omitted.
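
The eligibility rules above can be encoded as a single predicate, which makes each screening decision explicit and repeatable. The following is a minimal Python sketch under stated assumptions: the `Candidate` record and its field names are hypothetical, the window "2020 to February 2024" is read as 1 January 2020 through 29 February 2024 inclusive, and the judgment of whether a study is observational and set in a COVID-19 virtual learning environment is left to the human reviewers rather than encoded.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Candidate:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    published: date
    language: str              # e.g. "English", "Spanish"
    database: str              # e.g. "Scopus", "Web of Science"
    doc_type: str              # e.g. "article", "narrative review", "editorial"
    has_abstract: bool
    full_text_available: bool
    uses_nps: bool


ALLOWED_LANGUAGES = {"English", "Spanish"}
ALLOWED_DATABASES = {"Scopus", "Web of Science"}
EXCLUDED_TYPES = {"narrative review", "editorial", "systematic review"}
# Assumption: "2020 to February 2024" is treated as an inclusive date window.
WINDOW_START, WINDOW_END = date(2020, 1, 1), date(2024, 2, 29)


def is_eligible(c: Candidate) -> bool:
    """True only if every inclusion criterion holds and no exclusion criterion applies."""
    return (
        WINDOW_START <= c.published <= WINDOW_END
        and c.language in ALLOWED_LANGUAGES
        and c.database in ALLOWED_DATABASES
        and c.doc_type not in EXCLUDED_TYPES
        and c.has_abstract
        and c.full_text_available
        and c.uses_nps
    )


paper = Candidate("Example study", date(2021, 5, 3), "Spanish", "Scopus",
                  "article", True, True, True)
print(is_eligible(paper))  # True
```

Keeping every criterion in one boolean expression means a rejected record can be traced back to the first failing condition during reviewer reconciliation.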

We conducted structured searches in Scopus and Web of Science on February 17, 2024, using tailored search queries combining keywords such as “net promoter score,” “technology,” “COVID-19,” “student,” “education,” and “NPS,” with temporal filters limiting results to the post-2019 pandemic era. Table 1 outlines these search equations designed to comprehensively capture relevant literature.

Study selection

Initial searches yielded 487 articles; however, preliminary screening indicated that many did not explicitly reference ‘Net Promoter Score,’ so we refined the search parameters. After deduplication, 126 unique articles remained. Two primary authors screened titles, abstracts, and full texts, consulting a third reviewer in cases of uncertainty. This rigorous evaluation excluded 114 articles that did not meet the established inclusion criteria, leaving 12 eligible studies for detailed review.

Figure 1 presents the workflow from initial identification to final selection in a PRISMA flowchart, summarizing the selection process. A full list of reviewed articles is available in Appendix 1 or upon request to the corresponding author of this paper.

Data extraction, quality evaluation, and synthesis

Consistent with our systematic review protocol, we developed a structured data extraction form to accurately collect relevant information from studies meeting our inclusion criteria. The extraction form captured essential variables, including Net Promoter Score (NPS), counts of promoters and detractors, and the digital platforms utilized in each study. Additional data, such as the total number of respondents, geographical location of studies, and the nature of academic programs investigated, were recorded to support deeper analyses.
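
A structured extraction form maps naturally onto a typed record. The Python sketch below is illustrative only: `ExtractionRecord` and its fields are hypothetical names mirroring the variables listed above, and the consistency check is one example of the kind of verification a second reviewer might run, not a step the review reports.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExtractionRecord:
    """One row of a hypothetical extraction form; field names are illustrative."""
    study: str
    program_level: str          # "undergraduate", "postgraduate", or "other"
    country: str
    respondents: int            # total number of survey respondents (N)
    promoters: int
    detractors: int
    platforms: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Example consistency checks a verifying reviewer might apply.
        if min(self.respondents, self.promoters, self.detractors) < 0:
            raise ValueError(f"{self.study}: counts must be non-negative")
        if self.respondents == 0 or self.promoters + self.detractors > self.respondents:
            raise ValueError(f"{self.study}: counts inconsistent with respondents")

    @property
    def nps(self) -> float:
        """NPS in points: percentage of promoters minus percentage of detractors."""
        return 100.0 * (self.promoters - self.detractors) / self.respondents


rec = ExtractionRecord("Study A (hypothetical)", "undergraduate", "Peru",
                       respondents=120, promoters=54, detractors=30,
                       platforms=["Google Meet"])
print(rec.nps)  # 20.0
```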

Two independent reviewers verified the accuracy of extracted data. Subsequently, all selected studies underwent a thorough quality assessment using the Quality Appraisal for Diverse Studies (QuADS) tool, recognized for its effectiveness in evaluating diverse study designs and systematically identifying potential biases (Harrison et al. 2021).

For data synthesis, NPS was computed based on participant ratings of their likelihood to recommend educational services. Ratings were categorized as detractors (scores of 0–6), passives (7–8), and promoters (9–10), as illustrated in Fig. 2. The NPS was calculated by subtracting the percentage of detractors from the percentage of promoters, yielding the resulting measure of satisfaction.
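
The categorization and subtraction described above take only a few lines. This Python sketch assumes ratings arrive as integers on the 0–10 scale; the function names are our own. Passives affect the result only through the denominator, so the score ranges from −100 (all detractors) to +100 (all promoters).

```python
from typing import Dict, Iterable


def classify(rating: int) -> str:
    """Map a 0-10 likelihood-to-recommend rating to its NPS category."""
    if not 0 <= rating <= 10:
        raise ValueError(f"rating out of range: {rating}")
    if rating <= 6:
        return "detractor"
    if rating <= 8:
        return "passive"
    return "promoter"


def net_promoter_score(ratings: Iterable[int]) -> float:
    """NPS = % promoters - % detractors; passives count only in the denominator."""
    counts: Dict[str, int] = {"detractor": 0, "passive": 0, "promoter": 0}
    for r in ratings:
        counts[classify(r)] += 1
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no ratings supplied")
    return 100.0 * (counts["promoter"] - counts["detractor"]) / n


# 5 promoters, 2 passives, 3 detractors out of 10 respondents.
print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10, 9, 5]))  # 20.0
```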

The diagram segments survey respondents by their 0–10 recommendation rating: Detractors (scores 0–6, red angry-face icon), Passives (scores 7–8, yellow neutral-face icon) and Promoters (scores 9–10, green smiling-face icon). The Net Promoter Score is calculated as the percentage of Promoters minus the percentage of Detractors; Passives do not enter the equation.

To quantify the variability in NPS across studies, we used the standard deviation (SD) formula \(SD=\sqrt{\frac{p\cdot pp}{N}+\frac{d\cdot pd}{N}}\), where \(p\) is the number of promoters, \(pp\) is the total number of survey respondents minus the number of promoters, \(d\) is the number of detractors, \(pd\) is the total number of survey respondents minus the number of detractors, and \(N\) is the total number of survey respondents (Glasziou et al. 2001). We then conducted a meta-analysis to combine study outcomes into a weighted average using the inverse variance method (Hackshaw, 2011). Heterogeneity among studies was evaluated using the Q statistic and the \(I^2\) index. The Q statistic assesses total variation across studies, indicating whether the observed variation exceeds what would be expected by chance. The \(I^2\) index quantifies heterogeneity as the percentage of total variation attributable to between-study differences rather than chance alone, with values of 0–40% (low), 30–60% (moderate), 50–90% (substantial), and 75–100% (considerable). Given the limited number of included studies, formal statistical testing for publication bias was not conducted (Dalton et al. 2016).
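
The per-study standard error and the inverse-variance pooling with Q and \(I^2\) can be sketched in Python as follows. On our reading, the SD formula above is expressed in count units, so dividing it by N gives the standard error of the NPS on the proportion scale; this rescaling is our assumption. Like the formula, the sketch treats the promoter and detractor shares as independent, whereas a multinomial variance would add a covariance term \(2\hat{p}\hat{d}/N\) (with \(\hat{p}=p/N\), \(\hat{d}=d/N\)). The pooling shown is fixed-effect inverse-variance weighting; a random-effects model additionally estimates a between-study variance. Function names and example counts are hypothetical.

```python
import math
from typing import List, Tuple


def nps_and_se(promoters: int, detractors: int, n: int) -> Tuple[float, float]:
    """Return (NPS, SE) on the proportion scale (-1 to 1).

    sd_counts is the text's formula sqrt(p*pp/N + d*pd/N); dividing it by N
    (our assumption) converts it to the proportion scale. Like the text, this
    ignores the covariance between promoter and detractor shares.
    """
    pp = n - promoters   # respondents who are not promoters
    pd = n - detractors  # respondents who are not detractors
    sd_counts = math.sqrt(promoters * pp / n + detractors * pd / n)
    return (promoters - detractors) / n, sd_counts / n


def inverse_variance_pool(estimates: List[float], ses: List[float]) -> Tuple[float, float, float]:
    """Fixed-effect inverse-variance pooled estimate, Cochran's Q, and I^2 (%)."""
    weights = [1.0 / se ** 2 for se in ses]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return pooled, q, i2


# Hypothetical (promoters, detractors, respondents), not data from the review.
studies = [(60, 10, 100), (45, 30, 120), (80, 5, 90)]
pairs = [nps_and_se(p, d, n) for p, d, n in studies]
pooled, q, i2 = inverse_variance_pool([y for y, _ in pairs], [se for _, se in pairs])
print(f"pooled NPS = {100 * pooled:.1f}, Q = {q:.1f}, I^2 = {i2:.0f}%")
```

\(I^2\) is truncated at zero, so when Q falls below its degrees of freedom the index reports no heterogeneity rather than a negative value.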

Finally, evidence collected through this process was summarized to characterize the impact of virtual education on student satisfaction during the pandemic, explicitly using NPS as the evaluative metric. Visual data representation techniques, such as Word Clouds generated using the Bibliometrix package in R software (version 4.2.1; Aria and Cuccurullo, 2017), were employed to illustrate key findings clearly.

Results and analysis

In this section, we present the results of our analysis regarding how the Net Promoter Score (NPS) reflects the impact of technology-mediated classes on university student satisfaction during the COVID-19 pandemic. Initially, 12 studies evaluating technology’s influence on student satisfaction were included. Of these, five provided sufficient NPS data to enable comparisons between undergraduate and postgraduate programs, forming the basis for our meta-analysis. This quantitative analysis offers insights into how different educational levels responded to technological adoption, highlighting broader implications for educational policy and practice. Results are structured around the original research questions: (1) prevalent methodologies used in NPS-based evaluations, (2) key outcomes on student satisfaction, and (3) significant knowledge gaps identified in existing literature.

Study characteristics and quality review

We organized and analyzed data extracted from the 12 selected studies, categorizing them according to their demographic focus as outlined in Table 2: undergraduate students (n = 5), postgraduate students (n = 4), and studies with primarily methodological or descriptive objectives (n = 3). A standardized Excel template ensured consistency and objectivity in data extraction and subsequent analysis, particularly regarding study quality and reported NPS results.

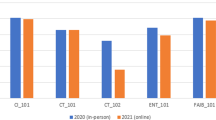

Analysis of NPS outcomes revealed predominantly positive scores in 9 out of 10 studies reporting this metric (Fig. 3). This trend indicates general favorability toward technology-mediated classes as measured by student satisfaction during the pandemic.

Quality and potential biases within the studies were carefully assessed. The studies employed diverse methodologies: validity testing of questionnaires (30%), longitudinal studies (40%), retrospective cohort studies (10%), and prospective cohort studies (20%). Postgraduate studies exclusively addressed medical fields, whereas undergraduate studies covered disciplines such as architecture, systems engineering, science and technology, mechanical engineering, and human nutrition. Risk-of-bias evaluation indicated an overall elevated risk across studies (Figs. 4, 5).

Horizontal stacked bars display, for each QUADAS-2 risk-of-bias and applicability-concerns domain, the percentage of studies judged at low, unclear, or high risk. Color coding and domain structure follow the standard QUADAS-2 guidance for diagnostic-accuracy reviews and the robvis visualisation scheme. Percentages are shown on the x-axis (0–100 %), allowing rapid comparison of methodological quality across domains.

Each column represents one of the 10 included studies, and each row corresponds to a QUADAS-2 domain: risk of bias and applicability concerns. Cell symbols are colour-coded following the robvis/Cochrane convention: green circle with plus sign (+) = low risk, yellow circle with question mark (?) = unclear risk, and red circle with minus sign (–) = high risk.

Digital platforms adopted for teaching varied notably. Undergraduate studies predominantly used Google Meet, whereas postgraduate studies favored Zoom and Webex (Fig. 6). Geographical mapping further illustrated platform adoption patterns, with Zoom emerging as the most common tool (Fig. 7).

A world map highlights countries that contributed at least one article to the review (shaded blue) and annotates each with the e-learning platforms those studies used. Countries with no included studies remain grey. Platform logos serve as visual keys and are positioned adjacent to the relevant country.

Studies originated primarily from Peru and the United States, each contributing four publications. Demographically, undergraduate students represented 34% (n = 541) and graduate students 13% (n = 207) of the total of 1562 students across 11 studies. Gender distribution was reported in only four studies, with a slight majority of female participants (n = 451) compared to males (n = 413), as depicted in Fig. 8.

Table 3 summarizes the key characteristics, NPS scores, and limitations identified within the selected studies.

Correlational analysis indicated that studies incorporating elements such as educational innovation, reliable internet access, and flexibility in learning generally reported higher NPS scores (Fig. 9). This highlights the importance of these factors in enhancing student satisfaction with virtual education. A detailed overview of these objective outcomes across studies is presented in Table 4.

Impact by program level

To investigate the differential impact of technological adoption on student satisfaction, we divided the analysis by educational level—undergraduate versus graduate programs. Our meta-analysis leveraged absolute and relative frequencies of promoters and detractors extracted from five studies meeting our criteria (three undergraduate and two graduate studies). Data from these studies were synthesized using Review Manager software (version 5.4.1), presenting results in Table 5 and Fig. 10. We recorded event proportions, weights assigned to each study, and risk differences with 95% confidence intervals (CI), utilizing the Mantel-Haenszel method within a random-effects model to accommodate inherent study variability.

A Mantel–Haenszel random-effects model compares the proportion of Promoters minus Detractors (risk difference, 95% CI) for individual studies and pooled sub-totals in graduate programmes (Moschovis 2022; Klifto 2021) and undergraduate programmes (Hakeem 2022; Nakwong 2020; Thakker 2020), with an overall total shown at the bottom. Each study is drawn as a blue square centred on its point estimate; square area is proportional to the inverse-variance weight assigned in the meta-analysis, and horizontal whiskers depict the 95% confidence interval. Black diamonds represent the pooled effect for each subgroup and for all studies combined; the lateral tips mark the pooled 95% CI, following standard forest-plot conventions. The vertical solid line at zero denotes “no NPS advantage”: estimates to the right favour higher NPS, while those to the left favour lower NPS. Arrows on whiskers indicate confidence limits extending beyond the x-axis scale.
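
The subgroup analysis can be approximated outside Review Manager. The Python sketch below treats each study's promoters and detractors as two "arms" of size N, so the risk difference equals the NPS on the proportion scale. It computes the Mantel–Haenszel fixed-effect risk difference, then a DerSimonian–Laird random-effects estimate with a 95% CI. Computing Q with inverse-variance weights around the Mantel–Haenszel estimate reflects our understanding of how Review Manager 5 handles this case; treat that, the function names, and the example counts as assumptions, and do not expect the sketch to reproduce Table 5.

```python
import math
from typing import Dict, List, Tuple

Study = Tuple[str, int, int, int]  # (label, promoters, detractors, respondents)


def rd_and_var(promoters: int, detractors: int, n: int) -> Tuple[float, float]:
    """Risk difference (promoter share minus detractor share, i.e. NPS as a
    proportion) and its variance, treating the shares as independent arms of size n."""
    rd = (promoters - detractors) / n
    var = (promoters * (n - promoters) + detractors * (n - detractors)) / n ** 3
    return rd, var


def mh_random_effects(studies: List[Study]) -> Dict[str, object]:
    rds, variances, mh_w = [], [], []
    for _, p, d, n in studies:
        rd, var = rd_and_var(p, d, n)
        if var == 0:
            raise ValueError("zero variance; risk difference undefined for these counts")
        rds.append(rd)
        variances.append(var)
        mh_w.append(n * n / (n + n))  # MH weight n1*n2/(n1+n2) with n1 = n2 = n
    # Fixed-effect Mantel-Haenszel risk difference.
    rd_mh = sum(w * y for w, y in zip(mh_w, rds)) / sum(mh_w)
    # Cochran's Q with inverse-variance weights around the MH estimate (assumption).
    iv_w = [1.0 / v for v in variances]
    q = sum(w * (y - rd_mh) ** 2 for w, y in zip(iv_w, rds))
    df = len(studies) - 1
    c = sum(iv_w) - sum(w * w for w in iv_w) / sum(iv_w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # DerSimonian-Laird random-effects pooling with a 95% confidence interval.
    re_w = [1.0 / (v + tau2) for v in variances]
    rd_re = sum(w * y for w, y in zip(re_w, rds)) / sum(re_w)
    se_re = math.sqrt(1.0 / sum(re_w))
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return {"rd_mh": rd_mh, "rd_random": rd_re,
            "ci95": (rd_re - 1.96 * se_re, rd_re + 1.96 * se_re),
            "tau2": tau2, "Q": q, "I2": i2}


# Hypothetical counts for illustration only.
res = mh_random_effects([("Study A", 90, 5, 100), ("Study B", 70, 10, 80),
                         ("Study C", 30, 20, 120)])
print(round(res["rd_random"], 3), res["ci95"], round(res["I2"], 1))
```

Because both arms share the same denominator, the Mantel–Haenszel weights reduce to N/2, so larger surveys dominate the fixed-effect estimate; the random-effects weights flatten that dominance as the between-study variance grows.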

Graduate-level studies showed significantly higher satisfaction, with an average NPS of 83%, indicating robustly positive experiences. Conversely, undergraduate studies revealed an average NPS of only 16%, reflecting mixed or neutral sentiment toward technological interventions.

Further heterogeneity analysis (Table 6) revealed substantial variability within both educational levels (I² = 83% for graduate and 89% for undergraduate studies). Combining undergraduate and graduate data raised heterogeneity further (I² = 99%), suggesting marked differences in student satisfaction dynamics between these groups.
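
The rise in I² when subgroups are combined can be illustrated with a fixed-effect decomposition of Cochran's Q, in which the total Q splits into within-subgroup and between-subgroup parts. The Python sketch below uses made-up estimates, not the review's data, chosen so that each subgroup is internally consistent while the two subgroups differ. The between-subgroup Q is one standard test for subgroup differences, offered as an illustration rather than an analysis the review reports.

```python
from typing import List, Tuple


def q_and_i2(estimates: List[float], ses: List[float]) -> Tuple[float, float]:
    """Fixed-effect inverse-variance Cochran's Q and I^2 (%) for one set of studies."""
    w = [1.0 / s ** 2 for s in ses]
    pooled = sum(wi * y for wi, y in zip(w, estimates)) / sum(w)
    q = sum(wi * (y - pooled) ** 2 for wi, y in zip(w, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return q, i2


# Made-up NPS estimates (proportion scale) and standard errors.
grad_y, grad_se = [0.75, 0.70], [0.03, 0.04]
under_y, under_se = [0.30, 0.25, 0.20], [0.05, 0.04, 0.05]

q_grad, i2_grad = q_and_i2(grad_y, grad_se)
q_under, i2_under = q_and_i2(under_y, under_se)
q_all, i2_all = q_and_i2(grad_y + under_y, grad_se + under_se)

# Total Q = within-subgroup Q + between-subgroup Q (fixed-effect decomposition);
# Q_between is compared against chi-square with (number of subgroups - 1) df.
q_between = q_all - (q_grad + q_under)

print(f"graduate I^2 = {i2_grad:.0f}%, undergraduate I^2 = {i2_under:.0f}%")
print(f"combined I^2 = {i2_all:.0f}%, Q_between = {q_between:.1f} on 1 df")
```

In this toy example both subgroups show essentially no internal heterogeneity, yet pooling them yields an I² near 98%, because almost all of the total Q comes from the gap between subgroups.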

The observed high heterogeneity underscores distinct factors influencing satisfaction at different academic levels, emphasizing the necessity for separate evaluations in future research. Graduate students exhibited consistently positive experiences, while undergraduates displayed varied responses, highlighting specific contextual differences warranting further investigation.

This analysis demonstrates NPS’s effectiveness as a tool for assessing the diverse impacts of technology-mediated learning across educational demographics during COVID-19. The variability in findings between undergraduate and graduate programs elucidates complex dynamics associated with technological integration. These insights lay a robust foundation for exploring implications for educational policy and practice in subsequent discussions.

Discussion, research agenda, and limitations

This discussion explicitly addresses our research questions, highlighting methodologies (RQ1), outcomes (RQ2), and knowledge gaps (RQ3). First, most studies reviewed employed observational designs, particularly longitudinal or retrospective cohort studies, which limit definitive conclusions about causality. Second, graduate students consistently reported higher satisfaction levels compared to undergraduates, underscoring significant differences in technological adaptability by academic level. Lastly, substantial knowledge gaps emerged regarding the absence of experimental studies, longitudinal analyses, and comparative effectiveness evaluations, emphasizing the need for targeted future research.

Graduate programs yielded notably higher NPS scores than undergraduate programs, suggesting enhanced adaptability and satisfaction with technology-mediated education among graduate students. This finding aligns with Zhang et al. (2022), who attributed greater adaptability among graduate students to prior experience with digital tools and higher proficiency in self-directed learning. Similarly, Adedoyin and Soykan (2023) emphasized the necessity of tailored technological integration approaches, recognizing the distinct needs of different academic levels. In contrast, our study revealed significant heterogeneity within undergraduate responses, highlighting variability in their experiences and adaptability to online education. This variability points to the critical need for customized educational strategies addressing undergraduates’ diverse technological competencies.

Employing Customer Experience (CX) and Student Experience (SX) frameworks provided valuable theoretical foundations for interpreting our findings. CX principles, emphasizing strategic management of interactions, directly align with the successful integration of technology in educational settings. Our application of NPS validated its effectiveness as a practical tool to bridge theoretical insights with measurable educational outcomes, offering clarity on the impact of digital tools on student satisfaction.

The pandemic severely constrained the execution of rigorous experimental studies, limiting controlled scientific inquiry. Pandemic-imposed isolation and reduced institutional opportunities for structured research left significant gaps in knowledge about the specific technological factors that influence student satisfaction (Ali, 2020; Sobaih et al. 2020). This highlights a critical need for future research that systematically explores technology’s nuanced role across diverse student demographics and educational settings.

Higher satisfaction among graduate students could stem from their greater academic experience and readiness to navigate digital environments, which make their educational experiences more effective and satisfying. Additionally, the relatively homogeneous interests of graduate cohorts may make it easier to design targeted, effective educational strategies (Gao et al. 2021; Kumi‐Yeboah et al. 2023). Furthermore, our analysis indicates that educational innovation, flexible learning options, and technological accessibility are positively correlated with higher NPS scores, underscoring these factors as crucial determinants of student satisfaction (Khan et al. 2020; Portillo et al. 2020). Because this evidence is correlational, experimental studies are urgently needed to quantify how each of these elements individually enhances educational experiences.

Proposed research agenda

Addressing identified knowledge gaps, our proposed research agenda (Table 7) outlines critical questions aimed at deepening the understanding of technology-mediated educational experiences. It emphasizes the design of optimal customer experiences, effective measurement techniques, technology usage, impacts across academic fields, and differential effects on undergraduate versus graduate learning experiences.

Future studies should prioritize rigorous research designs—including longitudinal, mixed-methods, and controlled experimental studies—to uncover the lasting effects of technological adoption, identify pivotal moments within the student journey, and clarify success factors in educational technology integration. Addressing these topics will offer educators and policymakers valuable insights to design effective, inclusive, and adaptable technology-enhanced learning environments.

Furthermore, variations in digital adoption and educational infrastructure strength across countries (Table 8) indicate that future research must consider technological proficiency and educational system robustness when evaluating student satisfaction. This inclusion will ensure contextually relevant and generalizable findings across diverse educational settings.

Limitations

Our review identified several limitations in the existing body of literature, most notably the reliance on observational study designs, the absence of experimental methodologies, and the resulting inability to infer causality. Additionally, potential biases inherent in existing studies highlight the need for greater methodological rigor. Using the Quality Assessment with Diverse Studies (QuADS) tool (Harrison et al. 2021), we systematically identified such biases, which underscores the importance of prospective experimental studies, particularly randomized controlled trials, to enhance research reliability and validity.

The review’s timeframe, ending in early 2024, may have missed recent studies, limiting insights into emerging trends. Future systematic reviews should periodically update searches to capture ongoing developments. Ensuring representative sampling in terms of geographic, cultural, and socio-economic diversity is essential for accurately capturing student experiences across contexts, particularly given the differential impacts educational technologies can have based on individual and systemic disparities.

Additionally, standardizing terminology and adopting comprehensive search strategies will mitigate risks associated with incomplete identification of relevant studies. Employing a controlled vocabulary, informed by extensive literature review, and adherence to guidelines such as PRISMA will enhance the transparency and reproducibility of future reviews, ensuring more robust and replicable outcomes.

Table 9 summarizes the methodological and research limitations identified in this review, together with recommended strategies for overcoming them. By adopting these methodological improvements, future research can substantially enhance our understanding of how technology affects educational outcomes. Ultimately, this will inform evidence-based strategies that effectively leverage technology to foster equitable, high-quality educational experiences for all students.

Conclusions

This systematic review addressed three central research questions concerning the methodologies, outcomes, and knowledge gaps in evaluating student satisfaction with technology-mediated learning during the COVID-19 pandemic. The reviewed studies, predominantly observational in design, demonstrated limitations in establishing causality. These methodological constraints underline the critical need for experimental and quasi-experimental approaches in future research to more effectively capture the direct impacts of technology integration on educational outcomes.

Graduate students consistently reported higher satisfaction levels than undergraduates, as measured by Net Promoter Scores (NPS). Graduate programs appeared better equipped to integrate technological innovations due to factors such as greater flexibility, prior experience with digital tools, and more uniform academic interests. In contrast, undergraduates showed notably lower and more variable satisfaction, highlighting the necessity for institutions to design targeted technological interventions specifically suited to undergraduate students’ diverse needs and competencies.

By applying Customer Experience (CX) and Student Experience (SX) frameworks to higher education contexts during a global crisis, our study validates the effectiveness of NPS as a practical tool for measuring student satisfaction. This research extends existing theories by demonstrating how customized, student-centered technology solutions significantly enhance educational outcomes. These findings advocate strongly for educational institutions to adopt tailored strategies aligned with best practices in service management and customer-centric approaches.

Our results suggest actionable strategies for higher education institutions aiming to optimize technology-mediated learning experiences. Recommendations include enhancing faculty professional development to improve technological integration in teaching, ensuring equitable technology access to address digital divides, and creating flexible learning environments responsive to diverse student learning styles and preferences. These initiatives collectively support improved student satisfaction and engagement, fostering more effective educational experiences.

Finally, our systematic review identifies crucial gaps, notably the scarcity of rigorous experimental studies and the high heterogeneity in existing observational research. Addressing these gaps through well-designed experimental methodologies will clarify causal relationships between technological tools and student satisfaction. Given the dynamic evolution of educational technology research, periodic updates to systematic reviews are essential to maintain relevance and accurately capture emerging trends and insights.
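Heterogeneity of the kind noted here is conventionally summarized with Cochran's Q and the I² statistic, the share of observed variability across studies that exceeds what sampling error alone would produce (Borenstein et al. 2009). The Python sketch below computes both, together with a DerSimonian–Laird estimate of the between-study variance (τ²) and a random-effects pooled estimate. It illustrates the concepts under stated assumptions and is not the estimator or data used in this review: the function name random_effects_summary, the choice of the DerSimonian–Laird method, and the study-level values in the usage lines are all hypothetical.

```python
from math import sqrt


def random_effects_summary(estimates, variances):
    """DerSimonian-Laird random-effects pooling with Cochran's Q and I^2.

    estimates: per-study effect estimates (e.g. program-level NPS values);
    variances: their within-study sampling variances (all > 0).
    """
    k = len(estimates)
    if k < 2 or k != len(variances):
        raise ValueError("need at least 2 studies with matching variances")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    w = [1 / v for v in variances]                 # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = k - 1
    # I^2: percentage of variability beyond what chance alone would produce.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)                  # between-study variance
    w_star = [1 / (v + tau2) for v in variances]   # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    se = sqrt(1 / sum(w_star))
    return {"Q": q, "I2": i2, "tau2": tau2, "pooled": pooled, "se": se}


# Hypothetical program-level NPS estimates and variances (illustrative only).
summary = random_effects_summary([45.0, 12.0, -8.0, 30.0], [60.0, 90.0, 120.0, 75.0])
print({name: round(value, 2) for name, value in summary.items()})
```

An I² near 0% means the spread across studies is consistent with sampling error, whereas values above roughly 75% are often read as high heterogeneity. Because τ² is added to every study's variance, high heterogeneity pulls the random-effects weights toward equality, so small and large studies contribute more evenly to the pooled estimate.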

Data availability

The authors confirm that the data supporting the findings of this study are available within the article and its references.

References

Adedoyin OB, Soykan E (2023) Covid-19 pandemic and online learning: the challenges and opportunities. Interact Learn Environ 31(2):863–875. https://doi.org/10.1080/10494820.2020.1813180

Aguilar OG, Duche Perez AB, Aguilar AG (2020) Teacher performance evaluation model in Covid-19 times. 2020 XV Conferencia Latinoamericana de Tecnologias de Aprendizaje (LACLO), 1–6. https://doi.org/10.1109/LACLO50806.2020.9381159

Aguilar OG, Gutierrez Aguilar A (2020) A model validation to establish the relationship between teacher performance and student satisfaction. 2020 3rd International Conference of Inclusive Technology and Education (CONTIE), 202–207. https://doi.org/10.1109/CONTIE51334.2020.00044

Akin MS (2024) Enhancing e-commerce competitiveness: a comprehensive analysis of customer experiences and strategies in the Turkish market. J Open Innov Technol Market Complex 10(1). https://doi.org/10.1016/j.joitmc.2024.100222

Ali W (2020) Online and remote learning in higher education institutes: a necessity in light of COVID-19 pandemic. High Educ Stud 10(3):16. https://doi.org/10.5539/hes.v10n3p16

Aria M, Cuccurullo C (2017) Bibliometrix: an R-tool for comprehensive science mapping analysis. J Informetr 11(4):959–975. https://doi.org/10.1016/j.joi.2017.08.007

Arksey H, O’Malley L (2005) Scoping studies: towards a methodological framework. Int J Soc Res Methodol: Theory Pract 8(1):19–32. https://doi.org/10.1080/1364557032000119616

Ascarza E, Neslin S, Gupta S, Lemmens A, Libai B, Provost F, Schrift RY (2017) In Pursuit of Enhanced Customer Retention Management. SSRN Electron J. https://doi.org/10.2139/ssrn.2903548

Baehre S, O’Dwyer M, O’Malley L, Lee N (2022a) The use of net promoter score (NPS) to predict sales growth: insights from an empirical investigation. J Acad Mark Sci 50(1):67–84. https://doi.org/10.1007/s11747-021-00790-2

Baehre S, O’Dwyer M, O’Malley L, Story VM (2022b) Customer mindset metrics: a systematic evaluation of the net promoter score (NPS) vs. alternative calculation methods. J Bus Res 149:353–362. https://doi.org/10.1016/j.jbusres.2022.04.048

Becker L, Jaakkola E (2020) Customer experience: fundamental premises and implications for research. J Acad Mark Sci 48(4):630–648. https://doi.org/10.1007/s11747-019-00718-x

Bendle NT, Bagga CK, Nastasoiu A (2019) Forging a stronger academic-practitioner partnership–the case of net promoter score (NPS). J Mark Theory Pract 27(2):210–226. https://doi.org/10.1080/10696679.2019.1577689

Bono R, Núñez-Peña MI, Campos-Rodríguez C, González-Gómez B, Quera V (2024) Sudden transition to online learning: exploring the relationships among measures of student experience. Int J Educ Res Open 6:100332. https://doi.org/10.1016/j.ijedro.2024.100332

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR (2009) Introduction to Meta‐Analysis. Wiley. https://doi.org/10.1002/9780470743386

Chen G, Chen P, Wang Y, Zhu N (2023) Research on the development of an effective mechanism of using public online education resource platform: TOE model combined with FS-QCA. Interact Learn Environ 1–25. https://doi.org/10.1080/10494820.2023.2251038

Dalton JE, Bolen SD, Mascha EJ (2016) Publication bias: the elephant in the review. Anesth Analg 123(4):812–813. https://doi.org/10.1213/ANE.0000000000001596

Davey J, Turner RM, Clarke MJ, Higgins JP (2011) Characteristics of meta-analyses and their component studies in the Cochrane Database of Systematic Reviews: a cross-sectional, descriptive analysis. BMC Med Res Methodol 11(1):160. https://doi.org/10.1186/1471-2288-11-160

Dawes JG (2022) Net promoter and revenue growth: an examination across three industries. Australas Market J. https://doi.org/10.1177/14413582221132039

de Haan E, Verhoef PC, Wiesel T (2015) The predictive ability of different customer feedback metrics for retention. Int J Res Mark 32(2):195–206. https://doi.org/10.1016/j.ijresmar.2015.02.004

Dilan Boztas G, Berigel M, Altinay Z, Altinay F, Shadiev R, Dagli G (2023) Readiness for inclusion: analysis of information society indicator with educational attainment of people with disabilities in European Union Countries. J Chin Hum Resour Manag 14(3):47–58. https://doi.org/10.47297/wspchrmWSP2040-800504.20231403

de Melo Pereira FA, AS Martins Ramos, A Paula; BMK de Oliveira (2015) Continued usage of e-learning: expectations and performance. J Inform Syst Technol Manag 12(2). https://doi.org/10.4301/S1807-17752015000200008

Ferreira P, Antunes FO, Gallo H, Tognon M, Pereira HM (2021) Design teaching and learning in Covid-19 times: an international experience. Commun Comput Inf Sci 1384. Springer, Cham. https://doi.org/10.1007/978-3-030-73988-1_20

Gamarra-Moreno A, Gamarra-Moreno D, Gamarra-Moreno A, Gamarra-Moreno J (2021) Assessing Problem-Based Learning satisfaction using Net Promoter Score in a virtual learning environment. EDUNINE 2021—5th IEEE World Engineering Education Conference: The Future of Engineering Education: Current Challenges and Opportunities, Proceedings. https://doi.org/10.1109/EDUNINE51952.2021.9429104

Gao S, Zhuang J, Chang Y (2021) Influencing factors of student satisfaction with the teaching quality of fundamentals of entrepreneurship course under the background of innovation and entrepreneurship. Front Educ 6. https://doi.org/10.3389/feduc.2021.730616

Gentile C, Spiller N, Noci G (2007) How to sustain the customer experience. An overview of experience components that co-create value with the customer. Eur Manag J 25(5):395–410. https://doi.org/10.1016/j.emj.2007.08.005

Glasziou P, Irwig L, Bain C, Colditz G (2001) Systematic reviews in health care: a practical guide. Cambridge University Press. https://doi.org/10.1017/cbo9780511543500

Grisaffe DB (2007) Questions about the ultimate question: conceptual considerations in evaluating Reichheld’s net promoter score. J Consum Satisf Dissatisf Complain Behav 20:36–53

Hackshaw A (2011) A concise guide to clinical trials. John Wiley & Sons. https://doi.org/10.3949/ccjm.59.5.553-c

Hakeem R, Azhar N, Jahan M (2022) Evaluation of virtual nutrition counseling as a nutrition care and educational tool. Pak J Life Soc Sci 20(1):139–145. https://doi.org/10.57239/PJLSS-2022-20.1.0014

Hamdan KM, Al-Bashaireh AM, Zahran Z, Al-Daghestani A, AL-Habashneh S, Shaheen AM (2021) University students’ interaction, Internet self-efficacy, self-regulation and satisfaction with online education during pandemic crises of COVID-19 (SARS-CoV-2). Int J Educ Manag 35(3):713–725. https://doi.org/10.1108/IJEM-11-2020-0513

Harrison R, Jones B, Gardener P, Lawton R (2021) Quality assessment with diverse studies (QuADS): an appraisal tool for methodological and reporting quality in systematic reviews of mixed- or multi-method studies. BMC Health Serv Res 21(1). https://doi.org/10.1186/s12913-021-06122-y

Hogan JE, Thomas JS, Verhoef PC (2002) Linking customer assets to financial performance. J Serv Res 5(1):26–38

Holz HF, Becker M, Blut M, Paluch S (2023) Eliminating customer experience pain points in complex customer journeys through smart service solutions. Psychol Market. https://doi.org/10.1002/mar.21938

Iqbal M, Dias JM, Sultan A, Raza HA, uz Zaman L (2024) Effectiveness of blended pedagogy for radiographic interpretation skills in operative dentistry - a comparison of test scores and student experiences at an undergraduate dental school in Pakistan. BMC Med Educ 24(1). https://doi.org/10.1186/s12909-024-05062-5

Karlsen K, Nygård C, Johansen LG, Gjevjon ER (2024) In situ simulation training strengthened bachelor of nursing students’ experienced learning and development process—a qualitative study. BMC Nurs 23(1). https://doi.org/10.1186/s12912-024-01771-w

Keiningham TL, Cooil B, Andreassen TW, Aksoy L (2007) A longitudinal examination of net promoter and firm revenue growth. J Mark 71(3):39–51. https://doi.org/10.1509/jmkg.71.3.39

Khan MA, Vivek V, Nabi MK, Khojah M, Tahir M (2020) Students’ perception towards E-learning during COVID-19 pandemic in India: an empirical study. Sustainability 13(1):57. https://doi.org/10.3390/su13010057

Kim H, Fung So KK (2024) Customer touchpoints: Conceptualization, index development, and validation. Tourism Manag 103. https://doi.org/10.1016/j.tourman.2023.104881

Klifto KM, Azoury SC, Muramoto LM, Zenn MR, Levin LS, Kovach SJ (2021) The University of Pennsylvania flap course enters virtual reality: the global impact. Plast Reconstr Surg Glob Open 1–7. https://doi.org/10.1097/GOX.0000000000003495

Kumi‐Yeboah A, Kim Y, Armah YE (2023) Strategies for overcoming the digital divide during the COVID‐19 pandemic in higher education institutions in Ghana. Br J Educ Technol 54(6):1441–1462. https://doi.org/10.1111/bjet.13356

LaSalle D, Britton T (2003) Priceless: turning ordinary products into extraordinary experiences. Harvard Business School Press

Leung JS, Foohey S, Burns R, Bank I, Nemeth J, Sanseau E, Auerbach M (2023) Implementation of a North American pediatric emergency medicine simulation curriculum using the virtual resuscitation room. AEM Educ Train 7(3). https://doi.org/10.1002/aet2.10868

Levac D, Colquhoun H, O’Brien KK (2010) Scoping studies: Advancing the methodology. Implement Sci 5(1):1–9. https://doi.org/10.1186/1748-5908-5-69

Li J, Huang C, Yang Y, Liu J, Lin X, Pan J (2023) How nursing students’ risk perception affected their professional commitment during the COVID-19 pandemic: the mediating effects of negative emotions and moderating effects of psychological capital. Humanit Soc Sci Commun 10(1). https://doi.org/10.1057/s41599-023-01719-6

Liu Z, Kong X, Liu S, Yang Z (2023) Effects of computer-based mind mapping on students’ reflection, cognitive presence, and learning outcomes in an online course. Distance Educ 44(3):544–562. https://doi.org/10.1080/01587919.2023.2226615

Liu Z, Wen C, Su Z, Liu S, Sun J, Kong W, Yang Z (2024) Emotion-semantic-aware dual contrastive learning for epistemic emotion identification of learner-generated reviews in MOOCs. IEEE Transactions on Neural Networks and Learning Systems, 1–14. https://doi.org/10.1109/TNNLS.2023.3294636

Luo JM, Hu Z, Law R (2024) Exploring online consumer experiences and experiential emotions offered by travel websites that accept cryptocurrency payments. Int J Hosp Manag 119. https://doi.org/10.1016/j.ijhm.2024.103721

Matus N, Rusu C, Cano S (2021) Student eXperience: a systematic literature review. Appl Sci 11(20). https://doi.org/10.3390/app11209543

McColl-Kennedy JR, Gustafsson A, Jaakkola E, Klaus P, Radnor ZJ, Perks H, Friman M (2015) Fresh perspectives on customer experience. J Serv Mark 29(6–7):430–435. https://doi.org/10.1108/JSM-01-2015-0054

Millones-Liza DY, García-Salirrosas EE (2022) Dropout of students from a private university institution and their intention to return in COVID-19 times: an analysis for decision making. Cuadernos de Administración 35. https://doi.org/10.11144/Javeriana.cao35.aeiup

Morgan NA, Rego LL (2006) The value of different customer satisfaction and loyalty metrics in predicting business performance. Mark Sci 25(5):426–439. https://doi.org/10.1287/mksc.1050.0180

Moschovis PP, Dinesh A, Boguraev AS, Nelson BD (2022) Remote online global health education among U.S. medical students during COVID-19 and beyond. BMC Med Educ 22(1):1–10. https://doi.org/10.1186/s12909-022-03434-3

Nakwong P, Muttamara A, Soontorn B (2020) NPS better predict online classroom. Int Symp Proj Approaches Eng Educ 10:314–318

Nolan HA, Owen K (2024) Medical student experiences of equality, diversity, and inclusion: content analysis of student feedback using Bronfenbrenner’s ecological systems theory. BMC Med Educ 24(1). https://doi.org/10.1186/s12909-023-04986-8

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hróbjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, … Moher D (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372:n71. https://doi.org/10.1136/bmj.n71

Pardo-Jaramillo S, Gomez MI, Muñoz-Villamizar A, Lleo de Nalda A, Osuna Soto I (2025) Towards sustainable organizations through purpose-driven and customer-centric strategies. AMS Rev. https://doi.org/10.1007/s13162-025-00301-4

Pardo-Jaramillo S, Lleo de Nalda Á, Gómez M, Osuna I (2025) Enhancing corporate sustainability through customer centricity and corporate purpose. Business Strateg Environ. https://doi.org/10.1002/bse.4232

Pardo-Jaramillo S, Muñoz-Villamizar A, Gomez-Gonzalez JE (2023) Unveiling the influence of COVID-19 on the online retail market: a comprehensive exploration. J Retail Consum Serv 75. https://doi.org/10.1016/j.jretconser.2023.103538

Pardo-Jaramillo S, Muñoz-Villamizar A, Osuna I, Roncancio R (2020) Mapping research on customer centricity and sustainable organizations. Sustainability 12(19). https://doi.org/10.3390/su12197908

Patrinos H, Vegas E, Carter-Rau R (2022) An analysis of COVID-19 student learning loss. Policy Research Working Paper 10033. World Bank, Washington, DC, pp 1–31. http://www.worldbank.org/prwp

Pettersson A, Karlgren K, Hjelmqvist H, Meister B, Silén C (2024) An exploration of students’ use of digital resources for self-study in anatomy: a survey study. BMC Med Educ 24(1). https://doi.org/10.1186/s12909-023-04987-7

Pinar M, Wilder C, Luth M, Girard T (2024) Student satisfaction with learning experience and its impact on likelihood recommending university: net promotor score approach. J High Educ Theory Pract 24(8). https://doi.org/10.33423/jhetp.v24i8.7200

Pine BJ II, Gilmore JH (1998) Welcome to the experience economy. Harv Bus Rev 76(4):97–105

Portillo J, Garay U, Tejada E, Bilbao N (2020) Self-Perception of the Digital Competence of Educators during the COVID-19 pandemic: a cross-analysis of different educational stages. Sustainability 12(23):10128. https://doi.org/10.3390/su122310128

Reichheld FF (2003) The one number you need to grow. Harv Bus Rev 81(12):46–54

Roy SK, Gruner RL, Guo J (2022) Exploring customer experience, commitment, and engagement behaviours. J Strateg Mark 30(1):45–68. https://doi.org/10.1080/0965254X.2019.1642937

Sanseau E, Lavoie M, Tay K-Y, Good G, Tsao S, Burns R, Thomas A, Heckle T, Wilson M, Kou M, Kou M, Auerbach M (2021) TeleSimBox: a perceived effective alternative for experiential learning for medical student education with social distancing requirements. AEM Educ Train 5(2). https://doi.org/10.1002/aet2.10590

Sobaih AEE, Hasanein AM, Abu Elnasr AE (2020) Responses to COVID-19 in Higher Education: Social Media Usage for Sustaining Formal Academic Communication in Developing Countries. Sustainability 12(16):6520. https://doi.org/10.3390/su12166520

Tariq Ismail Al-Nuaimi I, Kamil Bin Mahmood A, Elyanee Mustapha E, H.Jebur H (2016) Development of measurement scale for hypothesized conceptual model of e-service quality and user satisfaction relationship. Res J Appl Sci, Eng Technol 12(8):870–884. https://doi.org/10.19026/rjaset.12.2787

Thakker SV, Parab J, Kaisare S (2021) Systematic research of e-learning platforms for solving challenges faced by Indian engineering students. Asian Assoc Open Univ J 16(1):1–19. https://doi.org/10.1108/AAOUJ-09-2020-0078

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, Moher D, Peters MDJ, Horsley T, Weeks L, Hempel S, Akl EA, Chang C, McGowan J, Stewart L, Hartling L, Aldcroft A, Wilson MG, Garritty C, … Straus SE (2018) PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med 169(7):467–473. https://doi.org/10.7326/M18-0850

UNESCO (2020) UN Secretary-General warns of education catastrophe, pointing to UNESCO estimate of 24 million learners at risk of dropping out. https://www.unesco.org/en/articles/un-secretary-general-warns-education-catastrophe-pointing-unesco-estimate-24-million-learners-risk-0

Youssofi A, Jeannot F, Jongmans E, Dampérat M (2023) Designing the digitalized guest experience: a comprehensive framework and research agenda. Psychol Market. https://doi.org/10.1002/mar.21929

Zhang L, Carter RA, Qian X, Yang S, Rujimora J, Wen S (2022) Academia’s responses to crisis: a bibliometric analysis of literature on online learning in higher education during COVID‐19. Br J Educ Technol 53(3):620–646. https://doi.org/10.1111/bjet.13191

Zhao X, Yang M, Qu Q, Xu R, Li J (2022) Exploring privileged features for relation extraction with contrastive student-teacher learning. IEEE Transactions on Knowledge and Data Engineering, 1–1. https://doi.org/10.1109/TKDE.2022.3161584

Bilal, Hysa E, Akbar A, Yasmin F, Rahman A ur, Li S (2022) Virtual learning during the COVID-19 pandemic: a bibliometric review and future research agenda. Risk Manag Healthc Policy 15:1353–1368. https://doi.org/10.2147/RMHP.S355895

Acknowledgements

This work was supported by Universidad de La Sabana (project EICEA-157-2023).

Author information

Contributions

All authors were involved in the conception, design, and development of the study’s methodology, as well as in the analysis and in regular meetings to refine the research. Sergio Pardo-Jaramillo, Daniel Aristizábal Hernández, and Paulo Cabrera Rivera conducted the material preparation, data collection, and drafting of the initial manuscript. Andres Muñoz and Sergio Pardo-Jaramillo also played a major role in incorporating the feedback from all reviewers. All authors reviewed and provided valuable feedback on earlier versions of the manuscript. The final version has been read and approved by all authors.

Ethics declarations

Competing interests

The authors declare no conflict of interest regarding the publication of this research article. We have no financial or personal relationships with individuals or organizations that could inappropriately influence our work. The research was conducted impartially and objectively, aiming to contribute to the scientific knowledge in the field. We confirm that the data presented in this article is accurate and truthful to the best of our knowledge. Furthermore, we have adhered to all ethical guidelines and regulations in conducting this research and reporting its findings. We are committed to upholding the highest standards of integrity and transparency in our work.

Ethical approval

Ethical approval was not required as the study did not involve human participants.

Informed consent

Informed consent was not necessary since the study did not involve human participants.

AI disclosure

While preparing this work, the authors used ChatGPT to assist with grammar corrections and translations. After utilizing this tool, the authors meticulously reviewed and edited the content as necessary and take full responsibility for the content of the publication.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Pardo-Jaramillo, S., Aristizábal-Hernández, D., Cabrera, P. et al. Navigating higher education during COVID-19: a systematic review and meta-analysis of NPS and customer experience in technological adoption. Humanit Soc Sci Commun 12, 1087 (2025). https://doi.org/10.1057/s41599-025-05474-8

DOI: https://doi.org/10.1057/s41599-025-05474-8