Abstract

The widespread use of AI-based applications, such as ChatGPT, in higher education has raised questions about how doctoral students adopt these technologies, particularly in science departments. However, traditional models, such as the Technology Acceptance Model (TAM), may fall short in fully capturing users’ behavioral intentions toward emerging technologies. This study aims to investigate PhD students’ behavioral intention to use ChatGPT for learning purposes by proposing an extended version of the TAM. The proposed model incorporates five additional factors: Social Influence (SI), Perceived Enjoyment (PEN), AI Self-Efficacy (AI-SE), AI’s Sociotechnical Blindness (AI-STB), and Perceived Ethics (AI-PET). Data were collected from 361 PhD students across 35 universities and analyzed using Partial Least Squares Structural Equation Modeling (PLS-SEM). The results reveal that all proposed factors significantly influence students’ behavioral intention to use ChatGPT. This study contributes to the literature by introducing new external variables into the TAM framework and providing empirical insights into doctoral students’ acceptance of AI tools in science education, thereby offering a novel perspective for future research and educational practice.

Introduction

Artificial intelligence (AI) is defined as a broad field that aims to develop systems that mimic human intelligence (Collins et al. 2021). This technology, which offers human-like or more advanced capabilities, has been developed in response to the question “Can machines think?” posed by Alan Turing in the 1950s (Tlili et al. 2023; Taani and Alabidi, 2024). This fundamental question played an important role in the evolution of AI (Adamopoulou and Moussiades, 2020), leading to the development of the first chatbots, such as ELIZA (Weizenbaum, 1966), PARRY (Colby et al. 1971), ALICE (Wallace, 2009), and SmarterChild (Molnar and Szuts, 2018) (Zhou et al. 2024). Over time, the functionality of chatbots has improved significantly, evolving into more complex and intelligent systems that enhance the user experience. Chatbots are now characterized as advanced AI systems that simulate human-like speech through text or voice interactions (Camilleri, 2024). These systems generate natural language responses based on user input using algorithms trained on large data sets (Menon and Shilpa, 2023). In higher education institutions (HEIs), AI and AI tools, especially advanced language models like ChatGPT, play a critical role in teaching and learning processes and are widely discussed (Lo, 2023; Tupper et al. 2024; Lo et al. 2024; Wang et al. 2024c). Artificial Intelligence in Education (AIED) is “transforming teaching and learning processes”, “providing innovative learning experiences,” and “enhancing the effectiveness of educational management” (Duong et al. 2023; Gidiotis and Hrastinski, 2024; Saihi et al. 2024). AIED supports this transformation with functions such as monitoring student performance, identifying individual deficiencies, and providing customized feedback (Montenegro-Rueda et al. 2023; Gidiotis and Hrastinski, 2024; Saihi et al. 2024). Chatbots enhance students’ self-regulation skills (Montenegro-Rueda et al. 2023), provide insights into student behaviors to improve learning outcomes (Kuhail et al. 2023; Alhwaiti, 2023; Liu and Ma, 2024), and play a significant role in the presentation of course content (Taani and Alabidi, 2024).

These technologies support cognitive development (Bhullar et al. 2024), reduce learning anxiety (Chiu et al. 2023), facilitate second language learning (Karataş et al. 2024), and enhance programming skills (Yilmaz and Karaoglan Yilmaz, 2023). In addition, chatbots contribute to developing critical thinking and writing skills (Tupper et al. 2024), and career planning (Bhutoria, 2022). However, the use of AI chatbots in education also raises ethical, moral, and security issues (Stahl and Eke, 2024). Trustworthy AI should be based on principles such as human oversight, transparency, non-discrimination, and accountability (Díaz-Rodríguez et al. 2023). AIED applications face challenges such as ethical issues, inadequate evaluation, and user attitudes (Okonkwo and Ade-Ibijola, 2021), and non-transparent decision-making processes (Bathaee, 2018). These issues may limit the credibility and use of AI. In addition, the overuse of AI raises concerns that it may negatively affect users’ independent thinking skills (Qin et al. 2020). Despite these concerns, AI technologies can potentially revolutionize the academic world through innovative applications in education (Rawas, 2024).

ChatGPT, a large language model (LLM) based chatbot developed by OpenAI, has brought a critical perspective to teaching and learning practices in higher education (Bhullar et al. 2024) and has gained an important place (Habibi et al. 2023). The ChatGPT model, released in May 2024, stands out for its features that enable more natural and fluent communication in human-computer interaction (OpenAI, 2024). ChatGPT is a robust and reliable Natural Language Processing (NLP) model that can understand and generate natural language with high accuracy in many domains, such as text generation, language comprehension, and development of interactive applications (Gill and Kaur, 2023). The model’s competencies in contextual understanding, language generation, task adaptability, and multilingualism provide significant advances in the field of GenAI (Ray, 2023).

The impact of tools such as AI and ChatGPT on the learning processes of HEI students is increasingly being explored (Lo, 2023; Strzelecki, 2023; Tayan et al. 2024; Bhullar et al. 2024; Lo et al. 2024). GenAI tools that offer quality human-like interactions, especially large language models such as ChatGPT, are found to be more useful and acceptable to learners. As Lin and Yu (2025a) state, “the perceived trustworthiness and human-like features of AI-generated pedagogical agents have a significant impact on students’ perceived usefulness and intention to use” (p. 1620). Several studies have examined university students’ behavioral intentions regarding using artificial intelligence tools in learning and developed policies and strategies using different models (Dahri et al. 2024; Al Shloul et al. 2024; Grassini et al. 2024). Conceptual models are used to explain individuals’ acceptance of new technologies. These models are generally based on individuals’ attitudes, intentions, and behaviors (Davis, 1989). The Technology Acceptance Model (TAM) is a widely utilized theoretical framework in the academic literature to elucidate the factors influencing individuals’ technology acceptance and the processes through which they accept new technologies (Davis and Venkatesh, 1996; Almogren et al. 2024; Saihi et al. 2024). TAM is considered an approach that provides researchers with important information to predict users’ behavioral intentions, especially regarding technology-use decisions (Marangunić and Granić, 2015). However, due to the multidimensional nature of behavioral intentions toward AI technologies (Bergdahl et al. 2023; Zhang et al. 2024b), it is suggested in the literature that TAM alone is not an ideal structure for determining user acceptance (Schepman and Rodway, 2020). In addition, some psychological factors may play a more dominant role in accepting AI than in accepting other technologies (Bergdahl et al. 2023). It can be concluded that new external factors still need to be considered to explain users’ behavioral intentions. Furthermore, there are very few studies on whether and how students accept ChatGPT for learning purposes. Specifically, there is a significant gap in the literature regarding how PhD students adopt these technologies. While AI-based chatbots have shown great potential in writing, little is known about whether and how PhD students accept the use of ChatGPT (Zou and Huang, 2023).

In light of the consideration given above, the motivation of this study comes from extending the TAM by jointly including new external factors such as Social Influence (SI), Perceived Enjoyment (PEN), AI Self-Efficacy (AI-SE), AI’s Sociotechnical Blindness (AI-STB), and AI Perceived Ethics (AI-PET) in addition to the factors of Perceived Usefulness (PU), Perceived Ease of Use (PEOU), and Behavioral Intention to Use (BI) already present in the TAM. Additionally, this study investigates the behavioral intentions of PhD students in natural sciences toward ChatGPT using the proposed model. In the application part, 361 PhD students from various scientific fields in natural sciences at 35 universities participated in the study. The data were analyzed using PLS-SEM.

This study offers a novel contribution by extending the TAM with AI-specific sociotechnical and ethical constructs to explain doctoral students’ behavioral intention to use ChatGPT for teaching and learning in science education.

Study objectives

This study aims to achieve the following objectives:

1. To analyze the impact of these newly proposed external factors on the behavioral intention of PhD students in the natural sciences toward adopting ChatGPT.

2. To develop and test an extended version of the TAM that includes Social Influence (SI), Perceived Enjoyment (PEN), AI Self-Efficacy (AI-SE), AI’s Sociotechnical Blindness (AI-STB), and AI Perceived Ethics (AI-PET) to explain PhD students’ behavioral intention to use ChatGPT for learning purposes.

ChatGPT in education

ChatGPT, with its human-like text generation and natural responsiveness (Jo and Park, 2024), transforms traditional teaching methods and provides students with personalized learning experiences (Tlili et al. 2023; Grassini et al. 2024). The main motivations for using ChatGPT among university students include quick response, ease of use, and accessibility (Klimova and de Campos, 2024). The use of ChatGPT in different disciplines varies depending on students’ familiarity with technology and perceived benefits (Romero-Rodríguez et al. 2023). ChatGPT and AI tools are found to be used more intensively by students in engineering, mathematics, and science fields due to their proximity to technology (von Garrel and Mayer, 2023). ChatGPT is gaining popularity in computer science and engineering, especially for skills such as writing, modifying, and debugging code (Geng et al. 2023; Kosar et al. 2024; Lo et al. 2024). In science, ChatGPT has a significant impact on knowledge analysis and understanding of complex concepts. ChatGPT responses to chemistry questions provide students with a better understanding of the topics (Fergus et al. 2023; Guo and Lee, 2023), while civil engineering students report that ChatGPT responses are more comprehensive and informative (Uddin et al. 2024). In modern statistics education, ChatGPT has been observed to help students with technical subjects such as R programming (Ellis and Slade, 2023; Xing, 2024). However, in subjects such as geometry, it has been reported that ChatGPT does not have a deep enough understanding, that it has difficulty correcting misconceptions, and that its accuracy can vary depending on the complexity of the prompts given (Wardat et al. 2023).

The generative and iterative processes associated with artificial intelligence generated content (AIGC) require learners not only to evaluate the utility of these technologies but also to align their cognitive strategies with the ever-evolving capabilities of AIGC (Wang et al. 2025). Wang et al. (2025) stated that students need to interact with the outputs of generative AI tools such as ChatGPT, critically evaluate and reprocess these outputs; this process requires them to restructure their cognitive strategies in line with the technology.

The positive and negative effects of ChatGPT in higher education have been discussed with increasing interest in the academic world in recent years (Lo, 2023; Strzelecki, 2023; Tayan et al. 2024; Lo et al. 2024). Although ChatGPT has many potential benefits, such as enhancing the learning experience, speeding up administrative tasks (Tayan et al. 2024), increasing student engagement, and supporting adaptive learning processes (Wang et al. 2024b), research on the factors that affect the use of this technology and the causal relationships between these factors is limited (Baig and Yadegaridehkordi, 2024). Students’ acceptance and use of ChatGPT are affected by factors such as perceived usefulness, perceived convenience, and familiarity with the technology. Furthermore, considering gender as an important variable in technology use (Gefen and Straub, 1997; Venkatesh and Morris, 2000; Arthur et al. 2025), research suggests that male students are more likely to use AI chatbots (Bastiansen et al. 2022; Stöhr et al. 2024).

In addition to its benefits, ChatGPT raises important ethical concerns such as social justice, individual autonomy, cultural identity, and environmental issues (Stahl and Eke, 2024). One of these concerns is that biased or outdated information in the datasets used for training ChatGPT may reproduce social and cultural biases, which may reduce the credibility of academic research (Farrokhnia et al. 2024). In the academic community, the ethical dimensions of ChatGPT are increasingly being debated, with issues such as the accuracy of the content, the impact on academic integrity, and the ethical use of AIED being among the main concerns (Cooper, 2023). Also, the high learning value provided by generative AI may increase the tendency of university students to move away from processes that require cognitive effort, and this may lead to a decrease in students’ willingness to use independent thinking skills over time (Tang et al. 2025).

Especially in thesis writing, exams, and assignments, the production of unverified content without citing sources or using original thinking (Acosta-Enriquez et al. 2024a) increases the risk of plagiarism and causes serious problems in terms of academic integrity (Lo, 2023; Chaudhry et al. 2023). The ease of use that ChatGPT offers in tasks ranging from text generation to coding (Stojanov, 2023) may lead students to use information directly without engaging their analytical thinking and decision-making skills (Abbas et al. 2024). This leads students to neglect the skills of content validation, critical evaluation, and ethical behavior, resulting in a superficial approach to knowledge and a lack of academic integrity. The risk of producing false or biased information misleads students and undermines trust in educational processes (Rahimi and Talebi Bezmin Abadi, 2023; Qadir, 2023).

ChatGPT misuse threatens academic integrity in higher education (Memarian and Doleck, 2023; Geerling et al. 2023; Espartinez, 2024), raising issues of plagiarism, academic dishonesty (Farhi et al. 2023), accountability, and confidentiality. However, “over-reliance on ChatGPT” and its potential consequences should be considered multidimensionally. Although ChatGPT increases student engagement in the learning process, its unconscious or excessive use has been reported to lead to cognitive overload (Woo et al. 2024). Unconscious use of this technology can negatively impact students’ critical thinking and problem-solving skills (Kasneci et al. 2023); students may miss opportunities to develop their knowledge and skills by focusing on academic success with pre-determined answers (Tlili et al. 2023). This situation may increase students’ reliance on technology, which in turn can reduce their capacity for independent thinking and problem-solving over time (Zhang et al. 2024a; Zhai et al. 2024).

Theoretical framework and research hypotheses

Technology acceptance model

The integration of new technologies, especially AI technologies and tools, in the field of education and training (Elbanna and Armstrong, 2024) is receiving increasing attention. The education system has a large audience of students who can use AI technologies in the process of knowledge transfer and acquisition. Consequently, understanding how users accept AI technologies and analyzing the adoption processes are critically important for the effective use of these technologies in an educational context. The TAM is a powerful model (Sagnier et al. 2020; Lim and Zhang, 2022) that has been widely used to study the acceptance of AI technologies and provides a comprehensive framework for understanding users’ cognitive and behavioral responses to technologies (Granić and Marangunić, 2019).

TAM, developed by Davis (1989), is considered one of the most effective models for examining what triggers users’ adoption of technology (Koenig, 2024). TAM was developed to understand the relationship between the characteristics of a technology and users’ adoption of that technology, and it emphasizes the mediating role of two key factors in this process, namely PEOU and PU (Marangunić and Granić, 2015). These factors are important determinants shaping users’ cognitive and behavioral responses to technology.

TAM reflects users’ individual preferences for technology use; however, for complex technologies such as AI, factors beyond individual preferences, such as social norms and others’ decisions (Schepman and Rodway, 2020, p. 11), as well as various psychological factors (Bergdahl et al. 2023), play an important role. Sohn and Kwon (2020) argue that TAM alone may not be sufficient to explain innovative technologies, especially AI-based applications. Although TAM has been criticized for its repetitive use, it remains a valid and widely applied framework, especially when extended with context-specific variables. In this study, TAM was enriched with AI-related constructs to better reflect the dynamics of generative AI adoption, offering a simplified yet robust model suitable for the research context. While UTAUT and UTAUT2 offer comprehensive frameworks for understanding technology acceptance, their structural complexity and reliance on multiple moderating variables may limit their applicability in studies aiming for model parsimony and context-specific insights. Therefore, the extended TAM framework has been used in many studies to predict student acceptance of AI technologies and ChatGPT (Al-Adwan et al. 2023; Baig and Yadegaridehkordi, 2024; Saihi et al. 2024). Important studies examining university students’ use of AI tools and ChatGPT within the TAM framework are given in Table 1 below.

Studies examining HEI students’ intentions and behaviors toward the use of ChatGPT have not been limited to TAM; other technology acceptance models, such as UTAUT2 with modifications, have also been used (Foroughi et al. 2023; Habibi et al. 2023; Strzelecki, 2023; Bouteraa et al. 2024; Arthur et al. 2025). As shown in Table 1, the theoretical framework of the classical TAM model has been extended by adding various exogenous factors according to the characteristics of the studies. Al-Abdullatif (2023) combined the relationships between the constructs of TAM and the value-based model (VAM) to examine how students perceive and accept chatbots in their learning process. The results showed that PU, PEOU, attitude, perceived enjoyment, and perceived value were the determinants of students’ acceptance of chatbots. However, the perceived risk variable did not significantly affect students’ attitudes or acceptance of using chatbots for learning. Lai et al. (2023), in a study conducted with 50 undergraduate students from eight universities in Hong Kong, examined the role of intrinsic motivation in the intention to use ChatGPT and added only the intrinsic motivation variable to the classical TAM model. The research results show that PU strongly predicts behavioral intention (BI), but there is no significant relationship between PEOU and BI. The results also show that PU and PEOU do not significantly mediate the relationship between intrinsic motivation and BI.

Zou and Huang (2023) examined PhD students’ acceptance of ChatGPT in writing and the factors influencing it within the framework of the TAM; they found a high intention among PhD students in China to use ChatGPT in writing. Bilquise et al. (2024) combined traditional TAM and UTAUT models with AI-driven self-service technology models and service robot acceptance models (sRAM) to examine UAE university students’ acceptance of ChatGPT for academic support within the Self Determination Theory (SDT) framework. Similar to previous research, this study shows that PU, PEOU, trust, and social efficacy are important factors in acceptance. This reemphasizes the importance of the combined influence of individual and social factors in AI-based technologies. Similarly, Saihi et al. (2024) emphasized that PU and perceived efficacy factors have strong positive effects on the intention to adopt AI chatbots, but concerns about “trust and privacy,” “interaction,” and “information quality” are evident. Sallam et al. (2024), in their study conducted in higher education institutions in the United Arab Emirates, found that students’ positive attitudes towards the use of ChatGPT were associated with gender (male), Arab origin, low GPA and PU, low perceived risk of use, and high cognitive structures and PEOU factors. These findings suggest that individual demographic characteristics and perceptual factors may determine acceptance of AI-based technologies. Almogren et al. (2024), in a study conducted with university students in Saudi Arabia, added seven exogenous factors to the TAM model and found that PEOU and PU were important determinants in explaining attitudes toward ChatGPT. The study showed that subjective norms did not significantly affect attitudes toward the educational use of ChatGPT. The results emphasize the importance of individual perceptions in technology acceptance by showing that individual perceptions, ease of use, and feedback quality are more effective than social pressure.

Liu and Ma (2024), in their study of EFL (English as a foreign language) students, found that perceived enjoyment (PEN) and attitude were strong determinants of ChatGPT adoption; however, PEOU did not directly affect attitude. Nevertheless, PEOU significantly affected PU, which mediated users’ development of positive attitudes and behaviors toward the technology. These findings suggest that improving the user experience can promote the adoption of ChatGPT in educational settings. In a study conducted with 108 students from public and private universities in Vietnam, it was found that PEOU influenced intention to accept ChatGPT, while PU had no direct effect but an indirect effect through personalization and interactivity (Maheshwari, 2024). This finding suggests that personalizing the user experience and providing interactive elements can increase the acceptance of AI-based tools like ChatGPT. Boubker (2024) found that in the acceptance of ChatGPT by students of higher education institutions in Morocco, output quality impacts PU, usage, and student satisfaction, and social influence also plays an important role in this process. Chang et al. (2024) investigated the differences between students with weak and strong digital AI skills, using a latent class model to assess the digital AI skills of college students. The results showed that attitude and perceived behavioral control played important roles in ChatGPT acceptance; furthermore, subjective norms were also effective in ChatGPT acceptance among students with strong AI digital abilities. These findings indicate that digital literacy is a critical factor in the technology adoption process. Güner et al. (2024), in their study of higher education students in Turkey, found that perceived enjoyment had a positive effect on PU, PEOU, and attitude toward ChatGPT use; however, personal innovativeness had a limited effect on attitude.

In this study, similar to the literature (see Table 1), an extended TAM model was used to predict the acceptance of ChatGPT by PhD students in the field of science. In the extended model, in addition to the PU and PEOU variables of the classical TAM, the added factors were structured according to two main categories:

External predictors: The external predictors used to predict perceived usefulness and perceived ease of use include AI’s Sociotechnical Blindness (AI-STB), AI Perceived Ethics (AI-PET), and AI Self-Efficacy (AI-SE).

Factors from other theories: To increase the predictive validity of TAM, Social Influence (SI) and Perceived Enjoyment (PEN) factors were included.

SI and PEN increase users’ motivation to use the technology, while AI-SE increases users’ trust in technology. AI-STB indicates that users can ignore the possible negative effects of AI, while AI-PET stands out as a critical factor for users to develop trust in AI technology. The extended model proposed in this study is illustrated in Fig. 1.

Hypotheses development

Social Influence (SI)

Social Influence (SI), which plays an important role in the early acceptance of new technologies (Venkatesh et al. 2003), is one of the determining factors in using AI technologies for learning purposes. The Theory of Reasoned Action (TRA), one of the main theoretical underpinnings of the original development of TAM, addresses social influence through subjective norm (Venkatesh and Davis, 2000). Subjective norm is defined by Ajzen and Fishbein (1980) as “a person’s perception that most people important to him or her think that he or she should or should not perform a particular behavior” (Venkatesh and Davis, 2000, p. 187). Venkatesh and Davis (2000) found that SI significantly affects perceived usefulness through internalization when people incorporate social influences into their perceptions of usefulness and use a system to improve their job performance. In this study, SI was defined as graduate students’ beliefs about the encouragement and support they receive from those around them (faculty members, peers, etc.) regarding the use of ChatGPT (Ayaz and Yanartaş, 2020; Venkatesh, 2022). Various studies (Algerafi et al. 2023; Zhang et al. 2023; Boubker, 2024; Dahri et al. 2024; Albayati, 2024; Abdalla, 2024) have emphasized that SI has an effect on PU. Consequently, it is assumed that graduate students’ beliefs about the usefulness of ChatGPT are positively influenced by their social environment.

H1: SI positively influences the PU of ChatGPT.

Perceived Enjoyment (PEN)

PEN, which influences the acceptance of new technologies as an intrinsic motivator (Davis et al. 1992), refers to users’ beliefs that they can enjoy using a particular technology (Venkatesh, 2000). The positive effect of perceived enjoyment of learning technology on PEOU and BI has been confirmed in studies in the literature (Zhou et al. 2022; Algerafi et al. 2023; Zhang et al. 2023; Güner et al. 2024; Dehghani and Mashhadi, 2024; Abdalla, 2024). In this framework, it is expected that there is a strong relationship between PEN and PEOU (Davis et al. 1992) for PhD students using ChatGPT as a learning tool.

H2: PEN positively influences the PEOU of ChatGPT.

AI Self-Efficacy (AI-SE)

Self-efficacy, which expresses an individual’s belief in their ability to succeed in a specific task (Bandura, 1982), in the AIED perspective, refers to the general beliefs about an individual’s ability to interact with AI, successfully learn AI concepts and tools, and apply them (Wang and Chuang, 2024; Kim and Kim, 2024). AI-SE is considered a strong predictor of learning and success (Ng, 2023). It has been reported in many studies that individuals with high self-efficacy perceive the use of new technologies as easier (Granić, 2023; Al Darayseh, 2023; Algerafi et al. 2023; Al-Adwan et al. 2023; Zhang et al. 2023; Güner et al. 2024; Pan et al. 2024). It has been assumed that PhD students have a high AI-SE due to their educational level; therefore, their perceptions of the ease of use of ChatGPT would be higher.

H3: AI-SE positively influences the PEOU of ChatGPT.

Perceived AI Ethics (AI-PET)

Ethics is a moral principle determining the rightness or wrongness of specific actions (Raghunathan and Saftner, 1995). Ethics in the context of AIED refers to beliefs regarding the moral and ethical implications of using AI-based learning tools for academic purposes (Acosta-Enriquez et al. 2024b). AI-PET significantly influences students’ behavioral intentions to accept and integrate ChatGPT into learning environments (Khechine et al. 2020; Farhi et al. 2023; Salifu et al. 2024), while severe ethical concerns negatively impact these behavioral intentions (Shao et al. 2024). Therefore, it is anticipated that PhD students who find the use of AI tools for learning purposes ethical will find the use of ChatGPT easy and beneficial.

H4: AI-PET will be positively associated with the PEOU of ChatGPT.

H5: AI-PET will be positively associated with the PU of ChatGPT.

AI’s Sociotechnical Blindness (AI-STB)

It has been emphasized in various studies that the stress and anxiety caused by students’ use of educational technologies in higher education have become an increasingly important problem (Lin and Yu, 2025b). “AI anxiety” (AI-ANX), in its simplest definition, expresses individuals’ fears and concerns about uncontrolled AI (Johnson and Verdicchio, 2017a, b). AI-ANX is seen as a significant precursor belief (Wang and Wang, 2022) influencing attitudes and external factors that determine behavioral intentions to accept AI tools (Chan, 2023; Wang et al. 2024b). Unlike other technological concerns, AI-ANX triggers, such as confusion regarding autonomy and sociotechnical blindness (Johnson and Verdicchio, 2017a, b, 2024), are being discussed more extensively. Wang and Wang (2022) defined the AI-ANX dimensions as learning, job replacement, sociotechnical blindness, and AI configuration. Sociotechnical blindness refers to an excessive focus on AI programs that obscures people’s fundamental role at every stage of designing and implementing AI systems (Johnson and Verdicchio, 2017a). In this study, it is assumed that PhD students’ beliefs that ChatGPT may be misused, malfunction, or develop consciousness and surpass human control will have a negative impact on their PEOU and PU.

H6: AI-STB negatively influences the PEOU of ChatGPT.

H7: AI-STB negatively influences the PU of ChatGPT.

Perceived Ease of Use (PEOU)

PEOU refers to how easy it is perceived to use and learn a technology (Davis, 1989). The perception of ease of use enables individuals to find technology useful and increases their intention to use it (Venkatesh et al. 2003). The impact of PEOU on PU and BI regarding AI acceptance and adoption has been demonstrated in numerous studies (Alyoussef, 2023; Algerafi et al. 2023; Zhang et al. 2023; Pang et al. 2024; Shao et al. 2024; Almogren et al. 2024; Almulla, 2024; Abdalla, 2024). In this context, it is expected that doctoral students who perceive ChatGPT as easy to use will have a high perceived usefulness and stronger behavioral intentions.

H8: PEOU positively influences the PU of ChatGPT.

H9: PEOU positively influences BI to use ChatGPT.

Perceived Usefulness (PU)

PU reflects individuals’ beliefs that using a particular system will enhance their job performance (Davis et al. 1989; Davis, 1989). PU significantly influences behavioral intentions as a strong determinant in the technology adoption process (Venkatesh et al. 2012; Alyoussef, 2023; Algerafi et al. 2023; Zou and Huang, 2023; Zhang et al. 2023; Güner et al. 2024; Pan et al. 2024; Abdalla, 2024). It is expected that PhD students’ beliefs in the benefits of ChatGPT for learning will significantly influence their intention to use this technology.

H10: PU positively influences BI to use ChatGPT.

When the research hypotheses presented above are considered in their entirety, it is anticipated that the direct, indirect, and total effects between the five exogenous variables (SI, PEN, AI-SE, AI-STB, and AI-PET) incorporated into the model and the two endogenous variables (PU and PEOU) representing mediation will provide the most comprehensive explanation of the behavioral intentions of PhD students about the use of ChatGPT. The study’s conceptual framework, shown in Fig. 1, was created based on the research hypotheses defined above.
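To make the structure of the hypothesized model easier to inspect, the ten hypotheses can be written down as a small directed graph and the routes from each exogenous factor to BI enumerated. The following is only an illustrative sketch: the names `HYPOTHESES`, `build_graph`, and `paths_to` are hypothetical helpers introduced here, not part of the study's analysis, and the code encodes only the direction and expected sign of each path as stated in H1 to H10 (no estimated coefficients).

```python
from collections import defaultdict

# Hypothesized paths as stated in H1-H10: (source, target, expected sign).
HYPOTHESES = {
    "H1": ("SI", "PU", "+"),
    "H2": ("PEN", "PEOU", "+"),
    "H3": ("AI-SE", "PEOU", "+"),
    "H4": ("AI-PET", "PEOU", "+"),
    "H5": ("AI-PET", "PU", "+"),
    "H6": ("AI-STB", "PEOU", "-"),
    "H7": ("AI-STB", "PU", "-"),
    "H8": ("PEOU", "PU", "+"),
    "H9": ("PEOU", "BI", "+"),
    "H10": ("PU", "BI", "+"),
}

EXOGENOUS = ["SI", "PEN", "AI-SE", "AI-PET", "AI-STB"]


def build_graph(hypotheses):
    """Turn the hypothesis table into an adjacency list (source -> targets)."""
    graph = defaultdict(list)
    for src, dst, _sign in hypotheses.values():
        graph[src].append(dst)
    return graph


def paths_to(graph, start, goal):
    """Return every directed path from start to goal (the model is acyclic)."""
    if start == goal:
        return [[goal]]
    found = []
    for nxt in graph.get(start, []):
        for tail in paths_to(graph, nxt, goal):
            found.append([start] + tail)
    return found


graph = build_graph(HYPOTHESES)
routes = {factor: paths_to(graph, factor, "BI") for factor in EXOGENOUS}

for factor, found in routes.items():
    for path in found:
        print(factor, ":", " -> ".join(path))
```

Because none of the exogenous factors is hypothesized to act on BI directly, every route printed here passes through PEOU, PU, or both, which is what the text means by PU and PEOU "representing mediation".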

Research method

Research design and sample

This study adopted a three-phase research design to minimize sampling bias and ensure the sample’s representativeness. In the first phase, the target population was defined as doctoral students in the natural sciences (mathematics, physics, chemistry, biology, and statistics) at 35 universities in Türkiye. These universities, scoring between 20 and 60 points in the national TÜBİTAK university ranking (TÜBİTAK, 2023), were selected because they are known for high-quality education and are typically preferred by students who are more successful in university entrance exams and more interested in technology and artificial intelligence. In the second phase, a Python tool developed specifically for this research was used to collect information (title, field, email) about academic staff working in the natural sciences at these 35 universities from the YÖKAKADEMIC (YÖK, 2024a) database (a central system containing information and resumes of academic staff). These academic staff members were contacted via email and asked to provide the email addresses of their doctoral students. The email addresses sent by the advisors were then listed in random order in a document, each with a line number. In the third phase, a Python tool was developed to generate uniform random numbers between 1 and 651. The entries whose line numbers matched the generated random numbers were selected as sampling units, i.e., the sample was drawn according to the rules of random sampling (De Muth, 2014, pp. 43–48).
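
The third-phase draw can be illustrated with a minimal Python sketch. It is not the authors’ tool: the function name, the seed, and the number of units drawn (10) are hypothetical, and drawing without replacement is an assumption. Only the range 1 to 651 comes from the text.

```python
import random
from typing import List, Optional


def draw_sampling_units(population_size: int, n: int,
                        seed: Optional[int] = None) -> List[int]:
    """Draw n distinct line numbers uniformly at random from 1..population_size."""
    if not 0 <= n <= population_size:
        raise ValueError("n must be between 0 and population_size")
    rng = random.Random(seed)  # local generator, so the draw is reproducible
    # random.sample draws without replacement: no line number is selected twice
    return sorted(rng.sample(range(1, population_size + 1), n))


# Hypothetical usage: 651 listed email addresses, 10 sampling units
selected = draw_sampling_units(651, 10, seed=2024)
print(selected)
```

Fixing the seed makes the selection auditable, since the same list of line numbers can be regenerated later.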

As a result, 402 randomly selected PhD students completed the online survey between May and June 2024. However, 27 participants reported not using any AI tools, and 14 did not complete the survey questions; therefore, the final sample consisted of 361 PhD students. This sample size exceeds the minimum of 275 required by the inverse square root method of Kock and Hadaya (2018) to detect a minimum path coefficient of 0.15 with a 5% error probability (Sarstedt et al. 2021, p. 29). During the data collection process, no incentives were offered to participants; participation was entirely voluntary and conducted in accordance with ethical guidelines. The demographic characteristics of the participants are presented in Table 2.
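
The minimum sample size quoted above can be reproduced with a short sketch of the inverse square root method, where the required n satisfies n > (c / |β_min|)². The constants below assume 80% statistical power, which the text does not state explicitly; the function and variable names are illustrative.

```python
import math

# Constants of the inverse square root method, assuming 80% power;
# keys are significance levels (assumption: the study used the 5% row).
ISR_CONSTANTS = {0.01: 3.168, 0.05: 2.486, 0.10: 2.123}


def min_sample_size(min_path_coefficient: float, alpha: float = 0.05) -> int:
    """Smallest integer n with n > (c / |beta_min|)**2."""
    c = ISR_CONSTANTS[alpha]
    return math.floor((c / abs(min_path_coefficient)) ** 2) + 1


required = min_sample_size(0.15)
print(required)         # 275
print(361 >= required)  # True: the final sample exceeds the minimum
```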

Data collection

Research instruments

The study data were collected through a structured questionnaire consisting of two parts. The first part of the questionnaire included questions about the demographic characteristics of the participants, while the second part included scale items to assess doctoral students’ behavioral intentions to use ChatGPT technology for educational purposes. These items were adapted from previously validated studies within the TAM framework and restructured to fit the context of the study. The second part of the questionnaire includes the following constructs: SI (4 items, Venkatesh et al. 2003), PEN (4 items, Venkatesh and Bala, 2008), AI-SE (4 items, Şahin et al. 2022; Salloum et al. 2019), AI-PET (5 items, Malmström et al. 2023), AI-STB (4 items, Y.-Y. Wang and Wang, 2022), PEOU (4 items, Davis, 1989), PU (4 items, Davis, 1989) and BI (3 items, Venkatesh and Davis, 2000). The survey was designed using a 5-point Likert scale and was arranged to be completed within 15–20 min.

Pre-testing

To ensure the content validity of the questionnaire, the opinions of four academics who are experts in the field of AIED were obtained. In line with the expert feedback, conceptual clarity was ensured, wording was improved, and the order of the items was rearranged. A pilot study was then conducted with 15 PhD students in the natural sciences to assess the comprehensibility, applicability, and contextual validity of the scale. This pre-testing process is in line with the good practices recommended to increase the validity of questionnaire applications, especially in the context of new learning technologies (Wang et al. 2024a). The questionnaire was finalized in light of the feedback from the pilot study. Detailed survey items and references for each construct are presented in Appendix 1.

Data analysis

This exploratory study focuses on explaining the effects of the constructs in the conceptual model and the causal relationships among them in order to predict PhD students’ BI to use ChatGPT. In testing the structural relationships, the data did not meet the multivariate normality assumption (Mardia’s MVN test, p < 0.001). For this reason, and given the model’s complexity and exploratory nature, the PLS-SEM method was used to test the proposed conceptual framework, and the analyses were conducted using SmartPLS (v.4.1.0.8). PLS-SEM is preferred for understanding theoretical models when the data do not meet the assumption of normal distribution, the model includes formative constructs, and there are higher-order constructs. For these reasons, PLS-SEM, a multivariate analysis technique (Hair et al. 2021), was used to examine both the measurement and structural models.

Results

In this study, a two-stage method comprising measurement model analysis and structural model analysis was used to evaluate the extended TAM. In the first stage, the measurement model was analyzed to examine the validity and reliability of the model (Hair et al. 2019). In the second stage, the structural model was analyzed to evaluate the research hypotheses.

Measurement model evaluation

The measurement model was evaluated based on indicator reliability, internal consistency reliability, convergent validity, and discriminant validity (Hair et al. 2021). Table 3 shows the constructs, items, factor loadings, Cronbach’s alpha (CA, α), composite reliability (CR), average variance extracted (AVE), and Variance Inflation Factor (VIF) values from the measurement model.

Convergent validity

In examining the validity and reliability of the measurement model, the standardized factor loadings of all items were examined first; all loadings were greater than 0.60 and statistically significant (p < 0.001). This result was interpreted as an indication of high convergent validity (MacCallum et al. 2001; Hair et al. 2019). For scale reliability, the CA (α) and CR values are expected to be above 0.70 (Hair et al. 2019) and the AVE value greater than 0.50 (Fornell and Larcker, 1981; Hair et al. 2019). All latent constructs had CA (α) values (range 0.782–0.876), CR values (range 0.791–0.883), and AVE values (range 0.605–0.730) above these thresholds (Table 3), so the measurement model’s reliability, construct reliability, and convergent validity were at an acceptable level. This result provides evidence for the accuracy and consistency of the constructs included in the model (Hair et al. 2021).
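
As a rough illustration of how AVE and composite reliability follow from standardized loadings, consider the sketch below. The loadings are hypothetical and are not taken from Table 3. The CR formula shown is the common rho_c variant, and it is an assumption that this matches the CR reported by the software used in the study.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized loadings."""
    total = sum(loadings)
    # Error variance of a standardized indicator is 1 - loading**2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical loadings for a four-item construct
example_loadings = [0.80, 0.80, 0.80, 0.80]
ave = average_variance_extracted(example_loadings)
cr = composite_reliability(example_loadings)
print(round(ave, 3), round(cr, 3))  # 0.64 0.877
print(ave > 0.50 and cr > 0.70)     # True: both thresholds are met
```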

Additionally, collinearity in the measurement model was examined statistically using the VIF. The VIF quantifies the degree of collinearity among the indicators in a formative measurement model and among the predictor constructs in the structural model, and VIF values of 3 and above are considered indicative of multicollinearity (Sarstedt et al. 2021). As Table 3 shows, the VIF values for all items range from 1.430 to 2.842, so it is concluded that there is no multicollinearity among the items.
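
For readers unfamiliar with the statistic, the VIF of variable j equals 1 / (1 − R_j²), where R_j² comes from regressing variable j on the remaining variables; equivalently, it is the j-th diagonal element of the inverse correlation matrix. The sketch below uses that second form with a small Gauss-Jordan inversion so that it needs no external libraries. The correlation matrix is hypothetical, not the study’s data.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif_from_correlations(corr):
    """VIF of each variable: diagonal of the inverse correlation matrix."""
    inverse = invert(corr)
    return [inverse[i][i] for i in range(len(corr))]


# Hypothetical correlation matrix of two indicators (r = 0.6)
vifs = vif_from_correlations([[1.0, 0.6], [0.6, 1.0]])
print([round(v, 4) for v in vifs])  # [1.5625, 1.5625]
print(all(v < 3 for v in vifs))     # True: below the threshold of 3
```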

Discriminant validity

After convergent validity had been assessed for all items in the model, the discriminant validity of the measurement model was analyzed. Discriminant validity refers to “the high loading of a scale’s items on their constructs and their differentiation from other constructs” (Hair et al. 2019, p.9). The study assessed discriminant validity using the Heterotrait-Monotrait (HTMT) ratio and the Fornell-Larcker Criterion (FLC). An HTMT value below 0.90 between two latent constructs (Hair et al. 2019) indicates that discriminant validity is achieved between them. The results given in Table 4 show that all HTMT values between the latent constructs are below 0.90. The FLC, on the other hand, demonstrates discriminant validity when the square root of a construct’s AVE is greater than its correlations with the other constructs (Hair et al. 2019, p. 10). According to the FLC results presented in Table 5, the square root of each latent construct’s AVE was greater than its correlations with the other constructs. These findings indicate that the measurement model achieves discriminant validity.
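
Both criteria can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the software’s implementation: the item names, AVE values, and correlations are hypothetical, all correlations are assumed to be positive, and each construct is assumed to have at least two items.

```python
from itertools import combinations
from math import sqrt


def fornell_larcker_ok(ave, latent_corr):
    """True if sqrt(AVE) of both constructs exceeds every |correlation| between them.

    ave: {construct: AVE}; latent_corr: {(construct_a, construct_b): r}.
    """
    return all(sqrt(ave[a]) > abs(r) and sqrt(ave[b]) > abs(r)
               for (a, b), r in latent_corr.items())


def htmt(item_corr, items_a, items_b):
    """HTMT ratio for two constructs from item-level correlations.

    item_corr: {frozenset({item_i, item_j}): r} for every item pair used.
    """
    def r(i, j):
        return item_corr[frozenset((i, j))]

    def mean(values):
        values = list(values)
        return sum(values) / len(values)

    hetero = mean(r(i, j) for i in items_a for j in items_b)     # between constructs
    mono_a = mean(r(i, j) for i, j in combinations(items_a, 2))  # within construct A
    mono_b = mean(r(i, j) for i, j in combinations(items_b, 2))  # within construct B
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical item correlations for two two-item constructs
corr = {frozenset(("a1", "a2")): 0.8, frozenset(("b1", "b2")): 0.5}
for i in ("a1", "a2"):
    for j in ("b1", "b2"):
        corr[frozenset((i, j))] = 0.4

ratio = htmt(corr, ["a1", "a2"], ["b1", "b2"])
print(ratio < 0.90)  # True: discriminant validity by the HTMT criterion

# Hypothetical AVEs and latent correlation for the Fornell-Larcker check
fl_ok = fornell_larcker_ok({"A": 0.64, "B": 0.70}, {("A", "B"): 0.75})
print(fl_ok)  # True: sqrt(0.64) = 0.8 and sqrt(0.70) both exceed 0.75
```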

Model fit indices

For PLS-SEM, unlike classical SEM approaches, the standardized root mean square residual (SRMR) and the Bentler-Bonett normed fit index (NFI) are recommended for evaluating model fit (Henseler et al. 2016). The approximate fit of the estimated model was evaluated with SRMR = 0.057 and NFI = 0.835. Hu and Bentler (1999) suggested a threshold value of 0.08 for SRMR as acceptable. In terms of NFI, values above 0.90 generally represent an acceptable fit (Henseler et al. 2016), and an NFI value close to 1.00 is considered a sufficient criterion for evaluating model fit. These results indicate that the approximate fit of the measurement model is at an acceptable level (see Table 6).

Structural models’ evaluation

To determine the significance of each theoretical path in the research model, a bootstrapping procedure with 5000 subsamples was conducted on the basis of the validated measurement model. The structural model was evaluated following the steps proposed by Hair et al. (2021), in order: assessment of collinearity, the magnitude and significance of the path coefficients (\(\beta\) values), the \(R^{2}\) values of the endogenous variables, Stone-Geisser’s \(Q^{2}\) value, and the \(f^{2}\) effect size.
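
The logic of bootstrapped significance testing can be sketched with a deliberately simplified stand-in: a single standardized coefficient between two simulated variables (which equals their Pearson correlation) replaces the full PLS path model. The data, the seed, and the function names are all assumptions made for illustration; only the 5000 resamples mirror the text.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation, i.e. the standardized slope of a simple regression."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_t(xs, ys, n_boot=5000, seed=1):
    """Return (estimate, bootstrap standard error, t-value)."""
    rng = random.Random(seed)
    n = len(xs)
    estimate = pearson(xs, ys)
    draws = []
    for _ in range(n_boot):
        # Resample cases with replacement, keeping each (x, y) pair together
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(pearson([xs[i] for i in idx], [ys[i] for i in idx]))
    se = statistics.stdev(draws)  # spread of the bootstrap estimates
    return estimate, se, estimate / se


# Simulated data (not the study's data): y depends positively on x
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(100)]
y = [0.8 * xi + rng.gauss(0, 0.6) for xi in x]

estimate, se, t_value = bootstrap_t(x, y)
print(round(estimate, 3), round(se, 3), round(t_value, 2))
```

The t-value is the original estimate divided by the standard deviation of the bootstrap estimates, which is the same ratio reported for each path in a bootstrapped structural model.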

Analysis of collinearity

The collinearity assessment for the structural model is similar to that for the formative measurement model, but the VIF values are calculated from the latent variable scores of the predictor constructs in a partial regression (Hair et al. 2019). Ideally, VIF values should be close to 3 or lower. Where multicollinearity is an issue, creating theoretically supported higher-order models is a commonly used remedy (Hair et al. 2019, 2022). For the structural model, the VIF values ranged from 1.001 to 2.104, indicating that multicollinearity among the predictor constructs does not pose a problem (Table 7).

Path coefficients

The path coefficients in the model represent the relationships proposed in the research hypotheses. Their statistical significance is assessed with the bootstrap method, and the standardized coefficients take values between −1 and +1 (Hair et al. 2020). In the structural model, nine path coefficients were statistically significant at the 99% confidence level, while one (PU → BI) was significant at the 95% confidence level, with path coefficients ranging from −0.412 to 0.520 (Table 7). The significance of the path coefficients indicates that the hypothesized relationships are statistically reliable, while their magnitude shows how strong these relationships are in practice.

R² values

The next step in evaluating the structural model is to examine the \(R^{2}\) values of the endogenous variables. \(R^{2}\), the most commonly used measure of a model’s explanatory power, gives the variance explained in each endogenous construct (Hair et al. 2021). \(R^{2}\) values of 0.75, 0.50, and 0.25 are considered high, medium, and low levels of explanatory power, respectively (Henseler et al. 2009; Sarstedt et al. 2021). In this study, the \(R^{2}\) values of the three latent constructs in the structural model were calculated as \({R}_{{BI}}^{2}=0.565\), \({R}_{{PU}}^{2}=0.717\), and \({R}_{{PEOU}}^{2}=0.744\) (p < 0.001). The \(R^{2}\) value for BI indicates how well the latent constructs in the model explain the variation in PhD students’ behavioral intention to use ChatGPT: they explain 56.5% of this variation, indicating that the model has moderate explanatory power. Overall, the calculated \(R^{2}\) values provide strong evidence that the model is consistent with the research context.

Predictive relevance (Q²)

The Stone-Geisser \(Q^{2}\) value (Stone, 1974; Geisser, 1974) was used as a criterion to evaluate the predictive relevance of the structural model. \(Q^{2}\) values greater than zero are considered an indicator of the predictive relevance of the endogenous latent constructs (Hair et al. 2019). More specifically, when the \(Q^{2}\) values are “greater than 0, 0.25, and 0.50, the PLS-path model shows predictive relevance at low, medium, and high levels, respectively” (Hair et al. 2020, p. 107). In the structural model, the endogenous latent constructs were found to have values of \({Q}_{{BI}}^{2}=0.540\), \({Q}_{{PU}}^{2}=0.682\), and \({Q}_{{PEOU}}^{2}=0.736\), indicating that the model has strong predictive capability; this result was interpreted as the model accurately reflecting reality without error (Hair et al. 2021).

Effect size (f²)

The effect of removing a specific predictor construct from the structural model on the \(R^{2}\) value of an endogenous construct is called the \(f^{2}\) effect size and “is similar to the size of the path coefficients” (Hair et al. 2021, p. 119). The \(f^{2}\) effect size is classified as small (\(0.02\le f^{2} < 0.15\)), medium (\(0.15\le f^{2} < 0.35\)), and large (\(f^{2}\ge 0.35\)) (Hair et al. 2020, p. 109). The \(f^{2}\) values given in Table 7 indicate that some exogenous variables have a more pronounced effect on endogenous variables, while others contribute at a small to moderate level. This evaluation reveals the complex interactions between the latent structures in the model.
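
The definition and the classification above can be written as a short sketch. The formula is the standard one, \(f^{2} = (R^{2}_{included} - R^{2}_{excluded}) / (1 - R^{2}_{included})\). The excluded-model value of 0.50 in the usage example is hypothetical, and the label "negligible" for values below 0.02 is an added convention, not a category from the text.

```python
def f_squared(r2_included, r2_excluded):
    """Effect size of one predictor: change in R^2 relative to unexplained variance."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def classify_f2(f2):
    """Label an f^2 value using the thresholds 0.02, 0.15, and 0.35."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # below the smallest threshold (label is an assumption)


# R^2 of BI with all predictors is 0.565; the value without one
# predictor (0.50) is hypothetical and used only for illustration.
example = f_squared(0.565, 0.50)
print(round(example, 3), classify_f2(example))  # 0.149 small

# Applying the thresholds to effect sizes reported in the text
for value in (0.492, 0.295, 0.089):
    print(value, classify_f2(value))
```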

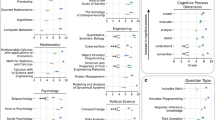

Evaluation of hypotheses

The results of the hypothesis tests are given in Table 7 and shown in Fig. 2; the hypotheses are discussed by evaluating the statistical significance of the structural parameters in the model. The results show that social influence has a statistically significant positive effect \(\left(H1:\,\beta =0.375,t=12.613,p < 0.001\right)\) with a large effect size (\(f^{2}=0.492\)) on PhD students’ perceived usefulness of ChatGPT. Perceived enjoyment of using ChatGPT has a significant effect on perceived ease of use \((H2:\beta =0.346,t=10.947,p < 0.001)\), with a large effect size (\(f^{2}=0.467\)). Similarly, AI-SE has a statistically significant effect on PEOU \((H3:\beta =0.457,t=13.998,p < 0.001)\), with a large effect size (\(f^{2}=0.792\)). The AI ethics perceived by students have a significant positive effect on both the PEOU \((H4:\beta =0.428,t=13.995,p < 0.001)\) and the PU \((H5:\beta =0.224,t=4.417,p < 0.001)\) of ChatGPT. The effect size is large for \({f}_{{AI}-{PET}\to {PEOU}}^{2}=0.699\) and small for \({f}_{{AI}-{PET}\to {PU}}^{2}=0.122\).

AI sociotechnical blindness was hypothesized to have a negative impact on the perceived ease of use and perceived usefulness of ChatGPT, and this was confirmed. The effect of AI-STB on PEOU was \(\beta =-0.412\) \((t=13.995,p < 0.001)\), while its effect on PU was \(\beta =-0.248\) \((t=4.417,p < 0.001)\), indicating a significant negative relationship in both cases. As one of the core variables of the classic TAM, perceived ease of use has a significant positive effect on the perceived usefulness of ChatGPT \((H8:\beta =0.500,t=8.955,p < 0.001)\) and on students’ behavioral intentions \((H9:\beta =0.520,t=6.073,p < 0.001)\), with effect sizes of \({f}_{{PEOU}\to {PU}}^{2}=0.501\) (large) and \({f}_{{PEOU}\to {BI}}^{2}=0.295\) (medium, since it falls between 0.15 and 0.35).

Finally, it has been observed that the perceived usefulness of using ChatGPT has a statistically significant impact on behavioral intention \((H10:\beta =0.285,t=2.145,p < 0.05)\), and the effect size has been evaluated as small \(\left({f}^{2}=0.089\right)\).
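
To see why H10 clears the 5% level but not the 1% level, a t-value can be converted into an approximate two-tailed p-value. The sketch below uses a standard normal approximation, which is an assumption; the exact reference distribution used by the analysis software is not stated in the text.

```python
from statistics import NormalDist


def two_tailed_p(t_value):
    """Approximate two-tailed p-value for a t-value (normal approximation)."""
    return 2 * (1 - NormalDist().cdf(abs(t_value)))


p_h10 = two_tailed_p(2.145)  # H10: PU -> BI
p_h9 = two_tailed_p(6.073)   # H9: PEOU -> BI
print(0.01 < p_h10 < 0.05)   # True: significant at 5% but not at 1%
print(p_h9 < 0.001)          # True
```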

Discussion

This study examined the behavioral intentions of doctoral students in the natural sciences at HEIs in Türkiye to use ChatGPT for educational purposes, and the factors that determine them, using the extended TAM and the PLS-SEM approach. The results supported the proposed research model of PhD students’ behavioral intentions to use ChatGPT for educational purposes and showed that the latent constructs PEOU and PU positively affect BI. These findings are supported by the literature (Güner et al. 2024; Shao et al. 2024; Almogren et al. 2024; Saihi et al. 2024).

The study has shown that social influence has a significant impact on the PU of ChatGPT. The significant positive effect of social influence on PU indicates that doctoral students’ beliefs about the usefulness of ChatGPT are positively influenced by their social circles. This finding is consistent with the results reported by Güner et al. (2024), Boubker (2024), and Zhang et al. (2023), which indicate that social influence enhances the perceived benefits of AI-based technologies like ChatGPT.

The study has shown that the enjoyment perceived by doctoral students is an important factor in explaining the perceived ease of use of ChatGPT. The findings suggest that doctoral students enjoy using ChatGPT and that this strengthens their beliefs about its ease of use. This result is consistent with Zhang et al. (2023), Algerafi et al. (2023), Dehghani and Mashhadi (2024), and Abdalla (2024), who explain the relationship between PEN and PEOU in ChatGPT usage. It also aligns with the theory that perceived enjoyment plays a motivational role in technology adoption and affects perceived ease of use (Compeau and Higgins 1995; Venkatesh and Bala 2008). As with perceived enjoyment, AI-SE was found to be positively related to PEOU. This result shows that PhD students’ belief in their ability to use AI technologies strengthens their perception of the ease of use of ChatGPT. It also shows that PhD students have a strong belief in their ability to use ChatGPT in education and to get assistance from it. This finding corroborates those reported by Al Darayseh (2023), Shao et al. (2024), Nja et al. (2023), and Algerafi et al. (2023), who state that AI-SE affects the PEOU of ChatGPT.

The AI-PET construct captures doctoral students’ views on the moral and ethical implications of using ChatGPT for academic purposes. The findings support that students’ perceived ethical beliefs about the use of ChatGPT have a significant positive impact on PEOU and PU. In the literature, studies integrating perceived AI ethics into the TAM are limited (Shao et al. 2024). Addressing this gap, our study treats perceived ethics as an antecedent of students’ perceptions of the ease of use and usefulness of ChatGPT in education. Using ChatGPT with an ethics-focused approach will help shape more accurate behavioral intentions. The findings indicate that students have a clear understanding of how to avoid ethical dilemmas when using ChatGPT to produce educational content, and this is consistent with the results of other studies on the ethical use of AI-based technologies like ChatGPT (Acosta-Enriquez et al. 2024b; Farhi et al. 2023; Kajiwara and Kawabata, 2024; Malmström et al. 2023; Salifu et al. 2024; Usher and Barak, 2024).

AI anxiety is widely discussed in the literature on the use of AI technologies. In this study, the “sociotechnical blindness” dimension of the four-dimensional AI anxiety scale developed by Wang and Wang (2022) was integrated into the conceptual model. Johnson and Verdicchio (2017a) state that, unlike other technophobias, AI anxiety can stem from misconceptions about AI technology, confusion about autonomy, and sociotechnical blindness. Sociotechnical blindness conceals the fundamental role of humans in the design and implementation stages of an AI system (Johnson and Verdicchio 2017a). By paving the way for unrealistic scenarios that exclude the human factor (Johnson and Verdicchio 2024), it leads to belief patterns in which individuals fail to recognize the social and ethical implications of the development and use of AI technology. For these reasons, it was assumed a priori that doctoral students, given their educational levels and career plans, possess a higher level of ability to interpret AI anxiety. The AI sociotechnical blindness (AI-STB) perceived by doctoral students was expected to have a significantly negative impact on their perceived ease of use and perceived usefulness of ChatGPT, and this was confirmed by the findings. In addition, the total indirect effect of AI-STB on behavioral intention was calculated as \({\beta }_{{AI}-{STB}\to {BI}}=-0.343\) \(\left(t=14.701,p < 0.001\right)\). In the literature, users’ AI anxiety has been measured with item sets of varying form and number within the TAM (Al Darayseh, 2023; Algerafi et al. 2023; Chen et al. 2024; Guner and Acarturk, 2020; Saif et al. 2024; Sallam et al. 2024; Wang et al. 2021), UTAUT (Budhathoki et al. 2024; Li 2024), and other models (Kaya et al. 2024; Wang et al. 2024b; Demir-Kaymak et al. 2024), and it has been reported to have a negative impact on user behaviors.
As a result, PhD students’ opinions regarding the usefulness and ease of use of ChatGPT are negatively affected by AI-STB, and the findings suggest that students have a significant level of concern.
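
The reported total indirect effect of AI-STB on BI can be checked against the path coefficients given in the hypothesis evaluation. The sketch below multiplies the coefficients along every directed route from AI-STB to BI and sums the products. The path values are those reported in the text; the function names are illustrative, and the model is assumed to contain no cycles.

```python
# Standardized path coefficients reported in the hypothesis evaluation
PATHS = {
    ("AI-STB", "PEOU"): -0.412,
    ("AI-STB", "PU"): -0.248,
    ("PEOU", "PU"): 0.500,
    ("PEOU", "BI"): 0.520,
    ("PU", "BI"): 0.285,
}


def path_products(source, target, paths):
    """Yield the coefficient product of every directed route source -> target."""
    for (start, end), coef in paths.items():
        if start != source:
            continue
        if end == target:
            yield coef
        else:
            for rest in path_products(end, target, paths):
                yield coef * rest


def total_indirect_effect(source, target, paths):
    """Sum over all routes, minus the direct path if one exists."""
    direct = paths.get((source, target), 0.0)
    return sum(path_products(source, target, paths)) - direct


effect = total_indirect_effect("AI-STB", "BI", PATHS)
print(round(effect, 4))  # -0.3436
```

Three routes contribute: via PEOU (−0.412 × 0.520), via PU (−0.248 × 0.285), and via PEOU then PU (−0.412 × 0.500 × 0.285). Their sum agrees with the reported −0.343 up to rounding of the published coefficients.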

The study revealed that the relationships among doctoral students’ PEOU, PU, and BI in evaluating ChatGPT as a learning tool align with the TAM. The study confirmed once again that PEOU is a key determinant of technology acceptance (Davis and Venkatesh, 1996). This finding shows that the simple and effortless usability of ChatGPT increases doctoral students’ perception of its usefulness for learning purposes and their BI to use it. In accordance with the literature, PU was also confirmed to have a positive effect on doctoral students’ BI toward the use of ChatGPT. However, within the classical TAM framework, PU is expected to have a stronger impact on BI than PEOU (Venkatesh and Davis, 2000), whereas in this study the effect of PEOU on BI (\(\beta =0.520\)) was larger than that of PU (\(\beta =0.285\)). Algerafi et al. (2023) found that the effects of PEOU and PU on BI were equal \((\beta =0.203)\); Pan et al. (2024) investigated the key factors affecting university students’ willingness to use AI Coding Assistant Tools (AICATs) and found \({\beta }_{{PEOU}\to {BI}}=0.468\) and \({\beta }_{{PU}\to {BI}}=0.312\); and Almogren et al. (2024) obtained results similar to ours, \({\beta }_{{PEOU}\to {BI}}=0.360\) and \({\beta }_{{PU}\to {BI}}=0.130\). This pattern is explained by the fact that PEOU is a strong predictor of PU (Kampa 2023) and BI in adopting new technologies (Zhou et al. 2022; Lai et al. 2023). One possible reason for the difference from the classical TAM is the specific conditions under which the study was conducted. Our study covers a period when ChatGPT had only recently come into use in HEIs in Türkiye. Intuitive usability may be more decisive than perceived long-term benefits at this early stage of use. The findings reveal that ease of use plays a stronger role in behavioral intention among users who encounter a new technology, such as ChatGPT, for the first time, even among students in disciplines closely related to technology, such as the natural sciences.
In this context, the study makes a theoretical contribution to the TAM literature by revealing time-dependent factors that affect the PEOU-PU-BI relationship and user tendencies at the time of initial contact with the technology. In addition, the findings are similar to those reported by Algerafi et al. (2023), Zhang et al. (2023), Lai et al. (2023), Güner et al. (2024), Pan et al. (2024), and Zou and Huang (2023), which show that PU is affected by PEOU and that, accordingly, these two factors have significant effects on BI in the context of AI chatbots and ChatGPT.

In summary, doctoral students in the field of science were found to possess sufficient basic knowledge about AI, to use ChatGPT for learning purposes, and to have positive behavioral intentions.

Conclusions

In this study, the extended TAM was used to investigate the behavioral intentions of PhD students in the field of science toward the use of ChatGPT and the factors influencing them. The study used data obtained from 361 students pursuing PhD education in mathematics, physics, chemistry, biology, and statistics at 35 universities in Türkiye and applied the PLS-SEM method for data analysis. The results showed that the external constructs affect behavioral intentions toward ChatGPT, some positively and some negatively, through the causal paths specified in the model. The study also found that the total indirect effects of social influence, perceived AI ethics, self-efficacy, and perceived enjoyment on behavioral intentions were significant and positive, while “sociotechnical blindness,” which represents perceived AI anxiety, had a significant and negative impact.

PEOU and PU mediated the effects on BI of the latent variables added to extend the TAM, and these mediating constructs had significant and positive effects on students’ behavioral intentions. The strong behavioral intention of PhD students in the natural sciences to use ChatGPT for learning purposes indicates that ChatGPT’s role as an educational tool in doctoral education could grow. Additionally, while all causal relationships indicate that PhD students have a strong intention to use ChatGPT, measures should be taken to mitigate the negative effects of ChatGPT usage on PhD students.

Overall, this study is important in providing a projection of the decisions made by doctoral students in the basic sciences in Türkiye regarding the use of ChatGPT, as well as in filling a gap in the literature. Doctoral students’ high level of perceived AI ethics, their significant AI anxiety, their levels of AI self-efficacy, and their positive behavioral intentions toward the use of ChatGPT for learning purposes underline the increasing importance of AI in higher education.

The Turkish Higher Education Council (YÖK, 2024b) has taken an important step in this regard by publishing an ethical guide in May 2024, which outlines fundamental values and principles to govern the integration of Generative AI into educational and scientific processes. However, based on research conducted using Turkish university websites (.edu.tr domain), few institutions appear to have implemented or published detailed guidance on AI usage or related ethical principles. This indicates that AI integration into scientific research and publication processes is still in its formative phase.

Both globally and within the Turkish higher education context, there is an urgent need to revise institutional policies and evaluation mechanisms to ensure academic integrity while using AI tools. As emphasized by Lo (2023) and Foroughi et al. (2023), a high level of sensitivity is required in adopting ChatGPT in education, and ethical risks must be actively addressed.

Theoretical implications

The findings underscore the theoretical relevance of extending the Technology Acceptance Model by integrating sociotechnical, affective, and ethical components such as perceived AI ethics, AI self-efficacy, perceived enjoyment, and AI anxiety (conceptualized as sociotechnical blindness). This model extension offers a richer framework to understand doctoral students’ behavioral intentions towards using generative AI tools like ChatGPT in academic contexts.

The role of the mediating constructs, Perceived Usefulness (PU) and Perceived Ease of Use (PEOU), is particularly notable, as they serve as conduits through which external variables exert their influence on behavioral intention. This supports and enriches earlier findings in TAM-based literature by confirming the central role of PU and PEOU in facilitating technology adoption among advanced learners. This result also suggests that users primarily focus on intuitive usability during the adoption process of new technologies, such as GenAI, and that their perception of functional benefits may develop over time. In addition, the strong negative effects of AI anxiety (AI-STB) on PU and PEOU indicate that not only cognitive but also psychosocial barriers are determinants of technology use. The results regarding the effects of AI anxiety and the other external factors in the extended model reveal the necessity of considering users’ emotional and perceptual readiness, especially when using GenAI technologies; in this respect, the study expands the theoretical scope of technology acceptance models.

Practical implications

This study has several practical implications for technology developers, educational policymakers, and faculty members. The results highlight the need for proactive strategies that promote the responsible and ethical integration of GenAI tools into doctoral education. In particular, institutions should focus on:

- Raising awareness about ethical AI usage, especially considering the ethical concerns and anxiety identified among doctoral students (Foroughi et al. 2023; Lo, 2023),
- Developing targeted training programs for both students and educators to ensure informed, responsible, and pedagogically meaningful AI use (Lund and Wang, 2023; Farrokhnia et al. 2024),
- Embedding AI literacy into higher education as a component of graduation skills, aiming to improve learning outcomes and preserve academic integrity.

Additionally, the study findings suggest that emotional and cognitive barriers to AI should be considered not only at the level of individual awareness but also in institutional educational policies and instructional design processes. Because ethical uncertainties and perceptual concerns arising from AI are also observed, especially among doctoral students, universities need to adopt holistic educational strategies that develop not only technical competence but also ethical thinking, technological critique, and psychological resilience.

Data availability

The datasets analyzed during the current study are available from the corresponding author upon reasonable request.

References

Abbas M, Jam FA, Khan TI (2024) Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. Int J Educ Technol High Educ 21:1–22. https://doi.org/10.1186/s41239-024-00444-7

Abdaljaleel M, Barakat M, Alsanafi M et al. (2024) A multinational study on the factors influencing university students’ attitudes and usage of ChatGPT. Sci Rep 14:1–14. https://doi.org/10.1038/s41598-024-52549-8

Abdalla RAM (2024) Examining awareness, social influence, and perceived enjoyment in the TAM framework as determinants of ChatGPT. Personalization as a moderator. J Open Innov: Technol Mark Complex 10:1–11. https://doi.org/10.1016/j.joitmc.2024.100327

Acosta-Enriquez BG, Arbulú Ballesteros MA, Huamaní Jordan O et al. (2024a) Analysis of college students’ attitudes toward the use of ChatGPT in their academic activities: effect of intent to use, verification of information and responsible use. BMC Psychol 12:255. https://doi.org/10.1186/s40359-024-01764-z

Acosta-Enriquez BG, Arbulú Ballesteros MA, Arbulu Perez Vargas CG et al. (2024b) Knowledge, attitudes, and perceived Ethics regarding the use of ChatGPT among generation Z university students. Int J Educ Integr 20:1–23. https://doi.org/10.1007/s40979-024-00157-4

Adamopoulou E, Moussiades L (2020) Chatbots: History, technology, and applications. Mach Learn Appl 2:1–21. https://doi.org/10.1016/J.MLWA.2020.100006

Ajzen I, Fishbein M (1980) Understanding Attitudes and Predicting Social Behavior, 1st edition. Englewood Cliffs, N.J: Prentice-Hall

Al Darayseh A (2023) Acceptance of artificial intelligence in teaching science: Science teachers’ perspective. Comput Educ: Artif Intell 4:100132. https://doi.org/10.1016/j.caeai.2023.100132

Al Shloul T, Mazhar T, Abbas Q, et al. (2024) Role of activity-based learning and ChatGPT on students’ performance in education. Comput Educ: Artif Intell 6:1–18. https://doi.org/10.1016/j.caeai.2024.100219

Al-Abdullatif AM (2023) Modeling students’ perceptions of chatbots in learning: integrating technology acceptance with the value-based adoption model. Educ Sci 13:1–22. https://doi.org/10.3390/educsci13111151

Al-Adwan AS, Li N, Al-Adwan A, et al. (2023) Extending the Technology Acceptance Model (TAM) to Predict University Students’ Intentions to Use Metaverse-Based Learning Platforms. Educ Inf Technol 28:15381–15413. https://doi.org/10.1007/s10639-023-11816-3

Albayati H (2024) Investigating undergraduate students’ perceptions and awareness of using ChatGPT as a regular assistance tool: A user acceptance perspective study. Comput Educ: Artif Intell 6:100203. https://doi.org/10.1016/j.caeai.2024.100203

Algerafi MAM, Zhou Y, Alfadda H, Wijaya TT (2023) Understanding the Factors Influencing Higher Education Students’ Intention to Adopt Artificial Intelligence-Based Robots. IEEE Access 11:99752–99764. https://doi.org/10.1109/ACCESS.2023.3314499

Alhwaiti M (2023) Acceptance of Artificial Intelligence application in the post-Covid Era and its impact on faculty members’ occupational well-being and teaching self efficacy: a path analysis using the UTAUT 2 Model. Appl Artif Intell 37:1–21. https://doi.org/10.1080/08839514.2023.2175110

Almogren AS, Al-Rahmi WM, Dahri NA (2024) Exploring factors influencing the acceptance of ChatGPT in higher education: A smart education perspective. Heliyon 10:e31887. https://doi.org/10.1016/j.heliyon.2024.e31887

Almulla MA (2024) Investigating influencing factors of learning satisfaction in AI ChatGPT for research: University students perspective. Heliyon 10:e32220. https://doi.org/10.1016/j.heliyon.2024.e32220

Alyoussef IY (2023) Acceptance of e-learning in higher education: The role of task-technology fit with the information systems success model. Heliyon 9:e13751. https://doi.org/10.1016/j.heliyon.2023.e13751

Arthur F, Salifu I, Abam Nortey S (2025) Predictors of higher education students’ behavioural intention and usage of ChatGPT: the moderating roles of age, gender and experience. Interact Learn Environ 33:993–1019. https://doi.org/10.1080/10494820.2024.2362805

Ayaz A, Yanartaş M (2020) An analysis on the unified theory of acceptance and use of technology theory (UTAUT): Acceptance of electronic document management system (EDMS). Comput Hum Behav Rep 2:100032. https://doi.org/10.1016/j.chbr.2020.100032

Baig MI, Yadegaridehkordi E (2024) ChatGPT in the higher education: A systematic literature review and research challenges. Int J Educ Res 127:102411. https://doi.org/10.1016/j.ijer.2024.102411

Bandura A (1982) Self-efficacy mechanism in human agency. Am Psychol 37:122–147. https://doi.org/10.1037/0003-066X.37.2.122

Bastiansen MHA, Kroon AC, Araujo T (2022) Female chatbots are helpful, male chatbots are competent? Publizistik 67:601–623. https://doi.org/10.1007/s11616-022-00762-8

Bathaee Y (2018) The artificial intelligence black box and the failure of intent and causation. Harv J Law Technol 31:890–934

Bergdahl J, Latikka R, Celuch M, et al. (2023) Self-determination and attitudes toward artificial intelligence: Cross-national and longitudinal perspectives. Telemat Inform 82:1–15. https://doi.org/10.1016/j.tele.2023.102013

Bhullar PS, Joshi M, Chugh R (2024) ChatGPT in higher education - a synthesis of the literature and a future research agenda. Educ Inf Technol 29:21501–21522. https://doi.org/10.1007/s10639-024-12723-x

Bhutoria A (2022) Personalized education and Artificial Intelligence in the United States, China, and India: A systematic review using a Human-In-The-Loop model. Comput Educ: Artif Intell 3:1–18. https://doi.org/10.1016/J.CAEAI.2022.100068

Bilquise G, Ibrahim S, Salhieh SM (2024) Investigating student acceptance of an academic advising chatbot in higher education institutions. Educ Inf Technol 29:6357–6382. https://doi.org/10.1007/s10639-023-12076-x

Boubker O (2024) From chatting to self-educating: Can AI tools boost student learning outcomes. Expert Syst Appl 238:121820. https://doi.org/10.1016/J.ESWA.2023.121820

Bouteraa M, Bin-Nashwan SA, Al-Daihani M, et al. (2024) Understanding the diffusion of AI-generative (ChatGPT) in higher education: Does students’ integrity matter. Comput Hum Behav Rep 14:1–11. https://doi.org/10.1016/J.CHBR.2024.100402

Budhathoki T, Zirar A, Njoya ET, Timsina A (2024) ChatGPT adoption and anxiety: a cross-country analysis utilising the unified theory of acceptance and use of technology (UTAUT). Stud High Educ 49:831–846. https://doi.org/10.1080/03075079.2024.2333937

Camilleri MA (2024) Factors affecting performance expectancy and intentions to use ChatGPT: Using SmartPLS to advance an information technology acceptance framework. Technol Forecast Soc Change 201:1–13. https://doi.org/10.1016/j.techfore.2024.123247

Chan A (2023) GPT-3 and InstructGPT: technological dystopianism, utopianism, and “Contextual” perspectives in AI ethics and industry. AI Ethics 3:53–64. https://doi.org/10.1007/s43681-022-00148-6

Chang H, Liu B, Zhao Y, et al. (2024) Research on the acceptance of ChatGPT among different college student groups based on latent class analysis. Interact Learn Environ 1–17. https://doi.org/10.1080/10494820.2024.2331646

Chaudhry IS, Sarwary SAM, El Refae GA, Chabchoub H (2023) Time to Revisit Existing Student’s Performance Evaluation Approach in Higher Education Sector in a New Era of ChatGPT — A Case Study. Cogent Educ 10(1). https://doi.org/10.1080/2331186X.2023.2210461

Chen D, Liu W, Liu X (2024) What drives college students to use AI for L2 learning? Modeling the roles of self-efficacy, anxiety, and attitude based on an extended technology acceptance model. Acta Psychol 249:1–9. https://doi.org/10.1016/j.actpsy.2024.104442

Chiu TKF, Xia Q, Zhou X, et al. (2023) Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Comput Educ: Artif Intell 4:100118. https://doi.org/10.1016/J.CAEAI.2022.100118

Colby KM, Weber S, Hilf FD (1971) Artificial Paranoia. Artif Intell 2:1–25. https://doi.org/10.1016/0004-3702(71)90002-6

Collins C, Dennehy D, Conboy K, Mikalef P (2021) Artificial intelligence in information systems research: A systematic literature review and research agenda. Int J Inf Manag 60:102383. https://doi.org/10.1016/J.IJINFOMGT.2021.102383

Compeau DR, Higgins CA (1995) Computer self-efficacy: development of a measure and initial test. MIS Q 19:189–211. https://doi.org/10.2307/249688

Cooper G (2023) Examining science education in ChatGPT: An exploratory study of generative artificial intelligence. J Sci Educ Technol 32:444–452. https://doi.org/10.1007/s10956-023-10039-y

Dahri NA, Yahaya N, Al-Rahmi WM, et al. (2024) Extended TAM based acceptance of AI-Powered ChatGPT for supporting metacognitive self-regulated learning in education: A mixed-methods study. Heliyon 10:e29317. https://doi.org/10.1016/j.heliyon.2024.e29317

Davis FD (1989) Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q 13:319–340. https://doi.org/10.2307/249008

Davis FD, Venkatesh V (1996) A critical assessment of potential measurement biases in the technology acceptance model: three experiments. Int J Hum Comput Stud 45:19–45. https://doi.org/10.1006/ijhc.1996.0040

Davis FD, Bagozzi RP, Warshaw PR (1989) User acceptance of computer technology: a comparison of two theoretical models. Manag Sci 35:982–1003. https://doi.org/10.1287/mnsc.35.8.982

Davis FD, Bagozzi RP, Warshaw PR (1992) Extrinsic and intrinsic motivation to use computers in the workplace. J Appl Soc Psychol 22:1111–1132. https://doi.org/10.1111/j.1559-1816.1992.tb00945.x

De Muth JE (2014) Basic Statistics and Pharmaceutical Statistical Applications, 3rd edn. CRC Press Taylor & Francis Group

Dehghani H, Mashhadi A (2024) Exploring Iranian English as a foreign language teachers’ acceptance of ChatGPT in English language teaching: Extending the technology acceptance model. Educ Inf Technol 1–22. https://doi.org/10.1007/s10639-024-12660-9

Demir-Kaymak Z, Turan Z, Unlu-Bidik N, Unkazan S (2024) Effects of midwifery and nursing students’ readiness about medical Artificial intelligence on Artificial intelligence anxiety. Nurse Educ Pract 78:103994. https://doi.org/10.1016/j.nepr.2024.103994

Díaz-Rodríguez N, Del Ser J, Coeckelbergh M, et al. (2023) Connecting the dots in trustworthy Artificial Intelligence: From AI principles, ethics, and key requirements to responsible AI systems and regulation. Inf Fusion 99:101896. https://doi.org/10.1016/J.INFFUS.2023.101896

Duong CD, Bui DT, Pham HT, et al. (2023) How effort expectancy and performance expectancy interact to trigger higher education students’ uses of ChatGPT for learning. Interact Technol Smart Educ. https://doi.org/10.1108/ITSE-05-2023-0096

Elbanna S, Armstrong L (2024) Exploring the integration of ChatGPT in education: adapting for the future. Manag Sustain: Arab Rev 3:16–29. https://doi.org/10.1108/MSAR-03-2023-0016

Ellis AR, Slade E (2023) A new era of learning: considerations for ChatGPT as a tool to enhance statistics and data science education. J Stat Data Sci Educ 31:128–133. https://doi.org/10.1080/26939169.2023.2223609

Espartinez AS (2024) Exploring student and teacher perceptions of ChatGPT use in higher education: A Q-Methodology study. Comput Educ: Artif Intell 7:1–10. https://doi.org/10.1016/J.CAEAI.2024.100264

Farhi F, Jeljeli R, Aburezeq I, et al. (2023) Analyzing the students’ views, concerns, and perceived ethics about chat GPT usage. Comput Educ: Artif Intell 5:1–8. https://doi.org/10.1016/j.caeai.2023.100180

Farrokhnia M, Banihashem SK, Noroozi O, Wals A (2024) A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov Educ Teach Int 61:460–474. https://doi.org/10.1080/14703297.2023.2195846

Fergus S, Botha M, Ostovar M (2023) Evaluating academic answers generated using ChatGPT. J Chem Educ 100:1672–1675. https://doi.org/10.1021/acs.jchemed.3c00087

Fornell C, Larcker DF (1981) Structural equation models with unobservable variables and measurement error: Algebra and Statistics. J Mark Res 18:382–388. https://doi.org/10.2307/3150980

Foroughi B, Senali MG, Iranmanesh M, et al. (2023) Determinants of intention to use ChatGPT for educational purposes: findings from PLS-SEM and fsQCA. Int J Hum Comput Interact 1–20. https://doi.org/10.1080/10447318.2023.2226495

von Garrel J, Mayer J (2023) Artificial Intelligence in studies—use of ChatGPT and AI-based tools among students in Germany. Humanit Soc Sci Commun 10:799. https://doi.org/10.1057/s41599-023-02304-7

Geerling W, Mateer GD, Wooten J, Damodaran N (2023) ChatGPT has aced the test of understanding in college economics: now what? Am Econ 68:233–245. https://doi.org/10.1177/05694345231169654

Gefen D, Straub DW (1997) Gender differences in the perception and use of E-Mail: An extension to the technology acceptance model. MIS Q 21:389–400. https://doi.org/10.2307/249720

Geisser S (1974) A predictive approach to the Random Effect Model. Biometrika 61:101–107. https://doi.org/10.2307/2334290

Geng C, Zhang Y, Pientka B, Si X (2023) Can ChatGPT pass an introductory level functional language programming course? ArXiv 1–13. https://doi.org/10.48550/arXiv.2305.02230

Gidiotis I, Hrastinski S (2024) Imagining the future of artificial intelligence in education: a review of social science fiction. Learn Media Technol 1–13. https://doi.org/10.1080/17439884.2024.2365829

Gill SS, Kaur R (2023) ChatGPT: Vision and challenges. Internet Things Cyber-Phys Syst 3:262–271. https://doi.org/10.1016/J.IOTCPS.2023.05.004

Granić A, Marangunić N (2019) Technology acceptance model in educational context: A systematic literature review. Br J Educ Technol 50:2572–2593. https://doi.org/10.1111/bjet.12864

Granić A (2023) Technology Acceptance and Adoption in Education. In: Zawacki-Richter O, Jung I (eds) Handbook of Open, Distance and Digital Education. Springer Nature Singapore, Singapore, pp 183–197. https://doi.org/10.1007/978-981-19-2080-6_11

Grassini S, Aasen ML, Møgelvang A (2024) Understanding University Students’ Acceptance of ChatGPT: Insights from the UTAUT2 Model. Appl Artif Intell 38:1–22. https://doi.org/10.1080/08839514.2024.2371168

Guner H, Acarturk C (2020) The use and acceptance of ICT by senior citizens: a comparison of technology acceptance model (TAM) for elderly and young adults. Univers Access Inf Soc 19:311–330. https://doi.org/10.1007/s10209-018-0642-4

Güner H, Er E, Akçapinar G, Khalil M (2024) From chalkboards to AI-powered learning: Students’ attitudes and perspectives on use of ChatGPT in educational settings. Technol Soc 27:386–404

Guo Y, Lee D (2023) Leveraging ChatGPT for enhancing critical thinking skills. J Chem Educ 100:4876–4883. https://doi.org/10.1021/acs.jchemed.3c00505

Habibi A, Muhaimin M, Danibao BK, et al. (2023) ChatGPT in higher education learning: Acceptance and use. Comput Educ: Artif Intell 5:1–9. https://doi.org/10.1016/J.CAEAI.2023.100190

Hair JF, Howard MC, Nitzl C (2020) Assessing measurement model quality in PLS-SEM using confirmatory composite analysis. J Bus Res 109:101–110. https://doi.org/10.1016/j.jbusres.2019.11.069