Abstract

In the rapidly evolving landscape of artificial intelligence (AI), understanding the factors that influence individuals’ intentions to adopt AI technologies is crucial, particularly within educational contexts. This study addresses a critical gap in the literature by examining how AI literacy interacts with the constructs of the Unified Theory of Acceptance and Use of Technology (UTAUT)—Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions—to shape university students’ behavioral intentions to adopt AI technologies. Data from 359 Chinese university students were analyzed using Partial Least Squares Structural Equation Modeling (PLS-SEM). The findings reveal that AI Literacy is a significant predictor of Behavioral Intention to adopt AI, with Social Influence, Performance Expectancy, and Effort Expectancy serving as important mediators. Notably, Facilitating Conditions did not have a significant effect on Behavioral Intention in this context. The results underscore the importance of enhancing AI Literacy among university students to foster positive adoption intentions, particularly through social and expectancy-related factors, providing practical implications for educators and policymakers aiming to promote AI integration in higher education.

Introduction

The integration of artificial intelligence (AI) into educational systems has emerged as a transformative force in recent years, reshaping how teaching and learning are conducted. AI-driven tools, such as intelligent tutoring systems, adaptive learning platforms, and automated assessment technologies, have the potential to enhance educational outcomes by personalizing learning experiences and increasing operational efficiency (Zawacki-Richter et al., 2019). These tools provide students with real-time feedback, tailor educational content to individual learning styles, and assist educators in managing large amounts of data for more informed decision-making (Luckin & Holmes, 2016). In China, where the government has placed a strong emphasis on AI development and its integration into education, AI technologies are increasingly available in universities and schools. However, the adoption of AI technologies among university students has remained inconsistent, with wide variation in how students engage with and utilize these tools (C. Wang et al., 2024). Therefore, understanding the factors that drive students’ adoption of AI is crucial for maximizing the benefits these technologies offer in educational contexts.

One potential factor influencing AI adoption is AI literacy, which refers to the knowledge and skills required to understand, critically engage with, and effectively use AI technologies (Long & Magerko, 2020). AI literacy goes beyond basic technological competence; it includes understanding AI’s underlying algorithms, ethical concerns, biases, and its broader societal impacts (Müller, 2020). As AI technologies become increasingly integrated into educational systems, fostering AI literacy is essential for students to not only use these tools effectively but also to understand their limitations and ethical implications. While digital literacy has been widely studied in the context of technology adoption (Saad et al., 2021; Yeşilyurt & Vezne, 2023), the more specialized concept of AI literacy is still underexplored, particularly regarding how it influences students’ engagement with AI technologies in educational settings. Additionally, limited research has examined how AI literacy interacts with well-established technology adoption models such as the Unified Theory of Acceptance and Use of Technology (UTAUT) to influence adoption behaviors.

The UTAUT model, developed by Venkatesh et al. (2003), has been widely used to predict technology adoption by examining four key constructs: Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions. Previous research has demonstrated that Performance Expectancy and Effort Expectancy are significant predictors of adoption behavior, especially in educational contexts where technology is integrated into the learning process (Teo et al., 2019). Students who believe that AI tools will improve their academic performance are more likely to adopt them, as are those who find these tools easy to use (Y. Wang et al., 2021). Similarly, Social Influence plays an important role, especially in collectivist cultures such as China, where students’ decisions are often influenced by their peers and educators (Venkatesh & Zhang, 2010). Finally, Facilitating Conditions—such as access to reliable internet, technical support, and training—are crucial for students to effectively use AI technologies (Alzahrani, 2023). While these factors are critical for understanding technology adoption in general, they do not fully account for the domain-specific knowledge, such as AI literacy, which is critical for understanding adoption in contexts that require specialized competencies.

Hence, this study aims to integrate the UTAUT with AI literacy to create a holistic model. Specifically, this research explores how AI literacy impacts the UTAUT constructs and how these constructs, in turn, influence the Behavioral Intention to adopt AI technologies among Chinese university students. The study is guided by the following research questions:

RQ1: What is the direct impact of AI literacy on the UTAUT constructs (Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions) among university students?

RQ2: How do the UTAUT constructs (Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions) influence university students’ Behavioral Intention to adopt AI technologies?

RQ3: To what extent do the UTAUT constructs mediate the relationship between AI literacy and university students’ Behavioral Intention to adopt AI technologies?

The findings from this study will have both theoretical and practical implications. Theoretically, this research extends the UTAUT model by incorporating AI literacy, offering a more robust framework for understanding AI adoption. Practically, the study provides insights for educational institutions seeking to improve AI literacy and promote the effective adoption of AI technologies among students. By identifying the factors that influence students’ intentions to adopt AI, universities can develop targeted strategies to enhance AI integration into the curriculum, ultimately improving learning outcomes and preparing students for an AI-driven future.

Literature review and hypothesis development

This literature review aims to synthesize current research on AI literacy and the UTAUT to understand their roles in AI adoption in educational contexts. By examining these constructs, the review identifies existing research gaps and presents a proposed research model alongside the development of hypotheses.

AI literacy

Conceptualization of AI literacy

The concept of AI literacy is still evolving and draws from various fields including education, human-computer interaction (HCI), information science, and ethics. Several frameworks and definitions have emerged from different disciplines, each highlighting distinct aspects of AI literacy. For instance, Ng et al. (2021) proposed a comprehensive framework that integrates understanding, application, evaluation, and ethical awareness of AI, aligning it with traditional literacies while extending it to address specific ethical concerns related to AI technologies. Long & Magerko (2020) offer a competency-based approach tailored to human-computer interaction, focusing on designing educational experiences that foster public comprehension and engagement with AI. Heyder & Posegga (2021) developed a taxonomy of AI literacy, which identifies three core dimensions: functional, critical, and sociocultural. These dimensions offer a nuanced understanding of how AI is applied, evaluated, and understood in organizational contexts. Additionally, Cetindamar et al. (2022) add a workplace perspective, suggesting AI literacy involves technology-related, human-machine interaction, and learning capabilities that help non-AI professionals effectively engage with AI in digital workplaces. In the educational context, Allen & Kendeou (2024) introduce the ED-AI Lit framework, which emphasizes interdisciplinary collaboration and a deep understanding of AI systems, focusing on knowledge, critical evaluation, contextualization, autonomy, and ethics. Chiu et al. (2024) propose a comprehensive framework for AI literacy and competency designed specifically for K–12 education, emphasizing five core components: technology, impact, ethics, collaboration, and self-reflection. 
Stolpe & Hallström (2024) conceptualize AI literacy within technology education, highlighting the need for a multiliteracy approach that combines technical skills with scientific knowledge and socio-ethical understanding to prepare students for the challenges AI presents in daily life and the workforce. Yue Yim (2024) proposes a framework for AI literacy for young students, integrating digital literacy, data literacy, computational thinking, and AI ethics. This framework calls for a shift beyond constructionist perspectives to include both human and non-human agents in AI education, providing a more comprehensive understanding of AI literacy.

Based on the various perspectives from recent literature, AI literacy can be understood as a multidimensional competency framework that equips individuals to effectively and ethically engage with AI technologies across different contexts, encompassing four main competencies: knowledge and understanding, practical skills, critical evaluation, and ethical awareness.

Emerging importance of AI literacy

AI literacy is increasingly critical across various sectors, playing a transformative role in effective AI adoption. In workplaces, AI literacy equips employees with the knowledge to navigate AI-driven changes, fostering productivity and innovation through an understanding of AI applications, ethical implications, and human-machine interaction (Cetindamar et al., 2022). In healthcare, AI literacy is vital for professionals to leverage AI responsibly for diagnostics, personalized medicine, and patient care, enabling them to critically evaluate AI’s role and manage risks associated with its deployment (Kimiafar et al., 2023). Public awareness of AI, supported by promoting AI literacy among diverse groups, enables informed decision-making and critical engagement with AI technologies, ensuring broader societal benefits (Kasinidou, 2023). Additionally, in sectors like manufacturing and finance, AI literacy is crucial for leveraging AI’s full potential to enhance productivity, foster innovation, and drive strategic decision-making, aligning human capabilities with AI advancements (Rizvi et al., 2021). Across these sectors, AI literacy ensures that professionals are proficient in using AI tools and capable of critically evaluating and implementing AI to maximize benefits while mitigating risks, driving responsible and effective AI adoption.

In the education sector, AI literacy is particularly relevant, as it supports both educators and students in adapting to an AI-driven world. There is an increasing effort to integrate AI literacy into curricula to prepare future generations for the rapidly evolving digital landscape. For example, the “Five Big Ideas” framework has been adopted to teach core AI concepts in schools across eleven countries, with ongoing efforts to develop standardized assessments for AI literacy among junior secondary students (Yau et al., 2022). Additionally, studies such as Zhao et al. (2022) highlight the need for teacher training in AI to improve their AI literacy, thereby enhancing the quality and effectiveness of classroom teaching. In higher education, AI literacy is increasingly regarded as essential for preparing students for careers in a wide range of fields. Kong et al. (2021) evaluated an AI literacy course specifically designed for university students with diverse study backgrounds. Their findings showed that effective AI literacy programs can empower students from various disciplines to understand and apply AI technologies in practical settings. Additionally, Rütti-Joy et al. (2023) emphasize the need for sustainable teacher education programs that build AI literacy among teaching staff, enabling them to navigate the ethical and practical challenges of AI in educational settings. Tzirides et al. (2024) highlight the importance of AI literacy in higher education and propose an innovative pedagogical approach that integrates Generative AI (GenAI) tools and cyber-social teaching methods to enhance students’ AI literacy. These efforts to enhance AI literacy in education are crucial for ensuring that both students and educators are equipped with the skills and understanding needed to use AI effectively and ethically.

The unified theory of acceptance and use of technology

The UTAUT model

The UTAUT model is a widely used framework for understanding the factors that influence the acceptance and use of technology. The UTAUT model was developed by Venkatesh et al. (2003) and integrates elements from eight previous models of technology acceptance. It is designed to explain user intentions to use a technology and subsequent usage behavior. The model includes four key constructs: Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions. The model posits that these constructs directly influence Behavioral Intention, which in turn predicts actual technology use. Performance Expectancy refers to the degree to which an individual believes that using a technology will enhance their performance. In the context of AI, this could involve perceptions of how AI can improve efficiency, decision-making, or productivity in various tasks (Cao et al., 2021). Effort Expectancy captures the perceived ease of use of a technology. In the case of AI, Effort Expectancy may be particularly relevant, given the often-complex nature of AI systems and the learning curve associated with interacting with AI tools (Cabrera-Sánchez et al., 2021). Social Influence refers to the extent to which individuals perceive that important others believe they should use a particular technology. In the context of AI, Social Influence may stem from endorsements by educators, peers, or industry leaders, impacting users’ willingness to engage with AI technologies (Nadella et al., 2023). Facilitating Conditions represent the perceived availability of organizational or technical resources that support the use of technology. In the context of AI adoption, this includes access to reliable AI tools, technical support, and adequate training.

UTAUT and AI adoption

UTAUT has been effectively utilized across various industries to examine AI technology adoption. For instance, in the consumer sector, Cabrera-Sánchez et al. (2021) found that Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions significantly influenced consumers’ intentions to adopt AI applications. In organizational contexts, Chatterjee et al. (2023) highlighted that the adoption of AI-integrated customer relationship management (CRM) systems was largely driven by Performance Expectancy and Effort Expectancy, with Social Influence and supportive organizational conditions also playing crucial roles. Similarly, in the social development sector, Jain et al. (2022) identified these factors as key drivers of AI adoption, emphasizing the importance of addressing fears and concerns about AI to facilitate acceptance. In the human resources sector, Tanantong & Wongras (2024) identify perceived value, perceived autonomy, effort expectancy, and facilitating conditions as key drivers of the intention to adopt AI in recruitment processes. While social influence and trust in AI do not directly impact adoption intention, social influence affects perceived value, and trust in AI influences effort expectancy. These studies underscore the UTAUT model’s broad applicability in understanding the determinants of AI adoption across different industries, aligning with Venkatesh (2022), who proposes using the UTAUT model to explore research directions focused on individual characteristics, technology characteristics, environmental factors, and interventions to enhance AI tool adoption.

In the educational sector, UTAUT has been applied to a more limited extent, but several studies have focused on how AI technologies are adopted by educators and students. Chatterjee and Bhattacharjee (2020) utilized UTAUT to explore AI adoption in higher education in India, finding that Performance Expectancy, Effort Expectancy, and Social Influence were significant predictors of stakeholders’ intentions to adopt AI in academic settings. Similarly, Lin et al. (2022) found that Performance Expectancy and Effort Expectancy were significant predictors of AI adoption in learning environments, with Social Influence and Facilitating Conditions also playing important roles in shaping user acceptance of AI-based learning platforms. Budhathoki et al. (2024) explore university students’ intention to adopt ChatGPT in higher education using an extended UTAUT model that incorporates anxiety as an additional factor. Their study, conducted in the UK and Nepal, finds that performance expectancy, effort expectancy, and social influence significantly influence ChatGPT adoption in both countries. However, the impact of anxiety differed between the two contexts, highlighting the role of country-specific factors in the reception of ChatGPT. Foroughi et al. (2023) apply the UTAUT2 model to explore factors influencing the intention to use ChatGPT for educational purposes, highlighting the role of performance expectancy, effort expectancy, hedonic motivation, and learning value in driving its use. While personal innovativeness and information accuracy negatively moderate these relationships, the findings suggest that social influence, facilitating conditions, and habit do not significantly impact usage. Similarly, Tang et al. (2025) find that performance expectancy and effort expectancy positively influence learning value, which is the strongest predictor of students’ intention to use generative AI, with social influence and facilitating conditions impacting the formation of habit, which in turn affects adoption. These studies underscore the importance of adapting UTAUT to specific educational contexts to identify unique drivers and barriers to AI adoption, enabling more effective implementation of AI-driven technologies in learning environments.

In summary, the reviewed literature establishes the growing importance of AI literacy in influencing AI adoption while highlighting the UTAUT model as a valuable framework for examining the factors that shape AI adoption behaviors. However, while research on both AI literacy and technology adoption has expanded rapidly, these two streams have largely developed in parallel rather than in dialogue. The AI literacy literature presents rich conceptual frameworks, yet remains fragmented across fields and under-theorized in relation to behavioral models. Definitions range from technical competence to socio-ethical awareness (Long & Magerko, 2020; Stolpe & Hallström, 2024), but few frameworks are empirically tested in higher education, and even fewer linked to user behavior.

Meanwhile, the UTAUT model has been validated across sectors and contexts, including recent applications to AI adoption (Cabrera-Sánchez et al., 2021; Foroughi et al., 2023). However, UTAUT assumes a baseline cognitive readiness for technology use and lacks mechanisms for modeling domain-specific understanding or ethical discernment, aspects now essential for complex AI environments. More importantly, studies focusing on AI adoption in education either apply UTAUT without considering students’ cognitive preparedness (Teo et al., 2019; Tang et al., 2025) or explore AI literacy without integrating it into behavioral intention frameworks (Kong et al., 2021).

As a result, there is a clear theoretical and empirical gap in understanding how knowledge, perception, and contextual factors interact to shape students’ willingness and readiness to adopt AI technologies. This study addresses that gap by integrating AI literacy into the UTAUT model, providing a more nuanced view of adoption that captures both behavioral perceptions and cognitive competencies. It also contributes to educational research by offering an empirically testable model relevant to institutions undergoing rapid digital transformation, particularly in policy-driven contexts such as China.

Proposed research model

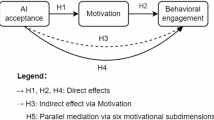

Building on the insights from the literature review, this study addresses the identified research gap by exploring the relationship between AI literacy and UTAUT constructs in shaping Chinese university students’ Behavioral Intention to use AI tools. The proposed model suggests that AI Literacy influences students’ Behavioral Intention through the mediation of UTAUT constructs: Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions. Figure 1 visually represents the relationships in the proposed conceptual model.

Hypothesis development

Based on the literature review and the proposed research model, this study develops several hypotheses to investigate the relationships between AI Literacy, UTAUT constructs, and Behavioral Intention to use AI tools among Chinese university students.

AI literacy and UTAUT constructs

H1: AI Literacy has a positive effect on Performance Expectancy.

H2: AI Literacy has a positive effect on Effort Expectancy.

H3: AI Literacy has a positive effect on Social Influence.

H4: AI Literacy has a positive effect on Facilitating Conditions.

UTAUT constructs and behavioral intention

H5: Performance Expectancy positively affects Behavioral Intention to use AI.

H6: Effort Expectancy positively affects Behavioral Intention to use AI.

H7: Social Influence positively affects Behavioral Intention to use AI.

H8: Facilitating Conditions positively affect Behavioral Intention to use AI.

The mediating role of UTAUT constructs

H9: Performance Expectancy mediates the relationship between AI Literacy and Behavioral Intention to use AI.

H10: Effort Expectancy mediates the relationship between AI Literacy and Behavioral Intention to use AI.

H11: Social Influence mediates the relationship between AI Literacy and Behavioral Intention to use AI.

H12: Facilitating Conditions mediate the relationship between AI Literacy and Behavioral Intention to use AI.

Methodology

Participants

The participants in this study were undergraduate students from a large public university in China. Ethical approval was obtained from the university prior to data collection, and informed consent was secured from all participants. A total of 400 students were initially recruited through convenience sampling, which involved reaching out to students enrolled in various academic programs, including engineering, management and education, to ensure a diverse representation of academic backgrounds. Convenience sampling was chosen due to its practicality and efficiency in recruiting participants from an accessible population within a limited time frame. This method is commonly used in exploratory research where the goal is to gain initial insights into a specific population (Fowler, 2009; Leedy et al., 2019). The sample size was determined based on the recommendation of Hair et al. (2017) for PLS-SEM analysis, which suggests a minimum sample size of 200. Out of the 400 distributed questionnaires, 386 were returned. Following a thorough data cleaning process, questionnaires with excessive missing values or those containing inconsistent responses that could potentially compromise the validity of the analysis were excluded. As a result, a total of 359 valid questionnaires were retained for the subsequent analysis.

The demographic breakdown of the participants is presented in Table 1. The sample consisted of a slightly higher proportion of female participants, with most respondents falling within the 18–24 age range. Academic year distribution was relatively even, ensuring representation across different stages of undergraduate study. AI usage in learning varied among participants, with a majority using AI tools sometimes. Notably, only a small proportion reported never or always using AI, suggesting that while AI adoption is growing, its integration into students’ academic routines remains inconsistent across the sample.

Instrumentation

The data collection instrument for this study was a structured questionnaire designed to measure variables based on the AI Literacy scale and UTAUT scale. The questionnaire was divided into three sections: demographic information, AI Literacy, and technology acceptance. The scales used in the study are summarized in Table 2.

AI literacy scale

To measure AI literacy among university students, this study adapted the AI Literacy Scale developed by B. Wang et al. (2023). The original 12-item scale, validated for measuring user competence in AI technologies, was modified to fit the context of higher education. The scale comprises four key constructs: Awareness, Usage, Evaluation, and Ethics. Each construct is assessed using three items. For instance, the Awareness construct includes items that evaluate students’ ability to recognize AI technologies used in their academic tools. The Usage construct assesses proficiency in applying AI tools for coursework and research activities, while the Evaluation construct measures students’ ability to critically assess the capabilities and limitations of AI tools used for academic purposes. The Ethics construct gauges awareness of privacy, security, and ethical considerations associated with using AI in an academic setting. Each item was rated on a 5-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree).

UTAUT scale

The study utilized an adapted version of the UTAUT scale, originally developed by Venkatesh et al. (2003), to assess university students’ intention to adopt AI technologies in their academic activities. The scale measures five key constructs: Performance Expectancy, Effort Expectancy, Social Influence, Facilitating Conditions, and Behavioral Intention. The items in each construct were modified to align with the specific context of higher education. For example, Performance Expectancy items assess students’ perceptions of how AI tools enhance their academic productivity and performance, while Effort Expectancy items evaluate how easy students find AI tools to use for their studies. Social Influence items measure the extent to which students feel that important people, such as peers and educators, support their use of AI technologies. Facilitating Conditions items assess the availability of resources, knowledge, and support needed to use AI tools effectively. The Behavioral Intention items gauge students’ likelihood of using AI tools in their future coursework. Each item was rated on a 5-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree).

Data analysis

This study primarily employed Structural Equation Modeling (SEM) to examine the relationships between AI literacy, UTAUT constructs, and Behavioral Intention to use AI. The analysis was conducted systematically using IBM SPSS and SmartPLS software, following a series of steps to ensure robust and reliable findings. Descriptive statistics were calculated using IBM SPSS to summarize the demographic characteristics of the sample and the main variables. Common Method Variance (CMV) was assessed using Harman’s Single-Factor Test, following the procedures outlined by Hair Jr et al. (2014), where CMV is considered a significant issue if the first factor accounts for more than 50% of the total variance. The measurement model was subsequently assessed for factor loadings, construct reliability, convergent validity, and discriminant validity. Following the guidelines outlined by Hair Jr et al. (2017), factor loadings greater than 0.70 are generally considered acceptable, indicating that the item strongly reflects the construct it is intended to measure. Cronbach’s alpha was calculated to assess internal consistency, with values above 0.70 indicating acceptable reliability. Additionally, composite reliability (CR) was computed to measure the overall reliability of the construct, with values greater than 0.70 deemed acceptable. Convergent validity was evaluated using the average variance extracted (AVE), which should exceed 0.50, indicating that the construct explains more than half of the variance in its indicators (Fornell & Larcker, 1981). Discriminant validity was assessed using two methods: the Fornell-Larcker criterion and the Heterotrait-Monotrait (HTMT) ratio. According to the Fornell-Larcker criterion, discriminant validity is established when the square root of the AVE for each construct is greater than its correlations with any other construct (Fornell & Larcker, 1981). Additionally, the HTMT ratio was calculated, with values below 0.85 indicating adequate discriminant validity (Henseler et al., 2015).
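To make the reliability and convergent validity criteria concrete, the following minimal sketch computes Cronbach’s alpha from raw item scores, and composite reliability and AVE from standardized loadings, using their standard formulas. It is an illustration only: the study’s values were produced by SmartPLS, and the function names and loadings below are hypothetical rather than taken from the study’s data.

```python
import numpy as np

def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) score matrix."""
    k = items.shape[1]
    sum_item_variances = items.var(axis=0, ddof=1).sum()
    total_score_variance = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - sum_item_variances / total_score_variance))

def composite_reliability(loadings: np.ndarray) -> float:
    """Composite reliability (rho_c) from standardized outer loadings."""
    squared_sum = loadings.sum() ** 2
    error_variance = (1 - loadings ** 2).sum()
    return float(squared_sum / (squared_sum + error_variance))

def average_variance_extracted(loadings: np.ndarray) -> float:
    """AVE: mean of the squared standardized loadings."""
    return float(np.mean(loadings ** 2))

# Hypothetical loadings for one four-item construct (not the study's values).
example_loadings = np.array([0.80, 0.85, 0.78, 0.82])
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)

# Thresholds as stated in the text: CR > 0.70 and AVE > 0.50.
print(f"CR = {cr:.3f} (acceptable: {cr > 0.70})")
print(f"AVE = {ave:.3f} (acceptable: {ave > 0.50})")
```

With these illustrative loadings, both statistics clear the thresholds described above; a construct with several loadings near 0.60 would typically fail the AVE criterion even if its composite reliability remained acceptable.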

Once the measurement model was confirmed to be reliable and valid, SEM was employed using SmartPLS to test the hypothesized relationships between AI literacy, UTAUT constructs, and behavioral intention to use AI. The SEM model incorporated both first-order constructs and higher-order constructs. Specifically, AI literacy was conceptualized as a higher-order construct, consisting of four dimensions: Awareness, Usage, Evaluation, and Ethics. Each of these dimensions was measured using three specific indicators, reflecting the multidimensional nature of AI literacy. This approach is consistent with the use of PLS-SEM for complex models that aggregate related first-order factors into a broader latent variable (Sarstedt et al., 2019). In contrast, the UTAUT constructs were modeled as first-order constructs, each represented by four indicators. Both direct effects and mediation effects were analyzed in the model. R² measures the explained variance in the dependent constructs, with values of 0.75, 0.50, and 0.25 interpreted as substantial, moderate, and weak, respectively (Hair Jr et al. 2017). Effect size (f²) was used to assess the impact of each independent variable on the dependent variables. According to Cohen (2013), f² values of 0.02, 0.15, and 0.35 represent small, medium, and large effects, respectively. The strength and direction of the relationships between constructs were assessed using path coefficients (β), with significance tested through bootstrapping with 5000 samples. Path coefficients are considered significant at p < 0.05 (Hair Jr et al. 2017).
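The effect size and bootstrapping steps can be illustrated with a short sketch. The f² statistic is computed from the R² of a model with and without a given predictor, and a mediated (indirect) effect is tested by resampling respondents with replacement and inspecting a percentile confidence interval. The sketch below rests on simplifying assumptions: it uses ordinary least squares on simulated composite scores as a stand-in for the full PLS path model that SmartPLS bootstraps, the "negligible" label for f² below 0.02 is an added convention, and all names and numbers apart from the stated thresholds and the 5000 resamples are hypothetical.

```python
import numpy as np

def f_squared(r2_included: float, r2_excluded: float) -> float:
    """f2 = (R2 with predictor - R2 without predictor) / (1 - R2 with predictor)."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)

def label_f2(f2: float) -> str:
    """Label an effect size using the 0.02 / 0.15 / 0.35 thresholds."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # assumed label for values below 0.02

def indirect_effect(x: np.ndarray, m: np.ndarray, y: np.ndarray) -> float:
    """a*b, where a is the slope of m on x and b is the slope of y on m given x."""
    design_a = np.column_stack([np.ones_like(x), x])
    a = np.linalg.lstsq(design_a, m, rcond=None)[0][1]
    design_b = np.column_stack([np.ones_like(x), x, m])
    b = np.linalg.lstsq(design_b, y, rcond=None)[0][2]
    return float(a * b)

def bootstrap_ci(x, m, y, n_boot: int = 5000, seed: int = 0):
    """95% percentile bootstrap interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    low, high = np.percentile(estimates, [2.5, 97.5])
    return float(low), float(high)

# Simulated composite scores (hypothetical; n matches the 359 valid responses).
rng = np.random.default_rng(42)
x = rng.normal(size=359)                 # e.g. a literacy score
m = 0.6 * x + rng.normal(size=359)       # e.g. a mediator score
y = 0.5 * m + rng.normal(size=359)       # e.g. an intention score

low, high = bootstrap_ci(x, m, y)
print(f"indirect effect 95% CI: [{low:.3f}, {high:.3f}]")
print(label_f2(f_squared(0.359, 0.30)))  # 0.30 is a made-up 'excluded' R2
```

An indirect effect is conventionally treated as significant when the bootstrap interval excludes zero, which mirrors the p < 0.05 criterion applied to the path coefficients.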

Results and findings

Descriptive statistics

The descriptive statistics for the constructs in this study are presented in Table 3. Mean scores on the 5-point Likert scale ranged from 3.380 to 3.744, placing all constructs in the moderate range: AI Literacy (M = 3.405), Performance Expectancy (M = 3.744), Effort Expectancy (M = 3.522), Social Influence (M = 3.665), Facilitating Conditions (M = 3.380), and Behavioral Intention (M = 3.608). Notably, Performance Expectancy was the highest-rated construct, suggesting that students generally perceive AI tools as beneficial for their academic tasks. In contrast, Facilitating Conditions received the lowest score, indicating some perceived limitations in the resources and support available for using AI tools.

Common method variance testing

The data for this study were collected using a self-reported questionnaire, which, due to its single-source nature, may be susceptible to Common Method Variance (CMV). To mitigate potential biases arising from shared measurement methods or item characteristics, several strategies were employed during data collection. Specifically, constructs were placed on separate pages with intervals between them to minimize any bias arising from respondents’ tendency to use similar response patterns across consecutive items, in line with recommendations by Shiau et al. (2019) and Wang et al. (2024). Additionally, Harman’s Single-Factor Test, which involves performing an exploratory factor analysis (EFA) on all items in the study, was applied to assess the presence of common method bias. Principal component analysis (PCA) extracted nine factors, with the first factor accounting for 38.943% of the total variance, well below the 50% threshold suggested by Hair Jr et al. (2014). Based on these results, it was concluded that CMV does not present a significant concern in this study and that the relationships among the constructs are unlikely to be materially distorted by method bias.
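The logic of Harman’s Single-Factor Test can be sketched as follows: all questionnaire items are entered into an unrotated principal component analysis, and the share of total variance captured by the first component is compared with the 50% threshold. This is a minimal illustration on simulated responses, assuming PCA on the item correlation matrix; the function name and data are hypothetical, and the study’s own figure was obtained with IBM SPSS.

```python
import numpy as np

def first_factor_share(responses: np.ndarray) -> float:
    """Share of total variance explained by the first unrotated principal component.

    `responses` is an (n_respondents, n_items) matrix of item scores.
    """
    corr = np.corrcoef(responses, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)  # returned in ascending order
    return float(eigenvalues[-1] / eigenvalues.sum())

rng = np.random.default_rng(0)

# Simulated case 1: six items driven by one common factor (strong method variance).
latent = rng.normal(size=(300, 1))
common_source = latent + 0.5 * rng.normal(size=(300, 6))

# Simulated case 2: six unrelated items (no common factor).
independent = rng.normal(size=(300, 6))

for name, data in [("common source", common_source), ("independent", independent)]:
    share = first_factor_share(data)
    print(f"{name}: first factor share = {share:.1%}, exceeds 50%: {share > 0.50}")
```

Because the eigenvalues of a correlation matrix sum to the number of items, the ratio above is directly comparable to the percentage of total variance reported for the first factor.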

Measurement model

As shown in Table 4, all factor loadings ranged from 0.733 to 0.927, exceeding the recommended threshold of 0.70, which indicates strong indicator reliability (Hair Jr et al. 2017). Composite reliability values (rho_c) ranged from 0.865 to 0.950, and Cronbach’s Alpha values ranged from 0.791 to 0.930, both surpassing the minimum threshold of 0.70, confirming strong internal consistency for all constructs (Hair Jr et al. 2017). Additionally, the average variance extracted (AVE) values ranged from 0.616 to 0.826, all above the 0.50 threshold, suggesting that more than 50% of the variance in the indicators is explained by their respective constructs, demonstrating adequate convergent validity (Fornell & Larcker, 1981).
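As a rough illustration of how these convergent-validity statistics follow from standardized loadings, the sketch below computes AVE and composite reliability (rho_c) for a single construct. The loadings are hypothetical values chosen within the reported 0.733 to 0.927 range; they are not entries from Table 4, and the function names are illustrative.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c) for standardized loadings.

    rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where each indicator's error variance is 1 - loading^2.
    """
    total = sum(loadings) ** 2
    error = sum(1 - l ** 2 for l in loadings)
    return total / (total + error)


# Hypothetical loadings for a four-item construct (not taken from Table 4).
example_loadings = [0.75, 0.80, 0.85, 0.90]

print(f"AVE   = {ave(example_loadings):.3f}")
print(f"rho_c = {composite_reliability(example_loadings):.3f}")
```

With these assumed loadings, both statistics clear the 0.50 and 0.70 thresholds cited above, which is the pattern expected whenever every loading exceeds roughly 0.71.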

Discriminant validity was assessed using both the Fornell-Larcker criterion and the HTMT ratio. As shown in Table 5, the square root of the AVE for each construct was higher than the correlation with other constructs, confirming discriminant validity (Fornell & Larcker, 1981). The HTMT ratio for all constructs was below 0.85, ranging from 0.327 to 0.702, indicating that the constructs are distinct from one another (Henseler et al., 2015).

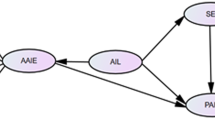

Structural model evaluation

The structural model was evaluated using the partial least squares structural equation modeling (PLS-SEM) approach, with the aim of analyzing both the direct and mediating relationships between constructs. The path model in Fig. 2 visually illustrates the relationships between the constructs, providing a clear representation of how AI Literacy influences the UTAUT constructs, which in turn influence Behavioral Intention to use AI.

R squared (R2) values

The R2 values indicate the amount of variance explained by the independent variables in the dependent variables. As shown in Table 6, the model demonstrated acceptable levels of variance explained across constructs. Behavioral Intention had an R2 of 0.359, indicating that 35.9% of the variance in Behavioral Intention is explained by the predictors. Performance Expectancy, Effort Expectancy, and Social Influence had R2 values of 0.340, 0.342, and 0.257, respectively, demonstrating moderate explanatory power. Facilitating Conditions, however, exhibited a lower R2 of 0.108, suggesting that the model explains less variance for this construct.

Effect sizes (f2)

The effect size (f2) measures the impact of each exogenous construct on the endogenous constructs, with values of 0.02, 0.15, and 0.35 conventionally marking small, medium, and large effects (Cohen, 2013). As presented in Table 7, AI Literacy shows large effects on Effort Expectancy (f2 = 0.520) and Performance Expectancy (f2 = 0.515), a medium effect on Social Influence (f2 = 0.346), and a small effect on Facilitating Conditions (f2 = 0.121). The effects of Effort Expectancy, Performance Expectancy, and Social Influence on Behavioral Intention were small (f2 = 0.023, 0.047, and 0.059, respectively). The effect of Facilitating Conditions on Behavioral Intention is negligible (f2 = 0.003), implying that Facilitating Conditions contribute little to predicting Behavioral Intention in this context.
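The link between the R2 and f2 values can be checked with a short calculation. The sketch below assumes that AI Literacy is the only predictor of each UTAUT construct, as the path model describes, so that removing it leaves an R2 of zero and Cohen's formula reduces to f2 = R2 / (1 - R2). The function name is illustrative. The f2 values for the predictors of Behavioral Intention cannot be reproduced this way, because the R2 obtained with each predictor excluded is not reported.

```python
def f_squared(r2_included, r2_excluded=0.0):
    """Cohen's f2: change in R2 when a predictor is removed, scaled by unexplained variance."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


# R2 values reported for the constructs predicted by AI Literacy alone.
r2_values = {
    "Performance Expectancy": 0.340,
    "Effort Expectancy": 0.342,
    "Social Influence": 0.257,
    "Facilitating Conditions": 0.108,
}

for construct, r2 in r2_values.items():
    print(f"{construct}: f2 = {f_squared(r2):.3f}")
```

Under this assumption, the computed values agree with the f2 values reported for the AI Literacy paths.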

Path coefficients and hypothesis testing

The bootstrapping technique was used with 5000 sub-samples to assess the significance of the relationships. Path coefficients were analyzed to test the hypothesized relationships, including both direct and mediating effects. Table 8 provides a summary of the path coefficients (β-values), standard deviations (STD), t-values, and p-values, along with the results of hypothesis testing.
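The idea behind the bootstrap t-values can be illustrated with a deliberately simplified sketch. A full PLS-SEM bootstrap re-estimates the entire model on every resample; the example below instead bootstraps a single standardized path between two synthetic variables. The data, the seed, and the function names are assumptions for illustration and do not come from the study.

```python
import random
import statistics


def standardized_slope(x, y):
    """Pearson correlation, which equals the standardized path coefficient
    when the outcome has a single predictor."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_t(x, y, n_boot=5000, seed=0):
    """Return (estimate, bootstrap standard deviation, t-value) for the path x -> y."""
    rng = random.Random(seed)
    n = len(x)
    estimate = standardized_slope(x, y)
    draws = []
    for _ in range(n_boot):
        # Resample respondents with replacement, keeping x and y paired.
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(standardized_slope([x[i] for i in idx], [y[i] for i in idx]))
    std = statistics.stdev(draws)
    return estimate, std, estimate / std


# Synthetic scores for 359 respondents (illustrative, not the study's data).
data_rng = random.Random(42)
x = [data_rng.gauss(0, 1) for _ in range(359)]
y = [0.6 * a + data_rng.gauss(0, 0.8) for a in x]

beta, std, t = bootstrap_t(x, y)
print(f"beta = {beta:.3f}, STD = {std:.3f}, t = {t:.3f}")
```

The t-value is the original estimate divided by the standard deviation of the bootstrap estimates, which corresponds to the β, STD, and t columns summarized in Table 8.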

The analysis of direct relationships demonstrated that AI Literacy had significant positive effects on Performance Expectancy (β = 0.583, t = 11.809, p < 0.001), Effort Expectancy (β = 0.585, t = 12.066, p < 0.001), Social Influence (β = 0.507, t = 9.188, p < 0.001), and Facilitating Conditions (β = 0.328, t = 6.149, p < 0.001), indicating that AI Literacy is a strong predictor of these UTAUT constructs. Furthermore, Performance Expectancy (β = 0.243, t = 3.640, p < 0.001), Effort Expectancy (β = 0.154, t = 2.656, p = 0.008), and Social Influence (β = 0.278, t = 4.293, p < 0.001) were found to significantly predict Behavioral Intention. However, the relationship between Facilitating Conditions and Behavioral Intention was not statistically significant (β = 0.047, t = 0.898, p = 0.369).

In terms of mediation, AI Literacy exhibited significant indirect effects on Behavioral Intention through Performance Expectancy (β = 0.141, p = 0.001), Effort Expectancy (β = 0.090, p = 0.011), and Social Influence (β = 0.141, p < 0.001), confirming the mediating roles of these constructs. However, the mediating role of Facilitating Conditions was not supported (β = 0.016, p = 0.400).
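Each indirect effect is the product of the two direct paths that make up the mediation chain. The sketch below multiplies the rounded coefficients reported in the text; because the reported indirect effects were presumably computed from unrounded estimates, small differences in the third decimal place are to be expected. The variable names are illustrative.

```python
# Direct paths reported in the text: AI Literacy -> mediator, mediator -> Behavioral Intention.
literacy_to_mediator = {
    "Performance Expectancy": 0.583,
    "Effort Expectancy": 0.585,
    "Social Influence": 0.507,
    "Facilitating Conditions": 0.328,
}
mediator_to_intention = {
    "Performance Expectancy": 0.243,
    "Effort Expectancy": 0.154,
    "Social Influence": 0.278,
    "Facilitating Conditions": 0.047,
}
reported_indirect = {
    "Performance Expectancy": 0.141,
    "Effort Expectancy": 0.090,
    "Social Influence": 0.141,
    "Facilitating Conditions": 0.016,
}

indirect = {
    name: literacy_to_mediator[name] * mediator_to_intention[name]
    for name in literacy_to_mediator
}

for name, value in indirect.items():
    print(f"{name}: a*b = {value:.4f} (reported {reported_indirect[name]:.3f})")
```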

These findings support most of the hypothesized relationships, except for the link between Facilitating Conditions and Behavioral Intention, which was not significant for either direct or mediating effects.

Discussion

The findings of this study reveal a complex interaction between AI Literacy and the core UTAUT constructs (Performance Expectancy, Effort Expectancy, Social Influence, and Facilitating Conditions) in predicting Behavioral Intention to adopt AI, offering a deeper understanding of the cognitive and social dynamics driving AI adoption among university students. While each construct plays a distinct role, their interplay reveals broader insights into how students approach AI adoption.

AI literacy as a central catalyst

One of the most significant contributions of this study is the centrality of AI Literacy in shaping how university students perceive, engage with, and adopt AI technologies. The results show that AI literacy significantly affects Performance Expectancy, Effort Expectancy, Social Influence, and, to a lesser extent, Facilitating Conditions, which in turn shape the behavioral intention to adopt AI technologies among Chinese university students. The strongest relationships observed in the model are those between AI literacy and Performance Expectancy (β = 0.583), as well as between AI literacy and Effort Expectancy (β = 0.585). These findings are highly significant and suggest that students who are more literate in AI are better able to see its utility in improving their performance and find it easier to use. This aligns with prior findings, where a solid understanding of AI technology can enhance users’ confidence in its benefits and reduce the perceived effort required to use it (Jang, 2024; C. Wang et al., 2024). The strong indirect effect of AI literacy on Behavioral Intention through Performance Expectancy and Effort Expectancy reinforces that literacy in advanced technologies like AI not only lowers psychological and cognitive barriers but also fosters a more positive attitude towards technology adoption.

A particularly notable finding in this study is the strong relationship between AI literacy and Social Influence (β = 0.507), which in turn significantly impacts Behavioral Intention. This result is intriguing because it suggests that AI literacy can directly shape how students perceive the social norms and pressures surrounding AI adoption. This aligns with the Theory of Social Information Processing, which posits that individuals with greater knowledge and cognitive resources are better able to decode and interpret social signals, leading to a heightened awareness of peer behaviors and perceived social expectations (Walther, 2008). When students are more knowledgeable about AI, they may feel more confident in engaging with AI-related discussions and more aware of the social dynamics that promote or discourage AI use within their peer groups. This heightened awareness and understanding likely make them more sensitive to the influence of peers, instructors, and broader academic networks regarding AI adoption.

While AI literacy has a significant positive impact on Facilitating Conditions (β = 0.328), the relationship is weaker compared to other constructs such as Performance Expectancy, Effort Expectancy, and Social Influence, and does not directly influence Behavioral Intention. Even though Facilitating Conditions do not directly impact Behavioral Intention in this model, the influence of AI literacy on Facilitating Conditions remains critical for understanding the broader dynamics of AI adoption. Higher AI literacy enhances students’ awareness and understanding of the available technological infrastructure, institutional resources, and support systems, enabling them to identify and utilize these resources more effectively. For instance, students with higher AI literacy are more likely to recognize the availability of AI-specific resources, such as university-provided software licenses, cloud storage, or access to specialized AI hardware, which might otherwise go unnoticed. This means that while Facilitating Conditions do not directly drive AI adoption, AI literacy enables students to fully exploit the available resources, making Facilitating Conditions more impactful for students who are well-versed in AI technologies.

These findings demonstrate that AI literacy has a significant impact on students’ Behavioral Intention to adopt AI technologies through multiple pathways, which carries several important implications for educational institutions aiming to enhance AI adoption among students. For example, universities should prioritize developing comprehensive AI literacy programs that go beyond technical training. Curricula should integrate ethical considerations, the societal impacts of AI, and real-world applications. Additionally, hands-on experiences with AI tools should be offered to foster a deeper understanding, which in turn will increase students’ likelihood of adoption. Furthermore, fostering a continuous learning mindset by offering regular updates and training on the latest AI advancements will ensure that students remain proficient and motivated to engage with AI, thereby increasing their intention to adopt AI.

Social influence as the strongest predictor

Among the UTAUT constructs, the strongest predictor of Behavioral Intention was Social Influence (β = 0.278), emphasizing the critical role that peer influence and social norms play in the context of technology adoption among Chinese students. This finding diverges from some studies in individualistic cultures, which often downplay the role of social influence in favor of individual factors like performance and effort expectancy (Wong et al., 2013; Andrews et al., 2021). However, it is consistent with research on collectivist societies, where social influence often outweighs individual perceptions of ease of use or usefulness (Gao et al., 2022). This highlights the unique cultural factors influencing technology adoption in China, suggesting that future AI adoption strategies should emphasize community engagement and peer-driven initiatives to encourage broader use. Educational institutions should leverage peer influence to drive AI adoption by implementing peer-led AI initiatives and creating opportunities for collaborative AI projects. These strategies can capitalize on the strong role of social influence by enabling AI-literate students to act as opinion leaders, encouraging their peers to adopt AI through social modeling and group-based learning. Additionally, institutions can launch social campaigns that normalize AI use, highlighting successful AI adopters to establish AI technologies as a social norm within the student community. By fostering a culture where peer endorsement and collaboration drive AI adoption, institutions can effectively use social dynamics to encourage wider engagement with AI technologies.

Performance expectancy as a key motivator

Performance Expectancy emerged as a strong predictor of Behavioral Intention (β = 0.243). The prominence of Performance Expectancy in this model highlights that students are primarily motivated to adopt AI technologies based on their perception of utility and tangible benefits—specifically, how AI can improve their productivity, learning outcomes, or problem-solving capabilities. This finding aligns with studies by Teo et al. (2019) and Wang et al. (2022), who also found that performance-related benefits are paramount in shaping technology adoption in educational contexts. AI’s potential to support personalized learning, automate data processing, and assist with complex academic tasks makes it a compelling resource for students seeking better academic results. To leverage Performance Expectancy in promoting AI adoption, universities should focus on showcasing how AI can enhance academic performance through practical, measurable applications. Integrating AI into the curriculum through assignments and projects that require its use for data analysis, research, or problem-solving will allow students to experience tangible academic benefits. Additionally, institutions should provide AI training that emphasizes specific academic improvements, such as faster research processes, improved accuracy in assignments, or personalized learning tools that cater to individual student needs. By demonstrating AI’s potential to improve academic outcomes, universities can motivate students to adopt these technologies as essential tools for achieving academic excellence and professional readiness.

Effort expectancy as a secondary driver of AI adoption

It is worth noting that while Effort Expectancy plays a significant role, its effect size (f2 = 0.024) is relatively small compared to Performance Expectancy (f2 = 0.047) and Social Influence (f2 = 0.064). This suggests that while students value ease of use when deciding to adopt AI technologies, it plays a secondary role compared to other factors. One possible reason is that in higher education settings, students might be willing to invest time and effort into learning more complex technologies like AI if they perceive significant benefits in terms of performance. Therefore, while ease of use is important, it may not be the most critical factor once students recognize AI’s broader potential. This finding resonates with previous studies that have found ease of use to be less critical once users have acquired sufficient knowledge and familiarity with the technology (Peng, 2022). Even so, to promote AI adoption, universities should work to minimize perceived complexity and ensure AI tools are accessible and easy to use. This can be achieved by providing comprehensive tutorials, intuitive AI platforms, and low-barrier entry projects that help students get started with AI quickly and confidently. Institutions should offer user-friendly AI applications and guided learning experiences that reduce the initial effort required to engage with AI, lowering resistance to adoption and encouraging students to explore these technologies in their academic work.

Reassessing the role of facilitating conditions

A surprising result was the minimal role of Facilitating Conditions in predicting AI adoption (β = 0.047), indicating that students’ external environment, such as available infrastructure or technical support, might not be a critical pathway linking AI Literacy to adoption. This finding contrasts with previous studies in the field, where Facilitating Conditions have been identified as a significant predictor of technology adoption. For example, Taposh et al. (2024) demonstrated that facilitating conditions, including the availability of AI tools, training, and institutional support, were a significant predictor of AI adoption in Bangladeshi universities. Similarly, Al-Zahrani & Alasmari (2025) found that infrastructure and technical support were essential to the integration of AI in higher education across the Middle East and North Africa (MENA) region, with institutions investing in these areas to encourage adoption and use. The discrepancy observed in this study, where facilitating conditions had minimal predictive power, may be attributable to a range of contextual factors. In the context of higher education in China, students may already have consistent access to the resources required for AI adoption, making facilitating conditions a less critical determinant. The increasing availability of personal devices, such as smartphones, tablets, and laptops, has substantially diminished the reliance on external support systems, such as university-provided devices, software, or technical assistance. Students today can access AI technologies directly on their personal devices, bypassing the need for institutional resources or support. This stands in contrast to environments where students may lack prior exposure to such technologies, thus necessitating the implementation of comprehensive facilitating conditions to support their initial engagement with AI tools.

Furthermore, as students become more proficient with technology, they are increasingly likely to prioritize their personal assessment of AI’s utility and ease of use over the availability of institutional support. This finding is echoed by Teo et al. (2023), who similarly found that facilitating conditions were not a significant factor influencing technology use among Malaysian university students, reinforcing the idea that external support may be less critical in certain educational contexts. The minimal role of facilitating conditions in this study may point to a growing trend toward self-sufficiency in technology adoption, particularly among younger, digitally literate populations who are accustomed to navigating digital environments independently. This perspective invites a reevaluation of strategies aimed at promoting AI adoption in higher education: instead of solely investing in improving facilitating conditions, educational institutions might benefit more from initiatives that boost AI literacy, thereby fostering a more self-sufficient and confident user base that requires less external support to engage with AI technologies.

Implications

The findings of this study underscore the need for a multifaceted approach to promoting AI adoption in higher education. Central to this strategy is the development of comprehensive AI literacy programs that move beyond mere technical training. Universities should focus on integrating AI education that combines technical proficiency with a strong understanding of AI’s practical applications, ethical considerations, and societal impacts (Sharma et al., 2024). This holistic approach to AI literacy will not only improve students’ ability to engage with AI but will also foster a deeper, more sustained engagement, equipping them to effectively navigate and leverage AI technologies in diverse contexts. This approach aligns with a growing body of literature advocating for multidimensional AI literacy in higher education. Hazari (2024) highlights the need for AI literacy courses that combine ethical awareness with practical skills, aiming to prepare students for responsible AI usage. Similarly, Selvaratnam & Venaruzzo (2023) emphasize the importance of integrating AI governance and bias mitigation strategies into AI literacy curricula to address the ethical challenges posed by AI adoption. By focusing on these aspects, universities can create a foundation that facilitates the effective adoption of AI.

The study also highlights the critical role of Social Influence in driving AI adoption. Universities should harness the power of peer dynamics by creating peer-led initiatives and mentorship programs where AI-literate students can serve as role models and influencers within their academic communities. These peer-driven networks can significantly enhance adoption, as students are more likely to adopt AI when they see its success and endorsement within their social circles (Al-kfairy, 2024). Such initiatives can create a culture of AI adoption, where peer validation encourages broader and deeper engagement with AI technologies. The importance of social influence in AI adoption is particularly relevant in the context of higher education in China, where collective social behaviors and peer interactions significantly shape individual decision-making (Venkatesh & Zhang, 2010; Huang et al., 2023). This social context further underscores the value of peer-led initiatives, which can cultivate a supportive ecosystem that encourages widespread and sustained engagement with AI technologies.

Moreover, the strong influence of Performance Expectancy indicates that students are motivated by AI’s ability to enhance their academic and professional outcomes. Therefore, universities must strategically integrate AI into curricula and research in ways that clearly demonstrate its performance-enhancing potential. By showcasing AI’s tangible benefits, such as improving research productivity, streamlining learning processes, and supporting complex problem-solving, universities can illustrate the practical value of AI, positioning it as a useful tool for academic success. This aligns with ongoing discussions in the literature about strategies to strengthen performance expectancy in higher education, recognizing its crucial role in fostering AI adoption. Lin & Chen (2024) found that when students observe practical AI applications, such as its role in automating research tasks or enhancing problem-solving, they are more likely to perceive AI as valuable and engage with it meaningfully. Similarly, Southworth et al. (2023) emphasize that universities should move beyond theoretical AI education and incorporate hands-on learning experiences, allowing students to interact with AI tools in ways that directly impact their academic work. In addition, research by Kim et al. (2022) suggests that when faculty actively use AI in their own teaching and research, it reinforces students’ belief in AI’s credibility and usefulness. Faculty-led AI-driven projects can serve as a model for AI adoption, demonstrating its practical benefits in real academic settings and encouraging students to explore AI-based learning and research strategies.

While Effort Expectancy played a secondary role, the importance of reducing perceived complexity should not be underestimated. Universities must provide user-friendly AI tools and ensure that students have access to comprehensive training resources that simplify the adoption process. This includes offering guided tutorials, workshops, and structured learning pathways that minimize the barriers associated with adopting new technologies (Al-Abdullatif & Alsubaie, 2024). In line with this, existing literature emphasizes the importance of addressing usability concerns to enhance effort expectancy and support AI adoption. For instance, Ayanwale & Ndlovu (2024) highlight that intuitive AI tools and tailored training programs significantly boost students’ willingness to engage with AI by making it more accessible. Additionally, research by Shao et al. (2024) suggests that simplified AI interfaces and practical, hands-on experiences are critical to fostering effort expectancy and building users’ confidence in using AI technologies. By lowering these entry barriers, universities can ensure that students, regardless of their initial skill level, feel confident in engaging with AI, thereby fostering broader adoption and integration of AI technologies in academic settings.

In sum, promoting AI adoption in higher education requires an integrated strategy that focuses on enhancing AI literacy, leveraging peer influence, showcasing performance benefits, and ensuring ease of use. This comprehensive approach will not only encourage widespread AI adoption but also equip students with the skills and confidence needed to navigate the increasingly AI-driven academic and professional landscapes.

Limitations and future research directions

While this study provides valuable insights into the factors influencing AI adoption among university students, several limitations should be acknowledged. First, the study was conducted within the context of Chinese higher education, a collectivist culture where peer influence plays a significant role in decision-making. As such, the findings, particularly the strong role of social influence, may not be fully generalizable to more individualistic cultures, where personal motivation and individual perceptions of technology could have a more pronounced impact. Future research should explore AI adoption in different cultural contexts to determine the variability of these findings across cultures.

Second, the study utilized convenience sampling, which was chosen due to its feasibility and accessibility, allowing for efficient participant recruitment within time and resource constraints. However, this method introduces notable limitations in terms of sample representativeness and generalizability. To address this, future studies should consider using random sampling or other more rigorous sampling methods to ensure a broader and more diverse representation. This would improve the generalizability of findings across different student demographics, disciplines, and levels of exposure to AI technologies.

Third, the study’s use of cross-sectional data collected through a single survey inherently limits the ability to infer causal relationships. Although Structural Equation Modeling (SEM) is effective in identifying associations between variables, it does not establish causality. Given the complexity of the variables involved, such as AI literacy and behavioral intention, future research could benefit from longitudinal studies, providing stronger evidence of causal relationships and offering insights into how students’ intentions to adopt AI evolve as they gain more exposure and experience with the technology.

Fourth, the study’s reliance on quantitative survey data constrains the depth of insights into the subjective experiences of students. While the survey captured key constructs influencing adoption, it does not provide a rich understanding of the personal motivations, challenges, and contextual factors that may influence students’ decisions to adopt AI. Future research should incorporate qualitative methods, such as interviews and focus groups, to uncover more nuanced and personal perspectives on AI adoption. Furthermore, employing Qualitative Comparative Analysis (QCA) could offer a valuable approach in understanding how different configurations of factors, such as cultural influences, personal motivations, and technological perceptions, interact to influence AI adoption. QCA allows for the identification of complex relationships and configurations that might be overlooked by traditional quantitative methods, thus offering a more nuanced view of the adoption process.

Lastly, while the study focused on AI literacy and the key constructs of the UTAUT model, other factors influencing AI adoption were not explored. Variables such as attitudes toward technology, anxiety, or perceived risks could also play a significant role in shaping AI adoption, especially given the complexity and evolving nature of AI technologies. Including these factors in future research could offer a more comprehensive understanding of the dynamics driving AI adoption in higher education settings.

Conclusion

This study provides new insights into the dynamic relationship between AI Literacy and UTAUT constructs in shaping university students’ Behavioral Intention to adopt AI. The study confirms that AI Literacy is a foundational enabler of AI adoption through its influence on Performance Expectancy, Effort Expectancy, and Social Influence. Social influence emerged as the strongest predictor, showing how peer dynamics, amplified by AI literacy, play a crucial role in adoption. Performance expectancy remains a key driver, as students value AI for its potential to enhance academic performance, while effort expectancy was less critical, reflecting students’ willingness to engage with AI despite initial complexity. Facilitating conditions had a non-significant direct effect on Behavioral Intention, indicating that the availability of external resources and support may not be as influential in driving AI adoption compared to individual and social factors. These findings add new knowledge to the understanding of AI adoption within the rapidly evolving landscape of AI in education, providing practical implications for educational institutions aiming to promote AI adoption. Future research could expand on these insights by exploring additional factors that shape AI adoption among university students in different contexts, thereby enabling a more comprehensive understanding of the forces at play.

Data availability

Anonymized data supporting the findings of this study are available from the corresponding author upon reasonable request. Requests will be reviewed to ensure compliance with institutional and ethical guidelines for protecting participant privacy.

References

Al-Abdullatif AM, Alsubaie MA (2024) ChatGPT in learning: assessing students’ use intentions through the lens of perceived value and the influence of AI literacy. Behav Sci 14(9). https://doi.org/10.3390/bs14090845

Al-kfairy M (2024) Factors impacting the adoption and acceptance of ChatGPT in educational settings: a narrative review of empirical studies. Appl Syst Innov 7(6). https://doi.org/10.3390/asi7060110

Al-Zahrani AM, Alasmari TM (2025) A comprehensive analysis of AI adoption, implementation strategies, and challenges in higher education across the Middle East and North Africa (MENA) region. Educ Inf Technol. https://doi.org/10.1007/s10639-024-13300-y

Allen LK, Kendeou P (2024) ED-AI lit: an interdisciplinary framework for AI literacy in education. Policy Insights Behav Brain Sci 11(1):3–10. https://doi.org/10.1177/23727322231220339

Alzahrani L (2023) Analyzing students’ attitudes and behavior toward artificial intelligence technologies in higher education. Int J Recent Technol Eng (IJRTE) 11(6):65–73. https://doi.org/10.35940/ijrte.F7475.0311623

Andrews JE, Ward H, Yoon J (2021) UTAUT as a model for understanding intention to adopt AI and related technologies among librarians. J Acad Librariansh 47(6):102437. https://doi.org/10.1016/j.acalib.2021.102437

Ayanwale MA, Ndlovu M (2024) Investigating factors of students’ behavioral intentions to adopt chatbot technologies in higher education: Perspective from expanded diffusion theory of innovation. Comput Hum Behav Rep. 14:100396. https://doi.org/10.1016/j.chbr.2024.100396

Budhathoki T, Zirar A, Njoya ET, Timsina A (2024) ChatGPT adoption and anxiety: a cross-country analysis utilising the unified theory of acceptance and use of technology (UTAUT). Stud High Educ 49(5):831–846. https://doi.org/10.1080/03075079.2024.2333937

Cabrera-Sánchez J-P, Villarejo-Ramos ÁF, Liébana-Cabanillas F, Shaikh AA (2021) Identifying relevant segments of AI applications adopters–Expanding the UTAUT2’s variables. Telemat Inform 58:101529. https://doi.org/10.1016/j.tele.2020.101529

Cao G, Duan Y, Edwards JS, Dwivedi YK (2021) Understanding managers’ attitudes and behavioral intentions towards using artificial intelligence for organizational decision-making. Technovation 106:102312

Cetindamar D, Kitto K, Wu M, Zhang Y, Abedin B, Knight S (2022) Explicating AI literacy of employees at digital workplaces. IEEE Trans Eng Manag 71:810–823. https://doi.org/10.1109/TEM.2021.3138503

Chatterjee S, Bhattacharjee KK (2020) Adoption of artificial intelligence in higher education: A quantitative analysis using structural equation modelling. Educ Inf Technol 25:3443–3463. https://doi.org/10.1007/s10639-020-10159-7

Chatterjee S, Rana NP, Khorana S, Mikalef P, Sharma A (2023) Assessing organizational users’ intentions and behavior to AI integrated CRM systems: A meta-UTAUT approach. Inf Syst Front 25(4):1299–1313. https://doi.org/10.1007/s10796-021-10181-1

Chiu TKF, Ahmad Z, Ismailov M, Sanusi IT (2024) What are artificial intelligence literacy and competency? A comprehensive framework to support them. Comput Educ Open 6:100171. https://doi.org/10.1016/j.caeo.2024.100171

Cohen J (2013) Statistical power analysis for the behavioral sciences. Routledge. https://doi.org/10.4324/9780203771587

Hair Jr JF, Sarstedt M, Hopkins L, Kuppelwieser VG (2014) Partial least squares structural equation modeling (PLS-SEM). Eur Bus Rev 26(2):106–121. https://doi.org/10.1108/EBR-10-2013-0128

Fornell C, Larcker DF (1981) Evaluating structural equation models with unobservable variables and measurement error. J Mark Res 18(1):39–50. https://doi.org/10.2307/3151312

Foroughi B, Madugoda Gunaratnege S, Iranmanesh M, Khanfar A, Ghobakhloo M, Annamalai N, Naghmeh Abbaspour B (2023) Determinants of Intention to Use ChatGPT for Educational Purposes: Findings from PLS-SEM and fsQCA. Int J Hum Comput Interact 1–21. https://doi.org/10.1080/10447318.2023.2226495

Fowler F (2009) Survey Research Methods (4th ed.). SAGE Publications, Inc. https://doi.org/10.4135/9781452230184

Gao L, Vongurai R, Phothikitti K, Kitcharoen S (2022) Factors influencing university students’ attitude and behavioral intention towards online learning platform in Chengdu, China. ABAC ODI J Vision Action Outcome 9(2):21–37. https://doi.org/10.14456/abacodijournal.2022.2

Hair J, Hollingsworth CL, Randolph AB, Chong AYL (2017) An updated and expanded assessment of PLS-SEM in information systems research. Ind Manag Data Syst 117(3):442–458

Hair Jr JF, Matthews LM, Matthews RL, Sarstedt M (2017) PLS-SEM or CB-SEM: updated guidelines on which method to use. Int J Multivar Data Anal 1(2):107–123. https://doi.org/10.1504/IJMDA.2017.087624

Hazari S (2024) Justification and Roadmap for Artificial Intelligence (AI) Literacy Courses in Higher Education. J Educ Res Practice

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci 43:115–135. https://doi.org/10.1007/s11747-014-0403-8

Heyder T, Posegga O (2021) Extending the foundations of AI literacy. ICIS

Huang F, Teo T, Zhao X (2023) Examining factors influencing Chinese ethnic minority English teachers’ technology adoption: an extension of the UTAUT model. Comput Assist Lang Learn 1–23. https://doi.org/10.1080/09588221.2023.2239304

Jain R, Garg N, Khera SN (2022) Adoption of AI-enabled tools in social development organizations in India: An extension of UTAUT model. Front Psychol 13:893691. https://doi.org/10.3389/fpsyg.2022.893691

Jang M (2024) AI literacy and intention to use text-based GenAI for learning: the case of business students in Korea. Informatics 11(3):54. https://doi.org/10.3390/informatics11030054

Kasinidou M (2023) Promoting AI literacy for the public. Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 2, 1237. https://doi.org/10.1145/3545947.3573292

Kim J, Lee H, Cho YH (2022) Learning design to support student-AI collaboration: perspectives of leading teachers for AI in education. Educ Inf Technol 27(5):6069–6104. https://doi.org/10.1007/s10639-021-10831-6

Kimiafar K, Sarbaz M, Tabatabaei SM, Ghaddaripouri K, Mousavi AS, Mehneh MR, Baigi SFM (2023) Artificial intelligence literacy among healthcare professionals and students: a systematic review. Front Health Inform 12:168. https://doi.org/10.30699/fhi.v12i0.524

Kong S-C, Cheung WM-Y, Zhang G (2021) Evaluation of an artificial intelligence literacy course for university students with diverse study backgrounds. Comput Educ: Artif Intell 2:100026. https://doi.org/10.1016/j.caeai.2021.100026

Leedy PD, Ormrod JE, Johnson LR (2019) Practical Research: Planning and Design. Pearson

Lin H-C, Ho C-F, Yang H (2022) Understanding adoption of artificial intelligence-enabled language e-learning system: An empirical study of UTAUT model. Int J Mob Learn Organ 16(1):74–94. https://doi.org/10.1504/IJMLO.2022.119966

Lin H, Chen Q (2024) Artificial intelligence (AI)-integrated educational applications and college students’ creativity and academic emotions: students and teachers’ perceptions and attitudes. BMC Psychol 12(1):487. https://doi.org/10.1186/s40359-024-01979-0

Long D, Magerko B (2020) What is AI literacy? Competencies and design considerations. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–16. https://doi.org/10.1145/3313831.3376727

Luckin R, Holmes W (2016) Intelligence unleashed: An argument for AI in education. https://www.researchgate.net/publication/299561597_Intelligence_Unleashed_An_argument_for_AI_in_Education

Müller VC (2020) Ethics of artificial intelligence and robotics. https://plato.stanford.edu/archives/fall2023/entries/ethics-ai/

Nadella GS, Meduri SS, Gonaygunta H, Podicheti S (2023) Understanding the Role of Social Influence on Consumer Trust in Adopting AI Tools. Int J Sustain Dev Comput Sci 5(2):1–18. https://ijsdcs.com/index.php/ijsdcs/article/view/516

Ng DTK, Leung JKL, Chu KWS, Qiao MS (2021) AI literacy: definition, teaching, evaluation and ethical issues. Proc Assoc Inf Sci Technol 58(1):504–509. https://doi.org/10.1002/pra2.487

Peng Z (2022) An empirical study of mobile teaching: Applying the UTAUT model to study University teachers’ mobile teaching behavior. Curric Teach Methodol 5:55–70. https://doi.org/10.23977/curtm.2022.050510

Rizvi AT, Haleem A, Bahl S, Javaid M (2021) Artificial intelligence (AI) and its applications in Indian manufacturing: a review. Current Advances in Mechanical Engineering: Select Proceedings of ICRAMERD 2020, 825–835. https://doi.org/10.1007/978-981-33-4795-3_76

Rütti-Joy O, Winder G, Biedermann H (2023) Building AI literacy for sustainable teacher education. Z Für Hochschulentwicklung 18(4):175–189. https://doi.org/10.21240/zfhe/18-04/10

Saad AM, Mohamad MB, Tsong CK (2021) Behavioural intention of lecturers towards mobile learning and the moderating effect of digital literacy in saudi arabian universities. Int Trans J Eng, Manag, Appl Sci Technol 12(1):1–9. https://doi.org/10.14456/ITJEMAST.2021.2

Sarstedt M, Hair J, Cheah J, Becker J-M, Ringle C (2019) How to specify, estimate, and validate higher-order constructs in PLS-SEM. Australas Mark J 27:197–211. https://doi.org/10.1016/j.ausmj.2019.05.003

Selvaratnam R, Venaruzzo L (2023) Governance of artificial intelligence and data in Australasian higher education: A snapshot of policy and practice. An ACODE Whitepaper. Australasian Council on Open, Distance and eLearning (ACODE), Canberra, Australia. https://doi.org/10.14742/apubs.2023.717

Shao C, Nah S, Makady H, McNealy J (2024) Understanding User Attitudes Towards AI-Enabled Technologies: An Integrated Model of Self-Efficacy, TAM, and AI Ethics. Int J Hum Comput Interact 1–13. https://doi.org/10.1080/10447318.2024.2331858

Sharma R, Kumar P, Singh DK, Suri D, Rajput P, Kumar S (2024) The Intersection of AI, Ethics, and Education: A Bibliometric Analysis. 2024 International Conference on Computing and Data Science (ICCDS), 1–6. https://doi.org/10.1109/ICCDS60734.2024.10560363

Shiau W-L, Sarstedt M, Hair JF (2019) Internet research using partial least squares structural equation modeling (PLS-SEM). Internet Res 29(3):398–406. https://doi.org/10.1108/IntR-10-2018-0447

Southworth J, Migliaccio K, Glover J, Glover J, Reed D, McCarty C, Brendemuhl J, Thomas A (2023) Developing a model for AI Across the curriculum: Transforming the higher education landscape via innovation in AI literacy. Comput Educ: Artif Intell 4:100127. https://doi.org/10.1016/j.caeai.2023.100127

Stolpe K, Hallström J (2024) Artificial intelligence literacy for technology education. Comput Educ Open 6:100159. https://doi.org/10.1016/j.caeo.2024.100159

Tanantong T, Wongras P (2024) A UTAUT-based framework for analyzing users’ intention to adopt artificial intelligence in human resource recruitment: a case study of Thailand. Systems 12(1):28. https://doi.org/10.3390/systems12010028

Tang X, Yuan Z, Qu S (2025) Factors influencing university students’ behavioural intention to use generative artificial intelligence for educational purposes based on a revised UTAUT2 Model. J Comput Assist Learn 41(1):e13105. https://doi.org/10.1111/jcal.13105

Taposh Y, Neogy K, Akter K, Ali Y, Neogy T (2024) Determinants of AI Adoption Intent among Bangladeshi Students: An Extended UTAUT Model Analysis. https://www.researchgate.net/publication/385510923_Determinants_of_AI_Adoption_Intent_among_Bangladeshi_Students_An_Extended_UTAUT_Model_Analysis

Teo T, Moses P, Cheah PK, Huang F, Tey TCY (2023) Influence of achievement goal on technology use among undergraduates in Malaysia. Interact Learn Environ 1–18. https://doi.org/10.1080/10494820.2023.2197957

Teo T, Zhou M, Fan ACW, Huang F (2019) Factors that influence university students’ intention to use Moodle: a study in Macau. Educ Technol Res Dev 67(3):749–766. https://doi.org/10.1007/s11423-019-09650-x

Tzirides AO (Olnancy), Zapata G, Kastania NP, Saini AK, Castro V, Ismael SA, You Y, Santos TA dos, Searsmith D, O’Brien C, Cope B, Kalantzis M (2024) Combining human and artificial intelligence for enhanced AI literacy in higher education. Comput Educ Open 6:100184

Venkatesh V (2022) Adoption and use of AI tools: a research agenda grounded in UTAUT. Ann Oper Res 308(1):641–652

Venkatesh V, Morris MG, Davis GB, Davis FD (2003) User acceptance of information technology: Toward a unified view. MIS Q 27(3):425–478. https://doi.org/10.2307/30036540

Venkatesh V, Zhang X (2010) Unified theory of acceptance and use of technology: US vs. China. J Glob Inf Technol Manag 13(1):5–27. https://doi.org/10.1080/1097198X.2010.10856507

Walther JB (2008) Social information processing theory. Engaging Theories in Interpersonal Communication: Multiple Perspectives, 391. https://doi.org/10.4135/9781483329529.n29

Wang B, Rau P-LP, Yuan T (2023) Measuring user competence in using artificial intelligence: validity and reliability of artificial intelligence literacy scale. Behav Inf Technol 42(9):1324–1337. https://doi.org/10.1080/0144929X.2022.2072768

Wang C, Dai J, Zhu K, Yu T, Gu X (2024) Understanding the Continuance Intention of College Students toward New E-Learning Spaces Based on an Integrated Model of the TAM and TTF. Int J Hum Comput Interact 40(24):8419–8432. https://doi.org/10.1080/10447318.2023.2291609

Wang C, Wang H, Li Y, Dai J, Gu X, Yu T (2024) Factors Influencing University Students’ Behavioral Intention to Use Generative Artificial Intelligence: Integrating the Theory of Planned Behavior and AI Literacy. Int J Hum Comput Interact 1–23. https://doi.org/10.1080/10447318.2024.2383033

Wang C, Wu G, Zhou X, Lv Y (2022) An empirical study of the factors influencing user behavior of fitness software in college students based on UTAUT. Sustainability 14(15):9720. https://doi.org/10.3390/su14159720

Wang Y, Liu C, Tu Y-F (2021) Factors affecting the adoption of AI-based applications in higher education. Educ Technol Soc 24(3):116–129. https://www.jstor.org/stable/27032860

Wong K-T, Teo T, Russo S (2013) Interactive whiteboard acceptance: Applicability of the UTAUT model to student teachers. Asia-Pac Educ Res 22:1–10. https://doi.org/10.1007/s40299-012-0001-9

Yau KW, Chai CS, Chiu TKF, Meng H, King I, Wong SW-H, Saxena C, Yam Y (2022) Developing an AI literacy test for junior secondary students: The first stage. 2022 IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE), 59–64. https://doi.org/10.1109/TALE54877.2022.00018

Yeşilyurt E, Vezne R (2023) Digital literacy, technological literacy, and internet literacy as predictors of attitude toward applying computer-supported education. Educ Inf Technol 28(8):9885–9911. https://doi.org/10.1007/s10639-022-11311-1

Yue Yim IH (2024) A critical review of teaching and learning artificial intelligence (AI) literacy: Developing an intelligence-based AI literacy framework for primary school education. Comput Educ: Artif Intell 7:100319. https://doi.org/10.1016/j.caeai.2024.100319

Zawacki-Richter O, Marín VI, Bond M, Gouverneur F (2019) Systematic review of research on artificial intelligence applications in higher education–where are the educators? Int J Educ Technol High Educ 16(1):1–27. https://doi.org/10.1186/s41239-019-0171-0

Zhao L, Wu X, Luo H (2022) Developing AI literacy for primary and middle school teachers in China: based on a structural equation modeling analysis. Sustainability 14(21):14549. https://doi.org/10.3390/su142114549

Acknowledgements

This research was supported by the Planning Fund Project of Humanities and Social Sciences Research of Ministry of Education of China (Grant No. 23YJA880068) and the Yunnan Provincial Educational Research and Planning Program (Grant No. BB24011).

Author information

Contributions

Qi Ke conceptualized the study, designed the research framework, performed the data analysis, and drafted the manuscript. Yunhong Gong contributed to data collection and assisted with manuscript review. Changping Ke supervised the project and reviewed and edited the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval