Abstract

Lung cancer (LC) remains a leading global cause of cancer mortality, with current diagnostic and prognostic methods lacking precision. This meta-analysis evaluated the role of artificial intelligence (AI) in LC imaging-based diagnosis and prognostic prediction. We systematically reviewed 315 studies from major databases up to January 7, 2025. Among them, 209 studies on LC diagnosis yielded a combined sensitivity of 0.86 (0.84–0.87), specificity of 0.86 (0.84–0.87), and AUC of 0.92 (0.90–0.94). For LC prognosis, 106 studies were analyzed: 58 with diagnostic data showed a pooled sensitivity of 0.83 (0.81–0.86), specificity of 0.83 (0.80–0.86), and AUC of 0.90 (0.87–0.92). Additionally, 53 studies differentiated between low- and high-risk patients, with a pooled hazard ratio of 2.53 (2.22–2.89) for overall survival and 2.80 (2.42–3.23) for progression-free survival. Subgroup analyses revealed an acceptable performance. AI exhibits strong potential for LC management but requires prospective multicenter validation to address clinical implementation challenges.

Similar content being viewed by others

Introduction

Lung cancer (LC) is one of the deadliest malignancies, accounting for 12.4% and 18.7% of all cancer-related morbidity and mortality worldwide, respectively1. The heterogeneity of LC is manifested by its various histological subtypes and growth patterns. Tissue-based tests are currently the gold standard for diagnosis2. However, tissue sampling methods are invasive, time-consuming, and require substantial tumor tissue, which may prevent biopsies when lesions are close to the trachea or blood vessels owing to the high risks involved3,4. Precise prognostic risk stratification of patients with LC is essential for developing therapeutic strategies. Despite being the most objective and authoritative prognostic indicator for LC, the tumor, node, and metastasis (TNM) classification still fails to account for the heterogeneous prognoses observed among patients at identical tumor stages5,6,7. Thus, a non-invasive approach that provides more comprehensive and individualized predictions is required and might help drive a shift toward personalized precision healthcare.

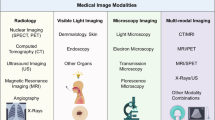

The field of medical imaging is continuing to evolve, with increasing evidence supporting its central role in LC detection and response assessment8,9. In contrast to conventional biopsy-based assays representing solely a tumor sample, imaging reflects the entire tumor burden, offering comprehensive insights into each cancer lesion through a solitary non-invasive examination10. In radiological practice, imaging characteristics other than size quantifications are qualitative and descriptive in nature2. Consequently, manual interpretation of imaging is imperfect, inefficient, and has low inter-reader agreement, making proper and immediate assessment from medical imaging challenging11. The recent emergence of quantitative data extracted from medical images, such as computed tomography (CT) and positron emission tomography (PET) provide promising opportunities for more detailed tumor characterization12.

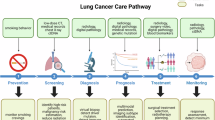

Computational imaging approaches derived from artificial intelligence (AI) have demonstrated remarkable success in the automated assessment of radiographic characteristics in tumors10,13. Radiomics, based on medical imaging, enables comprehensive assessment of the tumor and its surrounding parenchyma, forming a high-dimensional data space suitable for AI analysis2. In the realm of medical image analysis, a pivotal aspect of utilizing handcrafted radiomics machine learning (hereafter abbreviated as ML) is the identification and extraction of informative features that accurately capture the intrinsic patterns and regularities within the data. This method heavily depends on researchers’ choice on what to segment, and the (manual) segmentation quality. However, deep learning (DL), a novel class of ML, has addressed this challenge by integrating the feature engineering step into its learning step14 (Fig. 1). Recent systematic reviews and meta-analyses have revealed that image-based AI serves as a potent tool in some aspects of LC diagnosis15,16,17 or prognosis18. However, LC is a heterogeneous disease with multiple histopathology subtypes and complex clinical outcomes. Focusing solely on a specific subtype is insufficient for a comprehensive understanding of the image-based AI in LC precision healthcare.

a Conventional radiomics pipeline. b Deep learning pipeline. ADC adenocarcinoma, EGFR epidermal growth factor receptor, LASSO least absolute shrinkage and selection operator, LR logistic regression, PCA principal component analysis, RECIST response evaluation criteria in solid tumors, SCC squamous cell carcinoma.

Therefore, this study acknowledged the existence of prior systematic reviews and meta-analyses and aimed to provide a broader and more detailed overview by synthesizing the existing image-based AI research across multiple disciplines related to LC diagnosis and prognosis. Additionally, this paper incorporated previously underrepresented data points and consolidated multiple research objectives into three meta-analyses to provide a more comprehensive analysis.

Results

Study selection and characteristics of eligible studies

The initial search identified a total of 18,905 records. After excluding 8130 duplicates, 10,775 unique records were screened based on their titles and abstracts. Subsequently, 1312 full-text articles were assessed, and 997 articles were further excluded, of which 16 articles were excluded from the review because they only presented training performance. Finally, 315 articles were included in this systematic review, with 209 and 106 focusing on diagnosis and prognosis, respectively. Among prognosis-related studies, 58 studies providing diagnostic accuracy data were included in the first meta-analysis, and 53 studies providing hazard ratio (HR) and corresponding 95% confidence intervals (CIs) were included in the second meta-analysis (Fig. 2). Specifically, five studies providing both types of data were included in both meta-analyses.

Most studies (n = 309) were based on retrospective data, and only six used prospective data. Eighty-eight studies excluded poor-quality images, while others omitted the process in the methodology. One hundred and forty studies employed manual or supplementary mini-procedures to extract nodules as the region of interest. One hundred and four studies conducted external validation utilizing out-of-sample datasets. Fifty-one studies used data augmentation to expand raw data volumes. The specific details of the 315 included studies are presented in Supplementary Tables 1–6. The distribution of AI algorithms and the geographic origin of the included studies are summarized in Table 1 and Table 2, respectively.

Quality assessment

The results of the quality assessment of diagnostic accuracy studies-AI (QUADAS-AI) assessment are summarized in Supplementary Fig. 1. Most of the included studies (n = 267) exhibited a low risk of bias in patient selection (n = 208), reference standard (n = 229), and flow and timing (n = 267). Owing to the absence of external validation, a high risk of bias and applicability concerns regarding index testing were frequently observed. Relevant individual assessments for each quality analysis are summarized in Supplementary Fig. 2.

The eligible studies (n = 53) had Newcastle–Ottawa Scale (NOS) quality scores ranging from 5 to 9, with 8 (67.92%) being the most commonly acquired score, indicating that most included studies were considered high quality. The detailed scores are presented in Supplementary Table 7.

Pooled performance of AI algorithms

For LC diagnosis, the meta-analysis included a total of 209 studies with 251 datasets. The summary receiver-operating characteristic curve (SROC) curves presented in Fig. 3a demonstrate that the pooled sensitivity (SENS) and specificity (SPEC) values were 0.86 (95%CI = 0.84–0.87) and 0.86 (95%CI = 0.84–0.87), respectively, with a highly satisfactory area under the curve (AUC) of 0.92 (95%CI = 0.90–0.94) for all validation datasets.

For LC prognosis, the first meta-analysis that included 58 studies with 78 datasets yielding pooled performance measures for SENS, SPEC, and AUC of 0.83 (95%CI = 0.81–0.86), 0.83 (95%CI = 0.80–0.86), and 0.90 (95%CI = 0.87–0.92), respectively, as depicted in Fig. 3b. The second meta-analysis included 53 studies concerning risk stratification, comprising 40 studies with 44 datasets for overall survival (OS), 23 studies with 29 datasets for progression-free survival (PFS), 6 studies with 7 datasets for disease-free survival (DFS), and 3 studies with 4 datasets for recurrence-free survival (RFS). The results comparing outcomes between high- and low-risk groups exhibited a combined HR of 2.53 (95%CI = 2.22–2.89), 2.80 (95%CI = 2.42–3.23), 2.20 (95%CI = 1.54–3.14), and 4.73 (95%CI = 2.54–8.80) for OS, PFS, DFS, and RFS, respectively (Fig. 4a–d).

Subgroup meta-analyses

The meta-analysis showed significant heterogeneity in SENS (I2 = 94.71%) and SPEC (I2 = 97.35%) for LC diagnosis. Therefore, subgroup analyses were performed based on diverse study objectives, AI algorithm, validation cohort, model variable, nodules sketched, and imaging quality control.

Based on study objectives, 128 studies with 151 datasets focused on detecting LC, having pooled SENS, SPEC, and AUC values of 0.88 (95%CI = 0.86–0.90), 0.86 (95%CI = 0.84–0.87), and 0.94 (95%CI = 0.92–0.96), respectively. 19 studies with 22 datasets aimed at distinguishing between adenocarcinoma (ADC) and squamous cell carcinoma (SCC), having pooled SENS, SPEC, and AUC values of 0.81 (95%CI = 0.76–0.85), 0.80 (95%CI = 0.74–0.84), and 0.87 (95%CI = 0.84–0.90), respectively. 16 studies with 23 datasets focused on identifying invasive from preinvasive LC, having pooled SENS, SPEC, and AUC values of 0.86 (95%CI = 0.82–0.89), 0.82 (95%CI = 0.79–0.84), and 0.89 (95%CI = 0.86–0.92), respectively. 46 studies with 55 datasets targeted predicting epidermal growth factor receptor (EGFR) mutation status, having pooled SENS, SPEC, and AUC values of 0.78 (95%CI = 0.75–0.81), 0.81 (95%CI = 0.77–0.84), and 0.86 (95%CI = 0.83–0.89), respectively (Fig. 5a–d).

a Distinguishing malignant from benign lung nodules (128 studies with 151 tables). b Classification between ADC and SCC (19 studies with 22 tables). c Identifying invasive from pre-invasive lung cancer (16 studies with 23 tables). d Predicting EGFR mutant status (46 studies with 55 tables). SROC summary receiver operating characteristic, SENS summary sensitivity, SPEC summary specificity.

The ML algorithms had pooled SENS, SPEC, and AUC values of 0.84 (95%CI = 0.82–0.86), 0.83 (95%CI = 0.81–0.86), and 0.90 (95%CI = 0.88–0.93), respectively. The DL algorithms had pooled SENS, SPEC, and AUC values of 0.87 (95%CI = 0.85–0.89), 0.87 (95%CI = 0.85–0.89), and 0.94 (95%CI = 0.91–0.95), respectively (Supplementary Figs. 3a, b). Additionally, 22 studies utilized an algorithm termed three-dimensional (3D) DL, which had pooled SENS, SPEC, and AUC values of 0.87 (95%CI = 0.82–0.90), 0.89 (95%CI = 0.85–0.92), and 0.94 (95%CI = 0.92–0.96), respectively. Two-dimensional (2D) DL had pooled SENS, SPEC, and AUC values of 0.87 (95%CI = 0.85–0.90), 0.87 (95%CI = 0.84–0.89), and 0.93 (95%CI = 0.91–0.95), respectively (Supplementary Figs. 4a, b).

Datasets using the internal validation cohort performed better than those using the external validation cohort for all datasets. In the internal validation cohort, the pooled SENS, SPEC, and AUC values were 0.86 (95%CI = 0.85–0.88), 0.86 (95%CI = 0.84–0.88), and 0.93 (95%CI = 0.90–0.95), respectively. In the external validation cohort, the pooled SENS, SPEC, and AUC values were 0.82 (95%CI = 0.78–0.86), 0.84 (95%CI = 0.79–0.88), and 0.90 (95%CI = 0.87–0.92), respectively (Supplementary Fig. 5). Furthermore, the diagnostic accuracy of the AI algorithms was found to be excellent when categorized based on additional attributes, such as the number of model variables, nodule segmentation, and image quality control, as detailed in Supplementary Figs. 6–8. Multivariable, segmentation by algorithm, and conducting quality control had better performance.

For LC prognosis, the first meta-analysis utilizing diagnostic accuracy data included five sub-analyses encompassing AI algorithms, validation cohorts, model variables, nodules sketched, and imaging quality control. Fifty-two datasets from the internal validation cohort had higher combined performance in pooled SENS (0.85 vs. 0.79) and pooled SPEC (0.84 vs. 0.81) than 26 datasets from the external validation cohort (Supplementary Fig. 9). Moreover, thirteen studies containing DL algorithms exhibited a slightly lower pooled SENS but a higher pooled SPEC than 45 studies containing ML algorithms (Supplementary Fig. 10), which could be owing to the limited number of included studies and their small datasets. Furthermore, models built with only imaging variables achieved higher pooled SENS (0.84 vs. 0.83) and SPEC (0.86 vs. 0.79) than those built with imaging variables combined with clinical features (Supplementary Fig. 11). More detailed results are presented in Supplementary Figs. 12–14.

Owing to the limited datasets in the PFS, DFS, and RFS analyses, subgroup analyses of the second meta-analysis were only conducted in OS-related studies. Fifteen studies based on DL algorithms exhibited a higher pooled result for prognostic risk prediction (HR = 2.94, 95%CI = 2.27–3.82) than 25 studies based on ML algorithms (HR = 2.37, 95%CI = 2.03–2.76) (Supplementary Fig. 15a). Subgroup analysis revealed variations in the pooled results based on different types of LC treatment. A higher performance in prognostic risk prediction (HR = 3.64, 95%CI = 2.48–5.34) was observed for patients who underwent surgery. Conversely, a lower pooled performance was observed in two subgroups: (1) patients who received adjuvant therapy, including radiotherapy, chemotherapy, immunotherapy, or tyrosine kinase inhibitors (HR = 2.80, 95% CI = 2.34–3.55), and (2) patients from seven studies where the specific type of therapy was not specified (HR = 1.71, 95% CI = 1.52–1.94) (Supplementary Fig. 15b). No significant changes were observed in the pooled performance of image-based AI models in other subgroup analyses (Supplementary Figs. 15c–g).

Heterogeneity analysis

The results of three meta-analyses in two fields, with a total of 315 studies, suggested that image-based AI technology was beneficial in both the diagnosis and prognostic prediction of LC. However, substantial heterogeneity was observed. In diagnosis-related studies, SENS and SPEC had I2 of 94.71% and 97.35%, respectively. Tau2 was 0.0063. Multivariate meta-regression analysis revealed that variations in research objective and data source were primary contributors of heterogeneity (p < 0.05). In prognosis-related studies, SENS, SPEC, and HR had I2 of 79.29%, 79.48%, and 70.10%, respectively. Tau2 was 0.022. Multivariate meta-regression analysis revealed that the variation in data source was the primary contributor of heterogeneity (p < 0.05). The detailed findings of subgroup and multivariable meta-regression analyses investigating the potential sources of heterogeneity among studies are presented in Tables 3–4 and Supplementary Figs. 16–30.

The funnel plot results suggested the presence of publication bias in diagnosis-related studies (P < 0.001), whereas there was no statistically significant difference found in the prognosis-related studies included in the first meta-analysis (P = 0.11) (Supplementary Fig. 31). The results of Egger’s and Begg’s tests suggested the presence of publication bias in OS-related (P < 0.05) and PFS-related (P < 0.05) studies included in the second meta-analysis (Supplementary Figs. 32 and 33), whereas not powered to detect significant differences in DFS-related (P > 0.05) and RFS-related (P > 0.05) studies (Supplementary Figs. 34 and 35).

Discussion

This study identified the comprehensive utility of AI algorithms in two fields as follows: assisting in disease diagnosis and prognostic prediction for patients with LC. Our findings from three specialty-specific meta-analyses demonstrated the excellent performance of image-based AI models in both fields. Despite variation in workflows, pathological subtypes, and imaging modalities, ML or DL approaches consistently demonstrated high accuracies for diagnostic and prognostic stratification, indicating the potential of deploying AI algorithms in LC precision healthcare of radiology.

Although pooled analysis provides an overview of overall performance, the high heterogeneity suggests that calculating “average” performance metrics might yield misleading conclusions. We acknowledge the limitations of this pooling approach. To provide a more comprehensive evaluation of AI model performance, we further explored the impact of different subgroups through multivariable meta-regression analysis. This stratified approach helps identify the performance of models under specific conditions and offers more precise guidance for future research. Our meta-analyses offer critical insights for designing related studies, advancing methodological standards, and accelerating the comprehensive implementation of AI models as efficient tools in disease diagnosis and prognostic prediction for patients with LC.

LC is the leading cause of cancer-related mortality, with only a 22% 5-year survival rate following diagnoses19. However, most early-stage LCs are treatable, and the postoperative 5-year survival rate with preinvasive lesions is close to 100%20. Early and accurate diagnosis of LC is essential for improving survival rates; however, it is subject to wide disparities in human rater expertise11. Given this, AI advances effectively bridge the gap between the high demand for accurate assessment and low inter-reader agreement. In our sub-analysis, utilizing image-based AI models to detect LC and distinguish (pre-)invasive lesions exhibited excellent performance, with pooled AUCs of 0.94 (95%CI = 0.92–0.96) and 0.89 (95%CI = 0.86–0.92), respectively. Additionally, accurate histological subtype classification based on radiological images is crucial in assisting clinicians with treatment decision-making for patients with LC21. The primary focus of histological subtype classification in LC is non-small cell lung cancer (NSCLC, 85% of total diagnoses)22. Within NSCLC classifications, ADC (78% of total NSCLC diagnoses) is the most common, followed by SCC (18% of total NSCLC diagnoses)22. The sub-analysis for LC subtype classification yielded satisfactory performance with a pooled AUC of 0.87 (95%CI = 0.87–0.90). Currently, molecular profiling has transformed the therapeutic landscape in advanced NSCLC, particularly ADC23. Accurately identifying tumors harboring sensitizing EGFR mutations, the most prevalent targetable molecular alteration, is of utmost clinical priority24. However, the current clinical gold standard remains the genotyping of tumor biopsies, which is limited by insufficient tissue and potential complications24. Moreover, in patients with EGFR-mutant NSCLC who developed acquired resistance to EGFR tyrosine kinase inhibitors, rebiopsies are impracticable for half of the patients25 owing to patient refusal, inaccessible tumor sites, or physician discretion26. The sub-analysis for predicting EGFR yielded a pooled AUC of 0.86 (95%CI = 0.83–0.89), indicating that the image-based AI models hold promising potential for predicting EGFR mutant status and might even be used for EGFR genotyping, serving as a non-invasive, repeatable, and time-efficient instrument. Additionally, there is a lack of robust predictive biomarkers in clinical practice to help identify patients likely to benefit from specific therapeutic strategies or alternatives and predict their survival outcomes. Our study found that radiological images combined with an AI algorithm might provide an effective means for LC prognostic prediction, with a pooled AUC of 0.90 (95%CI = 0.87–0.92) and a pooled HR for OS of 2.53 (95%CI = 2.22–2.89).

Algorithms utilized in AI for constructing the diagnostic and prognostic models of LC primarily encompass ML and DL. ML, a subdiscipline of AI, learns from large amounts of information, rapidly scans all medical image features, and synthesizes these data to help decision-making using a trained algorithm27. DL uses artificial neural networks to extract high-order characteristics and make predictions from vast datasets28. Notwithstanding their utility, ML methods are knowingly limited by manual extraction and selection of features29. In contrast, DL methods using convolutional neural networks (CNN) are being investigated as a potential substitute for handcrafted radiomics, where the input image undergoes encoding into data representations through convolution and pooling operations, enabling the extraction of DL features30. Considering the progress in AI technology and the inherent differences between ML and DL, a sub-analysis was conducted based on these two algorithms. DL algorithms demonstrated better performance in both diagnosis and prognosis-related studies. A special DL algorithm, 3D CNN, exhibited better performance than 2D DL in diagnosis-related studies, which derived DL features of whole 3D lung regions on CT scans. These findings indicate that DL and 3D CNN provide promising utility in extracting representative features and could be extensively employed in future research endeavors.

In this study, we conducted multiple subgroup analyses. Notably, subgroup analysis based on imaging modalities was restricted to prognosis-related studies, given that few articles in diagnostic studies, particularly those focused on differentiating malignant from benign lesions, utilized PET/CT. PET/CT results themselves are often considered a clinical diagnostic standard31, and including such studies in our meta-analysis could lead to inflated performance metrics. Therefore, we excluded studies using PET/CT for malignant-benign differentiation and did not perform imaging modality-based subgroup analysis in the diagnosis-related studies. In LC prognosis-related studies, the observed outperformance of imaging-only models over multimodal approaches may be attributed to several factors, including the challenges of handling missing or incomplete clinical data, the potential introduction of noise or redundancy from clinical features, and the complexity of effectively fusing multimodal data. Additionally, the prognostic tasks under investigation may be more strongly driven by imaging features, reducing the relative contribution of clinical variables. These findings highlight the need for more sophisticated multimodal fusion techniques and careful handling of clinical data to fully realize the potential of combining imaging and clinical features. In addition, we acknowledge that the prognostic part of our meta-analysis relies on cutoff points for inherently time-to-event outcomes (e.g., OS, PFS), which may introduce bias due to the arbitrary selection of time thresholds (e.g., 6-month survival). While SENS and SPEC provide useful metrics, they do not fully capture the dynamic nature of time-to-event data. Although some included studies utilized Cox proportional hazards (CoxPH) models for time-to-event analyses, others employed cutoff-based approaches. To enhance the robustness of future research, we recommend prioritizing time-to-event analyses (e.g., CoxPH models) to minimize bias and improve interpretability.

Despite the demonstrated potential of AI technology in various tasks within LC precision healthcare, image-based AI models still have room for improvement. Methodological issues and future applications emerged during our systematic analysis of this evidence base and should be critically considered.

First, data remain the most central and crucial element for learning AI systems, regardless of model robustness32. In our meta-analysis, most included studies were retrospective, relying on hospital medical records rather than real-world clinical data, resulting in lower evidence quality. For example, prognostic studies based on retrospective data often lack follow-up precision. Additionally, the absence of external validation further compromises model generalizability. To address these limitations, future research should prioritize prospective, multicenter clinical trials, including diverse patient populations and robust external validation. We recommend adhering to standardized protocols and transparently reporting model performance, limitations, and failure cases to comprehensively assess clinical value. Moreover, some included studies mentioned using a data augmentation method to expand raw data volumes. This method reinforces the utilization of affine image transformation strategies, including rotation, flipping, scaling, and contrast adjustment, to compensate for data deficiencies. However, this method is regarded as a makeshift solution for insufficient data for model training and validation33. Though this approach is widely employed in almost every training run of DL, validation/testing should always be done on non-augmented images. Therefore, more realistic and large-scale studies are needed in the future to confirm the real clinical value of AI technology in LC precision healthcare. Furthermore, a rigorous examination of the included studies revealed that only 104 of the 315 studies performed an external validation, resulting in a more conservative but reliable estimate of their pooled performance. The lack of independent validation significantly leads to overestimating the results, compromising the model’s generalizability34. To fully harness the potential of AI, it is essential to accumulate adequately annotated imaging data accompanied by clinical, histological, and genomic/transcriptomic data within large-scale databases35. The integration of these multi-omics data with imaging features was also observed in our included studies, yielding satisfactory outcomes. As research matures, the future application of AI in LC precision healthcare will likely focus on multilevel multimodality fusion-based radiomics, considering the advantages of multimodality fusion in better recognition of tumor heterogeneity36.

Second, just as raw data determine an AI model’s performance, a meta-analysis’s credibility relies heavily on both the quality and quantity of included studies. Ninety-four percent of the published AI-centered diagnostic test accuracy studies have been conducted without a dedicated tool specifically designed to assess AI algorithm quality37. One of the most frequently employed instruments in these studies is the QUADAS-2, which does not account for the specialized terminology commonly encountered in AI-centered diagnostic test accuracy studies, nor does it effectively identify potential sources of bias inherent to this particular class of research38. To address these specific inquiries, Sounderajah et al. proposed a new tool known as QUADAS-AI, which offers researchers and policymakers a specific framework for assessing the risk of bias and applicability37. Accordingly, the adapted QUADAS-AI assessment tool was rigorously accepted in this study for rating the quality and risk of bias. The NOS is a well-established tool for assessing the quality of non-randomized studies, specifically designed to incorporate quality assessments into the interpretation of meta-analytic findings, with careful consideration given to its design, content, and user-friendliness. A “star system” for evaluating has been created, focusing on the following three main aspects: the selection of the study groups, the comparability of the groups, and the ascertainment of either the exposure or outcome of interest. The Cochrane Collaboration endorsed using the NOS to assess the quality of non-randomized studies in its 2011 handbook, and the NOS has become one of the most frequently used tools in practice39. Consequently, it was also adopted in our study for the quality assessment of non-randomized studies. In relation to poor methodological reporting, the TRIPOD-AI guidelines have been recently published that present a checklist of items that should be reported in prediction model development and validation40.

Additionally, efforts were made to include as many eligible studies as possible in the meta-analysis to mitigate potential bias and provide sufficient datasets for subgroup analyses. However, approximately half of the diagnostic accuracy studies lacked the necessary data to develop contingency tables. For example, in studies from computer science journals, metrics, including recall, precision, dice ratio, and F1 score, are commonly present as their default standards of measurement, which are difficult to use as the desired information for calculation. AI-enabled healthcare provision, at the intersection of computer science and healthcare systems, should be depicted by well-defined metrics to bridge the gap between these two territories. In the risk-stratification studies, many studies depicted the model’s performance based solely on a Kaplan–Meier (K–M) curve without its HR and 95% CI. Attempts were made to analyze the time-to-event outcomes and form their estimation based on the K–M curve through a previously documented methodology41, but failed owing to the absence of necessary information about the maximum and minimum follow-up. Moreover, this analysis required the presentation of the numbers at risk at specific time points, which necessarily restricted the division of the curve to these time points. The time points may be relatively few, and this method could be problematic if the event rate between time points surpasses 20%42. The results of Egger’s and Begg’s tests were not powered to detect significant differences in DFS-related (P > 0.05) and RFS-related (P > 0.05) studies, but funnel plots of show substantial asymmetry. The observed asymmetry may be due to random variation rather than genuine publication bias, given the limited number of studies in the meta-analysis. Future research with a larger number of studies is needed to more accurately assess the presence of bias.

Third, the ability of AI technology in LC precise healthcare remains unperfect. Although we assessed publication bias using funnel plots and Egger’s test, it should be acknowledged that many AI-centered studies primarily publish positive results, and AI research is prone to positive result bias, leading to inflated performance metrics. This phenomenon may have skewed the dataset and is not conducive to the objective comparison between AI models and clinicians33. Publication bias may obscure the true performance of AI models in real-world applications, especially with limited data quality or model generalizability. Therefore, we propose that future AI-centered studies include more transparency by publishing neutral or negative findings to confirm their real clinical value and promote the healthy development of the AI field. Moreover, during the process of identifying eligible studies based on title and abstract, it was found that several prognostic studies for therapy response prediction were indirectly completed by predicting molecular signatures, such as EGFR mutation status, programmed cell death-ligand 1 (PD-L1), and CD8 + T-cell infiltrate. However, these molecular signatures are not sufficiently robust to accurately identify patients likely to benefit from specific therapeutic strategies. For example, the US Food and Drug Administration has approved PD-L1 and tumor mutational burden as biomarkers to guide therapy selection in patients with LC43,44; however, the durable response remains confined to a minority of patients (20–30%)45. These studies aimed to develop imaging surrogates for the existing imperfect molecular signatures instead of determining whether radiographic features can enhance outcome prediction on their own. Therefore, it is proposed that future studies adopt a more reasonable approach that establishes a direct correlation between medical images and patients’ prognosis. Furthermore, as previously mentioned, the abundance of information in medical images offers substantial potential for enhancing model performance in subsequent research endeavors.

Fourth, the interpretability of AI models in clinical scenarios is a well-recognized challenge. Although their high-level performance has been observed in recent studies, the inherent “black-box” nature constrained the deployment of AI models in clinical settings46. For example, a clinician considering whether a lung nodule is malignant from CT images would base their decision on a set of judgment criteria. However, the rationale behind certain classifications made by an AI model in image analysis may remain elusive and defy explanations, which makes their application in clinical practice unreliable. To enhance the transparency and actionability of AI models, future research should focus on developing interpretable AI methods. For example, visualization techniques based on attention mechanisms (e.g., Grad-CAM) can highlight key regions of interest47, while decision trees or rule extraction methods can generate human-understandable decision rules48. Additionally, model design should align with clinical needs, ensuring that outputs match physicians’ diagnostic workflows and decision-making frameworks. For instance, providing probability estimates rather than binary classifications can help clinicians better weigh risks and benefits. There are many AI software as a medical devices (SaMD) have been approved for application in clinical workflows49. Radiology is one of the most represented medical specialties in AI/ML-enabled medical devices50. Meanwhile, it is also the standard-of-care of lung cancer patients for early detection, diagnosis, treatment planning and monitoring51. However, the majority of current AI SaMD sorely focuses on LC detection. It is aligned with the pooled performance in our sub-analyses that the subgroup detecting LC has the best SENS, SPEC and AUC. We should acknowledge that LC is a heterogeneous disease with multiple histological subtypes and complex prognosis. Therefore, more effort should be attempted to accelerate the deployment of AI SaMD in various territories of LC precision healthcare, from diagnosis to prognostic prediction.

We have searched current systematic reviews and meta-analyses studying the performance of image-based AI models in LC precision healthcare, of which Forte et al. synthesized 14 studies researching the AI performance in LC detection, with pooled SENS and SPEC of 0.87 (0.82–0.90) and 0.87 (0.82–0.91), respectively15. Nguyen et al. extracted datasets from 35 studies for analyzing the AI performance in predicting EGFR mutant status, with pooled SENS and SPEC of 0.72 (0.67–0.76) and 0.73 (0.69–0.78), respectively52. However, there is a lack of relative literatures focusing on the classification of histopathological variations or invasiveness profiles. Moreover, existing meta-analyses encompass a limited number of studies, typically fewer than fifty, we venture to guess that they exclude the articles that do not directly present binary diagnostic accuracy data for a reason of “incomplete data”. In our study, we tried our best to calculate these metrics by using all available information (such as using the combination of SENS, SPEC, accuracy and total number, or the combination of SENS, SPEC and the number of case and control). Furthermore, our study encompasses the broadest range of study aspects and incorporates more than three hundred including articles. Consequently, this study represents the most comprehensive evaluation of existing literature on image-based AI algorithms for precision healthcare of LC patients, from diagnosis to prognosis. This review provides valuable insights into the potential impact of AI applications in medical imaging as one of the most prevalent, time-saving, and resource-intensive tools.

Nonetheless, this study had some inherent limitations. First, most included studies were retrospective; however, incorporating prospective studies would yield more favorable evidence. Therefore, only internal and external validation datasets were included in this study to ensure the most credible estimation. Second, the presence of an inclusion bias should be admitted owing to the exclusive focus on English articles, which may overlook essential information from articles in other languages. Third, the prognostic studies involved patients at various stages of LC, potentially introducing selection bias in identifying patient characteristics. Another limitation is that we did not contact the authors because most of the studies included in full-text screening (73%) provided the necessary data.

Generally, the current evidence supports that the potential application of AI technology in aiding diagnosis and prognostic prediction from lung imaging is promising. Nevertheless, current studies are limited by insufficient quality and the immature phases of AI technology. Future studies, including prospective investigations and multicenter validations, can promote the maturity of AI models as medical devices, widen avenues for accurate diagnosis and personalized treatment strategies, and further mitigate LC’s serious threat to human health.

Methods

Protocol registration and study design

This systematic review was pre-registered in the Prospective Register of Systematic Reviews (Registration No. CRD42023424366). HR was added post hoc to complement the original analysis plan. This update has also been registered, with full version history preserved. This study followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines53, as well as the Meta-analyses of Observational Studies in Epidemiology guidelines54.

Search strategy and eligibility criteria

Four electronic databases, Embase, Web of Science, PubMed, and Cochrane Library, were systematically searched until January 7, 2025, using a broad search strategy. Keywords included (“artificial intelligence” OR “deep learning” OR “machine learning”) AND (“lung cancer”). Only articles published in English were included in the analysis. Detailed search strategies used in each database are summarized in Supplementary Note 1.

Eligible primary studies investigated the performance of AI technology in image-based LC diagnosis and prognosis. For this article, all research objectives focused on distinguishing LC from other benign diseases or characterizing the diverse LC subtypes, encompassing histopathological variations, invasiveness profiles, and gene mutation statuses, referred to as “LC diagnosis”. Moreover, all purposes focused on predicting therapy response and survival outcomes, such as OS and PFS, referred to as “LC prognosis.” For the meta-analysis, articles that presented sufficient information on a 2 × 2 contingency table or contained data enabling the construction of a 2 × 2 contingency table were included. Articles reporting a risk-stratification result as a HR with corresponding 95% CI were also incorporated.

Articles were excluded if they: 1) did not provide sufficient information for the construction of a 2 × 2 contingency table, 2) only reported performance in the training dataset, 3) studied phantoms or animal models, or 4) were comments, case reports, letters, editorials, or reviews. Four reviewers (H-HC, X-YY, LW and H-LX) designed these eligible criteria. Two reviewers (X-YY and J-KZ) screened the titles and abstracts, which were subsequently cross-checked by another two reviewers (B-YP and Z-XY). Any confusion was resolved via consensus through reviewers’ discussions.

Data extraction

All eligible articles were subjected to data extraction by two reviewers (X-YY and J-KZ), followed by a thorough double-check conducted by two other reviewers (B-YP and Z-XY). They cross-examined the results, and all inconsistencies were addressed by engaging in dialogue or seeking input from three supplementary investigators (H-HC, LW and H-LX).

Diagnostic accuracy data were obtained from each study, including the number of true positives, false positives, false negatives, and true negatives, or their SENS, SPEC, accuracy, and sample size for calculation. Malignant lung nodule, ADC, invasive LC, EGFR mutant, longer survival time, and response to therapeutic strategy were respectively considered as the cutoff to determine a positive in multiple objectives we included here. HR and 95% CI were also extracted as risk-stratification data from the studies comparing prognostic outcomes, such as OS and PFS, between high- and low-risk groups. If there were multiple models present in the same study, the main or best-performing model was chosen. Results from internal and external validation cohorts were extracted and recorded separately. For internal validation, n-fold cross-validation was considered a valid approach, as it provides a robust assessment of model performance by averaging results across multiple folds. External validation was strictly defined as the use of completely independent datasets, ensuring no overlap with the training data. Supplementary Tables 8 and 9 summarize the contingency tables and the results as HR with corresponding 95% CIs derived from the included studies, respectively.

Study quality assessment

The QUADAS-AI tool37 and the NOS55 were used by two reviewers (X-YY and J-KZ) to evaluate the risk of bias, followed by a thorough double-check conducted by another two reviewers (B-YP and Z-XY). Conflicts were discussed with three additional investigators (H-HC, LW and H-LX). For the studies assessed using diagnostic accuracy data, such as SENS and SPEC, QUADAS-AI was employed, encompassing four domains (including patient selection, index test, reference standard, and flow and timing). Subsequently, the risk of bias was assessed and categorized into the following three levels: “high,” “low,” or “unclear” risk in all four domains. For the studies assessed using HR and 95% CI, the NOS was utilized to evaluate the risk of bias, containing three domains (including selection bias, group comparability, and cohort exposure). The NOS scores varied from 0 to 9, and higher scores indicated better quality of the study. The details of QUADAS-AI and NOS are presented in Supplementary Tables 10 and 11, respectively.

Meta-analysis

This study encompasses the following two fields in the investigation of image-based AI models in LC: diagnosis and prognosis. For diagnosis-related studies, all diagnostic accuracy data were extracted from each study, and a meta-analysis was performed using a bivariate random-effects model to jointly analyze sensitivity and specificity while accounting for between-study heterogeneity. The accuracy of the AI model was evaluated by fitting a hierarchical SROC. Heterogeneity was assessed using Cochran’s Q test (p-values), the I² statistic (quantifying the proportion of variability due to heterogeneity), and Tau² (estimating the between-study variance). Subgroup and regression analyses were further conducted to investigate potential sources of heterogeneity. The utilization of the random-effects model aimed to account for the assumed differences among studies. The potential of publication bias was evaluated using a funnel plot and regression test.

In the prognosis-related research group, two meta-analyses were conducted as follows: a meta-analysis of studies investigating the utilization of image-based AI to predict therapeutic response or patient survival and another meta-analysis of articles about the utilization of image-based AI to compare patient outcomes between high- and low-risk groups in overall datasets. The first meta-analysis was implemented using the methodology applied in the diagnosis-related studies. Articles with HR and 95% CI were included in the second meta-analysis. The overall effects were assessed using the random-effects model, and the pooled HRs with their corresponding 95% CIs were calculated. Additionally, we conducted Begg’s and Egger’s tests to assess the presence of potential publication bias.

The following sub-analyses were employed in the meta-analysis of LC diagnosis: 1) multiple study objectives; 2) different algorithms; 3) data source from internal or external validation dataset; 4) only imaging variable or combined with clinical features; 5) nodules sketched manually or by algorithm; 6) exclusion of low-quality images (yes or no); and 7) imaging modality. In the meta-analyses of LC prognosis, the sub-analyses also considered the above variations. Moreover, the diverse types of therapy (surgery, adjuvant therapy, or NR) were also employed for the sub-analysis here.

The methodological quality of the included studies was assessed using the QUADAS-AI with RevMan (Version 5.4). The statistical analyses in studies with diagnostic accuracy data and HR and 95%CIs were respectively conducted using the package of midas56 and metan57 in Stata software (Version 15.0).

Data availability

Data is provided within the manuscript or supplementary information files.

References

Bray, F. et al. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 74, 229–263 (2024).

Chen, M., Copley, S. J., Viola, P., Lu, H. & Aboagye, E. O. Radiomics and artificial intelligence for precision medicine in lung cancer treatment. Semin Cancer Biol. 93, 97–113 (2023).

Yin, X. et al. Artificial intelligence-based prediction of clinical outcome in immunotherapy and targeted therapy of lung cancer. Semin Cancer Biol. 86, 146–159 (2022).

Chen, K., Wang, M. & Song, Z. Multi-task learning-based histologic subtype classification of non-small cell lung cancer. Radio. Med. 128, 537–543 (2023).

Rami-Porta, R., Asamura, H., Travis, W. D. & Rusch, V. W. Lung cancer - major changes in the American Joint Committee on Cancer eighth edition cancer staging manual. CA Cancer J. Clin. 67, 138–155 (2017).

Zhai, W. et al. Risk stratification and adjuvant chemotherapy after radical resection based on the clinical risk scores of patients with stage IB-IIA non-small cell lung cancer. Eur. J. Surg. Oncol. 48, 752–760 (2022).

Schegoleva, A. A. et al. Prognosis of Different Types of Non-Small Cell Lung Cancer Progression: Current State and Perspectives. Cell Physiol. Biochem 55, 29–48 (2021).

Kim, J., Lee, H. & Huang, B. W. Lung Cancer: Diagnosis, Treatment Principles, and Screening. Am. Fam. Physician 105, 487–494 (2022).

Salehjahromi, M. et al. Synthetic PET from CT improves diagnosis and prognosis for lung cancer: Proof of concept. Cell Rep. Med. 5, 101463. https://doi.org/10.1016/j.xcrm.2024.101463 (2024).

Trebeschi, S. et al. Predicting response to cancer immunotherapy using noninvasive radiomic biomarkers. Ann. Oncol. 30, 998–1004 (2019).

Rubin, G. D. Lung nodule and cancer detection in computed tomography screening. J. Thorac. Imaging 30, 130–138 (2015).

Tang, F. H. et al. Radiomics-Clinical AI Model with Probability Weighted Strategy for Prognosis Prediction in Non-Small Cell Lung Cancer. Biomedicines 11, https://doi.org/10.3390/biomedicines11082093 (2023).

Bi, W. L. et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J. Clin. 69, 127–157 (2019).

Shen, D., Wu, G. & Suk, H. I. Deep Learning in Medical Image Analysis. Annu Rev. Biomed. Eng. 19, 221–248 (2017).

Liu, M. et al. The value of artificial intelligence in the diagnosis of lung cancer: A systematic review and meta-analysis. PLoS One 18, e0273445 (2023).

Forte, G. C. et al. Deep Learning Algorithms for Diagnosis of Lung Cancer: A Systematic Review and Meta-Analysis. Cancers (Basel) 14, https://doi.org/10.3390/cancers14163856 (2022).

Thong, L. T., Chou, H. S., Chew, H. S. J. & Lau, Y. Diagnostic test accuracy of artificial intelligence-based imaging for lung cancer screening: A systematic review and meta-analysis. Lung Cancer 176, 4–13 (2023).

Kothari, G. et al. A systematic review and meta-analysis of the prognostic value of radiomics based models in non-small cell lung cancer treated with curative radiotherapy. Radiother. Oncol. 155, 188–203 (2021).

Siegel, R. L., Miller, K. D., Fuchs, H. E. & Jemal, A. Cancer statistics, 2022. CA Cancer J. Clin. 72, 7–33 (2022).

Hirsch, F. R. et al. Lung cancer: current therapies and new targeted treatments. Lancet 389, 299–311 (2017).

Li, H. et al. Reconstruction-Assisted Feature Encoding Network for Histologic Subtype Classification of Non-Small Cell Lung Cancer. IEEE J. Biomed. Health Inf. 26, 4563–4574 (2022).

Thai, A. A., Solomon, B. J., Sequist, L. V., Gainor, J. F. & Heist, R. S. Lung cancer. Lancet 398, 535–554 (2021).

Goldman, J. W., Noor, Z. S., Remon, J., Besse, B. & Rosenfeld, N. Are liquid biopsies a surrogate for tissue EGFR testing?. Ann. Oncol. 29, i38–i46 (2018).

Zhang, Y. et al. Global variations in lung cancer incidence by histological subtype in 2020: a population-based study. Lancet Oncol. 24, 1206–1218 (2023).

Hasegawa, T. et al. Feasibility of Rebiopsy in Non-Small Cell Lung Cancer Treated with Epidermal Growth Factor Receptor-Tyrosine Kinase Inhibitors. Intern Med. 54, 1977–1980 (2015).

Kawamura, T. et al. Rebiopsy for patients with non-small-cell lung cancer after epidermal growth factor receptor-tyrosine kinase inhibitor failure. Cancer Sci. 107, 1001–1005 (2016).

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

Tran, K. A. et al. Deep learning in cancer diagnosis, prognosis and treatment selection. Genome Med. 13, 152 (2021).

Litjens, G. et al. State-of-the-Art Deep Learning in Cardiovascular Image Analysis. JACC Cardiovasc Imaging 12, 1549–1565 (2019).

Avanzo, M., Stancanello, J., Pirrone, G. & Sartor, G. Radiomics and deep learning in lung cancer. Strahlenther. Onkol. 196, 879–887 (2020).

Al-Jahdali, H., Khan, A. N., Loutfi, S. & Al-Harbi, A. S. Guidelines for the role of FDG-PET/CT in lung cancer management. J. Infect. Public Health 5, S35–S40 (2012).

Hosny, A., Parmar, C., Quackenbush, J., Schwartz, L. H. & Aerts, H. Artificial intelligence in radiology. Nat. Rev. Cancer 18, 500–510 (2018).

Xue, P. et al. Deep learning in image-based breast and cervical cancer detection: a systematic review and meta-analysis. NPJ Digit Med. 5, 19 (2022).

Altman, D. G. & Royston, P. What do we mean by validating a prognostic model?. Stat. Med. 19, 453–473 (2000).

Shimizu, H. & Nakayama, K. I. Artificial intelligence in oncology. Cancer Sci. 111, 1452–1460 (2020).

Zhang, Y. P. et al. Artificial intelligence-driven radiomics study in cancer: the role of feature engineering and modeling. Mil. Med Res. 10, 22 (2023).

Sounderajah, V. et al. A quality assessment tool for artificial intelligence-centered diagnostic test accuracy studies: QUADAS-AI. Nat. Med. 27, 1663–1665 (2021).

Whiting, P. F. et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern Med. 155, 529–536 (2011).

Margulis, A. V. et al. Quality assessment of observational studies in a drug-safety systematic review, comparison of two tools: the Newcastle-Ottawa Scale and the RTI item bank. Clin. Epidemiol. 6, 359–368 (2014).

Collins, G. S. et al. TRIPOD+AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. Bmj. 385, e078378 (2024).

Tierney, J. F., Stewart, L. A., Ghersi, D., Burdett, S. & Sydes, M. R. Practical methods for incorporating summary time-to-event data into meta-analysis. Trials. 8, 16 (2007).

Parmar, M. K., Torri, V. & Stewart, L. Extracting summary statistics to perform meta-analyses of the published literature for survival endpoints. Stat. Med. 17, 2815–2834 (1998).

Doroshow, D. B. et al. PD-L1 as a biomarker of response to immune-checkpoint inhibitors. Nat. Rev. Clin. Oncol. 18, 345–362 (2021).

Hellmann, M. D. et al. Nivolumab plus Ipilimumab in Lung Cancer with a High Tumor Mutational Burden. N. Engl. J. Med. 378, 2093–2104 (2018).

Ribas, A. & Wolchok, J. D. Cancer immunotherapy using checkpoint blockade. Science. 359, 1350–1355 (2018).

Huang, Z. et al. A pathologist-AI collaboration framework for enhancing diagnostic accuracies and efficiencies. Nat. Biomed. Eng. https://doi.org/10.1038/s41551-024-01223-5 (2024).

Selvaraju, R. R. et al. in 2017 IEEE International Conference on Computer Vision (ICCV).618–626.

Lundberg, S. M. & Lee, S. I. A Unified Approach to Interpreting Model Predictions. Adv. Neural Inf. Process. Syst. 30, 4765–4774 (2017).

Muehlematter, U. J., Bluethgen, C. & Vokinger, K. N. FDA-cleared artificial intelligence and machine learning-based medical devices and their 510(k) predicate networks. Lancet Digit Health. 5, e618–e626 (2023).

Zhu, S., Gilbert, M., Chetty, I. & Siddiqui, F. The 2021 landscape of FDA-approved artificial intelligence/machine learning-enabled medical devices: An analysis of the characteristics and intended use. Int J. Med Inf. 165, 104828 https://doi.org/10.1016/j.ijmedinf.2022.104828 (2022).

Tunali, I., Gillies, R. J. & Schabath, M. B. Application of Radiomics and Artificial Intelligence for Lung Cancer Precision Medicine. Cold Spring Harb Perspect Med. 11 https://doi.org/10.1101/cshperspect.a039537 (2021).

Nguyen, H. S. et al. Predicting EGFR Mutation Status in Non-Small Cell Lung Cancer Using Artificial Intelligence: A Systematic Review and Meta-Analysis. Acad. Radio. 31, 660–683 (2024).

Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Bmj. 372, n71 (2021).

Stroup, D. F. et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. Jama. 283, 2008–2012 (2000).

Stang, A. Critical evaluation of the Newcastle-Ottawa scale for the assessment of the quality of nonrandomized studies in meta-analyses. Eur. J. Epidemiol. 25, 603–605 (2010).

Dwamena, B. MIDAS: Stata module for meta-analytical integration of diagnostic test accuracy studies. (2007).

Harris, R. J. et al. metan: fixed- and random-effects meta-analysis. (2008).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No.82304235 to Chen HH) and the Doctoral Start-up Foundation of Liaoning Province (No.2023-BS-092 to Chen HH).

Author information

Authors and Affiliations

Contributions

X.-Y.Y., H.-L.X., H.-H.C., and L.W. conceived of and designed the study. X.-Y.Y., J.-K.Z., Z.-X.Y., and B.-Y.P. performed literature searches, data extraction, and risk of bias evaluation. All the authors interpreted the data, read the manuscript, and approved the final version. X.-Y.Y. and H.-L.X. contributed equally to the study. All authors had full access to all data in the study and had final responsibility for the decision to submit for publication.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yuan, X., Xu, H., Zhu, J. et al. Systematic review and meta-analysis of artificial intelligence for image-based lung cancer classification and prognostic evaluation. npj Precis. Onc. 9, 300 (2025). https://doi.org/10.1038/s41698-025-01095-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41698-025-01095-1