Abstract

Accurate early detection of breast and cervical cancer is vital for treatment success. Here, we conduct a meta-analysis to assess the diagnostic performance of deep learning (DL) algorithms for early breast and cervical cancer identification. Four subgroups are also investigated: cancer type (breast or cervical), validation type (internal or external), imaging modalities (mammography, ultrasound, cytology, or colposcopy), and DL algorithms versus clinicians. Thirty-five studies are deemed eligible for systematic review, 20 of which are meta-analyzed, with a pooled sensitivity of 88% (95% CI 85–90%), specificity of 84% (79–87%), and AUC of 0.92 (0.90–0.94). Acceptable diagnostic performance with analogous DL algorithms was highlighted across all subgroups. Therefore, DL algorithms could be useful for detecting breast and cervical cancer using medical imaging, having equivalent performance to human clinicians. However, this tentative assertion is based on studies with relatively poor designs and reporting, which likely caused bias and overestimated algorithm performance. Evidence-based, standardized guidelines around study methods and reporting are required to improve the quality of DL research.

Introduction

Female breast and cervical cancer remain major contributors to the burden of cancer1,2. The World Health Organization (WHO) reported that approximately 2.86 million new cases (14.8% of all cancer cases) and 1.03 million deaths (10.3% of all cancer deaths) were recorded worldwide in 20203. This disproportionately affects women, especially in low- and middle-income countries (LMICs), which can be largely attributed to more advanced stage diagnoses, limited access to early diagnostics, and suboptimal treatment4,5. Population-based cancer screening as practiced in high-income countries might not be as effective in LMICs, due to limited resources for treatment and palliative care6,7. Integrative screening for cancer is a complex procedure that needs to take biological and social determinants, as well as ethical constraints, into consideration. As is already known, early detection of breast and cervical cancers is associated with improved prognosis and survival8,9. Therefore, it is vital to select the most accurate and reliable technologies that are capable of identifying early symptoms.

Medical imaging plays an essential role in tumor detection, especially within progressively digitized cancer care services. For example, mammography and ultrasound, as well as cytology and colposcopy are commonly used in clinical practice10,11,12,13,14. However, fragmented health systems in LMICs may lack infrastructure and perhaps the manpower required to ensure high-quality screening, diagnosis, and treatment. This hinders the universality of traditional detection technologies mentioned above, which require sophisticated training15. Furthermore, there may be substantial inter- and intraoperator variability which affects both machine and human performances. Therefore, the interpretation of medical imaging is vulnerable to human error. Of course, experienced doctors tend to be more accurate although their expertise is not always readily available for marginalized populations, or for those living in remote areas. Resource-based testing and deployment of effective interventions together could reduce cancer morbidity and mortality in LMICs16. In line with this, an ideal detection technology for LMICs should at least have low training needs.

Deep learning (DL), as a subset of artificial intelligence (AI), could be applied to medical imaging and has shown promise in automatic detection17,18. While media headlines tend to overemphasize the polarization of DL model findings19, few have demonstrated inferiority or superiority. However, the Food and Drug Administration (FDA) has approved a select number of DL-based diagnosis tools for clinical practice, even though further critical appraisal and independent quality assessments are pending20,21. To date, there are few medical imaging specialty-specific systematic reviews such as this, which assess the diagnostic performance of DL algorithms, particularly in breast and cervical cancer.

Results

Study selection and characteristics

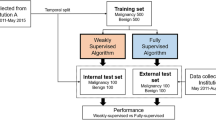

Our search initially identified 2252 records, of which 2028 were screened after removing 224 duplicates. Of these, 1957 were excluded because they did not fulfil our predetermined inclusion criteria. We assessed 71 full-text articles and excluded a further 36, leaving 35 studies for the systematic review. Of these, 25 focused on breast cancer and 10 on cervical cancer (see Fig. 1). Study characteristics are summarized in Tables 1–3.
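The record counts in the selection flow can be checked with simple arithmetic. The sketch below uses only the numbers reported in this paragraph; the variable names are illustrative and not part of the original study.

```python
# Record counts reported in the study-selection paragraph.
identified = 2252
duplicates = 224
excluded_on_screening = 1957
excluded_at_full_text = 36

screened = identified - duplicates
full_text_assessed = screened - excluded_on_screening
included = full_text_assessed - excluded_at_full_text

print(screened, full_text_assessed, included)  # 2028 71 35

# The included studies split into breast (25) and cervical (10) cancer.
assert included == 25 + 10
```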

Thirty-three studies utilized retrospective data; only two used prospective data. Two studies also used data from open-access sources. No studies reported a prespecified sample size calculation. Eight studies excluded low-quality images, while 27 did not report on image quality. Eleven studies performed external validation using an out-of-sample dataset, while the others performed internal validation using an in-sample dataset. Twelve studies compared DL algorithms against human clinicians using the same dataset. Medical imaging modalities were categorized into cytology (n = 4), colposcopy (n = 4), cervicography (n = 1), microendoscopy (n = 1), mammography (n = 12), ultrasound (n = 11), and MRI (n = 2).

Pooled performance of DL algorithms

Among the 35 studies in this sample, 20 provided sufficient data to create contingency tables for calculating diagnostic performance and were therefore included for synthesis at the meta-analysis stage. Hierarchical SROC curves for these studies (i.e. 55 contingency tables) are provided in Fig. 2a. When averaging across studies, the pooled sensitivity and specificity were 88% (95% CI 85–90), and 84% (95% CI 79–87), respectively, with an AUC of 0.92 (95% CI 0.90–0.94) for all DL algorithms.
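Each contingency table yields one sensitivity and one specificity estimate. The sketch below shows that per-table step with a Wilson score interval; it is not the hierarchical model used for the pooled estimates above, and the counts are hypothetical rather than taken from any included study.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half


def sensitivity_specificity(tp, fp, fn, tn):
    """Sensitivity and specificity, each with a Wilson interval, from one 2x2 table."""
    return {
        "sensitivity": tp / (tp + fn),
        "sensitivity_ci": wilson_interval(tp, tp + fn),
        "specificity": tn / (tn + fp),
        "specificity_ci": wilson_interval(tn, tn + fp),
    }


# Hypothetical counts, not taken from any included study.
example = sensitivity_specificity(tp=88, fp=16, fn=12, tn=84)
print(example)
```

Pooling across tables additionally has to account for between-study variation and the correlation between sensitivity and specificity, which this per-table calculation ignores.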

Most studies used more than one DL algorithm to report diagnostic performance; we therefore also reported the highest accuracy among the DL algorithms of each included study, giving 20 contingency tables. The pooled sensitivity and specificity were 89% (86–92%) and 85% (79–90%), respectively, with an AUC of 0.93 (0.91–0.95). Please see Fig. 2b for further details.

Subgroup meta-analyses

Four separate meta-analyses were conducted:

I. Validation types: 15 studies with 40 contingency tables included in the meta-analysis were validated with an in-sample dataset and had a pooled sensitivity of 89% (87–91%) and a pooled specificity of 83% (78–86%), with an AUC of 0.93 (0.91–0.95); see Fig. 3a for details. Only 8 studies with 15 contingency tables performed an external validation, for which the pooled sensitivity and specificity were 83% (77–88%) and 85% (73–92%), respectively, with an AUC of 0.90 (0.87–0.92); see Fig. 3b.

II. Cancer types: 10 studies with 36 contingency tables targeted breast cancer and had a pooled sensitivity of 90% (87–92%) and specificity of 85% (80–89%), with an AUC of 0.94 (0.91–0.96); see Fig. 4a. Another 10 studies with 19 contingency tables considered cervical cancer, with a pooled sensitivity and specificity of 83% (78–88%) and 80% (70–88%), respectively, and an AUC of 0.89 (0.86–0.91); see Fig. 4b for details.

III. Imaging modalities: four mammography studies with 15 contingency tables had a pooled sensitivity of 87% (82–91%), a pooled specificity of 88% (79–93%), and an AUC of 0.93 (0.91–0.95); see Fig. 5a. Four ultrasound studies with 17 contingency tables had a pooled sensitivity of 91% (89–93%), a pooled specificity of 85% (80–89%), and an AUC of 0.95 (0.93–0.96); see Fig. 5b. Four cytology studies with six contingency tables had a pooled sensitivity of 87% (82–90%), a pooled specificity of 86% (68–95%), and an AUC of 0.91 (0.88–0.93); see Fig. 5c. Four colposcopy studies with 11 contingency tables had a pooled sensitivity of 78% (69–84%), a pooled specificity of 78% (63–87%), and an AUC of 0.84 (0.81–0.87); see Fig. 5d.

Fig. 5: Pooled performance of DL algorithms using different imaging modalities. a Receiver operating characteristic (ROC) curves of studies using mammography (4 studies with 15 tables), b ROC curves of studies using ultrasound (4 studies with 17 tables), c ROC curves of studies using cytology (4 studies with 6 tables), and d ROC curves of studies using colposcopy (4 studies with 11 tables).

IV. DL algorithms versus human clinicians: of the 20 included studies, 11 compared diagnostic performance between DL algorithms and human clinicians using the same dataset, with 29 contingency tables for DL algorithms and 18 contingency tables for human clinicians. The pooled sensitivity was 87% (84–90%) for DL algorithms and 88% (81–93%) for human clinicians. The pooled specificity was 83% (76–88%) for DL algorithms and 82% (72–88%) for human clinicians. The AUC was 0.92 (0.89–0.94) for DL algorithms and 0.92 (0.89–0.94) for human clinicians (Fig. 6a, b).

Heterogeneity analysis

All included studies found that DL algorithms are useful for the detection of breast and cervical cancer using medical imaging when compared with histopathological analysis as the gold standard; however, extreme heterogeneity was observed. Sensitivity (SE) had an I² of 97.65%, while specificity (SP) had an I² of 99.90% (p < 0.0001); see Fig. 7.
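As a reminder of what the statistic measures, I² expresses the share of total variation across studies that is attributable to heterogeneity rather than chance, and in its common form is derived from Cochran's Q as I² = max(0, (Q − df)/Q) × 100%. The sketch below illustrates that standard univariate definition; the software used for this meta-analysis may compute it differently, and the input values are hypothetical.

```python
def cochran_q(estimates, variances):
    """Cochran's Q: inverse-variance weighted squared deviations from the pooled mean."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    return sum(w * (e - pooled) ** 2 for w, e in zip(weights, estimates))


def i_squared(q, df):
    """Higgins' I-squared (in percent) from Cochran's Q and its degrees of freedom."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# Hypothetical logit-transformed sensitivities and their variances.
estimates = [2.0, 1.5, 2.6, 1.1]
variances = [0.04, 0.05, 0.03, 0.06]

q = cochran_q(estimates, variances)
print(round(i_squared(q, df=len(estimates) - 1), 1))
```

Values above roughly 75% are conventionally read as considerable heterogeneity, which is why the figures reported here are described as extreme.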

A funnel plot was produced to assess publication bias. The p value of 0.41 provides no evidence of publication bias, although studies were widely dispersed around the regression line (see Supplementary Fig. 3 for further details). To identify the source or sources of this extreme heterogeneity, we conducted subgroup analyses and found:

I. Validation types: internal validation (SE, I² = 97.60%; SP, I² = 99.19%; p < 0.0001) and external validation (SE, I² = 96.15%; SP, I² = 99.96%; p < 0.0001). See Supplementary Fig. 4.

II. Cancer types: breast cancer (SE, I² = 95.84%; SP, I² = 99.86%; p < 0.0001) and cervical cancer (SE, I² = 98.16%; SP, I² = 99.89%; p < 0.0001). See Supplementary Fig. 5.

III. Imaging modalities: mammography (SE, I² = 97.01%; SP, I² = 99.93%; p < 0.0001), ultrasound (SE, I² = 86.49%; SP, I² = 96.06%; p < 0.0001), cytology (SE, I² = 89.97%; SP, I² = 99.90%; p < 0.0001), and colposcopy (SE, I² = 98.12%; SP, I² = 99.59%; p < 0.0001). See Supplementary Fig. 6.

However, heterogeneity was not attributable to a specific subgroup, nor was it reduced to an acceptable level: all subgroup I² values remained high. Therefore, we could not infer from the subgroup analyses alone whether different validation types, cancer types, and imaging modalities were likely to have influenced DL algorithm performance for detecting breast and cervical cancer.

To further investigate this finding, we performed meta-regression analysis with these covariates (see Supplementary Table 1). The results highlighted a statistically significant difference, which is in line with the subgroup and meta-analytical sensitivity analyses.

Quality assessment

The quality of the included studies was assessed using QUADAS-2, and a summary of findings is provided as a diagram in Supplementary Fig. 1. A detailed assessment of each item, by risk-of-bias domain and applicability concern, is provided in Supplementary Fig. 2. For the patient selection domain of risk of bias, 13 studies were considered at high or unclear risk of bias due to unreported inclusion or exclusion criteria, or improper exclusion. For the index test domain, only one study was considered at high or unclear risk of bias due to having no predefined threshold, whereas the others were considered at low risk of bias.

For the reference standard domain, three studies were considered at high or unclear risk of bias due to reference standard inconsistencies. There was no mention of whether the threshold was determined in advance and whether blinding was implemented. For the flow and timing domain, five studies were considered high or with an unclear risk of bias because the authors had not mentioned whether there was an appropriate time gap or whether it was based on the same gold standard.

In the applicability concern domain, 12 studies were considered to have high or unclear applicability in patient selection. One study also had unclear applicability in the reference standard domain, with no applicability concern in the index test domain.

Discussion

Artificial intelligence in medical imaging is without question improving; however, we must subject emerging knowledge to the same rigorous testing we would apply to any other diagnostic procedure. Deep learning could reduce the over-reliance on experienced clinicians and could, with relative ease, be extended to rural communities and LMICs. This relatively inexpensive approach may help to bridge inequality gaps across healthcare systems generally, and evidence is increasingly highlighting the value of deep learning in cancer diagnostics and care. Within the field of female cancer diagnosis, one of the representative technologies is computer-assisted cytology image diagnosis, such as the FDA-approved PAPNET and AutoPap systems, which dates back to at least the 1970s22. As AI technology progresses rapidly, it is becoming an increasingly important element of automated image-based cytology analysis systems. These technologies have the potential to reduce the time spent on cytological reading and to improve its quality. Here, we attempted to ascertain which is the most accurate and reliable detection technology presently available in the field of breast and cervical cancer diagnostics.

A systematic search for pertinent articles identified three systematic reviews with meta-analyses which investigated DL algorithms in medical imaging. However, these covered diverse domains, which makes direct comparison with the present review difficult. For example, Liu et al. 23 found that DL algorithm performance in medical imaging might be equivalent to that of healthcare professionals. However, only breast and dermatological cancers were analyzed with more than three studies, which not only inhibits generalizability but highlights the need for further DL algorithm performance research in the field of medical imaging. In identifying pathologies, Aggarwal et al. 24 found that DL algorithms have high diagnostic performance. However, the authors also found high heterogeneity, which was attributed to combining distinct methods and perhaps to unspecified terms. They concluded that we need to be cautious when considering the diagnostic accuracy of DL algorithms and that there is a need to develop (and apply) AI guidelines. This was also apparent in this study and therefore we would reiterate this sentiment.

While the findings from the aforementioned studies are incredibly valuable, at present there is a need to expand upon the emerging knowledge-base for metastatic tumor diagnosis. The only other review in this field was conducted by Zheng et al. 25 who found that DL algorithms are beneficial in radiological imaging with equivalent, or in some instances better performance than healthcare professionals. Although again, there were methodological deficiencies which must be considered before we adopt these technologies into clinical practice. Also, we must strive to identify the best available DL algorithm and then develop it to enhance identification and reduce the number of false positives and false negatives beyond that which is humanly possible. As such, we need to continue to use systematic reviews to identify gaps in research and we should not only consider technology-specific reviews, but also disease-specific systematic reviews. Of course, DL algorithms are in an almost constant state of development but the purpose of this study was to critically appraise potential issues with study methods and reporting standards. By doing so, we hoped to make recommendations and to drive further research in this field so that the most effective technology is adopted into clinical practice sooner rather than later.

This systematic review with meta-analysis suggests that deep learning algorithms can be used for the detection of breast and cervical cancer using medical imaging. Evidence also suggests that, while deep learning algorithms are not yet superior to clinicians in terms of performance, they are not inferior either. Acceptable diagnostic performance with analogous deep learning algorithms was observed in both breast and cervical cancer despite dissimilar workflows and different imaging modalities. This finding also suggests that these algorithms could be deployed across both breast and cervical imaging, and potentially across all types of cancer which utilize imaging technologies to identify cases early. However, we must also critically consider some of the issues which emerged during our systematic analysis of this evidence base.

Overall, there were very few prospective studies and few clinical trials. In fact, most included studies were retrospective, which may reflect the relative newness of DL algorithms in medical imaging. However, the data sources used were either pre-existing electronic medical records or online open-access databases, which were not explicitly intended for algorithmic analysis in real clinical settings. Of course, we must first test these technologies using retrospective datasets to see whether they are appropriate, with a view to modifying them and enhancing accuracy, perhaps for specific populations or for specific types of cancer. We also encourage more prospective DL studies in the future. If possible, we should investigate the potential rules of breast or cervical images through more prospective studies, and identify possible image feature correlations and diagnostic logic for risk predictions. Most studies constructed and trained algorithms using small sets of labeled breast or cervical images, with labels which were rarely quality-checked by a clinical specialist. This design fault is likely to have created ambiguous ground-truth inputs which may have caused unintended adverse model effects. The knock-on effect is that there are likely to be diagnostic inaccuracies arising from unidentified biases. This is certainly an issue which should be considered when designing future deep learning-based studies.

It is important to note that no matter how well-constructed an algorithm is, its diagnostic performance depends largely upon the volume and quality of raw data26. Most studies included in this systematic review mentioned a data augmentation method which adopted some form of affine image transformation strategy, e.g. translation, rotation, or flipping, in order to compensate for data deficiencies. This, one could argue, is symptomatic of the paucity of annotated datasets for model training and of prospective studies for model validation. Fortunately, there has been a substantial increase in the number of openly available datasets around cervical or breast cancer. However, given the necessity for this research, one would like to see institutions collaborating more frequently to establish cloud sharing platforms which would increase the availability (and breadth) of annotated datasets. Moreover, training DL algorithms requires reliable, high-quality image inputs, which may not be readily available, as pre-analytical factors such as incorrect specimen preparation and processing, unstandardized image digitization and acquisition, and improper device calibration and maintenance could lower image quality. Complete standardization of all procedures and reagents in clinical practice is required to optimally prepare pre-analytical image inputs in order to develop more robust and accurate DL algorithms. Having these would drive developments in this field and would benefit clinical practice, perhaps serving as a cost-effective replacement diagnostic tool or an initial method of risk categorization. However, this is beyond the scope of this study and would require further research to consider in detail.
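To make the augmentation strategy concrete, the following minimal sketch applies a random flip, a rotation, and a translation to a small image stored as a list of rows. It is an illustration only: the function names are hypothetical, rotations are limited to multiples of 90 degrees, and the included studies used their own pipelines, which are not described here.

```python
import random


def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def translate(image, dy, dx, fill=0):
    """Shift the image by (dy, dx); vacated pixels are set to `fill`."""
    height, width = len(image), len(image[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                out[ny][nx] = image[y][x]
    return out


def augment(image, rng):
    """Return one randomly transformed copy; the diagnostic label is unchanged."""
    out = [list(row) for row in image]
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        out = rotate_90(out)
    return translate(out, rng.randint(-1, 1), rng.randint(-1, 1))


# Toy 4x4 "image" with a seeded generator for reproducibility.
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
augmented = augment(toy_image, random.Random(0))
```

Augmented copies are derived from the same patients, so they enlarge the training set without adding independent cases, which is why augmentation compensates for, rather than resolves, a shortage of annotated data.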

Of the 35 included studies, only 11 performed external validation, meaning that DL model performance was assessed with either an out-of-sample dataset or an open-access dataset. Indeed, most of the studies included here split a single sample by either randomly or non-randomly assigning individuals’ data from one center to either a development dataset or an internal validation dataset. We found that pooled sensitivity and AUC were higher for internally validated studies than for externally validated studies for early detection of cervical and breast cancer. However, this was to be expected because an internal validation dataset is more likely to be homogeneous with the development data and may lead to overestimated diagnostic performance. This finding highlights the need for out-of-sample external validation in all predictive models. A possible method for improving external validation would be to establish an alliance of institutions wherein trained deep learning algorithms are shared and their performance is tested externally. This might provide insight into subgroups and variations between various ethnic groups, although we would also need to maintain patient anonymity and security, as several researchers have previously noted27,28.
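The distinction between internal and external validation can be sketched as a data split. In the hypothetical example below, records from held-out centers form the external set, and a random hold-back from the remaining centers forms the internal set; the record structure, the `center` field, and the function name are assumptions made for illustration.

```python
import random


def split_by_validation_type(records, external_centers, internal_fraction=0.2, seed=0):
    """Split records into training, internal-validation, and external-validation sets.

    Records from `external_centers` never enter model development.
    """
    external = [r for r in records if r["center"] in external_centers]
    development = [r for r in records if r["center"] not in external_centers]

    shuffled = development[:]
    random.Random(seed).shuffle(shuffled)
    n_internal = int(round(len(shuffled) * internal_fraction))
    internal = shuffled[:n_internal]
    training = shuffled[n_internal:]
    return training, internal, external


# Hypothetical records: eight images from center A, two from center B.
records = [{"id": i, "center": "A" if i < 8 else "B"} for i in range(10)]
training, internal, external = split_by_validation_type(records, external_centers={"B"})
print(len(training), len(internal), len(external))  # 6 2 2
```

In practice the split would usually be made at the patient level rather than the image level, so that images from one patient cannot appear in both development and validation data.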

Most of the included studies were retrospective and used narrowly defined binary or multi-class tasks, focusing on the diagnostic performance of DL algorithms rather than on clinical practice. This is a direct consequence of poor reporting and the lack of real-world prospective clinical studies, which has resulted in inadequate data availability and may therefore limit our ability to gauge the applicability of these DL algorithms to clinical settings. Accordingly, there is uncertainty around the estimates of diagnostic performance provided in our meta-analysis, and adherence levels should be interpreted with caution.

Recently, several AI-related method guides have been published, with many still under development29,30. Most of the studies we analyzed were probably conceived or performed before these guidelines were available. Therefore, it is reasonable to assume that design features, reporting adequacy and transparency of studies used to evaluate the diagnostic performance of DL algorithms will be improved in the future. Even though our findings suggest that DL is not inferior to clinicians in terms of performance for the early detection of breast or cervical cancer, this is based on relatively few studies. Therefore, the uncertainty which exists is, at least in part, due to the in silico context in which clinicians are being evaluated.

We should also acknowledge that most current DL studies report positive results. We must be aware that this may be a form of researcher-based reporting bias (rather than publication-based bias), which is likely to skew the dataset and add complexity to comparisons between DL algorithms and clinicians31,32. Differences in reference standard definitions, grader capabilities (i.e. the degrees of expertise), imaging modalities and detection thresholds for classification of early breast or cervical cancer also make direct comparisons between studies and algorithms very difficult. Furthermore, non-trivial applications of DL models in the healthcare setting will need clinicians to optimize clinical workflow integration. However, we found only two studies that compared DL versus clinicians and versus DL combined with clinicians. This hindered our meta-analysis of DL algorithms but highlighted the need for strict and reliable assessment of DL performance in real clinical settings. Indeed, the scientific discourse should change from a DL-versus-clinicians dichotomy to a more realistic DL-clinician combination, which would improve workflows.

Thirty-five studies met the eligibility criteria for the systematic review, yet only 20 could be used to develop contingency tables. Some DL algorithm studies from computer science journals reported only precision, Dice coefficient, F1 score, recall, and competition performance metric, whereas indicators such as AUC, accuracy, sensitivity, and specificity are more familiar to healthcare professionals25. Bridging the gap between computer science and clinical research would seem prudent if we are to manage interdepartmental research and the transition to a more digitized healthcare system. Moreover, we found that the term “validation” is used casually in DL model studies. Some authors used it for assessing the diagnostic performance of the final algorithm, while others defined it as a dataset for model tuning during the development process. This confuses readers and makes it difficult to judge the function of datasets. We combined experts’ opinions33 and propose to distinguish datasets used in the development and validation of DL algorithms. In keeping with the language used for nomogram development, a dataset for training the model should be named the ‘training set’, while datasets used for tuning should be referred to as the ‘tuning set’. Likewise, during the validation phase, the hold-back subset split from the entire dataset should be referred to as ‘internal’ validation, which has the same conditions and image types as the training set, while a completely independent dataset for out-of-sample validation should be referred to as ‘external’ validation34.
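The gap between the two sets of metrics can be illustrated from a single contingency table. In the sketch below, which uses hypothetical counts, recall is the same quantity as sensitivity and the F1 score coincides with the Dice coefficient for binary labels, but none of precision, recall, or F1 involves true negatives, so specificity cannot be recovered from them alone.

```python
def metrics_from_table(tp, fp, fn, tn):
    """Common clinical and computer-science metrics from one 2x2 table."""
    sensitivity = tp / (tp + fn)  # identical to recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)    # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    dice = 2 * tp / (2 * tp + fp + fn)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "dice": dice,
        "accuracy": accuracy,
    }


# Two hypothetical tables that differ only in the number of true negatives.
few_negatives = metrics_from_table(tp=80, fp=20, fn=10, tn=90)
many_negatives = metrics_from_table(tp=80, fp=20, fn=10, tn=890)

for name in ("precision", "sensitivity", "f1", "specificity"):
    print(name, round(few_negatives[name], 3), round(many_negatives[name], 3))
```

The two tables share precision, recall, and F1 while their specificities differ, which is one reason a study reporting only those three metrics cannot contribute a contingency table to a diagnostic meta-analysis.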

Most of the issues discussed here could be avoided through more robust designs and high-quality reporting, although several hurdles must be overcome before DL algorithms are used in practice for breast and cervical cancer identification. The black box nature of DL models, which lack clear interpretability of the basis for their decisions in clinical situations, is a well-recognized challenge. For example, a clinician considers whether breast nodules represent breast cancer by applying a series of judgement criteria to mammographic images. A clinician developing a clear rationale for a proposed diagnosis may therefore be the desired state, whereas a DL model which merely states the diagnosis may be viewed with more skepticism. Scientists have actively investigated possible methods for inspecting and explaining algorithmic decisions. An important example is the use of salience or heat maps to provide the location of salient lesion features within the image rather than defining the lesion characteristics themselves35,36. This raises questions around human-technology interactions, and particularly around transparency and patient-practitioner communications, which ought to be studied in conjunction with DL modeling in medical imaging.
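One simple way to produce such a heat map is occlusion sensitivity: mask one region at a time and record how much the model's output drops. The sketch below illustrates the idea with a toy scoring function standing in for a trained model; it is not the method of any cited study, and `toy_score` and the image are hypothetical.

```python
def occlusion_heatmap(image, score_fn, patch=1, fill=0.0):
    """Heat map of score drops when each patch of the image is masked."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for y in rows:
                for x in cols:
                    occluded[y][x] = fill
            drop = baseline - score_fn(occluded)
            for y in rows:
                for x in cols:
                    heat[y][x] = drop
    return heat


def toy_score(image):
    """Hypothetical stand-in for a model: mean intensity of the central 2x2 region."""
    return sum(image[y][x] for y in (1, 2) for x in (1, 2)) / 4.0


toy_image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_heatmap(toy_image, toy_score)
for row in heat:
    print(row)
```

The map highlights where the score is sensitive to the input, here the central region, but it does not say which lesion characteristics the model relied on, which is the limitation noted above.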

Another common problem limiting DL algorithms is model generalizability. There may be potential factors in the training data that would affect the performance of DL models in different data distribution settings28. For example, a model trained only in the US may not perform well in Asia, because a model trained using data from predominantly Caucasian patients may not perform well across other ethnicities. One solution to improve generalizability and reduce bias is to conduct large, multicenter studies which can enable the analysis of nationalities, ethnicities, hospital specifics, and population distribution characteristics37. Societal biases can also affect the performance of DL models; bias exists in DL algorithms because a training dataset may not include appropriate proportions of minority groups. For example, a DL algorithm for melanoma diagnosis in a dermatological study may lack diversity in terms of skin color and genomic data, which may cause an under-representation of minority groups38. To eliminate embedded prejudice, efforts should be made to carry out DL algorithm research which provides a more realistic representation of global populations.

As we have seen, the included studies were mostly retrospective with extensive variation in methods and reporting. More high-quality studies such as prospective studies and clinical trials are needed to enhance the current evidence base. We also focused on DL algorithms for breast and cervical cancer detection using medical imaging. Therefore, we made no attempt to generalize our findings to other types of AI, such as conventional machine learning models. While there were a reasonable number of studies for this meta-analysis, the number of studies for each imaging modality, such as cytology or colposcopy, was limited. Therefore, the results of the subgroup analyses around imaging modality need to be interpreted with caution. We also selected only studies in which histopathology was used as the reference standard. Consequently, some DL studies that may have shown promise but did not have confirmatory histopathologic results were excluded. Even though publication bias was not identified through funnel plot analysis (Supplementary Fig. 3) based on data extracted from 20 studies, the lack of prospective studies and the potential absence of studies with negative results can cause bias. As such, we would encourage deep learning researchers in medical imaging to report studies which do not reject the null hypothesis because this will ensure evidence clusters around true effect estimates.

It remains necessary to promote deep learning in medical imaging studies for breast or cervical cancer detection. First, however, we suggest improving breast and cervical data quality and establishing unified standards. Developing DL algorithms requires reliable, high-quality images tagged with appropriate histopathological labels. Likewise, it is important to establish unified standards to improve the quality of the digital image-production, the collection process, imaging reports, and final histopathological diagnosis. Combining DL algorithm results with other biomarkers may prove useful to improve risk discrimination for breast or cervical cancer detection. An example would be a DL model for cervical imaging that incorporates additional clinical information, i.e. cytology and HPV typing, which could improve overall diagnostic performance39,40. Secondly, we need to improve the error correction ability and compatibility of DL algorithms. Developing DL algorithms that are more generalizable and less susceptible to bias may require larger, multicenter datasets which incorporate diverse nationalities and ethnicities, as well as different socioeconomic statuses, if we are to implement algorithms in real-world settings.

This also highlights the need for international reporting guidelines for DL algorithms in medical imaging. Existing reporting guidelines such as STARD41 for diagnostic accuracy studies and TRIPOD42 for conventional prediction models are not directly applicable to DL model studies. The recent publication of the CONSORT-AI43 and SPIRIT-AI44 guidelines is welcome, but we await disease-specific DL guidelines. Furthermore, we would encourage organizations to develop diverse teams, combining computer scientists and clinicians to solve clinical problems using DL algorithms. Even though DL algorithms appear like black boxes with unexplainable decision-making outputs, these technologies need to be discussed for development and require additional clinical information45,46. Finally, medical computer vision algorithms do not exist in a vacuum; we must integrate DL algorithms into routine clinical workflows and across entire healthcare systems to assist doctors and augment decision-making. Therefore, it is crucial that clinicians understand the information each algorithm provides and how this can be integrated into clinical decisions which enhance efficiency without absorbing resources. For any algorithm to be incorporated into existing workflows it has to be robust and scientifically validated for clinical and personal utility.

We tentatively suggest that DL algorithms could be useful for detecting breast and cervical cancer using medical imaging, with equivalent performance to human clinicians in terms of sensitivity and specificity. However, this finding is based on poor study designs and reporting, which could lead to bias and overestimation of algorithmic performance. Standardized guidelines around study methods and reporting are needed to improve the quality of DL model research. This may help to facilitate the transition into clinical practice, although further research is required.

Methods

Protocol registration and study design

The study protocol was registered with the PROSPERO International register of systematic reviews, number CRD42021252379. The study was conducted according to the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines47. No ethical approval or informed consent was required for the current systematic review and meta-analysis.

Search strategy and eligibility criteria

In this study, we searched Medline, Embase, IEEE, and the Cochrane Library until April 2021. No restrictions were applied around regions, languages, or publication types; however, letters, scientific reports, conference abstracts, and narrative reviews were excluded. The full search strategy for each database was developed in collaboration with a group of experienced clinicians and medical researchers. Please see Supplementary Note 1 for further details.

Eligibility assessment was conducted by two independent investigators, who screened titles and abstracts and selected all relevant citations for full-text review. Disagreements were resolved through discussion with another collaborator. We included studies that reported the diagnostic performance of one or more DL models for the early detection of breast or cervical cancer using medical imaging. Studies reporting any diagnostic outcome, such as accuracy, sensitivity, or specificity, could be included. There was no restriction on participant characteristics, type of imaging modality, or the intended context for using DL models.

Only histopathology was accepted as the study reference standard. As such, imperfect ground truths, such as expert opinion or consensus, and other clinical testing were rejected. Likewise, medical waveform data or investigations into the performance of image segmentation were excluded because these could not be synthesized with histopathological data. Animal studies and non-human samples were also excluded, and duplicates were removed. The primary outcomes were various diagnostic performance metrics. Secondary analysis included an assessment of study methodologies and reporting standards.

Data extraction

Two investigators independently extracted study characteristics and diagnostic performance data using a predetermined data extraction sheet. Again, uncertainties were resolved by a third investigator. Binary diagnostic accuracy data were extracted directly into contingency tables, which included true-positives, false-positives, true-negatives, and false-negatives. These were then used to calculate pooled sensitivity, pooled specificity, and other metrics. If a study provided multiple contingency tables for the same or for different DL algorithms, we assumed that they were independent of each other.
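As an illustration of how a single contingency table yields the per-study metrics described above, the following minimal Python sketch computes sensitivity and specificity from true-positive, false-positive, true-negative, and false-negative counts. The class name and the counts are hypothetical and are not taken from any included study; pooling across studies is a separate step and is not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContingencyTable:
    """2x2 diagnostic table judged against the histopathological reference standard."""
    tp: int  # true-positives
    fp: int  # false-positives
    tn: int  # true-negatives
    fn: int  # false-negatives

    def sensitivity(self) -> float:
        """Proportion of diseased cases the algorithm flags: TP / (TP + FN)."""
        diseased = self.tp + self.fn
        if diseased == 0:
            raise ValueError("sensitivity is undefined without diseased cases")
        return self.tp / diseased

    def specificity(self) -> float:
        """Proportion of non-diseased cases the algorithm clears: TN / (TN + FP)."""
        healthy = self.tn + self.fp
        if healthy == 0:
            raise ValueError("specificity is undefined without non-diseased cases")
        return self.tn / healthy


# Hypothetical counts, chosen only for illustration.
table = ContingencyTable(tp=88, fp=16, tn=84, fn=12)
print(f"sensitivity={table.sensitivity():.2f}, specificity={table.specificity():.2f}")
```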

Quality assessment

The risk of bias and applicability concerns of the included studies were assessed by the three investigators using the quality assessment of diagnostic accuracy studies 2 (QUADAS-2) tool48.

Statistical analysis

Hierarchical summary receiver operating characteristic (SROC) curves were used to assess the diagnostic performance of DL algorithms. The 95% confidence intervals (CIs) and prediction regions were generated around averaged sensitivity, specificity, and AUC estimates in SROC figures. Further meta-analysis was performed to report the best accuracy in studies with multiple DL algorithms from contingency tables. Heterogeneity was assessed using the I² statistic. We also conducted subgroup meta-analyses and regression analyses to explore potential sources of heterogeneity. The random effects model was implemented because of the assumed differences between studies. Publication bias was assessed visually using funnel plots.
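To make the roles of the I² statistic and the random effects model concrete, the sketch below pools logit-transformed sensitivities with the DerSimonian-Laird estimator. This univariate approach is a simplified stand-in chosen for illustration; it is not the hierarchical SROC model fitted in STATA and SAS for this study. The function names, the 0.5 continuity correction, and the study counts are all assumptions.

```python
import math


def logit_sensitivity(tp, fn):
    """Logit of sensitivity and its approximate variance (0.5 continuity correction)."""
    tp_c, fn_c = tp + 0.5, fn + 0.5
    return math.log(tp_c / fn_c), 1.0 / tp_c + 1.0 / fn_c


def random_effects_pool(effects, variances):
    """DerSimonian-Laird pooling: returns (pooled effect, standard error, tau^2, I^2 in %)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, effects)) / total
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = total - sum(w * w for w in weights) / total
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    re_weights = [1.0 / (v + tau2) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    return pooled, math.sqrt(1.0 / sum(re_weights)), tau2, i2


def inv_logit(x):
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical (true-positive, false-negative) counts for three studies.
studies = [(90, 10), (80, 20), (170, 30)]
effects, variances = zip(*(logit_sensitivity(tp, fn) for tp, fn in studies))
pooled, se, tau2, i2 = random_effects_pool(effects, variances)
pooled_sens = inv_logit(pooled)
ci = (inv_logit(pooled - 1.96 * se), inv_logit(pooled + 1.96 * se))
print(f"pooled sensitivity={pooled_sens:.3f}, 95% CI=({ci[0]:.3f}, {ci[1]:.3f}), I2={i2:.1f}%")
```

Pooling on the logit scale keeps the back-transformed estimate and its interval inside the 0 to 1 range. A bivariate or hierarchical model additionally accounts for the correlation between sensitivity and specificity, which this sketch ignores.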

Four separate meta-analyses were conducted: (1) according to validation type, in which DL algorithms were categorized as either internal or external. Internal validation meant that studies were validated using an in-sample dataset, while external validation studies were validated using an out-of-sample dataset; (2) according to cancer type, i.e., breast or cervical cancer; (3) according to imaging modality, such as mammography, ultrasound, cytology, and colposcopy; (4) according to the pooled performance for DL algorithms versus human clinicians using the same dataset.

Meta-analysis was only performed where there were three or more original studies. STATA (version 15.1) and SAS (version 9.4) were used for data analyses. The threshold for statistical significance was set at p < 0.05, and all tests were two-sided.
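The three-study minimum can be expressed as a simple filter over extracted contingency tables. The sketch below is a minimal illustration that assumes each table is a record carrying a study identifier and a subgroup label; the field names and records are hypothetical. It counts distinct studies rather than tables, because one study may contribute several tables.

```python
from collections import defaultdict


def eligible_subgroups(tables, key, min_studies=3):
    """Return the subgroup labels backed by at least `min_studies` distinct studies."""
    study_ids = defaultdict(set)
    for row in tables:
        study_ids[row[key]].add(row["study"])
    return {group for group, ids in study_ids.items() if len(ids) >= min_studies}


# Hypothetical records: study E contributes two tables but counts once.
tables = [
    {"study": "A", "modality": "mammography"},
    {"study": "B", "modality": "mammography"},
    {"study": "C", "modality": "mammography"},
    {"study": "D", "modality": "ultrasound"},
    {"study": "E", "modality": "ultrasound"},
    {"study": "E", "modality": "ultrasound"},
]
print(eligible_subgroups(tables, "modality"))
```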

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The search strategy and aggregated data contributing to the meta-analysis are available in the appendix.

References

Arbyn, M. et al. Estimates of incidence and mortality of cervical cancer in 2018: a worldwide analysis. Lancet Glob. Health 8, e191–e203 (2020).

Li, N. et al. Global burden of breast cancer and attributable risk factors in 195 countries and territories, from 1990 to 2017: results from the Global Burden of Disease Study 2017. J. Hematol. Oncol. 12, 140 (2019).

Sung, H. et al. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 71, 209–249 (2021).

Ginsburg, O. et al. Changing global policy to deliver safe, equitable, and affordable care for women’s cancers. Lancet 389, 871–880 (2017).

Allemani, C. et al. Global surveillance of trends in cancer survival 2000-14 (CONCORD-3): analysis of individual records for 37 513 025 patients diagnosed with one of 18 cancers from 322 population-based registries in 71 countries. Lancet 391, 1023–1075 (2018).

Shah, S. C., Kayamba, V., Peek, R. M. Jr. & Heimburger, D. Cancer Control in Low- and Middle-Income Countries: Is It Time to Consider Screening? J. Glob. Oncol. 5, 1–8 (2019).

Wentzensen, N., Chirenje, Z. M. & Prendiville, W. Treatment approaches for women with positive cervical screening results in low-and middle-income countries. Prev. Med 144, 106439 (2021).

Britt, K. L., Cuzick, J. & Phillips, K. A. Key steps for effective breast cancer prevention. Nat. Rev. Cancer 20, 417–436 (2020).

Brisson, M. et al. Impact of HPV vaccination and cervical screening on cervical cancer elimination: a comparative modelling analysis in 78 low-income and lower-middle-income countries. Lancet 395, 575–590 (2020).

Yang, L. et al. Performance of ultrasonography screening for breast cancer: a systematic review and meta-analysis. BMC Cancer 20, 499 (2020).

Conti, A., Duggento, A., Indovina, I., Guerrisi, M. & Toschi, N. Radiomics in breast cancer classification and prediction. Semin Cancer Biol. 72, 238–250 (2021).

Xue, P., Ng, M. T. A. & Qiao, Y. The challenges of colposcopy for cervical cancer screening in LMICs and solutions by artificial intelligence. BMC Med 18, 169 (2020).

William, W., Ware, A., Basaza-Ejiri, A. H. & Obungoloch, J. A review of image analysis and machine learning techniques for automated cervical cancer screening from pap-smear images. Comput Methods Prog. Biomed. 164, 15–22 (2018).

Muse, E. D. & Topol, E. J. Guiding ultrasound image capture with artificial intelligence. Lancet 396, 749 (2020).

Mandal, R. & Basu, P. Cancer screening and early diagnosis in low and middle income countries: Current situation and future perspectives. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz 61, 1505–1512 (2018).

Torode, J. et al. National action towards a world free of cervical cancer for all women. Prev. Med 144, 106313 (2021).

Coiera, E. The fate of medicine in the time of AI. Lancet 392, 2331–2332 (2018).

Kleppe, A. et al. Designing deep learning studies in cancer diagnostics. Nat. Rev. Cancer 21, 199–211 (2021).

Nagendran, M. et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. BMJ 368, m689 (2020).

Benjamens, S., Dhunnoo, P. & Meskó, B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. NPJ Digit Med 3, 118 (2020).

Liu, X., Rivera, S. C., Moher, D., Calvert, M. J. & Denniston, A. K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI Extension. BMJ 370, m3164 (2020).

Bengtsson, E. & Malm, P. Screening for cervical cancer using automated analysis of PAP-smears. Comput Math. Methods Med 2014, 842037 (2014).

Liu, X. et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health 1, e271–e297 (2019).

Aggarwal, R. et al. Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digit Med 4, 65 (2021).

Zheng, Q. et al. Artificial intelligence performance in detecting tumor metastasis from medical radiology imaging: A systematic review and meta-analysis. EClinicalMedicine 31, 100669 (2021).

Moon, J. H. et al. How much deep learning is enough for automatic identification to be reliable? Angle Orthod. 90, 823–830 (2020).

Beam, A. L., Manrai, A. K. & Ghassemi, M. Challenges to the Reproducibility of Machine Learning Models in Health Care. Jama 323, 305–306 (2020).

Trister, A. D. The Tipping Point for Deep Learning in Oncology. JAMA Oncol. 5, 1429–1430 (2019).

Kim, D. W., Jang, H. Y., Kim, K. W., Shin, Y. & Park, S. H. Design Characteristics of Studies Reporting the Performance of Artificial Intelligence Algorithms for Diagnostic Analysis of Medical Images: Results from Recently Published Papers. Korean J. Radio. 20, 405–410 (2019).

England, J. R. & Cheng, P. M. Artificial Intelligence for Medical Image Analysis: A Guide for Authors and Reviewers. AJR Am. J. Roentgenol. 212, 513–519 (2019).

Cook, T. S. Human versus machine in medicine: can scientific literature answer the question? Lancet Digit Health 1, e246–e247 (2019).

Simon, A. B., Vitzthum, L. K. & Mell, L. K. Challenge of Directly Comparing Imaging-Based Diagnoses Made by Machine Learning Algorithms With Those Made by Human Clinicians. J. Clin. Oncol. 38, 1868–1869 (2020).

Altman, D. G. & Royston, P. What do we mean by validating a prognostic model? Stat. Med 19, 453–473 (2000).

Kim, D. W. et al. Inconsistency in the use of the term “validation” in studies reporting the performance of deep learning algorithms in providing diagnosis from medical imaging. PLoS One 15, e0238908 (2020).

Becker, A. S. et al. Classification of breast cancer in ultrasound imaging using a generic deep learning analysis software: a pilot study. Br. J. Radio. 91, 20170576 (2018).

Becker, A. S. et al. Deep Learning in Mammography: Diagnostic Accuracy of a Multipurpose Image Analysis Software in the Detection of Breast Cancer. Invest Radio. 52, 434–440 (2017).

Wang, F., Casalino, L. P. & Khullar, D. Deep Learning in Medicine-Promise, Progress, and Challenges. JAMA Intern Med 179, 293–294 (2019).

Topol, E. J. High-performance medicine: the convergence of human and artificial intelligence. Nat. Med 25, 44–56 (2019).

Xue, P. et al. Development and validation of an artificial intelligence system for grading colposcopic impressions and guiding biopsies. BMC Med 18, 406 (2020).

Yuan, C. et al. The application of deep learning based diagnostic system to cervical squamous intraepithelial lesions recognition in colposcopy images. Sci. Rep. 10, 11639 (2020).

Bossuyt, P. M. et al. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ 351, h5527 (2015).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ 350, g7594 (2015).

Liu, X., Cruz Rivera, S., Moher, D., Calvert, M. J. & Denniston, A. K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat. Med 26, 1364–1374 (2020).

Cruz Rivera, S., Liu, X., Chan, A. W., Denniston, A. K. & Calvert, M. J. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Nat. Med 26, 1351–1363 (2020).

Huang, S. C., Pareek, A., Seyyedi, S., Banerjee, I. & Lungren, M. P. Fusion of medical imaging and electronic health records using deep learning: a systematic review and implementation guidelines. NPJ Digit Med 3, 136 (2020).

Guo, H. et al. Heat map visualization for electrocardiogram data analysis. BMC Cardiovasc Disord. 20, 277 (2020).

Moher, D., Liberati, A., Tetzlaff, J. & Altman, D. G. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ 339, b2535 (2009).

Whiting, P. F. et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern Med 155, 529–536 (2011).

Xiao, M. et al. Diagnostic Value of Breast Lesions Between Deep Learning-Based Computer-Aided Diagnosis System and Experienced Radiologists: Comparison the Performance Between Symptomatic and Asymptomatic Patients. Front Oncol. 10, 1070 (2020).

Zhang, X. et al. Evaluating the Accuracy of Breast Cancer and Molecular Subtype Diagnosis by Ultrasound Image Deep Learning Model. Front Oncol. 11, 623506 (2021).

Zhou, J. et al. Weakly supervised 3D deep learning for breast cancer classification and localization of the lesions in MR images. J. Magn. Reson Imaging 50, 1144–1151 (2019).

Agnes, S. A., Anitha, J., Pandian, S. I. A. & Peter, J. D. Classification of Mammogram Images Using Multiscale all Convolutional Neural Network (MA-CNN). J. Med Syst. 44, 30 (2019).

Tanaka, H., Chiu, S. W., Watanabe, T., Kaoku, S. & Yamaguchi, T. Computer-aided diagnosis system for breast ultrasound images using deep learning. Phys. Med Biol. 64, 235013 (2019).

Kyono, T., Gilbert, F. J. & van der Schaar, M. Improving Workflow Efficiency for Mammography Using Machine Learning. J. Am. Coll. Radio. 17, 56–63 (2020).

Qi, X. et al. Automated diagnosis of breast ultrasonography images using deep neural networks. Med Image Anal. 52, 185–198 (2019).

Salim, M. et al. External Evaluation of 3 Commercial Artificial Intelligence Algorithms for Independent Assessment of Screening Mammograms. JAMA Oncol. 6, 1581–1588 (2020).

Zhang, Q. et al. Dual-mode artificially-intelligent diagnosis of breast tumours in shear-wave elastography and B-mode ultrasound using deep polynomial networks. Med Eng. Phys. 64, 1–6 (2019).

Wang, Y. et al. Breast Cancer Classification in Automated Breast Ultrasound Using Multiview Convolutional Neural Network with Transfer Learning. Ultrasound Med Biol. 46, 1119–1132 (2020).

Li, Y., Wu, W., Chen, H., Cheng, L. & Wang, S. 3D tumor detection in automated breast ultrasound using deep convolutional neural network. Med Phys. 47, 5669–5680 (2020).

McKinney, S. M. et al. International evaluation of an AI system for breast cancer screening. Nature 577, 89–94 (2020).

Shen, L. et al. Deep Learning to Improve Breast Cancer Detection on Screening Mammography. Sci. Rep. 9, 12495 (2019).

Suh, Y. J., Jung, J. & Cho, B. J. Automated Breast Cancer Detection in Digital Mammograms of Various Densities via Deep Learning. J. Pers. Med 10, 211 (2020).

O'Connell, A. M. et al. Diagnostic Performance of An Artificial Intelligence System in Breast Ultrasound. J. Ultrasound Med. 41, 97–105 (2021).

Rodriguez-Ruiz, A. et al. Stand-Alone Artificial Intelligence for Breast Cancer Detection in Mammography: Comparison With 101 Radiologists. J. Natl Cancer Inst. 111, 916–922 (2019).

Adachi, M. et al. Detection and Diagnosis of Breast Cancer Using Artificial Intelligence Based assessment of Maximum Intensity Projection Dynamic Contrast-Enhanced Magnetic Resonance Images. Diagnostics (Basel) 10, 330 (2020).

Samala, R. K. et al. Multi-task transfer learning deep convolutional neural network: application to computer-aided diagnosis of breast cancer on mammograms. Phys. Med Biol. 62, 8894–8908 (2017).

Schaffter, T. et al. Evaluation of Combined Artificial Intelligence and Radiologist Assessment to Interpret Screening Mammograms. JAMA Netw. Open 3, e200265 (2020).

Kim, H. E. et al. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digit Health 2, e138–e148 (2020).

Wang, F. et al. Study on automatic detection and classification of breast nodule using deep convolutional neural network system. J. Thorac. Dis. 12, 4690–4701 (2020).

Yu, T. F. et al. Deep learning applied to two-dimensional color Doppler flow imaging ultrasound images significantly improves diagnostic performance in the classification of breast masses: a multicenter study. Chin. Med J. (Engl.) 134, 415–424 (2021).

Sasaki, M. et al. Artificial intelligence for breast cancer detection in mammography: experience of use of the ScreenPoint Medical Transpara system in 310 Japanese women. Breast Cancer 27, 642–651 (2020).

Zhang, C., Zhao, J., Niu, J. & Li, D. New convolutional neural network model for screening and diagnosis of mammograms. PLoS One 15, e0237674 (2020).

Bao, H. et al. The artificial intelligence-assisted cytology diagnostic system in large-scale cervical cancer screening: A population-based cohort study of 0.7 million women. Cancer Med 9, 6896–6906 (2020).

Holmström, O. et al. Point-of-Care Digital Cytology With Artificial Intelligence for Cervical Cancer Screening in a Resource-Limited Setting. JAMA Netw. Open 4, e211740 (2021).

Cho, B. J. et al. Classification of cervical neoplasms on colposcopic photography using deep learning. Sci. Rep. 10, 13652 (2020).

Bao, H. et al. Artificial intelligence-assisted cytology for detection of cervical intraepithelial neoplasia or invasive cancer: A multicenter, clinical-based, observational study. Gynecol. Oncol. 159, 171–178 (2020).

Hu, L. et al. An Observational Study of Deep Learning and Automated Evaluation of Cervical Images for Cancer Screening. J. Natl Cancer Inst. 111, 923–932 (2019).

Hunt, B. et al. Cervical lesion assessment using real-time microendoscopy image analysis in Brazil: The CLARA study. Int J. Cancer 149, 431–441 (2021).

Wentzensen, N. et al. Accuracy and Efficiency of Deep-Learning-Based Automation of Dual Stain Cytology in Cervical Cancer Screening. J. Natl Cancer Inst. 113, 72–79 (2021).

Yu, Y., Ma, J., Zhao, W., Li, Z. & Ding, S. MSCI: A multistate dataset for colposcopy image classification of cervical cancer screening. Int J. Med Inf. 146, 104352 (2021).

Acknowledgements

This study was supported by CAMS Innovation Fund for Medical Sciences (Grant #: CAMS 2021-I2M-1-004).

Author information

Contributions

P.X., Y.J., and Y.Q. conceptualised the study; P.X., J.W., D.Q., and H.Y. designed the study, extracted data, conducted the analysis, and wrote the manuscript. P.X. and S.S. revised the manuscript. All authors approved the final version of the manuscript and take accountability for all aspects of the work. P.X. and J.W. contributed equally to this article.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xue, P., Wang, J., Qin, D. et al. Deep learning in image-based breast and cervical cancer detection: a systematic review and meta-analysis. npj Digit. Med. 5, 19 (2022). https://doi.org/10.1038/s41746-022-00559-z

DOI: https://doi.org/10.1038/s41746-022-00559-z
