Abstract

Clinical trial matching is the task of identifying trials for which patients may be eligible. Typically, this task is labor-intensive and requires detailed verification of patient electronic health records (EHRs) against the stringent inclusion and exclusion criteria of clinical trials. This process also results in many patients missing out on potential therapeutic options. Recent advancements in Large Language Models (LLMs) have made automating patient-trial matching possible, as shown in multiple concurrent research studies. However, the current approaches are confined to constrained, often synthetic, datasets that do not adequately mirror the complexities encountered in real-world medical data. In this study, we present an end-to-end large-scale empirical evaluation of a clinical trial matching system and validate it using real-world EHRs. We perform comprehensive experiments with proprietary LLMs and our custom fine-tuned model called OncoLLM and show that OncoLLM outperforms GPT-3.5 and matches the performance of qualified medical doctors for clinical trial matching.

Similar content being viewed by others

Introduction

Clinical trials are essential to advance scientific discovery, improve patient care, and drive innovation in medicine1. Their significance is particularly pronounced in oncology, where they can provide potential treatment options for patients with limited alternatives2,3,4,5. Despite this importance, a substantial number of clinical trials encounter recruitment challenges. Only about 7% of adults participate in cancer clinical trials1. One of the major factors contributing to this recruitment bottleneck is the considerable challenge that physicians encounter when systematically reviewing each patient against the list of available trials. This cumbersome task leads to a lower rate of trial recommendations6, often due to the intricacy involved in deciphering the eligibility of a patient against the nuanced inclusion and exclusion criteria of these trials7,8,9,10,11.

The core information pertinent to the inclusion and exclusion criteria of clinical trials are primarily found within unstructured EHRs, such as medical notes12. A recent study shows that this is particularly relevant for oncology, where key parameters for clinical trial screening are virtually absent in structured EHRs, such as cancer staging13. These conditions create substantial challenges in interpretation at scale. Even when dealing with structured EHRs, creating queries using the trial criteria often proves challenging in interpretation and scaling14,15. To address this complexity, several research studies leveraging natural language processing (NLP) have emerged, aiming to streamline the trial matching process.

There are two conventional approaches for applying NLP to match patients with clinical trials. One common approach involves converting the inclusion and exclusion criteria of trials into structured queries13,15,16,17. These queries can then be utilized to efficiently retrieve relevant data from dedicated clinical data warehouses. An alternate strategy focuses on the extraction of key data elements from patient notes and reformatting this information into a structured layout to aid in filtering clinical trials12.

While both approaches have demonstrated effectiveness in clinical trial matching, they come with limitations. Both approaches frequently rely on rule-based engineering, which is cumbersome and inflexible. Additionally, scaling these approaches to encompass the wide range of clinical trials, the variety of potential patient scenarios, and the diverse clinical notes from different EHR systems is also challenging. Recent advancements in leveraging LLMs for patient-trial matching show considerable promise14,18,19,20. However, these studies predominantly utilize relatively simplistic or synthetic patient notes and clinical trial setups, which do not accurately reflect the complexity of real-world scenarios. Furthermore, they do not deal with the long context scenarios where a single patient may have hundreds of notes in their EHRs. While14 aims to address the entire patient journey, it usually limits its focus to a narrowly defined variable, which they extract using a highly specialized model. This method is prone to overfitting due to the intricacies of building a training dataset robust enough to train such a model. Moreover, it struggles to encompass the complete range of clinical trial criteria, potentially leading to inadequate processing of certain criteria, especially the more complex ones. Finally, most of these methods, if not all, rely on proprietary LLMs, which are difficult to deploy in the sensitive and privacy-focused healthcare domains21.

To the best of our knowledge, our study is the first to present a comprehensive pipeline to demonstrate an end-to-end clinical matching system using real-world patient EHR data and LLMs. This approach solely relies on the direct input of unstructured patient notes and the detailed clinical trial inclusion and exclusion criteria into the LLMs.

Our contributions can be summarized as follows:

-

Scalable end-to-end pipeline: We introduce a novel pipeline, PRISM, which performs patient records interpretation and directly uses the semantics of inclusion and exclusion criteria in clinical trials to match patients through a mechanism to directly ingest free text criteria into the pipeline without any rule-based processing.

-

Fine-tuned LLM: Our custom-tuned model, OncoLLM, demonstrates superior performance over GPT-3.5 and comparable efficacy to GPT-4. OncoLLM is significantly smaller than both and can be hosted within the private infrastructure to address privacy concerns.

-

Benchmarking against medical doctors: For the first time, we present evidence that LLMs can almost match the performance of qualified medical doctors for the task of clinical trial matching. This finding suggests the potential of LLMs for real-world clinical applications.

-

Comprehensive evaluation: We conduct an extensive evaluation of the proposed pipeline, not only in ranking clinical trials but also in providing detailed interpretation for the eligibility of patients with specific trial criteria.

-

Ranking algorithm: We propose a novel algorithm for ranking clinical trials, significantly improving the average position of relevant trials compared to a baseline approach.

-

Both search directionalities: We demonstrate that the same pipeline used to identify trials for patients (patient-centric search) can also enable researchers to create eligible patient cohorts for a trial (trial-centric search). Our work is the first to demonstrate both directionalities using the same pipeline.

Results

Criteria/question level accuracy

We compare the accuracy of different LLMs in assessing whether a given patient meets a particular clinical trial’s criteria. We evaluate the performance of OncoLLM model, a 14B parameter model developed by our team, against several prominent LLMs, including Azure OpenAI’s GPT-3.5 Turbo (175B), Qwen14B-Chat (14B)22, Mistral-7B-Instruct (7B)23, Mixtral-8 × 7B-Instruct (56B)24, and Azure OpenAI’s GPT-4. These models were considered the best general-purpose models according to the open leaderboards at the time this research began. We further compare OncoLLM against domain-specific and task-specific models such as Meditron25, Medllama (Hugging Face. ”JSL-MedLlama-3-8B-v2.0.” Available at: https://huggingface.co/johnsnowlabs/JSL-MedLlama-3-8B-v2.0. Accessed July 29, 2024, and TrialLlama19. While Meditron and TrialLlama only had a context length of 4000 which prevented us from testing them on all the samples, MedLlama was tested on the entire test set.) Fig. 1a shows the performance of different LLMs at criteria/question level answering accuracy. The accuracy is defined as the percentage of instances in which the model’s predictions match the clinically annotated answers for questions regarding patients, derived from the inclusion and exclusion criteria of clinical trials. Our results demonstrate that OncoLLM significantly outperforms both the similarly sized models and the larger GPT-3.5 Turbo, achieving an accuracy of 63% compared to 53% by GPT-3.5 Turbo, 43% by Qwen14B-Chat, 41% by Mistral-7B-Instruct, and 49% by Mixtral-8 × 7B-Instruct. It also outperforms domain-specific models such as Meditron and MedLlama, along with the task-specific model TrialLlama by significant margins. Although GPT-4 reached a higher accuracy of 68%, it incurs substantially greater computational costs, being approximately more than 100x times larger than OncoLLM (even though there are no official details on the parameter size of GPT-4, it is estimated to be more than a trillion parameters). Our statistical analysis conducted using McNemar’s test for GPT-3.5 Turbo and OncoLLM also confirms the significance of the results with a p-value < 0.05 (0.0013). Additionally, the Mistral-7B-Instruct model requires rule-based regex processing to output in JSON format, highlighting further limitations beyond raw accuracy. Another key aspect of this finding is that institutions can implement OncoLLM in their privacy-compliant infrastructure rather than transmitting sensitive patient data to external cloud servers. In an additional analysis where all questions initially marked as ‘N/A’ (meaning not enough information was available in patient records to answer those questions) were excluded to reduce ambiguity, we observed significant changes in model performances. By removing these uncertain inputs, the accuracy of our OncoLLM model increased to 66% (from 63%), and the accuracy of Azure OpenAI’s GPT-4 model also rose, reaching 72% (from 68%). Interestingly, the accuracy rates of Azure OpenAI’s GPT-3.5 Turbo and the open-sourced models decreased, suggesting that these models might rely more on ambiguous inputs to maintain higher performance levels or may exhibit less robustness in more clearly defined scenarios. This result indicates that some of the performance of the other “weaker” models is inflated, highlighting the gap between our model’s performance and GPT-3.5. This is further illustrated in Fig. 2. The graph displays the question level accuracy of different models plotted against the inverse of # of N/A responses for various models. OncoLLM and GPT-4 most closely match the actual N/A count determined by human annotators.

a OncoLLM outperforms most of the prominent LLMs at criteria/question level answering accuracy. The first column, All, shows the question level accuracy across all the 720 Q&A dataset for oncology-related clinical trials. The second column, Without N/A samples shows question level accuracy after removing those questions whose answers were `N/A' by medical experts. * Human accuracy was obtained only on 109 questions, which was annotated by two medical experts ** Meditron and TrialLLAMA could only process ≈30% of samples due to their small context window of 4k tokens. b OncoLLM (in red) performs consistently well across all the relevant oncology-related concepts.

This figure presents a comparison of model accuracy with the frequency of “N/A” outputs. A higher frequency of “N/A” outputs indicates a lower usefulness of the model. The size of each bubble represents the number of parameters of the model. This highlights the close performance of OncoLLM to GPT-4 despite having relatively fewer parameters.

We also conducted a comparison of the accuracy of each model on the Q&A task at the Concept level. For this analysis, we classified each question into various oncology-related clinical trial concepts, such as cancer type, cancer sub-type, biomarkers, etc. We used GPT-4 to label each criterion with a concept from a “book of concepts” (See Supplementary Note 2 and Supplementary Fig. 10). Each question created using that criterion was assigned the same concept as that of the parent criterion. These concepts were decided on the basis of physician input and were organized into different tiers based on their estimated clinical importance. For the purpose of this task, we define the clinical importance of an I/E criteria as the measure of confidence in rejecting or selecting a particular trial solely on the basis of patient meeting or not meeting that criteria. Overall, we delineated 13 different concepts with the help of expert oncologists and categorized them into four tiers (Tier 1, Tier 2, Tier 3, and Tier 4), with the importance level as Tier 1 > Tier 2 > Tier 3 > Tier 4 (See Supplementary Note 2 for details). We observed a similar pattern of accuracy at the concept level as well. OncoLLM generally secured the second position for most concepts. Notably, it outperformed GPT-4 in the biomarkers concept (Fig. 1b).

We manually annotated the Q&A dataset from 10,000 notes and 50 cancer patients. We extracted 720 questions (each inclusion and exclusion criteria was converted into multiple questions with “yes” or “no” as possible answers), ensuring a balance across various patients, disease types, and categories of trial criteria (See section “Methods”). To simplify the annotation, we used GPT-3.5 to identify and assess relevant sections for each question, reducing the potential workload from 800,000 segments to approximately 8,000. GPT-3.5 showed 98% precision and 94% recall in determining the relevance of segments to questions after refining our prompts. However, due to the high cost and token consumption (>2 billion) for processing, we opted not to use this method in our final setup as it would cost too much in the end-to-end pipeline for all the patients and trials. Five medical doctors annotated each question based on the chunks marked as relevant by GPT-3.5, labeling them as “YES”, “NO”, or “N/A”. Each of the 720 questions was reviewed by at least one doctor, with about 12 randomly selected questions reviewed by all five. The best-performing annotators from this round participated in a second round, where 109 randomly selected questions were used to evaluate inter-annotator reliability. The average inter-annotator agreement (calculated as the percentage of times the annotators gave the same answer to a particular question) among all five annotators was 64 and 70% for the two selected annotators (See Supplementary Note 1).

Ranking scores

In this analysis, we conducted a comparative assessment of the ranking efficacy between our proposed approach (outlined in the section “Methods”) for OncoLLM compared to the GPT-3.5 Turbo model. Due to the cost associated with matching 1000 patient-trial pairs, we did not evaluate GPT-4 for this task. To facilitate ranking, we utilized a scoring module (detailed in the section “Methods”), which assigns a matching score to each patient-trial pair. This score was used to rank trials for a patient and vice-versa.

Patient-centric ranking

For this evaluation, we assembled a cohort of 98 cancer patients who had participated in clinical trials. We identified ten real-world trials for each patient, all of which shared the same cancer disease type (lung cancer, breast cancer, etc.) and were actively recruiting patients at the time the patient enrolled in a clinical trial. Among these ten trials, one trial served as the ground truth trial, denoting the trial in which the patient was actually enrolled (see section “Methods”). To assess the performance, we analyzed the proportion of times the rank of the ground truth trial fell within the top-three ranks. This metric directly reflects the practical utility of our method, as it aids in the efficient shortlisting of eligible trials for patients within a hospital or institution setting. It was finalized after interviewing ten clinical research coordinators for their workflows. Their existing process involved shortlisting two or three trials for patients by looking at the upcoming appointments of the oncologists.

In our assessment, OncoLLM demonstrated superior performance compared to GPT-3.5 Turbo across all three scoring methods (Fig. 3). Specifically, with the Weighted Tier method, OncoLLM achieved a score of 0.65, outperforming GPT-3.5 Turbo’s score of 0.59. Similarly, with the Iterative Tier method, OncoLLM achieved a score of 0.63, surpassing GPT-3.5 Turbo’s score of 0.61. Additionally, with the Simple method, OncoLLM attained a score of 0.62 compared to GPT-3.5 Turbo’s score of 0.57. These findings are consistent with our observations regarding question-level accuracy (Fig. 1a); typically, a model exhibiting higher accuracy in answering eligibility questions tends to perform better in ranking tasks.

a OncoLLM (Weighted tier) ranked ground truth trials 65.3% of times in the top-3 among ten considered trials, while GPT-3.5 Turbo (Iterative tier) ranked ground truth trials only 61.2% of times in the top-3. b OncoLLM (Weighted tier) scored an NDCG (Normalized Discounted Cumulative Gain) score of 68% as compared to 62.6% of GPT-3.5 Turbo (Iterative tier). See section “Methods” for details on the scoring methods.

To evaluate the utility of both GPT-3.5 Turbo and OncoLLM, we conducted an additional analysis for all patient and ground truth trials. Ideally, for ground truth trials where a patient is already enrolled, all eligibility criteria should be met. With this hypothesis in mind, we examined the statistics of all eligibility criteria for the ground truth trials. A superior system should meet a higher number of criteria, and produce fewer indecisive cases (instances where the system failed to produce a definitive Met/Not-Met result for the given criteria, referred to as “N/A” henceforth). We discovered that OncoLLM meets 62% of the criteria on average across all 98 patients, compared to GPT-3.5 Turbo’s score of 55.4% (Fig. 4A). This analysis was extended to instances where the ground truth trial ranked within the top-3. Predictably, the overall criteria Met numbers increased for both models, with OncoLLM (66.7%) outperforming GPT-3.5 Turbo (59%). Intriguingly, the overall average for OncoLLM across all ground truth trials remains higher than GPT-3.5 Turbo’s score for top-three ranked ground truth trials. When it comes to ranking trials, OncoLLM’s outcomes prove more practical as GPT-3.5 Turbo tends to return “N/A” responses to simplified questions derived from top-three ranked ground truth trials considerably more often than OncoLLM (Figs. 4C, 2). When a human is involved in the loop, this becomes problematic as N/A responses provide very little information to determine the patient’s final eligibility.

Trial-centric ranking

For this evaluation, we assembled a set of 36 clinical trials, all of which recruited patients from the same institution. For each clinical trial, we compiled a group of patients who shared the same cancer disease type and were enrolled in some trials when the specified clinical trial was active. This patient set also included those who were actually enrolled in the specified trial. On average, each trial had 2 ± 1 ground truth patients and 13 ± 8 additional patients. Here, “ground truth patients” refer to those who were enrolled in the specified trial. Based on this data, we computed the NDCG (Normalized Discounted Cumulative Gain) score for ranking patients for a given clinical trial. To calculate the NDCG score, we utilized a binary relevance score, where ground truth patients were assigned a relevance score of 1, and the remaining patients were assigned a relevance score of 0.

In our evaluation, OncoLLM exhibited superior performance compared to GPT-3.5 Turbo across all scoring methods, except for the Simple method where both models achieved similar scores. Specifically, using the Weighted Tier method, OncoLLM attained a score of 0.68, outperforming GPT-3.5 Turbo’s score of 0.62. Similarly, with the Iterative Tier method, OncoLLM achieved a score of 0.66, surpassing GPT-3.5 Turbo’s score of 0.63. For the Simple method, both models scored 0.62. Once again, these results align with our observations regarding question-level accuracy (Fig. 1a); typically, a model demonstrating higher accuracy in answering eligibility questions should excel in ranking tasks.

Error analysis

Trial level

While the patients were enrolled in a specific trial, this did not preclude their eligibility for other concurrent trials. Consequently, the ranking numbers do not accurately reflect the model’s performance strength. To further assess this, we engaged a qualified clinical data expert to manually check the eligibility of patients for 10 randomly chosen trials that OncoLLM ranked in the top-1, but which were not the trials in which the patients were actually enrolled. We discovered that out of these ten trials, the patients were deemed eligible for 9, effectively increasing the actual top-3 accuracy to ≈95%. Interestingly, the only trial for which a patient was ineligible was due to the presence of tumors in both breasts; the patient was eligible for the trial concerning the tumor in the left breast but not for the one in the right breast. This highlights OncoLLM’s effectiveness in identifying suitable trials for patients.

Criteria level

To assess the capability of OncoLLM in generating accurate interpretation and citations of corresponding trial criterion to a patient, a set of questions (provided in the supplement) and their corresponding responses generated by the model were randomly selected and reviewed by qualified medical professionals. These reviewers were tasked with verifying the correctness of the responses (categorized as “Yes”, “No”, or “N/A”) and the accuracy of interpretation and citations provided with each answer. Our findings indicated that the accuracy of the final answers was 75.26%. When the final answer was deemed correct, the accompanying explanations were accurate 90.91% of the time. Additionally, citations included in the explanations were rated as correct 86.71% of the time and partially correct 6.29% of the time, highlighting OncoLLM’s effectiveness in delivering reliable information and its utility in supporting further manual verification processes.

Cost-benefit analysis

We also conducted a cost analysis to estimate the expenses associated with running different OncoLLM vs Azure OpenAI’s GPT-4 for patient-trial matching, considering 98 patients with ten trials for each patient (refer to section “Methods”). For estimation, we calculated the input prompt token and expected generated token count, from which we derived the pricing. For OncoLLM, we benchmarked the input and output generation speed (tokens/sec) when hosted using vLLM and calculated the running time using the formula:

The final cost is determined by multiplying the hourly cost of the Google Cloud GPU virtual machine by the running time. For Azure OpenAI’s GPT-4 model, we estimated the price using their pricing table (Pricing Table: https://azure.microsoft.com/en-in/pricing/details/cognitive-services/openai-service/).

Our calculations indicate that the cost of operating OncoLLM is ~$170, while running GPT-4 incurs an expense of around $6055. This implies an expense increase of about 35-fold. When we dissect the cost to compute the expense of a single patient-trial match, OncoLLM costs ~$0.17 per patient-trial pair, whereas GPT-4 demands $6.18 per patient-trial pair. In addition, we have not considered several other optimizations available for LLMs26,27 in this assessment. Other factors not taken into account include the availability of various cloud services offering more affordable computation, as well as the potential for a local in-house setup. We fine-tuned the base LLM using low-rank adaptation (LoRA) adapters, which is both time-efficient and resource-conservative. The final model required ~7 days of training on a machine equipped with eight NVIDIA A100 GPUs (each with 40GB memory). The total training cost was ~$2,688 USD (calculated as 7 days × 24 h/day × $16 USD/hour). Although this represents a significant initial investment, it is a one-time expense that can be rapidly amortized by screening a few hundred patients.

Importance of using all notes

To determine the necessity of utilizing all patient notes, we conducted a detailed analysis of 980 patient-trial pairs. For each pair, we pinpointed two crucial data points: the dates of the earliest and latest chunks of patient EHR information cited by OncoLLM while evaluating any trial criterion, as detailed in section “Error analysis”. OncoLLM demonstrates high accuracy in identifying relevant evidence, suggesting these chunks represent the pertinent data for assessing trial criteria. We analyzed the distribution of this relevant information across the patient journey by plotting these data points against the number of trial criteria (See Fig. 5). The x-axis measured the number of days between the earliest and latest chunks. Our findings indicate that the information necessary for eligibility screening can span over a year, underscoring the importance of accessing comprehensive patient notes. However, the most critical data were often found in the most recent notes. Given the unpredictable location of relevant information, it is advisable to review the entire patient journey.

Discussion

In this study, we developed and validated an end-to-end pipeline for matching patients to clinical trials based on inclusion and exclusion criteria and unstructured patient notes from real-world EHRs. Our findings indicate that leveraging LLMs, with carefully implemented controls, could significantly shift the paradigm for accruing and enrolling eligible patients in clinical trials. Unlike existing processes that rely on time and personnel-intensive manual EHR review, the proposed workflow-based platform can enhance clinical trial efficiency and improve cancer care.

The task of clinical trial matching, or patient-trial matching, has attracted significant attention from academia as well as industry. Based on the directionality of the search, patient-trial matching can either be patient-centered, i.e., identifying trials for a patient18,28,29,30,31, or trial-centered, i.e., finding eligible patients given a trial15,32,33,34. Both have their individual importance with some overlaps. The patient-centered viewpoint offers a direct methodology for physicians to treat trials as a care option - an important goal of the cancer moonshot program13. Trial-centric methodology, on the other hand, allows researchers to conduct pragmatic clinical trials or conduct feasibility analyses. In this study, we focus on patient-centered trial matching when we mention clinical trial matching, but we also show that our framework can be readily extended to support both directions.

One well-studied approach involves converting the inclusion and exclusion criteria of a trial into a structured query and using that query to search over structured clinical data repository15,16,17. This, however, has limitations in oncology as the majority of information relevant to clinical trial screening for cancer patients is found in unstructured data13,14. An alternative approach uses deep neural networks to directly convert patient information to an embedding representation to match against trials30,31. Another method involves using patients already enrolled in trials as representations of those trials and employing embedding similarity techniques to find patients similar to those recruited35. These techniques have, however, shown limited success in a complicated domain such as oncology.

Language models have recently shown great promise for a variety of use cases in healthcare25,36,37,38. For clinical trial matching, TrialGPT18 demonstrated the capabilities of GPT-3.539 in effectively ranking clinical trials for patients. This was succeeded by the TrialLlama research19, which provided evidence that open-source models, specifically LLaMA 240, could surpass GPT-3.5’s performance, demonstrating their potential in privacy-sensitive applications14. utilize GPT-4 but in a limited setup converting clinical trial criteria into a structured schema. Then they use Bidirectional Encoder Representations from Transformers (BERT) and alike models41,42,43,44 to extract information from notes and use this structured information to check final eligibility. In parallel with our work, a study by ref. 20 employed GPT-445 and other open-source models for processing multiple patient notes while integrating retrievers to enhance cost-efficiency and overall effectiveness. However, their approach has a few significant limitations. It depended on a curated selection of patient charts containing only 2–5 notes, which is minimal compared to the usual 100–200 notes in real-world patient records. Furthermore, their method was tested on matching with a single clinical trial that used generic inclusion and exclusion criteria with only 13 criteria, greatly reducing the complexity typical of real-world clinical trials. In contrast, we evaluate the proposed pipeline in a setup with a number of potential trial candidates and the entire EHR of the patients, resulting in a dataset of over 200 trials and around 10,000 diverse clinical trial criteria. This substantial scope significantly differentiates our approach from previous studies.

While there are certain limitations, the performance of our proposed pipeline closely matches that of qualified medical professionals in terms of criteria-level accuracy. This significant achievement highlights the models’ near readiness for practical deployment. Although the model sometimes makes errors, our citation-based approach-similar to techniques used in several LLM-based search engines-offers a solid pipeline to help humans rectify these inaccuracies.

Our research challenges the necessity of relying exclusively on proprietary and centralized models in contexts where data privacy is paramount. We demonstrate that smaller models, when fine-tuned appropriately, can surpass the performance of their proprietary counterparts. This opens the door to deploying these efficient models in environments where both privacy and cost are of concern. Notably, our model achieves performance metrics comparable to those of GPT-4, yet with a significantly lower operational cost, showcasing its potential for scalable applications.

To address this question, we extended our pipeline to include a criteria-based ranking system for clinical trials. Our empirical evaluations confirm the feasibility of using LLMs to systematically rank clinical trials against patient records and vice-versa. This approach not only facilitated the identification of trials in which patients were actively enrolled but also ended up highlighting trials which the patient was eligible for but was not eventually enrolled. These results substantiate the practicality of employing large language models in the initial phases of clinical trial screening, significantly reducing the time and effort required in trial-patient matching processes. Additionally, we highlighted the importance of assigning different weights to various types of inclusion/exclusion criteria to achieve optimal performance. Oncologists and clinical research coordinators do not treat all criteria with equal importance when initially assessing a patient’s eligibility before a more detailed screening. Therefore, it is crucial to integrate this understanding into LLM-based pipelines. To this end, we experimented with tiering and weighting systems to rank clinical trials based on a patient’s criterion-level eligibility. Our experiments showed that these methods outperform existing approaches that do not incorporate this domain-specific insight.

While our approach has shown considerable promise, it has limitations that necessitate careful consideration for future development. One of the primary constraints is our reliance solely on unstructured data. Critical information, particularly laboratory values, is often recorded in structured formats and may not be consistently mentioned in patient notes. To address this issue, a hybrid data retrieval approach that integrates both structured and unstructured data could be more effective. Such a model would enhance the comprehensiveness and accuracy of the information retrieval, potentially leading to more precise patient-trial matching. Additionally, our current dependence on embedding-based retrievers presents challenges. Despite their advancements, these retrievers have inherent limitations in processing the complex nuances of medical data. It is imperative to rigorously evaluate the impact of these retrievers on the final outcomes of our pipeline. Although we conducted preliminary evaluations of different retrievers, we did not extend this to the fine-tuning or enhancement of these components.

The accuracy of our end-to-end system, although improved, does not yet meet the ideal standards. Obtaining ‘real’ ground truth in clinical environments is challenging. We observed significant variations in the responses provided by different annotators, especially for questions where it is difficult to determine whether the available information suffices for accurate criteria assessment. This variation underscores the need for more robust annotation processes and perhaps a reevaluation of the criteria used for determining patient eligibility.

These insights pave the way for future work to focus on developing more integrated models that utilize a balanced mix of structured and unstructured data. Enhancing the capabilities and precision of embedding-based retrievers, alongside a more rigorous evaluation framework, will be critical to advancing the technology for clinical application. Further, efforts to standardize the annotation process and refine the accuracy benchmarks will significantly contribute to the reliability of AI-driven clinical trial matching systems.

Methods

This study was carried out with the approval of the Medical College of Wisconsin Institutional Review Board, under # 00044894.

Problem formulation

We formulate the problem of matching patients to clinical trials through a compositional question-answering framework. Consider a patient P, associated with a set of patient notes N = {n1, n2, …, ni}, where each ni contains distinct aspects of the patient’s medical history. These aspects include medical history, family history, radiotherapy summary, chemotherapy notes, among others (See Supplementary Section 4) Each clinical trial is defined by its criteria text T. From T, a set of questions Q = {q1, q2, …, qj} is systematically derived, where each qj corresponds directly to specific inclusion or exclusion criteria.

The set of possible answers to each question, denoted as A = {a1, a2, …, aj}, is extracted through our pipeline from the dataset N. Each element in A corresponds to an answer which can be “Yes”, “No”, or “NA”. Here, ‘NA’ indicates that the question is not applicable to the patient in question or that there is insufficient information available to provide a conclusive answer. Conversely, ‘Yes’ and ‘No’ explicitly indicate whether the patient meets or does not meet the specified criteria, respectively.

A logical composition function C is then employed to integrate these answers into a single framework. This function C is defined by logical operators and possibly fuzzy logic controls that aggregate the individual answers based on the logical interdependencies specified in T. The output of this function is a composite score S, calculated as S = f(C(a1, a2, …, aj)), where f is a scoring function that quantitatively evaluates the overall compliance of the patient data N with the trial criteria T.

The score S thus represents a quantified suitability of the clinical trial for patient P, enabling the ranking of potential trials. Higher values of S indicate a better match, facilitating prioritization of trials for which the patient is most eligible.

Dataset preparation

Patients were identified using an institutional clinical research data warehouse that includes deidentified clinical notes and clinical trial enrollment information. To develop the analytic dataset, we selected patient note types that include oncology-relevant information (See Supplementary Note 3). For each patient P, who may have been enrolled in one or multiple trials T1, T2, …, Tn, we selected a single trial based on the complexity of its criteria, as determined by the length of the text in the inclusion and exclusion sections. This process resulted in identifying one “ground truth” trial for each patient. The patient notes were limited to include information only until the enrollment date of the patient.

This curated dataset comprised 98 patient-trial combinations. To facilitate the ranking experiments, it was necessary to identify potential negative trials for each patient. We achieved this by first filtering both patients and trials based on the type of cancer. We then searched the trial database and retained a trial in a patient’s set of negatives if it satisfied all of the following criteria: (1) the patient was not previously enrolled in that trial, and (2) the trial was active according to ClinicalTrials.gov (Registry of Clinical Trials: https://clinicaltrials.gov/) at the time of the patient’s enrollment. This method yielded 980 patient-trial combinations (See Supplementary Note 9 and Supplementary Fig. 15 for more details.)

PRISM pipeline

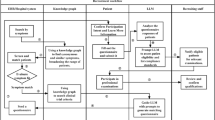

Our end-to-end PRISM pipeline comprises several modules as shown in Fig. 6. It takes clinical trial information and patient notes as input and provides a scalar score for the match between the patient and the trial. Once we have the score, it is used to rank patients by calculating the score of that trial for multiple patients or vice-versa.

The pipeline uses only unstructured notes to effectively match the patients to potential clinical trials. Patient notes are first filtered as per the defined rules and are then chunked using a contextual chunker (See Section “Chunking and retrieval module”). The chunks are then stored in a database. The trial criteria are ingested as plain text as extracted from clinicaltrial.gov and are converted into a graphical question representation using our trial composition module as described in Section Trial Composition Module and Fig. 7. This graph is then used to retrieve relevant snippets of information, and our proprietary fine-tuned language model calculates a score for the graph (See Section “Question-Answering Module”). We then also apply weights to that graph using our developed heuristics, which allow the pipeline to rank the trials accurately (See Section “scoring module”).

Trial composition module

For each clinical trial T, we utilize our trial-to-questions module that can be developed with any LLM. We used GPT-3.5 in this study as the trial criteria is public information and GPT-3.5 is cost-effective. This module is tasked with converting the textual content of the trial criteria, as extracted from ClinicalTrials.gov, into a set of simplified, independent questions. Each question is designed to be answerable with responses such as “Yes”, “No”, or “NA”. Given the inherent complexity of these questions, a single criterion from the trial criteria does not always translate directly into a single question. To address this, each criterion is decomposed into multiple questions that are interconnected through Boolean logic, specifically in disjunctive normal form (DNF) (See Fig. 7). This transformation ensures that our downstream modules receive one simplified question at a time, facilitating more straightforward processing and evaluation. This is based on the approach used by ref. 14. We conducted a manual evaluation of the output quality of 50 trials based on three metrics: (1) The percentage of incorrectly formed questions; (2) The percentage of questions missed by the LLM model; and (3) The percentage of incorrectly formed Boolean logic. Only 1% of questions were missed, and merely 2% of questions were incorrectly formed. Additionally, we achieved an accuracy rate of ~89% in correctly forming boolean logic at the criterion level. We tested the performance of the entire pipeline with and without this module. Our results showed that adding this module in the pipeline resulted in significant improvement in the performance (See Trial composition module in Supplementary Note 5).

Each criterion in the clinical trial’s free text is condensed into one or more questions using an LLM via an appropriate prompt. For criteria leading to multiple questions, they are structured into a disjunctive normal form (DNF). When assessing whether the patient meets that criteria on the basis of the answer to these questions, we employ these logical constraints to determine if the criteria are fulfilled, rather than burdening the LLM with interpreting all complex questions simultaneously. This approach enables the model to address one straightforward question sequentially.

Chunking and retrieval module

Each patient’s EHRs usually include hundreds of notes, which often surpass the context length capabilities of not only most open-source LLMs but also those of proprietary LLMs. This challenge requires the use of a semantic retrieval engine to manage and extract relevant information from each criterion46. To efficiently chunk patient notes, we implemented a methodology using the spaCy tokenizer with a one-sentence overlap configuration. We conducted a series of experiments to finalize this chunking method (See Supplementary Note 8 for details and results for different chunking methods). The spaCy tokenizer aids in this process by effectively tokenizing the text into individual sentences. By setting a one-sentence overlap, each chunk had three sentences, including one additional sentence from the preceding chunk and one from the succeeding chunk. This overlap ensures context continuity between adjacent chunks and helps prevent important information from being fragmented across chunks.

In order to eliminate duplicate chunks (due to copying of notes), we retained a chunk in our vector store when creating the embeddings for a patient only if (a) it was not an exact match to a chunk already stored in the vector store, and (b) its similarity in terms of cosine embedding similarity score with any existing chunk in the vector store was less than 0.96 (c) It was the latest copy of the chunks that were found to be duplicates of each other using (a) or (b). This method enabled us to build a fairly distinct set of chunks. This not only led to decreased processing cost and increased model throughput but also ensured that the experiments in section “Methods” did not consider duplicated chunks spread over the patient journey.

For the retrieval process, we utilized Azure OpenAI’s Ada embedding-based model and leveraged cosine similarity between the embeddings of the chunks and the queries derived from the inclusion and exclusion criteria of trials.

Question-answering module

This module utilizes the information retrieved to answer questions relevant to the clinical trial criteria. Unlike traditional retrieval-augmented generation (RAG) pipelines, which typically arrange information chunks based on their relevance scores, our approach organizes the chunks chronologically. This sequence begins with the patient's age and the date of enrollment. Each chunk is further supplemented with the date of the note from which it was extracted and the type of note. We observed that this approach benefited the model by enabling it to interpret the patient’s journey chronologically, which enhanced its ability to answer temporal questions accurately (See With and Without Chronological Order of Notes in Supplementary Note 5).

We use zero-shot prompting and maintain a temperature setting of zero throughout our experiments to ensure deterministic outputs. We also limit the maximum response length to 8K characters. For experiments utilizing GPT architectures, we employ Azure’s HIPAA-compliant APIs. In other scenarios, we deploy models using the vLLM framework on our HIPAA-compliant cloud environment, powered by 4 A100s with 80GB GPU memory each.

The question-answering process also uses a chain of thought (CoT) strategy building upon47,48 to improve model performance and generates the following key-value pairs in the output JSON.

-

Question explanation: The model is prompted to explain the requirements of each question, delineate its strategy for answering, and identify additional information that may be required. This step ensures that the model comprehends and recalls the necessary medical concepts from the clinical trial criteria. This builds upon a strategy that has been proven to help the model in understanding and answering the question correctly48.

-

Answer explanation: The model synthesizes and summarizes the information from the patient’s records in a step-by-step manner before concluding whether the patient meets the specified criteria.

-

Answer: The model provides a definitive response of “Yes”, “No”, or “N/A”, based on the analysis conducted.

-

Confidence: The model quantifies the confidence of its answers on a scale from 1 to 5, where 1 indicates the least confidence and 5 the highest (See Importance of Model Confidence in Supplementary Note 5).

Answers → criteria module

The decision logic for determining whether a criterion is met is based on the outputs from a predefined logical tree (See Fig. 7) for that criterion. Each node in this tree corresponds to a specific question as extracted in the Trial composition module. The response to each question can influence the pathway taken through the decision tree, ultimately determining whether that criterion is met or not. In cases where one or more questions’ answers are marked as N/A (Not Answerable) for a given criterion, the decision logic involves marginalizing over possible values for all the questions with N/A answers. Mathematically, this process is described as follows:

Here, X represents the possible combinations of answers to all the questions with “N/A” as the answer, and P(X = x∣data) is the probability of each combination given the patient data. This probability is \(\frac{1}{{2}^{N}}\), where N is the number of questions with “N/A” answers. The final determination of whether a criterion is met is based on a threshold model:

This approach allows for a probabilistic evaluation of criteria even when we do not know the answers to some questions corresponding to those criteria. This approach also takes care of the cases when it is possible to answer the Boolean logic even when answers to a few questions are not available. For instance, if the criteria met expression is Q1&Q2, and we know the answer for Q1 is “No”, we do not need the answer to Q2. The expression should resolve to False regardless of the answer to Q2. This approach efficiently accommodates such scenarios and ensures robust evaluation of criteria.

Scoring module

This module implements an intelligent scoring module that can be used to rank clinical trials for a patient or vice-versa. Once we have determined the final answer for each criterion in the trial, we employ three strategies to finalize the answer.

Simple counting is a straightforward method where each criterion is evaluated independently, and then the total number of fulfilled criteria is counted. This scoring method is based on the existing scoring engine proposed in refs. 18. We decided to use their best-performing metric that uses only I/E criteria to arrive at the final score(we don’t generate a relevance score as was proposed in18 and therefore don’t include that comparison in this paper). The final score is normalized by the total number of conditions (Equation (4), Fig. 8a):

a Simple counting: In this approach, the total number of criteria met is counted and normalized by the total number of conditions. b Iterative tier: Here, we move through all the criteria by categorizing them into tiers. While traversing the nodes, if we encounter a violation (criteria unmet), we stop and use the number of criteria met till that point and normalize it by the total number of conditions. c Weighted tier: Similar to the iterative tier, this method also utilizes tiers, but instead of stopping at the first violation, it continues through the entire tree. It assigns greater importance to the first tier and progressively less weight to lower tiers.

Two other methods are based on Tier-based criteria categorization. The importance of clinical trial criteria varies. Certain criteria, such as cancer type and histology, are essential for eligibility and can disqualify a patient even if they meet all other requirements. Others, like laboratory values, are more flexible and may not always be accurately represented in electronic health records due to their fluctuating nature. After consulting domain experts, we developed a tiered system to evaluate these criteria Each eligibility criterion is first classified into different levels (T1, T2, T3, and T4) according to their clinical importance for the patient-trial matching task (See more details on the breakdown of different tiers in Supplementary Note 2), and finally, the scores are computed using two approaches: iterative tier and weighted tier.

Iterative tier method applies strict rules, traversing from higher to lower tiers until a condition is violated. The final score reflects the proportion of criteria met before encountering a violation, normalized by the total number of conditions (Equation (5), Fig. 8b):

Weighted tier method involves ranking criteria by their estimated clinical importance and applying different weights accordingly. It first calculates an intermediate score for each criterion at the individual tier level (Equation (6)). Due to the importance of tier 1 criteria as determined by the discussions with domain experts, we penalize tier 1 criteria more than the others. The final score calculation is based on weighted averages of the criteria level scores obtained at each tier (Equation (9), Fig. 8c). We have separated the score calculation for a single criterion and overall trial-level score calculation into equation (6) and (9) for better readability:

where s(xi) is the score and xi is the result for the ith criterion, and sgn(x) is the signum/sign function. Tk represents the set of criteria in tier k, wk represents the weight assigned to tier k, and K represents the number of tiers with non-zero criteria present in the clinical trial.

For simplicity, we assigned the following weights in this work: w1 = 2, w2 = 1.5, w3 = 1, and w4 = 0.5. However, in the future, these weights can be tuned to achieve better performance.

OncoLLM

Our model, OncoLLM, is a specialized oncology-focused LLM that has been fine-tuned on a single cancer center’s oncology EHR datasets for question-answering tasks, using manually curated outputs. It builds upon the open-source Qwen-1.5 14B model22, adapting it specifically for this field using both synthetic and real-world data. We chose this model because it was the top performer among similar-sized models on the LLM leaderboard when our experiments began49. Importantly, no patient data or clinical trials that have been used to report the scores here were added to the training data. The fine-tuning process was carefully chosen to enhance the model’s ability to provide medical explanations and reference evidence within an RAG-based framework on EHR records. Annotators crafted ideal responses to EHR-related questions to facilitate the Chain of Thought (CoT) prompting strategy as discussed in section “Methods”. The model was then trained via supervised fine-tuning and LoRA (rank = 64, alpha = 32, dropout = 0.1) on several thousand chunk-question pairs of proprietary data along with over 2B tokens worth of synthetic book data. We have provided a couple of samples of such annotations—both for question-chunk-answer triplets as well as for synthetic book data in Supplementary Note 11. The model was fine-tuned on 8 A100 GPUs and took over 1500 GPU hours to train. We used a learning rate of 0.0003 and batch size of 1 with gradient accumulation steps of 16 and trained the model for a total of 5 epochs over the clinical data and for a single epoch over the synthetic oncology-related question-answering dataset. The clinical data contained deidentified dataset of over 5790 cancer patients (See Supplementary Note 2 for the distribution of different cancer types in the training set). The questions and patient pairs were randomly sampled from the dataset. While we can’t divulge further details due to privacy and commercial concerns, we provide all the important information in Tables 1, 2).

Data availability

This study is retrospective, and it does not generate any new data. The existing data present a risk of re-identification preventing its sharing according to approved IRB. Questions regarding data access should be addressed to the corresponding author.

Code availability

The code created and used for creating and assessing the clinical trial matching system for the current study is available from the corresponding author on reasonable request.

References

Unger, J. M., Cook, E., Tai, E. & Bleyer, A. The role of clinical trial participation in cancer research: barriers, evidence, and strategies. Am. Soc. Clin. Oncol. Educ. Book 35, 185–198 (2016).

Lamberti, M., Wilkinson, M., Harper, B., Morgan, C. & Getz, K. Assessing study start-up practices, performance, and perceptions among sponsors and contract research organizations. Ther. Innov. Regul. Sci. 52, 572–578 (2018).

Getz, K. Enrollment performance: weighing the facts. Appl. Clin. Trials 21, 24–25 (2012).

Unger, J. M., Vaidya, R., Hershman, D. L., Minasian, L. M. & Fleury, M. E. Systematic review and meta-analysis of the magnitude of structural, clinical, and physician and patient barriers to cancer clinical trial participation. J. Natl Cancer Inst. 111, 245–255 (2019).

Stensland, K. D. et al. Adult cancer clinical trials that fail to complete: an epidemic? J. Natl Cancer Inst. 106, dju229 (2014).

Nuttall, A. Considerations for improving patient recruitment into clinical trials. Clinical Leader Newsletter http://vertassets.blob.core.windows.net/download/64c39d7e/64c39d7e-c643-457b-aec2-9ff7b65b3ad2/rdprecruitmentwhitepaper.pdf (2012).

Kadam, R., Borde, S., Madas, S., Salvi, S. & Limaye, S. Challenges in recruitment and retention of clinical trial subjects. Perspect. Clin. Res. 7, 137–143 (2016).

Bennette, C. et al. Predicting low accrual in the national cancer institute’s cooperative group clinical trials. J. Natl Cancer Inst. 108, djv324 (2016).

Berger, M., Curtis, M., Smith, G., Harnett, J. & Abernethy, A. Opportunities and challenges in leveraging electronic health record data in oncology. Future Oncol. 12, 1261–1274 (2016).

Clinical Research Professionals, A. Tufts analysis: Patient recruitment shortcomings laid at feet of poor provider, researcher engagement (2017).

Fayter, D., McDaid, C. & Eastwood, A. A systematic review highlights threats to validity in studies of barriers to cancer trial participation. J. Clin. Epidemiol. 60, 990–1001 (2007).

Kong, H.-J. Managing unstructured big data in healthcare system. Healthc. Inform. Res. 25, 1–2 (2019).

Shriver, S. et al. Feasibility of institution-agnostic, ehr-integrated regional clinical trial matching. Cancer 130, 60–67 (2024).

Wong, C. et al. Scaling clinical trial matching using large language models: A case study in oncology. ArXiv (2023). https://arxiv.org/abs/2308.02180. Accessed April 6, 2024.

Yuan, C. et al. Criteria2query: a natural language interface to clinical databases for cohort definition. J. Am. Med Inf. Assoc. 26, 294–305 (2019).

Weng, C. et al. Elixr: an approach to eligibility criteria extraction and representation. J. Am. Med. Inform. Assoc. 18, i116–i124 (2011).

Thadani, S. R., Weng, C., Bigger, J. T., Ennever, J. F. & Wajngurt, D. Electronic screening improves efficiency in clinical trial recruitment. J. Am. Med. Inform. Assoc. 16, 869–873 (2009).

Jin, Q., Wang, Z., Floudas, C. S., Sun, J. & Lu, Z. Matching patients to clinical trials with large language models. Preprint at https://arxiv.org/abs/2307.15051 (2023).

Nievas, M., Basu, A., Wang, Y. & Singh, H. Distilling large language models for matching patients to clinical trials. J Am Med Inform Assoc. 31, 1953–1963 (2024).

Wornow, M. et al. Zero-shot clinical trial patient matching with llms. Preprint at https://arxiv.org/abs/2402.05125 (2024).

Toma, A., Senkaiahliyan, S., Lawler, P. R., Rubin, B. & Wang, B. Generative ai could revolutionize health care - but not if control is ceded to big tech. Nature https://www.nature.com/articles/d41586-023-03803-y (2023).

Bai, J. et al. Qwen technical report. Preprint at arXiv:2309.16609 (2023).

Jiang, A. Q. et al. Mistral 7b. Preprint at arXiv:2310.06825 (2023).

Jiang, A. Q. et al. Mixtral of experts. Preprint at arXiv:2401.04088 (2024).

Chen, Z. et al. Meditron-70b: scaling medical pretraining for large language models. Preprint at arXiv:2311.16079 (2023).

Dao, T. FlashAttention-2: Faster Attention with Better Parallelism and Work Partitioning. In International Conference on Learning Representations (ICLR, 2024).

TensorRT. https://github.com/NVIDIA/TensorRT-LLM.

Roberts, K., Demner-Fushman, D., Voorhees, E. M., Bedrick, S. & Hersh, W. R. Overview of the trec 2021 clinical trials track. In Proc. Thirtieth Text Retrieval Conference, TREC 2021 (2021).

Koopman, B. & Zuccon, G. A test collection for matching patients to clinical trials. In Proc. 39th International ACM SIGIR Conference on Research and Development in Information Retrieval 669–672 (ACM, 2016).

Rybinski, M., Nguyen, V. & Karimi, S. A self-learning resource-efficient re-ranking method for clinical trials search. In Proc. 32nd ACM International Conference on Information and Knowledge Management 4249–4253 (Association for Computing Machinery, 2023).

Pradeep, R., Li, Y., Wang, Y. & Lin, J. Neural query synthesis and domain-specific ranking templates for multi-stage clinical trial matching. In Proc. 45th International ACM SIGIR Conference on Research and Development in Information Retrieval 2325–2330 (Association for Computing Machinery, 2022).

Segura-Bedmar, I. & Raez, P. Cohort selection for clinical trials using deep learning models. J. Am. Med Inf. Assoc. 26, 1181–1188 (2019).

Parker, C. G. Generating Medical Logic Modules for Clinical Trial Eligibility. PhD thesis, Brigham Young University (2005).

Fang, Y. et al. Combining human and machine intelligence for clinical trial eligibility querying. J. Am. Med. Inform. Assoc. https://doi.org/10.1093/jamia/ocac051 (2022).

Miotto, R. & Weng, C. Case-based reasoning using electronic health records efficiently identifies eligible patients for clinical trials. J. Am. Med. Inform. Assoc. 22, e141–e150 (2015).

Singhal, K. et al. Large language models encode clinical knowledge. Nature 620, 172–180 (2023).

Nori, H. et al. Can generalist foundation models outcompete special-purpose tuning? Case study in medicine. Preprint at arXiv:2311.16452 (2023).

Hernandez, E. et al. Do we still need clinical language models? In Conference on Health, Inference, and Learning 578–597 (PMLR, 2023).

Brown, T. B. et al. Language models are few-shot learners. Preprint at https://arxiv.org/abs/2005.14165 (2020).

Touvron, H. et al. Llama 2: Open foundation and fine-tuned chat models. Preprint at https://arxiv.org/abs/2307.09288 (2023).

Lee, J. et al. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics, 36, 1234–1240 (2020).

Gu, Yu et al. Domain-Specific Language Model Pretraining for Biomedical Natural Language Processing. ACM Transactions on Computing for Healthcare (HEALTH) 3, 1–23 (2021).

Liu, F., Shareghi, E., Meng, Z., Basaldella, M. & Collier, N. Self-Alignment Pretraining for Biomedical Entity Representations. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (pp. 4228-4238). Association for Computational Linguistics (2021).

Yasunaga, M., Leskovec, J. & Liang, P. LinkBERT: Pretraining Language Models with Document Links. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8003–8016, Dublin, Ireland. Association for Computational Linguistics (2022).

Achiam, J. et al. Gpt-4 technical report. Preprint at https://arxiv.org/abs/2303.08774 (2023).

Basu, A., Gupta, S., Taylor, B., Kothari, A. & Singh, H. Onco-retriever: generative classifier for retrieval of EHR records in oncology. Preprint at https://arxiv.org/pdf/2404.06680.pdf (2024).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. In Proc. 36th International Conference on Neural Information Processing Systems, NIPS ’22 (Curran Associates Inc., 2024).

Mekala, R. R., Razeghi, Y. & Singh, S. EchoPrompt: instructing the model to rephrase queries for improved in-context learning. Preprint at https://arxiv.org/abs/2309.10687 (2023).

Chiang, W. et al. Chatbot arena: an open platform for evaluating llms by human preference. Preprint at arXiv:2403.04132 (2024).

Acknowledgements

This research received no specific grant from any funding agency. This study was carried out with the approval of the Institutional Review Board, under PRO #: 00044894. We are grateful for the assistance provided by medical professionals, doctors, and research coordinators who shared insights into existing clinical trial matching processes and challenges. We extend special thanks to Harsh Jain, Aniket Jaiswal, and Sebastien Rhodes for their help in interpreting results and offering valuable prompt engineering suggestions. Additionally, we express our gratitude to Sorena Nadaf, Warren Kibbe, and the entire Ci4CC (Cancer Informatics for Cancer Centers) community for facilitating this collaborative effort.

Author information

Authors and Affiliations

Contributions

The authors H.S., S.G., A.B., J.T., M.N., and R.S. are employed by Triomics. They were primarily responsible for code implementation, conducting experiments, and various evaluations. R.S. also conducted manual verification of the results obtained from the ranking pipeline. N.W., A.R., A.K., and B.T. from the Medical College of Wisconsin supervised the overall quality and conceptualization of the experiments. They also aided in developing data pipelines, ensuring data quality for dataset preparation, and implementing ranking metrics. Y.W., R.S., S.N., and T.M. contributed to conceptualizing experimental methods and supported the writing of the manuscript. All authors read and approved the manuscript. The opinions and views expressed in this paper are solely those of the authors and do not reflect the positions or policies of their respective institutions.

Corresponding authors

Ethics declarations

Competing interests

S.G., A.B., M.N., J.T., R.S., and H.S. are employees of Triomics, Inc. Y.W. consults for Pfizer Inc. and Triomics, Inc., and has ownership/equity interests in BonafideNLP, LLC. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gupta, S., Basu, A., Nievas, M. et al. PRISM: Patient Records Interpretation for Semantic clinical trial Matching system using large language models. npj Digit. Med. 7, 305 (2024). https://doi.org/10.1038/s41746-024-01274-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-024-01274-7

This article is cited by

-

Making shiny objects illuminating: the promise and challenges of large language models in U.S. health systems

npj Health Systems (2025)

-

A prospective pragmatic evaluation of automatic trial matching tools in a molecular tumor board

npj Precision Oncology (2025)

-

Synthetic data distillation enables the extraction of clinical information at scale

npj Digital Medicine (2025)