Abstract

Clinical notes recorded during a patient’s perioperative journey holds immense informational value. Advances in large language models (LLMs) offer opportunities for bridging this gap. Using 84,875 preoperative notes and its associated surgical cases from 2018 to 2021, we examine the performance of LLMs in predicting six postoperative risks using various fine-tuning strategies. Pretrained LLMs outperformed traditional word embeddings by an absolute AUROC of 38.3% and AUPRC of 33.2%. Self-supervised fine-tuning further improved performance by 3.2% and 1.5%. Incorporating labels into training further increased AUROC by 1.8% and AUPRC by 2%. The highest performance was achieved with a unified foundation model, with improvements of 3.6% for AUROC and 2.6% for AUPRC compared to self-supervision, highlighting the foundational capabilities of LLMs in predicting postoperative risks, which could be potentially beneficial when deployed for perioperative care.

Similar content being viewed by others

Introduction

More than 10% of surgical patients experience major postoperative complications, such as pneumonia and blood clots ranging from pulmonary embolism (PE) to deep vein thrombosis (DVT)1,2,3,4,5. These complications often lead to increased mortality, intensive care unit admissions, extended hospital stays, and higher healthcare costs4. Many of these preventable complications could be avoided through early identification of patient risk factors6,7. A recent report by the Centre for Perioperative Care reveals that clear perioperative pathways— defined as non-surgical procedures before and after surgery like shared decision-making, preoperative assessments, enhanced surgical preparation and discharge planning—can reduce hospital stays by an average of two days across various surgeries, with subsequently carefully designed interventions reducing complications by 30% to 80%. This exceeds the effects of drug or treatment interventions6. This underscores the role of identifying patient risk factors early on and implementing effective preventive measures to improve patient outcomes.

Most machine learning models aimed at predicting postoperative risks primarily utilize numerical and categorical variables, or time-series measurements1,5. These models typically include features such as demographics, history of comorbidities, lab tests, medications, and statistical features extracted from time series1,5, along with features reflecting surgical settings like scheduled surgery duration, surgeon name, anesthesiologist name, and location of the operating room5, as well as factors such as drug dosing, blood loss, and vital signs8.

Text-based clinical notes taken during the surgical care journey hold immense informational value, with the potential to predict postoperative risks. Unlike discrete electronic health records (EHRs) like tabular or time-series data, clinical notes represent a form of clinical narratives, thereby allowing clinicians to communicate personalized accounts of a patients history’s that could not otherwise be conveyed through traditional tabular data9. Informational value from clinical notes could therefore aid the decision-making processes that impact the course of a patient’s perioperative journey and beyond10. This includes the preparation for surgery, the transfer of patients to operating rooms, and the prioritization of clinicians’ tasks10,11, emphasizing their importance in achieving safe patient outcomes11,12.

Following the emergence of ChatGPT, a large body of research on LLMs in medicine has been primarily centered on the application and development of conversational chatbots to support clinicians, provide patient care, and enhance clinical decision support13. These efforts include automating medical reports14, creating personalized treatments15, and generating summaries16 or recommendations13 from clinical notes, respectively. However, there has been lesser exploration of specialized fine-tuned models in directly analyzing clinical notes to make robust predictions regarding surgical outcomes and complications. Most work has primarily leveraged publicly available resources, such as open-sourced EHR databases like MIMIC to predict event-based outcomes such as ICU readmission17 or benchmarks like i2b2 to perform information extraction tasks, like identifying concepts, diagnoses, and medications mentioned in clinical notes18. Some models have been developed for specialized medical diagnostics, such as COVID-19 prediction19 and Alzheimer’s disease (AD) progression prediction20. However, the application of LLMs in predicting specific postoperative surgical complications remains relatively limited. This provides us with an opportunity to leverage the informational value embedded in clinical notes to facilitate the early identification of potential risk factors. Developing robust language models capable of predicting postoperative risks from clinical notes could potentially assist clinicians in the early recognition of high-risk patients or patients experiencing critical deterioration, thereby mitigating unnecessary interventions or requiring fewer follow-ups.

Therefore, our work aims to develop models for the prediction of specific postoperative surgical complications through preoperative clinical notes, with the goal of enabling the early identification of patient risk factors. By mitigating adverse surgical outcomes, we hope to leverage LLMs to enhance patient safety and improve patient outcomes. Henceforth, our contributions are multifold: First, we explore the predictive performance that pretrained language models offer in comparison to traditional word embeddings. Specifically, by comparing the predictive performance of postoperative risk predictions between clinically-oriented pretrained LLMs and traditional word embeddings, we demonstrate the potential of pretrained transformer-based models in predicting postoperative complications from preoperative notes, relative to traditional NLP methods. Second, we explore a series of fine-tuning techniques to enhance the performance of LLMs for predicting postoperative outcomes, as illustrated in Fig. 1. This would allow us to understand the potential of pretrained models in perioperative care and determine optimal strategies to maximize their predictive capabilities in the early identification of postoperative risks. Third, we demonstrate the foundational capabilities of LLMs in the potential early identification of postoperative complications from preoperative care notes. This suggests that a unified model could be applied directly to predict a wide range of risks, rather than training separate models for each specific clinical use case. The versatility of such foundation models could offer potential tangible benefits if embedded into clinical workflows.

An illustration comparing architectures utilizing distinct fine-tuning strategies experimented with in our study. a Compares the use of pretrained models alone versus fine-tuning the models using their self-supervised objectives. Self-supervised fine-tuning involves refining the pretrained model’s weights through its objective loss function(s) using the provided clinical notes. b Illustrates the differences between semi-supervised fine-tuning and foundation fine-tuning. Semi-supervised fine-tuning focuses on optimizing the model for a specific outcome of interest, whereas foundation fine-tuning employs a multi-task learning (MTL) objective, incorporating all available postoperative labels in the dataset.

Results

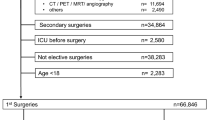

A total of 84,875 clinical notes containing descriptions of scheduled procedures derived from electronic anesthesia records (Epic) of all adult patients (mean [SD] age, 56.9 [16.8] years; 50.3% male; 74% White) at Barnes Jewish Hospital (BJH) from 2018 to 2021 were included in this study. The six outcomes were death within 30 days (1694 positive cases; positive event rate of 2%), Deep Vein Thrombosis (DVT) (498 positive cases; positive event rate of 0.6%), Pulmonary Embolism (PE) (287 positive cases; positive event rate of 0.3%), pneumonia (475 positive cases; positive event rate of 0.6%), Acute Kidney Injury (AKI) (11,418 positive cases; positive event rate of 13%), and delirium (5695 positive cases; positive event rate of 47%).

Pretrained LLMs in perioperative care

When comparing the predictive performances of clinically-oriented pretrained LLMs, such as bioGPT21, ClinicalBERT17, and bioClinicalBERT18, with traditional word embeddings, including word2vec’s continuous bag-of-words (CBOW)22, doc2vec23, GloVe24, and FastText25, we observed absolute increases that ranged from up to 20.7% in delirium to 38.3% in death within 30 days for the Area Under the Receiver Operating Characteristic curve (AUROC). Similarly, increases in the Area Under the Precision-Recall Curve (AUPRC) ranged from up to 0.9% in PE to an impressive 33.2% in AKI. Details in the comparisons between each experimented traditional word embedding and pretrained LLM could be found in Table 1.

To contextualize our results, we fixed the specificity at 95% and focused on improvements in sensitivity across the outcomes demonstrating the most notable gains between the baselines and the best-performing pretrained models. We report these improvements for each experimental strategy. Consequently, for every 100 patients who retrospectively experienced a specific postoperative complication, the pretrained models correctly identified up to 33.2 additional patients who would have otherwise been missed by models relying on traditional embeddings. This is best illustrated in Fig. 2. Early detection of these 33.2 patients ensures timely diagnoses and interventions, potentially mitigating the occurrence of complications.

An illustration of improvements in sensitivity across the experimented fine-tuning strategies compared to baseline word embeddings. Sensitivity improvements are measured as the fraction of high-risk patients correctly identified by each fine-tuned model that would otherwise have been missed by the baseline models, with specificity fixed at 95%. The bar graph represents the means, and the error bars indicate the standard errors from a 5-fold cross-validation among the postoperative outcomes that demonstrated the greatest gains.

These noticeable gains in performance highlights the potential capabilities of pretrained LLMs in grasping clinically relevant context in comparison to traditional word embeddings, despite being trained on broad biomedical and/or clinical notes that are not specific to perioperative care. These improvements, experimented akin to a ‘zero-shot setting’26, are noteworthy given their minimal exposure to perioperative care notes.

Transfer learning: improvements from adapting pretrained models to perioperative corpora

Exposing each of these clinically-oriented pretrained models to clinical texts specific to perioperative care through self-supervised fine-tuning, as best illustrated in Fig. 1, resulted in absolute improvements ranging from up to 0.6% in AKI to 3.2% in PE for AUROC compared to using the pretrained models which were demonstrated in the “Pretrained LLMs in perioperative care" section above. Similarly, increases ranged from up to 0.3% in PE to 1.5% in 30-day mortality for AUPRC. The results spanning the three base models— bioClinicalBERT, bioGPT and ClinicalBERT—across all outcomes are best illustrated in Fig. 3 and detailed in Supplementary Table 1.

An illustration of predictive performance across the experimented fine-tuning strategies for all outcomes. Each figure panel depicts the predictive performance for the following outcomes: a 30-day mortality, b Acute Kidney Injury (AKI), c Pulmonary Embolism (PE), d Pneumonia, e Deep Vein Thrombosis (DVT), and f Delirium. The bar graph represents the means, while the error bars indicate the respective standard errors from a 5-fold cross-validation. Fine-tuning the models using their self-supervised training objectives improved predictive performance compared to using pretrained models alone. Furthermore, incorporating labels as part of the fine-tuning objective further enhanced prediction performance. The foundation fine-tuning strategy performed best, where the model was fine-tuned using a multi-task learning objective across all outcomes in the dataset.

To interpret these maximal performance gains in context: for every 100 patients who retrospectively experienced a specific postoperative complication, the self-supervised model correctly identifies 3 additional patients who would have otherwise been missed by pretrained models and 36.2 additional patients who would have been missed by models using traditional word embeddings. This is best illustrated in Fig. 2. Such early detection may enable these patients to receive timely diagnoses and interventions, potentially reducing the risk of complications.

These consistent improvements witnessed upon adapting these pretrained models to perioperative-specific corpora highlights potential gaps between the corpora on which the pretrained models were originally trained on and copora of perioperative care. This suggests that leeways for improvement in adapting the models to the textual characteristics specifically observed in perioperative care notes.

Transfer learning: improvements from further incorporating labels

When incorporating labels as part of the fine-tuning process through a semi-supervised approach, we witnessed further improvements ranging from up to 0.4% in delirium to 1.8% in PE, and AUPRC values ranging from 0% in Delirium to 2% in death within 30 days, relative to the self-supervised approach. The results spanning the three base models across all outcomes are illustrated in Fig. 3 and detailed in Supplementary Table 1. The convergence in losses, which influenced the selection of newly introduced parameters designed to balance the self-supervised and labeled losses, is demonstrated in Supplementary Figures 1 and 2, with additional details provided in Supplementary Note 1.

To interpret these maximal performance gains in context: for every 100 patients who retrospectively experienced a specific postoperative complication, the semi-supervised model correctly identifies 1.1 additional patients who would have otherwise been missed by self-supervised models, 4.1 additional patients who would have been missed by pretrained models, and 37.3 additional patients who would have been missed by models using traditional word embeddings. This is best illustrated in Fig. 2. Such early detection may enable these patients to receive timely diagnoses and interventions, potentially reducing the risk of complications.

This demonstrates that predictive performance is enhanced relative to the self-supervised approach since both textual and labeled data were utilized during training.

The foundational capabilities of LLMs in predicting postoperative complications from preoperative notes

Foundation fine-tuning involves incorporating a multi-task learning framework to simultaneously fine-tune all labels, resulting in a single robust model capable of predicting all six possible postoperative risks. Through our foundation model, we observe increases in AUROC ranging from up to 0.8% in AKI to 3.6% in PE and AUPRC values increases ranging from up to 0.4% in Pneumonia to 2.6% in death within 30 days, when contrasted with the self-supervised approach. The results spanning the three base models across all outcomes are illustrated in Fig. 3 and detailed in Supplementary Table 1. The convergence in losses, which influenced the selection of newly introduced parameters designed to balance the self-supervised and labeled losses, is demonstrated in Supplementary Figures 1 and 2, with additional details provided in Supplementary Note 1.

To interpret these maximal performance gains in context: for every 100 patients who retrospectively experienced a specific postoperative complication, the foundation model correctly identifies 2.9 additional patients who would have otherwise been missed by self-supervised models, 5.9 additional patients who would have been missed by pretrained models, and 39.1 additional patients who would have been missed by models using traditional word embeddings. These results are best illustrated in Fig. 2. Such early detection may enable these patients to receive timely diagnoses and interventions, potentially reducing the risk of complications.

The convergence in losses, which influenced the selection of newly introduced parameters designed to balance the self-supervised and labeled losses, is demonstrated in Supplementary Figures 1 and 2, with additional details provided in Supplementary Note 1.

In lieu of the performances obtained from foundation models, we provide each model’s accuracy, precision, sensitivity, specificity and F1 scores in Table 2 to provide a comprehensive view of each foundation model’s performance. These competitive performances in predicting various clinical outcomes highlights the foundational potential of LLMs in perioperative care.

Examining the effects of ML predictors on predictive performance

To facilitate consistent comparisons between the traditional word embeddings and the distinct fine-tuning strategies from pretrained LLMs, we defaulted to using eXtreme Gradient Boosting Tree (XGBoost)27 as the predictor used for the text embeddings. However, in practice, the choice of predictors remains flexible.

As such, we compare the performances of applying distinct ML predictors among the best-performing fine-tuning strategy—foundation models. These include (1) the default XGBoost, (2) logistic regression, (3) random forest, and (4) task-specific fully connected neural networks found on top of the contextual representations. The results are mixed, with no single classifier dominantly outperforming the others, as illustrated in Fig. 4 and detailed in Supplementary Table 2. Surprisingly, logistic regression performed slightly better than the others, demonstrating that well-tuned models can generate precise contextual representations that suit a simple classifier with better generalizability than complex classifiers.

Changes in predictive performance across distinct machine learning classifiers compared to the default eXtreme Gradient Boosting (XGBoost) used in our experiments, when applied to the textual representations of foundation models across all outcomes (i.e., modeli − XGBoosti for the ith outcome). These classifiers include logistic regression, random forest, and the outcome-specific feed-forward fully connected auxiliary neural network integrated in the foundation model. The figure panels illustrate the changes for the following outcomes: a 30-day mortality, b Acute Kidney Injury (AKI), c Pulmonary Embolism (PE), d Pneumonia, e Deep Vein Thrombosis (DVT), and f Delirium. The bar graphs represent the means, while the error bars indicate the standard errors across a 5-fold cross-validation. Our results reveal that no single classifier consistently outperformed the others across all outcomes and metrics. Interestingly, the logistic regression classifier performed slightly better in some cases, suggesting that well-tuned language models can generate precise contextual representations that work effectively with a simple classifier.

Qualitative evaluation of our foundation models for safety and adaption to perioperative care

We ran several broad case prompts to examine and ensure the safety16,28 of our foundation models. This also provides us with the opportunity to qualitatively ensure its adaptation to perioperative care beyond the reported quantitative results.

Table 3 suggests that our foundation models have adapted from the generic texts in biomedical literature or non-perioperative clinical notes to the terminologies expected among perioperative care notes. More importantly, the results suggest that our model produced minimally harmful outputs. This is contrary to what we witnessed in the outputs among some of the pretrained models of which our foundation models were based on.

Explainability of models—what keywords leads to elicits the prediction of specific outcomes?

SHapley Additive exPlanations (SHAP)29 was employed to explain how each sub-word or symbol, represented as tokens, contributed to the prediction of outcomes in the best-performing model. Figure 5 summarizes the 10 most influential tokens that explained the model’s predictions, indicating factors that either contributed to (positive values) or detracted from (negative values) the outcome. Due to the relatively large vocabulary present in the clinical notes and the similarity of Shapley values across tokens, each token generally explains only a small contribution to any specific outcome compared to the entire set of tokens. Figure 5 illustrates how texts associated with risky procedures—such as ‘thrombectomy’ in DVT and 30-day mortality—or undesirable events—such as ‘clot’ in 30-day mortality—often elicits the prediction of potential postoperative risks.

SHAP values summarizing the 10 most influential tokens explaining the model’s predictions for (or against) each outcome. Each figure panel presents the most influential tokens explaining the following outcomes: a 30-day mortality, b Acute Kidney Injury (AKI), c Pulmonary Embolism (PE), d Pneumonia, e Deep Vein Thrombosis (DVT), and f Delirium. Each token typically represents a word, sub-word, or symbol. The best-performing model for each outcome was selected to perform the respective SHAP analyses. The illustration demonstrates that each token typically contributes only a small amount to any specific outcome compared to the entire set of tokens. For BioGPT-based models, < /w > marks the boundary where the word ends in the tokenizer’s vocabulary, indicating that the word is complete and no longer broken down into sub-words.

BERT vs GPT/biomedical vs clinical-domain-based models—which performs best in predicting postoperative risks?

From the pretrained models alone (i.e., without any fine-tuning), BioGPT excelled in 5 of the 6 tasks, while ClinicalBERT excelled in 1 of the 6 tasks. This demonstrates that the text and terminologies in biomedical literature sufficiently capture the text and terminologies of a similar magnitude compared to their counterparts trained on critical care unit notes.

However, upon fine-tuning the models with the clinical notes and the six labels, the foundation BioGPT model excelled in 4 of the 6 tasks, while BioClinicalBERT excelled in 2 of the 6 tasks. This can be potentially attributed to the fact that the training texts from the biomedical corpora of the base model was complemented by terminologies and texts infused from our perioperative care notes during fine-tuning, thereby creating robust, diverse, and rich representations compared to notes solely exposed to clinical corpora as in the case of the ClinicalBERT version of our foundation models.

Minimal differences were also observed between the encoder-only Bidirectional Encoder Representations from Transformers (BERT) models30 and the decoder-only Generative pretrained Transformer (GPT) models31, in-spite the BERT models being more tailored to downstream classification tasks in other domains, such as sentiment classification and name entity recognition (NER)32. This suggests that GPT models, traditionally used for generative tasks, can also perform equally or more competitively in classification tasks in perioperative care contrary to what is expected of other domains.

How do these models perform with the incorporation of tabular-based features

Upon incorporating tabular features—such as demographics, laboratory results, medical history, and physiological measurements— alongside clinical notes for generating predictions, we observe that the foundation models maintain a robust advantage over the semi-supervised and self-supervised fine-tuned models, although the improvement is less pronounced compared to using clinical notes alone. Specifically, the foundation model demonstrates absolute improvements of up to 1.8% in AUROC and 1.2% in AUPRC compared to the self-supervised approach, and up to 9.6% in AUROC and 10.7% in AUPRC compared to the semi-supervised approach. This is detailed in Table 4.

Our results reveals relative changes in AUROC \(\left(\,\text{i.e.}\frac{{\rm{AUROC}}\,{\rm{w}}/ {\rm{tabular}}\,{\rm{data}}-{{\rm{AUROC}}\, {\rm{w}}/{\rm{o}}\, {\rm{tabular}}\, {\rm{data}}}}{{{\rm{AUROC}}\, {\rm{w}}/{\rm{o}}\, {\rm{tabular}}\, {\rm{data}}}\,}\times 100 \% \right)\) ranging from 6% to 16%, and relative changes in AUPRC ranging from 17% to 241%. These changes could be best illustrated in Fig. 6 below. Notably, the relative gains achieved by incorporating tabular-based features are most pronounced in heavily imbalanced outcomes, with AUPRCs more than doubling for rare events such as DVT and PE. This suggests that tabular-based features, such as laboratory results, medical history, and physiological measurements, play a critical role in enhancing the predictive power for rare outcomes.

A comparison of predictive performance when tabular data is concatenated with textual representations derived from clinical notes versus using clinical notes alone. Tabular features include demographics, laboratory results, the patient’s medical history, and physiological measurements. a Illustrates changes in Area Under the Receiver Operating Characteristic Curve (AUROC), while b illustrates changes in Area Under the Precision-Recall Curve (AUPRC). The figure shows that the relative gains achieved by incorporating tabular features are particularly pronounced for heavily imbalanced outcomes, with AUPRCs more than doubling for rare events such as Deep Vein Thrombosis (DVT) and Pulmonary Embolism (PE).

Comparison with NSQIP Surgical Risk Calculator

We compared our best-performing foundation model with the American College of Surgeons (ACS) NSQIP Risk Calculator33, a proprietary tool that is widely regarded as the ‘gold standard’ in postoperative predictions. The tool bases its prediction on procedural information along with 20 discrete variables. Hence, we compared the NSQIP Risk Calculator’s performance with the foundation models under two settings—using clinical notes alone and using clinical notes combined with tabular features—to ensure a fair comparison. The results of our evaluation are reflected in Table 5.

Our results suggest that combining clinical notes with tabular features in foundation models achieves the best performance, surpassing the NSQIP Risk Calculator in accuracy, precision, and F-score, but falling short in sensitivity. Similarly, using clinical notes alone with the foundation model surpasses the NSQIP Risk Calculator in accuracy and precision, but falls behind in sensitivity and f-score. Notably, the NSQIP Risk Calculator demonstrates superior sensitivity but sub-par performance in accuracy and precision, potentially suggesting its tendency to frequently classify patients as “high-risk” despite these patients retrospectively not experiencing any postoperative complications. We attribute this discrepancy to our observation that the NSQIP Risk Calculator’s heavily weighs on select features, such as ASA class and functional status, which are prominent in our dataset due to its relatively high ASA classes and a significant proportion of patients with dependent functional status, as noted in Table 6 at the Methods section.

Generalizability of our methods

Ensuring generalizability of methods across datasets spanning distinct health centers and hospital units is pertinent in building reliable predictive models towards clinical care34. To test the generalizability of our methods beyond BJH and perioperative care, we replicate them towards the publicly available MIMIC-III35,36 dataset. A comparison of the cohort characteristics between our BJH dataset and MIMIC-III is presented Supplementary Table 3, with a detailed analysis provided in Supplementary Note 2. Our results mostly align with the main findings of our study. Specifically, the baseline models were outperformed by pretrained LLMs, with absolute increases that ranged from up to 14.6% in 12-h discharge to 30% for In-hospital mortality for AUROC. These results are detailed Supplementary Table 4. Similarly, increases in the AUPRC ranged from 17.7% in 12-h discharge to 28.2% for in-hospital mortality. Similar to the results observed in our BJH dataset, self-supervised fine-tuning witness maximal absolute improvements in AUROCs of up to 0.4% in 12-h discharge to 3% in in-hospital mortality and AUPRCs of up to 0.4% in 30-day mortality to 0.8% in 12-h discharge. Incoporating labels into the fine-tuning process also saw further improvements of 0.5% in 12-h discharge to 1.6% in 30-day mortality and 0.3% in 12-h discharge to 3.6% for in-hospital mortality for AUROC and AUPRC, respectively. Finally, foundation models again performed the best, with AUROC improvements of 0.4% in 12-h discharge to 1.4% in 30-day mortality and AUPRC improvements of 0.2% in 12-h discharge to 2.6% for in-hospital mortality when compared to self-supervised fine-tuning. In the same order, the mean squared error (MSE) of LOS decreased by up to 85.9 days, 3.1 days, 8 days and 8.2 days, respectively. The results are detailed in Supplementary Table 5 whilst the differences in performances across fine-tuning strategies could be illustrated in Supplementary Figure 3.

The replicability of methods suggests that the foundational capabilities of LLMs potentially extends beyond perioperative care and BJH to other domains of clinical care spanning different healthcare systems.

Discussion

The release of ChatGPT and its rise in popularity have sparked interest in applying LLMs to decision support systems in medical care37,38. However, challenges such as the low-resource nature of clinical notes—which are neither readily open-sourced nor abundantly available39 —and the complex abbreviations and specialized medical terminologies that are not well-represented in the training corpora of pretrained models38 have made the applications of LLMs in healthcare challenging. These obstacles have in-part inspired efforts to refine, adapt, and evaluate LLMs for reliable use across distinct clinical environments, such as personalized treatment in patient care15, the automation of medical reports14 or summaries16, and medical diagnosis for conditions like COVID-1919 or Alzheimer’s20. Despite these advancements, the application of LLMs in forecasting postoperative surgical complications from preoperative notes remains relatively unexplored. Addressing this gap could facilitate the early identification of patient risk factors and enable the timely administration of evidence-based treatments, such as antibiotics in postoperative settings1,40,41, from information-rich clinical notes.

While various AI models leveraging discrete EHR data have demonstrated success in the early identification of postoperative risks1,5, clinical notes taken during the preoperative phase holds informational value that cannot be captured through tabular data alone. Hence, our retrospective study utilizes open-source, pretrained clinical LLMs on preoperative clinical notes to predict postoperative surgical complication. Drawing on ~85,000 notes from Barnes-Jewish Hospital (BJH)—the largest hospital in Missouri and the largest private employer in the Greater St. Louis region42—our study aims to facilitate the potential integration of LLMs into the perioperative decision-making processes. By supporting surgical care management, optimizing perioperative care, and enhancing early risk detection, LLMs have the potential to improve patient outcomes and reduce complications that could be avoided through early identification of patient risk factors.

Advanced improvements observed when using pretrained clinical LLMs compared to traditional word embeddings underscore the capability of such models without needing model training, eliminating the potential constraints in training data collection and computational costs whilst highlighting their potential adjacent to a ‘zero-shot’ setting26. These results are noteworthy given differences between the training corpora of such models, such as PubMed articles or public EHR databases like MIMIC, and the semantic characteristics of our clinical notes. Such findings may be handy when data or computational resources may be limited43. In addition, the enhancements through transfer learning via fine-tuning in a self-supervised setting could be attributed to the adaption of these pretrained models in ‘familiarizing’ itself towards the texts of our preoperative clinical notes30,31. Such differences might arise from variations in text distributions or frequency, as well as the distinctive use of abbreviations and terminology in each task-specific dataset, contrasting the textual contents found in databases of pretrained models like PubMed or Wikipedia16,38,44. This perspective reveals potential limitations of relying purely entirely on pretrained models to capture precise context within diverse clinical texts and highlights the advantages of refining LLMs using more extensive clinical datasets38,44. This thereby shows that even in cases where labeled data is scarce, tuning the models with respect to the clinical texts can still lead to further improvements compared to using pretrained models alone. Incorporating labels into the fine-tuning process, when available, can substantially improve performance relative to self-supervised fine-tuning. This ensures tokens linked to specific outcomes are effectively represented in the optimized model, thereby achieving slightly improved performances17,45. The foundation models obtained impressive performances by incorporating a multi-task learning (MTL) framework at scale, as concurred by Aghajanyan et al.46. Resultingly, a model-agnostic foundation language model—where a single robust model is fine-tuned across all accessible labels—yielded more competitive outcomes. This is potentially attributed to the model’s ability to efficiently capture and retain crucial predictive information through knowledge sharing among distinct postoperative complications during the fine-tuning phase, thereby revealing the foundational capabilities of LLMs in predicting risks from notes taken preoperatively.

The foundation approach offers potential tangible benefits beyond achieving strong results. From a computational standpoint, a single model can be fine-tuned across various tasks, conserving time and resources that would be expended on tailoring and storing parameters for each specific model37,46,47. In addition, this model can better generalize across tasks it has been optimized for through knowledge sharing, outperforming bespoke models and mitigating concerns of potential overfitting to the limited samples from a specific task48. From a clinical standpoint, because a single, more robust model can be deployed to predict multiple tasks, it could potentially facilitate greater convenience when integrated into clinical workflows37,47.

Upon integrating tabular-based features with text embeddings from clinical notes, the predictive performance of foundation models largely remains robust. This robustness can be attributed to the knowledge-sharing capabilities inherent in multi-task learning (MTL), which enhance model generalizability. In addition, we observe that imbalanced outcomes, such as deep vein thrombosis (DVT) and pulmonary embolism (PE), derive most of their predictive power from tabular-based features. These outcomes are influenced by underlying coagulation abnormalities (e.g., hypercoagulability, venous stasis), which are more effectively captured through laboratory results49.

Minor variances in outcomes emerged when comparing the results of different machine learning predictors on extracted textual embeddings, including the utilization of the fully connected neural network found atop the contextual representations. This affirms that the robustness of LLMs lies mostly in the attention-based architecture, which leads to precise text representations, while the domain-specific dataset on which the language model is fine-tuned plays a crucial role in its adaptability18,35,36. Similarly, there was no consistent performance gain using BERT-based clinical LLM models over GPT-based models, even though they had different training strategies and the former is more widely used in downstream prediction.

Given that model harmfulness and hallucinations are core concerns in the application of LLMs in medicine16, we tested our model with several broad-case prompts. Our outputs suggest it’s safe usage, contradicting some of the potentially harmful outputs witnessed from the base models of which our foundation models were trained on. In addition, it represented a semantic shift towards perioperative-specific corpora from the broad biomedical or critical care unit notes on which these base models were trained38.

Our findings could provide useful potential when integrated into workflows. The versatility and robustness of foundation models can potentially assist clinicians by simultaneously predicting multiple postoperative risks, enabling the early identification of high-risk patients with fewer false positives and false negatives. This process would otherwise rely on manual assessment and interpretation50, which can be time-consuming and limited to what a single clinician can process at a time. By augmenting these manual efforts, foundation models can provide scalable insights and predictions, allowing clinicians to prioritize critical diagnostic and treatment decisions while enhancing workflow efficiency and optimizing clinical operations. Henceforth, with the early recognition of critical deterioration, these models have the potential to facilitate timely interventions, which could potentially reduce unnecessary procedures and follow-ups.

Despite these benefits, hurdles in embedding LLMs into clinical workflows, which could lead to unfavorable outcomes, could limit these foundation models from realizing their full potential. Gaining the trust and confidence of clinicians to encourage the adoption of LLMs over widely accepted ‘gold’ standards33, such as the NSQIP ACS Risk Calculator, hinges on demonstrating superior predictive performance and ensuring explainability51. Existing ‘gold’ standards are often favored due to their simplicity compared to the ‘black-box’ nature of deep learning models such as LLMs. While tools like the NSQIP Surgical Risk Calculator can often produce predictions with minimal input features, they may potentially be highly sensitive to specific features that disproportionately influence surgical risk predictions. This is observed in the predictive results in the comparisons between foundation models and the NSQIP Surgical Risk Calculator, where the NSQIP Surgical Risk Calculator frequently classifies patients in our dataset as having a high risk of serious complications due to the dataset’s average high ASA class and relatively high amount of patients with dependent functional status—features the tool heavily relies on for its predictions. This reliance led to subpar precision and F-scores, raising concerns about the generalizability of ‘gold-standard’ models when applied across healthcare centers with distinct patient characteristics. In contrast, language models consider a vast array of features (i.e., words), where no single word is likely to sway the prediction of an outcome. This demonstrated by the minimal feature importance values observed in our SHAP analysis, as shown in Fig. 5. This suggest that language models are less reliant on specific features and could potentially be better equipped to generalize across distinct patient populations. It is on this same note that the integration of SHAP could proof potentially useful when embedding LLMs into clinical workflows to enhance the explainability of model predictions. Incorporating such interpretability mechanisms could enable clinicians to better understand the outputs, allowing them to make informed decisions which is critical in facilitating the clinician adoption of foundation models.

Moving forward, as a important part of our future work, we hope to collaborate closely with clinicians to gather feedback on the meaningfulness of these model outputs. This feedback will guide the iterative development of clinical decision support systems, ensuring that our models can be better operationalized to efficiently improve patient care.

Despite demonstrating the potential of LLMs in predicting risks from notes taken during preoperative care, our study possesses limitations. First, the primary data for our study is centered around anesthesia notes from BJH, which encompasses a single healthcare system. While we have attempted to demonstrate the generalizability of our methods by replicating them on the MIMIC-III dataset, we acknowledge that different types of clinical notes spanning distinct hospital systems are characteristically heterogeneous. This includes surgical notes, which may contain relevant content specific to each case that could potentially enhance the predictive power for postoperative complications. Second, quality of the textual data may possess limitations in itself, ranging from incompleteness52, to potentially missing data52, to the potential lack of frequent updates53.

This could have a potentially adverse impact on the performances of our fine-tuned LLMs. Third, our study utilized BERT- and GPT-2-based models. These reasonably sized models represent both encoder- and decoder-only architectures that can be implemented and integrated into clinical workflows without requiring exorbitant computing resources. Nonetheless, in the era of increasingly large open-source models, such as the Llama-based BioMedGPT54, as well as high-performing proprietary medical domain-specific models like Med-PaLM-255, it remains unclear whether our observations can be generalized to these large-scale LLMs or effectively embedded into clinical workflows at that scale. As such, our ongoing study is actively exploring the use of parameter-efficient fine-tuning (PEFT) methods on large-scale models. Forth, subgroup analysis based on the various surgery types was not conducted because of the small number of patients within each subgroup; hence, the clinical utility of the predictions of postoperative complications based on specific surgery types is limited. To address these limitations, our ongoing work involves data triangulation across the administrative data, clinical text, and other data to align with high-quality manual health record review provided by National Surgical Quality Improvement Program adjudicators.

Methods

Our dataset originated from electronic anesthesia records (Epic) for all adult patients undergoing surgery at Barnes-Jewish Hospital (BJH), the largest healthcare system in the greater St. Louis (MO) region, spanning four years from 2018 to 2021. The dataset included n = 84, 875 of preoperative notes and its surgical outcomes.

The text-based notes were single-sentence documents with a vocabulary size of ∣V∣ = 3203, and mean word and vocabulary lengths of \({\overline{l}}_{w}=8.9\) (SD: 6.9) and \({\overline{l}}_{v}=7.3\) (SD: 4.4), respectively. The textual data contained detailed information on planned surgical procedures, which were derived from smart text records during patient consultations. To preserve patient privacy, the data was provided in a de-identified and censored format, with patient identifiers removed and procedural information presented in an arbitrary order, ensuring that no information could be traced back to any uniquely identifiable patient.

Of the 84,875 patients, the distribution of patient types included 17% (14,412) in orthopedics, 8.8% (7442) in ophthalmology, and 7.4% (6236) in urology. The gender distribution was 50.3% male (42,722 patients). Ethnic representation included 74% White (62,563 patients), 22.6% African American (19,239 patients), 1.7% Hispanic (1488 patients), and 1.2% Asian (1015 patients). The mean weight was 86 kg (± 24.7 kg) and the mean height was 170 cm (± 11 cm). Other characteristics of the patients could be found in Table 6.

Outcomes

Our six outcomes were: 30-day mortality, acute knee injury (AKI), pulmonary embolism (PE), pneumonia, deep vein thrombosis (DVT), and delirium. These six outcomes remain pertinent in perioperative care, particularly during OR-ICU handoff1,56,57.

AKI was defined according to the Kidney Disease Improving Global Outcomes criteria and was determined using a combination of laboratory values (serum creatinine) and dialysis event records. Exclusion criteria for AKI included baseline end-stage renal disease, as indicated by structured anesthesia assessments, laboratory data, and billing data. Delirium was determined from nurse flow-sheets (positive Confusion Assessment Method for the Intensive Care unit test result); pneumonia, DVT, and PE were determined based on the International Statistical Classification of Diseases and Related Health Problems, Tenth Revision (ICD-10) diagnosis codes. Patients without delirium screenings were excluded from the analysis of that complication.

Data quality

The dataset originally included a total of 90,005 records with postoperative outcomes. However, 5130 instances did not have clinical notes associated with their EHR records, resulting in a final sample size of n = 84,875. A total of 72,697 patients without delirium screenings were excluded from the analysis pertaining to the delirium outcome, resulting in an 86% missing rate for that complication. The remaining five outcomes—30-day mortality, DVT, PE, pneumonia, and AKI—had complete data and no missing outcomes among all cases with associated clinical notes.

Traditional word embeddings and pretrained LLMs

We employed a combination of BERT30 and GPT-based31 large language models (LLMs) that were trained on either biomedical or open-source clinical corpora—specifically BioGPT21, ClinicalBERT17, and BioClinicalBERT18—for predicting risk factors from notes taken during perioperative care. BioGPT is a 347-million-parameter model trained on 15 million PubMed abstracts21. It adopts the GPT-2 model architecture, making it an auto-regressive model trained on the language modeling task, which seeks to predict the next word given all preceding words. In contrast, ClinicalBERT was trained on the publicly available Medical Information Mart for Intensive Care III (MIMIC-III) dataset, which contains 2,083,180 de-identified clinical notes associated with critical care unit admissions17. It was initialized from the BERTbase architecture with the masked language modeling (MLM) and next sentence prediction (NSP) objectives30. Similarly, BioClinicalBERT was pretrained on all the available clinical notes associated with the MIMIC-III dataset18. However, unlike ClinicalBERT, BioClinicalBERT was based on the BioBERT model58, which itself was trained on 4.5 billion words of PubMed abstracts and 13.5 billion words of PubMed Central full-text articles. This allowed BioClinicalBERT to leverage texts from both the biomedical and clinical domains.

These models have been tested across representative NLP benchmarks in the medical domains, including Question-Answering tasks benchmarked by PubMedQA21, recognizing entities from texts18 and logical relationships in clinical text pairs59. In addition to the clinically-oriented LLMs, we included traditional NLP word embeddings as baselines for comparison. These include word2vec’s continuous bag-of-words (CBOW)22, doc2vec23, GloVe24, and FastText25.

By comparing transformer-based LLMs with traditional words embeddings, we aim to analyze the magnitude of improvements which clinically-oriented pretrained LLMs could potentially possess in grasping and contextualizing perioperative texts towards postoperative risk prediction, in comparison to traditional word embeddings that represent each token as an independent vector.

Transfer learning: self-supervised fine-tuning

A comparison of the distinct fine-tuning methods employed among our study could be best illustrated in Fig. 1.

To bridge the gap between the corpora of the pretrained models and that of perioperative notes, we first expose and adjust these models to our perioperative text through self-supervised fine-tuning. We adapt the pretrained LLMs with our training data in accordance with their existing training objectives. This process leverages the information contained within the source domain and exploits it to align the distributions of source and target data. For BioGPT, this entails the language modeling task21,31. For ClinicalBERT and BioClinicalBERT, it involves the masked language modeling (MLM), as well as the Next Sentence Prediction (NSP) objectives if the document contains multiple sentences, as elaborated in the “Traditional word embeddings and pretrained LLMs" section above30.

Transfer learning: incorporating labels into fine-tuning

In lieu of anticipated improvements from self-supervised fine-tuning, we took a step further by incorporating labels as part of the fine-tuning process, in the hopes of boosting predictive performances as demonstrated in past studies17,45. We do this through a semi-supervised approach, as illustrated in Fig. 1. This means that in contrast to the self-supervised approach which adjusts weights based solely on training texts, the semi-supervised method infuses label information during the fine-tuning process. In doing so, the model leverages an auxiliary fully-connected feed-forward neural network atop of the contextual representations, found in the final layer of the hidden states, to predict the labels as part of its fine-tuning process. The auxiliary neural network uses the Binary-Cross-Entropy (BCE), Cross-Entropy (CE), and Mean-Square-Error (MSE) losses for binary classification, multi-label classification, and regression tasks, respectively. A λ parameter was introduced to balance the magnitude of losses between the supervised and self-supervised objectives. The λ parameter, as well as all other parameters used to fine-tune the models, are detailed in Table 7. The appropriate values for λ were selected to balance each model’s self-supervised objectives with the losses across distinct labels within the same training batch, ensuring both losses converged at relatively similar rates. Henceforth, in addition to the potential improvements brought by the self-supervised training objectives, we are now able to leverage the labels to supervise the textual embedding to better align with the training labels.

Foundation fine-tuning strategy

To build a foundation model with knowledge across all tasks, we extended the above-mentioned strategies and exploited all possible labels available within the dataset, including but not limited to selected tasks, as inspired by Aghajanyan et al.46. This involves employing a multi-task learning framework for knowledge sharing across all available labels from all six outcomes—death in 30 days, AKI, PE, pneumonia, DVT, and delirium—present in the dataset. This task-agnostic approach therefore enables knowledge sharing across all available labels. Therefore, the model becomes foundational in the sense that it solves various tasks simultaneously, meaning a single robust model can be deployed to a wide range of postoperative risks. This contrasts previous approaches that required separate models dedicated to each specific outcome. To achieve this, each label is assigned a task-specific auxiliary fully-connected feed-forward neural network, wherein the losses across all labels are pooled together. To control for the magnitude of losses between each task-specific auxiliary network, a λ parameter, where \(\lambda * \mathop{\sum }\nolimits_{i}^{m}los{s}_{i}\) given i outcome across m total outcomes, is introduced as weights that contribute to the overall loss calculation across all labels from each training batch. The λ parameter, as well as all other parameters used to fine-tune the models, are detailed in Table 7. The appropriate values for λ were selected to balance each model’s self-supervised objectives with the losses across distinct labels within the same training batch, ensuring both losses converged at relatively similar rates.

Predictors

We defaulted to XGBoost27 for predicting outcomes from the generated word embeddings or text representations to facilitate consistent comparisons between traditional word embeddings, pretrained LLMs, and their fine-tuned variants. This selection allows to accommodate a diverse range of input types while leveraging XGBoost’s widespread use in healthcare due to its robust performance in various clinical prediction tasks. Nonetheless, the choice of predictors remain flexible. This includes utilizing the task-specific fully-connected feed-forward neural network found in models that were fine-tuned with respect to the labels.

To examine if the choice of predictors makes a noticeable difference in predictive performances, we experimented with various predictors among the models of the best-performing fine-tuning strategy. These include (1) the default XGBoost, (2) Random Forest, (3) Logistic Regression (4) and the feed-forward network found in models that incorporate labels into the fine-tuning process. The range of hyper-parameters used for each predictor could be found in the Table 8.

Evaluation metrics and validation strategies

For a rigorous evaluation, experiments were stratified into 5-folds for cross-validation48. A nested cross-validation approach was used to ensure a robust and fair comparison among the best combination of hyperparameters across all models and approaches spanning multiple folds.

The main evaluation metrics included area under the receiver operating characteristic curve (AUROC) and the area under the precision-recall curve (AUPRC) to get a comprehensive evaluation of the models’ overall prediction performance in the face of class imbalance. To contextualize our results, we fixed the specificity at 95% and reported the improvements in sensitivity across all the experimented strategies for the postoperative complication demonstrating the greatest gains compared to baseline traditional word embeddings. In our case, pneumonia showed the largest gains, while 30-day mortality was excluded as it is not classified as a postoperative surgical complication. These gains can be interpreted as the number of correctly identified high-risk patients (per 100 cases) that would have otherwise been missed by baseline models. This underscores the model’s ability to detect previously overlooked cases across complications. In addition, for our best-performing foundation models, we reported accuracy, sensitivity, specificity, precision, and F-scores.

Qualitative evaluation of our foundation models

We qualitatively evaluate our models for safety while ensuring their adaptation to perioperative corpora beyond the quantitative results. As such, we ran some broad case-prompts on our best-performing foundation models through their objective loss functions. For the BERT-based models, this encompassed the masked language modeling (MLM) objective, where we get the model to fill a single masked token in a ‘fill-in-the-blank’ format30. For the GPT models, this involved the language modeling objective, where we get the model to ‘complete the sentence’ based on the provided incomplete sentences31.

Incorporation of tabular-based features

To evaluate how predictive performance differs with the incorporation of tabular-based data and assess their contribution to predictive power, we utilized tabular features tied to the respective clinical notes of each patient. These tabular features includes demographics (e.g., age, race, and sex), medical history (e.g., smoking status and heart failure), physiological measurements (e.g., blood pressure and heart rate), laboratory results (e.g., white blood cell count), and medications. These features were concatenated with the textual representations of the clinical notes obtained from each fine-tuned language model.

Tabular features with a missing rate greater than 50% were excluded, while missing values were imputed using the most frequent class for categorical features and median values for continuous features. XGBoost was then re-utilized among the combined textual representations and encoded tabular features, using the same parameters across our experiments without the tabular data, as specified in Table 8.

Explainability of models

SHAP29, a technique that uses Shapley values from game theory to explain how each feature affects a prediction, was employed to explain the influence of distinct tokens in the clinical notes on the prediction. In this context, each feature corresponds to a token—typically representing a sub-word or symbol—present among the clinical texts. Consequently, each token is assigned a feature value based on its influence on the model’s prediction. We utilize the best-performing model and the corresponding tokenizer of each outcome to generate our SHAP visualizations among all six outcomes.

Comparison with NSQIP Surgical Risk Calculator

The ACS NSQIP Risk Calculator33, often regarded as the ‘gold standard’ for risk estimation, uses 20 distinct discrete variables to predict the risk of complications. These variables include procedural details, demographic information such as age and sex, chronic conditions or comorbidities like congestive heart failure and hypertension, and patient status indicators such as ASA class and functional status. The ACS NSQIP Risk Calculator is proprietary software developed and maintained by the American College of Surgeons.

To compare the performances of our models with the classifications obtained from the NSQIP Surgical Risk Calculator, we randomly selected 100 cases from the test data of the first fold, stratified by the occurrence of serious postoperative complications. Each patient’s discrete features were manually entered into the ACS NSQIP Risk Calculator (https://riskcalculator.facs.org/RiskCalculator/), and the predicted outcomes were compared with those generated by our best-performing foundation model, the BioGPT variant. For this comparison, a prediction of a “higher than average" risk of serious complications by the NSQIP calculator was considered to be a high-risk patient (i.e., positive case). Since the NSQIP Risk Calculator incorporates procedural information as discrete variables, we ensured a fair comparison by evaluating its performance both with clinical notes alone and with clinical notes combined with tabular features.

Replication of methods on MIMIC-III

To ensure that our methods are generalizable beyond perioperative care and the BJH dataset, we replicated our methods on the publicly available MIMIC-III dataset35,36, which contains de-identified reports and clinical notes of critical care patients at the Beth Israel Deaconess Medical Center between 2001 and 2012. The notable differences arises from MIMIC-III’s primary focus critical care cases, including emergency operations across various specialties, whilst our dataset encompasses a broader range of both emergency and elective surgical cases, with Orthopedics, Ophthalmology, and Urology among the most represented specialties. To closely mimic the approach and settings employed in BJH’s clinical notes, we utilized the long-form descriptive texts of procedural codes in MIMIC-III. Specifically, ICD-10 codes containing procedural information from each patient were traced and formatted into their respective long-form titles. For each patient, these long-form titles were then combined to form a single-sentenced clinical note, thereby aligning with the textual characteristics of BJH’s clinical notes. The selected outcomes were length-of-stay (LOS), in-hospital mortality, 12 h discharge status, and death in 30 days, as adapted from previous studies1,35,36,60.

Computing resources

A single RTX A6000 with 40GB of Random Access Memory (RAM) was used to fine-tune all the models. The range of RAM required ranged from 8GB in bioClinicalBERT and clinicalBERT with self-supervised fine-tuning to 37GB in bioGPT among foundation fine-tuning. As bioClinicalBERT and clinicalBERT models contained 110 million parameters and bioGPT contained 347 million parameters, the ranking of computing resources required to fine-tune these models are bioClinicalBERT ≈ clinicalBERT < bioGPT. Similarly, the self-supervised models were fine-tuned on a single objective and could accommodate to smaller batch sizes, therefore requiring lesser resources compared to the supervised counterparts. The foundation model naturally required more resources as it was fine-tuned on multiple outcomes, with each outcome represented as a separate feed-forward function, thereby requiring larger batch sizes to ensure each batch had a fair representation among the outcomes. Therefore, methods-wise, the rank of computing resources required to fine-tune these models were unsupervised < semi-supervised < foundation.

Ethics consideration

For the BJH dataset, the internal review board of Washington University School of Medicine in St. Louis (IRB #201903026) approved the study with a waiver of patient consent. The establishment of the MIMIC-III database was approved by the Massachusetts Institute of Technology and Beth Israel Deaconess Medical Center, and informed consent was obtained for the use of data. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013).

Data availability

The main data of this study (BJH) is not openly available due to our IRB of which a patient waiver was obtained for the study. The data used to replicate our methods (MIMIC-III) is available as open data via the PhysioNet Repository: https://physionet.org/content/mimiciii/1.4/.

Code availability

The source codes can be publicly accessed through the github repository without restrictions at https://github.com/cja5553/LLMs_in_perioperative_care. The best performing foundation models are gated in HuggingFace and could be requested with the proper permissions.

References

Xue, B. et al. Use of machine learning to identify risks of postoperative complications. JAMA Netw Open 4, e212240 (2021).

Hamel, M. B., Henderson, W. G., Khuri, S. F. & Daley, J. Surgical outcomes for patients aged 80 and older: morbidity and mortality from major noncardiac surgery. J. Am. Geriatr. Soc. 53, 424–429 (2005).

Healey, M. A. Complications in surgical patients. Arch. Surg. 137, 611–618 (2002).

Turrentine, F. E., Wang, H., Simpson, V. B. & Jones, R. S. Surgical risk factors, morbidity, and mortality in elderly patients. J. Am. Coll. Surg. 203, 865–877 (2006).

Xue, B. et al. Perioperative predictions with interpretable latent representation. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 4268–4278 (ACM, 2022).

Selwyn, D. Impact of perioperative care on healthcare resource use: rapid research review. Tech. Rep., Centre for Perioperative Care https://www.cpoc.org.uk/sites/cpoc/files/documents/2020-09/Impact%20of%20perioperative%20care%20-%20rapid%20review%20FINAL%20-%2009092020MW.pdf (2020).

Wolfhagen, N., Boldingh, Q. J. J., Boermeester, M. A. & de Jonge, S. W. Perioperative care bundles for the prevention of surgical-site infections: meta-analysis. Br. J. Surg. 109, 933–942 (2022).

Hofer, I. S., Lee, C., Gabel, E., Baldi, P. & Cannesson, M. Development and validation of a deep neural network model to predict postoperative mortality, acute kidney injury, and reintubation using a single feature set. NPJ Digit. Med. 3, 58 (2020).

Spasic, I. & Nenadic, G. et al. Clinical text data in machine learning: systematic review. JMIR Med. Inform. 8, e17984 (2020).

Braaf, S., Manias, E. & Riley, R. The role of documents and documentation in communication failure across the perioperative pathway. a literature review. Int. J. Nurs. Stud. 48, 1024–1038 (2011).

Riley, R. & Manias, E. Governing the Operating Room List, 67–89 (Palgrave Macmillan UK, 2007).

FitzHenry, F. et al. Exploring the frontier of electronic health record surveillance. Med. Care 51, 509–516 (2013).

Nazi, Z. A. & Peng, W. Large language models in healthcare and medical domain: a review. Informatics 11, 57 (2024).

Wang, S. et al. Interactive computer-aided diagnosis on medical image using large language models. Commun. Eng. 3, 133 (2024).

Yang, H. et al. Exploring the potential of large language models in personalized diabetes treatment strategies. medRxiv 2023-06 (2023).

Van Veen, D. et al. Adapted large language models can outperform medical experts in clinical text summarization. Nat. Med. 30, 1134–1142 (2024).

Huang, K., Altosaar, J. & Ranganath, R. Clinicalbert: modeling clinical notes and predicting hospital readmission. In CHIL 2020 Workshop (2019).

Alsentzer, E. et al. Publicly available clinical Bert embeddings. In Proceedings of the 2nd Clinical Natural Language Processing Workshop, 72–78 (2019).

Li, H. et al. Text-based predictions of COVID-19 diagnosis from self-reported chemosensory descriptions. Commun. Med. 3, 104 (2023).

Mao, C. et al. Ad-bert: using pre-trained language model to predict the progression from mild cognitive impairment to Alzheimer’s disease. J. Biomed. Inform. 144, 104442 (2023).

Luo, R. et al. Biogpt: generative pre-trained transformer for biomedical text generation and mining. Brief. Bioinforma. 23, bbac409 (2022).

Church, K. W. Word2Vec. Nat. Lang. Eng. 23, 155–162 (2016).

Le, Q. & Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the 31st International Conference on Machine Learning, Vol. 32 of Proceedings of Machine Learning Research (eds. Xing, E. P. & Jebara, T.) 1188–1196 (PMLR, 2014).

Pennington, J., Socher, R. & Manning, C. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (Association for Computational Linguistics, 2014).

Athiwaratkun, B., Wilson, A. G. & Anandkumar, A. Probabilistic fasttext for multi-sense word embeddings. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 1–11 (Association for Computational Linguistics, Melbourne, Australia, 2018) https://doi.org/10.18653/v1/P18-1001.

Brown, T. B. et al. Language models are few-shot learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems, NIPS '20 (Curran Associates Inc., 2020).

Chen, T. & Guestrin, C. Xgboost. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (ACM, 2016).

Farquhar, S., Kossen, J., Kuhn, L. & Gal, Y. Detecting hallucinations in large language models using semantic entropy. Nature 630, 625–630 (2024).

Scott, M. & Su-In, L. et al. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30, 4765–4774 (2017).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. Bert: pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), 4171–4186 (Association for Computational Linguistics, Minneapolis, Minnesota, 2019) https://doi.org/10.18653/v1/N19-1423.

Radford, A., Narasimhan, K., Salimansa, T. & Sutskever, I. Improving language understanding by generative pre-training. Tech. Rep., OpenAI. https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (2019).

Aftan, S. & Shah, H. A survey on Bert and its applications. In 2023 20th Learning and Technology Conference (L&T), 161–166 (2023).

Bilimoria, K. Y. et al. Development and evaluation of the universal ACS NSQIP surgical risk calculator: a decision aid and informed consent tool for patients and surgeons. J. Am. Coll. Surg. 217, 833–842 (2013).

Goetz, L., Seedat, N., Vandersluis, R. & van der Schaar, M. Generalization—a key challenge for responsible AI in patient-facing clinical applications. NPJ Digit. Med. 7, 126 (2024).

Johnson, A. E. et al. MIMIC-III, a freely accessible critical care database. Sci. Data 3, 1–9 (2016).

Johnson, A., Pollard, T. & Mark, R. MIMIC-III clinical database (version 1.4). PhysioNet 10, 2 (2016).

Raza, M. M., Venkatesh, K. P. & Kvedar, J. C. Generative AI and large language models in health care: pathways to implementation. NPJ Digit. Med. 7, 62 (2024).

Wornow, M. et al. The shaky foundations of large language models and foundation models for electronic health records. NPJ Digit. Med. 6, 135 (2023).

Wu, H. et al. A survey on clinical natural language processing in the United Kingdom from 2007 to 2022. NPJ Digit. Med. 5, 186 (2022).

Bland, K. Antimicrobial prophylaxis for surgery: an advisory statement from the national surgical infection prevention project. Yearb. Surg. 189, 225–226 (2006).

Young, P. Y. & Khadaroo, R. G. Surgical site infections. Surg. Clin. North Am. 94, 1245–1264 (2014).

Hospital, B.-J. About us: Barnes-Jewish Hospital at Washington University Medical Center. https://www.bjc.org/locations/hospitals/barnes-jewish-hospital. (2025)

Sandmann, S., Riepenhausen, S., Plagwitz, L. & Varghese, J. Systematic analysis of ChatGPT, google search and llama 2 for clinical decision support tasks. Nat. Commun. 15, 2050 (2024).

Shojaee-Mend, H., Mohebbati, R., Amiri, M. & Atarodi, A. Evaluating the strengths and weaknesses of large language models in answering neurophysiology questions. Sci. Rep. 14, 10785 (2024).

Chen, X., Beaver, I. & Freeman, C. Fine-tuning language models for semi-supervised text mining. In 2020 IEEE International Conference on Big Data (Big Data), 3608–3617 (2020).

Aghajanyan, A. et al. Muppet: Massive multi-task representations with pre-finetuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (Association for Computational Linguistics, 2021).

Perez-Lopez, R., Ghaffari Laleh, N., Mahmood, F. & Kather, J. N. A guide to artificial intelligence for cancer researchers. Nat. Rev. Cancer 24, 427–441 (2024).

Kernbach, J. M. & Staartjes, V. E. Foundations of machine learning-based clinical prediction modeling: Part II-generalization and overfitting. In Machine Learning in Clinical Neuroscience: Foundations and Applications 15–21 (Springer, 2022).

Cushman, M. Epidemiology and risk factors for venous thrombosis. In Seminars in hematology, Vol. 44, 62–69 (Elsevier, 2007).

Koopman, R. J. et al. Physician information needs and electronic health records (EHRs): time to reengineer the clinic note. J. Am. Board Fam. Med. 28, 316–323 (2015).

Choudhury, A. & Chaudhry, Z. Large language models and user trust: consequence of self-referential learning loop and the deskilling of health care professionals. J. Med. Internet Res. 26, e56764 (2024).

Troiani, J. S., Finkelstein, S. M. & Hertz, M. I. Incomplete event documentation in medical records of lung transplant recipients. Prog. Transplant. 15, 173–177 (2005).

Walker, J. et al. OpenNotes after 7 years: patient experiences with ongoing access to their clinicians’ outpatient visit notes. J. Med. Internet Res. 21, e13876 (2019).

Zhang, K. et al. A generalist vision–language foundation model for diverse biomedical tasks. Nat. Med. 30, 3129–3141 (2024).

Singhal, K. et al. Toward expert-level medical question answering with large language models. Nat. Med. https://doi.org/10.1038/s41591-024-03423-7 (2025).

Bellini, V. et al. Machine learning in perioperative medicine: a systematic review. J. Anesth. Analg. Crit. Care 2, 2 (2022).

Amin, A. P. et al. Reducing acute kidney injury and costs of percutaneous coronary intervention by patient-centered, evidence-based contrast use: the barnes-jewish hospital experience. Circulation: Cardiovasc. Qual. Outcomes 12, e004961 (2019).

Lee, J. et al. Biobert: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 36, 1234–1240 (2020).

Shivade, C. et al. MedNLI—a natural language inference dataset for the clinical domain. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium. Association for Computational Linguistics, 1586–1596 (2019).

Luo, M., Chen, Y., Cheng, Y., Li, N. & Qing, H. Association between hematocrit and the 30-day mortality of patients with sepsis: a retrospective analysis based on the large-scale clinical database MIMIC-IV. PLoS ONE 17, e0265758 (2022).

Acknowledgements

The authors would like to thank Brad Fritz, Michael Avidan, Christopher King, and Sandhya Tripathi from Washington University for collecting and providing the dataset. This study is supported, in part by, funding from the Agency for Healthcare Research and Quality (R01 HS029324-02).

Author information

Authors and Affiliations

Contributions

C.A.: Methodology, software, validation, formal analysis, investigation, writing—original draft, visualization. B.X.: Conceptualization, methodology, validation, formal analysis, investigation, writing—original draft. J.A.: Resources, writing—review & editing, funding acquisition, project administration. T.K.: Resources, writing—review & editing, project administration. C.L.: Conceptualization, methodology, resources, writing—review & editing, supervision, project administration. All authors have read and approved the manuscript. Individuals who contributed to the data curation have been credited in the Acknowledgments.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alba, C., Xue, B., Abraham, J. et al. The foundational capabilities of large language models in predicting postoperative risks using clinical notes. npj Digit. Med. 8, 95 (2025). https://doi.org/10.1038/s41746-025-01489-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-025-01489-2