Abstract

The Segment Anything Model (SAM) was fine-tuned on the EchoNet-Dynamic dataset and evaluated on external transthoracic echocardiography (TTE) and Point-of-Care Ultrasound (POCUS) datasets from CAMUS (University Hospital of St Etienne) and Mayo Clinic (99 patients: 58 TTE, 41 POCUS). Fine-tuned SAM was superior or comparable to MedSAM. The fine-tuned SAM also outperformed EchoNet and U-Net models, demonstrating strong generalization, especially on apical 2-chamber (A2C) images (fine-tuned SAM vs. EchoNet: CAMUS-A2C: DSC 0.891 ± 0.040 vs. 0.752 ± 0.196, p < 0.0001) and POCUS (DSC 0.857 ± 0.047 vs. 0.667 ± 0.279, p < 0.0001). Additionally, SAM-enhanced workflow reduced annotation time by 50% (11.6 ± 4.5 sec vs. 5.7 ± 1.7 sec, p < 0.0001) while maintaining segmentation quality. We demonstrated an effective strategy for fine-tuning a vision foundation model for enhancing clinical workflow efficiency and supporting human-AI collaboration.

Similar content being viewed by others

Introduction

Echocardiography provides a comprehensive anatomy and physiology assessment of the heart and is one of the most widely available imaging modalities in the field of Cardiology given its non-radiative, safe, and low-cost nature1,2,3. Cardiac chamber quantification, especially the left ventricle (LV), is one of the fundamental tasks of echocardiography studies in the current practice4, and the results can have direct effects on clinical decisions such as the management of heart failure, valvular heart diseases, and chemotherapy-induced cardiomyopathy5,6,7,8. Although LV chamber quantification tasks are performed by trained sonographers or physicians, it is known to be subject to intra- and inter-observer variance, which can be up to 7%-13% across studies9,10,11,12. Many artificial intelligence (AI) applications have been applied to address this essential task and to minimize variations3,13,14,15,16,17,18.

While established AI systems are potential solutions, the training of segmentation AI models requires large amounts of training data and their corresponding expert-defined annotations, making them challenging and costly to implement19. In recent years, transformers, a type of neural network architecture with the self-attention mechanism that enables the model to efficiently capture complex dependencies and relationships, have revolutionized the field from natural language processing to computer vision20,21,22. Vision transformers (ViT)20 are a type of transformer specifically designed for images, which have shown impressive performance with simple image patches and have become a popular choice for the building of foundation models that can be fine-tuned for various applications23. Building on this success, Meta AI introduced the “Segment Anything Model” (SAM), a foundation large vision model that was trained on diverse datasets and that can adapt to specific tasks. This model achieves “zero-shot” segmentation, i.e., segments user-specified objects at different data resources without needing any training data24.

However, while the zero-shot performance of SAM on natural image datasets has been promising24, its performance on complex image datasets, such as medical images, has not been fully investigated. While not specifically including echocardiography images, one study tested SAM on different medical image datasets, including ultrasound, and the zero-shot performance was not optimal25. Recently, MedSAM was introduced as a universal tool for medical image segmentation. However, ultrasound or echocardiography was less represented in the training set despite >1 million medical images being used26. This prompted a core research question: How can we effectively develop a segmentation model for a specific medical image segmentation task? Is it necessary to build a universal medical image segmentation model, or can a generic foundation model be fine-tuned with a relatively small, task-specific dataset to achieve comparable performance? In this context, we aim to study a novel strategy of using representative echocardiography examples for the fine-tuning of a foundation segmentation model (SAM) and compare its effectiveness with MedSAM and a state-of-the-art segmentation model trained with a domain-specific dataset (EchoNet)3.

We hypothesized that fine-tuning the foundation segmentation model with the above strategy can achieve similar or superior performance on LV segmentation compared to MedSAM or EchoNet. We further designed a human annotation study on a subset of images to investigate whether the model can enhance the efficiency of LV segmentation. Within the echocardiography domain, images obtained from different institutions and modalities (with different image qualities) were used to evaluate the generalization capability of SAM performance on external evaluation sets.

Results

SAM’s zero-shot performance on echocardiography and POCUS

We first tested the zero-shot performance of SAM. Overall, the zero-shot performance on the EchoNet-dynamic test set had a mean DSC of 0.863 ± 0.053. In terms of individual cardiac phase performance, end-diastolic frames were better than end-systolic frames (mean DSC 0.878 ± 0.040 vs. 0.849 ± 0.060) (Table 1). Supplementary Table 1 summarizes the zero-shot performance on the EchoNet-dynamic training and validation sets. On the Mayo Clinic dataset, the mean DSC was 0.882 ± 0.036 and 0.861 ± 0.043 for TTE and POCUS, respectively. On the CAMUS dataset, we observed a mean DSC of 0.866 ± 0.039 and 0.852 ± 0.048 on A4C and A2C views, respectively (Table 1). When compared to the ground truth LVEF, the calculated LVEF had an MAE of 11.67%, 6.28%, and 6.38%, on EchoNet, Mayo-TTE, and Mayo-POCUS data, respectively (Supplementary Table 2). When evaluated by Hausdorff Distance (HD) and Average Surface Distance (ASD), MedSAM was superior across most of the datasets (Table 2).

SAM’s fine-tuned performance on echocardiography and POCUS

Compared to zero-shot, fine-tuning generally improved the performance of SAM, with a mean DSC of 0.911 ± 0.045 (SAM112) on the EchoNet-dynamic test set (Table 1 and Supplementary Table 1). Similar improvement was also observed in Mayo TTE data, with an overall mean DSC of 0.902 ± 0.032. In contrast, no significant improvement was observed on the POCUS data (DSC 0.857 ± 0.047), while the performance was numerically improved when compared with the ground truth of the second observer (DSC 0.876 ± 0.038), as summarized in Table 3. The EchoNet model had a significant performance drop, especially on the Mayo-POCUS and CAMUS A2C datasets (Table 1). When compared to the ground truth LVEF, the calculated LVEF had an MAE of 7.52%, 5.47%, and 6.70%, on the EchoNet test, Mayo-TTE, and Mayo-POCUS data, respectively (Supplementary Table 2).

Qualitatively, poor-performing cases often featured suboptimal images (such as weak LV endocardial borders, off-axis views; Fig. 1, Panels c, f), highlighting the importance of image quality (Fig. 1). On a representative POCUS case, fine-tuned SAM112 predicted masks that were more consistent with LV geometry (Fig. 2).

From top to bottom: 97.5th to 2.5th percentile of DSC. Panels a, b, and c are end-diastolic frames, and Panels d, e, and f are end-systolic frames. We observed that many of the poor-performance cases had suboptimal image qualities, such as weak LV endocardial borders or off-axis views (Panels c and f, suggesting the importance of good input image quality on model performance. Additionally, end-diastolic frames usually have a better delineation of borders than end-systolic frames, which is consistent with the model performance (end-diastolic slightly better than end-systolic).

Panel a. end-diastolic frame, Panel b. end-systolic frame. From left to right are the ground truth, zero-shot, and fine-tuned mask, with an overlay of bounding boxes (green-colored) and mask (blue-colored), on the original POCUS image. Fine-tuned masks were more consistent with the anticipated left ventricular geometry on visualization. Note that POCUS images generally had worse quality compared to transthoracic echocardiography images.

Comparison of SAM, MedSAM, EchoNet, and U-Net models

Comparisons were made between fine-tuned SAM112 (fine-tuned using 112× 112 images), MedSAM, EchoNet, and U-Net. Under the same setting, the inference time for SAM was about 7.3 images/sec, MedSAM was 5.0 images/sec, EchoNet was 153.3 images/sec, and UNet was 26 images/sec. Note that EchoNet takes video input, so the inference time was averaged by the number of frames in each video.

When evaluated by DSC and IoU, EchoNet demonstrated a similar level of performance compared to fine-tuned SAM112 on the EchoNet test set (SAM112 vs. EchoNet: DSC 0.911 ± 0.045 vs. 0.915 ± 0.047, p < 0.0001) and the Mayo TTE dataset (DSC 0.902 ± 0.032 vs. 0.893 ± 0.090, p < 0.0001). However, EchoNet significantly underperformed on the Mayo POCUS and CAMUS datasets, with a performance drop ranging from 5–25% (Table 1). MedSAM also showed about 2–5% worse performance than fine-tuned SAM112across all datasets (all p < 0.0001), except for the CAMUS dataset, as it was part of MedSAM’s training data. U-Net had worse performance than fine-tuned SAM112 across all datasets (all p < 0.0001) (Table 1). When evaluated by HD and ASD, MedSAM had the best performance across most of the datasets, while fine-tuned SAM112 had the best performance on the EchoNet test set. This was followed by SAM (zero-shot), EchoNet, and then U-Net (Supplementary Table 3).

Further fine-tuned SAM1024 (fine-tuned using 1024 × 1024 images as MedSAM) essentially achieved the same performance as fine-tuned MedSAM, with a DSC of 0.935 and 0.936, respectively. Both of the fine-tuned models also demonstrated similar performance across different datasets, except for the CAMUS dataset, which is part of MedSAM’s training set (Table 4). We also observed that after the finetuning, both SAM1024 and MedSAM had dropped performance slightly on the Mayo and POCUS datasets, compared to fine-tuned SAM112 and base MedSAM.

Human Annotation Study

To test SAM’s potential in enhancing clinical workflow, we designed a human annotation study using SAM112. In the study, we observed that AI assistance significantly improved efficiency by decreasing 50% annotation time (p < 0.0001; Fig. 3a), which remains true for both expert-level and medical student-level annotators (Fig. 3b, c). The AI-assisted workflow maintained the quality of left ventricle segmentation, as measured by DSC (Fig. 3d–f). Qualitative assessments indicated an improvement in performance for inexperienced annotators, which may not be completely captured by the DSC scores (Supplementary Fig. 1).

a With SAM’s assistance, the average annotation time significantly decreased by 50%. b Among experienced annotators, the workflow decreased annotation time by 39.2%; (c) It also reduced 66.0% annotation time for an inexperienced annotator. d–f No significant difference was observed in segmentation quality when measured by the Dice Similarity Score. ***p < 0.0001.

Discussion

The major contributions of this work include: (1) presenting a data-efficient and cost-effective strategy for training a LV segmentation model for echocardiography images based on SAM and leveraging its generalization capability for POCUS images, and (2) showing the potential of optimizing clinical workflows through a human-in-the-loop approach, where SAM significantly improved efficiency while maintaining annotation quality.

Echocardiography, like other ultrasound modalities, is generally considered an imaging modality with more challenges due to its operator dependency and low signal-to-noise ratio3,27,28,29. Additionally, objects could often have weak border linings or be obstructed by artifacts on ultrasound/echocardiography images, which posed specific challenges for echocardiography segmentation tasks3,25. While the image/frame-based approach of SAM does not consider consecutive inter-frame changes and spatio-temporal features of the echocardiogram, fine-tuned SAM (both SAM112 and SAM1024) achieved superior frame-level segmentation performance on all datasets compared to the video-based EchoNet model3. Importantly, there were cases with suboptimal image quality and imperfect human labels (Fig. 1) in the EchoNet-dynamic data set3,30, which can limit the model performance.

In terms of generalization capability, the fine-tuned SAM112 model demonstrated robust performance on unseen TTE (including the A2C view in CAMUS) and POCUS data, with about a 1% and 5% drop in performance, respectively. In contrast, there was a significant drop in EchoNet and UNet’s performance on the POCUS dataset (Table 1). Similarly, when evaluated by border-sensitive metrics such as HD and ASD, foundation models (SAM and MedSAM) were able to generate more consistent borders compared to EchoNet and U-Net (Supplementary Table 3). This again demonstrated the advantage of leveraging the generalization capabilities of foundation models23 in building AI solutions for the rapidly growing use of POCUS in cardiac imaging31. While evaluation on a larger POCUS dataset is required to better evaluate inter-observer variations, we demonstrated a strategy to fine-tune foundation models using readily available and relatively high-quality TTE results, knowing that POCUS images usually come with larger variations in operator skill levels, image quality, and scanning modalities31,32,33,34.

On a head-to-head comparison, fine-tuned SAM112 outperformed base MedSAM across almost all datasets when evaluated by DSC and IoU (Table 1). This is likely due to the fact that cardiac ultrasound images were less represented in MedSAM’s training set of over 1 million images. When fine-tuned under the same setting, SAM1024 and fine-tuned MedSAM can achieve the same level of performance and generalization capability on a specialized LV segmentation task (Table 4). Our study supports a data-efficient foundation model training strategy that uses representative examples for specific tasks in medical subspecialties, providing a generalizable solution that overcomes the challenge of large-scale, high-quality dataset collection in this field19,35. Importantly, starting with SAM also saved computational resources for training on 20 A100 (80 G) GPUs in MedSAM’s training process26.

SAM’s interactive capability allows the potential integration of SAM into research or clinical workflows in echocardiography labs to create high-quality segmentation masks at scales23,25. We demonstrated the potential of a SAM-based, human-in-loop workflow for LV segmentation, significantly reducing the annotation time and maintaining the segmentation quality across users with different experience levels (Fig. 3; Supplementary Fig. 1). Compared to fully automatic models3, an interactive approach ensures that segmentations can be directly prompted or modified to the level of interpreters’ satisfaction24,25. This process also has the potential to gain the trust of human users to facilitate integration36. While future studies are needed to assess its real impact on clinical practice, the interactive functionality of SAM could be especially helpful in challenging cases or when fully automatic models fail to predict segmentations accurately25.

This study is limited by its retrospective nature and could be subject to selection bias. Since SAM is an image-based model, its performance was evaluated on pre-selected end-diastolic and end-systolic frames rather than the beat-to-beat assessment of the video as proposed by the EchoNet-Dynamic model. However, we still demonstrated superior frame-level performance with fine-tuned SAM. While adapters are another potential direction to use SAM for echocardiography segmentation37, we have not specifically explored this approach in the current paper. Since this manuscript focuses on exploring effective strategies for adapting a generic foundation model like SAM, we did not modify the architecture, and as such, the study does not include substantial technical innovations. The balance of performance and generalization capability was not comprehensively explored. Additionally, how SAM can be integrated into the current echocardiography lab workflow and its real-world effects will need to be validated in a prospective setting, which is beyond the scope of the study.

In conclusion, fine-tuning SAM on a relatively small yet representative echocardiography dataset resulted in superior performance compared to the state-of-the-art echocardiography segmentation model, EchoNet, as well as base MedSAM, and achieved the same performance as fine-tuned MedSAM. We demonstrated a data-efficient and cost-effective approach for handling specialized complex echocardiography images, and SAM’s potential to enhance current echocardiography workflows by improving the efficiency with a human-in-the-loop design.

Methods

Population and Data Curation

EchoNet-Dynamic

The EchoNet-Dynamic dataset is publicly available (https://echonet.github.io/dynamic/); details of the dataset have been described previously3. In brief, the dataset contains 10,030 apical-4-chamber (A4C) TTE videos at Stanford Health Care in the period of 2016–2018. The raw videos were preprocessed to remove patient identifiers and downsampled by cubic interpolation into standardized 112 × 112-pixel videos. Videos were randomly split into 7465, 1277, and 1288 patients, respectively, for the training, validation, and test sets3. The mean left ventricular ejection fraction of the EchoNet-dynamic dataset was 55.8 ± 12.4%, 55.8 ± 12.3%, and 55.5 ± 12.2%, for the training, validation, and test set, respectively3. Patient characteristics were not available for this public dataset. In this study, the cases without ground truth labels were excluded from this analysis (5 from the train set, 1 from the test set) (Supplementary Table 3).

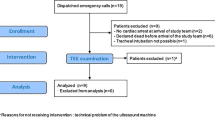

Mayo Clinic

A dataset from the Mayo Clinic (Rochester, MN) that includes 99 randomly selected patients (58 TTE in 2017–2018 and 41 point-of-care ultrasound studies (POCUS) in 2022) was used as an external validation dataset. The A4C videos were reviewed by a clinical sonographer (RW) and a cardiologist (JMF). Fifty-two (33 TTE and 19 POCUS) out of the total 99 cases were traced by both of the annotators to select and segment the end-diastolic and end-systolic frames. The tracings were done manually on commercially available software (MD.ai, Inc., NY). In the Mayo Clinic data set (n = 99), the mean age was 47.5 ± 17.8 years, 58 (58.6%) were male, and coronary artery disease, hypertension, and diabetes were found in 20 (20.2%), 42 (42.4%), and 15 (15.2%) of patients, respectively. The dataset contains an A4C view of 58 TTE cases and 41 POCUS cases, the LVEF was 61.7 ± 7.5% for TTE and 63.2 ± 11.9% for POCUS cases.

CAMUS

The Cardiac Acquisitions for Multi-structure Ultrasound Segmentation (CAMUS) dataset contains 500 cases from the University Hospital of St Etienne (France) with detailed tracings on both A4C and A2C views38. The CAMUS cohort (n = 500) had a mean age of 65.1 ± 14.4 years, 66% male, and a mean LVEF of 44.4 ± 11.9%.

Segment Anything Model (SAM), MedSAM, and U-Net

The Segment Anything Model (SAM) is an image segmentation foundation model trained on a dataset of 11 million images and 1.1 billion masks24. It can generate object masks from input prompts like points or boxes. SAM’s promptable design enables zero-shot transfer to new image distributions and tasks, achieving competitive or superior performance compared to fully supervised methods24. In brief, the model comprises a VisionEncoder, PromptEncoder, MaskDecoder, and Neck module, which collectively process image embeddings, point embeddings, and contextualized masks to predict accurate segmentation masks24. MedSAM is a fine-tuned version of the base SAM, trained on over 1.5 million medical image-mask pairs across 10 modalities and 30 cancer types, with trained weights available26. U-Net architecture was selected as the representative of commonly used segmentation deep learning models. In our study, the U-Net architecture39 leveraged a ResNet-18 backbone40.

Data Preprocessing

Each EchoNet-Dynamic video (112 × 112 pixels, in avi format) was exported into individual frames without further resizing. End-diastolic and end-systolic frames of each case were extracted, which corresponded to the human expert-traced, frame-level ground truth segmentation coordinates in the dataset. Ground truth segmentation masks were generated according to the coordinates and saved in the same size (112 × 112 pixels). The labeled frames of Mayo TTE and POCUS images were exported from the MD.ai platform and followed a similar preprocessing method to remove patient identifiers, then horizontally flipped and resized to 112 × 112 pixels3. The CAMUS images were rotated by 270 degrees and resized to be consistent with the EchoNet format. When importing to the SAM model, all the raw images were resized with the built-in function “ResizeLongestSide”24.

Zero-shot Evaluation

The original SAM ViT-base model (model type “vit_b”, checkpoint “sam_vit_b_01ec64.pth ”) without modification or fine-tuning was used to segment the dataset, referred to as zero-shot learning in machine learning literature24,26. The larger versions of SAM (ViT Large and ViT Huge) were not used as they did not offer significant performance improvement despite higher computational demands24. Bounding box coordinates of each left ventricle segmentation tracing were generated from the ground truth segmentations and used as the prompt for SAM24. Of note, while SAM supports both the bounding box and point prompts, our study focused exclusively on the bounding box prompt due to the incomplete and relatively weak borders in echocardiography images. Additionally, since the left atrium and LV are connected structures, point prompts may result in masks that incorrectly link the two chambers.

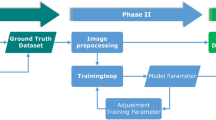

Model Fine-tuning

The SAM ViT-base model was used for fine-tuning with a procedure described by Ma et al.41. We used the training set cases (n = 7460) of the EchoNet-Dynamic as our customized dataset without data augmentation. The same bounding box was used as the prompt, as described above. We used a customized loss function, which is the unweighted sum of Dice loss and cross-entropy loss41,42. Adam optimizer43 was used (weight decay = 0), with an initial learning rate of 2e-5 (decreased to 3e-6 over 27 epochs). The batch size was 8. The model was fine-tuned on a node on the Stanford AI Lab cluster with a 24 GB NVIDIA RTX TiTAN GPU. The fine-tuning procedure of U-Net followed the same procedure except for using bounding boxes, and the training epoch was 23 for the best-performing model. No data augmentation was performed.

To ensure fair comparison between SAM and MedSAM, we used MedSAM’s fine-tuning setup with 1024 × 1024 images, and both models were trained for 70 epochs. The two versions of fine-tuned SAM models were referred to as SAM112 and SAM1024, respectively. The training time for SAM112 and SAM1024 was about 25 h and 40 h, respectively. MedSAM required a training time of about 50 h. UNet required 16 min.

Validation and Generalization

The fine-tuned SAM was tested on the test set of the EchoNet-Dynamic dataset. To test the generalization capacity of SAM, we used external validation samples from the CAMUS dataset (both A2C and A4C)38 and a Mayo Clinic dataset including the A4C view of cases of TTE and POCUS devices.

Human Annotation Study

We conducted a human annotation study to evaluate the enhancement of segmentation efficiency and quality when utilizing a fine-tuned SAM. Three annotators (two experts and one medical student) independently annotated 100 echocardiography images, randomly selected from the EchoNet-Dynamic test set. Each one annotated 100 images with and without SAM assistance. In the SAM-assisted workflow, annotators were instructed to draw minimal bounding boxes to prompt the model. In the regular workflow, annotators manually trace the endocardium contour, as per standard clinical practice. Annotators were allowed to refine their annotations until they were satisfactory. We recorded and compared the time required to complete annotations and the quality of annotations (DSC against ground truth) using both workflows.

Statistical Model Performance Evaluation

The model segmentation performance was directly evaluated by Intersection over Union (IoU), Dice similarity coefficient (DSC), Hausdorff Distance (HD), and Average Surface Distance (ASD) against human ground truth labels44. IoU and DSC assess the overlap of segmented areas, while HD and ASD evaluate the alignment of segmentation borders. The formulas are provided in Supplementary Note 1. Depending on the normality of distribution, two-tailed, paired t-tests or Wilcoxon tests were conducted to assess the statistical significance of the differences in accuracy between models (zero-shot vs. fine-tuned SAM, EchoNet vs. fine-tuned SAM, and MedSAM vs. fine-tuned SAM), as well as the annotation time and quality metrics in the human annotation study (AI-assisted vs. non-AI-assisted), with p < 0.05 as significant.

Ethical review and approval

All the studies have been performed in accordance with the Declaration of Helsinki.EchoNet-Dynamic dataset contains a publicly available, de-identified dataset that was approved by Stanford University Institutional Review Board and data privacy review through a standardized workflow by the Center for Artificial Intelligence in Medicine and Imaging (AIMI) and the University Privacy Office. The CAMUS dataset is also publicly available, under the approval of the University Hospital of St Etienne (France) ethical committee after full anonymization. The use of the Mayo Clinic dataset was approved by the institutional review board (protocol#22-010944); only patients providing informed consent for minimal-risk retrospective studies were included, thus the requirement for additional informed consent was waived.

Data availability

The EchoNet and CAMUS datasets are publicly available. The Mayo Clinic dataset can not be made publicly available due to patient privacy regulations; it is available from the corresponding author upon reasonable request.

Code availability

We released a checkpoint of the fine-tuned SAM and MedSAM models, along with the fine-tuning, inference, and statistical analysis code. The code and checkpoint are available on GitHub: https://github.com/chiehjuchao/SAM-Echo.git.

Abbreviations

- AI:

-

Artificial intelligence

- A2C:

-

apical 2 chamber, echocardiography view

- A4C:

-

apical 4 chamber, echocardiography view

- CAMUS:

-

Cardiac Acquisitions for Multi-structure Ultrasound Segmentation

- IoU:

-

Intersection over the union

- DSC:

-

Dice similarity coefficient

- LV:

-

Left ventricle

- POCUS:

-

Point-of-care ultrasound

- SAM:

-

Segment anything model

- TTE:

-

Transthoracic echocardiography

- ViT:

-

Vision Transformer

References

Antoine, C. et al. Clinical outcome of degenerative mitral regurgitation. Circulation 138, 1317–1326 (2018).

Matulevicius, S. A. et al. Appropriate use and clinical impact of transthoracic echocardiography. JAMA Intern. Med. 173, 1600–1607 (2013).

Ouyang, D. et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature 580, 252–256 (2020).

Lang, R. M. et al. Recommendations for cardiac chamber quantification by echocardiography in adults: an update from the American society of echocardiography and the European association of cardiovascular imaging. J. Am. Soc. Echocardiogr. 28, 1–39.e14 (2015).

Liu, J. et al. Contemporary role of echocardiography for clinical decision making in patients during and after cancer therapy. JACC Cardiovasc Imaging 11, 1122–1131 (2018).

Fonseca, R., Jose, K. & Marwick, T. H. Understanding decision-making in cardiac imaging: determinants of appropriate use. Eur. Hear J. - Cardiovasc Imaging 19, 262–268 (2017).

Otto, C. M. et al. 2020 ACC/AHA Guideline for the Management of Patients With Valvular Heart Disease A Report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. J. Am. Coll. Cardiol. https://doi.org/10.1016/j.jacc.2020.11.018 (2020).

Tam, J. W., Nichol, J., MacDiarmid, A. L., Lazarow, N. & Wolfe, K. What is the real clinical utility of echocardiography? A prospective observational study. J. Am. Soc. Echocardiogr. 12, 689–697 (1999).

Pellikka, P. A. et al. Variability in ejection fraction measured by echocardiography, gated single-photon emission computed tomography, and cardiac magnetic resonance in patients with coronary artery disease and left ventricular dysfunction. JAMA Netw. Open 1, e181456–e181456 (2018).

Malm, S., Frigstad, S., Sagberg, E., Larsson, H. & Skjaerpe, T. Accurate and reproducible measurement of left ventricular volume and ejection fraction by contrast echocardiography A comparison with magnetic resonance imaging. J. Am. Coll. Cardiol. 44, 1030–1035 (2004).

Cole, G. D. et al. Defining the real-world reproducibility of visual grading of left ventricular function and visual estimation of left ventricular ejection fraction: impact of image quality, experience and accreditation. Int J. Cardiovasc Imaging 31, 1303–1314 (2015).

Koh, A. S. et al. A comprehensive population-based characterization of heart failure with mid-range ejection fraction. Eur. J. Heart Fail 19, 1624–1634 (2017).

Gilbert, A. et al. Generating synthetic labeled data from existing anatomical models: an example with echocardiography segmentation. IEEET Med. Imaging 40, 2783–2794 (2021).

Salte, I. M. et al. Artificial intelligence for automatic measurement of left ventricular strain in echocardiography. Jacc Cardiovasc. Imaging 14, 1918–1928 (2021).

Huang, H. et al. Segmentation of echocardiography based on deep learning model. Electronics 11, 1714 (2022).

Amer, A., Ye, X. & Janan, F. ResDUnet: a deep learning-based left ventricle segmentation method for echocardiography. IEEE Access 9, 159755–159763 (2021).

Jafari, M. H., Woudenberg, N. V., Luong, C., Abolmaesumi, P. & Tsang, T. Deep Bayesian Image Segmentation For A More Robust Ejection Fraction Estimation. 2021 IEEE 18th Int. Symp. Biomed. Imaging (ISBI) 00, 1264–1268 (2021).

Mokhtari, M., Tsang, T., Abolmaesumi, P. & Liao, R. Medical Image Computing and Computer Assisted Intervention – MICCAI 2022. Lect. Notes Comput. Sci. 360–369, https://doi.org/10.1007/978-3-031-16440-8_35, (2022).

Yu, Y. et al. Techniques and challenges of image segmentation: a review. Electronics 12, 1199 (2023).

Dosovitskiy, A. et al. An image is worth 16×16 words: transformers for image recognition at scale. arXiv https://doi.org/10.48550/arxiv.2010.11929 (2020).

Vaswani, A. et al. Attention is all you need. arXiv https://doi.org/10.48550/arxiv.1706.03762 (2017).

Liu, Y. et al. Summary of ChatGPT/GPT-4 research and perspective towards the future of large language models. arXiv (2023).

Bommasani, R. et al. On the opportunities and risks of foundation models. arXiv https://doi.org/10.48550/arxiv.2108.07258 (2021).

Kirillov, A. et al. Segment Anything. arXiv (2023).

Mazurowski, M. A. et al. Segment anything model for medical image analysis: an experimental study. arXiv (2023).

Ma, J. et al. Segment anything in medical images. Nat. Commun. 15, 654 (2024).

Mintz, G. S. & Kotler, M. N. Clinical value and limitations of echocardiography: its use in the study of patients with infectious endocarditis. Arch. Intern. Med. 140, 1022–1027 (1980).

Mondillo, S., Maccherini, M. & Galderisi, M. Usefulness and limitations of transthoracic echocardiography in heart transplantation recipients. Cardiovasc. Ultrasound 6, 2–2 (2008).

Abdulla, A. M., Frank, M. J., Canedo, M. I. & Stefadouros, M. A. Limitations of echocardiography in the assessment of left ventricular size and function in aortic regurgitation. Circulation 61, 148–155 (2018).

Tromp, J. et al. Automated interpretation of systolic and diastolic function on the echocardiogram: a multicohort study. Lancet Digital Heal 4, e46–e54 (2022).

Huang, G. S., Alviar, C. L., Wiley, B. M. & Kwon, Y. The era of point-of-care ultrasound has arrived: are cardiologists ready?. Am. J. Cardiol. 132, 173–175 (2020).

Kalagara, H. et al. Point-of-care ultrasound (POCUS) for the cardiothoracic anesthesiologist. J. Cardiothor Vasc. 36, 1132–1147 (2022).

Palmero, S. L. et al. Point-of-Care Ultrasound (POCUS) as an extension of the physical examination in patients with bacteremia or candidemia. J. Clin. Med. 11, 3636 (2022).

Kline, J., Golinski, M., Selai, B., Horsch, J. & Hornbaker, K. The effectiveness of a blended POCUS curriculum on achieving basic focused bedside transthoracic echocardiography (TTE) proficiency. A formalized pilot study. Cardiovasc Ultrasoun 19, 39 (2021).

Zhang, D. et al. Deep learning for medical image segmentation: tricks, challenges and future directions. arXiv https://doi.org/10.48550/arxiv.2209.10307 (2022).

Bao, Y., Cheng, X., Vreede, T. D. & Vreede, G.-J. D. Investigating the relationship between AI and trust in human-AI collaboration. Proc. 54th Hawaii Int. Conf. Syst. Sci. https://doi.org/10.24251/hicss.2021.074 (2021).

Wu, J. et al. Medical SAM Adapter: Adapting Segment Anything Model for Medical Image Segmentation. arXiv (2023).

Leclerc, S. et al. Deep learning for segmentation using an open large-scale dataset in 2D echocardiography. IEEE Trans. Méd. Imaging 38, 2198–2210 (2019).

Ronneberger, O., Fischer, P. & Brox, T. U-net: convolutional networks for biomedical image segmentation. arXiv https://doi.org/10.48550/arxiv.1505.04597 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. 2016 IEEE Conf Comput Vis Pattern Recognit Cvpr 770–778, https://doi.org/10.1109/cvpr.2016.90, (2016).

Ma, J. & Wang, B. Segment anything in medical images. arXiv https://doi.org/10.48550/arxiv.2304.12306 (2023).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211 (2021).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. arXiv https://doi.org/10.48550/arxiv.1412.6980 (2014).

Maier-Hein, L. et al. Metrics reloaded: recommendations for image analysis validation. arXiv https://doi.org/10.48550/arxiv.2206.01653 (2022).

Acknowledgements

We would like to acknowledge Owen Crystal, MS, for his contributions to the organization of EchoNet-related data, which significantly facilitated the analysis.

Author information

Authors and Affiliations

Contributions

C.-J.C.: Conceptualization, methodology, software, validation, data curation, formal analysis, writing—original draft, visualization. Y.R.G.: Methodology, software. W.K.: Software, data curation. T.X.: Methodology, software. L.A.: Methodology, software. J.W.: Methodology, software. J.M.F.: Data curation. R.W.: Data curation. J.J.: Data curation, software. R.A.: Data curation, resources, writing- review and editing. G.C.K.: Data curation, resources, writing- review and editing. J.K.O.: Data curation, resources, writing- review and editing. C.P.L.: Resources, writing- review and editing. I.B.: Conceptualization, resources, writing—review and editing, supervision. F.-F.L.: Conceptualization, methodology, resources, writing—review and editing, supervision. E.A.: Conceptualization, methodology, resources, writing—review and editing, supervision.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chao, CJ., Gu, Y.R., Kumar, W. et al. Foundation versus domain-specific models for left ventricular segmentation on cardiac ultrasound. npj Digit. Med. 8, 341 (2025). https://doi.org/10.1038/s41746-025-01730-y

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41746-025-01730-y

This article is cited by

-

PAM: a propagation-based model for segmenting any 3D objects across multi-modal medical images

npj Digital Medicine (2025)