Abstract

This multicenter retrospective study developed and validated an AI-driven model for automated segmentation and quantitative evaluation of meibomian glands using infrared meibography images acquired by the Keratograph 5M device. A total of 1350 infrared meibography images were collected and annotated for model training and validation. The model demonstrated high segmentation performance, with an Intersection over Union of 81.67% (95% Confidence Interval [CI]: 81.03–82.31) and accuracy of 97.49% (95% CI: 97.38–97.62), outperforming conventional algorithms. Strong agreement was observed between AI-based and manual gland grading (Kappa = 0.93) and gland counting (Spearman r = 0.9334). Repeatability analysis confirmed the model’s stability, and external validation across four independent centers yielded consistent results with AUCs exceeding 0.99. This AI tool offers a standardized, efficient, and objective method for meibography image analysis, which may improve diagnostic precision and assist in the clinical management of meibomian gland dysfunction across diverse populations.

Introduction

Dry eye disease (DED) is a multifactorial disorder of the tear film, often resulting from tear deficiency or excessive tear evaporation, leading to damage to the interpalpebral ocular surface and associated with symptoms of ocular discomfort1. Over the past few decades, DED has become a significant driver of ophthalmology clinic visits, with an estimated global prevalence affecting one in every eleven people2,3,4. Meibomian gland dysfunction (MGD), the most common cause of DED, leads to instability of the tear film’s lipid layer, contributing to excessive tear evaporation5. The introduction of meibography has enabled the visualization of meibomian gland morphology, which has become a critical tool for diagnosing DED6. However, traditional methods of evaluating meibomian gland morphology rely on subjective interpretations by clinicians, leading to variability, operational complexity, and time inefficiency.

Artificial intelligence (AI) has increasingly transformed ophthalmic imaging and diagnostic practices, offering the potential to enhance clinical accuracy and efficiency. In the management of diseases such as diabetic retinopathy, retinopathy of prematurity, cataracts, glaucoma, and age-related macular degeneration, AI has demonstrated outstanding capabilities in risk assessment and disease progression prediction7,8. In the field of dry eye disease, AI-assisted Optical Coherence Tomography (OCT) imaging enables the automated measurement of tear film characteristics, including tear meniscus area, height, depth, and radius9. With AI integration, slit-lamp imaging allows for reliable grading of corneal punctate epithelial erosions10, and interferometry images facilitate highly accurate classification of the tear film lipid layer11. Numerous algorithms based on computer vision12,13,14, supervised learning15,16,17, unsupervised learning18,19, and Vision Transformer (ViT)20 have been developed for meibomian gland evaluation, providing significant clinical benefits by automating and standardizing the diagnostic process. Additionally, several studies13,21,22 have developed deep learning-based automated methods for gland segmentation and multiparameter quantitative analyzers, enabling quantitative assessment. These AI-driven approaches improve diagnostic consistency, reduce subjectivity, and enable earlier and more accurate detection of MGD, leading to better monitoring of disease progression. For example, recent studies have utilized these AI techniques to explore risk factors for MGD, providing a quantitative assessment of the effects of allergic conjunctivitis23, orthokeratology lens wear22, and smoking and alcohol consumption on meibomian gland morphology24. These findings offer new insights for clinical diagnosis and management.

Given the high accuracy demands of meibomian gland evaluation, it is crucial to rigorously validate these AI models and assess their reliability across diverse clinical environments. This includes comparing AI model performance with manual annotations, evaluating repeatability, and performing external validation to confirm robustness. We herein present the development and multicenter validation of an AI-driven model for quantitative meibomian gland assessment. The proposed AI-driven model addresses critical limitations in current diagnostic approaches through comprehensive, quantitative morphological analysis. Our rigorous validation framework, encompassing repeatability assessments, correlation analysis with manual annotations, and multicenter external validation, establishes the model’s capacity to deliver objective, reproducible metrics with demonstrated generalizability and reliability. Our study specifically focused on the upper eyelid, which demonstrates higher meibomian gland density and exerts greater influence on tear film stability compared to the lower eyelid25,26. This anatomical selection is further justified by the upper eyelid’s suitability for standardized imaging, in contrast to the lower eyelid’s frequent challenges in achieving complete gland visualization during clinical examinations27. These advancements significantly contribute to ocular surface health management by enabling standardized evaluation protocols, enhancing diagnostic precision, and supporting personalized therapeutic strategies for MGD, thereby representing a substantial innovation in ophthalmic diagnostic technologies.

Results

Study images

A total of 881 infrared meibomian gland images were included in the internal validation phase of the study after undergoing preprocessing.

For the multicenter external validation, datasets were collected from four independent ophthalmology centers (Supplementary Table 1): Zhongshan Ophthalmic Center (124 images), Putian Ophthalmic Hospital (109 images), Dongguan Huaxia Eye Hospital (100 images), and Zhuzhou City Hospital in Hunan (136 images). These 469 external images were similarly processed for quality control and used to evaluate the model’s generalizability across different clinical environments.

Annotator agreement and reliability

To validate manual annotations, we compared annotations from three junior physicians and one senior ophthalmologist. Pearson correlation coefficients for gland and eyelid area pixel values exceeded 0.85 between junior and senior annotators (Supplementary Fig. 1a), with intra-annotator correlations also above 0.85. For eyelid area annotations, inter-annotator correlations were consistently above 0.90 (Supplementary Fig. 1b). The IoU for gland regions between junior and senior annotators was >91.57% (Supplementary Fig. 2).

AI model performance

The performance of the AI-driven segmentation model (UNet) was compared with several other state-of-the-art medical image segmentation models. The UNet model achieved an IoU of 81.67%, outperforming other models such as UNet++ (78.85%) and U2Net (79.69%). Key performance metrics are summarized in Table 1, showing the UNet model’s Dice coefficient at 89.72%, recall at 90.48%, and overall accuracy at 97.49%, reflecting its superior segmentation capability.
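For readers less familiar with these metrics, the following is a minimal sketch of how IoU, Dice, recall, and accuracy can be computed for a single pair of binary masks. It is an illustration only, not the authors' evaluation code: the function name `segmentation_metrics` and the toy masks are hypothetical, and masks are flattened to lists of 0/1 pixels for simplicity.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for two flat binary masks (1 = gland, 0 = background)."""
    if len(pred) != len(truth) or not pred:
        raise ValueError("masks must be non-empty and of equal length")
    # Count the four cells of the pixel-level confusion matrix.
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    union = tp + fp + fn
    return {
        # If neither mask contains gland pixels, treat the overlap as perfect.
        "iou": tp / union if union else 1.0,
        "dice": 2 * tp / (2 * tp + fp + fn) if union else 1.0,
        "recall": tp / (tp + fn) if (tp + fn) else 1.0,
        "accuracy": (tp + tn) / len(pred),
    }


# Hypothetical 8-pixel example (not study data).
pred = [1, 1, 1, 0, 0, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0, 0, 0]
m = segmentation_metrics(pred, truth)
print(m)
```

Because accuracy also counts correctly labeled background pixels, it is typically much higher than IoU when glands occupy a small part of the image, which is the pattern seen in the reported values.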

Repeatability of the AI model

The repeatability of the AI model was evaluated using Bland-Altman plots for 28 pairs of repeated images. The model showed excellent repeatability in gland length and count measurements, with most data points within the 95% limits of agreement and mean differences near zero (Supplementary Fig. 3a, b). Minor variability was observed in gland width and area measurements, but results remained within acceptable limits, confirming the model’s reliability for repeated clinical use (Supplementary Fig. 3c, d).
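The quantities behind a Bland-Altman plot can be sketched as follows. This is a generic illustration under the usual assumption that the 95% limits of agreement are the mean difference plus or minus 1.96 standard deviations; the function name and the paired gland counts are hypothetical and are not taken from the study.

```python
from statistics import mean, stdev


def bland_altman(first, second):
    """Return (bias, lower limit, upper limit) for paired repeated measurements."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(first, second)]
    bias = mean(diffs)   # mean difference between the two acquisitions
    sd = stdev(diffs)    # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical gland counts from two acquisitions of the same eyelids.
first_scan = [20, 22, 19, 25, 21]
second_scan = [21, 22, 18, 24, 21]
bias, lower, upper = bland_altman(first_scan, second_scan)
print(f"bias={bias:.2f}, limits of agreement=({lower:.2f}, {upper:.2f})")
```

A bias near zero with narrow limits indicates that repeated images of the same eyelid yield nearly the same measurement.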

Comparison between AI and manual evaluation

The distribution of grades assigned by different graders and the AI is illustrated in Fig. 1a. The AI-based grading system achieved 94.09% accuracy and a Kappa value of 0.93 (95% CI: 0.91–0.95) compared to manual grading (Fig. 1c). Gland counts from annotators and the AI are shown in Fig. 1b. Given the non-normal distribution of gland counts, Spearman’s correlation coefficient was used to evaluate consistency, revealing a strong correlation of 0.9334 between AI and manual counts (Fig. 1d).
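The two agreement statistics used here can be sketched in a few lines. The example assumes an unweighted Cohen's kappa (the text does not state whether a weighted variant was used) and computes Spearman's coefficient as the Pearson correlation of tie-averaged ranks; all function names and sample values are hypothetical.

```python
from collections import Counter
from statistics import mean


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of categorical grades."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement from the marginal grade frequencies of each rater.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    return (observed - expected) / (1 - expected)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical grades (0-3) and gland counts, not study data.
manual_grades = [0, 0, 1, 1, 2, 2, 3, 3]
ai_grades = [0, 0, 1, 2, 2, 2, 3, 3]
kappa = cohen_kappa(manual_grades, ai_grades)
rho = spearman([18, 20, 22, 25, 25], [17, 21, 22, 24, 26])
print(round(kappa, 4), round(rho, 4))
```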

External validation performance

The model exhibited robust performance across four independent centers, with AUC values of 0.9931 (Zhongshan Ophthalmic Center), 0.9921 (Dongguan Huaxia Eye Hospital), 0.9950 (Zhuzhou City Hospital), and 0.9940 (Putian Ophthalmic Hospital) (Fig. 2a–d). Decision curve analysis (DCA) confirmed a positive net benefit across a wide range of threshold probabilities, highlighting the model’s clinical utility (Fig. 2e–h). The model achieved high accuracy (97.83–98.36%), sensitivity (99.04–99.47%), and specificity (88.16–90.28%), with IoU values ranging from 97.4% to 98.1%. Additional metrics included positive predictive values (98.25–98.69%), negative predictive values (93.07–96.23%), positive likelihood ratios (8.3820–10.2307), negative likelihood ratios (0.0060–0.0108), and F1 scores (0.9100–0.9316) (Supplementary Table 2).
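The relationship between these diagnostic metrics can be made concrete with a short sketch that derives them from the four cells of a confusion matrix. The function name and the counts below are hypothetical; they were chosen only so that sensitivity and specificity fall near the reported ranges.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Standard diagnostic accuracy metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        # Likelihood ratios combine sensitivity and specificity.
        "lr_pos": sensitivity / (1 - specificity),
        "lr_neg": (1 - sensitivity) / specificity,
    }


# Hypothetical counts (not study data).
d = diagnostic_metrics(tp=990, fp=10, fn=10, tn=90)
for name, value in d.items():
    print(f"{name}: {value:.4f}")
```

With sensitivity near 0.99 and specificity near 0.90, the positive likelihood ratio is close to 10 and the negative likelihood ratio close to 0.01, the same order of magnitude as the values reported above.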

Fig. 2: a ROC curve for Zhongshan Ophthalmic Center. b ROC curve for Dongguan Huaxia Eye Hospital. c ROC curve for Zhuzhou City Hospital. d ROC curve for Putian Ophthalmic Hospital. e DCA for Zhongshan Ophthalmic Center. f DCA for Dongguan Huaxia Eye Hospital. g DCA for Zhuzhou City Hospital. h DCA for Putian Ophthalmic Hospital.

Consistency between manual and automated assessments in multicenter validation

External validation demonstrated strong agreement between manual and automated assessments for MG grading and counting. At Zhongshan Ophthalmic Center, automated scoring achieved 91.93% accuracy, with a Kappa value of 0.81 (95% CI: 0.70–0.93) and Spearman’s ρ of 0.8524 for gland counts (Fig. 3a, e). Similar performance was observed at Dongguan Huaxia Eye Hospital (accuracy: 94.00%; Kappa: 0.91; 95% CI: 0.83–0.99; Spearman’s ρ: 0.9736) (Fig. 3b, f), Putian Ophthalmic Hospital (accuracy: 94.49%; Kappa: 0.95; 95% CI: 0.91–0.99; Spearman’s ρ: 0.9518) (Fig. 3c, g), and Zhuzhou City Hospital (accuracy: 93.39%; Kappa: 0.90; 95% CI: 0.85–0.96; Spearman’s ρ: 0.9206) (Fig. 3d, h).

Discussion

With the rapid advancement of AI, the diagnosis and management of DED have significantly improved. AI can automatically identify tear film breakup regions, enabling more precise measurement of tear film breakup time (TBUT)28, and can also objectively quantify lipid layer thickness and assess tear film stability through interference pattern analysis29. AI-driven analysis of OCT images allows for automated measurement of tear meniscus height, detection of corneal thickness changes, and classification of DED severity29. Furthermore, AI integrates various non-invasive clinical indicators to predict tear osmolarity without the need for invasive sampling, enhancing both patient comfort and diagnostic efficiency30. Similarly, AI-driven algorithms have emerged in the diagnosis of MGD31. Meibography has become a valuable tool for the clinical screening and diagnosis of MGD. Previous studies have made significant efforts in developing rapid and automated identification methods for meibography images32,33, achieving promising results in terms of algorithm innovation, model accuracy, and parameter diversity. However, these studies have some shortcomings in clinical validation. For example, many studies have not adequately validated the consistency among different annotators, which may lead to biases during model training and testing24,34. Some studies rely on narrow populations or small sample sizes, which may not adequately represent broader populations32,35. The innovation of our research lies in the comprehensive validation process, which included two stages of internal validation and extensive multicenter external validation. To the best of our knowledge, this is the first multicenter clinical validation study of an AI model for automated segmentation of meibomian glands.

Our findings clearly demonstrate the efficacy and reliability of the AI-based model. First, newly trained annotators achieved high consistency compared with expert annotations, underscoring the robustness of the annotation process. This result is crucial, as it ensures the reliability of large-scale data annotation, particularly during the training and validation phases of AI models. The high Pearson correlation coefficients between junior and senior doctors, as well as among junior doctors, demonstrate that the quality of annotations is both reproducible and scalable. Wang et al.36 similarly emphasized the value of newly trained annotators in improving annotation efficiency and accuracy. Some previous studies have not examined the qualifications and consistency of annotators, which may introduce bias into model training and evaluation37,38. This may limit the reproducibility of AI models across different clinical settings.

Another critical finding is the model’s strong performance in segmentation tasks. The UNet-based algorithm achieved an accuracy of 97.49%, an IoU of 81.67%, and a Dice coefficient of 89.72%, significantly outperforming other algorithms reported in prior studies. For instance, the models developed by Zhang et al.34, Wang et al.39, and Steven et al.17 reported lower accuracies (ranging from 62.7% to 92%) and limited generalizability across diverse datasets. Unlike these models, which often require extensive manual preprocessing or are tailored to specific imaging conditions, our algorithm demonstrates robust performance across a wide range of image qualities and clinical settings. This adaptability, combined with its high accuracy, suggests that our model could be effectively integrated into clinical workflows, particularly in complex cases where manual evaluation might be error-prone or inconsistent. Furthermore, while previous studies primarily focused on global gland analysis, our model’s ability to perform detailed, gland-level segmentation provides a more granular assessment, addressing a key limitation of earlier approaches.

For the model’s repeatability evaluation, we employed Bland-Altman analysis on repeated images. The results showed that the AI model demonstrated good repeatability in measuring meibomian gland length and count, with most data points falling within the 95% limits of agreement and an average difference close to zero. Although slight variability was observed in the measurements of gland width and area, the majority of results remained within an acceptable range. This level of repeatability is consistent with prior findings in AI-based meibomian gland segmentation, such as those by Shehzad et al.40, who highlighted the importance of robust and reproducible AI-driven morphometric analyses. Notably, Saha et al.21 also reported the reliability of their model in repeated measurements of meibomian gland area. However, the repeatability assessment of gland length, width, and count has been rarely reported in previous studies. This finding further validates the effectiveness of our model and underscores its potential and importance for application in clinical settings.

In addition, this study compared the performance of the AI-driven evaluation with manual assessments in meibomian gland counting and grading. The AI model demonstrated a strong correlation with manual counts (Spearman correlation coefficient = 0.9334), indicating higher consistency than manual counting methods that are subject to human error and variability. For manual grading, the AI achieved an accuracy of 95.37%, suggesting it can reliably replicate clinician assessments. Notably, our AI model outperformed previous systems like Saha et al.’s deep learning model, which achieved only 59.17% accuracy21. This implies that the AI system can enhance clinical efficiency and reduce variability.

A key strength of this study is the multicenter external validation, which ensures the model’s generalizability across diverse clinical settings. Validation using datasets from four independent centers demonstrated that the AI model maintained robust performance, with accuracy values exceeding 0.97 across all sites. The model consistently achieved high accuracy, sensitivity, and specificity, further supported by decision curve analysis, which confirmed its positive net benefit across a wide range of threshold probabilities. Additionally, strong agreement between manual and automated meibomian gland grading and counting was observed at all centers, with high Kappa values (0.81–0.95) and Spearman’s correlation coefficients (>0.85), reinforcing the model’s reliability. These findings highlight the model’s robustness and suitability for real-world clinical applications, underscoring its potential for widespread adoption in diverse clinical environments.

The proposed AI model demonstrates significant clinical advantages over existing methods and traditional commercial tools. Firstly, it provides objective quantification of meibomian gland morphology, overcoming the inherent limitations of subjective manual assessments. Secondly, through multiparametric analysis of glandular characteristics (including area, density, and tortuosity), it enables precise monitoring of disease progression and therapeutic responses. Thirdly, the multicenter-validated architecture ensures robust performance across diverse clinical settings, facilitating standardized evaluation and personalized treatment planning based on quantifiable morphological changes, thereby potentially improving clinical outcomes.

Despite the promising results, this study is not without limitations. First, the model cannot track changes in individual meibomian glands over time. This impacts clinical applications, particularly in assessing treatment efficacy. Without automated tracking, clinicians need to manually ensure consistent imaging angles and regions for pre- and post-treatment comparisons. Deviations in eyelid positioning or camera angle may introduce variability, complicating accurate comparisons of gland morphology. Future development of gland tracking capabilities is essential to enable reliable, automated longitudinal analysis in clinical practice. Second, all images used in this study were obtained from the Keratograph 5M device. While this ensured data consistency and leveraged a device widely used in clinical practice, our model has not yet been validated on other imaging systems (e.g., LipiView (TearScience, Inc.) and IDRA (SBM Sistemi)). Future studies should assess its performance across different platforms to confirm its broader applicability. Third, due to the incomplete exposure of lower eyelid glands and poor imaging quality, this study did not include the analysis of lower meibomian glands. Future studies should focus on validating the AI model’s performance in assessing lower eyelid morphology to further enhance its clinical applicability.

This study developed and validated a robust AI-driven model for standardized meibography assessment across multicenter clinical settings. The proposed model reduces subjectivity in manual evaluations through automated quantification of glandular morphology, establishes consistent diagnostic criteria via multiparametric analysis, and provides a scalable clinical tool validated across diverse healthcare environments. These advancements address the critical need for objective, reproducible evaluation in MGD management. Future research should explore longitudinal outcome correlations, cross-platform validation, and the integration of lower eyelid meibography to enhance clinical utility.

Methods

Study design and internal dataset

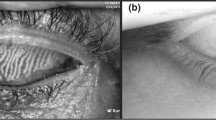

This multicenter, retrospective diagnostic accuracy study was conducted to evaluate the performance of an AI-driven model for meibography image evaluation. The study was conducted at Fujian Provincial Hospital, where meibography images were collected from patients who underwent Keratograph 5M (Oculus, Wetzlar, Germany) examinations between June 2022 and April 2024. The initial dataset consisted of 1280 images from 646 patients. Enhanced contrast mode was used during image acquisition to highlight gland structures, and images were captured in RGB format with a resolution of 1578 × 966 pixels. The Keratograph 5M device was selected for this study due to its standardized imaging protocol and widespread clinical adoption in infrared meibography. While the current implementation is specific to this device, the image processing pipeline was designed with cross-platform adaptability in mind, allowing for potential extension to other imaging systems with similar output characteristics.

To ensure uniformity, only upper eyelid images that fully captured the eyelid area were selected. During preprocessing, images were excluded if they exhibited significant blurriness, extensive eyelash obstruction (>20% gland area obscured), severe glare or reflection, or incomplete eyelid region capture, as these issues impeded accurate gland boundary identification or segmentation.

This study is a single-sample diagnostic trial. Based on previous research, the expected sensitivity was 70%, and specificity was 80%, with an allowable error of 0.1 for both. A two-sided test was required, with α set at 0.05 and power (1-β) at 90%. Using PASS 15 software, the calculated sample size was N = 241, indicating that at least 241 subjects were needed for the study. After manual quality checks, 881 images from 497 patients were retained. The final dataset was deemed sufficiently large to maintain a margin of error of approximately ±3% and ensure an appropriate level of statistical robustness. The study protocol received central ethical approval from the Fujian Provincial Hospital Research Ethics Committee (Ref: K2024-02-001), which was recognized by all participating centers. All procedures complied with the Declaration of Helsinki and local regulatory requirements. As this was a retrospective study, the requirement for informed consent was waived by the ethics committee. Additionally, we ensured that all shared data were fully de-identified and cannot be traced back to individual patients, thereby maintaining confidentiality.
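The margin-of-error figure quoted in the sample-size paragraph above can be checked approximately with a normal-approximation formula. The source does not state how the value was derived, so this sketch assumes the most conservative proportion (p = 0.5) and a 95% confidence level (z = 1.96); the function name is hypothetical, and this does not reproduce the PASS power calculation.

```python
from math import sqrt


def margin_of_error(n, p=0.5, z=1.96):
    """Half-width of a normal-approximation confidence interval for a proportion."""
    return z * sqrt(p * (1 - p) / n)


# 881 retained images, worst-case proportion (assumption).
moe = margin_of_error(881)
print(f"approximate margin of error: {moe:.3f}")
```

Under these assumptions the half-width is a little over 0.03, in line with the approximately ±3% stated in the text.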

Manual annotation of meibomian glands and eyelid regions

Manual annotations of meibomian glands and eyelid regions were performed to validate the AI model. Before annotation, four ophthalmologists (three junior, one senior) underwent standardized training on the LabelMe tool and annotation guidelines to ensure consistency. They independently annotated a randomly selected subset of 25 images (including 5 duplicates) of meibomian glands and eyelid regions. The annotations from the three junior ophthalmologists were compared against those of the senior ophthalmologist, whose annotations served as the gold standard. Inter-annotator agreement was assessed using a Pearson correlation coefficient (>0.85) and Intersection over Union (IoU >90%). Annotators meeting these criteria qualified to label the full dataset. Using the LabelMe tool, qualified annotators independently labeled 1350 images (internal and external datasets) based on standardized criteria, ensuring high repeatability and accuracy.

Manual MG grading and gland quantity assessment

Three junior ophthalmologists assessed the quantity and grading of meibomian glands using infrared images. Gland counting was conducted sequentially from left to right, including atrophic glands as long as they were visible. Gland grading followed the criteria proposed by Pflugfelder et al.41, where gland dropout was classified into four grades: Grade 0 (no gland dropout), Grade 1 (<33% dropout), Grade 2 (33–66% dropout), and Grade 3 (>66% dropout). These manual assessments were used for comparison with the AI-driven model’s output.
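The four-grade scheme described above maps directly onto a small function. This is a sketch only: the function name is hypothetical, the dropout fraction is taken as a given input, and the handling of the exact boundary values (33% and 66% assigned to Grade 2, and Grade 0 requiring exactly zero dropout) is an assumption read from the grade definitions rather than something the text specifies.

```python
def dropout_grade(dropout_fraction):
    """Map a gland dropout fraction in [0, 1] to a grade from 0 to 3."""
    if not 0.0 <= dropout_fraction <= 1.0:
        raise ValueError("dropout_fraction must be between 0 and 1")
    if dropout_fraction == 0:
        return 0  # no gland dropout
    if dropout_fraction < 0.33:
        return 1  # less than 33% dropout
    if dropout_fraction <= 0.66:
        return 2  # 33-66% dropout
    return 3      # more than 66% dropout


for fraction in (0.0, 0.20, 0.33, 0.50, 0.66, 0.80):
    print(fraction, dropout_grade(fraction))
```

In practice an automated system would likely need a small tolerance for Grade 0, since a segmentation-derived dropout fraction is rarely exactly zero.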

AI model development and training

In this study, we used U-Net as the segmentation model for infrared meibomian gland images due to its effectiveness and versatility in medical image segmentation. The detailed network architecture is fully illustrated in Fig. 4. U-Net is a symmetric encoder-decoder architecture with a bottleneck layer in the middle. For meibography segmentation, U-Net progressively extracts features through convolution and pooling in the encoder while reducing spatial resolution. The bottleneck layer captures abstract information, which the decoder then reconstructs using upsampling and skip connections. Convolutional layers fuse multi-scale features, integrating global and local information for precise segmentation. To address gradient smoothness and class imbalance, a hybrid loss function is applied to optimize the model.
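The text does not specify which terms make up the hybrid loss. One common choice in medical image segmentation is a weighted sum of binary cross-entropy and a soft Dice loss, and the sketch below illustrates that combination as an assumption, not as the authors' loss. It is written in plain Python over flat lists for readability; the function names, the `dice_weight` parameter, and the smoothing constant are all hypothetical.

```python
from math import log


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)
        total += -(t * log(p) + (1 - t) * log(1 - p))
    return total / len(probs)


def soft_dice_loss(probs, targets, smooth=1.0):
    """One minus the soft Dice coefficient; less sensitive to class imbalance."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denom = sum(probs) + sum(targets)
    return 1 - (2 * intersection + smooth) / (denom + smooth)


def hybrid_loss(probs, targets, dice_weight=0.5):
    """Weighted sum of BCE (smooth gradients) and Dice (overlap-driven)."""
    return ((1 - dice_weight) * bce_loss(probs, targets)
            + dice_weight * soft_dice_loss(probs, targets))


targets = [1, 0, 1, 0]
good = hybrid_loss([1.0, 0.0, 1.0, 0.0], targets)   # perfect prediction
poor = hybrid_loss([0.6, 0.4, 0.6, 0.4], targets)   # uncertain prediction
print(good, poor)
```

The cross-entropy term gives well-behaved per-pixel gradients, while the Dice term scores overlap with the gland class directly, which is why such combinations are often used when foreground pixels are a minority.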

In the experiment, the 1130 annotated image sets were divided into training, validation, and test sets, along with their corresponding ground truth. The training set is used to tune the model weights, the validation set is used to evaluate the model’s performance during training, and the test set is used to assess the performance of the trained model. During the training process, we use 11 data augmentation techniques, including cropping, flipping, cutting, translation, rotation, equalization, contrast variation, and brightness variation, for online data augmentation to overcome the problem of insufficient training samples. The batch size is set to 8 and the number of epochs to 500. The AI model is optimized using the Adam optimizer with an initial learning rate of 0.0003. To prevent overfitting, the learning rate is halved every 50 epochs. We implement the experiment in Python using the PyTorch framework and all AI models are trained and tested on a single NVIDIA GeForce RTX 3090 Ti GPU.
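The learning-rate schedule described above (initial rate 0.0003, halved every 50 epochs, 500 epochs in total) can be written as a one-line step decay. The function name is hypothetical, and the assumption that the first halving takes effect at zero-based epoch 50 is ours; the text does not name the scheduler that was used.

```python
def learning_rate(epoch, initial_lr=3e-4, step=50, factor=0.5):
    """Step decay: multiply the rate by `factor` once every `step` epochs."""
    return initial_lr * factor ** (epoch // step)


# Print the rate at the start of each 50-epoch block over 500 epochs.
for epoch in range(0, 500, 50):
    print(epoch, learning_rate(epoch))
```

In PyTorch, a schedule of this shape is usually expressed with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.5)`. Over 500 epochs the rate is halved nine times, ending near 5.9e-7.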

Multicenter dataset and external validation

To validate the generalizability of the model, an external validation dataset of 469 infrared meibomian gland images was collected using the Keratograph 5M device from four independent centers: Zhongshan Ophthalmic Center in Guangdong (124 images), Putian Ophthalmic Hospital in Fujian (109 images), Dongguan Huaxia Eye Hospital in Guangdong (100 images), and Zhuzhou City Hospital in Hunan (136 images). The multicenter population geographic distribution is shown in Supplementary Fig. 4. Experienced ophthalmologists manually annotated these images for gland counting and grading, serving as the reference standard.

Model evaluation metrics

The AI-driven model quantitatively evaluates several key parameters of meibomian glands, including gland count, gland dropout grading, gland width, gland length, gland area, gland deformation coefficient, and gland loss ratio. These parameters provide a comprehensive assessment of meibomian gland morphology and are critical for evaluating the severity of MGD. Detailed information on these parameters can be found in our previous study22.

Statistical analysis

All statistical analyses were conducted using R version 4.4.1. Pearson correlation coefficients were used to measure inter-annotator agreement, and scatter plots from linear regression along with confusion matrices were used to compare the AI model’s results with manual annotations for gland counting and grading. IoU scores were employed to evaluate segmentation accuracy. Bland-Altman analysis was applied to assess the repeatability of both manual and AI-based evaluations for gland counting and grading. For key quantitative parameters, descriptive analyses were performed. For external validation, the model’s segmentation performance was assessed using metrics such as Accuracy, Intersection over Union (IoU), Sensitivity, Specificity, Positive Predictive Value, Negative Predictive Value, Positive Likelihood Ratio, Negative Likelihood Ratio, F1 Score, along with ROC and DCA curves. To evaluate the consistency between AI and manual assessments of gland quantity and grading across multiple clinical settings, scatter plots from linear regression analyses and confusion matrices were utilized.

Data availability

The data that support the findings of this study are not openly available due to ethics regulations. However, data access requests for future research will be considered by the corresponding author on a case-by-case basis and subject to relevant institutional and ethical approvals.

Code availability

Access to the code used in this study is available upon reasonable request from the corresponding author.

References

Craig, J. P. et al. TFOS DEWS II definition and classification report. Ocul. Surf. 15, 276–283 (2017).

Zhang, S. & Hong, J. Risk factors for dry eye in Mainland China: a multi-center cross-sectional hospital-based study. Ophthalmic Epidemiol. 26, 393–399 (2019).

Papas, E. B. The global prevalence of dry eye disease: a Bayesian view. Ophthalmic Physiol. Opt. 41, 1254–1266 (2021).

McCann, P. et al. Prevalence and incidence of dry eye and meibomian gland dysfunction in the United States: a systematic review and meta-analysis. JAMA Ophthalmol. 140, 1181–1192 (2022).

Bron, A. J. et al. TFOS DEWS II pathophysiology report. Ocul. Surf. 15, 438–510 (2017).

Swiderska, K., Read, M. L., Blackie, C. A., Maldonado-Codina, C. & Morgan, P. B. Latest developments in meibography: a review. Ocul. Surf. 25, 119–128 (2022).

Benet, D. & Pellicer-Valero, O. J. Artificial intelligence: the unstoppable revolution in ophthalmology. Surv. Ophthalmol. 67, 252–270 (2022).

Gu, C. et al. Application of artificial intelligence system for screening multiple fundus diseases in Chinese primary healthcare settings: a real-world, multicentre and cross-sectional study of 4795 cases. Br. J. Ophthalmol. 108, 424–431 (2024).

Stegmann, H. et al. Automatic assessment of tear film and tear meniscus parameters in healthy subjects using ultrahigh-resolution optical coherence tomography. Biomed. Opt. Express 10, 2744–2756 (2019).

Qu, J. H. et al. Fully automated grading system for the evaluation of punctate epithelial erosions using deep neural networks. Br. J. Ophthalmol. 107, 453–460 (2023).

da Cruz, L. B. et al. Interferometer eye image classification for dry eye categorization using phylogenetic diversity indexes for texture analysis. Comput. Methods Prog. Biomed. 188, 105269 (2020).

Arita, R. et al. Objective image analysis of the meibomian gland area. Br. J. Ophthalmol. 98, 746–755 (2014).

Deng, Y. et al. Quantitative analysis of morphological and functional features in Meibography for Meibomian Gland Dysfunction: Diagnosis and Grading. EClinicalMedicine 40, 101132 (2021).

Koprowski, R. et al. A quantitative method for assessing the quality of meibomian glands. Comput. Biol. Med. 75, 130–138 (2016).

Yu, X. et al. Meibomian gland morphological changes in ocular herpes zoster patients based on AI analysis. Front. Cell Dev. Biol. 10, 1094044 (2022).

Khan, Z. K. et al. Image based analysis of meibomian gland dysfunction using conditional generative adversarial neural network. BMJ Open Ophthalmol. 6, e000436 (2021).

Setu, M. A. K., Horstmann, J., Schmidt, S., Stern, M. E. & Steven, P. Deep learning-based automatic meibomian gland segmentation and morphology assessment in infrared meibography. Sci. Rep. 11, 7649 (2021).

Yeh, C.-H., Stella, X. Y. & Lin, M. C. Meibography phenotyping and classification from unsupervised discriminative feature learning. Transl. Vis. Sci. Technol. 10, 4–4 (2021).

Li, S. et al. Unsupervised learning based on meibography enables subtyping of dry eye disease and reveals ocular surface features. Invest. Ophthalmol. Vis. Sci. 64, 43–43 (2023).

Lai, L. et al. Automatic meibomian gland segmentation and assessment based on TransUnet with data augmentation. In: International conference on intelligent computing, 154–165 (Springer, 2024).

Saha, R. K. et al. Automated quantification of meibomian gland dropout in infrared meibography using deep learning. Ocul. Surf. 26, 283–294 (2022).

Li, L. et al. Effects of orthokeratology lenses on tear film and tarsal glands and control of unilateral myopia in children. Front. Cell Dev. Biol. 11, 1197262 (2023).

Wei, J. et al. Meibomian gland alterations in allergic conjunctivitis: insights from a novel quantitative analysis algorithm. Front. Cell Dev. Biol. 12, 1518154 (2024).

Huang, B. et al. Impacts of gender and age on meibomian gland in aged people using artificial intelligence. Front. Cell Dev. Biol. 11, 1199440 (2023).

Srivastav, S., Hasnat Ali, M., Basu, S. & Singh, S. Morphologic variants of Meibomian glands: age-wise distribution and differences between upper and lower eyelids. Front. Med. 10, 1195568 (2023).

Daniel, E. et al. Association of meibomian gland morphology with symptoms and signs of dry eye disease in the Dry Eye Assessment and Management (DREAM) study. Ocul. Surf. 18, 761–769 (2020).

Maskin, S. L. & Testa, W. R. Infrared video meibography of lower lid meibomian glands shows easily distorted glands: implications for longitudinal assessment of atrophy or growth using lower lid meibography. Cornea 37, 1279–1286 (2018).

Shimizu, E. et al. Artificial intelligence to estimate the tear film breakup time and diagnose dry eye disease. Sci. Rep. 13, 5822 (2023).

Bai, Y., Ngo, W. & Nichols, J. J. Characterization of the thickness of the tear film lipid layer using high resolution microscopy. Ocul. Surf. 17, 356–359 (2019).

Garaszczuk, I. K., Romanos-Ibanez, M. & Consejo, A. Machine learning-based prediction of tear osmolarity for contact lens practice. Ophthalmic Physiol. Opt. 44, 727–736 (2024).

Li, L. et al. Advances in artificial intelligence for meibomian gland evaluation: a comprehensive review. Surv. Ophthalmol. 69, 945–956 (2024).

Dai, Q. et al. A novel meibomian gland morphology analytic system based on a convolutional neural network. IEEE Access 9, 23083–23094 (2021).

Huang, H. et al. in ICASSP 2020-2020 IEEE international conference on acoustics, speech and signal processing (ICASSP), 1055–1059 (IEEE, 2020).

Zhang, Z. et al. Meibomian gland density: an effective evaluation index of meibomian gland dysfunction based on deep learning and transfer learning. J. Clin. Med. 11, 2396 (2022).

Xiao, P. et al. An automated and multiparametric algorithm for objective analysis of meibography images. Quant. Imaging Med. Surg. 11, 1586 (2021).

Wang, X. et al. Accurate label refinement from multiannotator of remote sensing data. IEEE Trans. Geosci. Remote Sens. 61, 1–13 (2023).

Yu, Y. et al. Automatic identification of meibomian gland dysfunction with meibography images using deep learning. Int. Ophthalmol. 42, 3275–3284 (2022).

Lin, J. W. et al. Meibomian glands segmentation in infrared images with limited annotation. Int. J. Ophthalmol. 17, 401–407 (2024).

Wang, J. et al. Quantifying meibomian gland morphology using artificial intelligence. Optom. Vis. Sci. 98, 1094–1103 (2021).

Shehzad, D., Gorcuyeva, S., Dag, T. & Bozkurt, B. Novel application software for the semi-automated analysis of infrared meibography images. Cornea 38, 1456–1464 (2019).

Pflugfelder, S. C. et al. Evaluation of subjective assessments and objective diagnostic tests for diagnosing tear-film disorders known to cause ocular irritation. Cornea 17, 38 (1998).

Ronneberger, O., Fischer, P. & Brox, T. in Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference proceedings, part III 18. 234–241 (Springer, 2015).

Zhou, Z., Rahman Siddiquee, M. M., Tajbakhsh, N. & Liang, J. In Deep learning in medical image analysis and multimodal learning for clinical decision support: 4th international workshop, DLMIA 2018, and 8th international workshop, ML-CDS 2018, held in conjunction with MICCAI, proceedings 4. 3–11 (Springer, 2018).

Qin, X. et al. U2-Net: going deeper with nested U-structure for salient object detection. Pattern Recognit. 106, 107404 (2020).

Oktay, O. et al. Attention U-Net: learning where to look for the pancreas. Preprint at http://arxiv.org/abs/1804.03999 (2018).

Wang, J. et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 43, 3349–3364 (2020).

Cao, Y., Liu, S., Peng, Y. & Li, J. DenseUNet: densely connected UNet for electron microscopy image segmentation. IET Image Process. 14, 2682–2689 (2020).

Cao, H. et al. Swin-Unet: Unet-like pure transformer for medical image segmentation. In: European conference on computer vision. 205–218 (Springer, 2022).

Gu, Z. et al. CE-Net: context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging 38, 2281–2292 (2019).

Dong, Z. et al. MNet: rethinking 2D/3D networks for anisotropic medical image segmentation. In: Thirty-first international joint conference on artificial intelligence (IJCAI-22). 870–876 (International Joint Conferences on Artificial Intelligence Organization, 2022).

Acknowledgements

This work was supported by the NHMRC Investigator Grant (APP1175405, APP2010072, APP2041559), the Natural Science Foundation of Fujian Province, China [grant number: 2024J011019], and the Fujian Science and Technology Innovation Joint Fund Program, China [grant number: 2023Y9346]. L.L. is supported by the China Scholarship Council – University of Melbourne PhD Scholarship and primarily conducts research at the Centre for Eye Research Australia. The scholarship provided support solely for living expenses and did not influence the design or conduct of the study. The Centre for Eye Research Australia receives Operational Infrastructure Support from the Victorian State Government.

Author information

Contributions

Conceptualization: L.L., Y.X., M.H. and Z.Z.; Methodology: L.L., Z.Z., J.L.; Data acquisition: K.X., X.Z., L.Y.L., H.H., L.L.I.U., W.L. and L.L.; Manual segmentation: T.L., K.X., W.L. and L.L.; Data validation: L.L., K.L., T.L., Y.W.; Statistical analysis: K.X., X.S. and L.L.; Draft of the manuscript: K.X., L.L.; Revision of the manuscript: Y.W., J.L., Z.G., L.Y.L., M.H., Y.X. and Z.Z.; Supervision: L.Y.L., Z.G., M.H., Z.Z.; Funding acquisition: L.L., Y.X. All authors reviewed and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Li, L., Xiao, K., Lai, T. et al. Development and multicenter validation of an AI driven model for quantitative meibomian gland evaluation. npj Digit. Med. 8, 403 (2025). https://doi.org/10.1038/s41746-025-01753-5

DOI: https://doi.org/10.1038/s41746-025-01753-5