Abstract

There are no prospective clinical studies evaluating artificial intelligence implementation for glaucoma detection in real-world settings. We developed an automated retinal photography and AI-based screening system and prospectively assessed its accuracy, feasibility, and acceptability in Australian general practice (GP) clinics. Adults aged 50 years or older were recruited during routine GP visits, with retinal images captured using an automated fundus camera and analysed by the AI system for glaucoma risk classification. Of 414 participants, 277 (66.9%) had analysable images, with a total of 483 eyes included. The AI system achieved an AUROC of 0.80, sensitivity of 65.0%, and specificity of 94.6%. Among 161 previously undiagnosed patients, 18 (11.2%) were identified as having referable glaucoma. Patient feedback was positive, and clinic staff supported AI-assisted screening to enhance glaucoma care. Despite challenges such as lower sensitivity and image acquisition limitations, the system shows promise for opportunistic screening in primary care settings.

Introduction

Glaucoma is the leading cause of irreversible blindness and a significant public health concern globally1,2,3, with its prevalence increasing steadily due to aging populations and other risk factors4. Despite its prevalence and potentially devastating consequences if left untreated, glaucoma often goes undiagnosed, particularly in its early stages. Globally, over 70% of people with glaucoma remain undiagnosed5. This underlines the critical need for effective and accessible screening methods to detect glaucoma early and prevent irreversible vision loss. The impact of undiagnosed glaucoma is not limited to individual patients but extends to the healthcare system and society as a whole, highlighting the need for comprehensive screening strategies.

The importance of glaucoma screening is further underscored by the challenges associated with its detection. Traditional screening methods, while effective to some extent, often face limitations in terms of accessibility, cost, and resource requirements6. For example, in Australia, as well as in many other Western countries such as the USA, UK, and Canada, there are theoretically two primary care models for patients with eye problems, one involving General Practitioners (GPs) and the other optometrists. However, in practice, GPs typically lack both the equipment and training necessary to diagnose glaucoma7. This gap can lead to two critical issues: missed diagnoses (effectively 0% sensitivity) or inaccurate referrals, which may overwhelm specialist services with false positives (low specificity). Both outcomes delay care for those who truly need it. In Australia, for instance, the median wait time for a public hospital ophthalmology appointment is currently 400 days8.

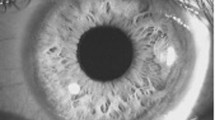

Furthermore, glaucoma diagnosis is like an American breakfast—composed of multiple essential tests that together form a complete picture. A key component is evaluating the optic disc and retinal nerve fibre layer (RNFL). Characteristic changes, such as increased cup-to-disc ratio (CDR), rim notching, and RNFL thinning, can appear before visual field defects, making fundus image interpretation crucial. However, detecting these signs can be challenging, especially in primary care settings where advanced imaging tools like OCT may not be available.

Artificial intelligence (AI) has emerged as a promising tool in the field of ophthalmology, offering the potential to revolutionize glaucoma detection9,10,11,12,13,14,15,16,17. AI algorithms can analyse large volumes of ocular data with remarkable speed and accuracy, aiding in the early identification of glaucomatous changes that may escape human detection18. Combining AI with autonomous fundus camera technologies in glaucoma screening holds immense promise for enhancing diagnostic accuracy, streamlining workflow, and improving patient outcomes.

However, there are no prospective clinical studies that validate or evaluate the real-world accuracy, feasibility and acceptability of glaucoma screening involving AI. Furthermore, the need for clinicians to operate the AI or related cameras presents a challenge in many low-resource settings. The successful implementation of AI-assisted screening models necessitates more than just technological advancements. Engaging with end-users, including patients, clinicians, and organizational stakeholders, is crucial for understanding their preferences, needs, and concerns regarding AI technology in healthcare. By incorporating end-user feedback into the implementation process, novel AI-assisted screening models can be tailored to meet the specific requirements of diverse healthcare settings and populations, thereby maximizing their acceptance and utility.

To address these gaps, we have developed a fully autonomous hardware-software solution for glaucoma screening. In this study, we first assessed the real-world performance and accuracy of the AI algorithm in Australian GP settings. We then assessed the AI’s contribution to the healthcare system by quantifying the number of diagnoses missed by the existing healthcare system. Secondly, we investigated the feasibility and acceptability of this AI glaucoma opportunistic screening system among patients. Thirdly, we gathered clinician feedback on this AI screening system.

Through comprehensive evaluation of the real-world performance, feasibility, and end-user experiences of this novel model, we endeavour to refine AI-driven glaucoma screening and pave the way for its widespread adoption in clinical practice.

Results

Success rate and test duration

Among the eligible participants invited to participate in the study from the two study sites, 414 patients provided consent and underwent the automated eye screening. Of those, 277 (66.9%) had images captured from at least one eye and were included in the person-level analysis. A total of 483 images were successfully captured and were included in the image-based analysis. The reasons for unsuccessful image capture included small pupil size, patients being unable to keep their eyes still, and patients not cooperating with alignment. The average screening duration was 4.3 min (SD = 2.1 min) per person.

Patient characteristics

Table 1 shows the characteristics of those included versus those excluded from the analyses for accuracy, nMCC value, sensitivity, specificity and AUROC (Area Under the Receiver Operating Characteristic curve). The patients who were excluded because they did not have a gradable image from at least one eye were significantly older (71.4 ± 9.58 vs. 64.8 ± 8.47 years, p < 0.0001), more likely to have a self-reported existing glaucoma diagnosis (10/137 (7.30%) vs. 6/277 (2.17%), p = 0.012), and more likely to have a self-reported existing cataract diagnosis (56/137 (40.9%) vs. 53/277 (19.1%), p < 0.001).
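The two comparisons of proportions above can be roughly re-derived from the counts quoted in the text. The sketch below assumes a Pearson chi-square test without continuity correction; this is an assumption, because the test actually used is not stated here, so the resulting p values need not match the reported ones exactly. The function name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and its p value for 1 degree of freedom.
    """
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom, the upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Counts quoted in the text: excluded group (n = 137) vs. included group (n = 277).
# Assumed test choice; the authors may have used a different one.
glaucoma_stat, glaucoma_p = chi2_2x2(10, 137 - 10, 6, 277 - 6)
cataract_stat, cataract_p = chi2_2x2(56, 137 - 56, 53, 277 - 53)

print(f"self-reported glaucoma: chi2 = {glaucoma_stat:.2f}, p = {glaucoma_p:.4f}")
print(f"self-reported cataract: chi2 = {cataract_stat:.2f}, p = {cataract_p:.2g}")
```

Under this assumed test, both differences fall below the 0.05 significance threshold used in the study, in line with the direction of the reported results.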

Real-world accuracy of the algorithm at person-level

The AUROC, sensitivity, and specificity of the AI system for referable glaucoma across both clinics were 0.80, 65.0%, and 94.6% respectively (Table 2). In total, there were 21 disagreements (including 2 which were manually graded as ungradable and 4 which were AI ungradable) between manual grading and AI grading. Ten of these were false positives and there were 5 false negatives (Table 3).

Furthermore, through person-level analysis, the AI achieves a true negative rate of 0.95, a true positive rate of 0.65, a false positive rate of 0.05, an nMCC of 0.76, and an accuracy of 0.92 in diagnosing referable glaucoma cases compared to manual grading by eyecare providers. These results indicate that the AI model performs with high accuracy in identifying referable glaucoma cases when compared to manual grading by eyecare providers. Specifically, it correctly identifies non-glaucoma cases (true negatives) 95% of the time and detects glaucoma cases (true positives) 65% of the time. The false positive rate is low at 5%, suggesting a relatively low incidence of misidentifying non-glaucoma cases as glaucoma. The nMCC value of 0.76 indicates a strong overall correlation between the AI’s predictions and manual grading, with values closer to 1 indicating a perfect correlation and values close to -1 indicating a strong inverse relationship between predicted and actual outcomes. The overall accuracy of 92% underscores the model’s effectiveness in making accurate diagnoses based on the provided images. These findings suggest that the AI system has potential as a reliable tool to assist non-eyecare practitioners such as GPs in screening for referable glaucoma cases.
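The person-level metrics above all derive from a single 2×2 confusion matrix. The following sketch shows the arithmetic under stated assumptions. The counts are hypothetical, chosen only to be consistent with the reported rates, and are not taken from Table 3. nMCC is assumed to be the common normalisation (MCC + 1) / 2, which maps MCC from the range −1 to 1 onto 0 to 1. The `single_point_auroc` entry treats the AI output as one binary decision, in which case the trapezoidal AUROC reduces to the mean of sensitivity and specificity. The function name is hypothetical.

```python
import math


def screening_metrics(tp, fn, fp, tn):
    """Derive screening metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)          # true positive rate
    specificity = tn / (tn + fp)          # true negative rate
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "false_positive_rate": 1 - specificity,
        "accuracy": accuracy,
        "mcc": mcc,
        # Assumed normalisation: rescales MCC from [-1, 1] to [0, 1].
        "nmcc": (mcc + 1) / 2,
        # AUROC of a classifier with a single operating point.
        "single_point_auroc": (sensitivity + specificity) / 2,
    }


# Hypothetical counts for 277 people (NOT the study's Table 3).
metrics = screening_metrics(tp=13, fn=7, fp=14, tn=243)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts the sketch returns values close to those reported at person level, which shows how a modest MCC can still correspond to an nMCC near 0.76 under the assumed normalisation.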

Real-world accuracy at image level

The AUROC, sensitivity, and specificity of the AI system for referable glaucoma across both clinics were 0.78, 71.1%, and 94.4% respectively (Table 2). In total, there were 39 disagreements (including 3 which were manually graded as ungradable and 8 which were AI ungradable) between manual grading and AI grading. Seventeen of these were false positives and there were 11 false negatives (Table 3).

Furthermore, through image-based analysis, the AI achieves a true negative rate of 0.94, a true positive rate of 0.61, a false positive rate of 0.06, an nMCC of 0.75, and an accuracy of 0.92 in diagnosing referable glaucoma cases compared to manual grading by eyecare providers. These results indicate that the AI model performs with high accuracy in identifying referable glaucoma cases when compared to manual grading by eyecare providers. The nMCC value of 0.75 indicates a strong overall correlation between the AI’s predictions and manual grading.

Contribution of AI to undiagnosed glaucoma in the current health system

Among 161 people visiting GP clinics who had seen an eyecare provider in the past 12 months and had not previously been diagnosed with glaucoma, the AI detected referable glaucoma in 18 (11.2%). These participants were given a referral recommendation, based on the AI glaucoma grading, to consult an eyecare provider to confirm the findings and to initiate management as required. With a minimum of three attempts, we managed to make contact with 16 of the referred patients. Among them, only 6 (37.5%) had adhered to the referral recommendation, and of those 6, 1 (16.7%) reported a positive diagnosis for glaucoma.
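The referral cascade described above can be retraced step by step from the quoted counts. This is a minimal sketch; the variable names and the `pct` helper are illustrative and not part of the study's analysis code.

```python
def pct(numerator, denominator):
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Counts quoted in the text.
previously_undiagnosed = 161   # seen by an eyecare provider in past 12 months, no glaucoma diagnosis
ai_referable = 18              # flagged as referable glaucoma by the AI
contacted = 16                 # referred patients reached at follow-up
adhered = 6                    # attended an eyecare provider as recommended
confirmed = 1                  # reported a confirmed glaucoma diagnosis

cascade = {
    "AI-referable among previously undiagnosed": pct(ai_referable, previously_undiagnosed),
    "adherent among contacted": pct(adhered, contacted),
    "confirmed among adherent": pct(confirmed, adhered),
}

for step, value in cascade.items():
    print(f"{step}: {value}%")
```

Each percentage uses the preceding step as its denominator, so the final confirmation rate rests on only six adherent patients and should be read with that small denominator in mind.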

Real-world feasibility and acceptability

All 414 eligible consenting patients took the post-use feedback survey (Fig. 1), with the majority of patients agreeing or strongly agreeing that the AI Workstation was easy to understand and comfortable to use, that they were satisfied overall with its application, and that they would like to use it again and would recommend it to a family member or friend.

Survey results for the artificial intelligence (AI) Workstation from 414 participants. The majority of patients agreed or strongly agreed that the AI Workstation was easy to understand and comfortable to use, that they were satisfied overall with its application, and that they would like to use it again and would recommend it to a family member or friend.

A qualitative component of the study was conducted involving individual interviews with 17 staff members, including 10 GPs, 3 clinic managers and 4 other clinic staff, from two GP clinics. The interviews, lasting between 10 and 15 minutes, aimed to gather feedback on clinical and patient adoption and the workflow of a new AI technology.

Feedback from the staff indicated a positive reception towards the AI Workstation as a beneficial diagnostic tool that could enhance patient care. Findings showed that decision-making regarding the integration of the workstation into clinical workflow primarily involved practice managers and head medical directors, with GPs being consulted but not directly involved in the final decision-making process. Successful integration relied heavily on the willingness of practice managers to incorporate the AI Workstation into staff and clinical workflows. Furthermore, it was suggested that the AI Workstation should demonstrate significant advantages over traditional examination methods to justify any additional costs to patients. Clinicians stressed the importance of the AI Workstation being an automated solution for healthcare, as clinics often lack sufficient support staff to assist with its use.

Staff members acknowledged that patient adoption of the AI Workstation would require active encouragement from both clinical and non-clinical staff. They highlighted the expectation among patients for the service to be bulk-billed under Medicare (Australia’s universal healthcare system which covers all Australian citizens, Australian permanent residents, New Zealand citizens, temporary residents covered by a ministerial order, and citizens and permanent residents of Norfolk Island, Cocos Islands, Christmas Island or Lord Howe Island)19, and any out-of-pocket expenses were likely to deter usage. Recommendations for increasing patient adoption included clearer signage and providing training materials to staff about the workstation’s benefits to patient care. It was suggested that the workstation should be actively promoted to patients and made accessible through online bookings. Additionally, recommendations included the necessity of having a dedicated staff member to assist patients with the workstation and implementing patient screening prior to appointments.

Discussion

Our study adopts a mixed methods approach to prospectively evaluate the performance and feasibility of an AI-assisted glaucoma screening model in GP clinics. Notably, this study stands as the first globally to investigate the real-world performance and user experience of AI in glaucoma screening from the perspectives of patients, clinicians, and organizational stakeholders20. Prior investigations into AI systems for glaucoma detection, based on retrospective datasets, have demonstrated high levels of accuracy9,10,11,12,13,14,15. However, assessing real-world performance is vital for evaluating the impact of factors such as image quality, camera models, system usability, workflow, and unanticipated practical challenges in clinical settings.

This AI algorithm was developed using colour fundus photos from a population of Chinese people. While maintaining adequate performance, we highlight that the sensitivity (65.0%) and specificity (94.6%) for referable GON in this Australian population varied from those (sensitivity = 95.6%, specificity = 92.0%) reported in the original population16. The substantial drop in sensitivity from 95.6% in controlled lab settings to 65.0% in real-world practice underscores the critical importance of validating AI models outside ideal conditions. This discrepancy reflects the inherent challenges of real-world implementation, including variability in image quality and clinical workflows, as well as differences in ethnic, demographic and ocular characteristics of the screened population. These factors may not be fully represented in the curated datasets typically used in algorithm development. Our findings highlight the need for context-specific model retraining and system refinement to ensure reliable performance in diverse, real-world environments.

Our findings suggest that this AI tool may be more suitable for population screening and opportunistic screening in non-eyecare settings rather than eyecare settings because it demonstrated a pattern of performance characterised by low sensitivity, high specificity, and a low false positive rate. These results contrast significantly with established trends in glaucoma detection, where high sensitivity and relatively lower specificity often lead to increased false positive rates which burden tertiary hospitals and ophthalmologists with unnecessary referrals21. However, the mediocre sensitivity (and more false negatives) observed raises concerns about its effectiveness in detecting glaucoma in settings inside an eyecare provider’s clinic where comprehensive diagnosis using multi-factorial assays (see Supplementary Fig. 1) is crucial for early intervention and management.

We recommend several strategies for improving future iterations of the AI system. These include retraining the algorithm with more diverse and locally relevant datasets, enhancing image quality through preprocessing techniques, and adopting advanced imaging hardware better suited for older or difficult-to-image patients. While not feasible in the current screening settings, future systems may benefit from multimodal AI models incorporating clinical risk factors, as well as protocol refinements such as allowing multiple image capture attempts and applying age- or risk-stratified thresholds. Together, these algorithmic, hardware, and workflow improvements are expected to boost sensitivity, particularly for early or subtle cases, while maintaining the high specificity required for effective screening in non-specialist settings.

In non-eye care settings, where access to specialist eye professionals is limited, AI offers a promising solution to expand screening capacity. The intended use for this AI system is opportunistic and population-based screening in primary care and community health settings, where identification of individuals with glaucoma can enable timely intervention and improved outcomes. The high specificity and low false positive rate observed in this study suggest that the algorithm could help reduce unnecessary referrals, easing pressure on overstretched tertiary eye care services7. For instance, a recent study in Australia revealed that the median wait time for public hospital ophthalmology appointments was 400 days8. By streamlining referral pathways, the AI tool can ensure specialist care is prioritised for those who need it most. Moreover, this AI system adds value by detecting cases that GPs would otherwise entirely miss due to their lack of equipment or training to detect GON. The nMCC value of 0.76 further supports the strong correlation between AI predictions and manual grading, reinforcing the reliability of AI-driven assessments.

Our findings showed that patients excluded due to the absence of gradable photos from at least one eye were significantly older and exhibited a higher prevalence of pre-existing diagnoses of glaucoma and cataracts. These associations are anticipated, given that both glaucoma and cataracts are age-related chronic conditions. Advanced age is often accompanied by physiological changes that may hinder the successful capture of fundus photos. Older individuals may experience decreased pupil dilation and increased lens opacity, rendering fundus photography more challenging. Moreover, fundus images obtained from patients with cataracts present additional difficulties for both AI algorithms and human specialists in interpretation. The presence of opacities and distortions caused by cataracts can obscure critical features of the retina, complicating accurate analysis. Consequently, these challenges underscore the importance of refining imaging techniques and developing specialised algorithms tailored to address the unique characteristics of elderly patients with cataracts. Such advancements are essential for optimising the diagnostic accuracy and effectiveness of screening programs for age-related ocular pathologies. Furthermore, while excluded participants had a higher rate of self-reported glaucoma, it remains unclear whether their exclusion led to over- or underestimation of model performance, as the detectability of these cases is unknown. Importantly, AI-ungradable images were conservatively classified as referable, in line with current clinical practice, which helps mitigate the risk of inflating performance metrics.

The AI detected referable glaucoma in 11.2% of the patients visiting GP clinics who had seen an eyecare provider in the past 12 months and had not previously been diagnosed with glaucoma. This rate is slightly lower than the rate of glaucoma suspects previously reported in a national population-based study (15.5%)4. More crucially, this indicates that the AI’s contribution to the healthcare system is to screen those who have undiagnosed glaucoma and create a pathway for diagnosis and management. Unfortunately, only 6 of the 16 referred patients we were able to contact adhered to the referral. Future studies need to identify the factors that contributed to this lack of adherence. Unsuccessful image capture and non-adherence to referral are major barriers to glaucoma detection and diagnosis.

The confirmation rate of glaucoma among adherent patients (1 confirmed diagnosis among 6 referred glaucoma suspects, or 16.7%) underscores the importance of clinical evaluation and highlights the role of AI as a screening tool rather than a definitive diagnostic tool. This performance is comparable to existing human screening programs in Victoria, Australia, which showed a confirmation rate of around 1/5 or 20%4. Integrating AI systems into primary care settings for glaucoma screening shows promise in identifying potentially undiagnosed cases. However, optimizing referral pathways and patient education strategies is crucial to enhance adherence and ensure timely diagnosis and management.

The findings from the qualitative component suggest that successful adoption of the AI Workstation in GP clinics relies on effective integration into clinical workflows, active promotion to patients, and addressing patient concerns regarding cost and usability. Furthermore, recommendations for enhancing both clinical and patient adoption include dedicated staff support and clear communication of the AI Workstation’s benefits.

Clinicians and screening staff reacted positively to the AI’s implementation. To enhance adherence and maximise public health benefits, future integrations of AI-based screening in routine clinical settings must address the referral pathways for identified glaucoma cases. Integration into the existing clinical process of GPs is paramount when contemplating the diagnosis of glaucoma. As previously highlighted in the Introduction, GPs in Australia, as well as in many other countries, often lack the requisite training and/or equipment for diagnosing glaucoma. To address this challenge, we have proposed a pathway designed to enable GPs to identify and promptly refer suspected cases of glaucoma to eyecare providers with ease and efficiency (Supplementary Fig. 1)7. Furthermore, as revealed by our study implementation and by staff interviews, while the system was designed to be autonomous, some participants, particularly older adults, required minimal assistance. This unexpected finding has important implications for AI deployment.

Our study has several strengths. First, instead of reporting the performance of AI under laboratory settings using retrospective data, as have all existing studies of AI in glaucoma published so far, our mixed methods study design offered fresh insights into the real-world performance of an AI-supported glaucoma screening model in primary care settings. Second, our AI system employed an automated solution, eliminating the constant need for staff to take retinal photos. This aspect is particularly valuable for screening in rural and remote areas where internet access may be limited. Third, the prospective mixed methods approach broadened our ability to explore and understand both patient and staff perspectives on this screening model.

This study also has several limitations that must be discussed. First, the discrepancy between our required and actual sample sizes for participants with referable GON may impact the validity and generalizability of our findings. Initially, we calculated a need for 494 eyes or at least 247 people to achieve adequate statistical power. However, although we successfully included 277 people in our study, only 483 eyes could be used for analyses, and only 47 of these eyes (35 + 12) had referable glaucoma. The number of referable glaucoma eyes fell short primarily due to the real-world population’s characteristics and imaging challenges, with only 66.9% of participants having had successful images captured from either eye. While this represents a limitation, the 66.9% capture rate also reflects real-world challenges in imaging older adults or those with cataract, small pupils or limited cooperation. Recognising and quantifying this gap is in itself valuable, highlighting the need for improved imaging hardware and AI algorithms tailored to these populations. We are currently developing such solutions and will report them in future publications. Second, we did not utilise optical coherence tomography (OCT)22,23 or consider functional loss, such as that assessed through visual field testing24, as part of the reference standard for glaucoma diagnosis. The absence of these gold standard techniques may skew the true performance evaluation of glaucoma detection. It is important to recognise that diagnosing glaucoma is multifaceted, involving multiple tests and thorough patient history7. However, the objective of this AI-supported system is to serve as an opportunistic screening tool rather than a comprehensive diagnostic instrument; a full glaucoma diagnosis necessitates professional assessment by an eye care professional. Because this was a real-world study in GP settings, neither visual field testing nor OCT was available. This reflects typical constraints in non-eye-care environments and reinforces the value of retinal photos as a first-line screening tool where more advanced equipment is inaccessible. Our study also revealed low adherence rates to specialist follow-up, which would limit the feasibility of specialist diagnosis using all glaucoma diagnostic procedures, thus providing an important real-world finding relevant to future AI screening implementation strategies. Third, this study relies on a glaucoma model previously trained on a Chinese population. Architectural advancement, domain adaptation, or re-training using fundus image datasets from an Australian population would strengthen performance, and we are actively working on such efforts in parallel research. Nevertheless, evaluating cross-ethnic generalisability is valuable in its own right and offers insights into the robustness of AI models across populations. Lastly, while cost-effectiveness analysis was not part of this study, such an analysis using comprehensive decision-analytic Markov models is reported in a separate paper currently under peer review and will be critical for evaluating system-wide adoption.

In sum, real-world deployment of AI-assisted glaucoma screening in GP settings demonstrated encouraging performance and was well-received by both patients and staff. While challenges such as lower sensitivity and image acquisition limitations remain, these findings underscore the potential for AI to support opportunistic screening in non-specialist settings. With further refinement and validation, such systems could help improve access to early glaucoma detection and support more efficient use of specialist care.

Methods

This prospective observational study employed a mixed methods approach (using both quantitative and qualitative methods) to evaluate the accuracy, feasibility, and acceptability of a novel AI-based glaucoma screening tool (hereafter, the AI Workstation).

The study protocol was approved by the Human Research Ethics Committee of the St. Vincent’s Hospital Melbourne (HREC/64640/SVHM-2020) and adhered to the tenets of the Declaration of Helsinki with all participants providing informed consent.

Participants

This study involved patients attending two general practice (GP) clinics in the state of Victoria, Australia, between August 2021 and June 2022. Patients were recruited while they were waiting for their physician consultation. We included all patients aged 50 years or older. The screening AI Workstation at these sites included an automated fundus camera for self-imaging mounted on a kiosk with access to an AI analytical algorithm (Figs. 2, 3). Interviews were conducted among clinicians and other staff members from the 2 GP clinics.

AI enabled automated system for glaucoma screening

The AI algorithms employed in this system were initially trained in 2016, incorporating the International Society of Geographical and Epidemiological Ophthalmology (ISGEO) category 2 definition for glaucoma alongside classical glaucoma signs such as presence of disc hemorrhage and retinal nerve fiber layer (RNFL) defects (refer to Supplementary Table 1)16. Notably, only structural features identified in monoscopic fundus photographs were considered for analysis. The development and validation procedures for each algorithm have been documented in prior literature, with detailed references provided herein16,25,26. Referable glaucoma was defined as either definite glaucoma or glaucoma suspicion. Under controlled laboratory conditions, this AI model demonstrated a sensitivity of 95.6%, specificity of 92.0%, accuracy of 92.9%, and an area under the curve of 0.986 for diagnosing glaucomatous optic neuropathy (GON)16. Upon completion of the grading process, the images were randomly assigned to either a training or validation dataset. Deep learning models were subsequently developed for each disease entity, all leveraging the Inception-v3 architecture.

In this study, we used new fundus images acquired from an Australian population to validate the original AI algorithm. Furthermore, we designed an AI-enabled automated fundus camera (Fig. 2) with audio prompts that guide the patient into position looking at a fixation target, allowing the camera to automatically focus and capture retinal images. The sensors embedded in the camera detect the position of the eyes and give voice prompts until the patient correctly locates the target. Once correctly focused and aligned, the fundus camera automatically captures the image of the left eye first, followed by the right eye. The patient receives voice prompts to move the forehead up or down, to look straight, and to close and open the eyes as appropriate to enable image acquisition. Captured retinal photographs are immediately transferred to the AI-based glaucoma assessment. A QR code is printed from the system and, upon scanning the QR code, the patient’s portable device (such as a smartphone or iPad) receives an electronic grading report (Fig. 3).

Testing protocol

Prior to the initiation of patient recruitment, research assistants underwent individualized training sessions conducted by the same trainer.

Data pertaining to general health, previous ocular history, and duration since the last eye examination were systematically gathered from all eligible and consenting participants using a standardised questionnaire. Subsequently, a single 50-degree (macula-centred), non-dilated, monoscopic, colour retinal image was captured for each eye by the fundus camera (RetiCam 3100 Non-mydriatic Automatic Fundus Camera) (Fig. 2, the authors have obtained written consent to publish the image). In cases where initial attempts resulted in suboptimal image quality, additional imaging attempts (up to 3 times) were made. Captured retinal images were immediately graded by the AI system, and a printout with QR code generated by the system was given to the participant. Upon scanning the QR code, the participant’s mobile device received an electronic grading report (Fig. 3), inclusive of glaucoma status.

Subsequent to the automated screening process, participants completed a modified iteration of the Client Satisfaction Questionnaire (CSQ-8) to elicit feedback on overall satisfaction and the likelihood of utilising the AI screening service again27. Staff from the two GP clinics underwent a short interview about the AI screening service.

Participant referral

Patients for whom the AI system identified high or medium risk were advised to see an eye specialist (optometrist or ophthalmologist) at the discretion of the physician. The printout with QR code was sent with the patient during their doctor consultation. The results could also be accessed via an online portal by the physician.
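The referral rule described above can be sketched as a small decision function. This is an illustration under stated assumptions, not the system's actual implementation. The output labels ("high", "medium", "low", "ungradable") are hypothetical names for the AI's categories. Treating AI-ungradable images as referable follows the rule stated in the Discussion. Flagging a person when either eye is referable is an assumption consistent with the false negative definition used for reference grading.

```python
# Hypothetical labels for the AI output; the real system's category names may differ.
REFERABLE_OUTPUTS = {"high", "medium", "ungradable"}


def eye_is_referable(ai_output: str) -> bool:
    """Return True if a single eye's AI output should trigger a referral recommendation."""
    return ai_output.lower() in REFERABLE_OUTPUTS


def person_is_referable(eye_outputs) -> bool:
    """Assumed person-level rule: refer if any imaged eye is referable."""
    return any(eye_is_referable(output) for output in eye_outputs)


# Example usage with made-up outputs for three participants.
examples = {
    "participant_a": ["low", "low"],
    "participant_b": ["low", "medium"],
    "participant_c": ["ungradable"],   # only one eye imaged
}
for participant, outputs in examples.items():
    print(participant, "refer" if person_is_referable(outputs) else "no referral")
```

In the study the final decision to refer remained at the discretion of the physician, so a rule like this would only produce the recommendation shown on the grading report.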

Reference standard grading

To determine the accuracy of the AI screening model, all retinal images were manually and independently graded for referable glaucoma by two Australian Health Practitioner Regulation Agency (AHPRA) registered optometrists (therapeutically qualified for glaucoma detection and management). Any disagreements were adjudicated by an ophthalmologist licensed in Australia. All graders underwent standardised training on the grading criteria (Supplementary Table 1). All graders were masked to AI outputs. Participants who were subsequently identified as false negatives (i.e. no glaucoma reported by the AI system for either eye, but glaucoma subsequently detected on manual grading) were contacted via telephone and advised to visit an eyecare provider.

Participant follow-up

Participants who had received a referral were contacted by phone call and/or email three months after the screening session to assess their adherence to the referral and to gather details of their consultation with an eyecare provider. A minimum of three contact attempts were made to obtain this information.

Sample size calculation

We performed sample size calculations designed for use in diagnostic accuracy studies28. We assumed an AI system sensitivity of 95.6%16 for detecting referable GON and set a minimum acceptable lower confidence limit of 85%. To achieve 95% confidence and 80% power, we required 76 eyes with referable GON. Assuming the prevalence of glaucoma suspect to be 15.4%4, this resulted in a total required sample of 494 eyes, or at least 247 people.
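The final scaling step of this calculation can be reproduced with simple arithmetic. The Python sketch below takes the 76 eyes with referable GON as given (that figure comes from the cited method and is not re-derived here) and divides it by the assumed prevalence. The function and variable names are illustrative, and the conversion of two eyes per person is an assumption implied by the stated totals.

```python
import math


def total_eyes_required(positive_eyes_needed: int, prevalence: float) -> int:
    """Eyes to screen so the expected number of positive eyes meets the target."""
    return math.ceil(positive_eyes_needed / prevalence)


# 76 eyes with referable GON and 15.4% prevalence are the values stated in the text.
eyes = total_eyes_required(76, 0.154)

# Assumption: each participant contributes two eyes.
people = math.ceil(eyes / 2)

print(f"eyes required: {eyes}, people required: {people}")
```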

End-user feedback

A survey was conducted among the patient participants about their experience, and interviews were held with staff members from the GP clinics regarding clinical adoption and participant adoption of this AI tool.

Data analysis

All study data were manually entered and managed using Research Electronic Data Capture (REDCap; Nashville, TN, USA), which was hosted at the Centre for Eye Research Australia (CERA). De-identified data were downloaded from REDCap and imported into Stata/IC version 17 (College Station, Texas, USA) for statistical analysis. A p value of <0.05 was defined as statistically significant.

Participants with missing reference standard grading (due to bilateral missing images, or both eyes ungradable on manual grading), and those with missing/ungradable images from one eye and no referable glaucoma detectable in the opposite eye on manual grading were excluded from analysis. Participants with missing/ungradable images from one eye and referable glaucoma detected in the fellow eye on manual grading were included in the analysis and classified as having referable glaucoma. As specified a priori, participants with retinal images from either eye reported to be ungradable by the AI system were categorised as having a positive index test for referable glaucoma, to reflect current Australian referral guidelines which state that patients should be referred if the fundus is not able to be viewed during screening29. Gradability was assessed independently by both human graders and the AI using predefined criteria. Some overlap existed, but gradability status was determined separately by each party.
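These inclusion and classification rules can be written out as a small decision function. The following Python sketch is one reading of the rules as stated, not the study's analysis code. The label strings and function names are hypothetical, a missing image is treated the same as an ungradable one, and treating a participant as positive when either gradable eye is referable is an assumption.

```python
from typing import Optional

REFERABLE = "referable"
NON_REFERABLE = "non_referable"
UNGRADABLE = "ungradable"  # assumption: also used for a missing image


def reference_status(left: str, right: str) -> Optional[bool]:
    """Participant-level reference standard from manual grading.

    Returns True or False for referable glaucoma, or None when the
    participant is excluded from the analysis.
    """
    eyes = (left, right)
    if REFERABLE in eyes:
        # Referable glaucoma in at least one eye, even if the fellow eye
        # is missing or ungradable: included and classified as referable.
        return True
    if UNGRADABLE in eyes:
        # No referable eye and at least one eye that could not be graded
        # (covers both-eyes-ungradable and one-eye-ungradable cases): excluded.
        return None
    return False


def index_test_positive(left: str, right: str) -> bool:
    """Participant-level AI result; an AI-ungradable eye counts as positive."""
    eyes = (left, right)
    return UNGRADABLE in eyes or REFERABLE in eyes


print(reference_status(UNGRADABLE, REFERABLE))         # included, referable
print(reference_status(UNGRADABLE, NON_REFERABLE))     # excluded
print(index_test_positive(NON_REFERABLE, UNGRADABLE))  # positive index test
```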

Sensitivity, specificity, area under the receiver operating characteristic curve (AUROC), positive predictive value (PPV) and negative predictive value (NPV) were estimated with 95% exact binomial confidence intervals (CI) to evaluate diagnostic accuracy. Normalised Matthews correlation coefficient (nMCC) was also computed for potentially unbalanced data due to low prevalence of glaucoma30.
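As an illustration of how these measures relate to a 2×2 table, the Python sketch below computes the proportions with exact (Clopper-Pearson) binomial intervals and rescales MCC to the unit interval as nMCC = (MCC + 1) / 2. It is a minimal standard-library sketch, not the Stata analysis used in the study. The function names are hypothetical, the counts in the example are invented for demonstration and are not study data, and AUROC is omitted because it requires continuous risk scores rather than counts.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p); adequate for n up to roughly 1000."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def exact_ci(x: int, n: int, alpha: float = 0.05) -> tuple:
    """Clopper-Pearson interval, found by bisection on the binomial CDF."""

    def solve(k: int, target: float) -> float:
        # binom_cdf(k, n, p) decreases as p increases, so bisect on p.
        lo, hi = 0.0, 1.0
        for _ in range(60):
            mid = (lo + hi) / 2
            if binom_cdf(k, n, mid) > target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    lower = 0.0 if x == 0 else solve(x - 1, 1 - alpha / 2)
    upper = 1.0 if x == n else solve(x, alpha / 2)
    return lower, upper


def diagnostic_summary(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Point estimates with exact 95% CIs, plus normalised MCC."""

    def prop(x: int, n: int) -> tuple:
        return (x / n, *exact_ci(x, n))

    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # 0 by convention
    return {
        "sensitivity": prop(tp, tp + fn),
        "specificity": prop(tn, tn + fp),
        "ppv": prop(tp, tp + fp),
        "npv": prop(tn, tn + fn),
        "nMCC": (mcc + 1) / 2,
    }


# Invented counts for demonstration only (not study data).
for name, value in diagnostic_summary(tp=8, fp=5, fn=2, tn=85).items():
    print(name, value)
```

Because MCC uses all four cells of the table, nMCC stays informative when positives are rare, which is the situation the text describes for low glaucoma prevalence.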

Data availability

Due to ethical restrictions, the data underlying this study are not publicly available. Requests for data access related to future research may be directed to the corresponding author and will be assessed individually, subject to appropriate institutional and ethics approvals. All data are securely stored in controlled-access facilities at the Centre for Eye Research Australia.

Code availability

The code used for the current study is available from the corresponding author on reasonable request.

References

Resnikoff, S. et al. Global data on visual impairment in the year 2002. Bull. World Health Organ. 82, 844–851 (2004).

Pascolini, D. & Mariotti, S. P. Global estimates of visual impairment: 2010. Br. J. Ophthalmol. 96, 614–618 (2012).

The Department of Health, Australian Government. Visual Impairment and Blindness in Australia. www.health.gov.au (2008).

Keel, S. et al. Prevalence of glaucoma in the Australian National Eye Health Survey. Br. J. Ophthalmol. 103, 191–195 (2019).

Wong, E. Y. et al. Detection of undiagnosed glaucoma by eye health professionals. Ophthalmology 111, 1508–1514 (2004).

Burr, J. M. et al. The clinical effectiveness and cost-effectiveness of screening for open angle glaucoma: a systematic review and economic evaluation. Health Technol. Assess. 11, 1–190 (2007).

Jan, C., He, M., Vingrys, A., Zhu, Z. & Stafford, R. S. Diagnosing glaucoma in primary eye care and the role of Artificial Intelligence applications for reducing the prevalence of undetected glaucoma in Australia. Eye 38, 2003–2013 (2024).

Ford, B. K., Kim, D., Keay, L. & White, A. J. Glaucoma referrals from primary care and subsequent hospital management in an urban Australian hospital. Clin. Exp. Optom. 103, 821–829 (2020).

Fan, Z. et al. Optic disk detection in fundus image based on structured learning. IEEE J. Biomed. Health Inf. 22, 224–234 (2018).

Mookiah, M. R. et al. Automated detection of optic disk in retinal fundus images using intuitionistic fuzzy histon segmentation. Proc. Inst. Mech. Eng. H 227, 37–49 (2013).

Liu, H. et al. Development and validation of a deep learning system to detect glaucomatous optic neuropathy using fundus photographs. JAMA Ophthalmol. 137, 1353–1360 (2019).

Medeiros, F. A., Jammal, A. A. & Thompson, A. C. From machine to machine: an OCT-trained deep learning algorithm for objective quantification of glaucomatous damage in fundus photographs. Ophthalmology 126, 513–521 (2019).

Thompson, A. C., Jammal, A. A. & Medeiros, F. A. A Deep learning algorithm to quantify neuroretinal rim loss from optic disc photographs. Am. J. Ophthalmol. 201, 9–18 (2019).

Jammal, A. A. et al. Human versus machine: comparing a deep learning algorithm to human gradings for detecting glaucoma on fundus photographs. Am. J. Ophthalmol. 211, 123–131 (2020).

Raghavendra, U. et al. Deep convolution neural network for accurate diagnosis of glaucoma using digital fundus images. Inf. Sci. 441, 41–49 (2018).

Li, Z. et al. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology 125, 1199–1206 (2018).

Li, F. et al. Automatic differentiation of Glaucoma visual field from non-glaucoma visual filed using deep convolutional neural network. BMC Med. Imaging 18, 35 (2018).

Fan, R. et al. Detecting glaucoma in the ocular hypertension study using deep learning. JAMA Ophthalmol. 140, 383–391 (2022).

Australian Government. Medicare is Australia’s Universal Health Care System. https://www.servicesaustralia.gov.au/about-medicare?context=60092 (Accessed 5 July 2023).

Coan, L. J. et al. Automatic detection of glaucoma via fundus imaging and artificial intelligence: a review. Surv. Ophthalmol. 68, 17–41 (2023).

Tan, R. et al. Evaluating the outcome of screening for glaucoma using colour fundus photography-based referral criteria in a teleophthalmology screening programme for diabetic retinopathy. Br. J. Ophthalmol. 108, 933–939 (2024).

Huang, M. L. & Chen, H. Y. Development and comparison of automated classifiers for glaucoma diagnosis using Stratus optical coherence tomography. Investig. Ophthalmol. Vis. Sci. 46, 4121–4129 (2005).

Bussel, I. I., Wollstein, G. & Schuman, J. S. OCT for glaucoma diagnosis, screening and detection of glaucoma progression. Br. J. Ophthalmol. 98, ii15–ii19 (2014).

Asaoka, R., Murata, H., Iwase, A. & Araie, M. Detecting preperimetric glaucoma with standard automated perimetry using a deep learning classifier. Ophthalmology 123, 1974–1980 (2016).

Li, Z. et al. An automated grading system for detection of vision-threatening referable diabetic retinopathy on the basis of color fundus photographs. Diabetes Care 41, 2509–2516 (2018).

Keel, S. et al. Development and validation of a deep-learning algorithm for the detection of neovascular age-related macular degeneration from colour fundus photographs. Clin. Exp. Ophthalmol. 47, 1009–1018 (2019).

Attkisson, C. C. & Zwick, R. The client satisfaction questionnaire: psychometric properties and correlations with service utilization and psychotherapy outcome. Eval. Program Plan. 5, 233–237 (1982).

Flahault, A., Cadilhac, M. & Thomas, G. Sample size calculation should be performed for design accuracy in diagnostic test studies. J. Clin. Epidemiol. 58, 859–862 (2005).

Mitchell, P. et al. Guidelines for the Management of Diabetic Retinopathy (National Health and Medical Research Council, 2008).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 21, 1–13 (2020).

Acknowledgements

We would like to thank Dr. Malcolm Clark for being our GP advisor, Prof. Peter van Wijngaarden for funding management, Nicole and Kiki for their critical assistance in data management, A/Prof. Zhuoting Zhu for her administrative support, and our graders Peiying Lee and Trung Dang for their clinical expertise. Finally and most importantly, we thank all our patient and clinician participants, without whom this project would not have been possible. C.J. is supported by a Research Training Scholarship from the Australian Commonwealth Government. This project received grant funding from the Australian Government: the National Critical Research Infrastructure Initiative, Medical Research Future Fund (MRFAI00035) and the NHMRC Investigator Grant (APP1175405). The study was also supported by the Global STEM Professorship Scheme (P0046113), PolyU - Rohto Centre of Research Excellence for Eye Care (P0046333) and Henry G. Leong Endowed Professorship in Elderly Vision Health. We thank the InnoHK HKSAR Government for providing valuable support. The Centre for Eye Research Australia receives Operational Infrastructure Support from the Victorian State Government. The funding sources had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Author information

Contributions

M.H., C.L.J. and A.J.V. designed the study. C.L.J., J.H., and S.J. were involved in data acquisition and cleaning. C.L.J. analyzed the data and wrote the manuscript. All authors (C.L.J., S.J., A.J.V., J.H., Z.G., R.S.S. and M.H.) interpreted the data and critically revised the manuscript before publication.

Ethics declarations

Competing interests

Prof. Mingguang He holds an appointment with Eyetelligence Pty Ltd (Chief Medical Officer), which co-developed this AI system. Prof. Randall S Stafford is a cofounder and co-owner of Data Yakka; however, Data Yakka had no involvement in the development, validation or deployment of this AI system. C.L.J., A.J.V., S.J., Z.G., and J.H. have no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Jan, C.L., Joseph, S., Vingrys, A.J. et al. Prospective pragmatic trial of automated retinal photography and AI glaucoma screening in Australian primary care. npj Digit. Med. 8, 386 (2025). https://doi.org/10.1038/s41746-025-01768-y

DOI: https://doi.org/10.1038/s41746-025-01768-y