Abstract

Preoperative differentiation between xanthogranulomatous cholecystitis (XGC) and gallbladder cancer (GBC) remains challenging due to overlapping clinical and imaging features. This multicenter retrospective study developed a machine learning (ML) model, LIDGAX, using preoperative clinical, imaging, and laboratory data from 1246 patients (554 XGC, 692 GBC). Twelve variables were identified as independent predictors via multivariate logistic regression and least absolute shrinkage and selection operator analyses. LIDGAX achieved area under the curve (AUC) values of 0.94 (internal validation) and 0.88 (external testing), outperforming the other five ML models. Calibration and decision curve analyses demonstrated its superior clinical utility. In a reader study with six radiologists, LIDGAX assistance improved their sensitivity (by 1.2–8.5%), specificity (by 0.0–4.6%), and balanced accuracy (by 1.8–6.6%), while reducing average diagnostic time per patient by 30.44–35.76 s. LIDGAX was deployed on an open-source online platform, maintaining high performance (AUC 0.95, accuracy 0.92). This non-invasive tool shows strong potential for clinical translation in preoperative differentiation of XGC and GBC.


Introduction

In real-world clinical scenarios, gallbladder diseases are primarily categorized as benign cholecystitis or malignant gallbladder cancer. Xanthogranulomatous cholecystitis (XGC) is a rare type of chronic inflammatory gallbladder disease characterized histologically by focal or diffuse inflammatory infiltration of foamy cells, multinucleated giant cells, lymphocytes, and fibroblasts1. In contrast, gallbladder cancer (GBC) is the most aggressive malignancy of the biliary tract2, with most cases having a poor prognosis due to its aggressive nature and limited therapeutic options2,3. Accurate differentiation between XGC and GBC has critical implications for treatment decisions. XGC, being a chronic inflammatory condition, is typically managed with laparoscopic cholecystectomy4. Conversely, GBC often necessitates more aggressive interventions depending on the stage, including liver resection, bile duct excision, lymph node dissection, and possibly neoadjuvant chemoradiotherapy before surgery5. Misdiagnosing GBC as XGC could result in undertreatment, such as lack of preoperative surgical planning, incomplete resection, or inadequate follow-up, potentially accelerating disease progression. Conversely, misidentifying XGC as GBC could lead to unnecessary surgical procedures like liver resection or extensive lymph node dissection, as well as increased complications and resource usage. Therefore, distinguishing XGC from GBC preoperatively is crucial in clinical practice, as it could reduce the risk of intraoperative frozen section misdiagnosis and guide postoperative surveillance strategies. However, this differentiation is highly challenging due to the overlapping clinical characteristics and imaging features of the two diseases, such as abdominal pain, jaundice, gallbladder wall thickening, and invasion of adjacent organs6,7. Additionally, misdiagnosis is common, with reported rates ranging from 10% to 30%6,8,9,10.
Thus, non-invasive preoperative biomarkers are needed to improve differentiation between XGC and GBC and reduce overtreatment.

Preoperative diagnostic imaging for gallbladder diseases commonly includes ultrasound (US), contrast-enhanced computed tomography (CECT), and magnetic resonance imaging (MRI). Previous studies have explored imaging features on US, CT, and MRI to differentiate XGC from GBC. For instance, gallbladder mucosal line continuity, a low-density border surrounding the lesion, diffuse gallbladder wall thickening, hypo-attenuated or hypoechoic nodules in the thickened walls, and the presence of calculi were found to be strongly associated with XGC11,12,13,14. Lee et al.15 compared the diagnostic performance of these imaging modalities and demonstrated that MRI had the highest accuracy, followed by US and CT. Each imaging technique has distinct advantages and limitations. US is frequently used for initial screening due to its high temporal resolution, ability to observe blood flow, convenience, absence of radiation, and low cost, despite its lower spatial resolution. CT is effective for visualizing liver and lymph node involvement but involves radiation exposure and lower soft tissue resolution. MRI provides superior soft tissue resolution and effectively shows gallbladder wall invasion but is time-consuming and susceptible to respiratory motion artifacts. Therefore, integrating the features from these imaging modalities may enhance the differential diagnosis of XGC and GBC.

Machine learning (ML) algorithms, as novel non-invasive approaches, can flexibly and efficiently analyze high-throughput data, enabling the discovery of complex relationships between variables16. Due to their advanced capabilities, various ML techniques are widely used to identify disease risk factors, predict treatment outcomes in patients with tumors, and support clinicians in real-world practice17,18,19,20. However, a previous study has indicated that different ML methods can produce varying performance results21. Therefore, identifying the most effective ML techniques is essential for ensuring accurate and reliable predictions and classifications in clinical applications. To our knowledge, no current research has employed multiple ML methods on large-scale, multi-center datasets to differentiate XGC from GBC in real-world settings.

In this study, we developed distinct ML-based models to differentiate between XGC and GBC using preoperative clinical characteristics, imaging features, and laboratory tests. We compared the performance of these models and validated them on an independent, external, multi-center testing cohort to assess generalizability. We then evaluated the optimal model against results from a reader study involving six radiologists with varying levels of experience. Finally, we explored the application of the most effective model in real-world clinical settings, including outpatient, inpatient, and physical examination settings. Figure 1 illustrates the framework of the proposed ML-based model.

(1) Data collection. Clinical, imaging, and laboratory variables were collected for patients with XGC and GBC. (2) Variable selection. Univariate and multivariate logistic regression analyses, followed by LASSO regression, were conducted to select relevant variables, resulting in 12 robust features. (3) Model construction. Six ML models (LR, RF, SVM, XGB, LGB, and MLP) were constructed using the selected features. (4) Model performance. The six models were evaluated using AUC, calibration curves, and DCA across training, internal validation, and external testing cohorts. (5) Model interpretability. SHAP was used to interpret and visualize the feature importance of the optimal model (LIDGAX). (6) Reader study. LIDGAX’s diagnostic performance was compared to radiologists, both unassisted and LIDGAX-assisted, in distinguishing XGC from GBC. (7) Real-world study. An online platform for LIDGAX was developed to facilitate its application in clinical practice, enabling translation to real-world settings. XGC Xanthogranulomatous cholecystitis, GBC gallbladder cancer, LASSO least absolute shrinkage and selection operator, LR logistic regression, RF random forest, SVM support vector machine, XGB eXtreme gradient boosting, LGB light gradient boosting, MLP multilayer perceptron, ROC receiver operating characteristic, AUC area under the curve, DCA decision curve analysis, SHAP SHapley Additive exPlanations, LIDGAX LightGBM Intelligent Differentiator for XGC and GBC.

Results

Baseline characteristics

The baseline characteristics of patients in the four cohorts are summarized in Table 1. Between January 2023 and February 2024, a total of 1246 patients were included in the analysis, comprising 554 patients diagnosed with XGC and 692 with GBC (Fig. 2). The median age across the overall dataset was 63.0 years, with 574 males (46.1%) and 672 females (53.9%). The training cohort consisted of 674 patients (326 with XGC and 348 with GBC), while the internal validation cohort included 169 patients (82 with XGC and 87 with GBC). The external testing cohort contained 279 patients, distributed as 90 XGC and 189 GBC cases. Detailed definitions of clinical data, laboratory tests, and imaging features are available in Supplementary Tables 1, 2. Further details on the distribution of XGC and GBC across all cohorts can be found in Supplementary Table 3.
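As a quick consistency check on the cohort sizes, the per-cohort counts can be tallied against the overall totals. The sketch below assumes that the fourth cohort is the retrospective real-world cohort from Center A (56 XGC, 68 GBC) reported later in the Results; that mapping is an inference from the totals, not something stated in this paragraph.

```python
# Cohort sizes as (XGC, GBC) pairs. The first three come from the baseline paragraph.
# ASSUMPTION: the real-world cohort (56 XGC, 68 GBC), reported later in the Results,
# is the fourth cohort counted in the 1246-patient total.
cohorts = {
    "training": (326, 348),
    "internal_validation": (82, 87),
    "external_testing": (90, 189),
    "real_world": (56, 68),
}

total_xgc = sum(xgc for xgc, _ in cohorts.values())
total_gbc = sum(gbc for _, gbc in cohorts.values())
total = total_xgc + total_gbc

for name, (xgc, gbc) in cohorts.items():
    n = xgc + gbc
    print(f"{name}: n={n}, XGC share={xgc / n:.1%}")
print(f"all cohorts: XGC={total_xgc}, GBC={total_gbc}, total={total}")
```

Under this assumption the tallies reproduce the reported 554 XGC, 692 GBC, and 1246 patients overall. The printed XGC shares also show that the external testing cohort has a noticeably lower proportion of XGC than the training cohort.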

Construction of ML-based models

A total of 79 variables were collected, including clinical characteristics (n = 9), imaging features (n = 19), and laboratory tests (n = 51). To identify and retain only the most relevant indicators, univariate and multivariate logistic regression analyses were performed on all variables (Supplementary Table 4). The multivariate analysis identified 20 variables as independently associated with either XGC or GBC. Specifically, the variables independently associated with XGC included male, epigastric pain, hyperechoic findings on US, presence of gallbladder stones, regular gallbladder morphology, reduced gallbladder size, presence of intramural nodules, continuous mucosal line, elevated fibrinogen level, and higher total bilirubin level. In contrast, independent indicators for GBC included fever, smoking, other conditions (such as schistosomiasis or congenital biliary dilation/cyst), biliary duct dilation, intraluminal tumors, invasion of adjacent structures, enlarged peri-tumoral lymph nodes, hyperdense findings on CT, increased indirect bilirubin, higher CEA levels, and a higher CA199-to-total bilirubin (TB) ratio.

To further refine and determine the optimal number of features, LASSO analysis was employed for an in-depth selection of the 20 independent variables (Supplementary Fig. 1). This analysis ultimately selected 12 key variables for the construction of ML models, which included sex, other conditions, ultrasound echo, gallbladder stones, biliary duct dilation, gallbladder morphology, intramural nodules, intraluminal tumor, mucosal line, enlarged peri-tumoral lymph nodes, fibrinogen level, and indirect bilirubin level. Multicollinearity analysis confirmed that all 12 variables had VIF values below 1.50, indicating no significant collinearity issues among them (Supplementary Table 5).
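For reference, the two quantities used in this selection step can be written in their standard textbook form. The exact parameterization used in the study is not given in this section, so the notation below (n patients, k candidate predictors, penalty weight λ, fitted probability π_i of GBC) is an assumption made for illustration.

```latex
% L1-penalized (LASSO) logistic regression: coefficients shrunk exactly to zero are dropped.
\hat{\beta} = \arg\min_{\beta_0,\,\beta}
  \left\{ -\frac{1}{n}\sum_{i=1}^{n}\Bigl[\, y_i \log \pi_i + (1-y_i)\log(1-\pi_i) \Bigr]
          + \lambda \sum_{j=1}^{k} \lvert \beta_j \rvert \right\},
\qquad
\pi_i = \frac{1}{1 + e^{-(\beta_0 + x_i^{\top}\beta)}}

% Variance inflation factor of predictor j, where R_j^2 is the coefficient of
% determination from regressing predictor j on the remaining predictors.
\mathrm{VIF}_j = \frac{1}{1 - R_j^{2}}
```

Under this definition, a VIF below 1.50 corresponds to R_j^2 < 1/3, meaning that less than one third of the variance of any selected variable is explained by the other eleven.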

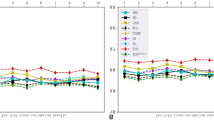

Diagnostic performance of ML-based models

Using the 12 selected variables, we constructed six ML-based models, including LR, RF, SVM, XGB, LGB, and MLP. In the training, internal validation, and external testing cohorts, the AUCs ranged from 0.98 to 1.00 (95% CI: 0.97–1.00), from 0.92 to 0.94 (95% CI: 0.90–0.98), and from 0.86 to 0.88 (95% CI: 0.81–0.92), respectively (Fig. 3a–c). Notably, the LGB model consistently achieved the highest AUC values in both the internal validation and external testing cohorts, outperforming the other ML-based models. We also compared the differences in AUCs among the six models in the training and internal validation cohorts (Supplementary Table 6), as well as in different external testing cohorts (Supplementary Fig. 2). Additionally, Fig. 3 and Table 2 summarize the diagnostic metrics for each model across the cohorts, including AUC, accuracy, sensitivity, specificity, PPV, NPV, and recall. LIDGAX achieved an AUC of 0.88 (95% CI: 0.84–0.93), accuracy of 0.80 (95% CI: 0.74–0.84), sensitivity of 0.79 (95% CI: 0.73–0.85), and specificity of 0.80 (95% CI: 0.70–0.88) in the external testing cohort. Supplementary Fig. 3 presents the confusion matrices of all models. Calibration curves demonstrated that all models showed good alignment between predicted and observed probabilities for differentiating XGC and GBC in each cohort (Fig. 3d–f). The DCA illustrated the net benefit of clinical utility in the six ML-based models across the three cohorts (Fig. 3g–i). These results strongly suggested that the LGB model outperformed the other five models in various performance parameters. Additionally, the performance of LIDGAX was assessed using time-stratified five-fold cross-validation, demonstrating robust predictive capabilities across all folds (AUC: 0.97–0.98 and 0.94–0.98 in the training and internal validation cohorts; Supplementary Table 7 and Supplementary Fig. 4). 
This temporal validation strategy effectively simulates real-world clinical deployment scenarios where model performance must remain stable despite temporal shifts in patient characteristics.
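The AUC values reported here can be read as the probability that a randomly chosen GBC case receives a higher predicted score than a randomly chosen XGC case. A minimal sketch of that pairwise definition follows; the labels and scores are made-up toy values, not study data, and the function name `roc_auc` is hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 1 = GBC, 0 = XGC; scores are predicted GBC probabilities.
labels = [1, 1, 1, 0, 0, 0, 1, 0]
scores = [0.91, 0.78, 0.40, 0.35, 0.52, 0.10, 0.66, 0.40]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

The quadratic pairwise loop is chosen for clarity; rank-based implementations give the same value more efficiently on large cohorts.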

a–c ROC curves of each model across the three cohorts; d–f Calibration curves showing predicted vs. observed probabilities for each model; g–i DCA curves indicating net benefit and clinical utility for each model across the three cohorts. ML machine learning, XGC Xanthogranulomatous cholecystitis, GBC gallbladder cancer, LR logistic regression, RF random forest, SVM support vector machine, XGB eXtreme gradient boosting, LGB light gradient boosting, MLP multilayer perceptron, ROC receiver operating characteristic, DCA decision curve analysis, CI confidence interval.

Three thresholding strategies demonstrated distinct performance trade-offs across cohorts (Supplementary Table 8). In the external testing cohort, the Youden Index balanced sensitivity (0.79) and specificity (0.80). Maximizing sensitivity achieved near-perfect GBC detection (0.97) but caused significant specificity drops (0.46), increasing false positives. Conversely, maximizing specificity minimized overtreatment risks (0.96) but sacrificed sensitivity (0.42), raising missed diagnosis concerns. Confusion matrices further revealed classification accuracy of three strategies (Supplementary Fig. 5).
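The trade-off between these strategies can be illustrated with a small sketch that scans candidate cut-offs and picks the one maximizing the Youden Index (sensitivity + specificity − 1). The data are toy values, the function names are hypothetical, and treating a score at or above the cut-off as "GBC" is an assumption; how the study defined its sensitivity- and specificity-maximizing cut-offs is not described in this section.

```python
def sens_spec(labels, scores, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive (GBC)."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def youden_threshold(labels, scores):
    """Return (J, threshold, sensitivity, specificity) for the cut-off with the largest J."""
    best = None
    for t in sorted(set(scores)):
        sens, spec = sens_spec(labels, scores, t)
        j = sens + spec - 1.0
        if best is None or j > best[0]:
            best = (j, t, sens, spec)
    return best


# Toy data (hypothetical): 1 = GBC, 0 = XGC.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.90, 0.80, 0.60, 0.45, 0.70, 0.40, 0.20, 0.10]

best_j, best_t, best_sens, best_spec = youden_threshold(labels, scores)
low_cut = sens_spec(labels, scores, 0.20)   # lenient cut-off favours sensitivity
high_cut = sens_spec(labels, scores, 0.80)  # strict cut-off favours specificity
print(best_t, best_sens, best_spec, low_cut, high_cut)
```

Lowering the cut-off pushes sensitivity toward 1 at the expense of specificity, and raising it does the reverse, which is the same pattern reported for the three strategies in the external testing cohort.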

Interpretability of LIDGAX model

To enhance the explainability of LIDGAX, the SHAP explainer was utilized to interpret the diagnostic importance of features in the optimal LGB model for distinguishing XGC from GBC. The SHAP beeswarm plot (Fig. 4a) visualizes the 12 key variables, showing each variable’s contribution to model predictions. Variables were ranked by importance using average SHAP values and are displayed in descending order (Fig. 4b). SHAP values greater than zero correspond to predictions for the positive class, indicating a higher risk of GBC. For instance, features such as intraluminal tumors or enlarged peri-tumoral lymph nodes were associated with positive SHAP values, which drive predictions toward the “GBC” class. Additionally, Fig. 4c illustrates a case aligned with the “XGC” class, while Fig. 4d represents a case aligned with the “GBC” class according to LIDGAX predictions, with actual variable measurements displayed in each force plot.
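The way a force plot combines per-feature contributions can be sketched with the additive property of SHAP values: the model output for one patient equals a base value plus the sum of that patient's SHAP values. The sketch below assumes the contributions are expressed in log-odds units, as is common for tree-based binary classifiers, and uses invented feature contributions; neither the output space nor these numbers come from the study.

```python
import math


def sigmoid(x):
    """Map a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


# HYPOTHETICAL values for a single patient (not study data).
base_value = -0.20  # assumed average model output in log-odds
shap_values = {
    "intraluminal_tumor": 1.40,   # positive: pushes toward GBC
    "mucosal_line": 0.90,         # positive: pushes toward GBC
    "gallbladder_stones": -0.35,  # negative: pushes toward XGC
    "fibrinogen": -0.15,          # negative: pushes toward XGC
}

raw_output = base_value + sum(shap_values.values())  # additive reconstruction
prob_gbc = sigmoid(raw_output)
print(f"log-odds = {raw_output:.2f}, P(GBC) = {prob_gbc:.3f}")
```

In this toy case the positive contributions outweigh the negative ones, so the reconstructed probability lies above 0.5 and the case would fall on the "GBC" side of a force plot.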

a SHAP beeswarm plot illustrates the impact of each variable, where each dot represents a sample with the variable’s influence coded by color. b SHAP summary bar plot displays variable importance with mean SHAP values on the x-axis, indicating the predictive power of each variable. SHAP force plots with imaging and case details for two individual patients, one with XGC and one with GBC: c A 62-year-old male with no additional symptoms. Imaging findings included hypoechoic ultrasound, gallbladder stones, no biliary duct dilation, regular gallbladder morphology, presence of intramural nodules, absence of intraluminal tumor, continuous mucosal line, no enlarged peri-tumoral lymph nodes, a fibrinogen level of 5.27 g/L, and indirect bilirubin at 16.4 µmol/L. LIDGAX predicted XGC, confirmed by post-cholecystectomy pathology. The white arrow highlights the thickened gallbladder wall. d A 51-year-old female with no additional symptoms. Imaging showed hypoechoic ultrasound, gallbladder stones, no biliary duct dilation, irregular gallbladder morphology, absence of intramural nodules, presence of an intraluminal tumor, a discontinuous mucosal line, no enlarged peri-tumoral lymph nodes, fibrinogen at 2.34 g/L, and indirect bilirubin at 4.6 µmol/L. LIDGAX classified this case as GBC, also confirmed by pathology. SHAP SHapley Additive exPlanations, XGC Xanthogranulomatous cholecystitis, GBC gallbladder cancer, US ultrasound, CT computerized tomography, MRI magnetic resonance imaging, LIDGAX LightGBM Intelligent Differentiator for XGC and GBC, T2WI T2-weighted imaging, DWI diffusion-weighted imaging.

Subgroup analyses

We compared the performance of four models built with clinical, imaging, laboratory, and combined variables, respectively (Supplementary Fig. 6). Ultimately, the clinical model was constructed using MLP, the imaging model using MLP, the laboratory model using SVM, and the combined model using LGB (Supplementary Fig. 7). In the external testing cohort, the combined model significantly outperformed the clinical model (AUC 0.68 vs. 0.88, adjusted P < 0.0001) and the laboratory model (0.62 vs. 0.88, adjusted P < 0.0001), whereas its AUC did not differ significantly from that of the imaging model (0.88 vs. 0.88, adjusted P = 0.699). Furthermore, Supplementary Table 9 provides an overview of the AUC, accuracy, sensitivity, specificity, PPV, NPV, and recall of the four models across these cohorts. Supplementary Figs. 8–10 present the confusion matrices of six ML models based on clinical variables for differentiating XGC and GBC across all cohorts. The calibration and DCA curves for these models demonstrated that the combined model had a satisfactory alignment and net benefit of clinical utility (Supplementary Figs. 11, 12). Overall, the model using combined variables performed at least as well as those using single-variable groups, with imaging variables making the most substantial contribution. Furthermore, the subgroup analyses demonstrated robust performance of LIDGAX, with AUCs consistently ranging from 0.85 to 0.91 across all subgroups (P = 0.079–0.682; Supplementary Fig. 13 and Supplementary Table 10), supporting its generalizability despite demographic, temporal, and institutional variations.

Reader study

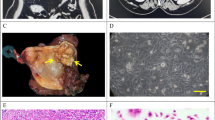

To evaluate the performance of LIDGAX compared to six radiologists (including gallbladder specialists, general radiologists, and radiology residents) in distinguishing XGC from GBC, we measured diagnostic accuracy and time efficiency, both with and without LIDGAX assistance (Fig. 5 and Supplementary Table 11). The study involved 169 patients (82 XGC and 87 GBC) from the internal validation cohort. Results showed that all six radiologists performed less accurately than LIDGAX alone in differentiating XGC from GBC (Fig. 5a, d, e), particularly for radiology residents, with significant differences observed in specificity (P = 0.009–0.010) and sensitivity (P = 0.041). When unassisted, the radiologists demonstrated sensitivity rates between 74.4% and 85.4%, which improved to 82.8–89.0% when assisted by LIDGAX (Fig. 5b, f). Similarly, specificity increased from 78.2–86.2% to 82.8–87.4% (Fig. 5b, f). Balanced accuracy rose from 76.3–85.2% unassisted to 82.8–87.6% with LIDGAX assistance, though it remained slightly lower than LIDGAX’s performance of 88.2% (Fig. 5c, f). This improvement was most pronounced for radiology residents compared to gallbladder specialists and general radiologists, although not statistically significant (P = 0.121–0.606). Furthermore, the average time per assessment decreased significantly from 68.23–89.36 s without LIDGAX to 37.79–53.60 s with its assistance (all P-values < 0.0001, Fig. 5f and Supplementary Table 11), highlighting LIDGAX’s potential to improve both diagnostic accuracy and efficiency in differentiating XGC from GBC.

The evaluation was conducted using 169 patients (82 XGC and 87 GBC) from the internal validation cohort. a LIDGAX versus six radiologists without the assistance of LIDGAX. b LIDGAX versus six radiologists with the assistance of LIDGAX. c Balanced accuracy improvement among radiologists with different levels of expertise for differentiating XGC and GBC. d Specificity comparison between LIDGAX and radiologists. e Sensitivity comparison between LIDGAX and radiologists. f Comprehensive comparison of specificity, sensitivity, and diagnostic time efficiency for radiologists without versus with LIDGAX assistance. g A case of XGC misdiagnosed by radiologists but correctly identified as XGC by LIDGAX. h A case of GBC misdiagnosed by radiologists but correctly identified as GBC by LIDGAX. XGC Xanthogranulomatous cholecystitis, GBC gallbladder cancer, LIDGAX LightGBM Intelligent Differentiator for XGC and GBC, US ultrasound, CT computerized tomography, MRI magnetic resonance imaging, T2WI T2-weighted imaging.

Real-world clinical evaluation

To better facilitate clinical translation in real-world settings, we developed an open-source online platform (Supplementary Fig. 14; Version 2.0; https://lidgaxmodel.streamlit.app) based on the LIDGAX model, making it convenient for physicians to use. This retrospective real-world cohort from Center A ultimately comprised 124 individuals, including 56 (45.0%) diagnosed with XGC and 68 (55.0%) with GBC (Fig. 6a). Supplementary Table 12 provides baseline characteristics for XGC and GBC patients in this cohort. LIDGAX achieved an AUC of 0.95 (95% CI: 0.91–0.99), an accuracy of 0.92 (95% CI: 0.86–0.96), a sensitivity of 0.94 (95% CI: 0.86–0.98), a specificity of 0.89 (95% CI: 0.78–0.96), a PPV of 0.91 (95% CI: 0.82–0.97), an NPV of 0.93 (95% CI: 0.82–0.98), and a recall of 0.94 (95% CI: 0.86–0.98) (Fig. 6).
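The relationship between these metrics can be made concrete by computing them from a 2×2 confusion matrix. The counts below are illustrative: they were chosen to be consistent with the reported cohort composition (68 GBC, 56 XGC) and the reported point estimates, and are not taken from the published confusion matrix. GBC is treated as the positive class, and the function name `diagnostic_metrics` is hypothetical.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Standard diagnostic metrics from confusion-matrix counts (positive class = GBC)."""
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


# ILLUSTRATIVE counts (assumption): 68 GBC split into 64 TP / 4 FN,
# 56 XGC split into 50 TN / 6 FP.
metrics = diagnostic_metrics(tp=64, fn=4, tn=50, fp=6)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With these counts the rounded values match the reported sensitivity (0.94), specificity (0.89), accuracy (0.92), PPV (0.91), and NPV (0.93). Recall is identical to sensitivity by definition, which is why the two reported values coincide.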

a Proportions of XGC and GBC cases within the cohort (n = 124). b ROC curve illustrating LIDGAX’s diagnostic performance in differentiating XGC from GBC. c Radar chart quantitatively comparing LIDGAX’s performance metrics. d Confusion matrix of LIDGAX in the real-world cohort. e An XGC case classified by LIDGAX with a 77.0% probability, confirmed by pathology. f A GBC case classified by LIDGAX with a 91.0% probability, also confirmed by pathology. XGC Xanthogranulomatous cholecystitis, GBC gallbladder cancer, LIDGAX LightGBM Intelligent Differentiator for XGC and GBC, AUC area under the curve, PPV positive predictive value, NPV negative predictive value, US ultrasound, CT computerized tomography, MRI magnetic resonance imaging.

Discussion

In our multicenter real-world study, we present LIDGAX, an advanced ML-based model developed to differentiate between XGC and GBC using clinical, imaging, and laboratory variables. Accurate differentiation between these conditions remains a significant challenge for hepatobiliary surgeons and radiologists, often leading to misdiagnoses and unnecessary healthcare resource usage. By curating a large dataset of pathologically confirmed XGC and GBC cases, we collected relevant clinical, imaging, and laboratory data to construct the LIDGAX model, based on the LGB intelligent differentiator for XGC and GBC, utilizing 12 selected variables. LIDGAX demonstrated high sensitivity (0.86 and 0.79) and specificity (0.90 and 0.80) in distinguishing XGC from GBC in both the internal validation and independent external testing cohorts. The subgroup analyses demonstrated its generalizability despite demographic, temporal, and institutional variations. Its diagnostic accuracy significantly outperformed that of radiologists, particularly enhancing precision and efficiency for residents. Additionally, real-world validation via an online platform further underscored its clinical utility potential.

Artificial intelligence has been utilized to analyze high-throughput data, revealing intricate connections between features and leveraging advanced computational techniques for categorization, prediction, and evidence-based decision-making in novel ways22. To our knowledge, only five previous studies have investigated ML- or deep learning (DL)-based approaches for differentiating XGC from GBC. Fujita et al.23 developed a CT-based DL model that attained high predictive accuracy, achieving an AUC of 0.989 with a dataset of 49 patients. Zhou et al.24 established an ML-based prediction model that achieved an AUC of 0.888 for the preoperative differentiation of XGC and GBC. Zhang et al.25 developed a DL nomogram integrating CECT scans, reaching an accuracy of 0.89, a precision of 0.92, and an AUC of 0.92 across two affiliated hospitals, used as an external validation cohort. Gupta et al.26 employed three DL models to differentiate XGC from GBC on US, demonstrating superior accuracy over radiologists. However, these studies were limited by single-center designs and small sample sizes, with findings unvalidated in independent external cohorts. Another study27 constructed a predictive nomogram based on 436 patients from two centers, incorporating variables such as sex, Murphy’s sign, absolute neutrophil count, glutamyl transpeptidase levels, CEA levels, and imaging findings. Our study included the largest dataset to date (1246 patients from four centers) to differentiate between XGC and GBC. To assess generalizability, reliability, and effectiveness, we validated the LIDGAX model on independent external testing cohorts, achieving AUCs of 0.84 in Centers B and C and 0.92 in Center D.

To enhance the clinical applicability of our model, we selected commonly relevant factors for diagnosing gallbladder disease, incorporating general clinical data, imaging features from US, CT, and MRI, and laboratory tests (including routine blood tests, biochemical tests, coagulation tests, and tumor markers). Following multivariate logistic regression for variable selection, 20 variables were identified as independently associated with XGC and GBC. Among these, factors such as sex, symptoms, gallbladder stones, biliary duct dilation, gallbladder morphology, gallbladder size, intramural nodules, intraluminal tumor, mucosal line, invasion of adjacent structures, enlarged peri-tumoral lymph nodes, and CEA were consistent with prior studies23,24,25,27,28. Notably, our analysis revealed that fever was significantly associated with GBC. This association may be mechanistically explained by tumor necrosis and systemic inflammatory response, which triggers elevated pro-inflammatory cytokines (e.g., TNF-α, IL-1) and COX-2 expression29,30,31. Additionally, gallstones or GBC-related biliary obstruction may predispose patients to bacterial infections, thereby further contributing to fever32,33. In contrast, XGC—a chronic granulomatous inflammatory condition characterized by lipid-laden macrophage infiltration—typically lacks such pronounced systemic inflammatory responses34. CT hyperdensity was another feature associated with GBC, likely reflecting desmoplastic stromal reactions with collagen deposition and fibroblast proliferation, whereas XGC typically exhibits hypodense regions from lipid-laden macrophages. Furthermore, we identified new key risk factors for differentiating XGC and GBC—namely smoking, fibrinogen, total bilirubin, indirect bilirubin, and the CA199-to-TB ratio—factors not previously reported in this context. 
Prior research has shown that preoperative serum fibrinogen and total bilirubin levels correlate with tumor progression and may independently predict GBC35,36,37,38. However, their potential role in distinguishing XGC from GBC has not been explored until now. Using LASSO to minimize redundancy, we refined these 20 variables to 12 final input parameters for the six ML-based models.

In our study, the inclusion of patients with complete US, CT, and MRI data may introduce selection bias, as this criterion excluded those typically managed with fewer imaging modalities in routine practice. However, the 12 key variables comprising LIDGAX (particularly key imaging features such as gallbladder stones, biliary duct dilation, gallbladder morphology, intramural nodules, intraluminal tumor, mucosal line, and enlarged peri-tumoral lymph nodes) are not modality-specific. These semantic features can be reliably identified across US, CT, or MRI. Consequently, LIDGAX remains applicable even when MRI is unavailable, provided the essential features are assessable through existing modalities. In clinical practice, US is often the first-line modality, followed by CT or MRI if needed for further characterization. For features requiring MRI confirmation (e.g., occult gallstones undetected by US/CT, or intramural nodules that appear isoechoic on US and isodense on CT), MRI is recommended as a supplementary modality to ensure input accuracy. When MRI is inaccessible, radiologists should flag such cases for multidisciplinary review. This protocol balances diagnostic accuracy with resource constraints. The retrospective requirement for multimodal imaging aimed to minimize feature omission during model development. While potentially introducing selection bias, this strategy ensured comprehensive data collection. In the future, prospective validations will specifically evaluate LIDGAX’s performance in settings with restricted imaging protocols. In addition, our study excluded seven cases of GBC complicated with XGC, potentially introducing selection bias. Previous studies reported that the incidence of XGC-GBC coexistence ranges from 3% to 12.5%39,40,41. For such cases, histopathological examination remains the gold standard for definitive diagnosis.
LIDGAX was specifically designed not to replace histopathology but to improve preoperative differentiation between pure XGC and GBC, guiding clinical decision-making and surveillance planning. The exclusion of coexisting cases from model validation ensures alignment with its intended use scenario.

The six ML algorithms we used are widely applied in medical diagnostics16. Among these, the LGB model (AUC: 0.94 and 0.88) showed superior performance compared to the others (AUC: 0.92–0.94 and 0.86–0.87) in both internal validation and external testing cohorts. Multicollinearity can contribute to overfitting; thus, we evaluated it using VIF, with all values under 1.50, indicating no significant multicollinearity among these variables. All six ML-based models exhibited slight overfitting, with significant differences in AUC between the training and internal validation cohorts (P = 0.002–0.040). However, the LGB model had the smallest AUC discrepancy and achieved the highest AUC in the independent external testing cohort, highlighting its robustness and generalizability. Calibration and DCA curves further showed that the LGB model offered the best alignment and net clinical benefit. This led us to select it as the optimal model for differentiating XGC from GBC, naming it LIDGAX. Furthermore, the interpretability of LIDGAX is crucial for clinicians and radiologists in decision-making processes. Therefore, we employed SHAP values to enhance interpretability, revealing the underlying relationships between features and outcomes42. The SHAP value analysis highlighted that intraluminal tumor and mucosal line contributed the most to distinguishing XGC from GBC.

The choice of thresholding strategy should align with clinical priorities. In high-risk populations, a sensitivity-prioritized threshold is optimal for screening, minimizing missed GBC diagnoses and enabling timely resection. Though this increases false positives, confirmatory biopsies or short-term imaging follow-up can mitigate overdiagnosis risks. Conversely, a specificity-prioritized threshold is critical for surgical decision-making, reducing unnecessary extended hepatectomies, neoadjuvant therapies, and lymph node dissections for benign XGC. For example, misclassifying XGC as GBC could expose patients to toxic chemotherapy or aggressive lymphadenectomy—procedures avoided by LIDGAX’s 0.96 specificity. However, its low sensitivity (0.42) necessitates cautious use, particularly in younger patients prioritizing cancer detection. The Youden Index (0.79 sensitivity, 0.80 specificity) balances accuracy and resource allocation, mirroring real-world trade-offs. Future integration of cost-benefit analyses could refine personalized threshold selection.

We also compared LIDGAX with six radiologists of varying experience levels in differentiating XGC from GBC, finding that LIDGAX demonstrated superior diagnostic accuracy. Two main factors contributed to this advantage. First, LIDGAX was trained using a combination of clinical, imaging, and laboratory variables within a supervised learning framework—an approach that provided systematic, digitized data integration across multiple sources, unlike the workflow radiologists typically experience. Second, ML algorithms, such as LIDGAX, are inherently more effective in feature selection and weighting than manual assessments43, enabling our model to directly learn diagnostic patterns from detailed input data and apply them efficiently. Moreover, LIDGAX’s support enhanced radiologists’ performance across several metrics: sensitivity improved by 1.2–8.5%, specificity by 0.0–4.6%, and balanced accuracy by 1.8–6.6%. Additionally, the average diagnostic time per patient was reduced by 30.44–35.76 s, indicating that LIDGAX can substantially improve radiologists’ accuracy, lower misdiagnosis rates, and optimize time efficiency, particularly in high-demand clinical environments. ML still faces challenges in clinical translation44. To support LIDGAX’s implementation, we developed an open-source online platform designed for convenient clinical use. This platform enables clinicians to input 12 key factors and instantly receive diagnostic predictions. In a retrospective real-world cohort of 124 patients from Center A, the platform achieved an impressive AUC of 0.95, with an accuracy of 0.92, sensitivity of 0.94, and specificity of 0.89. These results demonstrate that the platform is user-friendly for clinicians and radiologists and achieves robust performance in distinguishing XGC from GBC in real-world clinical settings.

This study has several limitations. First, LIDGAX was developed using data from Chinese populations, so its generalizability to broader, global populations remains uncertain and requires further validation with additional datasets, despite our dataset being the largest available to date. Second, although LIDGAX was built using the clinical, imaging, and laboratory variables we were able to collect, there may be other relevant variables not considered in this study that could potentially enhance model performance. Future iterations could enhance performance by incorporating emerging biomarkers and genomic data. Third, the retrospective design inherently introduces selection bias, a limitation common to all observational studies. To address this, we are planning prospective randomized controlled trials to validate LIDGAX’s clinical efficacy. Lastly, given the rapid advancements in DL for medical imaging, our future goal is to incorporate DL to extract complex, high-dimensional features from multimodal imaging, enabling more accurate and intelligent differentiation between XGC and GBC for clinical applications.

In conclusion, we developed the LIDGAX model, utilizing the LGB algorithm, to accurately differentiate XGC from GBC. The model demonstrated robust diagnostic performance across independent external testing cohorts, surpassing six expert radiologists in diagnostic accuracy. By employing SHAP values, we improved the interpretability of LIDGAX for clinical applications. Additionally, we constructed an open-source online platform to validate the clinical translation potential of LIDGAX. Given its high accuracy and reliability, LIDGAX holds promise as a valuable, non-invasive tool for effectively distinguishing XGC from GBC in clinical settings.

Methods

Patients

This multicenter, retrospective study included patients diagnosed with XGC or GBC between January 2011 and February 2024 from four Chinese hospitals: The First Affiliated Hospital, Zhejiang University School of Medicine (Center A); The Second Affiliated Hospital, Jiaxing University (Center B); Beilun District People’s Hospital (Center C); and Huzhou Central Hospital (Center D). Adult participants who underwent either simple or radical cholecystectomy and were pathologically confirmed to have XGC or GBC were included. The exclusion criteria were: (1) patients with more than 5% incomplete clinical data and laboratory tests (Center A: n = 91; Centers B and C: n = 74; Center D: n = 54); (2) the lack of preoperative US, CECT, and CEMRI (Center A: n = 145; Centers B and C: n = 86; Center D: n = 70); (3) pathologically confirmed metastatic gallbladder malignancy (Center A: n = 13; Centers B and C: n = 5; Center D: n = 3); (4) GBC complicated with XGC (Center A: n = 4; Centers B and C: n = 1; Center D: n = 2). Details of the study population are illustrated in Fig. 2a.

In total, 1246 patients were included in the differential diagnosis task, comprising 554 XGC patients and 692 GBC patients. Patients from Center A (n = 843) were split chronologically into a training cohort and an internal validation cohort in a 4:1 ratio, while patients from Centers B, C, and D (n = 403) were assigned to independent external testing cohorts.
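The chronological 4:1 split can be illustrated with a minimal Python sketch. The record layout (`patient_id`, `surgery_date`), the function name, and the synthetic dates are illustrative assumptions and not the authors' code; only the cohort size of 843 and the 4:1 ratio come from the text.

```python
from datetime import date, timedelta

def chronological_split(records, train_parts=4, total_parts=5):
    """Sort by date, then send the earliest train_parts/total_parts of cases to training."""
    ordered = sorted(records, key=lambda r: r["surgery_date"])
    cut = len(ordered) * train_parts // total_parts  # integer arithmetic, no float rounding
    return ordered[:cut], ordered[cut:]

# Synthetic stand-in for the 843 Center A patients: one case per day, supplied in reverse order.
start = date(2000, 1, 1)  # arbitrary placeholder date, not taken from the study
records = [
    {"patient_id": i, "surgery_date": start + timedelta(days=i)}
    for i in reversed(range(843))
]

train, validation = chronological_split(records)
print(len(train), len(validation))  # 674 169
```

With 843 cases, this rule yields 674 training and 169 validation cases, matching the cohort sizes reported in this section. Because the cut is made on sorted dates, every validation case is later than every training case.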

Variable collection

Baseline variables included clinical data, laboratory tests, and imaging features, selected based on consultations with gallbladder specialists and a review of recent literature on risk factors relevant to XGC and GBC. Clinical data were collected from medical records, including sex, age, symptoms, complications, smoking status, diabetes, gallbladder adenomyomatosis, biliary tract infection, as well as other conditions like schistosomiasis and congenital biliary dilatation/cyst. Laboratory tests conducted within two weeks prior to cholecystectomy, including routine blood tests, biochemical analyses, coagulation profiles, and tumor markers, were systematically extracted from electronic medical records. Further details on the clinical data and laboratory tests are provided in Supplementary Table 1. Two abdominal radiologists, each with over 10 years of experience, independently reviewed each US, CECT, and CEMRI scan as a standard reference and assessed all imaging features by consensus. In cases of disagreement, a third radiologist with over 20 years of experience in gallbladder disease performed the final evaluation. All scans conducted within two weeks were used as references. Detailed definitions of imaging features are available in Supplementary Table 2.

Model development

The development of ML-based models followed a two-step approach: (1) selecting robust features related to the differentiation of XGC and GBC from collected clinical, imaging, and laboratory variables; and (2) constructing six ML models using the selected features. For the first step, we implemented a three-stage feature selection approach within the training cohort (n = 674) to identify robust clinical, imaging, and laboratory variables. First, a preliminary univariate binary logistic regression analysis was conducted to identify variables with significant differences between XGC and GBC patients. Next, multivariate logistic regression analysis was applied to variables identified in the univariate analysis. Finally, the least absolute shrinkage and selection operator (LASSO) regression method was used to select the most predictive features with non-zero coefficients, with penalty tuning through 10-fold cross-validation (Supplementary Fig. 1).
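For reference, the LASSO step can be written as a penalized logistic regression objective. The notation is ours rather than the authors': y_i = 1 for GBC and 0 for XGC, x_i is the vector of p candidate variables for patient i, and λ is the penalty strength chosen by cross-validation.

```latex
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta}\;
  -\frac{1}{n}\sum_{i=1}^{n}\Big[\, y_i\,(\beta_0 + x_i^{\top}\beta)
  - \log\!\big(1 + e^{\beta_0 + x_i^{\top}\beta}\big) \Big]
  + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
```

Variables whose estimated coefficient is non-zero at the selected λ are retained. Larger values of λ shrink more coefficients exactly to zero.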

For the second step, we developed six ML classification algorithms using the features selected by LASSO in the training cohort: logistic regression (LR), random forest (RF), support vector machine (SVM), eXtreme gradient boosting (XGB), light gradient boosting (LGB), and multilayer perceptron (MLP). Each algorithm was fine-tuned through grid search. After identifying optimal hyperparameters, each model was retrained on the full training subset with a set random seed, finalizing the weights and generating a locked model, which was then evaluated on the internal validation cohort.

Model evaluation

To systematically evaluate model performance, we compared the performance of six models across training, internal validation, and external testing cohorts. Evaluation metrics included the area under the curve (AUC), sensitivity, specificity, accuracy, positive predictive value (PPV), negative predictive value (NPV), recall, and confusion matrix. Calibration curves and decision curve analysis (DCA) were employed to assess model calibration and clinical utility across all cohorts45,46. Based on the integrated performance metrics, the LGB-based algorithm was identified as the optimal model and named LIDGAX (LGB Intelligent Differentiator for GBC and XGC). To confirm the robustness of the chronological split strategy, we performed time-stratified five-fold cross-validation on dataset A (n = 843) using LIDGAX. The model’s decision-making process was visualized using SHapley Additive exPlanations (SHAP)47,48, which quantified feature importance scores and elucidated the relationships between XGC, GBC, and selected features.
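As an illustration of how the listed metrics relate to one another, the following Python sketch derives them from a confusion matrix and computes the AUC by pairwise ranking. The function names and toy numbers are hypothetical and not taken from the study. Recall is the same quantity as sensitivity, so it is not computed separately.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Standard binary diagnostic metrics, with GBC treated as the positive class."""
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }

def auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy numbers for illustration only.
m = diagnostic_metrics(tp=80, fn=20, tn=90, fp=10)
toy_auc = auc([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.4, 0.5, 0.3, 0.2])
print(m["sensitivity"], m["specificity"], m["accuracy"])  # 0.8 0.9 0.85
```

The pairwise AUC is quadratic in the number of cases. That is acceptable for cohorts of this size, although rank-based implementations are preferable for large datasets.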

Our study implemented three thresholding strategies to address distinct clinical priorities: (1) Diagnostic balance: Optimized using the Youden Index (sensitivity + specificity − 1) to balance sensitivity and specificity; (2) Screening priority: Maximized sensitivity (>95%) to minimize missed diagnoses in gallbladder cancer (GBC) screening; (3) Treatment precision: Maximized specificity (>95%) to reduce overtreatment risks caused by false positives in therapeutic decision-making.
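The three strategies can be sketched in Python as follows. This is a minimal illustration under our own assumptions: the function names, the tie-breaking rules (highest cutoff meeting the sensitivity target, lowest cutoff meeting the specificity target), and the toy scores are not from the study.

```python
def sens_spec(labels, scores, threshold):
    """Sensitivity and specificity when score >= threshold is called GBC (label 1)."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

def choose_thresholds(labels, scores, target=0.95):
    """Pick one cutoff per strategy from the observed scores."""
    candidates = sorted(set(scores))
    stats = {t: sens_spec(labels, scores, t) for t in candidates}
    # (1) Diagnostic balance: maximize Youden's J = sensitivity + specificity - 1.
    youden = max(candidates, key=lambda t: stats[t][0] + stats[t][1] - 1)
    # (2) Screening priority: highest cutoff that keeps sensitivity above the target.
    screening = max((t for t in candidates if stats[t][0] > target), default=None)
    # (3) Treatment precision: lowest cutoff that keeps specificity above the target.
    treatment = min((t for t in candidates if stats[t][1] > target), default=None)
    return {"youden": youden, "screening": screening, "treatment": treatment}

# Toy predicted probabilities for illustration only (10 XGC, 10 GBC).
xgc_scores = [0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.45, 0.50, 0.75]
gbc_scores = [0.25, 0.55, 0.60, 0.65, 0.70, 0.80, 0.85, 0.90, 0.95, 0.98]
scores = xgc_scores + gbc_scores
labels = [0] * len(xgc_scores) + [1] * len(gbc_scores)

picks = choose_thresholds(labels, scores)
print(picks)  # {'youden': 0.55, 'screening': 0.25, 'treatment': 0.8}
```

In this toy example, the treatment-precision cutoff of 0.80 reaches a specificity of 1.0 but a sensitivity of only 0.5, the same kind of trade-off that a specificity-prioritized threshold entails.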

Subgroup analyses

To evaluate the effectiveness of the combined model, we constructed four separate models using these factors: a clinical model, an imaging model, a laboratory model, and a combined model. Each of these four models was developed using six different ML algorithms. To address potential variability and ensure the robustness of the findings, we conducted subgroup analyses in the external testing cohort, stratified by sex (female and male), age (<60 years and ≥60 years), time periods (2011–2015, 2016–2020, and 2021–2024), and centers (Centers B, C, and D).

Reader study

To assess the performance of radiologists in differentiating XGC from GBC, six radiologists independently diagnosed cases in the internal validation cohort (n = 169). Participants included two radiology residents (3–5 years of experience), two general radiologists (5–10 years of experience in abdominal imaging), and two gallbladder specialists (10–20 years of experience in gallbladder imaging). Prior to the study, a gallbladder specialist with extensive experience (over 3000 case reviews) conducted a training session for each radiologist, covering key imaging features identified in 40 representative cases from the training cohort.

This study included two main steps. In the first step, we compared the diagnostic performance of LIDGAX with that of the radiologists. Each radiologist reviewed anonymized US, CECT, and CEMRI images in random order using the local picture archiving and communication system, without access to clinical or laboratory data. They were tasked with determining whether each case represented XGC or GBC. In the second step, we assessed LIDGAX’s potential to support radiologists in diagnosis. Each radiologist received LIDGAX’s probability score for each case and then reanalyzed the same cases from the first step with this additional input. A minimum interval of one month separated the two steps to reduce recall bias.

Real-world study

For the real-world clinical evaluation, we deployed the LIDGAX model on an open-source online computing platform, allowing clinicians and radiologists to easily analyze cases through a user-friendly interface. This retrospective study included consecutive patients diagnosed with XGC or GBC between February 2023 and February 2024 from Center A. Exclusion criteria included: (1) patients with incomplete clinical and laboratory data (n = 3), and (2) patients without preoperative US, CECT, and CEMRI scans (n = 12). After applying these criteria, 124 patients were included in the real-world evaluation study (Fig. 2b).

Statistical analysis

All statistical analyses were conducted using R software (Version 4.2.2; https://www.r-project.org). To address incomplete clinical and laboratory data, multiple imputation was applied as part of data preprocessing49. Categorical variables were compared between groups using either the chi-square test or Fisher’s exact test and are presented as numbers and frequencies. For continuous variables, the Kolmogorov–Smirnov test was used to assess normality. Variables following a normal distribution are expressed as mean ± standard deviation (SD) and were compared using the t-test, while non-normally distributed variables are reported as median (interquartile range, IQR) and analyzed with the Mann–Whitney U test. Non-normally distributed continuous data were normalized before model development. Collinearity among variables was evaluated through the variance inflation factor (VIF), where a VIF > 5 indicated notable collinearity and a VIF > 10 suggested significant collinearity50. Univariate and multivariate binary logistic regression analyses identified variables associated with XGC and GBC. Variables found significant in univariate analysis were subsequently included in a stepwise multivariate analysis, using the Akaike information criterion for optimal variable selection51. The diagnostic performance of the ML-based models was evaluated by metrics including sensitivity, specificity, accuracy, PPV, NPV, recall, balanced accuracy, and confusion matrix. AUCs of the six algorithms were compared using the DeLong test. Confidence intervals (95% CIs) were obtained from 1000 bootstrap resamples. To compare the sensitivity and specificity of diagnostic performance before and after LIDGAX assistance, McNemar’s test was applied for paired categorical data analysis. The Benjamini–Hochberg false discovery rate procedure (BH-FDR, q < 0.05) was used for multiple testing correction52. Two-tailed P-values < 0.05 were considered statistically significant.
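Two of the procedures named above can be illustrated with a short Python sketch, although the study itself used R. The function names and example numbers are hypothetical. The exact binomial form of McNemar's test is used here for simplicity; the text does not state which variant the authors applied.

```python
from math import comb

def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values (q-values) in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so that adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted

def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the two discordant pair counts.

    b: cases correct without assistance but wrong with it
    c: cases wrong without assistance but correct with it
    """
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Toy inputs for illustration only.
q_values = benjamini_hochberg([0.01, 0.04, 0.03, 0.005])
p_paired = mcnemar_exact(b=1, c=9)
print([round(q, 4) for q in q_values])  # [0.02, 0.04, 0.04, 0.02]
print(p_paired)  # 0.021484375
```

A comparison is declared significant under BH-FDR when its q-value falls below the chosen level, 0.05 in this study.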

Data availability

The source data for all models, tables, and figures, along with supporting materials, are available from the corresponding author upon reasonable request.

Code availability

The code developed for this study is not publicly accessible to protect proprietary knowledge. However, qualified academic researchers may request access to the code—including preprocessing scripts, model architecture, and training protocols—for noncommercial research purposes. Requests should be submitted to the corresponding author at tiananjiang@zju.edu.cn and must include a detailed research proposal outlining the intended use. Access will be granted following approval by the institutional review committee, which evaluates proposals within 4-6 weeks. A formal code use agreement, prohibiting redistribution or commercial exploitation, will be required prior to release.

References

Rammohan, A., Cherukuri, S. D., Sathyanesan, J., Palaniappan, R. & Govindan, M. Xanthogranulomatous cholecystitis masquerading as gallbladder cancer: can it be diagnosed preoperatively?. Gastroenterol. Res. Pr. 2014, 253645 (2014).

Roa, J. C. et al. Gallbladder cancer. Nat. Rev. Dis. Prim. 8, 69 (2022).

Baiu, I. & Visser, B. Gallbladder cancer. JAMA 320, 1294 (2018).

Güneş, Y., Bostancı, Ö, İlbar Tartar, R. & Battal, M. Xanthogranulomatous cholecystitis: is surgery difficult? Is laparoscopic surgery recommended?. J. Laparoendosc. Adv. Surg. Tech. A 31, 36–40 (2021).

Benson, A. B. et al. Hepatobiliary Cancers, Version 2.2021, NCCN Clinical Practice Guidelines in Oncology. J. Natl. Compr. Canc. Netw. 19, 541–565 (2021).

Feng, L., You, Z., Gou, J., Liao, E. & Chen, L. Xanthogranulomatous cholecystitis: experience in 100 cases. Ann. Transl. Med. 8, 1089 (2020).

Truant, S., Chater, C. & Pruvot, F. R. Greatly enlarged thickened gallbladder. Diagnosis: Xanthogranulomatous cholecystitis (XGC). JAMA Surg. 150, 267–268 (2015).

Deng, Y. L. et al. Xanthogranulomatous cholecystitis mimicking gallbladder carcinoma: An analysis of 42 cases. World J. Gastroenterol. 21, 12653–12659 (2015).

Spinelli, A. et al. Extended surgical resection for xanthogranulomatous cholecystitis mimicking advanced gallbladder carcinoma: a case report and review of literature. World J. Gastroenterol. 12, 2293–2296 (2006).

Huang, E. Y. et al. Distinguishing characteristics of xanthogranulomatous cholecystitis and gallbladder adenocarcinoma: a persistent diagnostic dilemma. Surg. Endosc. 38, 348–355 (2024).

Xiao, J., Zhou, R., Zhang, B. & Li, B. Noninvasive preoperative differential diagnosis of gallbladder carcinoma and xanthogranulomatous cholecystitis: a retrospective cohort study of 240 patients. Cancer Med. 11, 176–182 (2022).

Goshima, S. et al. Xanthogranulomatous cholecystitis: diagnostic performance of CT to differentiate from gallbladder cancer. Eur. J. Radio. 74, e79–e83 (2010).

Parra, J. A. et al. Xanthogranulomatous cholecystitis: clinical, sonographic, and CT findings in 26 patients. AJR Am. J. Roentgenol. 174, 979–983 (2000).

Ros, P. R. & Goodman, Z. D. Xanthogranulomatous cholecystitis versus gallbladder carcinoma. Radiology 203, 10–12 (1997).

Lee, E. S. et al. Xanthogranulomatous cholecystitis: diagnostic performance of US, CT, and MRI for differentiation from gallbladder carcinoma. Abdom. Imaging 40, 2281–2292 (2015).

Goecks, J., Jalili, V., Heiser, L. M. & Gray, J. W. How machine learning will transform biomedicine. Cell 181, 92–101 (2020).

Zhang, B. et al. Identifying behaviour-related and physiological risk factors for suicide attempts in the UK Biobank. Nat. Hum. Behav. 8, 1784–1797 (2024).

Zamanzadeh, D. et al. Data-driven prediction of continuous renal replacement therapy survival. Nat. Commun. 15, 5440 (2024).

Liu, R. et al. Development and prospective validation of postoperative pain prediction from preoperative EHR data using attention-based set embeddings. NPJ Digit Med. 6, 209 (2023).

Wagner, M. et al. Artificial intelligence for decision support in surgical oncology - a systematic review. Artif. Intell. Surg. 2, 159–172 (2022).

Teng, X. et al. Development and validation of an early diagnosis model for bone metastasis in non-small cell lung cancer based on serological characteristics of the bone metastasis mechanism. EClinicalMedicine 72, 102617 (2024).

Xu, Y. et al. Artificial intelligence: a powerful paradigm for scientific research. Innovation 2, 100179 (2021).

Fujita, H. et al. Differential diagnoses of gallbladder tumors using CT-based deep learning. Ann. Gastroenterol. Surg. 6, 823–832 (2022).

Zhou, Q. M. et al. Machine learning-based radiological features and diagnostic predictive model of xanthogranulomatous cholecystitis. Front. Oncol. 12, 792077 (2022).

Zhang, W. et al. Deep learning nomogram for preoperative distinction between Xanthogranulomatous cholecystitis and gallbladder carcinoma: a novel approach for surgical decision. Comput. Biol. Med. 168, 107786 (2024).

Gupta, P. et al. Deep-learning models for differentiation of xanthogranulomatous cholecystitis and gallbladder cancer on ultrasound. Indian J. Gastroenterol. 43, 805–812 (2024).

Fu, T. et al. Machine learning-based diagnostic model for preoperative differentiation between xanthogranulomatous cholecystitis and gallbladder carcinoma: a multicenter retrospective cohort study. Front. Oncol. 14, 1355927 (2024).

Ito, R. et al. A scoring system based on computed tomography for the correct diagnosis of xanthogranulomatous cholecystitis. Acta Radio. Open 9, 2058460120918237 (2020).

Yang, S. Q. et al. Prognostic significance of tumor necrosis in patients with gallbladder carcinoma undergoing curative-intent resection. Ann. Surg. Oncol. 31, 125–132 (2024).

Pérez-Moreno, P., Riquelme, I., García, P., Brebi, P. & Roa, J. C. Environmental and lifestyle risk factors in the carcinogenesis of gallbladder cancer. J. Pers. Med. 12, 234 (2022).

Balkwill, F. Tumour necrosis factor and cancer. Nat. Rev. Cancer 9, 361–371 (2009).

Elinav, E. et al. Inflammation-induced cancer: crosstalk between tumours, immune cells and microorganisms. Nat. Rev. Cancer 13, 759–771 (2013).

Espinoza, J. A. et al. The inflammatory inception of gallbladder cancer. Biochim. Biophys. Acta 1865, 245–254 (2016).

Azari, F. S. et al. A contemporary analysis of xanthogranulomatous cholecystitis in a Western cohort. Surgery 170, 1317–1324 (2021).

Yang, Z. et al. Preoperative serum fibrinogen as a valuable predictor in the nomogram predicting overall survival of postoperative patients with gallbladder cancer. J. Gastrointest. Oncol. 12, 1661–1672 (2021).

Zhang, L. et al. Exploring the diagnosis markers for gallbladder cancer based on clinical data. Front. Med. 9, 350–355 (2015).

Yang, S. Q. et al. Unraveling early recurrence of risk factors in gallbladder cancer: a systematic review and meta-analysis. Eur. J. Surg. Oncol. 50, 108372 (2024).

Liu, F. et al. The prognostic value of combined preoperative PLR and CA19-9 in patients with resectable gallbladder cancer. Updates Surg. 76, 1235–1245 (2024).

Bolukbasi, H. & Kara, Y. An important gallbladder pathology mimicking gallbladder carcinoma: xanthogranulomatous cholecystitis: a single tertiary center experience. Surg. Laparosc. Endosc. Percutan Tech. 30, 285–289 (2020).

Kwon, A. H. & Sakaida, N. Simultaneous presence of xanthogranulomatous cholecystitis and gallbladder cancer. J. Gastroenterol. 42, 703–704 (2007).

Pandey, A., Kumar, D., Masood, S., Chauhan, S. & Kumar, S. Is final histopathological examination the only diagnostic criteria for xanthogranulomatous cholecystitis?. Niger. J. Surg. 25, 177–182 (2019).

Nohara, Y., Matsumoto, K., Soejima, H. & Nakashima, N. Explanation of machine learning models using Shapley additive explanation and application for real data in hospital. Comput. Methods Prog. Biomed. 214, 106584 (2022).

Wang, S. & Summers, R. M. Machine learning and radiology. Med. Image Anal. 16, 933–951 (2012).

Dinsdale, N. K. et al. Challenges for machine learning in clinical translation of big data imaging studies. Neuron 110, 3866–3881 (2022).

Vickers, A. J. & Elkin, E. B. Decision curve analysis: a novel method for evaluating prediction models. Med Decis. Mak. 26, 565–574 (2006).

Steyerberg, E. W. et al. Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology 21, 128–138 (2010).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems 30 (NeurIPS, 2017).

Lundberg, S. M., Erion, G. G. & Lee, S.-I. Consistent individualized feature attribution for tree ensembles. Preprint at https://arxiv.org/abs/1802.03888 (2018).

Little, R. J. et al. The prevention and treatment of missing data in clinical trials. N. Engl. J. Med. 367, 1355–1360 (2012).

O’Brien, R. M. A caution regarding rules of thumb for variance inflation factors. Qual. Quant. 41, 673–690 (2007).

Akaike, H. A new look at the statistical model identification. IEEE Trans. Autom. Control 19, 716–723 (1974).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B. 57, 289–300 (1995).

Acknowledgements

Funding was provided by the Development Project of National Major Scientific Research Instrument (82027803), the National Natural Science Foundation of China (82202151), the Key Research and Development Project of Zhejiang Province (2024C03092), the National Key R&D Program of China (2022YFC2405505), Zhejiang Provincial Natural Science Foundation of China (Y24H180007), and Beilun Health Technology Project (2024BLWSQN002).

Author information

Contributions

Study concept and design: K.Z., J.H., L.X., and T.J.; Acquisition of data: K.Z., J.H., W.J., Q.P., W.X., L.W., and W.S.; Analysis and interpretation of data: K.Z. and J.H.; Drafting of the manuscript: K.Z., J.H., and L.X.; Critical revision of the manuscript: K.Z., J.H., L.X., and T.J.; Statistical analysis: K.Z. and J.H.; Study supervision: K.Z., J.H., W.J., Q.P., W.X., L.W., W.S., L.X., and T.J. All authors have read and approved this manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, K., He, J., Ji, W. et al. Machine learning model for differentiating xanthogranulomatous cholecystitis and gallbladder cancer in multicenter largescale study. npj Digit. Med. 8, 590 (2025). https://doi.org/10.1038/s41746-025-01991-7

DOI: https://doi.org/10.1038/s41746-025-01991-7