Abstract

In principle, ML/AI-based algorithms should enable rapid and accurate cell segmentation in high-throughput settings. However, reliance on large training datasets, human input, computational expertise, and limited generalizability has prevented this goal of completely automated, high-throughput segmentation from being achieved. To overcome these roadblocks, we introduce an innovative self-supervised learning method (SSL) for pixel classification that does not require parameter tuning or curated data sets, and instead trains itself on the end-users’ own data in a completely automated fashion, thus providing a more efficient cell segmentation approach for high-throughput, high-content image analysis. We demonstrate that our algorithm meets the criteria of being fully automated with versatility across various magnifications, optical modalities, and cell types. Moreover, our SSL algorithm is capable of identifying complex cellular structures and organelles, which are otherwise easily missed, thereby broadening the machine learning applications to high-content imaging. Our SSL technique displayed consistently high F1 scores across segmented cell images, with scores ranging from 0.771 to 0.888, matching or outperforming the popular Cellpose algorithm, which showed a greater F1 variance of 0.454 to 0.882, primarily due to more false negatives.

Similar content being viewed by others

Introduction

Today, 384 and 1536 well plate layouts are quickly replacing the past norm of 96 well plates and becoming commonplace in high-throughput assay applications, such as drug impact and toxicity assessment, expanding the range of cell lines, treatments, phenotypes, and surfaces for a given experimental dataset1,2,3. To keep pace, the application of machine vision for cell segmentation has grown remarkably to avoid time-consuming and intensive manual equivalents. Automated cell segmentation is seen as a cornerstone for high-throughput studies, highlighting the need for software that can facilitate the accurate assessment of cellular morphology. The applications are broad for automated cell segmentation due to the need for swift and precise analysis in high-throughput contexts for drug development, real-time gene-expression tracking, and cell therapy4,5,6,7 to name a few. Despite numerous reports of success with current automated segmentation tools, the application of deep learning in cell segmentation still encounters challenges, including a significant demand for data, the need for human intervention and biases within, limited generalizability, and specialized training.

Deep convolutional neural networks (CNNs) have gained prominence in deep learning-based cell segmentation, showcasing the efficiency and accuracy of UNET8,9,10,11,12 and Mask R-CNN13 in cell and nucleus segmentation. This technique interprets segmentation as a task of identifying cell boundary pixels, compelling the model to produce a ‘‘distance map’’ for each pixel to estimate its likelihood of being at a cell border. In high-throughput assays, a diverse dataset capturing a broad spectrum of cell morphologies is crucial for CNNs to accurately segment individual cells. However, the extensive requirement for data training and manual annotation restricts the application of CNNs in high-throughput studies with limited replication and presents a significant hurdle for researchers who are unable to expand their studies without additional training data. A recent study highlights that effective CNN model training requires a substantial, high-quality pre-training dataset consisting of 1.6 million cells, presenting the challenge of applying CNN methods in segmenting specialized high-throughput studies due to the sheer scale of data needed14. Other techniques like pixel classification are preferred for images with low cell density when it involves labeling each pixel in an image as a distinct class (e.g., cell versus background)15,16,17,18. High fidelity segmentation is essential to further downstream tasks, such as declumping and organelle identification using post-processing algorithms like watershed19, active contour20, or threshold-based segmentation21.

According to the 2018 Data Science Bowl, the top three performing CNN models, namely U-Nets, fully connected feature pyramid networks, and Mask R-CNN demand high computational expertise for segmentation tasks, resulting in a significant obstacle to biologists who are the primary end-users of these models9. Furthermore, it is of paramount importance to expand the training datasets and enhance their ability to generalize across different studies that go beyond competition scenarios. Given that scientists frequently resist expanding extensive imagery datasets for additional training, an innovative strategy in bio-imaging platforms adopted by CNNs, such as Cellpose (CP) 2.022, involves incorporating a “human-in-the-loop” feature to aid in segmentation. This feature enables users to adjust crucial cell parameters manually or undertake the segmentation of specific cells of interest for a more targeted training set. Nevertheless, this approach introduces additional labor and time, diverging from the goal of completely automated high-throughput imaging. Furthermore, users may have to offer numerous manual segmentation references for each condition in the high-throughput assay, potentially increasing bias.

Many high-throughput imagers are now equipped with high magnification, immersion objectives for high-resolution imaging (e.g. objectives with 50X–60X magnification and high numerical apertures), giving users far more intracellular information than the more traditionally utilized 10X–20X magnifications can provide. This synergy of high-throughput and high-resolution imaging can significantly enhance the comprehensive understanding of cell phenotype, covering aspects, such as sub-cellular structure23,24, molecular expression25, and organelle distribution26. For instance, high-resolution imaging’s potential enables scientists to quantify cell membrane convexity and concavity, offering insights into the interactions between cytoskeleton and substrate environment27. Despite these advances, current studies often overlook the meticulous analysis of fine cell structure due to the limitations of study size and a lack of annotated training data required for high-content automated segmentation. Consequently, biomedical researchers are frequently compelled to choose between image resolution and sample size. The future of cell imaging must bridge the gap between high-resolution and high-throughput methodologies by advancing toward their integration, making fully automated segmentation an essential analytical tool. With this capability, researchers can unlock a far more comprehensive understanding of cellular behavior captured from detailed structural nuances of vast datasets, generated from imaging thousands to millions of cells across various conditions, materials, and time points.

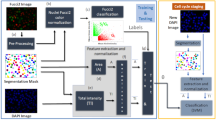

To address these challenges, we present a self-supervised learning (SSL) methodology for pixel classification tasks, aimed at automating cell segmentation for both high-content and high-throughput data studies. This user-friendly and robust approach is completely automated, eliminating the need for dataset-specific adjustments and data annotation in the training process. Our previous segmenting method utilized optical flow (OF) vector fields generated between consecutive images from live cell time series data sets as an inherent data structure to self-label training data, thereby creating a robust and truly automated cell segmentation technique that performed well against contemporary methods18. Building on this, we demonstrate a method applicable to both live or fixed cell imagery, achieved by blurring a single image and then employing SSL techniques to both the original and blurred images (Fig. 1). Specifically, we employ a Gaussian filter from an original input image, then calculate OF between the original and blurred image. OF vectors are used as a basis for self-labeling pixel classes (‘‘cell’’ vs ‘‘background’’) to train an image-specific classifier. We demonstrate that this SSL approach achieves complete automation while also capturing high content information with a robustness that extends across a range of magnifications and optical modalities. As a result, we present this algorithm as an enabling technology that applies across disciplines, from exploratory cell biology to targeted biomedical research studies to biomanufacturing quality control. This work focuses on pixel-based segmentation for cell confluency calculations and intracellular phenotyping investigations. As an additional feature, we demonstrate that our segmentation results can be utilized as inputs into cell declumping algorithms with high-fidelity results for non-adherent cells. Declumping of adherent cells will be the focus of future work.

The process starts with generating a blurred (m × n) image, then applying unsupervised learning via optical flow (OF) to label cell and background (bg) pixels. Supervised learning is performed by extracting labeled (cell or background) pixel features from the image, including entropy (e), gradient (g), and intensity (i). A Naïve Bayes classifier with the ten-fold split is used for training. The classification model is exported and applied to the original image. This method is repeated with each image, ensuring an adaptive process in which every image has a uniquely associated classification model.

Results

SSL approach for segmenting diverse microscopy modes across multiple cell types

We first aim to highlight the compatibility of our SSL code with high-throughput imaging in that robust cell segmentation takes place from single images in the absence of both curated training libraries and manual parameter settings. Our SSL method demonstrated versatility by working at different resolutions and with different microscopy modalities spanning from label free to fluorescent imagery (Fig. 2a–e). Representative images segmented by SSL varied from mammalian cell types to fungi, were processed in a completely automated fashion. Label-free examples includes breast cancer cells MDA-MB-231 imaged by phase contrast at 10X (Fig. 2a) and bright-field at 40X (Fig. 2b); differential interference contrast (DIC) images of MDA-MB-231 at 63X (Fig. 2c.i) and S. cerevisiae (Fig. 2c.ii); human fibroblast Hs27 imaged by interference reflection microscopy at 40X (Fig. 2d); epifluorescence images of GFP-labeled A549 cells (Fig. 2e.i and ii) at 40X (previously analyzed as live cell imagery in Robitaille et al. and Christodoulides et al.17,28,29), F-actin and vinculin-labeled MDA-MB-231 cells (Fig. 2e.iii and iv) at 63X. The combined segmentation of both structures—F-actin (in red) and vinculin (in green)—appears yellow, allowing for further co-localization analysis of these structures. An additional declumping step was employed in Fig. 2c.ii after segmentation as described in more detail below.

Phase contrast image of a MDA-MB-231 (10X objective), bright-field image of b MDA-MB-231 (40X objective), (c) DIC image of MDA-MB-231 (i) (20X objective) and S. cerevisiae (ii) (63X objective), (d) IRM image of Hs27 (40X objective), (e) epifluorescence images of (i), (ii) A549 cells with lifeAct (GFP-actin conjugate, 100X objective) and (iii, (iv) MDA-MB-231 (F-actin (red) and vinculin (green) expression, 63X objective). Yellow borders show SSL segmentation results. Scale bar a: 50 µm, b: 10 µm, c.i: 25 µm, c.ii: 5 µm, d: 20 µm and e: 20 µm.

SSL for segmenting diverse fluorescent channels across multiple cell treatments

Fluorescent Hs27 cell segmentation was performed on epi-fluorescent and confocal imagery with the cells cultured on different surfaces and stained with DAPI, phalloidin, and anti-Vinculin antibodies (Fig. 3). In a completely automate fashion, SSL achieved robust cellular segmentations across different stains: DAPI (Fig. 3a.i,iv), phalloidin (Fig. 3a.ii, b.i), and vinculin-antibody (Fig. 3a.iii, b.ii)); different modalities (epifluorescence vs confocal microscopy); and different resolutions (20X vs 63X). The nuclei were segmented with robust, clear borders, though in some instances, double nuclei were observed which could be explained by cell division (Fig. 3a.i). Most individual cells were successfully segmented by SSL via F-actin and Vinculin structures across different imaging setups, demonstrating the segmentation’s adaptability and precision at various resolutions without the need for curated pre-training data sets. In addition, membrane morphologies unique to a given stain could be distinguished upon merging these segmentations (Fig. 3b.iii).

Fluorescent images of Hs27 stained for nucleus (DAPI), cytoskeleton (F-actin), and focal adhesions (vinculin) cultured on a glass-bottomed petri dishes using a 20X objective (epifluorescence) and b 63X confocal images of Hs27 cultured on a glass-bottomed petri dish stained for F-actin and vinculin. Scale bar a: 50 µm, b: 10 µm.

SSL segmentation approach for quantifying phenotypic stimuli

Given that most high-throughput experiments are performed on fluorescent data across a variety of treatment conditions, we conducted an analogous experiment by compiling images of fibroblast Hs27 plated atop surfaces treated with different fibronectin concentrations in a single executable run. These surfaces were drop coated with fibronectin of 5, 10, 25, 50, 100 µg/ml, fixed, and stained with anti-vinculin antibodies, then imaged under fluorescence microscopy using a 40X objective. Images of Hs27 cells cultured on surfaces treated at different fibronectin concentrations were loaded and processed with SSL in one shot, ensuring the initial self-tuning values for background and cell pixel labeling during unsupervised learning remained consistent.

As in previous examples, the need for a training dataset or tuning parameters was eliminated, enabling wide-ranging phenotype segmentation in an automated fashion. The entire segmentation process for this particular experiment was completed in 165 s on the laptop computer described above, efficiently processing 15 images throughout five conditions. In parallel, the same images were analyzed using the CP cyto2 model, with manual input for the object diameter set to 150 pixels, which was effective in most cases. Figure 4 presents a direct comparison between the segmentation results of CP (in red) and SSL (in yellow). Our SSL approach consistently delivered robust segmentation outcomes for Hs27 cells, requiring no manual intervention, even with the variations in cell shapes induced by fibronectin treatments. Conversely, the CP model sometimes failed to identify the elongated trailing edges of fibroblasts (Fig. 4c, e, h, j) or missed entire cells (Fig. 4i). Hs27 morphology varies dramatically between low and high fibronectin concentration coated on the cultured surface. Therefore, an adaptable segmentation approach like SSL can ensure that every image has a uniquely associated classification model. Indeed, SSL had no difficulty segmenting the completely unique phenotypes associated with low fibronectin treatments of 5 or 10 µg/ml (Fig. 4a, b, f, g) alongside higher concentrations without the need for a pretrained dataset.

SSL for segmenting fine-detail cellular structure

The robustness of SSL in segmenting fine-detail cellular structures represents a significant advancement in high-resolution fluorescent imaging (Fig. 5). Our SSL methodologies exhibit a remarkable capacity for accurately delineating intricate cellular components, a task that is essential for detailed biological analysis and interpretation. In fact, these structures are not easily seen by eye without contrast enhancements (Fig. 5 a.iii, b.iii and c.iii) and were often missed by CP. This precision in segmentation is particularly vital when dealing with complex cellular structures like filopodia (Fig. 5a.iii and c.iii) and lamellipodia (Fig. 5b.iii), where the distinction between different cellular components can be subtle yet critical. To ensure the Fig. 5 results were not due to a lack of CP training data, we further compared results after implementing the CP human-in-loop feature (CP-HiL), which enables user-curated training sets to be incorporated into the model. A fluorescent dataset of Hs27 cells stained for F-actin, imaged by 10X epi-fluorescence and 63X confocal microscopy, was segmented using SSL, CP cyto (cyto2), and CP-HiL. For CP-HiL, seven 10X epi-fluorescence images and three 63X confocal images were used for the additional training.

Confocal images of Hs27 stained with F-actin (red) for cytoskeleton at 63X objective (a.i, b.i, c.i). Images were zoomed in (a.ii, b.ii, c.ii) and contrast enhanced to depict structures of filopodia (a.iii, c.iii) and lamellipodia (b.iii). SSL results are outlined in yellow and Cellpose in green. Scale bar (white) a.i: 20 µm.

The initial cyto2 segmentation failed to segment a number of cells on the 10X images (Fig. 6 a.i–iii), highlighted by white dotted boxes, prompting us to integrate the CP-HiL approach for manual corrections of misclassified segments. Though Fig. 6b.i shows that CP-HiL successfully identified cells missing from the CP method shown in Fig. 6a.i, CP-HiL failed to identify other cells or misinterpreted a single cell as two separate entities (Fig. 6b.ii,iii). Segmentation with SSL did not overlook any cells or falsely split single cells, though additional declumping algorithms for adherent cells will need to be added as a separate step in future work (Fig. 6c.iii).

As shown in Fig. 6, CP cyto2 and CP-HiL demonstrated higher fidelity in segmenting Hs27 images taken by confocal microscopy at 63X, but the two methods required manual tuning of the object diameter specific to each image. For instance, a user input diameter of 150 pixels was determined as optimal for CP methods to segment the Hs27 cells shown in Fig. 6a.iv and 6b.iv, but required diameters of 200 and 330 pixels for similar cell shapes shown in Fig. 6a.v and b.v, respectively. User adjustment of the CP diameter setting between 200 and 330 was also necessary for high fidelity segmenting of epithelial-like cells in Fig. 6a.vi. and b.vi. In contrast, our SSL approach maintained constant setting inputs across all images, resolutions, and cell shapes (Fig. 6c.i–vi), simplifying the segmentation process and eliminating the need for manual adjustments. In addition, and as detailed in Fig. 5, SSL consistently captured the fine structures in membrane extensions.

Given that the CP method provided similar results and required fewer human inputs comparing to CP-HiL, a quantitative comparison between CP and SSL was made. For this analysis, ten 10X fluorescent images were selected, and 80 cells within these images were randomly chosen for manual segmentation to create a ‘‘manual mask.’’ The same cells were then segmented using both CP and SSL methods, generating ‘‘automated masks’’ for comparison. The F1 score was calculated by comparing the ‘‘manual mask’’ against the ‘‘automated masks’’ from each method. The average F1 score for SSL was 0.852 (S.D. = ± 0.017), surpassing the CP method’s average F1 score of 0.804 (S.D. = ± 0.08). Representative masks from manual, CP, and SSL segmentation, along with their respective F1 scores, are illustrated in Fig. 6. The SSL method demonstrated stable F1 scores across all segmented images, ranging from 0.831 to 0.876. In contrast, the CP method’s F1 scores varied more significantly, from 0.645 to 0.8815, primarily due to false negative instances (Fig. 7c).

Quantitative analysis of SSL and CP performance on larger datasets

To broaden these results, a comparison between SSL and CP models was performed to evaluate segmentation performance across various biological objects, microscope modalities, image sizes, and fluorescent labels using hundreds of in-house and public data set images. Table 1 details the resulting F1 scores, processing times, and—because CP sometimes failed to segment entire images—the percentage of images successfully segmented. For the 10X epifluorescence and phase contrast Hs27 in-house datasets, the SSL F1 scores were comparable to CP cyto2, but with significantly faster processing times, saving 25 min across over 500 images. For the 40X Hs27 images, SSL achieved a higher F1 score of 0.831 and a faster processing time compared to CP cyto2, which also failed to segment approximately 12% of the images. A similar trend was observed for the 60X Hs27 images stained for F-actin and Vinculin in which the cyto2 and cyto3 F1 scores were 0.608 and 0.454, respectively, in contrast to a significantly higher F1 score of 0.758 for SSL. In addition, the CP models required more processing time while successfully segmenting only about half of those images.

When evaluating a Cell Paint dataset of Hoechst-stained nuclei that was also used to train the CP ''nuclei’’ model29, CP outperformed SSL, achieving a F1 score of 0.953 with 100% segmentation in 58 min, versus a 0.873 SSL F1 score in 23.5 min. In general, the table shows that SSL demonstrates faster processing times and higher fidelity segmentation, particularly for the in-house Hs27 10, 40, and 60X datasets, compared to CP. Furthermore, as described above, SSL does not require that manual tuning of a diameter parameter for optimization.

Figure 8 presents representative image segmentations from Table 1 by SSL (Fig. 8a, c, e) and CP (Fig. 8b, d, f). In most scenarios, it is clear by eye that CP has a tendency toward false negatives, which lower its respective F1 scores in Table 1. The exception is with the nuclei segmentation, where SSL tended toward over-segmentation (Fig. 8e) compared to the ‘‘nuclei’’ model of CP (Fig. 8f). Additionally, SSL counted surrounding flow signals near neighboring nuclei, reducing segmentation precision relative to CP. However, as noted above, CP was specifically trained on these images while still requiring the manual tuning of a diameter (d = 30) whereas SSL is self-trained and completely automated. Details of SSL and additional CP segmenting configurations, including models, sample size, percentage of successful segmentation, F1 score and time are given in Supplemental Note 3.

Additional SSL declumping feature for non-adherent cells and nuclei

While this work is focused on automated segmentation for quantifying cell confluency and detailed intracellular features, we recognize that identifying individual cells within clumps is a naturally desirable extension of the current algorithm. Here, we demonstrate that the application of the watershed algorithm to SSL-segmented clumps enables the declumping of both S. cerevisiae and nuclei for applications from fundamental research to biomanufacturing quality control. We view this as proof of principle that SSL segmentation results can be utilized as downstream inputs for additional processing applications such as declumping. Figure 9 shows representative imagery and declumping results for both SSL and CP (cp_yeastPC_cp3 model) using our in-house 63X DIC images of S. cerevisiae (n = 60); and for the nuclei images of Table 1. SSL with watershed declumping preformed especially well on the yeast imagery compared to CP, which often failed to segment cells in the clump. We will continue to develop this feature for both non-adherent and adherent cell types in future work.

Discussion and conclusion

High throughput in vitro imaging is now producing far more imagery—both in terms of number of images and diversity of imaging modalities—than even the most advanced deep learning algorithms can reliably segment. In addition, the increasing requirement of extracting fine cellular details from this imagery means that curated training data sets will need to grow exponentially at a rate that, in our view, is simply unsustainable. Machine learning and deep learning pretrained models, when trained on specific cell types or imaging techniques, often do not generalize effectively to other cell types or imaging modalities22. Challenges persist due to the inherent variability in cell phenotypes across different tissues and organisms that hampers the creation of universally applicable datasets. Transfer learning can offer a solution by conducting a pre-training model on a similar available dataset and applying it to an unseen target set. Nevertheless, limitations stemming from the pre-training dataset could lead to overfitting, where the model excels with familiar data but underperforms with unexpected phenotypes in the target datasets30. This point becomes increasingly more relevant as the popular use of 384 and 1536 well plates in drug discovery applications expands the range of phenotypes. Such wide-ranging imagery datasets can require repeated deep-learning model retraining, making the training procedure increasingly labor and computationally intensive. The innovation of SSL streamlines this process, as each input image generates a specific model for its segmentation purpose, circumventing the pitfalls of over-generalization that often result in reduced accuracy.

The key enhancement to our previous SSL algorithms17,18 is the applicability to single-frame data, eliminating the need for live-cell imagery and thus making the code more broadly applicable to include fixed cell imagery. The process of SSL begins with an unsupervised labeling of cell and background pixels by employing OF between the original image and a blurred version, thus eliminating the need for curated training data sets and ensuring data relevancy. This method proved effective in identifying intricate organelles and cellular structures regardless of magnification level or the use of external dyes (Figs. 2 and 3) as well as detecting structures that might be overlooked by the human eye (Figs. 4–6), thereby enhancing the generalizability of machine learning outcomes to high content imaging. The label library generated from the unsupervised step is employed in supervised learning training to classify pixels missed by the OF process. The supervised classification in this work leverages three features—entropy, gradient, and intensity—as they provide rich information at the cellular level but is readily expandable. Entropy, a measure of localized texture, is well-known for object edge detection31, and recently applied in a wide range of disciplines like ecology and landscapes32, evolutionary biology33, and cancer research34,35,36. For in vitro microscopy, the inherent intracellular variability in organelle and cytoskeletal arrangements makes for enhanced texturing versus the background.

Another advantage of this SSL approach is traceability, which we define as ability to trace backward from the segmentation results to the image feature vectors that proved most influential. The extensive range of data features in deep learning - often requiring the tuning of millions of hyperparameters—contributes to its “black box” nature such that scientists can be at a loss to understand the conclusions. This lack of transparency is particularly alarming in biomedical studies37, where understanding the rationale behind a model’s decisions is crucial for advancing discoveries in cellular activity, signaling pathways, or diagnostic procedures. Other pixel classification methods offer many more image features transformed by principal component analysis but their superposition can hamper insights38,39. In contrast, our SSL approach utilizes transparent and sufficient known data features focusing on entropy, gradient, and intensity without any transformation—each of which can be visualized with regards to ‘‘cell’’ vs ‘‘background’’ contribution. Furthermore, the entire SSL application can be operated on a standard laptop computer, which avoids any extensive hardware installation and data customization for cloud-based uploading. Our innovative SSL method not only shows improvement in segmentation but also reduces the need for advanced computational skills and risk to data security, thereby lowering the barriers for biological researchers integrating computer vision into their work.

Current studies in cell biology often overlook the meticulous analysis of fine cell structures, instead focusing primarily on basic cellular geometry and fluorescence intensity. This could be either attributed to the limitations of robust and reliable segmenting tools, the imaging tools40 or the data size41. The future of cell imaging will need to bridge the gap between high-resolution and high-throughput methodologies. This evolution depends on developing high-throughput cell imaging experiments that are complemented by reliable, straightforward, precise, and robust automated cell segmentation techniques. Here, we have presented results of our SSL algorithm across various modalities and resolutions (Figs. 2 and 8, Table 1) and experimental treatments (Fig. 4) that not only accurately segment cells but also provide detailed structural information (Figs. 4–6) suggesting our code’s ability to meet this need.

Future work will continue to expand upon the proof-of-principle declumping algorithms presented here for high cell density imagery of both non-adherent and adherent cell types. The segmentation algorithm we have presented here is foundational to all such subsequent analyses, including declumping, morphological measurements, and the extraction of deep learning parameters—all of which are enhanced by first classifying cells from background with high fidelity. Throughout this study, SSL robustly met this goal across various cell types, cell treatments, and imaging modalities.

Materials and methods

Cell culture and microscopy

All mammalian cells were grown in DMEM (ATCC, #30-2002) enriched with 10% fetal bovine serum (ATCC, #30-2020) at 37 °C in a 5% CO2 atmosphere. Cell treatments and growth conditions included a range of environments, including off-the-shelf glass-bottomed dish culturing, or fibronectin-treated surfaces. Detailed culture methodologies and immunofluorescence staining protocols are described in the refs. 17,18,42. Anti-vinculin antibodies (Sigma, V9131) were prepared at a 1:500 concentration. Microscopy information for each cell type, including the mode of microscopy, magnification, numerical aperture, and camera type is provided in Supplementary Note 1. The computer used for code testing was a Dell laptop with CPU: i7-13700H, 13th Gen Intel 14-core, 24MB Cache, 5.0 GHz and GPU: NVIDIA GeForce RTX 4060, 8GB GDDR6; 32GB RAM, DDR5, 4800 MHz.

Self-supervised learning

The foundational principles of employing OF for SSL-based live cell image analysis were elaborated in earlier publications in which two images from consecutive time points were utilized for labeling and training17,18. Here, we broaden the approach to single or fixed-cell imagery, requiring only a single input image for the entire segmentation process (Fig. 1). We extensively investigated a variety of image transformations for simulating motion—including translation, dilation, rotation, and found that Gaussian blurring produced the highest fidelity segmentation results. The success of the Gaussian blur algorithm in enabling OF segmentation was in large part attributed to the fact that both Gaussian blur and OF are conditioned on the constraint that overall image intensity is conserved, thereby resulting in fewer artifacts to be mistakenly labeled by OF as motion. More specific to the Farneback OF algorithm is that blurring mimics the down-sampling utilized by Farneback to create lower resolution images thereby making the two approaches synergistic. A more detailed description of the relationship between Farneback OF and the Gaussian blur filter can be found in Supplementary Note 2.

The Gaussian filter (σ = 0.1) is applied to the original image to generate a blurred image, which serves as an input for calculating a Farneback OF displacement field. The Farneback algorithm determines OF of a moving object by calculating the displacement field at multiple levels of resolution, starting at the lowest and continuing until convergence.

Cells typically have higher entropy (or texture) than the background due to complex internal structures, such as organelles and the cytoskeleton. We take advantage of this fact by using this entropy difference as a metric for self-tuning and optimizing the OF thresholds that label pixels as either ‘‘cell’’ or ‘‘background.’’ This self-tuning phase is termed unsupervised learning as it operates without a predefined training library. Following the unsupervised learning stage, masks for cell and background are created, each being multiplied by the original image. The resultant image products are analyzed to extract features, such as entropy, gradient, and intensity from every labeled pixel (Fig. 1).

The process transitions to supervised learning when feature vectors from each pixel, along with their corresponding labels (‘‘cell’’ and ‘‘background’’), are aggregated and introduced to a Naïve Bayes Classifier, employing a ten-fold split for validation. Subsequently, a model is derived and applied to the original image, culminating in the final segmentation of cells. This methodology underscores a comprehensive approach, integrating both unsupervised and supervised learning phases to achieve precise cell segmentation without the necessity for an extensive training dataset or manual parameter tuning. It is intrinsically adaptive in that a new model is created for each image. The entire process can be implemented using the MATLAB, Python or a graphic-user interface (GUI) supplied as supplementary code packages. A more detailed description of the entire algorithm architecture can be found in Supplementary Note 2.

Statistics and reproducibility: manual segmentation for F1 score evaluation

Manual segmentation is facilitated through Fiji, utilizing freehand selection tools. The resulting cell masks were then saved and employed to compute the F1 score for SSL, CP, and SSL + CP techniques. The F1 score, a harmonic mean of precision and recall, is defined as

where TP, FN, and FP are abbreviations for true positive, false negative, and false positive, respectively. This approach enables a quantitative comparison of the segmentation accuracy amongst the three segmenting methods, providing a measure of their performance in terms of both precision (the proportion of true positive results in all positive predictions) and recall (the proportion of true positive results in all actual positives).

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Images evaluated in Figs. 2–4, 6, 8 are available as TIFF files at https://doi.org/10.5281/zenodo.1496787643.

Code availability

The SSL application is available in MATLAB, as a stand-alone GUI download for Windows, and as two separate SSL MATLAB source code packages—one with declumping and one without. Additionally, an SSL Python source code without declumping feature is included—one for interface development environment and one for command line interface. All four packages are available for download at https://doi.org/10.5281/zenodo.14967684. They are included here as Supplementary Software 1–4, respectively.

References

Tang, H., Panemangalore, R., Yarde, M., Zhang, L. & Cvijic, M. E. 384-well multiplexed luminex cytokine assays for lead optimization. SLAS Discov. 21, 548–555 (2016).

Knight, S. et al. Enabling 1536-well high-throughput cell-based screening through the application of novel centrifugal plate washing. SLAS Discov. 22, 732–742 (2017).

Bigdelou, P. et al. High-throughput multiplex assays with mouse macrophages on pillar plate platforms. Exp. Cell Res. 396, 112243 (2020).

Shariff, A., Kangas, J., Coelho, L. P., Quinn, S. & Murphy, R. F. Automated image analysis for high-content screening and analysis. SLAS Discov. 15, 726–734 (2010).

Buggenthin, F. et al. An automatic method for robust and fast cell detection in bright field images from high-throughput microscopy. BMC Bioinforma. 14, 297 (2013).

Lugagne, J.-B., Lin, H. & Dunlop, M. J. DeLTA: automated cell segmentation, tracking, and lineage reconstruction using deep learning. PLOS Comput. Biol. 16, e1007673 (2020).

Chen, R. Q. et al. Real-time semantic segmentation and anomaly detection of functional images for cell therapy manufacturing. Cytotherapy 25, 1361–1369 (2023).

Al-Kofahi, Y., Zaltsman, A., Graves, R., Marshall, W. & Rusu, M. A deep learning-based algorithm for 2-D cell segmentation in microscopy images. BMC Bioinforma. 19, 365 (2018).

Caicedo, J. C. et al. Nucleus segmentation across imaging experiments: the 2018 data science bowl. Nat. Methods 16, 1247–1253 (2019).

Falk, T. et al. U-Net: deep learning for cell counting, detection, and morphometry. Nat. Methods 16, 67–70 (2019).

Long, F. Microscopy cell nuclei segmentation with enhanced U-net. BMC Bioinforma. 21, 8 (2020).

Din, N. U. & Yu, J. Training a deep learning model for single-cell segmentation without manual annotation. Sci. Rep. 11, 23995 (2021).

Vuola, A. O., Akram, S. U. & Kannala, J. Mask-RCNN and U-net ensembled for nuclei segmentation. 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI, 2019).

Edlund, C. et al. LIVECell—A large-scale dataset for label-free live cell segmentation. Nat. Methods 18, 1038–1045 (2021).

Berg, S. et al. iLASTIK: interactive machine learning for (bio)image analysis. Nat. Methods 16, 1226–1232 (2019).

McQuin, C. et al. CellProfiler 3.0: next-generation image processing for biology. PLoS Biol. 16, e2005970 (2018).

Robitaille, M. C., Byers, J. M., Christodoulides, J. A. & Raphael, M. P. Robust optical flow algorithm for general single cell segmentation. PLoS ONE 17, e0261763 (2022).

Robitaille, M. C., Byers, J. M., Christodoulides, J. A. & Raphael, M. P. Self-supervised machine learning for live cell imagery segmentation. Commun. Biol. 5, 1162 (2022).

Ji, X., Li, Y., Cheng, J., Yu, Y. & Wang, M. Cell image segmentation based on an improved watershed algorithm. In Proc. 8th International Congress on Image and Signal Processing 433–437 (IEEE, Shenyang, 2015).

Wu, P. et al. Active contour-based cell segmentation during freezing and its application in cryopreservation. IEEE Trans. Biomed. Eng. 62, 284–295 (2015).

Shen, S. P. et al. Automatic cell segmentation by adaptive thresholding (ACSAT) for large-scale calcium imaging datasets. Eneuro 5, ENEURO.0056-18.2018 (2018).

Pachitariu, M. & Stringer, C. Cellpose 2.0: how to train your own model. Nat. Methods 19, 1634–1641 (2022).

Greenwald, N. F. et al. Whole-cell segmentation of tissue images with human-level performance using large-scale data annotation and deep learning. Nat. Biotechnol. 40, 555–565 (2022).

Garvey, C. M. et al. A high-content image-based method for quantitatively studying context-dependent cell population dynamics. Sci. Rep. 6, 29752 (2016).

Luengo Hendriks, C. L. et al. Three-dimensional morphology and gene expression in the Drosophilablastoderm at cellular resolution I: data acquisition pipeline. Genome Biol. 7, R123 (2006).

Zinchenko, V., Hugger, J., Uhlmann, V., Arendt, D. & Kreshuk, A. MorphoFeatures for unsupervised exploration of cell types, tissues, and organs in volume electron microscopy. eLife 12, e80918 (2023).

Pieuchot, L. et al. Curvotaxis directs cell migration through cell-scale curvature landscapes. Nat. Commun. 9, 3995 (2018).

Christodoulides, J. A. et al. Nanostructured substrates for multi-cue investigations of single cells. MRS Commun. 8, 49–58 (2018).

Bray, M.-A. et al. Cell painting, a high-content image-based assay for morphological profiling using multiplexed fluorescent dyes. Nat. Protoc. 11, 1757–1774 (2016).

Corbe, M., Boncompain, G., Perez, F., Del Nery, E. & Genovesio, A. Transfer learning for versatile and training free high content screening analyses. Sci. Rep. 13, 22599 (2023).

El-Sayed, M. A. & Hafeez, T. A.-E. New edge detection technique based on the Shannon entropy in gray level images. 3, (2011).

Cushman, S. Calculation of configurational entropy in complex landscapes. Entropy 20, 298 (2018).

Cushman, S. A. Entropy, ecology and evolution: toward a unified philosophy of biology. Entropy 25, 405 (2023).

Lam, V. K., Nguyen, T. C., Chung, B. M., Nehmetallah, G. & Raub, C. B. Quantitative assessment of cancer cell morphology and motility using telecentric digital holographic microscopy and machine learning. Cytom. A 93, 334–345 (2018).

Lam, V. K. et al. Machine learning with optical phase signatures for phenotypic profiling of cell lines. Cytom. A 95, 757–768 (2019).

Vu, T. et al. FunSpace: a functional and spatial analytic approach to cell imaging data using entropy measures. PLoS Comput. Biol. 19, e1011490 (2023).

Ching, T. et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 15, 20170387 (2018).

Elhaik, E. Principal component analyses (PCA)-based findings in population genetic studies are highly biased and must be reevaluated. Sci. Rep. 12, 14683 (2022).

Chari, T. & Pachter, L. The specious art of single-cell genomics. PLoS Comput. Biol. 19, e1011288 (2021).

Horwath, J. P., Zakharov, D. N., Mégret, R. & Stach, E. A. Understanding important features of deep learning models for segmentation of high-resolution transmission electron microscopy images. npj Comput. Mater. 6, 108 (2020).

Byun, H., Lee, K. & Shim, H. Cell segmentation in multi-modality high-resolution microscopy images with cellpose. Proc. Mach. Learn. Res. 212, 1–11 (2023).

Robitaille, M. C., Christodoulides, J. A., Calhoun, P. J., Byers, J. M. & Raphael, M. P. Interfacing live cells with surfaces: a concurrent control technique for quantifying surface ligand activity. ACS Appl. Bio Mater. 4, 7856–7864 (2021).

Acknowledgements

V.K.L. gratefully acknowledges support from the National Research Council Research Associateship Program and the Jerome and Isabella Karle Distinguished Scholar Fellowship Program. Funding for this project was provided by the Office of the Undersecretary of Defense through the Tri-Service Biotechnology for a resilient supply chain (T-BRSC) Program and Office of Naval Research through the Naval Research Laboratory’s Basic Research Program. In addition, we acknowledge the data provided by the Tissue Microenvironment and Mechanobiology Laboratory of Dr. C.R. at The Catholic University of America.

Author information

Authors and Affiliations

Contributions

V.K.L. Conceptualization, methodology, investigation, data curation, software, visualization, and writing. J.M.B.: conceptualization, methodology, formal analysis, software, visualization, and writing. M.C.R.: conceptualization, methodology, investigation, data curation, software, visualization, and writing. L.K.: methodology, investigation, data curation, software, visualization, and writing. J.A.C.: resources, validation, and writing. M.P.R.: conceptualization, funding acquisition, methodology, investigation, software, visualization, and writing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Biology thanks N.G., T.R.J., and M.B. for their contribution to the peer review of this work. Primary handling editors: A.K. and A.B. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lam, V.K., Byers, J.M., Robitaille, M.C. et al. A self-supervised learning approach for high throughput and high content cell segmentation. Commun Biol 8, 780 (2025). https://doi.org/10.1038/s42003-025-08190-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42003-025-08190-w