Abstract

Image decomposition plays a crucial role in various computer vision tasks, enabling the analysis and manipulation of visual content at a fundamental level. Overlapping and sparse images pose unique challenges for decomposition algorithms due to the scarcity of meaningful information to extract components. Here, we present a solution based on deep learning to accurately extract individual objects within multi-dimensional overlapping-sparse images, with a direct application to the decomposition of overlaid elementary particles obtained from imaging detectors. Our approach allows us to identify and measure independent particles at the vertex of neutrino interactions, where one expects to observe images with indiscernible overlapping charged particles. By decomposing the image of the detector activity at the vertex through deep learning, we infer the kinematic parameters of the low-momentum particles and enhance the reconstructed energy resolution of the neutrino event. Finally, we combine our approach with a fully-differentiable generative model to improve the image decomposition further and the resolution of the measured parameters. This improvement is crucial to search for asymmetries between matter and antimatter.


Introduction

Breaking down an image into its constituent components or layers, such as textures, colour channels, shading, or illumination, is known as image decomposition. It is a broad research area within computer vision and image processing with the main goal of extracting meaningful information from an image and separating it into different elements, which can be useful for various applications like denoising, image editing, object recognition, and scene understanding1,2,3,4. Techniques such as Fourier Transformations or Principal Component Analysis (PCA), respectively, are traditionally used for decomposing images into their frequencies or orthogonal components5,6,7,8,9. The use of deep learning for image decomposition is becoming an active area of research, too, with recent developments focused on developing new techniques and improving the accuracy and efficiency of the process10,11,12.

Furthermore, a sparse image is a visual representation that exhibits a relatively small percentage of filled or meaningful pixels compared to the total number of pixels, implying a significant fraction of the image is empty or irrelevant. Sparsity can occur for several reasons, such as the natural characteristics of the data, data acquisition processes, or deliberate compression techniques. It is common in several scientific fields, such as cosmology, particle physics, medical imaging, and molecular biology, and putting efforts to deal with such data is crucial for advancing our knowledge13,14,15,16. Some current image-decomposition solutions have been designed for sparse images; however, these methods tend to target non-scientific data since their final goal is to solve tasks such as image editing, compression or denoising17,18,19,20,21. The decomposition of overlapping-sparse images is an extremely complex task due to the inherent ambiguities, particularly in scenarios like overlaying tracks in a particle-physics event. In such cases, the challenge lies in disentangling the individual signal contributions and accurately reconstructing the underlying composition. The problem becomes even more intricate in cases where the number and type of tracks can vary, resulting in a vast number of potential candidates. Attempting to examine all possible combinations exhaustively becomes infeasible due to the rapidly expanding search space, rendering traditional computational methods impractical.
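To make the notion of an overlapping-sparse image concrete, the following minimal sketch stores a voxelised image as a mapping from coordinates to values, overlays two such images, and computes the fraction of filled voxels. The grid size, the dictionary layout, the numerical values and the helper names (`occupancy`, `overlay`) are assumptions chosen for illustration; they are not taken from the work described here.

```python
from typing import Dict, Tuple

Voxel = Tuple[int, int, int]


def occupancy(image: Dict[Voxel, float], shape: Tuple[int, int, int]) -> float:
    """Fraction of voxels in a grid of the given shape that hold a non-zero value."""
    total = shape[0] * shape[1] * shape[2]
    filled = sum(1 for value in image.values() if value != 0.0)
    return filled / total


def overlay(*images: Dict[Voxel, float]) -> Dict[Voxel, float]:
    """Sum several sparse images voxel by voxel, as overlapping signals add up."""
    combined: Dict[Voxel, float] = {}
    for image in images:
        for voxel, value in image.items():
            combined[voxel] = combined.get(voxel, 0.0) + value
    return combined


# Two hypothetical tracks that share the voxel (2, 2, 2) in a 5 x 5 x 5 grid.
track_a = {(2, 2, 2): 40.0, (3, 2, 2): 25.0}
track_b = {(2, 2, 2): 30.0, (2, 3, 2): 10.0}
event = overlay(track_a, track_b)
print(occupancy(event, (5, 5, 5)))
```

Once the two tracks are summed, the shared voxel no longer records how much of its content came from each track, which is the ambiguity a decomposition method has to resolve.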

This paper explores the intricate task of deciphering overlapping-sparse images within the scientific domain. In particular, it tackles a challenge in particle physics: resolving degeneracies at the vertex of neutrino interactions to comprehend the properties of the particles involved. When a neutrino interacts within a scintillating target, it generates secondary charged particles that produce scintillation light. Specifically, we take the case:

\[\nu_{\mu} + N \rightarrow \mu^{-} + X \qquad (1)\]

where N is the interacting nucleon and X is the so-called hadronic system. Within the finite granularity of the detector, some of these particles exhibit indistinct trajectories, leading to the production of coincidental scintillation signals, which can introduce ambiguity in the data.

Resolving such signals with percent-level energy resolution will play a critical role in the future high-precision long-baseline accelerator neutrino-oscillation experiments, such as DUNE22 and Hyper-Kamiokande23, directly impacting the sensitivity to the potential discovery of leptonic charge-parity (CP) violation and the measurement of the neutrino oscillation parameters, including the CP-violating phase, the neutrino mass-squared difference (\(|m_{3}^{2}-m_{2}^{2}|\)), and the mixing angle \(\theta_{23}\)24. Accurate neutrino energy reconstruction is pivotal for precise measurement of oscillation probabilities, as any mismodelling can introduce biases in inferred oscillation parameters. One source of systematic uncertainty is given by the so-called vertex activity, which refers to the energy loss by low-momentum final-state charged hadrons that leave overlapping indiscernible signatures in the detector near the neutrino interaction point. This phenomenon results in energy loss that is challenging to attribute to individual particles and, consequently, to the original neutrino energy25,26. The key role is played by the quenching process of the generated scintillation light27,28, which varies with the type and energy of the ionising particle. Despite efforts in current accelerator neutrino experiments to measure vertex activity29,30,31,32, the absence of suitable reconstruction and analysis tools makes measurements subject to model dependence, wherein an arbitrary selection of the nuclear model for the unfolding process introduces biases in the overall neutrino energy reconstruction. Therefore, developing solutions that facilitate the precise unfolding of scintillation quenching by accurately estimating the number, type, and energy of final-state particles becomes crucial.

Deep learning offers a promising solution to this problem. By training neural networks on large datasets of known image configurations, deep-learning models can learn complex patterns and relationships within overlapping images. They can capture the underlying physical and statistical properties, enabling them to infer the constituent elements from the overlapping components. The advantage of deep learning lies in its ability to efficiently handle high-dimensional data and extract relevant features for accurate decomposition automatically. There has been some recent exploration in this area, particularly in utilising deep learning to decompose geophysical images or to recover events that are distorted by multiple collisions, known as pile-ups33,34,35. However, these approaches are limited in their applicability as they make prior assumptions to simplify the problem. These assumptions include a fixed number of overlapping elements and images of fixed size, which restrict their generic nature.

The hypothesis we propose suggests that the transformer model36,37, an architecture that is undeniably reshaping the landscape of deep learning and powering exceptional chatbots like ChatGPT38, possesses the ability to grasp pixel correlations among varied images that intersect within a limited 3D space, utilising its attention mechanisms through a self-supervised scenario. Furthermore, the decoding component inherent to the transformer model holds the potential to progressively deconstruct images by internally subtracting the predicted individual images in an iterative manner. In this study, we present a methodology to decompose multi-dimensional images into distinct and independent objects. To the best of our knowledge, our tool represents the first of its kind, enabling the inference of crucial parameters such as the number, type, and energy of final state hadrons within the vertex activity region of neutrino interactions, all without reliance on arbitrary a-priori nuclear models. Leveraging deep neural networks, our approach facilitates this decomposition efficiently, overcoming computational barriers that were previously prohibitive. This work employs a realistic particle detector simulation to demonstrate how to use a transformer to reconstruct the kinematics of each produced particle and extract independent particle images. Moreover, the article showcases the validation and improvement of the proposed process on detector data, employing a generative model and a comprehensive parameter space exploration through gradient-descent minimisation, exploiting the full differentiability of the model. The selection of a combination of particles that minimises a likelihood function further solidifies the effectiveness and reliability of the presented approach.

Results

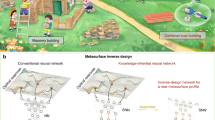

Figure 1 depicts the image-decomposition workflow designed for and applied to images of the region around the vertex of neutrino interactions, known as vertex activity, where independent particle tracks are not easily discernible. The input images consist of overlapping simulated particles, mainly hadrons, releasing their energy in the proximity of the neutrino interaction vertex in a voxelised 3D detector (details in the “Simulated datasets” subsection of the “Methods” section). The non-empty voxels represent the energy loss (in number of photoelectrons) by the particles in the detector, meaning that multiple particles can contribute to the same voxel, making it challenging to determine the number of photoelectrons that correspond to each particle or guess the number of particles that contribute to a particular interaction vertex. A transformer neural network is run on the overlapping image (see the “Transformer for sparse-image decomposition” subsection of the “Methods” section, for more details). It is used to extract both global features of the image (in the neutrino-interaction case, an accurate position of the interaction vertex) and some characteristics of each of the independent images involved: in our physics scenario, the transformer outputs iteratively for each reconstructed particle its type, some kinematics (i.e., initial kinetic energy and track direction), and a boolean value indicating whether to stop. This iterative process follows a scheme where the particles are reconstructed based on their kinetic energy in descending order; it stops when there are no particles left. In that way, the most-energetic particles, which are the ones that contribute most to the total energy loss in the event, are reconstructed first. In contrast, low-energetic particles are left for the end. A generator is used at this stage to create images of independent particles using the information reconstructed by the transformer as input. 
The choice of the generator can range from a classic simulation to a fully-differentiable one (e.g., in the form of a generative model or a differentiable simulation such as refs. 39,40,41). In this article, the generator developed is a generative adversarial network (GAN)42 (more information in the “Generative adversarial network for fast elementary-particle simulations” of the “Methods” section), a widely acknowledged algorithm known for its rapid image generation capabilities. It was used to verify the transformer decomposition and perform an additional optimisation of the reconstructed kinematics to further improve the decomposition. The process operates as follows: for a specific event, a minimisation algorithm is applied to further refine the parameters generated by the transformer. The individual voxel photoelectrons from the GAN-generated particle images are summed, creating a composite reconstructed image that is subsequently compared to the original image to minimise a chosen target metric, such as a loss or a likelihood function.
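The iterative scheme described above (predict one particle at a time, in descending order of kinetic energy, until a stop flag is raised, then sum the per-particle images) can be sketched as follows. This is only a structural illustration: `scripted_step` stands in for the transformer decoder and `toy_generator` for the generator, and both, together with the `Particle` fields and all numerical values, are hypothetical placeholders rather than the models used in this work.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Voxel = Tuple[int, int, int]
Image = Dict[Voxel, float]


@dataclass
class Particle:
    kind: str              # particle type, e.g. "proton"
    kinetic_energy: float  # initial kinetic energy in MeV
    theta: float           # polar angle in degrees
    phi: float             # azimuthal angle in degrees


# Hypothetical decoder step: given the particles predicted so far, return the
# next particle and a flag telling the loop whether to stop afterwards.
DecoderStep = Callable[[List[Particle]], Tuple[Particle, bool]]


def decompose(step: DecoderStep, max_particles: int = 10) -> List[Particle]:
    """Collect particles one at a time until the decoder raises its stop flag."""
    particles: List[Particle] = []
    while len(particles) < max_particles:
        particle, stop = step(particles)
        particles.append(particle)
        if stop:
            break
    return particles


def aggregate(images: List[Image]) -> Image:
    """Sum the photoelectrons of the per-particle images voxel by voxel."""
    total: Image = {}
    for image in images:
        for voxel, pe in image.items():
            total[voxel] = total.get(voxel, 0.0) + pe
    return total


# Stand-in for the decoder: replays a fixed list ordered by descending energy.
SCRIPT = [
    Particle("proton", 120.0, 45.0, 10.0),
    Particle("proton", 60.0, 90.0, 200.0),
    Particle("proton", 15.0, 30.0, 300.0),
]


def scripted_step(previous: List[Particle]) -> Tuple[Particle, bool]:
    index = len(previous)
    return SCRIPT[index], index == len(SCRIPT) - 1


def toy_generator(particle: Particle) -> Image:
    # Toy assumption: all light lands in the vertex voxel, 1 p.e. per MeV.
    return {(0, 0, 0): particle.kinetic_energy}


particles = decompose(scripted_step)
reconstructed = aggregate([toy_generator(p) for p in particles])
```

The composite `reconstructed` image is what would then be compared with the input image through the chosen loss or likelihood.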

a Detection of the vertex activity (VA) region in the input event. The zoomed-in view unveils the particle mosaic at the interaction vertex. b Utilisation of the VA image, combined with the reconstructed kinematic parameters of the escaping muon, as input for the transformer encoder. The transformer encoder processes the input, resulting in c the reconstruction of the interaction vertex position and d an embedded representation of the VA event for the decoder. e The transformer decoder initially processes the encoder’s information, resulting in a prediction for the kinematics of the most energetic particle in the input VA. Simultaneously, it generates a boolean variable to signify the existence of additional particles that require reconstruction. In cases where additional particles are identified, the transformer decoder proceeds to iteratively provide their kinematic predictions in descending order of the kinetic energies of the particles. This process continues by incorporating encoder data and the kinematics of the previously predicted particle until the boolean variable signals termination, indicating that the transformer has determined no further particles are present in the VA event. f A generative adversarial network (GAN) generator produces images of particles based on the kinematics predicted by the transformer. Using the initial reconstructed kinematics, the GAN is also employed to generate an image of the escaping muon. g The generated images are aggregated by summing their voxel photoelectrons, and h compared with the input VA event to verify the decomposition process. The workflow may return to step f to further optimise kinematic parameters.

Initial case

The conducted analysis focused on a scenario involving exclusively multi-particle final states analogous to νμ charged-current (CC) interactions with no outgoing pions in the detector (CC0π topology), the most typical interaction mode of neutrino experiments like T2K43 or MicroBooNE44, both having the neutrino spectrum mostly below 1 GeV. Each event in our investigation was characterised by an energetic muon and a varying number of protons, ranging from 1 to 5, produced at the interaction vertex and dispersing along distinct directions with different energies. A comprehensive breakdown of the configurations employed in our study is presented in the “Simulated datasets” subsection of the “Methods” section.

The performance of the decomposing transformer is presented in Fig. 2. One of the outcomes of our study is the precision with which kinetic energy (KE) is reconstructed, both on a per-particle basis and in terms of the total KE per event (Fig. 2a). This is particularly evident for high-energy protons, which constitute the predominant contributors to the cumulative charge of the input vertex-activity images. This precision in reconstructing KE plays a pivotal role in accurately characterising the underlying physics in neutrino interactions. In addition, the estimation of the total number of protons within the vertex-activity region demonstrates an accuracy exceeding 70%. However, Fig. 2b shows that most under- and over-reconstructed protons occur in the lowest KE range. This feature can be attributed to protons with low KE contributing negligibly to the total energy loss in the event; those protons also tend not to travel beyond the confines of a single voxel. By adopting a criterion allowing for an error of up to ±1 reconstructed proton, the accuracy of our estimation surpasses 98%, underscoring the effectiveness of our methodology. The reconstruction of the direction in spherical coordinates exhibits a strong dependency on the length of the particle track (Fig. 2c), which is influenced by its KE. As a consequence, the precision of the reconstructed direction significantly improves for longer tracks. Conversely, particles failing to escape the initial voxel present challenges in terms of direction reconstruction due to their confinement within a single voxel. The spatial resolution achieved in vertex reconstruction (Fig. 2d) amounts to approximately 2 mm. This resolution is superior to the intrinsic resolution of the examined detector, which is composed of voxels with a volume of 1 cm\(^3\).
The vertex position resolution underscores the capabilities of our approach in pinpointing the origin of neutrino interactions with high precision.
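The two accuracy figures quoted for the number of reconstructed protons (exact match, and match within ±1) correspond to the same metric evaluated with different tolerances. A minimal sketch, with made-up counts that are not results from this study and a hypothetical helper name `count_accuracy`:

```python
from typing import Sequence


def count_accuracy(true_counts: Sequence[int],
                   reco_counts: Sequence[int],
                   tolerance: int = 0) -> float:
    """Fraction of events whose reconstructed multiplicity is within
    `tolerance` of the true multiplicity."""
    if len(true_counts) != len(reco_counts):
        raise ValueError("both sequences must describe the same events")
    if not true_counts:
        raise ValueError("at least one event is required")
    hits = sum(1 for t, r in zip(true_counts, reco_counts)
               if abs(t - r) <= tolerance)
    return hits / len(true_counts)


# Made-up multiplicities for eight events, for illustration only.
true_counts = [1, 2, 3, 4, 5, 2, 3, 1]
reco_counts = [1, 2, 2, 4, 3, 2, 4, 1]

exact = count_accuracy(true_counts, reco_counts)               # exact match
within_one = count_accuracy(true_counts, reco_counts, 1)       # allow +-1
```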

μ: mean, σ: standard deviation, false positives (negatives): over (under)-reconstructed particles (with default true (reconstructed) values: kinetic energy (KE) = 0 MeV; θ = 90 degrees; ϕ = 180 degrees). a Histogram of the total vs reconstructed KE for each event, including false positives and false negatives; KE resolution per particle relative to true KE and momentum (P) for each fitted particle, excluding false positives and false negatives; distribution of the difference between the reconstructed KE and the true KE per particle for the cases with 1–5 particles per event, including false positives and false negatives. b Confusion matrix showing the recall (normalisation by columns) and precision (normalisation by rows) of the true vs reconstructed number of particles; distribution of the false positives (particles predicted by the algorithm that are not present in the input events) and false negatives (particles not predicted by the algorithm that are present in the input events). c Density plot relating the difference between reconstructed and true ϕ and θ (spherical coordinates) per particle; density plot relating the difference between reconstructed and true angles and the particle length. Both plots include false positives and false negatives. d Scatter plot comparing the true and reconstructed vertex per event for the x, y, and z coordinates; box plot of the 3D Euclidean distance between the reconstructed and true vertices for the cases with 1–5 particles per event.

Leveraging the generator for an enhanced decomposition

The output of our decomposing transformer presents a groundbreaking solution to reconstruct the vertices of neutrino interactions. As described previously, these findings serve as invaluable input for a generator capable of producing images for each reconstructed particle, which allows us to verify the decomposition process by comparing the target input image with the aggregated version of the reconstructed particles, as illustrated in steps f, g, and h of Fig. 1. We can further enhance our reconstruction by deploying a minimisation algorithm seeded by the transformer output. This algorithm leverages the GAN generator to create images for each particle, facilitating the search for combinations of particles (and, thus, their kinematics) that better match the target event. In our implementation, the generator is a fully differentiable generative model (a generative adversarial network), which allows us to perform gradient-descent optimisation on the kinematic properties efficiently using standard deep-learning frameworks; this would otherwise be unfeasible with conventional simulations. The approach is shown in Fig. 3a.
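The idea of refining kinematic parameters by gradient descent through a differentiable generator can be illustrated with a deliberately simple toy. In the sketch below the generator is linear in two kinetic energies, so the gradient of the mean-squared error can be written by hand; with a neural generator, a deep-learning framework would supply this gradient automatically. The templates, starting values, learning rate and function names are all assumptions for illustration and do not reproduce the GAN or the algorithms of this work.

```python
from typing import List, Sequence

# Hypothetical light yield per MeV of two overlapping protons in four voxels.
TEMPLATES = [
    [0.5, 0.3, 0.2, 0.0],
    [0.5, 0.0, 0.2, 0.3],
]


def generate(kes: Sequence[float]) -> List[float]:
    """Toy differentiable generator: composite image, linear in the energies."""
    n_voxels = len(TEMPLATES[0])
    return [sum(ke * t[i] for ke, t in zip(kes, TEMPLATES))
            for i in range(n_voxels)]


def mse(image: Sequence[float], target: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(image, target)) / len(target)


def mse_gradient(kes: Sequence[float], target: Sequence[float]) -> List[float]:
    """Analytic gradient of the MSE with respect to each kinetic energy."""
    residual = [a - b for a, b in zip(generate(kes), target)]
    n_voxels = len(target)
    return [2.0 / n_voxels * sum(r * w for r, w in zip(residual, t))
            for t in TEMPLATES]


def refine(seed: Sequence[float], target: Sequence[float],
           learning_rate: float = 2.0, steps: int = 500) -> List[float]:
    """Plain gradient descent starting from the seed (e.g. a network output)."""
    kes = list(seed)
    for _ in range(steps):
        grad = mse_gradient(kes, target)
        kes = [ke - learning_rate * g for ke, g in zip(kes, grad)]
    return kes


target = generate([80.0, 35.0])   # image of the "true" event
seed = [70.0, 45.0]               # imperfect initial estimate
refined = refine(seed, target)
print(refined, mse(generate(refined), target))
```

Because this toy loss is a convex quadratic, any starting point converges; the benefit of a good seed arises for non-convex losses such as the one produced by a realistic generator.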

a Processing of a target event: The target event, along with its reconstructed muon kinematics, is input to the transformer (depicted in blue). The transformer generates a set of possible kinematic combinations for all particles within the target event. These kinematics are subsequently forwarded to the gradient-descent minimiser (depicted in red), which leverages the generative adversarial network (GAN) to refine the kinematics and improve the correspondence with the target event. The diagram visually represents the different decompositions resulting from this process. b The plot shows two scenarios in a likelihood space (pre-computed for the kinetic energy of the two most energetic protons of an arbitrary event: KE\(_{\mathrm{proton}_{1}}\) and KE\(_{\mathrm{proton}_{2}}\)). One starts from the transformer output and successfully reaches the target values, while the other begins at a random parameter space point and gets stuck in a local minimum. c Profiled negative log-likelihood \(\mathcal{L}\) for the kinetic energy of the most energetic proton (KE\(_{\mathrm{proton}_{1}}\)) of an arbitrary event; the curve shows the 68% confidence interval determined by a \(\Delta \mathcal{L}\) of 1 for one degree of freedom. d The resolution of kinetic energy (KE), as determined through an analysis of sets of random and hard events (i.e., events where the image reconstructed from the transformer exceeded a predefined mean-squared-error threshold in comparison to the target image), was assessed for three distinct methodologies: the transformer and two gradient-descent techniques (“GAN (gr. descent 1)” and “GAN (gr. descent)”, as per Algorithms 1 and 2, respectively, from the “Gradient-descent minimisation of the image parameters” subsection of the “Methods” section). It illustrates the effectiveness of GAN-based minimisation in refining the kinematic parameters.

In our study, we have devised two distinct variants of the gradient-descent optimisation approach applied to particle kinematics for a specific target event, where the output of the transformer is utilised as the input initial kinematic parameters to optimise. These variants are contingent upon treating the stochastic component of the generative model, with the model having learnt to correlate noise seeds with the random fluctuations observed in the training dataset. The initial variant aims to identify the optimal set of kinematic parameters that closely align with the target image while simultaneously determining the most suitable random input for the GAN generator. This selection process involves running n independent gradient-descent runs, each minimising a loss, typically represented as the mean-squared error between the generated and target images. Each run employs a distinct input noise seed, and the run that results in the lowest loss is chosen to determine the kinematic parameters, thereby achieving the best fit with the target image. In contrast, the second variant seeks to strike a balance by disregarding the best random input and prioritising the ability to provide precise error metrics for subsequent physical analyses. In this variant, a single gradient-descent run is conducted, and n GAN-generated images are averaged and compared to the target image in each iteration to minimise a log-likelihood. The technical details of both variants are described in the “Gradient-descent minimisation of the image parameters” subsection of the “Methods” section.
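The difference between the two variants can be sketched with a toy stochastic generator whose fluctuations are fixed by a noise seed. The first function runs one descent per seed and keeps the run with the lowest loss; the second runs a single descent in which the images generated for all seeds are averaged at every iteration. Everything here is an illustrative assumption: the template, the bounded ±5% fluctuation, the finite-difference gradient (a stand-in for automatic differentiation) and the use of a mean-squared error in both variants, whereas the text describes a log-likelihood for the second one.

```python
import random
from typing import List, Sequence, Tuple

TEMPLATE = [0.5, 0.3, 0.2, 0.1]  # hypothetical light yield per MeV in 4 voxels


def generate(ke: float, seed: int) -> List[float]:
    """Toy stochastic generator: the seed fixes a bounded +-5% fluctuation."""
    rng = random.Random(seed)
    return [ke * w * (1.0 + 0.05 * rng.uniform(-1.0, 1.0)) for w in TEMPLATE]


def loss(ke: float, target: Sequence[float], seeds: Sequence[int]) -> float:
    """MSE between the target and the average image over the given seeds."""
    images = [generate(ke, s) for s in seeds]
    mean_image = [sum(column) / len(seeds) for column in zip(*images)]
    return sum((m - t) ** 2 for m, t in zip(mean_image, target)) / len(target)


def descend(ke0: float, target: Sequence[float], seeds: Sequence[int],
            learning_rate: float = 2.0, steps: int = 200,
            h: float = 1e-3) -> Tuple[float, float]:
    """Gradient descent on the kinetic energy; returns (energy, final loss)."""
    ke = ke0
    for _ in range(steps):
        # Central finite difference used here in place of autodiff.
        grad = (loss(ke + h, target, seeds) - loss(ke - h, target, seeds)) / (2.0 * h)
        ke -= learning_rate * grad
    return ke, loss(ke, target, seeds)


def variant_one(ke0: float, target: Sequence[float],
                seeds: Sequence[int]) -> Tuple[float, float]:
    """n independent runs, one noise seed each; keep the lowest-loss run."""
    runs = [descend(ke0, target, [s]) for s in seeds]
    return min(runs, key=lambda run: run[1])


def variant_two(ke0: float, target: Sequence[float],
                seeds: Sequence[int]) -> Tuple[float, float]:
    """Single run; each iteration averages the n generated images."""
    return descend(ke0, target, seeds)


target = generate(60.0, seed=123)   # one noisy realisation of a 60 MeV particle
seeds = list(range(5))
best_ke, best_loss = variant_one(50.0, target, seeds)
avg_ke, avg_loss = variant_two(50.0, target, seeds)
```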

As depicted in Fig. 3b, using the transformer output as the starting point for the gradient-descent process is clearly beneficial. This choice prevents the algorithm from becoming stuck in local minima, thus obviating the need for a more intricate learning-rate scheduling strategy that may not necessarily yield better outcomes. In addition, the second variant allows for the profiling of the chosen likelihood and the computation of confidence intervals for individual kinematic parameters while treating the other parameters as nuisances, as visualised in Fig. 3c. This step is pivotal in quantifying uncertainties, which, in turn, facilitates the effective incorporation of our findings into extended data analyses. Furthermore, the additional refinement of the kinematic parameters measurably improves their accuracy, as demonstrated in Fig. 3d, where both gradient-descent variants consistently outperform the results achieved by the transformer model, particularly in cases where the image reconstructed from the transformer output deviates significantly from the target.
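Extracting a confidence interval from a profiled negative log-likelihood amounts to finding where the curve rises by a fixed amount above its minimum. The sketch below assumes the profile has already been computed on a grid (that is, the nuisance parameters have been minimised away at each scan point) and locates the crossings by linear interpolation. The threshold is left as an explicit argument because its value depends on whether \(\mathcal{L}\) is defined as \(-\ln L\) or \(-2\ln L\); the parabolic scan and the function name are hypothetical.

```python
from typing import List, Sequence, Tuple


def confidence_interval(values: Sequence[float], nll: Sequence[float],
                        delta: float) -> Tuple[float, float, float]:
    """Return (lower edge, best-fit value, upper edge) of the region where
    the profiled curve stays within `delta` of its minimum."""
    best = min(range(len(nll)), key=lambda i: nll[i])
    threshold = nll[best] + delta

    def edge(step: int) -> float:
        i = best
        # Walk away from the minimum while the curve is below the threshold.
        while 0 <= i + step < len(nll) and nll[i + step] <= threshold:
            i += step
        j = i + step
        if j < 0 or j >= len(nll):
            return values[i]  # the scan ended before the curve crossed
        # Linear interpolation between the last point inside and the first outside.
        fraction = (threshold - nll[i]) / (nll[j] - nll[i])
        return values[i] + fraction * (values[j] - values[i])

    return edge(-1), values[best], edge(+1)


# Hypothetical parabolic profile centred at 80 MeV with a width of 4 MeV.
scan: List[float] = [60.0 + 0.5 * k for k in range(81)]
profile: List[float] = [((ke - 80.0) / 4.0) ** 2 for ke in scan]
lower, best_fit, upper = confidence_interval(scan, profile, delta=1.0)
```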

Nuclear clusters

The most complex scenario we investigated in our study pertains to the presence of nuclear clusters, as discussed in refs. 25,26. These clusters, composed explicitly of deuterium and tritium nuclei in our studies, in addition to the concurrent existence of muons and protons within our vertex activity, present a formidable challenge. Their composite nature introduces inherent complexities into the process of decomposition for several distinct reasons. One primary challenge lies in the intrinsic ambiguities that they introduce, which makes distinguishing these composite nuclear clusters from combinations of independent protons a particularly intricate endeavour. In fact, in certain instances, it becomes practically unfeasible to achieve such differentiation. In this scenario, the decomposing transformer model is confronted with the task of not only accurately inferring their kinematic properties but also deciphering the specific particle types. The interplay of these various components adds layers of complexity to the decompositional process, making it a crucial and challenging area of investigation within our research.

We revisited our original analysis, wherein each event comprised a muon, a variable number of protons ranging from 0 to 4, and added the possibility of including 0 to 1 deuterium and 0 to 1 tritium nuclei. We maintain the criterion that each event must include at least one particle in addition to the muon and not exceed a maximum total of seven particles.

In Fig. 4, we reproduce the same plots as presented previously, but now focusing on the case involving nuclear clusters. The reconstruction of kinetic energy (Fig. 4a) appears to be minimally affected, with any slight degradation in performance attributable to the increased complexity of events in this scenario. We observe a discernible reduction of nearly 10% in the accuracy of particle number estimation (Fig. 4b), with most under- and over-reconstructed protons concentrated within the lowest kinetic energy range as before. The decline in performance can be readily attributed to the increased number of particles in these events, aggravated by the inherent complexity of the nuclear clusters. Nevertheless, if we adopt a criterion allowing for an error of up to ±1 reconstructed particles as before, our estimation accuracy exceeds 96%. Similar observations can be made regarding the reconstruction of the vertex position (Fig. 4d). The inclusion of additional particles, rather than facilitating the reconstruction, results in the emergence of a small energy cluster around the interaction vertex, moderately complicating the precise determination of its location. Additionally, the reconstruction of the direction of the added nuclear clusters proves to be a challenging task, as evidenced by the accumulation of incorrectly reconstructed directions within lower energy ranges (Fig. 4c). The particle identification output exhibits strong performance, as evidenced in Fig. 4e. The network displays a notable level of accuracy, with a correct identification rate exceeding 78% for protons. Identification errors are infrequent when the output probability exceeds 0.8, stressing the robustness of the method. On the contrary, difficulties arise when differentiating nuclear clusters from short protons despite successfully identifying over half of the nuclear clusters. 
As expected, distinguishing nuclear clusters from short protons leads to significant ambiguities in the identification process.

μ: mean, σ: standard deviation, false positives (negatives): over (under)-reconstructed particles (with default true (reconstructed) values: kinetic energy (KE) = 0 MeV; θ = 90 degrees; ϕ = 180 degrees). a Histogram of the total vs reconstructed KE for each event, including false positives and false negatives; KE resolution per particle relative to true KE and momentum (P), excluding false positives and false negatives; distribution of the difference between the reconstructed KE and the true KE per particle for the cases with 1–6 particles per event, including false positives and false negatives. b Confusion matrix showing the recall (normalisation by columns) and precision (normalisation by rows) of the true vs reconstructed number of particles; distribution of the false positives (particles predicted by the algorithm that are not present in the input events) and false negatives (particles not predicted by the algorithm that are present in the input events). c Density plot relating the difference between reconstructed and true ϕ and θ (spherical coordinates) per particle; density plot relating the difference between reconstructed and true angles and the particle length. Both plots include false positives and false negatives. d Scatter plot comparing the true and reconstructed vertex per event for the x, y, and z coordinates; box plot of the 3D Euclidean distance between the reconstructed and true vertices for the cases with 1–6 particles per event. e Confusion matrix showing the recall (normalisation by columns) and precision (normalisation by rows) of the particle identification; particle-identification probability distributions when the true particle is a proton; particle-identification probability distributions when the true particle is a nuclear cluster (either deuterium or tritium).

In summary, the decompositional performance remains impressive. This approach can be regarded not only as a proof-of-concept but also as a potent method, demonstrating its potential for successful analyses when appropriately trained with the correct assumptions.

Comparison with standard method

The vertex-activity (VA) measurements reported in literature predominantly suffer from model dependence, meaning that an arbitrary choice of a specific neutrino interaction model is used to unfold the truth energy loss of VA hadrons, which typically simplifies to the following formula for the visible energy (VisE) of the VA, considering a single proton responsible for all VisE:

where:

- \(C_{B}\): Birks coefficient, equal to 0.0126 cm MeV\(^{-1}\).
- \(E_{\mathrm{cali}}\): the ratio \(\frac{E_{\mathrm{loss}}[\mathrm{p.e.}]}{c_{\mathrm{cali}}}\), where \(E_{\mathrm{loss}}[\mathrm{p.e.}]\) is the total deposited energy (in photoelectrons), and \(c_{\mathrm{cali}}\) is a calibration factor equal to 100 p.e. MeV\(^{-1}\).
- \(\Delta X\): approximate length of the longest proton, in millimetres.
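As a rough illustration of how the quantities listed above can enter such an unfolding, the sketch below inverts Birks' law under the assumption that a single proton loses its energy uniformly over ΔX. The resulting closed form, \(E = E_{\mathrm{cali}} / (1 - C_{B} E_{\mathrm{cali}} / \Delta X)\), is an assumption made here for illustration and is not necessarily the exact expression used by the standard method. The function names are hypothetical, and ΔX is converted from millimetres to centimetres to match the units of the Birks coefficient.

```python
C_BIRKS_CM_PER_MEV = 0.0126   # Birks coefficient quoted in the text
C_CALI_PE_PER_MEV = 100.0     # calibration factor quoted in the text


def calibrated_energy(e_loss_pe: float) -> float:
    """Convert a light yield in photoelectrons into a calibrated energy in MeV."""
    return e_loss_pe / C_CALI_PE_PER_MEV


def quench(e_true_mev: float, delta_x_mm: float) -> float:
    """Toy forward model: light yield (p.e.) of one proton with uniform dE/dx,
    attenuated according to Birks' law."""
    de_dx = e_true_mev / (delta_x_mm / 10.0)          # MeV per cm
    return C_CALI_PE_PER_MEV * e_true_mev / (1.0 + C_BIRKS_CM_PER_MEV * de_dx)


def unfold_single_proton(e_loss_pe: float, delta_x_mm: float) -> float:
    """Invert the toy forward model: recover the energy (MeV) from the light
    yield, attributing all of it to a single proton of length delta_x_mm."""
    delta_x_cm = delta_x_mm / 10.0
    e_cali = calibrated_energy(e_loss_pe)
    denominator = 1.0 - C_BIRKS_CM_PER_MEV * e_cali / delta_x_cm
    if denominator <= 0.0:
        raise ValueError("light yield too large for the assumed track length")
    return e_cali / denominator


light = quench(50.0, 20.0)                 # 50 MeV proton over a 20 mm track
recovered = unfold_single_proton(light, 20.0)
```

The correction depends on the assumed track length and on attributing all light to one proton, which is why the unfolded energy changes with the hypothesised number, type and energy of the particles.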

While widely adopted, this conventional method introduces systematic uncertainties due to its arbitrary assumptions29,30,31,32. These uncertainties arise from the fact that scintillation light produced by ionising charged particles is affected by Birks’ quenching27,28, which makes the reconstructed VisE vary as a function of the hypothesised number, type and kinetic energy of final-state particles.

To ensure the reliability of our approach, we tested it on neutrino interactions generated by the NEUT generator45. These events are statistically distinct from the dataset used to train our method, which was crafted to capture the full spectrum of neutrino interaction configurations. Results are presented in Table 1, which compares the reconstructed VisE for the standard method (Eq. (2)) and the proposed alternatives (transformer and transformer + GAN); for the latter, the VisE is calculated by summing the predicted particle kinetic energies. The table shows a pronounced improvement from the proposed models in every scenario, which grows with increasing proton multiplicity. In particular, we obtain absolute improvements in VisE resolution of up to ~12% in the VA region, and ~4% for entire events.

Discussion

The results presented in this paper mark a significant advancement in the field of computer vision and its applications in the domain of particle physics. The successful implementation of deep-learning techniques for the decomposition of overlapping-sparse images not only showcases the potential of artificial intelligence in resolving intricate visual content but also opens up new exciting possibilities for addressing complex challenges. By introducing the decomposing transformer, an architecture initially hailing from natural language processing and underpinned by a self-supervised training scheme, this work represents a breakthrough in the analysis and manipulation of multi-dimensional overlapping-sparse images, a problem that has been traditionally problematic for conventional image processing methods.

Employing transformers for sequential tasks is a well-established practice. However, exploiting the iterative nature of transformers to decompose sparse images of overlapping elements introduces a more intricate spatial relationship challenge. We hypothesise that within this iterative process, the transformer internally subtracts the predicted individual components of the image. By reconstructing images based on their most representative features in a certain order (based on the kinetic energy in the neutrino-interaction case), the system prioritises the elements of the image that contribute most significantly to the overall structure. This approach represents a fundamental shift in image decomposition strategies, which often treat all image components equally, regardless of their significance46,47. This unique ordering strategy presents several distinct advantages. It closely mirrors the physical reality of various scenarios where specific components dominate the observed event. By prioritising the reconstruction of these influential components, the system efficiently captures elements of the highest interest, a characteristic that holds true in particle physics and many other computer vision applications. Focusing on pivotal components enables the swift creation of a coherent and informative representation of the scene, significantly elevating the efficiency and accuracy of the overall decomposition process.

Introducing a generative model such as a generative adversarial network (GAN) as the image generator represents a significant advancement in integrating computer vision and deep-learning techniques into this problem domain. GANs have demonstrated proficiency in creating highly realistic and detailed images, making them an ideal choice for generating independent image components based on the information extracted by the transformer. This innovation not only validates the effectiveness of the transformer output but also unveils exciting opportunities for further optimising the reconstructed kinematics. The synergy between the transformer and the GAN, facilitated by the gradient-descent minimisation algorithm, leverages the full differentiability of the GAN. The resultant composite reconstructed image undergoes a rigorous comparison with the original image, primarily focusing on minimising target metrics, such as loss or likelihood functions. This feedback loop, where the system iteratively enhances its decomposition, establishes a mechanism for continuous refinement, enabling greater precision in reconstructing complex and overlapping images. It offers an innovative perspective on the challenges posed by overlapping images, and it can potentially find applications in various fields where image decomposition and reconstruction play pivotal roles, such as medical imaging or environmental monitoring. Additionally, this method has the potential to be applied in mitigating the pile-up of overlapping tracks in particle or nuclear physics, providing a broader spectrum of applications.

One of the most compelling aspects of this research is its direct applicability in the study of neutrino interactions in long-baseline flavour-oscillation experiments. Neutrinos play a fundamental role in our understanding of particle physics and the cosmos at large48,49. The proposed approach offers an alternative perspective on comprehending and extracting valuable information on the processes occurring at the vertex. It addresses the challenge of identifying and measuring independent particles within this complex environment, where multiple tracks overlap. The implications of this advancement are profound, as it provides a means to delve deeper into the characteristics and properties of these particles, ultimately enabling a better understanding of the incoming neutrino kinematics. In the realm of neutrino physics, where accurate measurement of kinetic energy, direction, and particle counts is essential, the deep learning-based image decomposition approach offers a transformative opportunity. The high resolution achieved in particle kinetic energy is particularly noteworthy. This level of precision has the potential to significantly enhance our ability to explore oscillations50,51,52. By providing a clearer and more detailed view of the interaction vertex, this work lays the foundation for improved accuracy in neutrino-related measurements. Finally, the results presented in the “Comparison with standard method” subsection of the “Results” section, evaluated on a statistically independent dataset of neutrino interactions under a selected nuclear model, underscore excellent generalisation performance of the method for the selected case study. Besides, it proves the impact of our deep-learning approach on future high-precision long-baseline experiments, with the capacity to reduce associated systematic errors, avoid model dependence, and improve the neutrino energy resolution, which directly influences the sensitivity towards potentially discovering leptonic charge-parity violation and measuring neutrino oscillation parameters25.

Integrating a generative model of individual particles that can be fine-tuned on detector data (e.g., charged-particle beam tests) represents an exciting avenue for further enhancement of image decomposition. It is worth noting that the intrinsic differentiability of the generator exhibits an atypical characteristic within the realm of data analyses in particle physics, which is currently a thriving area of research39,53,54,55. This property facilitates the construction of a statistical likelihood, permitting the calculation of systematics and precise quantitative measurements. Thus, this approach not only enables improved accuracy in the reconstruction process but also allows for the computation and propagation of errors to downstream analysis methods. It is an example of how interdisciplinary approaches that combine computer vision and particle physics can mutually benefit from each other. As a result, the implications of this work extend beyond the confines of both fields, offering a synergistic approach between particle physics and computer vision.

In conclusion, our paper exhibits the potential of deep learning and computer vision techniques in advancing our understanding of particle physics experiments. The ability to address overlapping-sparse images in the context of neutrino physics holds the promise of not only enhancing our knowledge of fundamental particles but also pushing the boundaries of computer vision research, offering a powerful tool for image decomposition in various applications. As future work, we aim to analyse different network architectures more comprehensively and evaluate the system’s resilience to adversarial attacks for improved robustness.

Methods

Simulated datasets

Simulated datasets are generated within a cubic uniform plastic scintillator detector comprising a grid of 9 × 9 × 9 identical voxels, each possessing a volume of 1 cm³. The coordinates x, y, and z, as well as the angles phi and theta, are discussed with respect to the standard spherical coordinate system, where the centre of the volume is located at (0, 0, 0), and phi is the azimuthal angle, measured in the plane orthogonal to the z-axis. The simulated detector bears a resemblance to 3D imaging detectors such as fine-grained plastic scintillators or liquid argon time projection chambers (LArTPCs), common in long-baseline neutrino experiments56,57,58. Particles are uniformly generated within the central voxel, with their initial directions isotropic and initial kinetic energies uniformly distributed. The simulation process consists of three consecutive steps:

1. Energy loss simulation: employing the Geant4 toolkit59,60,61 to model particle propagation within the detector and compute the local energy loss along the particle trajectory. The energy loss calculation accounts for Birks’ quenching effect, with a fixed correction coefficient of 0.126 mm MeV⁻¹ applied uniformly to all charged particles.

2. Detector response simulation: transforming data obtained in the preceding step into “signal”, specifically scintillation light, that the instrument can detect. We implement a conversion factor to directly scale the energy loss from its physical unit (MeV) to a signal unit (photon electron, p.e.). The chosen scaling factor is set at 100 p.e. MeV⁻¹. Additionally, we incorporate a detector effect in our simulation, known as crosstalk, which accounts for light leakage into neighbouring voxels. The leakage fraction per face is established at 3%.

3. Event Summary: gathering the simulated photon electron signals within each voxel and compiling event information into a format compatible with neural network processing.
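The first two steps can be illustrated with a short sketch. It is not the simulation code used for the datasets: it assumes the textbook form of Birks’ law applied per step, assumes that the 3% leaked through each face is removed from the source voxel, and assumes that light leaking outside the 9 × 9 × 9 grid is lost. The function names and the example deposit are hypothetical.

```python
# Constants quoted in the simulation steps above.
BIRKS_KB_MM_PER_MEV = 0.126   # Birks' correction coefficient
PE_PER_MEV = 100.0            # energy-to-signal scaling factor
CROSSTALK_PER_FACE = 0.03     # light-leakage fraction per voxel face
GRID = 9                      # detector is 9 x 9 x 9 voxels

FACES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def quenched_energy(e_dep_mev, step_mm):
    """Birks-quenched energy of one step: E / (1 + kB * dE/dx) (assumed form)."""
    de_dx = e_dep_mev / step_mm
    return e_dep_mev / (1.0 + BIRKS_KB_MM_PER_MEV * de_dx)


def apply_crosstalk(signal):
    """Move 3% of each voxel's light into each of its six face neighbours.

    `signal` maps (ix, iy, iz) -> p.e. Light leaving the grid is dropped,
    which is an assumption of this sketch.
    """
    out = {}
    for (x, y, z), pe in signal.items():
        kept = pe * (1.0 - len(FACES) * CROSSTALK_PER_FACE)
        out[(x, y, z)] = out.get((x, y, z), 0.0) + kept
        for dx, dy, dz in FACES:
            neighbour = (x + dx, y + dy, z + dz)
            if all(0 <= c < GRID for c in neighbour):
                out[neighbour] = out.get(neighbour, 0.0) + pe * CROSSTALK_PER_FACE
    return out


def detector_response(steps):
    """Convert (voxel, e_dep_mev, step_mm) steps into a voxel -> p.e. map."""
    signal = {}
    for voxel, e_dep_mev, step_mm in steps:
        pe = PE_PER_MEV * quenched_energy(e_dep_mev, step_mm)
        signal[voxel] = signal.get(voxel, 0.0) + pe
    return apply_crosstalk(signal)


# Hypothetical example: one 2 MeV deposit over a 10 mm step in the central voxel.
event = detector_response([((4, 4, 4), 2.0, 10.0)])
```

In this example the central voxel keeps 82% of the quenched signal and each of its six neighbours receives 3%.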

The simulated particles include four distinct types: muons (μ−), protons, ionised deuterium nuclei (D+), and tritium nuclei (T+). A total of five million events represent each type. The initial kinetic energies for these particles are determined based on the CC0π vertex-activity signals we anticipate observing: muons exhibit kinetic energies ranging from 300 to 1000 MeV; protons exhibit kinetic energies between 5 and 60 MeV, with the majority coming to a halt within one or two voxels, thereby contributing to an energy concentration in the vicinity of the vertex; ionised deuterium and tritium nuclei possess kinetic energies between 10 and 60 MeV, resulting in a more pronounced energy concentration in the vertex region due to their higher energy loss (dE/dx).

To replicate CC0π neutrino interaction events, the simulated particles are organised into two distinct event types during the training process, contingent upon the specific scenario under investigation:

- Scenario 1 (“Initial case” subsection of the “Results” section): Each event consists of a single muon, accompanied by an arbitrary number of protons, with the number of protons being randomly selected from 1 to 5.

- Scenario 2 (“Nuclear clusters” subsection of the “Results” section): This dataset includes one muon, an arbitrary count of protons (ranging from 0 to 4), and an arbitrary number of ionised deuterium (ranging from 0 to 1) and tritium (ranging from 0 to 1) nuclei.

To group particles by starting position, the central voxel was virtually divided into a grid of 125,000 smaller sub-voxels, each with dimensions of 0.2 × 0.2 × 0.2 mm³. We consider two particles to start from the same position if their starting points fall within the same virtual sub-voxel. Consequently, each dataset contains approximately 40 particles originating from each sub-voxel. To form events, we randomly select particles starting from the same position, sum their voxel charges, and introduce a random shift of ±1 voxel in every direction to the entire event. This random shifting enables the algorithm to learn that the interaction vertex position can be located anywhere within the central 3 × 3 × 3 voxels of the original 9 × 9 × 9 input volume (assuming a pessimistic prior vertex reconstruction for modern detectors to ensure the robustness of the algorithm). The outer surface of the final volume is omitted to prevent incomplete events due to the shifting, resulting in a final event shape of 7 × 7 × 7 voxels. We choose a single simulated event per sub-voxel for the validation and test sets, resulting in a fixed number of 125K validation and 125K testing events. As an example, for the proton case, the remaining approximately 37 protons per sub-voxel are allocated for training, yielding ~510K combinations per sub-voxel (combinations of 1–5 protons without repetition or order from a group of 37) and a total of ~63 billion (for all the 125K sub-voxels) possible combinations of training events.
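The counting in this paragraph can be checked with a few lines of arithmetic. The sketch below assumes a group of 37 training protons per sub-voxel, which is the group size that reproduces the quoted ~510K combinations; the variable names are illustrative only.

```python
from math import comb

# Sub-voxel grid: a 10 mm central voxel split into 0.2 mm cells.
cells_per_axis = round(10.0 / 0.2)              # 50
n_sub_voxels = cells_per_axis ** 3              # 125,000

# Five million simulated particles of each type.
particles_per_sub_voxel = 5_000_000 / n_sub_voxels   # about 40

# Unordered selections of 1 to 5 protons, without repetition,
# from an assumed group of 37 training protons.
training_protons = 37
combos_per_sub_voxel = sum(comb(training_protons, k) for k in range(1, 6))
total_combos = combos_per_sub_voxel * n_sub_voxels

print(n_sub_voxels, particles_per_sub_voxel)
print(combos_per_sub_voxel, total_combos)
```

The per-sub-voxel count is 510,415 and the total is 63,801,875,000, in line with the ~510K and ~63 billion quoted above.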

The dataset utilised in the “Comparison with standard method” subsection of the “Results” section was generated using the NEUT neutrino generator, version 5.7.0 (ref. 45), with the following neutrino parameters: muon neutrinos only, initial energy uniformly distributed between 0 and 1.0 GeV, initial position uniformly distributed inside the central cube, and initial direction along the z-axis (in our detector reference system). The simulated interaction topology is CC0π (i.e., with one muon and no pions). To ensure a fair comparison to the events used for training, only events with proton energies ranging from 5 to 60 MeV and no neutrons or photons are included in this study.

Transformer for sparse-image decomposition

The objective of the decomposing algorithm is to take an image as input and output global features (i.e., the 3D position of the interaction vertex in our case study), along with specific properties of each constituent component in the image. In our neutrino-interaction case, those properties are (per particle): initial kinetic energy, initial direction specified in spherical coordinates (θ and ϕ), and particle type (optional if all particles share the same type).

The neural network architecture employed for the decomposing algorithm is a transformer model36 (illustrated in Fig. 5a). The transformer has a hidden size of 192, 5 encoder layers, 5 decoder layers, and 16 attention heads. The total number of trainable parameters of the model is 10,393,165. We selected Lamb62 as the optimiser, with β1 = 0.9, β2 = 0.999, and a weight decay of 0.01. The effective batch size was 2048, achieved by accumulating gradients over an actual batch size of 512 with an accumulation factor of 4. The hyperparameters were chosen via a grid search. To facilitate proper convergence of the model, a learning-rate warm-up strategy was employed: during the first 20 epochs, the learning rate gradually increased until it reached an upper limit of 0.002. Subsequently, the learning rate was reduced using a cosine-annealing schedule with warm restarts. The lower limit of the learning rate was set to 1000 times smaller than the upper limit. The number of epochs before the first restart was set to 400, and this number was multiplied by a factor of 2 after each restart. In addition, at each warm restart, the upper limit of the learning rate was multiplied by a decay factor of 0.9. The training and validation curves illustrating the model performance are shown in Fig. 5b. The same architecture was used to obtain the results presented in the “Initial case” and “Nuclear clusters” subsections of the “Results” section. The training was carried out on an NVIDIA V100 GPU, with Python 3.10.12, PyTorch 2.0.0 (ref. 63), and PyTorch Lightning (ref. 64) used for the implementation.
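The learning-rate schedule described above can be written as a single function of the epoch index. This is a minimal sketch rather than the training code: it assumes a linear warm-up (the text only states that the rate increases gradually), a lower limit fixed at 1/1000 of the initial upper limit, and a 0-based epoch counter. The function and constant names are hypothetical.

```python
import math

LR_MAX = 0.002          # upper limit reached at the end of warm-up
LR_MIN = LR_MAX / 1000  # lower limit, assumed fixed across restarts
WARMUP_EPOCHS = 20
FIRST_CYCLE = 400       # epochs before the first warm restart
CYCLE_MULT = 2          # cycle length multiplier after each restart
RESTART_DECAY = 0.9     # upper-limit decay applied at each restart


def learning_rate(epoch):
    """Learning rate for a 0-based epoch index."""
    if epoch < WARMUP_EPOCHS:
        # Linear ramp: an assumption of this sketch.
        return LR_MAX * (epoch + 1) / WARMUP_EPOCHS

    # Locate the current cosine cycle and the position inside it.
    t = epoch - WARMUP_EPOCHS
    cycle_len, peak = FIRST_CYCLE, LR_MAX
    while t >= cycle_len:
        t -= cycle_len
        cycle_len *= CYCLE_MULT
        peak *= RESTART_DECAY

    cosine = 0.5 * (1.0 + math.cos(math.pi * t / cycle_len))
    return LR_MIN + (peak - LR_MIN) * cosine


# Rates at the end of warm-up, just before and just after the first restart.
schedule_samples = [learning_rate(e) for e in (19, 419, 420)]
```

Under these assumptions the first restart happens at epoch 420, where the rate jumps back up to 0.9 × 0.002 = 0.0018, and the second restart follows 800 epochs later.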

a Left to right: at training time, a variable number of particles with similar (within ~0.2 mm) initial positions are selected from the dataset and combined to create an event, and multiple events form a training batch. The input voxel data (comprising energy loss and spatial coordinates) is subsequently processed and passed through the decomposing transformer. This transformer initially generates a prediction for vertex positions and subsequently provides estimates for kinematic parameters and termination conditions for each particle in the events. The parentheses indicate the tensor dimensions at each stage. b Training and validation curves for the different outputs of the network, showing a smooth convergence of the model (this plot corresponds to the model from the “Initial case” subsection of the “Results” section; similar curves are obtained for the model from the “Nuclear clusters” subsection of the “Results” section). The learning rate schedule is shown by the dashed purple lines.

Generative adversarial network for fast elementary-particle simulations

To assess the performance of the vertex-activity fitting, one could generate candidate images on the fly using the transformer predictions as input (which can be extremely time-consuming due to the complexity of the simulation software) or use a pre-generated library (e.g., the training dataset) and, for each particle predicted by the fitting method, find the closest match in terms of kinematics from the library. However, this latter process might also be very time-consuming, especially when dealing with a large library. The purpose of needing a sizeable library is to ensure a correct validation of the method by sampling the entire distribution. In this article, an alternative approach is adopted, leveraging a generative model to replicate the simulation process. Once trained, the generative model efficiently produces particle images based on the provided kinematics as input, eliminating the need for time-consuming library searches. This approach enables rapid generation of particle images, bypassing the conventional procedure of matching particles with a pre-generated library.

The chosen generative model in this study is a generative adversarial network (GAN)42, specifically a conditional GAN (cGAN)65 integrated into the Wasserstein GAN with Gradient Penalty (WGAN-GP) framework66,67. This model is conditioned on input kinematic parameters and a Gaussian noise vector to generate particle images, allowing for controlled and realistic image synthesis. WGAN-GP addresses some of the limitations of standard GANs, enhancing their stability and convergence. It employs the Wasserstein distance (also known as Earth Mover’s distance) as the loss function and enforces a constraint such that the gradients of the critic’s output with respect to the inputs have a unit norm (gradient penalty). The implementation of WGAN-GP utilises a transformer encoder as the architecture for both the generator and the critic (shown in Fig. 6a), each with 2 encoder layers, 8 attention heads, and a hidden size of 64. The generator takes the kinematics as input to generate particle images, while the critic assesses the quality of the generated images. The generator and the critic each have 141,569 trainable parameters. The RMSprop optimiser68 is employed for both the generator and the critic, with a batch size of 32 and a learning rate of 5 × 10⁻⁴ in both cases. The critic is updated 5 times for every update of the generator. Figure 6b presents the training curves and the validation metric. Again, the training process utilised an NVIDIA V100 GPU, with Python 3.10.12, PyTorch 2.0.0 (ref. 63), and PyTorch Lightning (ref. 64) used for the implementation.
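For reference, the WGAN-GP objectives in their usual conditional form can be written as below. The notation is an assumption made for illustration: \(D\) is the critic, \(G\) the generator, \(c\) the kinematic parameters, \(z\) the Gaussian noise vector, \(x\) a simulated image, and \(\lambda\) the gradient-penalty weight, whose value is not stated in this section. How the conditioning enters the critic in the actual implementation is likewise assumed.

```latex
\[
\begin{aligned}
\tilde{x} &= G(z \mid c), \qquad
\hat{x} = \epsilon\, x + (1-\epsilon)\, \tilde{x}, \qquad \epsilon \sim U[0,1], \\
L_{\mathrm{critic}} &= \mathbb{E}\big[D(\tilde{x} \mid c)\big]
  - \mathbb{E}\big[D(x \mid c)\big]
  + \lambda\, \mathbb{E}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x} \mid c) \rVert_{2} - 1\big)^{2}\Big], \\
L_{\mathrm{gen}} &= -\,\mathbb{E}\big[D(\tilde{x} \mid c)\big].
\end{aligned}
\]
```

The first two terms of \(L_{\mathrm{critic}}\) estimate the Wasserstein distance between generated and simulated images, and the last term is the gradient penalty that pushes the critic's gradient norm towards one at the interpolated points \(\hat{x}\).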

a At training time, candidate kinematics are combined with random noise vectors and passed to the generator (highlighted in red), which transforms them into a synthetic image. The task of the discriminator (depicted in green) is to distinguish between true and synthetic images. The diagram illustrates the loss functions minimised during the training. b Training and validation curves display the performance of the different components within the GAN used for proton generation (similar curves are observed for other particles). The purple area denotes the average overlap between the GAN-generated and simulated distributions for five randomly selected parameter sets, each consisting of 10,000 real and synthetic events. On the right-hand side, we illustrate the true and GAN-generated distributions, highlighting their overlap for a randomly selected parameter set obtained from an arbitrary model checkpoint. It serves as an indicator of the overall performance of the GAN, with larger values indicating superior performance.

Gradient-descent minimisation of the image parameters

The first variant runs n independent gradient-descent minimisations, each with a unique, predetermined noise seed for the GAN. The goal is to identify the optimised parameters that yield the reconstructed image most closely resembling the target image, using the mean-squared error between the two images as the loss function. The procedure is given in Algorithm 1.

Algorithm 1

Parameter optimisation via gradient descent for best-fit image

Algorithm 2

Parameter optimisation via gradient descent for likelihood inference

The second variant has a different objective. Here, the aim is not to identify the specific parameters and noise seed that generate the image most closely resembling the target image. Instead, the focus is on mitigating the inherent stochasticity of the method, enabling the calculation of uncertainties and the use of the results in subsequent physics analyses. This second variant (Algorithm 2) resembles the first, with some distinctions. A single gradient-descent optimisation is applied to the parameters. In each iteration, however, n independent reconstructed events are generated using the GAN, and these events are averaged to create a single template image. This template image serves as the basis for minimising a likelihood function \(\mathcal{L}\). The likelihood \(\mathcal{L}\) is computed as a product of the Poisson likelihood ratios for the energy loss in each voxel, comparing the template (expected) and target (observed) images. This likelihood formulation quantifies the goodness of fit between the two images (inspired by Baker et al.69,70):

where obs is the observation (target image, presented as a flattened 1D vector), exp the expectation (template, also as a flattened 1D vector), and N denotes the total number of voxels in the image.
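A minimal sketch of such a statistic is given below, assuming the standard Baker and Cousins Poisson likelihood-ratio form, \(-2\ln\lambda = 2\sum_{i=1}^{N}\left[\mathrm{exp}_i - \mathrm{obs}_i + \mathrm{obs}_i \ln(\mathrm{obs}_i/\mathrm{exp}_i)\right]\). Whether this matches the expression used in the analysis term by term is an assumption; the function names and the small floor on empty template voxels are hypothetical.

```python
import math


def average_template(images):
    """Voxel-wise mean of n generated images, each a flattened 1D list."""
    n = len(images)
    return [sum(values) / n for values in zip(*images)]


def poisson_likelihood_ratio(obs, exp, floor=1e-9):
    """Return -2 ln(lambda) summed over voxels (assumed Baker-Cousins form).

    The logarithmic term is taken as zero for voxels with obs == 0, and
    template values are floored to avoid dividing by zero (an assumption).
    """
    total = 0.0
    for o, e in zip(obs, exp):
        e = max(e, floor)
        total += e - o
        if o > 0:
            total += o * math.log(o / e)
    return 2.0 * total


# Hypothetical two-voxel example: template built from two generated images.
template = average_template([[4.0, 0.0], [6.0, 2.0]])   # [5.0, 1.0]
statistic = poisson_likelihood_ratio([10.0, 1.0], template)
```

The statistic is zero when the template matches the target exactly and grows as the two images diverge, so minimising it with respect to the kinematic parameters pulls the averaged template towards the observed image.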

In both variants, optimisation uses the Adam71 optimiser with learning rates tailored to each parameter category: 0.005 for the vertex-position parameters, 0.05 for the kinetic energy, and 0.2 for the direction. These values were chosen based on the patterns observed in the transformer results, as illustrated in Fig. 2. The value n = 200 sets the number of independent gradient-descent minimisations in the first variant and the number of GAN runs per iteration in the second variant. Each minimisation runs for 50 iterations per reconstructed particle in the event under consideration.
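The control flow of the first variant, with these settings, can be sketched in plain Python. This is a toy illustration, not the released implementation: the GAN is replaced by a hypothetical generator that adds fixed, seeded noise to the parameters so that the gradient is analytic, each parameter category is reduced to a single scalar, and Adam's β1, β2 and ε are set to commonly used defaults that are not stated here.

```python
import math
import random

LEARNING_RATES = {"vertex": 0.005, "kinetic_energy": 0.05, "direction": 0.2}
ITERATIONS_PER_PARTICLE = 50


def toy_generator(params, seed):
    """Hypothetical stand-in for the GAN: parameters plus fixed, seeded noise."""
    rng = random.Random(seed)
    return {k: v + 0.01 * rng.gauss(0.0, 1.0) for k, v in params.items()}


def mse_and_grad(image, target):
    """Mean-squared error and its gradient with respect to the parameters."""
    n = len(target)
    loss = sum((image[k] - target[k]) ** 2 for k in target) / n
    # Analytic because the toy generator is linear in the parameters.
    grad = {k: 2.0 * (image[k] - target[k]) / n for k in target}
    return loss, grad


def minimise(init, target, seed, n_particles, b1=0.9, b2=0.999, eps=1e-8):
    """One Adam minimisation with a fixed noise seed."""
    params = dict(init)
    m = {k: 0.0 for k in params}
    v = {k: 0.0 for k in params}
    for t in range(1, ITERATIONS_PER_PARTICLE * n_particles + 1):
        _, grad = mse_and_grad(toy_generator(params, seed), target)
        for k in params:
            m[k] = b1 * m[k] + (1 - b1) * grad[k]
            v[k] = b2 * v[k] + (1 - b2) * grad[k] ** 2
            m_hat = m[k] / (1 - b1 ** t)
            v_hat = v[k] / (1 - b2 ** t)
            # Per-category learning rate, as listed in the text.
            params[k] -= LEARNING_RATES[k] * m_hat / (math.sqrt(v_hat) + eps)
    loss, _ = mse_and_grad(toy_generator(params, seed), target)
    return loss, params


def best_fit(init, target, n_seeds, n_particles):
    """Run n_seeds independent minimisations and keep the lowest final loss."""
    runs = [minimise(init, target, seed, n_particles) for seed in range(n_seeds)]
    return min(runs, key=lambda run: run[0])


# Hypothetical two-particle event; 8 seeds instead of 200 to keep it quick.
target = {"vertex": 0.1, "kinetic_energy": 1.0, "direction": 1.0}
start = {"vertex": 0.0, "kinetic_energy": 0.0, "direction": 0.0}
best_loss, best_params = best_fit(start, target, n_seeds=8, n_particles=2)
```

With a real differentiable generator, the analytic gradient would be replaced by automatic differentiation through the generator, and the second variant would replace the loop over seeds with a single minimisation of the likelihood computed on the averaged template.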

Data availability

The datasets used to train and test our models are publicly available at https://doi.org/10.5281/zenodo.10075666.

Code availability

All code used to implement the methods and reproduce the findings presented in this paper is publicly available at https://github.com/saulam/NeutrinoVertex-DL.

References

Li, Z. & Snavely, N. Learning intrinsic image decomposition from watching the world. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (IEEE, 2018).

Ng, M. K., Ngan, H. Y. T., Yuan, X. & Zhang, W. Patterned fabric inspection and visualization by the method of image decomposition. IEEE Trans. Autom. Sci. Eng. 11, 943–947 (2014).

Monnier, T., Vincent, E., Ponce, J. & Aubry, M. Unsupervised layered image decomposition into object prototypes. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 8640–8650 (IEEE, 2021).

Wong, K.-M. Multi-scale image decomposition using a local statistical edge model. In 2021 IEEE 7th International Conference on Virtual Reality (ICVR), 10–18 (IEEE, 2021).

He, C., Zhang, L., He, X. & Jia, W. A new image decomposition and reconstruction approach – adaptive fourier decomposition. In He, X. et al. (eds.) MultiMedia Modeling, 227–236 (Springer International Publishing, Cham, 2015).

Zheng, Y., Hou, X., Bian, T. & Qin, Z. Effective image fusion rules of multi-scale image decomposition. In 2007 5th International Symposium on Image and Signal Processing and Analysis, 362–366 (IEEE, 2007).

Javed, S., Oh, S. H., Heo, J. & Jung, S. K. Robust background subtraction via online robust pca using image decomposition. In Proceedings of the 2014 Conference on Research in Adaptive and Convergent Systems, RACS ’14, 105–110 (Association for Computing Machinery, 2014). https://doi.org/10.1145/2663761.2664195.

Strubbe, F. et al. Characterizing and tracking individual colloidal particles using fourier-bessel image decomposition. Opt. Express 22, 24635–24645 (2014).

Bai, J. & Feng, X.-C. Image decomposition and denoising using fractional-order partial differential equations. IET Image Process. 14, 3471–3480 (2020).

Yeh, C.-H., Huang, C.-H. & Kang, L.-W. Multi-scale deep residual learning-based single image haze removal via image decomposition. IEEE Trans. Image Process. 29, 3153–3167 (2020).

Lettry, L., Vanhoey, K. & van Gool, L. Darn: A deep adversarial residual network for intrinsic image decomposition. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), 1359–1367 (IEEE, 2018).

Gandelsman, Y., Shocher, A. & Irani, M. "double-dip": Unsupervised image decomposition via coupled deep-image-priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (IEEE, 2019).

Lu, Y., Collado, J., Whiteson, D. & Baldi, P. Sparse autoregressive models for scalable generation of sparse images in particle physics. Phys. Rev. D. 103, 036012 (2021).

Chlis, N.-K. et al. A sparse deep learning approach for automatic segmentation of human vasculature in multispectral optoacoustic tomography. Photoacoustics 20, 100203 (2020).

Dousti Mousavi, N., Yang, J. & Aldirawi, H. Variable selection for sparse data with applications to vaginal microbiome and gene expression data. Genes 14, https://www.mdpi.com/2073-4425/14/2/403 (2023).

Zeng, L. & Wu, K. Medical image segmentation via sparse coding decoder. Preprint at https://ar5iv.labs.arxiv.org/html/2310.10957 (2023).

Mou, S. & Shi, J. Compressed smooth sparse decomposition. Preprint at https://arxiv.org/abs/2201.07404 (2022).

Manju, V. & Fred, A. L. Sparse decomposition technique for segmentation and compression of compound images. J. Intell. Syst. 29, 515–528 (2020).

Li, K., Wang, Y., Ye, X., Yan, C. & Yang, J. Sparse intrinsic decomposition and applications. Signal Process.: Image Commun. 95, 116281 (2021).

Du, S. et al. A new image decomposition approach using pixel-wise analysis sparsity model. Pattern Recognit. 136, 109241 (2023).

Liu, Y., Zhang, Q., Chen, Y., Cheng, Q. & Peng, C. Hyperspectral image denoising with log-based robust pca. In 2021 IEEE International Conference on Image Processing (ICIP), 1634–1638 (IEEE, 2021). https://doi.org/10.1109/ICIP42928.2021.9506050.

Abi, B. et al. Long-baseline neutrino oscillation physics potential of the DUNE experiment. Eur. Phys. J. C. 80, 978 (2020).

Abe, K. et al. Hyper-kamiokande design report. Preprint at https://arxiv.org/abs/1805.04163 (2018).

Abe, K. et al. Constraint on the matter–antimatter symmetry-violating phase in neutrino oscillations. Nature 580, 339–344 (2020).

Ershova, A. et al. Role of deexcitation in the final-state interactions of protons in neutrino-nucleus interactions. Phys. Rev. D. 108, 112008 (2023).

Ershova, A. et al. Study of final-state interactions of protons in neutrino-nucleus scattering with incl and nuwro cascade models. Phys. Rev. D. 106, 032009 (2022).

Birks, J. B. The scintillation process in organic systems. IRE Trans. Nucl. Sci. 7, 2–11 (1960).

Birks, J. Chapter 3 - the scintillation process in organic materials-I. In Birks, J. (ed.) The Theory and Practice of Scintillation Counting, International Series of Monographs in Electronics and Instrumentation, 39–67 (Pergamon, 1964). https://www.sciencedirect.com/science/article/pii/B9780080104720500082.

Rodrigues, P. A. et al. Identification of nuclear effects in neutrino-carbon interactions at low three-momentum transfer. Phys. Rev. Lett. 116, 071802 (2016).

Gran, R. et al. Antineutrino Charged-Current Reactions on Hydrocarbon with Low Momentum Transfer. Phys. Rev. Lett. 120, 221805 (2018).

Ascencio, M. V. et al. Measurement of inclusive charged-current νμ scattering on hydrocarbon at ⟨Eν⟩ ~ 6 GeV with low three-momentum transfer. Phys. Rev. D. 106, 032001 (2022).

Ruterbories, D. et al. Simultaneous Measurement of Proton and Lepton Kinematics in Quasielasticlike νμ-Hydrocarbon Interactions from 2 to 20 GeV. Phys. Rev. Lett. 129, 021803 (2022).

Yu, S. & Ma, J. Deep learning for geophysics: Current and future trends. Rev. Geophys. 59, e2021RG000742 (2021).

Komiske, P. T., Metodiev, E. M., Nachman, B. & Schwartz, M. D. Pileup mitigation with machine learning (pumml). J. High. Energy Phys. 2017, 1–20 (2017).

Kim, C., Ahn, S., Chae, K., Hooker, J. & Rogachev, G. Restoring original signals from pile-up using deep learning. Nucl. Instrum. Methods Phys. Res. Sec. A 168492, https://www.sciencedirect.com/science/article/pii/S0168900223004825 (2023).

Vaswani, A. et al. Attention is all you need. Preprint at https://arxiv.org/abs/1706.03762 (2017).

Kora, R. & Mohammed, A. A comprehensive review on transformers models for text classification. In 2023 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), 1–7 (IEEE, 2023).

OpenAI. ChatGPT, https://chat.openai.com (2023).

Gasiorowski, S. et al. Differentiable simulation of a liquid argon time projection chamber. Machine Learning: Science and Technology. 5, 025012 (IOP Publishing, 2024).

Heiden, E., Denniston, C. E., Millard, D., Ramos, F. & Sukhatme, G. S. Probabilistic inference of simulation parameters via parallel differentiable simulation. In 2022 International Conference on Robotics and Automation (ICRA), 3638–3645 (IEEE, 2022).

Dorigo, T. et al. Toward the end-to-end optimization of particle physics instruments with differentiable programming: a white paper. Rev. Sci. 10, 100085 (2023).

Goodfellow, I. J. et al. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2, NIPS’14, 2672–2680 (MIT Press, Cambridge, 2014).

Abe, K. et al. The T2K experiment. Nucl. Instrum. Methods Phys. Res. Sect. A 659, 106–135 (2011).

Acciarri, R. et al. Design and construction of the MicroBooNE detector. J. Instrum. 12, P02017–P02017 (2017).

Hayato, Y. & Pickering, L. The neut neutrino interaction simulation program library. Eur. Phys. J. Spec. Top. 230, 4469–4481 (2021).

Jaradat, Y., Masoud, M., Jannoud, I., Manasrah, A. & Alia, M. A tutorial on singular value decomposition with applications on image compression and dimensionality reduction. In 2021 International Conference on Information Technology (ICIT), 769–772 (IEEE, 2021).

Buades, A., Le, T., Morel, J.-M. & Vese, L. Cartoon+Texture Image Decomposition. Image Process. Line 1, 200–207 (2011).

Mohapatra, R. N. et al. Theory of neutrinos: a white paper. Rep. Prog. Phys. 70, 1757 (2007).

Stecker, F. W. Neutrino physics and astrophysics overview. Preprint at https://arxiv.org/abs/2301.02935 (2023).

Pontecorvo, B. Mesonium and anti-mesonium. Sov. Phys. JETP 6, 429 (1957).

Fukuda, Y. et al. Evidence for oscillation of atmospheric neutrinos. Phys. Rev. Lett. 81, 1562–1567 (1998).

Ahmad, Q. R. et al. Direct evidence for neutrino flavor transformation from neutral-current interactions in the sudbury neutrino observatory. Phys. Rev. Lett. 89, 011301 (2002).

Grinis, R. Differentiable programming for particle physics simulations. J. Exp. Theor. Phys. 134, 150–156 (2022).

Alonso-Monsalve, S. & Whitehead, L. H. Image-based model parameter optimisation using Model-Assisted Generative Adversarial Networks. IEEE Trans. Neural Netw. Learn. Syst. 31, 5645–5650 (2020).

Roussel, R. & Edelen, A. Applications of differentiable physics simulations in particle accelerator modeling. Preprint at https://arxiv.org/abs/2211.09077 (2022).

Blondel, A. et al. The SuperFGD prototype charged particle beam tests. J. Instrum. 15, P12003–P12003 (2020).

Alekseev, I. et al. SuperFGD prototype time resolution studies. J. Instrum. 18, P01012 (2023).

Majumdar, K. & Mavrokoridis, K. Review of liquid argon detector technologies in the neutrino sector. Appl. Sci. 11, 2455 (2021). https://www.mdpi.com/2076-3417/11/6/2455.

Agostinelli, S. et al. Geant4-a simulation toolkit. Nucl. Instrum. Methods Phys. Res. Sect. A 506, 250–303 (2003).

Allison, J. et al. Geant4 developments and applications. IEEE Trans. Nucl. Sci. 53, 270–278 (2006).

Allison, J. et al. Recent developments in Geant4. Nucl. Instrum. Methods Phys. Res. Sect. A 835, 186–225 (2016).

You, Y. et al. Large batch optimization for deep learning: Training BERT in 76 minutes. In International Conference on Learning Representations. https://openreview.net/forum?id=Syx4wnEtvH (2020).

Paszke, A. et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32, 8024–8035 (Curran Associates, Inc., 2019). http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf.

Falcon, W. & The PyTorch Lightning team. PyTorch Lightning, https://github.com/Lightning-AI/lightning (2019).

Mirza, M. & Osindero, S. Conditional generative adversarial nets. Preprint at https://arxiv.org/abs/1411.1784 (2014).

Arjovsky, M., Chintala, S. & Bottou, L. Wasserstein GAN. Preprint at https://arxiv.org/abs/1701.07875 (2017).

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V. & Courville, A. Improved training of Wasserstein GANs. In Proceedings of the 31st International Conference on Neural Information Processing Systems. 5769–5779 (Curran Associates Inc., 2017).

Tieleman, T. & Hinton, G. Lecture 6.5-RMSprop: Divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw. Mach. Learn. 4, 26–31 (2012).

Baker, S. & Cousins, R. D. Clarification of the use of chi-square and likelihood functions in fits to histograms. Nucl. Instrum. Methods Phys. Res. 221, 437–442 (1984).

Abe, K. et al. Improved constraints on neutrino mixing from the T2K experiment with 3.13 × 10^21 protons on target. Phys. Rev. D 103, 112008 (2021).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. In International Conference on Learning Representations (ICLR). (2015).

Acknowledgements

Part of this work was supported by the SNF grant PCEFP2_203261, Switzerland.

Author information

Contributions

S.A.-M. and D.S. conceived the analysis strategy. X.Z. and C.M. implemented the code needed for the simulation. X.Z. generated the datasets, and S.A.-M. preprocessed them. S.A.-M. designed, implemented, and trained the different algorithms, and together with A.M. tested them. A.M., D.S., X.Z., and S.A.-M. performed the analysis. A.R. and D.S. supervised the entire process. All authors wrote, discussed and commented on the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks Louis Lettry and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alonso-Monsalve, S., Sgalaberna, D., Zhao, X. et al. Deep-learning-based decomposition of overlapping-sparse images: application at the vertex of simulated neutrino interactions. Commun Phys 7, 173 (2024). https://doi.org/10.1038/s42005-024-01669-8

DOI: https://doi.org/10.1038/s42005-024-01669-8
