Abstract

The hyperfine structure absorption lines of neutral hydrogen in high-redshift radio spectra, known as the 21-cm forest, have been demonstrated through simulations as a powerful probe of small-scale structures governed by dark matter (DM) properties and the thermal history of intergalactic medium (IGM). By measuring the one-dimensional power spectrum of the 21-cm forest, parameter degeneracies can be broken, offering key constraints on the properties of both DM and IGM. However, conventional methods are hindered by computationally expensive simulations and a non-Gaussian likelihood function. To overcome these challenges, we propose a deep learning approach that combines generative normalizing flows for data augmentation and inference normalizing flows for parameter estimation, enabling accurate results from minimally simulated datasets. Using mock data from the Square Kilometre Array, we demonstrate the capability of this deep learning-driven approach to generating posterior distributions, providing a robust tool for probing DM and the cosmic heating history.

Similar content being viewed by others

Introduction

The hyperfine structure transition of neutral hydrogen atoms from the epoch of reionization (EoR) creates narrow absorption lines on spectra of high-redshift radio bright sources (e.g. radio-loud quasars), with a rest-frame wavelength of 21 cm. The 21-cm absorption lines from various structures at different distances along the line of sight are redshifted to different frequencies, giving rise to forest-like features on the spectra, which is called the 21-cm forest1,2,3,4,5,6 in analogy to the Lyman-α (Ly-α) forest at lower redshifts. Simulations suggest that the 21-cm forest could serve as a sensitive probe of the cosmic heating history4,5. After the formation of the first galaxies, the generated light not only ionizes hydrogen atoms but also heats the gas simultaneously (with X-rays being the primary heating source). As the spin temperature of the gas increases, the absorption at 21 cm weakens (reducing the optical depth), thus causing the 21-cm forest signal to become weaker. In principle, the strength of the signal can be used to measure the cosmic heating history, thereby gaining insight into the properties of the first galaxies.

On the other hand, understanding the properties of dark matter (DM) hinges crucially on probing small-scale structures of the universe. The cold DM (CDM) leads to an excess of structure on small scales, while warm DM (WDM) or ultra-light DM results in suppressed structure formation on small scales7,8. Being sensitive to the small-scale structures, it has been shown that the 21-cm forest can be used to probe the minihalos and dwarf galaxies during the EoR5,9, so as to provide precise measurements of small-scale cosmic structures, and can be utilized to measure the mass of DM particles10.

During the Square Kilometre Array (SKA) era, conducting 21-cm forest observations theoretically allows the mass of DM particles to be constrained based on the number density of absorption lines2,10. However, detecting the 21-cm signal is by no means an easy task. In particular, if the extent of cosmic heating is too strong, it will render the 21-cm forest signal undetectable, thereby making it impossible to measure either the properties of DM particles or the temperature of the intergalactic medium (IGM) through this method.

Our recent work has proposed an effective solution to this problem, namely, measuring the one-dimensional (1D) power spectrum of the 21-cm forest11. This approach not only allows for the extraction of faint signals but also breaks the degeneracy between the mass of DM particles and the temperature of the IGM, enabling both to be measured simultaneously. This is because, when transforming from frequency space to k-space, the scale-dependence characteristics of the 21-cm forest signal can be displayed, while noise can be effectively suppressed as it lacks such scale dependence, enabling even faint signals to be extracted. Furthermore, the properties of DM particles (whether warm or cold) and the temperature of the IGM mainly affect the shape and amplitude of the power spectrum, respectively. Thus, both can be simultaneously constrained through power spectrum measurements. If this approach can be successfully implemented during the SKA era, it will be significant for the research of DM and first galaxies.

To achieve this goal, a series of challenges still need to be overcome. Observationally, it requires a sufficiently sensitive experiment and long observation times to detect the weak signals, as well as a sufficient number of high-redshift radio-bright point sources to suppress the cosmic variance12. Additionally, even if we can achieve 21-cm forest observations, it is not straightforward to constrain the properties of DM particles and the cosmic heating history, because currently there is no analytical or empirical model to connect the parameters (such as the mass of DM particles and the temperature of the IGM) with observables (the 1D power spectrum of the 21-cm forest). In this case, to achieve parameter inference, we can only rely on a large number of simulations spanning a sufficient large parameter space to calculate observables, and then construct a likelihood function, thus utilizing Bayesian methods for parameter inference13. This is how parameter inference is done for constraining DM particles using the Ly-α forest14. Such a large number of simulations require a significant amount of computing resources, making it both very expensive and extremely time-consuming. Moreover, the computational costs for the 21-cm forest simulations are considerably higher due to its major contributions from smaller scales (k as high as ~ 100 Mpc−1), compared to the Ly-α forest2,15.

Additional challenges arise from the non-Gaussian likelihood function of the 21-cm forest signals induced by the nonlinear effect of gravitational clustering16 and the non-local effect of radiation17. Due to the non-Gaussian likelihood function for the 1D power spectrum, traditional Bayesian methods for parameter inference are somewhat inadequate, potentially leading to severely distorted inference results. Although methods like traditional Gaussian mixture models can fit likelihood functions under non-Gaussian conditions18,19, they require significant computational resources, making them less practical for extensive data analysis. Additionally, the correlation between the 1D power spectrum at different scales also affects the precision of parameter inference framework, as the coupling of errors across scales increases the complexity of modeling the likelihood.

This work proposes a set of solutions to these issues, allowing parameter inference of 21-cm forest observations without the need for an explicit model or the construction of the likelihood function, using only a limited number of simulated samples. This enables us to obtain constraints on the properties of DM particles and the history of cosmic heating efficiently from 1D power spectrum measurements of the 21-cm forest. The key strategy relies on variational inference methods based on deep learning20, especially the technique of normalizing flows (NFs)21. These methods have found various applications in fields such as the large-scale generation of neutral hydrogen sky maps22,23, parameter inference during the EoR using 21-cm tomography24,25,26,27, inference of gravitational wave source parameters under the influence of noise transients28,29, and the inference of the IGM thermal parameters based on the Ly-α forest30,31. Within this framework, not only can a large number of simulated samples be easily generated through generative neural networks, but parameter inference can also be achieved without a likelihood function. This approach addresses the aforementioned challenges of large sample sizes and a non-Gaussian likelihood function, respectively, for 21-cm forest observations, paving the way for advancing the usage of this probe to constrain the fundamental physics during the cosmic dawn.

Results

Simulation setup

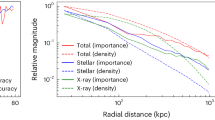

We take the same approach as ref. 11, and simulate the 21-cm forest signals from the EoR for various X-ray production efficiencies of the first galaxies parameterized by fX, and for various DM particle masses of mWDM (see Methods). The parameter fX controls the efficiency of X-ray heating in the early universe, directly influencing the IGM temperature TK. Given the current Hydrogen Epoch of Reionization Array (HERA) findings, TK is constrained to 15.6 K < TK < 656.7 K at z ~ 8 with 95% confidence32, which demarcates the rough signal level of the 21-cm forest constrained by the heating effect. Therefore, our analysis mainly focuses on two characteristic heating levels for discussion: the case with a weaker heating effect (TK ≈ 60 K at redshift 9 corresponding to fX ~ 0.1) and the case with a stronger heating effect (TK ≈ 600 K at redshift 9 corresponding to fX ~ 1). As fX increases, TK rises, regulating the X-ray heating in the early universe and strongly influencing the detectability of the 21-cm forest signal. For comparison, we also simulate cases with fX = 0 representing an unheated IGM and fX = 3 for extremely high productivity of X-rays in the early universe. For the DM properties, although some recent astrophysical observations suggest a lower limit on the WDM particle mass (mWDM) of approximately 6 kilo electronvolts (keV), models with lower WDM particle mass have not been definitively excluded33,34,35,36,37. Furthermore, as the value of mWDM increases, the structure formation characteristics of WDM models on small scales become increasingly similar to those of CDM model. We therefore simulate WDM models with mass in the several keV range for subsequent analysis. Assuming a fiducial WDM model with mWDM = 6 keV, Fig. 1 shows the signal-to-noise ratio (SNR) of the 1D power spectrum of the simulated 21-cm forest, represented by P(k)/PN, where P(k) is the 1D power spectrum of the pure 21-cm forest signal and PN is thermal noise of the 1D power spectrum, with different heating levels for the noise levels corresponding to phase-one and phase-two low-frequency arrays of the SKA (SKA1-LOW38 and SKA2-LOW39,40), respectively. Our results indicate that a lower heating level (TK = 60 K at z = 9) allows for measurement of the 1D power spectrum of the 21-cm forest with an integration time of 100 h per background source with a flux density of 10 mJy using the SKA1-LOW. In contrast, a higher heating level (TK = 600 K at z = 9) necessitates 200 h on each source with the same flux density using SKA2-LOW.

The blue, orange, green and red curves correspond to fX = 0, fX = 0.1, fX = 1 and fX = 3, respectively. a Depicts the SNR with an integration time of 100 h on each source using SKA1-LOW. b Depicts the results for an integration time of 200 h on each source using SKA2-LOW. The error bars show the sample variances of the 1D power spectra of the 21-cm forest. The black dashed line in each panel indicates the SNR of 1.

Given the high dynamic range required for the 21-cm forest simulations, the simulation-based Bayesian methods for parameter inference are inefficient and almost infeasible, considering the requirement of computational time and resources. For instance, simulating the density field for mWDM = 6 keV requires approximately 600 h on 64 cores (Intel(R) Xeon(R) Gold 6271C CPU @ 2.60GHz). To achieve parameter inference with minimal computational expenditure, we first simulate the 21-cm forest signals for a limited sampling of the parameter space. Specifically, mWDM ranges from 3 keV to 9 keV with intervals of 1 keV, while TK ranges from 40 K to 80 K with intervals of 5 K for weaker heating levels, and from 400 K to 800 K with intervals of 100 K for stronger heating levels. This parameter space results in a dataset of 567000 samples (corresponding to 7 values of mWDM and 9 values of TK, for a total of 7 × 9 × 9000 = 567000 samples; see Methods) for weaker heating levels, which, while sufficiently large, is unevenly distributed and does not fully cover the entire parameter space. To ensure robustness, the validation dataset, split from the training data, is employed to fine-tune model parameters and prevent overfitting. The testing dataset, also separated from the initial dataset, is reserved exclusively for evaluating the model’s predictive performance on unseen data.

As shown in Supplementary Fig. 1, this non-uniform sampling of the parameter space impairs likelihood-free inference methods, leading to inaccurate probability estimations in under-sampled regions of the parameter space. However, as presented in Supplementary Fig. 2, while the parameter space distribution is irregular, the corresponding power spectrum space exhibits continuity, indicating that the power spectra are less sensitive to gaps in parameter sampling. To leverage this property, our framework combines a generative normalizing flow (GNF) to fill the gaps in the parameter space and an inference normalizing flow (INF) to accurately infer parameters, as shown in Fig. 2.

a Depicts network workflow chart. b Depicts schematic diagram of the architecture of the NF. Both the GNF and INF are composed of RQ-NSF (AR). In the GNF, the conditions are parameters, and the output is a 1D power spectrum. Conversely, in the INF, the condition is a 1D power spectrum, and the outputs are parameters. The network consists of a sequence of flows, each of which consists of a sequence of autoregressive layers that share parameters. Input the condition and the sampling vector obtained from the base distribution into the NFs to generate the output. The simulated power spectra data set is divided into a training set, a validation set, and a testing set. The training set and validation set are used to train the GNF, which generates reasonable power spectra for any given parameters. The INF is trained using the 1D power spectra generated by the GNF to learn the parameter distribution corresponding to any given power spectrum. Different testing sets of simulated power spectra are used to verify the rationality of the GNF and the INF, respectively.

Data augmentation

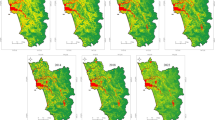

To guarantee a robust parameter inference, we first assess the reliability of the 1D power spectrum of the 21-cm forest generated by the GNF. Figure 3 compares the mean value and standard deviation of the 1D power spectra generated by the GNF with those from simulations. The results confirm that the GNF consistently reproduces the 1D power spectra generated from the training set as presented in (b, e, h) of Fig. 3. In addition, it effectively generates the 1D power spectra for both interpolated and extrapolated parameter regions as presented in (a, d, g) and (c, f, i) of Fig. 3, demonstrating its generalization capability. To quantify the reasonableness of the GNF model, we applied the coefficient of determination (R2) to measure the correlation between the 1D power spectra generated by the GNF and those from simulations. Achieving R2 > 0.99 indicates a high reliability of the GNF in reproducing these 1D power spectra. This examination, combining statistical measures and visual inspections, confirms the reliability of the GNF model in generating the 1D power spectrum of the 21-cm forest. Additionally, using the GNF, approximately 24,000 1D power spectra can be generated in about 1 s, a speed unattainable by traditional simulations. In contrast, generating the same number of samples with traditional simulations would require approximately 280 h.

The data are derived from results of different TK and mWDM, with an integration time of 100 h using SKA1-LOW. a – c, d – f, g – i Present the 1D power spectra of 21-cm forest with mWDM = 3 keV, mWDM = 6 keV and mWDM = 9 keV, respectively. The 1D power spectra of the 21-cm forest are presented in (a, d, g), (b, e, h) and (c, f, i) with TK = 47.5 K, TK = 60 K and TK = 82.5 K, respectively. The blue shaded area is the 1σ range of the 1D power spectrum generated by the GNF. The orange line and bar are the mean and 1σ error bar of the simulated power spectrum. \({R}_{{\mu }_{P(k)}}^{2}\) and \({R}_{{\sigma }_{P(k)}}^{2}\) are the correlation coefficients of the mean and standard deviation, respectively.

We used the skewness coefficient (S) to describe the asymmetry of the 1D power spectrum at each k in Fig. 4. It shows that at smaller scales and with longer integration times, deviations from a Gaussian distribution increase. This trend is attributed to the increasing dominance of small-scale strong nonlinear effects compared to thermal noise. Additionally, we observed gradual deviations from a normal distribution with increasing mWDM and TK. These deviations are attributed to the increased number of halos with higher mWDM, which significantly alters the density field, and to temperature variations influencing the 1D power spectrum of the 21-cm forest as TK increases. Additionally, we quantified the similarity between the distributions generated by the GNF and the simulation in Supplementary Fig. 3 using the Jensen-Shannon divergence (JSD)41,42, a symmetric measure of similarity between two probability distributions. Our analysis revealed that the JSD value is less than 0.05, indicating a high degree of similarity between the distributions generated by the GNF and the simulation. As previously noted with JSD and S analyses, these results confirm that under different heating levels, the 1D power spectrum of the 21-cm forest observed with an integration time of 100 h using SKA1-LOW and those measured with an integration time of 200 h using SKA2-LOW exhibit Gaussian and non-Gaussian distributions, respectively. This highlights the capability of the GNF in modeling the 1D power spectrum of the 21-cm forest under various astrophysical conditions.

The blue, orange, and green curves in (a) present the S of the 1D power spectrum at different k when mWDM = 6 keV and TK = 60 K, and the SKA1-LOW integration time is 100 h, 1000 h, and infinity (pure signal), respectively. The blue, orange, and green curves in (b) present the S of the 1D power spectra at different k when TK = 60 K, and mWDM is 3 keV, 6 keV, and 9 keV, respectively. The blue and orange curves in (c) present the 1D power spectrum S of the pure signal at different k when mWDM = 6 keV and TK is 60 K and 600 K, respectively.

The correlation coefficient ρij between different k bins of the 1D power spectrum of the 21-cm forest is also crucial for accurately estimating the uncertainties in parameter inference. Here, i and j refer to different k bins. Ignoring the correlation in the 1D power spectrum between these different k bins can lead to incorrect error estimates. In Supplementary Fig. 4, we show the values of ρij of the pure signal under different conditions. We find that the correlations at small scales are significant, especially in scenarios with strong heating effects. However, considering the impact of SKA thermal noise in actual observations, as shown in Supplementary Fig. 5, the dominance of thermal noise at small scales reduces the overall correlation strength. Nonetheless, the correlations remain non-negligible. Therefore, although combining GNF with Bayesian inference allows us to obtain posterior distributions, the aforementioned correlations significantly increase the complexity of modeling the likelihood function. As a result, generating 216 posterior samples requires several days of computation on 32 cores.

Parameter inference

To assess the performance of the INF in deriving parameters, we evaluate two key aspects: the reliability of the inferred parameters and the precision of the parameter estimates. First, we need to confirm the reliability of the INF inference parameters. We conducted the Kolmogorov-Smirnov (KS) test43. The KS test is a non-parametric test that compares the cumulative distribution function (CDF) of the sample data to a reference distribution. By computing the CDF of the percentiles of the true values of each parameter derived from the marginal 1D posterior distribution, we can evaluate the model’s performance. For each 21-cm forest 1D power spectrum and the corresponding confidence interval of the posterior distribution, the percentile values of the injected parameters are computed. Ideally, the CDF for each parameter should be close to the diagonal, as the percentiles should be evenly distributed between 0 and 1. We measured the CDF of the true parameters falling within specific confidence levels as presented in Fig. 5. The results demonstrated the CDF curves within the 3σ confidence bands, with p-values greater than 0.05 for each parameter. A KS test p-value greater than 0.05 indicates that there is no significant difference between the CDF of the sample data and the reference distribution, confirming that the observed deviations are within the expected range due to random sampling variability. These findings affirm the validity and accuracy of our inference method within the expected error ranges, providing confidence in its application for cosmological research.

a Depicts the P-P plot of the INF inference with an integration time of 100 h using SKA1-LOW and for a total of 2700 power spectra with mWDM ranging from 5 keV to 7 keV and TK from 50 K to 70 K. b Depicts the P-P plot of the INF inference with an integration time of 200 h using SKA2-LOW and for a total of 2700 power spectra with mWDM ranging from 5 keV to 7 keV and TK from 400 K to 800 K. The gray shaded area is the range of the 3σ confidence interval. KS test p-values are denoted in the legend.

Next, we determine the precision of the parameters inferred by INF. Similar to findings in refs. 25,30, we encounter challenges due to limited prior parameter bounds, as probabilities outside the predefined range cannot be estimated. However, this can be solved by expanding the coverage of the simulated parameter space. Additionally, using reciprocal parameter values in transformations could improve the inference framework’s applicability, making it possible to avoid simulating to infinity. Within the central regions of the parameter space, our analysis reveals distinct patterns in the relative error ε of parameter inference for mWDM and TK under various heating levels, as presented in Supplementary Fig. 6. Here, the relative error, defined as the precision, is given by ε(ξ) = σ(ξ)/ξ, where ξ represents the true parameter (mWDM or TK). Although SKA2-LOW is more sensitive, the higher error is due to the fact that our discussion for SKA1-LOW focused on cases with weaker heating effects, while increasing the heating effect suppresses the 21-cm forest signal, leading to larger inference errors. We find that TK significantly affects the precision of mWDM estimates. Conversely, mWDM has a relatively small impact on the precision of TK estimates. This is because TK influences the 1D power spectrum values across all scales, while mWDM primarily affects the 1D power spectrum values on small scales, as shown in Supplementary Fig. 2. Therefore, as presented in Supplementary Fig. 7, TK can achieve high precision by relying solely on large-scale information, almost unaffected by mWDM. On the other hand, mWDM mainly relies on small-scale and medium-scale information, which is influenced by TK. Therefore, the 1D power spectrum of different scales will break the degeneracy, resulting in a significant improvement in the precision of parameter estimates. This dependence highlights the interplay between these parameters and emphasizes the ability of our network to effectively constrain each parameter. Generating 216 posterior samples with INF takes only 32.2 ms, showcasing its remarkable efficiency, far surpassing the speed of GNF combined with Bayesian inference.

For low heating level conditions, we utilized SKA1-LOW with an integration time of 100 h to examine the constraint results of posterior parameter inference, assuming mWDM = 6 keV and TK = 60 K. We compared the precision of the posterior inference results from the INF with the range predicted by the Fisher matrix as presented in Fig. 6. The INF method successfully recovered the posterior distribution of parameters, with the true values lying within the estimated 1σ confidence region. This demonstrates precision comparable to the predictions of the Fisher matrix. The final average constraint result of the 1D power spectra of the 21-cm forest for all simulations are \({m}_{{{\rm{WDM}}}}=6.2{8}_{-1.59}^{+1.61}\) keV and \({T}_{{{\rm{K}}}}=60.1{7}_{-6.66}^{+6.16}\) K. This confirms the reliability of the INF method in achieving precise parameter inference under low heating conditions.

The black contour is based on the INF and the blue contour is based on the Fisher matrix. a Depicts the results when mWDM = 6 keV and TK = 60 K with an integration time of 100 h using SKA1-LOW. b Depicts the results when mWDM = 6 keV and TK = 600 K with an integration time of 200 h using SKA2-LOW. Contours represent 1σ and 2σ confidence intervals.

Under high heating level conditions, using SKA2-LOW with an integration time of 200 h (mWDM = 6 keV, TK = 600 K), we observed significantly different results. Although both the Fisher matrix and INF methods presented consistent degeneracy directions, the Fisher matrix approach failed to adequately constrain the parameters due to the non-Gaussian likelihood function of the 1D power spectrum of the 21-cm forest. This highlights the effectiveness of likelihood-free inference methods like the INF in such scenarios. The final average constraint results of the 1D power spectra for all simulations are \({m}_{{{\rm{WDM}}}}=6.4{7}_{-1.88}^{+2.04}\) keV and \({T}_{{{\rm{K}}}}=627.9{5}_{-91.07}^{+94.18}\) K. These results demonstrate that, while the Fisher matrix approach is inadequate under a non-Gaussian likelihood function, the INF method remains effective.

Discussion

Observing the 21-cm forest signals during the EoR provides a valuable opportunity to gain insight into DM properties and the heating history in the first billion years of the universe. However, due to the lack of explicit relation between the physical parameters and the observables, parameter inference has been impeded by the limited parameter space covered by computationally expensive simulations and the infeasible requirement on computational resources. Moreover, the non-Gaussian likelihood function of the 21-cm forest signal complicates the evaluation of the likelihood function, resulting in biased inference of parameters. In this work, we introduce a deep learning-based approach that does not rely on traditional analytical models, enabling inference of TK and mWDM with a small number of simulations, unlike analytical models that establish an explicit relationship between mWDM, TK during the reionization period, and the statistical characteristics of the 21 cm signal. Furthermore, this approach could extend to other non-Gaussian scenarios, allowing for parameter inference using arbitrary 21-cm forest signals.

For data analysis, we calculate the two-point correlation function of the 21-cm forest and convert it to the k-space, resulting in the 1D power spectrum along the line of sight. The choice stems from the faintness of the 21-cm forest signal, making a power spectrum analysis more beneficial. Nonetheless, other studies27 suggest alternative methods that could more effectively compress data and yield more precise parameter constraints. Future research will explore these methods for nearly lossless parameter inference, enhancing the effectiveness of constraints. Additionally, we plan to incorporate factors like metal absorption lines44 coupling and foreground to create a more accurate dataset. To improve the astrophysical modeling, the inclusion of spatial temperature non-uniformity and X-ray source distribution variations45,46 is also worth considering. Additionally, the impact of including additional parameters that influence the 21-cm forest warrants investigation, where methods like Latin hypercube sampling (LHS) could potentially enhance sampling efficiency47,48.

Overall, our research demonstrates that deep learning-based methods, particularly NFs, offer a promising approach to analyzing the 21-cm forest signal. These methods address the challenges of simulation data scarcity and complexity, providing robust and accurate parameter inference. In addition, the INF method can assist the 21-cm forest in distinguishing between the CDM and WDM models. Given the computationally intensive nature of CDM simulations, we conservatively use the simulated upper limit of 9 keV as a stand-in for CDM in our analysis. In Supplementary Fig. 8, we assess the discriminative power of the INF method by comparing the confidence intervals with the upper limit (9 keV) of the parameter space for the simulated mWDM under different mWDM and TK. The results indicate that the INF method can distinguish the 1D power spectrum of mWDM values less than 6 keV from that of the CDM model at a 2σ confidence level. Furthermore, the deep learning-based parameter inference method demonstrated here not only advances astronomy and cosmology but also shows potential for handling complex and irregular datasets49. In fields like biomedical research, where sample scarcity hinders the study of rare diseases50, our method can significantly improve data analysis capabilities. As the SKA era approaches, these innovative techniques will be invaluable for extracting maximum scientific information from 21-cm observations, contributing to a deeper understanding of the thermal history of the universe and the properties of DM.

Methods

Simulations of the 1D power spectrum of the 21-cm forest

The differential brightness of the observed 21-cm absorption signal relative to the brightness temperature of the background radiation at a specific direction \(\hat{{{\bf{s}}}}\) and redshift z is

where ν0 = 1420.4 MHz is the rest frame frequency of 21-cm photons, TS is the spin temperature of HI gas, \({\tau }_{{\nu }_{0}}\) is the 21-cm optical depth. In terms of average gas properties within each voxel, the 21-cm optical depth can be written as3,51,52

where \(\delta (\hat{{{\bf{s}}}},z)\), \({x}_{{{\rm{HI}}}}(\hat{{{\bf{s}}}},z)\) and H(z) are the gas overdensity, the neutral fraction of hydrogen and the Hubble parameter, respectively. dv∥/dr∥ is the inherent velocity projected along the line of sight gradient and we ignore this term in the simulation. Additionally, doppler shifts due to any peculiar velocities are also ignored.

For the spin temperature, we assume that it is coupled to TK (TS ≈ TK), due to the Wouthuysen-Field effect53,54. The gas temperature in each voxel is set by the thermal history of the early universe and its proximity to halos. Here, our focus is on neutral regions, where X-ray penetration primarily heats the gas, and subsequently affects the 21-cm signals. When we consider an unheated IGM (fX = 0), the temperature of gas outside the virial radius is mainly determined by the cosmic expansion. The 21-cm forest signal is more prominent on smaller scales, and thus our work prioritizes the small-scale features. As described in ref. 45, while X-ray heating introduces temperature variations on large scales due to the spatial distribution of X-ray sources, the long mean free path of X-ray photons ensures that, on scales of ~10 Mpc, the background temperature can be reasonably approximated as uniform. Additionally, we also account for the temperature inhomogeneities within halos and in their surrounding regions: inside halos, the temperature is set to the virial temperature, while outside halos, it depends on X-ray heating. This approach ensures that the influence of small-scale variations is included in our modeling. In addition, our work primarily considers the upper limit of halos with a virial temperature of 104 K. Below this threshold, it is difficult for stars and galaxies to form within the halo. Thus, these halos do not contain X-ray sources, allowing us to assume a relatively uniform distribution of X-ray sources, leading to uniform heating of IGM. While spatial variations in heating may exist in reality46, this approximation serves as a starting point for this work, which prioritizes the development of an inference method that can be extended to incorporate more complex physical scenarios in future work. For the brightness temperature of the background radiation Tγ, our assumption is the same as that in ref. 11, which is related to the flux density of the background source S150.

We employ the multi-scale modeling approach proposed in ref. 11 to simulate the 21-cm forest signal. Specifically, we use 21cmFAST55 to model the ionization field56,57 and density field58,59,60,61 on large scales in a (1 Gpc)3 box with 5003 grids. Subsequently, we identify neutral regions within the large-scale box to simulate the 21-cm signal, where each grid with a length of 2 Mpc is further divided into 5003 voxels by small-scale simulations. The initial conditions for the grids are derived from large-scale density field simulations. Since low-mass halos are the main contributors to the 21-cm forest signal10,62,63,64,65, we calculate their number and distribution within small-scale grid based on the conditional mass function. The density of each voxel is determined by its nearest halo, following the Navarro-Frenk-White profile66 within the virial radius and the infall model profile67 outside of it. Throughout this work, we adopted a set of cosmological parameters consistent with Planck 2018 results68: Ωm = 0.3153, Ωbh2 = 0.02236, ΩΛ = 0.6847, h = 0.6736 and σ8 = 0.8111. Here, Ωm is the matter density, Ωb is the baryonic density, ΩΛ is the dark energy density, h is the dimensionless Hubble constant, and σ8 is the matter fluctuation amplitude.

Observational uncertainties in the 21-cm forest include thermal noise, sample variance, contaminating spectral structure of foreground sources in the chromatic side lobes, and bandpass calibration errors11. Regarding the error consideration, we only consider the thermal noise of the interferometer array and the sample variance in the 1D power spectrum measurement as in ref. 11. The sample variance is generated by the simulation process and does not need to be added separately. When measuring individual absorption lines directly, the noise flux density averaged over the two polarizations can be expressed as69

where Aeff is the effective collection area of the telescope, Tsys is the system temperature, δν is the channel width, δt is the integration time. The corresponding thermal noise temperature is

where λz is the observation wavelength, Ω = π(θ/2)2 is the solid angle of the telescope beam, where θ = 1.22λz/D is the angular resolution, D is the longest baseline of a radio telescope or array. For SKA1-LOW, we use Aeff/Tsys = 800 m2/K38,39. For SKA2-LOW, we use Aeff/Tsys = 4000 m2/K39,40. For these two arrays, we assume D = 65 km, and the integration time δt is 100 h and 200 h, respectively.

In Supplementary Fig. 9, we calculated the brightness temperature for different cases. Direct measurement of individual absorption lines is easily hampered by early X-ray heating. To improve the sensitivity of detecting the 21-cm forest signal and reveal the clustering properties of the absorption lines to distinguish the effect on the 21-cm signal between the heating effect and WDM model, we followed the algorithm in ref. 11 and calculated 1D power spectrum of brightness temperature under the assumption of high redshift background sources70. The brightness temperature \(\delta {T}_{{{\rm{b}}}}(\hat{{{\bf{s}}}},\nu )\) as a function of the observation frequency ν can be equivalently used to represent the line-of-sight distance rz as \({T}_{{{\rm{b}}}}^{{\prime} }(\hat{{{\bf{s}}}},{r}_{z})\). The Fourier transform of \({T}_{{{\rm{b}}}}^{{\prime} }(\hat{{{\bf{s}}}},{r}_{z})\) is

The 1D power spectrum along the line of sight is defined as

Here 1/Δrz is the normalization factor, where Δrz is the line-of-sight length considered. For a reasonable number \({{\mathcal{O}}}\) (10) high-z background sources12, the expected value of the 1D power spectrum is obtained by incoherently averaging over neutral patches on the line of sight penetrating various environments, i.e. \(P\left({k}_{\parallel }\right)\equiv \left\langle P\left(\hat{{{\bf{s}}}},{k}_{\parallel }\right)\right\rangle\). The background sources are assumed to be located outside the other end of the simulation volume, with lines of sight randomly directed through the simulation, each crossing multiple neutral regions. This setup ensures a realistic representation of the spatial distribution of neutral patches and the effects of varying local density. In the rest of this work, we abbreviate k∥ to k because here we are always interested in k along the line of sight. We calculated the 1D power spectrum as presented in Supplementary Fig. 2 of the 21-cm forest corresponding to different conditions. The dotted line indicates the thermal noise of SKA2-LOW when the integration time is 200 h. It can be seen that utilizing the 1D power spectrum, the 21-cm forest signals can be detected.

Within the large-scale simulation box, we randomly selected 10 neutral hydrogen regions corresponding to 10 background radio sources at redshift z = 9 with flux densities S150 = 10 mJy. Each region contains 5 adjacent grid cells along the line of sight for detailed small-scale modeling. By tessellating these 2 Mpc grid units, we established a composite grid system spanning 2 Mpc × 2 Mpc × 10 Mpc, containing 500 × 500 × 2500 voxels (i.e., 500 × 500 independent line-of-sight paths, each penetrating a 10 Mpc-depth structure). This configuration preserves the 10 Mpc-scale correlations derived from the 21cmFAST simulations at large scales while introducing negligible artifacts in the 1D small-scale power spectrum along the line of sight. To mitigate edge effects caused by excluding external DM halos in our simulation, we implemented boundary truncation in two transverse dimensions: removing peripheral layers from the original 500 × 500 line array perpendicular to the line-of-sight direction, retaining only the central 300 × 300 = 90,000 valid line-of-sight paths. For each background source, we assumed that 10 distinct 10 Mpc neutral hydrogen segments could be extracted. Given potential intervening ionized bubbles between neutral segments, we adopted a conservative estimate of 200 Mpc total line-of-sight length, equivalent to a redshift span Δz ≈ 0.8—a range consistent with practical observational constraints. To effectively suppress thermal noise, we simulated 90,000 power spectra per background source. For each source, these power spectra were partitioned into 9000 groups, each containing 10 line-of-sight paths. All 10 paths within a group originate from identical simulation parameters, thereby representing 10 neutral hydrogen segments in a single background source spectrum. Finally, through incoherent averaging of 100 power spectra (10 sources × 10 segments), we generated 9000 synthetic 1D power spectra.

However, the infall model, suitable for high-density areas with numerous halos, tends to overestimate the density field, requiring normalization of the field to match the initial local density of 21cmFAST outputs. The normalization technique of ref. 11, which involves multiplying each voxel by a factor, alters the amplitude of the 1D power spectrum of the 21-cm forest and leads to a crossover in the 1D power spectrum across different DM models (See Fig. 3 in ref. 11). To address this, we employ a normalization method that does not affect the amplitude of the 1D power spectrum, adjusting the density field by adding or subtracting a number. Furthermore, to maintain the probability density distribution of the density field, we adjust the lower limit of halo mass to 105M⊙ in the simulation, corresponding to the Jeans mass71 at the redshift of interest, which also mitigates anomalies in the 1D power spectrum on large scales. These adjustments to the density field and halo mass lower limit modify the structure of the 1D power spectrum at different scales, thereby changing the direction of parameter degeneracies. Figure 6 in ref. 11 shows that the degeneracy direction between mWDM and TK is negatively correlated. As we previously discussed in Supplementary Fig. 7, different scales affect the degeneracy direction of the two parameters differently. In this work, the improvements we made have altered the relationship of the 1D power spectrum on large scales for different DM models. Consequently, in Fig. 6, the degeneracy direction between mWDM and TK becomes positively correlated. Meanwhile, using SKA2-LOW with an integration time of 100 h is insufficient to observe the 21-cm forest signal. As a result, we increased the integration time to 200 h.

Dataset settings

We finally obtained the simulated correspondence between parameters (mWDM, TK) and the 1D power spectrum in the presence of thermal noise. Specifically, we simulated 7 simulation regions with DM particle masses ranging from 3 keV to 9 keV at equal intervals (1 keV). For the gas temperature, we consider two situations. When the heating effect is weak (fX ≈ 0.1), the IGM will be heated to about 60 K at z = 9. Therefore, we choose equal intervals (5 K) between 40 K and 80 K. When the heating effect is strong (fX ≈ 1), the IGM will be heated to about 600 K at z = 9. Therefore, we choose equal intervals (100 K) between 400 K and 800 K. For each set of parameters, out of 9000 power spectra, we take 300 as the testing set and use the remaining 8700 for training. In addition, we also generated some data sets of other parameters for validation and testing sets as presented in Supplementary Fig. 10. It should be noted that this method is not limited to the above parameter range and is also applicable to other parameter ranges. Although LHS47,48 could reduce the number of sampling points per dimension, the computational cost of varying TK is relatively small once mWDM is fixed, making LHS less beneficial in this context. Specifically, for the density field simulation, when the mass is 9 keV, it can take up to 1317 h on 64 cores (Intel(R) Xeon(R) Gold 6271C CPU @ 2.60GHz). In contrast, the simulation of temperature field can be completed within 1 min. Moreover, given the correlation between mWDM and TK, this approach would be less effective. For future studies involving more parameters, exploring higher-dimensional spaces and considering methods like LHS to improve efficiency are worthwhile directions for further investigation. However, in studies of the 21-cm forest, the dominant factors influencing the signal arise from DM properties governing the density field and heating effects modulating the temperature field. Cosmological parameters exhibit minimal observational impact during the EoR, while astrophysical parameters linked to reionization processes remain subject to significant uncertainties. We therefore prioritize these two pivotal parameters that critically shape the 21-cm forest signal, as they encapsulate the dominant physical mechanisms relevant to near-future investigations.

To fit the input parameters to the deep learning model, we first need to normalize the 1D power spectrum and parameters before training and testing the network. For the parameters, we perform normalization as the formula

where ξ represents the original parameter (mWDM or TK), \({\xi }_{\max }\) is the maximum value of ξ, and \({\xi }_{\min }\) is the minimum value of ξ. Due to the significantly higher values of the power spectrum near the halo center, we used logarithmic normalization. This method helps handle the wide range of values and the outliers, ensuring more uniform analysis across different scales. We perform logarithmic normalization on the 1D power spectrum as the formula

where P(k) represents the value of the 1D power spectrum at a specific wavenumber k after binning, \(\log {P}_{\max }(k)\) is the logarithm of the maximum 1D power spectrum value at k, and \(\log {P}_{\min }(k)\) is the logarithm of the minimum 1D power spectrum value at k. This approach helps balance the contributions of each scale and improves the stability and efficiency of network training. The data set generated and processed by the above method are presented in Supplementary Fig. 10, which not only provides a solid foundation for training and testing our deep learning model but also ensures the reliability and scientific significance of the research results. Additionally, we conducted a test on two completely random points, and the results, as shown in Supplementary Fig. 11, validate the model.

The principle of the normalizing flow

Based on the above simulation, we propose a parameter inference method. The basic idea is to generate a large number of simulated data sets with relevant parameters and use these data sets to train a neural network, specifically an NF model21 to approximate the posterior distribution. Compared to traditional generative models such as generative adversarial networks72 and variational autoencoders73, which often suffer from challenges like mode collapse, posterior collapse, and imprecise probability estimation74, GNF provides a significant advantage by directly learning the exact likelihood function, which has been proven to enable high-quality data generation22. In addition, NFs have demonstrated their gravitational wave parameter estimation capabilities by enabling real-time inference in high-dimensional spaces (up to 17 dimensions)75, with scalability potential to higher dimensionality. Recent methodological advances reveal that emerging alternatives like diffusion models76 achieve superior sample generation quality while addressing aforementioned limitations, yet their multi-step iterative processes incur substantial computational latency. By contrast, the NF framework maintains implementation practicality—a critical advantage requiring systematic evaluation when deploying such models in time-sensitive astrophysical applications. By leveraging these simulated data sets, the trained network can quickly generate new posterior samples when observational data is obtained and tested. This eliminates the need to generate the 1D power spectrum at inference time, effectively amortizing the computational cost of training across all future detections. The general method for building such models is called neural posterior estimation (NPE)77.

In more detail, the NF is a method of probabilistic modeling using deep learning technology. Here, we use the notation of the INF for explanation. The differences between the INF and GNF, as shown in Fig. 2, are merely the conditions and outputs. The core idea of NF is to construct a bijective transformation fP based on the 1D power spectrum P, thereby obtaining the mapping relationship between a simple base distribution (e.g., a normal distribution) and a complex posterior distribution. It is a type of reversible neural network characterized by the ability to accurately calculate the Jacobian determinant of the probability density function through network transformation. The NF can be mathematically expressed as21

where \({{\mathcal{Q}}}(\xi | P)\) represents the approximate posterior distribution exported by the NF, π(u) is typically chosen to be a standard multivariate normal distribution for ease of sampling and density evaluation, and fP represents the transformation applied to the data. This transformation can be applied iteratively to construct complex densities:

where each fP,i(u) represents a block of the NF. This iterative approach is central to NPE, where the goal is to train a parameter conditional distribution that approximates the true posterior distribution. This task translates into an optimization problem aimed at minimizing the expected Kullback-Leibler divergence (KLD) between the true and approximate posterior distributions78:

where \({{\mathcal{P}}}(\xi )\) represents the true posterior distribution and \({{\mathcal{Q}}}(\xi )\) represents the approximate posterior distribution.

The training of the NF involves minimizing the loss function, which is the expected value of the cross-entropy between the true and model distributions79:

For a minibatch of training data of size N, this can be approximated as

Our NF implementation is based on the neural spline flow (NSF)80, specifically a rational-quadratic neural spline flow with autoregressive layers, RQ-NSF (AR). Each flow is connected by a series of autoregressive layers that share parameters. Specifically, the network uses the conditional and partially vectorized output from the previous flow (when i is equal to 0, this is the vector sampled from a standard distribution) as the input of a multilayer perceptron (MLP), and the output of the MLP is used to parameterize the RQ-NSF (AR). These parameters are defined as the width and height of K bins, which ultimately form K + 1 knots \({\left\{\left({x}^{(i)},{y}^{(i)}\right)\right\}}_{i = 0}^{K}\). The left and right endpoints are (−B, −B) and (B, B), respectively, and there are K − 1 derivatives of knots \({\left\{{\dot{y}}^{(i)}\right\}}_{i = 1}^{K-1}\), where the derivatives of the left and right endpoints are set to 1. Therefore, the data is divided into K bins from − B to B. Each stream is a map that maps −B to B and then −B to B again, so the y-axis is also divided into K bins from −B to B. Figure 1 in ref. 80 shows the mapping relationship of the coupling transformation when K is 10.

Neural network architecture

NFs, unlike Bayesian methods, do not require an explicit definition of the likelihood function. They employ a likelihood-free approach, making it suitable for handling non-Gaussian data, where deriving an analytical likelihood is either computationally expensive or infeasible. Since the scale of the 21-cm forest data is extremely small, generating the 1D power spectra of the 21-cm forest corresponding to a set of parameter points requires even up to 40,000 core hours. This will make it difficult to obtain 1D power spectrum for any parameter, potentially leading to incorrect estimates of the posterior distribution as presented in Supplementary Fig. 1. Therefore, our method consists of a GNF for generating the 1D power spectrum of arbitrary parameters and an INF for parameter inference. The primary difference between these two is the conditioning: the GNF conditions on the parameters to generate power spectra, while the INF conditions on the power spectra to infer parameters. All the NF networks were trained using the “Xavier”81 initialization for network parameters and the AdamW optimizer82 on a single NVIDIA GeForce RTX A6000 GPU with 48 GB of memory.

The GNF is used to generate a 1D power spectrum corresponding to all parameters in the parameter space. Interpolation is a common method for filling in missing data and can serve as a supplementary approach for generating the 1D power spectra. However, as shown in Supplementary Fig. 12, due to the inherent cosmic variance and the correlations between different scales k, interpolation often results in lower P(k) and less smooth transitions in the interpolated regions of parameter space. This highlights the limitations of interpolation methods, as they rely heavily on the assumption of smooth parameter variation, which is not always valid in the presence of cosmic variance. In contrast, the GNF model does not merely act as an interpolation tool but learns the underlying statistical relationships between parameters and the 1D power spectra, enabling it to reproduce both the smooth and stochastic features of the data. However, as is common in deep learning models, the GNF model may produce less reliable extrapolations in regions of parameter space that are underrepresented in the training data. For example, while the model performs well at high TK, it may yield unphysical results for large-scale modes at low TK. This limitation can be addressed by expanding the range of the training dataset to better cover the parameter space. Specifically, the GNF takes a parameter vector as input and finally outputs an estimate of the 1D power spectrum of the 21-cm forest. The training set is composed of 8700 black points from the data, as shown in Supplementary Fig. 10, the blue point data as the validation set, and the red point as the testing set. For GNFs, to prevent overfitting and achieve convergence, we employed early stopping with a patience of 15 epochs and utilized L1 regularization and L2 regularization83. A summary of key hyperparameters for GNF is provided in Supplementary Table 1.

The INF is designed to perform parameter inference based on data generated by the GNF. The architecture of the INF is similar to that of the GNF. Specifically, the INF receives the 1D power spectra generated by the GNF, conditioned on the 1D power spectra P(k), and maps the corresponding parameters θ onto a standard distribution. By utilizing the bijective transformation property of the INF, this process enables the reverse prediction of the model parameters θ. If the GNF can accurately capture the characteristics of the 1D power spectrum, then using the sampling points from the GNF within the prior range as the input of the INF, the INF can provide appropriate inference results for the simulation data. To prevent overfitting, the INF is trained on dynamically simulated data generated by the GNF in an iterative scheme. Specifically, during each training step of the INF, the GNF generates new samples based on the current training progress. These samples are used to update the INF’s parameters, and this iterative process continues until the loss function stabilizes, indicating that the training has converged. For this work, we use a batch size of 64, and the GNF generates 128 samples per batch during training. A summary of key hyperparameters for INF is provided in Supplementary Table 1. The remaining 300 black points in the data are used as the testing set as presented in Supplementary Fig. 10.

Model evaluation method

We use the coefficient of determination R2 to evaluate whether the mean and standard deviation of the 1D power spectra generated by the GNF are reasonable. Its expression is:

where ypred and y represent the mean or standard deviation of the 1D power spectra generated by the GNF and the corresponding values from the simulated 1D power spectra across different scales, respectively. \(\bar{y}\) represents the overall mean or standard deviation of the simulated generated 1D power spectra on different scales. To check whether the distributions generated under different conditions and scales are consistent with the simulated situation, we also calculate JSD:

where \({{{\mathcal{P}}}}_{{{\rm{sim}}}}\) and \({{{\mathcal{Q}}}}_{{{\rm{gen}}}}\) represent the distribution of simulated 1D power spectra and the GNF-generated 1D power spectra on different k, respectively. The JSD is a symmetric measure of similarity between two probability distributions. A smaller JSD value indicates a higher degree of similarity between the distributions, effectively addressing the asymmetry issue inherent in KLD.

To evaluate the distribution characteristics of the 1D power spectra of the 21-cm forest at specific scales under different astrophysical conditions, we used the skewness coefficient in statistical analysis as a key indicator to assess its Gaussianity. We calculated the skewness coefficient of the normalized 1D power spectrum at different scales k, which is defined as the ratio of the third standardized moment to the cube of the standard deviation:

where P(k) represents the normalized 1D power spectrum at different scales k, μ is the mean value of P(k), σ is the standard deviation of P(k), and E represents the expected value. If the value of S is close to zero, the distribution can be considered symmetric at that scale and close to a Gaussian distribution. If S is significantly different from zero, it indicates that the distribution has a noticeable skewness.

To quantify the correlation between different scales k of the 1D power spectrum of the 21-cm forest, we use the Spearman correlation coefficient84. The Spearman correlation coefficient is a non-parametric measure of rank correlation that assesses the monotonic relationship between two variables. It does not assume a normal distribution of the data, making it more robust for handling non-Gaussian distributions. Given the significant non-Gaussian characteristics of our data, we chose the Spearman correlation coefficient to more accurately capture the correlations between different scales. This approach helps avoid errors that could arise from assuming a Gaussian distribution of the data. If all n ranks are distinct integers, the Spearman correlation coefficient ρ is defined as

where di is the difference between the ranks of corresponding values of the two variables, and n is the number of observations.

In our analysis, we employ the KS test43 to assess the fit between the posterior distribution of the INF’s output and the theoretical distribution. The KS test compares the empirical distribution derived from the sample data with a specified theoretical distribution by measuring the maximum difference between their CDFs. This difference is known as the KS statistic d, which can be expressed as

where Fn(x) is the empirical CDF of the sample, and F(x) is the theoretical CDF. Theoretically, within a given confidence interval of the posterior distribution, the percentage of actual data should match the confidence level. Therefore, the theoretical CDF should be uniform. To interpret the d value in terms of statistical significance, we calculate a p-value using the distribution of the KS statistic under the null hypothesis. This p-value represents the probability of observing a KS statistic as extreme as d, assuming that the sample distribution is drawn from the theoretical distribution. The CDF of the KS statistic under the null hypothesis is given by

The p-value is derived from this function and represents the probability of observing a value as extreme as the calculated d. A small p-value (typically indicated by a significance level of 0.05) would lead us to reject the null hypothesis, suggesting a significant discrepancy between the empirical and theoretical distributions.

Data availability

The datasets for all figures in the text of this work are provided in Supplementary Data 1. Other datasets that support the findings of this work are available from the corresponding author upon reasonable request.

Code availability

The code 21cmFAST used for large-scale simulation is publicly available at https://github.com/andreimesinger/21cmFAST, the codes for simulating small-scale structures and 21-cm forest signals are available from the corresponding authors upon reasonable request. The code for building deep learning networks is publicly available at https://github.com/probabilists/lampe, and the codes for training and testing in deep learning are available from the corresponding authors upon reasonable request.

References

Carilli, C. L., Gnedin, N. Y. & Owen, F. H I 21 centimeter absorption beyond the epoch of reionization. Astrophys. J. 577, 22–30 (2002).

Furlanetto, S. & Loeb, A. The 21 cm forest: radio absorption spectra as a probe of the intergalactic medium before reionization. Astrophys. J. 579, 1–9 (2002).

Furlanetto, S. The 21 centimeter forest. Mon. Not. Roy. Astron. Soc. 370, 1867–1875 (2006).

Xu, Y., Chen, X., Fan, Z., Trac, H. & Cen, R. The 21 cm forest as a probe of the reionization and the temperature of the intergalactic medium. Astrophys. J. 704, 1396–1404 (2009).

Xu, Y., Ferrara, A. & Chen, X. The earliest galaxies seen in 21 cm line absorption. Mon. Not. Roy. Astron. Soc. 410, 2025–2042 (2011).

Ciardi, B. et al. Prospects for detecting the 21 cm forest from the diffuse intergalactic medium with LOFAR. Mon. Not. R. Astron. Soc. 428, 1755–1765 (2013).

Bode, P., Ostriker, J. P. & Turok, N. Halo formation in warm dark matter models. Astrophys. J. 556, 93–107 (2001).

Hu, W., Barkana, R. & Gruzinov, A. Cold and fuzzy dark matter. Phys. Rev. Lett. 85, 1158–1161 (2000).

Xu, Y., Ferrara, A., Kitaura, F. S. & Chen, X. Searching for the earliest galaxies in the 21 cm forest. Sci. China Phys., Mech., Astron. 53, 1124–1129 (2010).

Shimabukuro, H., Ichiki, K., Inoue, S. & Yokoyama, S. Probing small-scale cosmological fluctuations with the 21 cm forest: Effects of neutrino mass, running spectral index, and warm dark matter. Phys. Rev. D 90, 083003 (2014).

Shao, Y. et al. The 21-cm forest as a simultaneous probe of dark matter and cosmic heating history. Nat. Astron. 7, 1116–1126 (2023).

Niu, Q., Li, Y., Xu, Y., Guo, H. & Zhang, X. Prospects for observing high-redshift radio-loud quasars in the SKA Era: paving the way for 21 cm forest observations. Astrophys. J. 978, 145 (2025).

Bird, S. et al. PRIYA: a new suite of Lyman-α forest simulations for cosmology. JCAP 10, 037 (2023).

Baur, J., Palanque-Delabrouille, N., Yèche, C., Magneville, C. & Viel, M. Lyman-alpha forests cool warm dark matter. JCAP 08, 012 (2016).

Carilli, C., Gnedin, N. Y. & Owen, F. Hi 21cm absorption beyond the epoch of re-ionization. Astrophys. J. 577, 22–30 (2002).

Mondal, R., Bharadwaj, S. & Majumdar, S. Statistics of the epoch of reionization (EoR) 21-cm signal—II. The evolution of the power-spectrum error-covariance. Mon. Not. Roy. Astron. Soc. 464, 2992–3004 (2017).

Muñoz, J. B. An effective model for the cosmic-dawn 21-cm signal. Mon. Not. Roy. Astron. Soc. 523, 2587–2607 (2023).

Press, W. H., Teukolsky, S. A., Vetterling, W. T. & Flannery, B. P. Numerical Recipes: The Art of Scientific Computing, 3rd edn. (Cambridge University Press, New York, 2007).

McLachlan, G. & Peel, D. Finite Mixture Models. Wiley Series in Probability and Statistics (Wiley, New York, 2000).

Blei, D. M., Kucukelbir, A. & McAuliffe, J. D. Variational inference: a review for statisticians. J. Am. Stat. Assoc. 112, 859–877 (2017).

Rezende, D. J. & Mohamed, S. Variational inference with normalizing flows. In: Proc. 32nd International Conference on Machine Learning, ICML 2015, Lille, France, 6–11 July 2015 (eds Bach, F. R. & Blei, D. M.) Vol. 37 of JMLR Workshop and Conference Proceedings, 1530–1538 (JMLR.org, 2015). http://proceedings.mlr.press/v37/rezende15.html.

Hassan, S. et al. HIFlow: generating diverse Hi maps and inferring cosmology while marginalizing over astrophysics using normalizing flows. Astrophys. J. 937, 83 (2022).

Friedman, R. & Hassan, S. HIGlow: conditional normalizing flows for high-fidelity HI map modeling. Preprint at https://arxiv.org/abs/2211.12724 (2022).

Gillet, N., Mesinger, A., Greig, B., Liu, A. & Ucci, G. Deep learning from 21-cm tomography of the Cosmic Dawn and Reionization. Mon. Not. Roy. Astron. Soc. 484, 282–293 (2019).

Zhao, X., Mao, Y., Cheng, C. & Wandelt, B. D. Simulation-based inference of reionization parameters from 3d tomographic 21 cm light-cone images. Astrophys. J. 926, 151 (2022).

Zhao, X., Mao, Y. & Wandelt, B. D. Implicit likelihood inference of reionization parameters from the 21 cm power spectrum. Astrophys. J. 933, 236 (2022).

Zhao, X., Mao, Y., Zuo, S. & Wandelt, B. D. Simulation-based inference of reionization parameters from 3d tomographic 21 cm light-cone images. ii. application of solid harmonic wavelet scattering transform. Astrophys. J. 973, 41 (2024).

Sun, T.-Y. et al. Efficient parameter inference for gravitational wave signals in the presence of transient noises using temporal and time-spectral fusion normalizing flow. Chin. Phys. C 48, 045108 (2024).

Xiong, C.-Y., Sun, T.-Y., Zhang, J.-F. & Zhang, X. Robust inference of gravitational wave source parameters in the presence of noise transients using normalizing flows. Phys. Rev. D 111, 024019 (2025).

Nayak, P., Walther, M., Gruen, D. & Adiraju, S. LYαNNA: a deep learning field-level inference machine for the Lyman-α forest. Astron. Astrophys. 689, A153 (2024).

Maitra, S., Cristiani, S., Viel, M., Trotta, R. & Cupani, G. Parameter estimation from the Lyα forest in the Fourier space using an information-maximizing neural network. Astron. Astrophys. 690, A154 (2024).

Abdurashidova, Z. et al. Improved constraints on the 21 cm EoR power spectrum and the x-ray heating of the IGM with HERA phase I observations. Astrophys. J. 945, 124 (2023).

Garzilli, A., Magalich, A., Ruchayskiy, O. & Boyarsky, A. How to constrain warm dark matter with the Lyman-α forest. Mon. Not. Roy. Astron. Soc. 502, 2356–2363 (2021).

Enzi, W. et al. Joint constraints on thermal relic dark matter from strong gravitational lensing, the Ly α forest, and Milky Way satellites. Mon. Not. Roy. Astron. Soc. 506, 5848–5862 (2021).

Villasenor, B., Robertson, B., Madau, P. & Schneider, E. New constraints on warm dark matter from the Lyman-α forest power spectrum. Phys. Rev. D 108, 023502 (2023).

Zelko, I. A. et al. Constraints on sterile neutrino models from strong gravitational lensing, Milky Way satellites, and the Lyman-α forest. Phys. Rev. Lett. 129, 191301 (2022).

Iršič, V. et al. Unveiling dark matter free streaming at the smallest scales with the high redshift Lyman-alpha forest. Phys. Rev. D 109, 043511 (2024).

Acedo, E. d. L., Pienaar, H. & Fagnoni, N. Antenna design for the SKA1-LOW and HERA super radio telescopes. In 2018 International Conference on Electromagnetics in Advanced Applications (ICEAA), 636–639 (2018).

Braun, R., Bonaldi, A., Bourke, T., Keane, E. & Wagg, J. Anticipated performance of the square kilometre array—Phase 1 (SKA1) (2019). Preprint at https://arxiv.org/abs/1912.12699.

https://www.skao.int/en/science-users/118/ska-telescope-specifications.

Lin, J. Divergence measures based on the shannon entropy. IEEE Trans. Inf. Theory 37, 145–151 (1991).

Rao, C. R. Differential metrics in probability spaces. Differ. Geom. Stat. Inference 10, 217–240 (1987).

Lopes, R. H. C. Kolmogorov-Smirnov Test 718–720 (Springer Berlin Heidelberg, 2011). https://doi.org/10.1007/978-3-642-04898-2_326.

Bhagwat, A., Ciardi, B., Zackrisson, E. & Schaye, J. Cospatial 21 cm and metal-line absorbers in the epoch of reionization—I. Incidence and observability. Mon. Not. Roy. Astron. Soc. 517, 2331–2342 (2022).

Pritchard, J. R. & Furlanetto, S. R. 21 cm fluctuations from inhomogeneous X-ray heating before reionization. Mon. Not. Roy. Astron. Soc. 376, 1680–1694 (2007).

Park, J., Mesinger, A., Greig, B. & Gillet, N. Inferring the astrophysics of reionization and cosmic dawn from galaxy luminosity functions and the 21-cm signal. Mon. Not. Roy. Astron. Soc. 484, 933–949 (2019).

McKay, M. D. Beckman, R. J. & Conover, W. J. Comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42, 55–61 (2000).

Schmit, C. J. & Pritchard, J. R. Emulation of reionization simulations for Bayesian inference of astrophysics parameters using neural networks. Mon. Not. Roy. Astron. Soc. 475, 1213–1223 (2018).

More, A. Survey of resampling techniques for improving classification performance in unbalanced datasets. Preprint at https://arxiv.org/abs/1608.06048 (2016).

Banerjee, J. et al. Machine learning in rare disease. Nat. Methods 20, 803–814 (2023).

Madau, P., Meiksin, A. & Rees, M. J. 21-CM tomography of the intergalactic medium at high redshift. Astrophys. J. 475, 429 (1997).

Field, G. B. An attempt to observe neutral hydrogen between the galaxies. Astrophysical J. 129, 525 (1959).

Wouthuysen, S. On the excitation mechanism of the 21-cm (radio-frequency) interstellar hydrogen emission line. Astronomical J. 57, 31–32 (1952).

Field, G. B. The spin temperature of intergalactic neutral hydrogen. Astrophysical J. 129, 536 (1959).

Mesinger, A., Furlanetto, S. & Cen, R. 21cmFAST: A Fast, Semi-Numerical Simulation of the High-Redshift 21-cm Signal. Mon. Not. Roy. Astron. Soc. 411, 955 (2011).

Yue, B. & Chen, X. Reionization in the warm dark matter model. Astrophysical J. 747, 127 (2012).

Dayal, P., Choudhury, T. R., Bromm, V. & Pacucci, F. Reionization and galaxy formation in warm dark matter cosmologies. Astrophysical J. 836, 16 (2017).

Cooray, A. & Sheth, R. K. Halo models of large scale structure. Phys. Rept. 372, 1–129 (2002).

Zentner, A. R. The excursion set theory of halo mass functions, halo clustering, and halo growth. Int. J. Mod. Phys. D 16, 763–816 (2007).

Press, W. H. & Schechter, P. Formation of galaxies and clusters of galaxies by selfsimilar gravitational condensation. Astrophys. J. 187, 425–438 (1974).

Smith, R. E. & Markovic, K. Testing the Warm Dark Matter paradigm with large-scale structures. Phys. Rev. D 84, 063507 (2011).

Shimabukuro, H., Ichiki, K. & Kadota, K. Constraining the nature of ultra light dark matter particles with the 21 cm forest. Phys. Rev. D 101, 043516 (2020).

Shimabukuro, H., Ichiki, K. & Kadota, K. Impact of dark matter-baryon relative velocity on the 21-cm forest. Phys. Rev. D 107, 123520 (2023).

Shimabukuro, H., Ichiki, K. & Kadota, K. 21 cm forest probes on axion dark matter in postinflationary Peccei-Quinn symmetry breaking scenarios. Phys. Rev. D 102, 023522 (2020).

Xu, Y., Yue, B. & Chen, X. Maximum absorption of the global 21 cm spectrum in the standard cosmological model. Astrophys. J. 923, 98 (2021).

Navarro, J. F., Frenk, C. S. & White, S. D. M. A Universal density profile from hierarchical clustering. Astrophys. J. 490, 493–508 (1997).

Barkana, R. A Model for infall around virialized halos. Mon. Not. Roy. Astron. Soc. 347, 57 (2004).

Aghanim, N. et al. Planck 2018 results. VI. Cosmological parameters. Astron. Astrophys. 641, A6 (2020). [Erratum: Astron.Astrophys. 652, C4 (2021)].

Thompson, A. R., Moran, J. M. & Swenson, G. W. Interferometry and Synthesis in Radio Astronomy (Springer Nature, 2017).

Thyagarajan, N. Statistical detection of IGM structures during cosmic reionization using absorption of the redshifted 21 cm line by HI against compact background radio sources. Astrophys. J. 899, 16 (2020).

Ripamonti, E., Mapelli, M. & Ferrara, A. The impact of dark matter decays and annihilations on the formation of the first structures. Mon. Not. Roy. Astron. Soc. 375, 1399–1408 (2007).

Goodfellow, I. et al. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27 (2014).

Kingma, D. P. & Welling, M. Auto-encoding variational bayes. In 2nd International Conference on Learning Representations, ICLR (2014).

Bond-Taylor, S., Leach, A., Long, Y. & Willcocks, C. G. Deep generative modelling: a comparative review of vaes, gans, normalizing flows, energy-based and autoregressive models. IEEE Trans. Pattern Anal. Mach. Intell. 44, 7327–7347 (2021).

Dax, M. et al. Real-time inference for binary neutron star mergers using machine learning. Nature 639, 49–53 (2025).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inform. Process. Syst. 33, 6840–6851 (2020).

Papamakarios, G. & Murray, I. Fast ε-free inference of simulation models with Bayesian conditional density estimation. Adv. Neural Inform. Process. Syst. 29 (2016).

Csiszar, I. I-Divergence geometry of probability distributions and minimization problems. Ann. Probab. 3, 146–158 (1975).

Papamakarios, G., Sterratt, D. C. & Murray, I. Sequential neural likelihood: Fast likelihood-free inference with autoregressive flows. In: The 22nd International Conference on Artificial Intelligence and Statistics, AISTATS 2019, 16-18 April 2019, Naha, Okinawa, Japan Vol. 89 of Proceedings of Machine Learning Research (eds Chaudhuri, K. & Sugiyama, M.) 837–848 (PMLR, 2019). http://proceedings.mlr.press/v89/papamakarios19a.html.

Durkan, C., Bekasov, A., Murray, I. & Papamakarios, G. Neural spline flows. Adv. Neural Inform. Process. Syst. 32 (2019).

Glorot, X. & Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In: Proc. 13th International Conference on Artificial Intelligence and Statistics, AISTATS 2010, Chia Laguna Resort, Sardinia, Italy, May 13-15, 2010 Vol. 9 of JMLR Proceedings (eds Teh, Y. W. & Titterington, D. M.) 249–256 (JMLR.org, 2010). http://proceedings.mlr.press/v9/glorot10a.html.

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. In: 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019 (OpenReview.net, 2019). https://openreview.net/forum?id=Bkg6RiCqY7.

Hoerl, A. E. & Kennard, R. W. Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12, 55–67 (1970).

Fieller, E. C., Hartley, H. O. & Pearson, E. S. Tests for rank correlation coefficients. I. Biometrika 44, 470–481 (1957).

Acknowledgements

This work was supported by the National SKA Program of China (Grants Nos. 2022SKA0110200 and 2022SKA0110203), the National Natural Science Foundation of China (Grants Nos. 12473001, 11975072, 11875102, and 11835009), and the National 111 Project (Grant No. B16009).

Author information

Authors and Affiliations

Contributions

Tian-Yang Sun performed most of the computation and wrote the majority of the manuscript. Yue Shao performed part of the computation and wrote part of the manuscript. Yichao Li, Yidong Xu and He Wang wrote part of the manuscript. Xin Zhang and Yidong Xu proposed the study. Xin Zhang led the study and contributed to the manuscript writing. All authors discussed the results and commented on the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sun, TY., Shao, Y., Li, Y. et al. Deep learning-driven likelihood-free parameter inference for 21-cm forest observations. Commun Phys 8, 220 (2025). https://doi.org/10.1038/s42005-025-02139-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-025-02139-5